Abstract

Many experimental studies suggest that animals can rapidly learn to identify odors and predict the rewards associated with them. However, the underlying plasticity mechanism remains elusive. In particular, it is not clear how olfactory circuits achieve rapid, data efficient learning with local synaptic plasticity. Here, we formulate olfactory learning as a Bayesian optimization process, then map the learning rules into a computational model of the mammalian olfactory circuit. The model is capable of odor identification from a small number of observations, while reproducing cellular plasticity commonly observed during development. We extend the framework to reward-based learning, and show that the circuit is able to rapidly learn odor-reward association with a plausible neural architecture. These results deepen our theoretical understanding of unsupervised learning in the mammalian brain.

Subject terms: Computational neuroscience, Learning and memory

How can rodents make sense of the olfactory environment without supervision? Here, the authors formulate olfactory learning as an integrated Bayesian inference problem, then derive a set of synaptic plasticity rules and neural dynamics that enable near-optimal learning of odor identification.

Introduction

It is crucial for animals to infer the identity of odors, in situations ranging from foraging to mating1. While some odors are hardwired2, most must be learned. Learning, however, is particularly difficult, especially in natural environments where odors are rarely presented in isolation, most odors are presented a small number of times, and odor identities are rarely supervised. Nevertheless, animals can learn to associate an odor with a reward in a few trials3–5. Our goal here is to elucidate the local plasticity mechanisms that orchestrate this rapid learning.

To gain a conceptual understanding of how learning occurs, note that if the affinities of olfactory sensory neurons (OSNs) to odors were known, approximate Bayesian inference could be used to infer which odors are present given OSN activity6. And in a supervised setting (a setting in which the animal is told which odors are present), the affinities (i.e. the weights) could be learned efficiently using recently proposed Bayesian approaches7,8. Here we show that, even when the weights are not known and learning is unsupervised, we can combine these two methods to simultaneously learn the weights and infer the odors.

Our approach is as follows: when inferring which odors are present, average over the uncertainty in the weights; then use the inferred odors to update the estimates of the weights, and, importantly, decrease the uncertainty. As the estimates of the weights become more accurate, inference also improves. However, while straightforward, exact implementation of this learning process is intractable. Consequently, we have to use an approximate method9.

Although inference is approximate, our model still leads to faster learning of olfactory stimuli compared to previously proposed sparse-coding-based approaches10–12. It also provides some insight into olfactory circuitry: it reveals the advantage, relative to the rectified linear transfer function13, of sigmoidal-shaped f–I curves typical of biological neurons14,15, and it reproduces the reduction in neuronal input gain16,17 and learning rate18 commonly observed during development. In addition, it predicts that the learning rate of granule cells should decrease as they become more selective, and thus exhibit lower lifetime sparseness19,20, something that is possible (although difficult) to test experimentally. And finally, we extended our model to an odor–reward association task, and found that learning of a concentration invariant representation at the piriform cortex helps rapid odor–reward association.

While our approach gives us a model that is reasonably consistent with mammalian olfactory circuitry, the architecture predicted by our approximate Bayesian algorithm does not perfectly match the architecture of the olfactory system. However, a plausible olfactory circuit based on our model, but with the addition of recurrent inhibition among piriform neurons21, still learns to perform reward-based learning quickly. These results suggest that even at the circuit level, approximate Bayesian optimization may underlie rapid biological learning. But at the same time, our study reveals its limitation when applied to a complicated system.

Results

Problem setting

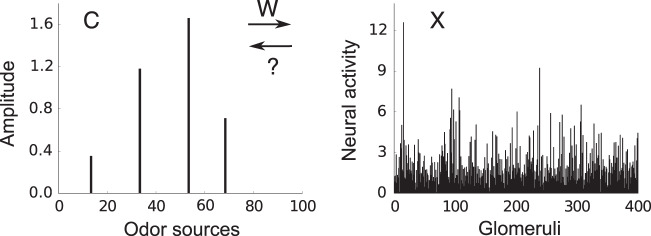

Let us denote odor concentrations by a vector c = (c1, . . . , cM), where cj > 0 if odor j is present and cj = 0 otherwise. By odor, we mean something like the odor of apple or coffee, not a single odorant molecule. In a typical environment, odors are very sparse, in the sense that few of them have a significant presence (i.e. cj > 0 for a small number of j at any time; Fig. 1 left).

Fig. 1. Problem setting.

An example odor stimulus, c (left), and the response at the glomeruli, x (right). The mixing weights (i.e., affinities), w (which are unknown to the animal) map odors, with concentration c, to OSN activity accumulated at the glomeruli, x. A goal of the animal is to infer the odor concentrations from the glomeruli activity.

In the olfactory system, odors are first detected by OSNs, and then transmitted to glomeruli as spiking activity22. Neural activity accumulated at a glomerulus, denoted xi for the ith glomerulus (and thus the ith OSN receptor type), is approximately

$$x_i = \sum_{j=1}^{M} w_{ij}\, c_j + n_i \tag{1}$$

where n is the noise due to sensory variability and unreliable OSN-spiking activity, and the affinity, or the mixing weight, wij, determines how strongly odor j activates glomerulus i (Fig. 1 right). OSN activity shows a roughly logarithmic dependence on odor concentration23,24. Thus the amplitude, cj, of each odor reflects log-concentration, not concentration. Below a threshold, here taken to be zero, odors are considered undetectable.
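As a rough illustration of this problem setting, the following sketch draws one sparse odor vector and the corresponding noisy glomerular response from a linear mixing model. The Gaussian affinities, the uniform distribution of concentrations for present odors, the noise level, and the names `sample_odor_trial`, `p_present`, `c_max`, and `noise_sd` are all assumptions made for illustration and are not taken from the model specification.

```python
import numpy as np

def sample_odor_trial(W, p_present=0.03, c_max=2.0, noise_sd=0.1, rng=None):
    """Draw a sparse odor vector c and the glomerular response x = W c + n.

    Assumptions (illustrative only): each odor is present independently with
    probability p_present, present odors have a uniform log-concentration on
    (0, c_max), and the noise n is Gaussian with standard deviation noise_sd.
    """
    rng = np.random.default_rng() if rng is None else rng
    n_glomeruli, n_odors = W.shape
    present = rng.random(n_odors) < p_present            # which odors are present
    c = np.where(present, rng.uniform(0.0, c_max, size=n_odors), 0.0)
    x = W @ c + noise_sd * rng.standard_normal(n_glomeruli)
    return c, x

rng = np.random.default_rng(0)
M, N = 100, 400                                   # odors, glomeruli
W = rng.standard_normal((N, M)) / np.sqrt(N)      # hypothetical affinities
c, x = sample_odor_trial(W, rng=rng)
```

With M = 100 and a presence probability of 0.03, about three odors are present per trial on average, so most entries of `c` are exactly zero.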

Olfactory learning as Bayesian inference

The goal of the early olfactory system is to infer which odors are present and what their concentrations are, based on OSN activity, x. However, this is a difficult problem because the animal does not know the mixing weights, w, but instead has to learn them, without supervision. One common approach to this type of unsupervised learning is the sparse coding model. Its associated learning algorithm is, however, inefficient, and thus slow, as we will see below (see the subsection “Sparse coding” in the Methods section). We thus turn to Bayesian inference.

The Bayesian approach is efficient because it takes into account uncertainty in both odor, c, and weight, w, and it can naturally incorporate a prior that reflects the sparseness of the olfactory environment. The steps are straightforward: first write down, from Eq. (1), an expression for p(c∣x, w), the distribution over odor concentrations given glomeruli activity, x, and weights w; then marginalize over the distribution of the weights given all the previous inputs, p(w∣ past observations of x) (see Methods section, Eq. (10)). However, exact marginalization is neither computationally tractable nor biologically plausible. We therefore employ a variational Bayesian approximation9, by replacing the true joint probability distribution with a fully factorized one. The effect of making a variational approximation is illustrated in Fig. 2c: the concentrations of a pair of odors are typically slightly anti-correlated under the true posterior (Fig. 2c, left), while they are independent under the variational distribution (Fig. 2c, right). Because the anti-correlation is typically weak, the variational distribution captures the true distribution well.

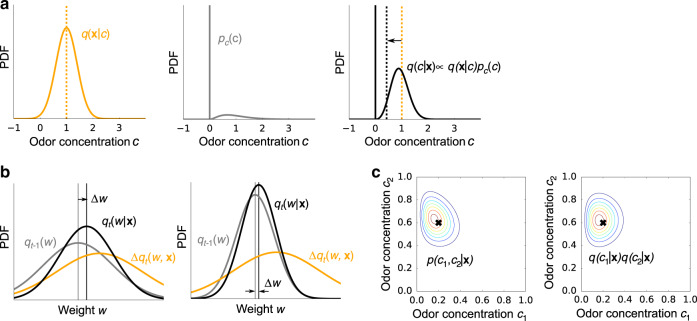

Fig. 2. Bayesian inference of odors and weights.

a Inference of odor concentration. Combining the likelihood q(x∣c) (left) and the prior pc(c) (middle), the posterior distribution q(c∣x) is obtained (right). The orange dashed line is the mean concentration associated with the likelihood, q(x∣c); the black dashed line is the mean associated with the posterior, q(c∣x). Because the prior strongly favors the absence of odors, the latter is shifted to lower concentration. b Illustration of the weight update given the same sensory evidence Δqt(w, x) when the previously estimated probability distribution over the weights, qt−1(w), is broad (left), and narrow (right). Note that the mean of qt−1(w) is the same in both panels. c Illustration of the variational approximation. The true posterior over the joint distribution of odors c1 and c2, p(c1, c2∣x) (left), is approximated by a factorized distribution q(c1∣x)q(c2∣x) (right). The black cross indicates the true concentrations, and colored lines are contours of equal probability.
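To give a feel for why a factorized distribution can capture a weakly anti-correlated posterior, the sketch below uses a two-dimensional Gaussian as a stand-in for the joint distribution over two odor concentrations. This is an assumption for illustration only: the posterior in the model is not Gaussian. For a Gaussian target with precision matrix Λ, the standard mean-field result is that each factor has variance 1/Λii, which can be compared with the true marginal variance.

```python
import numpy as np

rho = -0.2                                    # weak anti-correlation (illustrative value)
cov = np.array([[1.0, rho], [rho, 1.0]])      # stand-in joint covariance of (c1, c2)
precision = np.linalg.inv(cov)

true_marginal_var = np.diag(cov)              # variance of each true marginal
mean_field_var = 1.0 / np.diag(precision)     # variance of each factor in q(c1) q(c2)

print(true_marginal_var)
print(mean_field_var)                         # close to 1 - rho**2
```

The factorized variance is smaller than the true marginal variance by a factor of 1 − ρ², so for weak correlations the two nearly coincide, while for strong correlations the factorized approximation would be noticeably too narrow.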

The derivation of the algorithm for variational inference is described in detail in Methods section; here we simply give the results. The variational probability distribution of the concentration of odor j is updated iteratively as (see Methods section, Eq. (14b))

$$q(c_j \mid x) \propto q(x \mid c_j)\, p_c(c_j) \tag{2}$$

where q(x∣cj) is the variational likelihood of the concentration of the jth odor, cj, given x, and pc(cj) is the prior distribution over cj. We take the noise, n, in Eq. (1) to be Gaussian, so q(x∣cj) is Gaussian (Fig. 2a, left). And to reflect the sparsity, pc(cj) is taken to be a point mass at zero combined with a continuous piece at positive concentration (Fig. 2a, middle). Because the prior strongly favors the absence of odors, the estimated mean concentration, 〈c〉q(c∣x) (dashed black line in Fig. 2a, right), is typically smaller than the mean over the likelihood function, 〈c〉q(x∣c) (dashed orange line in Fig. 2a, right).
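The shrinkage of the posterior mean toward zero can be checked numerically for a single odor. The sketch below combines a Gaussian likelihood with a prior made of a point mass at zero and a uniform continuous part. The uniform form of the continuous part, its upper bound `c_max`, the probability `c_o` of an odor being present, and the function name are assumptions for illustration, not the exact prior of the model.

```python
import numpy as np

def posterior_mean_spike_slab(mu_like, sd_like, c_o=0.03, c_max=2.0, n_grid=2001):
    """Posterior mean of c for a Gaussian likelihood centred on mu_like and the
    prior (1 - c_o) * delta(c) + c_o * Uniform(0, c_max).

    The Gaussian normalisation constant is omitted because it cancels.
    """
    def gauss(c):
        return np.exp(-0.5 * ((mu_like - c) / sd_like) ** 2)

    grid = np.linspace(0.0, c_max, n_grid)
    dc = grid[1] - grid[0]

    def integrate(f):                      # trapezoidal rule on the grid
        return np.sum(0.5 * (f[1:] + f[:-1])) * dc

    spike = (1.0 - c_o) * gauss(0.0)                       # evidence for "odor absent"
    slab = (c_o / c_max) * integrate(gauss(grid))          # evidence for "odor present"
    slab_moment = (c_o / c_max) * integrate(grid * gauss(grid))
    return slab_moment / (spike + slab)

for mu in (0.2, 0.5, 1.0):
    print(mu, posterior_mean_spike_slab(mu, sd_like=0.2))
```

Under these assumptions the posterior mean stays close to zero when the likelihood is centred on a small concentration and approaches the likelihood mean only when the evidence is strong.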

Similarly, the update rule for the variational probability distribution of a weight is given by (see Methods section, Eq. (14a))

$$q_t(w_{ij}) \propto \Delta q_t(w_{ij}, x)\, q_{t-1}(w_{ij}) \tag{3}$$

where Δqt(wij, x) is the evidence provided by the new information, carried in x, at trial t (Fig. 2b) and qt(wij) is the variational probability distribution of the weight, wij, given observations up to trial t (we suppress the time dependence to reduce clutter). Importantly, depending on the uncertainty in the weights, the same stimulus causes different amounts of plasticity. In particular, the higher the uncertainty in the estimated weight, wij, at t−1, the larger the change in the mean weight, Δw (left vs. right in Fig. 2b).
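The dependence of plasticity on weight uncertainty can be illustrated with a simplified conjugate-Gaussian update for a single weight. This sketch assumes that the odor concentration c is known and that the belief about the weight is Gaussian. Both are simplifications relative to the model, in which concentrations are themselves inferred. The function name and numerical values are hypothetical.

```python
def gaussian_weight_update(w_mean, w_var, c, x, noise_var):
    """Update a Gaussian belief N(w_mean, w_var) about one weight after
    observing x = w * c + noise, with known c and Gaussian noise."""
    gain = w_var * c / (w_var * c**2 + noise_var)        # larger when w_var is larger
    new_mean = w_mean + gain * (x - w_mean * c)          # shift toward the evidence
    new_var = w_var * noise_var / (w_var * c**2 + noise_var)
    return new_mean, new_var

# Same prior mean and same evidence, but different prior uncertainty.
broad_mean, broad_var = gaussian_weight_update(0.5, 1.0, c=1.0, x=1.0, noise_var=0.1)
narrow_mean, narrow_var = gaussian_weight_update(0.5, 0.01, c=1.0, x=1.0, noise_var=0.1)
print(broad_mean - 0.5, narrow_mean - 0.5)
```

In this simplified setting the broad belief moves most of the way to the value suggested by the evidence, whereas the narrow belief barely moves, and in both cases the variance shrinks after the observation.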

The update rules given in Eqs. (2) and (3) can be mapped onto neural dynamics and synaptic plasticity that closely mirror the mammalian olfactory bulb (Fig. 3a and b). The firing rate dynamics obey

| 4a |

| 4b |

where τ denotes time within an odor presentation (not to be confused with t, which indexes trials), mi is the firing rate of the ith M/T (mitral/tufted) cell relative to baseline (Eq. (4a)), and Eq. (4b) describes the firing rate of the jth granule cell. The ith M/T cell is linearly modulated by excitatory input from glomerulus i, via xi, and by inhibitory input from the granule cells. The granule cells, whose activity corresponds to the expected concentration of the odors, are driven by excitatory input from M/T cells, mediated by a nonlinear transfer function Fj. As we discuss below, this nonlinearity plays a critical role in rapid learning.

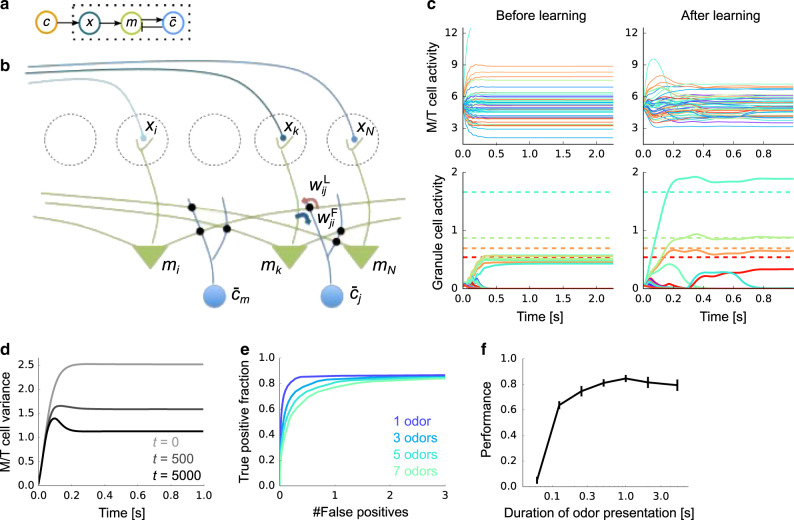

Fig. 3. Neural implementation of Bayesian learning.

a Schematic of the neural architecture. Dotted box represents the internal variables of the brain; the odor, c, comes from the outside world. b The neural implementation of our Bayesian learning model maps almost perfectly onto the circuitry of the olfactory bulb. Dotted circles are glomeruli, green triangles are M/T cells, and blue circles represent olfactory granule cells. Red and blue arrows indicate weights from granule to M/T and M/T to granule cells, respectively. c An example of firing rate dynamics before (left) and after (right) learning (M = 50 odors, N = 400 glomeruli, four odors presented). Different colors represent different neurons. Dotted horizontal lines in the bottom figures represent the true concentration of the presented odors. d Change in the variance of M/T cells during learning (t: trial). The expectation was taken over both population and trials. e Receiver operating characteristic (ROC) curves under different numbers of simultaneously presented odors (M = 100 odors, N = 400 glomeruli). See subsection “ROC curve” in the Methods section for details. f Performance when learning from various odor exposure durations (see subsection “Performance evaluation” in the Methods section), where M = 100, N = 400, and three odors are presented simultaneously, on average. The lines and their error bars show the mean and standard deviation over 10 simulations.

The two sets of weights in Eq. (4) correspond to M/T-to-granule and granule-to-M/T synapses (blue and red arrows in Fig. 3b, respectively). These synapses jointly form a dendro-dendritic connection between M/T and granule cells25. To keep track of the variational probability distribution qt(wij), both the mean and the variance of each weight need to be updated. The update of the mean is

| 5a |

| 5b |

where mi and the granule cell activity are evaluated at the end of the odor presentation. The update involves a discount factor, and ρj represents the precision (the inverse of the variance) of the synaptic weights (see subsection “Synaptic plasticity” in the Methods section for details). This rule is Hebbian, as the update depends on the product of presynaptic and postsynaptic activity (M/T cell activity, mi, and granule cell activity). It is also adaptive, as the update depends on the precision, ρj: because of the 1/ρj dependence, low precision (and thus high uncertainty) produces large weight changes while high precision (and thus low uncertainty) produces small weight changes. This is illustrated in Fig. 2b. The precision, ρj, is also updated in an activity-dependent manner (see the Methods section, Eq. (35)).

Figure 3c shows typical neural dynamics before and after learning. Before learning, when a mix of four odors is presented, M/T activity quickly converges to constant values with a relatively broad range (Fig. 3c, top-left), and granule cell activity is small and homogeneous (Fig. 3c, bottom-left). After learning, M/T cells exhibit transient activity, followed by convergence to a somewhat smaller range than before learning (Fig. 3c, top-right), as the large input-driven activity is partially canceled by the feedback from the granule cells. Granule cells, on the other hand, show very selective responses, with activity levels roughly matching the concentration of the corresponding odors (Fig. 3c, bottom-right).

The activity profiles of cells in our model have many similarities with experimental observations. For instance, as observed in experiments26, M/T cells show both positive and negative responses relative to baseline (Fig. 3c top, here the baseline is 5), and their responses become more transient after learning (Fig. 3c, top-right, and Fig. 3d). Moreover, the response range of M/T cells becomes smaller as the animal learns the odors (Fig. 3d), as observed experimentally27. In addition, after learning, granule cell activity is strongly modulated by odor concentration (Fig. 3c bottom-right; dotted horizontal lines represent the true concentrations of the corresponding odors), as observed experimentally28.

After learning, the circuit can robustly detect odors with very few false positives, even when several odors are presented simultaneously (Fig. 3e). Moreover, the learning performance was robust with respect to odor presentation time: even if the odors were presented for only a few hundred milliseconds, which corresponds roughly to one sniff cycle29,30, performance remained high (Fig. 3f). Learning was also robust to changes in the prior: a large increase in the range of possible odor concentrations had very little effect on learning performance (Supplementary Fig. 1).

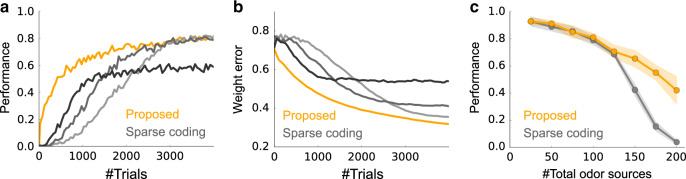

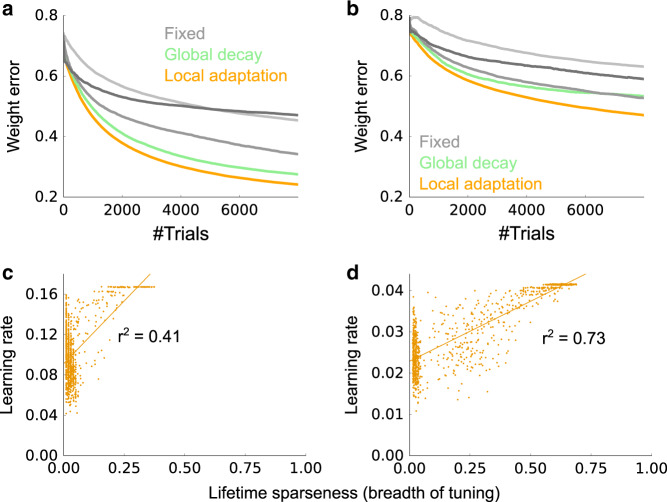

The Bayesian approach is optimal if implemented exactly, but in the approximate model used here, learning is necessarily suboptimal. To determine how suboptimal, we would need to compare against exact inference. However, that is not feasible because exact inference is intractable. Our model does, however, do better than the sparse coding model (Fig. 4): it learns much faster (Fig. 4a), and it achieves high performance without fine tuning, whereas the learning rate of the sparse coding model must be fine-tuned (gray lines in Fig. 4a). This advantage was replicated when we assessed the performance by the error in the weights (Fig. 4b). Despite faster learning, the asymptotic performance of the Bayesian model is similar to that of sparse coding when there are a relatively small number of odor sources in the environment, and much better when there are many sources, although the performance of both models deteriorates in that regime (Fig. 4c).

Fig. 4. Performance comparison.

a Learning curves for our model (orange) and sparse coding (light gray to black). M = 100 odors, N = 400 glomeruli, and on average, three odors were presented at each trial. See subsection “Performance evaluation” in the Methods section for details. The learning rates of the sparse coding model, ηw, were 0.3, 0.5, and 1.0 from light gray to black. b Same as a, but the performance was evaluated by the error in the weights. c Performance (after learning from 4000 trials) of the proposed Bayesian model (orange) and the sparse coding model (gray) versus the number of odors. Shaded regions represent standard deviation over 10 simulations. As in panels a and b, N = 400 glomeruli and three odors were presented on average. Here, ηw was fixed at 0.5.

These results indicate that a variational approximation of Bayesian learning and inference enables data efficient learning, and does so using biologically plausible learning rules and neural dynamics. How does our model manage to perform fast and robust learning? And is there evidence that the brain uses this strategy? Below, we show that our proposed circuit performs well because it exploits the sparseness of the odors and utilizes the uncertainty in both the weights and odor concentration. We then discuss the relationship of our model to experimental observations.

The sparse prior leads to a nonlinear transfer function

An important feature of olfaction, like many real world inference problems, is that the distribution over odors has a mix of discrete and continuous components: an odor may or may not be present (the discrete part), and if it is present its concentration can take on a range of values (the continuous part). In our model, we formalize this with a spike and slab prior (Fig. 2a middle): the spike is the delta function at zero; the slab is the continuous part. In this model, sparseness is ensured by setting the cumulative probability of the slab, denoted co, close to zero.

To see how the prior affects the dynamics, note that the granule cells (Eq. (4b)) represent the expected concentration of the odors, and so take the prior into account. Thus, after learning, most of them have near zero activity, with only a few of them active (Fig. 3c, bottom right panel). To achieve sparsity, the granule cells need a great deal of evidence to report non-negligible concentrations. That is reflected in the transfer functions of the granule cells (the function Fj in Eq. (4b); see orange curve in Fig. 5a). The function exhibits near zero response (corresponding to near zero concentration) for small input, followed by a sharp rise and then an approximately linear response for large input.

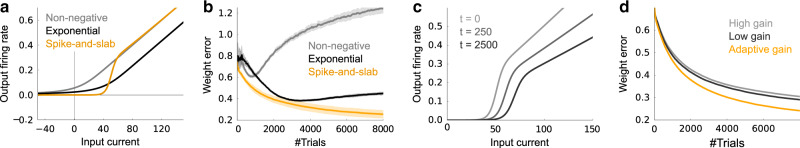

Fig. 5. Adaptive transfer functions.

a The shapes of the transfer functions of granule cells under different priors on the odor distribution. See subsection “Models with various priors on odor concentration” in the Methods section for the details. b Weight errors under different priors. Shaded regions represent standard deviation over 10 simulations. c The average transfer function at the beginning (light gray), middle (gray), and the end (black) of the learning. The x-axis represents the input current y. d The weight error under fixed input gain, compared to the control model with adaptive gain, averaged over 50 simulations. For the gray line, the transfer function was set to the top curve in panel c; for the black line it was set to the bottom curve. In all panels, M = 100 odors, N = 400 glomeruli, and three odors were presented on average.

If we derive update rules using a different prior, the transfer function changes. If we then perform inference and learning using the transfer function derived under a different prior, but drawing odors from the true prior, performance is, not surprisingly, suboptimal (see subsection “Models with various priors on odor concentration” in the Methods section). For example, if we constrain the odors to be non-negative, the transfer functions are approximately rectified linear, a commonly used nonlinearity in artificial neural networks13 (gray line in Fig. 5a). However, this model failed to learn the input structure generated from the spike-and-slab prior, as the sparseness of the odor concentration is not taken into account (gray line in Fig. 5b). If we constrain the odors to be non-negative, but also ensure that they are not too large, by introducing an exponential decay10, learning improves initially, but the weight error eventually increases (black lines in Fig. 5a and b). These results suggest that the classic input–output function (sigmoidal at small input and linear at large input), found both in vitro14,31 and in biophysically realistic models of neurons15, reflects the fact that the world is truly sparse, something not captured by classical sparse coding models. These gain functions thus offer a normative explanation for the biophysical responses of typical olfactory neurons to input. The shape of the activation function for the precision update also depends on the choice of prior, but they all closely resemble the squared transfer function, F2 (Supplementary Fig. 2).

As the animal learns a better approximation to the true weights, the olfactory system can extract more information from the OSN activity; this results in a change in the transfer function. In particular, the transfer function exhibits a decrease in gain with learning (mainly a shift to the right), as shown in Fig. 5c (see subsection “The variational weight distribution” in the Methods section for details). Such a decrease in gain is a widely observed phenomenon among diverse neurons during development14,16. It is also consistent with the reduction of input resistance observed in adult-born granule cells during development17,18, as low resistance causes low excitability. If the transfer functions were held fixed during learning, performance would deteriorate gradually (gray and black curves vs. orange line in Fig. 5d), though the benefit of the adaptive gain was rather small in our model setting.

Weight uncertainty leads to adaptive synaptic plasticity

A key aspect of our model is that it explicitly takes the uncertainty of the weights into account. This leads to an adaptive learning rate (see Eq. (5)). In particular, the learning rate is the product of two terms, 1/t and 1/ρj. The first term, 1/t, is a global decay, and reflects an accumulation of information over time: at the beginning of learning, each olfactory stimulus contains a relatively large amount of information about the weights, and so the learning rate is large; later in learning, each stimulus adds relatively little information, and so the learning rate is small. The second term, 1/ρj, is the cell-specific contribution to the learning rate. In steady state, it is given approximately by an expression involving an average over odors (denoted by the subscript “odors”).

It turns out that the second term is related to the lifetime sparseness, Sj (note that smaller Sj means activity is more sparse; see subsection “Lifetime sparseness” in the Methods section and ref. 19). Assuming the mean firing rate is approximately constant (as we see in our simulations), the cell-specific learning rate increases with Sj. When the granule cells have broad, non-selective tuning, the lifetime sparseness is large, and the learning rate is high; when the cells are sparse and have highly selective tuning, the lifetime sparseness is low, and so is the learning rate. Thus, if the mean granule cell responses are similar for all presented odors, the learning rate is large, encouraging neurons to modify their selectivity. If, on the other hand, the granule cell responses are sparse and selective, the learning rate is low, helping the neurons stabilize their acquired selectivity.
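The sketch below computes one common lifetime sparseness measure for a single cell, the ratio of the squared mean response to the mean squared response across stimuli. That Sj takes exactly this form is an assumption here; it is chosen because it agrees with the convention that smaller values mean sparser activity. The response vectors are made up for illustration.

```python
import numpy as np

def lifetime_sparseness(responses):
    """Return <r>^2 / <r^2> across stimuli for one cell.

    The value is 1 when the cell responds equally to all n stimuli and 1/n
    when it responds to only one of them. Assumes at least one nonzero response.
    """
    r = np.asarray(responses, dtype=float)
    return r.mean() ** 2 / np.mean(r ** 2)

# Two hypothetical granule cells with the same mean response across four odors.
selective = np.array([1.0, 0.0, 0.0, 0.0])
broad = np.array([0.25, 0.25, 0.25, 0.25])

print(lifetime_sparseness(selective))   # responds to one odor only
print(lifetime_sparseness(broad))       # responds equally to all odors
```

Because the two cells have the same mean response, the difference in this measure comes entirely from the mean squared response, which is the sense in which a fixed mean firing rate ties the measure to response selectivity.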

We examined the effects of the two factors, 1/t and 1/ρj, on learning. When the learning rate was kept constant throughout learning, learning was slower, even when the learning rate was finely tuned (gray lines vs. orange line in Fig. 6a). This makes sense from a Bayesian perspective: early on, when weight uncertainty is large, learning should be fast (the dark gray line, which has the highest learning rate, drops rapidly), whereas after a large number of trials, when weight uncertainty is low, learning should be slow (the lighter gray lines, which have lower learning rates, have better asymptotic performance). It is also consistent with the fine tuning required for the sparse coding model in Fig. 4a and b. When we fixed 1/ρj but included the global factor 1/t, performance was better than the model with fixed learning rate (light-green vs. gray in Fig. 6a), yet still worse than the original fully adaptive model (light-green vs. orange in Fig. 6a). This was clearer under a less sparse setting (co = 0.07 in Fig. 6b, versus co = 0.03 in Fig. 6a). Furthermore, as predicted, we found that the cell-specific learning rate is positively correlated with the lifetime sparseness at each time point (i.e. at fixed t), as shown in Fig. 6c and d. This correlation becomes weaker as the prior becomes more sparse (compare Fig. 6c and d, for which co = 0.03 and 0.07, respectively). That is because a very sparse prior (low co) helps the granule cells to be highly selective at an early stage, enabling the lifetime sparseness to quickly converge to a small value (vertical cluster on the left edge of Fig. 6c and d). These results indicate that the global and postsynaptic-neuron-specific adaptation of the learning rate cooperatively help fast learning.

Fig. 6. Adaptive synaptic plasticity.

a Weight error when the full learning rate is fixed (gray lines), when only 1/ρj is fixed (light green), and for the fully adaptive model (orange). For the gray lines we used learning rates of 0.01, 0.1, and 1.0, corresponding to light gray to dark gray. The sparsity, co, was 0.03. b Same as panel a, but with a lower sparsity, co = 0.07. c, d Correlations between the lifetime sparseness and the learning rate, after 300 stimuli were presented to the network, under more sparse (c: co = 0.03) and less sparse (d: co = 0.07) conditions. Lines are linear regressions, and each dot represents one granule cell. Correlations were significant for both c and d (p ≪ 10−6). Vertical clusters appearing on the left edges of the panels correspond to neurons with very small lifetime sparseness. In all panels, M = 100 odors, N = 400 glomeruli, and 3 (a, c) or 7 (b, d) odors were presented on average. Light-green and orange lines in a and b are means over 50 simulations, while the rest were calculated from 10 simulations.
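The benefit of a globally decaying learning rate can be seen in a toy problem that is much simpler than the full model: estimating one fixed weight from noisy scalar evidence with an error-driven update. This sketch illustrates only the 1/t factor, not the cell-specific factor. The noise level, the constant rate of 0.3, and the function name are assumptions for illustration.

```python
import numpy as np

def track_weight(samples, rate_fn):
    """Run w <- w + rate_fn(t) * (sample - w) and return the whole trajectory."""
    w, path = 0.0, []
    for t, y in enumerate(samples, start=1):
        w += rate_fn(t) * (y - w)
        path.append(w)
    return np.array(path)

rng = np.random.default_rng(0)
true_w = 1.0
samples = true_w + 0.5 * rng.standard_normal(2000)    # noisy evidence about one weight

decaying = track_weight(samples, lambda t: 1.0 / t)    # global 1/t factor
constant = track_weight(samples, lambda t: 0.3)        # fixed learning rate

late = slice(1000, None)                               # second half of training
mse_decaying = np.mean((decaying[late] - true_w) ** 2)
mse_constant = np.mean((constant[late] - true_w) ** 2)
print(mse_decaying, mse_constant)
```

With the 1/t rate the estimate is exactly the running average of the evidence, so its error keeps shrinking, whereas a constant rate keeps responding to noise and settles at a higher error floor.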

Learning concentration invariant representation and valence

Our results so far indicate that olfactory learning is well characterized as an approximate Bayesian learning process. Our circuit estimates odor concentration, which is important for locating an odor source32. However, the perceived concentration depends on factors such as the distance from the odor source, its size, and wind speed. Thus, odor concentration is not a reliable indicator of the amount of reward expected. Hence, acquisition of a concentration-invariant representation is highly useful for many olfactory-guided behaviors.

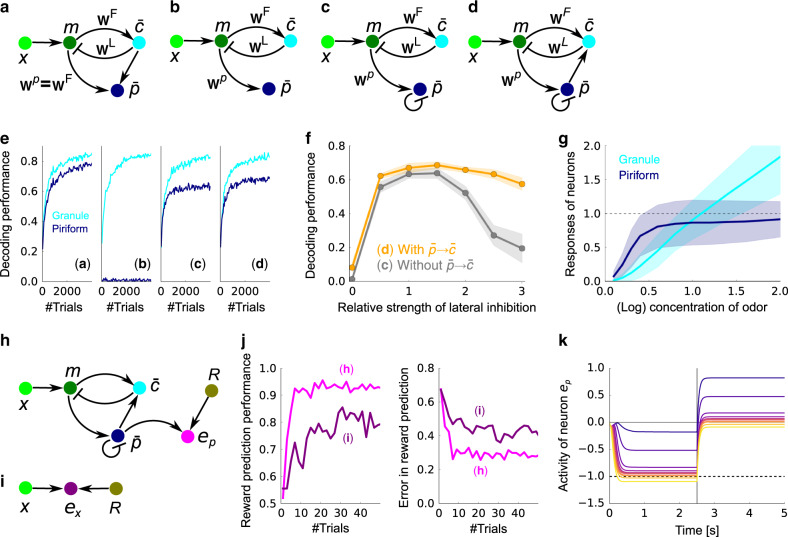

A concentration-invariant representation is essentially a representation of the probability of an odor being present. Because of the spike in our prior, this probability is easily decoded from M/T cells using the circuit depicted in Fig. 7a (see subsection “Learning of concentration-invariant representation” in the Methods section). Here, the probability could be represented by layer 2 neurons of piriform cortex, as that is the main downstream target of M/T cells, and odor representation in piriform cortex is approximately concentration-invariant21,33. As the granule cells acquire odor representations, neurons in piriform cortex acquire odor probability representations (cyan and dark blue lines in Fig. 7e left).

Fig. 7. Learning a concentration invariant representation and an odor-reward association.

a–d A set of increasingly realistic decoding models. a The decoding model associated with our variational Bayesian inference algorithm. Note that the weights need to be copied from wF to wp, something that is not biologically plausible. b Similar circuit, but with the mapping from m to the piriform neurons learned via a local rule. c Same as b, but with lateral inhibition. d Same as c, but with feedback to the granule cells. e Learning performance for the models in a–d when decoding from granule cells (cyan) or piriform cortex (dark blue; see subsection “Odor estimation performance” in the “Methods” section). f Comparison of performance for model c (gray) and d (orange). Mean and standard deviation over 10 simulations are plotted. g Mean and standard deviation of the responses of the granule cells and the piriform neurons to their selective odors presented at various concentrations. The responses were measured by presenting each odor in isolation at different concentrations, and then averaging over populations. h Schematic of the reward prediction circuit utilizing the concentration-invariant representation in the piriform cells. i Direct reward prediction from neural activity at glomeruli. j Performance of odor–reward association measured by the classification performance (left) and the mean-squared error between the predicted reward and the actual reward (right) for the models in panels h (magenta) and i (purple). Lines are means over 100 simulations. k The mean response of neuron ep given an odor associated with the reward. The vertical line at τ = 2.5 s represents the reward presentation, and the dotted horizontal line is the sign-flipped reward value (−R). Different colors represent the different concentrations of the presented odor, from purple (c ≈ 0.1) to yellow (c ≈ 2.0). In all panels, M = 50 odors, N = 200 glomeruli, and three odors were presented on average, except for the go/no go task where one of two selected odors was presented randomly.

While the circuit shown in Fig. 7a exhibits good performance, it is inconsistent with the mammalian olfactory system in two ways. First, the weights from the M/T cells to the granule cells have to be copied to the corresponding M/T to piriform cortex connections (i.e. wp = wF), something that is not biologically plausible. Second, a direct projection from granule cells to piriform cortex is needed, but such a connection does not exist. These inconsistencies can be circumvented by modifying the circuit heuristically (Fig. 7b–d). Weight copying can be avoided by learning wp with local synaptic plasticity (Fig. 7b), although in the absence of the teaching signal from the granule cells, this naive extension does not work (dark blue line in Fig. 7e middle-left). However, introducing lateral inhibition among the piriform neurons (Fig. 7c), as observed experimentally21, allows the piriform neurons to acquire odor representation (Fig. 7c and e middle-right), although the decoding performance is worse than that of the Bayesian model (Fig. 7e left vs. 7e middle-right). Finally, if connections from piriform cells to granule cells are added as well, the learning performance of granule cells becomes slightly better (Fig. 7d and e right), and more robust to changes in the strength of lateral inhibition (Fig. 7f). As expected, the responses of piriform neurons were mostly concentration-invariant (dark blue line in Fig. 7g), whereas granule cells showed a clear concentration dependence (cyan line in Fig. 7g). Thus, the architecture of the mammalian olfactory circuit indeed supports robust learning of a concentration-invariant representation.

Once the circuit acquires a concentration-invariant representation, a circuit that performs odor–reward association can be constructed simply by taking the circuit depicted in Fig. 7d and adding a region that receives input from both piriform neurons and the reward system (ep in Fig. 7h). Olfactory tubercle could be the site for this odor–reward association5,34, but it could be other regions, such as layer 3 of piriform cortex, as well. To test the performance of this circuit, we implemented a go/no go task in which one odor is associated with a reward (R = 1.0), while another odor is associated with no reward (R = 0.0), regardless of concentration. We simulated this task by randomly presenting the rewarded or the unrewarded stimulus with equal probability (see subsection “Go/no go task” in the Methods section). We used the circuit pre-trained with a large number of odors but without reward. When the reward prediction was learned with the projection from piriform cells, , to olfactory tubercle cells, ep (Fig. 7h), classification performance reached 90% after just six trials (Fig. 7j; magenta lines). On the other hand, when the circuit learned the task directly from the glomeruli (Fig. 7i), though the circuit still learned to predict the reward as suggested previously35, learning was much slower and the performance was worse even after a large amount of training (Fig. 7j; purple lines). After a dozen odor–reward associations from piriform neurons, , olfactory tubercle cell activity, ep, learned to represent the reward prediction given olfactory stimuli unless the concentration is very small (left half of Fig. 7k; in our model, −ep is the reward prediction), and once the reward is presented at τ = 2.5 s, the activity went back to near zero (right half of Fig. 7k; in our model, positive ep represents an error, and so drives learning).

These results indicate that unsupervised learning of odor representation may underlie fast reward-based learning, and that the proposed Bayesian learning mechanism improves reward association by enabling robust odor representation in a data-efficient way.

Discussion

We formulated unsupervised olfactory learning in the mammalian olfactory system as a Bayesian optimization problem, then derived a set of local synaptic plasticity rules and neural dynamics that implemented Bayesian inference (Figs. 2 and 3). Our theory provides a normative explanation of the functional roles for the nonlinear transfer function and the developmental adaptation of the neuronal input gain (Fig. 5), both widely observed among sensory neurons. The model also predicts that the learning rate of dendro-dendritic connections should be approximately linear in the lifetime sparseness of the corresponding granule cells (Fig. 6). Finally, we extended the framework to learning of odor identity by piriform cortex, and showed that such learning supports rapid reward association (Fig. 7).

Our results suggest that adaptation of both input gain (Fig. 5) and learning rate (Fig. 6) are important for successful learning. The developmental reduction in input gain can be explained by a decrease in neural excitability, which is partially caused by the increased expression of K+ channels14. Correspondingly, it is known that changes in channel expression at the dendrite modulate the sensitivity of synaptic plasticity36. In particular, it has been reported that elimination of voltage-gated K+ channels enhances the induction of long-term potentiation37. These results suggest that developmental up-regulation of K+ channel expression at the soma and the dendrite may underlie the adaptation of the input gain and learning rate.

The cellular plasticity rules we derived explain multiple developmental changes in adult-born granule cells. Experimentally, relative to young cells, mature granule cells have sparser selectivity20, lower membrane resistance17,18, and are less plastic18, as predicted by our model. In addition, our results provide insight into the functional role of adult neurogenesis. As shown previously8, if each synapse keeps track of its uncertainty, by removing the most uncertain synapses while adding synapses at a random position on the dendritic tree, a neuron can achieve sample-based Bayesian learning, making neurogenesis unnecessary. However, in our unsupervised learning framework, uncertainty is defined at neurons, not at synapses. As a result, from a Bayesian perspective, there is no good way to perform synaptogenesis. Thus, the brain should instead remove the most uncertain neurons, while at the same time randomly adding new ones.

The importance of the feedback circuit between M/T cells and granule cells has been noted previously6,38, but plasticity mechanisms that generate this circuit have not been considered. Recently, several groups proposed learning algorithms for unsupervised olfactory learning using stochastic gradient descent11,12,39, as in the case of our sparse coding model. However, as we have seen (Fig. 4), these algorithms are very unlikely to be fast. In addition to the sparse coding model, our problem setting is closely related to independent component analysis (ICA)40. Indeed, by using sparseness as the measure of non-Gaussianity, unsupervised olfactory learning can be reformulated as an ICA problem11.

The spike-and-slab prior employed here is widely used in machine learning41, and has been applied to the sparse coding model of the early visual system42, and a normative analysis of nonlinear transfer functions has been carried out previously43. A contribution of this work is the establishment of a link between the spike-and-slab prior and nonlinear transfer function of a neuron.

Studies of adaptive learning rates date back many decades44,45; more recent studies have taken a Bayesian approach to adaptive learning in simplified single-neuron models7. In this study, we considered an unsupervised learning problem, and showed that the learning rate of excitatory feedforward connections should depend only on the postsynaptic activity, independent of the presynaptic activity. Moreover, our theory predicted a non-trivial relationship between the learning rate and the lifetime sparseness of the postsynaptic neuron (Fig. 6c and d).

Acceleration of reward-based learning by unsupervised learning (Fig. 7j) has been studied in the context of both semi-supervised learning and model-based reinforcement learning. In particular, the latter approach has been applied to rapid learning by animals, but these were limited to abstract models, not circuit-based implementations46. In the invertebrate literature, Bazhenov and colleagues (2013) studied the combination of unsupervised and reward-based learning in a computational model of the insect brain47, but plasticity was applied only to the output connections (corresponding in our model to in Fig. 7h). Interestingly, in the invertebrate brain, the connections corresponding to are mostly random and fixed48, so the acceleration shown in Fig. 7j is potentially unique to vertebrates.

While our approach gave us a model that is reasonably consistent with mammalian olfactory circuitry, it is not perfect. In particular, the architecture predicted by our approximate Bayesian algorithm does not match perfectly the architecture of the olfactory bulb, piriform cortex, and olfactory tubercle. We were able to make small modifications to our circuit so that it did match the biology, and still gave decent performance, but performance was about 10% worse than the circuit predicted purely by Bayesian inference (blue lines in Fig. 7e-left vs. 7e-right). This discrepancy between the predicted and observed architecture highlights a limitation of this approach, especially when applied to complex systems. In particular, it is difficult to include biological constraints, both because we do not know exactly what they are, and because there is no straightforward way to marry those constraints with a normative Bayesian approach. However, that is an important avenue for future work.

Methods

Stimulus configuration

On each trial, the response of the ith glomerulus is modeled as

$$x_i = \sum_{j=1}^{M} w_{ij} c_j + \sigma_x \xi_i \tag{6}$$

where cj is the concentration of odor j, and ξi is a zero-mean, unit-variance Gaussian random variable. The Gaussian assumption is justified because, although olfactory sensory neurons fire with approximately Poisson statistics, 1000–10,000 sensory neurons converge onto a single glomerulus22, where OSN activity is conveyed to M/T cells as stochastic currents. We take the affinities, or mixing weights, w, to be log-normal, followed by a normalization step

| 7a |

| 7b |

where, recall, M is the number of odors and N is the number of glomeruli. The factor multiplying is 1 on average, so the normalization step does not have a large effect on the weights. However, it forces ∑jwij to be strictly independent of i, which makes the learning process less noisy.

On each trial, odors cj (j = 1, 2, . . . , M) are generated from the spike-and-slab prior given as

| 8 |

where Θ(x) is a Heaviside function. We used α = 3 everywhere except Supplementary Fig. 1, where we used α = 1. Under this prior, each odor is independently presented with probability co, and its amplitude follows a Gamma distribution with unit mean (Fig. 1a left). Note that the amplitude, cj, reflects log-concentration rather than concentration24. To avoid the null stimulus, we resampled the odors if all of the cj were 0 on any particular trial.
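The stimulus generation described above can be sketched as follows. This is a minimal illustration rather than the simulation code used in the study: the log-normal parameters and the exact form of the normalization (here, rescaling every row of w to the mean row sum, so that ∑jwij is independent of i) are assumptions, and the function names are hypothetical.

```python
import random

def sample_odors(num_odors, p_present, alpha=3.0, rng=random):
    """Spike-and-slab draw: each odor is present with probability p_present,
    with a Gamma(alpha) amplitude of unit mean; null stimuli are resampled."""
    while True:
        c = [rng.gammavariate(alpha, 1.0 / alpha) if rng.random() < p_present else 0.0
             for _ in range(num_odors)]
        if any(cj > 0.0 for cj in c):
            return c

def sample_weights(num_glomeruli, num_odors, sigma_log=1.0, rng=random):
    """Log-normal affinities; sigma_log and the row rescaling are assumed forms."""
    w = [[rng.lognormvariate(0.0, sigma_log) for _ in range(num_odors)]
         for _ in range(num_glomeruli)]
    target = sum(sum(row) for row in w) / num_glomeruli  # mean row sum
    normalized = []
    for row in w:
        row_sum = sum(row)
        normalized.append([wij * target / row_sum for wij in row])
    return normalized

def glomerular_response(w, c, sigma_x=1.0, rng=random):
    """Linear mixture of odor concentrations plus Gaussian noise on each glomerulus."""
    return [sum(wij * cj for wij, cj in zip(row, c)) + sigma_x * rng.gauss(0.0, 1.0)
            for row in w]
```

With M = 50 and co = 3/M, a call such as `sample_odors(50, 3.0 / 50)` returns about three non-zero concentrations on average.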

Bayesian model

As discussed in the main text, we mainly focus on unsupervised learning, in which animals see only glomerular activity and must make sense of it. This is essentially a clustering problem: if the same pattern of glomerular activity occurs multiple times, the brain should recognize it as an odor. The activity patterns at the glomeruli are determined by the product of odorant concentrations in the inhaled air, and the affinities of the OSNs for those odorants. Thus, to recognize an odor, animals have to effectively learn the affinities of OSNs for each odor, and store them in the olfactory circuitry. As we will see, in our model they are stored as weights between M/T cells and granule cells. Once those weights are stored, if an odor co-occurs with a reward (or punishment), the valence of that odor can be determined. And indeed, we find that unsupervised learning enables rapid learning of odor–reward associations.

More formally, the goal of the olfactory system is to infer the odor at time t, ct, given all past presentations of odors, x1:t ≡ {x1, x2, . . . , xt}. Because the weights are not known, they must be integrated out

$$p(c_t \mid x_{1:t}) = \int dw \, p(c_t, w \mid x_{1:t}) \tag{9}$$

Using Bayes’ theorem, this can be written in a more intuitive form

$$p(c_t \mid x_{1:t}) \propto p_c(c_t) \int dw \, p(x_t \mid c_t, w)\, p(w \mid x_{1:t-1}) \tag{10}$$

where, recall, pc(ct) is the prior over odors. To derive this expression, we used two facts: given ct and w, xt does not depend on past observations, and ct does not depend on past observations. The first term on the right-hand side, p(xt∣ct, w), is the likelihood given the weights; but because we do not know the weights, we have to marginalize over them given past observations. The marginalization step is intractable, as we have to introduce past odors and then integrate them out. This leaves us with an integral over w (Eq. (10)) that cannot be performed analytically. And even if it could, the circuit would have to memorize all past stimuli, x1, x2, . . . , xt−1. We thus have to perform approximate inference. For that we make a variational approximation.
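For readers who want the intermediate step, the two conditional-independence facts used above can be applied to the joint posterior as follows. This is a sketch in our own notation; it additionally assumes that the odors on trial t are drawn independently of the weights.

```latex
\begin{aligned}
p(c_t, w \mid x_{1:t})
  &\propto p(x_t \mid c_t, w, x_{1:t-1})\; p(c_t, w \mid x_{1:t-1}) \\
  &= p(x_t \mid c_t, w)\; p_c(c_t)\; p(w \mid x_{1:t-1}).
\end{aligned}
```

Integrating both sides over w then leaves the posterior over c_t up to a normalization constant, with the weight posterior from the previous trial acting as the prior over w.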

Variational approximation

The integral in Eq. (10) becomes easier if the distributions factorize. We thus make the variational approximation

| 11 |

where, to avoid a proliferation of subscripts, we suppress the fact that c and are to be evaluated at trial t; in line with this, to simplify subsequent equations we replace xt with x; and, as is standard, we suppress the dependence of q on x1:t.

The variational distributions, and , are found by minimizing the KL-divergence with respect to the true distribution, with the KL-divergence given by

| 12 |

As is straightforward to show9, minimizing this quantity leads to the update rules

| 13a |

| 13b |

where ~ indicates equality up to a constant, \wij indicates an average with respect to the variational distribution over all variables except wij, and, similarly, \cj indicates an average with respect to the variational distribution over all variables except cj. In the first equation, we approximate p(w∣x1:t−1) with the variational distribution at the previous time step, , which makes the marginalization self-consistent. This approximation breaks down early in the learning process; nevertheless, in practice it works quite well. Using this approximation, we arrive at

| 14a |

| 14b |

In the next two subsections we derive explicit update rules by computing the averages in these expressions.

The variational odor distribution

To find the variational distribution over odors, we need to compute the average over that appears on the right-hand side of Eq. (14b). Using the fact that x follows a Gaussian distribution, we have

| 15 |

where the averages are with respect to the variational distribution. This is Gaussian, and it is straightforward to work out the mean and variance. Note that both depend on the first and second moments of the weights (which, as we will see below, determine the variational weight distribution) evaluated, importantly, at time t. However, synaptic plasticity is much slower than neural dynamics, so it is reasonable to update the weights on a slower timescale than concentration. Thus, when evaluating the mean and variance, we use the weight distribution on the previous time step. Using and to denote the mean and variance, and making this approximation, we have

| 16a |

| 16b |

where we made the definition

| 17 |

The distribution can now be written in a very compact form

| 18 |

As we will see below, to update the weights we just need the first and second moments of cj (see Eq. (27a)). And for the reward-based learning, we need the probability that cj is positive. These quantities are straightforward, if tedious, to compute, and are given as follows.

For the first moment,

| 19 |

where the average is with respect to the distribution in Eq. (18), Zj is the normalization constant

| 20 |

and αj and Ψ(αj) are defined by

| 21a |

| 21b |

with Φ the cumulative normal function

$$\Phi(\alpha) = \frac{1}{\sqrt{2\pi}} \int_{-\infty}^{\alpha} e^{-y^2/2}\, dy \tag{22}$$

Similarly, the second moment is given by

| 23 |

And finally, the probability that an odor is present is written

| 24 |
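As a small numerical aid, the cumulative normal function Φ that appears in these moments can be evaluated with the complementary error function. The sketch below also computes the mean of a Gaussian restricted to positive values, which is the kind of ratio of density to cumulative function that such moments involve. It is an illustration only: it does not include the Gamma slab of Eq. (8), and the function names are hypothetical.

```python
import math

def normal_cdf(a):
    """Standard normal cumulative distribution function, Phi(a)."""
    return 0.5 * math.erfc(-a / math.sqrt(2.0))

def normal_pdf(a):
    """Standard normal density."""
    return math.exp(-0.5 * a * a) / math.sqrt(2.0 * math.pi)

def truncated_normal_mean(mu, sigma):
    """Mean of N(mu, sigma^2) conditioned on c > 0 (flat non-negative prior)."""
    a = mu / sigma
    return mu + sigma * normal_pdf(a) / normal_cdf(a)
```

For strongly negative arguments both the density and Φ become very small, so in practice the ratio needs a guarded approximation in that regime.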

The variational weight distribution

To find the variational distribution over weights, we need to compute the average on the right-hand side of Eq. (14a). This is the same as Eq. (15), except that the average now excludes wij rather than cj,

| 25 |

where the averages are, as above, with respect to the variational distributions. This is a quadratic function of wij; thus, if we assume that is Gaussian, then is also Gaussian. Using and to denote the mean and variance at time t, respectively (the latter to anticipate the 1/t falloff of the variance expected under Bayesian filtering), Eq. (14a) becomes

| 26 |

As in Eq. (15), appears on the right-hand side of Eq. (26). However, very fast synaptic plasticity is required for solving this equation recursively for all the weights. We thus approximate the right-hand side by using the previous timestep, t−1, rather than the current one, t; an approximation that should be good when the weights change slowly. Doing that, we arrive at the update rules

| 27a |

| 27b |

where we used Eq. (17) to simplify the second expression. Note that the update rule for is local, as it depends only on variables indexed by i and j. The update rule for ρj is also local, and in fact depends only on variables indexed by j.

Finally, it is convenient to write the update rules for the mean and precision of the variational distribution over concentration, Eq. (16), in terms of and ρj,

| 28a |

| 28b |

As shown in Fig. 5c, the transfer function shifts to the right with learning. This seems counter-intuitive: because the weights become more certain with learning, it should take less input to the granule cells to produce activity; this suggests that the transfer functions should shift left, not right. However, an increase in certainty is not the only thing that changes with learning; the weights also become more diverse, capturing the diverse responses of glomeruli for each odor. The diversity increases the variance of the input to the granule cells, and so to ensure a sparse response with increasing diversity, the transfer functions need to shift to the right. In our model, increased diversity (the first term in Eq. (28b)) had a larger effect than increased certainty (the second term), resulting in a net rightward shift in the transfer functions.

Network model

The analysis in the previous sections revealed that under the variational approximation, the distributions of the odors and the weights are updated locally. Thus, we implement the update rules in a network model of the olfactory bulb. The update of the weight distribution, , depends on 〈cj〉 and , as shown in Eq. (27), while the update of the odor distribution, , depends on and ρj, as shown in Eq. (28). Ideally, all these parameters should be updated simultaneously. However, as mentioned above, updates to synaptic weights are typically much slower than the neural dynamics, so here we consider a two-step update. First, the relevant parameters of the variational odor distribution, 〈cj〉 and , are updated using the mean and precision of the weight distribution, and ρj, evaluated at t−1. Then, and ρj are updated using the first and second moments of the concentration, 〈cj〉 and , evaluated at time t.

Neural dynamics

Our goal is to write down a set of dynamical equations for 〈cj〉 and whose fixed points correspond to the values given in Eqs. (19) and (23), respectively. Examining these equations, we see that 〈cj〉 and depend on αj and λj; after a small amount of algebra (involving the insertion of Eq. (28a) into Eq. (21a)), αj may be written

| 29 |

To avoid clutter, we dropped the dependence on time, but the weights should be evaluated at time t−1 and all other variables at time t.

Because neither αj nor λj (the latter given in Eq. (28b)) depends on , we can write down coupled equations for 〈cj〉 and mi; the solution of those equations gives us the values of αj and λj, which in turn gives us, via Eq. (23), . Using, for notational ease, rather than 〈cj〉, the simplest such equations (derived from Eqs. (17) and (19)) are

| 30 |

| 31 |

where τr is the time constant of the firing rate dynamics, and the nonlinear transfer function, F, is given by the right-hand side of Eq. (19)

| 32 |

with αj given in Eq. (29) and λj in Eq. (28b). Note that we have replaced the average weights, , with two different weights, and . Ideally, we should have , but, for biological plausibility, we allow these reciprocal synapses to be learned independently. Note that when evaluating αj, Eq. (29), should be used. Although the expression for Fj seems complicated, the transfer functions are relatively smooth, and resemble experimentally observed ones (see Fig. 5).

As shown in Fig. 3b, this dynamical system resembles the neural dynamics of the olfactory bulb, under the assumption that mi and are the firing rates of M/T cells and the granule cells, respectively. With this assumption, is the connection from M/T cell i to granule cell j and is the connection from granule cell j to M/T cell i.

Finally, the second moment of the concentration is given, via Eq. (23), by

| 33 |
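To illustrate how rate dynamics of this kind settle within a trial, the following sketch integrates a reduced version with the forward Euler method. Several simplifications are assumptions made for illustration: the M/T cells are taken to carry the input minus the granule-cell feedback, the granule-cell transfer function is replaced by a simple rectified stand-in rather than F in Eq. (32), the same weights are used in both directions of the reciprocal synapse, and both populations start from zero. The time constant (50 ms) and trial duration (5000 ms) follow Table 1; the 1 ms step is hypothetical.

```python
def relu(v):
    """Rectification used as a stand-in for the granule-cell transfer function."""
    return v if v > 0.0 else 0.0

def run_dynamics(x, w, tau_r=50.0, dt=1.0, duration=5000.0):
    """Euler-integrate M/T rates m_i and granule rates c_j over one trial.

    x: glomerular input (length N); w[i][j]: weight between M/T cell i and
    granule cell j, shared by both directions (assumption).
    """
    n, k = len(w), len(w[0])
    m = [0.0] * n
    c = [0.0] * k
    norm = [sum(w[i][j] ** 2 for i in range(n)) for j in range(k)]
    for _ in range(int(duration / dt)):
        # M/T cells relax toward the input minus the granule-cell feedback.
        m_target = [x[i] - sum(w[i][j] * c[j] for j in range(k)) for i in range(n)]
        # Granule cells relax toward a rectified function of their M/T drive.
        c_target = [relu(c[j] + sum(w[i][j] * m[i] for i in range(n)) / norm[j])
                    for j in range(k)]
        m = [mi + dt / tau_r * (ti - mi) for mi, ti in zip(m, m_target)]
        c = [cj + dt / tau_r * (tj - cj) for cj, tj in zip(c, c_target)]
    return m, c
```

In this reduced form the fixed point is the non-negative least-squares explanation of x, so for noise-free input generated from known concentrations the granule-cell rates recover those concentrations and the M/T rates decay to zero.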

Synaptic plasticity

After trial t, the average feedforward weights, , and the average lateral weights, , are updated as in Eq. (27b)

| 34a |

| 34b |

| 34c |

We used the firing rates mi and at the end of the trial, after the neural dynamics has reached steady state. As the weight updates depend primarily on the product of mi and , the learning rules are essentially Hebbian. Note that if the initial conditions are the same (i.e., if ), then and will remain the same for all time. This is reasonable given that connections between M/T cells and granule cells are dendro-dendritic.

The variance of the weights, , consists of two components. The first, 1/t, represents the global hyperbolic decay in the learning rate due to accumulation of information. In our simulations, we started t from to suppress the influence of the initial samples; this is equivalent to using a trial-dependent discount factor instead of 1/t, where t is the actual trial count. The second, , represents the neuron-specific contribution to the precision, and is given, via Eqs. (27) and (23), by

| 35 |

where Gj, the second moment of the concentration, is given in Eq. (33).
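The structure of these updates can be sketched in code. The version below is one simplified reading of the description above, not a transcription of Eqs. (34) and (35), which may contain additional terms: the precision factor ρj is treated as a running average of the second moment Gj, the weight change is the Hebbian product of mi and the granule-cell rate scaled by a per-neuron learning rate 1/(tρj), and weights are lower-bounded by zero. Function names are hypothetical.

```python
def update_precision(rho_prev, second_moment, t):
    """Running average of the second moment, so that t * rho_j accumulates evidence."""
    return ((t - 1) * rho_prev + second_moment) / t

def hebbian_update(w, m, c_mean, rho, t):
    """Hebbian step with learning rate 1 / (t * rho_j); weights are kept non-negative."""
    return [[max(0.0, w[i][j] + m[i] * c_mean[j] / (t * rho[j]))
             for j in range(len(c_mean))]
            for i in range(len(m))]

# Trial counter started from an initial count of 100, as in Table 1.
t_initial = 100
w = [[0.5, 0.2], [0.1, 0.4]]
rho = [0.06, 0.06]
for trial in range(1, 4):
    t = t_initial + trial
    m, c_mean, g = [0.3, -0.1], [1.0, 0.0], [1.2, 0.01]  # placeholder activity
    w = hebbian_update(w, m, c_mean, rho, t)
    rho = [update_precision(r, gj, t) for r, gj in zip(rho, g)]
```

Under this reading, a granule cell that responds rarely keeps a small ρj and therefore a large learning rate, while a frequently active cell learns more slowly.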

Models with various priors on odor concentration

In our model setting, the prior over concentration, pc(c), enters via Eq. (14b), and affects the transfer functions F and G, given in Eqs. (32) and (33), respectively. Choosing different priors gives different transfer functions. Below we consider two common ones: non-negative, and non-negative with an exponential decay.

The first of these is actually an improper prior, pc(c) ∝ Θ(c). This results in gain functions of the form

| 36a |

| 36b |

where μj and λj are given in Eqs. (28a) and (28b), respectively.

Under the non-negative prior introduced above, all positive concentrations are equally likely. However, that is not the case in a typical environment. Far more realistic is to assume that large concentrations are exponentially unlikely, yielding a prior of the form . (The decay constant, co, was chosen so that the mean is equal to co, the same mean as in the true generative model.) For this prior, the functions F and G are

| 37a |

| 37b |

While this prior is suboptimal for olfactory learning, experimental results from visual cortex indicate that the transfer function there resembles the one in Eq. (37a)49 (black curve in Fig. 5a). Indeed, in early visual regions, where the prior is arguably more continuous10, this shifted rectified-linear transfer function might be more beneficial50.

Learning concentration invariant representations

Up to now we focused on the expected concentration, . However, in natural environments animals often care more about whether or not an odor exists in their vicinity than what its concentration is. From a Bayesian perspective, this means the animals should compute the probability that an odor is present, denoted . Using Eq. (24), can be estimated as the steady state of the following dynamics:

| 38 |

where Hj, which is approximately sigmoidal, is given, via Eq. (24), by

| 39 |

with αj given in Eq. (29), but with replaced by in that equation as before.

In principle, neurons receiving input, mi, from M/T cells, such as layer 2 piriform cortex neurons, can decode the odor probability, as shown in Fig. 7a and 7e-left. However, to calculate Hj given input from M/T cells, the neuron would need to know the weights, , as well as λj and (the latter because αj depends on ; see Eq. (29)). This is clearly unrealistic, because there is no known biological mechanism that enables copying weights. Moreover, because granule cells do not have output projections, except for the dendro-dendritic connections with M/T cells, piriform neurons cannot know directly. Nevertheless, piriform neurons can learn to decode the concentration-invariant representation, , as follows.

Let us use to denote the mean weight from M/T cells to the piriform neurons (see Fig. 7b–d). Assume for the moment that ; shortly we will write down a learning rule that achieves this (see Eq. (43)). This takes care of the weights, but we also need an approximation to . For that, we notice that if the estimation is unbiased, on average both and are equal to co. Thus, the simplest way to approximate with the information available to the jth piriform neuron is to use . Under this approximation, and using in place of , Eq. (38) becomes

| 40 |

where Hj is the same as Eq. (39), but with αj replaced by —the analog of αj, but with and lateral inhibition

| 41 |

where considering the analogy with Eq. (28b), is given by

| 42 |

As above, evolves with the weights set to their values updated at the end of previous trial. Once the neural dynamics reaches steady state, the weights are updated as in Eq. (34)

| 43a |

| 43b |

and the precision as in Eq. (27a)

| 44 |

Here Fj and Gj are the estimated first and second moments given in Eqs. (32) and (33), but calculated with in Eq. (41). In steady state, these two terms approximate and , respectively. In addition, to ensure sparse piriform cell firing51, we introduced Hebbian plasticity to the lateral weights Jjk,

| 45 |

while bounding Jjk > 0 and enforcing Jjj = 0. We initialized Jjk by Jjk = 0.02.

In Fig. 7e (panel d), 7f (orange line), 7g, and 7j–k, we modified the transfer function Fj of granule cells by replacing the prior term co with the input from piriform neuron . This means that is written as

| 46 |

where αj is still given by Eq. (29). We modulated the gain function Gj of granule cells, Eq. (33), in the same way, by replacing co with . In Fig. 7f, we changed the relative strength of lateral inhibition by replacing Jjk in Eq. (41) with κJJjk where κJ, the relative strength, ranged from 0 to 3, as shown in the x-axis of Fig. 7f, while using the original Jjk for the weight update.

Reward-based learning

Assuming that the reward amplitude depends only on the identity of the odors, not on their concentrations, the reward, R, on trial t is given by

| 47 |

where ζt is a zero mean, unit variance Gaussian random variable, and Θ(x) is a Heaviside function.

To estimate the reward, we augment the circuit in Fig. 7d by introducing a set of neurons, denoted ep, that receive input both from and the reward, R (see Fig. 7h). Using to denote those weights, the natural neural dynamics of ep is

| 48 |

To represent the delay in reward delivery, is zero for the first 2.5 s; after that it is set to the value of the reward,

| 49 |

Note that for the first 2.5 s of the trial, -ep carries a prediction of the upcoming reward from the olfactory input, x. Once the reward is provided, the neuron represents the difference between the expected reward and the actual reward. That difference can be used to drive learning, via Hebbian plasticity

| 50 |

where is updated only after the reward has been presented. Importantly, ep is evaluated after the reward presentation.

Similarly, for the direct readout from x depicted in Fig. 7i, the reward is predicted by

| 51 |

with hi again updated via Hebbian plasticity,

| 52 |

after the reward has been presented.
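The logic of this error-driven readout can be illustrated with a toy simulation. The sketch below makes several assumptions that are not part of the model above: the piriform representation is idealized as a one-hot, concentration-invariant code for the two task odors, ep is evaluated at its steady state (reward input minus the weighted piriform drive) instead of being integrated in time, and the function names are hypothetical. The learning rate and reward noise follow the values quoted in the Methods.

```python
import random

def error_unit(reward_input, a, p):
    """Steady-state e_p: reward input minus the weighted piriform drive."""
    return reward_input - sum(aj * pj for aj, pj in zip(a, p))

def run_go_no_go(num_trials=100, eta_a=0.5, noise_var=0.01, seed=0):
    """Learn reward weights a_j for a rewarded and an unrewarded odor."""
    rng = random.Random(seed)
    patterns = {"go": [1.0, 0.0], "no_go": [0.0, 1.0]}  # idealized piriform code
    mean_reward = {"go": 1.0, "no_go": 0.0}
    a = [0.0, 0.0]
    history = []
    for _ in range(num_trials):
        odor = rng.choice(["go", "no_go"])
        p = patterns[odor]
        reward = mean_reward[odor] + rng.gauss(0.0, noise_var ** 0.5)
        prediction = -error_unit(0.0, a, p)  # before reward delivery, -e_p predicts R
        error = error_unit(reward, a, p)     # after delivery, e_p is the prediction error
        a = [aj + eta_a * error * pj for aj, pj in zip(a, p)]
        history.append((reward, prediction))
    return a, history
```

Because the idealized representation is the same at every concentration, each weight simply tracks the recent rewards of its own odor, which is why a handful of trials suffices in this toy setting.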

Sparse coding

The sparse coding model originally proposed by Olshausen and colleagues10,52 can be applied to the model of olfactory learning as shown below. The basic idea is that the odor, denoted , and the weight matrix, denoted , that best explains the input, x, should be close to the real c and w. This means and can be estimated by performing stochastic gradient descent on the likelihood of the inputs, x.

However, this is sub-optimal, primarily because uncertainty in and are ignored, even though they are important for data efficient learning45. In addition, for tractability, the prior over the odors is taken to be a continuous function, making it difficult to capture the fact that at any given time most odors are absent. These constraints make the learning algorithm inefficient.

The log likelihood of the data with respect to an unknown set of weights, denoted , is given by

| 53 |

In the second line, the integral was approximated with the maximum a posteriori estimate . The objective function is thus given by

| 54 |

Because the noise on xt is Gaussian (see Eq. (6)), the first term is a simple quadratic function. However, the second term, , requires further approximation to remove the delta function, and thus ensure differentiability of Et with respect to . To this end, we approximated the prior with a Gamma distribution: , for which the mean is kcθc. We used kc = 3 and θc = co/3, ensuring a mean of co. Under this approximation, the objective function, Et, becomes

| 55 |

We maximize the objective function via stochastic gradient descent, which occurs in two steps. In the first step, we maximize Et with respect to ,

| 56 |

where is the analog of Eq. (17),

| 57 |

Once has converged, we update the weights via

| 58 |

To prevent divergence of the weights, after each timestep we apply L2 normalization (see Eq. (60b) below).

In summary, on each trial, t, first, the are updated,

| 59 |

where the time step τ runs from 0 to 100,000 in each trial. At the end of trial t, the weights are then updated by

| 60a |

| 60b |

The learning rates, ηc and ηw, were manually tuned. We used ηc = 0.00001 and ηw = 0.5 unless stated otherwise.
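The inference step of this procedure can be sketched as gradient ascent on a log posterior with a Gaussian likelihood and a Gamma prior (shape kc, scale θc). The sketch is an illustration under stated assumptions: the step size and number of steps are chosen for a tiny example rather than taken from the values above, the small positive floor on the concentrations is added for numerical safety, and the weight update and its normalization are omitted. Function names are hypothetical.

```python
import math

def log_posterior(c, x, w, sigma2=1.0, k_c=3.0, theta_c=0.02):
    """Gaussian log likelihood plus Gamma log prior, up to additive constants."""
    resid = [x[i] - sum(w[i][j] * c[j] for j in range(len(c))) for i in range(len(x))]
    log_lik = -sum(r * r for r in resid) / (2.0 * sigma2)
    log_prior = sum((k_c - 1.0) * math.log(cj) - cj / theta_c for cj in c)
    return log_lik + log_prior

def map_concentrations(x, w, c_init, eta_c=1e-4, steps=5000,
                       sigma2=1.0, k_c=3.0, theta_c=0.02, floor=1e-6):
    """Gradient ascent on the log posterior with respect to the concentrations."""
    c = list(c_init)
    n, k = len(x), len(c)
    for _ in range(steps):
        resid = [x[i] - sum(w[i][j] * c[j] for j in range(k)) for i in range(n)]
        grad = [sum(w[i][j] * resid[i] for i in range(n)) / sigma2
                + (k_c - 1.0) / c[j] - 1.0 / theta_c
                for j in range(k)]
        c = [max(floor, cj + eta_c * g) for cj, g in zip(c, grad)]
    return c
```

With θc = co/3 and a small co, the prior term dominates for weak inputs and pulls every estimate toward a small positive value rather than exactly zero, which illustrates why a continuous prior struggles to represent absent odors.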

Simulation details

The parameters used in the simulations are given in Table 1. Additional details of the simulations, from the implementation of the neural dynamics to the setup of the go/no go task, are provided below.

Table 1.

Definitions and values of the parameters.

| Symbol | Definition | Value |
|---|---|---|
| M | The total number of odors presented and granule cell population | 100 (Figs. 3e, f, 4–6), 50 (Figs. 3c, d, 7) |
| N | The total number of glomeruli | 400 (Figs. 3–6), 200 (Fig. 7) |
| co | The probability of an odor being present | 3/M, except Fig. 6b, d |
| σx | The variance of noise on the glomeruli activity | 1.0 |
| ση | The variance of the noise in the reward | 0.01 |
| msp | The spontaneous firing rate of M/T cells | 5 Hz |
| τr | The timescale of firing rate dynamics | 50 ms |
| | The duration of each trial | 5000 ms, except for Fig. 3f |
| | The initial count for the global learning rate, 1/t | 100 trials |

Implementation of neural dynamics

The M/T cell activity, mi, was defined relative to a baseline, denoted msp; in Fig. 3c, we plotted . On each trial, mi was initialized to zero and to co: mi(τ = 0) = 0 (i.e., ) and . In addition, the firing rates were lower-bounded by mi ≥ −msp and .

To avoid numerical instability, Ψ(α) in Eq. (21b) was approximated as

| 61 |

Implementation of synaptic plasticity

Both the feedforward and lateral weights were initially sampled from a log-normal distribution

| 62 |

with the variance and mean parameters set to

| 63a |

| 63b |

The precision factors, ρj, were initialized as

| 64 |

We used Zρ = 0.5, except in Fig. 6b and d, where we used Zρ = 0.3. The weights were lower-bounded by zero. As mentioned above, in the simulations we started t from to suppress the influence of the initial samples. Recurrent inhibition, J, was initialized to Jjk = 0.02 × (1−δjk).

Learning with a fixed gain function

In Fig. 5d, we fixed all λj at 200 (gray) and 342 (black), while the were updated at each trial as in Eq. (35).

Learning with a fixed learning rate

Fixing the learning rate, , to a constant, denoted η, the learning rules for and are rewritten as

| 65 |

and λj is given by

| 66 |

Go/no go task

In the simulation of the go/no go task, we selected two odors (j+ and j−) out of M total odors, then randomly presented one or the other with concentrations drawn from a Gamma distribution (as in Eq. (8), but cj > 0 and co = 1). The reward associated with j+ was R = 1.0 + ζ (i.e. ), where ζ is the noise in the observed reward sampled from a zero-mean Gaussian with variance 0.01. The reward associated with j− was R = ζ (i.e. ).

Learning of the circuit shown in Fig. 7h was done in two steps. First, the weights, and , and the precisions, ρj and , were learned with the unsupervised learning rules. During this unsupervised period, the reward, R, was kept at zero. After 4000 trials of unsupervised learning, we fixed , ρj, and , then trained the weights using Eq. (50).

The reward weights for the circuits in both Fig. 7h and i, and hj, respectively, were initialized to zero, and the learning rates were manually tuned to the largest stable rates (ηa = 0.5 and ηh = 0.0015). The latter learning rate was smaller because ∥x∥ is typically much larger than , and also because the update of the hj was more susceptible to instability.

The classification performance was measured by the probability that the predicted and actual reward were both above 0.5 or both below 0.5,

| 67 |

where is the value of ep right before the reward delivery (). Note that, as mentioned above, should converge to -Rt. Thus, the average error was defined to be

| 68 |
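The classification measure described above, in which a trial counts as correct when the predicted and actual rewards fall on the same side of 0.5, can be sketched as below. The function name is illustrative, and the treatment of values exactly equal to the threshold (counted as below) is an assumption, since the text does not specify it. The average error of Eq. (68) is not included.

```python
def classification_performance(predicted, actual, threshold=0.5):
    """Fraction of trials where prediction and reward lie on the same side of threshold."""
    if len(predicted) != len(actual) or not predicted:
        raise ValueError("inputs must be non-empty and of equal length")
    agree = sum((p > threshold) == (r > threshold)
                for p, r in zip(predicted, actual))
    return agree / len(predicted)


# Two of the four trials agree in this toy example.
score = classification_performance([0.9, 0.1, 0.6, 0.2], [1.05, -0.03, 0.1, 0.9])
```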

Performance evaluation

The following sections summarize the performance evaluation methods used in this study.

Selectivity of granule cells

Because the network is trained with an unsupervised learning rule, we cannot know which neuron encodes which odor. We thus estimated the selectivity of a neuron from the incoming synaptic weights using a bootstrap method. Specifically, on each trial, the odor o(j) encoded by granule cell j is determined by choosing the odor that yields the maximum covariance between the estimated weights, wF, and the true mixing weight, w,

| 69 |

The selectivity can also be estimated from the activity of a neuron directly, by assuming that the granule cell with the highest activity to odor j codes for odor j. Essentially the same result holds when we take this approach, although accurate readout of selectivity requires a large number of trials. After learning, most neurons learn to encode one odor stably.
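The weight-based selectivity estimate can be illustrated with the sketch below, which assigns to each granule cell the odor whose true mixing weights have the largest covariance with the cell's learned feedforward weights. The function names and the index convention (rows index input channels, columns index granule cells or odors) are assumptions made for this example.

```python
def covariance(x, y):
    """Population covariance between two equal-length sequences."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    return sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y)) / n


def column(matrix, index):
    """Extract one column from a list-of-rows matrix."""
    return [row[index] for row in matrix]


def estimate_selectivity(w_learned, w_true):
    """Return o, where o[j] is the odor best matching granule cell j.

    w_learned[i][j]: learned weight from input i to granule cell j (assumed layout).
    w_true[i][m]:    true mixing weight from odor m to input i (assumed layout).
    """
    n_cells = len(w_learned[0])
    n_odors = len(w_true[0])
    selectivity = []
    for j in range(n_cells):
        learned = column(w_learned, j)
        scores = [covariance(learned, column(w_true, m)) for m in range(n_odors)]
        selectivity.append(max(range(n_odors), key=lambda m: scores[m]))
    return selectivity


w_true = [[1.0, 0.1], [0.8, 0.2], [0.1, 0.9], [0.2, 1.0]]
w_learned = [[0.1, 1.0], [0.2, 0.8], [0.9, 0.1], [1.0, 0.2]]
o = estimate_selectivity(w_learned, w_true)
```

In this toy case the learned columns are the true columns in swapped order, so cell 0 is assigned odor 1 and cell 1 is assigned odor 0.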

Odor estimation performance

Given the odor selectivity, o(j), the original odors can be reconstructed by

| 70 |

The denominator is the number of neurons that encode odor j, which converges to one after successful learning. If both the denominator and the numerator were zero, we set to 0. Performance was defined to be the correlation between the estimated odor concentration, , and the true concentration, cj. Evaluation of performance on trial t used o(j) calculated from wF,t−1, not from wF,t. In Fig. 7e and f, we instead calculated the correlation between and the true value of Θ[cj] using the same method, where

| 71 |

using the piriform neuron selectivity op(j).
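A minimal sketch of the reconstruction step and the correlation-based performance measure is given below. It assumes that the numerator of Eq. (70) is the summed activity of the neurons assigned to each odor, which is consistent with the description of the denominator but is not confirmed by the equation itself. The function names, and the choice to return zero correlation when either input is constant, are also assumptions of this example.

```python
import math


def reconstruct_odors(activity, selectivity, n_odors):
    """Average the activity of all neurons assigned to each odor.

    Odors with no assigned neuron get an estimate of 0, as stated in the text.
    """
    totals = [0.0] * n_odors
    counts = [0] * n_odors
    for a, odor in zip(activity, selectivity):
        totals[odor] += a
        counts[odor] += 1
    return [totals[m] / counts[m] if counts[m] > 0 else 0.0
            for m in range(n_odors)]


def pearson(x, y):
    """Pearson correlation; returns 0.0 if either input has zero variance (a convention)."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0.0 or var_y == 0.0:
        return 0.0
    return cov / math.sqrt(var_x * var_y)


# Neurons 0 and 1 encode odor 0, neuron 2 encodes odor 2, no neuron encodes odor 1.
c_hat = reconstruct_odors([0.2, 0.4, 1.0], [0, 0, 2], n_odors=3)
performance = pearson(c_hat, [0.25, 0.0, 1.1])
```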

ROC curve

We calculated the generalized ROC curves as in Fig. 7 of Grabska-Barwińska et al. (2017)6 using . We first separated the trials based on the total number of odors presented, and then for each trial we calculated the number of true/false positives under various thresholds θth. The true positive fraction is the fraction of presented odors above the threshold, θth, whereas the false positive count is the number of absent odors above the threshold, θth. The threshold, θth, was varied from 10^−6 to 10^1 on a log scale, with an increase of ~20% at every step.
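The threshold sweep can be sketched as follows. This is an illustrative example, not the analysis code: the function names are hypothetical, the step factor of exactly 1.2 stands in for the approximately 20% increase mentioned above, and returning a true positive fraction of 0 when no odor is present is a convention chosen here.

```python
def log_thresholds(lowest=1e-6, highest=10.0, factor=1.2):
    """Geometric sequence of thresholds from lowest up to at most highest."""
    thresholds = []
    theta = lowest
    while theta <= highest:
        thresholds.append(theta)
        theta *= factor
    return thresholds


def roc_point(estimates, present, theta):
    """Return (true positive fraction, false positive count) for one trial.

    estimates[m]: estimated quantity for odor m; present[m]: True if odor m was presented.
    """
    n_present = sum(1 for p in present if p)
    true_pos = sum(1 for e, p in zip(estimates, present) if p and e > theta)
    false_pos = sum(1 for e, p in zip(estimates, present) if not p and e > theta)
    tp_fraction = true_pos / n_present if n_present else 0.0
    return tp_fraction, false_pos


thresholds = log_thresholds()
curve = [roc_point([0.9, 0.01, 0.5, 0.0], [True, False, False, True], th)
         for th in thresholds]
```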

Weight error

Given o(j), the error between the learned feedforward weight, , and the true mixing weight, wij, was calculated by

| 72 |

where . For ease of comparison, in Fig. 6b the weight errors were scaled by 7/3, so that the initial error was similar to the errors shown in Fig. 6a.

Lifetime sparseness

For the measurement of the lifetime sparseness19, we first presented individual odors m = 1, 2, . . . , M, then recorded the activity of granule cells . Subsequently, we calculated the sparseness using

| 73 |

The lifetime sparseness, Sj, takes a small value (Sj ≃ 0) if the activity is sparse, while Sj ≲ 1 is satisfied if the activity is uniform/homogeneous. Because of this, the lifetime sparseness is sometimes defined as 53.
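For illustration only, the sketch below computes an activity-ratio form of sparseness, (Σm rm)² / (M Σm rm²), which matches the limiting behavior described above: it is close to 0 (equal to 1/M) when a cell responds to a single odor and equals 1 when the responses are uniform. Whether this is the exact expression used in Eq. (73) is an assumption; the function name and the value returned for a silent cell are likewise choices made for this example.

```python
def lifetime_sparseness(responses):
    """Activity-ratio sparseness of one cell's responses to M individual odors.

    Assumed form: (sum r)^2 / (M * sum r^2); not confirmed to be Eq. (73).
    """
    m = len(responses)
    sum_sq = sum(r * r for r in responses)
    if sum_sq == 0.0:
        return 0.0  # silent cell: convention adopted in this sketch
    return sum(responses) ** 2 / (m * sum_sq)


s_uniform = lifetime_sparseness([1.0, 1.0, 1.0, 1.0])    # uniform responses
s_selective = lifetime_sparseness([0.0, 0.0, 0.0, 5.0])  # single-odor response
```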

Reporting summary

Further information on research design is available in the Nature Research Reporting Summary linked to this article.

Acknowledgements

This work was supported by the Gatsby Charitable Foundation and the Wellcome Trust (110114/Z/15/Z).

Author contributions

NH and PEL designed the research; NH performed the research; NH analyzed the data; and NH and PEL wrote the paper.

Data availability

The main source code for the simulations and the data analysis, from which our simulation data were generated, is publicly available at https://github.com/nhiratani/olfactory_learning. The rest is available from the corresponding author.

Code availability

The main code for the simulations and data analysis is publicly available, as mentioned above, at http://github.com/nhiratani/olfactory_learning.

Competing interests

The authors declare no competing interests.

Footnotes

Peer review information: Nature Communications thanks Brent Doiron, Matthew Smear and the other, anonymous, reviewer(s) for their contribution to the peer review of this work. Peer reviewer reports are available.

Publisher’s note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Supplementary information is available for this paper at 10.1038/s41467-020-17490-0.

References

- 1. Li Q, Liberles SD. Aversion and attraction through olfaction. Curr. Biol. 2015;25:R120–R129. doi: 10.1016/j.cub.2014.11.044.

- 2. Ishii KK, et al. A labeled-line neural circuit for pheromone-mediated sexual behaviors in mice. Neuron. 2017;95:123–137. doi: 10.1016/j.neuron.2017.05.038.

- 3. Staubli U, Fraser D, Faraday R, Lynch G. Olfaction and the "data" memory system in rats. Behav. Neurosci. 1987;101:757. doi: 10.1037//0735-7044.101.6.757.

- 4. Linster C, Johnson BA, Morse A, Yue E, Leon M. Spontaneous versus reinforced olfactory discriminations. J. Neurosci. 2002;22:6842–6845. doi: 10.1523/JNEUROSCI.22-16-06842.2002.

- 5. Millman DJ, Murthy VN. Rapid learning of odor–value association in the olfactory striatum. J. Neurosci. 2020;40:4335–4347. doi: 10.1523/JNEUROSCI.2604-19.2020.

- 6. Grabska-Barwińska A, et al. A probabilistic approach to demixing odors. Nat. Neurosci. 2017;20:98. doi: 10.1038/nn.4444.

- 7. Aitchison, L., Pouget, A. & Latham, P. E. Probabilistic synapses. Preprint at https://arxiv.org/abs/1410.1029 (2017).

- 8. Hiratani N, Fukai T. Redundancy in synaptic connections enables neurons to learn optimally. Proc. Natl Acad. Sci. USA. 2018;115:E6871–E6879. doi: 10.1073/pnas.1803274115.

- 9. Beal, M. J. Variational Algorithms for Approximate Bayesian Inference (University of London, London, 2003).

- 10. Olshausen BA, Field DJ. Sparse coding with an overcomplete basis set: a strategy employed by V1? Vis. Res. 1997;37:3311–3325. doi: 10.1016/s0042-6989(97)00169-7.

- 11. Tootoonian S, Lengyel M. A dual algorithm for olfactory computation in the locust brain. Adv. Neural Inf. Process. Syst. 2014;27:2276–2284.

- 12. Kepple, D. et al. Computational algorithms and neural circuitry for compressed sensing in the mammalian main olfactory bulb. Preprint at 10.1101/339689 (2018).

- 13. Nair, V. & Hinton, G. E. Rectified linear units improve restricted Boltzmann machines. In Proceedings of the 27th international conference on machine learning (ICML-10), 807–814 (ACM, 2010).

- 14. Oswald A-MM, Reyes AD. Maturation of intrinsic and synaptic properties of layer 2/3 pyramidal neurons in mouse auditory cortex. J. Neurophysiol. 2008;99:2998–3008. doi: 10.1152/jn.01160.2007.

- 15. Poirazi P, Brannon T, Mel BW. Pyramidal neuron as two-layer neural network. Neuron. 2003;37:989–999. doi: 10.1016/s0896-6273(03)00149-1.

- 16. Zhang Z-W. Maturation of layer V pyramidal neurons in the rat prefrontal cortex: intrinsic properties and synaptic function. J. Neurophysiol. 2004;91:1171–1182. doi: 10.1152/jn.00855.2003.

- 17. Carleton A, Petreanu LT, Lansford R, Alvarez-Buylla A, Lledo P-M. Becoming a new neuron in the adult olfactory bulb. Nat. Neurosci. 2003;6:507. doi: 10.1038/nn1048.

- 18. Nissant A, Bardy C, Katagiri H, Murray K, Lledo P-M. Adult neurogenesis promotes synaptic plasticity in the olfactory bulb. Nat. Neurosci. 2009;12:728. doi: 10.1038/nn.2298.

- 19. Willmore B, Tolhurst DJ. Characterizing the sparseness of neural codes. Network. 2001;12:255–270.

- 20. Wallace JL, Wienisch M, Murthy VN. Development and refinement of functional properties of adult-born neurons. Neuron. 2017;96:883–896. doi: 10.1016/j.neuron.2017.09.039.

- 21. Bolding KA, Franks KM. Recurrent cortical circuits implement concentration-invariant odor coding. Science. 2018;361:6407. doi: 10.1126/science.aat6904.

- 22. Wilson RI, Mainen ZF. Early events in olfactory processing. Annu. Rev. Neurosci. 2006;29:163–201. doi: 10.1146/annurev.neuro.29.051605.112950.

- 23. O’Connell RJ, Mozell MM. Quantitative stimulation of frog olfactory receptors. J. Neurophysiol. 1969;32:51–63. doi: 10.1152/jn.1969.32.1.51.

- 24. Hopfield JJ. Odor space and olfactory processing: collective algorithms and neural implementation. Proc. Natl Acad. Sci. USA. 1999;96:12506–12511. doi: 10.1073/pnas.96.22.12506.

- 25. Shepherd GM, Chen WR, Willhite D, Migliore M, Greer CA. The olfactory granule cell: from classical enigma to central role in olfactory processing. Brain Res. Rev. 2007;55:373–382. doi: 10.1016/j.brainresrev.2007.03.005.

- 26. Gschwend O, et al. Neuronal pattern separation in the olfactory bulb improves odor discrimination learning. Nat. Neurosci. 2015;18:1474. doi: 10.1038/nn.4089.

- 27. Yamada Y, et al. Context-and output layer-dependent long-term ensemble plasticity in a sensory circuit. Neuron. 2017;93:1198–1212. doi: 10.1016/j.neuron.2017.02.006.

- 28. Tan J, Savigner A, Ma M, Luo M. Odor information processing by the olfactory bulb analyzed in gene-targeted mice. Neuron. 2010;65:912–926. doi: 10.1016/j.neuron.2010.02.011.

- 29. Shusterman R, Smear MC, Koulakov AA, Rinberg D. Precise olfactory responses tile the sniff cycle. Nat. Neurosci. 2011;14:1039. doi: 10.1038/nn.2877.

- 30. Smear M, Shusterman R, O’Connor R, Bozza T, Rinberg D. Perception of sniff phase in mouse olfaction. Nature. 2011;479:397–400. doi: 10.1038/nature10521.

- 31. Chance FS, Abbott LF, Reyes AD. Gain modulation from background synaptic input. Neuron. 2002;35:773–782. doi: 10.1016/s0896-6273(02)00820-6.

- 32. Baker KL, et al. Algorithms for olfactory search across species. J. Neurosci. 2018;38:9383–9389. doi: 10.1523/JNEUROSCI.1668-18.2018.

- 33. Roland B, Deneux T, Franks KM, Bathellier B, Fleischmann A. Odor identity coding by distributed ensembles of neurons in the mouse olfactory cortex. Elife. 2017;6:e26337. doi: 10.7554/eLife.26337.

- 34. Wesson DW, Wilson DA. Sniffing out the contributions of the olfactory tubercle to the sense of smell: hedonics, sensory integration, and more? Neurosci. Biobehav. Rev. 2011;35:655–668. doi: 10.1016/j.neubiorev.2010.08.004.

- 35. Mathis A, Rokni D, Kapoor V, Bethge M, Murthy VN. Reading out olfactory receptors: feedforward circuits detect odors in mixtures without demixing. Neuron. 2016;91:1110–1123. doi: 10.1016/j.neuron.2016.08.007.

- 36. Shah MM, Hammond RS, Hoffman DA. Dendritic ion channel trafficking and plasticity. Trends Neurosci. 2010;33:307–316. doi: 10.1016/j.tins.2010.03.002.

- 37. Chen X, et al. Deletion of Kv4.2 gene eliminates dendritic A-type K+ current and enhances induction of long-term potentiation in hippocampal CA1 pyramidal neurons. J. Neurosci. 2006;26:12143–12151. doi: 10.1523/JNEUROSCI.2667-06.2006.

- 38. Koulakov AA, Rinberg D. Sparse incomplete representations: a potential role of olfactory granule cells. Neuron. 2011;72:124–136. doi: 10.1016/j.neuron.2011.07.031.

- 39. Beck J, Pouget A, Heller KA. Complex inference in neural circuits with probabilistic population codes and topic models. Adv. Neural Inf. Process. Syst. 2012;25:3059–3067.

- 40. Hyvärinen A, Oja E. Independent component analysis: algorithms and applications. Neural Netw. 2000;13:411–430. doi: 10.1016/s0893-6080(00)00026-5.

- 41. Mitchell TJ, Beauchamp JJ. Bayesian variable selection in linear regression. J. Am. Stat. Assoc. 1988;83:1023–1032.

- 42. Garrigues P, Olshausen BA. Learning horizontal connections in a sparse coding model of natural images. Adv. Neural Inf. Process. Syst. 2008;20:505–512.

- 43. Triesch J. Synergies between intrinsic and synaptic plasticity in individual model neurons. Adv. Neural Inf. Process. Syst. 2005;17:1417–1424.

- 44. Amari S. A theory of adaptive pattern classifiers. IEEE Trans. Electron. Comput. 1967;3:299–307.

- 45. MacKay DJC. A practical Bayesian framework for backpropagation networks. Neural Comput. 1992;4:448–472.

- 46. Doll BB, Simon DA, Daw ND. The ubiquity of model-based reinforcement learning. Curr. Opin. Neurobiol. 2012;22:1075–1081. doi: 10.1016/j.conb.2012.08.003.