Abstract

In this paper we present a novel method for analyzing the relationship between functional brain networks and behavioral phenotypes. Drawing from topological data analysis, we first use persistent homology to extract topological features from functional brain networks derived from correlations in resting-state fMRI (rs-fMRI). Rather than fixing a discrete network topology by thresholding the connectivity matrix, these topological features capture the network organization across all threshold values. We then propose to use kernel partial least squares (kPLS) regression to statistically quantify the relationship between these topological features and behavioral measures. kPLS also provides an elegant way to combine multiple image features by using linear combinations of multiple kernels. In our experiments we test the ability of our proposed brain network analysis to predict autism severity from rs-fMRI. We show that combining correlations with topological features gives better prediction of autism severity than using correlations alone.

Keywords: Brain networks, topological data analysis, kernel regression, autism

1. INTRODUCTION

Understanding the communication network between functional regions of the brain is a vital goal towards uncovering the biological mechanisms behind several diseases and neuropsychiatric disorders. This is particularly important in autism spectrum disorder (ASD), which converging evidence has shown to be characterized by abnormal functional and structural connectivity. The development of imaging biomarkers that correlate with specific behavioral symptoms would be beneficial for early diagnosis or for tracking treatment efficacy. Achieving this requires new methods for extracting relevant network representations from neuroimaging, as well as statistical models for regressing this high-dimensional, non-Euclidean information against behavioral measures.

Analysis of brain networks begins with a matrix of pairwise associations between brain regions, e.g., correlations between time series in resting-state functional MRI (rs-fMRI). Such an association matrix is often used directly as the representation of the network in a regression analysis, hypothesis test, or classification. Recently, graph-theoretic methods [1] have emerged as a powerful means to describe the organization of brain networks. Graph-theoretic measures (e.g., small-worldness and modularity) have shown promising ability to explain impairments in brain networks characteristic of neuropsychiatric disorders such as ASD. However, graph-theoretic measures require that the association between nodes be thresholded to a binary value (connection or no connection), which loses information about the strength or certainty of the association. On the other hand, while the raw association matrix retains all information about the association strength between nodes, it does not directly capture information about the topology of the network.

To address this, we propose to use persistent homology features because they capture the topology of the association network at all levels of thresholding. These topological features are recorded in a persistence barcode, which is not a Euclidean vector, making direct use of linear statistical models difficult. However, we can define an inner product between two persistence barcodes, and use kernel partial least squares (kPLS) for regressing brain network topological features against behavioral scores. We apply our proposed methods to study how functional brain connectivity in autism is related to severity of the disorder.

2. TOPOLOGY OF BRAIN NETWORKS

Topological data analysis and persistent homology

Topological data analysis (TDA) applies concepts from topology to the qualitative study of data, typically represented as point clouds; see, e.g., [2] for seminal work on the topic and [3, 4] for excellent surveys. In particular, the mathematical notion of homology captures the topological features of a space in terms of its connectivity, treating its connected components, tunnels and voids as 0-, 1- and 2-dimensional features, respectively. Persistent homology [2], a main ingredient of TDA, automatically detects and systematically characterizes these topological features across all scales.

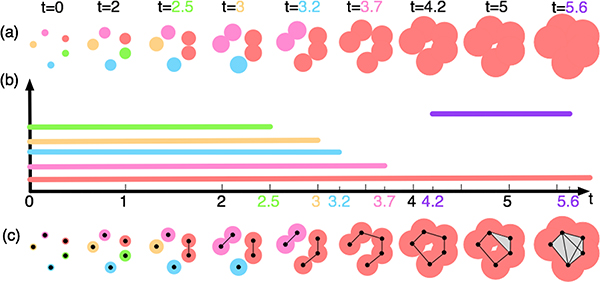

To convey the key ideas behind persistent homology, consider the illustrative example in Fig. 1. In Fig. 1(a), imagine that five tiny infectious cells are born at time t = 0 in a culture and start to grow linearly in time. The cells differ in their degree of infectiousness, where red > pink > blue > orange > green. When two cells grow large enough to intersect, the more infectious cell kills the less infectious one, and the two merge to form a highly infectious cluster. Using persistent homology, we investigate the topological changes within this growing sequence of cells indexed by time (a filtration). In particular, we focus on important events where cells merge with one another to form clusters (i.e., components) or tunnels. We begin by tracking the birth and death times of each cell (or cluster of cells), as well as its lifetime in the filtration. At t = 2.5, the green cell is infected by the red cell and dies, and the two cells merge into one red cluster; the green cell therefore has a lifetime (i.e., persistence) of 2.5. At t = 3, the orange cell is infected by the pink cell and turns pink, so it dies at t = 3. Similarly, the blue cell dies at t = 3.2, and the pink cluster of cells dies at t = 3.7. At t = 4.2, the cells collectively enclose a tunnel, which disappears at t = 5.6. We record and visualize the appearance (birth), disappearance (death), and persistence of topological features in the filtration via persistence diagrams [5] or, equivalently, persistence barcodes [4]. A point p = (a, b) in the persistence diagram records a topological feature that is born at time a and dies at time b. Equivalently, in the barcode of Fig. 1(b), such a feature is summarized by a horizontal bar that begins at a and ends at b.
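In code, a barcode is simply a collection of (birth, death) pairs. The Python sketch below transcribes the bars of the cell example and computes persistence as death minus birth; the helper names are hypothetical, and giving the surviving red cluster an infinite death time is a common convention assumed here rather than something stated in the figure.

```python
import math

# Dimension-0 bars (one per cell or cluster) and the dimension-1 bar (the tunnel),
# transcribed from the cell example in Fig. 1.
H0 = {
    "green": (0.0, 2.5),
    "orange": (0.0, 3.0),
    "blue": (0.0, 3.2),
    "pink": (0.0, 3.7),
    "red": (0.0, math.inf),  # assumed convention: the last surviving cluster never dies
}
H1 = {"tunnel": (4.2, 5.6)}


def persistence(bar):
    """Lifetime of a feature: death time minus birth time."""
    birth, death = bar
    return death - birth


def alive_at(bars, t):
    """Names of the features that exist at filtration value t (birth <= t < death)."""
    return [name for name, (birth, death) in bars.items() if birth <= t < death]


print(persistence(H0["green"]))   # 2.5
print(sorted(alive_at(H0, 3.1)))  # ['blue', 'pink', 'red']
```

Counting the dimension-0 bars alive at a given t recovers the number of clusters at that time, which is exactly the information a single threshold would give; the barcode keeps it for every t at once.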

Fig. 1. Persistent homology computation and barcode.

From a computational point of view, the nested sequence of spaces formed by the cells can be represented by a nested sequence of simplicial complexes with a much smaller footprint, as illustrated in Fig. 1(c). Suppose we represent each cell by its nucleus, a black point at its center. At a fixed parameter t, if two cells intersect, we construct an edge (i.e., 1-simplex) connecting their nuclei. Similarly, we construct a triangle (i.e., 2-simplex) for every three nuclei and a tetrahedron (i.e., 3-simplex) for every four nuclei whose corresponding cells intersect pairwise. Such a complex is referred to as the Rips complex, denoted R(t). For example, at t = 2.5, an edge is formed connecting a pair of nuclei in the red cluster, and at t = 5, a triangle is constructed among a set of pairwise intersecting cells. For parameters t1 ≤ t2, we have R(t1) ⊆ R(t2), so the complexes form a nested sequence. Persistent homology then captures the topological changes of these Rips complexes, producing a barcode like the one in Fig. 1(b).
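For dimension 0, the barcode of a Rips filtration can be computed without building any triangles or tetrahedra: components only merge when an edge enters. Processing edges in order of length with a union-find structure (essentially Kruskal's minimum spanning tree algorithm) therefore yields the dimension-0 bars. The sketch below is a minimal illustration, not the paper's implementation; it assumes every point is born at 0 and that an edge enters at its length d (in Fig. 1 the cells grow with radius t, so there an edge would enter at d/2, which only rescales the bars). Dimension-1 and higher features require a full persistent homology library and are not shown.

```python
import itertools
import math

import numpy as np


def h0_barcode(D):
    """Dimension-0 bars of the Rips filtration of a symmetric distance matrix D."""
    n = D.shape[0]
    parent = list(range(n))

    def find(i):
        # Follow parent pointers to the root, halving the path as we go.
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    edges = sorted((D[i, j], i, j) for i, j in itertools.combinations(range(n), 2))
    bars = []
    for d, i, j in edges:
        ri, rj = find(i), find(j)
        if ri != rj:                  # the edge joins two components: one of them dies
            parent[rj] = ri
            bars.append((0.0, float(d)))
    bars.append((0.0, math.inf))      # one component survives the whole filtration
    return bars


points = np.array([0.0, 1.0, 3.0, 7.0])
D = np.abs(points[:, None] - points[None, :])
print(h0_barcode(D))  # [(0.0, 1.0), (0.0, 2.0), (0.0, 4.0), (0.0, inf)]
```

The death times are the edge lengths of a minimum spanning tree, which is why dimension-0 persistence is closely related to single-linkage clustering.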

Applying persistent homology to brain networks

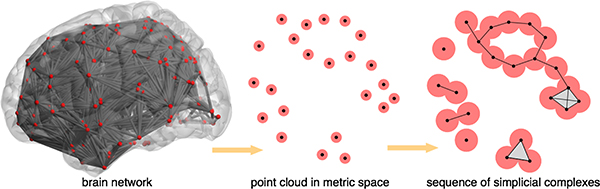

Persistent homology is an emerging tool for studying complex networks (e.g., [6]) and brain networks in particular (e.g., [7, 8]). The key insight is to map a given brain network to a point cloud in a metric space (intuitively, this corresponds to the set of cells in Fig. 1), where network nodes map to points and the measures of association between pairs of nodes map to distances between pairs of points. In this paper, for example, the distance d between two points u, υ in the metric space is computed from their correlation coefficient in the brain network, that is, . Subsequently, a nested sequence of simplicial (e.g., Rips) complexes can be constructed in the metric space for persistent homology computation. See Fig. 2 for an illustration.
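As a concrete sketch of this mapping, the snippet below turns region time series into a distance matrix. The exact correlation-to-distance formula is not reproduced in this text, so the choice d(u, υ) = √(1 − corr(u, υ)) is purely an assumption for illustration. It is proportional to the Euclidean distance between z-scored time series, so it satisfies the triangle inequality, and strongly correlated regions end up close together.

```python
import numpy as np


def correlation_distance(ts):
    """Map region time series to a distance matrix.

    ts: array of shape (n_timepoints, n_regions).
    ASSUMPTION: d(u, v) = sqrt(1 - corr(u, v)); this is an illustrative choice,
    not necessarily the formula used in the paper.
    """
    R = np.corrcoef(ts, rowvar=False)           # region-by-region Pearson correlations
    D = np.sqrt(np.clip(1.0 - R, 0.0, None))    # clip tiny negative round-off
    np.fill_diagonal(D, 0.0)
    return D


rng = np.random.default_rng(0)
D = correlation_distance(rng.standard_normal((100, 6)))
print(D.shape)  # (6, 6)
```

The resulting matrix can be fed directly to a Rips-complex construction.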

Fig. 2. Mapping a brain network to the metric space.

To interpret the topological features extracted from the simplicial complexes in terms of the brain network: dimension-0 features capture how nodes in the brain network are grouped into clusters based on their correlations, while dimension-1 and dimension-2 features encode how these nodes are glued together to form tunnels and voids. Low-persistence features reflect high correlations and possibly microscopic interactions among the network nodes, while high-persistence features reflect low correlations and potentially mesoscopic and macroscopic interactions. Initial work [7, 8] has shown that the distributions of topological features with high or low persistence can indicate differences among network organizations, although few systematic investigations have been carried out so far.

Leveraging topological features for statistical analysis

To interface topological features with statistical algorithms such as regression or classification, we employ a recent technique proposed by Reininghaus et al. [9] that defines a stable, multi-scale topological kernel for persistence barcodes, connecting the topological features encoded in the barcodes with popular kernel-based learning techniques such as kernel SVM and kernel PCA. In a nutshell, the topological kernel, parametrized by a scale parameter σ, measures the similarity between a pair of barcodes A and B obtained from two different functional networks. Treating each bar as a point in the plane, it is defined as

$$k_\sigma(A, B) = \frac{1}{8\pi\sigma} \sum_{p \in A} \sum_{q \in B} \left[ \exp\left(-\frac{\|p - q\|^2}{8\sigma}\right) - \exp\left(-\frac{\|p - \bar{q}\|^2}{8\sigma}\right) \right],$$

where for every q = (a, b) ∈ B, $\bar{q} = (b, a)$ is its reflection across the diagonal.
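The kernel above translates almost line for line into NumPy. The following is a minimal sketch with hypothetical function names, not the reference implementation of [9]. It assumes infinite bars (such as the surviving dimension-0 component) have been removed beforehand, since the formula needs finite points. A point on the diagonal contributes nothing because it coincides with its own reflection.

```python
import numpy as np


def pss_kernel(A, B, sigma):
    """Multi-scale topological kernel of Reininghaus et al. [9] between two barcodes.

    A, B: sequences of finite (birth, death) pairs; sigma > 0 is the scale parameter.
    """
    A = np.asarray(A, dtype=float).reshape(-1, 2)
    B = np.asarray(B, dtype=float).reshape(-1, 2)
    if A.shape[0] == 0 or B.shape[0] == 0:
        return 0.0
    B_mirror = B[:, ::-1]  # q_bar = (b, a): reflection across the diagonal
    d2 = ((A[:, None, :] - B[None, :, :]) ** 2).sum(axis=-1)
    d2_mirror = ((A[:, None, :] - B_mirror[None, :, :]) ** 2).sum(axis=-1)
    total = (np.exp(-d2 / (8 * sigma)) - np.exp(-d2_mirror / (8 * sigma))).sum()
    return float(total / (8 * np.pi * sigma))


def pss_gram(barcodes, sigma):
    """Gram matrix of the kernel over a list of barcodes (one per subject)."""
    n = len(barcodes)
    K = np.empty((n, n))
    for i in range(n):
        for j in range(i, n):
            K[i, j] = K[j, i] = pss_kernel(barcodes[i], barcodes[j], sigma)
    return K
```

In the setting of this paper, one Gram matrix would be built per homology dimension, each with its own σ.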

3. KERNEL PARTIAL LEAST SQUARES

Partial least squares

Partial least squares regression (PLS) [10] is a dimensionality reduction technique that finds two sets of latent dimensions from datasets X and Y such that their projections onto the latent dimensions are maximally covarying. Unlike principal component regression, which reduces the dimension of the regressors without regard to the response, PLS finds relevant latent variables that facilitate a better regression fit between the datasets X (the predictors/regressors) and Y (the predicted response variables). Similar to Singh et al. [11], we consider here the case where the predictors X are features from brain imaging and the responses Y are clinical measures of behavior. X is an n × N matrix of zero-mean variables and Y is an n × M matrix of zero-mean variables. PLS decomposes X and Y into $X = TP^T + E$ and $Y = UQ^T + F$, where T and U are n × p matrices of the p latent variables, the N × p matrix P and the M × p matrix Q are matrices of loadings, and the n × N matrix E and the n × M matrix F are residuals. The first latent dimension in PLS regression can be computed using the iterative NIPALS algorithm [12], which finds weight vectors w and c such that the data projected onto these vectors, t = Xw and u = Yc, have maximal covariance. Subsequent latent dimensions are then found by deflating the previous latent dimension from X and Y and repeating the NIPALS procedure.
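A single NIPALS latent dimension can be sketched in a few lines of NumPy. This is a minimal illustration that assumes X and Y are already column-centered; the function names are hypothetical, and the deflation shown (regressing X and Y on the score t) is one common choice rather than necessarily the exact variant of [12]. At convergence, w and c are the leading left and right singular vectors of XᵀY, which provides an easy sanity check.

```python
import numpy as np


def nipals_component(X, Y, tol=1e-12, max_iter=1000):
    """One PLS latent dimension via NIPALS on centered X (n x N) and Y (n x M)."""
    u = Y[:, 0].copy()
    t_old = None
    for _ in range(max_iter):
        w = X.T @ u
        w /= np.linalg.norm(w)          # unit-norm X weight vector
        t = X @ w                       # X score
        c = Y.T @ t
        c /= np.linalg.norm(c)          # unit-norm Y weight vector
        u = Y @ c                       # Y score
        if t_old is not None and np.linalg.norm(t - t_old) < tol * np.linalg.norm(t):
            break
        t_old = t
    return w, c, t, u


def deflate(X, Y, t):
    """Remove the part of X and Y explained by score t before the next dimension."""
    p = X.T @ t / (t @ t)
    q = Y.T @ t / (t @ t)
    return X - np.outer(t, p), Y - np.outer(t, q)
```

Repeating `nipals_component` on the deflated matrices yields the subsequent latent dimensions.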

Kernel partial least squares regression

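Rosipal and Trejo [13] derived the kernel partial least squares (kPLS) algorithm, which assumes that the regressor data X are mapped by some mapping Φ to a higher-dimensional inner product space $\mathcal{H}$. Let K be the Gram matrix of the data X, whose entries are given by the kernel $k(x, x') = \langle \Phi(x), \Phi(x') \rangle_{\mathcal{H}}$ between two data vectors x and x′. The kernel form of the NIPALS algorithm scales the score vectors t and u to unit norm instead of the weight vectors w and c. It initializes a random vector u and repeats the following steps until convergence:

$$t = K u \tag{1}$$

$$t \leftarrow t / \|t\| \tag{2}$$

$$c = Y^T t \tag{3}$$

$$u = Y c \tag{4}$$

$$u \leftarrow u / \|u\| \tag{5}$$

At convergence, K is deflated by $K \leftarrow (I - tt^T) K (I - tt^T)$, and Y by $Y \leftarrow (I - tt^T) Y$, to compute additional latent dimensions. Similar to PLS, once p latent dimensions with score matrices $T = [t_1, \ldots, t_p]$ and $U = [u_1, \ldots, u_p]$ have been extracted, the regression equation is $\hat{Y} = K U (T^T K U)^{-1} T^T Y$, where K is the original (undeflated) Gram matrix; predictions for new subjects replace K by the kernel matrix between the new and the training subjects.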
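A compact NumPy sketch of the kernel NIPALS steps (1) to (5), the deflation, and the regression equation follows. It assumes, as is standard for kPLS but not spelled out above, that the Gram matrix is centered in feature space (test kernels are centered with the training statistics), and it deflates Y together with K as in [13]. All function names are hypothetical. For a single response (M = 1), the inner loop converges after two passes because u becomes proportional to the deflated Y.

```python
import numpy as np


def center_train_kernel(K):
    """Center a training Gram matrix in feature space: J K J with J = I - 11^T / n."""
    n = K.shape[0]
    J = np.eye(n) - np.ones((n, n)) / n
    return J @ K @ J


def center_test_kernel(K_test, K_train):
    """Center an (n_test x n) test-vs-train kernel using training statistics."""
    n = K_train.shape[0]
    ones_t = np.ones((K_test.shape[0], n)) / n
    J = np.eye(n) - np.ones((n, n)) / n
    return (K_test - ones_t @ K_train) @ J


def kpls_fit(K_raw, Y, n_components, tol=1e-10, max_iter=500, seed=0):
    """Kernel PLS: NIPALS steps (1)-(5), then deflation of K and Y."""
    Y = np.asarray(Y, dtype=float).reshape(K_raw.shape[0], -1)
    y_mean = Y.mean(axis=0)
    K = center_train_kernel(K_raw)
    Kd, Yd = K.copy(), Y - y_mean
    n = K.shape[0]
    rng = np.random.default_rng(seed)
    T = np.zeros((n, n_components))
    U = np.zeros((n, n_components))
    for j in range(n_components):
        u = rng.standard_normal(n)
        u /= np.linalg.norm(u)
        for _ in range(max_iter):
            t = Kd @ u                        # (1)
            t /= np.linalg.norm(t)            # (2)
            c = Yd.T @ t                      # (3)
            u_new = Yd @ c                    # (4)
            u_new /= np.linalg.norm(u_new)    # (5)
            done = np.linalg.norm(u_new - u) < tol
            u = u_new
            if done:
                break
        t = Kd @ u                            # score consistent with the converged u
        t /= np.linalg.norm(t)
        T[:, j], U[:, j] = t, u
        D = np.eye(n) - np.outer(t, t)
        Kd = D @ Kd @ D                       # K <- (I - tt^T) K (I - tt^T)
        Yd = D @ Yd                           # Y <- (I - tt^T) Y
    # Dual coefficients of Y_hat = K U (T^T K U)^{-1} T^T Y, using the undeflated K.
    A = U @ np.linalg.solve(T.T @ K @ U, T.T @ (Y - y_mean))
    return {"K_train": K_raw, "A": A, "y_mean": y_mean}


def kpls_predict(model, K_test_raw):
    """Predict responses from the raw kernel between test and training samples."""
    Kt = center_test_kernel(np.atleast_2d(K_test_raw), model["K_train"])
    return Kt @ model["A"] + model["y_mean"]


rng = np.random.default_rng(0)
X = rng.standard_normal((30, 3))
beta = np.array([1.0, -2.0, 0.5])
y = X @ beta + 3.0
model = kpls_fit(X @ X.T, y, n_components=3)
X_new = rng.standard_normal((5, 3))
print(kpls_predict(model, X_new @ X.T).ravel())
print(X_new @ beta + 3.0)
```

With a linear kernel, kPLS reduces to ordinary PLS, so using as many latent dimensions as the rank of the centered data reproduces an ordinary least-squares fit; in the example the two printed vectors should agree up to round-off.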

4. RESULTS

We applied our proposed kPLS regression between brain network topological features and behavior in an rs-fMRI study of autism. Our goal was to test the ability to predict autism severity, as measured by the Autism Diagnostic Observation Schedule (ADOS), from topological features of functional networks. We first tested raw correlations from rs-fMRI as the brain network features in the kPLS. Our results below show that augmenting these raw correlation features with the proposed topological features improves the predictive power of the kPLS model.

Data

We use data from the Autism Brain Imaging Data Exchange (ABIDE), a joint effort across multiple international sites aggregating subjects’ rs-fMRI scans and behavioral information such as ADOS. To avoid data heterogeneity from site differences, such as different scanner models, protocols, etc., we limit our analysis to a single site. There was a total of n = 87 subjects with both rs-fMRI and ADOS information (30 typically-developing control subjects and 57 ASD subjects). The ADOS is an evaluation for autism based on social and communication behaviors. Subscores are assigned for both criteria, and at the clinician’s discretion, subjects scoring a total > 8 are diagnosed with ASD.

Preprocessing

All fMRI data were preprocessed using the Functional Connectomes-1000 scripts, which include skull stripping, motion correction, registration, segmentation and spatial smoothing. Next, the time series for each of 264 regions was extracted based on Power's regions of interest [14]. The Pearson correlation coefficient was then computed between each pair of regions, resulting in a 34,716-dimensional feature space for each subject (obtained by vectorizing the strictly upper triangular part of the 264 × 264 correlation matrix, which has 264 × 263 / 2 = 34,716 entries). These pairwise correlations were used to compute the persistence barcodes for the topological features described in Section 2.
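The vectorization step can be sketched with NumPy's `triu_indices`, where `k=1` excludes the diagonal. The function name is hypothetical, and the synthetic input (random numbers with an arbitrary 150 time points) stands in for real preprocessed time series.

```python
import numpy as np


def correlation_features(ts):
    """Vectorize the strictly upper triangle of the region-by-region correlation matrix.

    ts: array of shape (n_timepoints, n_regions).
    """
    C = np.corrcoef(ts, rowvar=False)
    rows, cols = np.triu_indices(C.shape[0], k=1)  # k=1 skips the diagonal of ones
    return C[rows, cols]


n_regions = 264
print(n_regions * (n_regions - 1) // 2)  # 34716
```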

Relating fMRI correlations to ADOS total scores

Using the rs-fMRI correlation values, we defined a linear kernel Kcor by taking the Euclidean dot products of the subjects' correlation feature vectors. We used kPLS to regress the ADOS score Y against the rs-fMRI correlations. The predictive power of the model was tested using leave-one-out cross-validation (LOOCV); i.e., for each subject, we trained the kPLS regression on the other n − 1 subjects and predicted the left-out subject's ADOS score. We evaluated the predictions against the true ADOS scores Y using the root mean squared error (RMSE).
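The LOOCV protocol and the RMSE criterion can be sketched independently of the regression model. In the sketch below, `fit_predict(train_idx, test_idx)` is a hypothetical callback; for kPLS it would train on the sub-Gram matrix `K[np.ix_(train_idx, train_idx)]` and predict from the row `K[test_idx, train_idx]`. The usage example plugs in the ADOS-mean baseline, which predicts each subject's score as the mean of the other n − 1 scores.

```python
import numpy as np


def rmse(y_true, y_pred):
    """Root mean squared error between true and predicted scores."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    return float(np.sqrt(np.mean((y_true - y_pred) ** 2)))


def loocv_predictions(y, fit_predict):
    """Leave-one-out predictions: subject i is predicted by a model trained on the rest."""
    n = len(y)
    preds = np.empty(n)
    for i in range(n):
        train_idx = np.delete(np.arange(n), i)
        preds[i] = fit_predict(train_idx, i)
    return preds


# Usage with the mean-prediction baseline on toy scores.
y = np.array([1.0, 2.0, 3.0, 4.0])
baseline = loocv_predictions(y, lambda train_idx, i: y[train_idx].mean())
print(baseline, rmse(y, baseline))
```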

Topological kernels

From each subject's fMRI correlation matrix, we computed the persistence barcodes of the dimension-0 and dimension-1 topological features using the procedure detailed in Section 2. The kernels $K_0$ and $K_1$ obtained from these barcodes were normalized by the median of the absolute values of their entries. There are four free parameters in the kPLS regression: the kernel parameters σ0 and σ1 for the dimension-0 and dimension-1 features, and the linear weights w0 and w1 for combining the kernels to give $K_{\mathrm{TDA+cor}} = w_0 K_0 + w_1 K_1 + (1 - w_0 - w_1) K_{\mathrm{cor}}$. We performed LOOCV over all combinations of parameters, with weights w0 and w1 ranging from 0 to 1 in steps of 0.05, and log kernel sizes log10(σ0) and log10(σ1) ranging from −8 to 6 in steps of 0.2. We also evaluated KTDA, with the constraint 1 − w0 − w1 = 0, so that the combined kernel uses only topological features.
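The kernel combination and the search grid can be sketched as follows. The function names are hypothetical. Two points are assumptions: the weights are restricted to w0, w1 ≥ 0 with w0 + w1 ≤ 1 so that the weight on Kcor stays nonnegative, and only K0 and K1 are median-normalized, as stated in the text. KTDA corresponds to the weight pairs with w0 + w1 = 1.

```python
import numpy as np


def median_normalize(K):
    """Scale a kernel matrix so that the median of |K_ij| equals 1."""
    return K / np.median(np.abs(K))


def combined_kernel(K0, K1, K_cor, w0, w1):
    """K_TDA+cor = w0*K0 + w1*K1 + (1 - w0 - w1)*K_cor, with K0 and K1 median-normalized."""
    if w0 < 0 or w1 < 0 or w0 + w1 > 1 + 1e-9:
        raise ValueError("assumed constraint: w0, w1 >= 0 and w0 + w1 <= 1")
    return (w0 * median_normalize(K0) + w1 * median_normalize(K1)
            + (1.0 - w0 - w1) * K_cor)


# Search grid described in the text (integer steps avoid floating-point drift).
weight_pairs = [(i / 20, j / 20) for i in range(21) for j in range(21 - i)]
log10_sigmas = np.round(np.linspace(-8.0, 6.0, 71), 1)  # -8.0, -7.8, ..., 6.0
print(len(weight_pairs), len(log10_sigmas))  # 231 71
```

Each (w0, w1, log10 σ0, log10 σ1) combination would then be scored by the LOOCV RMSE.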

The above methods Kcor, KTDA+cor and KTDA were compared against a baseline that predicts each subject's score as the mean ADOS value of the other n − 1 subjects. Our RMSE results (Table 1) show that both correlation matrices and topological features have promising predictive power relative to this mean-prediction baseline. To ensure that these regressions also outperform random signals, we generated n random correlation matrices from i.i.d. N(0, 1) time series of the same size as the real fMRI data and computed their linear kernel. Random signals performed worse than the ADOS mean prediction baseline, with an RMSE of 6.47359.
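The random-signal control can be sketched as below. The function name is hypothetical, and the sizes are parameters: to mirror the study they would be n = 87 subjects, 264 regions, and the number of time points in the scans, which is not stated here. The resulting kernel plays the same role as Kcor in the kPLS regression.

```python
import numpy as np


def random_signal_kernel(n_subjects, n_timepoints, n_regions, seed=0):
    """Linear kernel on correlation features computed from i.i.d. N(0, 1) time series."""
    rng = np.random.default_rng(seed)
    rows, cols = np.triu_indices(n_regions, k=1)
    F = np.empty((n_subjects, rows.size))
    for s in range(n_subjects):
        ts = rng.standard_normal((n_timepoints, n_regions))   # pure-noise "time series"
        F[s] = np.corrcoef(ts, rowvar=False)[rows, cols]      # strictly upper triangle
    return F @ F.T  # linear kernel, analogous to Kcor
```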

Table 1. ADOS prediction results. The last three columns give p-values of the permutation test for improvement of the row method over the column method.

| Method | RMSE | ADOS mean | KTDA | Kcor |
|---|---|---|---|---|
| ADOS mean | 6.4302 | - | - | - |
| KTDA | 6.3553 | 0.316 | - | - |
| Kcor | 6.0371 | 0.055 | 0.095 | - |
| KTDA+cor | 6.0156 | 0.048 | 0.075 | 0.288 |

We used permutation tests to determine the statistical significance (p-values) of our RMSE results. For all pairwise comparisons of the three kernel methods and the baseline, we used the test statistic RMSE_method2 − RMSE_method1. In each of 100,000 permutations, we randomly swapped the method 1 and method 2 predictions for individual subjects and recomputed the statistic. The p-value is the fraction of permuted statistics that were greater than the unpermuted statistic.
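A vectorized sketch of this paired permutation test follows, assuming each subject's two predictions are swapped independently with probability 1/2 in every permutation. The function names are hypothetical, and permutations are drawn in chunks to keep memory bounded at 100,000 permutations.

```python
import numpy as np


def rmse_rows(y, P):
    """RMSE of each row of predictions P (or of a single prediction vector) against y."""
    return np.sqrt(np.mean((P - y) ** 2, axis=-1))


def paired_permutation_pvalue(y, pred_1, pred_2, n_perm=100_000, chunk=10_000, seed=0):
    """p-value for the improvement of method 1 over method 2.

    Statistic: RMSE(method 2) - RMSE(method 1); the p-value is the fraction of
    permuted statistics greater than the observed one.
    """
    rng = np.random.default_rng(seed)
    y, pred_1, pred_2 = (np.asarray(a, dtype=float) for a in (y, pred_1, pred_2))
    observed = rmse_rows(y, pred_2) - rmse_rows(y, pred_1)
    exceed = 0
    remaining = n_perm
    while remaining > 0:
        m = min(chunk, remaining)
        swap = rng.random((m, y.size)) < 0.5     # independent coin flip per subject
        perm_1 = np.where(swap, pred_2, pred_1)
        perm_2 = np.where(swap, pred_1, pred_2)
        stats = rmse_rows(y, perm_2) - rmse_rows(y, perm_1)
        exceed += int(np.count_nonzero(stats > observed))
        remaining -= m
    return exceed / n_perm
```

Under the null hypothesis that the two methods are exchangeable, swapping the predictions leaves the distribution of the statistic unchanged, which is what justifies this test.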

From our results, both KTDA (parameters: log10(σ0) = −6.6, log10(σ1) = 1.8, w0 = 0.05, w1 = 0.95) and Kcor show evidence of improvement over the baseline and over random signals. Augmenting Kcor with topological features, KTDA+cor has the best predictive power and is the only method that is statistically significantly better than the baseline (p = 0.048). This best result is obtained with the parameters log10(σ0) = −7.8, log10(σ1) = 2.8, w0 = 0.10, w1 = 0.40. These results show that topological features derived from correlations of rs-fMRI have the potential to explain the connection between functional brain networks and autism severity. In future work, we will investigate the predictive power of persistence barcodes derived from other metrics, e.g., partial correlations.

Acknowledgements

This work was supported by NSF grants IIS-1513616 and IIS-1251049.

5. REFERENCES

- [1] Bullmore E and Sporns O, "Complex brain networks: graph theoretical analysis of structural and functional systems," Nature Reviews Neuroscience, vol. 10, no. 3, pp. 186–198, 2009.

- [2] Edelsbrunner H, Letscher D, and Zomorodian AJ, "Topological persistence and simplification," DCG, vol. 28, pp. 511–533, 2002.

- [3] Carlsson G, "Topology and data," Bulletin of the AMS, vol. 46, no. 2, pp. 255–308, 2009.

- [4] Ghrist R, "Barcodes: The persistent topology of data," Bulletin of the AMS, vol. 45, pp. 61–75, 2008.

- [5] Cohen-Steiner D, Edelsbrunner H, and Harer J, "Stability of persistence diagrams," DCG, vol. 37, no. 1, pp. 103–120, 2007.

- [6] Horak D, Maletić S, and Rajković M, "Persistent homology of complex networks," JSTAT, p. P03034, 2009.

- [7] Lee H, Kang H, Chung MK, Kim B-N, and Lee DS, "Persistent brain network homology from the perspective of dendrogram," T-MI, vol. 31, no. 12, pp. 2267–2277, 2012.

- [8] Cassidy B, Rae C, and Solo V, "Brain activity: Conditional dissimilarity and persistent homology," ISBI, pp. 1356–1359, 2015.

- [9] Reininghaus J, Huber S, Bauer U, and Kwitt R, "A stable multi-scale kernel for topological machine learning," CVPR, 2015.

- [10] McIntosh AR, Bookstein FL, Haxby JV, and Grady CL, "Spatial pattern analysis of functional brain images using partial least squares," NeuroImage, vol. 3, no. 3, pp. 143–157, 1996.

- [11] Singh N, Fletcher PT, Preston SJ, King RD, Marron JS, Weiner MW, Joshi S, and ADNI, "Quantifying anatomical shape variations in neurological disorders," MedIA, vol. 18, no. 3, pp. 616–633, 2014.

- [12] Wold H, "Soft modeling by latent variables; the nonlinear iterative partial least squares approach," Perspectives in Probability and Statistics, Papers in Honour of M.S. Bartlett, pp. 520–540, 1975.

- [13] Rosipal R and Trejo L, "Kernel partial least squares regression in reproducing kernel Hilbert space," JMLR, vol. 2, pp. 97–123, 2002.

- [14] Power JD et al., "Functional network organization of the human brain," Neuron, vol. 72, no. 4, pp. 665–678, 2011.