Abstract

Introduction

Historically, ambulance services were established to provide rapid transport of patients to hospital. Contemporary prehospital care involves provision of sophisticated ‘mobile healthcare’ to patients across the lifespan presenting with a range of injuries or illnesses of varying acuity. Because the paramedicine profession is young, it has until recently lacked research capacity, which has led to a paucity of discipline-specific scientific evidence. Consequently, the performance and quality of ambulance services have traditionally been measured using simple, evidence-poor indicators that poorly reflect the true quality of care and provide little direction for quality improvement efforts. This paper reports the study protocol for the development and testing of quality indicators (QIs) for the Australian prehospital care setting.

Methods and analysis

This project has three phases. In the first phase, preliminary work in the form of a scoping review was conducted which provided an initial list of QIs. In the subsequent phase, these QIs will be developed by aggregating them and by performing related rapid reviews. The summarised evidence will be used to support an expert consensus process aimed at optimising the clarity and evaluating the validity of proposed QIs. Finally, in the third phase those QIs deemed valid will be tested for acceptability, feasibility and reliability using mixed research methods. Evidence-based indicators can facilitate meaningful measurement of the quality of care provided. This forms the first step to identify unwarranted variation and direction for improvement work. This project will develop and test quality indicators for the Australian prehospital care setting.

Ethics and dissemination

This project has been approved by the University of Adelaide Human Research Ethics Committee. Findings will be disseminated by publications in peer-reviewed journals, presentations at appropriate scientific conferences, as well as posts on social media and on the project’s website.

Keywords: accident & emergency medicine, quality in health care, health services administration & management

Strengths and limitations of the study.

The scoping review, which was used to establish a preliminary list of prehospital care quality indicators (QIs), used systematic methods.

By incorporating systematically synthesised literature, the expert consensus process will be evidence informed.

Selection of an Australian prehospital care expert panel will ensure that the validity of proposed QIs is evaluated in context.

Testing of candidate QIs will involve the participation of paramedics and ambulance services.

Considering the relatively young age of the paramedicine discipline, the evidence supporting many of the QIs is expected to be weak.

Introduction

The quality and safety of healthcare is on the agenda in any modern healthcare organisation, including ambulance services. Strategies to continuously improve the quality of service should primarily be based on information about the level of quality produced by the healthcare organisation.1 Indicators of desirable structures, processes and outcomes allow the quality of care and services to be measured. This assessment can be facilitated by systematically developing quality indicators (QIs) that describe the performance that should occur, and then measuring and monitoring whether a service’s operations and patient care are consistent with these indicators.2 An indicator may thus be defined as an explicitly described and measurable element of healthcare services that, as far as possible, should possess the fundamental characteristics of clarity, validity, acceptability, feasibility and reliability.3 A QI is an indicator for which there is evidence or consensus that it can be used to assess quality, and hence to measure changes in quality over time.4

For the purpose of this project, the context of prehospital care is limited to the healthcare services provided by ambulance services. Historically, the function of ambulance services was primarily one of transport; paramedics would provide only stabilising care to patients with high-acuity presentations before transporting them to an emergency department. However, ambulance service models of care have evolved considerably. Contemporary prehospital care involves provision of often complex ‘mobile healthcare’ to patients across the lifespan presenting with injury or illness across the spectrum of acuity. An ageing population and an increased incidence of chronic disease have led to a substantial increase in non-emergency, or ‘low-acuity’, presentations for which the traditional emergency department disposition may not be the most appropriate.5 6 Ambulance services now play a key role in integrated healthcare frameworks, with transport to an emergency department being one of many possible dispositions following paramedic care, alongside referral to primary and community-based healthcare. At the other end of the patient spectrum, ambulance services continue to provide critical care and transport for those suffering life-threatening illness or injury.6 7 Therefore, this project adopts a previously developed definition of prehospital care that encompasses the full range of patients seen by ambulance services: prehospital care is the care that ambulance services provide for patients with real or perceived emergency or urgent care needs from the time point of emergency telephone access until care is concluded or until arrival and transfer of care to a hospital or other healthcare facility.8 9

Like many other countries, Australia has measures in its national performance indicator framework for ambulance services that track the quality of care delivered to its residents across the various jurisdictions.10 However, the scope of current measurement is limited. For example, a short response time interval may be an important indicator for certain time-critical patient cohorts11–13; however, its validity as a holistic prehospital care QI is questionable.14 15 Response times and other similarly simple QIs have predominated in ambulance services’ performance reports because they are easily measured and readily understood by the public and policymakers alike.16 With increasing research activity and the recent commencement of national registration of paramedics in Australia, there is a timely need to expand the nationally used indicators of prehospital care quality. Both an expanding evidence base and regulations that primarily ensure patient and community safety ultimately aim to protect and continuously improve the quality of prehospital care. Meaningful measurement based on systematically developed QIs not only produces data to ensure that quality is maintained but also indicates whether change is effective in achieving improvement.

This paper reports the context and methods for a project on development and testing of prehospital care QIs. The primary aim of the project is to develop and test QIs for the Australian prehospital care setting. To achieve this, the project addresses the following objectives:

To map the attributes or dimensions of ‘quality’ in the context of prehospital care and explore indicators that have been developed internationally to measure prehospital care quality.

To develop prehospital care QIs for the Australian setting and to evaluate their validity.

To test selected candidate prehospital care QIs for acceptability, feasibility and reliability.

Methods and analysis

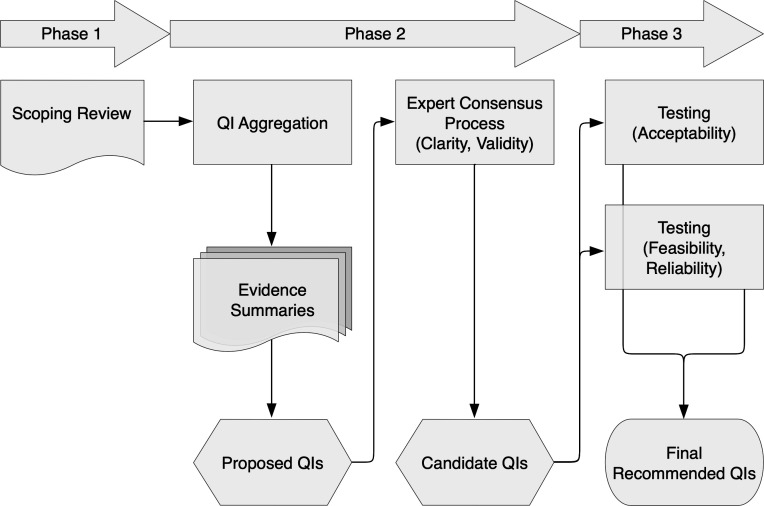

This project consists of three phases (figure 1): an initial scoping review addressing objective 1; evidence-informed development of prehospital care QIs and an evaluation of their validity using an expert consensus process (modified RAND/UCLA Appropriateness Method (RAM)) to address objective 2; and finally, a mixed methods approach (explanatory sequential design) to test the QIs as detailed in objective 3.

Figure 1.

Flow diagram detailing the three phases of the project. QI, quality indicator.

Phase 1: scoping review

This phase has been completed and involved preparatory work in the form of a scoping review.17 The purpose of the review was to map the attributes of ‘quality’ in the context of prehospital care and to chart existing international prehospital care QIs. The review employed the Joanna Briggs Institute (JBI) methodology for conducting scoping reviews.18 The objectives, inclusion and exclusion criteria, and methods were specified in advance and documented in a protocol.19

The review’s systematic search confirmed a paucity of literature defining prehospital care quality or examining which dimensions of generic healthcare quality definitions are important in prehospital care. However, synthesis of the included articles suggested that high-quality prehospital care is reflected by timely access to appropriate, safe and effective care that is responsive to a patient’s needs and is efficient and equitable at the population level. There is growing interest in developing QIs to evaluate prehospital care. In total, the review charted 526 QIs addressing clinical and non-clinical aspects of ambulance services providing prehospital care. The scoping review highlighted the need both for validation of existing prehospital care QIs and for de novo QI development.

Phase 2: evidence-informed expert consensus process

Phase 2 will comprise an evidence-informed expert consensus process to optimise the clarity of QIs and evaluate which are valid for the measurement of prehospital care quality in Australia. Preparatory work will involve aggregating the dimensions of prehospital care quality and the prehospital care QIs charted in phase 1, as well as compiling evidence summaries to inform the expert panel. Aggregating multiple dimensions of quality into a smaller number of principal dimensions has practical advantages, including facilitating the critical appraisal of QIs.20 Campbell and colleagues20 argued that there are two overarching dimensions of quality of care: access and effectiveness. Aggregation of attributes of prehospital care quality into these two key dimensions has previously been performed by Owen.9

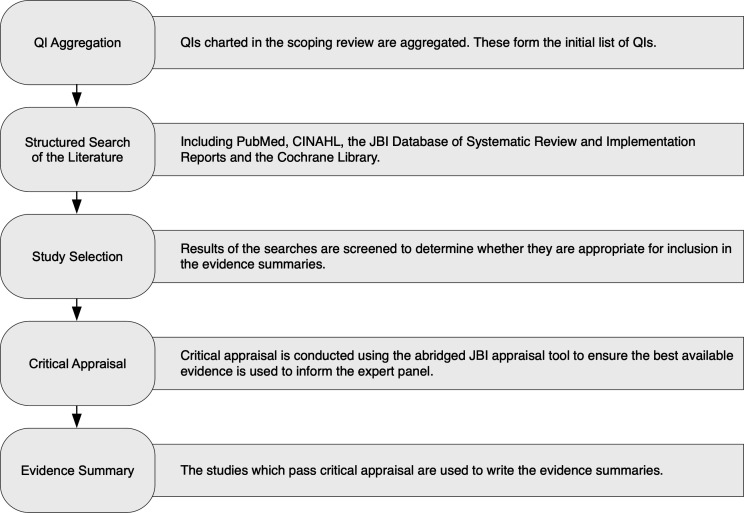

The development of the evidence summaries to inform the expert panel of best available evidence for each QI will be guided by the JBI approach for rapid reviews and evidence summaries.21 Figure 2 provides a diagrammatic outline of the rapid review and evidence summary process.

Figure 2.

The evidence summary development process (adapted from Munn et al21). JBI, Joanna Briggs Institute; QI, quality indicator.

Literature searches will be undertaken in the following databases: PubMed, CINAHL, the JBI Database of Systematic Reviews and Implementation Reports and the Cochrane Library. Table 1 gives an example of the search terms used. Generally, terms related to prehospital care will be combined with QI-specific terms. Development of the terms related to prehospital care will be guided by search filters created by Olaussen et al.22 For pragmatic reasons, only English-language papers will be included. Searches will not be limited by date. The search will also include backtracking of references (screening the reference lists of retrieved papers). In line with JBI’s approach to evidence summaries,21 23 the best available evidence will be incorporated in each summary. This means that lower-level evidence will be included only when no systematic reviews are located. The JBI levels of evidence are detailed in table 2.

Table 1.

Example of search terms/filters used in PubMed

| Concept | [1] Prehospital care | [2] QI |
| --- | --- | --- |
| Search terms | Ambulances[mh] OR Emergency Medical Technicians[mh] OR Air Ambulances[mh] OR paramedic*[tiab] OR ems[tiab] OR emt[tiab] OR prehospital[tiab] OR pre-hospital[tiab] OR first responder*[tiab] OR emergency medical technician*[tiab] OR emergency services[tiab] OR ambulance*[tiab] | (QI-related search terms) |
| Search filter | [1] AND [2], English only; Systematic Reviews and Meta-Analyses/Meta-Synthesis only (change to ‘[1] AND [2], English only’ if no or only poor-quality Systematic Reviews and Meta-Analyses/Meta-Synthesis are identified) | |

QI, quality indicator.
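The Boolean structure of table 1 can be expressed as a small query builder. This is a minimal sketch in Python, assuming PubMed syntax: the QI terms in `QI_TERMS` are hypothetical placeholders because the protocol does not list them, and `english[la]`, `systematic[sb]` and `meta-analysis[pt]` are the assumed PubMed tags for the language limit and the review filter.

```python
def or_block(terms):
    """Join the terms for one concept with OR and wrap them in parentheses."""
    return "(" + " OR ".join(terms) + ")"


# Concept [1]: prehospital care terms, as listed in table 1.
PREHOSPITAL_TERMS = [
    "Ambulances[mh]", "Emergency Medical Technicians[mh]", "Air Ambulances[mh]",
    "paramedic*[tiab]", "ems[tiab]", "emt[tiab]", "prehospital[tiab]",
    "pre-hospital[tiab]", "first responder*[tiab]",
    "emergency medical technician*[tiab]", "emergency services[tiab]",
    "ambulance*[tiab]",
]

# Concept [2]: hypothetical placeholder QI terms (not specified in the protocol).
QI_TERMS = ['"quality indicator*"[tiab]', '"performance measure*"[tiab]']


def build_query(reviews_only=True):
    """Combine [1] AND [2], English only; optionally restrict to reviews.

    reviews_only=False mirrors the fallback in table 1, used when no (or only
    poor-quality) systematic reviews or meta-analyses are identified.
    """
    query = f"{or_block(PREHOSPITAL_TERMS)} AND {or_block(QI_TERMS)} AND english[la]"
    if reviews_only:
        # Assumed PubMed filters for systematic reviews and meta-analyses.
        query += " AND (systematic[sb] OR meta-analysis[pt])"
    return query


print(build_query())
print(build_query(reviews_only=False))
```

Keeping each concept as a list makes it straightforward to swap in the final QI terms without touching the combination logic.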

Table 2.

JBI levels of evidence for effectiveness, diagnosis and meaningfulness23

| Level of evidence | Effectiveness | Diagnosis | Meaningfulness |
| --- | --- | --- | --- |
| 1 | Experimental designs including: a. Systematic review of randomised controlled trials (RCTs); b. Systematic review of RCTs and other study designs; c. RCT; d. Pseudo-RCTs | Studies of test accuracy among consecutive patients: a. Systematic review of studies of test accuracy among consecutive patients; b. Study of test accuracy among consecutive patients | Qualitative or mixed-methods systematic review |
| 2 | Quasi-experimental designs including: a. Systematic review of quasi-experimental studies; b. Systematic review of quasi-experimental and other lower study designs; c. Quasi-experimental prospectively controlled study; d. Pretest/post-test or historic/retrospective control group study | Studies of test accuracy among non-consecutive patients: a. Systematic review of studies of test accuracy among non-consecutive patients; b. Study of test accuracy among non-consecutive patients | Qualitative or mixed-methods synthesis |
| 3 | Observational-analytical designs including: a. Systematic review of comparable cohort studies; b. Systematic review of comparable cohort and other lower study designs; c. Cohort study with control group; d. Case-controlled study; e. Observational study without a control group | Diagnostic case-control studies: a. Systematic review of diagnostic case-control studies; b. Diagnostic case-control study | Single qualitative study |
| 4 | Observational-descriptive designs including: a. Systematic review of descriptive studies; b. Cross-sectional study; c. Case series; d. Case study | Diagnostic yield studies: a. Systematic review of diagnostic yield studies; b. Individual diagnostic yield study | Systematic review of expert opinion |
| 5 | Expert opinion and bench research including: a. Systematic review of expert opinion; b. Expert consensus; c. Bench research/single expert opinion | Expert opinion and bench research: a. Systematic review of expert opinion; b. Expert consensus; c. Bench research/single expert opinion | Expert opinion |

JBI, Joanna Briggs Institute.
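The rule that lower-level evidence is included only when no systematic reviews are located can be sketched as a selection function over the levels in table 2. This is an illustrative sketch under stated assumptions: the `Study` record and its fields are hypothetical, and when no systematic review exists only the primary studies at the highest available JBI level are kept, which is one reasonable reading of ‘best available evidence’ rather than a rule stated in the protocol.

```python
from dataclasses import dataclass


@dataclass
class Study:
    """Hypothetical record for one retrieved paper."""
    citation: str
    jbi_level: int              # 1 (highest) to 5 (lowest), as in table 2
    is_systematic_review: bool


def best_available_evidence(studies):
    """Return the studies to include in an evidence summary.

    Systematic reviews are preferred; lower-level evidence is used only when
    no systematic review has been located, in which case only the studies at
    the highest available JBI level are kept.
    """
    reviews = [s for s in studies if s.is_systematic_review]
    if reviews:
        return reviews
    if not studies:
        return []
    top_level = min(s.jbi_level for s in studies)
    return [s for s in studies if s.jbi_level == top_level]


papers = [
    Study("Cohort study A", 3, False),
    Study("Quasi-experimental study B", 2, False),
    Study("Systematic review C", 1, True),
]
print([s.citation for s in best_available_evidence(papers)])      # ['Systematic review C']
print([s.citation for s in best_available_evidence(papers[:2])])  # ['Quasi-experimental study B']
```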

Following the search, titles and abstracts will be screened. If a paper is potentially eligible, its full text will be read to determine whether it should be included in the applicable evidence summary. Full-text reading will involve an assessment of internal validity using an abridged critical appraisal tool (table 3). The rapid reviews and evidence summaries developed for this study will have several limitations. The more closely a rapid review adheres to the methodological rigour of systematic reviews, the longer it will take to complete;21 24 25 conversely, the less time taken to complete a rapid review, the less thorough it will be. The JBI approach to evidence summaries aims for a rapid development cycle,21 which is considered suitable for the purpose of this project given the limited resources and time available. These restrictions also mean that a single researcher will screen, select, appraise and summarise the evidence, and no peer review will be undertaken, which will inevitably increase the risk of bias and error.

Table 3.

Abridged quality appraisal criteria for JBI evidence summaries21

| Type of study/evidence | Quality appraisal criteria |
| --- | --- |
| Systematic review | Is the review question clearly and explicitly stated? |
| | Was the search strategy appropriate? |
| | Were the inclusion criteria appropriate for the review question? |
| | Were the criteria for appraising studies appropriate? |
| | Was critical appraisal conducted by two or more independent reviewers? |
| | Were methods used to minimise error in data extraction? |
| | Were the methods used to combine studies appropriate? |
| Quantitative evidence | Was there appropriate randomisation? |
| | Was allocation concealed? |
| | Was blinding to allocation maintained? |
| | Was incompleteness of data addressed? |
| | Were outcomes reported accurately? |
| Qualitative evidence | Was the research design appropriate for the research? |
| | Was the recruitment strategy appropriate for the research? |
| | Were data collected in a way that addressed the research issue? |
| | Has the relationship between researcher and participants been considered? |
| | Was the data analysis sufficiently rigorous? |

JBI, Joanna Briggs Institute.

An Australian prehospital care expert panel of 7–15 members will be recruited. Panellists must have perspectives and areas of expertise in Australian paramedicine, prehospital care, ambulance service leadership and management, quality improvement, performance/quality measurement and the patient perspective. In Australia, there are eight state/territory-based ambulance services, one paramedicine professional association and 18 universities offering paramedicine programmes. These institutions will be contacted and asked to nominate experts for participation in the study. The nomination process will require the nominator to make a project information and nomination form available to the nominee for perusal and signature. Self-nomination will be allowed. The completed forms and attached curricula vitae (CVs) will be emailed to the lead investigator. The research team will select expert panel members based on the information provided in the forms and CVs. This is a confidential process, and only the researchers will peruse the completed forms and CVs. The main selection criteria will be acknowledged leadership in paramedicine, absence of conflicts of interest and geographic diversity (ideally at least one panellist from each state/territory).

A modified RAM will then be applied. RAM is a formal panel judgement process that systematically and quantitatively combines available scientific evidence with expert opinion by asking panel members to rate, discuss and then re-rate the items of interest.26 For the purpose of this project, the original method will be modified in the following ways:

Evidence summaries instead of systematic reviews: As described in the RAM user’s manual,27 a critical review of the literature summarising the best available scientific evidence is a fundamental initial step, both to inform panel members and as a resource to help resolve any disagreements. The manual suggests that a systematic review is a good way to conduct a RAM literature review.27 However, because of the rigorous methods required, full systematic reviews can take an extensive amount of time to complete.28 It is anticipated that conducting systematic reviews for all QIs will not be feasible within the time and resources available for this project. Instead, to assist panel members in rating the validity of the QIs, evidence summaries will be compiled as described above for those QIs for which published research evidence exists.

Opportunity for expert panel members to suggest additional QIs: In addition to rating the proposed QIs, panel members will also be invited to suggest additional QIs. This is optional but considered important, especially if expert panel members feel that the proposed QIs do not sufficiently address vital aspects of prehospital care essential for quality measurement in the Australian context.

Online rating and discussions instead of a postal rating sheet and face-to-face meeting: Because potential expert panel members are likely to be geographically dispersed across Australia, the second round will be conducted online. Online delivery of this method has been found feasible in other studies involving geographically distributed participants.29

The consensus method will be a two-round online process designed on Qualtrics (Qualtrics, Provo, Utah, USA). In round one, panellists will be asked to rate the clarity and validity of each QI separately, on scales from 1 to 9. To improve clarity, panellists will have the opportunity to suggest changes to the wording of the QIs. Panellists will also have an opportunity to suggest additional QIs, ideally supported by the best available evidence. When assessing the validity of the QIs, panellists will be asked to consider the summarised evidence as well as their own knowledge and experience. In round two, panellists will join an asynchronous online discussion platform (Kialo, Brooklyn, New York, USA) moderated by one of the researchers. Discussions will be informed by individualised, anonymised results from the first round, consisting of each panellist’s own rating alongside the frequency distribution of ratings, the overall panel median and the mean absolute deviation around the median. Panellists will have an opportunity to discuss each QI before re-rating its validity.
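The individualised feedback described for round two can be computed per QI from the round-one ratings. A minimal sketch in Python, assuming the ratings for one QI are held in a dictionary keyed by an anonymised panellist code; the codes and the example ratings are hypothetical.

```python
from collections import Counter
from statistics import median


def round_one_feedback(ratings, panellist):
    """Build anonymised round-one feedback for one QI and one panellist.

    ratings: dict mapping an anonymised panellist code to a rating (1-9).
    """
    values = list(ratings.values())
    panel_median = median(values)
    # Mean absolute deviation around the median (not around the mean).
    mad_median = sum(abs(v - panel_median) for v in values) / len(values)
    counts = Counter(values)
    return {
        "own_rating": ratings[panellist],
        "distribution": {score: counts.get(score, 0) for score in range(1, 10)},
        "panel_median": panel_median,
        "mad_median": round(mad_median, 2),
    }


ratings = {"P1": 7, "P2": 8, "P3": 9, "P4": 5, "P5": 7}
feedback = round_one_feedback(ratings, "P4")
print(feedback["own_rating"], feedback["panel_median"], feedback["mad_median"])  # 5 7 1.0
```

Only the panellist’s own rating is identifiable in the returned feedback; everything else is an aggregate over the panel.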

Data analysis will be performed using Microsoft Excel V.2019 (Microsoft, Richmond, Washington, USA) and in accordance with the RAM.27 To proceed to the third and final phase of the project, there needs to be consensus that the QI is valid in the Australian prehospital care context. Validity will be signalled by a final panel median score of greater than or equal to seven with no disagreement. The definition of disagreement will depend on the number of panellists.
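The decision rule for proceeding to phase 3 can be expressed as a short function. This sketch assumes the classic RAM definition of disagreement, in which at least a given number of panellists rate in each extreme range (1–3 and 7–9); for a nine-member panel the RAM manual uses three or more in each. Because the protocol states that the definition will depend on the number of panellists, the threshold `min_in_each_extreme` is left as a parameter, and the values used here are illustrative only.

```python
from statistics import median


def has_disagreement(ratings, min_in_each_extreme):
    """RAM-style disagreement: enough ratings in both the 1-3 and 7-9 ranges."""
    low = sum(1 for r in ratings if 1 <= r <= 3)
    high = sum(1 for r in ratings if 7 <= r <= 9)
    return low >= min_in_each_extreme and high >= min_in_each_extreme


def is_valid_qi(final_ratings, min_in_each_extreme):
    """A QI proceeds to phase 3 if the final panel median is >= 7 without disagreement."""
    return median(final_ratings) >= 7 and not has_disagreement(final_ratings, min_in_each_extreme)


# Illustrative final-round ratings from a nine-member panel (threshold of 3).
print(is_valid_qi([7, 8, 8, 9, 7, 7, 8, 6, 5], 3))  # True: median 7, no disagreement
print(is_valid_qi([1, 2, 3, 7, 8, 9, 9, 9, 8], 3))  # False: median 8 but disagreement
print(is_valid_qi([5, 6, 6, 7, 6, 5, 7, 8, 6], 3))  # False: median 6
```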

Phase 3: mixed methods

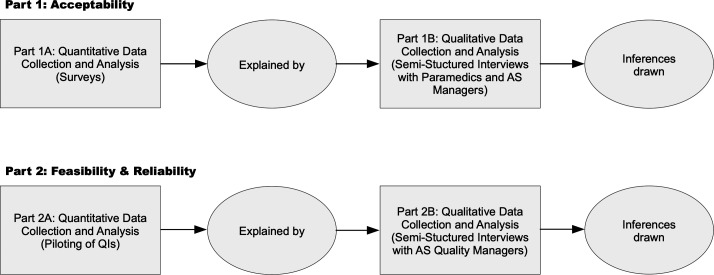

In this final phase, the focus will shift from evaluating which QIs are valid to assessing which QIs are useful. As such, this phase is based on pragmatism as a philosophical foundation.30 Taking a social science theory perspective informed by reviews and frameworks of acceptability as a criterion for evaluating performance measures,31–33 phase 3 will involve the sequential collection of quantitative and qualitative data to facilitate integrated interpretations and conclusions about the acceptability of the candidate QIs. Feasibility and reliability will be investigated in the same fashion. Thus, this phase will use explanatory sequential designs, as illustrated in figure 3. The choice of mixed methods is in line with broad consensus that the rationale for a mixed approach must be a pragmatic one.34

Figure 3.

Explanatory sequential design of phase 3. AS, ambulance service.

Target participants for part 1 will be Australian paramedics and ambulance service managers, that is, the individuals and representatives of the services whose quality of prehospital care would be measured once the QIs are implemented. Based on an Australian registered paramedic population of approximately 17 000,35 a sample size estimation with a 95% confidence level and a margin of error of 8% indicates that 149 respondents will be required for the survey (part 1A). The survey will be disseminated through Australian paramedicine professional associations and social media. Participants will be asked to complete an anonymous, non-validated online survey instrument purpose-built for this project (designed on Qualtrics; Qualtrics, Provo, Utah, USA). The survey will collect basic demographic data such as gender, age, paramedic qualification, years of experience in paramedicine, employment location and role. Depending on the number of candidate QIs stemming from phase 2 of the project, the survey will include all of the QIs or a random sample of them. Participants will be asked to rate the acceptability of each QI on a five-point Likert scale ranging from very unacceptable to very acceptable. At the end of the survey, participants will be asked whether they would like to volunteer for the subsequent semistructured interviews (part 1B). Volunteers will be informed that anonymity cannot be maintained in part 1B; however, information gathered in this part will be kept confidential. Quantitative data analysis will be performed using Microsoft Excel 2019 (Microsoft, Richmond, Washington, USA). Non-parametric procedures based on the median, as well as distribution-free methods such as tabulations, frequencies, contingency tables and chi-squared statistics, will be used to analyse these data.36 37 Analysed data from part 1A will inform the development of a semistructured interview guide for part 1B.

The interview guide will also contain some a priori questions (box 1). Questions will be open-ended and aimed at eliciting explanations of what makes QIs acceptable or unacceptable and how the candidate QIs align with professional standards and values. To ensure diversity among participants and to optimise the credibility of results, maximum variation sampling will be used in part 1B.38 39 This will be achieved by combining self-selected participants with purposeful recruitment of individuals meeting demographic criteria poorly represented in the self-selected cohort. Targeted recruitment will be done through the professional networks of the researchers. Interviews will be conducted in English by the principal investigator (RP) and recorded for transcription. Field notes will be taken during and immediately after each interview. Qualitative data will be collected until saturation is achieved,40 and a descriptive approach will be taken, with content analyses conducted using NVivo V.12 (QSR International, Doncaster, Australia).41 42 This will involve disassembling the data through coding, reassembling the coded data by relating codes to one another to create categories and ultimately themes, and finally interpreting the data to draw analytical conclusions.43 44 Several techniques will be used to enhance trustworthiness, including prolonged engagement; triangulation of recorded interviews, transcripts and field notes; and member checking.45
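Two quantitative steps of part 1A can be made concrete. The first function reproduces the reported survey sample size of 149, assuming Cochran’s formula with a finite population correction, z = 1.96 for 95% confidence and a maximally conservative proportion p = 0.5; the protocol does not state which formula was used. The second computes a Pearson chi-squared statistic and its degrees of freedom for a contingency table, for example Likert ratings cross-tabulated by role. The example table is hypothetical, and a p value would still need to be obtained from the chi-squared distribution, for instance with a statistics package.

```python
import math


def sample_size(population, margin_of_error, z=1.96, p=0.5):
    """Cochran's sample size with a finite population correction, rounded up."""
    n0 = z ** 2 * p * (1 - p) / margin_of_error ** 2
    return math.ceil(n0 / (1 + (n0 - 1) / population))


def chi_squared(table):
    """Pearson chi-squared statistic and degrees of freedom for an r x c table.

    Assumes every row and column total is non-zero.
    """
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    grand_total = sum(row_totals)
    statistic = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / grand_total
            statistic += (observed - expected) ** 2 / expected
    dof = (len(table) - 1) * (len(table[0]) - 1)
    return statistic, dof


print(sample_size(17_000, 0.08))  # 149

# Hypothetical counts: rows = paramedics, managers;
# columns = unacceptable (1-2), neutral (3), acceptable (4-5).
ratings_by_role = [[20, 30, 70], [10, 5, 14]]
stat, dof = chi_squared(ratings_by_role)
print(round(stat, 2), dof)
```

Collapsing the five Likert points into fewer categories, as in the example, is one common way to keep expected cell counts large enough for the chi-squared approximation.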

Box 1. Questions set a priori in the interview guide for phase 3, part 1B.

Opening

How long have you been involved in the ambulance service and what roles have you held?

Transition

What makes a quality indicator acceptable or not acceptable?

Key

How acceptable did you find the quality indicators in general?

How well do you think the quality indicators align to professional standards and values?

Clinician: Would you agree for your clinical practice to be measured and evaluated using these quality indicators? Manager/Supervisor: Would you agree to measure and evaluate the clinical practice of the staff you are supervising by using these quality indicators?

Closing

Is there anything you would like to add?

Do you have any questions about the interview or the research?

For part 2, the voluntary participation of Australian state/territory ambulance services and their quality managers will be sought. The research team will contact the ambulance services directly to enquire about their interest in participating. There are eight jurisdictional ambulance services in Australia, and participation of as many as possible will be pursued. Depending on the number of candidate QIs stemming from phase 2 of the project, participating ambulance services will be asked to pilot all of the QIs or a random sample of them (part 2A). A questionnaire will collect descriptive data about each service on variables such as size, call volume, datasets and quality measurement/management/improvement practices, and will elicit details about the feasibility and reliability of measuring ambulance service performance using the candidate QIs. Quantitative data analysis will be performed using Microsoft Excel V.2019 (Microsoft, Richmond, Washington, USA). As in part 1, summarised results from part 2A will inform the development of a semistructured interview guide for part 2B. This guide will also contain some a priori questions (box 2). Questions will be open-ended and aimed at eliciting explanations of what makes QIs feasible or infeasible, especially from a non-technical perspective. Data collection during the interviews and subsequent processing and analysis will follow the same approach described for part 1 above.
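Where there are too many candidate QIs to pilot in part 2A (or to include in the part 1A survey), a reproducible random sample can be drawn. This is a sketch under assumptions: the protocol does not specify the sampling procedure, and `max_qis`, the seed and the QI labels are hypothetical. Fixing the seed makes the selection repeatable, so the same subset can be documented and audited.

```python
import random


def select_qis(candidate_qis, max_qis, seed):
    """Return all candidate QIs if there are few enough, else a seeded random sample."""
    candidates = list(candidate_qis)
    if len(candidates) <= max_qis:
        return candidates
    rng = random.Random(seed)  # independent generator, so results are repeatable
    return rng.sample(candidates, max_qis)


candidate_qis = [f"QI-{i:02d}" for i in range(1, 31)]  # hypothetical labels
pilot_set = select_qis(candidate_qis, max_qis=10, seed=2021)
print(sorted(pilot_set))
```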

Box 2. Questions set a priori in the interview guide for phase 3, part 2B.

In relation to specific QIs

Do you think the target population is well described?

Are the numerator and denominator sufficiently defined?

Are the exclusions clear?

(In the pilot results form, it was indicated that IT/software is insufficient. What would need to be done to upgrade the system/software? Are there any barriers to this?)

(In the pilot results form, it was indicated that data is not available from existing sources. What would need to be done to obtain the required data? Are there any barriers to this?)

Is the data consistent with repeated measurements?

Do you think the indicator measures an aspect of your service that occurs often enough to detect clinically (or other) important changes?

(In the pilot results form, it was indicated that piloting the indicator was not successful in producing an accurate reflection of (Ambulance Service name) performance. What made the results unreliable/imprecise? What would need to be changed to make it reliable/precise?)

Are the results understandable?

Do you believe using this indicator as a quality improvement tool induces risk of data manipulation?

Closing

Is there anything you would like to add?

Do you have any questions about the interview or the research?
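The first questions in box 2 concern how each QI is specified: its target population, exclusions, numerator and denominator. A minimal sketch of that structure in Python, assuming each patient care record is a dictionary; the indicator and all field names (`age`, `pain_complaint`, `refused_care`, `pain_score_documented`) are hypothetical illustrations, not candidate QIs from this project.

```python
from dataclasses import dataclass
from typing import Callable

Record = dict  # one patient care record; field names below are hypothetical


@dataclass
class QualityIndicator:
    name: str
    in_target_population: Callable[[Record], bool]
    is_excluded: Callable[[Record], bool]
    meets_numerator: Callable[[Record], bool]

    def compute(self, records):
        """Return (numerator, denominator, proportion or None if no eligible cases)."""
        eligible = [r for r in records
                    if self.in_target_population(r) and not self.is_excluded(r)]
        numerator = sum(1 for r in eligible if self.meets_numerator(r))
        denominator = len(eligible)
        proportion = numerator / denominator if denominator else None
        return numerator, denominator, proportion


# Hypothetical indicator: adults with a pain complaint who had a pain score documented.
qi = QualityIndicator(
    name="Pain score documented (hypothetical)",
    in_target_population=lambda r: r["age"] >= 18 and r["pain_complaint"],
    is_excluded=lambda r: r["refused_care"],
    meets_numerator=lambda r: r["pain_score_documented"],
)
records = [
    {"age": 45, "pain_complaint": True, "refused_care": False, "pain_score_documented": True},
    {"age": 60, "pain_complaint": True, "refused_care": False, "pain_score_documented": False},
    {"age": 30, "pain_complaint": True, "refused_care": True, "pain_score_documented": False},
    {"age": 12, "pain_complaint": True, "refused_care": False, "pain_score_documented": True},
]
print(qi.compute(records))  # (1, 2, 0.5)
```

Making each component an explicit, separately testable rule mirrors the box 2 questions: an ambiguous target population or exclusion shows up directly as a rule that cannot be written down unambiguously.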

Patient and public involvement

Neither patients nor the public have been involved in the design of this project. The findings of the project will be made available to patients and the general public as part of the dissemination strategy. Future research may evaluate patient and public perceptions of the QIs.

Discussion

Not only is there rising demand for ambulance services but also increasing requirements to improve, maintain and evidence quality of care. QIs are often selected arbitrarily46 47; however, there appears to be growing interest in finding better ways to measure the quality of prehospital care provided by ambulance services.17 Measurement using intelligent and meaningful QIs over time is key to understanding variation and ultimately where and how to conduct improvement efforts.48 The QIs which will be developed in this project provide a mechanism to appraise Australian ambulance services’ performance and a framework to direct, monitor and demonstrate quality improvement efforts. Essential for the development of QIs is a definition of quality. Proceeding to develop indicators for the measurement of quality without understanding and consensus on what the concept of quality entails is unlikely to result in meaningful assessment of quality.49 Indicators can be developed using non-systematic and systematic methods.3 Non-systematic methods are relatively quick; however, they tend not to incorporate all available evidence during their development. Systematically developed QIs are ideally based on high-level scientific evidence or they are derived from evidence-informed guidelines.3 50 In areas or disciplines with limited scientific evidence, such as paramedicine, it may be necessary to combine the available evidence with expert consensus.51

A good QI needs to possess certain attributes which will assure that it can be used to make an accurate and fair judgement about quality. QIs should be valid, acceptable, feasible and reliable and must therefore be assessed or tested for these attributes before implementation. A good QI also has clear meaning which enables what is being assessed to be precisely attributable to that indicator.3 52 In other words, a clear QI is one which is free of ambiguity, inaccuracy or imprecision. Validity is arguably the most important property of a QI. In science, validity refers to the degree to which evidence and theory support the interpretation of scores entailed by proposed uses of an instrument.53 Thus, in the quality measurement context, validity refers to the degree to which evidence and theory support the expected interpretation of measured elements of practice performance related to the QIs. In more simple terms, validity refers to the extent to which the given statement represents high-quality care and would therefore be an endorsed indicator of quality. When assessing the validity of QIs, careful consideration of the intended context is important.54–56 While there are considerable benefits in using work from other locations, QIs cannot simply be transferred directly between different settings without an intermediate process to allow for variation in professional culture and clinical practice.57 As such, rating the validity of QIs entails as much assessment of whether they represent high-quality care as it does of how contextually applicable they are. Therefore, a method of group consensus using current scientific evidence in conjunction with Australian expert opinion to develop the clarity and assess the contextual validity of proposed QIs is deemed to be the approach of choice for this particular phase of the project. Several consensus processes have been used for the development of QIs. 
The original RAND/UCLA Appropriateness Method (RAM) was developed in the mid-1980s by the RAND Corporation in collaboration with the University of California, Los Angeles (UCLA) as an instrument for measuring the appropriateness of medical and surgical interventions.27 RAM has been used extensively for QI development,3 52 58 59 including the development of QIs to evaluate prehospital care.9
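
As an illustration only, the sketch below shows how a single QI might be classified from panel ratings on the 1–9 scale described in the RAND/UCLA user’s manual: a median of 7–9 without disagreement is read as valid, a median of 1–3 without disagreement as invalid, and anything else as uncertain. It assumes the manual’s disagreement rule for a nine-member panel (at least three panellists rating 1–3 and at least three rating 7–9) and maps the manual’s “appropriate/inappropriate” categories onto validity. The decision rules this project will actually apply, the label names and the helper `classify_qi` are assumptions, not the protocol’s stated method.

```python
from statistics import median


def classify_qi(ratings: list[int], min_extreme: int = 3) -> str:
    """Classify one QI from panel ratings on a 1-9 scale (hypothetical helper).

    Disagreement follows the RAND/UCLA rule for a nine-member panel: at least
    `min_extreme` panellists rate 1-3 AND at least `min_extreme` rate 7-9.
    Other panel sizes may need a different threshold (an assumption here).
    """
    if not ratings or any(r not in range(1, 10) for r in ratings):
        raise ValueError("ratings must be a non-empty list of integers from 1 to 9")
    low = sum(1 for r in ratings if r <= 3)   # ratings in the lowest tertile
    high = sum(1 for r in ratings if r >= 7)  # ratings in the highest tertile
    if low >= min_extreme and high >= min_extreme:
        return "uncertain"  # disagreement overrides the median
    panel_median = median(ratings)
    if panel_median >= 7:
        return "valid"
    if panel_median <= 3:
        return "invalid"
    return "uncertain"  # median 4-6, or a half-point median such as 6.5


print(classify_qi([7, 8, 8, 9, 7, 8, 6, 9, 8]))  # valid
print(classify_qi([1, 2, 3, 8, 9, 9, 7, 8, 5]))  # uncertain (disagreement)
```

In the second example the median is 7, but three panellists rated the QI 1–3 and five rated it 7–9, so disagreement takes precedence and the QI would be classed as uncertain rather than valid.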

Acceptability refers to the quality of being satisfactory or agreeable in terms of professional standards and values. If the aim of measurement is to provide direction for quality improvement, then the QIs need to be interpretable and meaningful to their audience, that is, clinicians and managers. However, the benefit of assessing QIs for acceptability extends beyond their development and testing. Measurement provides information to direct improvement efforts and is thus central to quality improvement.3 47 60–63 Involving clinicians and managers in the development of indicators is likely to improve their uptake and to contribute to the sustainability of quality improvement.32 Measurement of the quality of care may also serve as, or contribute to, performance appraisal systems. In this instance, user acceptance of such systems may be a critical criterion for successful implementation.32

Feasibility and reliability relate to the measurability of a QI. Testing QIs for these attributes is critical, as it helps ensure that implementation and sustained measurement are successful. Feasibility relates to the availability or attainability of accurate data and whether these data can realistically be collected.52 Feasibility thus encompasses both technical and non-technical aspects of data collection and analysis. A feasible QI also facilitates measurement that is applicable to quality improvement, sensitive to improvement over time and useful for decision-making.64 Reliability, in this instance, is closely related to precision and refers to the consistency of scores across replications of a testing procedure.65 Reliability testing assesses whether the QIs can be reproduced without error and identifies any errors that do occur.52 A reliable QI facilitates measurement that has low inter-rater and intra-rater variation and is suitable for statistical analysis.64
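
The section does not state which statistic will be used to quantify inter-rater or intra-rater variation. One common choice for categorical judgements, such as two abstractors independently deciding whether each patient record meets a QI, is Cohen’s kappa, κ = (p_o − p_e) / (1 − p_e), where p_o is the observed agreement and p_e is the agreement expected by chance from each rater’s marginal frequencies. The minimal sketch below assumes this two-rater, categorical setting; the helper `cohens_kappa`, the “met”/“not met” labels and the example data are hypothetical.

```python
from collections import Counter


def cohens_kappa(rater_a: list[str], rater_b: list[str]) -> float:
    """Cohen's kappa for two raters classifying the same items (hypothetical helper)."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must classify the same non-empty set of items")
    n = len(rater_a)
    # Observed agreement: share of items given the same category by both raters.
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: product of each rater's marginal proportions, summed over categories.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    categories = counts_a.keys() | counts_b.keys()
    p_e = sum(counts_a[c] * counts_b[c] for c in categories) / n**2
    if p_e == 1:
        # Both raters used one identical category for every item; kappa is undefined.
        raise ValueError("kappa is undefined when chance agreement equals 1")
    return (p_o - p_e) / (1 - p_e)


# Hypothetical example: two abstractors judge whether ten records meet a QI.
abstractor_1 = ["met"] * 6 + ["not met"] * 4
abstractor_2 = ["met"] * 5 + ["not met"] * 4 + ["met"]
print(round(cohens_kappa(abstractor_1, abstractor_2), 2))  # 0.58
```

Here the abstractors agree on eight of ten records and each classes six records as met, so p_o = 0.80, p_e = 0.52 and κ ≈ 0.58, noticeably lower than the raw 80% agreement. Intra-rater reliability could be checked the same way by comparing one abstractor’s two passes over the same records.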

To test whether, and to what extent, the QIs are acceptable, feasible and reliable, a mixed methods approach will be used. Both types of data are needed because neither quantitative nor qualitative methods alone would adequately capture the complex issue of QI acceptability, feasibility and reliability. Combined, quantitative and qualitative methods can complement each other and thus provide a more comprehensive picture of a research problem.66 More specifically, in a sequential explanatory mixed methods design, the quantitative data and results will provide an initial, general outline of how acceptable, feasible and reliable the QIs are, while the subsequent qualitative data and analysis will help explain those statistical results by exploring participants’ views on the QIs in more depth. Although the results of the quantitative and qualitative components will be integrated, priority during analysis will be given to whichever component is expected to require more emphasis.67 Accordingly, in part 1 (acceptability), more emphasis will be placed on the qualitative component to understand thoroughly why certain QIs are or are not deemed acceptable. Part 2 (feasibility and reliability) will focus more on the quantitative component, although non-technical facilitators of and barriers to feasibility will be explained through analysis of the information obtained from participants.

There are a number of anticipated real and potential limitations. First, the preliminary scoping review has inherent and specific limitations. Scoping review methods do not include an appraisal of quality or risk of bias when selecting studies for inclusion. The scoping review conducted for this project included articles written in English only, so the search may not have been exhaustive. Second, and similarly, rapid reviews have intrinsic limitations concerning their scope, comprehensiveness and rigour. However, considering the large number of QIs for which evidence needs to be identified and the time it would take to conduct systematic reviews, the rapid review and evidence summary approach is considered the most appropriate. Third, while there are clear advantages to conducting online expert panels (eg, more efficient use of the experts’ time, and anonymous online discussions that reduce possible biases based on participant status or personality),29 68 this approach may also present limitations. Unfamiliarity with online tools, technical issues or a general dislike of such tools could decrease engagement and interaction among expert panel members. This may undermine panel members’ willingness to participate and affect the quality of discussions and outputs.69 Lastly, it is unlikely that all Australian state/territory ambulance services will be able or willing to participate in the final phase of the project. These services differ significantly in aspects such as size, clinical practice and data management. Thus, the fewer services that participate, the less generalisable the results will be.

Ethics and dissemination

The project will be conducted in accordance with the National Health and Medical Research Council National Statement on Ethical Conduct in Human Research, as well as the approved research proposal. This project has been approved by the University of Adelaide Human Research Ethics Committee (Approval Number H-2017-157). It is supported through an Australian Government Research Training Program Scholarship and in part by a research grant from the Australasian College of Paramedicine.

The scoping review has been published.17 Further findings of the project will be communicated using a comprehensive dissemination strategy. This strategy includes several forms of dissemination to reach individuals and stakeholder groups at the national and international level. Specifically, this will involve publishing in peer-reviewed journals, presenting at national and international conferences, posting on social media sites such as Twitter, making announcements on the project’s website (www.aspireproject.net) and emailing study findings to participants and relevant stakeholders.

Supplementary Material

Footnotes

Twitter: @robin_pap

Contributors: RP is the guarantor. RP conceived the project and prepared the manuscript. CL, MS and PS reviewed drafts to help refine the manuscript. All authors have read and approved the final draft.

Funding: This project is supported by an Australian Government Research Training Program Scholarship. This project is in part supported by a research grant from the Australasian College of Paramedicine (ACP).

Patient consent for publication: Not required.

Provenance and peer review: Not commissioned; externally peer reviewed.

References

- 1. Donabedian A. An introduction to quality assurance in healthcare. Oxford: Oxford University Press, 2003.

- 2. Mainz J. Defining and classifying clinical indicators for quality improvement. Int J Qual Health Care 2003;15:523–30. doi:10.1093/intqhc/mzg081

- 3. Campbell SM, Braspenning J, Hutchinson A, et al. Research methods used in developing and applying quality indicators in primary care. BMJ 2003;326:816–9. doi:10.1136/bmj.326.7393.816

- 4. Lawrence M, Olesen F. Indicators of quality in health care. Eur J Gen Pract 1997;3:103–8. doi:10.3109/13814789709160336

- 5. Lowthian JA, Cameron PA, Stoelwinder JU, et al. Increasing utilisation of emergency ambulances. Aust Health Rev 2011;35:63–9. doi:10.1071/AH09866

- 6. Paramedics Australasia. Paramedicine role descriptions [online], 2012. Available: https://www.paramedics.org

- 7. von Vopelius-Feldt J, Benger J. Who does what in prehospital critical care? An analysis of competencies of paramedics, critical care paramedics and prehospital physicians. Emerg Med J 2014;31:1009–13. doi:10.1136/emermed-2013-202895

- 8. Sayre MR, White LJ, Brown LH, et al. The national EMS research agenda. Prehosp Emerg Care 2002;6:1–43.

- 9. Owen RC. The development and testing of indicators of prehospital care quality [dissertation]. Manchester: University of Manchester, 2010.

- 10. Australian Government Productivity Commission, Steering Committee for the Review of Government Service Provision. Report on Government Services, Chapter 11: Ambulance services [online], 2020. Available: https://www.pc.gov.au/research/ongoing/report-on-government-services/2019/health/ambulance-services

- 11. Studnek JR, Garvey L, Blackwell T, et al. Association between prehospital time intervals and ST-elevation myocardial infarction system performance. Circulation 2010;122:1464–9. doi:10.1161/CIRCULATIONAHA.109.931154

- 12. Takahashi M, Kohsaka S, Miyata H, et al. Association between prehospital time interval and short-term outcome in acute heart failure patients. J Card Fail 2011;17:742–7. doi:10.1016/j.cardfail.2011.05.005

- 13. Byrne JP, Mann NC, Dai M, et al. Association between emergency medical service response time and motor vehicle crash mortality in the United States. JAMA Surg 2019;154:286–93. doi:10.1001/jamasurg.2018.5097

- 14. Blanchard IE, Doig CJ, Hagel BE, et al. Emergency medical services response time and mortality in an urban setting. Prehosp Emerg Care 2012;16:142–51. doi:10.3109/10903127.2011.614046

- 15. Pons PT, Haukoos JS, Bludworth W, et al. Paramedic response time: does it affect patient survival? Acad Emerg Med 2005;12:594–600. doi:10.1197/j.aem.2005.02.013

- 16. Durham M, Faulkner M, Deakin C. Targeted response? An exploration of why ambulance services find government targets particularly challenging. Br Med Bull 2016;120:35–42. doi:10.1093/bmb/ldw047

- 17. Pap R, Lockwood C, Stephenson M, et al. Indicators to measure prehospital care quality: a scoping review. JBI Database System Rev Implement Rep 2018;16:2192–223. doi:10.11124/JBISRIR-2017-003742

- 18. Aromataris E, Munn Z. JBI manual for evidence synthesis [online], 2020. Available: https://synthesismanual.jbi.global/

- 19. Pap R, Lockwood C, Stephenson M, et al. Indicators to measure pre-hospital care quality: a scoping review protocol. JBI Database System Rev Implement Rep 2017;15:1537–42. doi:10.11124/JBISRIR-2016-003141

- 20. Campbell SM, Roland MO, Buetow SA. Defining quality of care. Soc Sci Med 2000;51:1611–25. doi:10.1016/S0277-9536(00)00057-5

- 21. Munn Z, Lockwood C, Moola S. The development and use of evidence summaries for point of care information systems: a streamlined rapid review approach. Worldviews Evid Based Nurs 2015;12:131–8. doi:10.1111/wvn.12094

- 22. Olaussen A, Semple W, Oteir A, et al. Paramedic literature search filters: optimised for clinicians and academics. BMC Med Inform Decis Mak 2017;17:1–6. doi:10.1186/s12911-017-0544-z

- 23. Joanna Briggs Institute. The JBI approach [online], 2017. Available: http://joannabriggs.org/jbi-approach.html

- 24. Ganann R, Ciliska D, Thomas H. Expediting systematic reviews: methods and implications of rapid reviews. Implement Sci 2010;5:1–10. doi:10.1186/1748-5908-5-56

- 25. Wyer PC, Rowe BH. Evidence-based reviews and databases: are they worth the effort? Developing evidence summaries for emergency medicine. Acad Emerg Med 2007;14:960–4. doi:10.1197/j.aem.2007.06.011

- 26. Nair R, Aggarwal R, Khanna D. Methods of formal consensus in classification/diagnostic criteria and guideline development. Semin Arthritis Rheum 2011;41:95–105. doi:10.1016/j.semarthrit.2010.12.001

- 27. Fitch K, Bernstein SJ, Aguilar MD, et al. The RAND/UCLA appropriateness method user’s manual [online], 2001. Available: http://www.rand.org

- 28. Borah R, Brown AW, Capers PL, et al. Analysis of the time and workers needed to conduct systematic reviews of medical interventions using data from the PROSPERO registry. BMJ Open 2017;7:e012545. doi:10.1136/bmjopen-2016-012545

- 29. Khodyakov D, Hempel S, Rubenstein L, et al. Conducting online expert panels: a feasibility and experimental replicability study. BMC Med Res Methodol 2011;11:174–82. doi:10.1186/1471-2288-11-174

- 30. Perla RJ, Provost LP, Parry GJ. Seven propositions of the science of improvement: exploring foundations. Qual Manag Health Care 2013;22:170–86. doi:10.1097/QMH.0b013e31829a6a15

- 31. Levy PE, Williams JR. The social context of performance appraisal: a review and framework for the future. J Manage 2004;30:881–905. doi:10.1016/j.jm.2004.06.005

- 32. Hedge JW, Teachout MS. Exploring the concept of acceptability as a criterion for evaluating performance measures. Group Organ Manag 2000;25:22–44. doi:10.1177/1059601100251003

- 33. Sekhon M, Cartwright M, Francis JJ. Acceptability of healthcare interventions: an overview of reviews and development of a theoretical framework. BMC Health Serv Res 2017;17:1–13. doi:10.1186/s12913-017-2031-8

- 34. Biesta G. Pragmatism and the philosophical foundations of mixed methods research. In: Tashakkori A, Teddlie C, eds. SAGE handbook of mixed methods in social and behavioral research. 2nd ed. Thousand Oaks: SAGE Publications, Inc, 2010.

- 35. Australian Health Practitioner Regulation Agency. Annual report 2018/19: our national scheme: for safer healthcare [online], 2019. Available: https://www.ahpra.gov.au

- 36. Sullivan GM, Artino AR. Analyzing and interpreting data from Likert-type scales. J Grad Med Educ 2013;5:541–2. doi:10.4300/JGME-5-4-18

- 37. Harpe SE. How to analyze Likert and other rating scale data. Curr Pharm Teach Learn 2015;7:836–50. doi:10.1016/j.cptl.2015.08.001

- 38. Creswell JW, Plano Clark VL. Designing and conducting mixed methods research. 2nd ed. Los Angeles: Sage Publications Inc, 2011: 457.

- 39. Palinkas LA, Horwitz SM, Green CA, et al. Purposeful sampling for qualitative data collection and analysis in mixed method implementation research. Adm Policy Ment Health 2015;42:533–44. doi:10.1007/s10488-013-0528-y

- 40. Saunders B, Sim J, Kingstone T, et al. Saturation in qualitative research: exploring its conceptualization and operationalization. Qual Quant 2018;52:1893–907. doi:10.1007/s11135-017-0574-8

- 41. Sandelowski M. What’s in a name? Qualitative description revisited. Res Nurs Health 2010;33:77–84.

- 42. Vaismoradi M, Turunen H, Bondas T. Content analysis and thematic analysis: implications for conducting a qualitative descriptive study. Nurs Health Sci 2013;15:398–405. doi:10.1111/nhs.12048

- 43. Castleberry A, Nolen A. Thematic analysis of qualitative research data: is it as easy as it sounds? Curr Pharm Teach Learn 2018;10:807–15. doi:10.1016/j.cptl.2018.03.019

- 44. Erlingsson C, Brysiewicz P. A hands-on guide to doing content analysis. Afr J Emerg Med 2017;7:93–9. doi:10.1016/j.afjem.2017.08.001

- 45. Hadi MA, José Closs S. Ensuring rigour and trustworthiness of qualitative research in clinical pharmacy. Int J Clin Pharm 2016;38:641–6. doi:10.1007/s11096-015-0237-6

- 46. Jones P, Shepherd M, Wells S, et al. Review article: what makes a good healthcare quality indicator? A systematic review and validation study. Emerg Med Australas 2014;26:113–24. doi:10.1111/1742-6723.12195

- 47. Barr S. Practical performance measurement: using the PuMP blueprint for fast, easy and engaging KPIs. Samford: PuMP Press, 2014: 356.

- 48. Siriwardena AN, Gillam S. Measuring for improvement. Qual Prim Care 2013;21:293–301.

- 49. Donabedian A. The quality of care: how can it be assessed? JAMA 1988;260:1743–8.

- 50. Kötter T, Blozik E, Scherer M. Methods for the guideline-based development of quality indicators: a systematic review. Implement Sci 2012;7:21. doi:10.1186/1748-5908-7-21

- 51. Mainz J. Developing evidence-based clinical indicators: a state of the art methods primer. Int J Qual Health Care 2003;15 Suppl 1:i5–11. doi:10.1093/intqhc/mzg084

- 52. Campbell SM, Kontopantelis E, Hannon K, et al. Framework and indicator testing protocol for developing and piloting quality indicators for the UK quality and outcomes framework. BMC Fam Pract 2011;12:85. doi:10.1186/1471-2296-12-85

- 53. Krabbe PFM. The measurement of health and health status: concepts, methods and applications from a multidisciplinary perspective. San Diego: Elsevier Science & Technology, 2016: 380.

- 54. Delnoij DMJ, Westert GP. Assessing the validity of quality indicators: keep the context in mind! Eur J Public Health 2012;22:452–3. doi:10.1093/eurpub/cks086

- 55. Lawrence M, Olesen F. Indicators of quality in health care. Eur J Gen Pract 1997;3:103–8. doi:10.3109/13814789709160336

- 56. Frongillo EA, Baranowski T, Subar AF, et al. And Cross-Context equivalence of measures and indicators. J Acad Nutr Diet 2018:1–14.

- 57. Marshall MN, Shekelle PG, McGlynn EA, et al. Can health care quality indicators be transferred between countries? Qual Saf Health Care 2003;12:8–12. doi:10.1136/qhc.12.1.8

- 58. Campbell SM, Braspenning J, Hutchinson A, et al. Research methods used in developing and applying quality indicators in primary care. Qual Saf Health Care 2002;11:358–64. doi:10.1136/qhc.11.4.358

- 59. Campbell SM, Cantrill JA. Consensus methods in prescribing research. J Clin Pharm Ther 2001;26:5–14. doi:10.1046/j.1365-2710.2001.00331.x

- 60. Siriwardena AN, Gillam S. Quality improvement in primary care: the essential guide. London: CRC Press, Taylor & Francis Group, 2014: 159.

- 61. Sollecito WA, Johnson JK. McLaughlin and Kaluzny’s continuous quality improvement in health care. 4th ed. Burlington: Jones & Bartlett Learning, 2013: 619.

- 62. Lloyd R. Quality health care: a guide to developing and using indicators. Sudbury: Jones and Bartlett, 2004: 343.

- 63. Mainz J. Quality indicators: essential for quality improvement. Int J Qual Health Care 2004;16:i1–2. doi:10.1093/intqhc/mzh036

- 64. Wollersheim H, Hermens R, Hulscher M, et al. Clinical indicators: development and applications. Neth J Med 2007;65:15–22.

- 65. American Educational Research Association, American Psychological Association, National Council on Measurement in Education. Standards for educational and psychological testing. Washington: American Educational Research Association, 2014: 230.

- 66. Teddlie C, Tashakkori A. Overview of contemporary issues in mixed methods research. In: Tashakkori A, Teddlie C, eds. SAGE handbook of mixed methods in social and behavioral research. 2nd ed. Thousand Oaks: SAGE Publications, Inc, 2010.

- 67. Onwuegbuzie A, Combs J. Emergent data analysis techniques in mixed methods research: a synthesis. In: Tashakkori A, Teddlie C, eds. SAGE handbook of mixed methods in social and behavioral research. 2nd ed. Thousand Oaks: SAGE Publications, Inc, 2010.

- 68. Bowles KH, Holmes JH, Naylor MD, et al. Expert consensus for discharge referral decisions using online Delphi. AMIA Annu Symp Proc 2003:106–9.

- 69. Snyder-Halpern R, Thompson CB, Schaffer J. Comparison of mailed vs. internet applications of the Delphi technique in clinical informatics research. Proc AMIA Symp 2000:809–13.
