Abstract

Background and Purpose:

The NIHSS, designed and validated for use in clinical stroke trials, is now required for all stroke patients at hospital admission. Recertification is required annually but no data support this frequency; the effect of mandatory training prior to recertification is unknown.

Methods:

To clarify optimal recertification frequency and training effect, we assessed users’ mastery of the NIHSS over several years using correct scores (accuracy) on each item of the 15-item scale. We also constructed 9 technical errors that could result from misunderstanding the scoring rules. We measured accuracy and the frequency of these technical errors over time. Using multivariable regression, we assessed the effect of time, repeat testing, and profession on user mastery.

Results:

The final dataset included 1.3×10⁶ examinations. Data were consistent among all 3 online vendors that provide training and certification. Test accuracy showed no significant changes over time. Technical error rates were remarkably low, ranging from 0.48 to 1.36 per 90 test items. Within two vendors (that do not require training), the technical error rates increased negligibly over time (p<0.05). In data from a third vendor, mandatory training prior to recertification improved (reduced) technical errors but not accuracy.

Conclusions:

The data suggest that mastery of NIHSS scoring rules is stable over time, and the recertification interval should be lengthened. Mandatory retraining may be needed after unsuccessful recertifications, but not routinely otherwise.

Keywords: Stroke, clinimetrics, rating scales

The National Institutes of Health Stroke Scale (NIHSS) has become the de facto standard for rating neurological deficits in stroke patients [1–3]. The NIHSS emerged during the National Institute of Neurological Disorders and Stroke (NINDS) trial of rt-PA for Acute Ischemic Stroke (the Trial) to standardize assessments and minimize variation across trial sites [4]. During the Trial, participating investigators and coordinators were asked to train and certify at trial launch, to recertify after 6 months, and then to recertify annually [5]. No data supported this arbitrary annual recertification frequency.

Now, private vendors offer on-line training and certification [3]. Certification is required annually, but we sought data to support that certification frequency. We further asked whether training should be mandated prior to re-certification.

Methods

The data that support the findings of this study are available from the corresponding author upon reasonable request. The Cedars-Sinai Institutional Review Board determined no informed consent was necessary for this project. Online NIHSS video training and certification is managed by 3 vendors using 3 groups of patient videos, Groups A, B, or C [1]. Each group contains videos of 6 stroke patients, chosen to include a balanced sample of deficits. Vendor 1 does not require training before the user attempts certification; Vendor 2 has always required training; Vendor 3 began requiring training before certification on May 5, 2017. User identities are masked on all 3 vendor sites.

We defined 9 technical errors that would occur without recalling the NIHSS scoring rules (Supplemental Table I). Correct patient assessment (accuracy) was measured by comparing each user response to the correct answers. In each cohort there were 90 answers (15 items scored in 6 patients), so accuracy was averaged (±SD) per 90 items. A general linear model was used to predict error rate while holding constant Year, Exam (A,B,C), Cohort Size, and User Type (New, Repeat).
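As an illustration only, the accuracy measure described above (correct answers out of 90, averaged with SD across users) might be computed as in the following minimal sketch. The function names, the answer key, and the example scores are hypothetical and are not taken from the study data or the vendors' systems.

```python
from statistics import mean, stdev

ITEMS_PER_PATIENT = 15      # NIHSS items scored per patient video
PATIENTS_PER_GROUP = 6      # patient videos in each certification group
ANSWERS_PER_EXAM = ITEMS_PER_PATIENT * PATIENTS_PER_GROUP  # 90 answers


def accuracy_per_exam(responses, answer_key):
    """Count how many of a user's 90 responses match the answer key."""
    if len(responses) != ANSWERS_PER_EXAM or len(answer_key) != ANSWERS_PER_EXAM:
        raise ValueError("each exam must contain exactly 90 answers")
    return sum(r == k for r, k in zip(responses, answer_key))


def summarize(scores):
    """Return the mean and sample SD of per-exam accuracy scores."""
    return mean(scores), stdev(scores)


# Hypothetical example: a user who misses 5 of the 90 items.
key = [0] * ANSWERS_PER_EXAM
user = [0] * 85 + [1] * 5
score = accuracy_per_exam(user, key)
```

Here `score` is the number of correct answers out of 90 for one user; `summarize` would then be applied to the scores of all users in a cohort.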

Results

We assessed NIHSS scoring across 1,313,733 raters from Vendor 1 (n=255,147), Vendor 2 (n=547,949), and Vendor 3 (n=510,637). Scores for each of the 90 items showed consistency across all 3 vendors (Supplemental Figure).

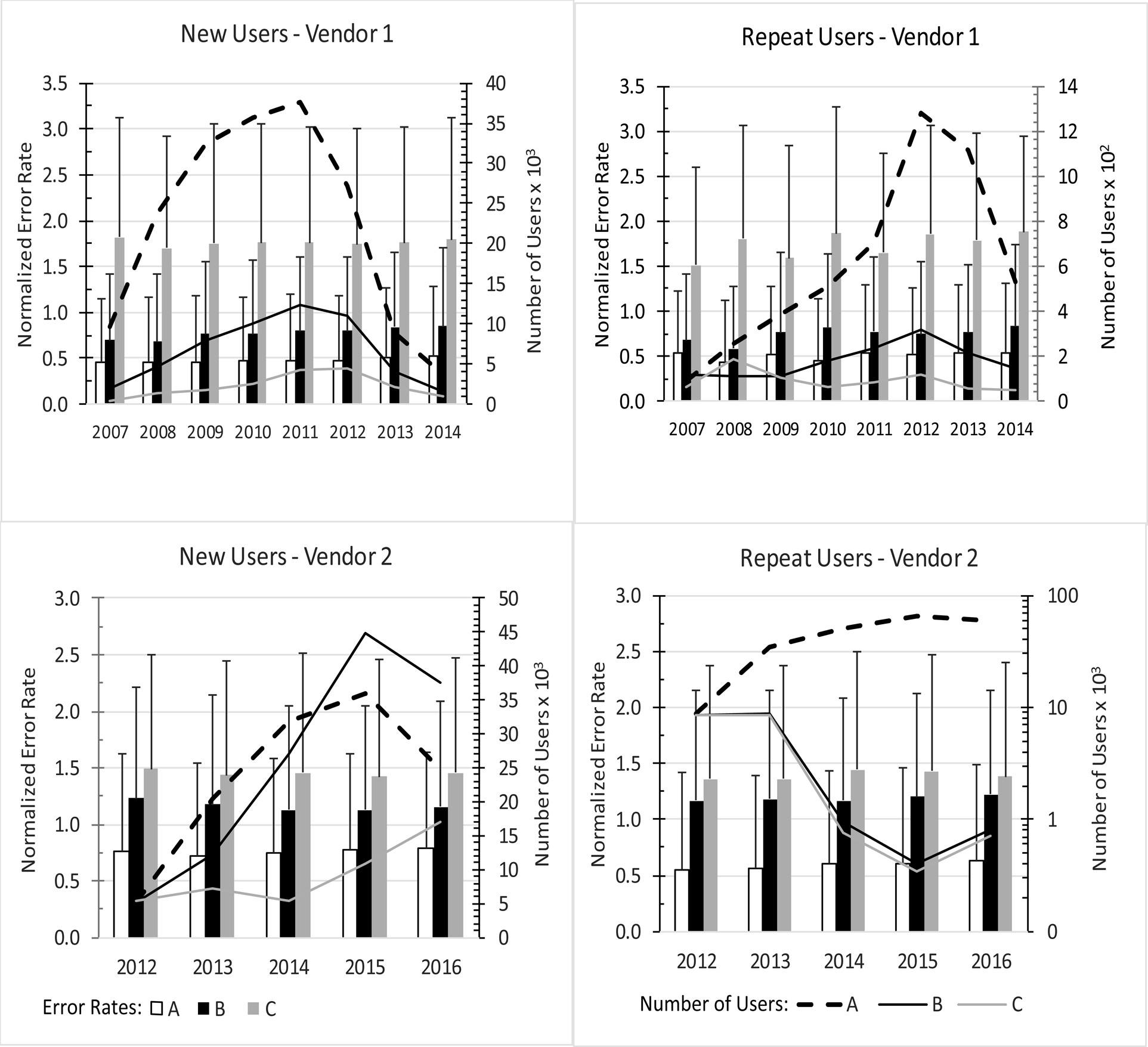

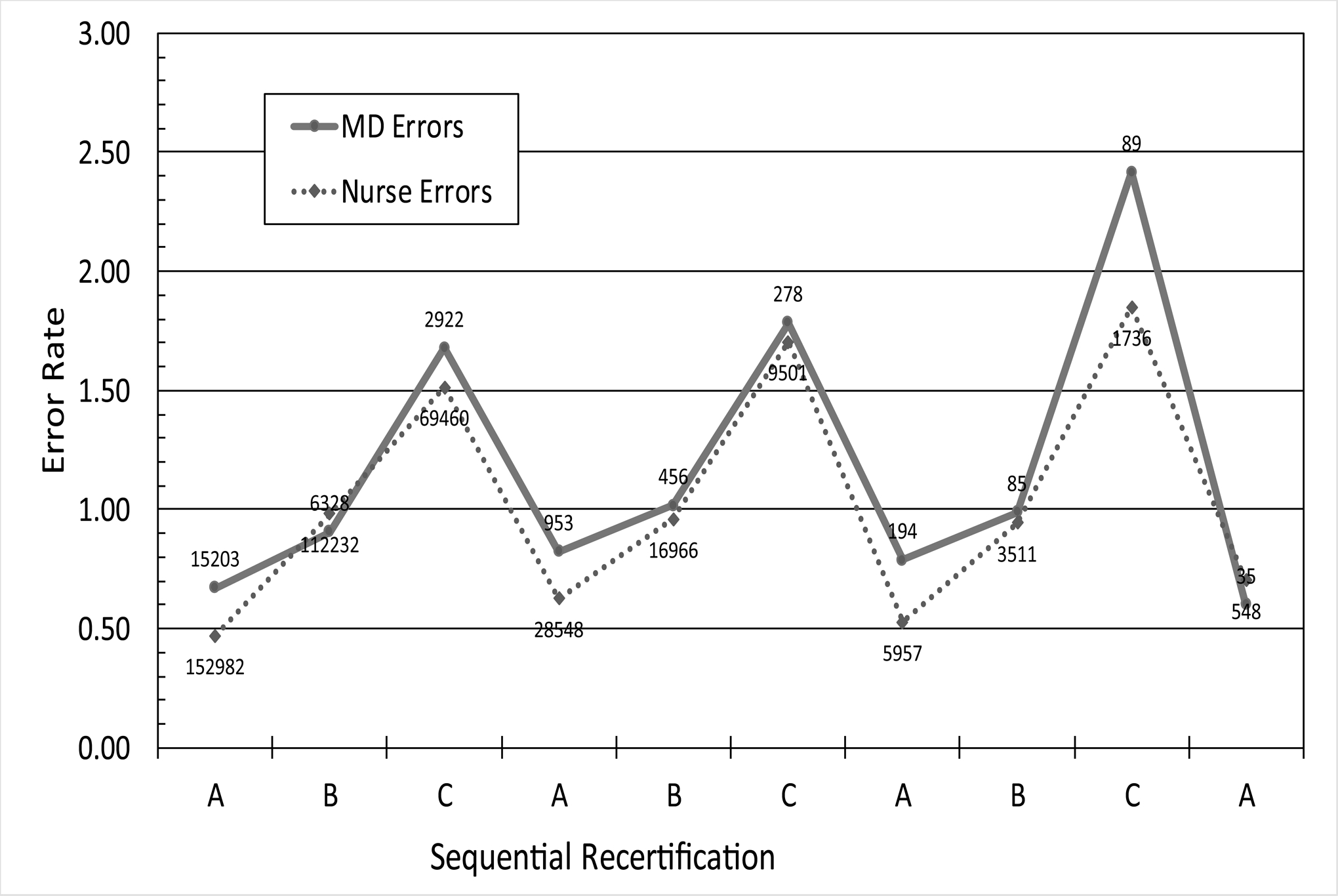

Results are detailed in Supplemental Table II. Accuracy ranged from 82.08 to 88.0 per 90 test items, showing remarkable consistency. Technical error rates ranged from 0.48 to 1.36 errors per 90 test items. There was no difference between repeat and new users in either testing accuracy or technical errors. Over several years the number of technical errors in Vendor 1, which never required training before certification, increased trivially (0.13 errors/year, p<0.001, Figure 1, Supplemental Table III). Errors in Vendor 2, which always required training, decreased negligibly (0.014 errors/year, p<0.05, Figure 1, Supplemental Table IV). Within Vendor 3, there was no significant change in error rate over several years (p>0.05; Figure 2, Supplemental Table V). By profession, RNs had significantly lower error rates than MDs (p<0.05) when all else was held constant (Figure 2).
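To make the "errors per year" figures concrete, the sketch below fits a simple least-squares line to yearly mean error rates. It is a deliberately simplified stand-in for the general linear model described in the Methods (it ignores Exam, Cohort Size, and User Type), and the yearly values are made up for illustration; they are not the study data.

```python
def ols_slope(xs, ys):
    """Least-squares slope of ys regressed on xs (single predictor)."""
    n = len(xs)
    if n < 2 or n != len(ys):
        raise ValueError("need two or more paired observations")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    return sxy / sxx


# Made-up yearly mean technical errors per 90 items (illustration only).
years = [2010, 2011, 2012, 2013, 2014]
mean_errors = [0.50, 0.63, 0.76, 0.89, 1.02]

slope = ols_slope(years, mean_errors)  # change in errors per 90 items per year
```

A slope of this size means roughly one additional technical error per 90 items only after several years, which is why a statistically significant trend can still be trivial in practice.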

Figure 1. Technical Errors for New and Repeat Users in Vendors 1 and 2.

The Technical Error rate in Groups A, B or C is plotted by year as mean and standard deviation. Total number of users in each year is plotted on the right-hand vertical axis.

Figure 2. Technical Errors for MD and RN users in Vendor 3.

Users’ self-described profession (MD or RN) was used to assess error rates by profession over time. Users repeated certification up to 10 times and the certification groups are labeled on the x-axis. Number of users per data point is labeled. RN users made significantly fewer technical errors than MD users (p<0.05). Over the 10 years, the technical error rates trended higher, reaching statistical significance for Group C (p<0.001).

Discussion

We found little evidence of decrement in NIHSS mastery over time (Supplemental Table II, Figures 1 and 2), suggesting that users retain a fundamental understanding of the proper use of the scale. Re-certification is time-consuming and burdensome, the original annual re-certification frequency was arbitrary, and the present data offer no rationale for preserving the annual requirement. We therefore recommend lengthening the interval between re-certifications (Table).

Table. Recommendations for NIHSS Training and Certification.

Based on the data we examined, we propose these training and certification intervals. The opinions expressed here are solely those of the authors and do not represent any recommendation or endorsement by the American Heart Association/American Stroke Association.

| No. | Recommendation |
|---|---|
| 1 | Training should be required prior to first certification. |
| 2 | Recertification should occur 1 year after first certification. After that, recertification should be required 2 years after the last successful recertification. After 4 successful recertifications, the interval should increase to 3 years between recertifications. |
| 3 | Retraining should be required after any unsuccessful recertification. |
| 4 | Vendors should attempt to identify and track users across platforms, to standardize users’ certification and reduce gaming. |
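The interval rule in the second recommendation can be sketched as a small function. This is one possible reading, not a specification: it assumes that the first (1-year) recertification counts toward the "4 successful recertifications", and the function names are hypothetical.

```python
def next_interval_years(successful_recerts):
    """Years until the next recertification is due.

    Assumption: `successful_recerts` counts every successful
    recertification so far, including the first one at 1 year.
    """
    if successful_recerts < 0:
        raise ValueError("count of recertifications cannot be negative")
    if successful_recerts == 0:
        return 1   # first recertification, 1 year after first certification
    if successful_recerts < 4:
        return 2   # 2 years after the last successful recertification
    return 3       # after 4 successful recertifications


def recert_schedule(n_recerts):
    """Years since first certification at which each recertification falls due."""
    due_years = []
    elapsed = 0
    for completed in range(n_recerts):
        elapsed += next_interval_years(completed)
        due_years.append(elapsed)
    return due_years


schedule = recert_schedule(6)
```

Under this reading, a user who passes every time would recertify at years 1, 3, 5, 7, 10, and 13 after first certification, compared with 13 annual recertifications over the same period.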

The comparability of error rates between the vendor that never required training and the vendor that always did supports an inference that frequent retraining may be unnecessary. Given the very large size of the data sets included here, and the triviality of the increase in error rates among users of Vendor 1, the data indicate that users retain a fundamental understanding of the scoring rules over time; that training does not materially affect error rates; and that users tend to use the scale correctly over many years. There is a considerable opportunity cost associated with overly frequent training and certification, and we feel that our data, limited though they are, afford the opportunity to reduce the re-certification burden on stroke professionals.

Physicians and nurses performed comparably, but nurses exhibited fewer technical errors (Figure 2). This finding suggests that nurse scoring could substitute for physician scoring when needed during hospital admission.

The main strength of this study is the large dataset drawn from the 3 vendors that offer online NIHSS certification. Users come from several countries and include users taking NIHSS training and certification in their own language.

This study has limitations. Because of the large sample size, some statistically significant findings are not clinically meaningful. We cannot track individual users across vendors, relying instead on users to self-identify their profession and whether they are new or repeat users. We based this analysis on 9 technical errors that rely on understanding the NIHSS scoring rules; other constructed errors, or the crude pass/fail rate, could lead to different results.

In conclusion, using very large datasets comprising the 3 vendors offering NIHSS on-line certification, we confirmed the scale performs as intended over many years of recertification. We found no decrement in users’ mastery of the scoring rules, suggesting the interval between recertifications could be lengthened. As intended, physician and nurse performance remain comparable.

Supplementary Material

Funding

Supported by R01 NS075930 (PL) and U01 NS088312 (PL), both from the National Institute of Neurological Disorders and Stroke; R03 MH106922 (AA) from the National Institute of Mental Health; K25AG051782 (AA) from the National Institute on Aging; and the Burroughs Wellcome Fund Career Award at the Scientific Interface (AA).

Footnotes

Disclosures

PL, AA, AL: None

JK, BW, and MB are employees of Apex Innovations

AP and JH are shareholders and officers of HealthCarePoint

References

1. Lyden P. Using the National Institutes of Health Stroke Scale: A Cautionary Tale. Stroke. 2017;48:513–519.

2. Lyden P, Raman R, Liu L, Emr M, Warren M, Marler J. National Institutes of Health Stroke Scale certification is reliable across multiple venues. Stroke. 2009;40:2507–2511.

3. Lyden P, Raman R, Liu L, Grotta J, Broderick J, Olson S, et al. NIHSS training and certification using a new digital video disk is reliable. Stroke. 2005;36:2446–2449.

4. Brott T, Adams HP Jr., Olinger CP, Marler JR, Barsan WG, Biller J, et al. Measurements of acute cerebral infarction: a clinical examination scale. Stroke. 1989;20:864–870.

5. The National Institute of Neurological Disorders and Stroke rt-PA Stroke Study Group. Tissue plasminogen activator for acute ischemic stroke. N Engl J Med. 1995;333:1581–1587.
