Abstract

Capsule endoscopy has revolutionized the management of small-bowel diseases owing to its convenience and noninvasiveness. Capsule endoscopy is a common method for the evaluation of obscure gastrointestinal bleeding, Crohn’s disease, small-bowel tumors, and polyposis syndrome. However, the laborious reading process, oversight of small-bowel lesions, and lack of locomotion are major obstacles to expanding its application. Along with recent advances in artificial intelligence, several studies have reported the promising performance of convolutional neural network systems for the diagnosis of various small-bowel lesions including erosion/ulcers, angioectasias, polyps, and bleeding lesions, which have reduced the time needed for capsule endoscopy interpretation. Furthermore, colon capsule endoscopy and capsule endoscopy locomotion driven by magnetic force have been investigated for clinical application, and various capsule endoscopy prototypes for active locomotion, biopsy, or therapeutic approaches have been introduced. In this review, we will discuss the recent advancements in artificial intelligence in the field of capsule endoscopy, as well as studies on other technological improvements in capsule endoscopy.

Keywords: Artificial intelligence, Capsule endoscopy, Convolutional neural network, Locomotion

INTRODUCTION

Since the introduction of the first capsule endoscope in 2000, capsule endoscopy (CE) has become an essential noninvasive modality for the investigation and diagnosis of small-bowel (SB) diseases [1,2]. The capsule endoscope moves through the entire gastrointestinal (GI) tract and facilitates the detection of SB mucosal abnormalities in regions that conventional endoscopes cannot reach. Furthermore, its ease of use, patient comfort, and safety have led to the extensive use of CE [3]. Over time, CE has become the first-line investigation tool for obscure GI bleeding and an important method for the evaluation of Crohn’s disease and SB tumors and for the surveillance of polyposis syndromes [4,5]. However, CE also presents several challenges, such as the time-consuming and tedious reading process, lack of active locomotion, inability to obtain biopsies, and inability to perform therapeutic interventions such as drug delivery. To overcome these drawbacks, several research groups are developing novel technologies, most notably the application of artificial intelligence (AI) to CE. Here, we present AI approaches for the detection of SB abnormalities using CE and introduce recent studies on innovative technologies in CE.

ARTIFICIAL INTELLIGENCE IN CAPSULE ENDOSCOPY

Role of artificial intelligence in capsule endoscopy

Together with the growing volume of medical data, the evolution of computer technology has led to recent advances in AI using deep learning in the medical field [6]. Computer-aided diagnosis (CAD) systems using esophagogastroduodenoscopy (EGD) and colonoscopy images have become a vigorous research field, and these systems have demonstrated promising performance in the field of gastroenterology [7-9]. Typically, a CE video contains an average of 50,000–60,000 frames per examination and requires 30–120 min of reading time, depending on the experience level of the reader [5,10]. Because physicians passively read numerous images with intense focus and attention, CE reading is a time-consuming and tedious process. Furthermore, SB abnormalities may be present in only one or two frames of the video and appear with a wide diversity of color, shape, and size. This highlights the inherent risk of oversights during manual reading by physicians. Given the substantial number of CE images, the use of AI in CE is an attractive solution for reducing the reading time and simplifying the identification of specific landmarks and suspicious abnormalities. There is growing evidence for the clinical implications of AI in the field of CE [11].

Application of artificial intelligence in capsule endoscopy

Since the late 2000s, AI has been developed to detect SB abnormalities based on CE images. Early studies concentrated on technical issues and usually used a support vector machine (SVM) [12-16] or a multilayer perceptron network [17-19] as the AI classifier. Studies from the biocomputational field have shown good performance in detecting polyps/tumors [12,13], ulcers [14,15], celiac disease [16,20], hookworms [21], angioectasia [22], and bleeding [19,23]. However, these studies used data from a limited number of patients with insufficient clinical information in terms of inclusion and exclusion criteria. Therefore, more robust evidence is needed before the CAD systems proposed in the biocomputational field can be applied in clinical situations. Along with the evolution of deep learning algorithms, the convolutional neural network (CNN), which extracts specific features through convolutional and pooling layers and uses back-propagation to learn the best feature maps, has become the main deep learning algorithm for image analysis [24]. CNN systems have shown outstanding performance in the detection or characterization of esophageal [7], gastric [8], and colorectal abnormalities [9], and have been actively investigated for use with CE in clinical practice (Table 1).
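As a rough illustration of the convolutional and pooling layers mentioned above, the following minimal sketch applies one convolution, a ReLU activation, and one max-pooling step to a toy image. The 5×5 image, the hand-set edge kernel, and all function names are assumptions made for illustration only; in a real CNN the kernel weights would be learned by back-propagation, and none of the cited studies is claimed to use this exact code.

```python
from typing import List

Matrix = List[List[float]]


def conv2d_valid(image: Matrix, kernel: Matrix) -> Matrix:
    """Slide the kernel over the image with stride 1 and no padding."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


def relu(fmap: Matrix) -> Matrix:
    """Keep positive responses and zero out negative ones."""
    return [[max(0.0, v) for v in row] for row in fmap]


def max_pool(fmap: Matrix, size: int = 2) -> Matrix:
    """Non-overlapping max pooling: keep the strongest response per window."""
    return [
        [
            max(fmap[r + i][c + j] for i in range(size) for j in range(size))
            for c in range(0, len(fmap[0]) - size + 1, size)
        ]
        for r in range(0, len(fmap) - size + 1, size)
    ]


# Hypothetical toy "image": dark on the left, bright on the right.
image = [[0.0, 0.0, 1.0, 1.0, 1.0] for _ in range(5)]

# Hand-set vertical-edge kernel (an assumption; a CNN would learn this).
kernel = [[-1.0, 1.0], [-1.0, 1.0]]

feature_map = relu(conv2d_valid(image, kernel))
pooled = max_pool(feature_map)

print(feature_map[0])  # response peaks where the dark-to-bright edge sits
print(pooled)
```

The pooled map is smaller than the feature map but still marks the column where the edge lies, which is the sense in which pooling condenses the features extracted by convolution.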

Table 1.

Summary of Clinical Studies Using Machine Learning in the Field of Capsule Endoscopy

| Study | Aim of study | Design of study | Number of subjects | Type of AI | Outcomes |
|---|---|---|---|---|---|
| Iakovidis et al. (2014) [32] | Lesion detection based on color saliency | Retrospective | Training: 1,370 images from 252 patients; Test: 137 images | SVM | Average AUROC 89.2% |
| Aoki et al. (2019) [25] | Detection of SB erosions/ulcers | Retrospective | Training: 5,360 images from 115 patients; Validation: 10,440 images from 65 patients | CNN | AUROC 0.958, accuracy 90.8%, sensitivity 88.2%, specificity 90.9% |
| Aoki et al. (2020) [26] | Clinical usefulness of CNN for detection of mucosal breaks | Retrospective | 20 CE videos | CNN | Detection rate: expert 87%, trainee 55%; Reading time: expert 3.1 min, trainee 5.2 min |
| Klang et al. (2020) [27] | Detection of SB ulcers in Crohn’s disease | Retrospective | Training and test: 17,640 images from 49 patients | CNN | AUROC 0.99, accuracy 96.7%, sensitivity 96.8%, specificity 96.6% (5-fold cross validation) |
| Leenhardt et al. (2019) [28] | Detection of GI angioectasia | Retrospective | Training: 600 images; Test: 600 images | CNN | Sensitivity 100%, specificity 96%, reading time 46.8 ms/image |
| Tsuboi et al. (2020) [29] | Detection of SB angioectasia | Retrospective | Training: 2,237 images from 141 patients; Validation: 10,488 images from 20 patients | CNN | AUROC 0.998, sensitivity 98.8%, specificity 98.4% |
| Aoki et al. (2020) [30] | Detection of blood content | Retrospective | Training: 27,847 images from 41 patients; Test: 10,208 images from 5 patients | CNN | AUROC 0.9998, sensitivity 96.6%, specificity 99.9%, accuracy 99.8%, reading speed 40.8 images/sec |
| Ding et al. (2019) [33] | Detection of SB abnormal images a) | Retrospective | Training: 158,235 images from 1,970 patients; Validation: 113,268,334 images from 5,000 patients | CNN | Sensitivity 99.9%, reading time 5.9 min |
| Saito et al. (2020) [31] | Detection and classification of protruding lesions | Retrospective | Training: 30,584 images from 292 patients; Test: 17,507 images from 73 patients | CNN | Detection: AUROC 0.911, sensitivity 90.7%, specificity 79.8%, reading time 530.462 sec; Classification: sensitivity 86.5%, 92.0%, 95.8%, 77.0%, 94.4% (polyps, nodules, epithelial tumors, submucosal tumors, venous structure) |

AI, artificial intelligence; AUROC, area under the receiver-operating characteristic curve; CE, capsule endoscopy; CNN, convolutional neural network; GI, gastrointestinal; SB, small bowel; SVM, support vector machine.

a) SB abnormal images included inflammation, ulcer, polyps, lymphangiectasia, bleeding, vascular disease, protruding lesion, lymphatic follicular hyperplasia, diverticulum, parasite, and other.

For the detection of ulcers or erosions in CE, Aoki et al. designed a CNN-based program using 5,360 CE images, all of which were manually annotated with rectangular bounding boxes [25]. This CNN model processed a validation dataset of 10,440 SB images, including 440 images of erosions and ulcerations, in 233 sec, and showed promising performance with an area under the receiver-operating characteristic curve (AUROC) of 0.958, sensitivity of 88.2%, specificity of 90.9%, and accuracy of 90.8%. Furthermore, they demonstrated the clinical usefulness of the established CNN system for relieving the reviewer’s workload without missing SB mucosal breaks [26]. This study used 20 CE videos to compare the detection rate of mucosal breaks and the reading time between endoscopist-only readings and endoscopist readings of images first screened by the established CNN. When endoscopists analyzed CE images detected by the CNN, the mean reading time was significantly reduced (expert 3.1 min, trainee 5.2 min vs. expert 12.2 min, trainee 20.7 min), whereas the detection rate did not decrease (expert 87%, trainee 55% vs. expert 84%, trainee 47%), thus showing the potential of the CNN system as a first screening tool in clinical practice. Klang et al. reported the performance of a CNN model to detect Crohn’s disease ulcers using 17,640 CE images from 49 patients [27]. Unlike Aoki et al. [25], they did not use bounding boxes or other markings to specify the lesion, and they tested the developed CNN model with both 5-fold cross validation and individual patient-level experiments, in which the model was trained on data from 48 patients and tested on the data of the one remaining patient. This CNN model showed good results with an AUROC of 0.99 and accuracy ranging from 95.4% to 96.7% for 5-fold cross validation, and an AUROC of 0.94–0.99 for individual patient-level experiments.
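To make the relationship between sensitivity, specificity, and accuracy concrete, the sketch below computes all three from a confusion matrix. The four counts are not taken from the cited study: they are illustrative values back-calculated here so that they match the reported validation set size (10,440 images, 440 with erosions or ulcerations) and the reported percentages, and should be read as an assumption.

```python
from typing import Dict


def diagnostic_metrics(tp: int, fn: int, tn: int, fp: int) -> Dict[str, float]:
    """Standard per-image diagnostic metrics from confusion-matrix counts."""
    return {
        "sensitivity": tp / (tp + fn),              # lesions correctly flagged
        "specificity": tn / (tn + fp),              # normal images correctly passed
        "accuracy": (tp + tn) / (tp + fn + tn + fp),
    }


# Illustrative, back-calculated counts (assumption, not published data):
# 440 lesion images = 388 detected + 52 missed
# 10,000 normal images = 9,090 passed + 910 false alarms
metrics = diagnostic_metrics(tp=388, fn=52, tn=9090, fp=910)

for name, value in metrics.items():
    print(f"{name}: {value:.1%}")
```

Because only 440 of the 10,440 images contain lesions, accuracy is dominated by the normal images and stays close to specificity, which is why sensitivity is usually reported alongside it in imbalanced CE datasets.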

For the detection of SB angioectasia, two studies were reported in 2018 and 2019. Leenhardt et al. proposed a CNN model for the detection of angioectasia using 300 typical angioectasia images and 300 normal images [28]. This model reached a sensitivity of 100% and a specificity of 96%, with a reading time of 39 min for a full-length SB video. Tsuboi et al. trained a CNN system using 2,237 CE images of angioectasia, and assessed its diagnostic accuracy with 10,488 SB images including 488 images of angioectasia [29]. The AUROC for detecting angioectasia was 0.998, and the sensitivity and specificity were 98.8% and 98.4%, respectively. In 2019, Aoki et al. reported a CNN system for detecting blood content, and compared its performance with that of the suspected blood indicator (SBI), which automatically tags images with suspicious hemorrhages in the reading system [30]. The dataset consisted of 27,847 total CE images, including a training dataset of 6,503 images depicting blood content from 29 patients and a validation dataset of 10,208 images, 208 of which depicted blood content. This CNN system outperformed the conventional SBI in terms of sensitivity (96.6% vs. 76.9%), specificity (99.9% vs. 99.8%), and accuracy (99.9% vs. 99.3%). The AUROC of the CNN system was 0.9998, and the CNN system took 250 sec to read 10,208 test images.
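The reading speeds quoted for these systems can be cross-checked with simple arithmetic. The sketch below is only a consistency check under stated assumptions: the image counts and timings come from the text and Table 1, whereas the 50,000-frame video length is an assumed value taken from the lower end of the typical range mentioned earlier in this review, not a figure reported by Leenhardt et al.

```python
def images_per_second(n_images: int, seconds: float) -> float:
    """Throughput of an automated reader."""
    return n_images / seconds


def minutes_for_video(n_frames: int, ms_per_image: float) -> float:
    """Time to process a whole video at a fixed per-image latency."""
    return n_frames * ms_per_image / 1000 / 60


# Blood-content CNN: 10,208 test images read in 250 sec.
blood_speed = images_per_second(10_208, 250)

# Erosion/ulcer CNN: 10,440 validation images read in 233 sec.
ulcer_speed = images_per_second(10_440, 233)

# Angioectasia CNN: 46.8 ms per image; the frame count is an ASSUMPTION.
ASSUMED_FRAMES_PER_VIDEO = 50_000
angio_minutes = minutes_for_video(ASSUMED_FRAMES_PER_VIDEO, 46.8)

print(f"blood content: {blood_speed:.1f} images/sec")
print(f"erosion/ulcer: {ulcer_speed:.1f} images/sec")
print(f"angioectasia:  {angio_minutes:.1f} min per assumed 50,000-frame video")
```

Under that assumption, the per-image latency in Table 1 and the 39-min full-video reading time quoted in the text describe the same processing speed.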

To detect protruding lesions and classify them into polyps, nodules, epithelial tumors, submucosal tumors, and venous structures, Saito et al. developed a CNN model using 30,584 CE images from 292 patients [31]. When this CNN model analyzed 17,507 test images (including 7,507 images of protruding lesions from 73 patients), the AUROC was 0.91 and the sensitivity and specificity were 90.7% and 79.8%, respectively. In the classification analysis, the sensitivities for polyps, nodules, epithelial tumors, submucosal tumors, and venous structures were 86.5%, 92.0%, 95.8%, 77.0%, and 94.4%, respectively.

Although the above-mentioned studies showed good performance of CNNs for detecting diverse SB lesions, each focused on detecting only one category of abnormality. In 2014, Iakovidis et al. developed automatic lesion detection software using an SVM, and the average AUROC for the detection of various abnormalities such as angioectasias, ulcers, polyps, and hemorrhage was 89.2% [32]. However, they tested only 137 CE images including 77 pathologies, which was insufficient to evaluate the diagnostic accuracy. Recently, Ding et al. reported a CNN model that could differentiate various abnormal lesions such as inflammation, ulcer, polyps, lymphangiectasia, bleeding, vascular disease, protruding lesion, lymphatic follicular hyperplasia, diverticulum, and parasite from normal mucosa using 113 million CE images from 6,970 patients at 77 medical centers [33]. The CNN model showed significantly higher sensitivity for the identification of abnormalities than conventional analysis by endoscopists in both per-patient analysis (99.9% vs. 74.6%) and per-lesion analysis (99.9% vs. 76.9%). In addition, the CNN model significantly reduced the reading time compared with conventional reading by endoscopists (5.9 min vs. 96.6 min), thus showing the outstanding effectiveness of CNN models. Finally, in a systematic review and meta-analysis, Soffer et al. analyzed 10 studies that provided sufficient data for a quantitative meta-analysis of the CNN technique [34]. The pooled sensitivity and specificity for ulcer detection were 0.95 and 0.94, respectively, and the pooled sensitivity and specificity for bleeding or the bleeding source were 0.98 and 0.99, respectively. However, there was high heterogeneity between studies and most studies had a high risk of bias.

Challenges and future direction for the application of artificial intelligence in the field of capsule endoscopy

Although many research groups have obtained remarkable results on the use of AI in the field of CE, AI has not yet been applied in real-world patient management beyond clinical studies. Several obstacles need to be overcome for the clinical implementation of AI. First, most published studies to date were performed retrospectively and used data from a single center or a small number of centers, which leads to inherent selection and spectrum bias and restricts the generalization of the established CNN system. AI systems for medical applications, especially CNNs, are highly dependent on training data, and high-quality data are essential for model development. In addition, because the mechanism of an AI system is difficult to explain (black box, lack of interpretability), validation is an important step in evaluating AI performance. Investigations without prospective or external validation carry the risk of overfitting (meaning that the learning model is customized too closely to the distinct training dataset), and thus of failing to predict future observations. Therefore, for meticulous evaluation and verification of the clinical relevance of the CNN system, further multicenter, prospective studies and external validation with data independent of those used for model development are mandatory. Second, in most studies, CNN systems were trained and validated using still CE images rather than videos, and using clear and accurate images rather than images from insufficiently prepared bowel with significant bubbles, debris, and bile. Furthermore, limited light, low resolution (320×320 pixels), and various orientations of SB lesions due to the free movement of the capsule endoscope in real-world practice could worsen the quality of CE images. The performance of the published CNN systems therefore cannot be guaranteed in actual clinical settings. Third, most studies developed a CNN system using data from a specific kind of CE. Because each CE system has different image processing characteristics, it is questionable whether an established CNN system can be adopted for other CE systems. Therefore, compatibility with various kinds of CE systems and use of CE data from a large variety of clinical situations are crucial for the clinical application of upcoming CNN systems.
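One practical safeguard against the overfitting described above is to split data by patient (or by center) rather than by image, so that frames from the same examination never appear in both the training and test sets. The following minimal sketch mirrors the individual patient-level experiment described earlier for Klang et al.; the function name and the toy patient IDs are hypothetical, and the same grouping idea could be applied to center IDs as a stand-in for external validation.

```python
from collections import defaultdict
from typing import Dict, Iterator, List, Tuple


def leave_one_patient_out(
    patient_ids: List[str],
) -> Iterator[Tuple[str, List[int], List[int]]]:
    """Yield (held_out_patient, train_indices, test_indices).

    All images of the held-out patient go to the test set, so no patient
    contributes images to both sides of a split.
    """
    by_patient: Dict[str, List[int]] = defaultdict(list)
    for idx, pid in enumerate(patient_ids):
        by_patient[pid].append(idx)

    for held_out in sorted(by_patient):
        test_idx = by_patient[held_out]
        train_idx = [
            i
            for pid, idxs in by_patient.items()
            if pid != held_out
            for i in idxs
        ]
        yield held_out, train_idx, test_idx


# Hypothetical toy data: one patient ID per image.
patient_ids = ["p1", "p1", "p2", "p3", "p3", "p3"]

folds = list(leave_one_patient_out(patient_ids))
for held_out, train_idx, test_idx in folds:
    train_patients = {patient_ids[i] for i in train_idx}
    test_patients = {patient_ids[i] for i in test_idx}
    # Leakage check: the two sets of patients must not overlap.
    assert not train_patients & test_patients
    print(held_out, train_idx, test_idx)
```

A random image-level split would instead scatter near-identical consecutive frames across training and test sets, which tends to inflate reported accuracy.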

As with AI applications in other medical fields, several issues remain unsolved for the clinical application of AI in CE. Before AI is incorporated into community use, cost-effectiveness and the satisfaction of both patients and physicians should be demonstrated. We believe that the AI system will be used as a supplement, but not a replacement, in the medical field. Therefore, a system for educating physicians on AI implementation and for helping them understand the technology must be established. Further, legal and ethical problems concerning responsibility for AI application and significant reimbursement concerns must be addressed.

OTHER TECHNICAL ADVANCEMENTS IN CAPSULE ENDOSCOPY

Potential for novel capsule endoscopy in clinical practice

Beyond SB examination, colon capsule endoscopy (CCE) has the advantage of being noninvasive and painless. The second-generation CCE-2 capsule has double-headed lenses, longer recording capacity, and variable frame capture rates. In a meta-analysis of studies with 1,292 patients, Spada et al. compared the results of CCE-2 and colonoscopy [35]. The sensitivity and specificity of CCE-2 for the detection of polyps larger than 6 mm were 86.0% and 88.1%, respectively, and all invasive cancers that were observed by colonoscopy were also identified by CCE-2, showing the potential role of CCE-2 as an alternative screening method [35]. In controlled studies, CCE-2 also showed acceptable performance for the assessment of the colonic mucosa in patients with Crohn’s disease and ulcerative colitis [36,37].

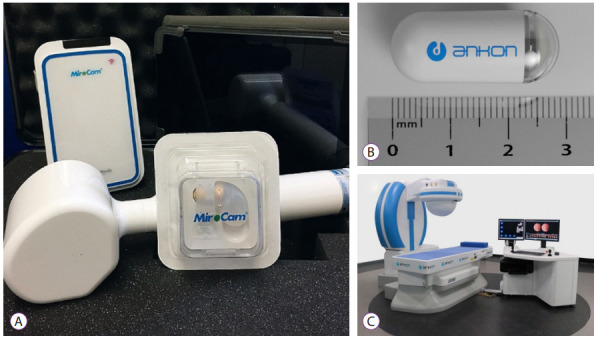

The major challenge of conventional CE is that physicians cannot control the movement, orientation, and speed of the capsule. These limitations interfere with navigation and meticulous observation of the area of interest, and reduce the diagnostic and therapeutic efficacy of CE. Because capsule locomotion and navigation could improve mucosal visualization, and in the long term enable biopsy of the target lesion and treatment delivery, various research groups have developed physician-controlled capsule endoscopes using two approaches [38]. The first approach is internal locomotion, a self-propelled mechanism such as crawling or swimming. The second approach is external locomotion, in which the capsule is propelled by an external magnetic force [39]. However, restricted power capacity and limited propulsion force are the main obstacles to the clinical implementation of internal locomotion mechanisms beyond experimental studies. Conversely, external propulsion using magnetic power is more practical and has emerged in several types of capsule endoscopes for navigating the device to the desired area (Fig. 1). Recently, magnetically guided CE was evaluated as a screening tool for gastric cancer in 3,182 asymptomatic patients, and seven patients (0.22%) were diagnosed with gastric cancer, accounting for 0.74% (7/948) of patients aged ≥50 years, showing the potential role of magnetic CE [40]. In a multicenter blinded study involving 350 patients with upper abdominal complaints, Liao et al. compared the detection of focal lesions between robotically assisted magnetically guided CE and conventional EGD [41]. The diagnostic accuracy was comparable between the two examinations, and >95% of patients preferred magnetically guided CE because of its noninvasiveness. The prospective study by Ching et al. demonstrated that magnetically assisted CE (MACE) had a better diagnostic yield than EGD in patients investigated for iron deficiency anemia [42]. Beg et al. evaluated the performance of MACE for the detection of Barrett’s esophagus and esophageal varices using a handheld magnet to hold the capsule, and MACE correctly diagnosed 15 of 16 cases of Barrett’s esophagus and 11 of 15 cases of esophageal varices [43]. These results show the feasibility of magnetically guided CE for the diagnosis of GI disease, and further clinical trials addressing superior performance, cost-effectiveness, and safety are warranted before clinical implementation.

Fig. 1.

Magnetically guided (assisted) capsule endoscope. (A) MiroCam NAVI system (Intromedic Co., Ltd., Seoul, Korea). (B, C) NaviCam capsule endoscope and NaviCam magnetic control system (Ankon Technologies Co., Ltd., Wuhan, China).

Recent studies on capsule prototypes

With respect to the locomotion of the capsule, Fontana et al. developed a single-camera spherical capsule endoscope for colorectal screening [44]. The spherical shape of this novel capsule endoscope was designed to reduce friction during its locomotion in the colon. The interaction between the permanent magnet integrated in the capsule and an external electromagnet actuates the capsule. Fu et al. proposed a magnetically actuated micro-robotic capsule with hybrid motion such as screw jet motion, paddling motion, and fin motion [45]. This capsule endoscope is moved by an electromagnetic actuation system, which generates a rotational magnetic field and an alternating magnetic field. Guo et al. reported a spiral robotic capsule with a modular structure guided by an external magnetic field [46]. This robotic capsule comprises a guide robot and an auxiliary robot, which have helical diversion grooves with different spiral directions. The two capsules move relative to each other under the same external magnetic field. After observing the guide robot, the treatment robot is swallowed and docked with the guide robot, which reduces the time needed to navigate to the target lesion.

Several approaches have recently been proposed to enhance the imaging capability of present-day CE. Jang et al. developed a capsule endoscope with four Video Graphics Array cameras [47]. Each camera has a 120° field of view, which enables capturing full 360° high-resolution images of 640×480 pixels at four frames per second. Moving apart from conventional white-light imaging, Demosthenous et al. proposed CE for the detection of fluorescence released by very low levels of indocyanine green fluorophores [48]. Because physicians would only need to examine whether the observed fluorescence level exceeded a predefined threshold, early-stage SB cancer could be cost-effectively screened by eliminating the present CE reviewing process.
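The claim that four cameras with a 120° field of view can capture a full 360° view can be checked with a short geometric sketch. The even 90° spacing of the camera headings is an assumption made here for illustration; the source does not state how the cameras are arranged.

```python
from typing import List


def covered_by(heading_deg: float, fov_deg: float, angle_deg: float) -> bool:
    """True if a direction lies within a camera's field of view."""
    # Signed angular difference wrapped into [-180, 180).
    diff = (angle_deg - heading_deg + 180) % 360 - 180
    return abs(diff) <= fov_deg / 2


# ASSUMPTION: four cameras spaced evenly around the capsule axis.
headings: List[int] = [0, 90, 180, 270]
FOV_DEG = 120  # per-camera field of view reported in the text

# Number of cameras that see each whole-degree direction.
coverage = [
    sum(covered_by(h, FOV_DEG, a) for h in headings) for a in range(360)
]

full_coverage = all(n >= 1 for n in coverage)
overlap_per_pair = FOV_DEG - 360 / len(headings)

print("full 360° coverage:", full_coverage)
print("overlap between neighboring cameras (deg):", overlap_per_pair)
```

Under this assumed layout every direction is seen by at least one camera, and neighboring views share a margin that could in principle be used for stitching the four images together.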

Several research groups have attempted to develop CE with biopsy capabilities. Micro-jaw forceps and two multiscale magnetic-based robotic devices including centimeter-scaled untethered magnetically actuated soft capsule endoscope (MASCE) and a submillimeter-scale self-folding micro-gripper have been previously reported [49,50]. Son et al. recently developed B-MASCE, which enables fine-needle aspiration biopsy [51]. B-MASCE was developed to enable axial jabbing motion of the needle and rolling locomotion in the stomach for biopsy. A magnetic field is employed for the control and torque of the magnet in CE, and four soft legs guide the penetration of the needle into the target lesion.

In addition to diagnostic capsules, several therapeutic capsule prototypes have been proposed by various research groups. Stewart et al. introduced SonoCAIT for ultrasound (US)-mediated targeted drug delivery [52]. The proposed capsule consists of a US transducer, drug delivery channel, vision module, and multichannel external tether, and uses US to release drugs and/or to enhance drug uptake via sonoporation for drug delivery to the target lesion. When drug-filled microbubbles arrive at the target lesion, the drug is released by US. Leung et al. developed a capsule for hemostasis using an inflated balloon [53]. This capsule was composed of a gas generation chamber, an acid injector, and a circuit box with flexible joints. The balloon was inflated by acid injection into a gas generation chamber filled with base powder, resulting in hemostasis by tamponade at the bleeding site.

CONCLUSIONS

With its rapid evolution, CE has become an important method for the investigation of obscure GI bleeding, Crohn’s disease, SB tumors, and polyposis syndrome. However, the time-consuming and tedious reading process and lack of active locomotion are major challenges for the widespread use of CE in clinical practice. Here, we reviewed recent CNN-based approaches for CE that have been applied to detect various SB abnormalities including erosion/ulcers, angioectasias, blood content, and protruding lesions. Although published studies showed the promising diagnostic accuracy of the CNN system, several challenges need to be overcome for its clinical application in real-world practice. Further multicenter, prospective studies and external validation will provide robust evidence for the performance of the CNN system in CE. Moreover, colon CE and magnetically guided CE showed potential for clinical use as alternatives to conventional endoscopy. Further technical advancements in CE in terms of active locomotion, image enhancement, and therapeutic approaches are being actively investigated and could be integrated into patient management in the near future.

Footnotes

Conflicts of Interest: The authors have no financial conflicts of interest.

REFERENCES

- 1.Iddan G, Meron G, Glukhovsky A, Swain P. Wireless capsule endoscopy. Nature. 2000;405:417. doi: 10.1038/35013140. [DOI] [PubMed] [Google Scholar]

- 2.Kopylov U, Seidman EG. Diagnostic modalities for the evaluation of small bowel disorders. Curr Opin Gastroenterol. 2015;31:111–117. doi: 10.1097/MOG.0000000000000159. [DOI] [PubMed] [Google Scholar]

- 3.Eliakim R. Video capsule endoscopy of the small bowel. Curr Opin Gastroenterol. 2013;29:133–139. doi: 10.1097/MOG.0b013e32835bdc03. [DOI] [PubMed] [Google Scholar]

- 4.Pennazio M, Spada C, Eliakim R, et al. Small-bowel capsule endoscopy and device-assisted enteroscopy for diagnosis and treatment of small-bowel disorders: European Society of Gastrointestinal Endoscopy (ESGE) clinical guideline. Endoscopy. 2015;47:352–376. doi: 10.1055/s-0034-1391855. [DOI] [PubMed] [Google Scholar]

- 5.ASGE Technology Committee. Wang A, Banerjee S, et al. Wireless capsule endoscopy. Gastrointest Endosc. 2013;78:805–815. doi: 10.1016/j.gie.2013.06.026. [DOI] [PubMed] [Google Scholar]

- 6.Yang YJ, Bang CS. Application of artificial intelligence in gastroenterology. World J Gastroenterol. 2019;25:1666–1683. doi: 10.3748/wjg.v25.i14.1666. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Horie Y, Yoshio T, Aoyama K, et al. Diagnostic outcomes of esophageal cancer by artificial intelligence using convolutional neural networks. Gastrointest Endosc. 2019;89:25–32. doi: 10.1016/j.gie.2018.07.037. [DOI] [PubMed] [Google Scholar]

- 8.Cho BJ, Bang CS, Park SW, et al. Automated classification of gastric neoplasms in endoscopic images using a convolutional neural network. Endoscopy. 2019;51:1121–1129. doi: 10.1055/a-0981-6133. [DOI] [PubMed] [Google Scholar]

- 9.Wang P, Berzin TM, Glissen Brown JR, et al. Real-time automatic detection system increases colonoscopic polyp and adenoma detection rates: a prospective randomised controlled study. Gut. 2019;68:1813–1819. doi: 10.1136/gutjnl-2018-317500. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.McAlindon ME, Ching HL, Yung D, Sidhu R, Koulaouzidis A. Capsule endoscopy of the small bowel. Ann Transl Med. 2016;4:369. doi: 10.21037/atm.2016.09.18. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Le Berre C, Sandborn WJ, Aridhi S, et al. Application of artificial Intelligence to gastroenterology and hepatology. Gastroenterology. 2020;158:76–94.e2. doi: 10.1053/j.gastro.2019.08.058. [DOI] [PubMed] [Google Scholar]

- 12.Liu G, Yan G, Kuang S, Wang Y. Detection of small bowel tumor based on multi-scale curvelet analysis and fractal technology in capsule endoscopy. Comput Biol Med. 2016;70:131–138. doi: 10.1016/j.compbiomed.2016.01.021. [DOI] [PubMed] [Google Scholar]

- 13.Li B, Meng MQ. Tumor recognition in wireless capsule endoscopy images using textural features and SVM-based feature selection. IEEE Trans Inf Technol Biomed. 2012;16:323–329. doi: 10.1109/TITB.2012.2185807. [DOI] [PubMed] [Google Scholar]

- 14.Eid A, Charisis VS, Hadjileontiadis LJ, Sergiadis GD. A curvelet-based lacunarity approach for ulcer detection from wireless capsule endoscopy images. Proceedings of the 26th IEEE International Symposium on Computer-Based Medical Systems; 2013 Jun 20-22; Porto, Portugal. Piscataway (NJ): IEEE; 2013. pp. 273–278. [Google Scholar]

- 15.Yuan Y, Wang J, Li B, Meng MQ. Saliency based ulcer detection for wireless capsule endoscopy diagnosis. IEEE Trans Med Imaging. 2015;34:2046–2057. doi: 10.1109/TMI.2015.2418534. [DOI] [PubMed] [Google Scholar]

- 16.Tenório JM, Hummel AD, Cohrs FM, Sdepanian VL, Pisa IT, de Fátima Marin H. Artificial intelligence techniques applied to the development of a decision-support system for diagnosing celiac disease. Int J Med Inform. 2011;80:793–802. doi: 10.1016/j.ijmedinf.2011.08.001. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Li B, Meng MQ. Computer-based detection of bleeding and ulcer in wireless capsule endoscopy images by chromaticity moments. Comput Biol Med. 2009;39:141–147. doi: 10.1016/j.compbiomed.2008.11.007. [DOI] [PubMed] [Google Scholar]

- 18.Li B, Meng MQ, Xu L. A comparative study of shape features for polyp detection in wireless capsule endoscopy images. Conf Proc IEEE Eng Med Biol Soc. 2009;2009:3731–3734. doi: 10.1109/IEMBS.2009.5334875. [DOI] [PubMed] [Google Scholar]

- 19.Sainju S, Bui FM, Wahid KA. Automated bleeding detection in capsule endoscopy videos using statistical features and region growing. J Med Syst. 2014;38:25. doi: 10.1007/s10916-014-0025-1. [DOI] [PubMed] [Google Scholar]

- 20.Zhou T, Han G, Li BN, et al. Quantitative analysis of patients with celiac disease by video capsule endoscopy: a deep learning method. Comput Biol Med. 2017;85:1–6. doi: 10.1016/j.compbiomed.2017.03.031. [DOI] [PubMed] [Google Scholar]

- 21.Wu X, Chen H, Gan T, Chen J, Ngo CW, Peng Q. Automatic hookworm detection in wireless capsule endoscopy images. IEEE Trans Med Imaging. 2016;35:1741–1752. doi: 10.1109/TMI.2016.2527736. [DOI] [PubMed] [Google Scholar]

- 22.Noya F, Alvarez-Gonzalez MA, Benitez R. Automated angiodysplasia detection from wireless capsule endoscopy. Conf Proc IEEE Eng Med Biol Soc. 2017;2017:3158–3161. doi: 10.1109/EMBC.2017.8037527. [DOI] [PubMed] [Google Scholar]

- 23.Hassan AR, Haque MA. Computer-aided gastrointestinal hemorrhage detection in wireless capsule endoscopy videos. Comput Methods Programs Biomed. 2015;122:341–353. doi: 10.1016/j.cmpb.2015.09.005. [DOI] [PubMed] [Google Scholar]

- 24.Chao WL, Manickavasagan H, Krishna SG. Application of artificial intelligence in the detection and differentiation of colon polyps: a technical review for physicians. Diagnostics (Basel) 2019;9:99. doi: 10.3390/diagnostics9030099. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 25.Aoki T, Yamada A, Aoyama K, et al. Automatic detection of erosions and ulcerations in wireless capsule endoscopy images based on a deep convolutional neural network. Gastrointest Endosc. 2019;89:357–363.e2. doi: 10.1016/j.gie.2018.10.027. [DOI] [PubMed] [Google Scholar]

- 26.Aoki T, Yamada A, Aoyama K, et al. Clinical usefulness of a deep learning-based system as the first screening on small-bowel capsule endoscopy reading. Dig Endosc. 2020;32:585–591. doi: 10.1111/den.13517. [DOI] [PubMed] [Google Scholar]

- 27.Klang E, Barash Y, Margalit RY, et al. Deep learning algorithms for automated detection of Crohn’s disease ulcers by video capsule endoscopy. Gastrointest Endosc. 2020;91:606–613.e2. doi: 10.1016/j.gie.2019.11.012. [DOI] [PubMed] [Google Scholar]

- 28.Leenhardt R, Vasseur P, Li C, et al. A neural network algorithm for detection of GI angiectasia during small-bowel capsule endoscopy. Gastrointest Endosc. 2019;89:189–194. doi: 10.1016/j.gie.2018.06.036.

- 29.Tsuboi A, Oka S, Aoyama K, et al. Artificial intelligence using a convolutional neural network for automatic detection of small-bowel angioectasia in capsule endoscopy images. Dig Endosc. 2020;32:382–390. doi: 10.1111/den.13507.

- 30.Aoki T, Yamada A, Kato Y, et al. Automatic detection of blood content in capsule endoscopy images based on a deep convolutional neural network. J Gastroenterol Hepatol. 2020;35:1196–1200. doi: 10.1111/jgh.14941.

- 31.Saito H, Aoki T, Aoyama K, et al. Automatic detection and classification of protruding lesions in wireless capsule endoscopy images based on a deep convolutional neural network. Gastrointest Endosc. 2020;92:144–151.e1. doi: 10.1016/j.gie.2020.01.054.

- 32.Iakovidis DK, Koulaouzidis A. Automatic lesion detection in capsule endoscopy based on color saliency: closer to an essential adjunct for reviewing software. Gastrointest Endosc. 2014;80:877–883. doi: 10.1016/j.gie.2014.06.026.

- 33.Ding Z, Shi H, Zhang H, et al. Gastroenterologist-level identification of small-bowel diseases and normal variants by capsule endoscopy using a deep-learning model. Gastroenterology. 2019;157:1044–1054.e5. doi: 10.1053/j.gastro.2019.06.025.

- 34.Soffer S, Klang E, Shimon O, et al. Deep learning for wireless capsule endoscopy: a systematic review and meta-analysis. Gastrointest Endosc. 2020 Apr 22 [Epub]. doi: 10.1016/j.gie.2020.04.039.

- 35.Spada C, Pasha SF, Gross SA, et al. Accuracy of first- and second-generation colon capsules in endoscopic detection of colorectal polyps: a systematic review and meta-analysis. Clin Gastroenterol Hepatol. 2016;14:1533–1543.e8. doi: 10.1016/j.cgh.2016.04.038.

- 36.Hall B, Holleran G, McNamara D. PillCam COLON 2© as a pan-enteroscopic test in Crohn’s disease. World J Gastrointest Endosc. 2015;7:1230–1232. doi: 10.4253/wjge.v7.i16.1230.

- 37.Ye CA, Gao YJ, Ge ZZ, et al. PillCam colon capsule endoscopy versus conventional colonoscopy for the detection of severity and extent of ulcerative colitis. J Dig Dis. 2013;14:117–124. doi: 10.1111/1751-2980.12005.

- 38.Li Z, Chiu PW. Robotic endoscopy. Visc Med. 2018;34:45–51. doi: 10.1159/000486121.

- 39.Shamsudhin N, Zverev VI, Keller H, et al. Magnetically guided capsule endoscopy. Med Phys. 2017;44:e91–e111. doi: 10.1002/mp.12299.

- 40.Zhao AJ, Qian YY, Sun H, et al. Screening for gastric cancer with magnetically controlled capsule gastroscopy in asymptomatic individuals. Gastrointest Endosc. 2018;88:466–474.e1. doi: 10.1016/j.gie.2018.05.003.

- 41.Liao Z, Hou X, Lin-Hu EQ, et al. Accuracy of magnetically controlled capsule endoscopy, compared with conventional gastroscopy, in detection of gastric diseases. Clin Gastroenterol Hepatol. 2016;14:1266–1273.e1. doi: 10.1016/j.cgh.2016.05.013.

- 42.Ching HL, Hale MF, Kurien M, et al. Diagnostic yield of magnetically assisted capsule endoscopy versus gastroscopy in recurrent and refractory iron deficiency anemia. Endoscopy. 2019;51:409–418. doi: 10.1055/a-0750-5682.

- 43.Beg S, Card T, Warburton S, et al. Diagnosis of Barrett’s esophagus and esophageal varices using a magnetically assisted capsule endoscopy system. Gastrointest Endosc. 2020;91:773–781.e1. doi: 10.1016/j.gie.2019.10.031.

- 44.Fontana R, Mulana F, Cavallotti C, et al. An innovative wireless endoscopic capsule with spherical shape. IEEE Trans Biomed Circuits Syst. 2017;11:143–152. doi: 10.1109/TBCAS.2016.2560800.

- 45.Fu Q, Guo S, Guo J. Conceptual design of a novel magnetically actuated hybrid microrobot. 2017 IEEE International Conference on Mechatronics and Automation (ICMA); 2017 Aug 6-9; Takamatsu, Japan. Piscataway (NJ): IEEE; 2017. pp. 1001–1005.

- 46.Guo J, Liu P, Guo S, Wang L, Sun G. Development of a novel wireless spiral capsule robot with modular structure. 2017 IEEE International Conference on Mechatronics and Automation (ICMA); 2017 Aug 6-9; Takamatsu, Japan. Piscataway (NJ): IEEE; 2017. pp. 439–444.

- 47.Jang J, Lee J, Lee K, et al. 4-Camera VGA-resolution capsule endoscope with 80Mb/s body-channel communication transceiver and sub-cm range capsule localization. 2018 IEEE International Solid-State Circuits Conference (ISSCC); 2018 Feb 11-15; San Francisco (CA), USA. Piscataway (NJ): IEEE; 2018. pp. 282–284.

- 48.Demosthenous P, Pitris C, Georgiou J. Infrared fluorescence-based cancer screening capsule for the small intestine. IEEE Trans Biomed Circuits Syst. 2016;10:467–476. doi: 10.1109/TBCAS.2015.2449277.

- 49.Yim S, Gultepe E, Gracias DH, Sitti M. Biopsy using a magnetic capsule endoscope carrying, releasing, and retrieving untethered microgrippers. IEEE Trans Biomed Eng. 2014;61:513–521. doi: 10.1109/TBME.2013.2283369.

- 50.Chen W-W, Yan G-Z, Liu H, Jiang P-P, Wang Z-W. Design of micro biopsy device for wireless autonomous endoscope. Int J Precis Eng Manuf. 2014;15:2317–2325.

- 51.Son D, Dogan MD, Sitti M. Magnetically actuated soft capsule endoscope for fine-needle aspiration biopsy. 2017 IEEE International Conference on Robotics and Automation (ICRA); 2017 May 29-Jun 3; Singapore. Piscataway (NJ): IEEE; 2017. pp. 1132–1139.

- 52.Stewart FR, Newton IP, Näthke I, Huang Z, Cox BF, Cochran S. Development of a therapeutic capsule endoscope for treatment in the gastrointestinal tract: bench testing to translational trial. 2017 IEEE International Ultrasonics Symposium (IUS); 2017 Sep 6-9; Washington, D.C., USA. Piscataway (NJ): IEEE; 2017. pp. 1–4.

- 53.Leung BHK, Poon CCY, Zhang R, et al. A therapeutic wireless capsule for treatment of gastrointestinal haemorrhage by balloon tamponade effect. IEEE Trans Biomed Eng. 2017;64:1106–1114. doi: 10.1109/TBME.2016.2591060.