Abstract

Background

User-centered design (UCD) is a powerful framework for creating useful, easy-to-use, and satisfying mobile health (mHealth) apps. However, the literature seldom reports the practical challenges of implementing UCD, particularly in the field of mHealth.

Objective

This study aims to characterize the practical challenges encountered and propose strategies when implementing UCD for mHealth.

Methods

Our multidisciplinary team implemented a UCD process to design and evaluate a mobile app for older adults with heart failure. During and after this process, we documented the challenges the team encountered and the strategies they used or considered using to address those challenges.

Results

We identified 12 challenges, 3 about UCD as a whole and 9 across the UCD stages of formative research, design, and evaluation. Challenges included the timing of stakeholder involvement, overcoming designers’ assumptions, adapting methods to end users, and managing heterogeneity among stakeholders. To address these challenges, practical recommendations are provided to UCD researchers and practitioners.

Conclusions

UCD is a gold standard approach that is increasingly adopted for mHealth projects. Although UCD methods are well-described and easily accessible, practical challenges and strategies for implementing them are underreported. To improve the implementation of UCD for mHealth, we must tell and learn from these traditionally untold stories.

Keywords: user-centered design, research methods, mobile health, digital health, mobile apps, usability, technology, evaluation, human-computer interaction, mobile phone

Introduction

The user-centered design (UCD), or human-centered design, is a framework for iteratively researching, designing, and evaluating services and systems by involving end users and other stakeholders throughout a project life cycle. [1-3]. Mobile health (mHealth) projects benefit from UCD by using input from patients, informal caregivers, clinicians, and other stakeholders during the project life cycle to create better designs and iteratively improve interventions, thus enhancing their usability, acceptance, and potential success when implemented [4-6]. Increasingly, UCD has been recommended and adopted in mHealth projects to great success [7], with many examples of mHealth for people living with HIV [5,8], chronic conditions [9-11], or mental illness [6,12,13].

UCD is supported by popular tools and methods, such as cognitive task analysis, workflow studies and journey mapping, participatory design, rapid prototyping, usability testing, and heuristic evaluation [14-17]. Textbooks, articles, and other resources offer easily accessible and detailed guidance on the general UCD process and specific UCD methods [18,19]. However, an informal review of the literature reveals little information about the practical implementation of UCD methods.

Practical challenges reported in studies largely in non–health care domains include ensuring participants’ representativeness of the target population [20]; threats to innovation [21]; difficulty communicating with people from different backgrounds [22]; and organizational barriers, such as not having convenient access to participants [23].

Although studies applying UCD for mHealth are on the rise, very few mHealth studies report the challenges they face while planning and executing UCD activities, or they do so parenthetically. UCD challenges may be unique or amplified in the field of mHealth. For example, there are known difficulties in evaluating the effectiveness of mHealth solutions, in part because of the variable and multifactorial nature of health and illness trajectories [6]. mHealth projects often involve unique stakeholders, drawn from vulnerable patient populations (eg, older adults, patients with chronic conditions) [24,25] and busy clinician experts [6,26], or sometimes both. Moreover, on the one hand, there is sometimes a mismatch between the use of UCD methods (eg, rapid prototyping, user testing) and emerging technologies (eg, sensors) and, on the other hand, traditions (eg, clinical trials) and technological conservatism that characterize much of the health care sector [27-29].

Given the presence and importance of the practical challenges for implementing UCD, coupled with the increased use of UCD for mHealth, we argue for the need to explicitly describe those challenges. As mHealth technologies become more pervasive, navigating practical UCD challenges is essential for the development of “safe, sound, and desirable” [30] mHealth solutions that improve health outcomes while involving stakeholders in the design process [31,32]. We believe that identifying, reporting, and discussing the untold stories of actually implementing UCD for mHealth will help in overcoming the significant gaps between research and practice [33,34].

Methods

Overview

The objectives of this study were as follows:

Characterize practical challenges encountered while implementing UCD to design an mHealth app for older adults with heart failure.

Discuss strategies that we used or considered using to manage these challenges.

In presenting these challenges and strategies, we offer illustrations from our own experiences, particularly the Power to the Patient (P2P) project (R21 HS025232) and cite others that have been described in the literature in and outside the mHealth arena.

Power to the Patient Project

From 2017 to 2019, we performed a UCD study to design and evaluate information technology for older adults with heart failure. On the basis of previous literature, we knew that these patients had unmet needs and required additional support to monitor and manage symptoms and various related behaviors, including medication use, dietary and fluid restriction, and physical activity [35-37]. Our work focused on delivering information and decision support by leveraging a novel technological opportunity, namely, sharing with patients the data from their cardiac implanted electronic devices (CIEDs). Many patients with heart failure have CIEDs for the delivery of timely cardiac therapy and the capturing of data that can predict decompensation and other events leading to hospitalization and other downstream outcomes [38,39]. Patients seldom receive their CIED data [40,41], but technical and cultural changes are increasing the likelihood that they will in the future [41-43].

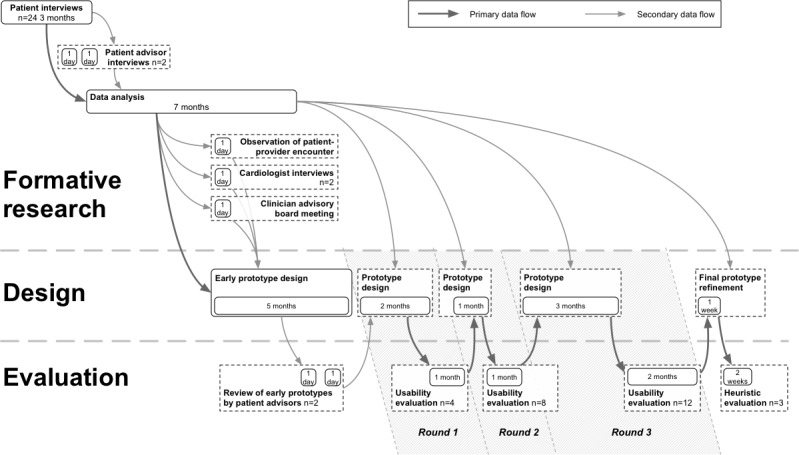

Figure 1 presents an overview of the project’s timeline, and Textboxes 1 and 2 further elaborate on the methods used. Select methods and results from the project are available elsewhere [19,32,44-47].

Figure 1.

Timeline of the Power to the Patient project.

Formative research methods used to establish the Power to the Patient’s domain space.

Patient interviews

Method: 70-min (1) critical incident interviews and (2) scenario-based cognitive interviews to understand the decision-making process of older adults with heart failure

Participants: 24 English-speaking older adults (≥65 years) diagnosed with heart failure (New York Heart Association Class II-IV) and 14 accompanying support persons (family and friends). Patients were receiving care at Parkview Health (Fort Wayne, Indiana)

Procedure: (1) Participants were asked to describe a recent minor adverse health event and were probed with questions about their thought, feelings, and actions. (2) Participants were presented with a picture of a fictitious device that could give them a CIED score representing their heart health; they were asked to describe what they would think and do depending on the score displayed on the device

Patient advisory meetings

Method: One-on-one meetings with patient advisors soliciting feedback on (1) personas and use-case scenarios and (2) early design concepts and prototypes

Participants: 2 older adults with heart failure from the community (Indianapolis, Indiana) who voluntarily assisted the study in an advisory capacity

Procedure: (1) Advisors met with the research team to discuss the preliminary findings from the interviews and early persona development. They provided feedback on the findings, methods, and relevance of the work. (2) Advisors were presented with design alternatives of a Power to the Patient prototype, and then they interacted with it while thinking aloud using a computer and a mouse

Clinician advisory board meeting

Method: Group dinner with clinician experts to elicit feedback on personas, use-case scenarios, and early concepts

Participants: 7 Parkview Health clinicians (2 cardiologists, a device clinic supervisor, 2 technicians, a nurse, and the vice president of operations for the Parkview Heart Institute)

Procedure: Personas and scenarios were presented, among other findings, and questions were asked of clinicians regarding the validity of the findings and related current protocols (some of which were subsequently collected)

Individual interviews with 2 cardiologists

Observation of clinical encounters with a patient in the device clinic

Evaluation methods used during the Power to the Patient development.

Usability evaluations, round 1 (R1) and round 2 (R2)

Method: 90-min task-driven evaluation sessions of Power to the Patient prototypes to assess usability (primarily) and acceptability (secondarily)

Participants: 4 (R1) and 8 (R2) English-speaking older adults diagnosed with heart failure and 3 accompanying support persons (2 in R1 and 1 in R2)

Procedure: Participants performed specific tasks in the prototype while thinking aloud. Testing occurred in a private room, with an interactive prototype made in Axure RP 9 running on a Samsung Galaxy S7 smartphone. Participants’ manual interactions were video recorded. Pretest, participants completed a demographic survey (ie, age, gender, technology use, and education level) and the Newest Vital Sign health literacy screening (NVS) [51]; posttest, they completed the system usability scale [52], National Aeronautics and Space Administration Task Load Index (NASA-TLX) [51], and user acceptance survey. Participants were also interviewed about their understanding and projected use of Power to the Patient prototypes.

Usability evaluation, round 3 (R3)

Method: 90-min scenario-driven evaluation sessions of Power to the Patient prototypes to assess acceptability (primarily) and usability (secondarily)

Participants: 12 English-speaking older adults diagnosed with heart failure, with cardiac implanted electronic devices, and accompanied by 5 support persons

Procedure: Participants simulated days 1 and 10 of longitudinal use of the Power to the Patient prototype while thinking aloud. They completed the same assessments as in earlier rounds and were interviewed about their understanding and projected use of the Power to the Patient prototype.

Heuristic evaluation

Method: Heuristic evaluation questionnaire to assess usability of Power to the Patient prototypes

Participants: 3 user-centered design experts external to the team

Procedure: Participants explored the prototype based on 2 use cases. They then reported their observations for 9 heuristics and gave an overall rating for the usability of the prototype

The project began with a problem analysis or formative study of the domain as a precursor to design. This phase comprised interviews with 24 older adults with heart failure, half of whom had CIEDs, to learn about how they made decisions about their health. These interviews used the critical incident technique, a method that asks participants to recall and describe a specific event or scenario and uses probes to better understand the participants’ thoughts and actions during the event or scenario [48,49]. We also examined participants’ decision-making strategies in response to fictitious scenarios [16]. In these scenarios, individuals were presented with hypothetical situations related to data from a fictitious CIED and asked to think aloud as they made decisions about how to respond. Other formative study methods were a brief observation of a device clinic, meetings with 2 cardiologists, and sharing of findings and design work from 2 recent similar studies on CIED data sharing [43,50].

Findings from the formative study were analyzed to develop personas, representing distinct ways patients made decisions, and use-case scenarios, representing decision-making situations in which hypothetical patients with CIEDs might find themselves. A model was also created depicting the flow of naturalistic decision making for heart failure self-care. These products were presented separately to 2 patient advisors and a panel of clinicians, who provided feedback on the realism and relevance of the personas and scenarios. The personas, use-case scenarios, and a review of the literature and market landscape (eg, app store reviews of similar mHealth products) were used to formulate requirements and early design concepts to be presented to patient advisors.

The design involved writing requirements and 5 months of iterative prototyping, concluding with an interactive prototype. Subsequently, we performed 3 rounds of formal laboratory-based usability testing with 24 participants, interleaved with periods of prototype redesign. Each round had a more complete prototype and an increasing number of participants (ie, n=4 in round 1, n=8 in round 2, and n=12 in round 3). The project concluded with a final refinement of the prototype and formal heuristic evaluations by 3 outside UCD experts.

The P2P app designed in this study had 4 core patient-facing components: a heart health score calculated from CIED data (a fictitious concept inspired by existing research [53,54]), self-assessments on recommended heart failure self-care domains (eg, medication use, sodium-restricted diet), tips and strategies for better self-management, and logs of data captured by the app. We designed and tested different implementations of these core concepts, changing information architecture and amending feature sets as we received feedback from study participants. For example, in one iteration, assessments, tips, and strategies were organized using the concept of plans that users could select.

Results

Overview

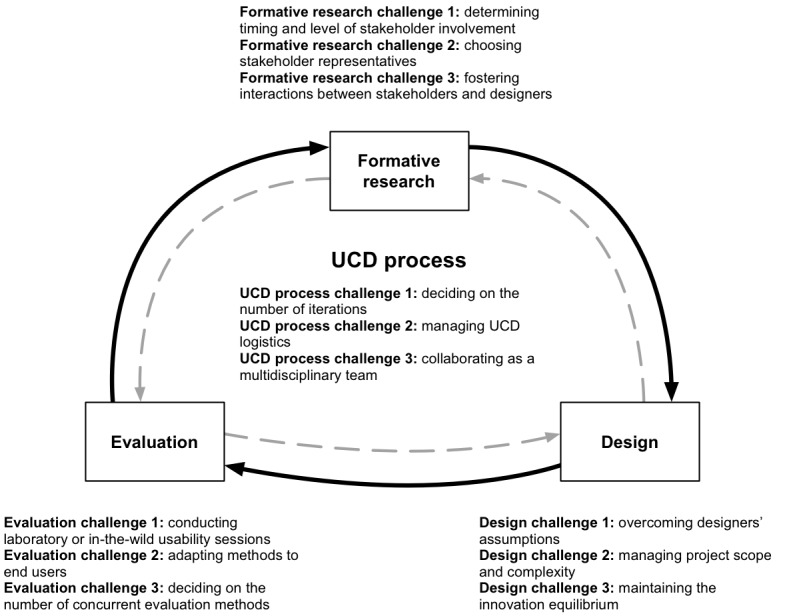

The UCD process as a whole and the 3 major UCD phases of formative research, design, and evaluation present associated implementation challenges. Twelve of the most pervasive challenges are summarized in Figure 2. Next, we discuss each, with examples from our experience with P2P, supplemented by relevant literature.

Figure 2.

UCD challenges encountered during Power to the Patient research, development, and evaluation. UCD: User-centered design.

Whole User-Centered Design Process

We identified 3 challenges related to the UCD process as a whole: deciding on the number of design-and-test iterations (UCD process challenge 1); managing logistics associated with UCD projects, such as preparing materials and recruitment costs (UCD process challenge 2); and collaborating as a multidisciplinary team by navigating misaligned goals and communication breakdowns (UCD process challenge 3). Recommendations for the UCD process are listed in Textbox 3.

Recommendations for the user-centered design process.

Keep the number of iterations flexible, estimating the range based on available resources but adjusting as the project progresses.

Avoid endless iterations by having a clear criteria for when to end the design and testing activities.

Consult or collaborate with experienced practitioners to anticipate and manage logistic challenges.

Explicitly discuss and seek to align multidisciplinary team members’ goals and preferences early on, managing these over time through open communication.

Involve a multilinguistic conductor to lead the team and coordinate members with diverse values, norms, practices, vocabularies, theories, and methods.

User-Centered Design Process Challenge 1: Deciding on the Number of Iterations

We planned the number of design-and-test iterations early in the project to satisfy the requirements of the funding agency and Institutional Review Board (IRB). Three iterations were originally estimated as feasible, given the timeline and amount of funding. Each iteration was assumed to require an average of at least 2 months of time and budget. As the project progressed, it became apparent that those assumptions were correct and that to adhere to budget and project timeline restrictions, we would be able to complete the 3 planned iterations. The number of participants in total and per iteration was based on several considerations. The total of 24 test participants were based on the project budget and timeline, as above, and the goal of having an average of 8 participants per test, a typical upper range for formative testing. For the initial 2 iterations, our focus was on identifying overt usability issues, and based on Nielsen’s recommendations, we planned to enroll about 4 to 8 participants (a few participants can uncover most usability issues [55]). In the last round, as our focus shifted toward evaluating acceptance and the extent to which the design was usable across a more diverse set of users, we recruited 12 participants.

Iterations in UCD benefit software development by reducing usability issues and improving features before it is too late or too expensive to make changes [56]. Iteration is generally recommended [56], but the number of iterations is not fixed and often depends on the project and how it progresses. However, deviating from a planned number of deviations can be costly or prohibited for other (eg, regulatory or management) reasons. Conversely, endless iteration is counterproductive and delays in-the-wild field testing and actual implementation.

UCD Process Challenge 2: Managing UCD Logistics

Many of our recurring challenges were related to logistics, such as meeting recruitment goals, preparing and managing materials (ie, instructions, presentations, and design documentation), and arranging a specific time for members and advisors to meet despite being geographically dispersed. Others have reported similar challenges in mHealth projects [57,58], but more often they are assumed and discussed informally between practitioners. This means that novice UCD practitioners tend to underestimate logistic challenges and have limited information on how to address them. UCD logistics challenges often mirror challenges to implementing patient-centered research in general, for example, the taxonomy of challenges by Holden et al [59], which includes patient identification and recruitment; privacy and confidentiality; conflicts with compensation; and logistical issues, such as travel, timing, and communication. Authors have described strategies to achieve buy-in, trust, transparency, accommodation (flexibility), openness, and anticipation [59,60], as well as checklists for implementing these general strategies [61].

Others have described the additional costs of recruiting end users for UCD projects [20,57]. It is even more difficult to recruit a representative sample in a technology project when technology ownership and proficiency are among the eligibility criteria [62] or the notion of technology leads individuals to decline participation [63]. Age- and illness-related physical and cognitive limitations may also exclude some individuals from mHealth studies [6,64]. In such cases, UCD projects must also consider whether to involve informal caregivers or others (eg, translators) who could assist patients during formative research or testing.

UCD Process Challenge 3: Collaborating as a Multidisciplinary Team

Our P2P project was the fruit of collaboration between a research university and a research center in a large health system, with support from patient and clinician advisors. Our team included a cardiologist coinvestigator who provided invaluable clinical information, feedback, and access to local and national clinical leaders. However, with disciplinary diversity comes disagreement, communication difficulty, and differences in assumptions, and although these are all desirable elements, they require efforts to manage, for example, by frequently asking team members to state their assumptions.

Multidisciplinary collaboration is often encouraged in UCD [6,65,66], including partnerships between designers and clinicians [67,68]. Those who have attempted such collaborations are aware of the methodological and cultural misalignment or divergent goals between Human-Computer Interaction technologists and clinicians [65,69]. Strategies to overcome these collaboration challenges include structured communication and the involvement of a multilinguistic (symphonic) conductor, a person who has learned “each team member’s discipline- or profession-specific values, norms, practices, vocabularies, theories, and methods to coordinate and translate between dissimilar members” [70].

Formative Research

We encountered 3 types of formative research challenges in the P2P project: determining when and how to involve stakeholders (formative research challenge 1), recruiting participants and advisors who are representative of stakeholder groups (formative research challenge 2), and fostering meaningful interactions between stakeholders and designers despite personnel constraints (formative research challenge 3). Recommendations for formative research are listed in Textbox 4.

Recommendations for formative research.

Involve stakeholders early.

Avoid over recruiting or collecting more data than can be expediently analyzed.

Use shorter-cycle iterative research sprints with smaller sample sizes instead [55].

Carefully balance expectations placed on stakeholders versus what they are able or willing to do.

Deprioritize but do not discard the ideas that stakeholders rejected early on before the ideas reached maturity.

When possible, generate involvement from multiple stakeholder groups.

Use diverse recruitment methods to ensure stakeholders are chosen for representativeness, not convenience.

Foster direct relations between designers and stakeholders.

Minimize avoidable personnel changes and practice cross-staffing across user-centered design phases.

Formative Research Challenge 1: Determining Timing and Level of Stakeholder Involvement

We involved patients and clinicians early in the P2P project in several ways, including informant interviews and feedback on analyses, requirements, and early design concepts. This helped learn lessons early, such as clinicians insisting that because of interindividual variability in physical activity, activity goals should be highly individualized, whereas other goals (eg, medication adherence) could be identical for all users. Early learning allowed earlier decisions about scope, facilitated evidence-based design choices, and prevented having to make costly future design revisions. In terms of the scope, early stakeholder involvement helped eliminate especially difficult or risky design concepts, for example, including medication titration advice.

Early involvement was not always simple. The number and depth of P2P interviews yielded more data than could be analyzed in the time allotted. As a result, several important findings from stakeholder interviews were not discovered until further in the design process, negating some of the benefits of early learning. Furthermore, having sought feedback early may have prematurely terminated concepts that were promising but premature at the time.

The forms of involvement vary from collecting extensive data to asking individuals to assess early products [65]. The level of involvement can also be adjusted between informing (as in interviews), advising (as in reviewing concepts), and doing (as in having stakeholders co-perform research or design work) [71]. However, more active or laborious stakeholder involvement risks asking individuals to do more than what is realistic, reasonable, or affordable [21,72]. This is often the case when individuals are asked to be co-designers without adequate training in design, compensation for their contribution, or understanding of the problem space. Although some involvement is essential to UCD, more is not always better [71].

Formative Research Challenge 2: Choosing Stakeholder Representatives

P2P was fortunate to obtain input from patients sampled from a pool of current patients; volunteer advisors who were willing to meet repeatedly with the design team; and various clinicians, some of whom also offered access to their clinic and protocols. Not every design team can easily access stakeholders for formative research in a timely manner, far less multiple stakeholder groups, especially when the stakeholders include busy professionals. Some researchers resort to gathering data from less representative convenience samples, including online services offering access to paid volunteers, such as Amazon’s Mechanical Turk or the Qualtrics Panel [73-75].

Despite having adequate access, P2P was also limited in the variety and representativeness of stakeholders. The patients we interviewed in our formative research were all white, and two-third were male. Patient advisors were more likely to be educated and engaged in their health than peers, consistent with the general trend that patient advisors are rarely ordinary people. Clinicians in our study may have been more motivated than nonparticipants. Although stakeholder involvement is essential to UCD [1,76], it is predicated on stakeholders having unique knowledge or insights that designers do not have. However, stakeholders too have limited knowledge and represent primarily the communities to which they belong, meaning even with stakeholder involvement, there may exist multiple blind spots. When those who are involved differ from end users (eg, on race, education, or motivation), those blind spots may disadvantage underrepresented groups [63]. In practice, however, few design studies have the opportunity to conduct formative research with large samples representative of the population, whereas increasing sample size exacerbates formative research challenge 1 (“When and how much to involve stakeholders”), as discussed above.

Those who have worked extensively with patient advisory boards offer useful advice on assembling the right group of stakeholders, especially when they must work together, as on a panel. Suggestions include leadership commitment to listening to stakeholder suggestions, diverse recruitment (to avoid the abovementioned blindspots), careful selection of individuals who will work well with others, and adequate funding to compensate or otherwise support stakeholders [77].

Formative Research Challenge 3: Fostering Interactions Between Stakeholders and Designers

We attempted to promote direct interactions between designers and both patient and clinician stakeholders. Designers attended many of the formative research sessions or had direct access to the collected raw data. Furthermore, to ensure continuity, there was cross-staffing of formative research, design, and evaluation teams. One project member personally participated in almost every interview, feedback session, design meeting, and usability test. However, she was the only design team member who had interviewed patients and was therefore expected to be the voice of patient participants on the design team. Over time, turnover greatly reduced the number of team members who had been present from the beginning of the project and had therefore participated in any formative research activities.

Having designers interact directly with stakeholders, and especially end users, has been shown to yield better results [78] than hearing about the stakeholders and end users from another source [71]. Continual interaction with stakeholders during the UCD process helps designers gain a firsthand experience of the domain [78,79]. However, when projects progress sequentially from formative research with stakeholders to design and evaluation, turnover and staffing limitations may mean that those designing or testing the product may not have had such firsthand experience.

Design

We identified 3 challenges related to the design phase of UCD: overcoming designers’ assumptions with empirical research findings (design challenge 1), managing project scope and complexity and avoiding scope creep (design challenge 2), and maintaining the innovation equilibrium by balancing new ideas with outside constraints (design challenge 3). Recommendations for design are listed in Textbox 5.

Recommendations for design.

Engage stakeholders during design as ad hoc informants or co-designers to challenge incorrect assumptions.

Conduct iterative new rounds of data collection during the design phase as questions arise that are best answered by gathering evidence.

Seek simplicity and thus reducing complexity.

Monitor for scope creep [80] and overly complex designs, relative to what end users need.

Plan for feature deimplementation (ie, removing features from design), using techniques such as a formal termination plan [33].

Without stifling innovation, ensure stakeholders can rule out designs that are unsafe, unacceptable, infeasible, inconsistent with clinical reality, or otherwise impractical.

For innovative ideas transcending conventional practice, develop clear plans for how the design will fit in or overcome existing infrastructure constraints, regulations, preferences, and habits.

Design Challenge 1: Overcoming Designers’ Assumptions

Designers naturally make decisions that are inspired by but immediately validated by end user evidence. Some of these decisions are based on assumptions that go unquestioned during design but are discovered to be incorrect during testing. This situation underscores the value of testing and the limitations of design. In the P2P project, for example, we incorporated rewards based on the literature. Nothing in the formative data contradicted the potential value of rewards, so it was not until testing that we learned that most participants thought the rewards were distracting. Some assumptions are also persistent and can be made despite disconfirming formative research findings. For example, members of the design team persistently believed that end users would have little technology experience, despite evidence to the contrary from formative research and usability testing.

Designer bias is difficult to overcome, even when UCD methods are used to collect contradictory evidence. The sequence of design following formative research means some assumptions are not tested or contradicted until the testing phase, by which time the assumptions may have greatly influenced the design. An alternative would be to conduct additional research to challenge the design team’s assumptions during the design phase, but before formal testing [81]. Furthermore, designers should be judicious in the use of design techniques, such as personas, which can lead to oversimplification and encourage misleading assumptions about end users [82]. Other strategies to mitigate incorrect assumptions include conducting more frequent testing or including stakeholders on design teams to challenge assumptions during the design process [50,71].

Design Challenge 2: Managing Project Scope and Complexity

Similar to other design projects, P2P produced many ideas, which were easier to generate than to dismiss. As a result, we attempted to include in a single app a large variety of features. We also attempted to integrate these many features to produce a coherent product. Often, multiple features were being slowly designed in parallel, rather than perfecting 1 feature before moving on to the next. These conditions sometimes led to confusion about the purpose of the app. More features also meant less time and effort spent designing or testing each.

Complexity and scope need to be carefully managed to avoid natural tendencies to add (rather than subtract) from taking over. Additional research could be used to help prioritize features and determine which features are attractive to designers but not needed by end users [28]. When a project’s scope is intentionally large, steps can be taken to create distinct modules (chunks of features) [83], which provide coherent structure and separation. If complexity is inevitable, the project team will need to plan for more extensive testing by conducting longer sessions or sessions with more users.

Design Challenge 3: Maintaining the Innovation Equilibrium

In our experience, designers, clinician stakeholders, and patient stakeholders were divided on what was possible for and needed from the product being designed. Generally, clinicians were more conservative, preferring to replicate existing practices and avoid less studied or riskier options. For instance, clinicians were more conservative than designers about how much unedited information and control over its interpretation to offer patients. Another point of contention was whether to integrate the product into other health information systems, including electronic medical records. Patients preferred integration, whereas designers were divided on leveraging those systems at the expense of their practical limitations and regulatory constraints. Innovation also conflicted with clinical reality, a case where a patient or designer might envision something that is not technically possible or clinically relevant [32]. For example, the design team assumed an ability to predict heart failure events through CIED data that were beyond publicly available scientific knowledge. Designers’ innovative ideas could also be mismatched with what patient end users were used to and could comfortably perform. This may have been the case with patients’ dislike of rewards or reluctance to rate their health using standard online rating conventions (eg, out of 5 stars). In general, end users tend to have more conventional preferences than designers [26]. In mHealth projects, patients may be unaware of or reluctant to suggest all the technological possibilities granted by smartphones [29], such as push notifications [84] or smartphone sensors [27].

In conversations with innovators, UCD professionals often hear the statement attributed to Henry Ford, “If I had asked people what they wanted, they would have said faster horses.” The broader challenge is maintaining the innovation equilibrium: allowing innovators to innovate, while also allowing stakeholders to influence or evaluate their design, especially when it comes to usability, safety, and privacy. The related challenge is to prevent innovation from creating products that commit what Cornet et al [32] call type 2 design error, which “occurs when designers do not accommodate the clinical reality, including biomedical knowledge, clinical workflows, and organizational requirements.”

Evaluation

We identified 3 evaluation challenges: managing the tradeoff between laboratory and in-the-wild usability sessions (evaluation challenge 1); adapting standardized methods to the end user population, in our case, older adults (evaluation challenge 2); and deciding on the number of concurrent evaluation methods relative to the effort spent setting up sessions and analyzing data (evaluation challenge 3). Recommendations for evaluation are listed in Textbox 6.

Recommendations for evaluation.

Use a laboratory setup for usability testing to improve efficiency and effectiveness.

Begin testing in the laboratory but transition to in-the-wild testing as time and budget allow.

Adapt methods to end user needs, when necessary, even if this means deviating from the standard.

Allow for flexibility and experimentation, at times sacrificing standardization.

Control the number of concurrent evaluation methods; use efficiency, pacing, and workload management strategies if multiple methods are implemented.

Evaluation Challenge 1: Conducting Laboratory or In-the-Wild Usability Sessions

P2P usability testing was conducted in a laboratory setting, albeit in meeting rooms without built-in usability or simulation equipment (eg, a control room, multicamera recording, eye tracking). Although this setting was adequate in most cases, it was at times inconvenient. The laboratory setting was more challenging because it required participants to test prototypes in a time and place dissimilar from the intended context of use. Participants spent 30 min using a prototype technology meant to be used for weeks, months, and years. They were then asked to project how they would use the technology in practice. In the third round of testing, scenarios were used to simulate several days in the prolonged use of the product to help participants project future acceptance and use. However, the cross-sectional and laboratory-based design of our testing limited our confidence in our findings regarding acceptance and future use, relative to findings of usability (eg, observed errors or subjective usability ratings).

Evaluating mHealth prototypes in a laboratory setting offers ideal conditions for detecting product software usability issues, such as navigation or layout issues. Such evaluation however lacks external validity in reproducing the context of the use of the mHealth product [85] and therefore fails to assess most issues related to product hardware usability, operating system usability, acceptance, and longer term outcomes (eg, changes in behavior or health) [6]. Laboratory evaluation is appropriate to quickly iterate on designs and address usability issues before in-the-wild testing to avoid fielding a poorly designed product. However, in-the-wild testing is expensive and time-intensive and may not be possible in every project.

Evaluation Challenge 2: Adapting Methods to End Users

We adapted the standard methods in several ways, including accommodating older adult participants. For example, we administered a simplified version of the System Usability Scale (SUS) self-report measure, which we developed specifically for older adults [9,86,87]. We built flexibility for breaks during testing, especially given the use of diuretic medications by patients with heart failure. We also discovered challenges that we had not anticipated, for example, a participant having difficulty completing computer-based surveys because of vision and motor impairment.

In the context of mHealth projects, adapting standardized usability evaluation methods to end users is often necessary to accommodate patient abilities and limitations. For example, most standardized usability scales have technical or difficult words [86]; thus, many studies edit these measures, for example, by changing the “cumbersome” in the SUS to “awkward” [88]. Although standardized methods ensure scientific reproducibility, rigidity in the UCD process can undermine the goal of iteratively improving a product, which often requires flexibility and experimentation [65]. If, for example, researchers discover that some older participants have difficulty using a touchscreen device, it may be worth adapting the protocol to permit the use of a mouse or stylus in subsequent testing.

Evaluation Challenge 3: Deciding on the Number of Concurrent Evaluation Methods

Our testing involved participant consenting, lengthy pre- and posttest surveys, posttest interviews, and task-based usability testing with think-aloud. In addition, each testing session required a pretest room and equipment setup and posttest aggregation of data from audio, video, computerized, and written recordings. At times, the multiplicity of methods in a single session resulted in testing sessions being cut short. Moreover, the amount of data collected during testing affected the speed at which the team could analyze usability test findings and prepare the next design for another round of testing.

Using multiple concurrent evaluation methods improves triangulation and therefore mHealth usability [89]. However, each method adds burden and affects the timeline. Thus, those implementing UCD should pursue strategies to reduce inefficiency (eg, use of a dedicated testing room to reduce setup labor), ensure pacing (eg, blocking off staff time for testing and analysis), and reduce workload (eg, use of automated usability data collection or analysis) [90].

Discussion

Limitations

The challenges reported were based on our experience with a P2P project, supplemented by firsthand experience with multiple other mHealth projects and a review of the literature. However, the literature yielded few explicit depictions of challenges and less formal discussion of them. (This validated the goals of this paper.) Both our experiences and most of those described in the literature originated in academic environments, which have unique staffing, timing, and funding characteristics. The UCD implementation challenges and strategies encountered in the industry may be different, although an examination of gray literature (eg, blog posts and popular design books) shows some similarities. Finally, our recommendations are to be taken with caution, as they have not been formally validated across projects, project teams, or environments. The mHealth UCD community should actively debate these recommendations and produce new ones.

Conclusions

UCD implementation for mHealth apps can lead to highly usable and acceptable patient-centered and clinically valid solutions. Implementation is challenging, as the 12 practical challenges in this paper easily illustrate. However, these challenges can be overcome, and our recommendations may help others apply UCD to mHealth or similar arenas. Telling and learning from the typically untold stories will result in more efficient, effective, and sustainable mHealth design efforts, effectively bridging the gap between the science and practice of UCD and mHealth implementation. We call on our fellow researchers, designers, and UCD experts to document and share their own challenges and strategies toward improving the implementation of UCD.

Acknowledgments

This study was sponsored by the Agency for Healthcare Research and Quality (AHRQ) grant R21 HS025232 (Holden, PI). The content is solely the responsibility of the authors and does not necessarily represent the official views of the AHRQ. The authors acknowledge the participants for their contribution. The authors also thank their entire research team, their patient and clinical advisory board members, and Parkview Health staff for their contribution throughout the project. They also thank the two peer reviewers for their helpful comments on the manuscript.

Abbreviations

- AHRQ

Agency for Healthcare Research and Quality

- CIED

cardiac implanted electronic device

- mHealth

mobile health

- P2P

Power to the Patient

- SUS

system usability scale

- UCD

user-centered design

Footnotes

Conflicts of Interest: MM has research funding from AHRQ and the following financial relationships with industry to disclose: research grants from Medtronic, Inc; consulting fees/honoraria with iRhythm Technologies, Inc; and Zoll Medical Corporation; and financial partnership with Medical Informatics Engineering, outside the submitted work.

References

- 1.Ergonomics of human-System Interaction — Part 210: Human-Centred Design for Interactive Systems. International Organization for Standardization. 2010. [2020-06-10]. https://www.iso.org/standard/52075.html.

- 2.Gould J, Lewis C. Designing for usability: key principles and what designers think. Commun ACM. 1985;28(3):300–11. doi: 10.1145/3166.3170. doi: 10.1145/3166.3170. [DOI] [Google Scholar]

- 3.Norman D. The Design of Everyday Things: Revised and Expanded Edition. New York, USA: Basic Books; 2013. [Google Scholar]

- 4.McCurdie T, Taneva S, Casselman M, Yeung M, McDaniel C, Ho W, Cafazzo J. mHealth consumer apps: the case for user-centered design. Biomed Instrum Technol. 2012;Suppl:49–56. doi: 10.2345/0899-8205-46.s2.49. [DOI] [PubMed] [Google Scholar]

- 5.Schnall R, Rojas M, Bakken S, Brown W, Carballo-Dieguez A, Carry M, Gelaude D, Mosley JP, Travers J. A user-centered model for designing consumer mobile health (mHealth) applications (apps) J Biomed Inform. 2016 Apr;60:243–51. doi: 10.1016/j.jbi.2016.02.002. https://linkinghub.elsevier.com/retrieve/pii/S1532-0464(16)00024-1. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Ben-Zeev D, Schueller SM, Begale M, Duffecy J, Kane JM, Mohr DC. Strategies for mhealth research: lessons from 3 mobile intervention studies. Adm Policy Ment Health. 2015 Mar;42(2):157–67. doi: 10.1007/s10488-014-0556-2. http://europepmc.org/abstract/MED/24824311. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Marcolino MS, Oliveira JA, D'Agostino M, Ribeiro AL, Alkmim MB, Novillo-Ortiz D. The impact of mhealth interventions: systematic review of systematic reviews. JMIR Mhealth Uhealth. 2018 Jan 17;6(1):e23. doi: 10.2196/mhealth.8873. https://mhealth.jmir.org/2018/1/e23/ [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Wray T, Kahler C, Simpanen E, Operario D. User-centered, interaction design research approaches to inform the development of health risk behavior intervention technologies. Internet Interv. 2019 Mar;15:1–9. doi: 10.1016/j.invent.2018.10.002. https://linkinghub.elsevier.com/retrieve/pii/S2214-7829(18)30036-8. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Srinivas P, Cornet VP, Holden RJ. Human factors analysis, design, and evaluation of engage, a consumer health IT application for geriatric heart failure self-care. Int J Hum Comput Interact. 2017;33(4):298–312. doi: 10.1080/10447318.2016.1265784. https://www.tandfonline.com/doi/abs/10.1080/10447318.2016.1265784. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.Giunti G, Mylonopoulou V, Romero OR. More stamina, a gamified mhealth solution for persons with multiple sclerosis: research through design. JMIR Mhealth Uhealth. 2018 Mar 2;6(3):e51. doi: 10.2196/mhealth.9437. https://mhealth.jmir.org/2018/3/e51/ [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Morita PP, Yeung MS, Ferrone M, Taite AK, Madeley C, Lavigne AS, To T, Lougheed MD, Gupta S, Day AG, Cafazzo JA, Licskai C. A patient-centered mobile health system that supports asthma self-management (breathe): design, development, and utilization. JMIR Mhealth Uhealth. 2019 Jan 28;7(1):e10956. doi: 10.2196/10956. https://mhealth.jmir.org/2019/1/e10956/ [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Vilardaga R, Rizo J, Zeng E, Kientz J, Ries R, Otis C, Hernandez K. User-centered design of learn to quit, a smoking cessation smartphone app for people with serious mental illness. JMIR Serious Games. 2018 Jan 16;6(1):e2. doi: 10.2196/games.8881. https://games.jmir.org/2018/1/e2/ [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Biagianti B, Hidalgo-Mazzei D, Meyer N. Developing digital interventions for people living with serious mental illness: perspectives from three mhealth studies. Evid Based Ment Health. 2017 Nov;20(4):98–101. doi: 10.1136/eb-2017-102765. http://europepmc.org/abstract/MED/29025862. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14.Nielsen J. Usability Engineering. Cambridge, MA: AP Professional; 1994. [Google Scholar]

- 15.Rubin J, Chisnell D. Handbook of Usability Testing: How to Plan, Design, and Conduct Effective Tests. Indianapolis, IN: Wiley Publishing; 2008. [Google Scholar]

- 16.Crandall B, Klein GA, Klein G, Hoffman RR. Working Minds: A Practitioner's Guide to Cognitive Task Analysis. Cambridge, MA: MIT Press; 2006. [Google Scholar]

- 17.Simonsen J, Robertson T, editors. Routledge International Handbook of Participatory Design. New York, USA: Routledge; 2012. [Google Scholar]

- 18.Holden RJ, Voida S, Savoy A, Jones JF, Kulanthaivel A. Human factors engineering and human–computer interaction: supporting user performance and experience. In: Finnel JT, Dixon BE, editors. Clinical Informatics Study Guide: Text and Review. Cham, Switzerland: Springer International Publishing; 2016. pp. 287–307. [Google Scholar]

- 19.Cornet VP, Daley C, Cavalcanti LH, Parulekar A, Holden RJ. Design for self care. In: Sethumadhavan A, Sasangohar F, editors. Design for Health: Applications of Human Factors. Cambridge, MA: Academic Press; 2020. pp. 277–302. [Google Scholar]

- 20.Kujala S, Kauppinen M. Identifying and Selecting Users for User-Centered Design. Proceedings of the third Nordic Conference on Human-Computer Interaction; NordiCHI'04; October 23-37, 2004; Tampere, Finland. 2004. [DOI] [Google Scholar]

- 21.Heinbokel T, Sonnentag S, Frese M, Stolte W, Brodbeck F. Don't underestimate the problems of user centredness in software development projects - there are many! Behav Inf Technol. 1996 Jan;15(4):226–36. doi: 10.1080/014492996120157. doi: 10.1080/014492996120157. [DOI] [Google Scholar]

- 22.Shah LM, Yang WE, Demo RC, Lee MA, Weng D, Shan R, Wongvibulsin S, Spaulding EM, Marvel FA, Martin SS. Technical guidance for clinicians interested in partnering with engineers in mobile health development and evaluation. JMIR Mhealth Uhealth. 2019 May 15;7(5):e14124. doi: 10.2196/14124. https://mhealth.jmir.org/2019/5/e14124/ [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23.Matthew-Maich N, Harris L, Ploeg J, Markle-Reid M, Valaitis R, Ibrahim S, Gafni A, Isaacs S. Designing, implementing, and evaluating mobile health technologies for managing chronic conditions in older adults: a scoping review. JMIR Mhealth Uhealth. 2016 Jun 9;4(2):e29. doi: 10.2196/mhealth.5127. https://mhealth.jmir.org/2016/2/e29/ [DOI] [PMC free article] [PubMed] [Google Scholar]

- 24.Arsand E, Demiris G. User-centered methods for designing patient-centric self-help tools. Inform Health Soc Care. 2008 Sep;33(3):158–69. doi: 10.1080/17538150802457562. [DOI] [PubMed] [Google Scholar]

- 25.Kushniruk A, Nøhr C. Participatory design, user involvement and health IT evaluation. Stud Health Technol Inform. 2019:139–51. doi: 10.3233/978-1-61499-635-4-139. doi: 10.3233/978-1-61499-635-4-139. [DOI] [PubMed] [Google Scholar]

- 26.van Velsen L, Wentzel J, van Gemert-Pijnen JE. Designing eHealth that matters via a multidisciplinary requirements development approach. JMIR Res Protoc. 2013 Jun 24;2(1):e21. doi: 10.2196/resprot.2547. https://www.researchprotocols.org/2013/1/e21/ [DOI] [PMC free article] [PubMed] [Google Scholar]

- 27.Cornet VP, Holden RJ. Systematic review of smartphone-based passive sensing for health and wellbeing. J Biomed Inform. 2018 Jan;77:120–32. doi: 10.1016/j.jbi.2017.12.008. https://linkinghub.elsevier.com/retrieve/pii/S1532-0464(17)30278-2. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 28.Mann DM, Quintiliani LM, Reddy S, Kitos NR, Weng M. Dietary approaches to stop hypertension: lessons learned from a case study on the development of an mHealth behavior change system. JMIR Mhealth Uhealth. 2014 Oct 23;2(4):e41. doi: 10.2196/mhealth.3307. https://mhealth.jmir.org/2014/4/e41/ [DOI] [PMC free article] [PubMed] [Google Scholar]

- 29.Fiordelli M, Diviani N, Schulz PJ. Mapping mHealth research: a decade of evolution. J Med Internet Res. 2013 May 21;15(5):e95. doi: 10.2196/jmir.2430. https://www.jmir.org/2013/5/e95/ [DOI] [PMC free article] [PubMed] [Google Scholar]

- 30.Albrecht U, von Jan U. Safe, sound and desirable: development of mHealth apps under the stress of rapid life cycles. Mhealth. 2017;3:27. doi: 10.21037/mhealth.2017.06.05. doi: 10.21037/mhealth.2017.06.05. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 31.Becker S, Miron-Shatz T, Schumacher N, Krocza J, Diamantidis C, Albrecht U. mHealth 2.0: experiences, possibilities, and perspectives. JMIR Mhealth Uhealth. 2014 May 16;2(2):e24. doi: 10.2196/mhealth.3328. https://mhealth.jmir.org/2014/2/e24/ [DOI] [PMC free article] [PubMed] [Google Scholar]

- 32.Cornet VP, Daley C, Bolchini D, Toscos T, Mirro MJ, Holden RJ. Patient-centered design grounded in user and clinical realities: towards valid digital health. Proc Int Symp Hum Fact Erg Health Care. 2019 Sep 15;8(1):100–4. doi: 10.1177/2327857919081023. https://journals.sagepub.com/doi/abs/10.1177/2327857919081023?journalCode=hcsa. [DOI] [Google Scholar]

- 33.Boustani MA, van der Marck MA, Adams N, Azar JM, Holden RJ, Vollmar HC, Wang S, Williams C, Alder C, Suarez S, Khan B, Zarzaur B, Fowler NR, Overley A, Solid CA, Gatmaitan A. Developing the agile implementation playbook for integrating evidence-based health care services into clinical practice. Acad Med. 2019;94(4):556–61. doi: 10.1097/ACM.0000000000002497. [DOI] [Google Scholar]

- 34.Waterson P. Ergonomics and ergonomists: lessons for human factors and ergonomics practice from the past and present. In: Shorrock S, Williams C, editors. Human Factors and Ergonomics in Practice: Improving System Performance and Human Well-Being in the Real World. Boca Raton, FL: CRC Press; 2017. pp. 29–44. [Google Scholar]

- 35.Roger VL. Epidemiology of heart failure. Circ Res. 2013 Aug 30;113(6):646–59. doi: 10.1161/CIRCRESAHA.113.300268. http://europepmc.org/abstract/MED/23989710. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 36.Lainscak M, Blue L, Clark A, Dahlström U, Dickstein K, Ekman I, McDonagh T, McMurray JJ, Ryder M, Stewart S, Strömberg A, Jaarsma T. Self-care management of heart failure: practical recommendations from the patient care committee of the heart failure association of the European society of cardiology. Eur J Heart Fail. 2011 Feb;13(2):115–26. doi: 10.1093/eurjhf/hfq219. doi: 10.1093/eurjhf/hfq219. [DOI] [PubMed] [Google Scholar]

- 37.Holden RJ, Schubert CC, Mickelson RS. The patient work system: an analysis of self-care performance barriers among elderly heart failure patients and their informal caregivers. Appl Ergon. 2015 Mar;47:133–50. doi: 10.1016/j.apergo.2014.09.009. http://europepmc.org/abstract/MED/25479983. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 38.Klersy C, Boriani G, de Silvestri A, Mairesse G, Braunschweig F, Scotti V, Balduini A, Cowie MR, Leyva F, Health Economics Committee of the European Heart Rhythm Association Effect of telemonitoring of cardiac implantable electronic devices on healthcare utilization: a meta-analysis of randomized controlled trials in patients with heart failure. Eur J Heart Fail. 2016 Feb;18(2):195–204. doi: 10.1002/ejhf.470. doi: 10.1002/ejhf.470. [DOI] [PubMed] [Google Scholar]

- 39.Hawkins NM, Virani SA, Sperrin M, Buchan IE, McMurray JJ, Krahn AD. Predicting heart failure decompensation using cardiac implantable electronic devices: a review of practices and challenges. Eur J Heart Fail. 2016 Aug;18(8):977–86. doi: 10.1002/ejhf.458. doi: 10.1002/ejhf.458. [DOI] [PubMed] [Google Scholar]

- 40.Mirro M, Daley C, Wagner S, Ghahari RR, Drouin M, Toscos T. Delivering remote monitoring data to patients with implantable cardioverter-defibrillators: does medium matter? Pacing Clin Electrophysiol. 2018 Nov;41(11):1526–35. doi: 10.1111/pace.13505. [DOI] [PubMed] [Google Scholar]

- 41.Daley CN, Allmandinger A, Heral L, Toscos T, Plant R, Mirro M. Engagement of ICD patients: direct electronic messaging of remote monitoring data via a personal health record. EP Lab Digest. 2015;15(5):-. https://www.eplabdigest.com/articles/Engagement-ICD-Patients-Direct-Electronic-Messaging-Remote-Monitoring-Data-Personal-Health. [Google Scholar]

- 42.Daley CN, Chen EM, Roebuck AE, Ghahari RR, Sami AF, Skaggs CG, Carpenter M, Mirro M, Toscos T. Providing patients with implantable cardiac device data through a personal health record: a qualitative study. Appl Clin Inform. 2017 Oct;8(4):1106–16. doi: 10.4338/ACI-2017-06-RA-0090. http://europepmc.org/abstract/MED/29241248. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 43.Rohani Ghahari R, Holden RJ, Flanagan M, Wagner S, Martin E, Ahmed R, Daley CN, Tambe R, Chen E, Allmandinger T, Mirro MJ, Toscos T. Using cardiac implantable electronic device data to facilitate health decision making: a design study. Int J Ind Ergon. 2018 Mar;64:143–54. doi: 10.1016/j.ergon.2017.11.002. doi: 10.1016/j.ergon.2017.11.002. [DOI] [Google Scholar]

- 44.Daley CN, Bolchini D, Varrier A, Rao K, Joshi P, Blackburn J, Toscos T, Mirro MJ, Wagner S, Martin E, Miller A, Holden RJ. Naturalistic decision making by older adults with chronic heart failure: an exploratory study using the critical incident technique. Proc Hum Factors Ergon Soc Annu Meet. 2018 Sep 27;62(1):568–72. doi: 10.1177/1541931218621130. https://journals.sagepub.com/doi/10.1177/1541931218621130. [DOI] [Google Scholar]

- 45.Daley CN, Cornet VP, Patekar G, Kosarabe S, Bolchini D, Toscos T, Mirro M, Wagner S, Martin E, Rohani Ghahari R, Ahmed R, Miller A, Holden RJ. Uncertainty management among older adults with heart failure: responses to receiving implanted device data using a fictitious scenario interview method. Proc Int Sym Hum Fact Erg Health Care. 2019 Sep 15;8(1):127–30. doi: 10.1177/2327857919081030. https://journals.sagepub.com/doi/abs/10.1177/2327857919081030. [DOI] [Google Scholar]

- 46.Holden RJ, Joshi P, Rao K, Varrier A, Daley CN, Bolchini D, Blackburn J, Toscos T, Wagner S, Martin E, Miller A, Mirro MJ. Modeling personas for older adults with heart failure. Proc Hum Factors Ergon Soc Annu Meet. 2018 Sep 27;62(1):1072–6. doi: 10.1177/1541931218621246. doi: 10.1177/1541931218621246. [DOI] [Google Scholar]

- 47.Holden RJ, Daley CN, Mickelson RS, Bolchini D, Toscos T, Cornet VP, Miller A, Mirro MJ. Patient decision-making personas: an application of a patient-centered cognitive task analysis (P-CTA) Appl Ergon. 2020 Apr 15;87:103107. doi: 10.1016/j.apergo.2020.103107. https://www.sciencedirect.com/science/article/abs/pii/S0003687020300685. [DOI] [PubMed] [Google Scholar]

- 48.Kain D. Owning significance: the critical incident technique in research. In: de Marrais K, Lapan SD, editors. Foundations for Research: Methods of Inquiry in Education and the Social Sciences. Mahwah, NJ: Lawrence Erlbaum Associates; 2004. pp. 69–85. [Google Scholar]

- 49.Flanagan JC. The critical incident technique. Psychol Bull. 1954 Jul;51(4):327–58. doi: 10.1037/h0061470. [DOI] [PubMed] [Google Scholar]

- 50.Ahmed R, Toscos T, Ghahari RR, Holden RJ, Martin E, Wagner S, Daley CN, Coupe A, Mirro MJ. Visualization of cardiac implantable electronic device data for older adults using participatory design. Appl Clin Inform. 2019 Aug;10(4):707–18. doi: 10.1055/s-0039-1695794. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 51.Hart S, Staveland L. Development of NASA-TLX (task load index): results of empirical and theoretical research. Adv Psychol. 1988;52:139–83. doi: 10.1016/s0166-4115(08)62386-9. https://www.sciencedirect.com/science/article/pii/S0166411508623869. [DOI] [Google Scholar]

- 52.Brooke J. SUS: a 'quick and dirty' usability scale. In: Jordan PW, Thomas B, McClelland IL, Weerdmeester B, editors. Usability Evaluation In Industry. London, England: Taylor & Francis; 1996. pp. 189–94. [Google Scholar]

- 53.Boehmer JP, Hariharan R, Devecchi FG, Smith AL, Molon G, Capucci A, An Q, Averina V, Stolen CM, Thakur PH, Thompson JA, Wariar R, Zhang Y, Singh JP. A multisensor algorithm predicts heart failure events in patients with implanted devices: results from the multisense study. JACC Heart Fail. 2017 Mar;5(3):216–25. doi: 10.1016/j.jchf.2016.12.011. https://linkinghub.elsevier.com/retrieve/pii/S2213-1779(17)30048-3. [DOI] [PubMed] [Google Scholar]

- 54.Gardner RS, Singh JP, Stancak B, Nair DG, Cao M, Schulze C, Thakur PH, An Q, Wehrenberg S, Hammill EF, Zhang Y, Boehmer JP. Heartlogic multisensor algorithm identifies patients during periods of significantly increased risk of heart failure events: results from the multisense study. Circ Heart Fail. 2018 Jul;11(7):e004669. doi: 10.1161/CIRCHEARTFAILURE.117.004669. [DOI] [PubMed] [Google Scholar]

- 55.Nielsen J, Landauer TK. A Mathematical Model of the Finding of Usability Problems. Proceedings of the INTERACT '93 and CHI '93 Conference on Human Factors in Computing Systems; INTERCHI '93; April 24-29, 1993; Amsterdam, The Netherlands. 1993. pp. 206–13. https://dl.acm.org/doi/10.1145/169059.169166. [DOI] [Google Scholar]

- 56.Nielsen J. Iterative user-interface design. Computer. 1993 Nov;26(11):32–41. doi: 10.1109/2.241424. doi: 10.1109/2.241424. [DOI] [Google Scholar]

- 57.Baez M, Casati F. Agile Development for Vulnerable Populations: Lessons Learned and Recommendations. Proceedings of the 40th International Conference on Software Engineering: Software Engineering in Society; ICSE-SEIS'18; May 27-June 3, 2018; Gothenburg, Sweden. 2018. [DOI] [Google Scholar]

- 58.Holden RJ, Bodke K, Tambe R, Comer RS, Clark DO, Boustani M. Rapid translational field research approach for eHealth R&D. Proc Int Sym Hum Fact Erg Health Care. 2016 Jul 22;5(1):25–7. doi: 10.1177/2327857916051003. https://journals.sagepub.com/doi/10.1177/2327857916051003. [DOI] [Google Scholar]

- 59.Holden RJ, Scott AM, Hoonakker PLT, Hundt AS, Carayon P. Data collection challenges in community settings: insights from two field studies of patients with chronic disease. Qual Life Res. 2015 May;24(5):1043–55. doi: 10.1007/s11136-014-0780-y. http://europepmc.org/abstract/MED/25154464. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 60.Holden RJ, Or CK, Alper SJ, Joy Rivera A, Karsh BT. A change management framework for macroergonomic field research. Appl Ergon. 2008 Jul;39(4):459–74. doi: 10.1016/j.apergo.2008.02.016. https://www.sciencedirect.com/science/article/abs/pii/S0003687008000471?via%3Dihub. [DOI] [PubMed] [Google Scholar]

- 61.Valdez RS, Holden RJ. Health care human factors/ergonomics fieldwork in home and community settings. Ergon Des. 2016 Oct;24(4):4–9. doi: 10.1177/1064804615622111. http://europepmc.org/abstract/MED/28781512. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 62.Toscos T, Drouin M, Pater J, Flanagan M, Pfafman R, Mirro MJ. Selection biases in technology-based intervention research: patients' technology use relates to both demographic and health-related inequities. J Am Med Inform Assoc. 2019 Aug 1;26(8-9):835–9. doi: 10.1093/jamia/ocz058. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 63.Holden RJ, Toscos T, Daley CN. Researcher reflections on human factors and health equity. In: Roscoe R, Chiou EK, Wooldridge AR, editors. Advancing Diversity, Inclusion, and Social Justice Through Human Systems Engineering. Boca Raton, FL: CRC Press; 2019. pp. 51–62. [Google Scholar]

- 64.Cosco TD, Firth J, Vahia I, Sixsmith A, Torous J. Mobilizing mHealth data collection in older adults: challenges and opportunities. JMIR Aging. 2019 Mar 19;2(1):e10019. doi: 10.2196/10019. https://aging.jmir.org/2019/1/e10019/ [DOI] [PMC free article] [PubMed] [Google Scholar]

- 65.Pagliari C. Design and evaluation in ehealth: challenges and implications for an interdisciplinary field. J Med Internet Res. 2007 May 27;9(2):e15. doi: 10.2196/jmir.9.2.e15. https://www.jmir.org/2007/2/e15/ [DOI] [PMC free article] [PubMed] [Google Scholar]

- 66.Poole ES. HCI and mobile health interventions: how human-computer interaction can contribute to successful mobile health interventions. Transl Behav Med. 2013 Dec;3(4):402–5. doi: 10.1007/s13142-013-0214-3. http://europepmc.org/abstract/MED/24294328. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 67.Aidemark J, Askenäs L, Nygårdh A, Strömberg A. User involvement in the co-design of self-care support systems for heart failure patients. Procedia Comput Sci. 2015;64:118–24. doi: 10.1016/j.procs.2015.08.471. doi: 10.1016/j.procs.2015.08.471. [DOI] [Google Scholar]

- 68.Blandford A, Gibbs J, Newhouse N, Perski O, Singh A, Murray E. Seven lessons for interdisciplinary research on interactive digital health interventions. Digit Health. 2018;4:2055207618770325. doi: 10.1177/2055207618770325. http://europepmc.org/abstract/MED/29942629. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 69.Calvo RA, Dinakar K, Picard R, Christensen H, Torous J. Toward impactful collaborations on computing and mental health. J Med Internet Res. 2018 Feb 9;20(2):e49. doi: 10.2196/jmir.9021. https://www.jmir.org/2018/2/e49/ [DOI] [PMC free article] [PubMed] [Google Scholar]

- 70.Holden RJ, Binkheder S, Patel J, Viernes SH. Best practices for health informatician involvement in interprofessional health care teams. Appl Clin Inform. 2018 Jan;9(1):141–8. doi: 10.1055/s-0038-1626724. http://europepmc.org/abstract/MED/29490407. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 71.Kujala S. User involvement: a review of the benefits and challenges. Behav Inf Technol. 2003 Jan;22(1):1–16. doi: 10.1080/01449290301782. doi: 10.1080/01449290301782. [DOI] [Google Scholar]

- 72.Baek EO, Cagiltay K, Boling E, Frick T. User-centered design and development. In: Spector JM, Merrill MD, van Merrienboer J, Driscoll MP, editors. Handbook of Research on Educational Communications and Technology: A Project of the Association for Educational Communications and Technology. New York, USA: Taylor and Francis; 2008. pp. 660–8. [Google Scholar]

- 73.Kittur A, Chi EH, Suh BW. Crowdsourcing user studies with mechanical turk. Proceedings of the SIGCHI Conference on Human Factors in Computing Systems; CHI'08; April 5-10, 2008; Florence, Italy. 2008. https://dl.acm.org/doi/10.1145/1357054.1357127. [DOI] [Google Scholar]

- 74.Guillory J, Kim A, Murphy J, Bradfield B, Nonnemaker J, Hsieh Y. Comparing Twitter and online panels for survey recruitment of e-cigarette users and smokers. J Med Internet Res. 2016 Nov 15;18(11):e288. doi: 10.2196/jmir.6326. https://www.jmir.org/2016/11/e288/ [DOI] [PMC free article] [PubMed] [Google Scholar]

- 75.Walter SL, Seibert SE, Goering D, O’Boyle EH. A tale of two sample sources: do results from online panel data and conventional data converge? J Bus Psychol. 2018 Jul 21;34(4):425–52. doi: 10.1007/s10869-018-9552-y. [DOI] [Google Scholar]

- 76.Eshet E, Bouwman H. Context of use: the final frontier in the practice of user-centered design? Interact Comput. 2016 Oct 13;29:368–90. doi: 10.1093/iwc/iww030. doi: 10.1093/iwc/iww030. [DOI] [Google Scholar]

- 77.Sharma A, Angel L, Bui Q. Patient advisory councils: giving patients a seat at the table. Fam Pract Manag. 2015;22(4):22–7. http://www.aafp.org/link_out?pmid=26176505. [PubMed] [Google Scholar]

- 78.Keil M, Carmel E. Customer-developer links in software development. Commun ACM. 1995;38(5):33–44. doi: 10.1145/203356.203363. doi: 10.1145/203356.203363. [DOI] [Google Scholar]

- 79.Béguin P. Design as a mutual learning process between users and designers. Interact Comput. 2003 Oct;15(5):709–30. doi: 10.1016/S0953-5438(03)00060-2. doi: 10.1016/S0953-5438(03)00060-2. [DOI] [Google Scholar]

- 80.Lorenzi NM, Riley RT. Managing change: an overview. J Am Med Inform Assoc. 2000;7(2):116–24. doi: 10.1136/jamia.2000.0070116. http://europepmc.org/abstract/MED/10730594. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 81.Magrabi F, Ong M, Coiera E. Health IT for patient safety and improving the safety of health IT. Stud Health Technol Inform. 2016;222:25–36. [PubMed] [Google Scholar]

- 82.Turner P, Turner S. Is stereotyping inevitable when designing with personas? Design Stud. 2011 Jan;32(1):30–44. doi: 10.1016/j.destud.2010.06.002. doi: 10.1016/j.destud.2010.06.002. [DOI] [Google Scholar]

- 83.Norman D. Living with Complexity. Cambridge, Massachusetts: The MIT Press; 2011. [Google Scholar]

- 84.Ahmed I, Ahmad NS, Ali S, Ali S, George A, Danish HS, Uppal E, Soo J, Mobasheri MH, King D, Cox B, Darzi A. Medication adherence apps: review and content analysis. JMIR Mhealth Uhealth. 2018 Mar 16;6(3):e62. doi: 10.2196/mhealth.6432. https://mhealth.jmir.org/2018/3/e62/ [DOI] [PMC free article] [PubMed] [Google Scholar]

- 85.Cho H, Yen PY, Dowding D, Merrill JA, Schnall R. A multi-level usability evaluation of mobile health applications: a case study. J Biomed Inform. 2018 Oct;86:79–89. doi: 10.1016/j.jbi.2018.08.012. https://linkinghub.elsevier.com/retrieve/pii/S1532-0464(18)30167-9. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 86.Cornet VP, Daley CN, Srinivas P, Holden RJ. User-centered evaluations with older adults: testing the usability of a mobile health system for heart failure self-management. Proc Hum Factors Ergon Soc Annu Meet. 2017 Sep;61(1):6–10. doi: 10.1177/1541931213601497. https://journals.sagepub.com/doi/abs/10.1177/1541931213601497. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 87.Holden RJ, Campbell NL, Abebe E, Clark DO, Ferguson D, Bodke K, Boustani MA, Callahan CM, Brain Health Patient Safety Laboratory Usability and feasibility of consumer-facing technology to reduce unsafe medication use by older adults. Res Social Adm Pharm. 2020 Jan;16(1):54–61. doi: 10.1016/j.sapharm.2019.02.011. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 88.Bangor A, Kortum P, Miller J. An empirical evaluation of the system usability scale. Int J Hum-Comput Interact. 2008 Jul 30;24(6):574–94. doi: 10.1080/10447310802205776. doi: 10.1080/10447310802205776. [DOI] [Google Scholar]

- 89.Georgsson M, Staggers N. An evaluation of patients' experienced usability of a diabetes mHealth system using a multi-method approach. J Biomed Inform. 2016 Feb;59:115–29. doi: 10.1016/j.jbi.2015.11.008. https://linkinghub.elsevier.com/retrieve/pii/S1532-0464(15)00276-2. [DOI] [PubMed] [Google Scholar]

- 90.Maramba I, Chatterjee A, Newman C. Methods of usability testing in the development of eHealth applications: a scoping review. Int J Med Inform. 2019 Jun;126:95–104. doi: 10.1016/j.ijmedinf.2019.03.018. [DOI] [PubMed] [Google Scholar]