Abstract

Color constancy involves disambiguating the spectral characteristics of lights and surfaces, for example to distinguish red in white light from white in red light. Solving this problem appears especially challenging for bluish tints, which may be attributed more often to shading, and this bias may underlie the individual differences in whether people described the widely publicized image of #thedress as blue-black or white-gold. To probe these higher-level color inferences, we examined neural correlates of the blue-bias, using frequency-tagging and high-density electroencephalography to monitor responses to 3-Hz alternations between different color versions of #thedress. Specifically, we compared relative neural responses to the original “blue” dress image alternated with the complementary “yellow” image (formed by inverting the chromatic contrast of each pixel). This image pair produced a large modulation of the electroencephalography amplitude at the alternation frequency, consistent with a perceived contrast difference between the blue and yellow images. Furthermore, decoding topographical differences in the blue-yellow asymmetries over occipitoparietal channels predicted blue-black and white-gold observers with over 80% accuracy. The blue-yellow asymmetry was stronger than for a “red” versus “green” pair matched for the same component differences in L versus M or S versus LM chromatic contrast as the blue-yellow pair and thus cannot be accounted for by asymmetries within either precortical cardinal mechanism. Instead, the results may point to neural correlates of a higher-level perceptual representation of surface colors.

Keywords: color perception, color constancy, EEG, frequency tagging, individual differences

Introduction

Our perceptual experience of color arises through a complex transformation of the initial responses in three classes of retinal cone photoreceptors into extended cortical representations (e.g., for reviews: Dacey, 2000; Gegenfurtner, 2003; Lee, 2004; Solomon & Lennie, 2007; Conway et al., 2010; Shapley & Hawken, 2011; Conway, 2014; Johnson & Mullen, 2016). Within this processing stream, the representation shifts from the proximal stimulus - such as the cone contrasts at different points in the scene - to include causal inferences about the distal properties of the stimulus, such as the inferred surface reflectance. A well-studied example of these inferences is color constancy, in which the visual system forms a representation of surface color that is relatively independent of the spectrum of the incident lighting (Foster, 2011). Many different processes contribute to color constancy (Foster, 2011; D'Zmura & Lennie, 1986; Smithson, 2005; Shevell & Kingdom, 2008), from low-level sensory adjustments, e.g., adapting to the average chromaticity in the scene (Land & McCann, 1971; Brainard & Wandell, 1992; Hurlbert, 1998; Smithson, 2005; Foster, 2011), to learned inferences about the causal structure of the world (Lotto & Purves, 2002), to potentially conceptual representations (e.g., color constancy is typically better when observers are asked to judge if two samples are the same material rather than directly judging their chromaticity; Arend & Reeves, 1986). At a neural level, differing traces of color constancy have been found throughout color-sensitive areas from the retina to the ventral occipito-temporal cortex (e.g., Gegenfurtner, 2003; Foster, 2011; Smithson, 2005; Walsh, 1999). Thus, it remains unclear at which point the representation of color more closely resembles the observer's percepts than the retina's signals (Engel, Zhang, & Wandell, 1997; Wandell et al., 2000; Brouwer & Heeger, 2009).

We explored high-order color percepts and how they are manifest in neural measurements of chromatic responses with use of an ambiguous color image characterized by large individual differences in its perceptual interpretation. In 2015, this image of #thedress sparked global interest because people differed vehemently and persistently over whether the dress itself appeared “blue-black” (i.e., blue with black stripes) or “white-gold” (i.e., white with gold stripes). A common explanation for the differing percepts of the dress was that individuals differed in the inference of a distal stimulus property, i.e., whether they saw the dress to be in direct light (blue-black) or in shadow (white-gold) (e.g., Brainard & Hurlbert, 2015; Gegenfurtner, Bloj, & Toscani, 2015; Lafer-Sousa, Hermann, & Conway, 2015; Winkler et al., 2015; Witzel, 2015; Chetverikov & Ivanchei, 2016; Hesslinger & Carbon, 2016; Toscani, Gegenfurtner, & Doerschner, 2017; Wallisch, 2017; Witzel, Racey, & O'Regan, 2017). The individual differences in this inference have in turn been attributed to the special ambiguity of blue percepts (Winkler et al., 2015), in line with the gamut of chromaticities in the dress image being largely distributed along the natural “blue-yellow” daylight locus (Gegenfurtner, Bloj, & Toscani, 2015; Lafer-Sousa, Hermann, & Conway, 2015; Winkler et al., 2015; Witzel, Racey, & O'Regan, 2017). Here, we used the dress image to investigate neural correlates of both (1) the perceptual asymmetries in the representations of blue and yellow and (2) individual differences in the perception of blue, defining white-gold and blue-black dress observers.

In the first case, perceptual blue-yellow asymmetries may again be related to the ambiguity of blue as a property of the surface or illumination. Bluish hues may pose a special challenge to color constancy because they correspond to the hue of cast shadows, and thus observers may be more likely to attribute bluish tints to the diffuse lighting (e.g., from the sky) rather than to the object. In fact, individuals tend to underestimate the blue of actual shadows (Churma, 1994) and show better color constancy (less sensitivity to an illuminant change) for blue illuminants (Pearce et al., 2014). They are also more likely to call a bluish chromaticity “white” than the equivalent (complementary) yellow chromaticity, and thus may perceive blues as reduced in contrast (Winkler et al., 2015). Consistent with this, forming a “color negative” of the dress by inverting the chromatic contrasts from blue to yellow removed the ambiguity and thus the observer differences in the reported color (such that almost all observers now agreed that the dress stripes appeared yellow; Gegenfurtner, Bloj, & Toscani, 2015; Winkler et al., 2015). This is potentially because yellow tints are inconsistent with shading, since natural blue lighting from the sky is diffuse while natural yellow lighting from the sun is directional. Moreover, these effects are specific to the blue-yellow axis: when the dress colors are rotated off this axis (e.g., along a reddish-greenish axis), the reds and greens appear more similar in saturation and there is again little disagreement in how people describe the colors (Gegenfurtner, Bloj, & Toscani, 2015; Winkler et al., 2015).

Thus we first tested for group-level neural responses correlated with the reduced perceived contrast of blue. To this end, we compared electroencephalography (EEG) response asymmetries to paired blue-yellow versions of the dress, as well as paired green-red versions of the dress. Note that we use these labels to refer to the nominal perceived colors, and not to any specific chromatic axes or mechanisms. In particular, the labels do not refer to the cardinal axes of early color coding (Krauskopf, Williams, & Heeley, 1982). However, the differences in chromatic contrast along the L-M and S-(L+M) cardinal chromatic dimensions were constructed to be identical for the blue-yellow and green-red stimulus pairs, but combined in opposite phase (see Figure 1A and Methods). Thus differences in the responses to the blue-yellow versus green-red pairs could not be accounted for by the separable cardinal color mechanisms representing precortical color coding (Derrington, Krauskopf, & Lennie, 1984), although asymmetries within either cardinal mechanism would be expected to produce the same asymmetric responses within either the blue-yellow or green-red pair. Such a comparison of cone-contrast–balanced stimuli has been applied in a number of previous studies to test for the separability of the cardinal axes (e.g., Goddard et al., 2011; McDermott et al., 2010). To provide a reference for an extreme contrast difference (achromatic to chromatic), we additionally measured electrophysiological response differences to a pair of gray-yellow dress images. Overall, we predicted that group differences in neural responses to blue-yellow would be greater than those to green-red (in line with larger perceptual blue-yellow asymmetries: Winkler et al., 2015) but smaller than those to gray-yellow (an extreme achromatic-chromatic contrast), even though again the blue-yellow and green-red pairs were matched for their early-stage color differences.

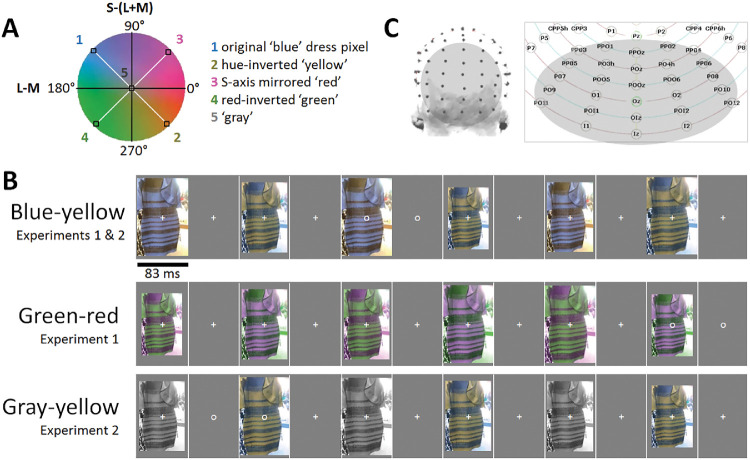

Figure 1.

(A) A scaled version of the MacLeod-Boynton/Derrington-Krauskopf-Lennie chromatic plane showing the construction of the stimuli. The chromatic contrast of each pixel in the original “blue” image (e.g., point 1) was inverted to form the yellow image (e.g., point 2). In turn, the red and green images (e.g., points 3 and 4) were formed by reflecting the blue-yellow contrasts along the L-M or equivalently S-(L+M) axes. Consequently, the blue-yellow and green-red pairs had the same component L-M and S-(L+M) contrast, but combined in opposite phase. Additionally, another independent transformation was applied to convert each pixel of the original dress image into achromatic gray (point 5). (B) The three experimental conditions tested, in order, blue-yellow, green-red, and gray-yellow pairs of dress stimuli. (C) Left: A 128-channel ActiveTwo BioSemi EEG system was used for the recordings. Right: A section of relabeled posterior electrode positions on a 2D scalp map. Analyses were performed on a 28-channel occipitoparietal region-of-interest (highlighted in gray; shown on a 3D head map on the left), encompassing all occipital, occipitoparietal, occipitoinferior, and inferior channels.

In the second case, we assessed individual differences in the blue-yellow percepts. Here, we reasoned that white-gold and blue-black observers would be identifiable with a system-level measure of visual cortical responses (recorded with high-density EEG over the occipito-parietal cortex), again given the extensive traces of surface/illuminant resolution throughout the human visual system (e.g., Gegenfurtner, 2003; Foster, 2011; Smithson, 2005; Walsh, 1999). Neural correlates at this level have not been found in previous studies. Instead, one previous neuroimaging study reported differences across observers only in frontal and parietal “higher cognition” cortical areas when contrasting the dress to uniform color patches (Schlaffke et al., 2015). This effect was interpreted as modulation of the percepts by top-down, post-perceptual processes. Other studies have pointed instead at low-level visual influences, such as spectral sensitivity differences from variations in macular pigment density (Rabin et al., 2016) or normal variations in pupil diameter (Vemuri et al., 2016). In this vein, differences in visual evoked potential waveforms at a single medial-occipital electrode were reported to correlate with differences in perception of the dress (Rabin et al., 2016), although these low-level findings were not reliable at the individual participant level.

We took a novel approach to explore neural correlates of individual differences in perception of the dress, hypothesizing that differences across white-gold and blue-black observers would manifest as differences in the recorded blue-yellow EEG response differences. Although blue-black and white-gold observers both rely on high-level assumptions about the source of illumination in this image, the differences in these assumptions result in different perceived contrasts of the dress surface color, which in turn may predict different contrast responses to the surface color. Specifically, we predicted that observers who perceived the dress as white-gold might represent the blue hues in the image as a lower effective (perceived) contrast, leading to blue-yellow amplitude differences largest over medial-occipital channels sensitive to early-stage contrast differences (Kulikowski et al., 1994; Crognale et al., 2013). Additionally, since these response asymmetries potentially reflect a lower effective stimulus contrast in the blue image, we predicted greater similarities in blue-yellow and gray-yellow asymmetries for these observers, in terms of response amplitude, topography, and phase. Conversely, observers reporting the dress as blue-black might show responses to the blue and yellow pair that are more similar to those between green and red, i.e., not differing in perceived contrast, but relying on chromatic-chromatic distinctions, possibly from later-stage visual areas projecting beyond the occipital midline of the scalp (e.g., Anllo-Vento, Luck, & Hillyard, 1998). These observers in particular are expected to have larger gray-yellow than blue-yellow responses. Such differences between the two perceptual groups would lay the foundation for classification of observers as white-gold or blue-black based on their blue-yellow response asymmetries.

To monitor neural asymmetries, we used fast periodic visual stimulation and high-density electroencephalogram (FPVS-EEG; also referred to as “frequency-tagging” or “steady-state visually evoked potentials”; e.g., Regan, 1966; Rossion, 2014a; Rossion, 2014b; Norcia et al., 2015). Generally, in this approach, stimuli presented at a fixed frequency generate EEG responses locked to that frequency, which can be identified objectively in the temporal frequency domain with a high signal-to-noise ratio. Here, we applied a paradigm variant designed specifically to measure response asymmetries, or differences in the relative responses to two stimuli (e.g., Tyler & Kaitz, 1977; Ales & Norcia, 2009; Coia et al., 2014; Retter & Rossion, 2016a; Retter & Rossion, 2017; reviewed in Norcia et al., 2015). In this symmetry/asymmetry paradigm, the stimulus alternates between two images (see Figure 1B), generating responses at the presentation frequency (F = 6 Hz) and its harmonics, but potentially also at the alternation rate (F/2 = 3 Hz) and its unique harmonics if differences in the responses to the two images (e.g., blue and yellow dresses) are present. In other words, common (symmetrical) visual responses to both stimuli are tagged at 6 Hz, while differential (asymmetrical) responses to the two stimuli are tagged at 3 Hz. As a concrete example, suppose a grating is shown 6 times per second, but alternates between horizontal and vertical. The EEG response will modulate at 6 Hz (the stimulus presentation rate). However, if the magnitude of response to the two gratings differs (e.g., because the horizontal grating has a lower contrast and thus produces a weaker neural signal), then the EEG response will also show a modulation at the alternation rate of 3 Hz, reflecting the asymmetry in the neural responses. Here we test for response asymmetries which might arise from the perceptual color contrast differences between blue and yellow versions of the dress image.
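The logic of the symmetry/asymmetry paradigm can be illustrated with a toy simulation. This is a minimal sketch, not the analysis code used in the study: the half-sine "evoked response" shape, the response gains, the 120 Hz sampling rate, and the function names `simulate` and `amplitude_at` are all assumptions chosen for illustration. Each image onset evokes one bump; when the two alternating images evoke bumps of different size, energy appears at the alternation rate (3 Hz) in addition to the presentation rate (6 Hz).

```python
import cmath
import math


def amplitude_at(signal, fs, freq):
    """Amplitude of `signal` at a single frequency (direct DFT, signal units)."""
    n = len(signal)
    acc = sum(x * cmath.exp(-2j * math.pi * freq * i / fs)
              for i, x in enumerate(signal))
    return 2 * abs(acc) / n


def simulate(resp_a, resp_b, fs=120, seconds=10, rate=6.0):
    """Toy response: every presentation evokes a half-sine bump whose gain
    is resp_a for even presentations and resp_b for odd presentations."""
    samples_per_image = int(fs / rate)
    out = []
    for i in range(int(fs * seconds)):
        image_index = i // samples_per_image
        gain = resp_a if image_index % 2 == 0 else resp_b
        phase = (i % samples_per_image) / samples_per_image
        out.append(gain * math.sin(math.pi * phase))
    return out


# Hypothetical gains: equal responses vs. a weaker response to one image.
symmetric = simulate(1.0, 1.0)
asymmetric = simulate(1.0, 0.6)

for name, sig in (("symmetric", symmetric), ("asymmetric", asymmetric)):
    print(name,
          "3 Hz:", round(amplitude_at(sig, 120, 3.0), 3),
          "6 Hz:", round(amplitude_at(sig, 120, 6.0), 3))
```

In this sketch the symmetric sequence carries essentially no energy at 3 Hz, whereas the asymmetric sequence carries a 3 Hz component whose size scales with half the difference between the two gains, while the 6 Hz component reflects what the two responses have in common.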

By using FPVS to record implicit responses to briefly presented images (167 ms SOA), we limited post-perceptual attentional or decisional modulation of the response to any one image (e.g., Rossion, 2014a; Norcia et al., 2015). By using high-density EEG, we also expanded our recording beyond a traditional medial-occipital channel, which has been shown to relate dominantly to early-stage visual cortical responses (Kulikowski et al., 1994; Crognale et al., 2013; see also Anllo-Vento, Luck, & Hillyard, 1998 and the Discussion). Therefore this paradigm was optimal for investigating both group- and individual-level perceptual effects that relate to complex visual inferences about surface and lighting color, which again may emerge from throughout early- and late-stage visual areas. At a practical level, discovering sensitive and reliable neural correlates of perceptual reports of color appearance would provide important foundations for future studies, e.g., for testing the development of (blue-yellow) color perception in infants. At a theoretical level, investigating these neural correlates allowed us to explore the visual representation of higher-order aspects of color perception.

Materials and methods

Participants

Fourteen participants, recruited from students and staff at the University of Nevada, Reno, took part in the primary experiment (Experiment 1). Their ages ranged from 20 to 30 years old (M = 23 years; SD = 3.2 years). Nine were male, and 2 were left-handed. A novel group of 14 participants took part in a second experiment, none of whom had participated in Experiment 1. Their ages ranged from 21 to 41 years old (M = 26 years; SD = 5.2 years), 5 were male, and 1 was left-handed. All reported normal or corrected-to-normal visual acuity and normal color vision. They gave signed, informed consent before participation in the experiment, which was approved by the University of Nevada, Reno's Institutional Review Board, and conducted in accordance with the Code of Ethics of the World Medical Association (Declaration of Helsinki).

Stimuli

Experiment 1 used 2 stimulus pairs (Figure 1A). One pair included the original image of #thedress (Roman Originals, 2018; made famous on Swiked, 2015), and an image formed by inverting the chromaticity of each pixel across both the L-M and S-(L+M) axes to the cone-opponent color (see Figure 1A). Note that this inversion maintained the same luminance at each pixel and thus altered only the chromaticity. Specifically, the vector defining the chromaticity of each pixel was effectively rotated 180° within a cone-opponent color space, so that the inverted color was equal in magnitude (cone-contrast) but opposite in direction relative to the achromatic point. The space was a modified version of the MacLeod-Boynton chromaticity diagram centered on a nominal gray value equivalent to the chromaticity of illuminant C (CIE 1931 x,y = 0.310, 0.316), and scaled to roughly equate threshold sensitivity along the cardinal L-M and S-(L+M) cone-opponent axes of the space (Winkler et al., 2015). We refer to these images as the blue-yellow (original-inverted) pair. The second pair was formed by inverting the pixel chromaticities of the blue-yellow pair along only the L-M (or S-[L+M]) axes of the space. This changes the hue of the original dress from blue to red, and the yellow complement to green. We refer to these images as the green-red pair.

Importantly, the green-red pair has the same component L-M and S-(L+M) chromatic contrast as the blue-yellow pair, but the components are combined in opposite phase. Consequently, the 2 pairs are matched for their signals along the independent L-M and S-(L+M) dimensions that are thought to be the principal or cardinal color directions along which color is coded in the retina and geniculate (Krauskopf, Williams, & Heeley, 1982). Experiment 2 used the same blue-yellow pair but compared to a third pair corresponding to the yellow image of pair 1 and a grayscale image of the dress. For the grayscale image, each pixel retained the same luminance as the original, but was set to the chromaticity of the nominal gray. This gray-yellow pair was included to assess the EEG responses to an actual (rather than perceived) asymmetry in the chromatic contrast of the images.
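The construction of the two stimulus pairs can be sketched for a single pixel. This is an illustrative sketch only: the coordinate values are hypothetical, the tuple layout `(lm, s)` (chromatic contrast along L-M and S-(L+M) relative to the nominal gray) and the function names are assumptions, and the scaling of the actual space is not reproduced. Luminance is left out because the transformations described above leave it unchanged.

```python
import math


def invert(chroma):
    """Rotate the chromatic vector by 180 degrees about the gray point."""
    lm, s = chroma
    return (-lm, -s)


def reflect_lm(chroma):
    """Flip the sign of the L-M component only, keeping S-(L+M)."""
    lm, s = chroma
    return (-lm, s)


def pair_difference(a, b):
    """Component-wise contrast difference between the two images of a pair."""
    return (a[0] - b[0], a[1] - b[1])


# Hypothetical chromatic contrast of one "blue" pixel (arbitrary units).
blue = (-0.04, 0.06)
yellow = invert(blue)        # color negative of the original
red = reflect_lm(blue)       # original reflected along one cardinal axis
green = reflect_lm(yellow)   # same reflection applied to the negative

by = pair_difference(blue, yellow)
gr = pair_difference(red, green)

# Same magnitude along each cardinal axis for both pairs ...
same_lm = math.isclose(abs(by[0]), abs(gr[0]))
same_s = math.isclose(abs(by[1]), abs(gr[1]))
# ... but the two components are combined with opposite relative sign.
opposite_phase = (by[0] * by[1] < 0) != (gr[0] * gr[1] < 0)
print(same_lm, same_s, opposite_phase)
```

Under these assumptions, any mechanism that responds to only one cardinal axis receives the same modulation from both pairs, which is why a difference between the blue-yellow and green-red asymmetries cannot arise within a single cardinal mechanism.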

The stimuli were sized to a width of 215 pixels and height of 327 pixels, and were presented on a gray background (with a luminance of 34.5 cd/m², equivalent to the medium gray level of the screen) on a 21-inch cathode ray tube monitor (NEC AccuSync 120), with a 120 Hz screen refresh rate. The monitor was gamma-corrected based on calibrations obtained with a PhotoResearch PR655 spectroradiometer, and was controlled by a standard PC. In Experiment 1, at a viewing distance of 80 cm, the images subtended a height of 6.8 degrees of visual angle. In Experiment 2, stimuli were viewed at a distance of 57 cm, changed to correspond with other experiments in the same testing session; they consequently subtended a height of 9.5 degrees of visual angle. Though percepts of #thedress have been found to change with size and spatial frequency (Lafer-Sousa, Hermann, & Conway, 2015; Dixon & Shapiro, 2017), informal assessments suggest that the size change between the experiments did not impact observers’ color judgments of the images (in each Experiment, 5/14 observers reported seeing the dress as white-gold).

Fast periodic visual stimulation (FPVS) procedure

Setting up the EEG system lasted about 30 to 40 minutes, with two experimenters preparing the headcap (each applying the conductive gel to one side of the cap, with sides alternated across participants). Capped participants were positioned in front of the cathode ray tube monitor and used a keyboard to start testing trials and give responses. Viewing was binocular and in a room illuminated only by the experimental and acquisition displays. A symmetry/asymmetry fast periodic visual stimulation (FPVS) design was used (e.g., Tyler & Kaitz, 1977; Ales & Norcia, 2009; Coia et al., 2014; Retter & Rossion, 2016a; Retter & Rossion, 2017; reviewed in Norcia et al., 2015). As noted, in this paradigm, two images are presented in alternation, leading to two distinct frequency-tagged responses expected in the EEG recording. At the image presentation rate (6 Hz), responses common to the two images, i.e., symmetrical responses, are expected. At the rate at which the images repeat (6 Hz/2 = 3 Hz), aspects of the responses differing between the 2 images, that is, asymmetrical responses, are expected. Note that in most previous applications of this paradigm, one of the stimuli is adapted before the alternation, to enhance intra-population response differences (e.g., see Figure 2 of Ales & Norcia, 2009, investigating directional motion), but adaptation is not necessary when the amplitude and phase of these differences are already substantial at the neural population level (e.g., Coia et al., 2014, investigating a chromatic illusion). Here, we aimed to investigate the inherent, unadapted neural response asymmetries to paired color images.

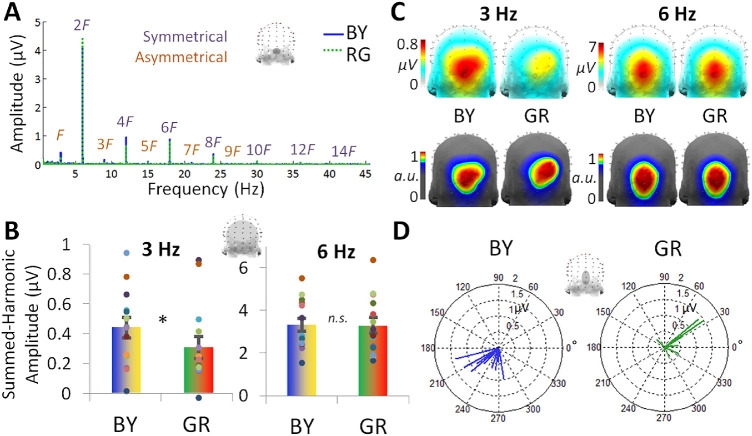

Figure 2.

Experiment 1 EEG results for the blue-yellow (BY) and green-red (GR) image pairs (n = 14). (A) The asymmetrical response at 3 Hz (F) and its unique harmonics is evident in the baseline-subtracted frequency spectrum, shown here for channel OIz. Its unique, asymmetrical harmonics are labeled up to 27 Hz (9F), the highest frequency giving a significant response at the group level across all 128 channels. The symmetrical response at 6 Hz (2F) and its harmonics are plotted in the same manner, up to 42 Hz (14F). (B) The summed-harmonic baseline-subtracted amplitudes over the occipitoparietal ROI for each 3 and 6 Hz. Individual data are shown with dots, paired by color. (C) The topographical distribution of the summed-harmonic baseline-subtracted amplitudes for each 3 and 6 Hz (top row). The corresponding normalized topographies are also plotted to emphasize the spatial differences, occurring within the occipitoparietal ROI (bottom row). (D) The phase of the 3 Hz response over the average of channels POz, Oz, and OIz. Data are shown with separate vectors for each individual participant, with the angle of the vector representing the cosine phase and the length of the vector representing the baseline-subtracted amplitude over these channels at 3 Hz.

Images were presented with a 50% duty cycle square wave at 6 Hz, resulting in each image being displayed at full contrast for 83.3 ms, followed by 83.3 ms of the background. The processing of each image likely persists to some extent until it is interrupted, such that rapid serial visual presentation paradigms have led to behavioral and neural response signatures within less than 20 ms presentation duration (Keysers et al., 2001; Potter et al., 2014). While the display time of each image here is brief, a study by Foster, Craven, and Sale (1992), showed that illuminant vs. surface color changes were detected extremely rapidly, even below 83 ms. At each image presentation, the size varied from 90-110% of the size of the original image in 10% steps to reduce pixel-based image repetition effects (see Dzhelyova & Rossion, 2014). As described in the Introduction, in Experiment 1 there were two experimental conditions, in each of which a pair of images of the dress were presented in alternation: (1) blue-yellow, alternating the original bluish and hue-inverted yellowish images of the dress; and (2) green-red, alternating the greenish and reddish images of the dress. In Experiment 2, there were also two experimental conditions, the first of which repeated the blue-yellow condition of Experiment 1. The second, gray-yellow condition, consisted of alternating a grayscale and the color-inverted image of the original (Figure 1B).
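The stimulation timing follows directly from the refresh rate and presentation rate given above, and can be checked with a few lines of arithmetic. This is a sketch under stated assumptions: the constant names and the `frame_schedule` helper are hypothetical and do not reflect the Java presentation software that was actually used.

```python
REFRESH_HZ = 120        # monitor refresh rate
PRESENTATION_HZ = 6     # image presentation rate
DUTY_CYCLE = 0.5        # fraction of each cycle with the image on screen

FRAMES_PER_CYCLE = REFRESH_HZ // PRESENTATION_HZ
FRAMES_ON = int(FRAMES_PER_CYCLE * DUTY_CYCLE)
FRAMES_OFF = FRAMES_PER_CYCLE - FRAMES_ON
ON_MS = 1000 * FRAMES_ON / REFRESH_HZ


def frame_schedule(n_presentations):
    """Per-frame labels: 'A' or 'B' while an image is shown, None while
    only the gray background is shown. Images alternate A, B, A, B, ..."""
    schedule = []
    for k in range(n_presentations):
        image = "A" if k % 2 == 0 else "B"
        schedule += [image] * FRAMES_ON + [None] * FRAMES_OFF
    return schedule


print(FRAMES_PER_CYCLE, FRAMES_ON, FRAMES_OFF, round(ON_MS, 1))
```

With these values each image occupies 10 refresh frames (about 83.3 ms) followed by 10 background frames, and a given image recurs every 40 frames, that is, at 3 Hz.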

Each testing sequence lasted for 50 s, immediately preceded and followed by a jittered 2-3 s presentation of a white fixation cross in the middle of the gray background, to establish fixation before recording and prevent abrupt movements on trial completion. Participants were instructed to fixate on this cross, which remained present throughout the testing sequence, superimposed on the presented images. To encourage fixation and sustained attention during trials, the cross would briefly change to an open circle (persisting for 200 ms) 8 times throughout each sequence at random time points, and participants were instructed to press the space bar each time they detected the change.

Participants viewed four repetitions of the sequences for each of the two conditions, in an individually randomized order, leading to a total recording time of 6.7 minutes. Given the short testing time, participants also took part in additional experiments during the same session, none of which concerned color perception. Stimuli were presented with Java SE Version 8.

EEG acquisition

EEG was recorded with a 128-channel BioSemi ActiveTwo EEG system. The Ag-AgCl Active-electrodes were organized in several sizes of head caps in the default BioSemi configuration, which centers around 9 standard 10/20 locations on the primary axes (BioSemi B.V., Amsterdam, Netherlands; for exact coordinates, see http://www.biosemi.com/headcap.htm). The default labels of BioSemi (organized in 4 groups [A–D] of 32) were relabeled to closely match those of a more conventional 10/5 system (Oostenveld & Praamstra, 2001; for exact relabeling, see Rossion et al., 2015, Figure S2; for a relevant example, see also Figure 1C). Offsets of each electrode, relative to the common mode sense and driven right leg feedback loop, were held below 40 mV. In addition, vertical and horizontal eye movements were recorded with four flat-type Active-electrodes, placed above and below the right eye and lateral to the external canthi. Recordings were digitized at a sampling rate of 2048 Hz and saved in BioSemi Data Formats, and then down-sampled offline to 512 Hz to reduce file size for processing.

EEG analysis

Preprocessing

Data were processed with Letswave 5, an open source toolbox (http://nocions.webnode.com/letswave), running over MATLAB R2013b (MathWorks, Natick, MA). After importation of the recorded BioSemi Data Formats, data were filtered with a fourth-order zero-phase Butterworth band-pass filter, with cutoff values of high-pass 0.05 Hz and low-pass 120 Hz, as well as a 60 Hz fast-Fourier transform (FFT) notch filter with a width of 0.5 Hz to remove 60 Hz electrical noise and its second harmonic. To correct for noise from eye-blinks in 2 participants blinking the most frequently in Experiment 1, that is, more than 0.2 times per s (M = 0.11; SD = 0.13 blinks/s), and for 3 participants in Experiment 2 (M = 0.12, SD = 0.22 blinks/s), independent-component analysis was used to remove a single component accounting for blink activity. Channels which had artifacts (deflections of greater than 100 µV) across 2 or more testing sequences were linearly interpolated with 3 to 5 pooled neighboring channels (6 or fewer channels per participant; Experiment 1: M = 1.8; Experiment 2: M = 0.57). Data were then re-referenced to the common average of the 128 EEG channels and segmented to 50 s per testing sequence, corresponding to exactly 300 image presentation cycles at 6 Hz and 150 image repetition cycles at 3 Hz. Testing sequences were averaged in time for each participant, preserving phase-locked responses evoked by image presentation and reducing non-phase-locked noise.

Frequencies-of-interest

Asymmetrical responses, that is, responses differing between the 2 images presented in each sequence, are expected at 3 Hz and its unique, odd harmonic frequencies (i.e., 9 Hz, 15 Hz, etc.; see Procedure). Symmetrical responses, in common to each of the two images presented in each sequence, are expected at the image presentation rate of 6 Hz and its (even) harmonic frequencies (i.e., 12 Hz, 18 Hz, etc.). To determine the number of harmonics to analyze for each of these two fundamental frequencies, data from Experiment 1 were Fourier-analyzed to obtain the frequency-domain amplitude spectra (see below) based on activity pooled across all channels and grand-averaged across participants. Z-scores were computed at each frequency bin, x, with a respective baseline defined as 20 surrounding frequency bins, excluding the immediately adjacent bins (Z = (x − baseline mean) / baseline standard deviation) (e.g., Retter & Rossion, 2016b; Srinivasan et al., 1999). Harmonic frequencies with responses significant at Z > 2.32, P < 0.01 (1-tailed, testing signal > noise) were used in subsequent analyses: this included 5 unique harmonics of 3 Hz (i.e., 3, 9, 15, 21, and 27 Hz) and 7 harmonics of 6 Hz (i.e., 6, 12, 18, 24, 30, 36, and 42 Hz) in both conditions (here, equivalent numbers of harmonics would be selected if P < 0.05 were used). Z-score values ranged from 3.15 to 15.9 at 3 Hz and its harmonics (peaking at 15 Hz), and from 2.83 to 125 at 6 Hz and its harmonics (peaking at 6 Hz). The same harmonic frequency ranges were used for analysis in Experiment 2.
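The Z-score criterion described above can be sketched as follows. This is a minimal illustration, not the Letswave implementation: it assumes that the 20 baseline bins are taken as 10 on each side of the bin of interest, that one bin on each side is skipped, and that the sample standard deviation is used; none of these details are specified beyond the description in the text. The spectrum values are synthetic.

```python
import statistics


def baseline_bins(spectrum, i, n_side=10, skip=1):
    """Neighbouring amplitudes around bin i: n_side bins on each side,
    skipping the `skip` bins immediately adjacent to bin i."""
    left = spectrum[i - skip - n_side:i - skip]
    right = spectrum[i + skip + 1:i + skip + 1 + n_side]
    return left + right


def z_score(spectrum, i):
    """Z = (x - baseline mean) / baseline standard deviation."""
    base = baseline_bins(spectrum, i)
    return (spectrum[i] - statistics.mean(base)) / statistics.stdev(base)


# Synthetic amplitude spectrum: small deterministic "noise" plus one peak.
spectrum = [1.0 + 0.1 * ((k * 7) % 5) for k in range(100)]
spectrum[50] = 5.0  # hypothetical tagged response

THRESHOLD = 2.32  # Z criterion for P < 0.01, 1-tailed
print(z_score(spectrum, 50) > THRESHOLD)   # peak bin
print(z_score(spectrum, 30) > THRESHOLD)   # ordinary noise bin
```

Because the baseline is local, the criterion adapts to the noise level around each harmonic rather than relying on a single noise estimate for the whole spectrum.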

Region-of-interest

A single occipitoparietal region-of-interest (ROI) was defined for response amplitude quantification, encompassing all 28 occipital, occipitoparietal, occipitoinferior, and inferior channels. This expansive ROI was chosen to include potentially diffusive visual responses across occipitoparietal regions (e.g., Anllo-Vento, Luck, & Hillyard, 1998; see also Forder et al., 2017; Thierry et al., 2009) and the potential neural sources considered in the Discussion. It was validated post-hoc that it included the channels producing the maximal amplitude at 3 Hz and 6 Hz and their unique harmonics, despite variance across conditions at 3 Hz and its unique harmonics (e.g., the 2 electrodes giving the maximal responses were Oz and OIz in the blue-yellow condition vs. POO6 and O2 in the green-red condition).

Frequency-domain analysis: amplitude

A fast Fourier transform (FFT) was used to convert the data into an amplitude (µV) frequency spectrum, normalized by the number of samples output. This spectrum had a range of 0-256 Hz and a resolution of 0.02 Hz. The comprehensive responses tagged at 3 and 6 Hz were quantified as the sum of the significant harmonics of each respective frequency (Retter & Rossion, 2016b). First, a baseline-subtraction was performed to reduce differences in noise-level across the frequency spectrum (e.g., being generally higher at lower frequencies and increasing locally in the alpha band), as well as across participants. The baseline of each frequency bin, x, was defined with 20 surrounding frequency bins, excluding the immediately adjacent bins, as well as the local minimum and maximum (e.g., Retter & Rossion, 2016b; Rossion et al., 2012). Finally, the baseline-subtracted amplitude of each significant frequency was combined at each channel. Grand-averaged summed-harmonic responses were computed for display of group-level frequency spectra and topographies. For further comparison of response topographies, normalization was applied using McCarthy and Wood's (1985) method to remove overall amplitude differences across conditions.
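The baseline-subtraction and harmonic summation can be sketched for a single channel. As before, this is an illustration under assumptions rather than the toolbox code: the 20 baseline bins are assumed to be split 10 per side, and the function names and the synthetic flat spectrum are hypothetical. The 0.02 Hz resolution is the reciprocal of the 50 s sequence duration, so 3 Hz falls exactly on bin 150.

```python
def baseline_subtracted(spectrum, i, n_side=10, skip=1):
    """Amplitude at bin i minus the mean of its neighbouring bins, after
    dropping the adjacent bins and the local minimum and maximum."""
    neighbours = (spectrum[i - skip - n_side:i - skip]
                  + spectrum[i + skip + 1:i + skip + 1 + n_side])
    trimmed = sorted(neighbours)[1:-1]  # remove one minimum and one maximum
    return spectrum[i] - sum(trimmed) / len(trimmed)


def summed_harmonics(spectrum, resolution_hz, harmonics_hz):
    """Sum of baseline-subtracted amplitudes over the listed harmonics."""
    return sum(baseline_subtracted(spectrum, round(f / resolution_hz))
               for f in harmonics_hz)


RESOLUTION_HZ = 1 / 50                      # 50 s sequences -> 0.02 Hz bins
ASYM_HARMONICS = [3, 9, 15, 21, 27]         # unique (odd) harmonics of 3 Hz
SYM_HARMONICS = [6, 12, 18, 24, 30, 36, 42]

# Synthetic spectrum: flat 0.5 µV noise floor with 1 µV added at each
# asymmetry harmonic (values are hypothetical).
spectrum = [0.5] * 2200
for f in ASYM_HARMONICS:
    spectrum[round(f / RESOLUTION_HZ)] += 1.0

asym_total = summed_harmonics(spectrum, RESOLUTION_HZ, ASYM_HARMONICS)
sym_total = summed_harmonics(spectrum, RESOLUTION_HZ, SYM_HARMONICS)
print(asym_total, sym_total)
```

Summing over harmonics gives one amplitude per channel and tagged frequency, which is the quantity compared across conditions in the tests described next.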

In Experiment 1, to statistically compare the asymmetrical responses at 3 Hz and its summed harmonics across the 2 conditions, a paired-sample t-test was performed over the occipitoparietal ROI (see above), 1-tailed given the specific hypothesis that larger blue-yellow than green-red asymmetries would be observed. In Experiment 2, a 1-tailed paired-sample t-test was again performed on the occipito-parietal ROI of the summed-harmonic response to compare the two conditions, constrained by the hypothesis that the gray-yellow condition would produce a larger asymmetry at 3 Hz and its unique harmonics than the blue-yellow condition. In both Experiments 1 and 2, the symmetrical responses at 6 Hz and their summed harmonics were compared separately with 2-tailed paired-sample t-tests over the same ROI, given that there was no predicted directionality of differences between conditions. In preparation for these paired-sample t-tests, the normality of the distribution of differences was confirmed with the Shapiro-Wilk test (P > 0.05).
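For readers less familiar with the paired comparison, a minimal sketch of the test statistic is given below. The function names and the data are hypothetical. The text does not state which effect-size formula was used, so the version of Cohen's d shown here (mean difference divided by the root of the averaged condition variances) is an assumption. P-values and the Shapiro-Wilk check require a statistics library and are omitted.

```python
from math import sqrt
from statistics import mean, stdev, variance


def paired_t(cond_a, cond_b):
    """Paired-sample t statistic and its degrees of freedom."""
    diffs = [a - b for a, b in zip(cond_a, cond_b)]
    n = len(diffs)
    t = mean(diffs) / (stdev(diffs) / sqrt(n))
    return t, n - 1


def cohens_d_av(cond_a, cond_b):
    """Cohen's d using the average of the two condition variances.

    Assumption: this is one of several conventions for paired designs.
    """
    pooled_sd = sqrt((variance(cond_a) + variance(cond_b)) / 2)
    return (mean(cond_a) - mean(cond_b)) / pooled_sd


# Hypothetical ROI amplitudes (µV) for four participants in two conditions.
cond_a = [1.0, 2.0, 3.0, 4.0]
cond_b = [0.0, 0.0, 1.0, 1.0]
t_value, df = paired_t(cond_a, cond_b)
d_value = cohens_d_av(cond_a, cond_b)
```

A 1-tailed P-value would then be read from the t distribution with `df` degrees of freedom in the predicted direction.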

Frequency-domain analysis: phase

Additionally, the FFT cosine phase spectrum was similarly computed. Phase was considered over the average of only a few medial-occipital channels (POOz, Oz, and OIz), because the phase appeared variable across the scalp (data not shown). Because the reliability of phase depends on the recorded amplitude, phase values averaged across participants at each frequency and channel were weighted by the corresponding response amplitude for each participant. Mean amplitude-weighted phase differences were calculated and compared across paired samples with a circular Hotelling test (van den Brink, 2014).
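One common way to form an amplitude-weighted mean phase is to average unit phase vectors scaled by amplitude and take the angle of the resultant. The sketch below illustrates that idea. It is an assumption about the form of the weighting, not a reproduction of the authors' procedure, and the function name is hypothetical.

```python
import cmath
import math


def weighted_mean_phase(phases_deg, amplitudes):
    """Circular mean of phases (degrees), each weighted by its amplitude."""
    resultant = sum(
        amp * cmath.exp(1j * math.radians(phase))
        for phase, amp in zip(phases_deg, amplitudes)
    )
    # Angle of the summed vector, mapped into the range [0, 360).
    return math.degrees(cmath.phase(resultant)) % 360.0


# Hypothetical example: two participants with equal amplitudes.
mean_phase = weighted_mean_phase([80.0, 100.0], [1.0, 1.0])
```

Working with vectors rather than raw angles avoids the wrap-around problem at 0°/360°, and a participant with a near-zero amplitude contributes almost nothing to the estimate.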

Perceptual differences (Experiments 1 and 2 Combined)

To compare the EEG responses with the individual differences in perceivers’ reported color perception of the dress, that is, blue-black or white-gold, the data from the blue-yellow condition were combined across Experiments 1 and 2. Note that despite the change in viewing distance across the two experiments, the blue-yellow 3-Hz response did not differ across groups in terms of amplitude (0.02 µV mean difference), peak response topography (maximal response at the same 2 channels) or phase (2° mean difference). As 1 participant in the first experiment did not report how they perceived the original dress color, this sample included (14 + 14 − 1 =) 27 different participants. There were 5 white-gold perceivers in each of Experiments 1 and 2, leading to a total of 10 white-gold and 17 blue-black perceivers. Mean perceptual-group data were examined as above for amplitude and phase differences, except with independent-samples tests. Thus independent-samples 1-tailed t-tests were used, given the hypothesis that white-gold participants would have larger blue-yellow summed-harmonic 3-Hz asymmetries than blue-black participants (equal variances were confirmed with Levene's test, P > 0.05). Additionally, independent-samples Watson-Williams tests were applied to investigate amplitude-weighted phase differences, using the CircStat MATLAB toolbox (Berens, 2009).

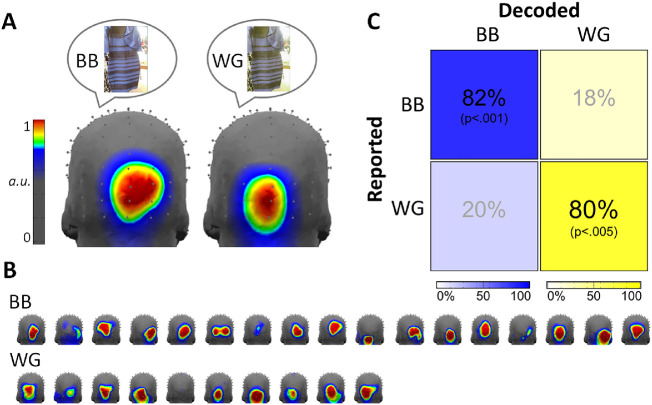

Additionally, summed-harmonic 3-Hz response topographies were explored. First, these topographies were normalized according to the method of McCarthy and Wood (1985) to compensate for amplitude differences across individuals. Then, significant differences across perceptual groups were tested with a leave-one-participant-out decoding analysis (e.g., Poldrack, Halchenko, & Hanson, 2009; Coggan et al., 2016). In this analysis, the data from each participant are left out in turn and compared with the average of the remaining blue-black and white-gold observers at each channel within the occipito-parietal ROI; each participant is assigned the perceptual label of the group giving the higher correlation across channels, i.e., a winner-take-all approach (e.g., Jacques, Retter, & Rossion, 2016). A permutation test (5000 permutations) with Monte Carlo randomization of perceptual group was used to create a null distribution from which a significance score (P-value) was derived. The mean of this null distribution was 53.6% (95% confidence interval [CI]: [53.3%, 54.0%]) for the blue-black observers and 44.9% (95% CI: [44.5%, 45.2%]) for the white-gold observers. In addition, a control decoding analysis was performed identically with the 6-Hz response topographies, which are not frequency-tagged to chromatic asymmetries but still may reflect general individual differences. In this case, the mean of the permutation distribution was 54.5% (95% CI: [54.2%, 54.9%]) for the blue-black observers and 44.1% (95% CI: [43.8%, 44.5%]) for the white-gold observers.
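The leave-one-participant-out, winner-take-all decoding and its permutation test can be sketched as below. This is an illustrative reconstruction from the description above, not the authors' implementation: all function names are hypothetical, the toy topographies are invented, and the `(count + 1) / (n_perm + 1)` convention for the permutation P-value is an assumption. The topographic normalization step is not included; Pearson correlation is in any case unaffected by an overall rescaling of a participant's topography.

```python
import random
from math import sqrt


def pearson(x, y):
    """Pearson correlation across channels (0.0 if either input is flat)."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    return cov / sqrt(vx * vy) if vx > 0 and vy > 0 else 0.0


def channel_mean(topos):
    """Average topography across participants, channel by channel."""
    return [sum(col) / len(col) for col in zip(*topos)]


def loo_decode(topos, labels):
    """Label each left-out participant by the best-correlated group mean."""
    predictions = []
    for i, topo in enumerate(topos):
        scores = {}
        for group in sorted(set(labels)):
            rest = [t for j, (t, lab) in enumerate(zip(topos, labels))
                    if j != i and lab == group]
            scores[group] = pearson(topo, channel_mean(rest))
        predictions.append(max(scores, key=scores.get))  # winner-take-all
    return predictions


def group_accuracy(predictions, labels, group):
    """Proportion of participants in `group` that were labelled correctly."""
    hits = [p == lab for p, lab in zip(predictions, labels) if lab == group]
    return sum(hits) / len(hits)


def permutation_p(topos, labels, group, n_perm=5000, seed=0):
    """P-value of the observed accuracy against shuffled group labels."""
    rng = random.Random(seed)
    observed = group_accuracy(loo_decode(topos, labels), labels, group)
    count = 0
    for _ in range(n_perm):
        shuffled = labels[:]
        rng.shuffle(shuffled)
        null_acc = group_accuracy(loo_decode(topos, shuffled), shuffled, group)
        count += null_acc >= observed
    return (count + 1) / (n_perm + 1)


# Invented 4-channel topographies for 3 "BB" and 3 "WG" participants.
topos = [[1.0, 2.0, 3.0, 4.0], [1.1, 2.2, 2.9, 4.1], [0.9, 1.8, 3.2, 3.9],
         [4.0, 3.0, 2.0, 1.5], [4.2, 2.9, 2.1, 0.8], [3.9, 3.1, 1.8, 1.2]]
labels = ["BB", "BB", "BB", "WG", "WG", "WG"]
predictions = loo_decode(topos, labels)
p_bb = permutation_p(topos, labels, "BB", n_perm=200)
```

Because accuracy is computed separately for each group, the null distribution need not be centered on 50% when the groups differ in size, which is consistent with the unequal null means reported above for the 17 blue-black and 10 white-gold observers.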

Results

Experiment 1: blue-yellow versus green-red responses

To test for an overall blue-yellow asymmetry, we first compared the 3-Hz responses to the blue-yellow alternation vs. the green-red alternation. As noted, these image pairs were matched for their chromatic contrasts along the L-M and S-(L+M) axes, and thus for their effective strength in precortical color mechanisms. As also noted, the difference along each axis might itself lead to an asymmetry (e.g., because both image pairs alternated between +S and –S signals, and there are known differences in the coding of S-cone increments and decrements; Tailby, Solomon, & Lennie, 2008; Dacey, Crook, & Packer, 2014; Wang, Richter, & Eskew, 2014). However, these early-level asymmetries predict identical 3-Hz responses for both image pairs, whereas a stronger asymmetry for blue-yellow would instead implicate a higher-level transformation of the precortical color signals.

As expected, significant asymmetric responses were found at 3 Hz and its harmonics for both the blue-yellow and green-red image pairs, but were stronger for the blue-yellow pair (Figure 2A). Pooled across all 128 channels, the response was significant at 3 Hz and 4 additional unique harmonics, that is, 9, 15, 21, and 27 Hz, in both conditions (all Zs > 2.32, Ps < 0.01). These harmonic responses were baseline-subtracted and summed to create a comprehensive response profile (Retter & Rossion, 2016b). A comparison of the amplitude of the summed-harmonic 3-Hz response, calculated over a 28-channel occipitoparietal ROI (see Methods), revealed a significantly larger response for the blue-yellow (M = 0.44 µV, SE = 0.069 µV) than the green-red condition (M = 0.31 µV, SE = 0.073 µV), t13 = 1.86, d = 0.51, P = 0.043, with a medium effect size (Figure 2B). Indeed, 10 of the 14 observers had a positive difference (blue-yellow > green-red).

Although our experimental hypothesis here targeted response amplitudes, we aimed additionally to exploit the richness of FPVS-EEG data in terms of spatial and temporal information. The phase of the 3-Hz response, showing relative latency differences, was also considered for the 2 conditions (Figure 2D). The mean phase of the blue-yellow response was 237° (SE = 7.73°); for the green-red response it was 29.8° (SE = 12.4°), a highly significant difference, F2,12 = 17.0, P < 0.001. The absolute difference between the phase for the 2 conditions across each participant was on average 202° (SE = 15.3°). This phase difference is somewhat close to that of a 180° phase reversal, which would indicate that differences in latency or amplitude within each stimulus pair were inverted across stimuli in the blue-yellow and green-red conditions (note that the blue and green images were shown first in their respective sequences). This phase inversion could reflect differences in the polarity of the cone contrasts for the 2 image pairs, and thus, as described above, the latency responses to both pairs are consistent with a common (in phase) baseline asymmetry in the response to the S-cone (or L-M cone) contrasts in the images. (In other words, if the latencies in the responses were measured relative to only the S-cone or only the L-M cone modulation in the stimuli, then they would be similar for the blue-yellow and green-red pairs.) By this account, the asymmetrical responses between blue and yellow are superimposed on top of the response differences from any cardinal axis asymmetries common to both the blue-yellow and green-red pairs.

The summed-harmonic 3-Hz response scalp topographies were distributed across the occipitoparietal channels in both the blue-yellow and green-red conditions (Figure 2C, top row). Although in both conditions the maximal responses encompassed the same five channels (Oz, OIz, POO6, O2, and POOz), in the blue-yellow condition the response was maximal over channels Oz and OIz (each 0.76 µV), and in the green-red condition the response was maximal over channels POO6 and O2 (each about 0.53 µV). Given differences in amplitude across conditions, the response topographies were normalized for display. The normalized topographies appeared to show a slightly more dorsal and rightward response topography for the green-red than blue-yellow condition (Figure 2C, bottom row).

Finally, symmetrical 6-Hz responses, reflecting visual responses common to the presentation of both stimuli, were examined as a control, with the prediction that there would be no differences here across conditions. The 6-Hz responses were significant up to 42 Hz in both conditions (Figure 2A). A statistical comparison of the summed-harmonic 6-Hz response amplitude, again calculated over the occipito-parietal ROI, indicated no differences across conditions (blue-yellow: M = 3.32 µV, SE = 0.31 µV; green-red: M = 3.29 µV, SE = 0.37 µV), t13 = 1.96, d = 0.022, P = 0.85 (Figure 2B). The 6-Hz response topographies also appeared to be similar across conditions, with the maximal channel along the occipital midline: first at OIz, followed by Oz, Iz, and POOz in both conditions (Figure 2C). Additionally, the phase of the 6-Hz responses showed no systematic differences between the pairs (blue-yellow: 169° [SE = 5.98°]; green-red: 179° [SE = 8.58°]), F2,14 = 1.74, P = 0.22.

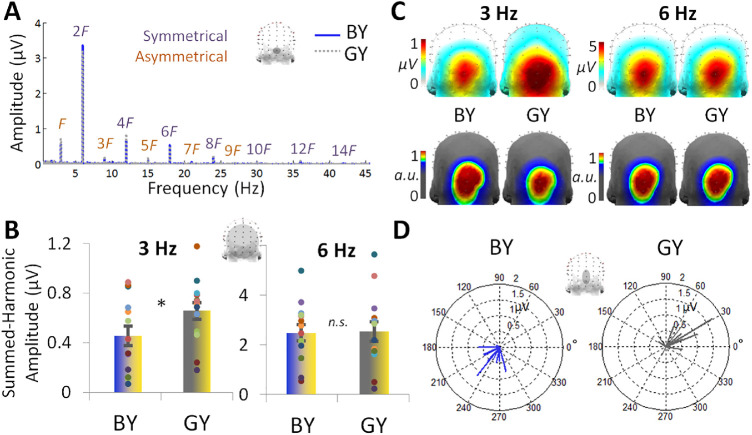

Experiment 2: blue-yellow versus gray-yellow responses

To further assess the basis for the blue-yellow asymmetries, we compared the amplitude of asymmetries when the blue-yellow pair was instead compared to a gray-yellow pair, for which the 2 images differed in actual (as opposed to potentially perceived) chromatic stimulus contrast. The distribution of harmonic responses was similar to that found in the first experiment: asymmetric responses at 3 Hz and its odd harmonics persisted up to 27 Hz in both conditions (Figure 3A). As expected, the amplitudes of the summed-harmonic 3-Hz response over the 28-channel occipito-parietal ROI were significantly lower for the blue-yellow (M = 0.46 µV, SE = 0.079 µV) than gray-yellow condition (M = 0.66 µV, SE = 0.068 µV), t13 = 2.77, d = 0.73, P = 0.008 (Figure 3B), with a medium effect size. The stronger asymmetry for gray than blue is not surprising given that the gray-yellow pair included an actual (physical) chromatic contrast difference in the stimuli. However, this effect is consistent with the conjecture that the stronger asymmetry for blue-yellow than green-red is due to differences in the effective (perceptual) chromatic differences in the stimuli.

Figure 3.

Experiment 2 EEG responses for the blue-yellow (BY) and gray-yellow (GY) pairs (n = 14). (A) As in Figure 2 of Experiment 1, channel OIz is plotted in the baseline-subtracted amplitude frequency domain. (B) Responses were quantified at the occipitoparietal ROI over the labeled harmonic frequencies for each of the asymmetrical 3-Hz and symmetrical 6-Hz responses shown in Part A. Individual data are shown with dots, paired by color. (C) The topographical distributions of the summed-harmonic baseline-subtracted data as shown in Part B, for 3 and 6 Hz (top row). The corresponding normalized topographies are shown below (bottom row). (D) The 3-Hz phase over the average of POOz, Oz, and OIz. Individual participant data are plotted as separate vectors, with the angle representing the cosine phase and the length representing baseline-subtracted amplitude over these channels at 3 Hz.

In terms of temporal information, the mean phase of the 3-Hz response in the blue-yellow condition, 235° (SE = 8.46°), differed from that in the gray-yellow condition, 38.0° (SE = 10.8°), F2,14 = 10.5, P = 0.002 (Figure 3D). The absolute difference in phase across the conditions was 185° (SE = 11.6°). Again, a speculative explanation for these phase-reversal effects is how the stimuli differed in terms of the activation they produced in S-cone and L-M cone pathways (e.g., Derrington et al., 1984; Rabin et al., 1994; see also Goddard et al., 2011). Spatially, the scalp distribution of the 3-Hz responses did not appear to differ considerably across the blue-yellow and gray-yellow conditions (Figure 3C, top row). In both conditions the 2 channels at which the response was maximal were Iz and OIz (about 0.90 µV in the blue-yellow vs. 1.89 µV in the gray-yellow condition). Indeed, despite a more focal gray-yellow response at the group level, the normalized topographies also appeared similar, giving the same five maximal channels in the same order across conditions: Iz, OIz, Oz, POI2, and O2 (Figure 3C, bottom row).

Symmetrical 6-Hz responses, again reflecting the visual responses common to the presentation of both stimuli, were not predicted to differ across conditions. These 6-Hz responses were present in both conditions up to 42 Hz (Figure 3A). The summed-harmonic 6-Hz response amplitudes did not differ between the blue-yellow (M = 2.48 µV, SE = 0.068 µV) and gray-yellow (M = 2.53 µV, SE = 0.40 µV) conditions, t13 = 0.33, d = 0.040, P = 0.75 (Figure 3B). The topographies of these responses were not different across conditions, centered around the midline, with the same maximal three channels: OIz, Oz, and Iz (Figure 3C). Finally, the phase of these responses was also similar across conditions (blue-yellow: 51.5° [SE = 15.4°]; gray-yellow: 121° [SE = 18.7°]), F2,12 = 1.90, P = 0.19.

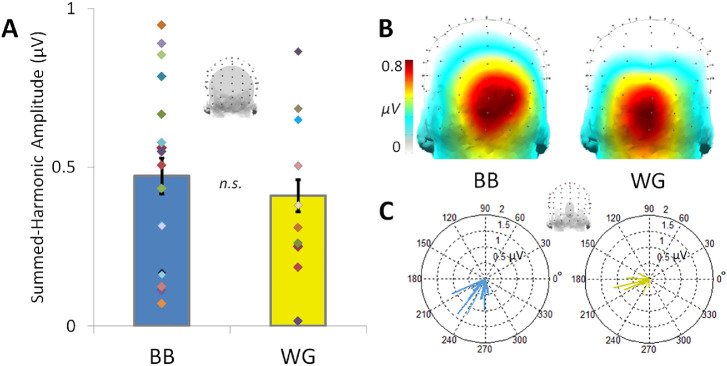

Individual differences in the dress percepts

The preceding results point to clear differences in blue versus yellow responses at the group level. Again, a second aim of our study was to explore individual differences in these asymmetries. In the simplest case, we might expect observers who described the dress as white-gold to exhibit stronger blue-yellow asymmetries than blue-black observers. However, across participants in both Experiments 1 and 2, there were no significant differences in summed-harmonic 3-Hz response amplitude over the occipitoparietal ROI between blue-black (M = 0.47 µV, SE = 0.056 µV) and white-gold perceivers of the original dress (M = 0.41 µV, SE = 0.050 µV), seen in alternation with the hue-inverted yellowish dress, t25 = 0.56, d = 0.22, P = 0.29 (1-tailed), with a small effect size (Figure 4A). This null result in amplitude differences across perceptual groups may have several causes, for example, the large amount of interindividual variability in response amplitude within both perceptual groups, or a lack of statistical power (see the Discussion). However, this finding is also in line with behavioral evidence that the 2 groups show only weak overall differences in their saturation boundaries for blue and yellow (Winkler et al., 2015).

Figure 4.

The blue-yellow summed-harmonic 3-Hz responses across blue-black (BB) and white-gold (WG) observers. (A) The mean response amplitude does not significantly differ across blue-black and white-gold perceivers over the occipito-parietal region-of-interest; individual participant amplitudes, indicated by randomly colored diamonds, occur over a wide range in each group of observers. Error bars indicate ±1 standard error from the mean. (B) Response amplitudes are nevertheless distributed with apparent spatial differences within the occipitoparietal ROI across the perceptual groups (see also Figure 5). (C) The phase of the responses across blue-black and white-gold perceivers. Individual phase values, taken from the small medial-occipital ROI indicated above, are plotted according to cosine phase angle, with the length of each vector determined by the respective amplitude.

Interestingly, there were notable topographical differences across the two groups in terms of the summed-harmonic 3-Hz responses, with the white-gold observers displaying more focal medial occipitoinferior responses (Figure 4B), as would be predicted from dominant input from early cortical visual areas (compare to the 6-Hz visual responses in Figure 2C and 3C; see also Kulikowski et al., 1994; Crognale et al., 2013). Normalized topographies were plotted with a color map scaled to emphasize these group differences (Figure 5A). The differences also appeared reliable across individual observers within the blue-black and white-gold groups (Figure 5B). To statistically test the reliability of the topographical differences across the perceptual groups, we applied a decoding analysis correlating 3-Hz summed-harmonic response amplitudes across electrodes in the occipitoparietal ROI. This revealed a significant correct decoding accuracy of 82.4% (14/17 observers; P < 0.001) for blue-black and 80.0% (8/10 observers; P = 0.003) for white-gold observers (Figure 5C). Despite the focused occipitoparietal responses, this effect was robust: a homologous decoding applied across all 128 channels still gave significant results across the perceptual groups (blue-black accuracy: 76.5%, P = 0.048; white-gold accuracy: 70%, P = 0.025). Finally, a control decoding of perceptual group using the response at 6 Hz, expected to capture individual variance but not reflect perceptual differences, failed to produce results above chance level: only 40.0% (4/10; P = 0.47) of white-gold observers were accurately classified, and 47.1% (8/17; P = 0.32) of blue-black observers.

Figure 5.

(A) Topography of summed-harmonic blue-yellow asymmetry (3 Hz) responses for blue-black (BB) and white-gold (WG) observers. Illustrative dress images are intended to represent perceptual differences; the dress stimuli presented were identical for both groups. Response topographies are normalized across all 128 channels. (B) The corresponding data of each individual observer, sorted by reported perceptual group, plotted to the same scale as in section A. (C) Decoding accuracy of white-gold and blue-black observers based on correlating the spatial distribution of responses over the occipitoparietal region-of-interest with a leave-one-participant-out across-groups approach.

Given our hypothesis that the blue-yellow asymmetries of white-gold observers would more closely match gray-yellow (achromatic-chromatic) asymmetries, and those of blue-black observers would more closely match green-red (chromatic-chromatic) asymmetries, we applied a similar topographical decoding analysis to sort the blue-yellow asymmetries across all 27 observers into gray-yellow or green-red groups. These results matched white-gold observers to gray-yellow with 90.0% accuracy (9/10; P = 0.003) and blue-black observers to green-red with 70.6% accuracy (12/17; P = 0.042). This supports the observation that the white-gold 3-Hz response topography is reminiscent of that of the gray-yellow topography (Figure 3C, for comparison), whereas the blue-black topography is more similar to the green-red topography (Figure 2C, for comparison).

Further, there was a slight difference in phase apparent across perceptual groups at 3 Hz (Figure 4C). The mean phase of the blue-black perceivers was 241° (SE = 5.38°), while that of the white-gold perceivers was 227° (SE = 9.10°); this difference was not significant, F1,26 = 2.67, P = 0.11. The mean difference was only 13.8°. At 3 Hz (a cycle of about 333 ms), this difference most likely corresponds to a 12.8 ms difference between the response latency across perceptual groups, although the present analysis does not allow us to say whether the asymmetry response of white-gold observers precedes that of blue-black observers or the converse.
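The conversion from a phase difference to a latency difference used here follows from the cycle duration: a phase difference of Δφ degrees at frequency f corresponds to (Δφ / 360) × (1000 / f) ms. A minimal sketch, with a hypothetical function name:

```python
def phase_to_latency_ms(phase_diff_deg, freq_hz):
    """Latency difference (ms) implied by a phase difference at freq_hz."""
    cycle_ms = 1000.0 / freq_hz
    return (phase_diff_deg / 360.0) * cycle_ms


# A full 180° phase reversal at 3 Hz spans half of the cycle duration.
half_cycle_ms = phase_to_latency_ms(180.0, 3.0)
```

Because phase is circular, the implied latency is only defined up to a whole number of cycles, which is one reason the direction of a latency difference cannot be read directly from the phase values.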

Discussion

In this study we used high-density FPVS-EEG to examine the neural representation of ambiguous color percepts, focusing on 2 aspects of color ambiguity that have been demonstrated behaviorally. The first is the general within-observer tendency for blues to be perceived as more achromatic than equivalent (complementary) yellows (Winkler et al., 2015). The second is the pronounced between-observer differences in blue percepts revealed by #thedress. Both of these effects appear specific to chromatic variations along the natural daylight locus, because the behavioral differences largely vanish for stimuli varied along other (non-blue-yellow) axes of color space. Moreover, the differences cannot be accounted for by variations in spectral sensitivity, because contrast thresholds for detecting the blue and yellow stimuli are similar (Winkler et al., 2015). Thus the asymmetries are likely to reflect inferences or priors for natural lighting and how these shape higher-order distal percepts of color in terms of illuminants and surfaces, particularly in regard to blue. Our measurements thus point to neural signatures of these higher-order color representations, at stages closer to participants’ subjective experience of color.

Electrophysiological correlates of blue-yellow asymmetries

We first explored neural correlates of perceptual blue-yellow asymmetries, in comparison to those of equivalent chromatic-contrast along a non-daylight axis (green-red) and to an actual physical chromatic contrast difference (gray-yellow). Consistent with percepts observed behaviorally (Winkler et al., 2015), asymmetries in the responses to the blue-yellow alternation were greater than to green-red, but less than to gray-yellow.

The asymmetric (summed-harmonic 3 Hz) response amplitude for blue-yellow was 44% larger than for green-red over occipitoparietal channels, which while only marginally significant (P = 0.043) was a medium effect size (d = 0.51). Note again that these 2 dress image pairs were matched for their component contrasts along the cardinal opponent axes, and thus the differences across conditions are unlikely to be accounted for by the independent signals along the L-M or S-(L+M) axes. As noted, the asymmetries common to both the blue-yellow and green-red pairs could reflect common influences of early color coding, such as the variations that both stimuli presented between S cone increments and decrements (e.g., Tailby, Solomon, & Lennie, 2008; see Wool et al., 2015 for an example with local field potentials). This factor could also account for the phase relationship between the blue-yellow and green-red 3 Hz signals. However, these early factors cannot account for the larger amplitude for the blue-yellow difference.

In turn, this suggests that the representation of color, as indexed by the asymmetry responses, is not governed by the separable cardinal geniculate axes but instead involves a transformation of these axes. Such transformations have been indicated in a variety of previous studies pointing to neural representations that more closely parallel perceptual color metrics (e.g., Brouwer & Heeger, 2009) or to either weaker or stronger neural responses along the non-cardinal blue-yellow axis (e.g., Brouwer & Heeger, 2009; Conway, 2009). Neural correlates of higher-order aspects of color have also been observed in high-density EEG measurements showing that red and blue produced different responses in an attentional selection task (Anllo-Vento, Luck, & Hillyard, 1998). Our results suggest that this transformation is evident within the blue-yellow axis, again presumably as a weakened or differentially attributed response to blue.

The blue-yellow asymmetry was also significantly weaker, with a medium effect size, than the gray-yellow modulation, which again was 44% larger. This difference is not surprising, given the extensive reports of reliable differences when comparing color vs. grayscale stimuli (e.g., Goddard et al., 2011; Goffaux et al., 2005; Zhu, Drewes, & Gegenfurtner, 2013; Lafer-Sousa, Conway, & Kanwisher, 2016). However, this control contrast further supports the possibility that the perceptual differences driving the blue-yellow asymmetries were consistent with the “effective” (perceived) contrast of the patterns. Finally, the fact that all 3 stimulus pairs produced different levels of asymmetry provides support that it was in fact the chromatic differences in the stimuli driving these response differences.

Perceptual differences: white-gold versus blue-black observers

In our sample of 27 participants across the two experiments, 17 participants described the dress as blue-black and 10 as white-gold, in line with an approximate 2:1 ratio of blue-black to white-gold observers (with a large sample size: Lafer-Sousa, Hermann, & Conway, 2015). Analyses of the individual differences in the summed-harmonic 3-Hz blue-yellow response topographies turned out to provide a highly reliable classifier of the individuals’ percepts. These decoding analyses separated blue-black from white-gold observers with over 80% accuracy, significantly above chance (P < 0.005 for both groups). This outcome is in spite of the fact that significant asymmetries in blue-yellow responses were not found in five of the participants, and that amplitude differences between the groups were not manifest in the asymmetrical signals averaged across the occipitoparietal ROI (Figure 4A). A control decoding of observers’ symmetrical (summed-harmonic 6-Hz) responses was not successful (neither group was classified with accuracy above chance-level). Thus the decoding effectively discriminated the observers only for the tagged asymmetry frequency where they would be expected to differ.

The amplitude of the asymmetry response was not diagnostic of white-gold or blue-black perception, perhaps as the result of great inter-individual differences in response amplitude on the scalp, which may be due to physiological factors unrelated to the processes of interest, for example, cortical folding orienting dipole sources and skull thickness (Luck, 2005). However, this null result may be influenced by many factors, including a lack of statistical power. Note that at the traditional chromatic visual evoked potential (VEP) recording site, medial-occipital electrode Oz, amplitude differences were also not found across groups: 0.85 µV (SD = 0.51 µV) blue-black versus 0.78 µV (SD = 0.51 µV) white-gold. Phase differences were also not significant at the group level, and were not reliable for categorizing individual participants (note the overlapping ranges in Figure 4C). The success of our decoding was thus possible only because we used high-density EEG.

Note that the performance of this decoding was determined using a binary split of individuals into either white-gold or blue-black based on their descriptions of the dress, and was thus based on how observers labeled the dress colors and not necessarily how they perceived them. It has been shown that across observers there may be a continuum of saturation percepts of the dress's color (Gegenfurtner et al., 2015; Witzel et al., 2017). Indeed, individual differences in perceived saturation may have contributed to the wide variety of blue-yellow asymmetry amplitudes in both perceptual groups (Figure 5A). Such differences may be influenced by the extent to which individuals interpret the lighting of the dress as direct or indirect, and thus the extent to which they attribute the bluish tint to the surface or illuminant. As such the differences across individuals may reflect relatively high-level visual inferences regarding illumination. It has also been suggested that these differences may reflect differences in the pattern of lighting observers are exposed to: early-rising “larks” versus late-rising “owls” may have different learned illumination priors. By this account late-risers are likely exposed to more artificial, yellower light and thus may tend to more frequently report perceiving the dress (rather than the lighting) as blue (Wallisch, 2017; Lafer-Sousa & Conway, 2017).

Yet, despite the possible graded variation in the dress percepts, the fact that the classifier could discriminate the two groups indicates that the neural signals carried sufficient information about these categorical differences, or that there was at least a strong relation between the percepts and the labels (e.g., so that those who described it as blue by and large saw it as more blue). Categorical effects in perception of the dress have also been reported: approximately 9 of 10 individuals are satisfied by using the terms white-gold or blue-black to describe its colors (Lafer-Sousa, Hermann, & Conway, 2015). Here, we hypothesize that a categorical interpretation of the dress as chromatic (blue) or achromatic (white) may lead the blue-yellow alternation to be processed as either a chromatic-chromatic (perceived blue-yellow) or achromatic-chromatic (perceived white-yellow) asymmetry, which may differentially activate different sets of neural sources (as will be discussed in the following section), leading to the reliable topographical differences across perceptual groups.

One test of this hypothesis was performed through our comparison of white-gold and blue-black observers’ blue-yellow dress asymmetries to the green-red and gray-yellow dress asymmetries. We hypothesized that the topographies of blue-black observers’ blue-yellow asymmetries would resemble those of green-red asymmetries, while the topographies of white-gold observers’ blue-yellow asymmetries would resemble those of gray-yellow asymmetries. A topographical decoding analysis was applied across experiments, which was able to classify 90% of white-gold observers’ blue-yellow asymmetries as more similar to gray-yellow than green-red, and 71% of blue-black observers as more similar to green-red than gray-yellow (both Ps < .05; see Results).

To further test this hypothesis, we would predict that white-gold observers have larger blue-yellow asymmetry amplitudes than blue-black observers, relative to their gray-yellow asymmetry amplitudes in Experiment 2. To follow up on this here, we performed an extra comparison of white-gold observers’ responses, which revealed no significant difference, with a small effect size, between blue-yellow (M = 0.54 µV, SE = 0.126 µV) and gray-yellow asymmetries (M = 0.67 µV, SE = 0.158 µV), t4 = 1.63, d = 0.40, P = 0.18 (2-tailed, paired-sample). Conversely, the blue-black observers had significantly lower blue-yellow (M = 0.41 µV, SE = 0.103 µV) than gray-yellow asymmetries (M = 0.65 µV, SE = 0.208 µV), with a medium effect size, t8 = 2.30, d = 0.92, P = 0.025 (1-tailed, paired-sample), again consistent with a higher effective blue contrast in the blue-black observers. Note however that an interaction between perceptual group (blue-black and white-gold) and condition (blue-yellow and gray-yellow) could not be tested appropriately, due to the small number of participants per perceptual group in the second experiment (9 blue-black and 5 white-gold observers).

The lower accuracy for identifying blue-black observers as closer to green-red responses may be due to differences in the responses to each of these pairs of colors (akin to differences in blue and red EEG responses reported by Anllo-Vento, Luck, & Hillyard, 1998). Differences in population-level responses to different colors may also be predicted by intracranial EEG and imaging studies (e.g., Brouwer & Heeger, 2009; Murphey, Yoshor, & Beauchamp, 2008; Kuriki et al., 2011). Potential sources of these differences are discussed in the following section. Note that since roughly equal proportions of white-gold and blue-black observers were present in the green-red and gray-yellow groups (5/13 vs. 5/14, respectively), green-red and gray-yellow topographical differences were likely not driven by differences across white-gold or blue-black individuals.

Some differences in ocular anatomy and physiology have been reported across blue-black and white-gold observers of the dress (Rabin et al., 2016; Vemuri et al., 2016), and genetic factors have been estimated to account for about a third of the variation in the percept (Mahroo et al., 2017). Additionally, in our data, variability in the asymmetric modulations to green-red stimuli also occurs across observers, but is unlikely to be correlated with the (negligible) differences in the relative perceptual salience of the red and green hues. As noted, one previous study investigating neural markers of perception of the dress found small group-level differences in early-stage cortical processing (Rabin et al., 2016). Here, stimulus sets were balanced for precortical color signals, such that while other early-level processes might contribute to the percepts, they are unlikely to be the primary factor. In contrast, another study reported correlates of perception of the dress with late-stage frontal and parietal “higher cognition” areas, such as those involved in attention or decision making (Schlaffke et al., 2015). While it is possible that these “post-perceptual” factors play a role, our results point to neural traces at stages that are likely both perceptual and high-level.

We attribute our asymmetry responses to perceptual rather than “higher cognition” processing, because they are predominant over inferior occipitoparietal cortical areas associated with visual responses. Moreover, these responses are elicited from participants naïve to the experimental design and without a stimulus-related task, such that there is no incentive for selective modulation of attention to either stimulus or for post-perceptual decision or task-related processes. Finally, we attribute them to high-level perception because the pattern of responses corresponds more closely to the observers’ percepts than to the spectral sensitivities of early color coding.

Potential sources of the EEG response asymmetries to color

Although we cannot target the specific sources of the neural asymmetries reported here, we can hypothesize as to their origins (neural sources may be better identified in future studies with the aid of spatially precise neuroimaging). Cortical responses to color extend from early occipital visual areas along much of the ventral visual cortical stream. However, in terms of perceptual correlates at the population level, several studies have pointed to the importance of more anterior, ventral occipitotemporal cortical (VOTC) regions. These regions were originally implicated by studies of patients in whom damage to them produced achromatopsia or dyschromatopsia, that is, complete or partial loss of color perception (e.g., Verrey, 1888; Meadows, 1974; Jaeger, Krastel, & Braun, 1988; Zeki, 1990; Bouvier & Engel, 2006). The importance of VOTC regions in color coding, particularly the fusiform and lingual gyri, has been further supported by neuroimaging (e.g., Brouwer & Heeger, 2009; Goddard et al., 2011; Lueck et al., 1989; Zeki et al., 1991; Liu & Wandell, 2005; Mullen et al., 2007) and intracerebral EEG studies (Murphey, Yoshor, & Beauchamp, 2008; Allison et al., 1993; see Conway & Tsao, 2009 for single-cell correspondence in macaques).

Asymmetries between color and grayscale images

Given the wealth of previous neuroimaging studies, we expected that achromatic versus chromatic (gray-yellow, and white-gold observers’ blue-yellow) response asymmetries would arise from a network of implicated color-sensitive visual regions, including the occipital lobe, dorsolateral occipital cortex, and areas of the ventral posterior fusiform and lingual gyri (e.g., Goddard et al., 2011; Lueck et al., 1989; Mullen et al., 2007; Sakai et al., 1995; Hadjikhani et al., 1998; Beauchamp et al., 1999). Such areas have also been associated with attention to color, both with neuroimaging (Corbetta et al., 1990; Corbetta et al., 1991) and, of particular relevance here, with EEG source localization (Anllo-Vento, Luck, & Hillyard, 1998).

Here, these asymmetries presented maximally over medial ventral occipital channels (OIz, Iz, then Oz), and were similar to the response to stimulus presentation in general at 6 Hz and its harmonics (occurring maximally at OIz, Oz, then Iz) (see Figs. 3C and 4B). Despite a slightly ventral shift in the response, this correspondence suggests a lack of specialized processing for these summed-harmonic 3-Hz asymmetries. Furthermore, in a previous study of cerebral dyschromatopsia (in this case, lesions primarily to an extensive region of the bilateral VOTC), chromatic VEPs were unaffected at the single recording site Oz (Crognale et al., 2013). This was taken as evidence that, at least at early response components over this channel, the chromatic VEP was characterized by responses from early visual areas. Similarly, macaques with lesions of a higher-level visual region (V4) also showed preserved occipital chromatic VEPs, attributed to responses from early areas (V1 and V2) (although these animals also showed preserved wavelength discrimination abilities; Kulikowski et al., 1994). Taken together, these findings lead us to propose that the achromatic-chromatic asymmetries reported here, maximal over ventral medial occipital channels, are driven by early visual processes.

Blue-yellow versus green-red asymmetries

In contrast, the topography of the green-red asymmetries (and blue-black observers’ blue-yellow asymmetries, to a lesser extent) is maximal over a relatively more dorsal and laterally translated (i.e., rightward) region of the scalp (see Figs. 2C and 4B). In the green-red contrast, the response was maximal over channels POO6, O2, then Oz, whereas in the blue-yellow contrast for blue-black perceivers it was maximal at OIz, Oz, then O2. Both of these summed-harmonic 3-Hz responses, sensitive to the chromatic differences between paired images, are spread more laterally across the occipitoparietal cortex than the summed-harmonic 6-Hz responses to stimulus presentation. Population-level differences in the responses to numerous different colors have been reported with voxel-pattern classification throughout the occipital lobe and ventral visual areas (e.g., Brouwer & Heeger, 2009; Kuriki et al., 2011; Parkes et al., 2009). Supporting the perceptual relevance of such activation, responses in the fusiform gyrus following fMRI-adaptation have been shown to correlate with perceptual color after-images (Sakai et al., 1995; Hadjikhani et al., 1998). Moreover, electrical stimulation in human participants in the posterior fusiform and lateral lingual gyri and dorsolateral occipital cortex evoked color percepts (Allison et al., 1993; Schalk et al., 2017); furthermore, in the fusiform gyrus, the evoked percept matched the color preference of the stimulation site (Murphey, Yoshor, & Beauchamp, 2008). Thus, EEG asymmetries to different color pairs may have their primary sources in the posterior fusiform gyrus, where EEG sources were modeled by Anllo-Vento, Luck, & Hillyard (1998), with additional inputs from the lateral lingual gyrus, dorsolateral occipital cortex, and more general (early) visual areas.

The shift in the maximal response toward the occipitoparietal cortex is in line with an increased contribution of more specialized visual areas. For example, differences in responses to the “unique hues” were reported by Forder et al. (2017) in a late EEG response component including more dorsal channels (P1, Pz, and P2), and an effect of language categories on discriminating light versus dark blue in Greek speakers was reported over occipitoparietal channels (Thierry et al., 2009). In our case, the differences in responses between blue-black and white-gold observers cannot be driven by stimulus attributes (all observers were tested with the same blue-yellow stimulus pair), lending support to chromatic VEPs being capable of reflecting perceptual aspects of color vision, particularly over regions of the occipitoparietal cortex extending beyond the posterior midline and traditional Oz recording site.

Applying the FPVS approach to high-order color perception: strengths and limitations

The present results provide another illustration of the power and sensitivity of the FPVS method for characterizing visual processes (Norcia et al., 2015). First, the blue-yellow comparison replicated extremely well across entirely different groups of 14 participants. In both experiments, its amplitude was close to 0.45 µV (0.44 µV in Experiment 1 and 0.46 µV in Experiment 2). The response was maximal at the same two channels, Oz and OIz, across experiments, and had a similar phase of about 236° (237° in Experiment 1 and 235° in Experiment 2). Furthermore, by using high-density EEG and inspection of amplitude, phase, and topography response attributes, the approach was highly sensitive to differences in the neural responses to our paired images. This demonstrates the use of FPVS-EEG for providing high-order color-selective neural responses. Another advantage of this paradigm is that we were able to obtain these results with short SOAs (167 ms between images; a 333-ms repetition period for each image) and without a stimulus-related task.
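The logic of the asymmetry response can be illustrated with a toy simulation. The sketch below is a simplified illustration and not the analysis pipeline used in this study: it assumes a 600-Hz sampling rate, a 10-s sequence, and a half-sine transient evoked by each image onset, and all names (`simulate`, `amplitude_at`, `FS`, `DURATION_S`) are hypothetical. Only the 6-Hz presentation rate, and hence the 167-ms SOA and the 3-Hz alternation rate, are taken from the text.

```python
import math

FS = 600            # assumed sampling rate (Hz): 100 samples per image at 6 Hz
PRESENT_HZ = 6.0    # image presentation rate, from the text (167-ms SOA)
ALTERNATE_HZ = 3.0  # each image repeats every 333 ms
DURATION_S = 10     # assumed sequence length (a whole number of 3-Hz cycles)


def simulate(amp_a, amp_b):
    """Toy signal: each image onset evokes a half-sine scaled by amp_a or amp_b."""
    per_image = int(FS / PRESENT_HZ)  # samples between successive image onsets
    signal = []
    for i in range(FS * DURATION_S):
        amp = amp_a if (i // per_image) % 2 == 0 else amp_b
        signal.append(amp * math.sin(math.pi * (i % per_image) / per_image))
    return signal


def amplitude_at(signal, freq_hz):
    """Amplitude of the discrete Fourier component at a single frequency."""
    n = len(signal)
    re = sum(x * math.cos(2 * math.pi * freq_hz * i / FS) for i, x in enumerate(signal))
    im = sum(x * math.sin(2 * math.pi * freq_hz * i / FS) for i, x in enumerate(signal))
    return 2 * math.hypot(re, im) / n


for label, (a, b) in {"equal": (0.8, 0.8), "A > B": (1.0, 0.6), "B > A": (0.6, 1.0)}.items():
    sig = simulate(a, b)
    print(f"{label}: 3 Hz = {amplitude_at(sig, ALTERNATE_HZ):.3f}, "
          f"6 Hz = {amplitude_at(sig, PRESENT_HZ):.3f}")
```

In this toy model, a 3-Hz component appears only when the responses to the two images differ, and its amplitude equals half the difference between the two response amplitudes, whereas the 6-Hz component tracks their mean. Swapping which image evokes the larger response leaves the 3-Hz amplitude unchanged.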

With our paradigm, a limitation was imposed by the presentation of stimuli in alternation at 6 Hz: we were not able to dissociate clearly the responses to each image within a testing sequence. Thus, we cannot say with certainty in which direction the response amplitudes or latencies differed between blue and yellow, or the direction of this difference across blue-black or white-gold observers. Additionally, we chose not to use a current-source density (CSD) transform of our data in investigating the topographic responses. While CSD is reference-free and accounts for volume conduction contributions, it is less sensitive to electrophysiological sources deeper in the brain, and less reliable for electrodes at the border of the montage (Luck, 2005); however, the effect of CSD transformation on the topographies of the blue-black and white-gold participants’ blue-yellow asymmetry responses was checked, and it did not appreciably change the results (data not shown). Finally, a potential confound of our paradigm is that most observers are now highly familiar with the original image of the dress, so that the blue-yellow pairing modulated familiarity while both versions of the green-red pair were novel. However, the finding that the 3-Hz responses were strongest for the gray-yellow pair (again both novel) argues against this account.

A robust neural correlate for blue-yellow differences both within and across observers opens opportunities for exploring how these perceptual asymmetries emerge. As we have noted, the special ambiguity of blues may reflect experience with light and shading in the natural environment. Developmental studies suggest that infants can begin to disambiguate shadows by the age of about 7 months (e.g., Granrud, Yonas, & Opland, 1985; Sato, Kanazawa, & Yamaguchi, 2016). However, little is known about how children learn the correlations between color and shading, how long this learning takes, and how it might influence their color percepts. The paradigms we devised could be readily extended to track this development and potentially reveal how infants might experience the colors in #thedress. More generally, our results suggest that EEG responses to color, particularly those situated away from the occipital midline, can reflect perceptual experiences of color, and thus are not attributable solely to early-stage chromatic processing. They therefore underline the possibility of exploring perceptually relevant system-level responses to color in the human brain.

Acknowledgments

This work was supported by grants from the Belgian National Fund for Scientific Research (FNRS; FC7159 to TR), and the National Institutes of Health (NIH; EY10834, P20 GM103650 to MW, and EY023268 to FJ). We thank Andrea Conte and Bruno Rossion for development and access to the stimulation program (XPMan, revision 111) and Corentin Jacques for help with the decoding analysis.

Commercial relationships: none.

Corresponding author: Talia L. Retter.

Email: talia.retter@uclouvain.be.

Address: Department of Psychology, Center for Integrative Neuroscience, University of Nevada, Reno, NV, USA.

References

- Ales J. M., & Norcia A. M. (2009). Assessing direction-specific adaptation using the steady-state visual evoked potential: Results from EEG source imaging. Journal of Vision, 9(7): 8, 1–13.

- Allison T., Begleiter A., McCarthy G., Roessler E., Nobre A. C., & Spencer D. D. (1993). Electrophysiological studies of color processing in human visual cortex. Electroencephalography and Clinical Neurophysiology, 88(5), 343–355.

- Anllo-Vento L., Luck S. J., & Hillyard S. A. (1998). Spatio-temporal dynamics of attention to color: evidence from human electrophysiology. Human Brain Mapping, 6(4), 216–238.

- Arend L., & Reeves A. (1986). Simultaneous color constancy. Journal of the Optical Society of America A, 3, 1743–1751.

- Beauchamp M. S., Haxby J. V., Jennings J. E., & DeYoe E. A. (1999). An fMRI version of the Farnsworth-Munsell 100-Hue test reveals multiple color-selective areas in human ventral occipitotemporal cortex. Cerebral Cortex, 9(3), 257–263.

- Berens P. (2009). CircStat: A MATLAB toolbox for circular statistics. Journal of Statistical Software, 31, 1–21.

- Bouvier S. E., & Engel S. A. (2006). Behavioral deficits and cortical damage loci in cerebral achromatopsia. Cerebral Cortex, 16, 183–191.

- Brainard D. H., & Wandell B. A. (1992). Asymmetric color matching: How color appearance depends on the illuminant. Journal of the Optical Society of America A, 9, 1433–1448.

- Brainard D. H., & Hurlbert A. C. (2015). Colour vision: Understanding #TheDress. Current Biology, 25, R551–R554.

- Brouwer G. J., & Heeger D. J. (2009). Decoding and reconstructing color from responses in human visual cortex. Journal of Neuroscience, 29(44), 13992–14003.

- Chetverikov A., & Ivanchei I. (2016). Seeing “the dress” in the right light: perceived colors and inferred light sources. Perception, 45(8), 910–930.

- Churma M. E. (1994). Blue shadows: physical, physiological, and psychological causes. Applied Optics, 33, 4719–4722.

- Coggan D. C., Liu W., Baker D. H., & Andrews T. J. (2016). Category-selective patterns of neural response in the ventral visual pathway in the absence of categorical information. NeuroImage, 135, 107–114.