In this In Practice report, we describe a novel educational resource using online patient simulations: the electronic Clinical Reasoning Educational Simulation Tool (eCREST). eCREST seeks to improve the quality of diagnoses from common respiratory symptoms seen in primary care by focusing on developing clinical reasoning skills. It has recently been tested with final-year medical students in three UK medical schools. In response to interest, we are exploring extending the use of eCREST to other medical schools in the UK and internationally and to other professional groups, and we will conduct further evaluation.

Background

The idea for eCREST arose following research using online patient simulations assessing how physicians make decisions about whether to investigate for cancer. This research found that general practitioners (GPs) made appropriate decisions when they had the relevant information they needed (ie, including common, non-specific symptoms that were not initially volunteered by patients). In cases where they did not have essential information, they were less likely to investigate for possible cancer. In 40% of cases, however, GPs did not elicit this essential information.1 If these patterns are seen in clinical practice, they could lead to delays in diagnosis of cancer.

To reduce diagnostic delays, the Institute of Medicine, among others, recommends that the teaching of clinical reasoning should start in medical school, to equip future doctors with the skills necessary to elicit essential information.2 Clinical reasoning can be broadly defined as the thought processes required to apply clinical knowledge to seek information, identify likely diagnoses and reach clinical decisions. Clinical reasoning teaching in medical schools often relies on exposure to real patients, for example during clinical placements.3 There are several logistical and educational reasons to introduce online patient simulation as an adjunct to face-to-face patient contact. Organising learning with real patients is time- and resource-intensive, which may restrict provision of clinical reasoning teaching. In addition, the range of cases that students encounter during clinical placements is unpredictable, the quality of supervision and feedback may vary, and in a real consultation there is limited time for students to reflect adequately.3

We, therefore, set out to develop an online patient simulation resource for medical students to teach clinical reasoning. The resource, targeted at final-year medical students in UK medical schools, was co-developed with doctors-in-training, medical students, medical educators and experts in diagnostics, respiratory health, primary care and cancer.

A description of the eCREST online patient simulation resource

eCREST’s simulations seek to support an experience comparable to real clinical consultations. Patient cases were designed by clinicians (GP registrars) with input from clinical experts. They are typical of respiratory cases seen in primary care in which symptoms are vague and the diagnosis is unclear. ‘Patient’ videos were produced using actors, with input on the design from patients to enhance their authenticity. Just as in real consultations, students do not receive a score, nor does the feedback provide a ‘correct’ diagnosis. Instead, students receive video feedback, tailored to their responses, presented by GP trainers or registrars. These professionals describe the thought processes they used to decide on likely and important diagnoses for each case. A key feature of eCREST that distinguishes its simulated cases from clinical cases is the interruption of simulated consultations with prompts to the student to review possible diagnoses and reflect on what influenced their decisions. By prompting students to reflect further on their decisions, eCREST targets the thought processes involved in clinical reasoning. It helps to mitigate the effects of three cognitive biases relevant to diagnostic errors: confirmation bias, the tendency to seek information to confirm a hypothesis rather than refute it; anchoring, the tendency to stick to an initial hypothesis despite new contradictory information; and the unpacking principle, the failure to elicit necessary information to make an informed judgement.4

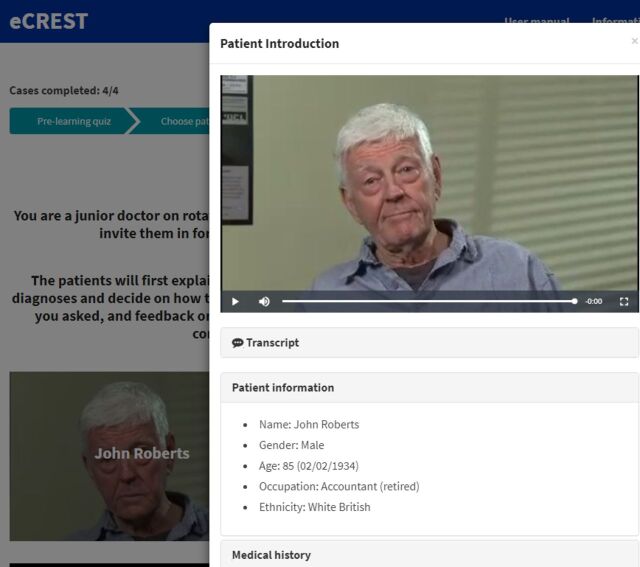

As shown in figure 1, in eCREST, the student acts as a junior doctor in primary care. Students begin a patient case by watching a short video of a patient describing their problem. They gather data from the patient by selecting questions, to which there is a video response from the patient. For each case, 30–40 questions are available, with no limit to the number of questions students can ask. They can also access the patient’s health record and select up to eight results from a range of physical examinations and bedside tests, displayed as text. eCREST regularly prompts students to review their diagnosis. They can change their differential diagnosis by adding, removing or re-ordering their diagnoses, and they must explain why they chose to change their diagnoses. At the end of each case, students are asked to list their final diagnoses and explain why their choices changed, or not, throughout the consultation. They then choose how to manage their patient by selecting from a list of further tests and follow-up options.

Figure 1. Screen grab of eCREST ‘waiting room’.
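The rules of a simulated consultation described above can be summarised in a short sketch. This is an illustration only and not eCREST's actual implementation: the class name, method names and placeholder labels are all hypothetical, and only the three rules it encodes (no limit on questions, at most eight examination or test results, and an explanation required whenever the differential diagnosis changes) come from the description.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

# From the description: students may select up to eight results.
MAX_RESULTS = 8


@dataclass
class Consultation:
    """Hypothetical model of one student's pass through a simulated case."""

    questions_asked: List[str] = field(default_factory=list)
    results_viewed: List[str] = field(default_factory=list)
    differential: List[str] = field(default_factory=list)
    # Each revision stores the new ordered differential and the student's reason.
    revisions: List[Tuple[List[str], str]] = field(default_factory=list)

    def ask(self, question: str) -> None:
        # No cap here: the description places no limit on questions asked.
        self.questions_asked.append(question)

    def view_result(self, name: str) -> None:
        # Enforce the stated limit on examination and bedside test results.
        if len(self.results_viewed) >= MAX_RESULTS:
            raise ValueError("no more than eight results may be selected")
        self.results_viewed.append(name)

    def revise_differential(self, new_order: List[str], reason: str) -> None:
        # Adding, removing or re-ordering diagnoses must come with a reason.
        if new_order != self.differential and not reason.strip():
            raise ValueError("a changed differential needs an explanation")
        self.revisions.append((list(new_order), reason))
        self.differential = list(new_order)


# Example with placeholder labels (not taken from any eCREST case).
session = Consultation()
session.ask("placeholder question")
session.revise_differential(["diagnosis A", "diagnosis B"], "first impression")
session.revise_differential(["diagnosis B", "diagnosis A"], "new information")
```

Keeping every revision alongside its stated reason mirrors the end-of-case step, in which students explain why their choices changed, or not, during the consultation.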

Evaluation and next steps

Three UK medical schools have recently taken part in a feasibility randomised controlled trial to assess acceptability to students and to inform a trial of the effectiveness of eCREST.5 In the trial, eCREST was offered before or during clinical placements in primary care. Analysis is underway and feedback from students was very positive, suggesting eCREST influenced their data gathering approach and decision-making processes.

We have received interest in using or testing eCREST from other medical schools in the UK and internationally and from other student groups, namely physician associates and GPs in training. In response, we joined the EDUCATE programme for promising educational technology projects to develop opportunities for adoption and testing in more medical schools and with other student groups. We are now seeking to explore collaboration opportunities with medical schools or other organisations interested in using eCREST or in exchanging learning with others addressing similar questions in educational research or practice.

Acknowledgments

We are grateful for the excellent work of eCREST’s web developers, Silver District, and to Dr Sarah Bennett, Senior Clinical Teaching Fellow, Admissions Tutor for MBBS and Deputy Academic Lead for MBBS Year 6 Curriculum and Assessment at UCL, for her invaluable advice on the design of eCREST.

Footnotes

P and RP contributed equally.

Contributors: APK led the codevelopment of eCREST, managing site and content development. RP conducted evidence reviews to inform development design and content, and contributed to all elements of the development process. PS, NK, SM and JH devised the online patient simulated cases. SB and CV advised on the initial design of eCREST and how to maximise its value to medical students, commented on versions of eCREST during its development and facilitated recruitment of students at UCL. JS had the initial idea for the study, secured funding for it as the PI and oversaw aspects of the study. RP and JS produced the initial draft of the manuscript. All authors commented on drafts of the manuscript and agreed the decision to submit for publication.

Funding: This report presents independent research commissioned and funded by the National Institute for Health Research (NIHR) Policy Research Programme, conducted through the Policy Research Unit in Cancer Awareness, Screening and early Diagnosis, PR-PRU-1217-21601. JS is supported by the National NIHR Collaboration for Leadership in Applied Health Research and Care North Thames at Barts Health NHS Trust. The views expressed are those of the authors and not necessarily those of the NIHR, the Department of Health and Social Care or its arm’s length bodies, or other Government Departments. RP was funded by The Health Foundation Improvement Science PhD Studentship.

Competing interests: None declared.

Ethics approval: University ethics approval for the feasibility RCT was obtained from all participating medical schools: UCL Research Ethics Committee, ref: 9605/001 31st October 2016; Institute of Health Sciences Education review committee at Barts and The London medical school, ref: IHSEPRC-41 31st January 2017; the Faculty of Medicine and Health Sciences Research Ethics Committee at Norwich medical school University of East Anglia, ref: 2016/2017 – 99 21st October 2017.

Provenance and peer review: Not commissioned; internally peer reviewed.

Correction notice: This article has been corrected since it was published online first. The article is now open access with CC BY-NC license badge.

References

- 1. Sheringham J, Sequeira R, Myles J, et al. Variations in GPs' decisions to investigate suspected lung cancer: a factorial experiment using multimedia vignettes. BMJ Qual Saf 2017;26:449–59. doi:10.1136/bmjqs-2016-005679

- 2. Institute of Medicine. Improving diagnosis in health care. In: Balogh EP, Miller BT, Ball JR eds. Washington, DC: The National Academies Press, 2015:472.

- 3. Schmidt HG, Mamede S. How to improve the teaching of clinical reasoning: a narrative review and a proposal. Med Educ 2015;49:961–73. doi:10.1111/medu.12775

- 4. Norman GR, Eva KW. Diagnostic error and clinical reasoning. Med Educ 2010;44:94–100. doi:10.1111/j.1365-2923.2009.03507.x

- 5. Sheringham J, Kassianos A, Plackett R. eCREST feasibility trial: A protocol. 2019. https://www.ucl.ac.uk/dahr/research-pages/gp_study (cited 3 Apr 2019).