Abstract

Single Particle Tracking (SPT) is a powerful class of tools for analyzing the dynamics of individual biological macromolecules moving inside living cells. The acquired data typically take the form of a sequence of camera images that are then post-processed to reveal details about the motion. In this work, we develop an algorithm for jointly estimating both the particle trajectory and the motion model parameters from the data. Our approach uses Expectation Maximization (EM) combined with an Unscented Kalman filter (UKF) and an Unscented Rauch-Tung-Striebel smoother (URTSS), allowing us to use an accurate, nonlinear model of the observations acquired by the camera. Due to the shot noise characteristics of the photon generation process, this model uses a Poisson distribution to capture the measurement noise inherent in imaging. In order to apply a UKF, we must first transform the measurements into a model with additive Gaussian noise. We consider two approaches, one based on variance-stabilizing transformations (where we compare the Anscombe and Freeman-Tukey transforms) and one based on a Gaussian approximation to the Poisson distribution. Through simulations, we demonstrate the efficacy of the approach and explore the differences among these measurement transformations.

I. INTRODUCTION

Single particle tracking (SPT) is an important class of techniques for studying the motion of single biological macromolecules. With its ability to localize particles with an accuracy far below the diffraction limit of light and to follow them over time, SPT continues to be an invaluable tool in understanding biology at the nanometer scale. Under the standard approach, the images are post-processed individually to determine the location of each particle in the frame, and these positions are then linked across frames to create a trajectory [1]. This trajectory is then further analyzed, typically by fitting the Mean Square Displacement (MSD) curve to an appropriate motion model to determine parameters such as diffusion coefficients. Regardless of the algorithms used, this paradigm separates trajectory estimation from model parameter identification, even though the two problems are clearly coupled. In addition, the techniques for model parameter estimation assume a simple linear observation of the true particle position corrupted by additive white Gaussian noise. The actual data, however, are intensity measurements from a CCD camera. These measurements are well modeled as Poisson-distributed random variables with a rate that depends on the true location of the particle as well as on experimental realities, including background noise and details of the optics used in the instrument. This already nonlinear model becomes even more complicated at the low signal intensities common to SPT data, where noise models specific to the type of camera being used become important [2], [3].

To handle such nonlinearities, one of the authors previously introduced an approach based on nonlinear system identification that uses Expectation Maximization (EM) combined with particle filtering and smoothing [4]. This general approach can handle nearly arbitrary nonlinearities in both the motion and observation models and has been shown to perform as well as current state-of-the-art methods in the simple setting of 2-D diffusion. However, a major drawback of this approach is the computational cost of the particle filtering scheme. In this paper we address this issue by replacing the particle-based methods with an Unscented Kalman filter (UKF) and an Unscented Rauch-Tung-Striebel smoother (URTSS) [5], [6]. This sigma-point-based EM scheme, which we term Unscented EM (U-EM), is significantly cheaper to implement, allowing it to be applied to larger data sets and to more complicated models. This reduction in complexity comes, of course, at the cost of generality in the posterior distribution describing the position of the particle at each time point, since the UKF-URTSS approximates this distribution as a Gaussian while the particle-based approaches can represent other distributions [6].

One of the challenges in applying the UKF is that it assumes Gaussian noise in both the state update and measurement equations. In this work we restrict attention to diffusion to keep the discussion concrete. As the corresponding dynamic model is linear with additive Gaussian noise, applying the UKF to the state update equations is straightforward. The observation model discussed above, however, involves Poisson-distributed noise whose parameters depend upon the state and experimental settings. Thus, to apply the UKF, the model must be transformed into one where the measurement noise is Gaussian instead of Poisson. Two approaches are considered. The first is a variance-stabilizing transformation, such as the Anscombe or Freeman-Tukey transform, that yields a measurement model with additive Gaussian noise of unit variance (both transforms are used here). The second is a direct replacement of the Poisson distribution by a Gaussian whose mean and variance both equal the rate of the original distribution.

The remainder of this paper is organized as follows. In Sec. II, we describe the SPT application and formulate the problem, introducing the motion and observation models used. This is followed in Sec. III by a description of the general U-EM technique. In Sec. IV we use simulations to demonstrate the efficacy of our approach and to investigate the effect of the choice of transformation of the observation model under different experimental settings. Brief concluding remarks are provided in Sec. V.

II. PROBLEM FORMULATION

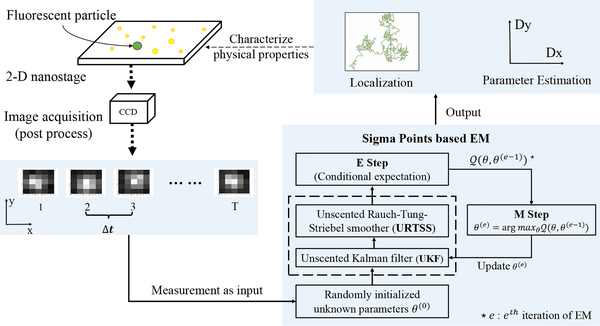

The outline of our scheme is shown in Fig. 1. The left side of the figure represents the experimental techniques for acquiring data in which a particle of interest is labeled with a fluorescent tag (such as a fluorescent protein or quantum dot) and imaged through an optical microscope using a CCD camera. The image frames are then segmented to isolate individual particles. These segmented frames are then the input to the U-EM algorithm. In the remainder of this section we describe the motion and observation models used.

Fig. 1.

Generic framework for SPT using sigma-point-based EM

A. Motion Model

For concreteness and simplicity of presentation, we focus on anisotropic diffusion in 2-D, though the extension to 3-D or to other common motion models (including directed motion, where the labeled particle is carried by the machinery of the cell, Ornstein-Uhlenbeck motion, which captures tethered motion, or confined diffusion) is straightforward. The model of anisotropic diffusion is

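$$X_{t+1} = X_t + w_t, \qquad w_t \sim \mathcal{N}(0, Q), \tag{1}$$

where $X_t = [x_t,\ y_t]^T$ represents the location of the particle in the lateral plane at time t and Q is a covariance matrix given by

$$Q = \begin{bmatrix} 2D_x\,\Delta t & 0 \\ 0 & 2D_y\,\Delta t \end{bmatrix}. \tag{2}$$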

Here Dx and Dy are the diffusion coefficients along the x and y axes, respectively, and Δt is the time between frames of the image sequence.
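To make (1)–(2) concrete, the following minimal pure-Python sketch simulates the anisotropic random walk. It is our own illustration, not the authors' code; the function name `simulate_diffusion` is hypothetical, and we assume positions in μm, Dx and Dy in μm²/s, and Δt in s. With the Table I values (Dx = 0.005 μm²/s, Dy = 0.01 μm²/s, Δt = 100 ms), the per-frame step standard deviations are √(2DxΔt) ≈ 31.6 nm and √(2DyΔt) ≈ 44.7 nm.

```python
import math
import random


def simulate_diffusion(T, Dx, Dy, dt, x0=(0.0, 0.0), seed=None):
    """Simulate X_{t+1} = X_t + w_t with w_t ~ N(0, diag(2*Dx*dt, 2*Dy*dt)).

    Positions in micrometres, Dx and Dy in um^2/s, dt in seconds.
    Returns a list of T + 1 (x, y) tuples starting at x0.
    """
    rng = random.Random(seed)
    sx = math.sqrt(2.0 * Dx * dt)  # std of the x step (square root of Q[0][0])
    sy = math.sqrt(2.0 * Dy * dt)  # std of the y step (square root of Q[1][1])
    traj = [tuple(x0)]
    for _ in range(T):
        x, y = traj[-1]
        traj.append((x + rng.gauss(0.0, sx), y + rng.gauss(0.0, sy)))
    return traj


# Example: one 100-frame trajectory with the Table I settings.
track = simulate_diffusion(T=100, Dx=0.005, Dy=0.01, dt=0.1, seed=0)
```

Because Q is diagonal, the x and y steps are drawn independently, which is what makes the two diffusion coefficients separately identifiable.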

B. Observation Model

Because the single particle is smaller than the diffraction limit of light, the image on the camera is described by the point spread function (PSF) of the instrument. In 2-D (and in the focal plane of the objective lens), the PSF is well approximated by

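$$I(x, y; X_t) = \exp\left(-\frac{(x - x_t)^2}{2\sigma_x^2} - \frac{(y - y_t)^2}{2\sigma_y^2}\right), \tag{3}$$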

where σx and σy are given by

$$\sigma_x = \sigma_y = 0.21\,\frac{\lambda}{\mathrm{NA}}. \tag{4}$$

Here λ is the wavelength of the emitted light and NA is the numerical aperture of the objective lens being used [7]. This PSF is then imaged by the CCD camera.

Assuming segmentation has already been done (which is a standard pre-processing step), the image acquired by the camera is composed of P pixels arranged into a square array. The pixel size is Δx by Δy, with the actual dimensions determined both by the physical size of the CCD elements on the camera and by the magnification of the optical system. At time step t, the expected photon intensity measured for the pth pixel is then

$$\lambda_{p,t} = \frac{G}{\Delta x\,\Delta y}\int_{x_p^-}^{x_p^+}\int_{y_p^-}^{y_p^+} I(x, y; X_t)\,dy\,dx,$$

where I(x, y; Xt) is the PSF (3) centered at the particle location Xt, G denotes the peak intensity of the fluorescence, and the integration bounds $[x_p^-, x_p^+] \times [y_p^-, y_p^+]$ cover the given pixel.
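As a hedged sketch of the pixel integral, the Python below uses the fact that a separable Gaussian PSF integrates in closed form with the error function. The function names are hypothetical, and two choices are our assumptions rather than statements from the text: the integral is divided by the pixel area ΔxΔy, so that a pixel sitting at the PSF peak collects roughly G counts, and σx = σy = 0.21 λ/NA (about 94.5 nm for λ = 540 nm and NA = 1.2), an approximation we take from [7]. Under these assumptions a particle centred on a 100 nm pixel places about 0.91 G in that pixel.

```python
import math


def gauss_integral(a, b, mu, sigma):
    """Closed form of the integral of exp(-(x - mu)^2 / (2 sigma^2)) from a to b."""
    s = sigma * math.sqrt(2.0)
    return sigma * math.sqrt(math.pi / 2.0) * (math.erf((b - mu) / s) - math.erf((a - mu) / s))


def pixel_rates(pos, G, sigma_x, sigma_y, dx, dy, npix=5):
    """Expected signal counts for an npix-by-npix image, row-major with y indexing rows.

    pos is the particle location (x_t, y_t) measured from the outer corner of pixel 0.
    The separable Gaussian PSF is integrated over each pixel and divided by the
    pixel area (an assumed normalization), so a pixel at the PSF peak gets about G.
    """
    x0, y0 = pos
    ix = [gauss_integral(i * dx, (i + 1) * dx, x0, sigma_x) for i in range(npix)]
    iy = [gauss_integral(j * dy, (j + 1) * dy, y0, sigma_y) for j in range(npix)]
    return [G * iy[j] * ix[i] / (dx * dy) for j in range(npix) for i in range(npix)]


# Assumed PSF width in micrometres: sigma = 0.21 * lambda / NA.
sigma = 0.21 * 0.540 / 1.2
rates = pixel_rates((0.25, 0.25), G=100.0, sigma_x=sigma, sigma_y=sigma, dx=0.1, dy=0.1)
```

Computing the integral with `erf` rather than sampling the PSF at pixel centres keeps the rates exact for any sub-pixel position, which matters when the UKF evaluates the model at sigma points that fall between pixel centres.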

In addition to the signal, there is always a background intensity rate arising from out-of-focus fluorescence and autofluorescence in the sample. This is typically modeled as a uniform rate Nbgd [4]. Combining these signals and accounting for the shot noise nature of the photon generation process, the measured intensity in the pth pixel at time t is

$$y_{p,t} \sim \mathrm{Poiss}\left(\lambda_{p,t} + N_{\mathrm{bgd}}\right), \tag{5}$$

where Poiss(·) represents a Poisson distribution.
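Frames like the simulated images of Sec. IV can be drawn from this Poisson model. Python's standard library has no Poisson generator, so this sketch uses Knuth's multiplication method (our choice, adequate for the modest rates found in SPT images). The names `poisson_sample` and `sample_frame` are hypothetical, and the per-pixel signal rates λp,t are taken as given inputs, however they were computed.

```python
import math
import random


def poisson_sample(rate, rng):
    """Draw one Poisson(rate) variate with Knuth's multiplication method.

    Adequate for the modest per-pixel rates in SPT images; exp(-rate)
    underflows to 0.0 above roughly 745, so use another method there.
    """
    limit = math.exp(-rate)
    k, prod = 0, rng.random()
    while prod > limit:
        k += 1
        prod *= rng.random()
    return k


def sample_frame(signal_rates, n_bgd, rng):
    """Noisy pixel counts: each pixel is Poisson with rate (signal rate + N_bgd)."""
    return [poisson_sample(lam + n_bgd, rng) for lam in signal_rates]


# Example: a 5x5 frame with background 10 and one bright pixel (toy signal rates).
rng = random.Random(0)
frame = sample_frame([0.0] * 24 + [90.0], n_bgd=10.0, rng=rng)
```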

C. Measurement Model Transformation

The UKF is developed with an assumption of Gaussian-distributed noise [6]. We therefore need to transform the Poisson distributed model in (5) into an appropriate form. We consider three possibilities.

Direct Gaussian Approximation

For a sufficiently high rate, a Poisson distribution of rate λ is well approximated by a Gaussian whose mean and variance both equal that rate [8]. One approach, then, is to replace (5) with

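$$y_{p,t} = \lambda_{p,t} + N_{\mathrm{bgd}} + v_{p,t}, \qquad v_{p,t} \sim \mathcal{N}\left(0,\ \lambda_{p,t} + N_{\mathrm{bgd}}\right). \tag{6}$$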

This approach requires no modification to the measured data. However, the noise term vp,t itself depends upon the state, since its variance is set by the rate λp,t, which is a function of Xt.

Anscombe Transformation

The Anscombe transformation is a variance-stabilizing transformation that (approximately) converts a Poisson-distributed random variable into a unit variance Gaussian one [9]. Under this approach, the measurements are first transformed by

$$z_{p,t} = 2\sqrt{y_{p,t} + \tfrac{3}{8}}. \tag{7}$$

The measurement model (5) is then replaced by

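$$z_{p,t} = 2\sqrt{\lambda_{p,t} + N_{\mathrm{bgd}} + \tfrac{3}{8}} + v_{p,t}, \qquad v_{p,t} \sim \mathcal{N}(0, 1). \tag{8}$$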

Freeman Tukey Transformation

An alternative variance-stabilizing transform is the Freeman-Tukey transform [10]. Under this approach, the measurements are first transformed by

$$z_{p,t} = \sqrt{y_{p,t}} + \sqrt{y_{p,t} + 1}, \tag{9}$$

and the measurement model is replaced by

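$$z_{p,t} = \sqrt{\lambda_{p,t} + N_{\mathrm{bgd}}} + \sqrt{\lambda_{p,t} + N_{\mathrm{bgd}} + 1} + v_{p,t}, \qquad v_{p,t} \sim \mathcal{N}(0, 1). \tag{10}$$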
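The three options can be compared numerically. The sketch below computes the exact mean and variance of g(Y) for Y ∼ Poisson(rate) by summing the probability mass function, for g the identity (what the direct Gaussian approximation works with), the Anscombe transform (7), and the Freeman-Tukey transform (9). It is an illustrative check we added, not part of the method; the function names are hypothetical. Running it shows how close to one each transformed variance is at rates comparable to the low-signal settings of Sec. IV (for instance, a rate of 7 counts) and at high rates.

```python
import math


def poisson_moments(g, rate, tail=20.0):
    """Mean and variance of g(Y) for Y ~ Poisson(rate), with rate > 0.

    Sums the pmf directly (computed in log space) out to
    rate + tail*sqrt(rate) + tail; the neglected mass is negligible here.
    """
    kmax = int(rate + tail * math.sqrt(rate) + tail)
    pmf = [math.exp(k * math.log(rate) - rate - math.lgamma(k + 1)) for k in range(kmax + 1)]
    mean = sum(p * g(k) for k, p in enumerate(pmf))
    var = sum(p * (g(k) - mean) ** 2 for k, p in enumerate(pmf))
    return mean, var


def raw(y):            # untransformed counts, as in the direct Gaussian approximation
    return float(y)


def anscombe(y):       # Anscombe transform (7)
    return 2.0 * math.sqrt(y + 3.0 / 8.0)


def freeman_tukey(y):  # Freeman-Tukey transform (9)
    return math.sqrt(y) + math.sqrt(y + 1.0)


for rate in (1.0, 7.0, 50.0):
    for name, g in (("raw", raw), ("Anscombe", anscombe), ("Freeman-Tukey", freeman_tukey)):
        m, v = poisson_moments(g, rate)
        print(f"rate={rate:5.1f}  {name:13s}  mean={m:8.4f}  var={v:7.4f}")
```

For the raw counts the variance tracks the rate, which is why (6) needs a state-dependent noise variance, whereas the two transforms aim for a variance near one.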

III. UNSCENTED EXPECTATION MAXIMIZATION

In this section we describe the U-EM approach which consists of the expectation maximization algorithm for finding an (approximate) maximum likelihood estimate of the parameters together with the UKF and URTSS for estimating the smoothed distribution of the latent variable (the trajectory of the particle in the SPT application).

A. Parameter Estimation via Expectation Maximization

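Consider the problem of identifying an unknown parameter θ for the nonlinear state space model

$$X_{t+1} = f_\theta(X_t) + w_t, \tag{11a}$$

$$Y_t = h_\theta(X_t) + v_t, \tag{11b}$$

where $X_t \in \mathbb{R}^n$ is the latent state, $Y_t \in \mathbb{R}^m$ is the measurement, θ is the parameter vector, and wt and vt are independent, identically distributed white noise processes (not necessarily Gaussian).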

The primary goal is to determine a maximum likelihood (ML) estimate of θ from the data since, under standard regularity conditions, that estimator is asymptotically consistent and efficient. That is, we would like

$$\hat{\theta} = \arg\max_{\theta}\ \log p_\theta(Y_{1:T}), \tag{12}$$

where we have expressed the estimator using the log likelihood. However, it is often the case that pθ(Y1:T) is unknown or intractable, and thus (12) cannot be solved directly.

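The EM algorithm overcomes this challenge by introducing the latent variables X0:T and working with the complete-data log likelihood Lθ(X0:T, Y1:T), given by

$$L_\theta(X_{0:T}, Y_{1:T}) = \log p_\theta(X_{0:T}, Y_{1:T}) = \log p_\theta(X_0) + \sum_{t=1}^{T} \log p_\theta(X_t \mid X_{t-1}) + \sum_{t=1}^{T} \log p_\theta(Y_t \mid X_t). \tag{13}$$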

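Unfortunately, the latent state is not available, only the measurements Y1:T. EM handles this by replacing Lθ with Q(θ, θ(e)), its minimum-variance estimate given the observed data and a current guess θ(e) of the true parameter value. This is, of course, the conditional mean

$$Q(\theta, \theta^{(e)}) = \mathbb{E}_{\theta^{(e)}}\left[L_\theta(X_{0:T}, Y_{1:T}) \mid Y_{1:T}\right] = \int L_\theta(X_{0:T}, Y_{1:T})\, p_{\theta^{(e)}}(X_{0:T} \mid Y_{1:T})\, dX_{0:T} \tag{14}$$

$$Q(\theta, \theta^{(e)}) = I_1 + I_2 + I_3, \tag{15}$$

where

$$I_1 = \int \log p_\theta(X_0)\, p_{\theta^{(e)}}(X_0 \mid Y_{1:T})\, dX_0, \tag{16a}$$

$$I_2 = \sum_{t=1}^{T} \iint \log p_\theta(X_t \mid X_{t-1})\, p_{\theta^{(e)}}(X_t, X_{t-1} \mid Y_{1:T})\, dX_t\, dX_{t-1}, \tag{16b}$$

$$I_3 = \sum_{t=1}^{T} \int \log p_\theta(Y_t \mid X_t)\, p_{\theta^{(e)}}(X_t \mid Y_{1:T})\, dX_t. \tag{16c}$$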

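The calculation of Q(θ, θ(e)) is called the Expectation (E) step at the eth iteration. It has been shown [11] that any choice of θ(e+1) such that $Q(\theta^{(e+1)}, \theta^{(e)}) > Q(\theta^{(e)}, \theta^{(e)})$ also increases the original likelihood, that is, $p_{\theta^{(e+1)}}(Y_{1:T}) > p_{\theta^{(e)}}(Y_{1:T})$. Thus, the expectation step is followed by a Maximization (M) step to produce the next estimate of the parameter,

$$\theta^{(e+1)} = \arg\max_{\theta}\ Q(\theta, \theta^{(e)}). \tag{17}$$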

To implement the E step (that is, to calculate Q(θ, θ(e))) by carrying out the expectations in (16), it is necessary to know the posterior densities p(Xt|Y1:T) and p(Xt, Xt−1|Y1:T). If the underlying model in (11) is linear with Gaussian noise, then these distributions are easily obtained [12]. For nonlinear systems, however, exact analytical solutions are generally unavailable, so some form of approximation or numerical approach must be used. Here we take an approximation approach and apply the UKF and URTSS.

B. Unscented Kalman Filter

The UKF was developed by Julier and Uhlmann to capture (an approximation to) the mean and covariance of a nonlinear stochastic process without relying on the linearization used by the extended Kalman filter (EKF) [13]. More details can be found in many sources, such as [6].

The UKF forms a Gaussian approximation of the filtering posterior distribution,

$$p(X_t \mid Y_{1:t}) \approx \mathcal{N}\left(X_t;\ m_t,\ P_t\right), \tag{18}$$

where the mean mt and covariance Pt are calculated as follows.

Prediction step

First calculate the 2n + 1 sigma points (where n is the dimension of the state) according to

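$$\mathcal{X}_{t-1}^{(0)} = m_{t-1}, \tag{19a}$$

$$\mathcal{X}_{t-1}^{(i)} = m_{t-1} + \sqrt{n + \zeta}\,\left[\sqrt{P_{t-1}}\right]_i, \tag{19b}$$

$$\mathcal{X}_{t-1}^{(i+n)} = m_{t-1} - \sqrt{n + \zeta}\,\left[\sqrt{P_{t-1}}\right]_i, \tag{19c}$$

for i = 1, …, n. Here [·]i denotes the ith column of the matrix, $\sqrt{A}$ is a matrix square root of A satisfying $\sqrt{A}\sqrt{A}^T = A$ (for example, its lower Cholesky factor), and ζ is a scaling parameter defined by

$$\zeta = \alpha^2 (n + \kappa) - n, \tag{20}$$

where α, β and κ allow the user to tune the algorithm performance [14], [15]. The sigma points are then propagated through the motion model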

$$\hat{\mathcal{X}}_t^{(i)} = f\left(\mathcal{X}_{t-1}^{(i)}\right), \qquad i = 0, \dots, 2n, \tag{21}$$

and then combined to produce the predicted mean and covariance at time t given data up to time t−1 according to

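$$m_t^- = \sum_{i=0}^{2n} W_i^{(m)}\, \hat{\mathcal{X}}_t^{(i)}, \tag{22}$$

$$P_t^- = \sum_{i=0}^{2n} W_i^{(c)} \left(\hat{\mathcal{X}}_t^{(i)} - m_t^-\right)\left(\hat{\mathcal{X}}_t^{(i)} - m_t^-\right)^T + Q. \tag{23}$$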

The weights are given by

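$$W_0^{(m)} = \frac{\zeta}{n + \zeta}, \qquad W_0^{(c)} = \frac{\zeta}{n + \zeta} + \left(1 - \alpha^2 + \beta\right), \tag{24a}$$

$$W_i^{(m)} = W_i^{(c)} = \frac{1}{2(n + \zeta)}, \qquad i = 1, \dots, 2n. \tag{24b}$$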
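A compact pure-Python sketch of the sigma points (19), scaling parameter (20), weights (24) and prediction step (21)–(23) follows, assuming small dense matrices stored as lists of lists. The helper names are hypothetical, the Cholesky factor is used as the matrix square root, and the defaults α = 1, β = 2, κ = 0 are illustrative tuning choices, not values reported in this paper. For the diffusion model (1), f is simply the identity.

```python
import math


def cholesky(A):
    """Lower-triangular L with L L^T = A, for a small symmetric positive-definite A."""
    n = len(A)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = A[i][j] - sum(L[i][k] * L[j][k] for k in range(j))
            L[i][j] = math.sqrt(s) if i == j else s / L[j][j]
    return L


def ut_weights(n, alpha=1.0, beta=2.0, kappa=0.0):
    """Scaling parameter zeta (20) and the mean/covariance weights (24)."""
    zeta = alpha ** 2 * (n + kappa) - n
    wm = [zeta / (n + zeta)] + [1.0 / (2.0 * (n + zeta))] * (2 * n)
    wc = list(wm)
    wc[0] += 1.0 - alpha ** 2 + beta
    return zeta, wm, wc


def sigma_points(m, P, zeta):
    """The 2n + 1 sigma points of (19), using the Cholesky factor as sqrt(P)."""
    n = len(m)
    L = cholesky(P)
    c = math.sqrt(n + zeta)
    pts = [list(m)]
    pts += [[m[r] + c * L[r][i] for r in range(n)] for i in range(n)]
    pts += [[m[r] - c * L[r][i] for r in range(n)] for i in range(n)]
    return pts


def ukf_predict(m, P, f, Q, alpha=1.0, beta=2.0, kappa=0.0):
    """Prediction step (21)-(23): returns the predicted mean and covariance."""
    n = len(m)
    zeta, wm, wc = ut_weights(n, alpha, beta, kappa)
    prop = [f(x) for x in sigma_points(m, P, zeta)]                      # (21)
    mp = [sum(w * x[r] for w, x in zip(wm, prop)) for r in range(n)]     # (22)
    Pp = [[Q[r][s] + sum(w * (x[r] - mp[r]) * (x[s] - mp[s]) for w, x in zip(wc, prop))
           for s in range(n)] for r in range(n)]                         # (23)
    return mp, Pp


# Diffusion (1): f is the identity and Q = diag(2 Dx dt, 2 Dy dt) from (2), Table I values.
Q = [[2 * 0.005 * 0.1, 0.0], [0.0, 2 * 0.01 * 0.1]]
m_pred, P_pred = ukf_predict([0.25, 0.25], [[1e-4, 0.0], [0.0, 1e-4]], list, Q)
```

Since the motion model here is linear, the unscented prediction reproduces the Kalman prediction exactly (mean unchanged, covariance P + Q); the sigma-point machinery earns its keep in the nonlinear measurement update.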

Update and filter

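A new set of sigma points $\mathcal{X}_t^{-(i)}$ is formed from the predicted mean and covariance according to (19), using $m_t^-$ and $P_t^-$ in lieu of mt−1 and Pt−1. These sigma points are then propagated through the measurement model

$$\mathcal{Y}_t^{(i)} = h\left(\mathcal{X}_t^{-(i)}\right), \qquad i = 0, \dots, 2n, \tag{25}$$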

and combined to form

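$$\mu_t = \sum_{i=0}^{2n} W_i^{(m)}\, \mathcal{Y}_t^{(i)}, \tag{26}$$

$$S_t = \sum_{i=0}^{2n} W_i^{(c)} \left(\mathcal{Y}_t^{(i)} - \mu_t\right)\left(\mathcal{Y}_t^{(i)} - \mu_t\right)^T + R_t, \tag{27}$$

$$C_t = \sum_{i=0}^{2n} W_i^{(c)} \left(\mathcal{X}_t^{-(i)} - m_t^-\right)\left(\mathcal{Y}_t^{(i)} - \mu_t\right)^T, \tag{28}$$

where Rt is the covariance of the measurement noise vt. Finally, these are used to produce the filtered estimates of the mean and covariance of the process at time t using the data up to time t through

$$K_t = C_t S_t^{-1}, \tag{29}$$

$$m_t = m_t^- + K_t\left(Y_t - \mu_t\right), \tag{30}$$

$$P_t = P_t^- - K_t S_t K_t^T. \tag{31}$$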
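The update step (25)–(31) can be sketched in the same style. The helpers are repeated so the block runs on its own, and `solve` is a small Gauss-Jordan routine we added to form Kt = Ct St⁻¹ without an explicit inverse; all names are hypothetical. This sketch treats Rt as a fixed input: for the Anscombe and Freeman-Tukey models it is the identity, while for the direct Gaussian approximation its diagonal depends on the state, and how to evaluate it (for example, at the predicted mean) is a modeling choice left open here. In the SPT setting, h would map a candidate position to the vector of (transformed) expected pixel counts.

```python
import math


def cholesky(A):
    """Lower-triangular L with L L^T = A, for a small symmetric positive-definite A."""
    n = len(A)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = A[i][j] - sum(L[i][k] * L[j][k] for k in range(j))
            L[i][j] = math.sqrt(s) if i == j else s / L[j][j]
    return L


def ut_weights(n, alpha=1.0, beta=2.0, kappa=0.0):
    """Scaling parameter zeta (20) and mean/covariance weights (24)."""
    zeta = alpha ** 2 * (n + kappa) - n
    wm = [zeta / (n + zeta)] + [1.0 / (2.0 * (n + zeta))] * (2 * n)
    wc = list(wm)
    wc[0] += 1.0 - alpha ** 2 + beta
    return zeta, wm, wc


def sigma_points(m, P, zeta):
    """The 2n + 1 sigma points of (19), with the Cholesky factor as sqrt(P)."""
    n = len(m)
    L = cholesky(P)
    c = math.sqrt(n + zeta)
    pts = [list(m)]
    pts += [[m[r] + c * L[r][i] for r in range(n)] for i in range(n)]
    pts += [[m[r] - c * L[r][i] for r in range(n)] for i in range(n)]
    return pts


def solve(A, B):
    """Solve A X = B (A is n x n, B is n x k) by Gauss-Jordan elimination with partial pivoting."""
    n = len(A)
    M = [list(A[i]) + list(B[i]) for i in range(n)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        p = M[col][col]
        M[col] = [v / p for v in M[col]]
        for r in range(n):
            if r != col:
                fac = M[r][col]
                M[r] = [a - fac * b for a, b in zip(M[r], M[col])]
    return [row[n:] for row in M]


def ukf_update(mp, Pp, y, h, R, alpha=1.0, beta=2.0, kappa=0.0):
    """Update step (25)-(31); returns the filtered mean and covariance."""
    n, d = len(mp), len(y)
    zeta, wm, wc = ut_weights(n, alpha, beta, kappa)
    X = sigma_points(mp, Pp, zeta)
    Y = [h(x) for x in X]                                                # (25)
    mu = [sum(w * Yi[a] for w, Yi in zip(wm, Y)) for a in range(d)]      # (26)
    S = [[R[a][b] + sum(w * (Yi[a] - mu[a]) * (Yi[b] - mu[b]) for w, Yi in zip(wc, Y))
          for b in range(d)] for a in range(d)]                          # (27)
    C = [[sum(w * (x[a] - mp[a]) * (Yi[b] - mu[b]) for w, x, Yi in zip(wc, X, Y))
          for b in range(d)] for a in range(n)]                          # (28)
    # (29): K = C S^{-1}; since S is symmetric, K^T solves S K^T = C^T.
    Kt = solve(S, [[C[a][b] for a in range(n)] for b in range(d)])
    K = [[Kt[b][a] for b in range(d)] for a in range(n)]
    m = [mp[a] + sum(K[a][b] * (y[b] - mu[b]) for b in range(d)) for a in range(n)]  # (30)
    KS = [[sum(K[a][j] * S[j][b] for j in range(d)) for b in range(d)] for a in range(n)]
    P = [[Pp[a][c] - sum(KS[a][b] * K[c][b] for b in range(d)) for c in range(n)]
         for a in range(n)]                                              # (31)
    return m, P


# Toy check with a linear measurement h(x) = x, where the UKF reduces to the Kalman filter.
m_f, P_f = ukf_update([0.0, 0.0], [[1.0, 0.0], [0.0, 1.0]], [2.0, 0.0],
                      list, [[1.0, 0.0], [0.0, 1.0]])
```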

C. Unscented Rauch-Tung-Striebel Smoother

To obtain (an approximation to) the distribution p(Xt|Y1:T), we apply the URTSS [16]. The URTSS is initialized with the final results of the UKF, $m_T^s = m_T$ and $P_T^s = P_T$, and then runs a backward recursion for t = T − 1, …, 0 as follows.

Prediction and update

First form the sigma points from (19) using mt and Pt. These are then propagated through the motion model

$$\hat{\mathcal{X}}_{t+1}^{(i)} = f\left(\mathcal{X}_t^{(i)}\right), \qquad i = 0, \dots, 2n, \tag{32}$$

and combined to form

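$$m_{t+1}^- = \sum_{i=0}^{2n} W_i^{(m)}\, \hat{\mathcal{X}}_{t+1}^{(i)}, \tag{33}$$

$$P_{t+1}^- = \sum_{i=0}^{2n} W_i^{(c)} \left(\hat{\mathcal{X}}_{t+1}^{(i)} - m_{t+1}^-\right)\left(\hat{\mathcal{X}}_{t+1}^{(i)} - m_{t+1}^-\right)^T + Q, \tag{34}$$

$$D_{t+1} = \sum_{i=0}^{2n} W_i^{(c)} \left(\mathcal{X}_t^{(i)} - m_t\right)\left(\hat{\mathcal{X}}_{t+1}^{(i)} - m_{t+1}^-\right)^T, \tag{35}$$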

where the weights are given in (24).

Calculate the smoothed estimate

The mean and covariance defining the smoothed Gaussian density at time t are calculated from

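$$J_t = D_{t+1}\left(P_{t+1}^-\right)^{-1}, \tag{36}$$

$$m_t^s = m_t + J_t\left(m_{t+1}^s - m_{t+1}^-\right), \tag{37}$$

$$P_t^s = P_t + J_t\left(P_{t+1}^s - P_{t+1}^-\right)J_t^T. \tag{38}$$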

From the UKF and URTSS, we form the approximated posterior densities needed for the EM algorithm

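$$p(X_t \mid Y_{1:T}) \approx \mathcal{N}\left(X_t;\ m_t^s,\ P_t^s\right), \tag{39}$$

$$p(X_t, X_{t-1} \mid Y_{1:T}) \approx \mathcal{N}\left(\begin{bmatrix} X_t \\ X_{t-1} \end{bmatrix};\ \begin{bmatrix} m_t^s \\ m_{t-1}^s \end{bmatrix},\ \begin{bmatrix} P_t^s & P_t^s J_{t-1}^T \\ J_{t-1} P_t^s & P_{t-1}^s \end{bmatrix}\right), \tag{40}$$

where $J_{t-1}$ is the URTSS smoother gain computed at time t − 1.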

D. Applying U-EM to the SPT Setting

Applying U-EM is primarily a matter of identifying the specific model for (11) and the parameters to be identified. As we are focusing on anisotropic diffusion, the motion model is given by (1), whose noise covariance (2) depends on the diffusion coefficients. The observation model depends on the choice of transformation and is given by (6), (8), or (10). The unknown parameters are thus θ = (Dx, Dy).
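Treating G, Nbgd and the optical constants as known, θ = (Dx, Dy) enters the complete-data log likelihood only through the transition densities log pθ(Xt | Xt−1), so the M step (17) has a closed form for this model. Maximizing the expected transition term for the random walk (1) gives (our derivation, offered as a sketch) 2DxΔt = (1/T) Σt E[(xt − xt−1)² | Y1:T], where under the Gaussian smoothed posterior each expectation equals (m^s_{x,t} − m^s_{x,t−1})² + var(xt) + var(xt−1) − 2 cov(xt, xt−1), and likewise for y. The pure-Python functions below implement this; the names are hypothetical, and they expect the smoothed means, covariances and cross-covariances produced by the URTSS.

```python
def cross_cov(Ps_t, J_prev):
    """Smoothed cross-covariance Cov(X_t, X_{t-1} | Y_{1:T}) = P^s_t J_{t-1}^T."""
    n = len(Ps_t)
    return [[sum(Ps_t[r][k] * J_prev[s][k] for k in range(n)) for s in range(n)]
            for r in range(n)]


def m_step_diffusion(ms, Ps, Cs, dt):
    """Closed-form M step for (Dx, Dy) under the random walk (1)-(2).

    ms[t], Ps[t]: smoothed mean [x, y] and 2x2 covariance, t = 0..T.
    Cs[t]: smoothed Cov(X_t, X_{t-1} | Y_{1:T}) for t = 1..T (Cs[0] is unused).
    Returns (Dx, Dy) in the units of position^2 / time implied by the inputs.
    """
    T = len(ms) - 1
    est = []
    for k in range(2):  # k = 0: x axis, k = 1: y axis
        acc = 0.0
        for t in range(1, T + 1):
            dm = ms[t][k] - ms[t - 1][k]
            # E[(X_t,k - X_{t-1},k)^2 | Y] = (mean step)^2 + both variances - 2 * covariance
            acc += dm * dm + Ps[t][k][k] + Ps[t - 1][k][k] - 2.0 * Cs[t][k][k]
        est.append(acc / (2.0 * T * dt))  # from 2 D dt = (1/T) * sum of expected squared steps
    return est[0], est[1]
```

Note that the posterior uncertainty enters the estimate: ignoring the variance and covariance terms and using only the smoothed means would bias the diffusion coefficients, which is one reason the joint approach is attractive.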

IV. DEMONSTRATION AND ANALYSIS

To demonstrate the performance of the U-EM algorithm in the SPT setting, we performed several simulations. Forty different ground truth trajectories were generated from the diffusion motion model (1) and used to create simulated images according to the observation model in (5). The optical parameters and other fixed constants used in these simulations are shown in Table I; these were chosen to mimic the settings found in many SPT experiments.

TABLE I.

Parameter settings

| Symbol | Parameter | Value |
|---|---|---|
| Δt | Image period (discrete time step) | 100 ms |
| T | Number of images per dataset | 100 |
| P | Number of pixels per square image | 25 (5 × 5) |
| Dx | Diffusion coefficient in x direction | 0.005 μm²/s |
| Dy | Diffusion coefficient in y direction | 0.01 μm²/s |
| Δx | Length of unit pixel | 100 nm |
| Δy | Width of unit pixel | 100 nm |
| λ | Emission wavelength | 540 nm |
| NA | Numerical aperture | 1.2 |

A. Demonstration

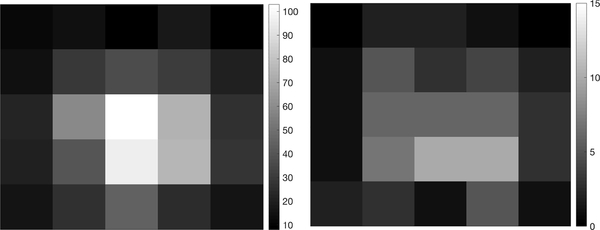

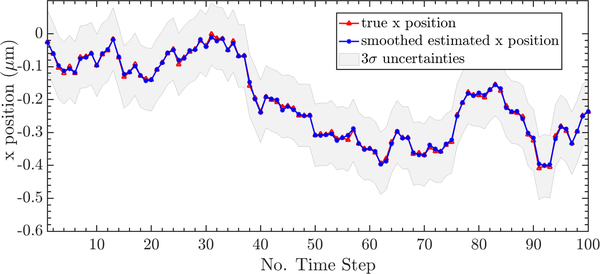

To demonstrate, we fixed the background rate Nbgd = 10 and the peak signal intensity G = 100, representing a strong but not atypical signal in actual SPT experiments [1], [4]. A typical image is shown in Fig. 2. The algorithm was applied to each of the 40 sample trajectories. A typical trajectory estimation result, calculated using the Anscombe transform of the measurement model, is shown in Fig. 3. One interesting feature of the U-EM approach is that the trajectory estimation yields a (Gaussian) distribution at each time step rather than a single point estimate. As Fig. 3 shows, the mean tracks the true path very closely and the distribution is tight.

Fig. 2.

Typical data images with (left) Nbgd = 10 and G = 100 and (right) Nbgd = 1 and G = 10. There are a total of 867 photon counts captured among the 25 pixels in the left image and 85 counts in the right image. Notice the different scaling in the two images.

Fig. 3.

Estimated x position using the Anscombe transform

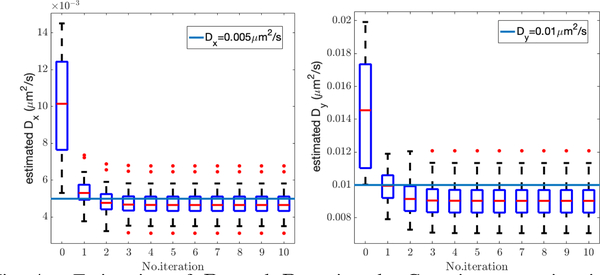

The evolution of the diffusion coefficient estimates as a function of EM iteration is shown in Fig. 4. (These estimates were computed using the Gaussian approximation to the measurement model.) These results show rapid convergence to values close to the true diffusion coefficients.

Fig. 4.

Estimation of Dx and Dy using the Gaussian approximation to the measurement model. As with all box plots, the (red) line in the box denotes the median, the edges of the box show the first and third quartiles, the vertical dashed lines indicate bounds of 1.5 times the interquartile range, and the red dots indicate outliers.

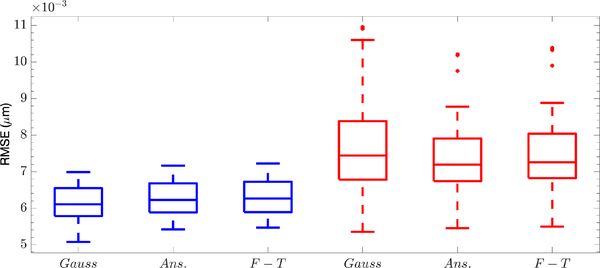

To explore the differences among these transformations, the simulations were repeated with each of the three choices. The estimated position at each time was taken as the mean of the smoothed distribution. The resulting root mean square errors (RMSE) are shown in Fig. 5. All approaches perform well, with an estimation error of approximately 6.25 nm in the x position and 7.30 nm in the y position. Both the similarity among the methods and the actual error level are as expected given that the signal level is high.

Fig. 5.

Box plots of 2-D position estimation error using the (Gauss) Gaussian approximation, (Ans.) Anscombe transform, and (F-T) Freeman-Tukey transform. Blue and red boxes correspond to the RMSE in the x and y positions, respectively.

Performance of parameter estimation over the 40 runs and with the three different transformation choices is shown in Table II.

TABLE II.

PARAMETER ESTIMATION OF Dx AND Dy ON 40 DATASETS

| Method | Dx (μm²/s) | Dy (μm²/s) |
|---|---|---|
| Gaussian | 0.0047 ± 7.3e-4 | 0.009 ± 0.0011 |
| Anscombe | 0.0046 ± 7.3e-4 | 0.009 ± 0.0011 |
| Freeman-Tukey | 0.0046 ± 7.3e-4 | 0.009 ± 0.0011 |

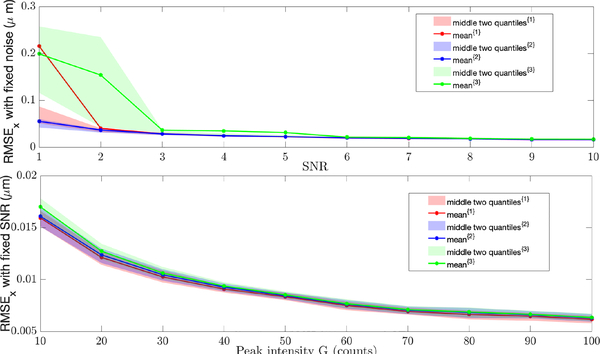

B. Performance across Different Signal Levels

Differences among the observation model transformations become meaningful only when the rate of the Poisson distribution is low (as determined by the combination of signal level and background). We therefore performed two sets of simulations at different noise levels [1]. In the first set, the background Nbgd was fixed at one and the signal G was increased from one to 10. In the second, the signal-to-noise ratio (SNR) was fixed at 10 and Nbgd was increased from 1 to 10. All other imaging and model parameters were kept the same.

The localization results are shown in Fig. 6 with the top graph corresponding to Nbgd = 1 (and thus extremely low signal levels) and the bottom to simulations for a fixed SNR. In both plots, red corresponds to Gaussian approximation, blue to Anscombe transform and green to Freeman-Tukey transform. It is clear that differences only appear at the low signal levels. Note that in the first plot with a peak intensity of G = 6, the rate in the pixel at the center of the PSF is still only 7 counts. At the lowest signal levels, the Anscombe transform outperforms the other two. While the Gaussian approach is close, the difference between the mean and the center quartiles indicates that it has several large outliers. To put these estimation errors in context, note that for the given imaging parameters, the diffraction limit of light is approximately 270 nm.

Fig. 6.

RMSE of x position estimation with different {Nbgd, G}. The superscripts {1}, {2} and {3} indicate results based on the Gaussian approximation, Anscombe transform, and Freeman-Tukey transform, respectively. (top) Results holding Nbgd = 1 fixed and varying G, showing the behavior at very low signal levels. (bottom) Results holding the ratio SNR = 10 fixed while varying G. Note that for space reasons, only results for the x position are shown; estimation of the y position is similar.

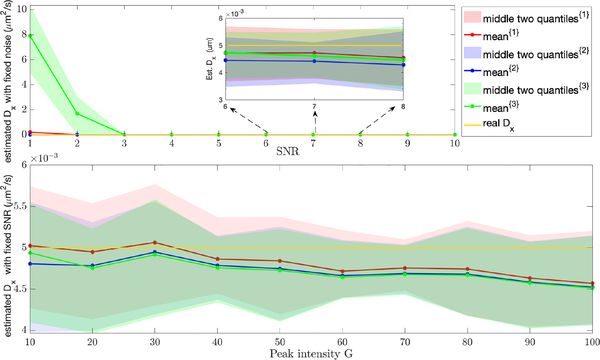

The corresponding results for the estimation of the diffusion coefficients are shown in Fig. 7. As before, red corresponds to the Gaussian approximation, blue to the Anscombe transform, and green to the Freeman-Tukey transform. The true value is Dx = 0.005 μm²/s. These results parallel the trajectory estimation: all transformations of the observation equation are essentially equivalent at high signal levels, while the Freeman-Tukey transform fails at the lowest signal levels.

Fig. 7.

Results of estimation of Dx with a true value of Dx = 0.005 μm²/s. (top) Results holding Nbgd = 1 fixed while varying SNR. (bottom) Results holding the ratio SNR fixed while varying G.

As noted in Sec. I, the method is faster than the particle-filter-based EM of [4] (SMC-EM) with all of the transformation methods (on the order of a few minutes using unoptimized code in Matlab on a standard laptop with 10 EM iterations). Among the three, the Gaussian approximation runs the slowest (approximately 10–15% slower). This is explained by (6)–(10): under the Gaussian approximation, the state-dependent measurement variance must be recomputed at each time step, while both the Anscombe and Freeman-Tukey transforms avoid this since they stabilize the variance to one. Since the Anscombe transform performs best at low signal levels and has a lower computational load, it should be the preferred approach.

V. CONCLUSIONS

In this paper we applied the U-EM algorithm to localization and parameter estimation in SPT. We explored three transformation methods for bringing the observation model describing the camera images in SPT into a form amenable to the UKF: a direct Gaussian approximation of the Poisson-distributed random variables modeling the intensity measurements on the camera, and transforming the measurements with an Anscombe or Freeman-Tukey transform to convert them into unit-variance, approximately Gaussian random variables. At high signal levels, all three approaches produce similar results, but at very low signal levels the Anscombe transform outperforms the others (with the Gaussian approximation close behind). In future work we plan to incorporate other biologically relevant motion models, as well as introduce additional complexities into the observation model to capture, for example, camera-specific noise.

ACKNOWLEDGEMENT

The authors gratefully acknowledge B. Godoy and N. A. Vickers for insightful discussions. This work was supported in part by NIH through 1R01GM117039-01A1.

REFERENCES

- [1] Chenouard N et al., “Objective comparison of particle tracking methods,” Nature Methods, vol. 11, no. 3, pp. 281–289, 2014.

- [2] Krull A, Steinborn A, Ananthanarayanan V, Ramunno-Johnson D, Petersohn U, and Tolić-Nørrelykke IM, “A divide and conquer strategy for the maximum likelihood localization of low intensity objects,” Optics Express, vol. 22, no. 1, pp. 210–228, 2014.

- [3] Lin R, Clowsley AH, Jayasinghe ID, Baddeley D, and Soeller C, “Algorithmic corrections for localization microscopy with sCMOS cameras - characterisation of a computationally efficient localization approach,” Optics Express, vol. 25, no. 10, pp. 11701–11716, 2017.

- [4] Ashley TT and Andersson SB, “Method for simultaneous localization and parameter estimation in particle tracking experiments,” Physical Review E, vol. 92, no. 5, p. 052707, 2015.

- [5] Van Der Merwe R et al., “Sigma-point Kalman filters for probabilistic inference in dynamic state-space models,” Ph.D. dissertation, OGI School of Science & Engineering at OHSU, 2004.

- [6] Särkkä S, Bayesian filtering and smoothing. Cambridge University Press, 2013, vol. 3.

- [7] Zhang B, Zerubia J, and Olivo-Marin J-C, “Gaussian approximations of fluorescence microscope point-spread function models,” Applied Optics, vol. 46, no. 10, pp. 1819–1829, 2007.

- [8] Gnedenko BV, Theory of probability. Routledge, 2017.

- [9] Anscombe FJ, “The transformation of Poisson, binomial and negative-binomial data,” Biometrika, vol. 35, pp. 246–254, 1948.

- [10] Freeman MF and Tukey JW, “Transformations related to the angular and the square root,” Ann. Math. Statist., vol. 21, no. 4, Dec. 1950, doi: 10.1214/aoms/1177729756.

- [11] Dempster AP, Laird NM, and Rubin DB, “Maximum likelihood from incomplete data via the EM algorithm,” Journal of the Royal Statistical Society: Series B (Methodological), vol. 39, pp. 1–22, 1977.

- [12] Gibson S and Ninness B, “Robust maximum-likelihood estimation of multivariable dynamic systems,” Automatica, vol. 41, no. 10, 2005.

- [13] Julier SJ and Uhlmann JK, “New extension of the Kalman filter to nonlinear systems,” in Signal Processing, Sensor Fusion, and Target Recognition VI, vol. 3068. International Society for Optics and Photonics, 1997, pp. 182–194.

- [14] Arasaratnam I and Haykin S, “Cubature Kalman filters,” IEEE Transactions on Automatic Control, vol. 54, no. 6, pp. 1254–1269, 2009.

- [15] Wan EA and Van Der Merwe R, “The unscented Kalman filter for nonlinear estimation,” in Proceedings of the IEEE 2000 Adaptive Systems for Signal Processing, Communications, and Control Symposium (Cat. No. 00EX373). IEEE, 2000, pp. 153–158.

- [16] Särkkä S, “Unscented Rauch–Tung–Striebel Smoother,” IEEE Transactions on Automatic Control, vol. 53, no. 3, pp. 845–849, April 2008.