Highlights

- Considerable deficiencies in the reliability and quality of information were noted.
- The majority of websites had low to moderate readability and usability.
- Lack of proper standardisation and monitoring are possible causes of poor quality.

Abbreviations: USA, United States of America; FRES, Flesch Reading Ease Score; HON-code, Health On the Net Code of Conduct; SPSS, Statistical Package for the Social Sciences; NICE, National Institute for Health and Care Excellence; WHO, World Health Organization

Keywords: Quality, Quality of information, COVID-19, Education websites, General public, Internet, 2019-novel coronavirus, SARS-CoV-2

Abstract

Objectives

To analyse the quality of information on COVID-19 in websites aimed at the general public.

Methods

Yahoo!, Google and Bing were searched using selected keywords on COVID-19. The first 100 websites from each search engine for each keyword were evaluated. Validated tools were used to assess readability [Flesch Reading Ease Score (FRES)], usability and reliability (LIDA tool), and quality (DISCERN instrument). Non-parametric tests were used for statistical analyses.

Results

Eighty-four eligible sites were analysed. The median FRES was 54.2 (range: 23.2−73.5). The median LIDA usability and reliability scores were 46 (range: 18−54) and 37 (range: 14−51), respectively. A low (<50 %) overall LIDA score was recorded for 30.9 % (n = 26) of the websites. The median DISCERN score was 49.5 (range: 21–77). A DISCERN score of ≤50 % was found in 45 (53.6 %) websites. The DISCERN score was significantly associated with the LIDA usability and reliability scores (p < 0.001) and with the FRES (p = 0.024).

Conclusion

The majority of websites on COVID-19 for the public had moderate to low scores with regard to readability, usability, reliability and quality.

Practice Implications

Prompt strategies should be implemented to standardize online health information on COVID-19 during this pandemic, to ensure that the general public has access to good-quality, reliable information.

1. Introduction

The COVID-19 pandemic has become the greatest global health crisis of the 21st century [1]. During this pandemic, the demand for information on COVID-19 has skyrocketed. The public actively seeks information such as the latest news on the pandemic and on its symptoms, prevention, and mode of transmission [2]. On the other hand, free access to information, especially through social media, which is used by the majority [3], has led to an increase in misinformation and panic associated with COVID-19 [4]. Although high-quality health information is known to be associated with lower stress levels and better psychological health [5], previous studies have shown online information on many medical disorders to be of substandard quality [6,7].

A previous study of websites related to COVID-19 reported substandard information that could potentially mislead the public [8]. However, that study used a limited search strategy and did not assess some important areas, including the usability and reliability of the information. Consequently, this topic remains a knowledge gap in COVID-19 research [9]. We therefore conducted this study to analyse current COVID-19 websites targeting the general public in terms of quality, usability, readability, and reliability, using a wide search strategy and validated instruments.

2. Methods

Yahoo!, Google and Bing were searched using the keywords “novel coronavirus”, “SARS-CoV-2”, “severe acute respiratory syndrome coronavirus-2”, “COVID-19” and “coronavirus”. The search was performed during the first week of May 2020. Details of the search strategy and the piloting process are provided in the supplementary material (File S1) [10].

Two independent investigators with previous experience of conducting similar studies assessed the selected websites [11,12]. Prior to the assessment, a pilot run was conducted to ensure uniformity and accuracy. Information on symptoms, investigations, public health measures, and available treatment modalities was collected. The accuracy of the content was assessed against the National Institute for Health and Care Excellence (NICE) guidelines on COVID-19 [13]. Each item was rated on an all-or-none basis according to its congruence with the guidelines.

Validated instruments were used to assess the quality of the websites. Readability was assessed using the Flesch Reading Ease Score (FRES) [14]. The LIDA instrument (2007, version 1.2) was used to analyse the content and design of the websites using its usability and reliability domains [15,16]. The quality of the content was assessed using the DISCERN questionnaire, which has 16 questions in two separate groups [17]. The detailed assessment criteria and scoring system are included in the supplementary material (File S1).
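For reference, the FRES combines average sentence length and average syllables per word: FRES = 206.835 − 1.015 × (words/sentences) − 84.6 × (syllables/words), with higher scores indicating easier text. The sketch below is a minimal illustration, not the tool used in this study: the function name `flesch_reading_ease` is hypothetical, and it assumes the word, sentence, and syllable counts have already been obtained. Automatic syllable counting relies on heuristics that differ between readability tools, so scores from different tools may not match exactly.

```python
def flesch_reading_ease(words: int, sentences: int, syllables: int) -> float:
    """Flesch Reading Ease Score computed from pre-counted text statistics.

    Hypothetical helper: assumes the counts were obtained separately.
    Higher scores indicate easier text.
    """
    if words <= 0 or sentences <= 0:
        raise ValueError("words and sentences must be positive")
    avg_sentence_length = words / sentences
    avg_syllables_per_word = syllables / words
    return 206.835 - 1.015 * avg_sentence_length - 84.6 * avg_syllables_per_word


# Hypothetical counts for a 100-word passage with 5 sentences and 150 syllables.
score = flesch_reading_ease(words=100, sentences=5, syllables=150)
print(round(score, 1))  # → 59.6
```

Taking counts rather than raw text keeps the formula itself exact and leaves the choice of syllable-counting method explicit.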

A website was classified as governmental if it was maintained by the country’s public health authority. Websites managed by private institutions, non-governmental organizations, or voluntary institutions independent of the government were classified as non-governmental. The credibility and reliability of online health-related websites can be certified by online certification bodies. We chose the Health On the Net code of conduct (HON-code), which is the oldest and one of the most widely used of the available quality evaluation tools [18].

Data analysis was performed using SPSS (version 20), and associations were determined with non-parametric tests. A p-value of <0.05 was considered statistically significant.
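The specific non-parametric tests are not named here. For comparing a continuous score (for example, FRES) between two independent groups, such as the low- and high-DISCERN websites in Table 2, the Mann–Whitney U test is a common choice. Assuming that test, the pure-Python sketch below shows how it works: pooled values are ranked (ties get average ranks), U is derived from the rank sum of one group, and a two-sided p-value is taken from the tie-corrected normal approximation without continuity correction. The function name `mann_whitney_u` and the sample values are hypothetical, and SPSS may report slightly different p-values (for example, exact values for small samples).

```python
import math
from typing import Sequence, Tuple


def mann_whitney_u(x: Sequence[float], y: Sequence[float]) -> Tuple[float, float]:
    """Two-sided Mann-Whitney U test using the normal approximation.

    Ties receive average ranks and the variance is tie-corrected;
    no continuity correction is applied. Returns (U for sample x, p-value).
    """
    n1, n2 = len(x), len(y)
    if n1 == 0 or n2 == 0:
        raise ValueError("both samples must be non-empty")
    # Pool both samples, remembering the source group (0 = x, 1 = y).
    combined = sorted([(v, 0) for v in x] + [(v, 1) for v in y])
    ranks = [0.0] * len(combined)
    tie_term = 0.0  # sum of (t^3 - t) over groups of tied values
    i = 0
    while i < len(combined):
        j = i
        while j + 1 < len(combined) and combined[j + 1][0] == combined[i][0]:
            j += 1
        avg_rank = (i + j) / 2 + 1  # 1-based average rank of positions i..j
        for k in range(i, j + 1):
            ranks[k] = avg_rank
        t = j - i + 1
        tie_term += t ** 3 - t
        i = j + 1
    rank_sum_x = sum(r for r, (_, group) in zip(ranks, combined) if group == 0)
    u_x = rank_sum_x - n1 * (n1 + 1) / 2
    n = n1 + n2
    mean_u = n1 * n2 / 2
    var_u = n1 * n2 / 12 * ((n + 1) - tie_term / (n * (n - 1)))
    if var_u <= 0:
        return u_x, 1.0  # every value tied: no evidence of a difference
    z = (u_x - mean_u) / math.sqrt(var_u)
    p_two_sided = math.erfc(abs(z) / math.sqrt(2))
    return u_x, p_two_sided


# Hypothetical FRES values for two groups of websites (illustrative, not study data).
low_discern = [45.0, 50.2, 38.9, 52.1]
high_discern = [55.3, 60.1, 49.8, 58.7, 62.0]
u, p = mann_whitney_u(low_discern, high_discern)
print(f"U = {u}, p = {p:.3f}")
```

Because it is rank-based, the test makes no assumption that the scores are normally distributed, which suits bounded instrument scores such as FRES or LIDA.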

3. Results

Of the 1500 retrieved websites (the first 100 results for each of the five keywords on each of the three search engines), 1416 were excluded, and the remaining 84 websites were included in the analysis. The characteristics of the websites are summarized in Table 1.

Table 1.

Website characteristics.

| Website characteristic | Category | Frequency | Percentage |
|---|---|---|---|
| Governmental websites | | 42 | 50 % |
| Not-for-profit and private websites | | 42 | 50 % |
| HON-code accredited | | 15 | 17.9 % |
| Readability score above 70 (equivalent to 7th grade) | | 3 | 3.6 % |
| Readability | Moderate | 7 | 8.3 % |
| | Low | 77 | 91.6 % |
| Usability | Moderate (50−90 %) | 21 | 25 % |
| | Low (<50 %) | 63 | 75 % |
| Reliability | Moderate (50−90 %) | 6 | 7.1 % |
| | Low (<50 %) | 78 | 92.9 % |
| Date of publication stated | | 34 | 40.5 % |
| References mentioned | | 51 | 60.7 % |
| Disclosure statement by authors | | 51 | 60.7 % |
| Infographics | Used | 63 | 75 % |
| | Text-only | 21 | 25 % |

Half of the websites (50 %) were governmental, and only 15 (17.9 %) were HON-code accredited. The median FRES was 54.1 (range: 23.2−73.5), corresponding to a 10th–12th grade reading level, which is classified as fairly difficult to read. Only three websites (3.6 %) had a readability score above 70 (equivalent to 7th grade), which is the recommended standard.
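The grade-level interpretation above follows the conventional Flesch score bands. As a minimal sketch, assuming those standard bands and treating each lower boundary as inclusive (an assumption; conventions differ at the exact boundaries), a lookup such as the hypothetical `fres_band` below maps a score to its difficulty label and approximate US school grade.

```python
from typing import Tuple

# Conventional Flesch bands: (inclusive lower bound, label, approximate grade).
# Boundary handling is an assumption of this sketch.
FRES_BANDS = [
    (90.0, "very easy", "5th grade"),
    (80.0, "easy", "6th grade"),
    (70.0, "fairly easy", "7th grade"),
    (60.0, "standard", "8th-9th grade"),
    (50.0, "fairly difficult", "10th-12th grade"),
    (30.0, "difficult", "college"),
]


def fres_band(score: float) -> Tuple[str, str]:
    """Return (difficulty label, approximate grade level) for a FRES value."""
    for lower, label, grade in FRES_BANDS:
        if score >= lower:
            return label, grade
    return "very difficult", "college graduate"


print(fres_band(54.1))  # → ('fairly difficult', '10th-12th grade')
```

Applied to the reported range, the easiest website (73.5) falls in the 7th-grade band and the hardest (23.2) in the college-graduate band.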

The overall median LIDA score was 84 (range: 32–105), while the median LIDA usability and reliability scores were 46 (range: 18−54) and 37 (range: 14−51), respectively. The median DISCERN score was 49.5 (range: 21–77), which classifies the websites as being of “fair quality” (Excellent = 63–80; Good = 51–62; Fair = 39–50; Poor = 27–38; Very poor = 16–26). However, the top 10 websites (Table A.2) were of excellent quality.
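The DISCERN total is the sum of 16 item ratings, each scored from 1 to 5, which yields the 16–80 range used by the quality bands above. The sketch below assumes that scoring scheme; the names `discern_total` and `discern_band` are hypothetical. Because the published bands are defined on whole numbers, a fractional value such as the median of 49.5 is assigned by the highest lower bound it reaches (an assumption of this sketch).

```python
from typing import Sequence

# Inclusive lower bounds of the DISCERN quality bands quoted in the text.
DISCERN_BANDS = [
    (63, "Excellent"),
    (51, "Good"),
    (39, "Fair"),
    (27, "Poor"),
    (16, "Very poor"),
]


def discern_total(ratings: Sequence[int]) -> int:
    """Sum the 16 DISCERN item ratings (each 1-5), giving a total of 16-80."""
    if len(ratings) != 16:
        raise ValueError("DISCERN has 16 questions")
    if any(not 1 <= r <= 5 for r in ratings):
        raise ValueError("each rating must be between 1 and 5")
    return sum(ratings)


def discern_band(total: float) -> str:
    """Map a DISCERN total (or a possibly fractional median) to its quality band."""
    for lower, label in DISCERN_BANDS:
        if total >= lower:
            return label
    raise ValueError("DISCERN totals cannot be below 16")


print(discern_band(49.5))  # → Fair
```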

Significant correlations were observed between the DISCERN score and the overall LIDA score, as well as the LIDA usability and reliability scores (Table 2, p < 0.001). HON-code certified websites obtained significantly higher DISCERN scores (p = 0.025).

Table 2.

Correlation between DISCERN scores and other factors.

| Variable | Category | Low DISCERN (<40) | | High DISCERN (40−80) | | P value |
|---|---|---|---|---|---|---|
| | | Mean | Range | Mean | Range | |
| LIDA usability | | 39 | 18−54 | 47 | 31−54 | P < 0.001 |
| LIDA reliability | | 23 | 14−33 | 42 | 24−51 | P < 0.001 |
| LIDA overall | | 62 | 32−84 | 89 | 68−105 | P < 0.001 |
| FRES score | | 49.9 | 34.8−63.7 | 54.5 | 23.2−73.5 | P = 0.024 |
| | | N | % | N | % | |
| Government | No | 8 | 30.8 % | 34 | 58.6 % | P = 0.018 |
| | Yes | 18 | 69.2 % | 24 | 41.4 % | |
| HON certification | No | 25 | 96.2 % | 44 | 75.9 % | P = 0.025 |
| | Yes | 1 | 3.8 % | 14 | 24.1 % | |
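Table 2 does not state which test produced the p values for the categorical rows. As an illustration only, assuming a Pearson chi-square test without continuity correction (one possible choice; Fisher's exact test is often preferred when an expected count falls below 5, as it does for the HON-certified, low-DISCERN cell here, at about 4.6), the sketch below recomputes both comparisons from the Table 2 counts. The function name `pearson_chi_square_2x2` is hypothetical.

```python
import math
from typing import Tuple


def pearson_chi_square_2x2(a: int, b: int, c: int, d: int) -> Tuple[float, float]:
    """Pearson chi-square test (no continuity correction) for the 2x2 table

              col1  col2
        row1   a     b
        row2   c     d

    Returns (chi-square statistic, p-value with 1 degree of freedom).
    """
    n = a + b + c + d
    observed = [[a, b], [c, d]]
    row_totals = [a + b, c + d]
    col_totals = [a + c, b + d]
    chi2 = 0.0
    for i in range(2):
        for j in range(2):
            expected = row_totals[i] * col_totals[j] / n
            chi2 += (observed[i][j] - expected) ** 2 / expected
    # With 1 degree of freedom, P(X >= chi2) = erfc(sqrt(chi2 / 2)).
    p = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p


# Table 2 counts: rows are "No"/"Yes", columns are low/high DISCERN.
gov_chi2, gov_p = pearson_chi_square_2x2(8, 34, 18, 24)
hon_chi2, hon_p = pearson_chi_square_2x2(25, 44, 1, 14)
print(f"Government: chi2 = {gov_chi2:.2f}, p = {gov_p:.3f}")  # → Government: chi2 = 5.57, p = 0.018
print(f"HON-code: chi2 = {hon_chi2:.2f}, p = {hon_p:.3f}")  # → HON-code: chi2 = 5.04, p = 0.025
```

Under this assumption the counts give p ≈ 0.018 for government status and p ≈ 0.025 for HON certification, consistent with the values reported in Table 2.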

Regarding the currency of information, only 34 (40.5 %) websites stated the date of publication. Most websites (n = 51, 60.7 %) did not declare the sources of evidence, which is reflected in the low median reliability score of 37. Nevertheless, most websites (n = 51, 60.7 %) included a disclosure statement by the authors.

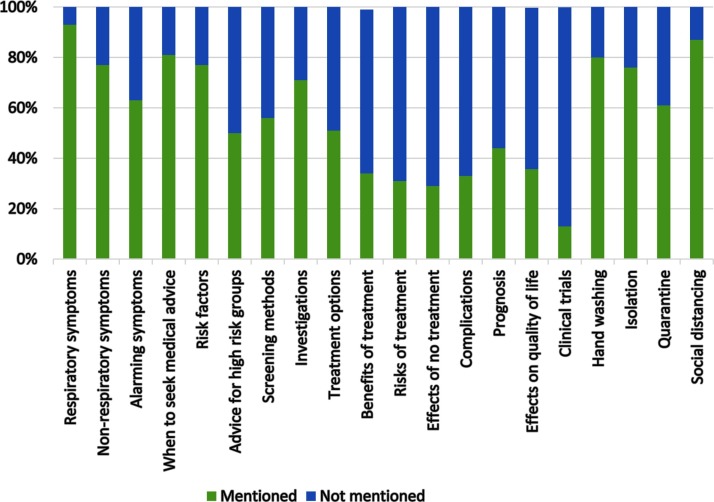

Figures A.1 and A.2 summarize the rating of websites on the individual criteria assessed by the DISCERN tool. The specific information provided regarding COVID-19 is shown in Fig. 1.

Fig. 1.

Website characteristics evaluated outside the DISCERN tool.

More than half of the websites failed to discuss the available treatment options (n = 46, 54.7 %), the benefits or risks of treatment (n = 54, 64.2 %), and the effects of no treatment (n = 51, 60.7 %). Furthermore, potential complications and prognosis were stated in only 28 (33.3 %) and 37 (44 %) websites, respectively.

4. Discussion and conclusion

4.1. Discussion

This study has shown that most websites providing online health information on COVID-19 are still of suboptimal quality, with the exception of a few credible sources of good-quality health information. Nevertheless, the top 10 websites ranked by DISCERN score (Table A.2) had high scores, indicating that it is possible to publish credible, high-quality information online that would benefit the public.

Misinformation is a major concern during this pandemic, as people often fail to spend adequate time critically analysing online information. This, in turn, causes panic, ranging from hoarding of medical supplies to panic buying and use of drugs without prescription, with negative social and medical consequences [19]. Therefore, measures implemented by the responsible authorities to ensure the quality and accuracy of online information may help mitigate these adverse consequences.

Stating the methods of content production, together with the names of the contributing authors, may help increase the credibility of online health information, while displaying the date of publication indicates the currency of the information. The absence of such information from over half of the websites was a major drawback, especially for COVID-19, where new information is generated almost daily. Health authorities should therefore ensure that patient information websites provide this information, and should certify websites based on such details so that the public can obtain information from trusted sources [20].

Most users of the World Wide Web have only an average level of education and reading skills [21]. Guidance from the National Institutes of Health (NIH) recommends that readability be below the seventh-grade level for the lay public to adequately understand the content [21]. However, the median readability in this study was equivalent to a 10th–12th grade level. Such complexity may increase the risk of misunderstanding or misinterpretation. Writing in short sentences, using the active voice, using a font size of 12 points or larger, using illustrations and non-textual media where appropriate, and accompanying explanations with examples may help to overcome this problem [22].

So far, only a limited number of studies have assessed the quality of health information websites related to COVID-19. The study by Cuan-Baltazar et al., conducted before February 2020, reported poor-quality information, with approximately 70 % of the included websites having low DISCERN scores [8]. Our study, conducted three months later, shows similar results, with only minimal improvement in the quality of information. In addition, the Cuan-Baltazar study had several limitations, including a limited search strategy and the omission of key quality parameters such as readability. Furthermore, 61 of the 110 (55.4 %) sites it included were online news sites, which are not considered patient information websites. In that study, the HON-code seal was present on only 1.8 % (n = 2) of websites, whereas in our study, 17.9 % of the sites were HON-code certified.

This study has several limitations. Although the most popular search engines were used under default settings, they may produce variable results depending on many factors, including geographical location and the popularity of websites at a given point in time. The algorithms of these search engines are subject to constant change; therefore, the exact results of our study may not be reproducible. However, we believe that the general patterns observed in our study are valid.

4.2. Conclusion

This study has shown that the information on COVID-19 on the majority of websites providing health information to the general public is of substandard quality, readability, usability, and reliability.

4.3. Practice implications

To improve the credibility of their content, websites should state their methods of content production and display the date of publication to indicate the currency of the information. To improve readability, websites should incorporate more non-textual media, write in short sentences, use the active voice, and use larger font sizes. Patient information websites should display reliability, quality, and readability scores as guidance for their users. Furthermore, it is vital for medical regulatory authorities and the government to impose regulations to ensure quality and to prevent the spread of misinformation.

Availability of data and materials

The data used in this study are available from the corresponding author on reasonable request.

Ethics approval and consent to participate

Not required for this type of study.

Informed consent and patient details

Not applicable in this type of study.

Funding

None.

CRediT authorship contribution statement

Ravindri Jayasinghe: Conceptualization, Methodology, Data curation, Writing - original draft, Visualization, Investigation, Validation, Formal analysis, Resources. Sonali Ranasinghe: Conceptualization, Methodology, Data curation, Writing - original draft, Visualization, Investigation, Validation, Formal analysis, Resources. Umesh Jayarajah: Conceptualization, Methodology, Data curation, Writing - original draft, Visualization, Investigation, Validation, Formal analysis, Resources. Sanjeewa Seneviratne: Conceptualization, Methodology, Writing - review & editing, Supervision, Project administration.

Declaration of Competing Interest

The authors report no declarations of interest.

Acknowledgements

None declared.

Footnotes

Supplementary material related to this article can be found in the online version at https://doi.org/10.1016/j.pec.2020.08.001.

Appendix A. Supplementary data

The following is Supplementary data to this article:

References

1. Borges do Nascimento I.J., Cacic N., Abdulazeem H.M. Novel Coronavirus infection (COVID-19) in humans: a scoping review and meta-analysis. J. Clinical Med. 2020;9(4). doi: 10.3390/jcm9040941.

2. Le H.T., Nguyen D.N., Beydoun A.S., Le X.T.T., Nguyen T.T., Pham Q.T., Ta N.T.K., Nguyen Q.T., Nguyen A.N., Hoang M.T. Demand for health information on COVID-19 among Vietnamese. Int. J. Environ. Res. Public Health. 2020;17(12):4377. doi: 10.3390/ijerph17124377.

3. Wang C., Pan R., Wan X., Tan Y., Xu L., McIntyre R.S., Choo F.N., Tran B., Ho R., Sharma V.K. A longitudinal study on the mental health of general population during the COVID-19 epidemic in China. Brain Behav. Immun. 2020. doi: 10.1016/j.bbi.2020.04.028.

4. Ho C.S., Chee C.Y., Ho R.C. Mental health strategies to combat the psychological impact of COVID-19 beyond paranoia and panic. Ann. Acad. Med. Singapore. 2020;49(1):1–3.

5. Wang C., Pan R., Wan X., Tan Y., Xu L., Ho C.S., Ho R.C. Immediate psychological responses and associated factors during the initial stage of the 2019 coronavirus disease (COVID-19) epidemic among the general population in China. Int. J. Environ. Res. Public Health. 2020;17(5):1729. doi: 10.3390/ijerph17051729.

6. Waidyasekera R.H., Jayarajah U., Samarasekera D.N. Quality and scientific accuracy of patient-oriented information on the internet on minimally invasive surgery for colorectal cancer. Health Policy Technol. 2020;9(1):86–93.

7. Jayasinghe R., Ranasinghe S., Jayarajah U., Seneviratne S. Quality of patient-oriented web-based information on oesophageal cancer. J. Cancer Educ. 2020. doi: 10.1007/s13187-020-01849-4. In press.

8. Cuan-Baltazar J.Y., Muñoz-Perez M.J., Robledo-Vega C., Pérez-Zepeda M.F., Soto-Vega E. Misinformation of COVID-19 on the internet: infodemiology study. JMIR Public Health Surveill. 2020;6(2). doi: 10.2196/18444.

9. Tran B.X., Ha G.H., Nguyen L.H., Vu G.T., Hoang M.T., Le H.T., Latkin C.A., Ho C.S., Ho R. Studies of novel coronavirus disease 19 (COVID-19) pandemic: a global analysis of literature. Int. J. Environ. Res. Public Health. 2020;17(11):4095. doi: 10.3390/ijerph17114095.

10. Eysenbach G., Köhler C. How do consumers search for and appraise health information on the world wide web? Qualitative study using focus groups, usability tests, and in-depth interviews. Brit. Med. J. 2002;324(7337):573–577. doi: 10.1136/bmj.324.7337.573.

11. Prasanth A.S., Jayarajah U., Mohanappirian R., Seneviratne S.A. Assessment of the quality of patient-oriented information over internet on testicular cancer. BMC Cancer. 2018;18(1):491. doi: 10.1186/s12885-018-4436-0.

12. Udayanga V., Jayarajah U., Colonne S.D., Seneviratne S.A. Quality of the patient-oriented information on thyroid cancer in the internet. Health Policy Technol. 2020.

13. National Institute for Health and Care Excellence. Coronavirus (COVID-19). 2020. https://www.nice.org.uk/covid-19 (Accessed 27 April 2020).

14. Readable. The Flesch Reading Ease and Flesch-Kincaid Grade Level. 2017. https://readable.com/blog/the-flesch-reading-ease-and-flesch-kincaid-grade-level/ (Accessed 6 February 2020).

15. Minervation. The Minervation Validation Instrument for Healthcare Websites (LIDA Tool). 2008. http://www.minervation.com/wp-content/uploads/2011/04/Minervation-LIDA-instrument-v1-2.pdf (Accessed 6 February 2020).

16. Minervation. Is the Lida Website Assessment Tool Valid? 2012. http://www.minervation.com/does-lida-work/ (Accessed 6 February 2020).

17. Discern Online. The DISCERN Instrument. 1997. http://www.discern.org.uk/discern_instrument.php (Accessed 6 February 2020).

18. Fahy E., Hardikar R., Fox A., Mackay S. Quality of patient health information on the Internet: reviewing a complex and evolving landscape. Australas. Med. J. 2014;7(1):24–28. doi: 10.4066/AMJ.2014.1900.

19. Kluger J. As Disinfectant Use Soars to Fight Coronavirus, So Do Accidental Poisonings. 2020. https://time.com/5824316/coronavirus-disinfectant-poisoning/ (Accessed 27 April 2020).

20. Tran B.X., Dang A.K., Thai P.K., Le H.T., Le X.T.T., Do T.T.T., Nguyen T.H., Pham H.Q., Phan H.T., Vu G.T. Coverage of health information by different sources in communities: implication for COVID-19 epidemic response. Int. J. Environ. Res. Public Health. 2020;17(10):3577. doi: 10.3390/ijerph17103577.

21. National Institutes of Health. How to Write Easy-To-Read Health Materials. 2015. https://www.scribd.com/document/261199628/How-to-Write-Easy-To-Read-Health-Materials-MedlinePlus (Accessed 27 April 2020).

22. D’Alessandro D.M., Kingsley P., Johnson-West J. The readability of pediatric patient education materials on the world wide web. Arch. Pediatr. Adolesc. Med. 2001;155(7):807–812. doi: 10.1001/archpedi.155.7.807.
