Abstract

Accurate segmentation of lungs in pathological thoracic computed tomography (CT) scans plays an important role in pulmonary disease diagnosis. However, it is still a challenging task due to the variability of pathological lung appearances and shapes. In this paper, we propose a novel segmentation algorithm based on random forest (RF), deep convolutional network, and multi-scale superpixels for segmenting pathological lungs from thoracic CT images accurately. A pathological thoracic CT image is first segmented based on multi-scale superpixels, and the deep, texture, and intensity features extracted from the superpixels are taken as inputs of a group of RF classifiers. By fusing the classification results of the RFs with a fractional-order gray correlation approach, we obtain an initial segmentation of pathological lungs. We finally utilize a divide-and-conquer strategy for segmentation refinement, combining contour correction of left lungs and region repair of right lungs. Our algorithm is tested on a group of thoracic CT images affected by interstitial lung diseases. Experiments show that our algorithm achieves a high segmentation accuracy, with an average DSC of 96.45% and PPV of 95.07%. Compared with several existing lung segmentation methods, our algorithm exhibits robust performance on pathological lung segmentation. Our algorithm can be employed reliably for lung field segmentation of pathologic thoracic CT images with high accuracy, which helps radiologists detect the presence of pulmonary diseases and quantify their shape and size in routine clinical practice.

Keywords: Pathological lung segmentation, Convolutional neural network, Random forest, Divide-and-conquer strategy

Introduction

Pulmonary disease is one of the major causes of morbidity and mortality around the world [1, 2]. For example, the recent global outbreak of COVID-19 has killed tens of thousands of people in just a few months. Early diagnosis of pulmonary disease with computed tomography (CT) is crucial for making treatment decisions. In non-invasive detection and diagnosis of pulmonary disease, accurate lung segmentation is often a prerequisite for assessing disease severity because it ensures that disease detection is not confounded by regions outside the lungs [2]. However, the inner structures of thoracic CT images vary widely in texture and pixel density. Additionally, the intensities of pathological images are inhomogeneous, and it is difficult to provide a reliable generic solution for a wide spectrum of lung abnormalities. Both factors make lung segmentation difficult.

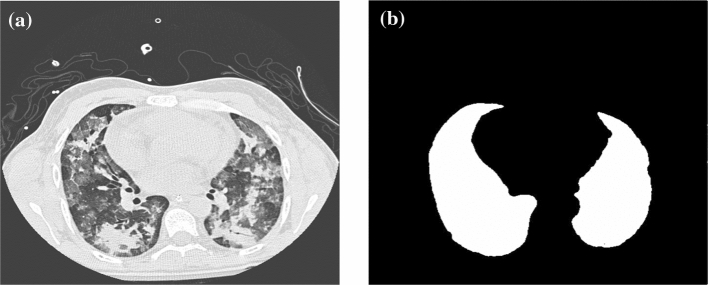

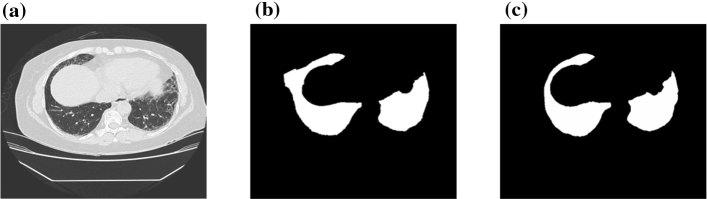

Lung segmentation refers to the computer-based process of identifying the boundaries of lungs from surrounding thoracic tissue on CT images [3] (see Fig. 1). In this paper, the key issue we tackle is segmenting pathological lungs from thoracic CT images accurately despite the distractions introduced by lung diseases and abnormalities. Thus, we propose a novel pathological lung segmentation algorithm based on random forest, deep convolutional network, and multi-scale superpixels. Our contributions in the paper are:

We propose a novel pathological lung segmentation algorithm combining three principal processes: feature extraction, classification fusion, and contour correction, which can generate more complete segmentations.

We put forward an effective classification fusion method based on a fractional-order gray correlation, which can produce more accurate fusion results in multi-scale classifications.

We present a new lung segmentation refinement approach based on a divide-and-conquer strategy of contour correction of left lungs and region repair of right lungs, which contributes to generating more accurate lung segmentations.

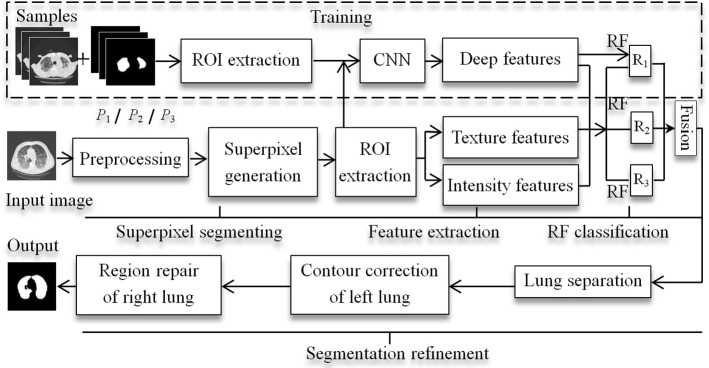

The remainder of this paper is organized as follows: in Sect. 2, we introduce the related research in lung segmentation, and in Sect. 3, we make a detailed description of our lung segmentation algorithm. In Sect. 4, we provide a set of experimental results and in Sect. 5 and Sect. 6, we make a discussion and summarize our algorithm. The basic pipeline of our algorithm is illustrated in Fig. 2.

Fig. 1.

Pathological lung segmentation. A pathological thoracic CT image (a) with its segmented lung mask (b)

Fig. 2.

The pipeline of our algorithm. A DCNN model is trained with samples captured from CT images and their ground truths. An input image is segmented into three groups of superpixels at three different scales. The deep, texture, and intensity features are extracted from each group of superpixels, which are classified with random forests (RFs). We fuse the three classification results and refine the fused segmentation result with a divide-and-conquer strategy and an information propagation mechanism

Related Work

Many methods have been proposed to segment lungs from thoracic CT images in the past decades. In [4], three thresholding-based approaches, connected threshold, neighborhood connected, and threshold level set, were applied to lung segmentation. Prabin et al. [5] segmented thoracic CT images using a region growing algorithm combined with a supervised contextual clustering technique. Mansoor et al. [6] segmented pathological lungs from CT scans by combining region-based segmentation with a local descriptor based classification. Chen et al. [7] segmented pathological lungs from 3D low-dose CT images using an eigenspace sparse shape composition by integrating a sparse shape composition with an eigenvector space shape prior model. Revathi et al. [8] introduced a pathological lung identification system, where FC was used for segmenting lungs with a diverse range of lung abnormalities, and RF was then applied to refine the segmentation by identifying pathological and non-pathological tissues according to features extracted from the gray-level co-occurrence matrix (GLCM), gray-level run length matrices, histograms, and so on. Soliman et al. [9] segmented pathological lungs from CT images based on a 3D joint Markov-Gibbs random field model which integrated the first-order visual appearance model, the second-order spatial interaction model, and a shape prior model. Hua et al. [10] used a graph search driven by a cost function combining the intensity, gradient, boundary smoothness, and rib information for pathological thoracic CT image segmentation. Hosseini-Asl et al. [11] proposed a nonnegative matrix factorization (NMF)-based pathological lung segmentation approach, which included three stages: removing the image background from CT images by a conventional 3D region growing method, modeling the visual appearance of the remaining chest-lung image with an NMF technique, and extracting 3D lung voxels by a two-step data clustering and a region map cleaning approach.
An automated lung segmentation algorithm combining unsupervised and supervised techniques was developed in [12]. The method used an unsupervised MRF technique to provide an initial estimate of lung borders, and a supervised texture analysis scheme based on an SVM classifier was then applied to search border regions and distinguish lung tissues from their surrounding tissues. Liu et al. [13] segmented lungs from CT images with a random forest (RF) classifier, where texture and intensity features extracted from superpixels were taken as the input of the RF classifier. Meng et al. [14] presented a lung segmentation algorithm based on anatomical knowledge and a snake model. By setting the snake model's initial curve on the rib edges in the thoracic CT image, their model could capture the concavities located on lung boundaries well. Abdollahi et al. [15] segmented multi-scale initial lungs from CT images using a linear combination of discrete Gaussian approach and a Markov-Gibbs random field (GMRF) model. The initial segmentations were fused together using a Bayesian approach to obtain the final segmentation of lung regions. Deep learning techniques have also generated remarkable results in image analysis [16, 17]. Harrison et al. [1] presented a bottom-up deep-learning-based approach unaffected by any variations in lung shape. In the method, a deep model, a progressive holistically-nested network, was used to produce finer detailed lung masks. Park et al. [18] employed a two-dimensional U-Net for lung parenchyma segmentation. Anthimopoulos et al. [19] used a deep purely convolutional neural network for the semantic segmentation of interstitial lung disease (ILD) patterns, which took lung CT images of arbitrary sizes as inputs and produced corresponding label maps.

In lung segmentation, manually crafted features such as shape, color, and/or texture play an important part in some algorithms. Nevertheless, such features are complementary in medical images and cannot by themselves represent high-level concepts of the problem domain [20]. Deep learning is often used to improve the descriptive ability of feature representations [21–23]. For example, Hong et al. [21] used deep neural networks to compute features for face pose estimation. Zhang et al. [22] extracted deep features with contractive autoencoders for unsupervised dimension reduction. A convolutional neural network (CNN) is capable of representation learning, where input information is extracted and filtered layer by layer. The convolutional layers of a CNN can be seen as feature extractors, which generate local features of image patches in each layer, and these features are finally combined to produce a global deep feature vector. Recent work demonstrated that 2D CT slices are expressive enough for segmenting complex organs [24]. Consequently, we fuse the deep features and low-level traditional features to characterize different regions of thoracic CT images and extract lung regions from CT images with RF according to the fused features. Furthermore, the lung segmentation is refined by contour correction and region repair.

Methods

The superpixel algorithm [25] can group pixels into perceptually meaningful atomic regions with similar features, such as intensity, texture, and so on. Approximately equally-sized superpixels with boundaries aligning to local image edges are more suitable for being taken as classification units than isolated image patches. Consequently, we take superpixels as classification units of deep convolutional neural network (DCNN) and RF classifiers for preserving lung contours well. Our lung segmentation algorithm mainly includes four steps: superpixel segmenting, feature extracting, RF classification, and segmentation refining.

Superpixel Segmenting

After being preprocessed with morphological filters [26], thoracic CT images are segmented into a group of superpixels with the simple linear iterative clustering (SLIC) approach [25]. SLIC employs a k-means clustering approach to efficiently generate superpixels of approximately equal size. The scale of the superpixels in an image depends on a user-specified parameter, P, the preset number of superpixels. Assuming the size of a thoracic CT image is Z, the scale of a superpixel is roughly $Z/P$. Hence, a smaller P produces larger-scaled superpixels, which are robust to noise and helpful for segmenting images with inhomogeneous intensities caused by pathologic tissues. Conversely, a bigger P generates smaller-scaled superpixels, which can capture detailed regions. Here, a thoracic CT image is segmented by SLIC with three different Ps. As a consequence, we obtain three groups of superpixels with three different scales.
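As a rough illustration of how P controls the superpixel scale, the sketch below computes the approximate superpixel area Z/P and the corresponding side length. The 512 × 512 slice size and the function name `superpixel_scale` are assumptions made for illustration only; the three values of P are those reported for Fig. 4.

```python
import math


def superpixel_scale(image_pixels: int, num_superpixels: int) -> tuple[float, float]:
    """Return (approximate area in pixels, approximate side length) of one superpixel."""
    area = image_pixels / num_superpixels
    return area, math.sqrt(area)


Z = 512 * 512  # assumed slice size, not stated in the paper
for P in (200, 300, 500):
    area, side = superpixel_scale(Z, P)
    print(f"P={P}: area ~ {area:.0f} px, side ~ {side:.1f} px")
```

A smaller P gives a larger area per superpixel, which matches the trade-off described above between noise robustness and the ability to capture detailed regions.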

Feature Extraction

We map the superpixels into the thoracic CT image and extract deep, texture, and intensity features from superpixels.

Deep Feature Extraction

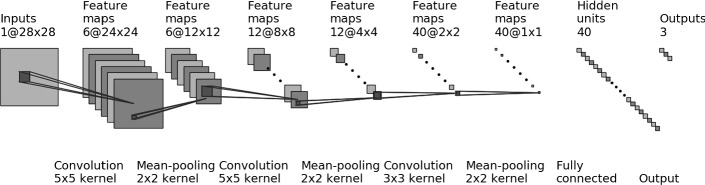

A CNN is a kind of deep neural network with multilayer perceptrons. It usually consists of an input layer and an output layer, as well as multiple hidden layers, typically including a series of convolutional layers, pooling layers, fully connected layers, and normalization layers [27]. Here, we construct an 8-layer DCNN model for deep feature extraction (Fig. 3).

Fig. 3.

Our DCNN consists of three convolutional layers, three pooling layers, one fully connected layer and one output layer

The outputs of the convolutional layers are all activated by a sigmoid function, $f(x) = 1/(1 + e^{-x})$.

Three kinds of regions, lungs, pleural tissues, and backgrounds, are captured from thoracic CT images according to their ground truths. They are resized and used for training and testing of our DCNN model. Finally, the output of the last mean-pooling layer, a feature vector of 40 elements, is extracted and taken as our deep features.
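To make the two operations named above concrete, the following minimal sketch applies a sigmoid activation to a toy convolution output and then mean-pools it. The 2 × 2 non-overlapping pooling window and the toy values are assumptions; the paper does not state the kernel or pooling sizes, so this is not the authors' DCNN.

```python
import math


def sigmoid(x: float) -> float:
    """Standard logistic sigmoid."""
    return 1.0 / (1.0 + math.exp(-x))


def mean_pool_2x2(fmap):
    """Non-overlapping 2x2 mean pooling on a 2D list with even height and width."""
    rows, cols = len(fmap), len(fmap[0])
    return [
        [
            (fmap[r][c] + fmap[r][c + 1] + fmap[r + 1][c] + fmap[r + 1][c + 1]) / 4.0
            for c in range(0, cols, 2)
        ]
        for r in range(0, rows, 2)
    ]


# Toy convolution output (hypothetical values).
conv_out = [[0.0, 2.0, -2.0, 0.0],
            [0.0, 2.0, -2.0, 0.0]]
activated = [[sigmoid(v) for v in row] for row in conv_out]
pooled = mean_pool_2x2(activated)
print(pooled)
```

In the model described here, the flattened output of the last mean-pooling layer would play the role of the 40-element deep feature vector.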

Texture Feature Extraction

GLCM is a widely used tool for statistical texture analysis and measurement. Its statistical characteristics are superior to those of fractal dimension, Markov model, Gabor filter, and so on [28, 29]. We extract 46 features from the GLCM, such as energy, contrast, homogeneity, and correlation, several of which are defined in terms of the mean and standard deviation of the GLCM.
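The four GLCM features named above can be sketched as follows. The formulas are the commonly used textbook definitions and are an assumption here, since the exact variants used in the paper (for example, the form of homogeneity) cannot be confirmed from the text; the pixel offset, the number of gray levels, and the toy patch are also illustrative.

```python
import math


def glcm(image, levels, dr=0, dc=1):
    """Normalized gray-level co-occurrence matrix for the pixel offset (dr, dc)."""
    counts = [[0.0] * levels for _ in range(levels)]
    rows, cols = len(image), len(image[0])
    total = 0
    for r in range(rows):
        for c in range(cols):
            r2, c2 = r + dr, c + dc
            if 0 <= r2 < rows and 0 <= c2 < cols:
                counts[image[r][c]][image[r2][c2]] += 1
                total += 1
    return [[v / total for v in row] for row in counts]


def glcm_features(p):
    """Energy, contrast, homogeneity, and correlation (assumed standard definitions)."""
    n = len(p)
    idx = [(i, j) for i in range(n) for j in range(n)]
    mu_i = sum(i * p[i][j] for i, j in idx)
    mu_j = sum(j * p[i][j] for i, j in idx)
    sd_i = math.sqrt(sum((i - mu_i) ** 2 * p[i][j] for i, j in idx))
    sd_j = math.sqrt(sum((j - mu_j) ** 2 * p[i][j] for i, j in idx))
    return {
        "energy": sum(p[i][j] ** 2 for i, j in idx),
        "contrast": sum((i - j) ** 2 * p[i][j] for i, j in idx),
        "homogeneity": sum(p[i][j] / (1 + (i - j) ** 2) for i, j in idx),
        # Undefined for a constant patch (zero standard deviation).
        "correlation": sum((i - mu_i) * (j - mu_j) * p[i][j] for i, j in idx) / (sd_i * sd_j),
    }


# Toy 4x4 patch quantized to 4 gray levels (hypothetical data).
patch = [[0, 0, 1, 1],
         [0, 0, 1, 1],
         [0, 2, 2, 2],
         [2, 2, 3, 3]]
features = glcm_features(glcm(patch, levels=4))
print(features)
```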

Besides GLCM, moment invariants have been extensively used to characterize the patterns of images in a variety of applications [30]. Hu [31] introduced seven moment invariants.

Moment invariants are robust to image scaling, translation, and rotation. Collectively, we obtain a total of 54 texture features (46 GLCM features, 7 moment invariants, and 1 entropy value) after adding image entropy [32] to the texture features.

Intensity Features

Since color information is mainly distributed in low-order moments, color moments have proved to be an effective tool for representing the color distribution in images [33]. Stricker et al. [34] introduced three color moments to represent the color distribution of an image: the first-order moment (mean), the second-order moment (variance), and the third-order moment (skewness), each computed per color channel over the N pixels of the image.
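A minimal sketch of the three moments for a single channel is given below. It assumes the usual central-moment formulation, with the second moment reported as a variance (as listed in Table 1) and the third moment reported as a signed cube root; whether the paper uses exactly these normalizations is an assumption.

```python
import math


def color_moments(pixels):
    """Return (mean, variance, skewness) of one channel given as a flat list of values."""
    n = len(pixels)
    mean = sum(pixels) / n
    variance = sum((p - mean) ** 2 for p in pixels) / n
    third = sum((p - mean) ** 3 for p in pixels) / n
    # Signed cube root keeps the sign of the third central moment.
    skewness = math.copysign(abs(third) ** (1.0 / 3.0), third)
    return mean, variance, skewness


# Toy grayscale values of one superpixel (hypothetical data).
values = [2, 4, 4, 4, 5, 5, 7, 9]
mean, variance, skewness = color_moments(values)
print(mean, variance, skewness)
```

For a grayscale CT slice there is only one channel, so this yields the three intensity features per superpixel.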

Here, we convert a CT image into a grayscale image and extract intensity features from it, and in total, we concatenate 97 features listed in Table 1.

Table 1.

Features characterizing thoracic CT images

| Types | Features |
|---|---|
| Deep features | Features extracted with DCNN model |
| Texture features | Features from GLCM, such as energy, contrast, homogeneity, correlation and so on; Moment invariants; Image entropy |
| Intensity features | Color moments: mean, variance, skewness |

Random Forest Classification

We capture the maximum inscribed square patch from each superpixel as a region of interest (ROI), resize it, and take it as the input of our DCNN model to extract deep features. Combining the deep, texture, and intensity features extracted from superpixels, a random forest (RF) [35] classifies the superpixels into three classes: lungs, pleural tissues, and image backgrounds.

The lung segmentation results in the initial classification with an RF are usually incomplete due to the existence of pathologic lung tissues. Because superpixels with different scales can cluster regions with different scales and details, we adopt the RF to classify multi-scale superpixels according to their features and fuse different segmentation results to get a relatively complete lung segmentation result. However, some image patches located at the same positions are assigned different classes in multiple classifications. In order to deal with the issue, we adopt a fusion technology based on a gray correlation algorithm instead of a simple and crude addition fusion.

The similarity of two data series can be determined by their slopes in corresponding periods according to the theory of gray correlation. If the slopes of two series are equal or similar in each period, the two series have a large correlation coefficient and vice versa. Thus, image patch similarity in terms of grayscale and texture is calculated by the gray correlation of two vectors.

Given two data series, the similarity between them is calculated as follows:

- Fractional accumulation [36]. We reduce the effect of noise by applying a fractional accumulation operator to each data series.
- Initialization. The purpose of initialization is to make the sequences comparable; each sequence is scaled using its mean value.
- Inverse accumulation. Inverse accumulation finds the slopes of the curve at each time point. Here, we use the absolute values of the differences of adjacent data in a data series to reflect the overall distribution trend of the series.
- Correlation coefficient calculation. The correlation coefficient is calculated by Eq. 7.
- Fractional-order gray correlation degree calculation. The fractional-order gray correlation degree of two data series is defined as Eq. 8.
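The five steps can be sketched as below. This is only an illustration under stated assumptions: the fractional accumulation uses the generalized binomial weights commonly associated with fractional-order accumulation, the initialization divides by the mean, and the correlation coefficient is taken as 1/(1 + |difference of slopes|). The last form in particular is a stand-in, since Eqs. 7 and 8 are not reproduced in the text, and the function names and the order r = 0.5 are hypothetical.

```python
def fractional_accumulate(series, r):
    """r-order accumulation: x_r(k) = sum_i C(k-i+r-1, k-i) * x(i); r = 1 is a cumulative sum."""
    n = len(series)
    coeffs = [1.0]  # generalized binomial weights, built iteratively
    for m in range(1, n):
        coeffs.append(coeffs[-1] * (r + m - 1) / m)
    return [sum(coeffs[k - i] * series[i] for i in range(k + 1)) for k in range(n)]


def gray_correlation_degree(x, y, r=0.5):
    """Similarity in (0, 1] of two equal-length series (length >= 2, non-zero mean)."""

    def slopes(series):
        acc = fractional_accumulate(series, r)            # step 1: fractional accumulation
        mean = sum(acc) / len(acc)
        scaled = [v / mean for v in acc]                  # step 2: initialization (assumed mean scaling)
        return [abs(b - a) for a, b in zip(scaled, scaled[1:])]  # step 3: inverse accumulation

    dx, dy = slopes(x), slopes(y)
    # Step 4: assumed coefficient; equal slopes give a coefficient of 1.
    coeffs = [1.0 / (1.0 + abs(a - b)) for a, b in zip(dx, dy)]
    # Step 5: degree as the average coefficient.
    return sum(coeffs) / len(coeffs)


a = [1.0, 2.0, 3.0, 4.0]
b = [4.0, 3.0, 2.0, 1.0]
print(gray_correlation_degree(a, a), gray_correlation_degree(a, b))
```

Two feature vectors with similar slope patterns score close to 1, which is the property the fusion step relies on when deciding between conflicting class labels.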

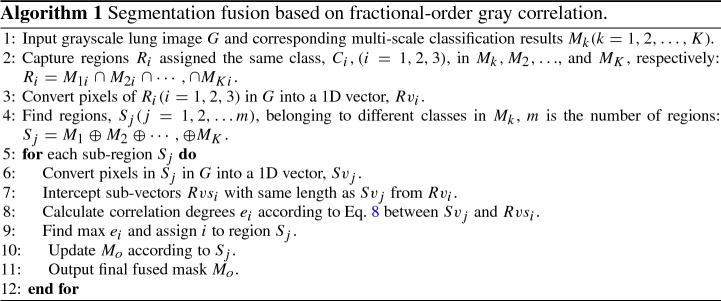

With the fractional-order gray correlation degree, we can distinguish any two image patches and assign them an appropriate class in classification. The segmentation fusion is described in detail in Algorithm 1.

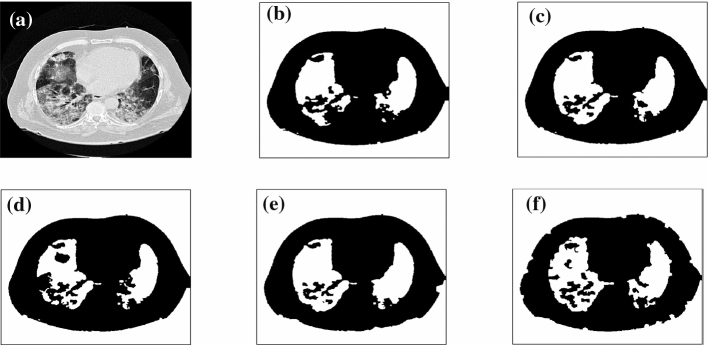

In Fig. 4, we set the numbers of superpixels, Ps, in the SLIC method to 200, 300, and 500, respectively, which generates three scales of superpixels. Because lung tissues and image backgrounds have low intensities, which are very different from pleural tissues with high intensities, we group lung tissues and image backgrounds into one class and pleural tissues into another class in the output of our algorithm. By fusing the different classification results with Algorithm 1, we obtain a relatively complete segmentation. Additionally, because we use three small-sized Ps, the time cost is much less than that with a single big-sized P. For example, in Fig. 4, the time cost of the fusion algorithm with the three Ps is 0.68 times that of a one-time segmentation with a big-sized P. At the same time, the fused segmentation is better than the one-time segmentation.

Fig. 4.

Segmentation fusion. We segment lungs from a pathologic thoracic CT image (a) and fuse the classification results of an RF at three superpixel scales (b), (c), and (d). Our fusion result (e) is more complete than the one-time segmentation with a single big-sized P (f)

We obtain the initial lung segmentation results by extracting lung regions from the fusion results of RFs with morphological operations.

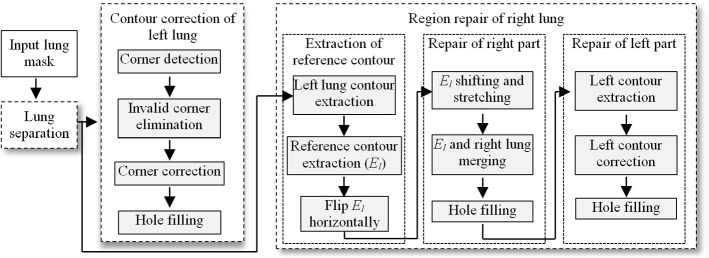

Segmentation Refinement

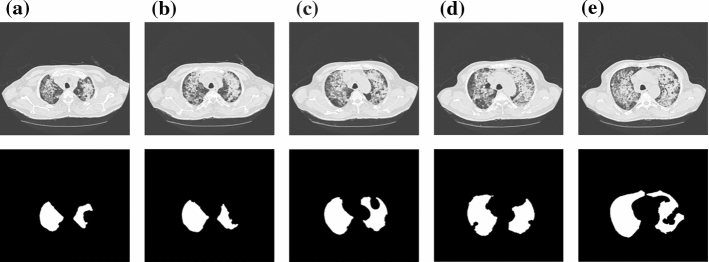

Pathologic lungs usually have densities similar to those of surrounding tissues, which often leads to incomplete segmentation of lung regions. Additionally, the left and right lungs, which are close to each other, are often connected in some segmentation results. To tackle these problems, we use a lung contour correction approach and a lung region repair method to refine segmentations after lung separation (see Fig. 5).

Fig. 5.

Segmentation refinement. It covers three main steps: lung separation, contour correction of left lung and region repair of the right lung

As shown in Fig. 6, in some pathological lung CT images, the right lung regions usually have larger defects and the left lung regions are relatively more complete. Accordingly, we adopt a divide-and-conquer strategy to refine segmentation results by combining contour correction of the left lung and region repair of the right lung. We first apply a contour correction approach based on the SUSAN operator to the left lung contour. Because the left lung and the right lung are approximately symmetrical and usually have similar contours in a thoracic CT image, the right lung region is then repaired under the supervision of the left lung contour. It should be noted that the refinement methods for the left and right lungs can be switched for other images. In either case, the first step of our segmentation refinement is to separate two connected lungs.

Fig. 6.

A set of lung segmentation results. The right lung regions usually have larger defects and the left lung regions are usually more complete in some initial lung segmentation results

Lung Separation

In a thoracic CT slice sequence, the left and right lungs are close to each other and may be connected in some slices of the corresponding segmentation sequence. Here, we utilize a separation line propagation approach: the connected lungs in a slice are separated by using the separation line of the preceding slice.

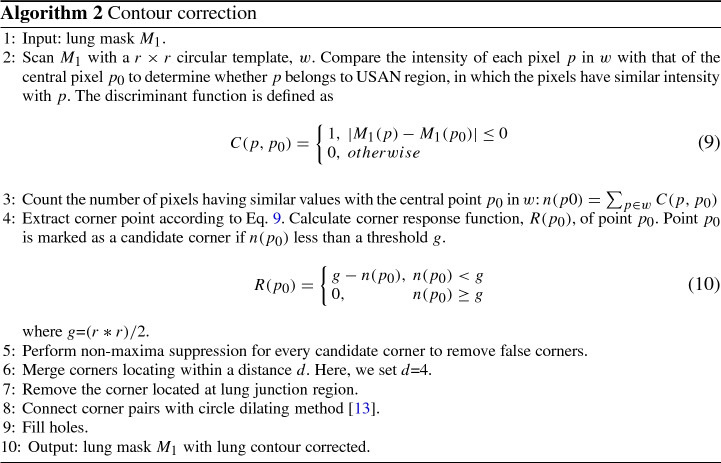

Contour Correction of Left Lung

The Smallest Univalue Segment Assimilating Nucleus (SUSAN) algorithm is a well-known corner detection technique. In order to repair lung contour concavities caused by pathological abnormalities, we use a contour correction approach based on the SUSAN operator [37], which covers three stages: candidate corner detection, valid corner filtering, and corner connection. We create a copy of the lung mask M, remove the right lung region from the copy, and retain the left lung. The main steps of contour correction are described in Algorithm 2.

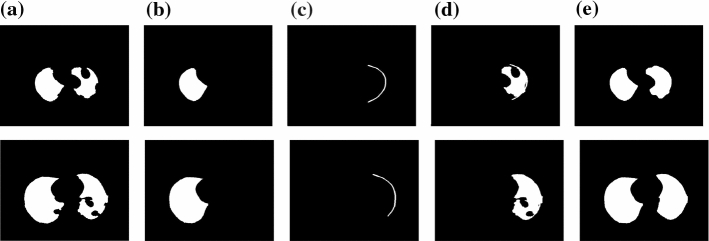

Region Repair of Right Lung

The left lung and right lung in a thoracic CT image usually have similar contours. Accordingly, instead of using the whole mask of the left lung to repair the right lung, we take a part of corrected left lung contour as the contour mask of the right lung. We divide the repair process into three stages: extraction of reference contour, repair of the right part, and repair of the left part of the lung.

- Extraction of reference contour
  - Create a copy of the lung mask M, remove the left lung region from the copy, and retain the right lung.
  - Extract the single-pixel-wide contour, E, of the corrected left lung.
  - Calculate the bounding box of the left lung and capture the left part of E according to the top and bottom points of the bounding box (see Fig. 7).
  - Extract the middle segment of this left part as the reference contour.
- Repair of right part
  - Flip the reference contour horizontally and move it to the right until it reaches the right boundary of the right lung.
  - Stretch the reference contour from its two endpoints according to the slope at each endpoint.
  - Merge the stretched reference contour with E.
  - Fill the holes of the merged result with morphological operations.
- Repair of left part
  - Calculate the bounding box of the right lung.
  - Extract the left part of the right lung according to the top and bottom points of this bounding box.
  - Correct the left part of the right lung contour with Algorithm 2.

After the contour correction of the left lung and region repair of the right lung, we merge their results and obtain the final refined segmentation.
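The flip-and-move step of the right-part repair can be illustrated with a few lines of coordinate arithmetic. This is a simplified sketch, not the authors' implementation: the contour is assumed to be a list of (x, y) pixel coordinates, and `flip_and_align` and the toy boundary value are hypothetical. Stretching, merging, and hole filling are omitted.

```python
def flip_and_align(contour, right_boundary_x):
    """Mirror a contour horizontally, then shift it so its right-most point lies on right_boundary_x."""
    xs = [x for x, _ in contour]
    x_min, x_max = min(xs), max(xs)
    # Mirror about the vertical axis through the middle of the contour's x-extent.
    flipped = [(x_min + x_max - x, y) for x, y in contour]
    shift = right_boundary_x - max(x for x, _ in flipped)
    return [(x + shift, y) for x, y in flipped]


# Toy reference contour bulging to the left (hypothetical data).
reference = [(10, 0), (8, 1), (7, 2), (8, 3), (10, 4)]
aligned = flip_and_align(reference, right_boundary_x=40)
print(aligned)
```

After the flip, the bulge faces right, so the reference contour can stand in for the missing outer boundary of the lung being repaired.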

Fig. 7.

Segmentation refinements. For initial segmentations (a), we extract left lungs and correct their lung contours with a corner detection-based method (b). We capture a segment of left part contours from the left lungs, stretch them (c) to supervise right lung correction (e). With contour correction, we obtain the final corrected results (f)

Experiments

Interstitial lung disease (ILD) is a leading cause of mortality and is characterized by widespread fibrotic and inflammatory abnormalities of the lungs [38]. The images used for training and testing our algorithm come from the ILD database [39], which contains high-resolution computed tomography (HRCT) image series with pathologically proven diagnoses of ILDs. Images that are difficult to segment by conventional methods are used to test the performance of our segmentation approach.

In the following, we first introduce metrics in our algorithm evaluation and then evaluate the influence of preset superpixel numbers. Finally, we perform our algorithm on a set of pathological lung CT images and analyze the experiment results.

Evaluation Method

The true negative rate (TNR), true positive rate (TPR), positive predictive value (PPV), accuracy (ACC), and error (Er) are used to evaluate our lung segmentation performance [40]. Four classical metrics [41], the over-segmentation rate (OR), under-segmentation rate (UR), Dice similarity coefficient (DSC), and Jaccard's similarity index (JSI), are also utilized for evaluating the performance of our algorithm. Larger values of TNR, TPR, PPV, ACC, DSC, and JSI and smaller values of Er, UR, and OR indicate more accurate segmentation of lung images.
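For reference, most of these metrics can be computed from pixel-level confusion counts as sketched below. The formulas for TNR, TPR, PPV, ACC, DSC, and JSI are the standard ones. Er = 1 − ACC and UR = FN / (TP + FN) are assumptions, chosen because they agree with Tables 2 and 3, where ACC + Er and TPR + UR each sum to 1 in every row. OR is omitted because its definition in [41] is not reproduced here. The counts in the example are hypothetical.

```python
def segmentation_metrics(tp, fp, tn, fn):
    """Compute overlap and classification metrics from pixel-level confusion counts."""
    total = tp + fp + tn + fn
    acc = (tp + tn) / total
    return {
        "TNR": tn / (tn + fp),
        "TPR": tp / (tp + fn),
        "PPV": tp / (tp + fp),
        "ACC": acc,
        "Er": 1.0 - acc,                      # assumed: complement of accuracy
        "DSC": 2 * tp / (2 * tp + fp + fn),
        "JSI": tp / (tp + fp + fn),
        "UR": fn / (tp + fn),                 # assumed: complement of TPR
    }


# Hypothetical counts for one segmented slice.
m = segmentation_metrics(tp=90, fp=5, tn=900, fn=5)
for name, value in m.items():
    print(f"{name}: {value:.4f}")
```

Note that DSC and JSI are linked by DSC = 2·JSI / (1 + JSI) for a single image, but this identity does not carry over to per-type averages such as those in Table 3.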

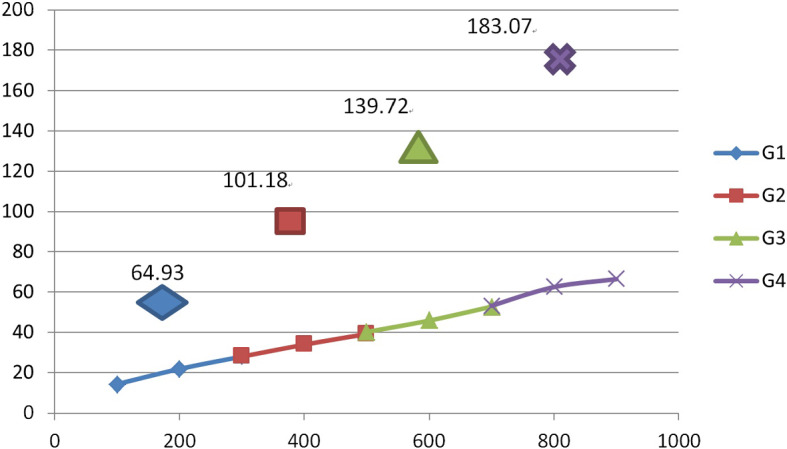

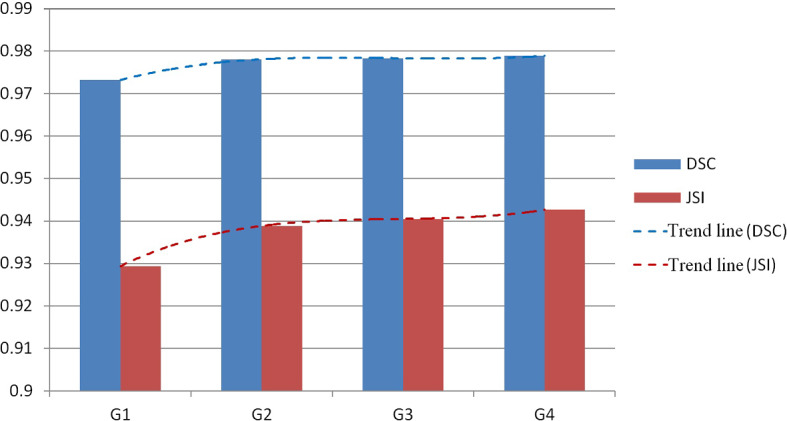

The Influence of Preset Superpixel Number

The preset superpixel number, P, greatly affects the efficiency and accuracy of our segmentation algorithm. In order to determine a suitable group of Ps, we randomly select 80 CT images affected with sarcoidosis to test the efficiency and accuracy of our algorithm with different Ps. We test four groups of three Ps (G1–G4), and the corresponding average time costs are depicted in Fig. 8. For example, the average time costs with P = 100, P = 200, and P = 300 are 14.2 s, 21.8 s, and 27.8 s, respectively, and the total time cost of this group is 64.93 s. The segmentation is implemented in the MATLAB environment on a computer with an Intel i7-6500U CPU and 16 GB of RAM. As can be seen, the time cost increases as P increases.

Fig. 8.

Time costs (s) of our algorithm on four groups of Ps

In Fig. 9, we show the performance of our algorithm with the four groups of Ps. The segmentation accuracies in terms of DSC and JSI increase as the Ps increase. Nevertheless, the DSCs are all above 97%. In order to reduce the time cost while keeping a high segmentation accuracy, P is set in the range from 100 to 700.

Fig. 9.

Performance of our algorithm in terms of DSC and JSI on four groups of Ps (G1–G4)

Result Analysis

We chose a challenging data set of thoracic CT images from the ILD database, containing density pathologies in varying degrees of severity, to test the performance of our lung segmentation algorithm: images affected with ground glass (GG), fibrosis (F), nodules (N), reticulation (R), and PCP (P). The number of tested images of each type ranged from 40 to 80. We list the average performance of our algorithm in terms of TNR, TPR, PPV, ACC, and Er in Table 2, and DSC, JSI, OR, and UR in Table 3. The R images have the highest segmentation accuracy (for example, a TPR of 0.985324) because of their relatively homogeneous intensities. Conversely, the P images have the lowest accuracy among our segmentations (a TPR of 0.861925) due to the severely uneven distribution of gray levels.

Table 2.

Metric values of segmentation performance of our algorithm in terms of TNR, TPR, PPV, ACC and Er

| Type | TNR | TPR | PPV | ACC | Er |
|---|---|---|---|---|---|
| GG | 0.993188 | 0.968209 | 0.956047 | 0.990638 | 0.009362 |
| F | 0.993118 | 0.975091 | 0.960319 | 0.991109 | 0.008891 |
| R | 0.994181 | 0.985324 | 0.968018 | 0.993790 | 0.006210 |
| N | 0.991803 | 0.971644 | 0.958587 | 0.989572 | 0.010428 |
| P | 0.990620 | 0.861925 | 0.910564 | 0.974644 | 0.025356 |

Table 3.

Metric values of segmentation performance of our algorithm in terms of DSC, JSI, OR and UR

| Type | DSC | JSI | OR | UR |
|---|---|---|---|---|
| GG | 0.973484 | 0.929578 | 0.047004 | 0.031791 |
| F | 0.977763 | 0.936948 | 0.042058 | 0.024909 |
| R | 0.983378 | 0.953926 | 0.034278 | 0.014676 |
| N | 0.976029 | 0.933042 | 0.043707 | 0.028356 |
| P | 0.911976 | 0.803924 | 0.085096 | 0.138075 |

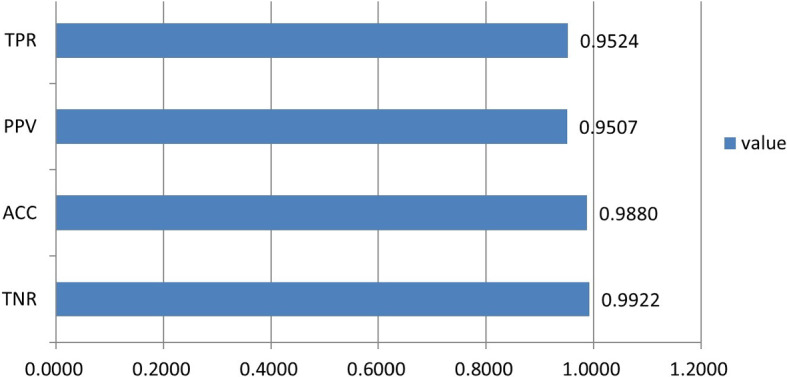

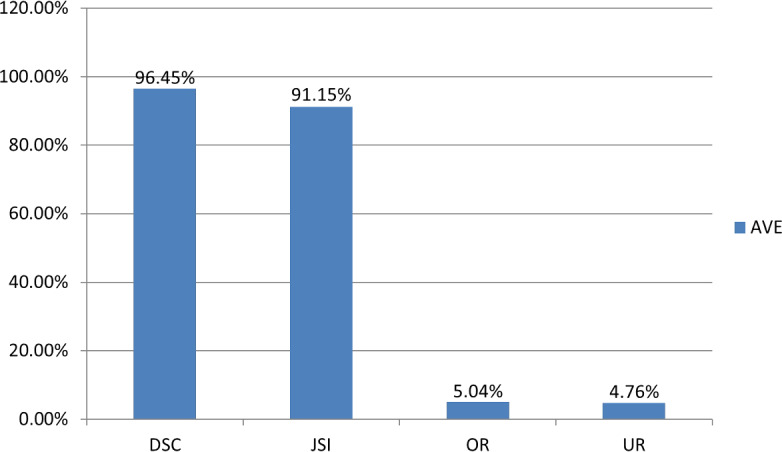

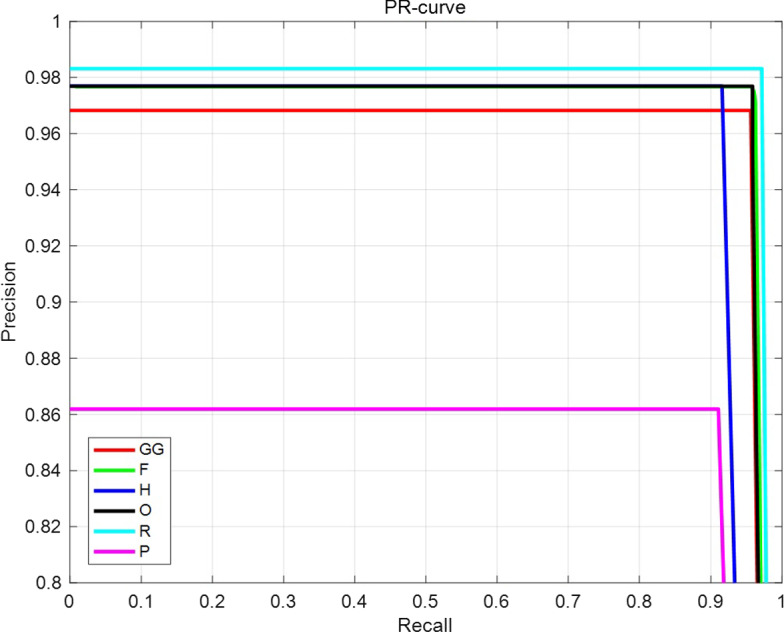

The average metric values (AVE) in terms of TNR, TPR, PPV, ACC, and Er are shown in Fig. 10, and the average DSC, JSI, OR, and UR in Fig. 11. It can be seen that the average ACC and DSC are 0.9880 and 0.9645, respectively. The average PR curve of the five types of pathologic lung images is shown in Fig. 12.
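The reported averages can be checked directly from the per-type rows of Tables 2 and 3. The short script below assumes an unweighted mean over the five image types; this is an assumption, since the number of images per type varies, but it reproduces the stated values.

```python
from statistics import mean

# Per-type values copied from Tables 2 and 3, in the order GG, F, R, N, P.
acc = [0.990638, 0.991109, 0.993790, 0.989572, 0.974644]
ppv = [0.956047, 0.960319, 0.968018, 0.958587, 0.910564]
dsc = [0.973484, 0.977763, 0.983378, 0.976029, 0.911976]

averages = {"ACC": mean(acc), "PPV": mean(ppv), "DSC": mean(dsc)}
for name, value in averages.items():
    print(f"{name}: {value:.4f}")
```

The unweighted means round to an ACC of 0.9880, a DSC of 0.9645, and a PPV of 0.9507, in agreement with the averages quoted in the text and the abstract.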

Fig. 10.

Average metric values of segmentation performance of our algorithm in terms of TNR, TPR, PPV, ACC

Fig. 11.

Average metric values of segmentation performance of our algorithm in terms of DSC, JSI, OR and UR

Fig. 12.

Average Precision-Recall curve

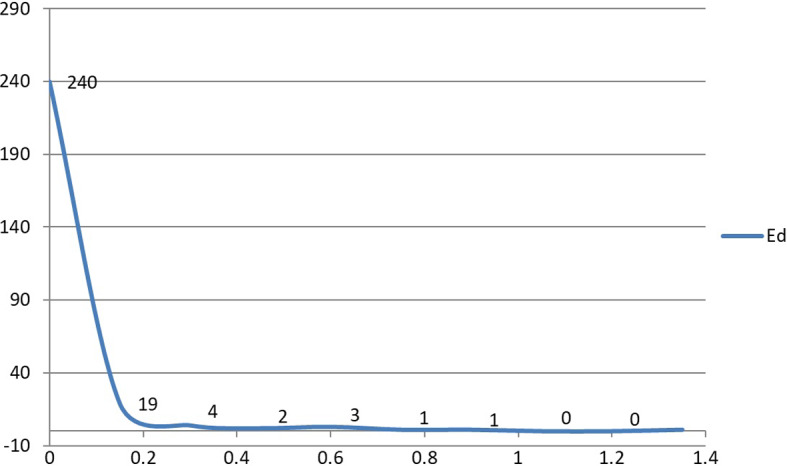

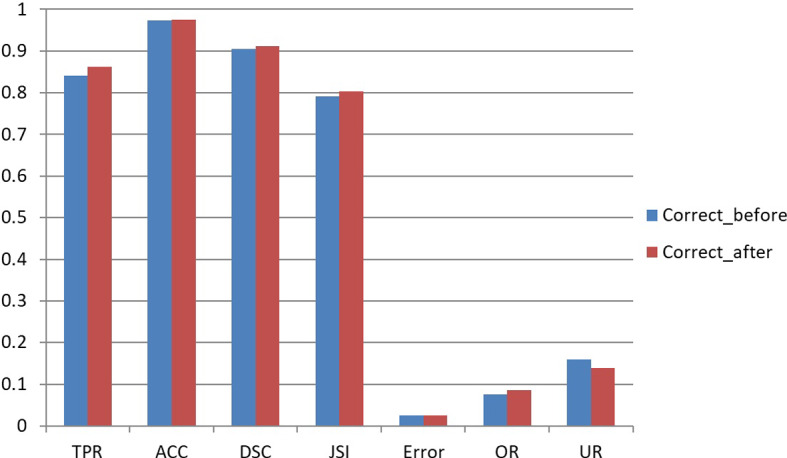

We randomly select 270 images from the above-mentioned five types of images, and their error distances are shown in Fig. 13. Error distances are mainly concentrated in the range (0, 0.2). The P images are usually degraded by pneumocystis pneumonia (PCP), and their intensities are inhomogeneous. Fig. 14 depicts a comparison of the segmentation accuracy of the initial segmentation and the refined segmentation of lungs in P images. It can be seen that the accuracies of the refined segmentation results in terms of TPR, ACC, DSC, and JSI are all higher than those of the initial segmentation results.

Fig. 13.

Error distance

Fig. 14.

Comparison of corrected and initial segmentation results of CT images of PCP

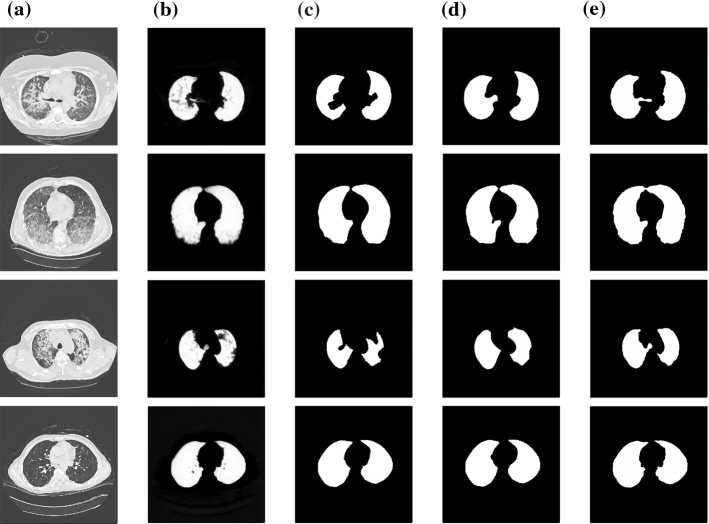

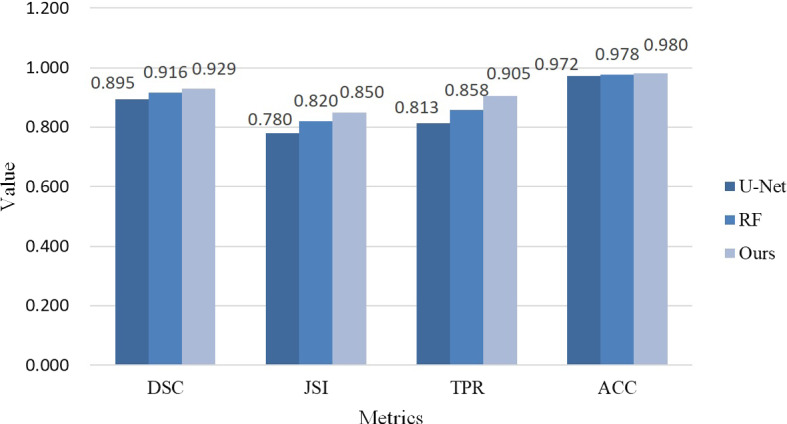

In Fig. 15, we show a group of segmentation results obtained with some state-of-the-art methods [13, 18]. It can be seen that the other methods cannot deal well with some images with inhomogeneous intensities. Conversely, our algorithm can segment more complete lungs from pathological lung CT images. In Fig. 16, we compare our algorithm with the other methods on a set of CT images affected with fibrosis and PCP. The results show that our algorithm achieves higher accuracies in terms of DSC, JSI, TPR, and ACC, which suggests that the segmentation ability of deep learning networks [18] on pathological lung CT images is hindered by the limited size of the training set (1000). The segmentation accuracy of the single-scale RF method [13] is also lower than ours on the whole. Our algorithm is relatively more robust on lung segmentation than the other methods because of the multi-scale classification fusion and effective post-processing operations.

Fig. 15.

Lung segmentations with different methods. a–e are original lung CT images, lung segmentation results with U-Net, random forest and our algorithm, respectively

Fig. 16.

Comparison of segmentation accuracy of different methods. Our algorithm achieves higher accuracy than other methods in terms of DSC, JSI, TPR, and ACC

Discussion

The fusion algorithm can generate high segmentation accuracy. However, it may generate a slightly higher OR, as shown in Fig. 11, due to two main factors. One factor arises from superpixel segmentation. We take superpixels as the input of the RF classifier. In order to accelerate our algorithm, we use three Ps with smaller values, which generates a group of multi-scaled superpixels with the SLIC approach. However, a bigger superpixel has difficulty capturing narrow regions and sometimes brings about a high OR (see Fig. 17b). Accordingly, the OR of the fusion result would be high if the multi-scale segmentation results were simply added together. In our algorithm, the correlation approach addresses this issue and reduces OR. The other factor lies in the region repair of the right lung supervised by the left lung contour, because over-segmentation of the left lung may propagate to the right lung. In order to overcome this issue, we capture a middle segment of the left lung contour and stretch it according to its local slope to match the right lung contour, which reduces the probability of wrong contour propagation, as well as OR.

Fig. 17.

An example of over-segmentation by our algorithm. For a thoracic CT image (a) with narrow lung regions, bigger-scale superpixels used in our multi-scale segmentation may result in over-segmentation (b) compared with the corresponding ground truth (c)

Conclusion

Pathologic thoracic CT image segmentation is a challenging issue because of the presence of inhomogeneous intensities and various abnormalities. In this paper, we introduce a novel pathologic lung segmentation algorithm based on the DCNN model and random forest. In our algorithm, two fusion operations enhance the performance of our segmentation algorithm. First, the fusion of deep, texture, and intensity features enriches the classification information, which contributes to the classification of random forest. Second, the fusion of classification results of random forest based on multi-scale superpixels can produce more accurate results than one-time segmentation with relatively low time cost due to small-sized Ps. In order to improve segmentation accuracy, we introduce a divide-and-conquer strategy for refining segmentation results by correcting and repairing the left and right lungs. In our future work, we will improve the fusion techniques, augment training samples, and further enhance the performance of our algorithm on pathologic lung segmentation.

Funding

The work in this paper was supported by grants from the National Natural Science Foundation of China [Grant No. 61702068], the Key Project of the Ministry of Education for the 13th Five-Year Plan of National Education Science of China [Grant No. DCA170302], the Social Science Foundation of Jiangsu Province of China [Grant No. 15TQB005], the Priority Academic Program Development of Jiangsu Higher Education Institutions [Grant No. 1643320H111], and the Postgraduate Research & Practice Innovation Program of Jiangsu Province [Grant No. KYCX19_0733]. The training and testing data in this paper come from the Multimedia database of interstitial lung diseases (http://medgift.hevs.ch/wordpress/databases/ild-database/).

Compliance with Ethical Standards

Conflict of interest

No potential conflict of interest was reported by the authors.

References

- 1.Harrison A, Xu Z, George K et al (2017) Progressive and multi-path holistically nested neural networks for pathological lung segmentation from CT images. In: International conference on medical image computing and computer-assisted intervention, pp 621–629

- 2.Mansoor A, Bagci U, Xu Z, et al. A generic approach to pathological lung segmentation. IEEE Trans Med Imaging. 2014;33(12):2293–2310. doi: 10.1109/TMI.2014.2337057.

- 3.Mansoor A, Bagci U, Foster B, et al. Segmentation and image analysis of abnormal lungs at CT: current approaches, challenges, and future trends. Radio Graph. 2015;35(4):1056–1076. doi: 10.1148/rg.2015140232.

- 4.Amanda A, Widita R. Comparison of image segmentation of lungs using methods: connected threshold, neighborhood connected, and threshold level set segmentation. J Phys. 2016;694(1):1–5.

- 5.Prabin A, Veerappan J. Automatic segmentation of lung CT images by CC based region growing. J Theor Appl Inf Technol. 2014;68(1):63–69.

- 6.Mansoor A, Bagci U, Mollura D (2014) Near-optimal keypoint sampling for fast pathological lung segmentation. In: International conference of the IEEE engineering in medicine and biology society, pp 6032–6035

- 7.Chen G, Xiang D, Zhang B, et al. Automatic pathological lung segmentation in low-dose CT image using eigenspace sparse shape composition. IEEE Trans Med Imaging. 2019;38(7):1–13. doi: 10.1109/TMI.2018.2890510.

- 8.Revathi T, Geetha P. Lung segmentation and classification for pathological lung identification. Int Conf Comput Power Energy Inf Commu. 2016;2016:148–153.

- 9.Soliman A, Elnakib A, Khalifa F, et al. Segmentation of pathological lungs from CT chest images. IEEE Int Conf Image Process. 2015;41631175:3655–3659.

- 10.Hua P, Qi S, Sonka M, et al. Segmentation of pathological and diseased lung tissue in CT images using a graph-search algorithm. From Nano to Macro. IEEE Int Symp Biomed Imaging. 2011;2011:1–4. doi: 10.1155/2011/572187.

- 11.Hosseini-Asl E, Zurada J, El-Baz A (2015) Automatic segmentation of pathological lung using incremental nonnegative matrix factorization. In: IEEE international conference on image processing, pp 3111–3115

- 12.Korfiatis P, Kalogeropoulou C, Daoussis D, et al. Exploiting unsupervised and supervised classification for segmentation of the pathological lung in CT. J Instr. 2009;4(07):1–5. doi: 10.1088/1748-0221/4/07/P07013.

- 13.Liu C, Zhao R, Pang M. Lung segmentation based on random forest and multiscale edge detection. IET Image Process. 2019;13(10):1745–1754. doi: 10.1049/iet-ipr.2019.0130.

- 14.Meng L, Zhao H. A new lung segmentation algorithm for pathological CT images. Int Jt Conf Comput Sci Optim. 2009;1:847–850.

- 15.Abdollahi B, Soliman A, Civelek A et al (2013) A novel Gaussian scale space-based joint MGRF framework for precise lung segmentation. In: IEEE international conference on image processing, pp 2029–2032

- 16.Yu J, Zhu C, Zhang J, et al. Spatial pyramid-enhanced NetVLAD with weighted triplet loss for place recognition. IEEE Trans Neural Netw Learn Syst. 2019;31(2):661–674. doi: 10.1109/TNNLS.2019.2908982.

- 17.Hong C, Yu J, Wan J, et al. Multimodal deep autoencoder for human pose recovery. IEEE Trans Image Process. 2015;24(12):5659–5670. doi: 10.1109/TIP.2015.2487860.

- 18.Park B, Park H, Lee S, et al. Lung segmentation on HRCT and volumetric CT for diffuse interstitial lung disease using deep convolutional neural networks. J Digital Imaging. 2019;32(6):1019–1026. doi: 10.1007/s10278-019-00254-8.

- 19.Anthimopoulos M, Christodoulidis S, Ebner L. Semantic segmentation of pathological lung tissue with dilated fully convolutional networks. IEEE J Biomed Health Inf. 2018;23(2):714–722. doi: 10.1109/JBHI.2018.2818620.

- 20.Lai Z, Deng H. Medical image classification based on deep features extracted by deep model and statistic feature fusion with multilayer perceptron. Comput Intell Neurosci. 2018;2018:1–13. doi: 10.1155/2018/2061516.

- 21.Hong C, Yu J, Zhang J, et al. Multimodal face-pose estimation with multitask manifold deep learning. IEEE Trans Ind Inf. 2018;15(7):3952–3961. doi: 10.1109/TII.2018.2884211.

- 22.Zhang J, Yu J, Tao D. Local deep-feature alignment for unsupervised dimension reduction. IEEE Trans Image Process. 2018;27(5):2420–2432. doi: 10.1109/TIP.2018.2804218.

- 23.Hong C, Yu J, Tao D, et al. Image-based three-dimensional human pose recovery by multiview locality-sensitive sparse retrieval. IEEE Trans Ind Electron. 2014;62(6):3742–3751.

- 24.Ronneberger O, Fischer P, Brox T (2015) U-net: convolutional networks for biomedical image segmentation. In: International conference on medical image computing and computer-assisted intervention, pp 234–241

- 25.Radhakrishna A, Appu S, Kevin S, et al. SLIC superpixels compared to state-of-the-art superpixel methods. IEEE Trans Pattern Anal Mach Intell. 2012;34(11):2274–2282. doi: 10.1109/TPAMI.2012.120.

- 26.Vincent L. Morphological grayscale reconstruction in image analysis: applications and efficient algorithms. IEEE Trans Image Process. 1993;2(2):176–201. doi: 10.1109/83.217222.

- 27.LeCun Y, Bottou L, Bengio Y, et al. Gradient-based learning applied to document recognition. Proc IEEE. 1998;86(11):2278–2324. doi: 10.1109/5.726791.

- 28.Ohanian R, Dubes R. Performance evaluation for four classes of textural features. Pattern Recogn. 1992;25(8):819–833. doi: 10.1016/0031-3203(92)90036-I.

- 29.Chang C, Wang L. Color texture segmentation for clothing in a computer-aided fashion design system. Image Vis Comput. 1996;14(9):685–702. doi: 10.1016/0262-8856(96)84492-1.

- 30.Huang Z, Leng J. Analysis of Hu’s moment invariants on image scaling and rotation. Int Conf Comput Eng Technol. 2010;7:476–480.

- 31.Hu M. Visual pattern recognition by moment invariants. IRE Trans Inf Theory. 1962;8(2):179–187. doi: 10.1109/TIT.1962.1057692.

- 32.Min B, Lim D, Kim S, et al. A novel method of determining parameters of CLAHE based on image entropy. Int J Softw Eng Appl. 2013;7(5):113–120.

- 33.Keen N (2005) Color moments. School of Informatics, University of Edinburgh, pp 3–6

- 34.Stricker M, Orengo M. Similarity of color images. Proc SPIE Storag Retriev Image Video Datab. 1995;2420:381–392. doi: 10.1117/12.205308.

- 35.Fernández-Delgado M, Cernadas E, Barro S, et al. Do we need hundreds of classifiers to solve real world classification problems? J Mach Learn Res. 2014;15(1):3133–3181.

- 36.Wu L, Liu S, Yao L. Discrete grey model based on fractional order accumulate. Syst Eng Theory Pract. 2014;34(7):1822–1827.

- 37.Smith S, Brady J. SUSAN–a new approach to low level image processing. Int J Comput Vis. 1997;23(1):45–78. doi: 10.1023/A:1007963824710.

- 38.Khanna D, Mittoo S, Aggarwal R, et al. Connective tissue disease-associated interstitial lung diseases (CTD-ILD) report from OMERACT CTD-ILD Working Group. J Rheumatol. 2015;42(11):2168–2171. doi: 10.3899/jrheum.141182.

- 39.Depeursinge A, Vargas A, Platon A, et al. Building a reference multimedia database for interstitial lung diseases. Comput Med Imaging Graph. 2012;36(3):227–238. doi: 10.1016/j.compmedimag.2011.07.003.

- 40.Brown J. Classifiers and their metrics quantified. Mol Inf. 2018;37(1–2):1–11. doi: 10.1002/minf.201700127.

- 41.Sahu S, Agrawal P, Londhe N, et al. A new hybrid approach using fuzzy clustering and morphological operations for lung segmentation in thoracic CT images. Biomed Pharmacol J. 2017;10(4):1949–1961. doi: 10.13005/bpj/1315.