Abstract

Implementing a digital mental health service in primary care requires integration into clinic workflow. However, without adequate attention to service design, including designing referral pathways to identify and engage patients, implementation will fail. This article reports results from our efforts designing referral pathways for a randomized clinical trial evaluating a digital service for depression and anxiety delivered through primary care clinics. We used three referral pathways: direct to consumer (e.g., digital and print media, registry emails), provider referral (i.e., electronic health record [EHR] order and provider recommendation), and other approaches (e.g., presentations, word of mouth). Over the 5-month enrollment period, 313 individuals completed the screen and reported how they learned about the study. Penetration was 13%, and direct to consumer techniques, most commonly email, had the highest yield. Providers referred only 16 patients through the EHR, half of whom initiated the screen. There were no differences in referral pathway based on participants’ age, depression severity, or anxiety severity at screening. Ongoing discussions with providers revealed that the technologic implementation and workflow design may not have been optimal to fully support the EHR-based referral process, which potentially limited patient access. Results highlight the importance of designing and evaluating referral pathways as part of service implementation to guide the implementation of digital services into practice. Doing so can ensure that sustained implementation is not left to post-evaluation bridge-building. Future efforts should assess these and other referral pathways implemented in clinical practice outside of a research trial.

Keywords: anxiety, depression, digital mental health, primary care, IT integration

Implication.

Practice: Integrating an innovative digital mental health service into clinical workflow benefits from designing several referral pathways to engage patients and providers.

Policy: When implementing digital mental health services in diverse care settings, implementation would benefit from policies that ensure available resources and implementation timelines accommodate monitoring and optimizing referral pathways over time.

Research: Future research should evaluate the effectiveness of diverse referral pathways for a digital mental health service when implemented in routine clinical practice rather than during a clinical trial.

Introduction

Depression is an impairing, costly illness [1–6] primarily managed in primary care [7,8]. Numerous controlled trials have demonstrated the efficacy of digital mental health interventions [9], making them ripe for implementation in diverse settings, such as primary care [10,11]. However, implementing a digital mental health service in clinical practice requires designing a service that integrates into the natural flow of patient care in a particular setting (i.e., clinical workflow). Alignment with the delivery model is a key organizational-level determinant for implementing digital technologies [12]. Thus, understanding contextual/organizational factors (e.g., clinic activities and associated processes), and designing a service to align with and/or support them, is an important facet of implementing health technologies in practice [13,14].

Service design refers to the entire process during which key stakeholders engage with the service, from the first point of contact through monitoring outcomes over time. One component of this is designing a referral pathway that effectively identifies and reaches patients with problems targeted by the service and connects them with the service in a manner that integrates into clinic workflow. Ideally, integrating into clinic workflow means making the service fit the processes, timing, and cognitive awareness involved in what providers and other stakeholders do regularly, leveraging tools they frequently use, and harnessing the electronic health record (EHR) [15]. However, mental health referral pathways within primary care generally have been poor [16] and have been failure points in the implementation of digital mental health [17,18].

We conducted a clinical trial to evaluate a digital service for depression and anxiety, known as IntelliCare [19,20], for implementation in primary care clinics. We assembled a multidisciplinary team (composed of clinical scientists and researchers, a designer, programmers, and a business executive) to work with stakeholders at the clinical site (composed of clinical scientists, providers, administrators, and clinical informaticists) to design and deliver the service, including the referral pathways and their integration across the targeted primary care clinics. Building on the expertise of members of our team in using diverse techniques to recruit and enroll participants into digital health research trials [21,22], the current trial provides a means to evaluate referral pathways for digital health in primary care. Although this work was conducted in the context of a research trial, it supports our overall aim of informing the implementation of effective referral pathways to digital mental health services in this clinical setting. We focus on referral pathways because this point-of-entry component of service design must function effectively for the service to succeed when implemented in routine practice.

Thus, the purpose of this article is to inform the implementation of referral pathways to services in primary care settings using the results of our efforts designing referral pathways to engage primary care patients and providers to enroll patients in a trial of a digital mental health service. We present results of (a) the yield of our referral pathways and the penetration rate of our trial; (b) whether referral pathways differed by individual characteristics; and (c) feedback from providers on the provider-facing referral pathway into the service/study. We close with a discussion of design considerations for future implementation in primary care settings.

Methods

This article presents an analysis of baseline data from a randomized controlled trial testing the efficacy of a digital service delivered through primary care clinics at the [University of Arkansas for Medical Sciences (UAMS)], an academic medical center in [Little Rock, Arkansas].

Setting

Primary care clinics run and staffed by the [UAMS] were included. Originally, we planned to target one on-site primary care clinic; we expanded to satellite clinics based on providers’ suggestions that this would match their workflow (e.g., the clinics share patients and the EHR). During the trial, these clinics integrated with other internal medicine clinics, which enabled offering our service across all primary care clinics affiliated with internal medicine at [UAMS].

Participants

This study examined all potential trial participants who completed a web-based screener. Criteria for trial inclusion included: registered primary care patient at [UAMS]; age ≥18; English-speaking; had a score ≥10 on the Patient Health Questionnaire-8 (PHQ-8) [23] or ≥8 on the 7-item Generalized Anxiety Disorder scale (GAD-7) [24,25] at screening; and had a compatible smartphone with a text message and data plan. Individuals were excluded if they exceeded the suicide risk criterion (i.e., had ideation, plan, and intent) at baseline; were currently receiving or planning to receive psychotherapy in the next 4 months; had a change in psychotropic medication in the past 14 days; had bipolar disorder, psychotic disorder, dissociative disorder, or any other psychiatric condition for which the treatment under study might not be appropriate; or had a visual, motor, or hearing impairment that prevented completing study procedures.

Procedure

Study enrollment occurred between July 17, 2018 and December 14, 2018. Recruitment occurred through three referral pathways, shown in Table 1. Recruitment materials indicated this was a research study of the IntelliCare service. Because enrolled individuals were randomized to IntelliCare or a waitlist condition (i.e., received IntelliCare after 8 weeks), interested patients and referring providers were informed that all eligible patients would be offered the intervention.

Table 1.

Referral pathways, strategies, sites, and techniques

| Pathway | Direct to consumer | | | | Provider referral | | Other | |
|---|---|---|---|---|---|---|---|---|
| Strategy | Digital | Registry | | Clinic | EHRa | Recommend | Other | Campus buzzb |
| Sites where delivered | Facebook, [UAMS] websites | [UAMS] Research Volunteer Registryc | [UAMS] Campus | [UAMS] Clinicsd | [UAMS] Clinicsd | [UAMS] Clinicsd | [UAMS] Campus | [UAMS] Campus |
| Techniques | Social media marketing; two online articles | Email to individuals interested in mental health research | Flyers; articles in [UAMS] newsletters | Email to patients with at least 1 primary care visit in last 12 mo; flyers and brochures in clinic rooms | Best practice alert and mental health order set following PHQ-9 screening; email through EHR in-basket | Provider recommendation to patient | Word of mouthb; self-referral to recruitment website | Presentations at two digital health events at [UAMS]; other (miscellaneous campus buzz) |

EHR, electronic health record; [UAMS], [University of Arkansas for Medical Sciences].

aDue to the complexity of building this technological feature, the EHR order and best practice alert were not released as a recruitment strategy until October 1, 2018 (2.5 mo into study enrollment).

bWord of mouth was coded separately from campus buzz when a person indicated that they heard about the study from a specific, named individual. Campus buzz referred to instances when a person indicated that they heard about the study through various discussions on campus that may not have been intended to recruit participants (e.g., presentations to [UAMS] providers).

cThe [UAMS] Research Volunteer Registry is supported by the university’s CTSA-funded Translational Research Institute.

d[UAMS] clinics include the on-site primary care clinic, affiliated satellite primary care clinics, and internal medicine clinics.

Study procedures occurred remotely via electronic communication or by telephone as needed. Interested individuals were directed to a recruitment website, which had information about the study, procedures, IntelliCare, mental health resources, and a link to the screen and informed consent form. Website content and study recruitment materials were developed with the [UAMS] Center for Health Literacy to ensure they were easy to use and understand and were tailored to the population, so that those interested could make informed decisions about this study/service.

From the recruitment website, interested individuals then completed an online screen and informed consent. Based on screen results and an EHR chart review, potentially eligible individuals were invited to complete an online baseline questionnaire. Individuals eligible for participation were randomized to the intervention or waitlist control conditions. Participants were followed for the subsequent 4 months. Participants could receive up to $100 for completing all five study assessments (i.e., at baseline and every 4 weeks through 16 weeks), but not for engaging with the intervention. This study was approved by the [UAMS] Institutional Review Board (IRB) and monitored by an independent Data Safety and Monitoring Board.

Primary care referral workflow

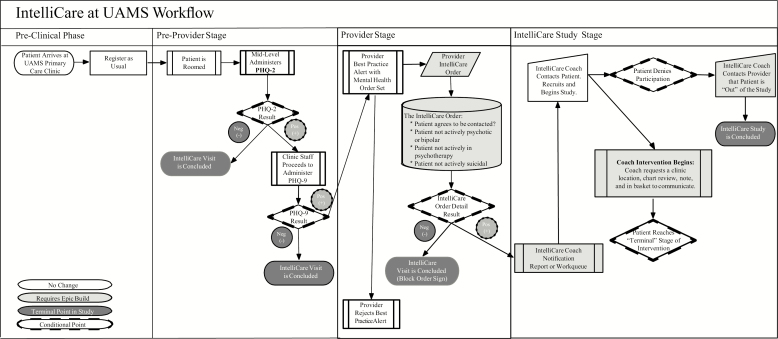

To encourage providers to recommend the IntelliCare service to their patients, we conducted several outreach activities with providers (e.g., campus presentations, providing flyers for clinic rooms). Providers were given information about the study and IntelliCare, including service descriptions, literature on previous research findings, and information on how to download the apps. We also engaged in discussions with providers to determine how the service could fit within their workflow, and with the clinical informatics team to determine what new builds within UAMS’s EHR software, Epic, would support providers’ workflow. The study team learned that it would be helpful to add EHR features that integrated with existing tasks in the typical workflow of providers’ visits with patients. The decision was to enable providers to recommend IntelliCare as an EHR order following administration of the PHQ-9 [26], the routinely administered depression screen in their clinics. Figure 1 shows the process workflow for how IntelliCare would be integrated within primary care. For patients who screened positive on the PHQ-9 (typically administered by clinic staff), a best practice alert was launched for the physician, along with a mental health order set to recommend the service as a treatment option. The order had four checkboxes for the provider to (a) determine whether the patient met certain study exclusion criteria, making it easier for the provider to know whether the patient would be ineligible to enroll so they could instead recommend a different resource for depression management, and (b) confirm that the patient was willing to be contacted about the study.

Fig 1.

Process workflow chart.
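The alert-and-order sequence described above can be summarized as a small decision function. This is a minimal sketch for illustration only, not the Epic build used in the trial: the positivity cutoff `PHQ9_POSITIVE_CUTOFF = 10`, the `Visit` fields, the checkbox labels, and the `route_patient` helper are all hypothetical, because the text does not state the PHQ-9 threshold or how the four checkboxes were labeled.

```python
from dataclasses import dataclass, field

# Hypothetical cutoff: the article does not state which PHQ-9 score counted as "positive".
PHQ9_POSITIVE_CUTOFF = 10


@dataclass
class Visit:
    phq9_score: int
    # Provider-checked boxes for study exclusion criteria (hypothetical labels).
    exclusion_flags: dict[str, bool] = field(default_factory=dict)
    willing_to_be_contacted: bool = False


def route_patient(visit: Visit) -> str:
    """Return the next step in this sketch of the primary care referral workflow."""
    if visit.phq9_score < PHQ9_POSITIVE_CUTOFF:
        return "no alert"  # negative screen: the best practice alert does not fire
    if any(visit.exclusion_flags.values()):
        return "recommend other depression resource"  # likely ineligible for the study
    if not visit.willing_to_be_contacted:
        return "no referral"  # patient did not agree to be contacted about the study
    return "refer to study team"  # order placed; study team follows up with the patient


print(route_patient(Visit(phq9_score=14, willing_to_be_contacted=True)))
```

Checking exclusions before willingness mirrors the order of purposes (a) and (b) in the text; a real build could ask both questions at once.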

Once the process workflow design was created, the best practice alert and order were built into the EHR. However, due to the complexity of building the logic of the new order within the preferred EHR workflow structure, the EHR features experienced significant delay, negatively affecting the timeline available for direct provider referral. The full EHR build was not released as a recruitment strategy until October 1, 2018 (2.5 months into study enrollment).

Assessing participants’ referral pathway

During the screen, participants were prompted to report their age, indicate whether they were a [UAMS] patient, and complete the PHQ-8 and GAD-7. Those who met study criteria associated with these constructs then were prompted to report basic contact information and how they heard about the study. The screen was discontinued for individuals who did not meet these entry criteria, such that not all who started the screen indicated how they heard about the study.

For some screen completers, we obtained or used additional referral information when it was available. We noted individuals whose self-reported email address matched their email address on the registry as a way to validate or supplement their self-report responses. However, we did not add these data for individuals whose screener cited an email address that was attached to another person on the registry (e.g., some individuals share emails), as we could not verify who received the email. We also tracked participants who received an EHR referral. Intervention coaches asked enrolled participants how they learned about the study during an onboarding phone call.

Participants’ referral pathways were categorized within a four-tiered system (Table 1). Tier 1 refers to the pathway. Tier 2 indicates the strategy used within that pathway. Tier 3 shows the sites at which the strategy was implemented. Tier 4 indicates the specific techniques used.

Assessing providers’ perspectives on the provider referral pathway

As part of implementation and design activities, we engaged providers in ongoing dialogue and collaboration. Provider feedback specific to the referral pathway was obtained by the study designer via notes from observations of one staff meeting, four semistructured individual interviews (approximately 20 min each) in which a provider referral technique was discussed, and informal interactions with clinical team members. Interviewees were a convenience sample of [UAMS] providers (three physicians, one behavioral health consultant) willing to engage in discussions to optimize an implementation plan; although questions were asked flexibly based on participants’ responses, the designer used a prompt about EHR integration. Across discussions, responses were consistent enough to give the research team ideas for next steps toward optimization, in line with the project goals.

Analyses

For aim one, we used descriptive statistics to determine the number of patients interested in the study via each referral pathway and associated recruitment technique. We quantified the success of two referral techniques (email and EHR referral) because we knew the number of individuals each technique directly reached. We calculated the trial penetration rate as the number of enrolled patients divided by the total number of [UAMS] primary care patients who had positive depression screens in the EHR during the enrollment period. (The [UAMS] clinics do not screen for anxiety as part of routine clinical practice; therefore, the penetration rate among potential patients with depression and/or anxiety could not be determined.) The denominator represented those for whom the intervention was clinically appropriate and therefore eligible for enrollment, as defined by Hermes et al. [27] and consistent with Proctor et al. [28]. For aim two, we used analysis of variance to evaluate differences in referral pathways by participants’ age, depression severity, and anxiety severity at screening, given that referral pathways may have differing yields based on individual characteristics. These analyses were performed using SPSS version 25; p values < .05 were considered statistically significant. For aim three, the designer aggregated and summarized responses on the provider referral pathway to yield key insights.
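The two quantitative definitions above (penetration rate and a one-way analysis of variance across referral pathways) can be written out in a few lines. This is a plain-Python sketch of the standard formulas, not the SPSS procedure the authors ran; the function names are ours, and obtaining a p value would additionally require the F distribution (e.g., from a statistics library).

```python
from statistics import fmean


def penetration_rate(n_enrolled: int, n_eligible: int) -> float:
    """Enrolled patients divided by patients for whom the service was clinically appropriate."""
    return n_enrolled / n_eligible


def one_way_anova_f(groups: list[list[float]]) -> tuple[float, int, int]:
    """Return (F, df_between, df_within) for a one-way ANOVA across independent groups."""
    all_values = [x for g in groups for x in g]
    grand_mean = fmean(all_values)
    means = [fmean(g) for g in groups]
    k, n = len(groups), len(all_values)
    # Between-group sum of squares: spread of group means around the grand mean
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    # Within-group sum of squares: spread of observations around their own group mean
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)
    df_between, df_within = k - 1, n - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Penetration with the counts reported in the Results (146 enrolled, 1,135 positive screens)
print(f"{100 * penetration_rate(146, 1135):.1f}%")
```

With four pathway groups (direct to consumer, provider referral, other, more than one pathway) and 313 screen completers, the degrees of freedom are (4 − 1, 313 − 4) = (3, 309), matching the F tests reported in the Results.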

Results

During enrollment, 435 individuals initiated the screen, of whom 313 completed the screen and reported how they heard about the study. One hundred forty-six individuals (34% of those assessed for eligibility) enrolled in the study and were randomized. Among the 313 screen completers, mean age was 41.35 years (SD = 13.41), mean depression score was 14.69 (SD = 4.81), and mean anxiety score was 13.61 (SD = 4.55). Compared with screen completers, screen noncompleters were slightly older (n = 117; mean = 44.68; SD = 15.48; t(185) = 2.06; p = .041) and had lower depression scores (n = 101; mean = 7.29; SD = 4.07; t(198) = −15.19; p < .001) and anxiety scores (n = 101; mean = 5.62; SD = 3.62; t(219) = −18.22; p < .001). The significant differences in depression and anxiety scores reflect study procedures in which the screen ended early for those with low scores on these measures.
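The article does not say which t-test was used, but the reported degrees of freedom for the age and depression comparisons appear consistent with Welch’s unequal-variance t-test computed from the group summary statistics. The sketch below assumes that test (an assumption, not a documented method) and recomputes the age comparison from the values in the paragraph above; `welch_t` is our own helper name.

```python
from math import sqrt


def welch_t(mean1: float, sd1: float, n1: int, mean2: float, sd2: float, n2: int) -> tuple[float, float]:
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom from summary statistics."""
    v1, v2 = sd1 ** 2 / n1, sd2 ** 2 / n2  # squared standard error of each group mean
    t = (mean1 - mean2) / sqrt(v1 + v2)
    df = (v1 + v2) ** 2 / (v1 ** 2 / (n1 - 1) + v2 ** 2 / (n2 - 1))
    return t, df


# Age: screen noncompleters (n = 117) vs completers (n = 313), values from the text
t, df = welch_t(44.68, 15.48, 117, 41.35, 13.41, 313)
print(f"t({df:.0f}) = {t:.2f}")  # prints: t(185) = 2.06
```

Because the inputs are rounded summary statistics, recomputed values for the other comparisons may differ slightly from the published ones.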

Over the enrollment period, 6,401 emails were sent to individuals on the primary care clinic patient list (comprising patients with ≥1 visit in the last year), and 1,351 emails were sent to individuals on the research volunteer registry. Providers (n = 7) placed 16 orders via the EHR.

Yield of the referral pathways

Table 2 shows the yield of the referral pathways by recruitment technique. Direct to consumer had the highest yield, followed by more than one pathway, provider referral, and other. Within the direct to consumer pathway, the most common technique was an email from the study team. Among those who learned about the study through more than one technique (either within the direct to consumer pathway or across more than one referral pathway), the most common combination was an email plus at least one other technique, most frequently provider recommendation.

Table 2.

Yield of recruitment techniques

| Technique | n (% of 313) |
|---|---|
| Direct to consumer referral pathway | 257 (82) |
| Facebook post | 1 (<1) |
| Online article | 0 (0) |
| Email from study team | 228 (73) |
| Research volunteer registry (n = 53) | — |
| Primary care clinic list (n = 150) | — |
| Both lists (n = 25) | — |
| Flyer | 14 (4) |
| Print article | 0 (0) |
| More than one technique | 14 (4) |
| Email + flyer (n = 12) | — |
| Email + online article (n = 1) | — |
| Email + Facebook post (n = 1) | — |
| Provider referral pathway | 14 (4) |
| Electronic health record order | 6 (2) |
| Provider recommendation | 8 (3) |
| Other referral pathway | 11 (4) |
| Word of mouth | 3 (1) |
| Self-referral | 0 (0) |
| Presentation | 1 (<1) |
| Other (campus buzz) | 7 (2) |
| More than one referral pathway | 31 (10) |
| Email + provider recommendation | 14 (4) |
| Email + electronic health record order | 2 (1) |
| Email + word of mouth | 3 (1) |
| Email + self-referral to recruitment website | 2 (1) |
| Email + presentation | 2 (1) |
| Email + campus buzz | 2 (1) |
| Provider recommendation + campus buzz | 1 (<1) |
| Two emails + provider recommendation | 2 (1) |
| Email + provider recommendation + word of mouth | 1 (<1) |
| Email + provider recommendation + campus buzz | 1 (<1) |
| Email + flyer + word of mouth + campus buzz | 1 (<1) |
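Each percentage in Table 2 is a count divided by the 313 screen completers, rounded to a whole percent, with values under 1% shown as "<1". The short sketch below (the helper name `format_pct` is ours) reproduces the pathway-level rows and checks that the four mutually exclusive pathway categories sum to the denominator.

```python
TOTAL = 313  # screen completers who reported how they heard about the study

# Pathway-level counts from Table 2; the four categories are mutually exclusive
pathway_counts = {
    "Direct to consumer": 257,
    "Provider referral": 14,
    "Other": 11,
    "More than one referral pathway": 31,
}


def format_pct(n: int, total: int = TOTAL) -> str:
    """Format n as a whole-number percentage of total, using '<1' below 1%."""
    pct = 100 * n / total
    return "<1" if 0 < pct < 1 else f"{pct:.0f}"


assert sum(pathway_counts.values()) == TOTAL  # every completer falls in exactly one pathway

for pathway, n in pathway_counts.items():
    print(f"{pathway}: {n} ({format_pct(n)})")
```

The same check applies one level down: the three email subgroups (53 + 150 + 25) sum to the 228 email-only referrals.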

Of the 7,752 total recruitment emails sent to patients, 272 (3.5%) led to a completed screen and 126 (1.6%) led to trial enrollment. Sixteen patients were referred via the EHR, of whom 8 (50%) completed the screen and 5 (31.2%) enrolled in the trial. Each of the 8 EHR-referred patients who did not initiate screening either told the research team they were not interested, did not initiate the screen after outreach attempts, or could not be reached. All EHR-referring providers were updated on their patients’ enrollment outcomes.
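The yields above use technique-specific denominators: email yield is relative to all recruitment emails sent (6,401 + 1,351 = 7,752), while EHR yield is relative to the 16 orders placed. A minimal sketch of that funnel arithmetic follows; the `conversion` helper and stage labels are ours, and the sketch treats each email as one contact, as the text does.

```python
def conversion(stage_counts: dict[str, int], denominator: int) -> dict[str, float]:
    """Percentage of the initial contact pool that reached each later stage."""
    return {stage: 100 * n / denominator for stage, n in stage_counts.items()}


email = conversion({"completed screen": 272, "enrolled": 126}, denominator=6_401 + 1_351)
ehr = conversion({"completed screen": 8, "enrolled": 5}, denominator=16)

for name, rates in (("Email", email), ("EHR order", ehr)):
    summary = ", ".join(f"{stage} {pct:.1f}%" for stage, pct in rates.items())
    print(f"{name}: {summary}")
```

Comparing the two rows shows why the pathways differ in character: email reached far more people at a low rate, whereas the few EHR orders converted at a much higher rate.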

During the enrollment period, 1,135 primary care patients were potentially eligible for the trial based on having positive depression screens in the EHR. Penetration was 13% (146/1,135).

Differences in referral pathways by individual characteristics

There were no significant differences in recruitment approach based on participants’ age (F(3,309) = 0.33; p = .80), depression severity (F(3,309) = 2.23; p = .08), or anxiety severity (F(3,309) = 2.00; p = .11) at screening.

Feedback from providers on the service design

Providers offered several insights into why the EHR order was underutilized. First, providers remarked that, despite advance discussions that informed how to map the EHR features to their workflow, in practice the timing of the order alert was not optimized for the desired workflow. Because depression screening occurred before the physician met with the patient, the alert and order were among the first notifications the physician received when opening the patient’s EHR chart. However, because the depression screen results may not have been the primary presenting problem, or were among the myriad presenting problems the physician needed to discuss with the patient, providers reported that the best practice alert and order were frequently overlooked during the clinic visit. Some providers also indicated that they did not refer to the study (a) because behavioral health services already were available or integrated within their clinic or (b) because they felt it was the role of a behavioral health provider, not the physician, to refer to this service. Finally, the delayed release of the EHR order within the study’s recruitment timeline reduced providers’ recall of the service.

At the same time, providers were enthusiastic about the service and the value it offered patients. For example, providers said that flyers in clinic rooms and hospital common areas (as well as email and word of mouth) prompted patients to initiate conversations about the study during their visits, which led to mental health discussions that might not otherwise have occurred.

Discussion

Organizational frameworks and implementation science approaches for digital health highlight the importance of studying contextual/organizational factors that impact technology use [13,14,29], which can inform conditions under which the technology works and guide its implementation into practice. Consistent with these recommendations, this article reports the results of our efforts designing referral pathways to engage primary care patients and providers with a digital mental health service as part of a clinical trial, to inform the design of effective referral pathways for implementation in primary care settings. Several key findings emerged.

First, we highlight the success of our referral pathways. In 5 months, 435 individuals initiated the screen, of whom 146 were eligible for and enrolled in the trial. Our penetration rate was 13%, which is higher than the 8% reach rate observed in a trial implementing a digital addiction intervention in primary care [11]. We also found some support for avoiding “one size fits all” approaches to engaging patients, as 14% reported learning about the study via multiple techniques. Although referral pathways might plausibly reach different individuals (e.g., individuals referred by their provider may have more severe depression or anxiety), individual characteristics did not differ significantly by referral pathway.

Direct to consumer techniques, most commonly emails, attracted more individuals to initiate the screen than provider referrals or other techniques. This may be due to several factors. For one, because our study had an enrollment target and limited timeline, our team controlled the rate at which we sent emails to potential participants, whereas providers communicated with patients only during clinic visits. As such, we could reach clinic patients rapidly, which was helpful given the demands of our timeline but has limited relevance to real-world implementation in primary care. It is possible that with a longer enrollment timeline and no enrollment cap, more patients would be referred by providers. Techniques in the “other” pathway, such as word of mouth or “campus buzz,” are relevant to future implementation but would likely benefit from a longer implementation period and possibly a larger sample. Furthermore, because our screening procedures occurred electronically, the email technique eliminated extra steps that individuals had to complete if they learned about the screen through nonelectronic techniques.

Yet, although email was the most commonly cited technique, <5% of patients targeted by email completed the screen or enrolled. This may be because our clinic registry comprised all patients with a primary care visit in the past year, rather than a targeted list of patients who screened positive for depression (due to IRB specifications to protect patients’ anonymity), and because we also emailed a research volunteer registry, which is not relevant to clinical practice. Thus, in future implementation, registries of patients with the targeted problem may result in higher yield.

Despite developing EHR features that aimed to align with providers’ workflow, provider referrals were substantially fewer than direct to consumer referrals. Many barriers have been cited that limit primary care providers’ willingness to engage patients in mental health research or digital mental health approaches, such as protecting the doctor–patient relationship, lack of confidence, skills, or experience, time constraints, and low priority within the visit [30,31]. Best practice alerts also have been ineffective in improving provider referrals [32,33]. In our study, providers indicated that the EHR referral may have been hindered by the technologic and workflow limitations of the order, despite our collaborating with providers to design the alert and order set. Although our design intended to link the service referral to activities providers were already doing in patients’ visits (i.e., depression screening), this did not consistently translate to clinical practice. Because medical visits are not always linear, it is difficult to design a referral workflow that functions across all visits. Some providers also felt that behavioral health specialists should oversee referrals for a depression service, suggesting that future implementation may benefit from more targeted engagement with behavioral health specialists in primary care.

Translational potential

Although this service was offered through a trial, this work has relevance to the design of referral pathways for future implementation of digital mental health services in primary care, as several aspects of our referral design seemed to facilitate a successful patient flow into the IntelliCare service. Consistent with recommendations for accelerating digital mental health research to promote successful implementation [34], our referral pathways were designed with stakeholders to fit the needs of the setting. We used multiple, site-specific recruitment techniques to engage patients and make providers aware of this service/study. We also used a tailored, user-friendly, and digestible recruitment website as the entry point, which provided clear descriptions of the study and IntelliCare service prior to entry. In fact, one indication that our approaches may have been more successful than those in previous research is that 34% (146/435) of assessed individuals in our trial enrolled over 5 months. By contrast, in a large clinical trial of a digital intervention for depression, 18% (405/2,244) of assessed individuals enrolled over 1 year [35], and in the field trial of IntelliCare, 23% (105/458) of assessed individuals enrolled over 1 year [20].

Many techniques could be harnessed beyond a research study for implementation in practice, while balancing the costs of each technique against its benefits to the care system. For example, it may be feasible to email patients who are identified by providers, given the proliferation of care managers and behavioral health specialists embedded in primary care who might support such an effort. Clinics with resources to build a referral order in the EHR could automate it for those who screen positive for depression, rather than relying on providers to order the referral. Digital and print media have minimal costs to produce, but we observed that these techniques had low yield. As was the case in this study, it may be difficult to assess the success of certain techniques: it is impossible to quantify how many people view flyers without sophisticated tracking equipment, and quantifying how many view digital advertisements requires access to and analysis of web data analytics that clinics may be unable to pursue. Presentations require presenters and attendees to devote time to the session. In this trial, we linked presentations to meetings providers already were attending (e.g., monthly meetings for residents) to integrate within routine clinic activities and avoid adding burden on providers. Finally, word of mouth or “campus buzz” techniques do not rely on resources to implement but are outside the control of an implementation team; however, any yield (i.e., benefit) is generated at zero cost. Taken together, future implementation needs to weigh the resources available to implement different referral pathways for identifying and engaging patients in a new service against their benefits, such as clinical benefit to the patient population and value to the care system.

An important consideration for future implementation of digital services is iterating on referral pathways over time to achieve optimization. Service design is based on user-centered design principles and methodologies, which involve working with stakeholders to iteratively create and test designs based on information about the users and contexts in which the service will be used. For example, in this study, our team worked with stakeholders to design how providers could recommend IntelliCare within their workflow. Once we learned that the EHR features did not consistently match providers’ workflows, a user-centered design approach would have entailed working with providers and informaticists to adapt the workflow design and EHR features. However, our enrollment timeline and limited resources to update EHR features did not allow for testing adapted service designs. Future implementation over a longer duration would allow for iterating on identified needs within the referral pathways to achieve an optimized service design.

Limitations

Despite our design of multiple referral pathways to engage patients and providers in a study of a digital mental health service, several limitations should be noted. The most notable limitation is that this service was delivered as a research study, so results may not generalize to nonresearch efforts, even though our inclusion criteria reflected our target population and aligned with the needs of the clinics (e.g., we intentionally used as few exclusion criteria as possible to ensure a generalizable sample and to allow as many appropriate patients as possible to engage with the service). Although referring providers knew the study criteria, study procedures may have deterred providers from referring patients and deterred patients from considering the study/service. Our assessment of certain study criteria may not reflect enrollment procedures in actual clinic settings and may have limited the number of individuals who might otherwise have been eligible. Second, the number of individuals who learned about the study through multiple sources may be higher than we captured, because the screener asked individuals to select only one referral pathway. Third, although we calculated patient penetration for our trial, we do not report other implementation outcomes, such as provider penetration, acceptability, feasibility, or cost, which are important outcomes for future research. Fourth, we were unable to assess individual differences in recruitment approaches based on factors such as gender and income, because these items were not assessed among all screen completers, and our analysis of individual differences was limited by small sample sizes in certain subgroups. Last, given our project goal of informing optimization of a digital service in primary care, the methodology of the provider feedback sessions may have been less formal or detailed than that of other qualitative research projects. We also did not conduct focus groups with patients to inform the design of the referral pathways.

Conclusions

We designed several referral pathways to engage patients and providers in a trial of a digital mental health service delivered through primary care clinics. This work can guide the implementation of technologies into practice by informing the conditions under which a digital service may be successfully integrated. Our results highlight the need to design and evaluate referral pathways within service implementation, which can ensure that sustained implementation of digital services is not left to post-evaluation bridge-building. Future efforts should assess these and other referral pathways when implemented in clinical practice outside of research.

Funding:

This work was supported by grants from the National Institutes of Health (R44 MH114725 and K01 DK116925) as well as by the Translational Research Institute (U54 TR001629) through the National Center for Advancing Translational Sciences of the National Institutes of Health.

Compliance with Ethical Standards

Conflict of Interest: The authors and this study were supported by an NIMH-funded SBIR award, which supports the goal of disseminating academically developed technology-based interventions and implementing them in real-world settings through a nonacademic entity, in this case, Actualize Therapy, Inc. Actualize Therapy has a license to the IP, which is owned by Northwestern University. P.L. is an employee, and H.B. and O.K. are former employees of this company. D.C.M. cofounded this company, and A.K.G. and S.M.K. have received consulting fees.

Authors’ Contributions: All authors were involved in the preparation of this manuscript and read and approved the final version.

Human Rights: All procedures performed in studies involving human participants were in accordance with the ethical standards of the institutional and/or national research committee and with the 1964 Helsinki declaration and its later amendments or comparable ethical standards.

Informed Consent: Informed consent was obtained from all individual participants included in the study.

Welfare of Animals: This article does not contain any studies with animals performed by any of the authors.

References

1. Loeppke R, Taitel M, Haufle V, Parry T, Kessler RC, Jinnett K. Health and productivity as a business strategy: A multiemployer study. J Occup Environ Med. 2009;51(4):411–428.
2. Herrman H, Patrick DL, Diehr P, et al. Longitudinal investigation of depression outcomes in primary care in six countries: The LIDO study. Functional status, health service use and treatment of people with depressive symptoms. Psychol Med. 2002;32(5):889–902.
3. Whooley MA, Simon GE. Managing depression in medical outpatients. N Engl J Med. 2000;343(26):1942–1950.
4. Rost K, Zhang M, Fortney J, Smith J, Coyne J, Smith GR. Persistently poor outcomes of undetected major depression in primary care. Gen Hosp Psychiatry. 1998;20(1):12–20.
5. Merikangas KR, Ames M, Cui L, et al. The impact of comorbidity of mental and physical conditions on role disability in the US adult household population. Arch Gen Psychiatry. 2007;64(10):1180–1188.
6. Simon G, Ormel J, VonKorff M, Barlow W. Health care costs associated with depressive and anxiety disorders in primary care. Am J Psychiatry. 1995;152(3):352–357.
7. Wang PS, Lane M, Olfson M, Pincus HA, Wells KB, Kessler RC. Twelve-month use of mental health services in the United States: Results from the National Comorbidity Survey Replication. Arch Gen Psychiatry. 2005;62(6):629–640.
8. Wang PS, Demler O, Olfson M, Pincus HA, Wells KB, Kessler RC. Changing profiles of service sectors used for mental health care in the United States. Am J Psychiatry. 2006;163(7):1187–1198.
9. Andrews G, Basu A, Cuijpers P, et al. Computer therapy for the anxiety and depression disorders is effective, acceptable and practical health care: An updated meta-analysis. J Anxiety Disord. 2018;55:70–78.
10. Raney L, Bergman D, Torous J, Hasselberg M. Digitally driven integrated primary care and behavioral health: How technology can expand access to effective treatment. Curr Psychiatry Rep. 2017;19(11):86.
11. Quanbeck A, Gustafson DH, Marsch LA, et al. Implementing a mobile health system to integrate the treatment of addiction into primary care: A hybrid implementation-effectiveness study. J Med Internet Res. 2018;20(1):e37.
12. Hermes EDA, Burrone L, Heapy A, et al. Beliefs and attitudes about the dissemination and implementation of internet-based self-care programs in a large integrated healthcare system. Adm Policy Ment Health. 2019;46(3):311–320.
13. Rippen HE, Pan EC, Russell C, Byrne CM, Swift EK. Organizational framework for health information technology. Int J Med Inform. 2013;82(4):e1–13.
14. Glasgow RE, Phillips SM, Sanchez MA. Implementation science approaches for integrating eHealth research into practice and policy. Int J Med Inform. 2014;83(7):e1–e11.
15. Menachemi N, Collum TH. Benefits and drawbacks of electronic health record systems. Risk Manag Healthc Policy. 2011;4:47–55.
16. Bower P, Gilbody S. Managing common mental health disorders in primary care: Conceptual models and evidence base. BMJ. 2005;330(7495):839–842.
17. Mohr DC, Weingardt KR, Reddy M, Schueller SM. Three problems with current digital mental health research . . . and three things we can do about them. Psychiatr Serv. 2017;68(5):427–429.
18. Bertagnoli A. Digital mental health: Challenges in implementation. In: American Psychiatric Association Annual Meeting. New York, NY; 2018.
19. Lattie EG, Schueller SM, Sargent E, et al. Uptake and usage of IntelliCare: A publicly available suite of mental health and well-being apps. Internet Interv. 2016;4(2):152–158.
20. Mohr DC, Tomasino KN, Lattie EG, et al. IntelliCare: An eclectic, skills-based app suite for the treatment of depression and anxiety. J Med Internet Res. 2017;19(1):e10.
21. Palac HL, Alam N, Kaiser SM, Ciolino JD, Lattie EG, Mohr DC. A practical do-it-yourself recruitment framework for concurrent eHealth clinical trials: Simple architecture (Part 1). J Med Internet Res. 2018;20(11):e11049.
22. Lattie EG, Kaiser SM, Alam N, et al. A practical do-it-yourself recruitment framework for concurrent eHealth clinical trials: Identification of efficient and cost-effective methods for decision making (Part 2). J Med Internet Res. 2018;20(11):e11050.
23. Kroenke K, Strine TW, Spitzer RL, Williams JB, Berry JT, Mokdad AH. The PHQ-8 as a measure of current depression in the general population. J Affect Disord. 2009;114(1–3):163–173.
24. Kertz S, Bigda-Peyton J, Bjorgvinsson T. Validity of the Generalized Anxiety Disorder-7 scale in an acute psychiatric sample. Clin Psychol Psychother. 2013;20(5):456–464.
25. Spitzer RL, Kroenke K, Williams JB, Lowe B. A brief measure for assessing generalized anxiety disorder: The GAD-7. Arch Intern Med. 2006;166(10):1092–1097.
26. Kroenke K, Spitzer RL, Williams JB. The PHQ-9: Validity of a brief depression severity measure. J Gen Intern Med. 2001;16(9):606–613.
27. Hermes ED, Lyon AR, Schueller SM, Glass JE. Measuring the implementation of behavioral intervention technologies: Recharacterization of established outcomes. J Med Internet Res. 2019;21(1):e11752.
28. Proctor E, Silmere H, Raghavan R, et al. Outcomes for implementation research: Conceptual distinctions, measurement challenges, and research agenda. Adm Policy Ment Health. 2011;38(2):65–76.
29. Cresswell K, Sheikh A. Organizational issues in the implementation and adoption of health information technology innovations: An interpretative review. Int J Med Inform. 2013;82(5):e73–e86.
30. Hoffman L, Benedetto E, Huang H, et al. Augmenting mental health in primary care: A 1-year study of deploying smartphone apps in a multi-site primary care/behavioral health integration program. Front Psychiatry. 2019;10:94. doi:10.3389/fpsyt.2019.00094
31. Mason V, Shaw A, Wiles N, et al. GPs’ experiences of primary care mental health research: A qualitative study of the barriers to recruitment. Fam Pract. 2007;24(5):518–525.
32. Zazove P, McKee M, Schleicher L, et al. To act or not to act: Responses to electronic health record prompts by family medicine clinicians. J Am Med Inform Assoc. 2017;24(2):275–280.
33. Fitzpatrick SL, Dickins K, Avery E, et al. Effect of an obesity best practice alert on physician documentation and referral practices. Transl Behav Med. 2017;7(4):881–890.
34. Mohr DC, Lyon AR, Lattie EG, Reddy M, Schueller SM. Accelerating digital mental health research from early design and creation to successful implementation and sustainment. J Med Internet Res. 2017;19(5):e153.
35. Buntrock C, Ebert DD, Lehr D, et al. Effect of a web-based guided self-help intervention for prevention of major depression in adults with subthreshold depression: A randomized clinical trial. JAMA. 2016;315(17):1854–1863.