Abstract

As ordinary citizens increasingly moderate online forums, blogs, and their own social media feeds, a new type of censoring has emerged wherein people selectively remove opposing political viewpoints from online contexts. In three studies of behavior on putative online forums, supporters of a political cause (e.g., abortion or gun rights) preferentially censored comments that opposed their cause. The tendency to selectively censor cause-incongruent online content was amplified among people whose cause-related beliefs were deeply rooted in or “fused with” their identities. Moreover, six additional identity-related measures also amplified the selective censoring effect. Finally, selective censoring emerged even when opposing comments were inoffensive and courteous. We suggest that because online censorship enacted by moderators can skew online content consumed by millions of users, it can systematically disrupt democratic dialogue and subvert social harmony.

Keywords: Censorship, Selective censoring, Identity politics, Moderators, Identity fusion, Social media

Highlights

- We use a novel experimental paradigm to study censorship in online environments.
- People selectively censor online content that challenges their political beliefs.
- People block online authors of posts they disagree with.
- When beliefs are rooted in identity, selective censoring is amplified.
- Selective censoring occurred even for comments without offensive language.

1. Introduction

In the run-up to the 2016 presidential elections, the moderators of a large online community of Trump supporters deleted the accounts of over 2000 Trump critics. The moderators even threatened to “throw anyone over our walls who fails to behave themselves” (Conditt, 2016). This phenomenon of silencing challenging voices on social media is not limited to the hundreds of thousands of designated moderators of online communities and forums; even ordinary citizens can delete comments on their own posts and report or block political opponents (Linder, 2016). To study this new form of censorship, we developed a novel experimental paradigm that assessed the tendency for moderators to selectively censor (a) content that is incongruent with their political cause (a political position or principle that people strongly advocate) and (b) the authors of such incongruent content. The studies also tested whether identity-related processes amplified the selective censorship of cause-incongruent content. Further, we tested whether the identity-driven selective censoring of political opponents' posts occurs even when opponents express their views in a courteous and inoffensive manner. To set the stage for this research, we begin with a discussion of past literature on biased exposure to online content.

1.1. Biased exposure to online content: selective information-seeking and avoidance

Behavioral scientists have long noted that people create social environments that support their values and beliefs (McPherson et al., 2001). People gravitate to regions, neighborhoods or occupations in which they are surrounded by individuals with similar personalities (Rentfrow et al., 2008) or political ideologies (Motyl et al., 2014). Once in these congruent environments, people are systematically exposed to information that aligns with their own views (Hart et al., 2009; Sears and Freedman, 1967). In addition, people actively display biases in behavior (e.g. choice of relationship partners) and cognition (e.g. attention, recall, and interpretation of feedback) that encourage them to see more support for their beliefs than is justified by objective reality (Garrett, 2008).

Parallel phenomena can occur in virtual worlds. People often find themselves in online bubbles of individuals who share political beliefs and information with each other but not with outsiders (Adamic and Glance, 2005; Barberá et al., 2015). They also actively seek websites or online communities that support their pre-existing opinions (Garimella and Weber, 2017; Iyengar and Hahn, 2009), and follow or connect with individuals whose opinions they endorse (Bakshy et al., 2015; Brady et al., 2017). And when they process information that they encounter, they display confirmation biases that warp their visions of reality (Hart et al., 2009; Van Bavel and Pereira, 2018). Some evidence also suggests that in addition to actively seeking attitude-consistent online content, people also avoid attitude-inconsistent content (Garrett, 2009a). Importantly, biases in information seeking are strongest for content related to political and moral issues (Stroud, 2017) and are most prevalent among those who have strong views or ideologies (Boutyline and Willer, 2017; Hart et al., 2009; Lawrence et al., 2010).

Although researchers have investigated biases in how people seek, consume, or avoid information in online contexts, to the best of our knowledge they have yet to examine how people might influence the content to which they and others are exposed through censorship. It is increasingly possible for individuals to censor others in online contexts by deleting others' comments on their own posts and pages (John and Dvir-Gvirsman, 2015; Sibona, 2014). For moderators of popular social media pages and large forums, the scope of their ability to censor is multiplied as they often exercise control over content that millions view (Matias, 2016a; Wright, 2006).

Censorship is more extreme than biased information seeking because, in addition to biasing one's own online environment, censorship delimits the online content that other people are exposed to. Also, by silencing dissenters, censorship prevents them from voicing their views. And although the psychological processes underlying censorship may overlap with some of the defensive motivations producing selective information seeking (Hart et al., 2009), censorship may in addition entail a hostile motivation to nullify opponents of the cause.

1.2. Censorship in offline and online environments

The majority of past studies on censorship have examined the association between political orientation and attitudes toward censorship. Whereas some studies have suggested that conservatives support censorship (Fisher et al., 1999; Hense and Wright, 1992; Lindner and Nosek, 2009), others have reported evidence of censorship by people on both sides of the political spectrum (Crawford and Pilanski, 2014; Suedfeld et al., 1994). One limitation of this work is that researchers have typically explored people's attitudes toward censorship rather than their censoring behaviors. Further, to our knowledge, no studies have systematically examined censoring behaviors in online settings.

As public pages and forums are increasingly moderated by everyday citizens (Matias, 2016a), the power to censor others is now widely available. For example, on the popular social media platform Reddit, almost 100,000 community moderators have the power to delete comments or entirely ban accounts associated with millions of users (https://mods.reddithelp.com/). Even internet users who have no particular stature within online communities are able to moderate other people's comments on their own posts and blogs. People can “report” social media posts they find disagreeable (John and Dvir-Gvirsman, 2015; Sibona, 2014) or simply delete or hide cause-incongruent comments on their own posts or blogs. Given that censoring in online contexts is easier (e.g., requires a single click) and may have fewer personal repercussions relative to offline contexts (e.g., more anonymity), it seems likely that online censoring will become increasingly prevalent. Here, we examine people's tendency to selectively censor content that is incongruent with a political cause they support.

1.3. Identity as a censorship amplifier

Not everyone will be equally motivated to selectively censor cause-incongruent content. For example, motivation to censor content will be particularly high when it challenges a political cause with which people's identities are strongly “fused” (Swann Jr et al., 2012). For people who are strongly fused with a cause, threats to the cause will feel like threats to the self. This will induce strongly fused people to be particularly reactive to threatening content (Gómez et al., 2011; Swann Jr et al., 2009). They may, for instance, go to great lengths to protect their group (Swann Jr et al., 2014) and may even attempt to inflict serious harm on threatening outgroups (Fredman et al., 2017). Therefore, we expect that strongly fused individuals would be especially apt to selectively censor incongruent content to preserve their cause against challenges.1

Although we focused primarily on identity fusion as a potential amplifier of censorship, we also investigated several other identity-related measures that have been associated with intolerance of political opposition. The literature on self and identity broadly suggests that people's social identities relating to political groups and causes are potent predictors of action intended to advance one's group or cause (e.g., Ashokkumar et al., 2019; Swann Jr et al., 2012; Tajfel and Turner, 1979) and counter opponents (Brewer, 2001; Fredman et al., 2017). In line with this reasoning, we investigated the effects of various other identity-related measures: indices of attitude strength, moral conviction, and identification with other supporters of the cause. Attitude strength and moral conviction are part of people's identities because their preferences and moral values are important parts of their self-related mental representations (McAdams, 1995). Past research on attitude strength has revealed that people who hold extreme views about a cause or whose views are associated with feelings of certainty and personal significance are intolerant of others with dissimilar attitudes (e.g., Singh and Ho, 2000; Singh and Teoh, 1999). Similarly, moral convictions reflect people's deeply held beliefs regarding the morality of a cause (Skitka and Mullen, 2002) and are known to predict an aversion to attitudinally dissimilar others (Skitka et al., 2005). Finally, we assessed participants' identification with cause supporters, since identification has been found to be a potent predictor of pro-cause action (Thomas et al., 2016). Although the foregoing variables have all been associated with intolerance of outgroups and are important components of people's identities (i.e., their mental self-representations), the causal, structural, and temporal relationships between these variables have not been clearly established. For example, it is unclear whether strong moral convictions cause greater group identification or the reverse (Van Zomeren et al., 2012; Zaal et al., 2017). Similarly, the temporal relationship between fusion with a cause and group identification is not clear (Gómez et al., 2019). Prior work has shown that identity fusion is associated with moralized attitudes (Talaifar and Swann Jr, 2019), but the causal relationship between these variables is unclear. Nevertheless, given that these variables have been found to predict a suite of behaviors related to intolerance of political opposition, we included them as potential predictors of selective censoring.

1.4. Overview of studies

The current research had two primary goals. First, we asked whether people assigned to moderate online content would selectively censor opposition to their political causes by deleting opposing comments and banning opponents from a forum. Second, we examined whether people whose cause-related beliefs were rooted in their identities would be especially likely to selectively censor incongruent content. In all studies, we recruited participants from the United States (US). Based on past reports that biases in information consumption are stronger for political and moral issues (Stroud, 2017), we focused on political causes that are deemed to have a moral component. Specifically, we chose abortion rights (Studies 1–2) and gun rights (Study 3) as the focal issues. We also selected these issues because they are highly controversial in the US, which raises the likelihood that most people would have relevant opinions. In fact, many believe that over the last half century these issues determined the outcome of multiple elections in the US (Leber, c., 2016; Riffkin, 2015).

All studies used a longitudinal design in which we measured all predictors at Time 1 (T1) and censoring at Time 2 (T2). At T1, we measured participants' position on an issue (e.g., abortion rights) and their identity fusion with the corresponding cause (e.g., pro-life or pro-choice cause). In Studies 2 and 3, we also measured other prominent identity-related measures, including strength of attitudes, moral conviction, and identification with cause supporters. As part of a seemingly unrelated study administered two weeks later (T2), we measured participants' censoring behavior using a novel simulation of an online forum. We sought participants' assistance in moderating the content of a putative online forum. Participants read comments and decided whether the comments needed to be retained or removed from the forum. Comments they chose to remove were considered “censored.” Each comment was systematically manipulated to be either congruent or incongruent with the participant's cause and either offensive or inoffensive. In Studies 2 and 3, we also asked participants whether the authors of the congruent and incongruent comments they read should be banned from the forum.

We operationalized selective censorship as either a preference for cause-congruent content or an intolerance of cause-incongruent content. We expected that cause supporters would selectively censor comments incongruent with their cause (Studies 1–3) and selectively ban the authors of those incongruent comments (Studies 2–3). We also expected that people whose identities were strongly aligned (“fused”) with the cause would be particularly likely to selectively censor incongruent comments (Studies 1–3) and selectively ban the authors of those comments (Studies 2–3). We examined whether the effect of fusion was influenced by the presence of offensive language in the comments (Studies 1–3) and also whether the effect generalized to an array of other identity-related measures (Studies 2–3). Further, in SOM-III we explored one potential mechanism driving the effect of fusion on selective censoring: strongly fused people's tendency to essentialize the cause. In all studies, we examined whether there were partisan differences in selective censoring (i.e., whether selective censoring was stronger among pro-life vs. pro-choice supporters in Studies 1 and 2, and among pro-gun-rights vs. pro-gun-control supporters in Study 3), and we report any asymmetries between the two sides. For all three studies, we report all measures, manipulations, and exclusions.

2. Study 1

2.1. Study 1 method

2.1.1. Time 1 (T1)

2.1.1.1. Participants

In August 2017, we recruited 477 participants from Amazon's Mechanical Turk (MTurk), an appropriate source of data for our purposes given that MTurkers routinely review comments by actual website moderators (Schmidt, 2015).2 Participants first indicated their position on the issue of abortion rights (pro-choice vs. pro-life vs. neither/don't know). Thirty-five participants who reported neutral or no views on abortion rights were not allowed to proceed because a person's pre-existing position on abortion rights needs to be known in order to identify which comments are congruent vs. incongruent with their cause. We removed 32 respondents with identical IP addresses or MTurk Worker IDs to eliminate the possibility of a single respondent completing the survey twice. We excluded four participants who failed our attention check (see SOM-I). Our final T1 sample had 406 participants (49.8% female; 71.6% White; M age = 36.06; SD age = 11.59; 274 pro-choice and 132 pro-life participants). The higher proportion of pro-choice participants is typical in liberal-skewed online crowdsourcing platforms such as MTurk (e.g., Ashokkumar et al., 2019). In this and all studies, sample size was determined prior to data analysis.

2.1.1.2. Identity measures

Participants completed the seven-item verbal fusion scale (α = 0.91, 95% CI = [0.89, 0.93]) measuring fusion with their cause (e.g., “I am one with the pro-life/pro-choice position”; Gómez et al., 2011). They also completed a five-item measure of the mediating mechanism explored in SOM-III: essentialist beliefs relating to the cause (α = 0.91, 95% CI = [0.90, 0.93]; e.g., “There are two types of people in this world: pro-life and pro-choice”), adapted from Bastian and Haslam (2006). Both constructs were rated on a seven-point scale ranging from 1 (Strongly Disagree) to 7 (Strongly Agree). We standardized the fusion and essentialism scores prior to analysis. Means, standard deviations, and inter-variable correlations in the final sample are reported in Table 1.

Table 1.

Means, standard deviations, and correlations of measures in Study 1 (N = 223).

| Variable | M | SD | 1 | 2 | 3 |
|---|---|---|---|---|---|
| 1. Fusion with cause | 4.71 | 1.39 | | | |
| 2. Censoring rate - congruent comments | 0.20 | 0.19 | −0.06 | | |
| 3. Censoring rate - incongruent comments | 0.26 | 0.22 | 0.13⁎ | 0.56⁎⁎ | |
| 4. Censoring rate - irrelevant comments | 0.34 | 0.16 | 0.12 | 0.56⁎⁎ | 0.51⁎⁎ |

Note. The censoring rates, ranging from 0 to 1, refer to the proportion of comments of each type (congruent, incongruent, or irrelevant) that participants censored. Fusion's effect on selective censoring is the difference between fusion's association with the censoring rates of congruent and incongruent comments. Fusion's effect was not influenced by position on abortion rights. * indicates p < .05. ** indicates p < .01.

Participants provided demographic information before completing the survey (see https://osf.io/4jtwk/?view_only=10627a9892464e5aa90fe92360b846ad for a full list of measures). At the end of the study, participants learned that they might be contacted again for other studies. We did not specify when or why we would re-contact them because we wanted to discourage them from associating the first session of the study with the second.

2.1.2. Time 2 (T2)

2.1.2.1. Participants

Two weeks later we re-contacted the participants regarding a seemingly unrelated “comment moderation task.” A total of 251 participants completed the second session of the study, amounting to a 38.2% attrition rate, which is comparable to previously reported attrition rates on MTurk (Stoycheff, 2016). There were no differences in fusion (t(400) = −0.19, p = .85, d = −0.02) between those who did vs. did not complete the second session of the study. We excluded 25 respondents with identical IP addresses or MTurk worker IDs and three participants who evaluated fewer than 50% of the comments in the comment moderation task, resulting in a final sample of 223 participants (52% female; 71.8% White; M age = 38.36; SD age = 11.99; 148 pro-choice and 75 pro-life participants) who completed both time points. We were unable to conduct an a priori power analysis because the lack of previous research on censoring made it difficult for us to estimate expected path coefficients, which are required for power analyses for Structural Equation Models (SEM; Muthén and Muthén, 2012). To give a general sense of how much power we had with the present sample size, we conducted a sensitivity analysis, which revealed that the sample had 80% power to detect a minimum effect size of f² = 0.04 in a multiple regression.

2.1.2.2. Comment moderation procedure

In the comment moderation task, participants read about a new blog purportedly launched with the goal of “encouraging discussion about current issues.” We informed participants that we had received complaints regarding a surge in inappropriate comments posted on the blog and that we needed their help in deleting inappropriate comments. To make sure that participants took the task seriously, we informed them that the blog's administrator would delete all comments that they flagged. Participants then read a series of 40 statements that were adapted from comments from real online blogs and forums. Of the 40 comments, 15 were pro-choice (e.g.: “I love that even though Norma couldn't herself get an abortion (because of the terrible world we live in), she fought so hard to make sure other women could.”), 15 were pro-life (e.g.: “I love that Lily didn't have an abortion even though she didn't want to be a parent. She hadn't planned a baby and wasn't ready for it, but she didn't get an abortion.”), and 10 were irrelevant to the cause (e.g.: “I still can't wrap my head around this horrific, senseless act. Sickening.”). Participants could recommend either deletion or retention of each comment. The full list of comments is available at https://osf.io/4jtwk/?view_only=10627a9892464e5aa90fe92360b846ad.

For each participant, we calculated three censoring rates corresponding to the proportion of comments that the participant deleted among (a) congruent comments (i.e., comments endorsing the participant's position on abortion rights), (b) incongruent comments (i.e., comments against the participant's position on abortion rights), and (c) irrelevant comments (i.e., comments irrelevant to abortion rights). The three censoring rates were inter-correlated (see Table 1), which indicates that individual differences in people's general tendency to censor were relatively stable across comments.
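The three censoring rates described above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' analysis code: the function name `censoring_rates`, the stance labels, and the `(stance, deleted)` record layout are all assumptions made for the example.

```python
from collections import defaultdict

def censoring_rates(decisions, participant_position):
    """Proportion of comments deleted within each category.

    decisions: list of (comment_stance, deleted) pairs, where comment_stance is
    "pro-choice", "pro-life", or "irrelevant" (hypothetical labels) and deleted
    is True when the participant recommended deletion.
    """
    counts = defaultdict(lambda: [0, 0])  # category -> [deleted, evaluated]
    for stance, deleted in decisions:
        if stance == "irrelevant":
            category = "irrelevant"
        elif stance == participant_position:
            category = "congruent"
        else:
            category = "incongruent"
        counts[category][1] += 1
        if deleted:
            counts[category][0] += 1
    return {cat: n_deleted / n_seen for cat, (n_deleted, n_seen) in counts.items()}

# Hypothetical pro-choice participant who saw 15 + 15 + 10 comments.
example = (
    [("pro-choice", True)] * 3 + [("pro-choice", False)] * 12
    + [("pro-life", True)] * 6 + [("pro-life", False)] * 9
    + [("irrelevant", True)] * 4 + [("irrelevant", False)] * 6
)
rates = censoring_rates(example, "pro-choice")
```

Because each rate is computed only over comments the participant actually evaluated, skipped comments would simply be left out of the input list under this sketch.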

2.1.2.3. Post-hoc assessment of comment offensiveness

To determine whether strongly fused people's tendency to selectively censor incongruent comments depended on whether the comments included offensive language, we asked five objective judges from MTurk to provide post-hoc ratings of each comment's offensiveness. Of the five judges, two were pro-choice, two were pro-life, and one was neutral (i.e., did not favor either side of the abortion debate). The judges were told that offensive comments were those that “a reasonable person would consider to be abusive, harassing, or involving hate speech or ad hominem attacks.” The inter-judge reliability across the five judges was α = 0.84. We coded each comment as offensive or inoffensive based on the judges' majority opinion (see SOM-I for more details). The offensive vs. inoffensive classification generated from the post-hoc pilot was then applied in the selective censoring analyses.3 For each participant, we computed four censoring rates corresponding to the proportion of comments that the participant censored among comments of four categories: Offensive-Congruent, Offensive-Incongruent, Inoffensive-Congruent, and Inoffensive-Incongruent.
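As a rough sketch of the coding step above, the snippet below assigns a comment to one of the four categories from the judges' majority opinion. It assumes each judge gives a binary offensive/inoffensive vote, which the text does not confirm (SOM-I has the actual procedure); the function names are hypothetical.

```python
def is_offensive(judge_votes):
    """Return True when a strict majority of judges flagged the comment.

    judge_votes: list of booleans, one per judge (assumed binary votes).
    """
    return sum(judge_votes) > len(judge_votes) / 2

def comment_category(judge_votes, comment_stance, participant_position):
    """Label a comment as e.g. 'Offensive-Incongruent' for a given participant."""
    offensiveness = "Offensive" if is_offensive(judge_votes) else "Inoffensive"
    congruence = "Congruent" if comment_stance == participant_position else "Incongruent"
    return f"{offensiveness}-{congruence}"

# Three of five hypothetical judges flag a pro-life comment read by a pro-choice participant.
label = comment_category([True, True, True, False, False], "pro-life", "pro-choice")
```

With five judges a tie is impossible, so the strict-majority rule always yields a classification.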

2.2. Study 1 results

2.2.1. Did people selectively censor comments incongruent with their cause?

To test whether people censored incongruent comments at a higher rate than congruent comments, censoring rates for incongruent vs. congruent comments were compared via a paired t-test. A significant effect emerged (t(220) = 4.0, p < .001, d = 0.25). On average, people censored 25.64% (SD = 22.35) of the incongruent comments they read but only 20.41% (SD = 18.72) of the congruent comments. Later in this section, we report differences in selective censoring between pro-life and pro-choice participants.
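For readers who want to see the mechanics of this comparison, here is a minimal paired t-test sketch using only the Python standard library. The data are made up, and the effect size computed here (mean difference divided by the standard deviation of the differences) is only one common convention; the text does not say which variant of d was reported.

```python
from math import sqrt
from statistics import mean, stdev

def paired_t(incongruent, congruent):
    """Paired t statistic, degrees of freedom, and a standardized mean difference.

    Both inputs are per-participant censoring rates in the same participant order.
    """
    diffs = [i - c for i, c in zip(incongruent, congruent)]
    n = len(diffs)
    mean_diff = mean(diffs)
    sd_diff = stdev(diffs)            # sample SD of the within-person differences
    t = mean_diff / (sd_diff / sqrt(n))
    d_z = mean_diff / sd_diff         # assumption: d computed on difference scores
    return t, n - 1, d_z

# Hypothetical censoring rates for four participants.
t_stat, df, d_z = paired_t([0.4, 0.2, 0.3, 0.5], [0.2, 0.1, 0.3, 0.2])
```

A p-value would additionally require the t distribution, which is available in statistical packages but not in the standard library.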

2.2.2. Did identity fusion amplify the selective censoring of incongruent comments?

We used structural equation modeling (SEM) for our analyses to simultaneously model fusion effects on two dependent variables: the censoring rates for congruent and incongruent comments. We also conducted alternate analyses treating the difference between people's rates of censoring incongruent and congruent comments as the index of selective censoring and regressing the index on fusion (see SOM-II). Although this method is intuitively appealing, it is not ideal because it would not tell us whether any detected effect is driven by people's preference for congruent comments or their antagonism against incongruent comments. Past theorists have warned against conflating these two separate processes and recommend that each be modeled separately (Garrett, 2009a, 2009b; Holbert et al., 2010). The SEM approach allows us to simultaneously model effects on censoring rates for congruent and incongruent comments, treating them as two separate variables with different variances rather than assuming that they constitute a single variable. Note, however, that both methods (SEM and computing a difference index) lead us to the same conclusions.

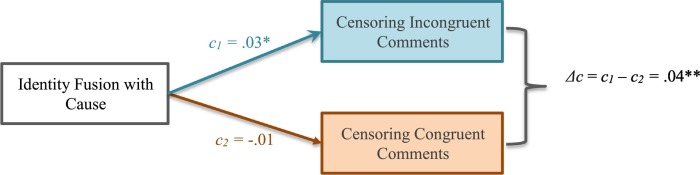

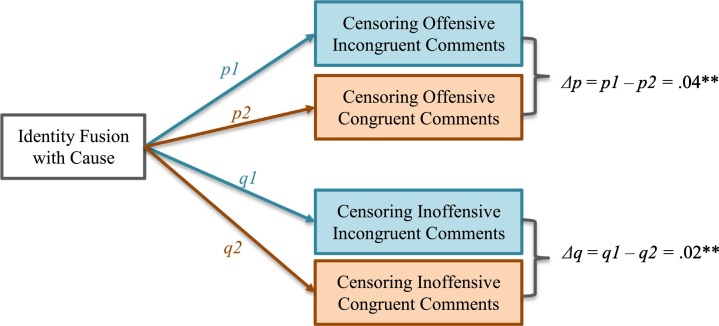

To evaluate our hypothesis that strongly fused people would be especially likely to selectively censor incongruent comments relative to congruent comments, we tested whether the effect of fusion on censoring incongruent comments (indicated by the c1 path in Fig. 1) is significantly larger than the effect of fusion on censoring congruent comments (c2 path). A significant difference between the two path coefficients (i.e., Δc = c1 − c2) would suggest that fusion is associated with disproportionately censoring incongruent, over congruent, comments. In this and all other models, we allowed for residual covariances between the censoring rates. In all the models, we used standardized scores for the continuous predictors, but we did not standardize the censoring rates (they ranged from 0 to 1) to allow the censoring effects to be interpreted in meaningful units. We report unstandardized regression coefficients.
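The quantity Δc can be illustrated with ordinary least squares in place of the full SEM. The sketch below is a simplification under stated assumptions: it uses made-up data, a single standardized predictor, and plain OLS slopes, and it does not reproduce the Wald test or the residual covariances of the actual model. It also shows that, with one predictor, Δc equals the slope obtained by regressing the incongruent-minus-congruent difference score on fusion.

```python
from statistics import mean

def ols_slope(x, y):
    """Unstandardized slope from a simple regression of y on x."""
    mx, my = mean(x), mean(y)
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    return sxy / sxx

# Hypothetical standardized fusion scores and censoring rates (0 to 1).
fusion_z = [-1.0, 0.0, 1.0]
incongruent_rate = [0.20, 0.25, 0.30]
congruent_rate = [0.21, 0.20, 0.19]

c1 = ols_slope(fusion_z, incongruent_rate)   # fusion -> incongruent censoring
c2 = ols_slope(fusion_z, congruent_rate)     # fusion -> congruent censoring
delta_c = c1 - c2

# Equivalent route: regress the difference score on fusion.
diff_score = [i - c for i, c in zip(incongruent_rate, congruent_rate)]
delta_from_diff = ols_slope(fusion_z, diff_score)
```

Because the predictor is standardized and the outcomes are proportions, a slope of 0.05 here would read as a five-percentage-point increase in censoring per standard deviation of fusion.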

Fig. 1.

Structural Equations Model depicting the effect of identity fusion on selective censoring of incongruent vs. congruent comments (Study 1). The c1 and c2 paths represent the effects of fusion on censoring incongruent and congruent comments respectively. The significant difference between the two paths (i.e., Δc) indicates that fusion is associated with selectively censoring incongruent comments. The coefficients reported are unstandardized. * indicates p < .05. ** indicates p < .01.

Fusion was associated with censoring incongruent comments (c1 path; b = 0.03, 95% CI = [0.001, 0.06], p = .04) but not with censoring congruent comments (c2 path; b = −0.01, 95% CI = [−0.04, 0.01], p = .38). A Wald test revealed that the difference between the two paths was statistically significant (χ²(1) = 9.88, p = .002), which is evidence for our main hypothesis that strongly fused individuals are more likely to selectively censor incongruent than congruent comments. To illustrate, participants who were strongly fused (1 SD above the mean) censored 29.56% of the incongruent comments they read but only 15.75% of the congruent comments, while those who were weakly fused (1 SD below the mean) did not censor incongruent comments (20.74%) any more than they censored congruent comments (20.37%). The significant c1 path suggests that the effect of fusion on selective censoring is driven by strongly fused people's intolerance for incongruent comments rather than their leniency toward congruent comments.

Controlling for the censoring rate of comments irrelevant to abortion rights (to account for participants' general censoring rate and other response biases) did not alter the effect of fusion on selective censoring (χ²(1) = 9.88, p = .002). The fusion effect remained robust when we controlled for participants' position on abortion rights (i.e., pro-life vs. pro-choice; χ²(1) = 8.33, p = .004). Further, the fusion effect was not influenced by the participant's abortion rights position (χ²(1) = 1.28, p = .26), indicating that fusion was equally associated with selective censoring among both pro-life and pro-choice participants. In SOM-III, we report exploratory analyses testing whether essentialist beliefs about people's views on abortion rights mediate the fusion effect on selective censoring.

2.2.2.1. Did offensiveness influence the effect of fusion on selective censoring?

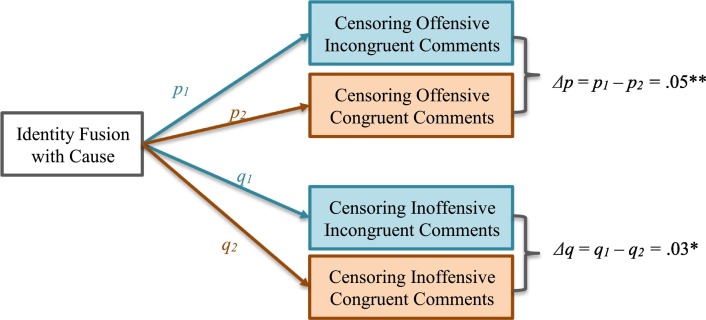

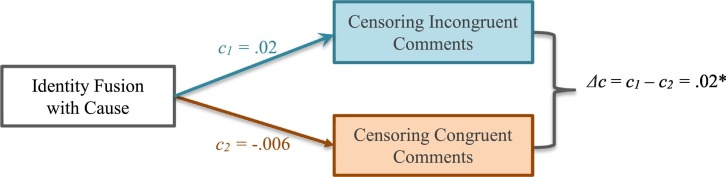

We asked whether the tendency for strongly fused participants to selectively censor incongruent comments depended on how offensive the comments were. As depicted in Fig. 2, we modeled the paths from fusion to participants' censoring rates for four types of comments: Offensive-Congruent, Offensive-Incongruent, Inoffensive-Congruent, and Inoffensive-Incongruent. We allowed for residual covariances between the censoring rates.

Fig. 2.

Structural Equations Model examining the effect of identity fusion on selective censoring of incongruent vs. congruent comments among offensive and inoffensive comments (Study 1). Δp and Δq represent fusion's effects on selective censoring among offensive comments and inoffensive comments, respectively. The significant effects indicate that strongly fused people selectively censored incongruent comments whether the comments were offensive or inoffensive. See SOM-IV for path coefficients. * indicates p < .05. ** indicates p < .01.

We first computed the effects of fusion on selective censoring of incongruent vs. congruent comments separately for offensive and inoffensive comments. To compute the effect of fusion on selective censoring for offensive comments, we compared fusion's effect on censoring Offensive-Incongruent (path p1) vs. Offensive-Congruent (path p2) comments. The significant difference between the two p paths (Δp = p1 – p2; b = 0.05, 95% CI = [0.01, 0.09], p = .008) suggests that among offensive comments, strongly fused individuals selectively censored incongruent comments more than congruent comments (see SOM-IV for the path coefficients). Similarly, we computed fusion's effect on selective censoring for inoffensive comments as the difference between fusion's effect on censoring Inoffensive-Incongruent comments (path q1) vs. Inoffensive-Congruent comments (path q2). The resulting significant difference (Δq = q1 – q2; b = 0.03, 95% CI = [0.002, 0.05], p = .04) indicated that among inoffensive comments, strongly fused participants likewise censored incongruent comments more than congruent comments. In short, strongly fused individuals selectively censored incongruent comments more than congruent comments both when the comments were offensive and when they were inoffensive.

Finally, to test whether strongly fused people's tendency to selectively censor incongruent comments was stronger for offensive comments, we compared the two selective censoring effects reported above for offensive vs. inoffensive comments. The difference (Δp – Δq) was non-significant (χ²(1) = 2.10, p = .15), suggesting that the effect of fusion on selective censoring was independent of the offensiveness of comments. That is, strongly fused individuals selectively censored incongruent, as opposed to congruent, comments regardless of whether the content of the comments included offensive language.

2.2.3. Did selective censoring of incongruent comments depend on people's ideologies?

Using an SEM similar to the one in the fusion analysis, we tested whether there were differences in people's tendency to selectively censor incongruent vs. congruent comments as a function of their stance on abortion rights (i.e., whether they were pro-choice or pro-life). Participants who endorsed the pro-life position showed a stronger tendency to selectively censor incongruent comments relative to those who endorsed the pro-choice position (χ²(1) = 7.36, p = .007). Pro-life participants also reported marginally higher fusion levels than did pro-choice participants [t(220) = 1.76, p = .08, d = 0.25].

2.3. Study 1 discussion

Study 1 used a novel paradigm to explore people's censoring behaviors in online settings. People tended to censor online content more if the content was incongruent, rather than congruent, with their cause, and this tendency was higher among supporters of the pro-life cause. Importantly, identity-related processes amplified selective censoring of incongruent online content for people on both sides of the abortion rights cause. Specifically, the results showed that people whose identities were strongly fused with a cause were most willing to selectively censor online content posted by their ideological opponents. Interestingly, strongly fused people's tendency to selectively censor comments was driven by their intolerance for incongruent comments rather than an elevated affinity for congruent comments. Post-hoc analyses also showed that fusion's effect on selective censoring occurred regardless of whether the incongruent comments used offensive language. It is notable that strongly fused people showed a stronger selective censoring effect than weakly fused people even though they were not primed to think about their identity before reading the comments.

3. Study 2

Study 2 attempted to replicate Study 1 in a pre-registered longitudinal study. The method was largely similar to that of Study 1. To verify the preliminary findings from Study 1's post-hoc analysis on the effects of offensiveness, Study 2 systematically manipulated comment offensiveness a priori. The comments used in the study were pretested and categorized as containing offensive vs. inoffensive content. This allowed us to more robustly probe whether the fusion effect on selective censoring was moderated by offensiveness. Further, it was not clear from Study 1 whether strongly fused people's tendency to selectively censor incongruent comments would extend to censoring the authors of the comments. To test this possibility, the study tested whether strongly fused individuals would opt to ban people who repeatedly posted content that threatened their position on the cause. The hypotheses were pre-registered prior to data collection (see https://osf.io/2jvau?view_only=754165d77cbe4e69baf6b11740b1a422).

Finally, although we have only focused on identity fusion thus far, we wanted to test whether the effects generalize to other identity-related measures explored in the broad literature: attitude strength, moral conviction, and identification with cause supporters. Studies have found that these constructs predict pro-cause action and an intolerance for opposition (e.g., Singh and Ho, 2000; Skitka et al., 2005; Thomas et al., 2016). We examined the extent to which each of these identity-related measures predicted selective censoring.

4. Study 2 method

4.1. Power analysis

An a priori power analysis was conducted using Monte Carlo simulations to estimate the sample size required to detect the SEM models reported in Study 1. As mentioned in our pre-registration, a sample of 345 participants was required to detect the selective censoring effect computed from the mediation model explored in Study 1 (see SOM-III) with an alpha of 0.05 and 80% power. In addition to replicating Study 1 effects, we wanted to test models examining the impact of the other identity-related measures (attitude strength, moral conviction, and identification with cause supporters) on censoring and also test a model with all identity-related measures simultaneously entered into a structural equation model. Because we had no easy way to estimate the path coefficients for these models, we estimated the required sample size by conducting a conservative power analysis using the models reported in Study 1. As mentioned in our pre-registration, we conducted Monte Carlo simulations to detect the Study 1 mediation model with a conservative alpha of 0.01 and found that we would need a sample size of 510. This conservative estimate would give us sufficient power to detect smaller effects than the ones reported in Study 1. Given the longitudinal nature of the study, we estimated that about 35% of the sample would either drop out between T1 and T2 or be excluded because of failing attention checks, and so we decided to recruit 800 participants at T1. The power analysis and exclusion criteria followed were specified in the pre-registration. Any deviations from the pre-registered plan are noted.
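The recruitment target can be checked with simple attrition arithmetic. The sketch below is illustrative only: the helper name `required_t1_sample` is hypothetical, and it computes just the minimum T1 sample implied by the required final sample (510) and the expected loss rate (35%). Recruiting 800 rather than this minimum was the authors' choice and is not derived by the code.

```python
import math


def required_t1_sample(n_final: int, expected_loss: float) -> int:
    """Smallest T1 sample that still leaves n_final participants
    after the expected proportion drops out or is excluded."""
    if not 0 <= expected_loss < 1:
        raise ValueError("expected_loss must be in [0, 1)")
    return math.ceil(n_final / (1 - expected_loss))


# Values taken from the text: 510 required, about 35% expected loss.
minimum_t1 = required_t1_sample(510, 0.35)
print(minimum_t1)
```

Under these assumptions, the planned recruitment of 800 participants sits slightly above the computed minimum.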

4.2. Comment offensiveness pretest

We wanted to systematically manipulate the offensiveness of comments. To classify comments as offensive vs. inoffensive, we conducted a pilot study on MTurk. We recruited five MTurkers who reported having neutral or no opinions about the abortion rights issue to serve as objective judges. We piloted 40 comments, of which 20 were pro-choice and 20 were pro-life. For each position (pro-choice and pro-life), we piloted 10 comments that we believed contained offensive content and 10 that did not. The instructions provided to the objective judges were the same as in Study 1. The judges evaluated the content of each comment as either offensive or inoffensive. The inter-judge reliability across the five judges was α = 0.87. For each of the four types of comments (Offensive-Prochoice, Inoffensive-Prochoice, Offensive-Prolife, and Inoffensive-Prolife), the seven comments with the highest levels of agreement among the judges were selected for the study. At least three of the five judges agreed on the categorization of the 28 comments that were finally selected for the study (see https://osf.io/4jtwk/?view_only=10627a9892464e5aa90fe92360b846ad for the final list of comments).

4.3. Time 1 (T1)

4.3.1. Participants

A sample of 793 participants from Prolific Academic completed the first part of the study in July 2019. The method followed was largely similar to Study 1. As mentioned in the pre-registration, only participants who endorsed either the pro-choice or pro-life position were eligible for the study. This was ensured by setting a pre-screening condition on Prolific such that the study posting was visible only to participants who had previously identified as pro-choice or pro-life. To be sure that the pre-screening worked, participants' views on abortion rights were measured again in the T1 survey, and 15 participants who indicated holding neutral views on abortion were excluded. We also excluded 29 participants who failed either of two attention checks or did not complete them (see SOM-I). Our final sample at T1 had 749 participants (48% female; 69.88% White; M age = 32.88; SD age = 11.79; 616 pro-choice and 133 pro-life participants).

4.3.2. Identity measures

As in Study 1, participants completed the seven items of the verbal fusion scale measuring fusion with their own position on abortion rights (either pro-choice or pro-life) on a seven-point scale (α = 0.92, 95% CI = [0.91, 0.93]). The survey also included a series of other identity-related measures. These included four facets of attitude strength: attitude extremity (“What is your opinion about the pro-life/pro-choice position?”; 1 = Strongly against, 9 = Strongly favor; Binder et al., 2009), attitude centrality (“To what extent does your opinion toward the pro-life/pro-choice position reflect your core values and beliefs”; Clarkson et al., 2009), attitude certainty (e.g., “How certain are you of your opinion about the pro-life/pro-choice position?”; 1 = Not certain at all, 9 = Extremely certain; Fazio and Zanna, 1978), and attitude importance (e.g., “To what extent is the pro-life/pro-choice position personally important to you?”; Boninger et al., 1995; α = 0.91, 95% CI = [0.89, 0.92]). Attitude extremity, centrality, and certainty were measured using one item each, and attitude importance was measured using two items. Attitude centrality and attitude importance used nine-point scales (e.g., 1 = Not at all; 9 = Very Much). We also measured moral conviction (e.g., “To what extent is your position on the pro-life position a reflection of your core moral beliefs and convictions?”; Skitka and Morgan, 2014) using two items on a five-point scale (α = 0.86, 95% CI = [0.83, 0.88]) and identification with cause supporters (e.g., “I identify with other supporters of the prochoice position”; adapted from Thomas et al., 2016) using three items on a seven-point scale (α = 0.83, 95% CI = [0.81, 0.86]). The order of presentation of the above measures was randomized. Participants then completed a measure of the mediating mechanism explored in SOM-III: people's essentialist beliefs about a cause (α = 0.92, 95% CI = [0.90, 0.93]; Bastian and Haslam, 2006).
Finally, they provided demographic information before exiting the survey. No mention was made of the second session of the study. Means, standard deviations, and inter-variable correlations are reported in Table 2.

Table 2.

Means, standard deviations, and correlations of measures in Study 2 (N = 540).

| Variable | M | SD | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 |
|---|---|---|---|---|---|---|---|---|---|---|
| 1. Fusion with cause | 4.48 | 1.44 | | | | | | | | |
| 2. Attitude extremity | 8.14 | 1.22 | 0.39⁎⁎ | | | | | | | |
| 3. Attitude centrality | 7.27 | 1.92 | 0.51⁎⁎ | 0.52⁎⁎ | | | | | | |
| 4. Attitude certainty | 8.17 | 1.22 | 0.43⁎⁎ | 0.69⁎⁎ | 0.57⁎⁎ | | | | | |
| 5. Attitude importance | 7.09 | 1.96 | 0.64⁎⁎ | 0.58⁎⁎ | 0.66⁎⁎ | 0.60⁎⁎ | | | | |
| 6. Moral conviction | 3.76 | 1.10 | 0.49⁎⁎ | 0.43⁎⁎ | 0.72⁎⁎ | 0.47⁎⁎ | 0.55⁎⁎ | | | |
| 7. Identification with cause supporters | 6.25 | 1.03 | 0.52⁎⁎ | 0.72⁎⁎ | 0.48⁎⁎ | 0.58⁎⁎ | 0.58⁎⁎ | 0.47⁎⁎ | | |
| 8. Rate of censoring congruent comments | 0.21 | 0.16 | −0.03 | −0.16⁎⁎ | −0.12⁎⁎ | −0.15⁎⁎ | −0.08 | −0.11⁎ | −0.17⁎⁎ | |
| 9. Rate of censoring incongruent comments | 0.32 | 0.23 | 0.11⁎⁎ | 0.06 | 0.02 | 0.08 | 0.09⁎ | 0.03 | 0.01 | 0.53⁎⁎ |

Note. The censoring rates, ranging from 0 to 1, refer to the proportion of comments of each type (congruent or incongruent) that participants censored. Fusion's effect on selective censoring is the difference between fusion's association with the censoring rates of congruent and incongruent comments. This effect was not moderated by position on abortion rights. * indicates p < .05. ** indicates p < .01.

4.4. Time 2 (T2)

4.4.1. Participants

Approximately two weeks later, the second session of the study, titled “Comment Moderation Task”, was posted. Only participants who completed the T1 survey could view the posting, but they were not aware of this, and the study posting did not describe the eligibility criterion or its connection to the first part of the study. Under these circumstances, it is highly likely that participants perceived no connection between the first and second sessions of the study. A total of 542 participants completed the second session of the study. Two participants who completed less than 50% of the task were excluded, leaving us with a final sample of 540 participants (48.70% female; 68.83% White; M age = 33.53; SD age = 12.30; 440 pro-choice and 100 pro-life participants). A sensitivity analysis using Monte Carlo simulations revealed that our sample had 99.8% power to detect the fusion effect on selective censoring reported in Study 1. There were no differences in fusion (t(743) = 1.19, p = .23, d = 0.10) between those who did vs. did not complete the second session of the study.

4.4.2. Comment moderation procedure

Participants read about an online forum for discussions on current affairs. They learned that the forum's administrators had received complaints about inappropriate posts by some users and that their task was to help the administrators identify inappropriate posts and block people who repeatedly posted such content. Participants also learned that the comments and users flagged by them would be removed from the forum by its moderators. Because the study was posted on Prolific using a lab account that had previously been used to post other research studies, participants may have easily linked the task to our university and thus may have felt skeptical about our claims that they were evaluating comments from an actual discussion forum and that their evaluations would have real-world consequences. To address this, the study description said that users of the forum were college students and that the forum was owned and run by our university.

Participants then read a series of 28 comments on the abortion rights issue. The comments were designed to look like screenshots of posts from an actual online discussion forum (see Fig. 3 for an example). As shown in the figure, a user icon and handle were displayed next to each comment. The comments that participants read were systematically varied on two factors: Each comment was either pro-choice or pro-life and either offensive or inoffensive. Of the 28 comments, 14 were pro-choice and 14 were pro-life; 14 were pre-determined via the pilot study to be offensive and 14 were inoffensive. In sum, there were four types of comments (N = 7 for each type): Offensive-Prochoice, Inoffensive-Prochoice, Offensive-Prolife, and Inoffensive-Prolife. The pro-choice comments were all posted by a single user, and the pro-life comments were all posted by another user. For each comment, participants could recommend deletion or retention, which was our primary measure of censoring. After evaluating all comments, participants were also asked whether the two users whose comments they read should be banned from the blog (“Ban this user from the blog” or “Do not ban this user from the blog”). Finally, participants were asked about the extent to which they doubted the veracity of our claims on a five-point scale (1 = Not at all; 5 = A great deal), and the mean rating (M = 2.56, SD = 0.98) was lower than the mid-point of the scale (i.e., 3 = A moderate amount; t(533) = −10.282, p < .001, d = −0.45), suggesting that a considerable proportion of participants believed that they were helping the moderators of a real blog.

Fig. 3.

Example of an inoffensive pro-choice comment used in the comment moderation task (Study 2).

For each participant, we calculated two censoring rates: the proportion of comments congruent with the participant's position on abortion rights that they flagged and the proportion of incongruent comments that they flagged. As in Study 1, selective censoring was indicated by a higher censoring rate for incongruent than congruent comments. For the offensiveness-related analyses, we also computed censoring rates for each of the four types of comments (Offensive-Congruent, Offensive-Incongruent, Inoffensive-Congruent, and Inoffensive-Incongruent) to determine whether participants selectively censored incongruent comments among both offensive and inoffensive comments. Overall, participants censored offensive comments (M = 0.47, SD = 0.29) more than inoffensive comments (M = 0.06, SD = 0.13; t(559) = 35, p < .001, d = 1.79), indicating that the offensiveness manipulation was successful. The censoring rates for offensive and inoffensive comments were correlated [r(538) = 0.27, p < .001], indicating that there are relatively stable individual differences in participants' censoring rates.
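The rate computation described above can be sketched as follows. This is a minimal illustration rather than the analysis code used in the study: the `Judgment` record, the `censoring_rates` helper, and the toy data are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Judgment:
    comment_stance: str  # "pro-choice" or "pro-life"
    offensive: bool      # pretest classification of the comment
    flagged: bool        # True if the participant recommended deletion


def censoring_rates(participant_stance, judgments):
    """Return the proportion of flagged comments per comment type.

    Keys include "congruent" and "incongruent" plus the four
    offensiveness-by-congruence cells, e.g. "offensive-incongruent".
    """
    counts = {}
    for j in judgments:
        congruence = ("congruent" if j.comment_stance == participant_stance
                      else "incongruent")
        offense = "offensive" if j.offensive else "inoffensive"
        for key in (congruence, f"{offense}-{congruence}"):
            flagged, total = counts.get(key, (0, 0))
            counts[key] = (flagged + int(j.flagged), total + 1)
    return {key: flagged / total for key, (flagged, total) in counts.items()}


# Toy data for one hypothetical pro-choice participant (8 comments, not 28).
toy = [
    Judgment("pro-life", True, True),
    Judgment("pro-life", False, True),
    Judgment("pro-life", False, False),
    Judgment("pro-life", True, True),
    Judgment("pro-choice", True, True),
    Judgment("pro-choice", False, False),
    Judgment("pro-choice", True, False),
    Judgment("pro-choice", False, False),
]
rates = censoring_rates("pro-choice", toy)
selective_index = rates["incongruent"] - rates["congruent"]
print(rates, selective_index)
```

A positive `selective_index` corresponds to selective censoring as defined in the text: a higher censoring rate for incongruent than for congruent comments.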

5. Study 2 results

5.1. Did people selectively censor comments incongruent with their cause and the comments' authors?

Although not pre-registered, we tested whether people generally censored incongruent comments more than congruent comments. We compared the censoring rates for incongruent vs. congruent comments via a paired t-test. Replicating Study 1 findings, people censored 32.40% (SD = 22.88%) of the incongruent comments but only 20.64% (SD = 16.18%) of the congruent comments, t(539) = 13.84, p < .001, d = 0.58.
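For readers unfamiliar with the paired comparison, the following sketch shows how a paired t statistic is computed from per-participant censoring rates. The data are invented for illustration, the function name `paired_t` is hypothetical, and the effect size returned here (mean difference divided by the SD of the differences) is only one common convention; the text does not state which formula produced the reported d.

```python
import math
from statistics import mean, stdev


def paired_t(x, y):
    """Paired t-test statistic for two equal-length samples.

    Returns (t, degrees of freedom, d_z), where d_z is the mean
    difference divided by the sample SD of the differences.
    """
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two paired samples of length >= 2")
    diffs = [a - b for a, b in zip(x, y)]
    n = len(diffs)
    sd = stdev(diffs)
    t = mean(diffs) / (sd / math.sqrt(n))
    return t, n - 1, mean(diffs) / sd


# Invented censoring rates for five hypothetical participants.
incongruent = [0.50, 0.25, 0.40, 0.30, 0.35]
congruent = [0.30, 0.20, 0.25, 0.25, 0.20]
t_stat, df, d_z = paired_t(incongruent, congruent)
print(round(t_stat, 2), df, round(d_z, 2))
```

A p-value would then be obtained from the t distribution with `df` degrees of freedom, for example via `scipy.stats.ttest_rel` if SciPy is available.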

We also conducted an exploratory analysis testing whether people were disproportionately willing to ban the author of the incongruent comments relative to the author of the congruent comments. We used McNemar's chi-squared test to account for the within-subjects nature of the data and found a significant effect (χ2(1) = 9.24, p = .002) such that 21.31% of participants opted to ban the user who posted incongruent comments as opposed to just 15.41% who banned the user posting congruent comments.
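McNemar's test uses only the discordant pairs, that is, participants who banned one author but not the other. The sketch below illustrates the uncorrected form of the statistic with invented counts; the function name is hypothetical, and the text does not say whether a continuity correction was applied in the reported analysis.

```python
import math


def mcnemar_chi2(only_incongruent: int, only_congruent: int):
    """McNemar's chi-squared test (df = 1, no continuity correction).

    only_incongruent: participants who banned only the incongruent author.
    only_congruent:   participants who banned only the congruent author.
    Participants who banned both or neither do not enter the statistic.
    """
    discordant = only_incongruent + only_congruent
    if discordant == 0:
        raise ValueError("no discordant pairs")
    chi2 = (only_incongruent - only_congruent) ** 2 / discordant
    # Upper-tail probability of a chi-squared variable with 1 df.
    p = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p


# Invented counts, not the study's data.
chi2, p = mcnemar_chi2(40, 20)
print(round(chi2, 2), round(p, 4))
```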

5.2. Did identity fusion amplify the selective censoring of incongruent comments and their authors?

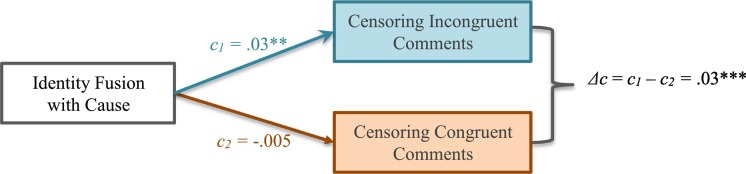

5.2.1. Selective censoring of incongruent comments

To test our pre-registered hypothesis that strongly fused individuals would be especially likely to selectively censor incongruent comments, we tested a SEM model similar to Study 1 (see Fig. 4) with residual covariances between the censoring rates. Alternate analyses treating the difference between censoring rates of incongruent and congruent comments as the selective censoring index did not alter our conclusions (see the last column in Table 3 and SOM-II). As in Study 1, we standardized the continuous predictors in all the SEM analyses, and we report unstandardized regression coefficients. Fusion positively predicted censoring incongruent comments (c1 path; b = 0.03, 95% CI = [0.01, 0.045], p = .008) but not censoring congruent comments (c2 path; b = −0.005, 95% CI = [−0.02, 0.01], p = .50). Replicating Study 1's main finding, the difference between the fusion effects on censoring incongruent vs. congruent comments was statistically significant (Δc = c1 − c2; χ2(1) = 13.14, p < .001), which is evidence that fusion is associated with selective censoring. To illustrate, participants who were strongly fused (+1 SD) censored 36.36% of the incongruent comments they read but only 18.65% of the congruent comments. Weakly fused participants censored 29.49% of the incongruent comments and 21.26% of the congruent comments, indicating a weaker selective censoring tendency. Fusion's effect on selective censoring remained significant when we controlled for whether participants were pro-choice or pro-life (χ2(1) = 13.50, p < .001), and the effect was not moderated by position on abortion rights (χ2(1) = 0.04, p = .85).

Fig. 4.

Structural Equations Model depicting the effect of identity fusion on selective censoring of incongruent vs. congruent comments (Study 2). The c1 and c2 paths represent the effects of fusion on censoring incongruent and congruent comments respectively. The path coefficients in the figure are unstandardized. The significant difference between the two paths (Δc) indicates that fusion is associated with selectively censoring incongruent comments. ** indicates p < .01. *** indicates p < .001.

Table 3.

Path coefficients (c1 and c2) and Chi-sq values (χ2) of SEM models and coefficients from regression models testing the effects of each identity-related measure on selective censoring (Study 2). Note that each model included only one predictor.

| Predictor in model | Structural equation modeling (SEM): censoring incongruent comments (c1) | SEM: censoring congruent comments (c2) | SEM: selective censoring (Δc = c1 − c2), χ2 | Selective censoring difference index (b) |
|---|---|---|---|---|
| Model 1: Fusion with cause | 0.03⁎⁎ | −0.005 | 13.14⁎⁎⁎ | 0.03⁎⁎⁎ |
| Model 2: Attitude importance | 0.02⁎ | −0.01† | 15.09⁎⁎⁎ | 0.03⁎⁎⁎ |
| Model 3: Attitude certainty | 0.02† | −0.025⁎⁎⁎ | 25.25⁎⁎⁎ | 0.04⁎⁎⁎ |
| Model 4: Attitude centrality | 0.004 | −0.02⁎⁎ | 7.35⁎⁎ | 0.02⁎⁎ |
| Model 5: Attitude extremity | 0.01 | −0.025⁎⁎⁎ | 20.095⁎⁎⁎ | 0.04⁎⁎⁎ |
| Model 6: Identification with cause supporters | 0.002 | −0.03⁎⁎⁎ | 11.595⁎⁎⁎ | 0.03⁎⁎⁎ |
| Model 7: Moral conviction | 0.007 | −0.02⁎ | 8.68⁎⁎ | 0.03⁎⁎ |

Note. In each model, the predictor was standardized, but the censoring rates were not. The censoring rates ranged from 0 to 1. The path coefficients reported are unstandardized. † indicates p < .1. * indicates p < .05. ** indicates p < .01. *** indicates p < .001.

Our pre-registered mediational analyses (see SOM-III) suggest that essentialistic beliefs regarding people's stance on abortion rights might be at least one mediating mechanism explaining the fusion effect on selective censoring. In our pre-registration, we also proposed to test the fusion effect controlling for other identity-related measures. We accordingly report a model in which the predictive abilities of all the identity-related measures are compared (see SOM-V). Nevertheless, because the measured variables are all strongly related both conceptually and empirically (see Table 2), after establishing that multicollinearity was not a problem, we examined whether each of these variables independently predicts selective censoring.

5.2.2. Selective censoring of the authors of incongruent comments

The foregoing analyses revealed that identity fusion with a cause is associated with a tendency to disproportionately censor online content that is incongruent with the cause. To test the pre-registered hypothesis that strongly fused individuals would also display a censoring bias against the authors of incongruent content, we examined a SEM model with two dependent variables corresponding to the binary indicators of whether the participant decided to ban the authors of incongruent and congruent comments. Fusion was not significantly associated with banning the author of the incongruent comments (OR = 1.17, 95% CI = [0.95, 1.45], p = .14) or congruent comments (OR = 0.99, 95% CI = [0.78, 1.25], p = .90). The difference between the two paths, computed as two times the negative log-likelihood of the difference between the two paths, was not significant (χ2(1) = 1.18, p = .28), indicating that fusion was not associated with selectively censoring authors of incongruent comments. However, given that the non-significant coefficients of the two paths were in the predicted direction, it is possible that there exists a small effect that our sample was not sufficiently powered to detect.

5.2.3. Did offensiveness moderate the effect of fusion on selective censoring?

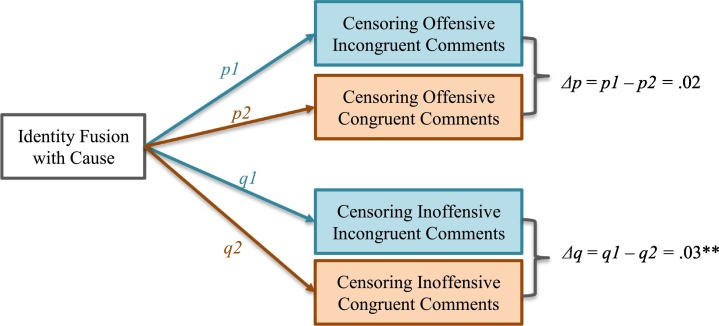

To verify Study 1's exploratory finding and our pre-registered hypothesis that the offensiveness of comments would not moderate the effect of fusion on selective censoring, we modeled the paths from fusion to participants' censoring rates for four types of comments: Offensive-Congruent, Offensive-Incongruent, Inoffensive-Congruent, and Inoffensive-Incongruent (see Fig. 5).

Fig. 5.

Structural Equations Model examining the effect of identity fusion on selective censoring of incongruent vs. congruent comments among offensive and inoffensive comments (Study 2). Δp and Δq represent fusion's effects on selective censoring among offensive comments and inoffensive comments, respectively. The significant effects indicate that strongly fused people selectively censored incongruent comments whether the comments were offensive or inoffensive. See SOM-IV for path coefficients. * indicates p < .05. ** indicates p < .01.

Among offensive comments, fusion was associated with selectively censoring incongruent comments over congruent comments (Δp = p1 – p2; b = 0.04, 95% CI = [0.02, 0.06], p = .001). Similarly, among inoffensive comments, strongly fused individuals selectively censored incongruent comments (Δq = q1 – q2; b = 0.02, 95% CI = [0.005, 0.04], p = .008). (The four path coefficients are reported in SOM-IV.) The two significant selective censoring effects suggest that strongly fused people's selective intolerance for incongruent comments was observable among both offensive and inoffensive comments. Comparing the two selective censoring effects for offensive vs. inoffensive comments (Δp – Δq) revealed a marginally significant difference (χ2(1) = 3.34, p = .07), suggesting that fusion's effect on selective censoring may have been larger for offensive than inoffensive comments. What is striking, however, is that, as in Study 1, strongly fused people selectively censored incongruent comments even when the comments were inoffensive.

5.3. Did fusion's effect on selective censoring of incongruent comments generalize to other identity-related measures?

Thus far, we focused on the effects of identity fusion. Nevertheless, we conducted exploratory analyses testing the possibility that selective censoring of incongruent comments results from a constellation of identity-related processes. To test this possibility, we assessed the effects of attitude strength (attitude extremity, attitude centrality, attitude certainty, and attitude importance), moral conviction, and identification with supporters, which all index different aspects of people's alignment with a cause. Using the same approach as in the fusion analysis, we sequentially tested the relation of each of the seven predictors to selective censoring. Table 3 reports each model's path coefficients from the tested variable to censoring incongruent comments (c1) and to censoring congruent comments (c2). Table 3 also reports the chi-square difference between the two paths (c1 – c2) indicating the extent to which the tested variable is associated with selectively censoring incongruent comments. The last column presents linear regression coefficients from alternate analyses testing the effect of each identity-related measure on the difference in participants' censoring rates for incongruent vs. congruent comments.
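The alternate analysis in the last column of Table 3 can be illustrated with a short sketch. It assumes, as described above, that the predictor is standardized and that the outcome is the raw difference between the two censoring rates. The function names and toy data are hypothetical, standardizing with the sample SD is an assumption, and the sketch fits an ordinary least-squares slope only, not the SEM.

```python
from statistics import mean, stdev


def standardize(values):
    """Z-score a list of values using the sample standard deviation."""
    m, s = mean(values), stdev(values)
    return [(v - m) / s for v in values]


def selective_censoring_slope(predictor, incongruent_rate, congruent_rate):
    """OLS slope of (incongruent - congruent censoring rate) on the
    standardized predictor: the change in the difference index per 1 SD."""
    z = standardize(predictor)
    diff = [i - c for i, c in zip(incongruent_rate, congruent_rate)]
    mz, md = mean(z), mean(diff)
    sxy = sum((a - mz) * (b - md) for a, b in zip(z, diff))
    sxx = sum((a - mz) ** 2 for a in z)
    return sxy / sxx


# Invented scores for five hypothetical participants.
fusion = [1, 2, 3, 4, 5]
incongruent = [0.20, 0.25, 0.30, 0.35, 0.40]
congruent = [0.20, 0.20, 0.20, 0.20, 0.20]
slope = selective_censoring_slope(fusion, incongruent, congruent)
print(round(slope, 3))
```

Because the predictor is standardized and the censoring rates are not, the slope reads as the expected change in the selective censoring index for a one-SD increase in the predictor, which matches how the coefficients in Table 3 are described.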

As indicated by the significant chi-square differences (Δc) and the significant regression coefficients (b) in Table 3, each of the constructs produced selective censoring similar to the fusion effects, which is preliminary evidence that broader identity-related processes motivate selective censoring.

Interestingly, most of the predictors (attitude certainty, attitude centrality, attitude extremity, identification with cause supporters, and moral conviction) were negatively associated with censoring congruent comments (see c2 coefficients in Table 3), indicating that they produce a tendency to be lenient toward congruent comments. In contrast, fusion and attitude importance were not correlated with censoring congruent comments; instead, they were positively associated with censoring incongruent comments (see c1 coefficients in Table 3), implying that these constructs were associated with an intolerance of incongruent comments. We speculate that a preference for congruent content and an intolerance of incongruent content reflect two independent mechanisms leading to selective censorship of incongruent comments.

5.4. Did selective censoring of incongruent comments depend on people's ideologies?

We tested another SEM model (not pre-registered) similar to the fusion analysis to assess the effect of people's stance on abortion rights (pro-choice vs. pro-life). Unlike in Study 1, pro-choice participants selectively censored incongruent comments as much as pro-life participants (χ2(1) = 2.38, p = .12), which may be due to higher threat levels among pro-choice participants following the 2018 nomination of Justice Kavanaugh to the Supreme Court. That is, owing to the conservative shift in the makeup of the Supreme Court in 2018, pro-choice participants in Study 2 may have generally faced higher threat relative to Study 1, which could have increased their tendency to selectively censor pro-life comments. There was also no difference in fusion levels between pro-choice and pro-life participants (t(537) = 0.59, p = .56, d = 0.07).

6. Study 2 discussion

Study 2 replicated Study 1's main findings that people censor online content that is incongruent with their own political views and that strongly fused individuals are especially likely to selectively censor incongruent content. Strongly fused people's tendency to selectively censor incongruent comments was robust for both offensive and inoffensive comments. Contrary to Study 1, we did not find evidence that pro-life participants selectively censored more than pro-choice participants, which we believe could be due to the socio-political environment during Study 2 data collection.

In addition to replicating Study 1 effects, Study 2 also examined people's willingness to ban the authors of incongruent vs. congruent comments from the forum. We found that cause supporters selectively banned the author who consistently posted cause-incongruent content. Contrary to our hypothesis, this effect was not amplified by fusion. This may have been because banning authors is a relatively extreme action that participants in our samples generally did not endorse. Conceivably, there is a small association of fusion with selective censoring of authors that our sample was underpowered to detect.

Finally, the study found that the selective censoring effect extends to an array of identity-related measures in the literature. The findings also indicate that there may be different paths to selective censorship of opposing content: Whereas fusion and attitude importance were associated with an increased tendency to censor incongruent comments, the other identity-related predictors were associated with a weaker tendency to censor congruent comments.

In short, the results of Study 2 replicated the selective censoring effect that emerged in Study 1. A potential limitation of these studies, however, is that both focused on an issue rooted in religious values, abortion rights. To address this, Study 3 focused on gun rights. The gun-rights issue was particularly relevant at the time the study was conducted because gun sales peaked during the COVID-19 crisis (Collins and Yaffe-Bellany, 2020).

7. Study 3

The method used in Study 3 resembled those used in the previous studies except that we used a more controlled manipulation of comment offensiveness that kept the content of the comments constant. Whereas in Study 2 comments were categorized as offensive or inoffensive based on coders' ratings, in Study 3, for each inoffensive comment, we generated an offensive version by adding offensive phrases. In this way, the content of the inoffensive and offensive comments was identical except for the offensive language. Finally, as in Study 2, we assessed whether the selective censoring effect of fusion generalized to other identity-related measures such as indices of attitude strength, moral conviction, and identification with cause supporters.

8. Study 3 Method

8.1. Power analysis

As mentioned in our pre-registration (see https://osf.io/x3w7h/?view_only=a25d722f3a03405e9e4f074a622b10b4), an a priori power analysis conducted using Monte Carlo simulations indicated that a sample of 325 participants was required to detect the selective censoring effect detected in Study 2 with an alpha of 0.05 and 80% power. Given the longitudinal nature of the study, we estimated that approximately 30% of the sample would either drop out between T1 and T2 or fail attention checks, and so we decided to recruit 460 participants at T1.

8.2. Time 1 (T1)

8.2.1. Participants

A sample of 466 participants (49.6% female; 67.0% White; M age = 31.18; SD age = 11.14) from Prolific Academic completed the first part of the study in May 2020. Participants' views on gun rights were measured in the T1 survey (370 pro-gun-control and 96 pro-gun-rights participants).

8.2.2. Identity measures

Participants completed the identity fusion scale for their position on gun rights (either pro-gun or anti-gun) on a seven-point scale (α = 0.93). Using the measures from Study 2, we measured four facets of attitude strength (attitude extremity, attitude centrality, attitude certainty, and attitude importance), moral conviction, and identification with cause supporters (α = 0.86). The order of presentation of the above constructs was randomized. Means, standard deviations, and inter-variable correlations are reported in Table 5. Finally, participants provided demographic information.

Table 5.

Means, standard deviations, and correlations with confidence intervals in Study 3 (N = 371).

| Variable | M | SD | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 |
|---|---|---|---|---|---|---|---|---|---|---|
| 1. Fusion with cause | 3.45 | 1.43 | | | | | | | | |
| 2. Attitude extremity | 7.62 | 1.36 | 0.31⁎⁎ | | | | | | | |
| 3. Attitude centrality | 6.75 | 1.84 | 0.49⁎⁎ | 0.53⁎⁎ | | | | | | |
| 4. Attitude certainty | 7.58 | 1.46 | 0.38⁎⁎ | 0.69⁎⁎ | 0.55⁎⁎ | | | | | |
| 5. Attitude importance | 6.55 | 1.79 | 0.61⁎⁎ | 0.55⁎⁎ | 0.73⁎⁎ | 0.59⁎⁎ | | | | |
| 6. Moral conviction | 3.37 | 1.03 | 0.49⁎⁎ | 0.49⁎⁎ | 0.68⁎⁎ | 0.54⁎⁎ | 0.56⁎⁎ | | | |
| 7. Identification with cause supporters | 5.65 | 1.06 | 0.50⁎⁎ | 0.63⁎⁎ | 0.56⁎⁎ | 0.63⁎⁎ | 0.61⁎⁎ | 0.57⁎⁎ | | |
| 8. Rate of censoring congruent comments | 0.28 | 0.18 | −0.04 | −0.01 | −0.05 | −0.01 | −0.04 | −0.06 | −0.04 | |
| 9. Rate of censoring incongruent comments | 0.37 | 0.20 | 0.08 | 0.11⁎ | 0.10⁎ | 0.13⁎⁎ | 0.13⁎ | 0.07 | 0.07 | 0.56⁎⁎ |

Note. The censoring rates, ranging from 0 to 1, refer to the proportion of comments of each type (congruent and incongruent) that participants censored. Fusion's effect on selective censoring is the difference between fusion's association with the censoring rates of congruent and incongruent comments. This effect was not moderated by position on gun rights. * indicates p < .05. ** indicates p < .01.

8.3. Time 2 (T2)

8.3.1. Participants

Two weeks after completing the T1 survey, participants were able to complete a “Comment Moderation Task”. A total of 373 participants completed the task. Two participants who completed less than 50% of the task were excluded, leaving us with a final sample of 371 participants (52.85% female; 66.85% White; M age = 31.45; SD age = 11.61; 297 pro-gun-control and 74 pro-gun-rights participants). A sensitivity analysis revealed that our sample had 85% power to detect the fusion effect on selective censoring reported in Study 2. We found a difference in fusion levels between people who did vs. did not complete the T2 session such that individuals who completed T2 were more fused with the cause (t(462) = 2.01, p = .05, d = −0.23).

8.3.2. Comment moderation procedure

As in the previous studies, we asked participants to help moderators of a college-run discussion forum identify inappropriate posts for removal. We gathered 14 pro-gun-rights comments and 14 pro-gun-control comments from the internet, resulting in 28 comments. We created offensive and inoffensive versions of each comment by including or excluding offensive phrases. Participants read either the offensive or inoffensive version of each of the 28 comments. Overall, participants read four types of comments (N = 7 for each type): Offensive-Pro-gun-rights, Inoffensive-Pro-gun-rights, Offensive-Pro-gun-control, and Inoffensive-Pro-gun-control (see Table 4 for example comments). As in Study 2, each comment was accompanied by a user icon and timestamp, as in real online forums. The pro-gun-rights comments were all posted by a single user, and the pro-gun-control comments were all posted by another user. As in the previous studies, for each comment, participants recommended deletion or retention. After evaluating all comments, participants were also asked whether the two users whose comments they read should be banned from the blog (“Ban this user from the blog” or “Do not ban this user from the blog”). Finally, participants rated how much they doubted that the forum was real on a five-point scale (1 = not at all, 5 = a great deal). The mean rating (M = 2.65, SD = 0.99) was lower than the mid-point of the scale (i.e., 3 = A moderate amount; t(366) = −6.77, p < .001, d = −0.35), suggesting that participants generally did not doubt the veracity of the paradigm.
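The realism check above can be reproduced from the reported summary statistics alone. The following is a minimal sketch, not the authors' analysis script: the function name `one_sample_t` is hypothetical, and n = 367 is an assumption inferred from the reported 366 degrees of freedom.

```python
import math

def one_sample_t(mean, sd, n, mu):
    """Return (t, df, d) for a one-sample t-test computed from summary statistics."""
    se = sd / math.sqrt(n)      # standard error of the mean
    t = (mean - mu) / se        # test statistic against the reference value mu
    d = (mean - mu) / sd        # Cohen's d for a one-sample design
    return t, n - 1, d

# M and SD are the values reported in the text; n = 367 is inferred from df = 366.
t, df, d = one_sample_t(mean=2.65, sd=0.99, n=367, mu=3.0)
print(f"t({df}) = {t:.2f}, d = {d:.2f}")
```

Under these assumptions the sketch recovers the reported test statistic and effect size to two decimals.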

Table 4.

Sample comments rated by participants in Study 3. The study included 28 comments (14 pro-gun-rights and 14 pro-gun-control), each of which had an offensive and an inoffensive version. Participants rated either the offensive or inoffensive version of each of the 28 comments. The comments were presented in the format illustrated in Fig. 3 and in random order.

| | Sample comments rated by Participant 1 | Sample comments rated by Participant 2 |
|---|---|---|
| Pro-gun-rights | PostalExplorer: We must defend the right to keep and bear arms through communication and coordinated action, retarded dumbasses like you just don't get it. [offensive] PostalExplorer: Everyone should be pro gun. Pro gun = pro freedom. Pro gun = anti tyranny. [inoffensive] | PostalExplorer: We must defend the inherent right to keep and bear arms through communication and coordinated action. [inoffensive] PostalExplorer: You're must be an unfixable dumbfuck if you don't get this: Pro gun = pro freedom. Pro gun = anti tyranny. [offensive] |
| Pro-gun-control | Emerald-3: Why aren't guns and, oh yeah, assault rifles banned? Why aren't you banned? It is unbelievable that this has been allowed to continue. I am mortified that you exist. Enough is enough! #guncontrol #fuckguns [offensive] Emerald-3: I don't care about Thoughts and Prayers. It's just a phrase that people use instead of “Thoughts and Actions”. [inoffensive] | Emerald-3: Why aren't guns and specifically assault rifles banned? It is unbelievable that this has been allowed to continue. Enough is enough! #guncontrol #nomoreguns [inoffensive] Emerald-3: I Don't Give a Fuck About Your Thoughts and Prayers. It's just a shitty, waste of words that people use instead of “Thoughts and Actions”. [offensive] |

For each participant, we calculated censoring rates corresponding to comments congruent and incongruent with their own position on guns. For the offensiveness-related analyses, we also computed censoring rates for each of the four types of comments (Offensive-Congruent, Offensive-Incongruent, Inoffensive-Congruent, and Inoffensive-Incongruent). Overall, participants censored offensive comments (M = 0.58, SD = 0.28) more than inoffensive comments (M = 0.07, SD = 0.12; t(370) = 33.98, p < .001, d = 2.27), indicating that the offensiveness manipulation was successful. The censoring rates for offensive and inoffensive comments were correlated, albeit more weakly than in Study 1 (r(369) = 0.17, p < .001).
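To make the scoring concrete, the per-participant censoring rates described above could be computed along the following lines. This is an illustrative sketch only: the function `censoring_rates`, the helper `block`, the stance labels, and the example decisions are all hypothetical and do not come from the study materials.

```python
from collections import defaultdict

def censoring_rates(own_position, decisions):
    """Proportion of comments recommended for deletion, by comment type.

    own_position: the participant's stance, "gun-rights" or "gun-control".
    decisions: iterable of (comment_stance, is_offensive, deleted) tuples.
    """
    counts = defaultdict(lambda: [0, 0])  # key -> [n_deleted, n_total]
    for stance, is_offensive, deleted in decisions:
        congruence = "congruent" if stance == own_position else "incongruent"
        tone = "offensive" if is_offensive else "inoffensive"
        # Tally the same decision under its congruence, its tone, and the crossed type.
        for key in (congruence, tone, f"{tone}-{congruence}"):
            counts[key][0] += int(deleted)
            counts[key][1] += 1
    return {key: n_del / n_tot for key, (n_del, n_tot) in counts.items()}

def block(stance, is_offensive, n_deleted, n_total=7):
    """Hypothetical helper: n_total comments of one type, n_deleted of them deleted."""
    return [(stance, is_offensive, i < n_deleted) for i in range(n_total)]

# Hypothetical pro-gun-control participant who saw 7 comments of each type.
decisions = (block("gun-rights", True, 6) + block("gun-rights", False, 1)
             + block("gun-control", True, 3) + block("gun-control", False, 0))
rates = censoring_rates("gun-control", decisions)
selective_index = rates["incongruent"] - rates["congruent"]
print(rates["incongruent"], rates["congruent"], selective_index)
```

For this hypothetical participant, the incongruent rate is 7/14 and the congruent rate is 3/14, so the difference between the two is positive, which is the pattern the text calls selective censoring.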

9. Study 3 results

9.1. Did people selectively censor comments incongruent with their cause and the comments' authors?

We tested the pre-registered hypothesis that people would selectively censor incongruent comments more than congruent comments. We conducted a paired t-test comparing the censoring rates for incongruent vs. congruent comments. Replicating findings from the first two studies, people censored more incongruent comments (M = 36.97%; SD = 19.64) than congruent comments (M = 27.88%; SD = 17.62), t(370) = 10.02, p < .001, d = 0.49.
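As a rough consistency check on the statistics above, the effect size can be recomputed from the reported means and standard deviations. The sketch below assumes that d was computed with the average-variance (pooled) standard deviation, which is one common convention and may not be the authors' exact method. It also approximates the paired t statistic using the correlation between the two censoring rates (r = 0.56) taken from the correlation table preceding Section 8.3; because all inputs are rounded, the t value is only approximate.

```python
import math

# Reported summary statistics (percent of comments censored).
m_inc, sd_inc = 36.97, 19.64   # incongruent comments
m_con, sd_con = 27.88, 17.62   # congruent comments
n = 371
r = 0.56  # correlation between the two rates, from the correlation table (rounded)

mean_diff = m_inc - m_con

# Cohen's d using the average-variance SD (an assumed convention).
pooled_sd = math.sqrt((sd_inc**2 + sd_con**2) / 2)
d = mean_diff / pooled_sd

# Paired t: the SD of the difference scores depends on the correlation r.
sd_diff = math.sqrt(sd_inc**2 + sd_con**2 - 2 * r * sd_inc * sd_con)
t_approx = mean_diff / (sd_diff / math.sqrt(n))

print(f"d = {d:.2f}; approximate t({n - 1}) = {t_approx:.2f}")
```

Under these assumptions, d rounds to the reported 0.49, and the approximate t lands close to, but not exactly at, the reported 10.02, as expected with rounded inputs.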

We also conducted a pre-registered analysis testing whether people were disproportionately willing to ban the author of the incongruent comments relative to the author of the congruent comments. Contrary to our hypothesis and the results of Study 1, we did not find a significant difference (χ2(1) = 1.92, p = .17). Nevertheless, the proportions trended in the expected direction: 32.69% of participants banned the user who posted incongruent comments, whereas 29.51% banned the user who posted congruent comments.

9.2. Did identity fusion amplify the selective censoring of incongruent comments?

To test our pre-registered hypothesis that strongly fused individuals would be especially likely to selectively censor incongruent comments, we tested an SEM model (see Fig. 6) with residual covariances between the censoring rates. (Alternate analyses treating the difference between censoring rates of incongruent and congruent comments as the selective censoring index, reported in Table 6 below and in SOM-II, yield the same findings.) As in Studies 1 and 2, we standardized the predictors in all the SEM analyses, and we report unstandardized regression coefficients. Fusion positively (but not significantly) predicted censoring incongruent comments (c1 path; b = 0.02, 95% CI = [−0.004, 0.04], p = .12) and did not predict censoring congruent comments (c2 path; b = −0.006, 95% CI = [−0.02, 0.01], p = .49). The difference between the fusion effects on censoring incongruent vs. congruent comments was significant (Δc = c1 − c2; χ2(1) = 6.01, p = .01), which is evidence that fusion is associated with selective censoring. To illustrate, participants who were strongly fused (+1 SD) censored 41.47% of the incongruent comments they read but only 28.56% of the congruent comments. Weakly fused participants censored 35.92% of the incongruent comments and 29.52% of the congruent comments, indicating weaker selective censoring. The effect of fusion on selective censoring remained significant when we controlled for whether participants favored gun rights or gun control (χ2(1) = 9.24, p = .002), and the effect was not moderated by position on gun rights (χ2(1) = 0.05, p = .83).

Fig. 6.

Structural Equations Model depicting the effect of identity fusion on selective censoring of incongruent vs. congruent comments (Study 3). The c1 and c2 paths represent the effects of fusion on censoring incongruent and congruent comments respectively. The significant difference between the two paths (Δc) indicates that fusion is associated with selectively censoring incongruent comments. * indicates p < .05.

Table 6.

Path coefficients (c1 and c2) and Chi-sq values (χ2) of SEM models and coefficients from regression models testing the effects of each identity-related measure on selective censoring (Study 3). Note that each model included only one predictor.

| Predictor in model | SEM: Censoring incongruent comments (c1) | SEM: Censoring congruent comments (c2) | SEM: Selective censoring (Δc = c1 − c2), χ2 | Selective censoring difference index (b) |
|---|---|---|---|---|
| Model 1: Fusion with cause | 0.02 | −0.006 | 6.01⁎ | 0.02⁎ |
| Model 2: Attitude importance | 0.03⁎ | −0.01 | 13.45⁎⁎⁎ | 0.03⁎⁎⁎ |
| Model 3: Attitude certainty | 0.03⁎⁎ | −0.002 | 9.86⁎⁎ | 0.03⁎⁎ |
| Model 4: Attitude centrality | 0.02⁎ | −0.01 | 11.26⁎⁎⁎ | 0.03⁎⁎⁎ |
| Model 5: Attitude extremity | 0.02⁎ | −0.002 | 7.01⁎⁎ | 0.02⁎⁎ |
| Model 6: Identification with cause supporters | 0.02 | −0.007 | 5.51⁎ | 0.02⁎ |
| Model 7: Moral conviction | 0.01 | −0.01 | 7.33⁎⁎ | 0.03⁎⁎ |

SEM = structural equation modeling.

Note. In each model, the predictor was standardized, but the censoring rates were not. The censoring rates ranged from 0 to 1. The path coefficients reported are unstandardized. * indicates p < .05. ** indicates p < .01. *** indicates p < .001.
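The two ways of quantifying selective censoring in Table 6 (the difference between the c1 and c2 paths, and the regression coefficient b on the difference index) are closely related. The sketch below illustrates this with ordinary least-squares slopes on hypothetical data: the slope for the difference score equals the difference between the two separate slopes. The data values and the helper names `standardize` and `ols_slope` are invented for illustration, and the sketch uses plain OLS rather than the authors' SEM software.

```python
from statistics import mean, pstdev

def standardize(xs):
    """z-score a predictor, mirroring the standardized predictors in the text."""
    m, s = mean(xs), pstdev(xs)
    return [(x - m) / s for x in xs]

def ols_slope(x, y):
    """Unstandardized slope from a simple least-squares regression of y on x."""
    mx, my = mean(x), mean(y)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    sxx = sum((xi - mx) ** 2 for xi in x)
    return sxy / sxx

# Hypothetical data for seven participants (not from the study).
fusion = [1.2, 2.5, 3.0, 3.8, 4.1, 5.6, 6.0]
incongruent = [0.29, 0.36, 0.32, 0.43, 0.36, 0.50, 0.43]  # censoring rates, 0 to 1
congruent = [0.29, 0.21, 0.36, 0.29, 0.21, 0.29, 0.29]

z = standardize(fusion)
c1 = ols_slope(z, incongruent)   # analogue of the c1 path
c2 = ols_slope(z, congruent)     # analogue of the c2 path
index = [i - c for i, c in zip(incongruent, congruent)]
b = ols_slope(z, index)          # analogue of the difference-index coefficient

print(f"c1 = {c1:.3f}, c2 = {c2:.3f}, c1 - c2 = {c1 - c2:.3f}, b = {b:.3f}")
```

Because the slope is linear in the outcome, b and c1 − c2 coincide up to floating-point error, which is why the two columns in Table 6 are expected to tell the same story.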

9.2.1. Did offensiveness moderate the effect of fusion on selective censoring?

As in the previous studies and consistent with the pre-registration, we modeled the paths from fusion to participants' censoring rates for four types of comments: Offensive-Congruent, Offensive-Incongruent, Inoffensive-Congruent, and Inoffensive-Incongruent (see Fig. 7). Among inoffensive comments, fusion was associated with selectively censoring incongruent comments over congruent comments (Δq = q1 – q2; b = 0.03, 95% CI = [0.009, 0.04], p = .003). Among offensive comments, the effect was in the predicted direction but not significant (Δp = p1 – p2; b = 0.02, 95% CI = [−0.007, 0.04], p = .16). (The four path coefficients are reported in SOM-IV.) Comparing the two selective censoring effects for offensive vs. inoffensive comments (Δp – Δq) revealed no difference (χ2(1) = 0.39, p = .53).

Fig. 7.

Structural Equations Model examining the effect of identity fusion on selective censoring of incongruent vs. congruent comments among offensive and inoffensive comments (Study 3). Δp and Δq represent fusion's effects on selective censoring among offensive comments and inoffensive comments, respectively. The difference between them was not significant, which indicates that comment offensiveness did not moderate fusion's effect on selective censoring. See SOM-IV for path coefficients. ** indicates p < .01.

9.3. Did fusion's effect on selective censoring of incongruent comments generalize to other identity-related measures?

We then tested our pre-registered hypothesis that fusion's effect on selective censoring would extend to six other identity-related measures. Using models similar to the fusion analysis, we tested the effect of each predictor on selective censoring. Table 6 reports each model's path coefficients from the tested variable to censoring incongruent (c1) and congruent (c2) comments, and the chi-square difference between the two paths (c1 – c2) indicating the extent to which the tested variable is associated with selective censoring. The last column in Table 6 presents linear regression coefficients from alternate models testing the effect of each identity-related measure on the difference between participants' censoring rates for incongruent and congruent comments. The significant chi-square differences (Δc) and regression coefficients (b) indicate that the selective censoring effect generalized to each of the six additional identity-related measures. In contrast to Study 2, the selective censoring effect was largely driven by positive associations between the identity-related measures and censoring incongruent comments.

9.4. Did selective censoring of incongruent comments depend on people's ideologies?

We tested another exploratory SEM model to assess the effect of people's stance on gun rights (pro-gun-rights vs. pro-gun-control). Gun-control supporters selectively censored incongruent comments more than gun-rights supporters (χ2(1) = 17.09, p < .001) even though pro-gun-rights supporters tended to be more strongly fused than pro-gun-control supporters (t(367) = 2.18, p = .03, d = 0.28). Study 3 was conducted during a period that saw increased gun sales (Collins and Yaffe-Bellany, 2020), which should have increased the threat perceived by gun-control supporters, increasing their tendency to selectively censor opposition.

10. Study 3 discussion

Study 3 demonstrated that the selective censoring effect extends beyond religiously tinged issues such as abortion rights. Specifically, people selectively censored comments that opposed their views on the gun rights debate, and this effect was amplified among people who were strongly fused with their cause. As in Studies 1 and 2, fusion amplified the selective censoring of incongruent comments even when they were inoffensive. Contrary to Study 2, we did not find a significant effect of fusion on selective censoring among offensive comments, but our study may have been underpowered to detect this effect. Further, gun-control proponents selectively censored more than gun-rights proponents, which, when taken together with Studies 1 and 2, suggests that people's willingness to selectively censor may depend on the cause at hand (pro-choice or pro-gun-control) and the political context (e.g., level of threat faced by the cause) rather than political ideology (left or right).

Study 3 also replicated the Study 2 finding that selective censoring extends to a range of identity-related constructs including attitude strength, identification with supporters, and moral conviction. Nevertheless, we did not find similar results across Studies 2 and 3 regarding the degree to which each identity-related process produced a lenience toward congruent content or an intolerance of incongruent content. Future research will need to disentangle the links between identity-related processes and selective censoring.

10.1. General discussion