Summary

The difficulty of obtaining reliable individual identification of animals has limited researchers' ability to obtain quantitative data to address important ecological, behavioral, and conservation questions. Traditional marking methods can place animals at undue risk. Machine learning approaches that identify species from animal images have proved successful, but many questions require a tool that identifies not only species but also individuals. Here, we introduce a system developed specifically for automated face detection and individual identification with deep learning methods, using both videos and still-framed images, that can be reliably used for multiple species. The system was trained and tested with a dataset containing 102,399 images of 1,040 individuals of known identity across 41 primate species and 6,562 images of 91 individuals across four carnivore species. For primates, the system correctly identified individuals 94.1% of the time and could process 31 facial images per second.

Subject Areas: Zoology, Ethology, Artificial Intelligence

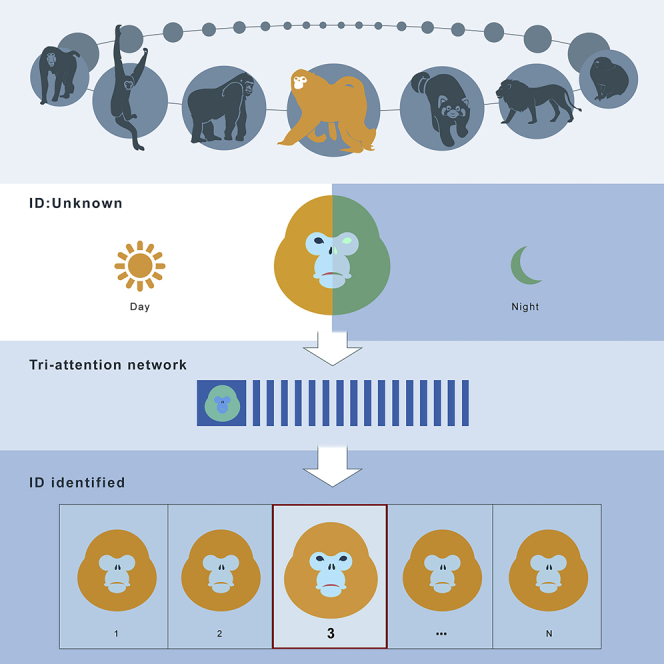

Graphical Abstract

Highlights

- The Tri-AI system can rapidly detect and identify individuals from videos and images
- Tri-AI had an individual identification accuracy of 94% for 41 primate and 4 carnivore species
- The system could individually recognize 31 animals/s with images taken day or night
- Systems like Tri-AI make around-the-clock monitoring and behavior analysis possible


Introduction

Answering many theoretical questions in ecology and conservation frequently requires the identification and monitoring of individual animals (Nathan, 2008). However, traditional marking methods are often costly and involve considerable risk to the animal, a risk that is typically unacceptable for endangered species (Fernandezduque et al., 2018). As digital image acquisition and camera traps have matured, it has become relatively easy to capture repeated images of animals; however, using these images to address many ecological questions requires accurate individual identification (Wang et al., 2013).

By employing image matching methods (Zeppelzauer, 2013; Zhu et al., 2013; Chu and Liu, 2013; Finch and Murray, 2003) and machine learning (Loos and Ernst, 2013; Swanson et al., 2016; Nathan, 2008), researchers have accurately identified species from images using body surface characteristics such as color (Zeppelzauer, 2013; Zhu et al., 2013; Wichmann et al., 2010), shape (Chu and Liu, 2013; Tweed and Calway, 2002; Finch and Murray, 2003), and texture (Crouse et al., 2017). Identifying individuals, however, requires images of specific body parts (Norouzzadeh et al., 2018; Burghardt et al., 2004; Lahiri et al., 2011; Hiby et al., 2009; Karanth, 1995), and this has been done with the abdomens of penguins (Burghardt et al., 2004), the stripes of zebras (Lahiri et al., 2011) and tigers (Xu and Qi, 2008), and the unique spot and scar features of whale sharks (Arzoumanian et al., 2005). Although helpful for specific studies, these methods rely on species-specific traits and therefore cannot be transferred across species.

The objective of our study was to determine whether animal facial images could serve as a universal body part for individual detection and identification. Machine learning for facial recognition has already been developed for some animals. For example, Burghardt and Calic (2006) presented a method based on Haar-like features and the AdaBoost algorithm to detect lion faces, and Ernst and Küblbeck (2011) extracted features at key facial points of chimpanzees for individual identification. Recently, Hou et al. (2020) used VGGNet for face recognition on 65,000 face images of 25 pandas and obtained an individual identification accuracy of 95%. Schofield et al. (2019) presented a deep convolutional neural network (CNN) approach for face detection, tracking, and recognition of wild chimpanzees from long-term video records; their 14-year dataset yielded 10 million face images from 23 individuals, and they obtained an overall accuracy of 92.5% for identity recognition and 96.2% for sex recognition. Such studies suggest that facial images could be a universal feature for individual detection and identification in mammals (Kumar et al., 2017).

Past studies have applied machine learning to recognize species; however, these methods use classical image segmentation and hand-crafted features to perform coarse-grained species or individual identification, and they require high image quality. Their recognition performance therefore degrades when the face is occluded or the animal changes posture, which makes them impractical under uncontrolled field conditions.

CNNs handle nonlinear problems well because of their powerful feature extraction capabilities, which offer new solutions to the occlusion and posture changes of animals' faces (Krizhevsky et al., 2012). CNNs have become an active research topic in computer vision, and various derivative network models have been proposed, such as ZFNet (Zeiler and Fergus, 2014), VGG (Simonyan and Zisserman, 2015), GoogLeNet (Szegedy et al., 2015), ResNet (He et al., 2016), and DenseNet (Huang et al., 2017). In wildlife detection and identification, Freytag et al. (2016) used a Log-Euclidean framework to improve the ability of CNN-based methods to predict the identity, age, and gender of chimpanzees. Norouzzadeh et al. (2018) used a variety of CNN architectures and demonstrated that deep learning (DL) can automate species identification for 99.3% of 3.2 million wildlife images from the Serengeti while matching the 96.6% accuracy of teams of volunteers. Species and individual identification based on machine learning has achieved reasonably good results, especially when many images are available.

To identify individuals accurately across multiple species using the same methods, we designed a deep neural network with an attention mechanism for individual identification of primates and other species. We then developed and tested a system for automatic individual identification that uses DL techniques to detect a body part (the face) and identify individuals from it. We believe that this represents a breakthrough in software for identifying individual animals that will be very useful for biological research. We call our system Tri-AI; it performs automated face detection, identification, and tracking. Here we report on our efforts to design Tri-AI for individual identification of 41 primate and 4 carnivore species (Figure 1).

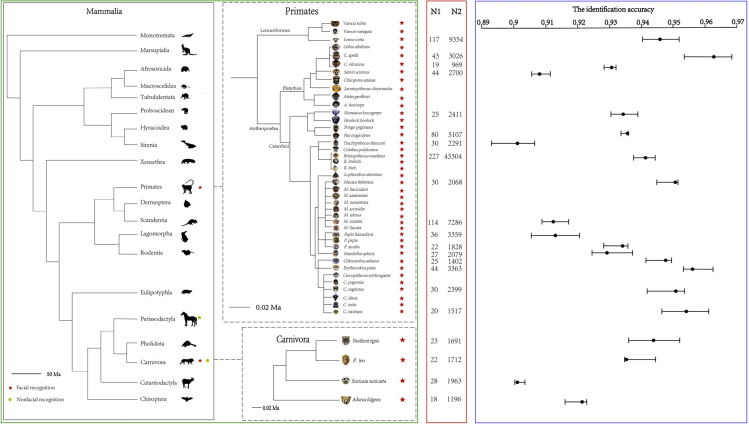

Figure 1.

Application of Deep Learning in Animals and the Individual Identification Accuracy of 21 Species

Green dots, non-facial (body) biometric recognition; red stars, facial biometric recognition. Dashed-line box: species in this study for which Tri-AI has successfully performed individual identification (left green box). N1 is the number of individuals, and N2 is the number of facial images for the corresponding species in our image dataset (middle red box). For each species, the average, maximum, and minimum accuracy values from multiple tests are given (right blue box).

Results

Overview of the Experimental Results

We designed Tri-AI (related to Supplemental Information) to quickly detect, identify, and track individuals of multiple species from videos or still-framed images (related to Transparent Methods). To accomplish these tasks, Tri-AI required a large amount of training data. We therefore established an Image Acquisition Standard for animals (related to Transparent Methods) and then extracted facial images of known individuals from images or videos to build an image set (related to Transparent Methods), which was used separately for face detection and individual identification. We trained and tested Tri-AI with an initial database that contained 102,399 images of 1,040 individuals of known identity across 41 primate species and 6,562 images of 91 individuals across four carnivore species (related to Figure 1 and Table S1). Tri-AI achieved an individual identification accuracy of 94.11% (mean ± SE = 94.11% ± 0.24%; Figure 1, Table S1), with an average detection and recognition throughput of 31 images per second.

The identification accuracy for each species was obtained with the proposed Tri-attention network (related to Transparent Methods) on the test image dataset (Table S1); for golden snub-nosed monkey test images, individual identification accuracy was 94.12% (related to Table S1). These images were taken with mobile phones or single-lens reflex (SLR) cameras and have relatively high resolutions, and the faces are clear with little occlusion. Most golden snub-nosed monkey images were captured on different days, whereas the images of other species were obtained on a single day in zoos. Across the species examined, Tri-AI achieved a detection accuracy (i.e., correctly detecting the animal against its surroundings) of 91.1%–97.7%. Thus, our work suggests that animals' faces can be used to identify individuals.
The dataset and the related testing models have been released publicly at the database AFD (Animal Face Database): http://dx.doi.org/10.17632/z3x59pv4bz.2.

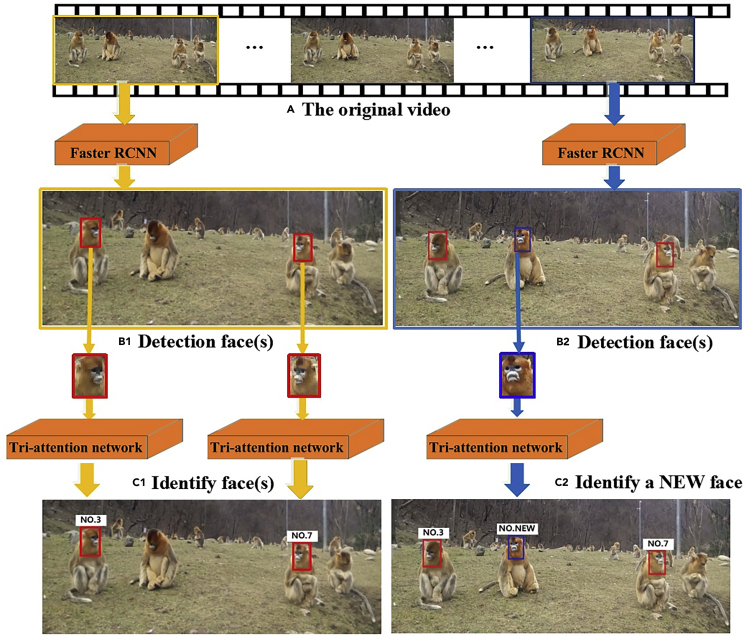

The three still-framed images in Figure 2A show Tri-AI detecting the animal's facial area and then identifying the individual. If the individual is already in the database, Tri-AI identifies it and provides its identification number or name (Figures 2A, 2B1, and 2C1). If the individual is not in the database, Tri-AI labels the animal as a new individual and assigns it a new number or name (Figures 2A, 2B2, and 2C2).
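The known-versus-new decision described above can be illustrated with a short Python sketch. The text does not say how Tri-AI decides that a face belongs to no known individual, so this sketch assumes a simple open-set rule: if the classifier's highest softmax probability falls below a hypothetical threshold `NEW_ID_THRESHOLD`, the face is treated as a new individual and given a fresh ID. The function names, the threshold value, and the `new_###` naming scheme are all illustrative, not part of Tri-AI.

```python
import math
from typing import Dict, List, Tuple

# Hypothetical confidence cut-off; not a value reported for Tri-AI.
NEW_ID_THRESHOLD = 0.5


def softmax(logits: List[float]) -> List[float]:
    """Numerically stable softmax over a list of class scores."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def assign_identity(logits: List[float], known_ids: List[str],
                    registry: Dict[str, int]) -> Tuple[str, bool]:
    """Return (identity, is_new) for one detected face.

    logits    -- classifier scores, one per known individual (assumed input)
    known_ids -- names of the individuals the classifier was trained on
    registry  -- mutable counter used to mint IDs for new individuals
    """
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    if probs[best] >= NEW_ID_THRESHOLD:
        return known_ids[best], False
    # Low confidence: treat the face as a previously unseen individual.
    registry["next_new"] = registry.get("next_new", 0) + 1
    return f"new_{registry['next_new']:03d}", True
```

For example, a face scored `[4.0, 0.1, 0.2]` against individuals `A`, `B`, and `C` is confidently assigned to `A`, whereas uniform scores fall below the threshold and yield a new ID. In practice, such a threshold would need to be tuned on validation data.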

Figure 2.

Face Detection and Identification of the Golden Snub-Nosed Monkeys by Using Deep Learning Methods in Tri-AI

(A) An original video has many frames, and the monkeys' face areas must first be detected in each frame using Faster RCNN (Ren et al., 2015).

(B1 and B2) The detected monkey faces are marked in each frame by Faster RCNN and then input to the Tri-attention network for individual identification.

(C1) Tri-AI identifies and names all monkeys known in the database.

(C2) If Tri-AI finds a new monkey face, it assigns a new name and automatically adds the individual to the database.

For videos, Tri-AI detects animal faces and identifies them frame by frame (Figures 2A–2C); again, if a new individual appears in the video, Tri-AI gives the animal a new ID or name (Figures 2A, 2B2, and 2C2). Meanwhile, facial images of the new individual are collected, based on the locations of its detected faces, to update the original image dataset; video is especially useful here because it yields many facial images of the same individual. When the new individual has more than 60 facial images, the updated dataset is used to retrain the Tri-attention network offline. If images of these individuals are collected in the future, the updated Tri-attention network will identify them. Most importantly, this update occurs even if the observer cannot yet distinguish the animal by its features (Figure 2), so it can help researchers learn to recognize new individuals.
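The enrollment and retraining trigger described above might be organized as in the following sketch. It assumes that each detected face arrives as a pixel bounding box `(x1, y1, x2, y2)` on a NumPy image array and that the new individual has already been given an ID; the class `NewIndividualBuffer` is hypothetical. Only the retraining criterion (more than 60 facial images) comes from the text, and the offline retraining itself is not shown.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

import numpy as np

# From the text: retrain once a new individual has more than 60 facial images.
MIN_IMAGES_FOR_RETRAINING = 60

Box = Tuple[int, int, int, int]  # (x1, y1, x2, y2) in pixel coordinates


class NewIndividualBuffer:
    """Collects face crops of newly encountered individuals across video frames."""

    def __init__(self, min_images: int = MIN_IMAGES_FOR_RETRAINING):
        self.min_images = min_images
        self.crops: Dict[str, List[np.ndarray]] = defaultdict(list)

    def add(self, frame: np.ndarray, box: Box, new_id: str) -> None:
        """Crop the detected face region (rows y1:y2, cols x1:x2) and store it."""
        x1, y1, x2, y2 = box
        self.crops[new_id].append(frame[y1:y2, x1:x2].copy())

    def ready_for_retraining(self) -> List[str]:
        """IDs whose collected image count exceeds the retraining threshold."""
        return sorted(i for i, c in self.crops.items() if len(c) > self.min_images)
```

Crops are copied rather than kept as array views, so each saved face stays valid even if the video frame buffer is reused for the next frame.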

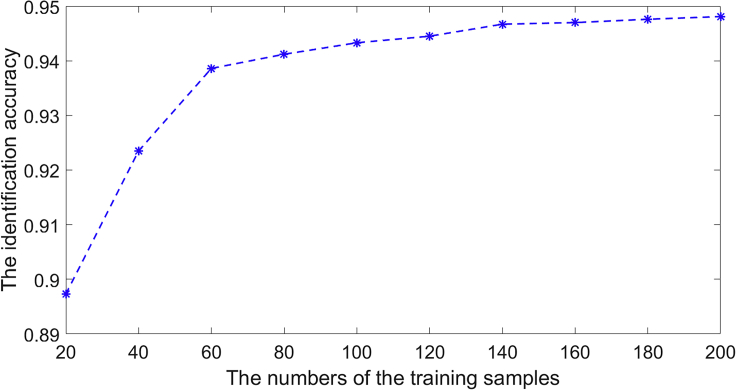

We obtained 98.70% face detection accuracy and 92.01% individual identification accuracy on test videos of golden snub-nosed monkeys. Tri-AI needs to be trained with 60 or more images per individual to obtain stable identification accuracy (Figure 3). These results demonstrate that Tri-AI can reliably identify individuals across species using facial images (related to Tables S1 and S2). The promising performance of Tri-AI suggests that this approach can be used to automatically identify and track individuals of many wildlife species, and Tri-AI can perform real-time monitoring by day or at night.
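The text does not state how the inflection point near 60 images per individual was located in Figure 3. One common heuristic, the core idea of the Kneedle method, rescales both axes to [0, 1] and picks the point lying farthest above the straight line joining the first and last points. The sketch below assumes the sample counts are sorted in ascending order and that accuracy rises with them; the toy curve in the usage note is synthetic, not data from this study.

```python
from typing import Sequence


def knee_point(samples: Sequence[float], accuracy: Sequence[float]) -> float:
    """Return the sample count where an increasing, concave curve bends most.

    Both axes are rescaled to [0, 1]; the knee is the point lying farthest
    above the straight chord from the first point to the last point.
    """
    if len(samples) != len(accuracy) or len(samples) < 3:
        raise ValueError("need at least three paired points")
    x0, x1 = samples[0], samples[-1]
    y0, y1 = accuracy[0], accuracy[-1]
    if x1 == x0 or y1 == y0:
        raise ValueError("curve must span a non-zero range on both axes")
    best_x, best_gap = samples[0], float("-inf")
    for x, y in zip(samples, accuracy):
        xn = (x - x0) / (x1 - x0)
        yn = (y - y0) / (y1 - y0)
        # Vertical gap above the diagonal chord; proportional to the
        # perpendicular distance, so it has the same maximizer.
        gap = yn - xn
        if gap > best_gap:
            best_x, best_gap = x, gap
    return best_x
```

For the synthetic curve `samples = [0, 20, 40, 60, 80, 100, 120]` and `accuracy = [0.0, 0.3, 0.6, 0.9, 0.92, 0.94, 0.96]`, the function returns `60`, the point where the steep rise gives way to small gains.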

Figure 3.

The Relationship between the Identification Accuracy and the Training Sample Numbers

Accuracy improves as the number of training samples per individual increases; for golden snub-nosed monkeys, the inflection point is at around 60 training images per individual.

Details of Experimental Results

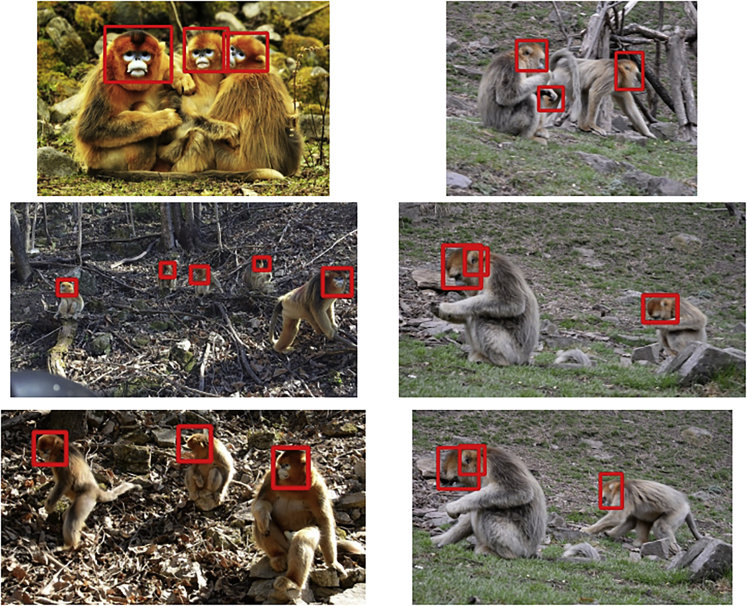

Face Detection for Images

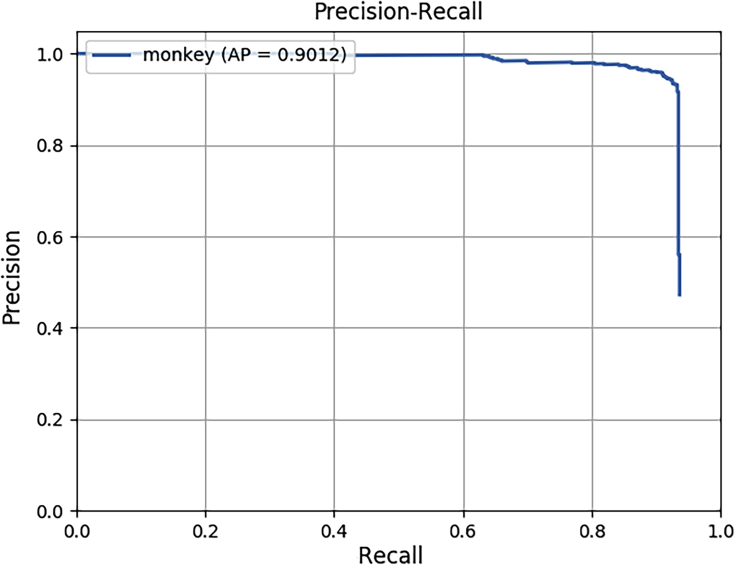

Tri-AI can detect faces with a high level of accuracy. We tested Tri-AI on 500 randomly selected grayscale images of golden snub-nosed monkeys: it successfully detected 632 faces but failed in 56 cases. Of these, 48 faces could not be detected because they were shaded, and all falsely detected faces contained only a partial face, as judged by S.G. We demonstrated that Faster RCNN (Figure S1) can also detect faces of other species, provided it is given a sufficiently robust label file. We tested 1,500 images of three species: 500 images of golden snub-nosed monkeys, 500 images of Tibetan macaques (Macaca thibetana), and 500 images of individually known tigers (Panthera tigris). We checked the test images one by one and obtained high face detection accuracy for each species: 91.13% for golden snub-nosed monkeys, 97.71% for Tibetan macaques, and 97.70% for tigers. The full precision-recall curve of Faster RCNN for face detection of golden snub-nosed monkeys is shown in Figure 4; the curve bows toward the upper-right corner, indicating that precision stays high across a wide range of recall. We also provide samples of false face detection cases (Figure 5).
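A precision-recall curve such as the one in Figure 4 is typically built by ranking detections by confidence and matching each one to an unclaimed ground-truth box. The sketch below assumes greedy matching at an intersection-over-union (IoU) threshold of 0.5 within a single image, which is a common convention rather than the documented Tri-AI evaluation protocol, and it computes a simple non-interpolated average precision. The detection accuracy percentages reported above are not necessarily the same metric.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def precision_recall(dets: Sequence[Tuple[float, Box]], gts: Sequence[Box],
                     iou_thr: float = 0.5) -> List[Tuple[float, float]]:
    """Greedy score-ordered matching; returns (recall, precision) after each detection."""
    if not gts:
        raise ValueError("need at least one ground-truth box")
    matched = [False] * len(gts)
    tp = fp = 0
    curve = []
    for _score, box in sorted(dets, key=lambda d: d[0], reverse=True):
        # Pick the unmatched ground-truth box with the highest IoU >= threshold.
        best_j, best_iou = -1, iou_thr
        for j, gt in enumerate(gts):
            overlap = iou(box, gt)
            if not matched[j] and overlap >= best_iou:
                best_j, best_iou = j, overlap
        if best_j >= 0:
            matched[best_j] = True
            tp += 1
        else:
            fp += 1
        curve.append((tp / len(gts), tp / (tp + fp)))
    return curve


def average_precision(curve: Sequence[Tuple[float, float]]) -> float:
    """Step-wise area under the curve: sum of recall increments times precision."""
    ap, prev_recall = 0.0, 0.0
    for recall, precision in curve:
        ap += (recall - prev_recall) * precision
        prev_recall = recall
    return ap
```

To evaluate a whole dataset, detections from all images would be ranked together by score, each matched only against the ground-truth faces of its own image, with recall divided by the total number of ground-truth faces.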

Figure 4.

Precision-Recall Curves of Face Detection by Faster RCNN on Golden Snub-Nosed Monkeys

Figure 5.

The Detected Results of Golden Snub-Nosed Monkeys by Faster RCNN

In addition, Faster RCNN can be pre-trained on labeled images of golden snub-nosed monkeys and then fine-tuned with a small set of labeled images of other species. For example, we pre-trained Faster RCNN with 1,200 labeled images of golden snub-nosed monkeys and fine-tuned the pre-trained model with 500 labeled images of Tibetan macaques, obtaining an average face detection accuracy of 96.00%, compared with 97.7% for Faster RCNN trained on 1,200 labeled images of Tibetan macaques without pre-training.

Face Recognition for Images

We used Tri-AI to determine the identity of detected faces and tested its ability to assign individual identities correctly. To do this, we used our image dataset of 41 primate species from wild and captive animals in China (102,399 facial images of 1,040 individuals) (related to Transparent Methods). We selected the 17 primate species in the dataset that had more than 19 identified individuals and tested Tri-AI's accuracy with five rounds of non-repetitive random sampling. Tri-AI obtained an average identification accuracy of 93.58% (range 90.14%–96.27%; Figure 1 and Table S1). This accuracy is achieved rapidly: the Tri-attention network (Figures S2–S6) identified 1,000 facial images in 20 s (50 images per second). The remaining 24 species in the dataset (No. 18–41, related to Table S1) each had fewer than 12 individuals. Despite the small number of individuals, Tri-AI (Figures S7–S16) achieved an identification accuracy of 97.58% on these species (7,883 facial images in total).
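The mean ± SE values reported for repeated tests can be computed as below. The sketch assumes the SE is taken across independent test runs (for example, the five random-sampling rounds described above) as the sample standard deviation divided by the square root of the number of runs; the text does not specify this formula, so treat it as an assumption.

```python
import math
import statistics
from typing import Sequence, Tuple


def mean_and_se(accuracies: Sequence[float]) -> Tuple[float, float]:
    """Mean and standard error (sample SD / sqrt(n)) over repeated test runs."""
    if len(accuracies) < 2:
        raise ValueError("need at least two runs to estimate a standard error")
    mean = statistics.fmean(accuracies)
    se = statistics.stdev(accuracies) / math.sqrt(len(accuracies))
    return mean, se
```

For three hypothetical runs with accuracies 0.90, 0.92, and 0.94, this gives a mean of 0.92 and an SE of about 0.0115.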

Training and Testing Samples

We first detected the monkeys' facial images in all captured images and removed near-duplicate facial images. We then randomly selected 60% of the facial images of each species as training samples, 10% as validation samples, and the remaining 30% as test samples. To determine the sample size needed for training Tri-AI, we analyzed the relationship between the number of training samples and identification accuracy. Identification accuracy increased with the number of training samples per individual, with an inflection point at approximately 60 training images for reliably identifying an individual from its face images (Figure 3).
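A 60/10/30 split like the one described above can be drawn independently within each group so that every group is represented in all three subsets. In this sketch the grouping label is an assumption: it could be the species, as the text states, or the individual ID, which keeps each individual present in both training and test data. The function name and fixed seed are illustrative.

```python
import random
from collections import defaultdict
from typing import Dict, Hashable, List, Sequence, Tuple


def stratified_split(labels: Sequence[Hashable],
                     fractions: Tuple[float, float, float] = (0.6, 0.1, 0.3),
                     seed: int = 0) -> Tuple[List[int], List[int], List[int]]:
    """Split sample indices into train/val/test, separately within each label."""
    if abs(sum(fractions) - 1.0) > 1e-9:
        raise ValueError("fractions must sum to 1")
    rng = random.Random(seed)
    by_label: Dict[Hashable, List[int]] = defaultdict(list)
    for idx, lab in enumerate(labels):
        by_label[lab].append(idx)
    train: List[int] = []
    val: List[int] = []
    test: List[int] = []
    for idxs in by_label.values():
        rng.shuffle(idxs)
        n_train = int(round(fractions[0] * len(idxs)))
        n_val = int(round(fractions[1] * len(idxs)))
        train += idxs[:n_train]
        val += idxs[n_train:n_train + n_val]
        test += idxs[n_train + n_val:]  # remainder goes to the test set
    return train, val, test
```

Near-duplicate removal should happen before such a split; otherwise, almost identical frames of one animal can land in both the training and test sets and inflate the measured accuracy.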

Face Detection and Individual Identification in Videos

We used 10 videos of golden snub-nosed monkeys containing 22 individuals as test data and evaluated detection and identification success frame by frame. Tri-AI obtained 98.70% face detection accuracy, 92.01% individual identification accuracy, and 87.03% identification accuracy for new individuals. To measure individual identification accuracy, we used 864 frontal facial images from the 10 videos: 795 were correctly identified, and the remaining 69 were assigned to the wrong individual (795/864 = 92.01%).
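The frame-level identification accuracy above is the fraction of face images assigned to the correct individual. A minimal Python check, assuming ground-truth and predicted IDs are available as parallel lists, reproduces the reported figure from the counts in the text: 795 correct out of 864 gives 795/864 ≈ 0.9201.

```python
from typing import Sequence


def identification_accuracy(true_ids: Sequence[str], pred_ids: Sequence[str]) -> float:
    """Fraction of face images whose predicted identity matches the ground truth."""
    if len(true_ids) != len(pred_ids) or not true_ids:
        raise ValueError("need equal-length, non-empty label sequences")
    correct = sum(t == p for t, p in zip(true_ids, pred_ids))
    return correct / len(true_ids)
```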

Discussion

Our objective was to develop a deep-network-based system for individual identification (Tri-AI) that could quickly detect, identify, and track individuals of multiple species from videos or still-framed images. To accomplish this, the system needed to learn from a large amount of prior knowledge. We built a dataset containing 102,399 images of 1,040 individuals of known identity across all subfamilies of non-human primates, plus 6,562 images of 91 individuals from four carnivore species. Primates were chosen as the main test species because advanced face identification techniques have been developed for humans. The four carnivore species were chosen because they do not have typical human-like faces.

Tri-AI can process both color and grayscale images (including night vision images [Figure S17]). As a result, Tri-AI can perform real-time monitoring by day or at night, meeting the needs of many research programs. The night vision images of golden snub-nosed monkeys and the individual recognition model have also been made publicly available in the database AFD (Animal Face Database): http://dx.doi.org/10.17632/z3x59pv4bz.2.
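The text does not describe how Tri-AI reconciles color and night vision input. One common approach, assumed here, is to convert every image to grayscale and replicate the single channel three times, so that daytime color photographs and infrared frames share the H × W × 3 format that many CNN input layers expect. The ITU-R BT.601 luma weights used below are a standard choice, not a documented Tri-AI parameter.

```python
import numpy as np

# ITU-R BT.601 luma weights, a common choice for RGB-to-gray conversion.
LUMA_WEIGHTS = np.array([0.299, 0.587, 0.114])


def to_three_channel_gray(image: np.ndarray) -> np.ndarray:
    """Convert an RGB or single-channel (e.g., infrared) image to 3-channel gray.

    Color and night-vision frames both end up as H x W x 3 float arrays, so a
    single network input layer can accept either kind of image.
    """
    if image.ndim == 3 and image.shape[2] == 3:
        gray = image.astype(np.float64) @ LUMA_WEIGHTS  # weighted sum over RGB
    elif image.ndim == 2:
        gray = image.astype(np.float64)
    elif image.ndim == 3 and image.shape[2] == 1:
        gray = image[..., 0].astype(np.float64)
    else:
        raise ValueError(f"unsupported image shape {image.shape}")
    return np.repeat(gray[..., None], 3, axis=2)
```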

To extend the applications of Tri-AI, we tested it on meerkats (Suricata suricatta), lions (Panthera leo), red pandas (Ailurus fulgens), and tigers (P. tigris) (Figure 1), obtaining identification accuracies of 90.13%, 93.55%, 92.16%, and 94.38%, respectively. On night vision images of golden snub-nosed monkeys, Tri-AI achieved an identification accuracy of 92.03% (related to Transparent Methods). These results suggest that Tri-AI is generally effective for individual identification across a broad range of mammals.

The key to individual identification is extracting effective features from relatively stable body parts of a species, and the face is an effective and discriminative feature. Tri-AI performs well in face detection and identification both for primates, with their human-like faces, and for carnivores, with their more divergent facial features. However, we found that Tri-AI cannot achieve high identification accuracy for animals with atypical facial structures. For instance, elephant faces, with their long trunks, are rather different, and facial images of each individual vary greatly because the trunk is constantly moving, which lowers the accuracy of elephant face recognition (the Tri-attention network had an accuracy of 54% for 7 elephants with 459 images).

It is typically hard to capture facial images of wild animals because they tend to avoid humans and their habitats often make it very difficult to photograph their faces clearly (related to Transparent Methods). Therefore, based on commonly used image acquisition equipment, including cell phones, digital SLR cameras, and camera traps, we developed basic strategies, parameters, and standards for capturing the animal facial images needed to build a facial image dataset that is widely applicable across species. Interspecific variation in the accuracy and efficiency of Tri-AI arises from many factors, including the structure of the network models, the number and diversity of samples, and image quality.

Research into individual recognition has mainly focused on designing robust network models for complex data. Traditional machine learning methods use hand-crafted features and classifiers to identify individuals; these methods can handle individual identification on relatively small datasets, but their performance gains are limited as the number of images grows. In contrast, DL methods automatically extract effective features from images and perform better on large image datasets, although they require substantial computational resources and training them is time-consuming. For individual identification, CNN models commonly extract features from the whole image and may overlook local regions that carry strongly distinguishing features. We therefore designed a new DL model with three attention channels to improve individual recognition performance. The Tri-attention network in Tri-AI achieved an individual identification accuracy of 94.11% at a speed of 50 images per second.
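The Tri-attention network itself is described in the Transparent Methods file and is not reproduced here. To illustrate what an attention mechanism does to CNN features, the sketch below implements a generic squeeze-and-excitation style channel attention block in NumPy: it pools each feature channel, passes the result through a small bottleneck, and rescales each channel by a gate in (0, 1). This is a swapped-in, well-known technique and should not be read as the Tri-attention design; the weight matrices `w1` and `w2` are assumed to be given.

```python
import numpy as np


def sigmoid(x: np.ndarray) -> np.ndarray:
    """Element-wise logistic function."""
    return 1.0 / (1.0 + np.exp(-x))


def channel_attention(features: np.ndarray, w1: np.ndarray, w2: np.ndarray) -> np.ndarray:
    """Squeeze-and-excitation style channel attention.

    features -- feature map of shape (C, H, W)
    w1       -- bottleneck weights of shape (C // r, C)
    w2       -- expansion weights of shape (C, C // r)
    Returns the feature map with each channel rescaled by a gate in (0, 1).
    """
    squeezed = features.mean(axis=(1, 2))    # (C,) global average pooling
    hidden = np.maximum(0.0, w1 @ squeezed)  # (C // r,) ReLU bottleneck
    gates = sigmoid(w2 @ hidden)             # (C,) per-channel weights
    return features * gates[:, None, None]
```

With an all-zero bottleneck matrix every gate equals sigmoid(0) = 0.5, so the block simply halves all features; in a trained network the gates would be learned, ideally emphasizing channels that respond to discriminative facial regions.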

Nevertheless, once the number of images per individual exceeds approximately 60, performance improves only slightly. We therefore aim to develop individual recognition methods that achieve higher recognition performance when the number of individuals is large and keeps increasing.

In general, the promising performance of Tri-AI gives us confidence that this approach can be used to automatically identify and geo-track individuals of many wildlife species. Operational use of this technology will improve wildlife research, conservation, and management.

Limitations of the Study

Although our database contains 102,399 images across 41 primate species and 6,562 images across 4 carnivore species, 24 of the primate species had fewer than 12 individuals each. Images of more individuals of these species therefore need to be collected.

Resource Availability

Lead Contact

Songtao Guo (Email: songtaoguo@nwu.edu.cn) takes responsibility for the Lead Contact role.

Materials Availability

The Tri-AI code was written in C++ and Python and compiled with g++ on Ubuntu 14.04. Tri-AI can run on a workstation with an Intel Xeon(R) CPU E5-2650 v4, a GeForce GTX 1080 (8 GB) graphics card, 64 GB of RAM, and 1 TB of storage; it can also run on computer servers with higher hardware configurations.

Data and Code Availability

All the original animal facial images, the night vision images, the test videos, and the related testing models have been released publicly at the database AFD (Animal Face Database): http://dx.doi.org/10.17632/z3x59pv4bz.2.

Acknowledgments

This work was supported by National Natural Science Foundation of China, 31872247, 31672301, 31270441, 31730104, 61973250; Strategic Priority Research Program of the Chinese Academy of Sciences, XDB31000000; Natural Science Foundation of Shaanxi Province in China, 2018JC-022, 2016JZ009; National Key Programme of Research and Development, Ministry of Science and Technology, 2016YFC0503200; and the Shaanxi Science and Technology Innovation Team Support Project, 2018TD-026. We thank Prof. Patrick A. Zollner and Prof. Ruliang Pan for their comments and suggestions on the initial manuscript; we thank Shenzhen Safari Park and Shaanxi Academy of Forestry for their support; we thank Dr. Ya Wen for helping make the graphic abstract.

Author Contributions

S.G. and P.X. designed the research and wrote the manuscript. G.S., C.A.C., B.L., Q.M., D.F., and X.C. contributed to the improvement of our ideas and to the revision of the manuscript. S.G., P.X., G.H., H.Z., Y.S., and Z.S. carried out the data collection and research experiments.

Declaration of Interests

The authors declare no competing interests.

Published: August 21, 2020

Footnotes

Supplemental Information can be found online at https://doi.org/10.1016/j.isci.2020.101412.

Methods

All methods can be found in the accompanying Transparent Methods supplemental file.

Supplemental Information

For each species, 60% of the facial images are used as the training samples, 10% for validation, and 30% as test samples.

References

- Arzoumanian Z., Holmberg J., Norman B. An astronomical pattern-matching algorithm for computer-aided identification of whale sharks Rhincodon typus. J. Appl. Ecol. 2005;42:999–1011.

- Burghardt T., Thomas B., Barham P.J., Calic J. Automated visual recognition of individual African penguins. In: Fifth International Penguin Conference, Ushuaia, Tierra del Fuego, Argentina; 2004:1–10.

- Burghardt T., Calic J. Analyzing animal behaviour in wildlife videos using face detection and tracking. IEE Proc. Vis. Image Signal Process. 2006;153:305–312.

- Chu W., Liu F. An approach of animal detection based on generalized Hough transform. In: ICCNCE. Atlantis Press; 2013:117–120.

- Crouse D., Jacobs R.L., Richardson Z., Klum S., Jain A.K., Baden A.L., Tecot S.R. LemurFaceID: a face recognition system to facilitate individual identification of lemurs. BMC Zool. 2017;2:1–14.

- Ernst A., Küblbeck C. Fast face detection and species classification of African great apes. In: 8th IEEE International Conference on Advanced Video and Signal Based Surveillance (AVSS). IEEE; 2011:279–284.

- Fernandezduque M., Chapman C.A., Glander K.E., Fernandezduque E. Darting primates: steps toward procedural and reporting standards. Int. J. Primatol. 2018;39:1009–1016.

- Finch N., Murray P. Machine vision classification of animals. In: 10th Annual Conference on Mechatronics and Machine Vision in Practice (MMVIP), Perth, Australia; 2003:9–11.

- Freytag A., Rodner E., Simon M., Loos A., Kuhl H.S., Denzler J. Chimpanzee faces in the wild: log-Euclidean CNNs for predicting identities and attributes of primates. In: German Conference on Pattern Recognition (GCPR). Springer; 2016:51–63.

- Hiby L., Lovell P., Patil N., Kumar N.S., Gopalaswamy A.M., Karanth K.U. A tiger cannot change its stripes: using a three-dimensional model to match images of living tigers and tiger skins. Biol. Lett. 2009;5:383–386. doi: 10.1098/rsbl.2009.0028.

- He K., Zhang X., Ren S., Sun J. Deep residual learning for image recognition. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR). IEEE; 2016:770–778.

- Huang G., Liu Z., van der Maaten L., Weinberger K.Q. Densely connected convolutional networks. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR). IEEE; 2017:2261–2269.

- Hou J., He Y., Yang H.B., Connor T., Gao J., Wang Y.Y., Zeng Y.C.H., Zhang J.D., Huang J.Y., Zheng B.C.H., Zhou S. Identification of animal individuals using deep learning: a case study of giant panda. Biol. Conserv. 2020;242:108414.

- Karanth K.U. Estimating tiger Panthera tigris populations from camera-trap data using capture-recapture models. Biol. Conserv. 1995;71:333–338.

- Kumar S., Singh S.K., Singh R., Singh A. Animal biometrics: concepts and recent application. In: Animal Biometrics. Springer; 2017:1–20.

- Krizhevsky A., Sutskever I., Hinton G.E. ImageNet classification with deep convolutional neural networks. In: Neural Information Processing Systems (NIPS). Curran Associates Inc.; 2012:1097–1105.

- Lahiri M., Tantipathananandh C., Warungu R., Rubenstein D.I., Berger-Wolf T.Y. Biometric animal databases from field photographs: identification of individual zebra in the wild. In: 1st ACM International Conference on Multimedia Retrieval. Vol. 6. ACM; 2011:1–8.

- Loos A., Ernst A. An automated chimpanzee identification system using face detection and recognition. EURASIP J. Image Video Process. 2013;49:1–17.

- Nathan R. An emerging movement ecology paradigm. Proc. Natl. Acad. Sci. U S A. 2008;105:19050–19051. doi: 10.1073/pnas.0808918105.

- Norouzzadeh M.S., Nguyen A., Kosmala M., Swanson A., Palmer M.S., Packer C., Clune J. Automatically identifying, counting, and describing wild animals in camera-trap images with deep learning. Proc. Natl. Acad. Sci. U S A. 2018;115:E5716–E5725. doi: 10.1073/pnas.1719367115.

- Ren S., He K.M., Girshick R., Sun J. Faster R-CNN: towards real-time object detection with region proposal networks. Adv. Neural Inf. Process. Syst. 2015:91–99. doi: 10.1109/TPAMI.2016.2577031.

- Schofield D., Nagrani A., Zisserman A., Hayashi M., Matsuzawa T., Biro D., Carvalho S. Chimpanzee face recognition from videos in the wild using deep learning. Sci. Adv. 2019;5:eaaw0736. doi: 10.1126/sciadv.aaw0736.

- Simonyan K., Zisserman A. Very deep convolutional networks for large-scale image recognition. In: ICLR; 2015:1–14.

- Szegedy C., Liu W., Jia Y., Sermanet P., Reed S., Anguelov D., Erhan D., Vanhoucke V., Rabinovich A. Going deeper with convolutions. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR). IEEE; 2015:1–9.

- Swanson A., Kosmala M., Lintott C., Packer C. A generalized approach for producing, quantifying, and validating citizen science data from wildlife images. Conserv. Biol. 2016;30:520–531. doi: 10.1111/cobi.12695.

- Tweed D., Calway A. Tracking multiple animals in wildlife footage. In: IEEE International Conference on Pattern Recognition (ICPR). IEEE; 2002:24–27.

- Wang B.S., Wang Z.L., Lu H. Facial similarity in Taihangshan macaques (Macaca mulatta tcheliensis) based on modular principal components analysis. Acta Theriol. Sin. 2013;33:232–237.

- Wichmann F.A., Drewes J., Rosas P., Gegenfurtner K.R. Animal detection in natural scenes: critical features revisited. J. Vis. 2010;10:1–27. doi: 10.1167/10.4.6.

- Xu Q.J., Qi D.W. Parameters for texture feature of Panthera tigris altaica based on gray level co-occurrence matrix. J. Northeast For. Univ. 2008;37:125–128.

- Zeppelzauer M. Automated detection of elephants in wildlife video. EURASIP J. Image Video Process. 2013;46:1–23. doi: 10.1186/1687-5281-2013-46.

- Zhu W., Drewes J., Gegenfurtner K.R. Animal detection in natural images: effects of color and image database. PLoS One. 2013;8:e75816. doi: 10.1371/journal.pone.0075816.

- Zeiler M.D., Fergus R. Visualizing and understanding convolutional networks. In: European Conference on Computer Vision (ECCV); 2014:818–833.
