Abstract

Introduction

An increasing global need for pharmacovigilance training cannot be met with classroom courses alone. Several e-learning modules have been developed by Uppsala Monitoring Centre (UMC). With distance learners and technological challenges such as poor internet bandwidth to be considered, UMC opted for the microlearning approach based on small learning units connected to specific learning objectives. The aim of this study was to evaluate how this e-learning course was received.

Methods

The course was evaluated through usage data and the results of two user surveys: one for modules 1–4 (signal detection and causality assessment) and one for module 5 (statistical reasoning and algorithms in pharmacovigilance). The evaluation model was based on the Unified Theory of Acceptance and Use of Technology (UTAUT). A questionnaire was developed, divided into demographic profile, performance expectancy, effort expectancy, educational compatibility and behavioural intention. The surveys were disseminated to 2067 learners for modules 1–4 and 1685 learners for module 5.

Results

Learners from 137 countries participated, predominantly from industry (36.6%), national pharmacovigilance centres (22.6%) and academia (16.3%). The overall satisfaction level was very high for all modules, with over 90% of the learners rating it as either ‘excellent’ or ‘good’. The majority were satisfied with the learning platform, the course content and the lesson duration. Most learners thought they would be able to apply the knowledge in practice. Almost 100% of the learners would recommend the modules to others and would also study future modules. Suggested improvements were an interactive forum, more practical examples in the lessons and practical exercises.

Conclusion

This e-learning course in pharmacovigilance based on microlearning was well received with a global coverage among relevant professional disciplines.

Electronic supplementary material

The online version of this article (10.1007/s40264-020-00981-w) contains supplementary material, which is available to authorized users.

Key Points

There is an increasing global need for pharmacovigilance training that cannot be met by classroom courses alone.

E-learning modules, using a microlearning approach to allow for distance learning and to address technological challenges, were developed, launched and evaluated.

Results of surveys to evaluate the modules, using a widely recognised model to predict technological and pedagogical acceptance, indicated a high level of satisfaction and suggestions for enhancement.

Introduction

Pharmacovigilance Training Needs

The need for training in pharmacovigilance (PV) has been difficult to meet since the very beginning, when the discipline was born in the aftermath of the thalidomide disaster [1]. Standards and best practices first had to be developed, with colleagues from several areas of expertise working together to find common ground. Uppsala Monitoring Centre (UMC) is a World Health Organization (WHO) Collaborating Centre responsible for the operational and scientific support of the WHO Programme for International Drug Monitoring (WHO PIDM). In this role, UMC ran its first 2-week course in Uppsala in 1993 for an international audience, mostly colleagues working at national PV centres that were members of the WHO PIDM. As pharmacovigilance expanded, other professional, academic and commercial organisations followed suit, offering training courses, mostly in high-income countries (HIC). Much of this increased demand for training came from industry, driven by developments in drug regulation and the corresponding need to fulfil legal obligations, including the requirement for Marketing Authorisation Holders to have a qualified person for pharmacovigilance [2]. The rapid expansion of the WHO PIDM in the last 15 years, the spread of regulatory developments to countries other than HIC [3, 4], and the need to integrate PV activities into public health programmes and other donor-driven activities have further increased the need for training.

Challenges

For several reasons, in particular limited funding, many PV professionals cannot travel abroad to attend a training course. Furthermore, the current classroom courses have limited capacity and cannot admit all applicants. These limitations were recognised at an early stage, and lectures from the UMC course were therefore recorded for dissemination on the internet. However, since these lectures were long and aimed at the learners in the classroom, this solution did not engage distance learners well, and training material tailored to this group was needed. In addition, unstable internet connections do not support teaching units of approximately 45–60 min without a break. While UMC still believes in the value of learners from different countries learning and working together in a focussed environment, it became apparent that other solutions were needed to address the unmet training needs. With internet connectivity improving and e-learning becoming more popular, including in low- and middle-income countries (LMIC) [5], exploring the feasibility and value of e-learning seemed worthwhile. E-learning offers a high degree of flexibility, both geographically and temporally, as a course can be taken anywhere, at any time, on any device with an internet connection. It is also valuable in situations where classroom teaching is not possible, such as during the COVID-19 pandemic of 2020, and it can reduce the carbon footprint associated with air travel.

Pedagogical Considerations

With the need for lessons tailored to distance learners, and technological challenges such as poor internet bandwidth to be tackled, UMC opted for the microlearning approach based on small learning units (lessons) connected to specific learning objectives [6]. The microlearning format, with its focus on one or a few learning objectives per lesson, also facilitates ‘just-in-time’ training and repetition, a key aspect of effective learning, since the specific lessons needed during work can easily be identified and revisited [7]. A recent literature review of microlearning in the health professions concluded that it has demonstrated a positive effect on retaining both knowledge and confidence among health professional learners [8].

Course Set-Up and Content

The learning management system GO+ (TICTAC Interactive, Malmö, Sweden) was selected as the online learning platform, and the UMC e-learning modules were mainly created with Articulate 360 Rise (Articulate Global, Inc, New York, NY, USA). Four e-learning modules were developed, each divided into several concise video lectures (3–5 min). Different ways of presenting the content were assessed by a small group of 11 pilot testers working at national PV centres in eight countries. Their feedback covered the learning platform, lesson length, the projection of the lecturer on the screen, the lecturer’s clarity and pace, font size, supplementary graphic material, sound quality and other aspects, and it determined the final format of the course. The modules covered signal detection and causality assessment, topics frequently requested by pharmacovigilance centre staff. The overall learning objective of the first four modules was to be able to explain and apply the basic concepts of signal detection and assessment, including causality assessment. These modules were launched in October 2017.

An additional module on statistical reasoning and algorithms in pharmacovigilance was developed and launched in November 2018. The overall learning objective of this fifth module was to describe how statistical reasoning and algorithms may bring value to pharmacovigilance, explain the idea of disproportionality analysis to aid signal detection and compute different measures of disproportionality.
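As a generic illustration of what computing a measure of disproportionality involves, the sketch below derives two commonly used measures, the proportional reporting ratio (PRR) and the reporting odds ratio (ROR), from a 2×2 table of report counts for one drug–event pair. The cell labels a–d follow a common convention, and the counts are hypothetical; which measures module 5 actually covers, and its worked examples, are not reproduced here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ContingencyTable:
    """Report counts for one drug-event pair in a spontaneous-report database.

    a: reports with the drug of interest and the event of interest
    b: reports with the drug of interest and other events
    c: reports with other drugs and the event of interest
    d: reports with other drugs and other events
    """
    a: int
    b: int
    c: int
    d: int


def prr(t: ContingencyTable) -> float:
    """Proportional reporting ratio: proportion of the drug's reports that mention
    the event, divided by the same proportion among all other reports."""
    return (t.a / (t.a + t.b)) / (t.c / (t.c + t.d))


def ror(t: ContingencyTable) -> float:
    """Reporting odds ratio: odds of the event among the drug's reports, divided by
    the odds of the event among all other reports."""
    return (t.a / t.b) / (t.c / t.d)


if __name__ == "__main__":
    # Hypothetical counts chosen only to keep the arithmetic easy to follow.
    example = ContingencyTable(a=20, b=180, c=400, d=19400)
    print(f"PRR = {prr(example):.2f}")  # 0.1 / (400 / 19800) = 4.95
    print(f"ROR = {ror(example):.2f}")  # (20 / 180) / (400 / 19400), about 5.39
```

The sketch does not guard against zero cells (for example b = 0 or c = 0), which make the ROR undefined; practical tools handle such cases explicitly.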

An overview of the course content is presented in Table 1. All modules were freely available via a link on the UMC website [9] and were promoted to the PV community by e-mail and social media. At the launch of modules 1–4, transcripts were available as an aid to those with limited knowledge of English; they could also be used to quickly review the content of a lesson without watching it. When module 5 was launched, subtitles in English and Spanish were added to all modules to complement the transcripts, in response to user feedback from the evaluation of modules 1–4. At the end of each module, learners could answer a set of questions to check their understanding and keep track of their progress.

Table 1.

The course modules, number of lessons and total duration of the video lessons

| Module | Number of lessons | Total duration (min:s) |
|---|---|---|
| 1. Introduction to signal detection | 6 | 15:46 |
| 2. Causality assessment of case safety reports | 7 | 25:19 |
| 3. Causality assessment of case series | 7 | 27:38 |
| 4. Signal assessment | 7 | 21:37 |
| 5. Statistical reasoning and algorithms in pharmacovigilance | 8 | 31:56 |

The modules are subdivided into lessons with specific learning objectives
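The durations in Table 1 are given as minutes:seconds. A minimal sketch for converting them to seconds and deriving the total course length and the mean length per lesson; the dictionary simply transcribes Table 1, and the helper names are illustrative.

```python
# Lesson counts and total video durations (min:s), transcribed from Table 1.
TABLE_1 = {
    "1. Introduction to signal detection": (6, "15:46"),
    "2. Causality assessment of case safety reports": (7, "25:19"),
    "3. Causality assessment of case series": (7, "27:38"),
    "4. Signal assessment": (7, "21:37"),
    "5. Statistical reasoning and algorithms in pharmacovigilance": (8, "31:56"),
}


def to_seconds(min_s: str) -> int:
    """Convert a 'min:s' string such as '15:46' to a number of seconds."""
    minutes, seconds = min_s.split(":")
    return int(minutes) * 60 + int(seconds)


def mean_lesson_minutes(lessons: int, total: str) -> float:
    """Average lesson length in minutes (individual lessons may be longer or shorter)."""
    return to_seconds(total) / lessons / 60


if __name__ == "__main__":
    for module, (lessons, total) in TABLE_1.items():
        print(f"{module}: mean {mean_lesson_minutes(lessons, total):.1f} min per lesson")
    grand_total = sum(to_seconds(total) for _, total in TABLE_1.values())
    print(f"All modules: {sum(n for n, _ in TABLE_1.values())} lessons, "
          f"{grand_total // 60}:{grand_total % 60:02d} in total")
```

For Table 1 this gives 35 lessons and 122:16 (just over 2 h) of video in total. A mean per lesson hides the spread between individual lessons, so it is only a rough check against the stated 3–5 min lesson length.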

E-Learning Evaluation

E-learning development, implementation and management are resource-demanding, and there are many aspects to consider [5, 10]. Cook et al. argued that one should ask not whether but when and how web-based learning should be used, since it is a powerful tool if used wisely but not appropriate for all situations [11, 12]. Before e-learning is integrated into an existing curriculum, the learners’ needs should be assessed and learning goals and objectives defined, in order to establish that e-learning is an appropriate choice of learning method [13]. E-learning can be used as a stand-alone solution for learners at remote sites, and several studies have shown that it appears to be as effective as traditional classroom lectures [12, 14]. It can also be used by teachers as training material.

Understanding user acceptance of e-learning is crucial to the design, implementation and management of a successful e-learning programme. Any training should ultimately lead to improved results for the organisation whose staff have undertaken it. This is achieved through changed staff behaviour, which in turn requires the new knowledge, skills and attitudes provided by the training. These aspects are often the targets of evaluation, representing levels 4, 3 and 2, respectively, in Kirkpatrick’s evaluation model [15]. This paper addresses level 1 of Kirkpatrick’s model, which concerns learners’ reactions to the training received, here including the use of technology and the pedagogical approach. Evaluating the impact and cost effectiveness of health training is difficult, and many other factors that influence professional practice must be considered. No such evaluation was done in this study; however, although the costs of e-learning development are high, the cost per learner decreases when the training is delivered to large numbers of learners [16].
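The chain described above (training, then knowledge, skills and attitudes, then behaviour, then organisational results) can be encoded as an ordered enumeration, which makes explicit which levels an evaluation leaves open. A minimal sketch; the class and function names are illustrative only.

```python
from enum import IntEnum


class KirkpatrickLevel(IntEnum):
    """Levels of Kirkpatrick's training evaluation model, as summarised in the text."""
    REACTION = 1   # learners' reactions to the training (the focus of this study)
    LEARNING = 2   # new knowledge, skills and attitudes
    BEHAVIOUR = 3  # changed behaviour of staff
    RESULTS = 4    # improved results for the organisation


def levels_not_covered(evaluated):
    """Return, in ascending order, the levels an evaluation did not address."""
    return [level for level in KirkpatrickLevel if level not in evaluated]


if __name__ == "__main__":
    this_study = {KirkpatrickLevel.REACTION}
    print([level.name for level in levels_not_covered(this_study)])
    # ['LEARNING', 'BEHAVIOUR', 'RESULTS']
```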

The authors are not aware of any previous evaluation of an e-learning course in pharmacovigilance based on microlearning. Such an evaluation is, however, a necessary step to determine the viability of this mode of training delivery in the context of UMC’s e-learning. The aim of this study was therefore to evaluate how UMC’s pedagogical approach and use of technology were received by learners all over the world.

Methods

The course was evaluated through a combination of usage data logged automatically in the learning platform and the results of two user surveys: one for modules 1–4 and one for module 5.

Usage Data

In conjunction with the dissemination of the two user surveys, usage data such as the number of registrations, course completion and country distribution were collected from the learning platform.

User Survey

The evaluation model used in this study was based on the Unified Theory of Acceptance and Use of Technology (UTAUT), a widely recognised model for predicting technology acceptance [17]. Research on information technology adoption has generated many different models for explaining and predicting user behaviour, based on different sets of acceptance determinants [18–20]. In 2011, Chen proposed integrating educational compatibility into the UTAUT and demonstrated the value of also considering learning behaviour when evaluating e-learning acceptance [21]. Inspired by this theoretical model, UMC developed a questionnaire with 25 questions, divided into the following categories: demographic profile, performance expectancy, effort expectancy, educational compatibility and behavioural intention. A few more general questions outside these categories were also included. Performance expectancy is defined as the degree to which an individual believes that using the e-learning system will help increase job performance. Effort expectancy is the degree of ease associated with using the system. Educational compatibility refers to an individual’s perception of how well the system fits his or her learning expectancies. Behavioural intention refers to an individual’s intention to use the system.
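The four acceptance constructs can be represented as a small data structure that maps each construct to its definition and to example questionnaire items. In this minimal sketch, the items for the first three constructs are taken from the questions shown in Tables 4–6; the behavioural-intention items are hypothetical paraphrases, since their exact wording appears only in Online Resource 1. The demographic section and the general questions are omitted.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Construct:
    """A questionnaire category from UTAUT extended with educational compatibility."""
    name: str
    definition: str
    example_items: tuple[str, ...] = ()


QUESTIONNAIRE = (
    Construct("Performance expectancy",
              "belief that using the e-learning system will help increase job performance",
              ("Will you be able to put what you have learnt into practice?",
               "Was anything missing from the course?")),
    Construct("Effort expectancy",
              "degree of ease associated with using the system",
              ("Was the learning platform easy to use?",
               "Did you experience any time lag when playing the lessons?")),
    Construct("Educational compatibility",
              "perceived fit between the system and one's learning expectancies",
              ("How would you rate the duration of the lessons?",
               "Did the quiz questions aid your learning?")),
    Construct("Behavioural intention",
              "intention to use the system",
              # Hypothetical paraphrases; the exact wording is in Online Resource 1.
              ("Would you recommend the course to others?",
               "Do you intend to take future courses relevant to you?")),
)


def construct_of(item: str) -> Optional[str]:
    """Return the name of the construct an item is mapped to, or None if unmapped."""
    for construct in QUESTIONNAIRE:
        if item in construct.example_items:
            return construct.name
    return None
```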

The questionnaire consisted predominantly of closed questions, with a small number of open questions. The latter related, for example, to suggestions for improvements, including topics for future modules. Most questions also allowed free-text comments so that respondents could express personal opinions. The questionnaires (Online Resource 1, see electronic supplementary material [ESM]) were created in SurveyMonkey (SurveyMonkey, San Mateo, CA, USA), and the recipients were informed that anonymity was guaranteed and that the data would be used for research only.

For all modules, all learners who had at least started the course at the time of the user survey were invited to participate. A list of these learners, including their contact information, was obtained from the learning platform. For modules 1–4, the survey was disseminated to 2067 learners from 125 countries; the survey took place in February 2018 (4 months after the launch), and the learners had 23 days to respond (Fig. 1). The survey for module 5 was disseminated to 1685 learners from 97 countries; the survey took place in August 2019 (8 months after the launch) and the learners had 25 days to respond. Reminders were sent at the end of each survey period to increase the response rate.

Fig. 1.

Timeline of course development, launches and evaluations

To ensure that the survey respondents were geographically representative, the regional origins of survey respondents were compared with those of the learners who were invited to participate in the surveys.

Data Analysis

Usage data and questionnaire results were exported to Excel and descriptively analysed with tables and graphs. All free-text responses were analysed by inductive thematic content analysis and summarised into categories. Stratification, for example, by affiliation, was applied where relevant.
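The analysis was done in Excel. Purely as an illustration of the stratification step (for example, the regional distribution among respondents affiliated with a PV centre), here is a minimal Python sketch; the record fields and the toy data are hypothetical and are not survey data.

```python
from collections import Counter


def percentages(values):
    """Percentage distribution of categorical values, rounded to one decimal.

    Returns an empty dict for an empty input."""
    counts = Counter(values)
    total = sum(counts.values())
    return {category: round(100 * n / total, 1) for category, n in counts.items()}


def stratified_distribution(records, stratum_key, stratum_value, column):
    """Distribution of `column` among records whose `stratum_key` equals `stratum_value`."""
    return percentages(r[column] for r in records if r[stratum_key] == stratum_value)


# Hypothetical toy responses for illustration only; these are not survey data.
RESPONSES = [
    {"affiliation": "PV centre", "region": "AFRO"},
    {"affiliation": "PV centre", "region": "SEARO"},
    {"affiliation": "Pharmaceutical company", "region": "SEARO"},
    {"affiliation": "Academia", "region": "EURO"},
]

if __name__ == "__main__":
    print(percentages(r["region"] for r in RESPONSES))
    # {'AFRO': 25.0, 'SEARO': 50.0, 'EURO': 25.0}
    print(stratified_distribution(RESPONSES, "affiliation", "PV centre", "region"))
    # {'AFRO': 50.0, 'SEARO': 50.0}
```

The toy data show how the share of one region can rise within a stratum, which is the kind of shift reported for AFRO and EMRO among PV centre respondents.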

Results

Usage Data

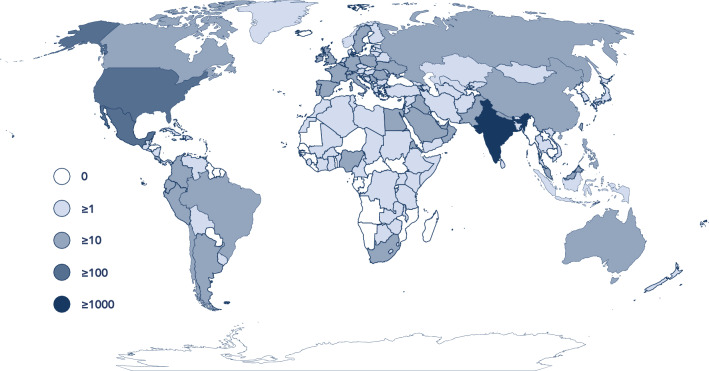

The usage data for modules 1–4 showed that, when the user survey was disseminated, 2067 learners had enrolled in the course, of whom 841 (40.7%) had completed it and the remaining 1226 had started but not completed it. For module 5, 1685 learners had enrolled in the course, of whom 1110 (65.9%) had completed it and the remaining 575 had started but not completed it. In total (modules 1–5), the learners came from 137 countries, as displayed in Fig. 2 and Online Resource 2 (see ESM), and approximately half of the learners came from India.

Fig. 2.

World map showing the geographic spread of the learners for all modules

User Surveys

Of the 550 learners who responded to the survey for modules 1–4 (26.6% response rate), 500 completed the whole survey and the remaining 50 completed it partly. Of the 223 learners who responded to the survey for module 5 (13.2% response rate), 197 completed the whole survey and the remaining 26 completed it partly. All responses were included in the analysis.
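The completion rates under Usage Data and the response rates above are simple ratios of reported counts. A minimal sketch that recomputes them, assuming, as stated in the Methods, that every enrolled learner (everyone who had at least started the course) was invited to the corresponding survey; the function and key names are illustrative.

```python
def pct(part: int, whole: int) -> float:
    """Percentage rounded to one decimal place."""
    return round(100 * part / whole, 1)


# Counts reported in the Results section. All enrolled learners (i.e. everyone who
# had at least started the course) were invited to the corresponding survey.
FUNNEL = {
    "Modules 1-4": {"enrolled": 2067, "completed_course": 841,
                    "responded": 550, "completed_survey": 500},
    "Module 5": {"enrolled": 1685, "completed_course": 1110,
                 "responded": 223, "completed_survey": 197},
}


def summarise(counts: dict) -> dict:
    """Derive completion and response rates and the implied remainders."""
    return {
        "course_completion_pct": pct(counts["completed_course"], counts["enrolled"]),
        "started_not_completed": counts["enrolled"] - counts["completed_course"],
        "response_rate_pct": pct(counts["responded"], counts["enrolled"]),
        "partial_responses": counts["responded"] - counts["completed_survey"],
    }


if __name__ == "__main__":
    for survey, counts in FUNNEL.items():
        print(survey, summarise(counts))
```

This reproduces the reported 40.7% and 65.9% completion and 26.6% and 13.2% response rates, with 50 and 26 partial responses, respectively.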

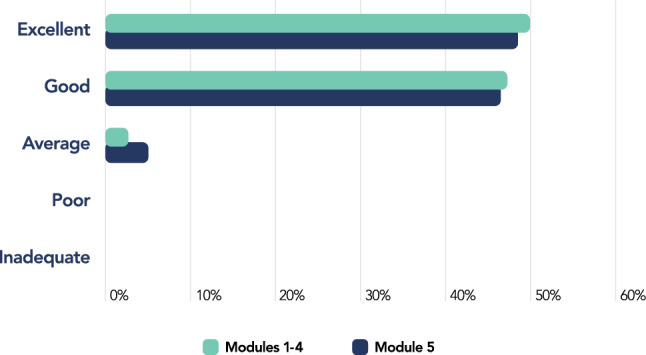

Overall Satisfaction

The overall satisfaction level was very high for all modules, as displayed in Fig. 3. When asked to rate modules 1–4 and module 5, 97.2% and 94.9% of the learners, respectively, selected either ‘excellent’ or ‘good’.

Fig. 3.

Overall ratings of modules 1–4 and module 5

Demographic Profile

The demographic profile of the learners who participated in the surveys is presented in Table 2 by region of residence, affiliation and profession. The geographic spread of these learners was categorised by WHO region [22]. The course was developed with PV centre staff in mind, especially new signal assessors, but it turned out to be very popular among pharmaceutical industry staff and other pharmacovigilance professionals.

Table 2.

Demographic profile of the learners who participated in the user surveys

| Demographic profile | Modules 1–4 (%) | Module 5 (%) |
|---|---|---|
| Residence (WHO region) | | |
| SEARO (South-East Asia) | 43.3 | 46.5 |
| EURO (Europe) | 24.2 | 23.5 |
| PAHO (The Americas) | 19.3 | 12.5 |
| AFRO (Africa) | 5.7 | 7.0 |
| EMRO (Eastern Mediterranean) | 4.9 | 8.0 |
| WPRO (Western Pacific) | 2.6 | 2.5 |
| Affiliation | | |
| Pharmaceutical company | 36.6 | 35.0 |
| PV centre (national or regional) | 22.6 | 16.0 |
| Academia (university, hospital) | 16.3 | 25.5 |
| Health organisation | 8.1 | 10.0 |
| Drug regulatory authority | 5.9 | 5.0 |
| Other | 10.4 | 8.5 |
| Profession | | |
| Pharmacist | 53.4 | 57.5 |
| Medical doctor | 21.5 | 18.5 |
| Other health professional | 9.1 | 5.5 |
| University student | 5.1 | 6.5 |
| Other | 10.8 | 12.0 |

PV pharmacovigilance, WHO World Health Organization

For modules 1–4, the largest group of learners came from SEARO (South-East Asia, including India) (43.3%), followed by EURO (Europe) (24.2%) and PAHO (The Americas) (19.3%). Regarding affiliation, 36.6% of the learners worked in the pharmaceutical industry, followed by PV centre staff (22.6%) and academic professionals (16.3%). Among learners affiliated with a PV centre, the primary target group of the course, the proportion from AFRO (Africa) was 10.4%, compared with 5.7% among all respondents. More than half of the learners were pharmacists (53.4%), followed by medical doctors (21.5%). Among learners affiliated with a PV centre, 69.6% were pharmacists and only 14.8% were medical doctors.

Similarly, for module 5, the largest group of learners came from SEARO (46.5%), followed by EURO (23.5%) and PAHO (12.5%). Regarding affiliation, the proportion of learners working in the pharmaceutical industry was similar to that for modules 1–4, but the proportion of PV centre staff decreased to 16.0%, whereas that of academic professionals increased to 25.5%. Among learners affiliated with a PV centre, the proportion from EMRO (Eastern Mediterranean) was 15.6%, compared with 8.0% among all respondents. As with modules 1–4, more than half of the learners were pharmacists (57.5%), followed by medical doctors (18.5%). Among learners affiliated with a PV centre, 75.0% were pharmacists and only 9.4% were medical doctors.

For all modules, approximately a third of the learners had worked in pharmacovigilance for 1–4 years, a third for < 1 year and the remaining third for > 5 years.

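When the regional origins of the survey respondents were compared with those of the learners invited to participate, the proportions corresponded reasonably well (Table 3), although EURO and AFRO were somewhat over-represented and SEARO under-represented among respondents, particularly for module 5.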

Table 3.

Comparison of the regional origins of survey respondents with those of the learners who were invited to participate in the surveys

| Residence (WHO region) | Modules 1–4: respondents (%) | Modules 1–4: learners (%) | Module 5: respondents (%) | Module 5: learners (%) |
|---|---|---|---|---|
| SEARO (South-East Asia) | 43.3 | 46.6 | 46.5 | 56.8 |
| EURO (Europe) | 24.2 | 17.3 | 23.5 | 14.4 |
| PAHO (The Americas) | 19.3 | 20.0 | 12.5 | 13.4 |
| AFRO (Africa) | 5.7 | 3.1 | 7.0 | 2.0 |
| EMRO (Eastern Mediterranean) | 4.9 | 5.5 | 8.0 | 10.3 |
| WPRO (Western Pacific) | 2.6 | 3.2 | 2.5 | 3.0 |
| Unknown | N/A | 4.2 | N/A | 0.2 |

N/A not applicable, WHO World Health Organization
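The judgement that respondents and invited learners "corresponded reasonably well" can be made more concrete by computing the per-region percentage-point differences and a single summary figure. The sketch below uses the index of dissimilarity (half the sum of absolute differences), a standard measure chosen here for illustration and not the authors' method; it treats the respondents' missing "Unknown" category as 0.0, which is an assumption.

```python
# Regional distributions (%) transcribed from Table 3. Respondents had no "Unknown"
# category, so 0.0 is used there (an assumption made for this sketch).
REGIONS = ("SEARO", "EURO", "PAHO", "AFRO", "EMRO", "WPRO", "Unknown")
TABLE_3 = {
    "Modules 1-4": {"respondents": (43.3, 24.2, 19.3, 5.7, 4.9, 2.6, 0.0),
                    "learners": (46.6, 17.3, 20.0, 3.1, 5.5, 3.2, 4.2)},
    "Module 5": {"respondents": (46.5, 23.5, 12.5, 7.0, 8.0, 2.5, 0.0),
                 "learners": (56.8, 14.4, 13.4, 2.0, 10.3, 3.0, 0.2)},
}


def differences(respondents, learners):
    """Percentage-point difference (respondents minus invited learners) per region."""
    return {region: round(r - l, 1) for region, r, l in zip(REGIONS, respondents, learners)}


def dissimilarity_index(respondents, learners):
    """Index of dissimilarity: half the sum of absolute percentage-point differences.

    0 means identical distributions; higher values mean a larger share of respondents
    would have to be 'moved' between regions to match the invited learners."""
    return 0.5 * sum(abs(r - l) for r, l in zip(respondents, learners))


if __name__ == "__main__":
    for survey, dist in TABLE_3.items():
        diffs = differences(dist["respondents"], dist["learners"])
        largest = max(diffs, key=lambda region: abs(diffs[region]))
        index = dissimilarity_index(dist["respondents"], dist["learners"])
        print(f"{survey}: index {index:.1f} points; largest gap {largest} ({diffs[largest]:+.1f})")
```

Applied to Table 3, the index is roughly 9.5 percentage points for modules 1–4 and roughly 14 for module 5, with the largest single gaps in EURO (+6.9) and SEARO (-10.3), respectively. Because the published percentages are rounded and the learner columns do not sum exactly to 100, these figures are approximate; whether they amount to "reasonably good" correspondence remains a judgement the sketch does not make.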

Performance Expectancy

The questions and answers related to performance expectancy are presented in Table 4. For modules 1–4, most learners (64.6%) performed signal assessments or related tasks in their daily work. Almost all learners (98.5%) responded that they would be able to put what they had learnt into practice, either ‘to a great extent’ or ‘to some extent’.

Table 4.

Performance expectancy of the learners who participated in the user surveys

| Question | Answer option | Modules 1–4 (%) | Module 5 (%) |
|---|---|---|---|
| Do you perform signal assessments or related tasks in your daily work? | Yes | 64.6 | N/A |
| | No | 35.4 | N/A |
| Do you perform statistical signal detection or related tasks in your daily work? | Yes | N/A | 39.4 |
| | No | N/A | 60.6 |
| Will you be able to put what you have learnt into practice? | Yes, to a great extent | 51.4 | 32.3 |
| | Yes, to some extent | 47.1 | 58.6 |
| | No, not really | 1.6 | 9.1 |
| Was anything missing from the course? | Yes | 14.5 | 15.7 |
| | No | 85.6 | 84.3 |

N/A not applicable

For module 5, only 39.4% of the learners performed statistical signal detection or related tasks in their daily work, and a smaller proportion than for modules 1–4 (90.9%) responded that they would be able to put what they had learnt into practice, although this proportion was still high.

For all modules, approximately 85% of the learners (85.6% and 84.3%, respectively) responded that nothing was missing from the course; the most frequent comments were that more details and practical examples, as well as some exercises or assignments, would be appreciated.

Effort Expectancy

The questions and answers related to effort expectancy are presented in Table 5. For modules 1–4, most learners (90.4%) used a computer to take the course, and some also used a mobile phone or a tablet. Almost all learners (99.3%) found the learning platform either ‘very easy’ or ‘fairly easy’ to use. The main complaint about the platform was a technical limitation in how the transcripts were displayed: the whole transcript could not easily be viewed while a video was playing. Some learners (16.7%) experienced a time lag when playing the videos, but most of them attributed this to a poor internet connection. English as the course language satisfied most learners (88.7%), but this result may reflect a selection bias, since people with limited English skills may not have chosen to take the course. Spanish was by far the most frequently suggested language for a future translation.

Table 5.

Effort expectancy of the learners who participated in the user surveys

| Question | Answer option | Modules 1–4 (%) | Module 5 (%) |
|---|---|---|---|
| Which device did you study on? (multiple answers possible) | Computer/laptop | 90.4 | 85.7 |
| | iPad/tablet | 5.6 | 4.0 |
| | Mobile phone | 15.6 | 23.8 |
| Was the learning platform easy to use? | Yes, very easy | 77.8 | 71.8 |
| | Yes, fairly easy | 21.5 | 26.9 |
| | No, not very easy | 0.7 | 1.4 |
| Did you experience any time lag when playing the lessons? | Yes | 16.7 | 14.5 |
| | No | 83.3 | 85.5 |
| Would you prefer to take the course in a different language? | Yes | 11.3 | N/A |
| | No, English is fine | 88.7 | N/A |
| Would you prefer subtitles in additional languages? | Yes | N/A | 24.6 |
| | No, English and Spanish is fine | N/A | 75.4 |

N/A not applicable

For module 5, a smaller proportion of learners (85.7%) used a computer to take the course, whereas the proportion using a mobile phone increased (23.8%) compared with modules 1–4. Again, almost all learners (98.7%) found the learning platform very or fairly easy to use, and there were no specific complaints, since the problem with the transcripts for modules 1–4 had been solved. The proportion of learners who experienced a time lag when playing the videos had also decreased (14.5%). Learners on module 5 could choose between three versions of the module: most (69.6%) selected the version with English subtitles, 3.9% the version with Spanish subtitles and the rest the version without subtitles. Most learners (75.4%) were satisfied with the availability of subtitles in English and Spanish, while the rest would have preferred subtitles in additional languages. However, some of them may have misinterpreted the question, since the languages most commonly requested were English and Spanish, for which subtitles already existed.

Educational Compatibility

The questions and answers related to educational compatibility are presented in Table 6. The results for modules 1–4 and module 5 were very similar. For modules 1–4, most learners (84.1%) found the duration of the lessons to be ‘just right’. Most learners (89.6%) responded that the visual look of the lessons aided their learning. Almost all learners (99.4%) thought that the lecturers conveyed the content clearly and responded that ‘everything was easy to follow’ or that ‘most of it was easy to follow’. More than 90% of the learners (93.8%) found the quiz questions helpful, but some requested that more questions be presented in a randomised order. The transcripts were used by 88.7% of the learners, despite the technical issues, and some learners felt that these were very helpful. Most learners (81.3%) would have appreciated the possibility to interact with the educators and/or the other learners during the course (the learning platform did not offer this feature at the time).

Table 6.

Educational compatibility of the learners who participated in the user surveys

| Question | Answer option | Modules 1–4 (%) | Module 5 (%) |
|---|---|---|---|
| How would you rate the duration of the lessons? | Too short | 13.3 | 9.4 |
| | Just right | 84.1 | 86.5 |
| | Too long | 2.7 | 4.2 |
| How would you rate the visual look of the lessons? | Good, it aided my learning | 89.6 | 89.3 |
| | Bad, it hindered my learning | 2.1 | 1.4 |
| | No comment | 8.3 | 9.4 |
| Did the lecturers convey the content clearly? | Yes, everything was easy to follow | 65.5 | 53.3 |
| | Yes, most of it was easy to follow | 33.9 | 45.2 |
| | No, it was difficult to follow | 0.6 | 1.4 |
| Did the quiz questions aid your learning? | Yes | 93.8 | 90.5 |
| | No | 1.7 | 4.3 |
| | I have not tried the quiz yet | 4.4 | 5.2 |
| Did you use the transcripts? | Yes, always | 48.4 | N/A |
| | Yes, sometimes | 40.3 | N/A |
| | No, never | 11.3 | N/A |
| Would you appreciate the possibility to interact during the course? | Yes, with educators | 35.2 | 30.4 |
| | Yes, with learners | 4.3 | 3.9 |
| | Yes, with educators and learners | 41.8 | 46.9 |
| | No, interaction is not needed | 18.7 | 18.8 |

N/A not applicable

For module 5, 86.5% of the learners found the duration of the lessons to be ‘just right’ and 89.3% agreed that the visual look of the lessons aided their learning. Almost all learners (98.5%) thought that the lecturers conveyed the content clearly and responded that ‘everything was easy to follow’ or that ‘most of it was easy to follow’. More than 90% of the learners (90.5%) found the quiz questions helpful. The question about transcripts was not included in the survey since subtitles had been added to the lessons. Again, most learners (81.2%) would have appreciated the possibility to interact with the educators and/or the other learners during the course.

For all modules, some learners indicated that the visual look of the lessons could be improved with more animations and infographics.
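Several percentages in this section combine answer options from Table 6, for example 99.4% (‘everything’ plus ‘most of it’ easy to follow) and 81.3% (the three ‘Yes’ options for interaction) for modules 1–4. A minimal sketch that reproduces such combined figures from the table; the dictionary and function names are illustrative.

```python
# Answer distributions (%) for modules 1-4, transcribed from Table 6.
CLARITY = {
    "Yes, everything was easy to follow": 65.5,
    "Yes, most of it was easy to follow": 33.9,
    "No, it was difficult to follow": 0.6,
}
INTERACTION = {
    "Yes, with educators": 35.2,
    "Yes, with learners": 4.3,
    "Yes, with educators and learners": 41.8,
    "No, interaction is not needed": 18.7,
}


def combined_share(distribution, options):
    """Sum the percentages of the selected answer options, rounded to one decimal."""
    return round(sum(distribution[option] for option in options), 1)


def share_answering_yes(distribution):
    """Combined share of all answer options that start with 'Yes'."""
    return combined_share(distribution, [o for o in distribution if o.startswith("Yes")])


if __name__ == "__main__":
    print(share_answering_yes(CLARITY))      # 99.4, as reported in the text
    print(share_answering_yes(INTERACTION))  # 81.3, as reported in the text
```

Summing already-rounded percentages can differ by about 0.1 from a figure computed directly from raw counts, so small discrepancies of that size are expected.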

Behavioural Intention

The overall satisfaction was very high for all modules. For modules 1–4, 99.2% of the learners would recommend the course to others and the corresponding number for module 5 was 99.5%. Furthermore, 99.8% of the learners who responded for modules 1–4 intended to take future UMC courses relevant to them and the corresponding number for module 5 was 100%.

Feedback and Areas of Improvement

The learners were asked to provide additional feedback on the course modules, and most comments were very positive with many requests for future courses in other pharmacovigilance topics. Some learners also expressed their appreciation of the free and easy access to the modules. Suggestions for improvements mainly concerned the need for more practical examples in the lessons as well as practical exercises to enhance the learning process and help learners apply the theory. Other common requests were to add links to references and other training resources, and to offer a forum for interaction.

Discussion

The aim of the e-learning course was to train a greater number of pharmacovigilance centre staff and to improve their ability to perform pharmacovigilance tasks. The collected usage statistics and the survey results indicate that more people are reached and trained when face-to-face courses are complemented with online training. The overall rating of the e-learning course was high, and most learners were very satisfied with the learning platform and course content. Microlearning appears to have been an appropriate choice of educational strategy, since most learners thought the duration of the lessons was just right.

The audience response to the course exceeded expectations with 2067 learners enrolled in the course when the first user survey was disseminated, 4 months after the launch of the first modules. In addition, 1635 learners had registered on the learning platform without starting the course. The discrepancy between registration and participation is a phenomenon observed in other online course evaluation studies [23, 24]. In the latter study, the authors argued that learners may undervalue a free course, signing up without making a real commitment to it. However, several learners appreciated that our course was free of charge, as reflected in the free text comments, which may have contributed to its popularity.

The course attracted learners from all over the world (137 countries) and from different settings, but the majority came from SEARO and worked in the pharmaceutical industry. This may be explained by India’s very large population, the growth of its pharmaceutical industry and the expansion and regionalisation of PV in the country. The learners had diverse backgrounds, but pharmacist was by far the most common profession, followed by medical doctor. Although the course was targeted at junior signal assessors, it was also of interest to more senior professionals, who are in a good position to use the material as a foundation for training in their own settings.

Except for the quiz questions in the course, no evaluation of actual knowledge gained was performed. Most learners perceived that they would be able to put what they had learnt into practice, although the absence of practical exercises limited the learning of actual skills. Many learners would appreciate practical exercises as well as a forum for interaction.

Limitations

Since the surveys for modules 1–4 and module 5 were not sent out until 4 and 8 months, respectively, after launch, both the response rate and the respondents’ recall may have been affected; on the other hand, an assessment of whether the course helped in practice is likely to be more reliable at that point than in an immediate post-course evaluation. The results reflect only the opinions of the learners who responded and may therefore be subject to selection bias. Although no internationally agreed minimum acceptable response rate exists, the low response rates are a limitation that could have biased the results of the evaluation. A brief analysis comparing the regional origins of survey respondents with those of the learners invited to participate showed reasonably good correspondence, suggesting that the respondents were geographically representative. Another aspect to consider is that this e-learning course covered a specific and rather advanced topic intended for a specific and limited group of learners. Hence, the results and conclusions of this evaluation may not be applicable to other learning areas.

Future Direction

Further developments might include an upgrade to a learning platform that allows interactivity, since most of these learners requested the possibility to interact with each other and with the teachers. Several studies have emphasised the importance of interaction for the learning process and shown that it is highly valued by learners [23–25]. The current e-learning course will be integrated with the UMC annual PV course in a ‘blended learning’ approach. Adding practical exercises is another potential improvement, since they would help learners build skills and were requested by many of them.

This study demonstrated that the e-learning course was well received overall and represents a viable approach to delivering educational content. An appropriate next step is therefore to evaluate the knowledge gained during the course, whether it led to changed behaviour afterwards, and whether this changed behaviour led to improved results.

Conclusion

To conclude, this UMC e-learning course in pharmacovigilance based on microlearning was well received across all the aspects evaluated, with a global coverage among relevant professional disciplines, including the primary target group, national PV centre staff.


Acknowledgements

The authors would like to thank all UMC staff who were involved in the planning, development and launch of this e-learning course, and everyone who participated in the user surveys and thereby contributed to making the course even better. The opinions and conclusions of this study are not necessarily those of the national centres that make up the WHO Programme for International Drug Monitoring, nor of the WHO.

Declarations

Author Contributions

AH and JE designed the study. PC-J and RS designed and delivered modules 1–4 of the online course. AH was responsible for survey distributions and data collection. All authors contributed to data analysis and interpretation. AH was the lead author with input from all authors.

Code availability

Not applicable.

Funding

No funding to conduct the study was received. Uppsala Monitoring Centre funded the open access.

Conflict of interest

Anna Hegerius, Pia Caduff-Janosa, Ruth Savage and Johan Ellenius have no conflicts of interest that are directly relevant to the content of this study.

Compliance with ethical standards/ethics approval

Not applicable.

Consent to participate

The learners who were selected to participate in the surveys were informed that anonymity was guaranteed, and that the data would be used for research. By responding to the surveys the respondents gave their consent to participate.

Consent for publication

The learners who were selected to participate in the surveys were informed that the outcome of the surveys would be used for research and that some data may be published. By responding to the surveys, the respondents gave their consent for publication.

Availability of data and material

The datasets generated and/or analysed during the current study are not publicly available due to confidentiality issues but are available from the corresponding author on reasonable request.

References

- 1. Vargesson N. Thalidomide-induced teratogenesis: history and mechanisms. Birth Defects Res Part C Embryo Today Rev. 2015;105(2):140–156. doi: 10.1002/bdrc.21096.

- 2. Information on the Member States requirement for the nomination of a pharmacovigilance (PhV) contact person at national level. London, United Kingdom: European Medicines Agency; 2017. https://www.ema.europa.eu/en/documents/other/information-member-states-requirement-nomination-pharmacovigilance-phv-contact-person-national-level_en.pdf. Accessed 4 June 2020.

- 3. Guidelines for qualified person for pharmacovigilance. Ghana, Accra: Food and Drugs Authority; 2013. https://www.rrfa.co.za/wp-content/uploads/2017/01/Ghana-Guidelines-for-Qualified-Person-for-Pharmacovigilance-1802.pdf. Accessed 4 June 2020.

- 4. Guidelines for the establishment of the qualified persons for pharmacovigilance. Nairobi, Kenya: Pharmacy and Poisons Board Kenya; 2018. https://pharmacyboardkenya.org/downloads. Accessed 4 June 2020.

- 5. Frehywot S, Vovides Y, Talib Z, Mikhail N, Ross H, Wohltjen H, et al. E-learning in medical education in resource constrained low- and middle-income countries. Hum Resour Health. 2013;11(1):4. doi: 10.1186/1478-4491-11-4.

- 6. Hug T. Didactics of microlearning: concepts, discourses and examples. Münster: Waxmann; 2007.

- 7. Shail MS. Using micro-learning on mobile applications to increase knowledge retention and work performance: a review of literature. Cureus. 2019;11(8):e5307. doi: 10.7759/cureus.5307.

- 8. De Gagne JC, Park HK, Hall K, Woodward A, Yamane S, Kim SS. Microlearning in health professions education: scoping review. JMIR Med Educ. 2019;5(2):e13997. doi: 10.2196/13997.

- 9. UMC online courses. Uppsala: Uppsala Monitoring Centre; 2017. https://www.who-umc.org/education-training/online-courses/. Accessed 30 Oct 2019.

- 10. Childs S, Blenkinsopp E, Hall A, Walton G. Effective e-learning for health professionals and students—barriers and their solutions. A systematic review of the literature—findings from the HeXL project. Health Inf Libr J. 2005;22(s2):20–32. doi: 10.1111/j.1470-3327.2005.00614.x.

- 11. Cook DA. Web-based learning: pros, cons and controversies. Clin Med (Lond). 2007;7(1):37–42. doi: 10.7861/clinmedicine.7-1-37.

- 12. Cook DA, Levinson AJ, Garside S, Dupras DM, Erwin PJ, Montori VM. Internet-based learning in the health professions: a meta-analysis. JAMA. 2008;300(10):1181–1196. doi: 10.1001/jama.300.10.1181.

- 13. Thomas PA, Kern DE, Hughes MT, Chen BY. Curriculum development for medical education: a six-step approach. 3rd ed. Baltimore: JHU Press; 2016.

- 14. Ruiz JG, Mintzer MJ, Leipzig RM. The impact of e-learning in medical education. Acad Med. 2006;81(3):207–212. doi: 10.1097/00001888-200603000-00002.

- 15. Kirkpatrick D, Kirkpatrick J. Evaluating training programs: the four levels. 3rd ed. San Francisco: Berrett-Koehler Publishers; 2006.

- 16. Joynes C. Distance learning for health: what works? A global review of accredited post-qualification training programmes for health workers in low and middle income countries. London: London International Development Centre; 2011.

- 17. Venkatesh V, Morris MG, Davis GB, Davis FD. User acceptance of information technology: toward a unified view. MIS Q. 2003;27(3):425–478. doi: 10.2307/30036540.

- 18. Davis FD. A technology acceptance model for empirically testing new end-user information systems: theory and results. Ph.D. thesis, Massachusetts Institute of Technology; 1986.

- 19. Davis FD. Perceived usefulness, perceived ease of use, and user acceptance of information technology. MIS Q. 1989;13(3):319–340. doi: 10.2307/249008.

- 20. Venkatesh V, Davis FD. A theoretical extension of the technology acceptance model: four longitudinal field studies. Manag Sci. 2000;46(2):186–204. doi: 10.1287/mnsc.46.2.186.11926.

- 21. Chen J-L. The effects of education compatibility and technological expectancy on e-learning acceptance. Comput Educ. 2011;57(2):1501–1511. doi: 10.1016/j.compedu.2011.02.009.

- 22. WHO regional offices. Geneva, Switzerland: WHO; 2019. https://www.who.int/about/who-we-are/regional-offices. Accessed 6 Dec 2019.

- 23. Curran VR, Lockyer J, Kirby F, Sargeant J, Fleet L, Wright D. The nature of the interaction between participants and facilitators in online asynchronous continuing medical education learning environments. Teach Learn Med. 2005;17(3):240–245. doi: 10.1207/s15328015tlm1703_7.

- 24. Koch S, Hagglund M. Mutual learning and exchange of health informatics experiences from around the world—evaluation of a massive open online course in ehealth. Stud Health Technol Inform. 2017;245:753–757.

- 25. Wong G, Greenhalgh T, Pawson R. Internet-based medical education: a realist review of what works, for whom and in what circumstances. BMC Med Educ. 2010;10:12. doi: 10.1186/1472-6920-10-12.
