Abstract

Translational research in vision prosthetics, gene therapy, optogenetics, stem cell and other forms of transplantation, and sensory substitution is creating new therapeutic options for patients with neural forms of blindness. The technical challenges faced by each of these disciplines differ considerably, but they all face the same challenge of how to assess vision in patients with ultra-low vision (ULV), who will be the earliest subjects to receive new therapies.

Historically, there were few tests to assess vision in ULV patients. In the 1990s, the field of visual prosthetics expanded rapidly, and this activity led to a heightened need to develop better tests to quantify end points for clinical studies. Each group tended to develop novel tests, which made it difficult to compare outcomes across groups. The common lack of validation of the tests and the variable use of controls added to the challenge of interpreting the outcomes of these clinical studies.

In 2014, at the bi-annual International “Eye and the Chip” meeting of experts in the field of visual prosthetics, a group of interested leaders agreed to work cooperatively to develop the International Harmonization of Outcomes and Vision Endpoints in Vision Restoration Trials (HOVER) Taskforce. Under this banner, more than 80 specialists across seven topic areas joined an effort to formulate guidelines for performing and reporting psychophysical tests in humans who participate in clinical trials for visual restoration. This document provides the complete version of the consensus opinions from the HOVER taskforce, which, together with its rules of governance, will be posted on the website of the Henry Ford Department of Ophthalmology (www.artificialvision.org).

Research groups or companies that choose to follow these guidelines are encouraged to include a specific statement to that effect in their communications to the public. The Executive Committee of the HOVER Taskforce will maintain a list of all human psychophysical research in the relevant fields of research on the same website to provide an overview of methods and outcomes of all clinical work being performed in an attempt to restore vision to the blind. This website will also specify which scientific publications contain the statement of certification. The website will be updated every 2 years and continue to exist as a living document of worldwide efforts to restore vision to the blind.

The HOVER consensus document has been written by over 80 of the world's experts in vision restoration and low vision and provides recommendations on the measurement and reporting of patient outcomes in vision restoration trials.

Keywords: clinical endpoints, vision outcomes, vision restoration

Introduction

Lauren Ayton1 and Joseph Rizzo III2

1Department of Optometry and Vision Sciences and Department of Surgery (Ophthalmology), The University of Melbourne, Parkville, Australia; Centre for Eye Research Australia, Royal Victorian Eye and Ear Hospital, East Melbourne, Australia (e-mail: layton@unimelb.edu.au)

2Massachusetts Eye and Ear Infirmary, Harvard Medical School, Boston, MA, USA (e-mail: Joseph_Rizzo@MEEI.harvard.edu)

Restoration of vision to patients with neural forms of blindness is one of the Holy Grails of modern medicine. Large numbers of research teams and companies around the globe have been pursuing a wide range of approaches to achieve this goal, including genetic, prosthetic, optogenetic, stem cell and other transplantation, and sensory substitution strategies. Each approach has advantages and disadvantages, and no approach will likely prove to be well suited for all forms of neural blindness. Given this, robust activity across multiple disciplines would seem to be the best approach in the pursuit of the challenging goal of providing sight to the blind.

The preponderance of preclinical and clinical studies in sight recovery has been conducted with prostheses. The experimental foundation of the field of visual prosthetics was established in 1968 by the work of Brindley and Lewin1 and later Dobelle and Mladejovsky,2 who reported that electrical stimulation could produce visual phosphenes in subjects who were severely blind. Since that time, more than 40 research teams have been developing some form of visual prosthesis (Fig. 1), and two devices were commercialized: Argus II (Second Sight Medical Products, Sylmar, CA), which has received both Food and Drug Administration (FDA, United States) approval and the Conformité Européenne (CE, European) mark, and Alpha AMS (Retina Implant AG, Reutlingen, Germany), which has received the CE mark only.

Figure 1.

Active visual prosthetic groups around the world as of November 2019. This map does not include groups that are working on genetic, optogenetic, or transplantation strategies to restore vision to the blind.

In parallel, in December 2017, the FDA approved the first directly administered gene therapy in the United States, LUXTURNA (voretigene neparvovec-rzyl; Spark Therapeutics, Philadelphia, PA) as a treatment for bi-allelic mutations in the RPE65 gene that cause Leber congenital amaurosis. This milestone presages the approval of other genetic therapies as we enter the dawn of a wide range of novel treatment options for the blind. This robust expansion of research across several disciplines brings hope to the millions of blind individuals who may be able to benefit from these sophisticated technologies in the upcoming decades.

The technical challenges faced by the various strategic approaches for visual restoration or augmentation of visual function differ considerably; however, all of these fields must contend with the need to demonstrate safety and efficacy, which are the cornerstones for regulatory approval. This document is focused on the recommended methods to collect evidence to support the latter. The need for special attention to the topic of efficacy is driven by the challenge of obtaining reliable measures of vision function, or functional vision, before and after intervention in subjects who are severely blind and who will be the earliest candidates for intervention. Inaccuracies in measuring endpoints of vision can lead to spurious conclusions of therapeutic benefit when none is present, which could unnecessarily expose patients to risks of injury to their eyes or overall health without a reasonable hope of benefit.

The best outcomes to date from any form of intervention have been meaningful but have not yet reached the level of providing substantial visual improvement for multiple tasks of daily life. Even the best performing subjects implanted with a visual prosthetic have not improved to the level of “legal blindness” on standard measures of visual acuity nor have they been able to perform the majority of assessments routinely used in standard visual testing. As such, out of necessity, the groups that led early human prosthetic testing had to develop novel test methods. An unintended consequence of the use of novel, group-based testing methods is the challenge for scientists, physicians, regulatory agencies, and the corporate sector to readily compare outcomes across groups. Thus, the time-honored scientific principle that places great significance on external (i.e., disinterested third party) confirmation of results has not been possible in this field. For the same reason, it has been challenging to interpret “validation” studies from any group to assess the potential value of a device for end users.

The notion of seeking international consensus on psychophysical testing methods in the emerging disciplines of neural visual restoration was first raised by one of us (JFR) at the inaugural “The Eye and the Chip” conference in 2000. For a variety of reasons, sufficient momentum toward this goal did not materialize until 2014, when we (LA and JFR) catalyzed an initiative by announcing that our respective Australian- and Boston-based teams had agreed to work cooperatively to develop shared testing methods.

This announcement was met with enthusiasm and attracted over 80 researchers to form a multinational group, the Harmonization of Outcomes and Vision Endpoints in Vision Restoration Trials (HOVER) Taskforce.3 This taskforce was grounded on the principles of openness, inclusiveness, and collegiality among scientists from around the world, and it sought guidance from recognized experts in visual rehabilitation and visual restoration to develop recommendations for “good practice” for visual psychophysical testing in severely blind humans.

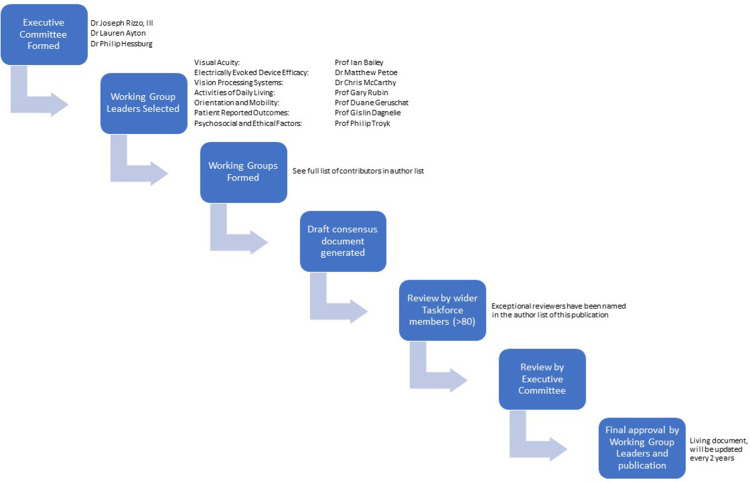

The taskforce operated through a federated structure, where seven working groups were formed in the following areas of interest: visual acuity, electrically evoked device effectiveness, vision processing systems, activities of daily living, orientation and mobility, patient reported outcomes, and psychosocial assessments and ethical considerations. The working group chairs were selected by the taskforce's Executive Committee, but they then had free choice regarding the members of their working groups. The teams represent diversity across nationality and profession and include academics, clinicians, and industry leaders.

The working groups were tasked with developing a consensus document in their area, which often invoked spirited discussions and debate. The majority of this work was done via phone teleconferences and e-mails, with some groups meeting in person when possible. When the group had developed their document and all members were in agreement with the content, the section was reviewed by the broader HOVER Taskforce members (over 80 people who had expressed interest during an initial Special Interest Group at the Association for Research in Vision and Ophthalmology Annual Meeting in 2014). Several reviewers made extraordinary contributions to this process, and they are acknowledged as part of the core HOVER Taskforce in our author list. Final reviews were completed by the Executive Committee. The structure and process of this taskforce are shown in Figure 2.

Figure 2.

Structure and process flowchart of the HOVER Taskforce.

The Executive Committee and working group leaders met at conferences throughout the process, usually once a year. The process was detailed, thorough, and required a significant time commitment. We cannot thank the contributors enough for their hard work and invaluable expertise.

This initial set of recommendations was developed by experts in the field of visual prosthetics, but our scientific panel includes experts from other sight recovery disciplines (see Acknowledgments) who have agreed to encourage members from their disciplines to provide modifications to our recommendations to better suit their fields of study.

We are proud to share this initial consensus document with our peers, with the knowledge that this will be the first in a series of updates and improvements as the field progresses.

Definition of Terms Relevant to the Psychophysical Assessment of Emerging Visual Restoration Strategies

August Colenbrander1

1Smith-Kettlewell Eye Research Institute and California Pacific Medical Center, San Francisco, CA, USA

Vision is often considered to be the most important source of information about our environment and for our interaction with that environment. This document discusses important issues regarding assessment of outcomes in any type of visual restoration trial. For clarity, the following definitions of terms used in the document are provided.

Assessing visual outcomes can be approached from different points of view. For those who study how the eye functions (and, by extension, how the visual system functions), the goal of vision seems to be the creation of a visual percept. For those who are interested in how the person functions, the goal is broader: how to facilitate the person's interaction with the environment using visual information.

The two viewpoints are obviously related, but there are distinct differences. The first concept addresses specific visual function, such as enabled perception of detail, color, and movement. The second concept addresses functional vision and the contribution that vision provides to enhance task performance, such as reading, mobility, and activities of daily living. This document specifically addresses individuals who have or will undergo some intervention in the hope of benefiting from improved visual function and functional vision.

The term vision loss is a relative term and not an all-or-none phenomenon. Loss of vision can range from mild and moderate to severe, profound, and total. On the other hand, the term blindness is not a relative term. Given the dictionary definition of blindness as “to be without light perception,” a person cannot be “a little bit blind.” However, this term also has vernacular and legal usages that may be used to reference a social category, or a range of disabilities. For the purpose of population statistics, the World Health Organization (WHO) defines blindness numerically as visual acuity “less than 3/60” (which is equivalent to the metrics 20/400, 0.05, or 6/120). The International Council of Ophthalmology provided a functional definition that “people may be considered blind when they have no vision or so little vision that they have to rely primarily on vision substitution skills (i.e. use of senses other than vision, such as Braille, long cane, text-to-speech software conversion, etc.) to conduct their activities of daily living.”4 In any characterization, it is appreciated that having any residual vision provides a useful adjunct to function.
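The equivalence of the acuity notations quoted above can be checked with simple arithmetic. The following minimal Python sketch is an illustration only; the function names are hypothetical and are not part of any HOVER tool. It treats a Snellen fraction as a decimal acuity and derives logMAR as the base-10 logarithm of the minimum angle of resolution.

```python
import math


def snellen_to_decimal(numerator: float, denominator: float) -> float:
    """Decimal acuity is the Snellen fraction evaluated as a number."""
    return numerator / denominator


def decimal_to_logmar(decimal_acuity: float) -> float:
    """logMAR = log10(MAR in arcmin), where MAR = 1 / decimal acuity."""
    return -math.log10(decimal_acuity)


# The three notations cited for the WHO blindness threshold.
for num, den in [(3, 60), (20, 400), (6, 120)]:
    dec = snellen_to_decimal(num, den)
    print(f"{num}/{den}: decimal {dec:.2f}, logMAR {decimal_to_logmar(dec):.2f}")
```

All three fractions evaluate to a decimal acuity of 0.05, which corresponds to a logMAR of about 1.30.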

Traditional Measures of Visual Performance Used by Ophthalmologists

Legally blind—Depends on the defining organization. WHO defines legally blind as 20/400 or worse in the better eye and/or a field of view smaller than 20 degrees.

Count fingers (CF)—Individuals can tell how many fingers the ophthalmologist is holding up.

Hand motion (HM)—Individuals can tell that the ophthalmologist is waving a hand in front of their eyes.

Light perception (LP)—Individuals can tell if the lights in a room are on or off. Roughly equivalent to a normally sighted individual's perception with their eyes closed, and generally assessed using a bright light at between 40 cm and 1 m.

No light perception (NLP)—Individuals cannot tell if the lights in a room are on or off. Generally assessed using a bright light at between 40 cm and 1 m.

The term low vision refers to having less than normal vision but not being classified as “blind.” In these cases, use of any residual vision can be improved with various strategies for vision enhancement, such as use of magnification, enhanced lighting, or higher levels of contrast.4

The term ultra-low vision (ULV) refers to having very limited vision but not complete blindness. ULV is so limited that at best only crude shapes can be detected and recognized. Often vision is limited to detection of movement, light projection, or bare light perception. Traditional scales of visual performance, such as those used to describe central visual acuity, are not adequate to convey the potential capability of an individual to perform tasks of daily living, as the experiential level of function depends at least as much on non-visual skills as on the level of vision. Some people are characterized as having ULV because they were sighted and then progressively lost vision, whereas others were blind and then gained vision through various interventions, such as visual prosthetics or genetic therapies.

Vision rehabilitation aims at improving how a person functions, regardless of how the eyes function. To do this, vision rehabilitation may use vision enhancement, vision substitution, or other means. This approach builds upon whatever visual and mental abilities remain.

Vision restoration refers to efforts to restore lost visual abilities, relying on the visual system to convey information. Cataract surgery with implantation of an intraocular lens is by far the most common procedure that restores vision. A variety of emerging approaches, including visual prosthetics, genetic therapies, neurotrophic drugs, stem cells, and optogenetics, represent attempts to restore vision. Techniques that restore function through non-visual means, such as text-to-speech conversion and tactile vision substitution, are potentially valuable to an individual but are not considered to be vision restoration.

In the foreseeable future, use of any of the emerging forms of visual restoration will be restricted to individuals with ULV. Those who undergo such intervention also should have access to other means of visual rehabilitation, such as the use of specialized devices to help with specific tasks. In such cases, it is important to measure performance achieved with visual restoration alone compared to performance when relying on non-restorative rehabilitation. This will provide the greatest insight into how much benefit patients receive from medical interventions.

Assessment of outcomes can be undertaken in many different ways. This document provides guidance on methods that have been peer reviewed and considered acceptable by the HOVER Taskforce.

How the Visual System Functions

Visual acuity assesses the size of the projection of the smallest possible recognizable optotype onto the retinal surface, which, even for a 20/200 letter (0.1, 6/60), corresponds to less than 1 degree of visual angle. Generally, measurement of optotype recognition assumes that only a single fixation of eye position was used to see the optotype. Acuity measurements for larger objects can be more complex to interpret because visual recognition of larger letters may involve the use of searching eye movements and/or scanning with the head (or head-mounted camera). Assistive strategies such as these should be duly noted and recorded when measuring visual acuity. Similarly, reaction times and whether any digital or optical magnification was used should be included as part of the assessment.
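As a worked check of the statement that a 20/200 letter subtends less than 1 degree, the sketch below assumes the standard optotype design convention in which the overall letter height is five times the minimum angle of resolution (MAR). The function names and the 4-meter example distance are illustrative assumptions.

```python
import math


def optotype_angle_deg(snellen_denominator_20ft: float) -> float:
    """Angular height of an optotype for a 20/x acuity level.

    Assumes the conventional design in which letter height = 5 x MAR,
    with MAR in arcmin equal to x / 20.
    """
    mar_arcmin = snellen_denominator_20ft / 20.0
    return 5.0 * mar_arcmin / 60.0


def optotype_height_mm(angle_deg: float, distance_m: float) -> float:
    """Physical height that subtends angle_deg at a viewing distance of distance_m."""
    return 2.0 * distance_m * math.tan(math.radians(angle_deg) / 2.0) * 1000.0


angle = optotype_angle_deg(200)          # 20/200 letter: 50 arcmin, about 0.83 degrees
height = optotype_height_mm(angle, 4.0)  # physical letter height at 4 m
print(f"{angle:.2f} deg, {height:.1f} mm at 4 m")
```

Under this convention a 20/200 letter spans 50 minutes of arc, so it fits within a single degree of visual angle; targets for ULV must be considerably larger.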

The visual field is the region of visual space that corresponds to regions of retina that retain a criterion level of visual function. Efficient use of a restricted peripheral field requires conscious scanning techniques, which often require deliberate training. This is as important for visual prostheses as it is for disease conditions such as glaucoma and retinitis pigmentosa (RP).

How the Person Functions

How individuals utilize their visual information can be assessed by observation and measurement of their performance with regard to orientation and mobility, as well as activities of daily living. Patient satisfaction can also be assessed subjectively with patient-reported outcomes. All three of these areas are covered in detail in this HOVER document.

How the Device Functions

Device effectiveness can be assessed by examining the relationship between stimulation and induced percepts or visual function benefit. Depending on the type of device that is used, device effectiveness can be enhanced by additive technical means, such as preprocessing of the visual or other stimuli to either complement or compensate for the neural processing, or to facilitate perception or function.

In summary, assessment of outcomes can legitimately be undertaken in many different ways depending on the goal of the experimenter. This document provides guidance on methods that have been peer reviewed and considered acceptable by the HOVER Taskforce.

Visual Acuity

Ian Bailey (chair)1, Michael Bach2, Rick Ferris3, Chris Johnson4, Ava Bittner5, August Colenbrander6, and Jill Keeffe7

1School of Optometry, University of California-Berkeley, Berkeley, CA, USA (e-mail: ibailey@berkeley.edu)

2Eye Center, University of Freiburg, Freiburg, Germany

3National Eye Institute, National Institutes of Health, Bethesda, MD, USA

4Department of Ophthalmology and Visual Sciences, University of Iowa Hospitals and Clinics, Iowa City, IA, USA

5Nova Southeastern University College of Optometry, Fort Lauderdale, FL, USA

6Smith-Kettlewell Eye Research Institute, San Francisco, CA, USA

7LV Prasad Eye Institute, Hyderabad, India

Ever since Snellen developed his chart, letter chart acuity has been a mainstay for the assessment of vision. The Snellen or similar type test is relatively easy to perform, generally requires little time, is inexpensive, and can be universally applied. Indeed, its use is so pervasive that the terms “visual acuity” (meaning letter chart acuity that measures foveal, or at least central, vision) and “vision” are often used synonymously. Measuring visual acuity with optotype charts serves many clinical purposes, including identifying and monitoring ocular health, guiding decision-making when correcting refractive errors, and assessing the potential benefit of medical interventions. However, measurement of central vision with optotype charts has limitations, especially when working with individuals who have ULV. Measurement of acuity in these individuals typically requires significant time and effort. It can be difficult to obtain an accurate measurement at any single sitting, complicating valid comparisons across visits. This challenge of obtaining reproducible measurements over time complicates any attempt to assess the benefit of a visual restorative intervention.

For optotype charts, the basic visual task is recognizing objects sequentially to allow an estimate to be made of the minimum angle of resolution that can be reliably reported. Typically, subjective responses for optotypes are sought from larger objects first, and progressively smaller objects are then shown, although the inverse approach is viewed as being advantageous by some, because individuals are often reluctant to acknowledge that they can recognize an optotype if it appears blurry, even with encouragement. At the common presentation distances, the largest letters on most charts have an angular size smaller than 1 degree, which is less than the diameter of the fovea. For most common visual acuity testing, the foveal area of the retina is responsible for the recognition of the optotypes as eye movements systematically shift the attention across and down the chart.

In ULV, the features in the visual acuity targets will generally have to be much larger than 1 degree of visual angle, and there is a higher likelihood of scotomas or other visual field restrictions that can further compromise testing of acuity. Scanning eye movements and sometimes even head movements are likely to be required as the subject inspects and attempts to identify and interpret the features of the test target.

When vision is too poor to perform the visual task of reading a letter chart, then the task should be systematically simplified. Recognizing single optotypes is a simpler task than reading a letter chart, and grating acuity test tasks are simpler than identifying single optotypes.

When considering the consequences of vision disorders, it is often important to assess multiple parameters of ocular function, such as visual acuity, contrast sensitivity, color discrimination, and visual fields. It is also important to identify impairments in these individual functions in each eye separately. The scores from any of the various visual acuity tests should not be assumed to be measures of the person's ability to perform visually guided functional tasks in everyday life.

How the person functions is determined by how the person is able to integrate visual information from the two eyes, as well as information from other sensory systems, into vision-related functioning. This is often referred to as visual ability. Visual ability and visual disability should be assessed with both eyes open. Vision and vision-related functioning involve more than just visual acuity; however, when actual ability assessments are not available, “visual acuity of the better eye” is often useful as an estimate or indicator of ability or disability.

Introduction

The measurement of visual acuity in vision restoration trials is of utmost importance, as it is one of the key outcome measures accepted both by regulatory bodies and by the general public as evidence of post-intervention improvement. In standard clinical practice, measurement of visual acuity using a logMAR letter acuity chart, such as the Early Treatment Diabetic Retinopathy Study (ETDRS) chart,5 is the gold standard for assessment of the minimum angle of resolution that a subject can achieve.6 However, such charts are only able to measure acuity down to levels of logMAR 1.60 (20/800 or 6/240) and so are not applicable to subjects who cannot achieve this level of acuity. Historically, subjects with vision worse than logMAR = 1.60 had their vision characterized as “count fingers,” “hand movements,” “light perception,” or “no light perception” vision, but these categories have been difficult to standardize and are not sensitive enough for use in vision restoration clinical trials.

Another major challenge with measurement of visual acuity in subjects with ULV is the significant variability (in visual acuity, contrast sensitivity, and visual fields, for example) that exists.7 Due to the range of visual outcomes in vision restoration trials, and these complicating factors, it is essential to implement a cohort of acuity tests in a standardized and repeatable manner. These tests should be administered before and after the therapeutic intervention.

These guidelines outline recommended methodologies for testing ULV subjects who participate in clinical therapeutic trials and for reporting the psychophysical results; however, all researchers are free to add other tests or develop new tests that might help identify or quantify other characteristics of vision that may be changing as a result of the interventions. We hope that this HOVER document will provide useful guidance in how to describe testing methods and results, so as to encourage reproducibility by other researchers and clinicians.

Recommended Methodology for Assessment of Visual Acuity

Acuity assessment should involve evaluation of optotype recognition acuities (letters, Landolt rings, and/or tumbling E acuity) and grating acuity. Researchers and clinicians are encouraged to use one of the existing validated tests for these purposes, including the ETDRS chart,5 the Freiburg Acuity and Contrast test (FrACT),8 the Berkeley Rudimentary Vision Test (BRVT),9 the Basic Grating Acuity (BaGA) test,10 or the Grating Acuity Test (GAT).11 There are other tests, such as the Basic Assessment of Light and Motion (BaLM) test,12 that evaluate other aspects of visual function.

General Recommendations for Testing

The examiner should be qualified in the assessment of visual acuity. Ophthalmologists, optometrists, orthoptists, or certified ophthalmic technicians or assistants are all potentially capable of performing this role, provided they are willing to follow a standardized protocol as described below.

The choice of test should depend in part on the level of vision. For subjects with vision of logMAR 1.60 (equivalent to 6/240 or 20/800) or better, the ETDRS test can be used. For subjects with worse vision, the BaLM, BaGA, FrACT, or BRVT tests should be used. New alternative tests for very low vision might be developed in the future.
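Because lower logMAR values indicate better acuity, the selection rule above is easy to invert by mistake. The following sketch encodes it under stated assumptions: the function name is hypothetical, and `None` is used here to represent a subject whose acuity could not be quantified on a chart.

```python
from typing import List, Optional

# Coarsest acuity measurable with the ETDRS chart, as stated in the text.
ETDRS_LIMIT_LOGMAR = 1.60


def candidate_tests(logmar: Optional[float]) -> List[str]:
    """Return the tests suggested for a given level of vision.

    "logMAR 1.60 or better" means a value less than or equal to 1.60.
    None stands for vision that could not be quantified (an assumption
    of this sketch, not a HOVER convention).
    """
    if logmar is not None and logmar <= ETDRS_LIMIT_LOGMAR:
        return ["ETDRS"]
    return ["BaLM", "BaGA", "FrACT", "BRVT"]


print(candidate_tests(1.30))  # within ETDRS range
print(candidate_tests(2.40))  # worse than the ETDRS limit
```

The sketch only narrows the list of candidate tests; the final choice still depends on the protocol and on the task the subject is able to perform.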

For pre-intervention measurements, all tests should be completed with the subject's best refraction in place. The refractive correction should be appropriate for the testing distance being used. The pupils should be undilated. If subjects are unable to complete a subjective refraction, the refractive error may be estimated using an auto-refractor or retinoscopy.

Visual acuity measurements for ULV should be made with large targets that have very coarse detail, so that target recognition is robust to moderate magnitudes of optical defocus. If reasonable estimates of refractive error are available, it is generally recommended that the optical correction be worn during testing. If viewing distances are changed, appropriate adjustments of corrective lens power could be made, although this concern is not consequential if the change of lens power is not substantial. The use of any refractive correction, other than what would be normally used, must be described in any report or publication.

Some interventions may involve lenses or imaging systems that produce magnification (or minification) by causing the perceived image to have a larger (or smaller) angular size than the real-world object. In all reports on such cases, the magnitude of the magnification or minification must be specified, and a detailed description should be given of the lenses or imaging systems used. Quantification of any changes in visual acuity should make distinctions between changes attributed to the imaging system and changes that result from the therapeutic intervention.

For post-intervention measurements, the recommended method depends on the type of intervention used. For prosthetic devices that bypass the optical components of the eye (e.g., camera-based prostheses), refractive correction need not be used for post-intervention measurements if the pre-intervention testing did not yield quantifiable results. However, optimal refractive correction must be used if pre-intervention testing yielded quantifiable results, and it should be used if the subject reports a benefit from optical correction, even if this benefit is not quantifiable. If electronic camera zooming is used, the magnification that was used at the time that testing was performed must be recorded and specified. For photodiode-based prosthetic devices, optimal refractive correction should be used during any acuity measure, and, again, the magnitude of any magnification effects must be specified.

For ETDRS and BRVT testing, the recommended room illuminance is 500 lux, which is representative of lighting levels in well-lit office environments. Illuminance levels from 250 to 1000 lux are acceptable. Within a given program or clinic, it is important that the illuminance levels be kept consistent (to within ±20%) from one test session to the next and from one testing station to another. It is also important to verify that no room lights or other bright surfaces are acting as glare sources to the patient or that their reflections are producing “hot spots” on the stimulus chart.
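A site could log a lux-meter reading before each session and check it against these limits. The sketch below is one possible implementation, not a prescribed procedure: the function names are hypothetical, and the ±20% tolerance is interpreted relative to a reference reading chosen by the site (for example, the first session), which is an assumption because the text does not define the reference.

```python
ACCEPTABLE_RANGE_LUX = (250.0, 1000.0)  # acceptable range stated in the text
TOLERANCE = 0.20                        # +/-20% consistency requirement


def within_acceptable_range(lux: float) -> bool:
    """True if the room illuminance is within the acceptable 250-1000 lux range."""
    low, high = ACCEPTABLE_RANGE_LUX
    return low <= lux <= high


def consistent_with_reference(reference_lux: float, lux: float) -> bool:
    """True if a reading is within +/-20% of the site's reference reading.

    Using a single reference reading is an assumption of this sketch.
    """
    return abs(lux - reference_lux) <= TOLERANCE * reference_lux


reference = 500.0
for reading in (480.0, 590.0, 650.0, 200.0):
    print(reading,
          within_acceptable_range(reading),
          consistent_with_reference(reference, reading))
```

A reading of 650 lux illustrates why both checks are needed: it lies inside the acceptable range but differs from a 500 lux reference by more than 20%.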

For other testing methods, variations in ambient light intensity, including absence of room lighting, can be used as deemed appropriate. Again, care should be taken to ensure that the lighting remains the same from one test session to the next. Other illumination levels may be considered if there is reason to believe that illumination levels are having a significant effect on performance.

Specific testing distances should be used. Generally, these will be distances recommended for specific test charts or distances determined from the calibration of screen displays to achieve the desired angular sizes.

Measurements should be made with the right eye and left eye separately and then binocularly, when appropriate. Due to time constraints, this may not always be possible; in this case, testing the study eye is always the priority. During the monocular tests, care must be taken to ensure that the other eye is fully occluded. Within a test protocol, there should be documented rules for stopping, for guessing, or for allowing subjects to correct or change responses. There should also be standard rules and procedures for encouraging guessing and pointing to help the patient locate the test target. Subjects should be allowed to move their head and eyes as they wish to assist in the identification and utilization of any islands of residual vision, as these strategies have been shown to improve performance on simulated prosthetic vision tests13 and in natural low vision. It should be reported whether or not head and eye movements were allowed.

A time limit should be set for each response (sometimes specified by the test manufacturer).

In order to identify residual islands of vision, hand-held optotype charts may be used (either an ETDRS letter chart or a BRVT chart). Such testing should begin at a distance of 4 meters, with the examiner moving the chart into all four quadrants of the visual field and asking the subject about the preferred location for seeing the chart. If an island of vision is found at this distance, then the testing distance can be changed in order to obtain a more precise measurement of visual acuity. If no island of vision is found at 4 meters, the same procedure should be administered at a distance of 1 meter.

For each stage of evaluation (i.e., subject screening and selection and pre- and post-intervention assessments), acuity measurements should be obtained from a minimum of two test sessions separated by at least 24 hours. The variability of each subject's responses, both within sessions and between sessions, should be determined. Prior to any intervention, there should be at least two sessions of testing to establish the baseline measurement. Subjects should be excluded from the study if the variability of their responses exceeds a specified criterion.

For some subjects, it will be appropriate to make visual acuity measurements with different visual acuity test tasks. For example, a patient with poor acuity combined with very small visual fields (as is typical with a vision prosthesis) may not be able to trace out and recognize the shape of a large letter, so a grating acuity task may provide a better measure of the visual resolution ability. The visual resolution task used in the visual acuity tests becomes progressively more complex going from gratings to isolated optotypes to single optotypes with flanking bars and to charts of optotypes in logMAR or other multi-optotype formats. Measurements of visual acuity can show wide differences from one test task to another, and, at this time, there has not been a comprehensive comparison of all of the various methods and how the visual acuity scores may be affected for different pathology groups. Hence, it is vital to define which visual acuity task was used. For monitoring changes in vision over time, it is very important that consecutive test sessions include at least one of the same visual acuity tests, administered under the same conditions and following the same protocol.

Specific Recommendations for Post-Intervention Measurements

If only one eye is treated, visual acuity still should be measured in each eye separately and with binocular viewing. Any visual performance changes in the untreated eye, or changes under binocular viewing should be identified to assess if there is a bilateral effect on visual performance. The fellow eye should be patched, or at least there should be assurance that the fellow eye is occluded, during monocular acuity testing.

In post-intervention measures, the test should be completed in a random order with (1) device on, (2) device off, and (3) when practical, a control condition, which might include strategically scrambled stimulation input from the device. If a control condition is used, specific details should be given about the nature of the control. If a control condition is not used, this should be explicitly stated.
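A randomized condition order of this kind can be generated and logged for each session. The sketch below is illustrative only; the condition labels and the function name are hypothetical, and a seeded generator is used so that the order can be reproduced and reported.

```python
import random


def condition_order(include_control: bool, rng: random.Random) -> list[str]:
    """Return the test conditions for one session in random order."""
    conditions = ["device_on", "device_off"]
    if include_control:
        # For example, strategically scrambled stimulation input.
        conditions.append("control")
    rng.shuffle(conditions)
    return conditions


# Example: one session with a control condition; the seed is arbitrary.
order = condition_order(include_control=True, rng=random.Random(2024))
```

Recording the seed or the resulting order alongside the results makes it straightforward to state whether a control condition was used.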

Performance can vary significantly depending on whether the optotype is white on a black background or black on a white background.14 In some prosthetic devices, contrast reversal is under the patient's control. The contrast direction of the optotypes should be reported, and it should be reported whether or not this contrast direction was the choice of the patient or the experimenter. Ideally, acuity measurements should be made with both white-on-black and black-on-white optotypes, but this may be restricted by time limitations.

Specific Visual Acuity Test Methodologies

LogMAR Letter Acuity Tests

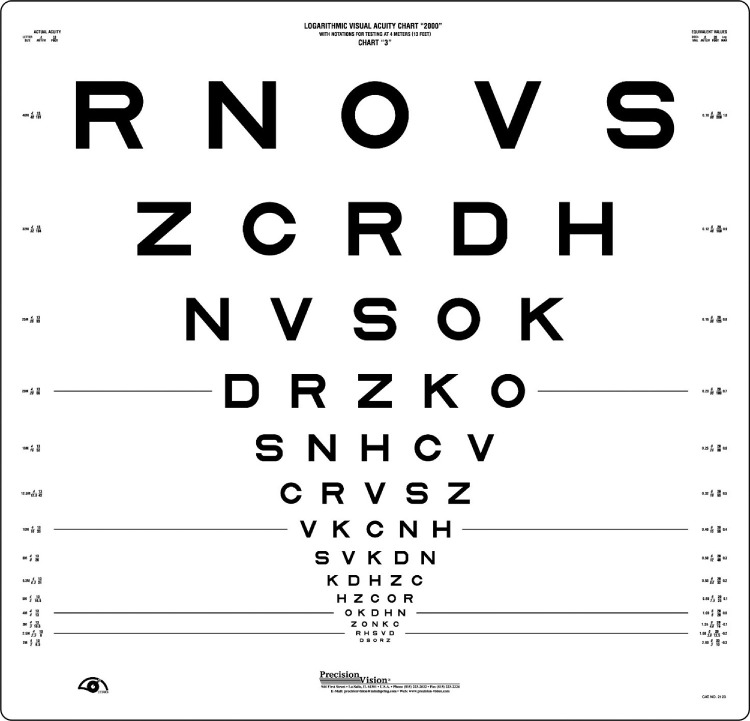

Acuity measured with a standardized logMAR letter chart is the gold standard acuity measure for subjects with vision better than logMAR 1.60 (20/800 or 6/240). The most widely used format is the ETDRS chart (see Fig. 3).5

Figure 3.

The standard Early Treatment of Diabetic Retinopathy Study (ETDRS) logMAR visual acuity chart.

Figure 4.

The electronic Early Treatment of Diabetic Retinopathy Study (E-ETDRS) visual acuity test.

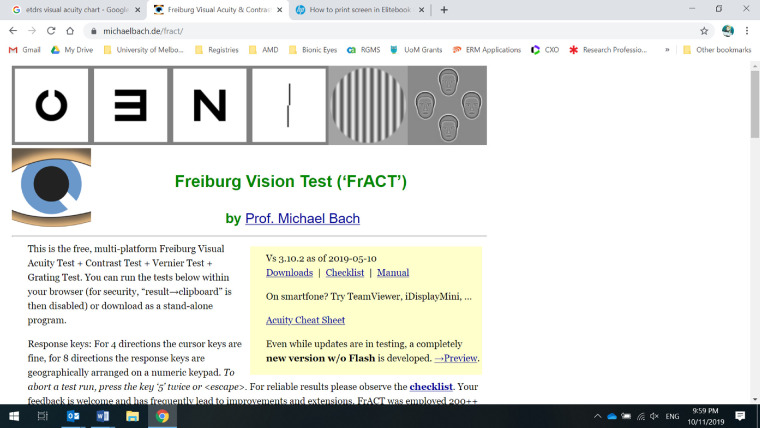

Figure 5.

Screenshot of the Freiburg Acuity and Contrast Test (FrACT), available online at http://michaelbach.de/fract/.

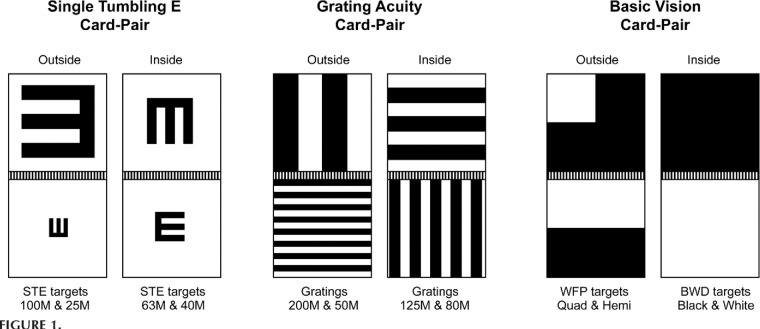

Figure 6.

The Berkeley Rudimentary Vision Test (BRVT).

The published ETDRS guidelines for measuring acuity on a letter optotype chart are summarized as follows:

1. The ETDRS chart should be positioned such that the third row of letters (the 0.80 logMAR line) is 125 ± 5 cm (49 ± 2 inches) from the floor and 4 meters (13 feet) away from the subject, regardless of their vision.
2. The right eye is tested first using ETDRS Chart 1.
3. Subjects are instructed that they should attempt to identify all letters on the chart, from the top line down, and they are encouraged to guess when they are uncertain. The subject should be told that there are no numbers or shapes other than English letters.
4. The examiner records the location and optotype of each correct identification, allocating a score of 0.02 log units per letter.5
5. If the subject is unable to read more than 10 letters at 4 meters, then the chart is moved forward to a distance of 1 meter from the subject, where the test is repeated. A +0.75D lens added to the refractive correction is required to maintain clear focus.
6. The left eye then is measured using the ETDRS Chart 2.
7. After completion of the left eye testing, the occluder is removed from the right eye and testing is repeated with both eyes open.
8. If subjects are not able to see the letters on a standard acuity chart, then a low vision optotype recognition test should be used. Recommendations for testing of ULV acuity include use of the FrACT or BRVT, as described below.
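The letter-by-letter scoring in step 4 and the change of distance in step 5 can be combined into a single logMAR value. The sketch below is one common way to do this and is not taken from the published protocol; it assumes five letters per row, a top row of logMAR 1.0 at 4 meters (consistent with the third row being the 0.80 line), and credit for every letter counted.

```python
import math

TOP_ROW_LOGMAR_AT_4M = 1.0   # assumption: top row of the chart at 4 m
LETTERS_PER_ROW = 5          # assumption: standard five-letter rows
CREDIT_PER_LETTER = 0.02     # from the text: 0.02 log units per letter


def etdrs_logmar(letters_correct: int, distance_m: float = 4.0) -> float:
    """Letter-by-letter logMAR score, corrected for testing distance."""
    # Score with zero letters read at 4 m (one row beyond the top row).
    base = TOP_ROW_LOGMAR_AT_4M + LETTERS_PER_ROW * CREDIT_PER_LETTER
    # Moving the chart closer enlarges the angular size of every letter.
    distance_correction = math.log10(4.0 / distance_m)
    return base - CREDIT_PER_LETTER * letters_correct + distance_correction


# Example: only the top row (five letters) read at 1 meter.
score_at_1m = etdrs_logmar(5, distance_m=1.0)
```

Under these assumptions, reading only the top row at 1 meter gives a score of about logMAR 1.60, which matches the limit quoted above for letter chart acuity.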

Electronic ETDRS Test

The electronic ETDRS (E-ETDRS) test16 (see Fig. 4) is a computer-based test of visual acuity that uses single letters, each with four flanking bars that are separated from the letter by one letter-width. This test has been shown to provide reliable scores of visual acuity that are well correlated with the scores from the ETDRS letter chart. At the standard test distance of 3 meters (10 feet), the upper limit of the measurable visual acuity range is logMAR 1.60 (0.025, 6/240, 20/800), but this can be extended by reducing the test distance. Computerization facilitates the recording and scoring of responses and allows easy randomization of the sequence of letters. The E-ETDRS test creates efficiencies by making a quick estimate of the acuity before strategically concentrating testing near the threshold level. The main advantages are improved testing efficiency and randomized letter sequences, which avoid memorization issues when repeated measures are made. Because the E-ETDRS targets are single Sloan letters with flanking bars, the visual task will likely be easier than reading from charts with five letters per row; hence, some differences in visual acuity scores can be expected.

Low-Vision Optotype Test: FrACT

The FrACT8 was designed for the assessment of low vision covering the entire range of visual acuity measurable with optotypes. The FrACT is a computer-driven program that is available online without cost (http://michaelbach.de/fract/) (see Fig. 5). Full details of the test have been published elsewhere.8,17,18 The FrACT also includes tests of contrast sensitivity.

The recommended protocol is as follows:

1. Prior to the commencement of testing, the program must be calibrated by
   a. Measuring the observation distance from the eye to the screen and entering this number into the “observer distance” box on the setup screen.
   b. Measuring the blue calibration line on the computer screen and entering this value into the “length of blue ruler” box.
2. When the system has been calibrated, it will calculate the visual acuity range that can be presented from the distance measurements and screen resolution.
3. Acuity can be measured using a range of optotypes, including Sloan letters, Landolt rings, and tumbling E; normally the optotypes will be black on a white background, but this can be altered in the settings.
4. The provided checklist and on-screen instructions are followed to complete each optotype test.
5. The size and resolution of the display screen should be chosen to avoid floor and ceiling effects. The screen size should accommodate the largest letters, and the resolution should be sufficient for satisfactory rendition of the smallest letters.

Low-Vision Optotype Test: BRVT

The BRVT test9 was developed for the clinical measurement of visual acuity in subjects with ULV in the range of logMAR 1.60 and poorer. The test is administered with three card pairs, each of which consists of two 25-cm-square cards hinged together, thus providing four panels that can be used as targets (Fig. 6). The first card pair consists of single tumbling E (STE) letter optotypes; the second card pair displays square wave gratings to measure spatial resolution; and the third card pair is used as both a discrimination test (using a diffuse white or black card) and a detection task (by identifying the location of a white region on an otherwise black background). Full details of recommended test methods are published elsewhere.9,19

The recommended protocol for the BRVT is as follows:

1. Begin testing with the STE acuity test at a viewing distance of 1 meter. At this distance, the STE acuity range is from logMAR = 2.00 to 1.40 (equivalent to 6/600 to 6/150, 20/2000 to 20/500), and it can be measured in increments of 0.20 log units.
2. Present all cards in the BRVT at least four times to the subject, with the orientation changed randomly each time. For the four-choice STE task, successful identification is taken as better than 50% correct responses across six or more presentations.
3. For the two-choice grating acuity task, successful identification is taken as 80% or more correct responses across eight or more presentations.
4. If the orientation of the largest STE (100 M) cannot be recognized at 1 meter, then reduce the viewing distance to 25 cm, where the acuity range becomes logMAR 2.60 to 2.00 (6/2400 to 6/600, 20/8000 to 20/2000).
5. If the subject is unable to identify the orientation of the 100 M STE at 25 cm, change to the second card pair of square wave gratings. These gratings should be presented at 25 cm, which provides a grating acuity range of logMAR 2.90 to 2.30 (6/4800 to 6/1200, 20/16000 to 20/4000) in steps of 0.20 log units.
6. If the subject is unable to identify the orientation of the largest grating, change to the third card pair, which has the white field projection and black–white discrimination tests. These cards also should be presented at 25 cm.
7. The white field projection test has two targets. One is a white quadrant on a black background; for the other, the card is divided into black and white halves. The subject's task is to locate the white quad-field or the white hemi-field.
8. If the subject fails the white field projection test, administer the black–white discrimination test. The subject's task is to distinguish the all-black card from the all-white card.
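To see how strict the pass criteria in steps 2 and 3 are, it can help to compute how often a subject would meet them by guessing alone. The sketch below uses a simple binomial model and is an illustration, not part of the BRVT protocol; it assumes the minimum number of presentations (six for the four-choice STE task, eight for the two-choice grating task) and independent guesses.

```python
import math


def p_pass_by_guessing(n_trials: int, min_correct: int, p_chance: float) -> float:
    """Probability of at least min_correct correct answers by pure guessing."""
    return sum(
        math.comb(n_trials, k) * p_chance**k * (1.0 - p_chance) ** (n_trials - k)
        for k in range(min_correct, n_trials + 1)
    )


# Four-choice STE task: "better than 50%" of 6 means at least 4 correct.
p_ste = p_pass_by_guessing(n_trials=6, min_correct=4, p_chance=0.25)

# Two-choice grating task: 80% of 8 is 6.4, so at least 7 correct.
p_grating = p_pass_by_guessing(n_trials=8, min_correct=7, p_chance=0.5)
```

Under these assumptions, both criteria are passed by chance in fewer than 4% of attempts, and using more presentations lowers that rate further.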

BaGA Test

The BaGA test10 uses a computer screen display to present a circular field filled with a sine-wave grating. There are four possible orientations for the gratings, two cardinal and two oblique, and four different spatial frequencies (3.3, 1.0, 0.33, and 0.10 cpd). Different viewing distances, field sizes, and gamma values may be selected. The subject responds to the four-alternative forced choice task on a keyboard. Correct responses and response times are recorded for each of the grating orientations.

GAT

The GAT,11 another computerized test, presents a square wave grating in a 37.5-cm circular field for presentation at 1.0, 2.0, or 4.0 m using a four-alternative forced-choice paradigm with responses recorded by pressing a button. The spatial frequencies of the gratings are incremented in steps of 0.10 log units, and, for each subject, the testing distance is based on the individual visual acuity at their initial visit.

BaLM Test

The BaLM test was developed specifically for use with prosthetic vision devices.12 The BaLM test includes tests for perception of light, detection of motion, light localization, and the temporal discrimination of two flashes. Subject responses are delivered through a numeric keypad, and auditory cues are provided to prompt responses at the appropriate time.

Testing for Light Perception

When subjects cannot satisfactorily respond to the various tests of visual acuity and spatial vision, then light perception should be tested.

To assess light perception,

1. The brightest light delivered by an indirect ophthalmoscope should be used.
2. Room lighting should remain at the same level used for normal acuity testing.
3. Each eye should be tested separately. The fellow eye should be patched, and it should also be covered with the palm of the subject's hand to ensure a tight seal around the orbit and bridge of the nose.
4. The indirect ophthalmoscope should be held at 1 meter and the light beam directed into and away from the pupil of the eye at least eight times.
5. The subject should report when they see the light.
6. If the examiner is convinced the subject can identify the onset of the light, this response can be recorded as light perception (LP); otherwise, the level of vision is classified as no light perception (NLP).

Presentation and Analysis of Results

Changes in visual acuity scores should be analyzed using logarithmic scaling. The preferred method for designating the visual acuity scores is to use logMAR. For the charts of optotypes, flanked single optotypes, single optotypes, and grating targets, the minimum angle of resolution (MAR) is the angular size of the critical detail in minutes of arc. Most optotypes are built on a 5 × 5 grid, so the MAR is assumed to be one-fifth of the height of the optotype. For grating targets with a 50/50 duty cycle, the MAR is given by the size of a stripe width when expressed in minutes of arc. Visual acuity results should be presented and reported in logMAR units, but when authors wish to use Snellen fractions or decimal notations these should be added in parentheses after the logMAR value.
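The relationships between logMAR, Snellen fractions, decimal acuity, and grating spatial frequency follow directly from these definitions. The sketch below is illustrative, with hypothetical function names; it assumes a 50/50 duty cycle for gratings, so that one stripe is half a cycle.

```python
import math


def snellen_denominator(logmar: float, numerator: float = 20.0) -> float:
    """Denominator of the Snellen fraction, e.g. 20/x (or 6/x with numerator=6)."""
    return numerator * 10.0 ** logmar


def decimal_acuity(logmar: float) -> float:
    """Decimal acuity, the reciprocal of the MAR in minutes of arc."""
    return 10.0 ** (-logmar)


def grating_logmar(cycles_per_degree: float) -> float:
    """logMAR of a 50/50 duty cycle grating from its spatial frequency.

    One degree is 60 arcmin and each cycle contains two stripes, so the
    stripe width (taken as the MAR) is 60 / (2 * cpd) arcmin.
    """
    stripe_arcmin = 60.0 / (2.0 * cycles_per_degree)
    return math.log10(stripe_arcmin)


# Example: report a logMAR 1.60 result with the other notations in parentheses.
report = (
    f"logMAR 1.60 ({decimal_acuity(1.6):.3f}, "
    f"6/{snellen_denominator(1.6, 6.0):.0f}, 20/{snellen_denominator(1.6):.0f})"
)
```

Exact conversions rarely land on round Snellen denominators, so published values are usually rounded to a conventional figure (for example, 20/800 for logMAR 1.60).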

This logarithmic scaling of visual acuity should be used for analysis of differences or changes, as well as for graphical presentation of population data. When datasets include visual acuity measures from different test tasks (gratings, single optotypes, flanked optotypes, or charts of optotypes) or from different viewing conditions or testing procedures, it becomes important to clearly identify which results came from which variant of the visual acuity tests.

Some vision restoration systems will be able to incorporate optical or electronic display systems that allow magnification, minification, or repositioning of some, or all, of the perceived image. The visual acuity score is a measure of the angular size that the critical detail in the test target subtends at the observer's eye. All measurements of visual acuity should be expressed in angular terms, relative to the observer's eye, and full information should be reported about the testing procedures and the test tasks (gratings, single optotypes or charts).

When any optional magnification or minification is used during visual acuity testing, the reports of all visual acuity results should include a detailed description of all image manipulations. In such cases, it may be important to measure and report associated changes in the visual field.

The tests of temporal resolution, motion detection, spatial localization, and light perception in the BaLM and the white field projection and black–white discrimination test in the BRVT are not tests of visual resolution, and the results of such tests should be reported separately.

Reporting Guidelines

Any publication or presentation should contain sufficient information so that another group can replicate the testing methodology.

When reporting the results for testing of visual acuity, the following information must be included:

1. Name of the test (and version number if available)
2. Type of acuity optotype used (e.g., letter, Landolt ring, STE, flanked letters)
3. Room lighting (illuminance in lux), measured from the point of the subject's eye
4. Luminance (in cd/m²) of any computer screens or back-illuminated charts used in testing
5. Contrast polarity of the optotype (white-on-black or black-on-white); if gray or colored targets or backgrounds are used, the gray levels or color characteristics should be specified
6. Time cutoff for each response
7. Testing distance
8. Information about the angular size of the visual display used
9. Qualitative control-related information, including whether an occluder or patch was used or whether scrambled versus unscrambled stimulation inputs were used
10. Quantitative control-related information, including the number of data points (test and control) acquired for each test condition, with the mean, median, and standard deviations, as appropriate
11. Indication of whether subjects used eccentric viewing when taking the tests
12. Indication of whether subjects made scanning eye movements when taking the tests
13. Indication of the distant refraction (as measured or estimated) and the power of any corrective lenses that were used for testing; use of optical telescopes or other low vision aids, or any electronic zooming systems, must be described in detail
14. Number of test runs within sessions and number of sessions used to obtain results, including the timing and duration of any scheduled or ad hoc rest sessions

Note that reference to the use of a publicly available detailed refraction and visual acuity protocol7 can be used to summarize these details.

Figure 7.

Example of equipment that can be used for full-field electroretinography, the Espion ColorDome LED-based full-field stimulator.37 New models of the Espion system also include software to enable full-field stimulus threshold testing.

Electrophysiology

Gislin Dagnelie (chair)1, Michael Bach2, David Birch3, Laura Frishman4, and J. Vernon Odom5

1Lions Vision Research and Rehabilitation Center, Johns Hopkins Wilmer Eye Institute, Baltimore, MD, USA (e-mail: gislin@jhu.edu)

2Functional Vision Research, Eye Center, Medical Center, Freiburg University, Freiburg, Germany

3Retina Foundation of the Southwest, Dallas, TX, USA

4College of Optometry, University of Houston, Houston, TX, USA

5WVU Eye Institute and Blanchette Rockefeller Neurosciences Institute, West Virginia University, Morgantown, WV, USA

Introduction

Electrophysiological methods are one of the few tools available to researchers and clinicians to obtain information about signal transduction in the visual system that does not depend on the behavior of the patient. Electrophysiology provides detailed timing information, albeit with limited spatial resolution. Electrical response activity along the visual pathway can be recorded non-invasively at the ocular surface (electroretinogram, ERG) and from the occipital scalp (visually evoked potential, VEP). The signals captured by electrodes on the cornea, conjunctiva, or scalp contain small responses embedded in noise that is generated by spontaneous neuronal and muscle activity and by the electrode–tissue interface. Signal amplitudes are in the microvolt range, even in normally sighted individuals, and the interpretation of abnormalities in these small responses requires sophisticated signal analysis techniques such as averaging and spectral (Fourier) analysis.

The International Society for Clinical Electrophysiology of Vision (ISCEV) has published a series of standards that allow similar stimulation and recording methods to be used worldwide; most applicable to vision restoration trials are the standards for ERG20 and VEP.21 HOVER electrophysiology standards should adhere to ISCEV standards as much as possible, but, as described below, judicious adjustments to these standards may sometimes be required to obtain meaningful ERG or VEP recordings.

In clinical practice, multiple stimulus types and recordings may be required to confirm or differentiate among clinical diagnoses, but the use of a single well-chosen ERG or VEP method may be sufficient to confirm or rule out functionality of the retina or visual pathway (i.e., efficacy of a vision restoration approach). Moreover, special adaptations may be necessary. To test functionality of a photosensitive electronic implant in the macular area of a patient with age-related macular degeneration (AMD), a VEP elicited by a visible light stimulus is ambiguous, as the response may originate in peripheral retinal photoreceptors, whereas a near-infrared stimulus would be invisible to the native photoreceptors, so a VEP elicited by this stimulus would necessarily come from the implant by virtue of its near-infrared sensitivity. Generally speaking, the choice of stimulus parameters needs to be adjusted to the particular vision restoration technique and its (potential) benefits, and the same may be true for recording and analysis methods.

Here, we will consider a number of aspects that should allow a trained clinical electrophysiologist to remain close to the ISCEV standards when developing electrophysiological outcome measures for vision restoration trials, yet make judicious adjustments to these standards as called for by the specific conditions and properties of the disorder and treatment.

ERG Stimulation and Signal Analysis Techniques

ERG responses reflect signal processing and homeostatic recovery in the retina and therefore can provide evidence of (restored) retinal function in vision restoration trials, but the extent to which this is feasible depends to a large extent on the therapeutic modality. As an example, major components of the ERG arise in response to rod and cone photoreceptor activity and recovery, so a treatment restoring outer retinal (photoreceptor and/or retinal pigment epithelium) function is much more likely to have a measurable effect on the ERG than a treatment intervening at the retinal ganglion cell level.

In normally sighted individuals, ERG responses to full-field bright flashes administered following dark adaptation can be as large as ≈500 µV, so with properly configured bandpass filtering and amplification clean responses can be obtained without any need for averaging or postprocessing. This large response is due to the combined activity of similar cellular circuitry across the entire retina, but the situation is very different in advanced vision loss and most vision restoration methods, as only a small retinal area may have residual or restored activity. The resulting small responses to repeated flashes can, in some instances, be recovered through signal averaging, possibly in combination with bandpass filtering.22,23
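The benefit of signal averaging can be illustrated with a small simulation: when the noise is not time locked to the stimulus, averaging N sweeps reduces the residual noise by roughly the square root of N. The sketch below uses entirely synthetic data; the response shape, the amplitudes, and the number of sweeps are illustrative assumptions and not values from any recording.

```python
import math
import random


def simulate_sweep(template, noise_sd, rng):
    """One recorded sweep: the response template plus Gaussian noise."""
    return [s + rng.gauss(0.0, noise_sd) for s in template]


def average_sweeps(sweeps):
    """Sample-by-sample mean across sweeps."""
    n = len(sweeps)
    return [sum(samples) / n for samples in zip(*sweeps)]


def rms(values):
    """Root mean square of a list of samples."""
    return math.sqrt(sum(v * v for v in values) / len(values))


rng = random.Random(0)
n_samples = 200
# Hypothetical 1 µV response buried in 10 µV noise.
template = [1.0 * math.sin(math.pi * i / n_samples) for i in range(n_samples)]
noise_sd = 10.0
n_sweeps = 400

sweeps = [simulate_sweep(template, noise_sd, rng) for _ in range(n_sweeps)]
averaged = average_sweeps(sweeps)

# Noise left in the average; expected near noise_sd / sqrt(n_sweeps) = 0.5 µV.
residual_noise = rms([a - t for a, t in zip(averaged, template)])
```

Noise components that are time locked to the stimulus, such as stimulus artifacts, add identically to every sweep and are not reduced by this procedure.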

Further improvements in signal-to-noise ratio (SNR) can be obtained by using a train of flashes (commonly referred to as “flicker”) and analyzing the periodic response components with Fourier decomposition. The amplitude and (especially) phase of the first and sometimes higher harmonics (especially the second harmonic) are used as characteristic indicators for inner retinal function.24 Cone function is best analyzed with high flash rates (∼30/s), whereas rods have a longer refractory period and thus are best studied with lower rates (∼9–10/s).25,26 Use of flicker responses as the only outcome does have inherent risks, however, as the spectral content of the recorded signal should be carefully analyzed to verify that the harmonic (signal + noise) spectral components are significantly larger than the surrounding (noise only) components, confirming the presence of a reliable response signal.27
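The comparison between the harmonic component and the surrounding noise components can be sketched as follows. This is a simplified illustration on synthetic data using only the Python standard library; the ratio computed here is a plain amplitude ratio and not a formal significance test, and the sampling rate, flicker rate, and noise level are assumptions chosen for the example.

```python
import cmath
import math
import random


def amplitude_at(signal, sample_rate_hz, freq_hz):
    """Amplitude of the Fourier component of the signal at one frequency."""
    n = len(signal)
    acc = sum(
        x * cmath.exp(-2j * math.pi * freq_hz * i / sample_rate_hz)
        for i, x in enumerate(signal)
    )
    return 2.0 * abs(acc) / n


def harmonic_snr(signal, sample_rate_hz, freq_hz, bin_width_hz, n_neighbors=5):
    """Ratio of the amplitude at freq_hz to the mean of neighboring bins."""
    target = amplitude_at(signal, sample_rate_hz, freq_hz)
    neighbors = [
        amplitude_at(signal, sample_rate_hz, freq_hz + sign * k * bin_width_hz)
        for k in range(1, n_neighbors + 1)
        for sign in (-1, 1)
    ]
    return target / (sum(neighbors) / len(neighbors))


# Synthetic 30 Hz flicker response (amplitude 1) in noise (SD 2), 1 s at 1 kHz.
rng = random.Random(1)
sample_rate_hz = 1000.0
duration_s = 1.0
flicker_hz = 30.0
n = int(sample_rate_hz * duration_s)
signal = [
    math.sin(2.0 * math.pi * flicker_hz * i / sample_rate_hz) + rng.gauss(0.0, 2.0)
    for i in range(n)
]

# The frequency resolution of the analysis is 1 / duration.
snr = harmonic_snr(signal, sample_rate_hz, flicker_hz, bin_width_hz=1.0 / duration_s)
```

The recording length is chosen so that it contains a whole number of flicker cycles, which keeps the response confined to a single frequency bin rather than leaking into its neighbors.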

As indicated above, full-field stimuli are typically used. One reason to use full-field stimuli is to elicit larger ERG responses. A second motivation is that assessing local retinal function is fraught with problems such as intraocular reflections causing spurious stray light responses from the peripheral retina.28 For most vision restoration trials, where the untreated portion of the retina is non-responsive, this is not a concern, but in patients with intact peripheral retinal function (such as in AMD and Stargardt disease) a focal stimulus must be used, preferably with steady peripheral adapting illumination to minimize the possible effects of stray light on peripheral rods and cones.

VEP Stimulation and Signal Analysis Techniques

VEPs reflect cortical processing activity in response to visual stimulation. In native vision, but also in vision restored by electronic retinal implants or other vision restoration methods at the ocular or optic nerve level, VEPs are recorded on the scalp and reflect a mixture of components originating in primary (V1) and nearby higher visual cortical areas. The folding of V1, with only the foveal and parafoveal retina projected onto the occipital pole, and more peripheral areas represented along the medial wall and in the calcarine sulcus of the cerebral hemispheres, causes VEPs to peripheral stimulation to be smaller, more variable between individuals, and therefore more difficult to quantify than those elicited with foveal stimuli.

The favorable location of the foveal projection has allowed researchers to record VEPs to stimulation with retinal implants. Stronks et al.29 demonstrated a clear difference in waveforms and amplitudes when foveal versus extrafoveal electrodes in the Argus II retinal implant were stimulated. This finding highlights two important applications of the VEP, whether elicited electrically or with light stimuli: (1) to objectively demonstrate integrity of the visual pathway from retinal ganglion cells to primary visual cortex, and (2) to differentiate between retinal origins of the response and thus show integrity of the cortical projection. When using light stimuli, the latter can only be verified by using localized retinal stimulation, utilizing either a focal flickering stimulus in a steady background or a luminance-balanced pattern reversal stimulus. Pattern stimuli are preferred, as they elicit more characteristic VEPs with less interindividual variability than flash or flicker VEPs. Pattern VEPs also reflect intracortical processing and can be used to determine visual acuity independently of behavioral measures.30,31

In advanced disease of the retina or visual pathway, as well as in diseases with a central scotoma, recording reliable VEPs may be problematic. Not only will the VEP amplitudes be small, but treatment effects that do not improve macular function may be difficult to document. Improving SNR through lengthy averaging may be impractical, but use of flicker stimuli or (better) rapidly reversing pattern stimuli, combined with Fourier decomposition of the recorded signals, may yield more sensitive outcome measures. Optimal flicker rates for VEPs are likely to be between 10 and 20 flashes/s, whereas pattern reversal rates should be kept under 10 rps (reversals per second).

In normal vision, the optimal pattern size eliciting a robust response will be 10 to 15 arcmin (0.17°–0.25°), but in patients with retinal degeneration or poorly developed native vision optimal sizes may be much larger (1°, 2°, or even 4°).

Small Signal Considerations

As indicated above, ERG and VEP responses are small, even in normally sighted individuals, and smaller in most conditions leading to severe vision loss. This becomes all the more vexing in vision restoration trials, which in most cases treat only a small retinal (or cortical) area. This makes ERG recordings particularly challenging, especially if certain components of the noise are time locked with the stimulus and therefore will not be reduced by averaging. A good example of this can be found in Stronks et al.32 The ERG signals recorded in Argus II recipients were heavily contaminated by stimulus artifacts, but also by rapid pulses generated by de-multiplexing electronics in the implant itself, which could only be removed through sophisticated multistage filtering. The underlying retinal activity had extremely small amplitudes, so only through long recording times and signal averaging could these putative responses, with amplitudes well below 100 nV, be extracted, which is clearly not a practical procedure for assessing visual function in clinical trials.

The same authors demonstrated, however, that VEPs in these same Argus II recipients contained a clear response signature and that foveal versus extrafoveal stimulation resulted in two distinct waveforms with a larger amplitude in response to foveal stimulation, just as would be expected for flash and flicker VEPs in normally sighted observers.29 Thus, for interventions affecting the macula, the VEP can be a useful tool, by virtue of the enlarged and exposed projection of the macula on the occipital pole.

Stimulus Considerations

The sensitivity of the visual system in patients who are candidates for vision restoration trials will typically be severely reduced, and even after successful treatment the sensitivity is likely to remain well below normal levels. In general, therefore, high stimulation levels will be required to elicit even a small response, and as a general rule white light may be preferred to obtain the largest response possible. Here, again, though, the choice of stimulus parameters must be guided by the properties of the system. As an example, an intervention aiming to restore rod function will require testing under dark-adapted conditions, leaving the subject in complete darkness for at least 40 minutes to allow for slower than normal adaptation, and using a stimulus wavelength that preferentially stimulates the rods (i.e., wavelengths below 500 nm, or blue light).

As mentioned before, rod and cone function can be differentiated by the temporal frequency eliciting the optimal flicker response in the ERG. Cone photoreceptors have a shorter refractory period than rods, so flicker rates around 30 flashes/s are typically used for cone-mediated flicker ERGs, whereas 9 or 10 flashes/s (in combination with dark adaptation and short stimulus wavelength) will be optimal to elicit a rod flicker ERG. For flicker VEPs, repetition rates ranging from 10 to 20 flashes/s are optimal.

Electrical Pulse Stimulation

Most types of vision restoration aim to improve or re-create light sensitivity, and for these approaches light stimuli will generally be used to ascertain functionality. In some cases (e.g., prior to optogenetic transfection of ganglion cells), electrical stimulation may be useful for testing the integrity of the retinocortical pathway. Electrical stimulation would also be useful if the VEPs to light stimuli are too small to be recorded reliably, and there is concern that the visual pathway may be compromised.

Electronic retinal implants with external cameras typically have a fixed internal frame rate, limiting the allowable stimulus repetition rate to the frame rate or an integer fraction of it. This limits the frequency choices for flicker stimuli and must be considered when designing a protocol using the ERG or VEP as an outcome measure. Moreover, the likelihood of large artifacts in the recorded ERG signals is high.32 VEPs elicited by retinal electrical stimulation are much less likely to be contaminated by artifacts, and several successful applications have been shown in the literature.29
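The frame rate constraint can be made concrete by listing the repetition rates that a given device allows within a target range. The sketch below is illustrative; the 30 Hz frame rate is a hypothetical value and does not describe any particular implant, while the 10 to 20 flashes/s range is the one suggested in this section for flicker VEPs.

```python
def allowed_flicker_rates(frame_rate_hz, min_rate_hz, max_rate_hz):
    """Repetition rates equal to the frame rate divided by an integer."""
    if min_rate_hz <= 0:
        raise ValueError("min_rate_hz must be positive")
    rates = []
    divisor = 1
    # Rates fall as the divisor grows, so stop once below the minimum.
    while frame_rate_hz / divisor >= min_rate_hz:
        rate = frame_rate_hz / divisor
        if rate <= max_rate_hz:
            rates.append(rate)
        divisor += 1
    return rates


# Example: hypothetical 30 Hz frame rate, flicker VEP range of 10 to 20 flashes/s.
vep_rates = allowed_flicker_rates(30.0, 10.0, 20.0)
```

With these example values only two rates qualify (15 and 10 flashes/s), which shows how few choices may remain once the device frame rate is fixed.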

Electrical stimulation using cortical implants is likely to cause large switching and stimulation artifacts in the VEP, so the potential to study cortical processing with these implants may be limited. This is likely to be an area of study in the next few years, as more cortical visual prostheses enter clinical trials.

Sample Special Cases

To end this section, let us look at a few special cases that illustrate some of the considerations when pursuing evidence of functional vision restoration using electrophysiology.

Central Vision Restoration in AMD

The optimal outcome measure is likely to change as the trial progresses:

- In early-stage (feasibility) studies, it will be important to demonstrate that the intervention is safe (i.e., that function in the intact peripheral retina is not adversely affected by the intervention). Standard ISCEV recordings of the ERG20 before the intervention and at several follow-up times are the most appropriate tools to monitor overall retinal function, but to rule out more localized adverse effects this should be supplemented by a multifocal ERG,33 provided the subject can reliably fixate the center of the hexagonal stimulus pattern throughout the recording time, which may be in excess of 10 minutes.
- To establish functionality of the treatment (i.e., restored functionality in the macula), the VEP is a more appropriate outcome measure, by virtue of the large macular representation in the visual cortex. But here a more judicious choice of the appropriate stimulus will have to be made to be able to distinguish macular and peripheral responses, and this choice will depend on the nature of the treatment:
  - For electronic implants, one can make use of the broader wavelength selectivity of electronic imagers, particularly their sensitivity to near-infrared wavelengths; stimuli with wavelengths between 800 and 850 nm will be invisible to the peripheral retina, and any measurable response in the VEP must be mediated by the implant.
  - If the treated and native retinal areas cannot be distinguished by wavelength sensitivity, spatially restricted stimuli must be used, and fixation must be monitored to ascertain that the stimulus falls on the treated retinal area. As mentioned before, the effect of stray light from the stimulated area onto the peripheral retina can be reduced by steady illumination in the peripheral visual field, but a better approach may be to balance stimulus intensity by using a counterphase modulated pattern.
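The rationale for a counterphase modulated pattern can be sketched numerically. The example below compares the change in space-averaged luminance between the two phases of a reversing checkerboard with the change produced by switching a uniform patch between the same two luminance levels. All names (`checkerboard`, `uniform_field`, `mean_luminance`) and all values (8 x 8 checks, mean luminance of 50, contrast of 0.8, arbitrary luminance units) are illustrative assumptions, not parameters recommended by this document.

```python
def checkerboard(n_checks, mean_lum, contrast, phase):
    """Return an n_checks x n_checks checkerboard as a list of rows.

    phase 0 and phase 1 swap the light and dark checks (counterphase).
    n_checks must be even so light and dark checks are equal in number.
    """
    if n_checks % 2:
        raise ValueError("n_checks must be even")
    light = mean_lum * (1 + contrast)
    dark = mean_lum * (1 - contrast)
    return [
        [light if (row + col + phase) % 2 == 0 else dark for col in range(n_checks)]
        for row in range(n_checks)
    ]


def uniform_field(n_checks, lum):
    """Return a uniform patch of the same size, as used for a simple flash."""
    return [[lum] * n_checks for _ in range(n_checks)]


def mean_luminance(frame):
    """Space-averaged luminance of a frame."""
    values = [v for row in frame for v in row]
    return sum(values) / len(values)


# Assumed example values in arbitrary luminance units.
phase_a = checkerboard(8, 50.0, 0.8, 0)
phase_b = checkerboard(8, 50.0, 0.8, 1)
counterphase_change = mean_luminance(phase_b) - mean_luminance(phase_a)

# A uniform patch switching between the same dark and light levels.
flash_change = mean_luminance(uniform_field(8, 90.0)) - mean_luminance(uniform_field(8, 10.0))

print(f"counterphase change in mean luminance: {counterphase_change:.3f}")
print(f"uniform flash change in mean luminance: {flash_change:.3f}")
```

Because the total light emitted by the pattern stays essentially constant across reversals, stray light reaching the peripheral retina should be modulated far less than with a flashed patch. This sketch ignores optical scatter within the eye, which would need to be considered in a real protocol.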

Optogenetics Treatment in the Macula

For safety monitoring, ERG methods can be used as indicated above. For efficacy, however, one can make use of the fact that transgenic light-sensitive channels created by the treatment will have different temporal and dynamic response properties from the native photoreceptors. By varying adaptation level, stimulus amplitude, and flicker frequency one can separate components in the VEP, similar to the techniques used to separate rod- and cone-mediated responses in the ERG.34 Optimal stimulus and analysis choices will be determined by the specifics of the receptor channels and the native retina.

Recording Through Electronic Implants

As mentioned above, recording ERG or VEP responses to electrical stimulation by retinal or cortical visual prostheses requires extensive averaging and postprocessing due to artifacts arising from the electrical stimulus and implant electronics; the only possible exception is VEPs in response to retinal electrical stimulation.29 It is conceivable, however, that future electronic implants will have built-in recording capabilities through the implanted electrodes. Not only will this allow signals to be captured in the source tissue rather than at a distant location (cornea or scalp), yielding much larger response amplitudes, but this may also guide proper design of the implant, helping to ensure that recording electronics are isolated during stimulation, eliminating stimulus artifacts and thus the need for arduous postprocessing. This design is common in cochlear implants and has led to improved signal processing and much better performance being achieved with those devices.

Full-Field Stimulus Threshold Test

It may seem strange to include a psychophysical test of dark-adapted threshold sensitivity in this section about electrophysiology, but it is justified by the fact that this test is run on an ERG Ganzfeld stimulator (Espion ColorDome; Diagnosys LLC, Lowell, MA; see Fig. 7) and generally administered by technicians most familiar with that equipment (i.e., clinical electrophysiology staff).

When vision has dropped below a level that can be measured psychophysically with common visual function tests (visual field, ETDRS visual acuity, contrast sensitivity), there are few commonly accepted methods available to monitor progression or improvement. The development of the full-field stimulus threshold test (FST)35 created a psychophysical method to measure the illuminance necessary to be perceived by the most sensitive parts of the retina and thus obtain a quantifiable threshold that could be assessed before and after intervention, even in subjects with extremely low vision. This most sensitive area is tested without knowing its exact location, which is especially useful because treatment effects do not always occur in predictable areas; subretinal injections are a good example of a treatment where benefit may be patchy and difficult to pick up with full-field or even multifocal ERG.

As an additional benefit, this method does not require fixation, making it useful even in subjects with better vision who are unable to reliably perform perimetry due to conditions such as nystagmus. The further development of the method moved the test from a customized hardware base36 to software on the Espion ColorDome LED-based full-field stimulator.37 This software is now commercially available as the Diagnosys full-field stimulus threshold test (D-FST) in the software library of the Espion system. Thus, a technique for obtaining full-field thresholds has become a de facto standard that has been used as an outcome measure in the Argus II retinal prosthesis trial (Dagnelie G, unpublished observations) and Luxturna gene therapy trials.38 It is currently being explored as an outcome measure in numerous other early-phase prevention and restoration trials for inherited retinal disease.

There is not currently a standard protocol for this test that is comparable to the ISCEV standards, but the expectation is that such a standard may be published in the near future. Until then, multiple publications using the FST are available in the literature.

Reporting Guidelines

Any publication or presentation reporting the results of electrophysiological recordings in vision restoration trials should include sufficient information to allow replication of the work, including the following:

- The name of the test and, if applicable, any changes made to the corresponding ISCEV standard
- If the test does not correspond to a previously validated (ISCEV) standard, an explanation why this particular test was selected for the population studied and information regarding the validation procedure used to ascertain accuracy and precision in normal vision, as well as in the study population
- If the test has not been previously published or is not available to the general public, accuracy and precision data in normally sighted observers of similar age and gender as the study population
- Any non-standard equipment or settings required to run the tests
- Normative values and confidence intervals in normally sighted observers age and gender matched to the study population

Given the availability of a number of validated and well-calibrated ISCEV standards for assessment of retinal and higher visual pathway function, the working group expresses as its strong opinion that all clinical trials seeking regulatory approval should adhere to these normative tests as closely as possible, with clear arguments why the adaptations used are most appropriate for the study population and the intervention.

Figure 8.

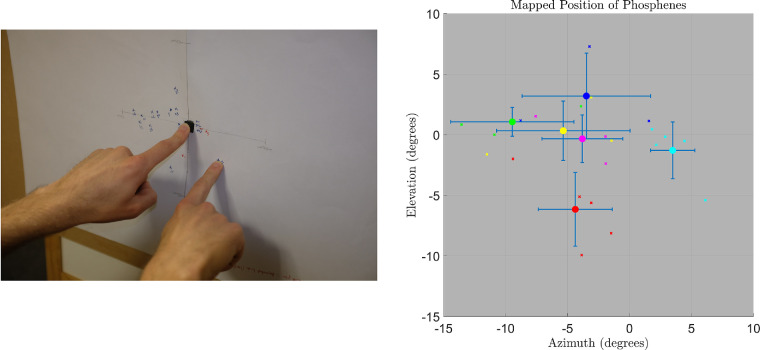

A method of phosphene mapping using an easel. (Left) The participant is instructed to place their left and right index fingers on a tactile marker positioned within a large sheet of paper mounted on an easel. After a short stimulus, the participant moves their right index finger to the remembered position and holds it in place while the researcher marks the paper. (Right) Multiple measurements (“x”) give an indication of each phosphene position, with the average position indicated by a solid colored circle. The bars indicate ±1 SD of phosphene position measurements. Data courtesy of Bionic Vision Technologies, Australia.
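The summary shown in the right panel (an average position with ±1 SD bars from repeated measurements) could be computed along the following lines. This is only a sketch under stated assumptions: the function name `summarize_phosphene`, the coordinate units, and the example points are hypothetical, and the use of a per-axis sample standard deviation is an assumption, because the caption does not specify how the SD was calculated.

```python
from statistics import mean, stdev


def summarize_phosphene(points):
    """Summarize repeated position marks for one phosphene.

    points: list of (x, y) marks in any consistent unit (e.g., cm on the easel).
    Returns the mean position and the per-axis sample SD (n - 1 denominator).
    """
    if len(points) < 2:
        raise ValueError("at least two measurements are needed for an SD")
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    return {
        "n": len(points),
        "mean": (mean(xs), mean(ys)),
        "sd": (stdev(xs), stdev(ys)),
    }


# Hypothetical marks for a single electrode, not data from any study.
marks = [(10.0, 5.0), (12.0, 7.0), (14.0, 9.0)]
summary = summarize_phosphene(marks)
print(summary["mean"], summary["sd"])
```

Reporting the number of repeated measurements alongside the mean and SD makes it easier for readers to judge the reliability of each mapped position.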

Electrically Evoked Device Effectiveness

Matthew Petoe (chair)1, Daniel Rathbun2, Ethan Cohen3, Ione Fine4, and Ralf Hornig5

1Bionics Institute of Australia, East Melbourne, Australia (e-mail: mpetoe@bionicsinstitute.org)

2Werner Reichardt Centre for Integrative Neuroscience and Institute for Ophthalmic Research, University of Tuebingen, Tuebingen, Germany

3Center for Devices and Radiological Health, Food and Drug Administration, Silver Spring, MD, USA

4Department of Psychology, University of Washington, Seattle, WA, USA

5Pixium Vision SA, Paris, France

Introduction

This section applies to visual restorative approaches that utilize a device, such as a visual prosthesis, to induce vision by electrically or perhaps chemically stimulating neural tissue. This section is also relevant for optogenetic and photo-switch approaches that use a device to deliver photic input to the genetically modified neurons.39 The goal of this section is to provide guidance for those who conduct human psychophysical experiments, in terms of both methodology and reporting.

Differing Technologies

There are many variations in the design of prosthetic systems, especially with respect to how visual images are captured (with external cameras or implanted photodiode arrays, for example) and the number and density of implanted electrodes.

Visual prosthetic devices all require a camera or photosensor to dynamically capture the visual scene. Translation of this visual information into induced neural activity can be mediated by image processing algorithms (for camera-based systems). The resulting output must then be converted into either a light (for optogenetics and photo-switches) or electrical stimulation protocol. This stimulation pattern is then processed by the patient's remaining visual system. For many devices, testing can be carried out either via direct stimulation of the device electrodes or under naturalistic conditions where input is provided by the camera. For others, such as photodiode arrays, only naturalistic stimulation is possible.

The location of the stimulating array varies among devices. For visual prosthetic devices, the interface with neural tissue can be in the suprachoroidal space, the retina (either epi- or sub-retinal surface), optic nerve, lateral geniculate nucleus (LGN), occipital lobe, or higher visual cortical association areas.

Sensory substitution devices (i.e., those that take visual images and provide sensory input to the patient via some non-visual sensory means) may position a stimulating array on the tongue, forehead, corneal surface, or lower back, among other possibilities.

The commonality across all these methodologies is that recipients will perceive a representation of the visual environment as a sensory construct. For devices that provide visual input, the evoked percepts are referred to as “phosphenes.” Use of the term phosphene conveys that some visual percept was induced, but by itself this term does not convey the quality, detail, or perceptual value of the percept.

Behavioral Measures

The following basic aspects of phosphene generation should be considered and reported when possible:

1. Phosphene (or perceptual) threshold: A measure of the electrical charge and/or light intensity (or tactile force, in the case of a sensory substitution, tactile prosthesis) required to produce a percept. It is necessary to know the specific details of the technical methods used to achieve a given threshold, as thresholds vary in accordance with many variables, including pulse duration, frequency, inter-pulse spacing, duration of spike train, polarity, waveform, electrode geometry, material and coating, use of one or more electrodes simultaneously or interleaved, distance from the retina or retinal neurons, location of stimulation (e.g., in the macula or periphery of the retina), the status of the neuronal substrate (as might be assessed by optical coherence tomography for retinal devices), residual visual capacity of the subject, and duration of blindness.40-43 There are a variety of methods for estimating thresholds, so the method used should be described, including whether the threshold for each individual electrode or diode was independently measured or whether electrode thresholds were interpolated across the surface of the device (as might be done to speed the process of threshold determination for high electrode count devices). In addition, methods to validate the reliability and precision of the thresholds should also be reported.

2. Phosphene brightness/size: Both phosphene size44 and phosphene brightness44,45 as a function of stimulation properties can generally be estimated quickly and reliably using subjective magnitude ratings. For electrical devices, useful stimulation properties to test and report include the relationships among current amplitude, pulse duration, frequency, and subjective brightness/size. For photodiode devices, the equivalent of current amplitude is external luminance, although the luminance–diode current relationship should also be reported whenever possible.