Abstract

Minimally invasive surgeries often require complicated maneuvers and delicate hand–eye coordination and ideally would incorporate “x-ray vision” to see beyond tool tips and underneath tissues prior to making incisions. Photoacoustic imaging has the potential to offer this feature but not with ionizing x-rays. Instead, optical fibers and acoustic receivers enable photoacoustic sensing of major structures—such as blood vessels and nerves—that are otherwise hidden from view. This imaging process is initiated by transmitting laser pulses that illuminate regions of interest, causing thermal expansion and the generation of sound waves that are detectable with conventional ultrasound transducers. The recorded signals are then converted to images through the beamforming process. Photoacoustic imaging may be implemented to both target and avoid blood-rich surgical contents (and in some cases simultaneously or independently visualize optical fiber tips or metallic surgical tool tips) in order to prevent accidental injury and assist device operators during minimally invasive surgeries and interventional procedures. Novel light delivery systems, counterintuitive findings, and robotic integration methods introduced by the Photoacoustic & Ultrasonic Systems Engineering Lab are summarized in this invited Perspective, setting the foundation and rationale for the subsequent discussion of the author’s views on possible future directions for this exciting frontier known as photoacoustic-guided surgery.

I. INTRODUCTION

The physical principles that govern photoacoustic imaging technology are summarized in several review articles.1–3 The basic principle relies on the illumination of a region of interest with pulsed light that is then absorbed by photoabsorbers within the tissue (such as hemoglobin), resulting in a small mK rise in temperature due to vibrational and collisional relaxation. The temperature rise results in thermal expansion generating an acoustic pressure wave. This pressure wave can be received by an ultrasound transducer and reconstructed into an image.2,3 Contrast in photoacoustic images is primarily derived from differences in the optical absorption spectrum of different tissues.3,4 Because the light is incident on a specific region of tissue, the temperature rise and, therefore, the resulting pressure wave both depend on the optical absorption properties within the illuminated region of tissue. Specific targets for photoacoustic imaging include blood, the fat within the myelin sheath of nerves, and metallic tool tips.
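As a rough illustration of how received channel data can be reconstructed into an image, the sketch below implements a basic delay-and-sum beamformer in Python with NumPy. It is a minimal example and not the method of any cited work: the function name `das_photoacoustic`, the linear array located at zero depth, the constant speed of sound, and the synthetic point source are all assumptions made for illustration. The key difference from pulse-echo ultrasound is that the delays are one-way, because the sound originates at the optical absorber.

```python
import numpy as np

def das_photoacoustic(rf, element_x, fs, c, grid_x, grid_z):
    """Delay-and-sum with one-way (absorber-to-receiver) delays.

    rf        : (n_samples, n_elements) channel data, t = 0 at the laser pulse
    element_x : (n_elements,) lateral element positions [m], array at z = 0
    fs        : sampling frequency [Hz]
    c         : assumed constant speed of sound [m/s]
    grid_x/z  : 1D arrays of lateral and axial pixel positions [m]
    """
    n_samples, n_elements = rf.shape
    cols = np.arange(n_elements)
    image = np.zeros((grid_z.size, grid_x.size))
    for iz, z in enumerate(grid_z):
        for ix, x in enumerate(grid_x):
            # One-way time of flight from this pixel to every element,
            # rounded to the nearest recorded sample.
            dist = np.sqrt((element_x - x) ** 2 + z ** 2)
            idx = np.rint(dist / c * fs).astype(int)
            valid = idx < n_samples
            image[iz, ix] = rf[idx[valid], cols[valid]].sum()
    return image

# Synthetic test: a single point absorber produces one impulse per channel.
fs, c = 40e6, 1540.0
element_x = (np.arange(64) - 31.5) * 0.3e-3        # hypothetical 64-element array
src_x, src_z = 2.0e-3, 20.0e-3                     # hypothetical source position
rf = np.zeros((2048, 64))
arrival = np.rint(np.sqrt((element_x - src_x) ** 2 + src_z ** 2) / c * fs).astype(int)
rf[arrival, np.arange(64)] = 1.0

grid_x = np.linspace(-5e-3, 5e-3, 41)
grid_z = np.linspace(15e-3, 25e-3, 41)
img = das_photoacoustic(rf, element_x, fs, c, grid_x, grid_z)
iz, ix = np.unravel_index(np.argmax(img), img.shape)
print(f"peak at x = {grid_x[ix] * 1e3:.2f} mm, z = {grid_z[iz] * 1e3:.2f} mm")
```

The brightest pixel should coincide with the simulated source. Practical systems typically add interpolation, apodization, and envelope detection, which are omitted here for brevity.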

Photoacoustic imaging system components generally include a light source (typically a nanosecond pulsed laser) and an ultrasound system capable of clinical grade images. Ultrasound images may also be used to provide anatomical structural context for the optical absorption maps provided by photoacoustic images. Because the same ultrasound sensor is used, ultrasound and photoacoustic images can be inherently co-registered to each other.

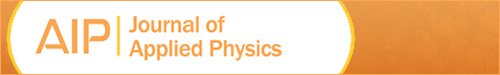

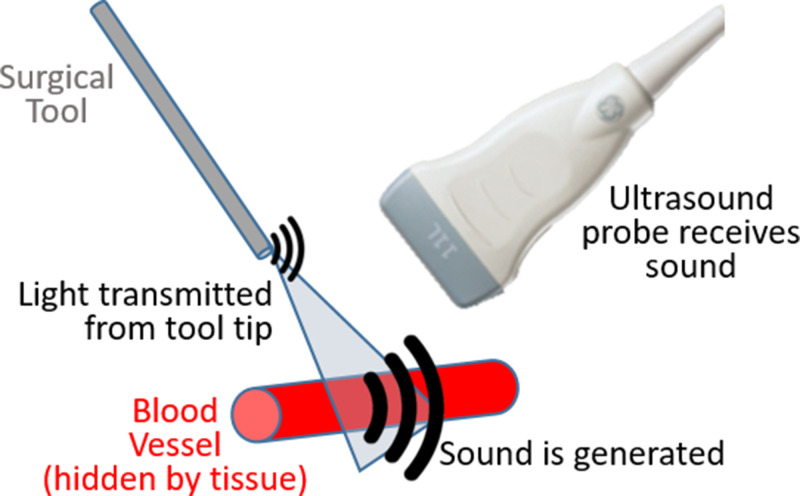

There are multiple options available to deliver light to surgical sites. Historically, light sources have been attached to ultrasound receivers5 or located at fixed distances from the receiver,6 as initially presented and demonstrated for sentinel lymph node detection.5,6 There is now growing interest in separating the light source from the receiver and attaching light sources to surgical tools to enable guidance of interventional procedures. One underlying goal of this approach is to visualize both the tool tip and a structure that needs to be either targeted or avoided in the same photoacoustic image, as illustrated in Fig. 1. Some of the first examples of this concept were demonstrated to visualize biopsy targets such as tumors,7 to identify vasculature and nerves during interventional procedures,8 and to detect brachytherapy seeds by inserting an optical fiber through the hollow core of brachytherapy needles.9,10 There has since been additional work to expand these examples to other types of procedures, making the concept of photoacoustic-guided surgery more versatile than initially envisioned, presented, and demonstrated. Multiple comprehensive review articles have been written to detail the history and wide scope of photoacoustic-guided surgery applications.2,11–13 The purpose of this invited Perspective is to first summarize recent applications of photoacoustic-guided surgery and specific technology developed by the Photoacoustic & Ultrasonic Systems Engineering (PULSE) Lab to enable demonstrations of feasibility. This summary is organized by specific surgical and interventional challenges that are addressed using methods to avoid blood vessels, followed by methods with blood as the surgical target, followed by integration with robotic technology. Much of the recent work in this area is interconnected through the technology, equipment, and customized light delivery design innovations implemented within the past five years, as summarized in Fig. 2, which also provides a technological overview of Sec. II. The description of surgical and interventional applications in Sec. II is then followed by a discussion of outstanding challenges and opportunities in Sec. III. This Perspective concludes with a summary and outlook in Sec. IV.

FIG. 1.

Concept of photoacoustic-guided surgery with intentional flexibility between light transmission and external sound reception.

FIG. 2.

Historical perspective of PULSE Lab photoacoustic-guided surgery applications proposing light sources attached to surgical tools and integration with robotics (Sec. II), which provides support and context for the author’s perspective on possible future innovations (Sec. III).

II. SURGICAL AND INTERVENTIONAL APPLICATIONS

A. Minimally invasive neurosurgery

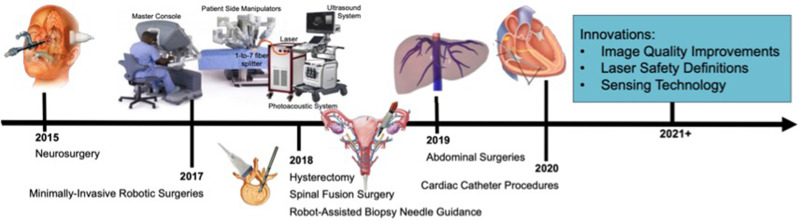

Pituitary tumors located at the base of the skull are commonly removed with endonasal, transsphenoidal surgery, implemented by passing surgical tools through the nose and nasal septum and removing the sphenoid bone to access the tumor. Although the surgery is generally safe, one of the most significant complications is injury to the carotid arteries, which are located within 1–7 mm of the pituitary gland, with reported incidence rates of 1%–1.1%.15,16 Accidental injury to these carotid arteries is a serious surgical setback that causes extreme blood loss, neurological deficits, stroke, and most significantly, the possibility of death, with a reported 24%–40% mortality rate each time this injury occurs.15,17 One reason mortality rates are so high is that current procedures rely on preoperative MRI or CT images, which are not updated during surgery. The overall vision to address these challenges with photoacoustic imaging is to attach optical fibers to the surgical drill18 and detect photoacoustic signals with an external ultrasound probe. Initially, the temple was considered as a viable ultrasound probe location,19 as illustrated in Fig. 2. Follow-up simulation and cadaver studies proposed the eye and the nose as alternative locations for ultrasound signal reception.14 Blood vessels located behind bone were successfully visualized in a human cadaver head with these external acoustic receiver locations, demonstrating the feasibility of photoacoustic-guided neurosurgery with internal light delivery and multiple possible external ultrasound probe locations, as shown in Fig. 3. These results are additionally promising for other types of transcranial photoacoustic imaging applications.

FIG. 3.

(a) 3D computed tomography (CT) skull reconstruction highlighting the axial–lateral imaging planes and lateral-elevation ultrasound probe locations used for the temporal, nasal, and ocular external ultrasound probe locations. Co-registered CT and photoacoustic images acquired with the ultrasound probe placed on the (b) temple, (c) nasal, and (d) ocular regions. (e) Co-registered CT and photoacoustic images of a skull filled with brain tissues and ovine eyes and ultrasound probe placed on the right ocular region. Photoacoustic targets include the left and right internal carotid arteries (LCA and RCA, respectively) and optical source (S). Reprinted with permission from Graham et al., Photoacoustics 19, 100183 (2020). Copyright 2020 Elsevier.

B. Liver surgery

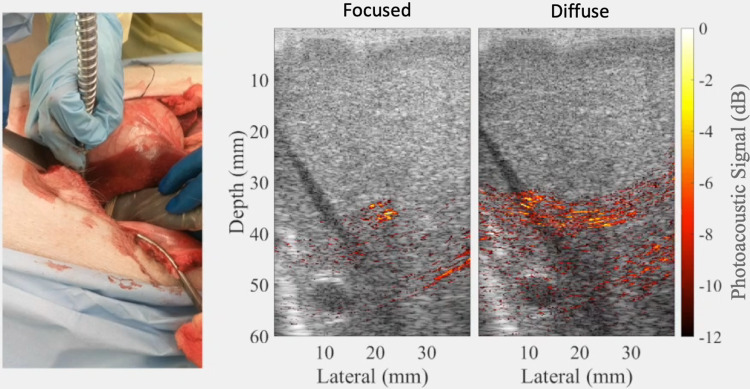

Liver surgeries suffer from the risk of gastrointestinal and intra-abdominal hemorrhage,21–23 with postoperative morbidity occurring in 23%–46% of patients who hemorrhage during liver resections and death occurring in 4%–5% of these patients.23 To address these complications, photoacoustic imaging was implemented by adjusting the location of the light source to determine the location of a major hepatic blood vessel based on its appearance as a focused signal, rather than a diffuse signal (which tends to indicate the presence of predominantly liver tissue).20 The diffuse signal in the liver tissue was likely caused by the presence of multiple small blood vessels within the liver tissue that are either located in the image plane or located off-axis from the image plane. Examples of the differentiation between diffuse and focused signals are shown in Fig. 4, with a corollary video demonstration published in the corresponding journal publication on this topic.20 This differentiation, which was not present during ex vivo liver experiments, has two immediate implications for photoacoustic-guided liver surgeries. First, the distinguishable visualization of major blood vessels during surgery can possibly help surgeons to navigate around these vessels during tissue resection procedures. Second, this approach can be used to assist with estimating and targeting areas where cauterization of the major blood vessels is necessary in order to reduce significant bleeding and blood loss during surgery. Therefore, this is an example of the use of the proposed technology to both target and avoid blood vessels during surgery.

FIG. 4.

Example of photoacoustic signals within and nearby a major hepatic vein obtained during an in vivo liver surgery. The signals originating from within the vein had a more focused appearance, while a subtle adjustment of the light source caused more diffuse signals. Adapted from Kempski et al., Proc. SPIE 10878, 108782T (2019). Copyright 2019 Author(s), licensed under a Creative Commons Attribution 4.0 License.

C. Spinal fusion surgery

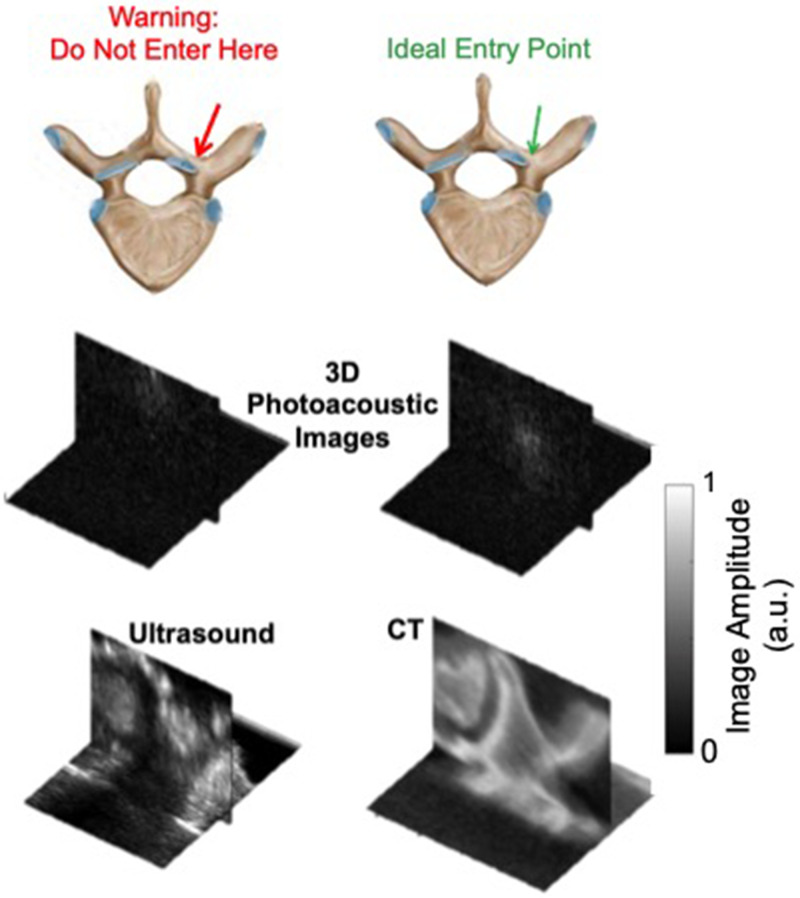

Spinal fusion surgeries are performed to alleviate pain or neurologic deficit or to repair damaged vertebrae within the spinal column25,26 by placing screws through the pedicles of vertebrae to connect them with a metal rod and stabilize the spine. The pedicles consist of a cancellous core that is targeted for screw insertion. However, the pedicle wall is breached in 4%–12% of procedures,27,28 and this rate increases up to 29% for residents.29 In addition to potential breaches, it is difficult to determine the optimal starting point without some initial removal of bone, which can weaken the remaining bone needed to support pedicle screw insertion, particularly if the initial position is incorrect and adjustments are required. Approximately 14%–39.8% of screws are misplaced during spinal fusion surgeries,30–33 which can lead to postoperative neurological injuries, vascular injuries, blindness, or necessary reoperations.34–36 Photoacoustic imaging has been explored as an option to uncover expected differences between the cortical and cancellous bone for the potential guidance of spinal fusion surgery.24 An optical fiber that delivers laser light could either be isolated from or attached to the surgical tool.37 A standard clinical ultrasound probe would then be placed with acoustic coupling gel on the vertebra of interest. The purpose of this ultrasound probe is to receive the acoustic response generated by optical absorption within the blood-rich cancellous core, noting that it is possible for the resulting acoustic response to travel through the 244 μm to 1.75 mm-thick cortical bone layer covering the cancellous core.38–40

Therefore, in this application of photoacoustic imaging for surgical guidance, blood-rich regions can be targeted rather than avoided, based on the appearance of photoacoustic signals obtained with an optical fiber pointing toward the cortical bone rather than the cancellous core of the pedicle (i.e., the ideal entry point), as shown in Fig. 5. These subtle differences are achievable because the optical absorption of blood is orders of magnitude higher than that of bone, which permits a photoacoustic response from the blood-rich cancellous core. These results are additionally insightful for other applications that involve photoacoustic imaging of (or within) bony tissue.

FIG. 5.

Photoacoustic signals from cortical bone (left) and the cancellous core of the pedicle (right) and corresponding ultrasound and CT volumes confirming signal source locations. Photoacoustic imaging has the potential to target the blood-rich cancellous core to assist with pedicle screw insertion during spinal fusion surgeries. Adapted from J. Shubert and M. A. L. Bell, Phys. Med. Biol. 63(14), 144001 (2018). Copyright 2018 Author(s), licensed under a Creative Commons Attribution 3.0 Unported License.

D. Gynecological surgery

Approximately 52%–82% of iatrogenic injuries to the ureter occur during gynecologic surgery, often caused by clamping, clipping, or cauterizing the uterine arteries as they overlap the ureter,41 which is located within a few millimeters of the uterine artery (see Fig. 2). Ideally, this injury would be avoided altogether, and if it occurs, it would be best to notice it during the operation in order to address it immediately. Yet, 50%–70% of ureteral injuries are undetected during surgery,42 leading to multiple post-operative complications, including kidney failure and death. Photoacoustic imaging is one viable solution to detect hidden blood vessels in real time during minimally invasive gynecological surgeries and simultaneously differentiate these vessels from the ureter.43,44 Blood vessels may be distinguished from the ureter by introducing wavelength-dependent contrast agents into the urinary tract. The feasibility of this concept has thus far been explored for photoacoustic-guided hysterectomy.43–45 Results demonstrate that there is promise to use this technology to identify the uterine arteries and differentiate them from the ureter with the assistance of contrast agents such as FDA-approved methylene blue. This contrast agent may be administered intravenously during surgery and is required for ureter visualization due to the relatively low optical absorption of urine. Other possible gynecological applications of this technique include endometriosis resection and myomectomy (i.e., removal of uterine fibroids).

E. Robot-assisted biopsy guidance

Biopsies are widely performed procedures, implemented by inserting a hollow core needle to extract a small piece of abnormal or suspicious tissue for examination under a microscope to make a diagnosis. While the procedure is generally effective when guided by ultrasound, it is often difficult to localize needle tips in some patients (including obese and overweight patients) due to poor ultrasound image quality and difficulty differentiating a needle tip from a needle midsection. In addition, obesity increases the risk of complications from 4% (1 insertion) to 14% ( insertions).47

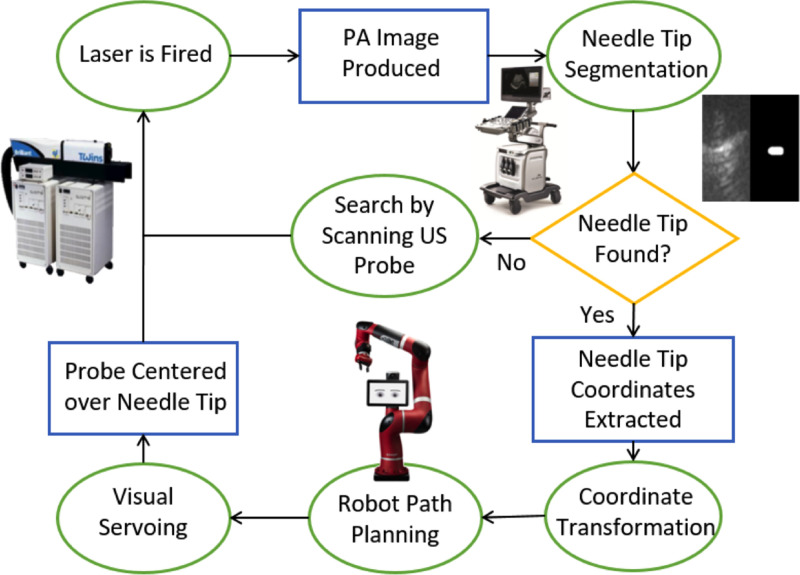

Targeting accuracy and localization of biopsy needle tips can be enhanced with photoacoustic imaging by inserting an optical fiber into the biopsy needle.7,8 This concept can be further enhanced by relieving operators of the burden to find photoacoustic signals of interest within the body. This relief was achieved by offering hands-free operation and commanding a robot to search, find, and stay centered on photoacoustic signals from the needle tip through a process known as visual servoing, which is vision based control, with the vision provided by photoacoustic images.48 This approach is illustrated in Fig. 6 and has the potential to impact multiple biopsy procedures, such as kidney, liver, and breast biopsies.
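The "find and stay centered" behavior described above can be sketched in a few lines of Python. This is a hedged illustration only and not the implementation from the cited work: the amplitude-weighted centroid segmentation, the proportional gain, and the names `segment_tip`, `servo_step`, and `synth_image` are assumptions introduced here, and the probe motion is simulated rather than sent to a robot.

```python
import numpy as np

def segment_tip(image, rel_threshold=0.5):
    """Amplitude-weighted centroid (row, col) of pixels above a fraction of the peak."""
    peak = image.max()
    if peak <= 0:
        return None
    rows, cols = np.nonzero(image >= rel_threshold * peak)
    w = image[rows, cols]
    return (rows * w).sum() / w.sum(), (cols * w).sum() / w.sum()

def servo_step(image, pixel_pitch_m, gain=0.5):
    """Proportional lateral probe correction [m] toward the segmented tip signal."""
    tip = segment_tip(image)
    if tip is None:
        return None  # no signal found: a real system would start a search pattern
    _, col = tip
    center_col = (image.shape[1] - 1) / 2.0
    return gain * (col - center_col) * pixel_pitch_m

def synth_image(offset_m, pixel_pitch_m, shape=(64, 65)):
    """Hypothetical photoacoustic image: a Gaussian blob at a lateral offset from center."""
    rows, cols = np.indices(shape)
    c0 = (shape[1] - 1) / 2.0 + offset_m / pixel_pitch_m
    return np.exp(-((rows - 32) ** 2 + (cols - c0) ** 2) / (2 * 2.0 ** 2))

# Simulated loop: the probe starts 5 mm away from the needle tip laterally.
pitch = 0.3e-3
tip_x, probe_x = 5.0e-3, 0.0
for _ in range(20):
    img = synth_image(tip_x - probe_x, pitch)
    probe_x += servo_step(img, pitch)
final_error = abs(tip_x - probe_x)
print(f"remaining lateral error: {final_error * 1e3:.3f} mm")
```

A gain below one trades convergence speed for robustness to segmentation errors. A real controller would also need to handle elevation motion, signal loss, and safety limits on probe force and speed.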

FIG. 6.

Robot-assisted photoacoustic imaging for hands-free biopsy. Reprinted with permission from J. Shubert and M. A. L. Bell, “Photoacoustic based visual servoing of needle tips to improve biopsy on obese patients,” in IEEE International Ultrasonics Symposium (IEEE, 2017). Copyright 2017 IEEE.

F. Cardiac catheter-based interventions

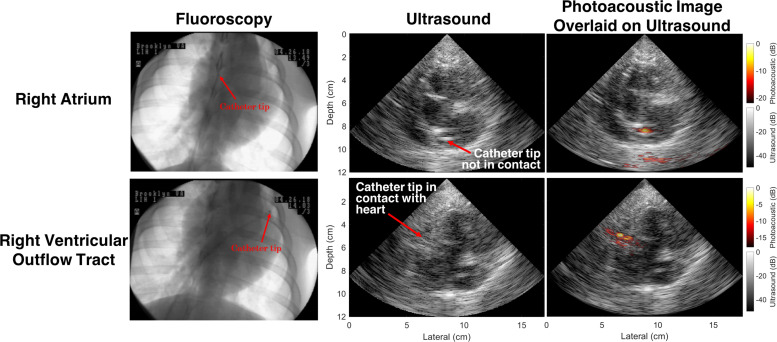

Cardiac radiofrequency ablation is the most effective treatment for atrial fibrillation (a heart disease claiming over 6.1 lives). Although guided by real-time, x-ray fluoroscopy, lesion formations are not well characterized intraoperatively, which often results in repeat surgeries. Several papers report options to differentiate ablated lesions from normal tissue using photoacoustic imaging,49 demonstrating convincing potential to identify differences in real time50 and in an ex vivo beating heart.51 This potential was later expanded by proposing a full end-to-end system for replacing (or significantly mitigating) fluoroscopy with a robotic photoacoustic imaging system.52 To realize this potential, a standard cardiac catheter would be modified to accommodate an optical fiber within its hollow core in order to generate photoacoustic signals from the catheter tip as it is guided toward the heart. These photoacoustic signals would be received by an external ultrasound probe. Using the same visual servoing procedures outlined in Sec. II E, a robot arm attached to the ultrasound probe can be commanded to maintain the catheter tip at the center of the photoacoustic image.52 Figure 7 demonstrates the benefit of relying on this method to identify catheter tips with more certainty than that provided by ultrasound images (and with the inclusion of depth information that is absent from fluoroscopy), particularly when the tip of the fiber-catheter pair is in contact with the myocardial tissue. In addition, the contrast differences between photoacoustic signals obtained with and without the catheter touching the endocardium can be used to confirm catheter-tissue contact prior to ablation.52

FIG. 7.

Photoacoustic signals within an in vivo heart, obtained with and without catheter-to-myocardium contact. Adapted from Graham et al., IEEE Trans. Med. Imaging 39(4), 1015–1029 (2020). Copyright 2020 Author(s), licensed under a Creative Commons Attribution 4.0 License.

G. Teleoperated robotic surgery

A range of surgeries (such as the gynecological procedures described in Sec. II D) are trending toward incorporating robotic assistance, due to the promise of decreased hospital stays, minimal blood loss, and shorter recovery periods. These robotic systems additionally have the potential to benefit from augmentation with photoacoustic imaging systems.53 In addition to blood vessel visualization during these minimally invasive robotic surgeries, one additional challenge that can be addressed with photoacoustic imaging is the rise of ureteral injuries with the introduction of these robots. For example, one study documented a 2.4% rate of ureter injury during pelvic surgeries performed before robotic assistance, compared with 11.4% after the implementation of the technology.54 With regard to robotic hysterectomies, the da Vinci® robot is the only option available for performing robotic hysterectomies, and hysterectomies are the most popular surgery for the da Vinci® robot. Therefore, it would be impactful to introduce this technology for hysterectomies.

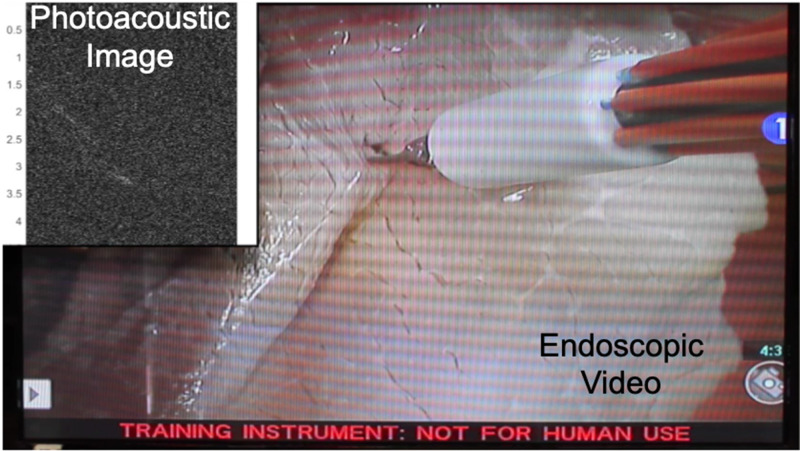

Toward this end, proof-of-concept experiments were performed in a mock operating room that contained a da Vinci® S robot, consisting of a master console (shown in Fig. 2), patient side manipulators that are teleoperated from the master console, and an endoscope to visualize the surgical field. A photoacoustic imaging system was positioned next to this surgical robot. To demonstrate initial feasibility,43 an experimental phantom was placed on an operating table in this mock operating room. The integrated photoacoustic imaging system consisted of an Alpinion ECUBE 12R ultrasound system connected to an Alpinion L3-8 linear transducer (which has a 5 MHz center frequency to allow deep acoustic penetration for the received photoacoustic sound waves) and a Phocus Mobile laser with a 1-to-7 fiber splitter attached to the output port of the laser. The seven output fibers of the light delivery system surrounded a da Vinci® curved scissor tool and were held in place with a custom-designed, 3D printed fiber holder.43 The da Vinci® scissor tool was held by one of the patient side manipulators of the da Vinci® S robot. An example view of the resulting photoacoustic image is shown in Fig. 8, synchronized with the motion of the customized da Vinci® scissor tool, as viewed through the master console. A corollary video demonstration is published with the corresponding journal publication on this topic.43 The view in Fig. 8 represents one possible presentation format for surgeons during an operation. In these feasibility studies, the surgical tool and attached light delivery system were teleoperated. However, taking a broader perspective of the possibilities for robotic-photoacoustic integration, either the ultrasound probe or the fiber (or both) can be controlled with automated, semi-automated, cooperative, or teleoperative control.
For example, Moradi et al.55 demonstrated the use of virtual fixtures to constrain ultrasound probe motion when implementing photoacoustic imaging with a da Vinci® research kit.56 These and other possibilities are not limited to the da Vinci® robot and may be implemented with a wide range of commercial or custom robots that offer the desired control capabilities.

FIG. 8.

Photoacoustic signals and the corresponding da Vinci® S endoscope video feed showing the surgeon’s view from the master console. Adapted from Allard et al., J. Med. Imaging 5(2), 021213 (2018). Copyright 2018 Author(s), licensed under a Creative Commons Attribution 3.0 Unported License.

III. CHALLENGES AND OPPORTUNITIES

A. Image quality

One of the major underlying and pervasive challenges with photoacoustic imaging approaches that rely on low-frequency ultrasound probes, which are ideal for deep acoustic penetration during surgery, is that these lower frequencies tend to produce relatively poor image quality (as opposed to the clearly differentiated vasculature that has historically been observed with high-frequency, microscopic photoacoustic techniques). Metrics to assess image quality should always be tied to the imaging task at hand, with common objective metrics for surgical guidance concerned with target visibility, sizing, and location accuracy, as defined by contrast, resolution, signal-to-noise ratio, and contrast-to-noise ratio. Considering the typical trade-off between these image quality metrics and the important advantage of offering deep acoustic penetration, low-frequency ultrasound probes are arguably the best candidates for surgical guidance with internal light delivery and external sound reception at large depths from the target location, provided that the desired specifications, image quality metrics, and sensitivity requirements are met. Therefore, methods to improve image quality would benefit from focusing on viable options that are compatible with the technical capabilities of low-frequency ultrasound probes.

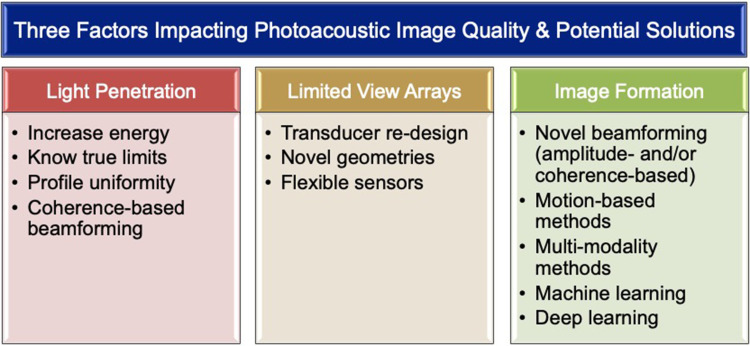

Three notable factors (excluding ultrasound transducer frequencies for the reasons stated above) that primarily impact photoacoustic image quality for surgical guidance tasks are summarized in Fig. 9: (1) limited light penetration, (2) “limited-view” linear/phased/curvilinear array ultrasound sensors (in comparison to ring arrays), and (3) image formation and beamforming models that do not consider multipath acoustic propagation. Figure 9 also includes a summary of some potential solutions, with details on possible methods to address the first two factors discussed in Secs. III B and III C, while a discussion of the third factor is the focus of this section. In particular, artifacts introduced by multiple acoustic pathways are common to both ultrasound and photoacoustic imaging, producing what is known as acoustic clutter,48,57 with larger illumination areas expected to produce more photoacoustic clutter. The simplest attempts to address acoustic clutter and related image quality challenges include post-processing to clean-up photoacoustic images and thresholding of these images prior to display. In addition, while research effort has been dedicated to addressing these challenges from an optics perspective,58 this challenge has primarily been framed from an acoustics perspective. Promising acoustic-based approaches include frequency based methods,59,60 mixed imaging modality methods,61–64 motion-based methods,64–66 and advanced beamforming techniques, such as adaptive beamforming67 or short-lag spatial coherence beamforming,10,68–71 which was recently implemented in real-time for photoacoustic-based visual servoing.72–74 With both simple and complex methods to improve image quality, it would be prudent to re-evaluate our methods to quantify image quality, as recently demonstrated with the relatively new metric of a generalized contrast-to-noise ratio75 applied to photoacoustic images.76
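To make the image quality metrics named above concrete, the following Python sketch computes contrast, signal-to-noise ratio, contrast-to-noise ratio, and the generalized contrast-to-noise ratio from two sets of pixel amplitudes. The formulas are one common set of definitions and are assumptions here, since the cited works may use slightly different forms; the function name `image_quality_metrics` and the synthetic regions are hypothetical. The generalized contrast-to-noise ratio is taken as one minus the overlap of the target and background amplitude histograms.

```python
import numpy as np

def image_quality_metrics(target, background, n_bins=256):
    """Contrast [dB], SNR, CNR, and gCNR for target and background pixel amplitudes."""
    target = np.asarray(target, dtype=float).ravel()
    background = np.asarray(background, dtype=float).ravel()
    mu_t, mu_b = target.mean(), background.mean()
    sd_t, sd_b = target.std(), background.std()

    # Histograms over a shared amplitude range so the bins line up.
    lo = min(target.min(), background.min())
    hi = max(target.max(), background.max())
    h_t, _ = np.histogram(target, bins=n_bins, range=(lo, hi))
    h_b, _ = np.histogram(background, bins=n_bins, range=(lo, hi))
    p_t = h_t / h_t.sum()
    p_b = h_b / h_b.sum()

    return {
        "contrast_db": 20.0 * np.log10(mu_t / mu_b),
        "snr": mu_t / sd_b,
        "cnr": abs(mu_t - mu_b) / np.sqrt(sd_t ** 2 + sd_b ** 2),
        "gcnr": 1.0 - np.minimum(p_t, p_b).sum(),  # 1 = fully separable, 0 = identical
    }

# Synthetic amplitudes: a bright target region and a dim background region.
target_px = np.linspace(10.0, 20.0, 500)
background_px = np.linspace(1.0, 2.0, 500)
separable = image_quality_metrics(target_px, background_px)
identical = image_quality_metrics(background_px, background_px)
print(separable)
print(identical["gcnr"])
```

Because the generalized metric depends only on histogram overlap, it is bounded between zero and one, which makes it easier to compare across beamformers and post-processing steps that change the dynamic range of the image.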

FIG. 9.

Three key factors impacting photoacoustic image quality for surgical guidance (top) and possible solutions to address challenges (bottom).

Another active area of recent interest and investigation to improve image quality is the integration of modern machine learning techniques with image formation.77–86 Specifically, deep learning alternatives to image formation have been used to replace either a subset of or the entire mathematical component of image formation with a well-trained model or a neural network. The use of advanced beamformers and the selection of deep neural networks for image formation should ideally be based on the specific task at hand. In addition, implementations of these methods with the same ultimate goal of improved image quality should ideally be as simple as possible, with increasing levels of complexity added only as absolutely necessary. This philosophy often results in a trade-off between image quality and real-time implementation speed, which are often inversely related.

Based on the perspective that deep learning has strong potential to be the latest frontier that optimizes the trade-off between image quality and speed, deep learning is particularly promising for image-guided surgery because unique acoustic signatures can be learned with attention to light delivery systems that are fashioned around surgical tool tips. For example, one option is to learn the unique shape-to-depth relationship of point-like photoacoustic sources from metallic surgical tool tips and nearby point-like structures in order to provide a deep learning-based replacement to common photoacoustic image formation steps.78,83 This approach has the potential to be extended to other structures with unique photoacoustic signatures.

B. Laser safety and energy requirements

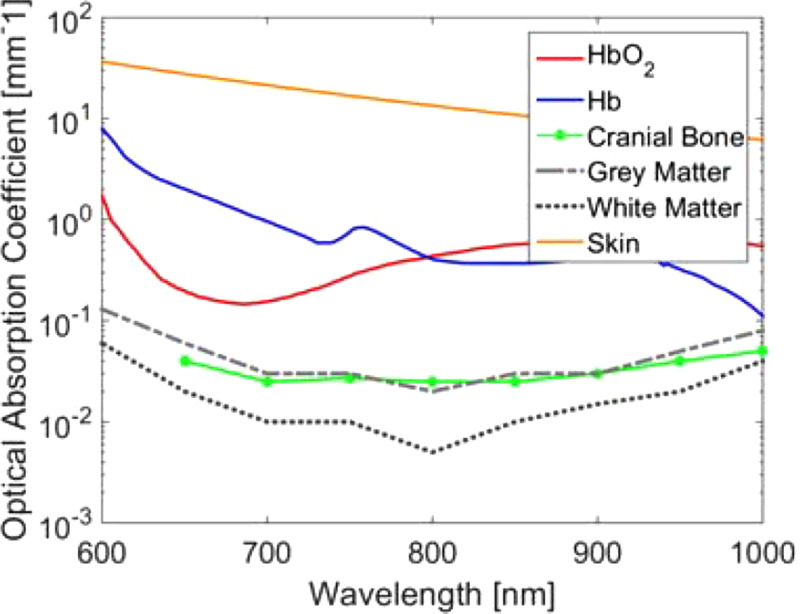

When implementing any photoacoustic-guided surgery or interventional application, it is critically important to consider the acceptable laser exposure for the surrounding tissue. If energy exposure is too great, tissue may coagulate or vaporize, which may not be desirable depending on the specific surgery. In general, laser radiation causes thermal effects depending on the wavelength, power, energy, beam diameter, and absorption spectrum of the tissue. Additional considerations include the volume of circulating blood, specific heat, thermal conductivity, non-homogeneity, and optical properties such as transmission, reflection, absorption, and scattering.87 The maximum permissible exposure (MPE) is defined by the American National Standards Institute as the level of laser radiation to which an unprotected person may be exposed without adverse biological changes in the eye or skin.88 Based on this definition, there is no standardized tissue-specific limit (e.g., for brain, prostate, liver, or heart tissue), and therefore, the photoacoustic community generally assumes that the MPE for skin is an acceptable baseline. While the community often acknowledges that this is a rather conservative limit for multiple reasons,8,9,89 the following text provides an additional perspective for brain tissues and neurosurgery that can be applied to many other tissues and surgical (or interventional) applications. Figure 10 demonstrates that brain tissue (i.e., white and gray matter) has a lower optical absorption than melanin and collagen, which are major structural components in skin.92,93 This difference indicates that the brain tissue is likely to absorb less energy compared to skin for any given applied energy. Additionally, the brain tissue has a specific heat of 3630 J/kg/°C, whereas skin has a specific heat of 3391 J/kg/°C,94 indicating that for an equal amount of absorbed energy, the temperature will be raised more in skin than in the brain tissue (assuming equal unit mass). 
Normal body temperature is approximately 37 °C with up to 1 °C variations. Brain damage occurs above 42 °C and skin damage occurs over 44 °C. Thus, the MPE for skin is a rather conservative value.

FIG. 10.

Optical absorption of blood, bone, brain tissues, and skin.90–93

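When imaging with lasers, the MPE depends on a variety of factors such as wavelength, $\lambda$, and exposure duration. For $\lambda = 700$–1050 nm and an exposure duration of $10^{-9}$ s (which is standard for photoacoustic imaging), the MPE equation for skin is88

$$\mathrm{MPE} = 20 \times 10^{2(\lambda - 700)/1000}\ \mathrm{mJ/cm^2} \quad (\lambda\ \text{in nm}). \tag{1}$$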

Considering that exposure is defined per 1 cm2 unit, MPE comparisons can be approached by analyzing a 1 cm2 area of skin. Taking the average thickness of skin to be 2 mm95 results in a hypothetical volume of 0.2 cm3. Given that the body is comprised largely of water (density = 1 g/cm3), the 0.2 cm3 volume is assumed to correspond to a mass of 0.2 g. The MPE at 760 nm [i.e., 26.4 mJ/cm2 based on Eq. (1)] can then be expressed as (26.4 mJ)/(0.2 g) and converted using specific heat values to the following temperature rises for both skin and brain:

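$$\Delta T_{\mathrm{skin}} = \frac{26.4\ \mathrm{mJ}}{0.2\ \mathrm{g}} \times \frac{1}{3391\ \mathrm{J\,kg^{-1}\,{}^{\circ}C^{-1}}} \approx 0.039\ {}^{\circ}\mathrm{C}, \tag{2}$$

$$\Delta T_{\mathrm{brain}} = \frac{26.4\ \mathrm{mJ}}{0.2\ \mathrm{g}} \times \frac{1}{3630\ \mathrm{J\,kg^{-1}\,{}^{\circ}C^{-1}}} \approx 0.036\ {}^{\circ}\mathrm{C}. \tag{3}$$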

Assuming that 100% of the applied energy is absorbed (which is not the case), the change in the temperature for brain would be lower than that of skin, and both new temperatures would be within 1 °C of standard body temperature. These calculations suggest that MPE is a very conservative value for the brain tissue, as the maximum exposure is expected to raise temperature by only a fraction of a degree, and furthermore, only mK temperature rises are required for photoacoustic imaging. Note that this analysis does not consider potential differences in fluence profiles, particularly that the peak temperature is expected to be larger where the light first emerges from the fiber, thereby giving rise to a larger peak temperature rise than the average, which highlights the importance of developing uniform light profiles for the proposed technologies.18 Similarly, small blood vessels could experience larger local temperature rises in the brain or the skin tissue (depending on the excitation wavelength), and this additional consideration is expected to similarly impact both tissue types. Therefore, the presented estimates are expected to be conservative regardless of the inclusion of this additional consideration, and this conservativeness often comes at the expense of poor signal-to-noise ratios, which is one of the metrics of poor image quality noted in Sec. III A. The ability to increase laser energies for specific tissues beyond the existing limits for skin enables the improvement of poor image quality and target differentiation caused by limited light penetration.9,20,52
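The arithmetic behind this comparison can be reproduced with a short Python sketch. It assumes that the skin MPE for nanosecond pulses in the 700–1050 nm band takes the form 20 × 10^(2(λ − 700)/1000) mJ/cm2 with λ in nm, which reproduces the 26.4 mJ/cm2 value quoted above for 760 nm. The function names are hypothetical, and the calculation keeps the same simplifications as the text: a 1 cm2 area, a 0.2 cm3 volume with the density of water, and complete absorption of the applied energy.

```python
def skin_mpe_mj_per_cm2(wavelength_nm):
    """Assumed skin MPE [mJ/cm^2] for nanosecond pulses, valid for 700-1050 nm."""
    if not 700 <= wavelength_nm <= 1050:
        raise ValueError("formula assumed valid only for 700-1050 nm")
    return 20.0 * 10 ** (2 * (wavelength_nm - 700) / 1000)

def temperature_rise_c(energy_mj, mass_g, specific_heat_j_per_kg_c):
    """Temperature rise [deg C] if all of the energy is absorbed by the given mass."""
    energy_j = energy_mj * 1e-3
    mass_kg = mass_g * 1e-3
    return energy_j / (mass_kg * specific_heat_j_per_kg_c)

area_cm2 = 1.0
volume_cm3 = 0.2                       # hypothetical tissue volume from the text
mass_g = volume_cm3 * 1.0              # density of water, 1 g/cm^3

mpe = skin_mpe_mj_per_cm2(760)         # energy per cm^2 at 760 nm
energy_mj = mpe * area_cm2

dT_skin = temperature_rise_c(energy_mj, mass_g, 3391.0)   # specific heat of skin
dT_brain = temperature_rise_c(energy_mj, mass_g, 3630.0)  # specific heat of brain

print(f"MPE at 760 nm: {mpe:.1f} mJ/cm^2")
print(f"skin rise:  {dT_skin:.3f} deg C")
print(f"brain rise: {dT_brain:.3f} deg C")
```

Both estimated rises are a few hundredths of a degree, with the brain value slightly lower than the skin value, which is consistent with the conclusion that the skin MPE is a conservative baseline under these assumptions.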

In addition to defining the maximum energy limits, it is also important to define the minimum energy requirements. Although the minimum required energy is expected to partially depend on the overall imaging system design (and this energy limit will likely be different for different photoacoustic system configurations), the minimum required energy can potentially be generalized based on the imaging environment. For example, imaging through bone is expected to require higher minimum energies than imaging through soft tissues, with all else being equal or at least similar (e.g., receiver sensitivity, beamforming method, image post-processing steps). This information will be useful to finalize novel system design and performance requirements, as the minimum required energy is one component of the overall system design that determines which type of light source is truly needed (e.g., traditional Q-switched lasers, alternative options discussed in Sec. III C, new light sources to be determined, introduced, or invented based on the knowledge of true input energy limits for a specific surgical or interventional application).

In particular, when navigating to specific targets using a combination of photoacoustic imaging and robotics (e.g., visual servoing of photoacoustic signals from a needle tip to improve the biopsy of obese patients46), one critical step of this procedure is the segmentation algorithm used to define the tip location coordinates for robot path planning, as summarized in Fig. 6. Ideally, the minimum energy required for fiber tip localization would be used. Understanding this minimum required energy for the robot to perform its visual servoing task would ensure that minimum energies are used without the empirical testing period that has preceded previous in vivo animal experiments,52 which is not ideal for operation within the human body. If this understanding of minimum energy requirements can be developed based on the theoretical knowledge of signal and image quality limitations, then the associated theoretical equations can replace empirical testing with evidence-based predictions. This information about minimum input energy requirements would then be implemented without requiring the time- and resource-intensive experiments currently needed to test multiple possible system configurations prior to initiating an interventional procedure.
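To make the role of segmentation concrete, the following Python sketch localizes a bright target with a relative amplitude threshold followed by an amplitude-weighted centroid. It is a deliberately simple stand-in, not the segmentation algorithm summarized in Fig. 6; the function name `localize_tip`, the 50% threshold, and the synthetic test frame are all hypothetical choices made for illustration.

```python
import numpy as np


def localize_tip(image: np.ndarray, rel_threshold: float = 0.5):
    """Return the (row, col) amplitude-weighted centroid of pixels at or above
    rel_threshold * max(image), or None when the image has no positive signal."""
    peak = float(image.max())
    if peak <= 0.0:
        return None
    mask = image >= rel_threshold * peak
    weights = np.where(mask, image, 0.0)   # keep amplitudes only inside the mask
    rows, cols = np.indices(image.shape)
    total = weights.sum()
    return float((rows * weights).sum() / total), float((cols * weights).sum() / total)


# Synthetic frame (hypothetical): Gaussian "tip" signal at row 40, column 25, plus weak noise
rng = np.random.default_rng(0)
rr, cc = np.indices((64, 64))
frame = np.exp(-((rr - 40) ** 2 + (cc - 25) ** 2) / (2 * 2.0 ** 2))
frame += 0.05 * rng.random((64, 64))
tip = localize_tip(frame)
print(tip)
```

In a sketch like this, lowering the laser energy corresponds to shrinking the signal relative to the noise floor until thresholding no longer isolates the tip, which is one way to think about a task-specific minimum energy.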

C. Alternative imaging and sensing technology

Ideal acoustic sensors for guiding surgery would be small, compact, and flexible. These properties are generally uncharacteristic of current clinical and pre-clinical sensors that are used for photoacoustic imaging in other applications. For example, demonstrations of photoacoustic image guidance of the in vivo liver20 would benefit from a reduction in the ultrasound probe size to avoid interference with the tight surgical workspace (with an expected trade-off between the array size and the lateral resolution). Photoacoustic-based surgical guidance during neurosurgery would similarly benefit from a smaller, customized nasal ultrasound probe with a water-filled, balloon tip for acoustic coupling.14 Another promising and upcoming technology is the utilization of flexible ultrasound sensors96,97 for surgical guidance. These sensors have the potential to conform to the various shapes and sizes of multiple organs during surgery, thereby making the sensors as unobtrusive as possible. Flexible sensors can also be contorted to shapes that partially address the limited view-related image quality challenges noted in Sec. III A. These flexible sensors pose a new set of beamforming challenges as well, considering that the array geometry is expected to change frequently with this newfound flexibility. Wireless ultrasound signal transmission98 is yet another area that could be used to improve photoacoustic image guidance.

Alternative light sources are one additional innovative direction for the future of photoacoustic imaging to guide surgeries. There has been a growing interest in pulsed-laser diodes99,100 and light emitting diodes (LEDs)101,102 as light sources for photoacoustic imaging. Each alternative has benefits, such as lower power, smaller size, greater portability, and higher frame rates. Incidentally, one of the primary benefits of these alternative light sources is also one of their greatest challenges. In particular, the lower energies of these light delivery methods produce low signal-to-noise ratios that are not suitable for deep optical penetration. Signal averaging is therefore often required to improve image quality, which reduces the frame rates that are critical for real-time guidance. This limitation can be overcome with coherence-based beamforming if target location information without amplitude information will suffice,103 such as in visual servoing tasks.74 In addition, multiple LEDs are currently required to deliver signal quality that is on par with larger laser systems, resulting in bulkier light delivery systems that are not suitable for surgical guidance or attachment to the tips of surgical tools.
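The trade-off between signal averaging and frame rate can be quantified with a short Python sketch. It assumes uncorrelated, zero-mean noise between pulses, in which case averaging N acquisitions improves the amplitude signal-to-noise ratio by a factor of √N while dividing the frame rate by N. The 4 kHz pulse repetition frequency is a hypothetical value chosen only for illustration.

```python
import math


def averaging_tradeoff(prf_hz: float, n_averages: int):
    """Return (effective frame rate in Hz, SNR gain in dB) when n_averages
    acquisitions are averaged, assuming uncorrelated zero-mean noise between pulses."""
    if n_averages < 1:
        raise ValueError("n_averages must be at least 1")
    frame_rate_hz = prf_hz / n_averages
    snr_gain_db = 20.0 * math.log10(math.sqrt(n_averages))
    return frame_rate_hz, snr_gain_db


# Hypothetical 4 kHz source (assumed value, not taken from the text)
for n in (1, 16, 64, 256):
    rate, gain = averaging_tradeoff(4000.0, n)
    print(f"N={n:4d}: {rate:7.1f} frames/s, SNR gain {gain:5.1f} dB")
```

Under these assumptions, every 6 dB of additional signal-to-noise ratio costs a factor of four in frame rate, which illustrates why approaches that avoid averaging are attractive for real-time guidance.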

D. Clinical translation

The ultimate goal of many of the efforts described within this Perspective is to translate the described technology for the benefit of patients. In addition to the associated translational challenges and possible future directions described above and summarized in Fig. 9 (e.g., image quality improvement solutions, laser safety and energy requirements, light delivery, and sensing technology considerations), the potential for clinical adoption presents an additional set of considerations (summarized in Table I). These considerations include the development of viable phantoms for technique standardization among hospitals, training of surgeons and their assistants to interpret potentially confusing photoacoustic images, and controlling the increased flexibility introduced by separating the optical delivery from the acoustic reception to ensure that the target of interest is truly identified and lies sufficiently within the tomographic imaging plane as intended. This last detail will minimize image misinterpretation from out-of-plane photoacoustic signals.43

TABLE I.

Summary of important requirements and considerations for surgical and interventional translation of promising photoacoustic imaging methods and applications.

| Requirements | Considerations |
|---|---|
| Small/portable systems | Image quality |
| | Reduced input energies |
| | Reduced lateral resolution |
| | Possibilities for wireless systems |
| | Overall miniaturization |
| Real-time images | Transmit pulse repetition frequencies |
| | Complexity of image formation software |
| | Image fidelity to detected structures |
| Usable form factors | System components tailored to anatomy (e.g., nose, eyes) |
| | Integration with medical robots |
| Repeatable training methods | Phantom standardization across hospitals |
| | Imaging plane navigation |
| | Image interpretation |
| Reasonable cost/complexity | Balance with technological benefits |
| Regulatory clearance | Sterilization protocols |
| | FDA approval |
| | Large vs small animal testing |
| | Role of human cadaver testing |
| | Patient testing |

Novel form factors that do not currently exist must also be considered, such as dual-probe systems tailored for simultaneous ocular reception of transcranial signals from both eyes of a patient.14 The development of photoacoustic-based, patient-specific, pre-operative path planning simulation methods is another important consideration. In addition, completely wireless photoacoustic imaging systems and overall system miniaturization are futuristic technological avenues to explore to support clinical adoption within operating rooms and interventional suites. Clinical adoption additionally requires overall ease of use, as well as system costs and complexity levels that do not outweigh technological benefits, which is particularly necessary when guidance tasks are extended to incorporate robotic assistance.

These technological challenges exist alongside complementary regulatory challenges, such as the requirement to develop dedicated sterilization protocols, the process to achieve regulatory device approval (such as FDA approval in the United States), and the need to complete pilot patient testing prior to commercialization. One intermediary step toward progress in these areas is the use of human cadavers and large animal models to “test-drive” the proposed technology. These valuable components of the technology development pipeline have proven to be more insightful than studies with small animal models (e.g., rodents) or ex vivo tissues. In particular, small animals often do not have the organ sizes and relative anatomical scale necessary to translate findings to humans, while in vivo studies with large animals can reveal trends that are simply not present in ex vivo and experimental phantom data (e.g., photoacoustic signal differences based on subtle positioning of the light source relative to a major hepatic blood vessel in an in vivo liver20). Similarly, new insights for photoacoustic image guidance of neurosurgery were gained by testing associated ideas with human cadaver heads and simulations.14 These paired simulation and human cadaver experiments validated the use of patient-specific simulations of acoustic wave propagation as a potential method to predict the optimal placement of acoustic receivers, resulting in exploration of the eye as an acceptable acoustic window for photoacoustic-guided surgeries of the skull base.14 These types of counter-intuitive findings are expected to be major factors that will propel the field toward translation of the proposed surgical guidance technology for widespread use by surgeons and for the benefit of patients worldwide.

IV. SUMMARY AND OUTLOOK

There is a wide range of possible directions to pursue with regard to photoacoustic image guidance of minimally invasive surgeries and interventional procedures. Augmented surgical tools range from drills and scissors to needles and catheters. The light source can also be operated independently of surgical tools. The light source or acoustic sensor may additionally be integrated into robotic systems to enhance the overall surgical experience. Opportunities for additional advancement include image quality optimizations, redefinition of laser safety (particularly with regard to identifying tissue-specific safety limits), novel wireless and flexible sensor designs, and alternative light sources to the common Q-switched lasers. One additional promising area of opportunity that was not discussed (due to the focus on novel surgical and interventional applications and associated hardware and software innovations) is the development of new contrast agents for surgical guidance.104,105 Overcoming the outstanding challenges associated with the totality of these opportunities would enable widespread clinical and surgical utility. With its excellent ability to provide optical absorption contrast, acoustic penetration depths that are common to traditional ultrasound imaging, and multiple possibilities for clinical, surgical, and interventional benefit, photoacoustic imaging has much potential to become a standard technological assistant in operating rooms and interventional suites in the years ahead.

ACKNOWLEDGMENTS

This work is supported by the Alfred P. Sloan Research Fellowship in Physics, National Science Foundation (NSF) CAREER Award No. ECCS-1751522, NSF SCH Award No. NSF IIS-2014088 (pending), and National Institutes of Health (NIH) (No. R00-EB018994). Previous funding from Cutting Edge Surgical, Inc. supported the spinal fusion feasibility studies, and many of the undergraduates who worked on various aspects of this project were supported by the NSF REU in Computational Sensing and Medical Robotics (Grant Nos. EEC-1852155, EEC-1460674, and EEC-1004782). The Carnegie Center for Surgical Innovation is also acknowledged for providing much of the infrastructure support for in vivo and cadaver studies at the Johns Hopkins Hospital. Finally, I thank my past and current graduate and undergraduate students and mentees (Michelle Graham, Alycen Wiacek, Anastasia Ostrowski, Alicia Dagle, Magaret Allard, Kelley Kempski, Eduardo Gonzalez, Mardava Gubbi, Justina Huang, Joshua Shubert, Derek Allman, Blackberrie Eddins, Neeraj Gandhi, Bria Goodson, Jasmin Palmer, Huayu Hou, and Jinxin Dong) for their outstanding work, dedication, and assistance with bringing many of these ideas to fruition, and I also thank numerous clinical collaborators (J. Chrispin, K. Wang, F. Creighton, J. He, H. Wu, F. Assis, and S. Beck) for generously lending their time, expertise, and assistance with expanding the realm of surgical and interventional possibilities for our proposed photoacoustic technology.

DATA AVAILABILITY

Data sharing is not applicable to this article as no new data were created or analyzed in this study.

REFERENCES

- 1. Xu M. and Wang L. V., “Photoacoustic imaging in biomedicine,” Rev. Sci. Instrum. 77(4), 041101 (2006). 10.1063/1.2195024

- 2. Bouchard R., Sahin O., and Emelianov S., “Ultrasound-guided photoacoustic imaging: Current state and future development,” IEEE Trans. Ultrason. Ferroelectr. Freq. Control 61(3), 450–466 (2014). 10.1109/TUFFC.2014.2930

- 3. Beard P., “Biomedical photoacoustic imaging,” Interface Focus 1(4), 602–631 (2011). 10.1098/rsfs.2011.0028

- 4. Wang B., Karpiouk A., Yeager D., Amirian J., Litovsky S., Smalling R., and Emelianov S., “Intravascular photoacoustic imaging of lipid in atherosclerotic plaques in the presence of luminal blood,” Opt. Lett. 37(7), 1244–1246 (2012). 10.1364/OL.37.001244

- 5. Kim C., Erpelding T. N., Maslov K. I., Jankovic L., Akers W. J., Song L., Achilefu S., Margenthaler J. A., Pashley M. D., and Wang L. V., “Handheld array-based photoacoustic probe for guiding needle biopsy of sentinel lymph nodes,” J. Biomed. Opt. 15(4), 046010 (2010). 10.1117/1.3469829

- 6. Song K. H., Stein E. W., Margenthaler J. A., and Wang L. V., “Noninvasive photoacoustic identification of sentinel lymph nodes containing methylene blue in vivo in a rat model,” J. Biomed. Opt. 13(5), 054033 (2008). 10.1117/1.2976427

- 7. Piras D., Grijsen C., Schutte P., Steenbergen W., and Manohar S., “Photoacoustic needle: Minimally invasive guidance to biopsy,” J. Biomed. Opt. 18(7), 070502 (2013). 10.1117/1.JBO.18.7.070502

- 8. Xia W., Nikitichev D. I., Mari J. M., West S. J., Pratt R., David A. L., Ourselin S., Beard P. C., and Desjardins A. E., “Performance characteristics of an interventional multispectral photoacoustic imaging system for guiding minimally invasive procedures,” J. Biomed. Opt. 20(8), 086005 (2015). 10.1117/1.JBO.20.8.086005

- 9. Bell M. A. L., Kuo N. P., Song D. Y., Kang J. U., and Boctor E. M., “In vivo visualization of prostate brachytherapy seeds with photoacoustic imaging,” J. Biomed. Opt. 19(12), 126011 (2014). 10.1117/1.JBO.19.12.126011

- 10. Bell M. A. L., Kuo N., Song D. Y., and Boctor E. M., “Short-lag spatial coherence beamforming of photoacoustic images for enhanced visualization of prostate brachytherapy seeds,” Biomed. Opt. Express 4(10), 1964–1977 (2013). 10.1364/BOE.4.001964

- 11. Karthikesh M. S. and Yang X., “Photoacoustic image-guided interventions,” Exp. Biol. Med. 245(4), 330–341 (2020). 10.1177/1535370219889323

- 12. Steinberg I., Huland D. M., Vermesh O., Frostig H. E., Tummers W. S., and Gambhir S. S., “Photoacoustic clinical imaging,” Photoacoustics 14, 77 (2019). 10.1016/j.pacs.2019.05.001

- 13. Zhao T., Desjardins A. E., Ourselin S., Vercauteren T., and Xia W., “Minimally invasive photoacoustic imaging: Current status and future perspectives,” Photoacoustics 16, 100146 (2019). 10.1016/j.pacs.2019.100146

- 14. Graham M., Huang J., Creighton F., and Bell M. A. L., “Simulations and human cadaver head studies to identify optimal acoustic receiver locations for minimally invasive photoacoustic-guided neurosurgery,” Photoacoustics 19, 100183 (2020). 10.1016/j.pacs.2020.100183

- 15. Raymond J., Hardy J., Czepko R., and Roy D., “Arterial injuries in transsphenoidal surgery for pituitary adenoma; the role of angiography and endovascular treatment,” Am. J. Neuroradiology 18(4), 655–665 (1997).

- 16. Valentine R. and Wormald P.-J., “Carotid artery injury after endonasal surgery,” Otolaryngol. Clin. North Am. 44(5), 1059–1079 (2011). 10.1016/j.otc.2011.06.009

- 17. Perry M. O., Snyder W. H., and Thal E. R., “Carotid artery injuries caused by blunt trauma,” Ann. Surg. 192(1), 74–77 (1980). 10.1097/00000658-198007000-00013

- 18. Eddins B. and Bell M. A. L., “Design of a multifiber light delivery system for photoacoustic-guided surgery,” J. Biomed. Opt. 22(4), 041011 (2017). 10.1117/1.JBO.22.4.041011

- 19. Bell M. A. L., Ostrowski A. K., Li K., Kazanzides P., and Boctor E. M., “Localization of transcranial targets for photoacoustic-guided endonasal surgeries,” Photoacoustics 3(2), 78–87 (2015). 10.1016/j.pacs.2015.05.002

- 20. Kempski K. M., Wiacek A., Palmer J., Graham M., González E., Goodson B., Allman D., Hou H., Beck S., He J. et al., “In vivo demonstration of photoacoustic-guided liver surgery,” Proc. SPIE 10878, 108782T (2019). 10.1117/12.2510500

- 21. Rajarathinam G., Kannan D. G., Vimalraj V., Amudhan A., Rajendran S., Jyotibasu D., Balachandar T. G., Jeswanth S., Ravichandran P., and Surendran R., “Post pancreaticoduodenectomy haemorrhage: Outcome prediction based on new ISGPS clinical severity grading,” HPB 10(5), 363–370 (2008). 10.1080/13651820802247086

- 22. Wente M. N., Veit J. A., Bassi C., Dervenis C., Fingerhut A., Gouma D. J., Izbicki J. R., Neoptolemos J. P., Padbury R. T., Sarr M. G., Yeo C. J., and Büchler M. W., “Postpancreatectomy hemorrhage (PPH)–An International Study Group of Pancreatic Surgery (ISGPS) definition,” Surgery 142(1), 20–25 (2007). 10.1016/j.surg.2007.02.001

- 23. Romano F., Garancini M., Uggeri F., Degrate L., Nespoli L., Gianotti L., Nespoli A., and Uggeri F., “Bleeding in hepatic surgery: Sorting through methods to prevent it,” HPB Surg. 2012, 169351. 10.1155/2012/169351

- 24. Shubert J. and Bell M. A. L., “Photoacoustic imaging of a human vertebra: Implications for guiding spinal fusion surgeries,” Phys. Med. Biol. 63(14), 144001 (2018). 10.1088/1361-6560/aacdd3

- 25. Weiss A. and Elixhauser A., “Trends in operating room procedures in U.S. hospitals, 2001–2011: Statistical brief# 171,” in Healthcare Cost and Utilization Project (HCUP) Statistical Briefs (Agency for Healthcare Research and Quality, 2014).

- 26. Rajaee S. S., Bae H. W., Kanim L. E., and Delamarter R. B., “Spinal fusion in the United States: Analysis of trends from 1998 to 2008,” Spine 37(1), 67–76 (2012). 10.1097/BRS.0b013e31820cccfb

- 27. Lehman, Jr. R. A., Lenke L. G., Keeler K. A., Kim Y. J., and Cheh G., “Computed tomography evaluation of pedicle screws placed in the pediatric deformed spine over an 8-year period,” Spine 32(24), 2679–2684 (2007). 10.1097/BRS.0b013e31815a7f13

- 28. Samdani A. F., Ranade A., Sciubba D. M., Cahill P. J., Antonacci M. D., Clements D. H., and Betz R. R., “Accuracy of free-hand placement of thoracic pedicle screws in adolescent idiopathic scoliosis: How much of a difference does surgeon experience make?,” Eur. Spine J. 19(1), 91–95 (2010). 10.1007/s00586-009-1183-6

- 29. Bergeson R. K., Schwend R. M., DeLucia T., Silva S. R., Smith J. E., and Avilucea F. R., “How accurately do novice surgeons place thoracic pedicle screws with the free hand technique?,” Spine 33(15), E501–E507 (2008). 10.1097/BRS.0b013e31817b61af

- 30. Abul-Kasim K. and Ohlin A., “The rate of screw misplacement in segmental pedicle screw fixation in adolescent idiopathic scoliosis: The effect of learning and cumulative experience,” Acta Orthop. 82(1), 50–55 (2011). 10.3109/17453674.2010.548032

- 31. Gertzbein S. D. and Robbins S. E., “Accuracy of pedicular screw placement in vivo,” Spine 15(1), 11–14 (1990). 10.1097/00007632-199001000-00004

- 32. Castro W. H., Halm H., Jerosch J., Malms J., Steinbeck J., and Blasius S., “Accuracy of pedicle screw placement in lumbar vertebrae,” Spine 21(11), 1320–1324 (1996). 10.1097/00007632-199606010-00008

- 33. Laine T., Mäkitalo K., Schlenzka D., Tallroth K., Poussa M., and Alho A., “Accuracy of pedicle screw insertion: A prospective CT study in 30 low back patients,” Eur. Spine J. 6(6), 402–405 (1997). 10.1007/BF01834068

- 34. Bjarke F. C., Stender E. H., Laursen M., Thomsen K., and Bünger C., “Long-term functional outcome of pedicle screw instrumentation as a support for posterolateral spinal fusion: Randomized clinical study with a 5-year follow-up,” Spine 27(12), 1269–1277 (2002). 10.1097/00007632-200206150-00006

- 35. Lee L. A., “Risk factors associated with ischemic optic neuropathy after spinal fusion surgery,” Anesthesiology 116(1), 15–24 (2012).

- 36. Deyo R. A., Ciol M. A., Cherkin D. C., Loeser J. D., and Bigos S. J., “Lumbar spinal fusion. A cohort study of complications, reoperations, and resource use in the Medicare population,” Spine 18(11), 1463–1470 (1993). 10.1097/00007632-199318110-00010

- 37. Shubert J. and Bell M. A. L., “A novel drill design for photoacoustic guided surgeries,” Proc. SPIE 10494, 104940J (2018). 10.1117/12.2291247

- 38. Ritzel H., Amling M., Pösl M., Hahn M., and Delling G., “The thickness of human vertebral cortical bone and its changes in aging and osteoporosis: A histomorphometric analysis of the complete spinal column from thirty-seven autopsy specimens,” J. Bone Miner. Res. 12(1), 89–95 (1997). 10.1359/jbmr.1997.12.1.89

- 39. Defino H. L. A. and Vendrame J. R. B., “Morphometric study of lumbar vertebrae’s pedicle,” Acta Ortop. 15(4), 183–186 (2007). 10.1590/S1413-78522007000400001

- 40. Edwards W. T., Zheng Y., Ferrara L. A., and Yuan H. A., “Structural features and thickness of the vertebral cortex in the thoracolumbar spine,” Spine 26(2), 218–225 (2001). 10.1097/00007632-200101150-00019

- 41. Bannister L. H. and Williams P. L., Gray’s Anatomy: The Anatomical Basis of Medicine and Surgery (Churchill Livingstone, 1995).

- 42. Delacroix S. E. and Winters J., “Urinary tract injuries: Recognition and management,” Clin. Colon Rectal Surg. 23(02), 104–112 (2010). 10.1055/s-0030-1254297

- 43. Allard M., Shubert J., and Bell M. A. L., “Feasibility of photoacoustic-guided teleoperated hysterectomies,” J. Med. Imaging 5(2), 021213 (2018). 10.1117/1.JMI.5.2.021213

- 44. Wiacek A., Wang K. C., and Bell M. A. L., “Techniques to distinguish the ureter from the uterine artery in photoacoustic-guided hysterectomies,” Proc. SPIE 10878, 108785K (2019). 10.1117/12.2510716

- 45. Wiacek A., Wang K. C., Wu H., and Bell M. A. L., “Dual-wavelength photoacoustic imaging for guidance of hysterectomy procedures,” Proc. SPIE 11229, 112291D (2020). 10.1117/12.2544906

- 46. Shubert J. and Bell M. A. L., “Photoacoustic based visual servoing of needle tips to improve biopsy on obese patients,” in IEEE International Ultrasonics Symposium (IEEE, 2017).

- 47. Perrault J., McGill D. B., Ott B. J., and Taylor W. F., “Liver biopsy: Complications in 1000 inpatients and outpatients,” Gastroenterology 74(1), 103–106 (1978). 10.1016/0016-5085(78)90364-5

- 48. Bell M. A. L. and Shubert J., “Photoacoustic-based visual servoing of a needle tip,” Sci. Rep. 8(1), 15519 (2018). 10.1038/s41598-018-33931-9

- 49. Dana N., Di Biase L., Natale A., Emelianov S., and Bouchard R., “In vitro photoacoustic visualization of myocardial ablation lesions,” Heart Rhythm 11(1), 150–157 (2014). 10.1016/j.hrthm.2013.09.071

- 50. Pang G. A., Bay E., Deán-Ben X., and Razansky D., “Three-dimensional optoacoustic monitoring of lesion formation in real time during radiofrequency catheter ablation,” J. Cardiovasc. Electrophysiol. 26(3), 339–345 (2015). 10.1111/jce.12584

- 51. Iskander-Rizk S., Kruizinga P., Beurskens R., Springeling G., Knops P., Mastik F., de Groot N., van der Steen A. F., and van Soest G., “Photoacoustic imaging of RF ablation lesion formation in an ex-vivo passive beating porcine heart model (Conference Presentation),” Proc. SPIE 10878, 108782N (2019). 10.1117/12.2506871

- 52. Graham M., Assis F., Allman D., Wiacek A., Gonzalez E., Gubbi M., Dong J., Hou H., Beck S., Chrispin J., and Bell M. A. L., “In vivo demonstration of photoacoustic image guidance and robotic visual servoing for cardiac catheter-based interventions,” IEEE Trans. Med. Imaging 39(4), 1015–1029 (2020). 10.1109/TMI.2019.2939568

- 53. Gandhi N., Allard M., Kim S., Kazanzides P., and Bell M. A. L., “Photoacoustic-based approach to surgical guidance performed with and without a da Vinci robot,” J. Biomed. Opt. 22(12), 121606 (2017). 10.1117/1.JBO.22.12.121606

- 54. Rahimi S., Jeppson P. C., Gattoc L., Westermann L., Cichowski S., Raker C., LeBrun E. W., and Sung V., “Comparison of perioperative complications by route of hysterectomy performed for benign conditions,” Female Pelvic Med. Reconstr. Surg. 22(5), 364–368 (2016). 10.1097/SPV.0000000000000292

- 55. Moradi H., Tang S., and Salcudean S. E., “Toward robot-assisted photoacoustic imaging: Implementation using the Da Vinci research kit and virtual fixtures,” IEEE Rob. Autom. Lett. 4(2), 1807–1814 (2019). 10.1109/LRA.2019.2897168

- 56. Kazanzides P., Chen Z., Deguet A., Fischer G. S., Taylor R. H., and DiMaio S. P., “An open-source research kit for the da Vinci® surgical system,” in 2014 IEEE International Conference on Robotics and Automation (ICRA) (IEEE, 2014), pp. 6434–6439.

- 57. Lediju M. A., Pihl M. J., Dahl J. J., and Trahey G. E., “Quantitative assessment of the magnitude, impact and spatial extent of ultrasonic clutter,” Ultrason. Imaging 30(3), 151–168 (2008). 10.1177/016173460803000302

- 58. Nguyen H. N. Y., Hussain A., and Steenbergen W., “Reflection artifact identification in photoacoustic imaging using multi-wavelength excitation,” Biomed. Opt. Express 9(10), 4613–4630 (2018). 10.1364/BOE.9.004613

- 59. Bell M. A. L., Song D. Y., and Boctor E. M., “Coherence-based photoacoustic imaging of brachytherapy seeds implanted in a canine prostate,” Proc. SPIE 9040, 90400Q (2014). 10.1117/12.2043901

- 60. Nan H., Chou T.-C., and Arbabian A., “Segmentation and artifact removal in microwave-induced thermoacoustic imaging,” in Engineering in Medicine and Biology Society (EMBC), 2014 36th Annual International Conference of the IEEE (IEEE, 2014), pp. 4747–4750.

- 61. Singh M. K. A. and Steenbergen W., “Photoacoustic-guided focused ultrasound (PAFUSion) for identifying reflection artifacts in photoacoustic imaging,” Photoacoustics 3(4), 123–131 (2015). 10.1016/j.pacs.2015.09.001

- 62. Singh M. K. A., Jaeger M., Frenz M., and Steenbergen W., “In vivo demonstration of reflection artifact reduction in photoacoustic imaging using synthetic aperture photoacoustic-guided focused ultrasound (PAFUSion),” Biomed. Opt. Express 7(8), 2955–2972 (2016). 10.1364/BOE.7.002955

- 63. Schwab H.-M., Beckmann M. F., and Schmitz G., “Photoacoustic clutter reduction by inversion of a linear scatter model using plane wave ultrasound measurements,” Biomed. Opt. Express 7(4), 1468–1478 (2016). 10.1364/BOE.7.001468

- 64. Jaeger M., Bamber J. C., and Frenz M., “Clutter elimination for deep clinical optoacoustic imaging using localised vibration tagging (LOVIT),” Photoacoustics 1(2), 19–29 (2013). 10.1016/j.pacs.2013.07.002

- 65. Jaeger M., Siegenthaler L., Kitz M., and Frenz M., “Reduction of background in optoacoustic image sequences obtained under tissue deformation,” J. Biomed. Opt. 14(5), 054011 (2009). 10.1117/1.3227038

- 66. Lediju M. A., Pihl M. J., Hsu S. J., Dahl J. J., Gallippi C. M., and Trahey G. E., “A motion-based approach to abdominal clutter reduction,” IEEE Trans. Ultrason. Ferroelectr. Freq. Control 56(11), 2437–2449 (2009). 10.1109/TUFFc.2009.1331

- 67. Park S., Karpiouk A. B., Aglyamov S. R., and Emelianov S. Y., “Adaptive beamforming for photoacoustic imaging using linear array transducer,” in 2008 IEEE Ultrasonics Symposium (IEEE, 2008), pp. 1088–1091.

- 68. Bell M., Boctor E., and Kazanzides P., “Method and system for transcranial photoacoustic imaging for guiding skull base surgeries,” U.S. patent 10,531,828 (14 January 2020).

- 69. Pourebrahimi B., Yoon S., Dopsa D., and Kolios M. C., “Improving the quality of photoacoustic images using the short-lag spatial coherence imaging technique,” Proc. SPIE 8581, 85813Y (2013). 10.1117/12.2005061

- 70. Alles E. J., Jaeger M., and Bamber J. C., “Photoacoustic clutter reduction using short-lag spatial coherence weighted imaging,” in 2014 IEEE International Ultrasonics Symposium (IEEE, 2014), pp. 41–44.

- 71.Graham M. and Bell M. A. L., “Photoacoustic spatial coherence theory and applications to coherence-based image contrast and resolution,” IEEE Transactions on Ultrasonics, Ferroelectrics, and Frequency Control (IEEE, 2020). 10.1109/TUFFC.2020.2999343 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 72.Gonzalez E., Gubbi M. R., and Bell M. A. L., “GPU implementation of coherence-based photoacoustic beamforming for autonomous visual servoing,” in 2019 IEEE International Ultrasonics Symposium (IUS) (IEEE, 2019), pp. 24–27.

- 73.Gonzalez E. and Bell M. A. L., “A GPU approach to real-time coherence-based photoacoustic imaging and its application to photoacoustic visual servoing,” Proc. SPIE 11240, 1124054 (2020). 10.1117/12.2546023 [DOI] [Google Scholar]

- 74.Gonzalez E. and Bell M. A. L., “GPU implementation of photoacoustic short-lag spatial coherence imaging for improved image-guided interventions,” J. Biomed. Opt. 25, 077002 (2020). 10.1117/1.JBO.25.7.077002 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 75.Rodriguez-Molares A., Rindal O. M. H., D’hooge J., Måsøy S.-E., Austeng A., Bell M. A. L., and Torp H., “The generalized contrast-to-noise ratio: A formal definition for lesion detectability,” IEEE Trans. Ultrason. Ferroelectr. Freq. Control 67(4), 745–759 (2019). 10.1109/TUFFC.2019.2956855

- 76.Kempski K., Graham M., Gubbi M., Palmer T., and Bell M. A. L., “Application of the generalized contrast-to-noise ratio to assess photoacoustic image quality,” Biomed. Opt. Express 11(7), 3684–3698 (2020). 10.1364/BOE.391026

- 77.Reiter A. and Bell M. A. L., “A machine learning approach to identifying point source locations in photoacoustic data,” Proc. SPIE 10064, 100643J (2017). 10.1117/12.2255098

- 78.Allman D., Reiter A., and Bell M. A. L., “Photoacoustic source detection and reflection artifact removal enabled by deep learning,” in IEEE Transactions on Medical Imaging (IEEE, 2018).

- 79.Antholzer S., Haltmeier M., and Schwab J., “Deep learning for photoacoustic tomography from sparse data,” Inverse Probl. Sci. Eng. 27(7), 987–1005 (2019). 10.1080/17415977.2018.1518444

- 80.Davoudi N., Deán-Ben X. L., and Razansky D., “Deep learning optoacoustic tomography with sparse data,” Nat. Mach. Intell. 1(10), 453–460 (2019). 10.1038/s42256-019-0095-3

- 81.Hauptmann A., Lucka F., Betcke M., Huynh N., Adler J., Cox B., Beard P., Ourselin S., and Arridge S., “Model-based learning for accelerated, limited-view 3-D photoacoustic tomography,” IEEE Trans. Med. Imaging 37(6), 1382–1393 (2018). 10.1109/TMI.2018.2820382

- 82.Waibel D., Gröhl J., Isensee F., Kirchner T., Maier-Hein K., and Maier-Hein L., “Reconstruction of initial pressure from limited view photoacoustic images using deep learning,” Proc. SPIE 10494, 104942S (2018). 10.1117/12.2288353

- 83.Johnstonbaugh K., Agrawal S., Durairaj D. A., Fadden C., Dangi A., Karri S. P. K., and Kothapalli S.-R., “A deep learning approach to photoacoustic wavefront localization in deep-tissue medium,” IEEE Trans. Ultrason. Ferroelectr. Freq. Control (published online). 10.1109/TUFFC.2020.2964698

- 84.Guan S., Khan A., Sikdar S., and Chitnis P., “Fully dense UNet for 2-D sparse photoacoustic tomography artifact removal,” IEEE J. Biomed. Health Inform. 24, 568 (2020). 10.1109/JBHI.2019.2912935

- 85.Vu T., Li M., Humayun H., Zhou Y., and Yao J., “A generative adversarial network for artifact removal in photoacoustic computed tomography with a linear-array transducer,” Exp. Biol. Med. 245(7), 597–605 (2020). 10.1177/1535370220914285

- 86.Hariri A., Alipour K., Mantri Y., Schulze J. P., and Jokerst J. V., “Deep learning improves contrast in low-fluence photoacoustic imaging,” Biomed. Opt. Express 11(6), 3360–3373 (2020). 10.1364/BOE.395683

- 87.Laser Institute of America, “American National Standards for safe use of lasers in health care,” ANSI Z136.3-2011, pp. 45–46, 2012.

- 88.Laser Institute of America, “American National Standards for safe use of lasers,” ANSI Z136.1-2014, 2014.

- 89.Su J. L., Bouchard R. R., Karpiouk A. B., Hazle J. D., and Emelianov S. Y., “Photoacoustic imaging of prostate brachytherapy seeds,” Biomed. Opt. Express 2(8), 2243–2254 (2011). 10.1364/BOE.2.002243

- 90.Prahl S., Tabulated Molar Extinction Coefficient for Hemoglobin in Water (Oregon Medical Laser Center, 1998).

- 91.Genina E. A., Bashkatov A. N., and Tuchin V. V., “Optical clearing of human cranial bone by administration of immersion agents,” Proc. SPIE 6163, 616311 (2006). 10.1117/12.697308

- 92.Van der Zee P., Essenpreis M., and Delpy D. T., “Optical properties of brain tissue,” Proc. SPIE 1888, 454–465 (1993). 10.1117/12.154665

- 93.Jacques S., Optical Absorption of Melanin: Melanosome Absorption Coefficient (Oregon Medical Laser Center, 1998).

- 94.Hasgall P., Di Gennaro F., Baumgartner C., Neufeld E., Gosselin M., Payne D., Klingenböck A., and Kuster N., “ITIS database for thermal and electromagnetic parameters of biological tissues,” 2(6) (2015); see www.itis.ethz.ch/database.

- 95.Akkus O., Oguz A., Uzunlulu M., and Kizilgul M., “Evaluation of skin and subcutaneous adipose tissue thickness for optimal insulin injection,” J. Diabetes Metab. 3(8), 1000216 (2012). 10.4172/2155-6156.1000216

- 96.Hu H., Zhu X., Wang C., Zhang L., Li X., Lee S., Huang Z., Chen R., Chen Z., Wang C. et al., “Stretchable ultrasonic transducer arrays for three-dimensional imaging on complex surfaces,” Sci. Adv. 4(3), eaar3979 (2018). 10.1126/sciadv.aar3979

- 97.Wang Z., Xue Q.-T., Chen Y.-Q., Shu Y., Tian H., Yang Y., Xie D., Luo J.-W., and Ren T.-L., “A flexible ultrasound transducer array with micro-machined bulk PZT,” Sensors 15(2), 2538–2547 (2015). 10.3390/s150202538

- 98.Pelissier L., Hansen T., and Dickie K., “System and method for connecting and controlling wireless ultrasound imaging system from electronic device,” U.S. patent 9,763,644 (19 September 2017).

- 99.Hariri A., Fatima A., Mohammadian N., Mahmoodkalayeh S., Ansari M. A., Bely N., and Avanaki M. R., “Development of low-cost photoacoustic imaging systems using very low-energy pulsed laser diodes,” J. Biomed. Opt. 22(7), 075001 (2017). 10.1117/1.JBO.22.7.075001

- 100.Upputuri P. K. and Pramanik M., “Fast photoacoustic imaging systems using pulsed laser diodes: A review,” Biomed. Eng. Lett. 8(2), 167–181 (2018). 10.1007/s13534-018-0060-9

- 101.Maneas E., Aughwane R., Huynh N., Xia W., Ansari R., Kuniyil Ajith Singh M., Hutchinson J. C., Sebire N. J., Arthurs O. J., Deprest J. et al., “Photoacoustic imaging of the human placental vasculature,” J. Biophotonics 13(4), e201900167 (2020). 10.1002/jbio.201900167

- 102.Singh M. K. A., LED-Based Photoacoustic Imaging (Springer, 2020). 10.1007/978-981-15-3984-8

- 103.Bell M. A. L., Guo X., Kang H. J., and Boctor E., “Improved contrast in laser-diode-based photoacoustic images with short-lag spatial coherence beamforming,” in 2014 IEEE International Ultrasonics Symposium (IEEE, 2014), pp. 37–40.

- 104.Thawani J. P., Amirshaghaghi A., Yan L., Stein J. M., Liu J., and Tsourkas A., “Photoacoustic-guided surgery with indocyanine green-coated superparamagnetic iron oxide nanoparticle clusters,” Small 13(37), 1701300 (2017). 10.1002/smll.201701300

- 105.Moore C. and Jokerst J. V., “Strategies for image-guided therapy, surgery, and drug delivery using photoacoustic imaging,” Theranostics 9(6), 1550 (2019). 10.7150/thno.32362

Data Availability Statement

Data sharing is not applicable to this article as no new data were created or analyzed in this study.