Abstract

Background

Sustained return of spontaneous circulation (ROSC) is the most proximal and direct assessment of acute resuscitation quality in hospitals. However, validated tools to benchmark hospital rates for ROSC after in‐hospital cardiac arrest currently do not exist.

Methods and Results

Within the national Get With The Guidelines‐Resuscitation registry, we identified 82 729 patients admitted from 335 hospitals from 2014 to 2017 with in‐hospital cardiac arrest. Using hierarchical logistic regression, we derived and validated a model for ROSC, defined as spontaneous and sustained ROSC for ≥20 consecutive minutes, from 24 pre‐arrest variables and calculated rates of risk‐standardized ROSC for in‐hospital cardiac arrest for each hospital. Overall, rates of ROSC were 72.0% and 72.7% for the derivation and validation cohorts, respectively. The model in the derivation cohort had moderate discrimination (C‐statistic 0.643) and excellent calibration (R² of 0.996). Seventeen variables were associated with ROSC, and a parsimonious model retained 10 variables. Before risk adjustment, the median hospital ROSC rate was 70.5% (interquartile range: 64.7–76.9%; range: 33.3–89.6%). After adjustment, the distribution of risk‐standardized ROSC rates was narrower: median of 71.9% (interquartile range: 68.2–76.4%; range: 42.2–84.6%). Overall, 56 (16.7%) of 335 hospitals had at least a 10% absolute change in percentile rank after risk standardization: 27 (8.0%) with a ≥10% negative percentile change and 29 (8.7%) with a ≥10% positive percentile change.

Conclusions

We have derived and validated a model to risk‐standardize hospital rates of ROSC for in‐hospital cardiac arrest. Use of this model can support efforts to compare acute resuscitation survival across hospitals to facilitate quality improvement.

Keywords: cardiac arrest, risk model, survival

Subject Categories: Cardiopulmonary Arrest, Quality and Outcomes

Clinical Perspective

What Is New?

Validated tools to benchmark hospital rates of return of spontaneous circulation after in‐hospital cardiac arrest currently do not exist.

In this article, we have developed and validated a model for hospital rates of risk‐standardized return of spontaneous circulation, with moderate discrimination and excellent calibration.

What Are the Clinical Implications?

Creating such a tool for risk‐standardizing hospital rates of return of spontaneous circulation will support efforts to compare acute resuscitation survival for in‐hospital cardiac arrest across hospitals.

Use of this model can also provide hospitals a mechanism to more directly assess the impact of their quality improvement interventions to deliver higher quality cardiopulmonary resuscitation.

Nonstandard Abbreviations and Acronyms

GWTG Get With The Guidelines

ROSC return of spontaneous circulation

SD standardized difference

In‐hospital cardiac arrest is common and is associated with low overall survival of 20% to 25%.1, 2 Hospital initiatives to improve outcomes have primarily focused on the acute resuscitation period: minimizing interruptions to and maximizing the quality of chest compressions, and ensuring timely delivery of defibrillation and vasoactive medications. Device companies, in turn, have introduced technologies to better monitor cardiopulmonary resuscitation quality and deliver more consistent cardiopulmonary resuscitation.

Unlike process‐of‐care measures for resuscitation (eg, timely defibrillation), which do not require risk adjustment because their performance should be independent of patient characteristics, survival outcomes require risk standardization to account for differences in patient case‐mix across sites and so permit less biased comparisons across hospitals.3 Although risk‐standardized rates of survival to discharge exist for in‐hospital cardiac arrest,2, 4 they may not reflect the direct impact of quality improvement initiatives focused on the intra‐arrest period, because overall survival is affected by both acute and postresuscitation care. This distinction is particularly important for quality improvement interventions that aim to deliver higher quality cardiopulmonary resuscitation, for which return of spontaneous circulation (ROSC) is the most proximal patient outcome. A validated model to risk‐standardize hospital rates of acute resuscitation survival for in‐hospital cardiac arrest, defined as ROSC sustained for at least 20 consecutive minutes,5 would therefore give hospitals a tool both to assess the impact of their quality improvement initiatives in acute resuscitation care and to benchmark performance across sites. No such model for risk‐standardized rates of ROSC currently exists, and one could also help identify best practices for acute resuscitation care at high‐performing hospitals.

To address this gap, we derived and validated risk‐standardized hospital rates of sustained ROSC for in‐hospital cardiac arrest within Get With The Guidelines (GWTG)‐Resuscitation, the largest repository of data on hospitalized patients with in‐hospital cardiac arrest. This model can support ongoing quality assessment and improvement efforts for acute resuscitation care.

Methods

Data Availability

The data that support the findings of this study are available from the corresponding author upon request and approval by the GWTG‐Resuscitation registry.

Study Population

GWTG‐Resuscitation, formerly known as the National Registry of Cardiopulmonary Resuscitation, is a large, prospective, national quality‐improvement registry of in‐hospital cardiac arrest and is sponsored by the American Heart Association. Its design has been described in detail previously.6 In brief, trained quality‐improvement hospital personnel enroll all patients with a cardiac arrest (defined as the absence of a palpable central pulse, apnea, and unresponsiveness) treated with resuscitation efforts and without do‐not‐resuscitate orders. Cases are identified by multiple methods, including centralized collection of cardiac arrest flow sheets, reviews of hospital paging system logs, and routine checks of code carts, pharmacy tracer drug records, and hospital billing charges for resuscitation medications.6 The registry uses standardized Utstein‐style definitions for all patient variables and outcomes to facilitate uniform reporting across hospitals.5, 7 In addition, data accuracy is ensured by rigorous certification of hospital staff and use of standardized software with data checks for completeness and accuracy.6

From 2000 to 2017, a total of 253 472 patients 18 years of age or older with an index in‐hospital cardiac arrest were enrolled in GWTG‐Resuscitation. We excluded 54 patients with missing data on the study outcome of ROSC as well as 61 patients with missing data on location of in‐hospital cardiac arrest, which was needed for risk adjustment. Because rates of ROSC may have improved over time, we restricted our study population to the 82 729 patients from 335 hospitals between 2014 and 2017 to ensure that our risk models were based on a contemporary cohort of patients.

Study Outcome and Variables

The primary outcome of interest was ROSC, which was defined as the spontaneous and sustained return of a pulse for at least 20 consecutive minutes.5 A total of 24 baseline characteristics were screened as candidate predictors for the study outcome. These included age (categorized in 10‐year intervals of <50, 50–59, 60–69, 70–79, and ≥80), sex, location of arrest (categorized as intensive care, monitored unit, nonmonitored unit, emergency room, procedural/surgical area, and other), and initial cardiac arrest rhythm (ventricular fibrillation, pulseless ventricular tachycardia, asystole, pulseless electrical activity). In addition, the following comorbidities or medical conditions present prior to cardiac arrest were evaluated for the model: heart failure, myocardial infarction, or diabetes mellitus; renal, hepatic, or respiratory insufficiency; baseline evidence of motor, cognitive, or functional deficits (CNS depression); acute stroke; acute nonstroke neurologic disorder; pneumonia; hypotension; sepsis; major trauma; metabolic or electrolyte abnormality; and metastatic or hematologic malignancy. Finally, we considered for model inclusion several critical‐care interventions (mechanical ventilation, intravenous vasopressor support, or dialysis) already in place at the time of cardiac arrest. Race was not considered for model inclusion, as prior studies have found that racial differences in survival after in‐hospital cardiac arrest are partly mediated by differences in hospital care quality for blacks and whites3, 8; otherwise, adjustment for race would, in effect, allow for lower rates of ROSC for nonwhite patients.

Model Development and Validation

Using SAS, within each study hospital, we randomly assigned two thirds (67%) of the study population to the derivation cohort and one third (33%) to the validation cohort. We confirmed that a similar proportion of patients from each calendar year was represented in the derivation and validation cohorts. Baseline differences between patients in the derivation and validation cohorts were evaluated using χ2 tests for categorical variables and Student t tests for continuous variables. Because the large sample size makes even trivial differences statistically significant with these tests, we assessed differences between the 2 cohorts by computing standardized differences for each covariate. Based on prior work, a standardized difference (SD) of >10% was used to define a significant difference.9
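The standardized difference used above compares the cohorts on a scale that does not grow with sample size. The sketch below uses the common pooled‐standard‐deviation formulas for a binary and a continuous covariate; the function names are hypothetical, and the text does not state which formula the authors applied to multicategory variables such as race, so those are not covered here.

```python
import math


def std_diff_binary(events_a: int, n_a: int, events_b: int, n_b: int) -> float:
    """Standardized difference (as a fraction) for a binary covariate."""
    p_a, p_b = events_a / n_a, events_b / n_b
    # Pooled SD of the two Bernoulli proportions
    pooled_sd = math.sqrt((p_a * (1 - p_a) + p_b * (1 - p_b)) / 2)
    return (p_a - p_b) / pooled_sd


def std_diff_continuous(mean_a: float, sd_a: float, mean_b: float, sd_b: float) -> float:
    """Standardized difference (as a fraction) for a continuous covariate."""
    pooled_sd = math.sqrt((sd_a ** 2 + sd_b ** 2) / 2)
    return (mean_a - mean_b) / pooled_sd


def is_meaningful(d: float, threshold: float = 0.10) -> bool:
    """Apply the >10% rule to a standardized difference."""
    return abs(d) > threshold


# Septicemia counts from Table 1 (derivation vs validation cohort)
d_sepsis = std_diff_binary(10298, 55601, 5252, 27128)
print(f"{abs(d_sepsis) * 100:.1f}%")  # about 2.1%, well below the 10% threshold
```

Applied to the septicemia counts in Table 1, this reproduces the reported value of about 2.1%, even though a χ2 test on cohorts of this size could flag such a gap as statistically significant.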

Within the derivation sample, multivariable models were constructed to identify significant patient‐level predictors of ROSC. Because our primary objective was to derive risk‐standardized rates of ROSC for each hospital, which requires accounting for clustering of observations within hospitals, we used hierarchical logistic regression models for our analyses.10 These models estimate the log‐odds of ROSC as a function of demographic and clinical variables (both fixed effects) and a random effect for each hospital, which allowed us to assess hospital variation in risk‐standardized rates of ROSC after accounting for patient case‐mix.
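As a sketch of the model structure described above, a standard random‐intercept logistic formulation is shown below. The notation is illustrative, and the normal distribution for the hospital intercepts is the conventional assumption rather than something stated explicitly in the text.

$$
\operatorname{logit}\Pr(Y_{ij}=1) = \alpha_j + \mathbf{x}_{ij}^{\top}\boldsymbol{\beta}, \qquad \alpha_j \sim \mathcal{N}(\mu, \tau^2)
$$

Here $Y_{ij}$ indicates sustained ROSC for patient $i$ at hospital $j$, $\mathbf{x}_{ij}$ holds the patient‐level predictors (fixed effects) with coefficients $\boldsymbol{\beta}$, $\alpha_j$ is hospital $j$'s random intercept, $\mu$ is the average intercept, and $\tau^2$ is the between‐hospital variance.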

We considered for model inclusion the candidate variables described in the Study Outcome and Variables section. Multicollinearity between covariates was assessed for each variable before inclusion.11 To ensure parsimony and retain only variables that provided incremental prognostic value, we used the approximation of full model methodology for model reduction.12 The contribution of each significant model predictor was ranked, and the variables contributing least to the model were eliminated sequentially. This process was repeated until eliminating any further variable would have caused a >5% loss in model prediction compared with the initial full model.
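A minimal sketch of approximation‐of‐full‐model reduction follows. It assumes the usual formulation, in which the full model's linear predictor is approximated by ordinary least squares on subsets of the predictors, and that "model prediction" is measured by the R² of that approximation, so the 0.95 cutoff mirrors the 5% loss rule. For simplicity each column is treated as one variable; a real implementation would drop the dummy columns of a categorical predictor (eg, initial rhythm) together. Function names are hypothetical.

```python
import numpy as np


def approx_r2(X: np.ndarray, lp: np.ndarray, cols: list) -> float:
    """R^2 from an OLS fit of the full-model linear predictor on X[:, cols]."""
    design = np.column_stack([np.ones(len(lp)), X[:, cols]])
    coef, *_ = np.linalg.lstsq(design, lp, rcond=None)
    resid = lp - design @ coef
    ss_tot = np.sum((lp - lp.mean()) ** 2)
    return 1.0 - np.sum(resid ** 2) / ss_tot


def approximate_full_model(X: np.ndarray, lp: np.ndarray, names: list,
                           min_r2: float = 0.95) -> list:
    """Backward elimination: drop the variable whose removal costs the least,
    stopping before the approximation falls below min_r2 of the full model."""
    kept = list(range(X.shape[1]))
    while len(kept) > 1:
        # R^2 that would remain after dropping each remaining variable in turn
        trials = [(approx_r2(X, lp, [c for c in kept if c != j]), j) for j in kept]
        best_r2, drop = max(trials)
        if best_r2 < min_r2:
            break  # any further removal would lose more than 5%
        kept.remove(drop)
    return [names[c] for c in kept]
```

In practice `lp` would be the linear predictor from the fitted full hierarchical model and `X` the design matrix of candidate predictors.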

Model discrimination was assessed with the C‐statistic, and model validation was performed in the remaining one third of the study cohort by examining observed versus predicted plots. Upon validation of the model, we pooled patients from the derivation and validation cohorts and reconstructed a final hierarchical regression model to derive estimates from the entire study sample for risk‐standardization.
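The C‐statistic can be read as a concordance probability: the chance that a randomly chosen patient who achieved ROSC was assigned a higher predicted probability than a randomly chosen patient who did not. The sketch below computes it directly from that definition (function name hypothetical). The pairwise loop is fine for illustration but slow for tens of thousands of patients, where an equivalent rank‐based (Mann–Whitney) computation would normally be used.

```python
def c_statistic(outcomes, probabilities):
    """Concordance probability between patients with and without ROSC.

    Ties in predicted probability count as one half.
    """
    events = [p for y, p in zip(outcomes, probabilities) if y == 1]
    non_events = [p for y, p in zip(outcomes, probabilities) if y == 0]
    if not events or not non_events:
        raise ValueError("need at least one patient with and one without ROSC")
    score = 0.0
    for pe in events:
        for pn in non_events:
            if pe > pn:
                score += 1.0
            elif pe == pn:
                score += 0.5
    return score / (len(events) * len(non_events))


print(c_statistic([1, 0, 1, 0], [0.9, 0.1, 0.4, 0.6]))  # 0.75
```

For validation, the same function would be applied to validation‐cohort outcomes and the probabilities predicted from the derivation‐cohort coefficients.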

Risk‐Standardized ROSC Rates at Hospitals

Using the hospital‐specific estimates (ie, random intercepts) from the hierarchical models, we then calculated risk‐standardized rates of ROSC for each hospital by multiplying the registry's unadjusted ROSC rate by the ratio of the hospital's predicted‐to‐expected ROSC rate. We used the ratio of predicted‐to‐expected outcomes (described below) rather than the ratio of observed‐to‐expected outcomes to avoid analytical issues that have been described for the latter approach.13, 14, 15 Specifically, because a hospital's random intercept is shrunk more strongly toward the overall average when the hospital contributes few cases, our approach ensured that all hospitals, including those with relatively small case volumes, would have appropriate risk standardization of their ROSC rates.

For these calculations, the expected number of patients with ROSC at a hospital is the number of patients expected to achieve ROSC if that hospital's patients were treated at a “reference” hospital (ie, one with the average hospital‐level intercept across all hospitals in GWTG‐Resuscitation). It was determined by regressing ROSC on patients’ risk factors and characteristics across all hospitals in the sample, applying the estimated regression coefficients to the characteristics of the patients observed at a given hospital, and summing the resulting probabilities of ROSC. In effect, the expected rate is a form of indirect standardization. In contrast, the predicted number is the number of patients predicted to achieve ROSC at that specific hospital. It is calculated in the same way as the expected number, except that the hospital's own random‐effect intercept is used in place of the average intercept. The risk‐standardized rate of ROSC was then calculated as the ratio of the predicted to the expected rate of ROSC, multiplied by the unadjusted rate for the entire study sample.
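The predicted‐to‐expected calculation above can be sketched in a few lines. This assumes that the fixed‐effect linear predictor (x′β, without intercept) is already available for each patient at the hospital and that the hospital's random intercept and the average intercept come from the fitted hierarchical model; all names are hypothetical.

```python
import math


def inv_logit(x: float) -> float:
    """Convert log-odds to a probability."""
    return 1.0 / (1.0 + math.exp(-x))


def risk_standardized_rate(fixed_lps, hospital_intercept, reference_intercept, overall_rate):
    """Risk-standardized ROSC rate for one hospital.

    fixed_lps: x'beta for each of the hospital's patients (no intercept)
    hospital_intercept: the hospital's estimated random intercept
    reference_intercept: the average intercept across all hospitals
    overall_rate: unadjusted ROSC rate for the entire study sample
    """
    # Predicted: patients with ROSC at this hospital, using its own intercept
    predicted = sum(inv_logit(hospital_intercept + lp) for lp in fixed_lps)
    # Expected: the same patients at a "reference" (average) hospital
    expected = sum(inv_logit(reference_intercept + lp) for lp in fixed_lps)
    return overall_rate * predicted / expected
```

When a hospital's intercept equals the average, the ratio is 1 and its risk‐standardized rate equals the overall rate; a hospital whose intercept lies above the average is moved above the overall rate, and vice versa, regardless of its case‐mix.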

The effects of risk standardization on unadjusted hospital rates of ROSC were then illustrated with descriptive plots. In addition, we examined the absolute change (either positive or negative) in percentile rank for each hospital after risk standardization. This approach overcomes a limitation of examining only the proportion of hospitals reclassified out of the top quintile: some hospitals may be reclassified with only a 1% decrease in percentile rank (eg, from the 80th to the 79th percentile, moving from the top quintile to the fourth quintile), whereas others would require up to a 20% decrease in percentile rank to be reclassified (eg, a hospital with an unadjusted rank at the 99th percentile). Lastly, we assessed the extent of site‐level variation in rates of ROSC by calculating a hospital‐level median odds ratio. Derived from the hierarchical model, this statistic is the median odds ratio of ROSC for 2 patients with identical case‐mix treated at 2 randomly selected hospitals, comparing the hospital with the higher propensity for ROSC with the one with the lower propensity.
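Both hospital‐level summaries described above can be sketched briefly. Two assumptions apply: percentile rank is defined here as the share of the other hospitals with a strictly lower rate (the text does not give its exact definition), and the median odds ratio uses the standard formula for a random‐intercept logistic model, MOR = exp(√(2τ²) × Φ⁻¹(0.75)), where τ² is the between‐hospital variance of the intercepts. The variance 0.1089 in the usage line is illustrative only, chosen because it yields an MOR of about 1.37; the study does not report τ². Function names are hypothetical.

```python
import math
from statistics import NormalDist


def percentile_ranks(rates):
    """Percentile rank (0-100) of each hospital: share of the other hospitals
    with a strictly lower rate. Requires at least 2 hospitals."""
    n = len(rates)
    return [100.0 * sum(other < r for other in rates) / (n - 1) for r in rates]


def count_rank_shifts(unadjusted, standardized, threshold=10.0):
    """Count hospitals whose percentile rank rises or falls by >= threshold."""
    before = percentile_ranks(unadjusted)
    after = percentile_ranks(standardized)
    up = sum(1 for b, a in zip(before, after) if a - b >= threshold)
    down = sum(1 for b, a in zip(before, after) if b - a >= threshold)
    return up, down


def median_odds_ratio(tau2):
    """MOR for a random-intercept logistic model with intercept variance tau2."""
    return math.exp(math.sqrt(2.0 * tau2) * NormalDist().inv_cdf(0.75))


print(count_rank_shifts([0.60, 0.70, 0.80], [0.80, 0.70, 0.60]))  # (1, 1)
print(round(median_odds_ratio(0.1089), 2))  # 1.37
```

An MOR of 1 would mean no between‐hospital variation; larger values indicate that where a patient is treated matters more for the odds of ROSC.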

All study analyses were performed with SAS 9.2 (SAS Institute, Cary, NC) and R (version 2.10.0).16 Dr Chan had full access to the data and takes responsibility for its integrity. All authors have read and agree to the manuscript as written. The institutional review board of the Mid America Heart Institute waived the requirement for informed consent, and the American Heart Association approved the final manuscript draft.

Results

Of 82 729 patients in the study cohort, 55 601 (67%) were randomly selected for the derivation cohort and 27 128 (33%) for the validation cohort. Baseline characteristics of the patients in the derivation and validation cohorts were similar (Table 1). The mean patient age in the overall cohort was 65.3±15.5 years, 59% were male, and 23% were black. Nearly 83% of patients had a nonshockable cardiac arrest rhythm of asystole or pulseless electrical activity, and nearly half were in an intensive care unit at the time of cardiac arrest. Respiratory insufficiency and renal insufficiency were the most prevalent comorbidities, while one quarter of patients were hypotensive and approximately one quarter were receiving mechanical ventilation at the time of cardiac arrest.

Table 1.

Characteristics of the Derivation and Validation Cohorts

| Characteristic | Derivation Cohort (n=55 601) | Validation Cohort (n=27 128) | Standardized Difference, %* |
|---|---|---|---|
| Demographics | | | |
| Age (y), mean±SD | 65.2±15.6 | 65.3±15.5 | 0.5 |
| Age category (y) | | | 1.6 |
| 18 to <50 | 8303 (14.9%) | 3997 (14.7%) | |
| 50–59 | 9434 (17.0%) | 4726 (17.4%) | |
| 60–69 | 14 276 (25.7%) | 6830 (25.2%) | |
| 70–79 | 13 299 (23.9%) | 6526 (24.1%) | |
| ≥80 | 10 289 (18.5%) | 5049 (18.6%) | |
| Male sex | 32 517 (58.5%) | 15 891 (58.6%) | 0.2 |
| Race | | | 1.6 |
| White | 37 658 (67.7%) | 18 382 (67.8%) | |
| Black | 12 658 (22.8%) | 6282 (23.2%) | |
| Other | 1370 (2.5%) | 626 (2.3%) | |
| Unknown | 3915 (7.0%) | 1838 (6.8%) | |
| Characteristics of arrest | | | |
| Cardiac arrest rhythm | | | 1.9 |
| Asystole | 14 511 (26.1%) | 7193 (26.5%) | |
| Pulseless electrical activity | 31 575 (56.8%) | 15 323 (56.5%) | |
| Ventricular fibrillation | 5273 (9.5%) | 2645 (9.8%) | |
| Pulseless ventricular tachycardia | 4242 (7.6%) | 1967 (7.3%) | |
| Location of cardiac arrest | | | 1.4 |
| Intensive care unit | 27 060 (48.7%) | 13 320 (49.1%) | |
| Monitored unit | 8396 (15.1%) | 4054 (14.9%) | |
| Nonmonitored unit | 8221 (14.8%) | 4066 (15.0%) | |
| Emergency room | 6600 (11.9%) | 3151 (11.6%) | |
| Procedural or surgical area | 4340 (7.8%) | 2065 (7.6%) | |
| Other | 984 (1.8%) | 472 (1.7%) | |
| Pre‐existing conditions | | | |
| Respiratory insufficiency | 25 911 (46.6%) | 12 795 (47.2%) | 1.1 |
| Renal insufficiency | 20 107 (36.2%) | 9960 (36.7%) | 1.1 |
| Diabetes mellitus | 18 985 (34.1%) | 9345 (34.4%) | 0.6 |
| Hypotension | 14 622 (26.3%) | 7212 (26.6%) | 0.7 |
| Heart failure this admission | 8241 (14.8%) | 3981 (14.7%) | 0.4 |
| Prior heart failure | 12 419 (22.3%) | 6052 (22.3%) | 0.1 |
| Myocardial infarction this admission | 7992 (14.4%) | 3853 (14.2%) | 0.5 |
| Prior myocardial infarction | 7525 (13.5%) | 3711 (13.7%) | 0.4 |
| Metabolic or electrolyte abnormality | 12 990 (23.4%) | 6337 (23.4%) | 0.0 |
| Septicemia | 10 298 (18.5%) | 5252 (19.4%) | 2.1 |
| Pneumonia | 7820 (14.1%) | 3918 (14.4%) | 1.1 |
| Metastatic or hematologic malignancy | 6009 (10.8%) | 2885 (10.6%) | 0.6 |
| Hepatic insufficiency | 4504 (8.1%) | 2273 (8.4%) | 1.0 |
| Baseline depression in CNS function | 4085 (7.3%) | 1954 (7.2%) | 0.6 |
| Acute CNS nonstroke event | 4004 (7.2%) | 1949 (7.2%) | 0.1 |
| Acute stroke | 2249 (4.0%) | 1066 (3.9%) | 0.6 |
| Major trauma | 2660 (4.8%) | 1316 (4.9%) | 0.3 |
| Interventions in place | | | |
| Mechanical ventilation | 13 494 (24.3%) | 6601 (24.3%) | 0.1 |
| Continuous intravenous vasopressor | 13 301 (23.9%) | 6548 (24.1%) | 0.5 |
| Dialysis | 1505 (2.7%) | 767 (2.8%) | 0.7 |

CNS indicates central nervous system.

*A standardized difference of >10% indicates a significant difference between groups.

Overall, 59 754 (72.2%) of patients with an in‐hospital cardiac arrest achieved ROSC. ROSC rates were similar in the derivation (40 038 of 55 601 [72.0%]) and validation cohorts (19 716 of 27 128 [72.7%]). A comparison of baseline characteristics between patients who achieved ROSC and who did not is provided in Table S1. In general, patients who achieved ROSC were younger, more frequently white, more likely to have an initial cardiac arrest rhythm of ventricular fibrillation or pulseless ventricular tachycardia, and less ill with fewer comorbidities or interventions in place (eg, intravenous vasopressors) at the time of cardiac arrest.

Initially, all 24 variables were included in the multivariable model in the derivation cohort (with 17 variables significantly associated with ROSC), resulting in a model C‐statistic of 0.643 (Table 2; see Table S2 for variable definitions). After model reduction to generate a parsimonious model with no more than 5% loss in model prediction, our final model comprised 10 variables, with only a small change in the C‐statistic (0.638). The predictors in the final model included age, initial cardiac arrest rhythm, heart failure during index admission, respiratory insufficiency, acute CNS nonstroke event, metastatic or hematologic malignancy, metabolic or electrolyte abnormality, diabetes mellitus, and requirement for mechanical ventilation or intravenous vasopressor before cardiac arrest. The beta‐coefficient estimates and adjusted odds ratios are summarized in Table 3. Importantly, there was no evidence of multicollinearity between any of these variables (all variance inflation factors <1.5). When the model was tested in the independent validation cohort, model discrimination was similar (C‐statistic of 0.630). The Hosmer–Lemeshow goodness‐of‐fit P value was 0.87, suggesting good model fit. Calibration was confirmed with observed versus predicted plots in both the derivation and validation cohorts (R² of 0.996 and 0.990, respectively; Figure S1).
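The observed‐versus‐predicted calibration check can be sketched as follows. This assumes that patients are grouped into deciles of predicted probability and that the reported R² is the squared correlation between mean predicted and mean observed ROSC across those groups; the text does not state the grouping, and the function names are hypothetical.

```python
def calibration_points(outcomes, probabilities, groups=10):
    """Mean predicted vs mean observed ROSC within groups of predicted risk."""
    pairs = sorted(zip(probabilities, outcomes))  # order patients by predicted risk
    n = len(pairs)
    points = []
    for g in range(groups):
        chunk = pairs[g * n // groups:(g + 1) * n // groups]
        if chunk:
            mean_pred = sum(p for p, _ in chunk) / len(chunk)
            mean_obs = sum(y for _, y in chunk) / len(chunk)
            points.append((mean_pred, mean_obs))
    return points


def r_squared(points):
    """Squared Pearson correlation between predicted and observed group rates."""
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    sxy = sum((x - mx) * (y - my) for x, y in points)
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy * sxy / (sxx * syy)
```

Plotting the returned points against the identity line gives the observed‐versus‐predicted plot; an R² near 1 means the group‐level observed rates track the predictions closely.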

Table 2.

Full Model for Predictors of Return of Spontaneous Circulation

| Predictor | Beta‐Weight Estimate | Odds Ratio | 95% CI | P Value |
|---|---|---|---|---|
| Age (y) | | | | |
| <50 | 0.0000 | Reference | Reference | Reference |
| 50–59 | 0.0448 | 1.05 | 0.98–1.12 | 0.21 |
| 60–69 | −0.0327 | 0.97 | 0.91–1.03 | 0.32 |
| 70–79 | −0.1170 | 0.89 | 0.83–0.95 | <0.001 |
| ≥80 | −0.3598 | 0.70 | 0.65–0.75 | <0.001 |
| Male sex | −0.0856 | 0.92 | 0.88–0.98 | <0.001 |
| Hospital location | | | | |
| Nonmonitored unit | 0.0000 | Reference | Reference | Reference |
| Intensive care unit | 0.2785 | 1.32 | 1.24–1.41 | <0.001 |
| Monitored unit | 0.2719 | 1.31 | 1.22–1.41 | <0.001 |
| Emergency room | 0.1732 | 1.19 | 1.10–1.28 | <0.001 |
| Procedural or surgical area | 0.5142 | 1.67 | 1.53–1.83 | <0.001 |
| Other | 0.0674 | 1.07 | 0.92–1.24 | 0.37 |
| Initial cardiac arrest rhythm | | | | |
| Asystole | 0.0000 | Reference | Reference | Reference |
| Pulseless electrical activity | 0.1698 | 1.19 | 1.13–1.24 | <0.001 |
| Ventricular fibrillation | 0.6893 | 1.99 | 1.84–2.16 | <0.001 |
| Pulseless ventricular tachycardia | 0.7463 | 2.11 | 1.93–2.30 | <0.001 |
| Myocardial infarction this admission | −0.0424 | 0.96 | 0.90–1.02 | 0.15 |
| Prior myocardial infarction | −0.0005 | 1.00 | 0.94–1.06 | 0.99 |
| Heart failure this admission | 0.0922 | 1.10 | 1.03–1.17 | 0.003 |
| Prior heart failure | −0.0336 | 0.97 | 0.92–1.02 | 0.20 |
| Respiratory insufficiency | 0.1234 | 1.13 | 1.08–1.18 | <0.001 |
| Renal insufficiency | 0.0492 | 1.05 | 1.01–1.10 | 0.03 |
| Hepatic insufficiency | 0.0304 | 1.03 | 0.96–1.11 | 0.42 |
| Hypotension | −0.0997 | 0.91 | 0.86–0.95 | <0.001 |
| Septicemia | 0.0151 | 1.02 | 0.96–1.07 | 0.58 |
| Pneumonia | 0.0711 | 1.07 | 1.01–1.14 | 0.02 |
| Diabetes mellitus | 0.1576 | 1.17 | 1.12–1.22 | <0.001 |
| Metabolic/electrolyte abnormality | 0.0801 | 1.08 | 1.03–1.14 | 0.003 |
| Metastatic or hematologic malignancy | −0.1744 | 0.84 | 0.79–0.89 | <0.001 |
| Major trauma | −0.0973 | 0.91 | 0.83–1.00 | 0.04 |
| Acute stroke | 0.0087 | 1.01 | 0.92–1.11 | 0.86 |
| Baseline depression in CNS function | 0.0885 | 1.09 | 1.01–1.18 | 0.03 |
| Acute CNS nonstroke event | 0.1403 | 1.15 | 1.06–1.25 | <0.001 |
| Mechanical ventilation | −0.1808 | 0.83 | 0.79–0.88 | <0.001 |
| Continuous intravenous vasopressor | −0.3057 | 0.74 | 0.70–0.78 | <0.001 |
| Dialysis | −0.0100 | 0.99 | 0.87–1.12 | 0.88 |

CNS indicates central nervous system.

Table 3.

Final Reduced Model for Return of Spontaneous Circulation

| Predictor | Beta‐Weight Estimate | Odds Ratio | 95% CI | P Value |
|---|---|---|---|---|
| Age (y) | | | | |
| <50 | 0 | Reference | Reference | Reference |
| 50–59 | 0.0446 | 1.05 | 0.98–1.12 | 0.21 |
| 60–69 | −0.0335 | 0.97 | 0.91–1.03 | 0.30 |
| 70–79 | −0.1170 | 0.89 | 0.83–0.95 | <0.001 |
| ≥80 | −0.3611 | 0.70 | 0.65–0.74 | <0.001 |
| Initial cardiac arrest rhythm | | | | |
| Asystole | 0 | Reference | Reference | Reference |
| Pulseless electrical activity | 0.1858 | 1.20 | 1.15–1.26 | <0.001 |
| Ventricular fibrillation | 0.7068 | 2.03 | 1.87–2.19 | <0.001 |
| Pulseless ventricular tachycardia | 0.7636 | 2.15 | 1.97–2.34 | <0.001 |
| Heart failure this admission | 0.0878 | 1.09 | 1.03–1.16 | 0.003 |
| Respiratory insufficiency | 0.1323 | 1.14 | 1.09–1.19 | <0.001 |
| Diabetes mellitus | 0.1640 | 1.18 | 1.13–1.23 | <0.001 |
| Metabolic/electrolyte abnormality | 0.0844 | 1.09 | 1.04–1.14 | <0.001 |
| Metastatic or hematologic malignancy | −0.1826 | 0.83 | 0.78–0.88 | <0.001 |
| Acute CNS nonstroke event | 0.1215 | 1.13 | 1.04–1.22 | 0.003 |
| Continuous intravenous vasopressor | −0.2751 | 0.76 | 0.72–0.80 | <0.001 |
| Mechanical ventilation | −0.1492 | 0.86 | 0.82–0.90 | <0.001 |

CNS indicates central nervous system.

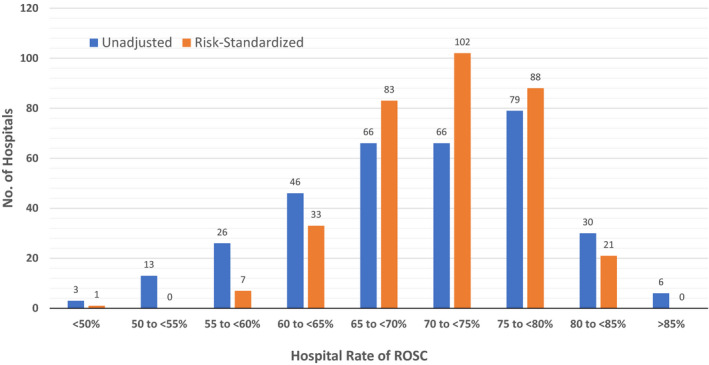

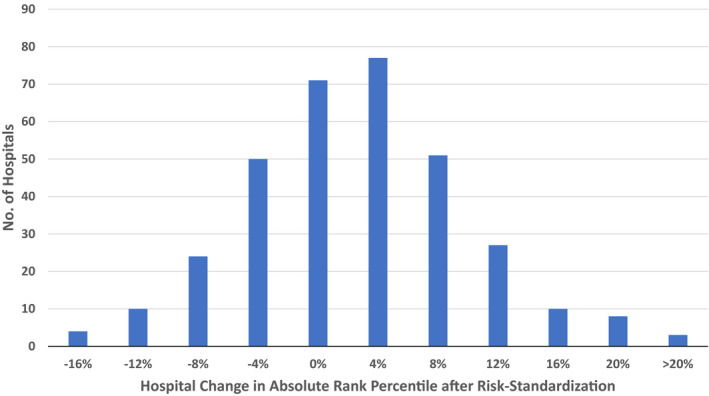

Figure 1 depicts the unadjusted and risk‐standardized distributions of hospital rates of ROSC. The median unadjusted hospital rate of ROSC was 70.5% (interquartile range: 64.7–76.9%; range: 33.3–89.6%). After adjustment, the distribution of risk‐standardized ROSC rates was narrower: median of 71.9% (interquartile range: 68.2–76.4%; range: 42.2–84.6%). The median odds ratio for hospital rates of ROSC was 1.37 (95% CI: 1.33–1.41; P<0.001), which suggests that for 2 identical patients treated for an in‐hospital cardiac arrest at 2 randomly selected hospitals, the odds of achieving ROSC differed by 37% at the median. Finally, to examine the effect of risk standardization at individual hospitals, we examined the change in percentile rank for each hospital (Figure 2). Of 335 hospitals, 56 (16.7%) had at least a 10% absolute change in percentile rank after risk standardization: 27 (8.0%) with a ≥10% negative percentile change and 29 (8.7%) with a ≥10% positive percentile change.

Figure 1. Distribution of unadjusted and risk‐standardized hospital rates of ROSC for in‐hospital cardiac arrest.

ROSC rates for 335 hospitals are shown. ROSC indicates return of spontaneous circulation.

Figure 2. Hospital change in absolute rank percentile after risk‐standardization of ROSC rates.

ROSC indicates return of spontaneous circulation.

Discussion

Within a large national registry, we derived and validated a risk‐adjustment model for hospital rates of sustained ROSC after in‐hospital cardiac arrest. The model was based on 10 clinical variables that are routinely documented in clinical care and easy to collect. Moreover, the model had moderate discrimination and excellent calibration. Importantly, our study adhered to recommended standards for statistical models used in public reporting, including the use of hierarchical models, and was based on a contemporary cohort of patients with in‐hospital cardiac arrest.3 This risk‐standardization methodology provides a mechanism to compare rates of ROSC across hospitals and to assess the impact of hospital resuscitation initiatives on rates of ROSC.

The American Heart Association's GWTG‐Resuscitation national registry has developed a number of target benchmarks to highlight hospitals with exceptional performance. Most of these performance metrics relate to processes of care, such as time to defibrillation, time to epinephrine, and time to initiation of cardiopulmonary resuscitation, and should be independent of patient case‐mix. However, survival outcomes such as ROSC after in‐hospital cardiac arrest may be heavily influenced by patient case‐mix, especially by variables such as age, initial cardiac arrest rhythm, and illness severity at the time of cardiac arrest. As a result, differences in unadjusted hospital rates of ROSC could simply reflect differences in patient case‐mix. To date, a risk‐standardization model to facilitate comparisons of ROSC rates across hospitals does not exist. Creating such a tool for ROSC would provide hospitals a mechanism to more directly assess the impact of their quality improvement interventions in acute resuscitation care, because survival to discharge reflects not only acute resuscitation survival but also postresuscitation care.

Without risk standardization, differences in hospital rates of ROSC for in‐hospital cardiac arrest may reflect differences in (1) patient case‐mix and (2) the quality of care between hospitals. From a quality perspective, only the latter is of interest. After risk standardization, which controlled for differences in patient case‐mix across hospitals, 1 in 6 hospitals changed by at least 10% in percentile rank, highlighting the importance of risk standardization for comparisons of hospital ROSC rates. Importantly, ROSC rates still varied across hospitals after risk standardization, suggesting heterogeneity in acute resuscitation quality. The median odds ratio of 1.37 suggests that, for identical patients, the odds of achieving ROSC after an in‐hospital cardiac arrest differed by 37% at the median between 2 randomly selected hospitals. Calculation of risk‐standardized rates of ROSC, therefore, can help identify hospitals that excel in acute resuscitation care. Which hospital factors or quality improvement initiatives are associated with higher ROSC rates at these hospitals remains unknown. Moving forward, identifying best practices for high rates of ROSC at these top‐performing hospitals should be a priority,17 as their dissemination to all hospitals has the potential to significantly improve outcomes for all patients with in‐hospital cardiac arrest.

Besides facilitating hospital comparisons of resuscitation performance, risk‐standardized rates of ROSC can assist in the evaluation of hospital quality improvement initiatives. With a renewed focus on chest compressions during resuscitations (eg, minimizing interruptions and optimizing their depth and rate), risk‐standardized rates of ROSC can be computed for the periods before and after a quality improvement initiative (because patient case‐mix may change between the 2 periods) to provide a more rigorous assessment of whether the initiative improved resuscitation outcomes. Similarly, device companies conducting multisite studies of technologies to improve cardiopulmonary resuscitation quality can compute risk‐standardized rates of ROSC to ensure fair comparisons between hospitals employing and not employing the intervention of interest.

Our study should be interpreted in the context of the following limitations. First, although our risk model was able to account for a number of clinical variables, unmeasured confounding may still exist. Specifically, our model did not have information on some prognostic factors, such as creatinine, hemoglobin, or the severity of each comorbid condition. In addition, the thoroughness of documentation of patients’ case‐mix (eg, comorbidities) may differ across sites, which could account for some of the hospital variation in risk‐standardized ROSC rates. Second, we did not have information on do‐not‐resuscitate status for all admitted patients, and this rate may vary across hospitals. However, our models did adjust for each resuscitated patient's case‐mix severity and therefore reflect each hospital's risk‐standardized rate of ROSC for patients undergoing active resuscitation. As such, these rates allow fair comparisons of resuscitation quality across hospitals for patients with similar demographics, illness severity, and cardiac arrest characteristics. Third, our study population was limited to hospitals participating in GWTG‐Resuscitation, and our findings may not be generalizable to nonparticipating hospitals.

There is growing national interest in developing tools to benchmark resuscitation quality for cardiac arrest. We have derived and validated a model to risk‐standardize hospital rates of sustained ROSC for in‐hospital cardiac arrest. Use of this model can support efforts to compare acute resuscitation survival across hospitals to facilitate quality improvement, as well as assess the effect of novel intra‐arrest interventions.

Sources of Funding

Dr Chan is supported by a grant (1R01HL123980) from the National Heart, Lung, and Blood Institute. Dr Chan is also supported by funding from the American Heart Association. GWTG‐Resuscitation is sponsored by the American Heart Association.

Disclosures

None.

Supporting information

Appendix S1

Tables S1 and S2

Figure S1

Acknowledgments

Dr Chan had full access to all of the data in the study and takes responsibility for the integrity of the data and the accuracy of the data analysis.

J Am Heart Assoc. 2020;9:e014837 DOI: 10.1161/JAHA.119.014837

References

- 1. Girotra S, Nallamothu BK, Spertus JA, Li Y, Krumholz HM, Chan PS. Trends in survival after in‐hospital cardiac arrest. N Engl J Med. 2012;367:1912–1920.

- 2. Chan PS, Berg RA, Spertus JA, Schwamm LH, Bhatt DL, Fonarow GC, Heidenreich PA, Nallamothu BK, Tang F, Merchant RM. Risk‐standardizing survival for in‐hospital cardiac arrest to facilitate hospital comparisons. J Am Coll Cardiol. 2013;62:601–609.

- 3. Krumholz HM, Brindis RG, Brush JE, Cohen DJ, Epstein AJ, Furie K, Howard G, Peterson ED, Rathore SS, Smith SC Jr, et al. Standards for statistical models used for public reporting of health outcomes: an American Heart Association Scientific Statement from the Quality of Care and Outcomes Research Interdisciplinary Writing Group: cosponsored by the Council on Epidemiology and Prevention and the Stroke Council. Endorsed by the American College of Cardiology Foundation. Circulation. 2006;113:456–462.

- 4. Jayaram N, Spertus JA, Nadkarni V, Berg RA, Tang F, Raymond T, Guerguerian AM, Chan PS. Hospital variation in survival after pediatric in‐hospital cardiac arrest. Circ Cardiovasc Qual Outcomes. 2014;7:517–523.

- 5. Jacobs I, Nadkarni V, Bahr J, Berg RA, Billi JE, Bossaert L, Cassan P, Coovadia A, D'Este K, Finn J, et al. Cardiac arrest and cardiopulmonary resuscitation outcome reports: update and simplification of the Utstein templates for resuscitation registries: a statement for healthcare professionals from a Task Force of the International Liaison Committee on Resuscitation (American Heart Association, European Resuscitation Council, Australian Resuscitation Council, New Zealand Resuscitation Council, Heart and Stroke Foundation of Canada, InterAmerican Heart Foundation, Resuscitation Councils of Southern Africa). Circulation. 2004;110:3385–3397.

- 6. Peberdy MA, Kaye W, Ornato JP, Larkin GL, Nadkarni V, Mancini ME, Berg RA, Nichol G, Lane‐Trultt T. Cardiopulmonary resuscitation of adults in the hospital: a report of 14720 cardiac arrests from the National Registry of Cardiopulmonary Resuscitation. Resuscitation. 2003;58:297–308.

- 7. Cummins RO, Chamberlain D, Hazinski MF, Nadkarni V, Kloeck W, Kramer E, Becker L, Robertson C, Koster R, Zaritsky A, et al. Recommended guidelines for reviewing, reporting, and conducting research on in‐hospital resuscitation: the in‐hospital ‘Utstein style’. American Heart Association. Circulation. 1997;95:2213–2239.

- 8. Chan PS, Nichol G, Krumholz HM, Spertus JA, Jones PG, Peterson ED, Rathore SS, Nallamothu BK. Racial differences in survival after in‐hospital cardiac arrest. JAMA. 2009;302:1195–1201.

- 9. Austin PC. Using the standardized difference to compare the prevalence of a binary variable between two groups in observational research. Commun Stat Simul Comput. 2009;38:1228–1234.

- 10. Goldstein H. Multilevel Statistical Models. London and New York: Edward Arnold; Wiley; 1995.

- 11. Belsley DA, Kuh E, Welsch RE. Regression Diagnostics: Identifying Influential Data and Sources of Collinearity. New York: John Wiley & Sons; 1980.

- 12. Harrell FE. Regression Modeling Strategies With Applications to Linear Models, Logistic Regression and Survival Analysis. New York: Springer‐Verlag; 2001.

- 13. Shahian DM, Torchiana DF, Shemin RJ, Rawn JD, Normand SL. Massachusetts cardiac surgery report card: implications of statistical methodology. Ann Thorac Surg. 2005;80:2106–2113.

- 14. Christiansen CL, Morris CN. Improving the statistical approach to health care provider profiling. Ann Intern Med. 1997;127:764–768.

- 15. Normand SL, Glickman ME, Gatsonis CA. Statistical methods for profiling providers of medical care: issues and applications. J Am Stat Assoc. 1997;92:803–814.

- 16. R Development Core Team. R: A Language and Environment for Statistical Computing. Vienna, Austria: R Foundation for Statistical Computing; 2008. Available at: http://www.R-project.org. Accessed June 21, 2019. ISBN 3‐900051‐07‐0.

- 17. Chan PS, Nallamothu BK. Improving outcomes following in‐hospital cardiac arrest: life after death. JAMA. 2012;307:1917–1918.

Data Availability Statement

The data that support the findings of this study are available from the corresponding author upon request and approval by the GWTG‐Resuscitation registry.