1. Introduction

Preclinical systematic reviews (SRs) and meta-analyses (MAs) are important research activities to address the translational challenges of pain research. Systematic reviews provide empirical evidence that builds knowledge and informs future research agendas and grant applications, while also developing researchers' professional skills.

Systematic reviews are an effective approach to consolidating high-volume, rapidly accruing, and often conflicting research on a specific topic. Designed to address a specific research question, SRs use predefined methods to identify, select, and critically appraise all available and relevant literature to answer that question in an unbiased manner.18 This structured approach distinguishes SRs from narrative reviews. Where appropriate, an MA can follow, whereby quantitative data are extracted and statistical techniques are used to summarise the outputs. Together, an SR and an MA can be conducted to assess the quality of experimental design, conduct, analysis, and reporting and the reliability of the available and relevant data.45

Through decades of innovation by the Cochrane Collaboration and others, SRs and MAs now lie at the centre of clinical evidence. The information provided has fundamentally revolutionised clinical medicine at all levels, from informing policy and funding decisions to determining optimal treatments for individual patients. Before a clinical research project or funding application, it is best practice to conduct an SR to ascertain what is already known and to identify knowledge gaps.

In the preclinical setting, SRs are relatively novel, partly because of the inherent complexity and resources required to process the large number and diversity of preclinical publications. Paradoxically, this is a strong justification for SRs because they provide the means to synthesise evidence from heterogeneous studies. In some fields, they are gaining popularity (eg, stroke37), and feasibility is improving with technical advances, for example, online review software, machine learning, and text mining.3 However, it is important to highlight that not all SRs require machine learning expertise: research questions can be defined based upon capacity, SR software is free and widely accessible, and large-scale SRs constantly seek help from interested researchers who can learn as they participate.

The aim of this review is to highlight the exciting possibilities a preclinical SR can bring to your research toolkit and to demonstrate the importance of preclinical SRs in generating empirical evidence to aid robust experimental design, inform research strategy, and support funding applications. We provide guidance and signpost resources for conducting a preclinical SR.

2. Importance of preclinical systematic reviews

Preclinical SRs offer a framework by which the range and quality of the evidence can be assessed, to improve study design,1 rigour,12,13,27,43 and reporting.8,41 They summarise the knowledge in an easy-to-understand format and identify gaps in the knowledge base, thereby providing the justification for raising funding for new studies.6,9,36,42

To address translational challenges, SRs can inform robust experimental design. Experimental bias is a consequence of poor internal validity, leading a researcher to incorrectly attribute an observed effect to an intervention.26 Internal validity depends on mitigating a range of biases: selection, performance, detection, and attrition bias,57 and quality assessments provide structured insight into whether the existing data are at risk of bias.28,30,34 SRs can also be used to inform study design, eg, the choice of optimal animal model and outcome measure. An MA can also be used to model the impact of publication bias (the culture of publishing novel, positive results rather than neutral or negative data52) and the consequent magnitude of overestimation of effects.47

Systematic reviews make use of available data, prevent the unnecessary duplication of experiments, and offer the means to support scientific and technological developments that replace, reduce, or refine the use of animals in research (eg, as demonstrated by de Vries et al.17).

Finally, SRs can be used to inform clinical trial design and establish whether there is evidence to justify a clinical trial.29,46,58 Retrospective preclinical SRs for interventions that failed in clinical trials have demonstrated that prospective SRs of the animal literature would have concluded that there was insufficient evidence of effect to justify progressing into clinical development (reviewed by Pound and Ritskes-Hoitinga40).

In summary, SRs provide the empirical evidence for improving study design, methods, and analysis to produce unbiased results and increase usability, accessibility, and reproducibility, thereby increasing value and reducing research waste.7,23,32 It is also important to note the challenges, for example, apportioning credit between primary researchers and research synthesisers, and the limitations that persist, for example, SRs and MAs cannot overcome deficiencies in the evidence, nor do they correct biases (reviewed by Gurevitch et al.24).

3. The practical challenges of systematic reviews

There are several unique challenges for the conduct of preclinical SRs, including high-volume and rapidly accruing data and wide variation in the design, conduct, analysis, and reporting of preclinical studies. The methods for SRs are well developed but are resource-intensive55 and time-consuming.49 This problem is exacerbated by the exponentially increasing number of publications.4 In preclinical neuropathic pain research, the number of articles retrieved by a systematic search rose from 6506 in 2012 to 12,614 in 2015.13 By comparison, only 129 articles were identified in an SR of neuropathic pain clinical trials.21

Widely accessible methods and resources to improve the feasibility of preclinical SRs and MAs have been developed by the Collaborative Approach to Meta-Analysis and Review of Animal Data from Experimental Studies (CAMARADES), University of Edinburgh, United Kingdom, and the Systematic Review Center for Laboratory Animal Experimentation (SYRCLE), Radboud University, the Netherlands. Both groups offer guidance and support to researchers.

The Systematic Review & Meta-analysis Facility (SyRF) is a free, fully integrated online platform for performing preclinical SRs. SyRF includes a secure screening database, data repository, and analysis application. Educational resources are also available. In conjunction, Learn to SyRF is a platform researchers can use to create project-specific training courses, enabling reviewers to learn, practise, and demonstrate reviewing skills before contributing to a review.

4. The review stages

Before starting an SR, we recommend engaging with training resources to familiarise yourself with SR methodology. In addition to the learning resources available through the CAMARADES and SYRCLE websites, there are several comprehensive reviews,35,45,56 SYRCLE's starting guide, and a recent Pain Research Forum webinar.50 Generic online courses include Systematic Review Methods (open source) and Cochrane Interactive Learning (varied subscriptions for access). You should also consider whether you have the necessary time and human resources available and how you will involve external experts including statisticians, librarians, and collaborators with existing SR experience.

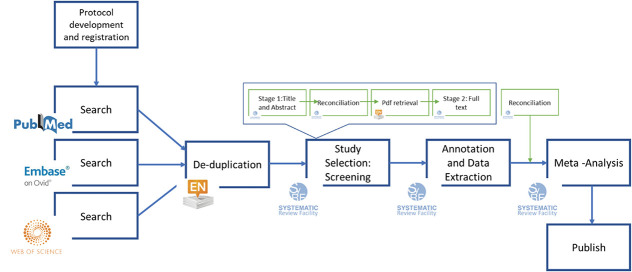

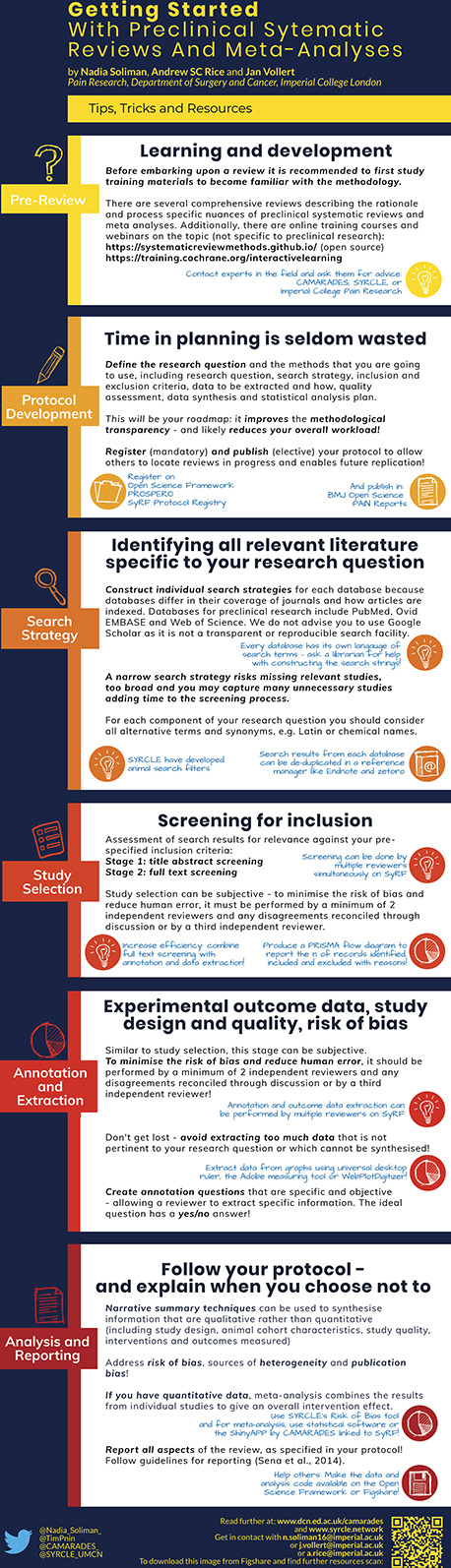

The stages of an SR are described below (corresponding to Figs. 1 and 2). Decisions required at the screening, annotation, and outcome data extraction stages can be subjective. Therefore, to minimise bias and human error, these stages should be performed by 2 independent reviewers, with disagreements reconciled by a third independent reviewer.

Figure 1.

“Getting Started” infographic. This is a “how-to guide” that describes each stage of the SR and MA processes and the resources for learning and development as well as for performing a review. MAs, meta-analyses; SRs, systematic reviews.

Figure 2.

An example of the systematic review workflow and the platforms that can be used at each stage.

4.1. Protocol development and registration (mandatory)

This is the most important step, and the preparatory time spent here will not be wasted. The protocol provides methodological transparency and reduces the risk of introducing bias. It defines the research question and the methods you plan to use including the search strategy; inclusion and exclusion criteria; data to be extracted; risk of bias/quality assessment, which, based on the reporting of methodological criteria, allows reviewers to determine whether a study is at low, high, or unclear risk of bias, for example, CAMARADES checklist,34 SYRCLE Risk of Bias Tool,30 and GRADE adapted for preclinical SRs28; data synthesis; and statistical analysis plan.15 Other reporting biases including financial and academic conflicts of interest can also be assessed. Registering the protocol, for example, on the Open Science Framework Registries, PROSPERO, or the SyRF Protocol Registry, allows others to locate reviews in progress and enables future replication. Some journals (eg, Pain Reports and BMJ Open Science) publish protocols, and the associated peer review can significantly improve your SR.51

4.2. Search strategy

The search strategy is informed by your research question. We recommend consulting a librarian or bibliographic database expert for help because this can be a complex task. An SR aims to capture all the relevant literature specific to your research question. Electronic databases of preclinical research include PubMed, Ovid Embase, and Web of Science, and SYRCLE have developed animal search filters for the databases.16,31,33 We do not recommend using Google Scholar because its algorithms are not transparent and searches are not easily reproduced.25 It is necessary to construct individual search strategies for each database because databases differ in their coverage of journals and how articles are indexed. A search strategy that is too narrow risks missing relevant studies, whereas one that is too broad will retrieve many irrelevant studies and consequently add time to the screening process.
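As a rough illustration of how a search string is commonly assembled, the sketch below joins synonyms for one concept with OR and then joins the concept blocks with AND. The function names and the example terms are hypothetical and chosen only for illustration; the output is a generic Boolean string, and each database would still need its own field tags, controlled vocabulary, and animal search filter.

```python
def or_block(terms):
    """Join synonyms for one concept with OR, quoting multi-word phrases."""
    quoted = [f'"{t}"' if " " in t else t for t in terms]
    return "(" + " OR ".join(quoted) + ")"


def build_query(concepts):
    """Join concept blocks with AND so that every concept must be matched."""
    return " AND ".join(or_block(terms) for terms in concepts)


# Hypothetical concept blocks; a real strategy would be far more extensive.
population = ["neuropathic pain", "nerve injury", "neuropathy"]
intervention = ["pregabalin", "gabapentinoid"]

query = build_query([population, intervention])
print(query)
```

Adding synonyms inside a block broadens the search, whereas adding a further block narrows it, which is one way to explore the balance between missing studies and screening workload during trial searches.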

4.3. Study selection: screening for inclusion

This is the assessment of search results against your prespecified inclusion criteria. There are 2 phases: (1) title and abstract and (2) full-text screening. Full-text screening can be combined with the annotation and data extraction stage. A PRISMA flow diagram should be produced to report the number of records identified, included, and excluded, and the reasons for exclusions.38
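The following is a minimal sketch of how decisions from 2 independent reviewers might be combined, with a third reviewer deciding only the disputed records, and how the resulting counts could feed a flow diagram. The record IDs, the dictionary layout, and the `reconcile` function are assumptions made for illustration and do not describe how any particular screening platform stores decisions.

```python
from collections import Counter


def reconcile(reviewer_a, reviewer_b, reviewer_c):
    """Return the final include/exclude decision for each record.

    reviewer_a and reviewer_b map record ID -> bool (True = include).
    reviewer_c holds the third reviewer's decisions for disputed records only.
    """
    final = {}
    for record_id, decision_a in reviewer_a.items():
        decision_b = reviewer_b[record_id]
        if decision_a == decision_b:
            final[record_id] = decision_a  # the two reviewers agree
        else:
            final[record_id] = reviewer_c[record_id]  # third reviewer decides
    return final


# Hypothetical title-and-abstract screening decisions.
a = {"r1": True, "r2": False, "r3": True, "r4": False}
b = {"r1": True, "r2": False, "r3": False, "r4": True}
c = {"r3": True, "r4": False}

final = reconcile(a, b, c)
counts = Counter("included" if keep else "excluded" for keep in final.values())
disagreements = sum(a[r] != b[r] for r in a)
print(counts["included"], counts["excluded"], disagreements)
```

Recording the number of disagreements alongside the final counts also gives a simple check on whether the inclusion criteria are being interpreted consistently.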

4.4. Annotation and data extraction

Annotation questions about study quality, risk of bias, and study design should be specific and objective, limiting the need for reviewer judgement. Avoid the temptation to extract data that are not pertinent to the research question and will not be analysed. There are several tools to manually extract outcome data presented in graphs, eg, WebPlotDigitizer and the inbuilt Adobe measuring tool.
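The general idea behind reading values from a graph can be sketched as a linear axis calibration: 2 points on an axis with known values define a mapping from pixel position to data value. The sketch below assumes a linear (not logarithmic) axis, and the pixel coordinates are hypothetical; it is not a description of how WebPlotDigitizer or any other tool is implemented.

```python
def make_axis_mapper(pixel_a, value_a, pixel_b, value_b):
    """Return a function that converts a pixel coordinate to a data value.

    Assumes a linear axis calibrated by two known (pixel, value) pairs.
    """
    if pixel_a == pixel_b:
        raise ValueError("calibration points must be at different pixels")

    def to_value(pixel):
        return value_a + (pixel - pixel_a) * (value_b - value_a) / (pixel_b - pixel_a)

    return to_value


# Hypothetical calibration: the y-axis tick "0" sits at pixel row 400
# and the tick "10" sits at pixel row 100 (pixel rows increase downwards).
to_value = make_axis_mapper(400, 0.0, 100, 10.0)

mean = to_value(250)         # pixel row of the top of a bar
upper = to_value(220)        # pixel row of the top of its error bar
error = upper - mean         # SD or SEM, depending on what the figure reports
print(mean, error)
```

Whether the extracted error represents SD or SEM has to be taken from the figure legend or methods of the primary study, not from the graph itself.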

4.5. Analysis

The analysis of an SR can use qualitative,53 quantitative (with MA56 or without MA5), or mixed techniques. A narrative summary can be used to synthesise study design and risk of bias information. Vesterinen et al.56 provide comprehensive guidance for conducting a preclinical MA. An MA can be used to combine the outcome data of individual studies to estimate the overall intervention effect. A stratified MA or meta-regression can be used to investigate and quantify potential sources of heterogeneity, for example, study design characteristics and how they influence outcomes. The presence and magnitude of publication bias can also be estimated using statistical methods such as funnel plots; see Refs. 54 and 56 for further reading.
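To make the idea of combining outcome data concrete, the sketch below pools per-study effect sizes with a random-effects model using the DerSimonian-Laird estimator of between-study variance. This estimator is one widely used option and is chosen here as an assumption; the text above does not prescribe a specific method, and the effect sizes and variances are invented for illustration.

```python
import math


def random_effects_meta(effects, variances):
    """Pool effect sizes with a DerSimonian-Laird random-effects model.

    effects: per-study effect sizes; variances: their sampling variances.
    Returns (pooled effect, standard error, tau^2, I^2 in percent).
    """
    k = len(effects)
    w = [1.0 / v for v in variances]  # inverse-variance (fixed-effect) weights
    fixed = sum(wi * yi for wi, yi in zip(w, effects)) / sum(w)

    # Cochran's Q measures the observed dispersion around the fixed-effect mean.
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, effects))
    c = sum(w) - sum(wi ** 2 for wi in w) / sum(w)
    tau2 = max(0.0, (q - (k - 1)) / c)  # between-study variance, floored at 0

    # Random-effects weights add tau^2 to each study's variance.
    w_star = [1.0 / (v + tau2) for v in variances]
    pooled = sum(wi * yi for wi, yi in zip(w_star, effects)) / sum(w_star)
    se = math.sqrt(1.0 / sum(w_star))
    i2 = max(0.0, (q - (k - 1)) / q) * 100 if q > 0 else 0.0
    return pooled, se, tau2, i2


# Hypothetical standardised mean differences and variances from 4 studies.
effects = [0.8, 1.2, 0.3, 1.5]
variances = [0.04, 0.09, 0.05, 0.16]

pooled, se, tau2, i2 = random_effects_meta(effects, variances)
low, high = pooled - 1.96 * se, pooled + 1.96 * se
print(f"pooled = {pooled:.2f} (95% CI {low:.2f} to {high:.2f}), I2 = {i2:.0f}%")
```

A stratified analysis could reuse the same function on subsets of studies grouped by a design characteristic, although comparing strata formally requires partitioning the heterogeneity as described in the guidance cited above.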

4.6. Reporting

Sena et al.45 provide guidelines for the reporting of preclinical SRs. All aspects of the review process should be reported in adherence to the protocol with explanation for any deviations. It is also helpful to refer to the AMSTAR 2 critical appraisal tool for assessing the methodological quality of SRs.48 Finally, to ensure transparency and sustainability of the SR, it is encouraged to make the data and analysis code available by uploading to a repository, eg, Open Science Framework or Figshare. In doing so, you are making it possible for others to perform secondary analyses thereby increasing the reach of your work.

5. Improving feasibility: the design of the review

Embarking on a preclinical SR can be daunting because of the complex, resource-intensive, and time-consuming processes.13,20,51 Systematic reviews within the pain field to date have sought to answer broad research questions.13,20,51 These large reviews have provided an understanding of the range and quality of a field; however, there are more feasible options for time-poor researchers and students, ie, conducting smaller reviews.

5.1. Research question

The research question should be narrow, clearly defined, and answerable. Limiting the scope to a specific population, intervention, comparator, and/or outcome measure is one way to improve feasibility. Performing trial searches will help you to estimate the likely workload and hone your research question. Other limitations may be added, for example, publication date; however, this adds a source of bias and must be justified.

5.2. Inclusion criteria for meta-analyses

An MA is not always appropriate; a systematic search, screen, and annotation (study characteristics and risk of bias) can inform a narrative summary and prospective animal studies. If outcome data are required, it is possible to reduce the data extraction burden by having inclusion criteria for the MA. For example, Federico et al.20 calculated effect sizes based upon the availability of time-course data. Similarly, this could be achieved by only including studies at low risk of bias. Such decisions need to be justified and stated a priori within the protocol.

5.3. Efficient resource allocation

Multiple reviewers can be used to expedite the screening, annotation, and outcome data extraction stages (in parallel on SyRF). If you aim to conduct SRs for student projects, a single research question composed of several subquestions can be addressed. Students can collaborate during the protocol development, search, screen, and data extraction stages, thereby contributing to each stage of the project. Students will be able to demonstrate independent working by using the data pertinent to a subquestion to perform and report independent analyses in their examination submissions.

6. Improving feasibility: contribution to reviews

Systematic reviewers are regularly looking for contributors, and it is worth contacting the authors of registered SRs to offer your assistance; it is a very efficient way to gain experience. Importantly, it provides collaborative opportunities for researchers with limited resources, eg, in low- and middle-income countries. As part of the recent IASP Cannabinoid Task Force, the preclinical SR recruited a crowd of reviewers to assist with the screening and data extraction phases.51 CAMARADES are currently recruiting a crowd to help them build a systematic and continually updated summary of COVID-19 evidence.14 These reviews demonstrate that it is possible to share the workload across a crowd, recruited locally or globally, although we recommend conducting training for reviewers to ensure quality.

7. Improving feasibility: automation tools for evidence synthesis

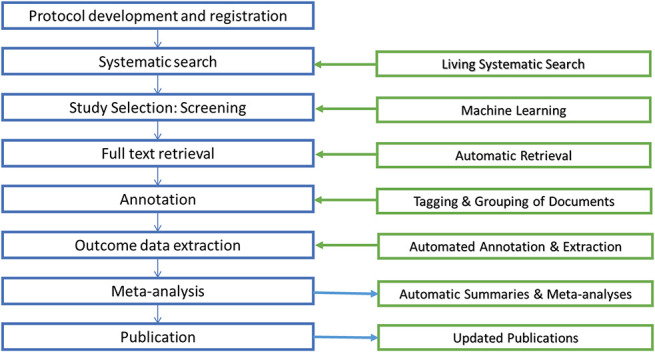

Several groups are taking advantage of emerging technologies to modernise the conventional review process and create living SRs. Systematic reviews are not often updated,22 and failing to incorporate the most recent data puts an SR at risk of inaccuracy.11,49 Living SRs are SRs that are continually updated, incorporating relevant new evidence as it becomes available.19 Living SRs will ensure that decisions are dynamic and based upon the full body of evidence. Technological developments are continually being made to reduce the time and human effort required for SRs, and automation tools can be used without making the review living (Fig. 3).

Figure 3.

Automation technologies that are being developed for the different stages of the review process. Machine learning and text mining have improved the feasibility and efficiency of the early stages of the process,2,3 and tool development continues to ensure that the full potential of preclinical SRs is realised. Technological developments for the latter stages are in their infancy. However, we are currently developing a machine-assisted approach to extracting data from graphs that aims to reduce time and improve accuracy,10 a feature that will soon be integrated into SyRF. SRs, systematic reviews; SyRF, Systematic Review & Meta-analysis Facility.

8. The future

Performing an SR enables researchers to hone their critical analysis skills and gain an in-depth understanding of the field. Like the vision proposed by Nakagawa et al.,39 we envisage a new community for pain research that comprises primary researchers who perform research synthesis with support from systematic reviewers, librarians, and statisticians. Primary researchers will use SRs to generate hypotheses and inform future research design. Evidence synthesis will also be recognised as an end goal of the research process. Research will be designed, conducted, analysed, and reported accordingly (eg, for eligibility in prospective MAs as described by Seidler et al.44), thereby mitigating biases and reducing research waste. Conducting SRs will lead to improvements in education, practice, and communication of pain research and improve the predictive validity of animal research, reduce research waste, and improve pain outcomes for patients.

Conflict of interest statement

The authors have no conflicts of interest to declare.

Acknowledgements

The authors thank Dr Emily Sena for her constructive feedback on the manuscript.

The work is funded by the BBSRC (grant number BB/M011178/1). J. Vollert and A.S.C. Rice are part of the European Quality In Preclinical Data (EQIPD) consortium. This project has received funding from the Innovative Medicines Initiative 2 Joint Undertaking under grant agreement No 777364. This Joint Undertaking receives support from the European Union's Horizon 2020 research and innovation programme and EFPIA.

Supplemental video content

A video abstract associated with this article can be found at http://links.lww.com/PAIN/B114.

Footnotes

Sponsorships or competing interests that may be relevant to content are disclosed at the end of this article.

References

- [1]. Andrews NA, Latrémolière A, Basbaum AI, Mogil JS, Porreca F, Rice ASC, Woolf CJ, Currie GL, Dworkin RH, Eisenach JC, Evans S, Gewandter JS, Gover TD, Handwerker H, Huang W, Iyengar S, Jensen MP, Kennedy JD, Lee N, Levine J, Lidster K, Machin I, McDermott MP, McMahon SB, Price TJ, Ross SE, Scherrer G, Seal RP, Sena ES, Silva E, Stone L, Svensson CI, Turk DC, Whiteside G. Ensuring transparency and minimization of methodologic bias in preclinical pain research: PPRECISE considerations. PAIN 2016;157:901–9.

- [2]. Bahor Z, Liao J, Macleod Malcolm R, Bannach-Brown A, McCann Sarah K, Wever Kimberley E, Thomas J, Ottavi T, Howells David W, Rice A, Ananiadou S, Sena E. Risk of bias reporting in the recent animal focal cerebral ischaemia literature. Clin Sci 2017;131:2525–32.

- [3]. Bannach-Brown A, Przybyła P, Thomas J, Rice ASC, Ananiadou S, Liao J, Macleod MR. Machine learning algorithms for systematic review: reducing workload in a preclinical review of animal studies and reducing human screening error. Syst Rev 2019;8:23.

- [4]. Bastian H, Glasziou P, Chalmers I. Seventy-five trials and eleven systematic reviews a day: how will we ever keep up? PLoS Med 2010;7:e1000326.

- [5]. Campbell M, McKenzie JE, Sowden A, Katikireddi SV, Brennan SE, Ellis S, Hartmann-Boyce J, Ryan R, Shepperd S, Thomas J, Welch V, Thomson H. Synthesis without meta-analysis (SWiM) in systematic reviews: reporting guideline. BMJ 2020;368:l6890.

- [6]. Chalmers I. Academia's failure to support systematic reviews. Lancet 2005;365:469.

- [7]. Chan AW, Song FJ, Vickers A, Jefferson T, Dickersin K, Gotzsche PC, Krumholz HM, Ghersi D, van der Worp HB. Increasing value and reducing waste: addressing inaccessible research. Lancet 2014;383:257–66.

- [8]. Clark JD. Preclinical pain research: can we do better? Anesthesiology 2016;125:846–9.

- [9]. Clarke M. Doing new research? Don't forget the old. PLoS Med 2004;1:e35.

- [10]. Cramond F, O'Mara-Eves A, Doran-Constant L, Rice AS, Macleod M, Thomas J. The development and evaluation of an online application to assist in the extraction of data from graphs for use in systematic reviews. Wellcome Open Res 2018.

- [11]. Crequit P, Trinquart L, Yavchitz A, Ravaud P. Wasted research when systematic reviews fail to provide a complete and up-to-date evidence synthesis: the example of lung cancer. Bmc Med 2016;14:8.

- [12]. Crossley NA, Sena E, Goehler J, Horn J, van der Worp B, Bath PMW, Macleod M, Dirnagl U. Empirical evidence of bias in the design of experimental stroke studies - a metaepidemiologic approach. Stroke 2008;39:929–34.

- [13]. Currie GL, Angel-Scott HN, Colvin L, Cramond F, Hair K, Khandoker L, Liao J, Macleod M, McCann SK, Morland R, Sherratt N, Stewart R, Tanriver-Ayder E, Thomas J, Wang QY, Wodarski R, Xiong R, Rice ASC, Sena ES. Animal models of chemotherapy-induced peripheral neuropathy: a machine-assisted systematic review and meta-analysis. Plos Biol 2019;17:e3000243.

- [14]. Currie GL, Macleod M, Sena E, Bahor Z, Liao J, Sena C, Hair K, Wilson E, Wang Q, Bannach-Brown A, Ezgi TA, Ayder C, Bahor Z. Protocol for a “living” evidence summary of primary research related to COVID-19. Available at: https://osf.io/q5c2v/. Accessed June 5, 2020.

- [15]. de Vries RBM, Hooijmans CR, Langendam MW, van Luijk J, Leenaars M, Ritskes-Hoitinga M, Wever KE. A protocol format for the preparation, registration and publication of systematic reviews of animal intervention studies. Evid Based Preclinical Med 2015;2:e00007.

- [16]. de Vries RBM, Hooijmans CR, Tillema A, Leenaars M, Ritskes-Hoitinga M. Updated version of the Embase search filter for animal studies. Lab Anim 2014;48:88.

- [17]. de Vries RBM, Leenaars M, Tra J, Huijbregtse R, Bongers E, Jansen JA, Gordijn B, Ritskes-Hoitinga M. The potential of tissue engineering for developing alternatives to animal experiments: a systematic review. J Tissue Eng Regenerative Med 2015;9:771–8.

- [18]. Egger M, Smith GD, Sterne JAC. Uses and abuses of meta-analysis. Clin Med 2001;1:478–84.

- [19]. Elliott JH, Synnot A, Turner T, Simmonds M, Akl EA, McDonald S, Salanti G, Meerpohl J, MacLehose H, Hilton J, Tovey D, Shemilt I, Thomas J; Living Systematic Review N. Living systematic review: 1. Introduction-the why, what, when, and how. J Clin Epidemiol 2017;91:23–30.

- [20]. Federico CA, Mogil JS, Ramsay T, Fergusson DA, Kimmelman J. A systematic review and meta-analysis of pregabalin preclinical studies. PAIN 2020;161:684–93.

- [21]. Finnerup NB, Attal N, Haroutounian S, McNicol E, Baron R, Dworkin RH, Gilron I, Haanpaa M, Hansson P, Jensen TS, Kamerman PR, Lund K, Moore A, Raja SN, Rice ASC, Rowbotham M, Sena E, Siddall P, Smith BH, Wallace M. Pharmacotherapy for neuropathic pain in adults: a systematic review and meta-analysis. Lancet Neurol 2015;14:162–73.

- [22]. Garner P, Hopewell S, Chandler J, MacLehose H, Schunemann HJ, Akl EA, Beyene J, Chang S, Churchill R, Dearness K, Guyatt G, Lefebvre C, Liles B, Marshall R, Garcia LM, Mavergames C, Nasser M, Qaseem A, Sampson M, Soares-Weiser K, Takwoingi Y, Thabane L, Trivella M, Tugwell P, Welsh E, Wilson EC. When and how to update systematic reviews: consensus and checklist. Bmj-British Med J 2016;354:i3507.

- [23]. Glasziou P, Altman DG, Bossuyt P, Boutron I, Clarke M, Julious S, Michie S, Moher D, Wager E. Reducing waste from incomplete or unusable reports of biomedical research. Lancet 2014;383:267–76.

- [24]. Gurevitch J, Koricheva J, Nakagawa S, Stewart G. Meta-analysis and the science of research synthesis. Nature 2018;555:175–82.

- [25]. Haddaway NR, Collins AM, Coughlin D, Kirk S. The role of Google scholar in evidence reviews and its applicability to grey literature searching. Plos One 2015;10:e0138237.

- [26]. Higgins JPT, Altman DG, Gotzsche PC, Juni P, Moher D, Oxman AD, Savovic J, Schulz KF, Weeks L, Sterne JAC; Cochrane bias methods G, Cochrane stat methods G. The Cochrane collaboration's tool for assessing risk of bias in randomised trials. Bmj-British Med J 2011;343:d5928.

- [27]. Hirst JA, Howick J, Aronson JK, Roberts N, Perera R, Koshiaris C, Heneghan C. The need for randomization in animal trials: an overview of systematic reviews. PLoS One 2014;9:e98856.

- [28]. Hooijmans CR, de Vries RBM, Ritskes-Hoitinga M, Rovers MM, Leeflang MM, IntHout J, Wever KE, Hooft L, de Beer H, Kuijpers T, Macleod MR, Sena ES, ter Riet G, Morgan RL, Thayer KA, Rooney AA, Guyatt GH, Schünemann HJ, Langendam MW. On behalf of the GWG. Facilitating healthcare decisions by assessing the certainty in the evidence from preclinical animal studies. PLoS One 2018;13:e0187271.

- [29]. Hooijmans CR, Rovers M, de Vries RB, Leenaars M, Ritskes-Hoitinga M. An initiative to facilitate well-informed decision-making in laboratory animal research: report of the First International Symposium on Systematic Reviews in Laboratory Animal Science. Lab Anim 2012;46:356–7.

- [30]. Hooijmans CR, Rovers MM, de Vries RBM, Leenaars M, Ritskes-Hoitinga M, Langendam MW. SYRCLE's risk of bias tool for animal studies. BMC Med Res Methodol 2014;14:43.

- [31]. Hooijmans CR, Tillema A, Leenaars M, Ritskes-Hoitinga M. Enhancing search efficiency by means of a search filter for finding all studies on animal experimentation in PubMed. Lab Anim 2010;44:170–5.

- [32]. Ioannidis JPA, Greenland S, Hlatky MA, Khoury MJ, Macleod MR, Moher D, Schulz KF, Tibshirani R. Increasing value and reducing waste in research design, conduct, and analysis. Lancet 2014;383:166–75.

- [33]. Leenaars M, Hooijmans CR, van Veggel N, ter Riet G, Leeflang M, Hooft L, van der Wilt GJ, Tillema A, Ritskes-Hoitinga M. A step-by-step guide to systematically identify all relevant animal studies. Lab Anim 2012;46:24–31.

- [34]. Macleod Malcolm R, O'Collins T, Howells David W, Donnan Geoffrey A. Pooling of animal experimental data reveals influence of study design and publication bias. Stroke 2004;35:1203–8.

- [35]. Macleod MR, Lawson McLean A, Kyriakopoulou A, Serghiou S, de Wilde A, Sherratt N, Hirst T, Hemblade R, Bahor Z, Nunes-Fonseca C, Potluru A, Thomson A, Baginskitae J, Egan K, Vesterinen H, Currie GL, Churilov L, Howells DW, Sena ES. Risk of bias in reports of in vivo research: a focus for improvement. PLOS Biol 2015;13:e1002273.

- [36]. Macleod MR, Michie S, Roberts I, Dirnagl U, Chalmers I, Ioannidis JPA, Salman RA, Chan AW, Glasziou P. Biomedical research: increasing value, reducing waste. Lancet 2014;383:101–4.

- [37]. McCann SK, Lawrence CB. Comorbidity and age in the modelling of stroke: are we still failing to consider the characteristics of stroke patients? BMJ Open Sci 2020;4:e100013.

- [38]. Moher D, Liberati A, Tetzlaff J, Altman DG, Grp P. Preferred reporting items for systematic reviews and meta-analyses: the PRISMA statement. Ann Intern Med 2009;151:264–W264.

- [39]. Nakagawa S, Dunn AG, Lagisz M, Bannach-Brown A, Grames EM, Sánchez-Tójar A, O'Dea RE, Noble DWA, Westgate MJ, Arnold PA, Barrow S, Bethel A, Cooper E, Foo YZ, Geange SR, Hennessy E, Mapanga W, Mengersen K, Munera C, Page MJ, Welch V, Carter M, Forbes O, Furuya-Kanamori L, Gray CT, Hamilton WK, Kar F, Kothe E, Kwong J, McGuinness LA, Martin P, Ngwenya M, Penkin C, Perez D, Schermann M, Senior AM, Vásquez J, Viechtbauer W, White TE, Whitelaw M, Haddaway NR; Evidence Synthesis Hackathon P. A new ecosystem for evidence synthesis. Nat Ecol Evol 2020;4:498–501.

- [40]. Pound P, Ritskes-Hoitinga M. Can prospective systematic reviews of animal studies improve clinical translation? J Transl Med 2020;18:15.

- [41]. Rice ASC, Cimino-Brown D, Eisenach JC, Kontinen VK, Lacroix-Fralish ML, Machin I, Mogil JS, Stöhr T. Animal models and the prediction of efficacy in clinical trials of analgesic drugs: a critical appraisal and call for uniform reporting standards. PAIN 2008;139:243–7.

- [42]. Robinson KA, Goodman SN. A systematic examination of the citation of prior research in reports of randomized, controlled trials. Ann Intern Med 2011;154:50–U187.

- [43]. Rooke EDM, Vesterinen HM, Sena ES, Egan KJ, Macleod MR. Dopamine agonists in animal models of Parkinson's disease: a systematic review and meta-analysis. Parkinsonism Relat Disord 2011;17:313–20.

- [44]. Seidler AL, Hunter KE, Cheyne S, Ghersi D, Berlin JA, Askie L. A guide to prospective meta-analysis. BMJ 2019;367:l5342.

- [45]. Sena ES, Currie GL, McCann SK, Macleod MR, Howells DW. Systematic reviews and meta-analysis of preclinical studies: why perform them and how to appraise them critically. J Cereb Blood Flow Metab 2014;34:737–42.

- [46]. Sena ES, Macleod MR. Concordance between laboratory and clinical drug efficacy: lessons from systematic review and meta-analysis. Stroke 2007;38:502.

- [47]. Sena ES, van der Worp HB, Bath PMW, Howells DW, Macleod MR. Publication bias in reports of animal stroke studies leads to major overstatement of efficacy. Plos Biol 2010;8:e1000344.

- [48]. Shea BJ, Reeves BC, Wells G, Thuku M, Hamel C, Moran J, Moher D, Tugwell P, Welch V, Kristjansson E, Henry DA. AMSTAR 2: a critical appraisal tool for systematic reviews that include randomised or non-randomised studies of healthcare interventions, or both. BMJ 2017;358:j4008.

- [49]. Shojania KG, Sampson M, Ansari MT, Ji J, Doucette S, Moher D. How quickly do systematic reviews go out of date? A survival analysis. Ann Intern Med 2007;147:224–33.

- [50]. Soliman N. Talks. Available at: https://osf.io/hwtn7/. Accessed June 5, 2020.

- [51]. Soliman N, Hohmann AG, Haroutounian S, Wever K, Rice ASC, Finn DP. A protocol for the systematic review and meta-analysis of studies in which cannabinoids were tested for antinociceptive effects in animal models of pathological or injury-related persistent pain. Pain Rep 2019;4:e766.

- [52]. Song F, Parekh S, Hooper L, Loke YK, Ryder J, Sutton AJ, Hing C, Kwok CS, Pang C, Harvey I. Dissemination and publication of research findings: an updated review of related biases. Health Technol Assess 2010;14:1.

- [53]. Tacconelli E. Systematic reviews: CRD's guidance for undertaking reviews in health care. Lancet Infect Dis 2010;10:226.

- [54]. Thornton A, Lee P. Publication bias in meta-analysis: its causes and consequences. J Clin Epidemiol 2000;53:207–16.

- [55]. Tricco AC, Brehaut J, Chen MH, Moher D. Following 411 Cochrane protocols to completion: a retrospective cohort study. PLoS One 2008;3:e3684.

- [56]. Vesterinen HM, Sena ES, Egan KJ, Hirst TC, Churolov L, Currie GL, Antonic A, Howells DW, Macleod MR. Meta-analysis of data from animal studies: a practical guide. J Neurosci Methods 2014;221:92–102.

- [57]. Vollert J, Schenker E, Macleod M, Bespalov A, Wuerbel H, Michel M, Dirnagl U, Potschka H, Waldron A-M, Wever K, Steckler T, van de Casteele T, Altevogt B, Sil A, Rice ASC. Systematic review of guidelines for internal validity in the design, conduct and analysis of preclinical biomedical experiments involving laboratory animals. BMJ Open Sci 2020;4:e100046.

- [58]. Wever KE, Menting TP, Rovers M, van der Vliet JA, Rongen GA, Masereeuw R, Ritskes-Hoitinga M, Hooijmans CR, Warle M. Ischemic preconditioning in the animal kidney, a systematic review and meta-analysis. PLoS One 2012;7:e32296.