Highlights

• In this position paper, we provide a collection of views on the role of AI in the COVID-19 pandemic, from the clinical needs to the design of AI-based systems, to the translation of the developed tools to the clinic.
• We highlight key factors in designing system solutions - per specific task; as well as design issues in managing the disease on the national level.
• We focus on three specific use-cases for which AI systems can be built: from the early disease detection, the management of the disease in a hospital setting, and building patient-specific predictive models that require the combination of imaging with additional clinical features.
• Infrastructure considerations and population modeling in two European countries will be described.
• This pandemic has made the practical and scientific challenges of making AI solutions very explicit. A discussion concludes this paper, with a list of challenges facing the community in the AI road ahead.

Keywords: COVID-19, Imaging, AI

Abstract

In this position paper, we provide a collection of views on the role of AI in the COVID-19 pandemic, from clinical requirements to the design of AI-based systems, to the translation of the developed tools to the clinic. We highlight key factors in designing system solutions - per specific task; as well as design issues in managing the disease at the national level. We focus on three specific use-cases for which AI systems can be built: early disease detection, management in a hospital setting, and building patient-specific predictive models that require the combination of imaging with additional clinical data. Infrastructure considerations and population modeling in two European countries will be described. This pandemic has made the practical and scientific challenges of making AI solutions very explicit. A discussion concludes this paper, with a list of challenges facing the community in the AI road ahead.

1. Introduction

The COVID-19 pandemic surprised the world with its rapid spread and has had a major impact on the lives of billions of people. Imaging is playing a role in the fight against the disease, in some countries as a key tool, from screening and diagnosis through the entire treatment process, but in other countries, as a relatively minor support tool. Guidelines and diagnostic protocols are still being defined and updated in countries around the world. Where enabled, Computed Tomography (CT) of the thorax has been shown to provide an important adjunctive role in diagnosing and tracking progress of COVID-19 in comparison to other methods such as monitoring of temperature/respiratory symptoms and the current “gold standard”, molecular testing, using sputum or nasopharyngeal swabs. Several countries (including China, the Netherlands, Russia and more) have elected to use CT as a primary imaging modality, from the initial diagnosis through the entire treatment process. Other countries, such as the US and Denmark, as well as developing countries (Southeast Asia, Africa), are using mostly conventional radiographic (x-ray) imaging of the chest (CXR). In addition to establishing the role of imaging, this is the first time AI, or more specifically, deep learning approaches, have the opportunity to join in as tools on the frontlines of fighting an emerging pandemic. These algorithms can be used in support of emergency teams, real-time decision support, and more. In this position paper, a group of researchers provide their views on the role of AI, from clinical requirements to the design of AI-based systems, to the infrastructure necessary to facilitate national-level population modeling.

Many studies have emerged in the last several months from the medical imaging community, with many research groups as well as companies introducing deep learning based solutions to tackle the various tasks: mostly detection of the disease (vs normal), and more recently also staging of disease severity. For a review of emerging works in this space we refer the reader to a recent review article (Shi et al., 2020a) that covers the first papers published, up to and including March 2020, across the entire pipeline of medical imaging and analysis techniques involved with COVID-19, including image acquisition, segmentation, diagnosis, and follow-up. We also want to point out several Special Issues in this space, including the IEEE Special Issue of TMI, April 2020; the IEEE Special Issue of JBHI, 2020; as well as the current Special Issue of MedIA.

In the current position paper, it is not our goal to provide an overview of the publications in the field, rather we present our own experiences in the space and a joint overview of challenges ahead. We start with the radiologist perspective. What are the clinical needs for which AI may provide some benefits? We follow that with an introduction to AI based solutions - the challenges and roadmap for developing AI-based systems, in general and for the COVID-19 pandemic. In Section 2 of this paper we focus on three specific use-cases for which AI systems can be built: detection, patient management, and predictive models in which the imaging is combined with additional clinical features. System examples will be briefly introduced. In Section 3 we present a different perspective of AI in its role in the upstream and downstream management of the pandemic. Specific infrastructure considerations and population modeling in two European countries will be described in Section 4. A discussion concludes this paper, with a list of challenges facing the community in our road ahead.

1.1. The COVID-19 pandemic – clinical perspective

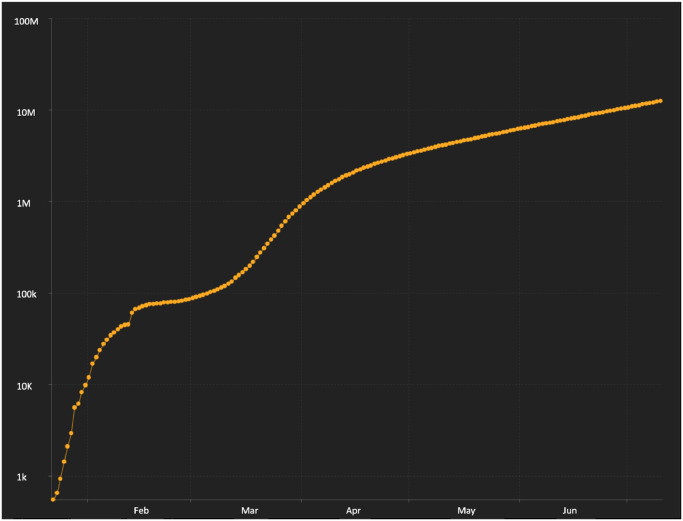

As of this writing, according to the Johns Hopkins Resource Center (https://coronavirus.jhu.edu/), there are approximately 12.5 million confirmed cases and 561,000 deaths throughout the world, with 32,000 deaths in New York State alone. The rate of increase in cases has continued to rise, as demonstrated by the log scale plot in Fig. 1.

Fig. 1.

Logarithmic plot of number of total confirmed cases from January through the first week in July 2020 indicating continuing acceleration in the rate new patients are testing positive for COVID-19 (plot from Johns Hopkins Resource Center).

The most common symptoms of the disease (fever, fatigue, dry cough, runny nose, diarrhea and shortness of breath) are non-specific and are shared by many people with a variety of conditions. The mean incubation period is approximately 5 days and the virus is probably most often transmitted by asymptomatic patients. Knowing who is positive for the disease has critical implications for keeping patients away from others. Unfortunately, the gold standard lab test, real time reverse transcription polymerase chain reaction (RT-PCR), which detects viral nucleic acid, has not been universally available in many areas, and its sensitivity varies considerably depending on how early patients are tested in the course of their disease. Recent studies have suggested that RT-PCR has a sensitivity of only 61-70%. Consequently, repeat testing is often required to ensure a patient is actually free of the disease. Fang et al. (2020) found that for the 51 patients they studied with thoracic CT and RT-PCR assay performed within 3 days of each other, the sensitivity of CT for COVID-19 was 98% compared to RT-PCR sensitivity of 71% (p < .001).
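To make the case for repeat testing concrete, the following minimal sketch computes the chance that an infected patient is missed by every one of several tests. It assumes, purely for illustration, that repeated tests are statistically independent and share the same sensitivity; in practice results from the same patient are likely correlated (for example through viral load and sampling time), so the numbers are optimistic. The function name `miss_probability` is hypothetical.

```python
def miss_probability(sensitivity: float, n_tests: int) -> float:
    """Probability that an infected patient tests negative on all n_tests.

    Assumption (not from the paper): tests are independent and each has
    the same sensitivity.
    """
    if not 0.0 <= sensitivity <= 1.0:
        raise ValueError("sensitivity must lie in [0, 1]")
    if n_tests < 1:
        raise ValueError("n_tests must be at least 1")
    return (1.0 - sensitivity) ** n_tests


# Sensitivity range quoted in the text for RT-PCR.
for sensitivity in (0.61, 0.70):
    for n_tests in (1, 2, 3):
        p_miss = miss_probability(sensitivity, n_tests)
        print(f"sensitivity={sensitivity:.2f}, tests={n_tests}: "
              f"missed with probability {p_miss:.3f}")
```

Under these assumptions a single test at 70% sensitivity misses 30% of infected patients, while two tests miss about 9%.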

On CXR and CT exams of the thorax, findings are usually bilateral (72%) early in the progression of disease and even more likely bilateral (88%) in later stages (Bernheim et al., 2020; Zhao et al., 2020). The typical presentation in ICU patients is bilateral subsegmental areas of air-space consolidation. In non-ICU patients, classic findings are transient subsegmental consolidation early and then bilateral ground glass opacities that are typically peripheral in the lungs. Pneumothorax (collapsed lung) and pleural fluid or cavitation (due to necrosis) are usually not seen. Distinctive patterns of COVID-19 such as “crazy paving”, in which ground glass opacity is combined with superimposed interlobular and intralobular septal thickening, and the “reverse halo sign”, where a ground glass region of the lung is surrounded by an irregular thick wall, have been previously described in other diseases but are atypical of most pneumonias.

The use of thoracic CT for both diagnosis of disease and tracking has varied tremendously from country to country. While countries such as China and Iran utilize it for its very high sensitivity to disease in the diagnosis and tracking of progression of disease, the prevailing recommendation in the US and other countries is to only use lab studies for diagnosis, use chest radiography to assess severity of disease, and to hold off on performing thoracic CT except for patients with relatively severe and complicated manifestations of disease (Simpson et al., 2020). This is due to concerns in the US about exposure of radiology staff and other patients to COVID-19 patients and the thought that CT has limited incremental value over portable chest radiographs which can be performed outside the imaging department. Additionally, during a “surge” period, the presumption is made that the vast majority of patients with pulmonary symptoms have the disease, rendering CT as a relatively low value addition to the clinical work-up. As a diagnostic tool, CT offers the potential to differentiate patients with COVID-19 not only from normal patients, but from those with other causes of shortness of breath and cough such as TB or other bacterial or alternatively, other viral pneumonias, bronchitis, heart failure, and pulmonary embolism. As a quantitative tool, it offers the ability to determine what percentage of the lung is involved with the disease and to break this down into areas of ground glass density, consolidation, collapse, etc. This can be evaluated on serial studies which may be predictive of a patient’s clinical course and may help to determine optimal clinical treatment.
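As a rough illustration of the quantitative use described above, the sketch below computes the percentage of lung involved by each finding type from segmentation masks. The label encoding (`1` for ground glass, `2` for consolidation), the `FINDINGS` table and the function name are assumptions made for this example; the masks themselves would come from a segmentation step that is not shown.

```python
import numpy as np

# Hypothetical label encoding for a per-voxel finding map (0 = no finding).
FINDINGS = {1: "ground_glass", 2: "consolidation"}


def involvement_percent(lung_mask: np.ndarray, finding_map: np.ndarray) -> dict:
    """Percentage of lung voxels occupied by each finding type."""
    lung = lung_mask.astype(bool)
    n_lung = int(lung.sum())
    if n_lung == 0:
        raise ValueError("lung mask is empty")
    result = {}
    for label, name in FINDINGS.items():
        # Count only findings that fall inside the lung region.
        n_voxels = int(np.count_nonzero((finding_map == label) & lung))
        result[name] = 100.0 * n_voxels / n_lung
    result["total"] = sum(result.values())
    return result


# Toy 4 x 5 "scan": every voxel is lung, a few carry findings.
lung_mask = np.ones((4, 5), dtype=bool)
finding_map = np.zeros((4, 5), dtype=int)
finding_map[0, 0:4] = 1   # 4 ground-glass voxels
finding_map[1, 0:2] = 2   # 2 consolidation voxels
involvement = involvement_percent(lung_mask, finding_map)
print(involvement)
```

Applying the same function to serial studies of one patient would give the per-finding trend over time that the text refers to.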

Complications of COVID-19 are not limited to acute lung parenchymal disease. These patients have coagulopathies and are at increased risk for pulmonary embolism. Diffuse vascular inflammation can result in pericarditis and pericardial effusions. Renal and brain manifestations have been described by many authors and are increasingly recognized clinically in COVID-19 patients. Long term lung manifestations will not be apparent for many months or years, but there is the potential that these patients will develop higher rates of Chronic Obstructive Pulmonary Disease (COPD) such as emphysema, chronic bronchitis and asthma than the general population. Objective metrics for assessment and follow-up of these complications of the disease would be very valuable from a clinical perspective.

1.2. AI for COVID-19

The extraordinarily rapid spread of the COVID-19 pandemic has demonstrated that a new disease entity with a subset of relatively unique characteristics can pose a major new clinical challenge that requires new diagnostic tools in imaging. The typical developmental cycle and large number of studies required to develop AI algorithms for various disease entities is much too long to respond effectively to produce these software tools on demand. This is complicated by the fact that the disease can have different manifestations (perhaps due to different strains) in different regions of the world. This suggests the strong need to develop software more rapidly, perhaps using transfer learning from existing algorithms, to train on a relatively limited number of cases, and to train on multiple datasets in various locations that may not be able to be easily combined due to privacy and security issues. It also suggests that we determine how to balance regulatory requirements for adequate testing of the safety and efficacy of these algorithms against the need to have them available in a timely manner to impact clinical care.

AI technology, in particular deep learning image analysis tools, can potentially be developed to support radiologists in the triage, quantification, and trend analysis of the data. AI solutions have the potential to analyze multiple cases in parallel to detect whether chest CT or chest radiography reveals any abnormalities in the lung. If the software suggests a significantly increased likelihood of disease, the case can be flagged for further review by a radiologist or clinician for possible treatment/quarantine. Such systems, or variations thereof, once verified and tested can become key contributors in the detection and control of patients with the virus.

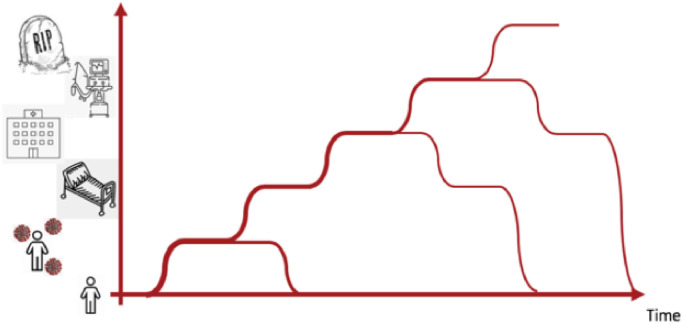

Another major use of AI is in predictive analytics: foreseeing events for timely intervention. Predictive AI can potentially be applied at three scales: the individual scale, the hospital scale, and the societal scale. An individual may go through various transitions from healthy to potentially contaminated, symptomatic, etc., as depicted in Fig. 2. At the individual level, we may use AI for computing risk of contamination based on location, risk of severe COVID-19 based on co-morbidities and health records, risk of Acute Respiratory Distress Syndrome (ARDS) and risk of mortality to help guide testing, intervention, hospitalization and treatment. Quantitative CT or chest radiographic imaging may play an important role in risk modeling for the individual, and especially in the risk of ARDS. At the hospital level, AI for imaging may for example be used for workflow improvement by (semi-)automating radiologists' interpretations, and by forecasting the future need for ICU and ventilator capacity. At the societal level, AI may be used in forecasting hospital capacity needs and may be an important measure to aid in assessing the need for lockdowns and re-openings.

Fig. 2.

The trajectory of an individual patient may be staged into contamination, symptoms, hospitalization, ventilation, and death.

So far, we have here concentrated on disease diagnosis and management, but imaging with AI may also have a role to play in relation to late effects like neurological, cardiovascular, and respiratory damage.

Before entering the discussion on specific usages of AI to ease the burden of the pandemic, we briefly describe the standard procedure of creating an AI solution in order to clarify the nomenclature. The standard way of developing Deep Learning algorithms and systems entails several phases (Greenspan et al., 2016; Litjens et al., 2017): I. Data collection, in which a large number of data samples needs to be collected from predefined categories; expert annotations are needed for ground-truthing the data; II. Training phase, in which the collected data are used to train network models. Each category needs to be represented well enough so that the training can generalize to new cases that will be seen by the network in the testing phase. In this learning phase, the large number of network parameters (typically on the order of millions) are automatically determined; III. Testing phase, in which an additional set of cases not used in training is presented to the network and the output of the network is tested statistically to determine its success of categorization. Finally, IV, the software must be validated on independent cohorts to ensure that performance characteristics generalize to unseen data from other imaging sources, demographics, and ethnicity.
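The separation between training data, test data and independent validation cohorts in phases II to IV can be sketched as follows. This is only an illustration under stated assumptions: the record fields (`patient_id`, `site`, `scan`), the function name `split_cohort` and the 20% test fraction are hypothetical. The point it shows is that the split is made per patient rather than per image, and that an entire imaging site is held out as the independent cohort.

```python
import random


def split_cohort(records, external_sites, test_fraction=0.2, seed=0):
    """Split records into train, test and external-validation sets.

    All scans of a patient stay in the same set, and records from
    `external_sites` are never used for training or internal testing.
    """
    external = [r for r in records if r["site"] in external_sites]
    internal = [r for r in records if r["site"] not in external_sites]

    patients = sorted({r["patient_id"] for r in internal})
    random.Random(seed).shuffle(patients)
    n_test = max(1, round(test_fraction * len(patients)))
    test_ids = set(patients[:n_test])

    train = [r for r in internal if r["patient_id"] not in test_ids]
    test = [r for r in internal if r["patient_id"] in test_ids]
    return train, test, external


# Toy cohort: 10 patients with 2 scans each at site A, 3 patients at site B.
records = [{"patient_id": f"A{p}", "site": "A", "scan": s}
           for p in range(10) for s in range(2)]
records += [{"patient_id": f"B{p}", "site": "B", "scan": 0} for p in range(3)]

train, test, external = split_cohort(records, external_sites={"B"})
print(len(train), len(test), len(external))
```

Splitting by patient avoids the situation where near-identical slices of the same scan appear in both training and testing, which would inflate the measured performance.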

In the case of a new disease, such as the coronavirus, datasets are just now being identified and annotated. There are very limited data sources as well as limited expertise in labeling the data specific to this new strain of the virus in humans. Accordingly, it is not clear that there are enough examples to achieve clinically meaningful learning at this early stage of data collection, despite the increasingly critical importance of this software. Solutions to this challenge that may enable rapid development include the combination of several technologies. Transfer learning utilizes pretraining on other, statistically similar data. In the general domain of computer vision, ImageNet has been used for this purpose (Donahue et al., 2014). In the case of COVID-19 this may be provided by existing databases of annotated images of patients with other lung infections. Data augmentation is a technique used from the beginning of applying convolutional neural networks (CNNs) to imaging data (LeCun et al., 1989), in which data are transformed to provide extra training data. Normally rotations, reflections, scaling or even group actions beyond the affine group can be explored. Other technologies include semi-supervised learning and weak learning when labels are noisy and/or missing (Cheplygina et al., 2019). Thus, the underlying approach to enable rapid development of new AI-based capabilities is to leverage the ability to modify and adapt existing AI models and combine them with initial clinical understanding to address the new challenges and new disease entities, such as COVID-19.
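The rotation and reflection augmentations mentioned above can be sketched in a few lines. The example below generates the eight variants of a 2D image obtained by combining 90-degree rotations with a left-right flip; the function name `augment` is hypothetical, and whether reflections are anatomically acceptable for a given task (for instance, they swap left and right lung) is a judgment the sketch does not address.

```python
import numpy as np


def augment(image: np.ndarray) -> list:
    """Return the 8 rotation/reflection variants of a 2D image."""
    variants = []
    for k in range(4):
        rotated = np.rot90(image, k)          # rotate by k * 90 degrees
        variants.append(rotated)
        variants.append(np.fliplr(rotated))   # mirrored copy of that rotation
    return variants


# Toy 3 x 3 "image" with distinct pixel values.
image = np.arange(9).reshape(3, 3)
variants = augment(image)
print(len(variants), "augmented copies from one image")
```

Scaling and more general transformations would typically be applied with an image-processing library and are left out here to keep the sketch self-contained.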

2. AI for detection, management, and prediction in COVID-19

In this Section we briefly review three possible system developments: AI systems for detection and characterization of disease, AI systems for measuring disease severity and patient monitoring, and AI systems for predictive modeling. Each category will be reviewed briefly and a specific system will be described with a focus on the AI based challenges and solutions.

2.1. AI for detection and diagnosis

The vast majority of efforts for the diagnosis of COVID-19 have been focused on detecting unique injury patterns related to the infection. Automated recognition of those patterns became an ideal challenge for the use of CNNs trained on the appearance of those patterns.

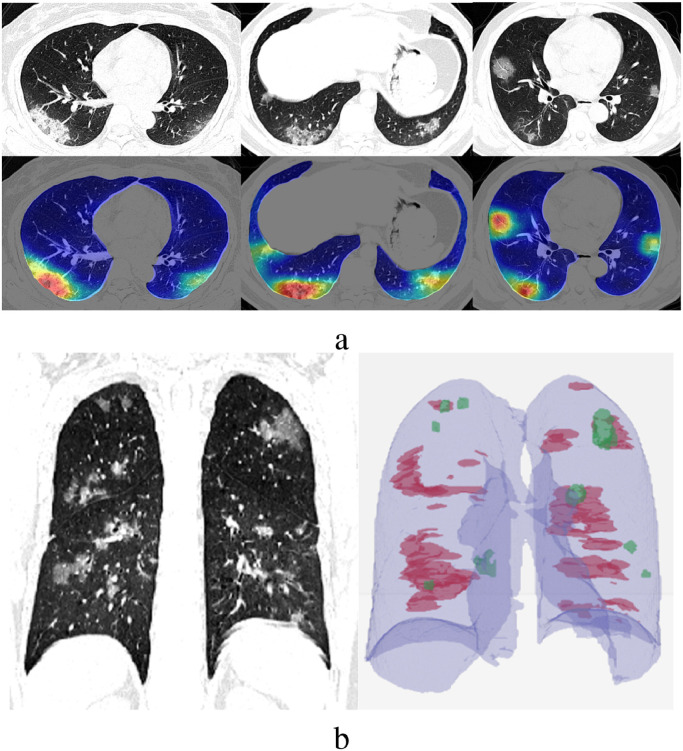

One example of a system for COVID-19 detection and analysis is shown in Fig. 3, which presents an overview of the analysis conducted in Gozes et al. (2020a). In general, as is shown here, automated solutions comprise several components, each based on a network model that focuses on a specific task. In the presented example, both 3D and 2D analyses are conducted in parallel. 3D analysis of the imaging studies is utilized for detection of nodules and focal opacities using nodule-detection algorithms, with modifications to detect ground-glass (GG) opacities. A 2D analysis of each slice of the case is used to detect and localize COVID-19 diffuse opacities. If we focus on the 2D analysis, we again see that multiple steps are usually defined. The first step is the extraction of the lung area as a region of interest (ROI) using a lung segmentation module. The segmentation step removes image portions that are not relevant for the detection of within-lung disease. Within the extracted lung region, a COVID-19 detection procedure is conducted, utilizing one of a variety of possible schemes and corresponding networks. For example, this step can be a procedure for (undefined) abnormality detection, or a specific pattern learning task. In general, a classification neural network (COVID-19 vs. not COVID-19) is a key component of the solution. Such networks, which are mostly CNN based, enable the localization of COVID-19 manifestations in each selected 2D slice, in what have become known as “heat maps”.

Fig. 3.

System architecture example for automatic CT image analysis of COVID-19.
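The 2D branch described above (lung ROI extraction followed by per-slice detection and heat maps) can be sketched schematically. This is not the implementation of Gozes et al. (2020a): `slice_model` is a hypothetical stand-in for a trained CNN that returns a per-pixel heat map in [0, 1], the lung masks are assumed to come from a separate segmentation module, and the 0.5 threshold is arbitrary.

```python
import numpy as np


def analyze_slices(volume, lung_masks, slice_model, threshold=0.5):
    """Run a per-slice detector inside the lung ROI and flag suspicious slices.

    volume      : array (n_slices, H, W) of image intensities
    lung_masks  : boolean array of the same shape (True inside the lungs)
    slice_model : callable mapping one 2D slice to a heat map in [0, 1]
    """
    heatmaps = np.zeros(volume.shape, dtype=float)
    for i, (image, mask) in enumerate(zip(volume, lung_masks)):
        roi = np.where(mask, image, 0.0)        # suppress everything outside the lungs
        heatmaps[i] = slice_model(roi) * mask   # keep responses inside the lungs only
    slice_scores = heatmaps.reshape(len(volume), -1).max(axis=1)
    flagged = np.flatnonzero(slice_scores >= threshold)
    return heatmaps, slice_scores, flagged


def dummy_model(roi):
    """Placeholder detector: uses the clipped intensity as the heat map."""
    return np.clip(roi, 0.0, 1.0)


# Toy volume: 3 slices of 4 x 4 pixels, lungs occupy the central 2 x 2 block.
volume = np.zeros((3, 4, 4))
lung_masks = np.zeros((3, 4, 4), dtype=bool)
lung_masks[:, 1:3, 1:3] = True
volume[1, 1, 1] = 0.9   # bright spot inside the lung on slice 1
volume[2, 0, 0] = 0.9   # bright spot outside the lung on slice 2

heatmaps, slice_scores, flagged = analyze_slices(volume, lung_masks, dummy_model)
print("flagged slices:", flagged.tolist())
```

The toy case shows why the segmentation step matters: the bright spot outside the lung on slice 2 is ignored, and only slice 1 is flagged.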

To provide a complete review of the case, both the 2D and 3D analysis results can be merged. Several quantitative measurements and output visualizations can be used, including per slice localization of opacities, as in Fig. 4(a), and a 3D volumetric presentation of the opacities throughout the lungs, as shown in Fig. 4(b), which presents a 3D visualization of all GG opacities.

Fig. 4.

(a) 2D visualization of network heatmap results; (b) Visualization of 3D results (Green - Focal GG Opacities, Red - Global Diffuse Opacities). Figs. 3 and 4 from Gozes et al. (2020a).

Several studies have shown the ability to segment and classify the extracted lesions using neural networks to provide a diagnostic performance that matches a radiologist rating (Zhang et al., 2020; Bai et al., 2020). In Zhang et al. (2020), 4695 manually annotated CT slices were used for seven classes, including background, lung field, consolidation (CL), ground-glass opacity (GGO), pulmonary fibrosis, interstitial thickening, and pleural effusion. After a comparison between different semantic segmentation approaches, they selected DeepLabv3 as their segmentation backbone (Chen et al., 2017). The diagnostic system was based on a neural network fed by the lung-lesion maps. The system was designed to distinguish normal subjects from those with common pneumonia and those with COVID-19 specific pneumonia. Their results show a COVID-19 diagnostic accuracy of 92.49% tested in 260 subjects. In Bai et al. (2020), a direct classification of COVID-19 specific pneumonia versus other etiologies was performed using an EfficientNet B5 network (Tan and Le, 2019) followed by a two-layer fully connected network to pool the information from multiple slices and provide a patient-level diagnosis. This system yielded a 96% accuracy in a testing set of 119 subjects compared to an 85% average accuracy for six radiologists. These two examples illustrate the power of AI to perform at a very high level that may augment the radiologist, when designed and tested for a very narrow and specific task within a de-novo diagnostic situation. Time delay in COVID-19 testing using RT-PCR can be overcome with integrative solutions. Augmented testing using CT, clinical symptoms, and standard white blood cell (WBC) panels has been proposed in Mei et al. (2020). The authors show that their AI system, which integrates both sources of information, is superior to an imaging-alone CNN model as well as to a machine learning model based on non-imaging information for the diagnosis of COVID-19. Integrative approaches can overcome the lack of diagnostic specificity of CT imaging for COVID-19 (Rubin et al., 2020).

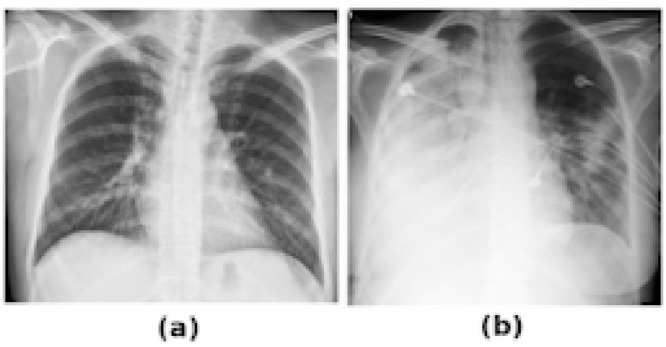

It is well understood that chest radiographs (CXR) have lower resolution and contain much less information than their CT counterparts. For example, for COVID-19 patients, the lungs may be so severely infected that they become fully opacified, obscuring details on an x-ray and making it difficult to distinguish between pneumonia, pulmonary edema, pleural effusions, alveolar hemorrhage, or lung collapse (Fig. 5). Still, many countries are using CXR information for initial decision support as well as throughout the patient hospitalization and treatment process (Yang et al., 2020).

Fig. 5.

(a) Normal CXR with lungs clearly visible. (b) Severely infected patient with dominant opacities making the lung boundaries hardly visible.

Deep learning pipelines for CXR opacities and infiltration scoring exist. In most publications seen to date, researchers utilize existing public pneumonia datasets, which were available prior to the spread of the coronavirus, to develop network solutions that learn to detect pneumonia on a CXR. In Selvan et al. (2020), an attempt to solve the issue of the compact lungs is presented using variational imputation. A deep learning pipeline based on variational autoencoders (VAE) has shown in pilot studies > 90% accuracy in separating COVID-19 patients from other patients with lung infections, both bacterial and viral. A systematic evaluation of one such system has demonstrated comparable performance to a chest radiologist (Murphy et al., 2020). This demonstrates the capability of recognizing COVID-19 associated patterns using the CXR data. We view these results as preliminary, and to be confirmed with a more rigorous experimental setup which includes access to COVID-19 and other infections from the same sources with identical acquisition technology, time-window, ethnicity, demographics, etc. Such rigorous experiments are critical in order to assess the clinical relevance of the developed technology.

2.2. AI for patient management

In this Section we focus on the use of AI for hospitalized patients. Image analysis tools can support measurement of the disease extent within the lungs, thus generating quantification for the disease that can serve as an image-based biomarker. Such a biomarker may be used to assess relative severity of patients in the hospital wards, enable tracking of disease severity over time, and thus assist in the decision-making process of the physicians handling the case. One such biomarker, termed the “Corona score”, was recently introduced in Gozes et al. (2020a, 2020b). The Corona score is a measure of the extent of opacities in the lungs. It can be extracted in CT and in CXR cases.

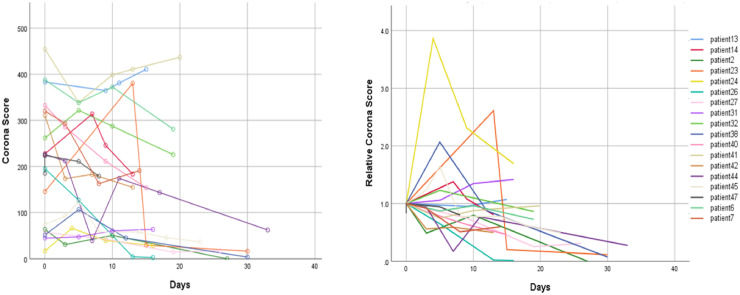

Fig. 6 presents a plot of Corona-score measurements per patient over time, in CT cases. Using the measure, we can assess relative severity of the patients (left) as well as extract a model for disease burden over the course of treatment (right). Additional very valuable information on characterization of disease manifestation can be extracted as well, such as locations of opacities within the lungs, opacity burden within specific lobes of the lungs (using a lung lobe segmentation module) and analysis of the texture of the opacities using classification of patches extracted from detected COVID-19 areas (using a patch-based classification module). These characteristics are important biomarkers with added value for patient monitoring over time.

Fig. 6.

Tracking of patient’s disease progression over time using Corona Score (Left) and Relative Corona Score (Right). Day 0 corresponds to 1-4 days following first signs of the virus. Figure from Gozes et al. (2020a).
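To illustrate how a severity score can be tracked over time, the sketch below normalizes each patient's score by its value at the first time point. The exact definition of the Relative Corona Score is not given in this text, so dividing by the baseline is an assumption made for this example only, as are the function name and the score values.

```python
def relative_scores(scores_by_day: dict) -> dict:
    """Express each score as a multiple of the earliest (baseline) score.

    Assumption: 'relative' means division by the first available
    measurement; the source does not define the normalization.
    """
    if not scores_by_day:
        raise ValueError("no measurements given")
    days = sorted(scores_by_day)
    baseline = scores_by_day[days[0]]
    if baseline <= 0:
        raise ValueError("baseline score must be positive")
    return {day: scores_by_day[day] / baseline for day in days}


# Made-up scores for one patient at days 0, 5 and 10.
patient_scores = {0: 200.0, 5: 300.0, 10: 100.0}
relative = relative_scores(patient_scores)
print(relative)
```

A value above 1 then indicates a larger opacity burden than at baseline and a value below 1 indicates recovery relative to baseline, which makes patients with different initial burdens comparable on one plot.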

2.3. AI - based predictive modeling: combining the image with the clinical

COVID-19 lung infections are diagnosed and monitored with CT or CXR imaging where opacities, their type and extent, may be quantified. The picture of radiological findings in COVID-19 patients is complex (Wong et al., 2020) with mixed patterns: ground-glass opacities, opacities with a rounded morphology, peripheral distribution of disease, consolidation with ground-glass opacities, and the so-called crazy-paving pattern. First reports of longitudinal developments monitored by CXR (Shi et al., 2020b) indicate that CXR findings occur before the need for clinical intervention with oxygen and/or ventilation. This fosters the hypothesis that CXR imaging and quantification of findings are valuable in the risk assessment of the individual patient developing severe COVID-19.

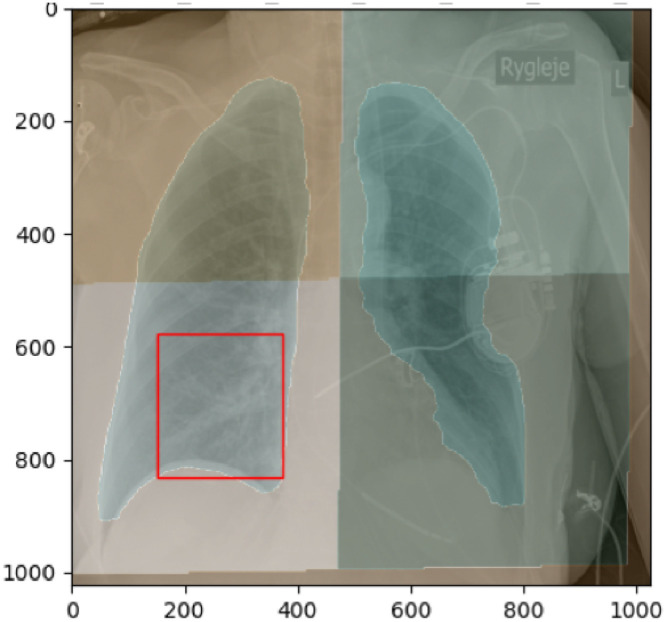

In the Capital Region of Denmark, it is standard practice to acquire a CXR for COVID-19 patients. The clinical workflow during the COVID-19 pandemic does not in general allow for manual quantitative scoring of radiographs for productivity reasons. Making use of the CXR already recorded during real time risk assessment therefore requires automated methods for quantification of image findings. Several scoring systems for the severity of COVID-19 lung infection adapted from general lung infection schemes have been proposed (Wong et al., 2020; Shi et al., 2020b; Cohen et al.). Above, in Fig. 4, it is shown how opacities may be located in CT images. Similar schemes may be used for regional opacity scoring in CXR, as shown in Fig. 7.

Fig. 7.

Regional opacity or infiltration scoring. Here depicted in 4 quadrants but scoring systems up to 12 regions exist using 3 vertical and 2 horizontal zones of each lung being sensitive also to the peripheral patterns of COVID-19.
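A regional score of this kind can be sketched as the fraction of lung pixels marked as opacity within each cell of a grid. The example below is a simplification: it lays a single grid over the whole image rather than dividing each lung separately as the published scoring systems do, and the function name and the binary masks are assumptions for illustration.

```python
import numpy as np


def regional_opacity_scores(opacity_mask, lung_mask, n_rows=2, n_cols=2):
    """Fraction of lung pixels flagged as opacity in each grid cell."""
    opacity = np.asarray(opacity_mask, dtype=bool)
    lung = np.asarray(lung_mask, dtype=bool)
    height, width = lung.shape
    row_edges = np.linspace(0, height, n_rows + 1).astype(int)
    col_edges = np.linspace(0, width, n_cols + 1).astype(int)

    scores = np.zeros((n_rows, n_cols))
    for r in range(n_rows):
        for c in range(n_cols):
            rows = slice(row_edges[r], row_edges[r + 1])
            cols = slice(col_edges[c], col_edges[c + 1])
            n_lung = lung[rows, cols].sum()
            if n_lung > 0:
                n_opaque = (opacity[rows, cols] & lung[rows, cols]).sum()
                scores[r, c] = n_opaque / n_lung
    return scores


# Toy 4 x 4 radiograph: all pixels are lung; the upper-left quadrant is
# fully opacified and the lower-right quadrant has one opaque pixel.
lung_mask = np.ones((4, 4), dtype=bool)
opacity_mask = np.zeros((4, 4), dtype=bool)
opacity_mask[0:2, 0:2] = True
opacity_mask[3, 3] = True

quadrant_scores = regional_opacity_scores(opacity_mask, lung_mask)
print(quadrant_scores)
```

Changing `n_rows` and `n_cols` gives finer grids, and the flattened score array can serve as a per-region feature vector for downstream models.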

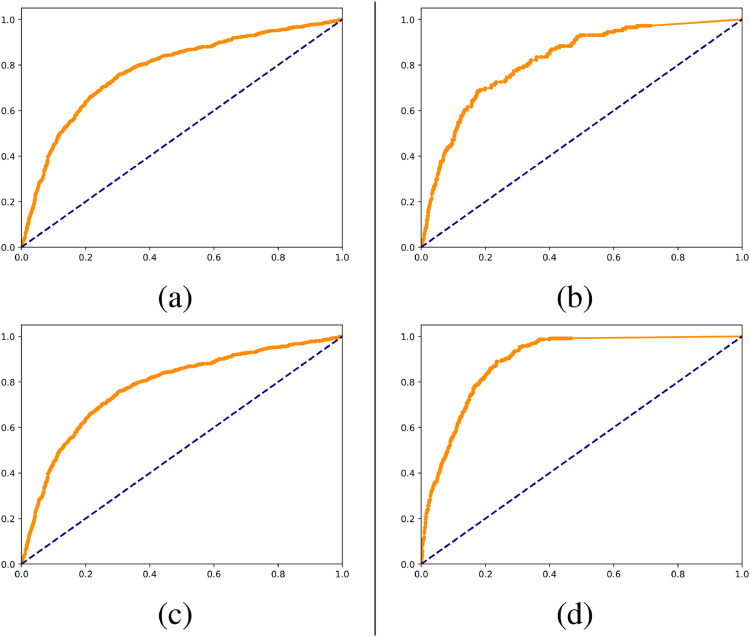

For the administration and risk profiling of the individual patient, imaging does not tell the full story. Important risk factors include age, BMI, and co-morbidities (especially diabetes, hypertension, asthma, chronic respiratory or heart diseases) (Jordan et al., 2020). Combining imaging with this type of information from the EHR and with data representing the trajectory of change over time enhances the ability to determine and predict the stage of disease. An early indication is that CXRs contribute significantly to the prediction of the probability for a patient to be on a ventilator. Here we briefly summarise the patient trajectory prognosis setup. In preliminary studies on the cohort from the Capital and Zealand Regions of Denmark, we have combined clinical information from electronic health records (EHR), defining variables relating to vital parameters, comorbidities, and other health parameters, with imaging information. Modeling was performed using a simple random forest implementation in a 5-fold cross-validation fashion. Fig. 8 illustrates the AUC for prediction of outcome in terms of hospitalisation, requirement for ventilator, admission to intensive care unit, and death, for 2866 COVID-19 positive subjects from the Zealand and Capital Regions of Denmark. These are preliminary unconsolidated results for illustrative purposes. However, they support the feasibility of an algorithm to predict severity of COVID-19 manifestations early in the course of the disease.

Fig. 8.

Receiver operating characteristic (ROC) curves for models trained on data available at time of positive PCR test from electronic health records of 2866 patients from eastern Denmark. (a) ROC for the endpoint of hospitalization, AUC=0.81. (b) ROC for the endpoint of admission to ICU, AUC=0.83. (c) ROC for the endpoint of receiving ventilation, AUC=0.87. (d) ROC for the endpoint of lethal outcome, AUC=0.89.

The combination of CXR into these prognostic tools has been performed by including a number of quantitative features per lung region as a feature vector in the random forest described above.
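The setup described above, a random forest evaluated with 5-fold cross-validation on clinical variables concatenated with per-region imaging features, can be sketched with scikit-learn. Everything about the data here is an assumption: the features and outcomes are synthetic, the feature counts (5 clinical, 4 imaging) and forest size are arbitrary, and the resulting AUC says nothing about the Danish cohort.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import roc_auc_score
from sklearn.model_selection import StratifiedKFold, cross_val_predict

rng = np.random.default_rng(0)
n_patients = 400

# Synthetic stand-ins for EHR variables and per-region CXR opacity scores.
clinical = rng.normal(size=(n_patients, 5))
imaging = rng.uniform(size=(n_patients, 4))

# Synthetic outcome that depends on one clinical variable and mean opacity.
risk = 1.5 * clinical[:, 0] + 3.0 * imaging.mean(axis=1) - 1.5
outcome = (rng.uniform(size=n_patients) < 1.0 / (1.0 + np.exp(-risk))).astype(int)

# Imaging features are simply appended to the clinical feature vector.
features = np.hstack([clinical, imaging])

model = RandomForestClassifier(n_estimators=200, random_state=0)
folds = StratifiedKFold(n_splits=5, shuffle=True, random_state=0)

# Out-of-fold predicted probabilities: each patient is scored by a model
# that did not see that patient during training.
predicted = cross_val_predict(model, features, outcome, cv=folds,
                              method="predict_proba")[:, 1]
auc = roc_auc_score(outcome, predicted)
print(f"5-fold cross-validated AUC: {auc:.2f}")
```

Repeating the evaluation with `clinical` alone in place of `features` would be one simple way to check how much the imaging features add.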

3. AI for upstream and downstream management of COVID-19

Imaging has played a unique role in the clinical management of the COVID-19 pandemic. Public health authorities of many affected countries have been forced to implement severe mitigation strategies to avoid the wide community spread of the virus (Parodi and Liu, 2020). Mitigation strategies put forth have focused on acute disease management and the plethora of automated imaging solutions that have emerged in the wake of this crisis have been tailored toward this emergent need. Until effective therapy is proven to prevent the widespread dissemination of the disease, mitigation strategies will be followed by more focused efforts and containment approaches aimed at avoiding the high societal cost of new confinement policies. In that regard, imaging augmented by AI can also play a crucial role in providing public health officials with pandemic control tools. Opportunities in both upstream infection management and downstream solutions related to disease resolution, monitoring of recurrence and health “security” will be emerging in the months to come as economies reopen to normal life.

Pandemic control measures in the pre-clinical phase of the infection may seek to identify those subjects that are more susceptible to the disease due to their underlying risk factors that lead to the acute phase of COVID-19 infections. Several epidemiological factors, including age, obesity, smoking, chronic lung disease, hypertension, and diabetes, have been identified as risk factors (Petrilli et al., 2020). However, there is a need to understand further risk factors that can be revealed by image-based studies. Imaging has been shown to be a powerful source of information to reveal latent traits that can help identify homogeneous subgroups with specific determinants of disease (Young et al., 2018). This kind of approach could be deployed in retrospective databases of COVID-19 patients with pre-infection imaging to understand why some subjects seem to be much more prone to progression of the viral infection to acute pulmonary inflammation. The identification of high-risk populations by imaging could enable targeted preventive measures and precision medicine approaches that could catalyze the development of curative and palliative therapies. Identification of molecular pathways in those patients at a higher risk may be crucial to catalyze the development of much needed host-targeting therapies.

The resolution of the infection has been shown to involve recurrent pulmonary inflammation with vascular injury that has led to post-intensive care complications (Ackermann et al., 2020). Detection of microembolisms is a crucial task that can be addressed by early diagnostic methods that monitor vascular changes related to vascular pruning or remodeling. Methods developed for pulmonary embolism detection and clot burden quantification could be repurposed for this task (Huang et al., 2020).

Another critical aspect of controlling the pandemic is the need to monitor infection recurrence, as the immunity profile for SARS-CoV-2 is still unknown (Kirkcaldy et al., 2020). Identifying early pulmonary signs that are compatible with COVID-19 infection could be an essential tool to monitor subjects who may relapse into an acute episode. AI methods have been shown to be able to recognize COVID-19-specific pneumonia on radiographic images (Murphy et al., 2020). The accessibility and potential portability of radiographic equipment in comparison to CT could enable early pulmonary injury screening if enough specificity can be achieved in the early phases of the disease. Eventually, some of those tools might facilitate the implementation of health security screening solutions that revolve around the monitoring of individuals who might present compatible symptoms. Although medical imaging solutions might have a limited role in this space, other kinds of non-clinical imaging solutions such as thermal imaging may benefit from solutions that were originally designed in the context of X-ray or CT screening.

3.1. Pandemic control using free Apps

One of the fascinating aspects that has emerged around the utilization of AI-based imaging approaches to manage the COVID-19 pandemic has been the speed of prototyping imaging solutions and their integration in end-to-end applications that could be easily deployed in a healthcare setting and even in ad hoc makeshift care facilities. This pandemic has shown the ability of deep neural networks to enable the development of end-to-end products based on a model representation that can be executed on a wide range of devices. Another important aspect has been the need for large-scale deployments due to the high incidence of COVID-19 infection. These deployments have been empowered by the use of cloud-based computing architectures and multi-platform web-based technologies. Multiple private and open-source systems have been rapidly designed, tested, and deployed in the last few months. The requirements around the utilization of these systems in the general population for pandemic control are:

• High-throughput: the system needs to have the ability to perform scanning and automated analysis within several seconds if screening is intended.

• Portable: the system might need to reach the community without bringing them to hospital care settings to avoid nosocomial infections.

• Reusable: imaging augmented with AI has emerged as a highly reusable technique with scalable utilization that can adapt to variable demand.

• Sensitive: the system needs to be designed with high sensitivity and specificity to detect early signs of disease.

• Private: systems have to protect patient privacy by minimizing the exchange of information outside of the care setting.

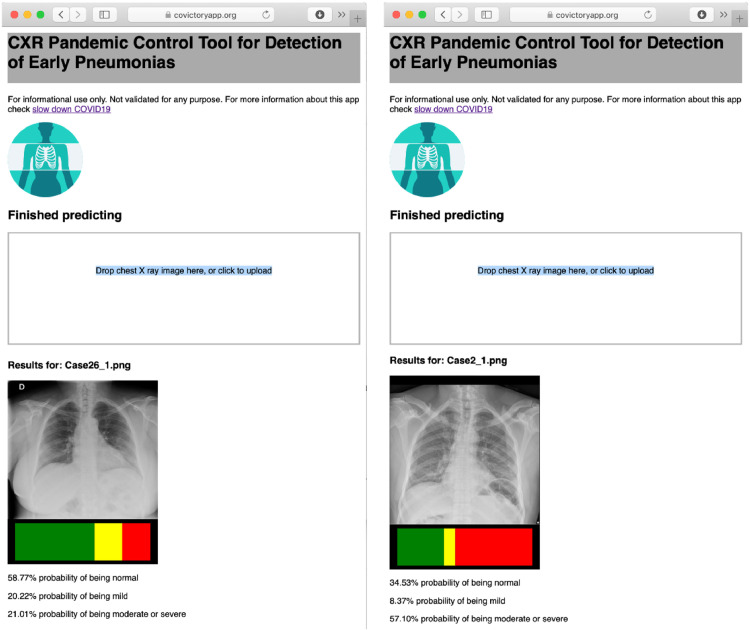

Web-based technologies that provide embedded solutions to deploy neural network systems have emerged as one of the most promising implementations that fulfill those requirements. Multiple public solutions in the context of chest X-ray detection of early pneumonia and COVID-19 compatible pneumonia have been prototyped, as shown in Fig. 9. The Covictory App, part of the slow-down COVID project (www.slowdowncovid19.org), implements a classification neural network for the detection of mild pneumonia as an early risk indicator of radiographic changes compatible with COVID-19. The developers based their system on a network architecture recently proposed for tuberculosis detection that has a very compact and efficient design well suited for deployment on mobile platforms (Pasa et al., 2019). The network was trained on imaging from three major chest X-ray databases: NIH Chest X-ray, CheXpert, and PadChest. The developers sub-classified X-ray studies labeled as pneumonia into mild versus moderate/severe pneumonia by consensus of multiple readers using spark-crowd, an open-source system for consensus building (Rodrigo et al., 2019). Another example is the Coronavirus Xray app, which included public-domain images from COVID-19 patients to classify images into three categories: healthy, pneumonia and COVID-19. Both systems were implemented as static web applications in JavaScript using TensorFlow.js. Although the training was carried out using customized GPU hardware, the deployment of trained models is intrinsically multi-platform and multi-device thanks to the advancement of web-based technologies. Commercial efforts such as CAD4COVID-XRay (https://www.delft.care/cad4covid/) have leveraged prior infrastructure used for the assessment of tuberculosis on X-ray to provide a readily deployable solution.
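The reader-consensus step used to sub-classify pneumonia studies can be illustrated with a simplified sketch. The example below substitutes plain majority voting for the aggregation methods offered by spark-crowd, so it should not be read as the procedure the developers actually ran. The study identifiers, reader labels, and the `consensus_label` helper are hypothetical.

```python
from collections import Counter
from typing import Dict, List, Optional


def consensus_label(votes: List[str], min_agreement: float = 0.5) -> Optional[str]:
    """Return the label chosen by a strict majority of readers, else None."""
    if not votes:
        return None
    label, count = Counter(votes).most_common(1)[0]
    # Require strictly more than `min_agreement` of the readers to agree.
    return label if count / len(votes) > min_agreement else None


# Hypothetical reader annotations for three pneumonia-labeled studies.
readings: Dict[str, List[str]] = {
    "study_001": ["mild", "mild", "moderate/severe"],
    "study_002": ["moderate/severe", "moderate/severe", "moderate/severe"],
    "study_003": ["mild", "moderate/severe"],  # tie: no consensus
}

consensus = {study: consensus_label(votes) for study, votes in readings.items()}
```

Studies without a majority are returned as `None` so that they can be sent back for additional reading rather than being silently assigned a class.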

Fig. 9.

Illustration of a public AI system for COVID-19 compatible pneumonia on chest X-rays from two COVID-19 subjects using the Covictory App (www.covictoryapp.org), with mild pneumonia signs (left) and more severe disease (right).

4. AI as part of a national infrastructure

The COVID-19 crisis has seen the emergence of multiple observational studies to support research into understanding disease risk, monitoring disease trajectory, and developing diagnostic and prognostic tools, based on a variety of data sources including clinical data, samples and imaging data. All these studies share the theme that access to high-quality data is of the essence, and this access has proven to be a challenge. The causes of this challenge to observational COVID-19 research are the same ones that have hampered large-scale data-driven research in the health domain over the last years. Because data collection takes place in different places and different institutes, data, images and samples are fragmented. Moreover, there is a lack of standardization in data collection, which hampers reuse of data. Consequently, the reliability, quality and re-usability of data for data-driven research, including the development and validation of AI applications, is problematic. Finally, depending on the system researchers and innovators are working in, ethical and legal frameworks are often unclear and may sometimes be (interpreted as being) obstructive.

A coordinated effort is required to improve the accessibility of observational data for COVID-19 research. If implemented for COVID-19, it can serve as a blueprint for large, multi-center observational studies in many domains. As such, addressing the COVID-19 challenges also presents us with an opportunity, and in many places we are already observing that hurdles towards multi-center data accessibility are being addressed with more urgency. An example is the call by the European Union for an action to create a pan-European COVID-19 cohort including imaging data.

In the Netherlands, the Health-RI© initiative aims to build a national health data infrastructure to enable the re-use of data for personalized medicine, and similar initiatives exist in other countries. In light of the current pandemic, these initiatives have focused efforts on supporting observational COVID-19 research, with the aim of facilitating access to multi-center data. The underlying principle of these infrastructures is that by definition they will have to deal with the heterogeneous and distributed nature of data collection in the healthcare system. In order for such data to be re-usable, harmonization at the source is required. This calls for local “data stewardship”, in which the different data types, including e.g. clinical, imaging and lab data, need to be collected in a harmonized way, adhering to international standards. Here, the FAIR principles need to be adopted, i.e. data need to be stored such that they are “Findable”, “Accessible”, “Interoperable” and “Reusable” (Wilkinson et al., 2016). For clinical data, it is not only important that the same data are collected (e.g. adhering to the World Health Organization Case Report Form (CRF), often complemented with additional relevant data), but also that their values are unambiguously defined and machine-readable. The use of electronic CRFs (eCRFs) and accompanying software greatly supports this, and large international efforts exist to map observational data to a common data model, including e.g. the Observational Health Data Sciences and Informatics (OHDSI) model. Similarly, the imaging and lab data should be processed following agreed standards. In the Health-RI© implementation, imaging data are pseudonymized using a computational pipeline that is shared between centers. For lab data, standard ontologies such as LOINC can be employed. The COVID-19 observational project will collect not only FAIR metadata describing the content and type of the data, but also data access policies for the data that are available. This will support the data search, request, and access functionalities provided by the platform. An illustration of the data infrastructure in Health-RI© is provided in Fig. 10.
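To make the idea of searchable metadata with attached access policies concrete, the following is a minimal sketch and not the Health-RI© implementation. The `DatasetRecord` fields, the identifiers, the hospital names, and the use of DICOM as the imaging standard are all assumptions chosen for illustration; only the metadata are held centrally, while the data remain at the hospitals.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class DatasetRecord:
    """Metadata only: the data themselves stay at the hospital."""
    identifier: str      # persistent identifier (Findable)
    hospital: str
    data_type: str       # e.g. "clinical", "imaging", "lab"
    standard: str        # standard the data adhere to (Interoperable)
    access_policy: str   # how access is granted (Accessible)


def search(catalogue: List[DatasetRecord], data_type: str) -> List[DatasetRecord]:
    """Return records of the requested data type, sorted by identifier."""
    return sorted(
        (record for record in catalogue if record.data_type == data_type),
        key=lambda record: record.identifier,
    )


# Hypothetical catalogue entries shared by two participating hospitals.
catalogue = [
    DatasetRecord("ds-002", "Hospital B", "imaging", "DICOM", "data access committee"),
    DatasetRecord("ds-001", "Hospital A", "imaging", "DICOM", "data access committee"),
    DatasetRecord("ds-003", "Hospital A", "lab", "LOINC", "data access committee"),
]

imaging_hits = search(catalogue, "imaging")
```

A researcher would use the returned records to see which resources exist and under which policy they can be requested; the request and approval steps themselves are outside this sketch.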

Fig. 10.

Design of the COVID-19 observational data platform. In order for hospitals to link to the data platform, they need to make their clinical, imaging and lab data FAIR. Tools for data harmonization (FAIR-ification) are being shared between institutes. FAIR metadata (and in some cases FAIR data) and access policies are shared with the observational platform. This enables a search tool for researchers to determine what data resources are available at the participating hospitals. These data can subsequently be requested, and if the request is approved by a data access committee, the data will be provided, or information on how the data can be accessed will be shared. In subsequent versions of the data platform, distributed learning will also be supported, so that data can stay at its location.

Next to providing data for the development of AI algorithms, it is important to facilitate their objective validation. In the medical imaging domain, challenges have become very popular to objectively compare performance of different algorithms. In the design of challenges, part of the data needs to be kept apart. It is therefore important that, while conducting efforts to provide access to observational COVID-19 data, we already plan for using part of the data for designing challenges around relevant clinical use cases.
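One way to keep part of the data apart for a challenge is to assign whole patients, rather than individual scans, to a held-out set. The sketch below does this with a deterministic hash of the patient identifier, so the assignment is reproducible across centers without sharing a random seed. It is an illustration under stated assumptions: the function names, the 20% fraction, and the identifier format are hypothetical and are not taken from any existing challenge.

```python
import hashlib
from typing import Dict, List, Tuple


def is_held_out(patient_id: str, test_fraction: float = 0.2) -> bool:
    """Deterministically assign a patient to the held-out challenge set."""
    digest = hashlib.sha256(patient_id.encode("utf-8")).digest()
    # Map the first 8 bytes of the hash to a roughly uniform number in [0, 1].
    bucket = int.from_bytes(digest[:8], "big") / 2**64
    return bucket < test_fraction


def split_scans(
    scans: List[Tuple[str, str]], test_fraction: float = 0.2
) -> Dict[str, List[str]]:
    """Split (patient_id, scan_id) pairs so that no patient spans both sets."""
    parts: Dict[str, List[str]] = {"development": [], "held_out": []}
    for patient_id, scan_id in scans:
        key = "held_out" if is_held_out(patient_id, test_fraction) else "development"
        parts[key].append(scan_id)
    return parts
```

Splitting at the patient level avoids follow-up scans of the same person appearing in both the development and the evaluation data, which would otherwise inflate reported performance.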

During the pandemic, setting up such an infrastructure from scratch will not lead to timely implementation. Health-RI© was already in place prior to the pandemic, and some of its infrastructure could be adjusted to start building a COVID-19 observational data platform. In Denmark, a similar initiative was not in place. However, in eastern Denmark, the Capital and Zealand Regions share a common data platform across all hospitals, with a common EHR and a PACS in each region, covering in total 18 hospitals and 2.6 million citizens, which makes data collection and curation relatively simple. At continental scale, solutions are being created but will likely not be in place during the first wave of the pandemic. The hurdles to overcome are legal, political, and technical. Access to un-consented data from patients follows different legal paths in different countries. In the UK, the Department of Health and Social Care issued a notice on March 20, 2020 simplifying the legal approval of COVID-19 data processing. In Denmark, the usual regulations and standards were maintained, but the authorities made an effort to fast-track permissions through the usual bodies. As access to patient information must be restricted, not every researcher with any research goal can be granted access. Without governance in place prior to an epidemic, access will be granted on an ad hoc, first-come-first-served basis, not necessarily leading to the most efficient data analysis. Finally, data are hosted in many different IT systems, and the two major technical challenges lie in bringing data to a common platform and in having a legally compliant (in the EU, GDPR-compliant) technical setup for collaboration. Building such infrastructure with proper security and data handling agreements in place is complex and will lead to substantial delays if not in place prior to the epidemic. In the Netherlands, the Health-RI© platform was in place. In Denmark, the efforts have been constrained to the eastern part of the country, which shares a common EHR and PACS and has infrastructure in place for compliant data sharing at Computerome. At a European scale, the European Commission launched the European COVID-19 Data Platform on April 20, 2020, building on existing hardware infrastructure. This was followed by a call for establishing a pan-European COVID-19 cohort; the funding decision is expected in August 2020. Even though a tremendous effort has been made and the usual approvals of access and funding have been fast-tracked, proper infrastructures have not been created in time for the first wave in Europe.

5. Discussion

The current COVID-19 pandemic offers us historic challenges but also opportunities. It is widely believed that a substantial percentage of the (as of this writing) 12.5 million confirmed cases and 561,000 deaths and trillions of dollars of economic losses would have been avoided with adequate identification of those with active disease and subsequent tracking of location of cases and prediction of emerging hotspots. Imaging has already played a major role in diagnosis and tracking and prediction of outcomes and has the potential to play an even greater role in the future. Automated computer based identification of probability of disease on chest radiographs and thoracic CT combined with tracking of disease could have been utilized early on in the development of cases, first in Wuhan, then other areas of China and Asia, and subsequently Europe and the United States and elsewhere. This could have been utilized to inform epidemiologic policy decisions as well as hospital resource utilization and ultimately, patient care.

This pandemic also marked, perhaps for the first time in history, a disease with relatively unique imaging and clinical characteristics emerging and spreading globally faster than the knowledge needed to recognize, diagnose and treat it. It also created a unique set of challenges and opportunities for the machine learning/AI community to work side by side and in parallel with clinical experts to rapidly train and deploy computer algorithms to address an emerging disease entity. This required a combination of advanced techniques, such as the use of weakly annotated schemes to train models with relatively small amounts of training data, which became widely available only recently, many months after the initial outbreak of disease.

The imaging community as a whole has demonstrated that extremely rapidly developed AI software using existing algorithms can achieve high accuracy in detection of a novel disease process such as COVID-19 as well as provide rapid quantification and tracking. The majority of research and development has focused on pulmonary disease with developers using standard Chest-CT DICOM imaging data as input for algorithms designed to automatically detect and measure lung abnormalities associated with COVID-19. The analysis includes automatic detection of involved lung volumes, automatic measurement of disease as compared to overall lung volume and enhanced visualization techniques that rapidly depict which areas of the lungs are involved and how they change over time in an intuitive manner that can be clinically useful.
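The measurement of disease relative to overall lung volume can be sketched as a ratio of voxel counts, assuming that binary lung and lesion segmentation masks are already available on the same voxel grid. The function name and the toy masks below are hypothetical; with uniform voxel size the voxel volume cancels out, so counts are sufficient.

```python
from typing import Sequence


def involvement_percent(lung_mask: Sequence[int], lesion_mask: Sequence[int]) -> float:
    """Percentage of lung voxels that are also labeled as lesion."""
    if len(lung_mask) != len(lesion_mask):
        raise ValueError("masks must have the same number of voxels")
    lung_voxels = sum(1 for voxel in lung_mask if voxel)
    if lung_voxels == 0:
        raise ValueError("lung mask is empty")
    # Count lesion voxels only where they fall inside the lung.
    involved = sum(
        1 for lung, lesion in zip(lung_mask, lesion_mask) if lung and lesion
    )
    return 100.0 * involved / lung_voxels


# Toy flattened masks for one scan (1 = voxel belongs to the structure).
lung = [0, 1, 1, 1, 1, 1, 1, 1, 1, 0]
lesion = [0, 0, 0, 1, 1, 0, 0, 0, 0, 1]  # the last voxel lies outside the lung
score = involvement_percent(lung, lesion)  # 2 of 8 lung voxels -> 25.0
```

Computing the same score on successive scans of a patient gives a simple quantity to track over time, in the spirit of the monitoring described above.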

A variety of manuscripts describing automated detection of COVID-19 cases have been recently published. When reviewing these manuscripts one can see the following interesting trend: all focus on one of the several key tasks, as defined herein. Each publication has a unique system design that contains a set of network models, or a comparison across models, and the results are all very strong. The compelling results, such as the ones presented herein, may lead us to conclude that the task is solved; but is this the correct conclusion? It seems that the detection and quantification tasks are in fact solvable with our existing imaging analysis tools. Still, there are several data-related issues of which we need to be aware. Experimental evidence is presented on datasets of hundreds of cases, and we need to move to real-world settings, in which we will start exploring thousands of cases and more, with large variability. Our systems to date focus on detection of abnormal lungs in a biased scenario of the pandemic in which there is a very high prevalence of patients presenting with the disease. Once the pandemic declines substantially, the need will immediately shift to detecting COVID-19 among a wide variety of diseases including other lung inflammatory processes, occupational exposures, drug reactions, and neoplasms. In that future, in which the prevalence of disease is lower, will our solutions that work currently be sensitive enough, without introducing too many false positives? That is the crux for many of the current studies, which have tested the different AI solutions within a very narrow diagnostic scope.
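The effect of falling prevalence on false positives follows directly from Bayes' rule and can be made concrete with a short worked example. The operating point used below (90% sensitivity and 90% specificity) is a hypothetical illustration and is not taken from any of the systems discussed in this paper.

```python
def positive_predictive_value(
    sensitivity: float, specificity: float, prevalence: float
) -> float:
    """Bayes' rule: probability of disease given a positive result."""
    true_positives = sensitivity * prevalence
    false_positives = (1.0 - specificity) * (1.0 - prevalence)
    return true_positives / (true_positives + false_positives)


# Hypothetical operating point evaluated at three prevalence levels.
for prevalence in (0.50, 0.10, 0.01):
    ppv = positive_predictive_value(0.90, 0.90, prevalence)
    print(f"prevalence {prevalence:.0%}: PPV {ppv:.1%}")
```

With these assumed numbers, the positive predictive value falls from 90% at a prevalence of 50% to 50% at a prevalence of 10%, and to roughly 8% at a prevalence of 1%. The same algorithm, unchanged, would then produce about eleven false alarms for every true detection.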

There are many possibilities and promising directions, yet the unknown looms larger than the known. Just as the current pandemic has changed the way many are thinking about distance learning, the practice of telemedicine, and overall safety in a non-socially distanced society, it seems that our current on-the-fly efforts in algorithm development are similarly setting the stage for the future development and deployment of AI. We need to update infrastructure, including methods of communication and of sharing cases and findings, as well as reference databases and algorithms for research, at the local, national and global levels. We need to prove the strengths, build the models and make sure that the steps forward are such that we can continue and expand the use of AI, particularly “just in time” AI.

We believe that imaging is an absolutely vital component of the medical space. For predictive modeling we need to not limit ourselves to just the pixel data but also include additional clinical, patient level information. For this, combined effort among many groups, as well as state and federal level support will result in optimal development, validation, and deployment.

Many argue that we were caught unaware from a communication, testing, treatment and resource perspective with the current pandemic. But deep learning-augmented imaging has emerged as a unique approach that can deliver innovative solutions from conception to deployment in extreme circumstances to address a global health crisis. The imaging community can take lessons learned from the current pandemic and use them to not only be far better prepared for recurrence of COVID-19 and future pandemics and other unexpected diseases, but also use these lessons to advance the art and science of AI as applied to medical imaging in general.

CRediT authorship contribution statement

Hayit Greenspan: Conceptualization, Writing - review & editing. Raúl San José Estépar: Conceptualization, Writing - review & editing. Wiro J. Niessen: Conceptualization, Writing - review & editing. Eliot Siegel: Conceptualization, Writing - review & editing. Mads Nielsen: Conceptualization, Writing - review & editing.

Declaration of Competing Interest

The authors declare the following financial interests/personal relationships which may be considered as potential competing interests: H. Greenspan holds shares in Radlogics Inc. R. San José Estépar holds shares in Quantitative Imaging Solutions LLC. W. Niessen holds shares in Quantib. M. Nielsen holds shares in Biomediq A/S and in Cerebriu A/S.

Footnotes

1 Motivated by an IEEE-ISBI COVID workshop, April 2020: https://ieeetv.ieee.org/event-showcase/covid-19-deep-learning-and-biomedical-imaging-panel-at-isbi-2020

References

- Ackermann M., Verleden S.E., Kuehnel M., Haverich A., Welte T., Laenger F., Vanstapel A., Werlein C., Stark H., Tzankov A., Li W.W., Li V.W., Mentzer S.J., Jonigk D. Pulmonary vascular endothelialitis, thrombosis, and angiogenesis in Covid-19. N. Engl. J. Med. 2020 doi: 10.1056/NEJMoa2015432.

- Bai H.X., Wang R., Xiong Z., Hsieh B., Chang K., Halsey K., Tran T.M.L., Choi J.W., Wang D.-C., Shi L.-B., Mei J., Jiang X.-L., Pan I., Zeng Q.-H., Hu P.-F., Li Y.-H., Fu F.-X., Huang R.Y., Sebro R., Yu Q.-Z., Atalay M.K., Liao W.-H. AI augmentation of radiologist performance in distinguishing COVID-19 from pneumonia of other etiology on chest CT. Radiology. 2020:201491. doi: 10.1148/radiol.2020201491.

- Bernheim A., Mei X., Huang M., Yang Y., Fayad Z.A., Zhang N., ..., Li S. Chest CT findings in coronavirus disease-19 (COVID-19): relationship to duration of infection. Radiology. 2020:200463. doi: 10.1148/radiol.2020200463.

- Chen L.-C., Papandreou G., Schroff F., Adam H. Rethinking atrous convolution for semantic image segmentation. CoRR. 2017.

- Cheplygina V., de Bruijne M., Pluim J.P. Not-so-supervised: a survey of semi-supervised, multi-instance, and transfer learning in medical image analysis. Med. Image Anal. 2019;54:280–296. doi: 10.1016/j.media.2019.03.009.

- Cohen J.P., Morrison P., Dao L. COVID-19 image data collection. arXiv:2003.11597. 2020.

- Donahue J., Jia Y., Vinyals O., Hoffman J., Zhang N., Tzeng E., Darrell T. DeCAF: a deep convolutional activation feature for generic visual recognition. International Conference on Machine Learning. 2014; pp. 647–655.

- Fang Y., Zhang H., Xie J., Lin M., Ying L., Pang P., Ji W. Sensitivity of chest CT for Covid-19: comparison to RT-PCR. Radiology. 2020:200432. doi: 10.1148/radiol.2020200432.

- Gozes O., Frid-Adar M., Greenspan H., Browning P., Zhang H., Ji W., Bernheim A. Rapid AI development cycle for the coronavirus (COVID-19) pandemic: initial results for automated detection and patient monitoring using deep learning CT image analysis. arXiv:2003.05037. 2020a.

- Gozes O., Frid-Adar M., Sagie N., Kabakovitch A., Amran D., Amer R., Greenspan H. Weakly supervised deep learning framework for COVID-19 CT detection and analysis. The Second International Workshop on Thoracic Image Analysis, MICCAI. 2020b.

- Greenspan H., van Ginneken B., Summers R.M. Guest editorial deep learning in medical imaging: overview and future promise of an exciting new technique. IEEE Trans. Med. Imaging. 2016;35(5):1153–1159.

- Huang S.-C., Kothari T., Banerjee I., Chute C., Ball R.L., Borus N., Huang A., Patel B.N., Rajpurkar P., Irvin J. PENet: a scalable deep-learning model for automated diagnosis of pulmonary embolism using volumetric CT imaging. npj Digit. Med. 2020;3(1):1–9. doi: 10.1038/s41746-020-0266-y.

- Jordan R.E., Adab P., Cheng K. Covid-19: risk factors for severe disease and death. BMJ. 2020;368:m1198. doi: 10.1136/bmj.m1198.

- Kirkcaldy R.D., King B.A., Brooks J.T. Covid-19 and postinfection immunity: limited evidence, many remaining questions. JAMA. 2020;323(22) doi: 10.1001/jama.2020.7869.

- LeCun Y., Boser B., Denker J.S., Henderson D., Howard R.E., Hubbard W., Jackel L.D. Backpropagation applied to handwritten zip code recognition. Neural Comput. 1989;1(4):541–551.

- Litjens G., Kooi T., Bejnordi B.E., Setio A.A.A., Ciompi F., Ghafoorian M., van der Laak J.A., van Ginneken B., Sánchez C.I. A survey on deep learning in medical image analysis. Med. Image Anal. 2017;42:60–88. doi: 10.1016/j.media.2017.07.005.

- Mei X., Lee H.-C., Diao K.-Y., Huang M., Lin B., Liu C., Xie Z., Ma Y., Robson P.M., Chung M., Bernheim A., Mani V., Calcagno C., Li K., Li S., Shan H., Lv J., Zhao T., Xia J., Long Q., Steinberger S., Jacobi A., Deyer T., Luksza M., Liu F., Little B.P., Fayad Z.A., Yang Y. Artificial intelligence-enabled rapid diagnosis of patients with COVID-19. Nat. Med. 2020:1–5. doi: 10.1038/s41591-020-0931-3.

- Murphy K., Smits H., Knoops A.J., Korst M.B., Samson T., Scholten E.T., Schalekamp S., Schaefer-Prokop C.M., Philipsen R.H., Meijers A. COVID-19 on the chest radiograph: a multi-reader evaluation of an AI system. Radiology. 2020:201874. doi: 10.1148/radiol.2020201874.

- Parodi S.M., Liu V.X. From containment to mitigation of COVID-19 in the US. JAMA. 2020;323(15):1441–1442. doi: 10.1001/jama.2020.3882.

- Pasa F., Golkov V., Pfeiffer F., Cremers D., Pfeiffer D. Efficient deep network architectures for fast chest X-ray tuberculosis screening and visualization. Sci. Rep. 2019;9(1):6268–6269. doi: 10.1038/s41598-019-42557-4.

- Petrilli C.M., Jones S.A., Yang J., Rajagopalan H., O’Donnell L., Chernyak Y., Tobin K.A., Cerfolio R.J., Francois F., Horwitz L.I. Factors associated with hospital admission and critical illness among 5279 people with coronavirus disease 2019 in New York City: prospective cohort study. BMJ. 2020;369:m1966. doi: 10.1136/bmj.m1966.

- Rodrigo E.G., Aledo J.A., Gámez J.A. spark-crowd: a spark package for learning from crowdsourced big data. J. Mach. Learn. Res. 2019;20(19):1–5.

- Rubin G.D., Ryerson C.J., Haramati L.B., Sverzellati N., Kanne J.P., Raoof S., Schluger N.W., Volpi A., Yim J.-J., Martin I.B.K., Anderson D.J., Kong C., Altes T., Bush A., Desai S.R., Goldin J., Goo J.M., Humbert M., Inoue Y., Kauczor H.-U., Luo F., Mazzone P.J., Prokop M., Remy-Jardin M., Richeldi L., Schaefer-Prokop C.M., Tomiyama N., Wells A.U., Leung A.N. The role of chest imaging in patient management during the COVID-19 pandemic: a multinational consensus statement from the Fleischner society. Chest. 2020 doi: 10.1016/j.chest.2020.04.003.

- Selvan R., Dam E.B., Detlefsen N.S., Rischel S., Sheng K., Nielsen M., Pai A. Lung segmentation from chest X-rays using variational data imputation. ICML Workshop on Learning with Missing Values (Artemiss). 2020. URL: https://openreview.net/forum?id=dlzQM28tq2W

- Shi F., Wang J., Shi J., Wu Z., Wang Q., Tang Z., He K., Shi Y., Shen D. Review of artificial intelligence techniques in imaging data acquisition, segmentation and diagnosis for Covid-19. IEEE Rev. Biomed. Eng. 2020 doi: 10.1109/RBME.2020.2987975.

- Shi H., Han X., Jiang N., Cao Y., Alwalid O., Gu J., Fan Y., Zheng C. Radiological findings from 81 patients with Covid-19 pneumonia in Wuhan, China: a descriptive study. Lancet Infect. Dis. 2020 doi: 10.1016/S1473-3099(20)30086-4.

- Simpson S., Kay F.U., Abbara S., Bhalla S., Chung J.H., Chung M., ..., Litt H. Radiological Society of North America expert consensus statement on reporting chest CT findings related to COVID-19. Radiology. 2020;2(2):e200152. doi: 10.1148/ryct.2020200152.

- Tan M., Le Q.V. EfficientNet: rethinking model scaling for convolutional neural networks. arXiv:1905.11946v3. 2019.

- Wilkinson M., Dumontier M., Aalbersberg I., Appleton G., Axton M., Baak A., Blomberg N., Boiten J., da Silva Santos L., Bourn P., Bouwman J., Brookes A., Clark T., Crosas M., Dillo I., Dumon O., Edmunds S., Evelo C., Finkers R., Gonzalez-Beltran A., Gray A., Groth P., Goble C., Grethe J., Heringa J., ’t Hoen P., Hooft R., Kuhn T., Kok R., Kok J., Lusher S., Martone M., Mons A., Packer A., Persson B., Rocca-Serra P., Roos M., van Schaik R., Sansone S., Schultes E., Sengstag T., Slater T., Strawn G., Swertz M., Thompson M., van der Lei J., van Mulligen E., Velterop J., Waagmeester A., Wittenburg P., Wolstencroft K., Zhao J., Mons B. The FAIR guiding principles for scientific data management and stewardship. Sci. Data. 2016;3 doi: 10.1038/sdata.2016.18.

- Wong H.Y.F., Lam H.Y.S., Fong A.H.-T., Leung S.T., Chin T.W.-Y., Lo C.S.Y., Lui M.M.-S., Lee J.C.Y., Chiu K.W.-H., Chung T. Frequency and distribution of chest radiographic findings in COVID-19 positive patients. Radiology. 2020:201160. doi: 10.1148/radiol.2020201160.

- Yang W., Sirajuddin A., Zhang X., Liu G., Teng Z., Zhao S., Lu M. The role of imaging in 2019 novel coronavirus pneumonia (COVID-19). Eur. Radiol. 2020;395(10223):1–9. doi: 10.1007/s00330-020-06827-4.

- Young A.L., Marinescu R.V., Oxtoby N.P., Bocchetta M., Yong K., Firth N.C., Cash D.M., Thomas D.L., Dick K.M., Cardoso J., van Swieten J., Borroni B., Galimberti D., Masellis M., Tartaglia M.C., Rowe J.B., Graff C., Tagliavini F., Frisoni G.B., Laforce R., Finger E., de Mendonça A., Sorbi S., Warren J.D., Crutch S., Fox N.C., Ourselin S., Schott J.M., Rohrer J.D., Alexander D.C., Genetic FTD Initiative (GENFI), Alzheimer’s Disease Neuroimaging Initiative (ADNI) Uncovering the heterogeneity and temporal complexity of neurodegenerative diseases with Subtype and Stage Inference. Nat. Commun. 2018;9(1):4273. doi: 10.1038/s41467-018-05892-0.

- Zhang K., Liu X., Shen J., Li Z., Sang Y., Wu X., Zha Y., Liang W., Wang C., Wang K., Ye L., Gao M., Zhou Z., Li L., Wang J., Yang Z., Cai H., Xu J., Yang L., Cai W., Xu W., Wu S., Zhang W., Jiang S., Zheng L., Zhang X., Wang L., Lu L., Li J., Yin H., Wang W., Li O., Zhang C., Liang L., Wu T., Deng R., Wei K., Zhou Y., Chen T., Lau J.Y.-N., Fok M., He J., Lin T., Li W., Wang G. Clinically applicable AI system for accurate diagnosis, quantitative measurements, and prognosis of COVID-19 pneumonia using computed tomography. Cell. 2020 doi: 10.1016/j.cell.2020.08.029.

- Zhao W., Zhong Z., Xie X., Yu Q., Liu J. Relation between chest CT findings and clinical conditions of coronavirus disease (Covid-19) pneumonia: a multicenter study. Am. J. Roentgenol. 2020;214(5):1072–1077. doi: 10.2214/AJR.20.22976.