Abstract

Team-based learning (TBL) is a special form of collaborative learning in which students work in permanent teams throughout the semester. In this highly structured and interactive teaching method, students complete preparatory activities outside of class to gain factual knowledge and understand basic concepts. In class, students collaborate with peers to apply content, analyze findings, and synthesize new ideas. To better understand the learning outcomes specific to TBL courses, we analyzed end-of-semester course evaluations from an undergraduate neuroscience course taught in either a moderate-structure active learning format or a TBL format. Our analysis reveals that students in the TBL-taught classes reported significantly higher levels of learning in gaining, understanding, and synthesizing knowledge. We propose that these gains are driven by two structural components of TBL, the readiness assurance process and peer evaluations, both of which are expected to increase student accountability, motivation, and engagement with course content.

Keywords: Team-Based Learning (TBL), Active Learning, STEM, Undergraduate Neuroscience Education

Classes that implement active learning have shown increases in student learning, as well as lower failure rates, across undergraduate science, technology, engineering, and mathematics (STEM) disciplines (Freeman et al., 2011; Haak et al., 2011; Eddy & Hogan, 2014; Freeman et al., 2014). Active learning activities come in many forms, such as think-pair-share, small-group learning, muddiest point, minute papers, clicker questions, gallery walks, and jigsaw (Freeman et al., 2007; Tanner, 2013). These practices share the goal of transforming the classroom from a passive learning environment into one that is engaging and interactive (Bonwell & Eison, 1991). In these dynamic classrooms, students may achieve deeper levels of learning by spending less in-class time on content transmission and more time applying knowledge. One commonly used active learning strategy is small-group learning, an evidence-based, collaborative teaching method with positive impacts on both student learning and attitudes (Johnson & Johnson, 1999; Springer et al., 1999; Kyndt et al., 2013). Small-group learning approaches vary widely in group size, rules, and structure. In one type, cooperative learning, students may collaborate in informal pairs (e.g., think-pair-share), in formal groups lasting days to weeks, or in base groups that work together throughout an entire semester (Johnson & Johnson, 2009). The two most essential elements of successful cooperative learning are positive interdependence and individual accountability. These are typically achieved by assigning well-defined roles to individual team members and by giving each member feedback on their personal efforts (Johnson & Johnson, 1989, 1999).

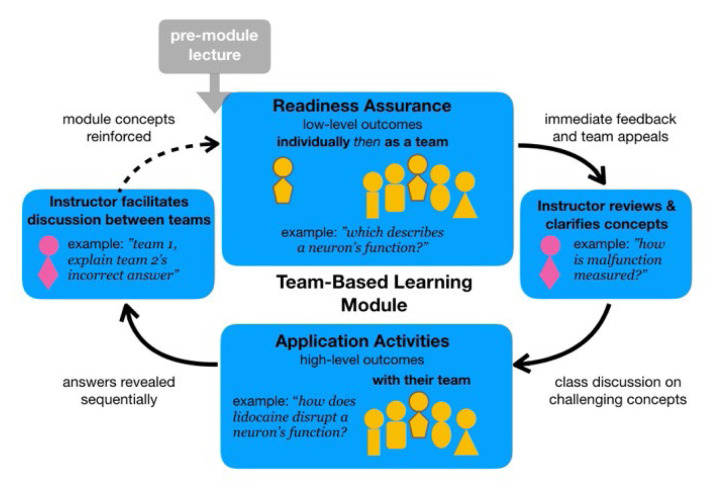

Team-based learning (TBL) is another popular form of small-group learning (Michaelsen et al., 2004). In TBL, students spend a significant amount of class time learning to apply their knowledge and solve complex problems together. Students work in groups of 5–7, are not assigned roles, and remain in the same team throughout the semester. Learning is assessed within a prescribed modular structure (Figure 1). The modules are designed to assess learning for each topic across a range of cognitive outcomes, from the lower-order outcomes of remembering and understanding to the higher-order outcomes of applying, analyzing, evaluating, and creating (Anderson & Krathwohl, 2000; Bloom, 1956).

Figure 1.

Description of a Team-Based Learning Module.

The TBL module begins with the preparation phase (Michaelsen et al., 2004). During this phase, students are responsible for completing assigned readings on their own, typically from the course textbook. Their goal is to learn, independently, primarily basic, lower-level material, such as definitions and factual recall, drawn from a set of learning objectives. Knowledge of the learning objectives is assessed in the second stage, the Readiness Assurance (RA) (Figure 1; see Appendix 1 for examples). The RA is a formative assessment composed of multiple-choice questions (Michaelsen et al., 2004) that range in difficulty from simple recall to application. To hold students accountable for their preparation, they first take the RA individually (iRA). Immediately afterwards, without knowing the correct answers, students retake the same RA as a team (tRA). Because the tRA pools the diverse knowledge and perspectives of all team members, teams consistently outperform individuals. Teams receive immediate feedback on their tRA answers, which the instructor makes available. Incorrect answers and disagreements within teams reveal the most challenging concepts, and these become the main focus of a detailed class discussion in a short “mini-lecture”.

Following the RA process, students begin the team application activities (Figure 1) (Michaelsen et al., 2004). At this stage, students work with their teammates on activities designed to develop higher-order cognitive outcomes (Haidet et al., 2014). After each discussion question, teams report their responses to the class, which gives students the opportunity to engage with other teams. The consistent feedback students receive throughout each module reveals their level of competency and provides positive reinforcement that motivates learning.

While the design of a TBL course is thought to provide a strong motivational framework that promotes learning, it remains unclear how students perceive their learning outcomes compared with other instructional methods. If differences exist, it would be valuable to identify which taxonomic levels of cognitive learning outcomes are affected. A recent meta-analysis of 17 studies reported a moderate mean effect size of 0.55 for gains in content knowledge with TBL compared with lecture-based learning (Swanson et al., 2019). Several explanations for these learning gains can be proposed.

First, the design of TBL is strongly supported by educational theories holding that social interaction and collaboration allow us to reach our full potential as learners (Vygotsky, 1978; Sibley & Ostafichuk, 2014). This social learning may also generate positive emotions by building an interconnected community of learners (Cavanagh, 2016). Second, the structure of a TBL class provides students with distributed practice and immediate feedback, as well as increased accountability and motivation to learn (Phelps, 2012; Stephens et al., 2012; Dunlosky et al., 2013; Butler et al., 2014; Eddy & Hogan, 2014; Cavanagh, 2016; Swanson et al., 2019). Furthermore, given the large percentage of classroom time dedicated to applying new learning in a TBL course, students would be expected to achieve greater mastery of higher-order cognitive outcomes. Indeed, several studies have reported such higher-order gains with TBL compared with lecture-style classes (Kolluru et al., 2012; Ghorbani et al., 2014; Johnson et al., 2014).

The distributed practice and motivational framework used in TBL are likely key elements driving these learning gains. However, non-TBL courses with moderate to high structure also use spaced practice and engaging classroom activities that help motivate students to learn (Freeman et al., 2014; Eddy & Hogan, 2014). These courses are characterized by frequent in-class engagement (moderate structure: students speaking 15–40% of class time; high structure: students speaking > 40% of class time), as well as graded review assignments and/or graded preparatory assignments (Freeman et al., 2011; Eddy & Hogan, 2014). Not surprisingly, these active learning courses are also highly effective in driving learning gains and mastery of higher-order cognitive skills (Haukoos, 1983; Martin et al., 2007; Cordray et al., 2009; Jensen & Lawson, 2011; Eddy & Hogan, 2014). Additionally, high-structure active learning courses have been shown to outperform moderate-structure courses in reducing failure rates in introductory biology (Freeman et al., 2011), suggesting a relationship between student learning and the amount of classroom structure.

Both TBL and moderate- to high-structure active learning classrooms are designed to motivate students and provide ample opportunities to practice and engage with course content. TBL, however, has some key structural features that may further enhance learning. First, students in TBL work in permanent teams throughout the semester and evaluate their teammates at multiple points during the semester. These required evaluations give students opportunities to provide and receive constructive feedback, and they are factored into course grades (Michaelsen et al., 2004). This promotes individual accountability for collaborative skills. For example, a student rated low by teammates for poor attendance, preparation, or participation would see their course grade lowered. Conversely, a student rated highly by teammates for being an active listener and effective contributor gains a boost in their course grade. These multiple rounds of peer feedback allow students to reflect on and adjust their behaviors.

Another key structural feature of TBL is retrieval and distributed practice (Dunlosky et al., 2013), in the form of frequent individual formative assessments and team collaborations on graded in-class activities. Students who fail to prepare for or attend these in-class learning opportunities could lower both their own scores and those of their teammates. Taken together, the use of permanent teams, peer evaluations, and graded in-class assignments creates a high level of accountability that may motivate TBL students to a greater extent than is typically achieved in a non-TBL active learning classroom.

In this study, we asked how students perceive their learning of lower- and higher-order cognitive outcomes in two classroom settings: moderate-structure active learning and TBL. While some evidence suggests greater benefits with TBL (Zingone et al., 2010), it remains unknown which taxonomic levels of learning may be affected. To investigate this, we analyzed end-of-semester course evaluations from the same undergraduate neuroscience course taught by the same instructor over four semesters in either a moderate-structure active learning or a TBL format. We also asked whether a single instructor teaching with the same method receives consistent end-of-semester course evaluations over time. Our results suggest that converting an active learning undergraduate neuroscience course to TBL improves student-perceived learning of both lower- and higher-order cognitive outcomes, effects that may be driven by the motivational framework unique to TBL courses.

MATERIALS AND METHODS

Study 1 - Comparison of Student-Perceived Learning in TBL and Active Learning Classrooms

For this study, we analyzed past end-of-semester course evaluations for a single course (Cellular and Molecular Neurobiology, NEUROSCI 223) taught by the same instructor in either a moderate-structure active learning or a TBL format. The classes were taught at a selective, private university in the southeastern United States. Four semesters of data were analyzed (n = 34 student responses total): two TBL semesters (summer 2018 and summer 2019; n = 22 student responses) and two active learning semesters (summer 2015 and summer 2016; n = 12 student responses) (Table 1). This course is a graduation requirement for the undergraduate neuroscience major and can be counted as an elective for other STEM majors. Students are typically a mix of sophomores, juniors, and seniors. The instructor had four years of university teaching experience before the first class in this analysis was taught in summer 2015. Summer session courses meet five days per week for 75-minute class sessions over six weeks.

Table 1.

Descriptions of the Active Learning and TBL Semesters for Study 1. Su I = 1st summer session; Su II = 2nd summer session; AL = active learning course; TBL = team-based learning course; Size = class size (number of students); meetings = number of class meetings per semester minus summative exam class periods and student presentations; lectures = number of class periods with a lecture (75 minutes in length); % student speaking = percent of class time in which students were speaking; #RA = number of graded readiness assurance formative quizzes; #APPs = number of graded in-class application activities; #Exams = number of summative exams; #Group = number of class periods in which students worked with teammates; GS = size of small learning groups; mini = 30–40-minute miniature lecture.

| Semester | Type | Size | Meetings (−Exams) | Lectures | % Student Speaking | #RA | #APPs | #Exams | #Group | GS |
|---|---|---|---|---|---|---|---|---|---|---|
| Su I 2015 | AL | 9 | 24 | 16 | 33 | 0 | 8 | 3 | 8 | 3 |
| Su I 2016 | AL | 10 | 24 | 16 | 33 | 0 | 8 | 3 | 8 | 3–4 |
| Su II 2018 | TBL | 10 | 27 | 8 (+8 mini) | 47 | 8 | 8 | 2 | 16 | 5 |
| Su II 2019 | TBL | 16 | 27 | 8 (+8 mini) | 47 | 8 | 8 | 2 | 16 | 5–6 |

Active learning course design

The two active learning semesters in this analysis (summer 2015 and summer 2016) had the characteristics of a moderate-structure active learning format. Approximately 33% of class time was used for student speaking, and at least once per week students completed graded, in-class problem sets that required them to review new material and apply their learning (Eddy & Hogan, 2014). Specifically, students had 24 class meetings, eight of which involved interactive in-class problem sets completed in small groups of 3–4 students. The remaining 16 class periods were lecture based and, apart from occasional questions directed at the instructor, were not interactive (8/24 ≈ 33% student speaking). Table 1 shows the detailed characteristics of this course.

TBL course design

The two TBL semesters (summer 2018 and 2019) analyzed in this study incorporated all of the typical TBL design elements (Figure 1), following the recommendations of Haidet et al. (2014). These TBL classes, however, included one additional component: a lecture that preceded the readiness assurance (RA). Student speaking accounted for approximately 47% of all class time (Table 1). During a given TBL module (225 minutes), students were estimated to spend 105 of the 225 minutes interacting with each other, through conversations about class content during the application activities (75 minutes) and during the RA class period (30 minutes). The seven core design elements used were: 1) team formation; 2) readiness assurance; 3) immediate feedback; 4) sequencing of solving problems in class; 5) the 4 S’s: same problem, significant problem, simultaneous reporting, specific choices; 6) incentive structure; and 7) peer review.
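The 47% estimate for the TBL semesters follows directly from the minute counts in the paragraph above, where a 225-minute module corresponds to three 75-minute class periods. For comparison, the active learning estimate in Table 1 is a ratio of class meetings rather than minutes (8 interactive meetings out of 24), so the two percentages are approximations computed on somewhat different bases:

```latex
\text{TBL module: } \frac{75\ \text{min (application)} + 30\ \text{min (RA)}}{3 \times 75\ \text{min}}
  = \frac{105}{225} \approx 0.467 \approx 47\%
\qquad
\text{Active learning: } \frac{8\ \text{interactive meetings}}{24\ \text{meetings}} \approx 0.333 \approx 33\%
```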

Team formation. Each team consisted of 5–7 students. To distribute resources evenly across teams, the following factors were considered: completion of upper-level neuroscience courses, research experience, and/or comfort with biology knowledge. The goal was to maximize cognitive diversity and experience within and across teams, so that discussions within a team would ideally represent diverse perspectives. When teams shared their discussions with other teams, students were exposed to an additional range of perspectives. Students remained on the same team for the duration of the semester.

Readiness Assurance. After an introductory lecture, students’ fundamental knowledge of concepts was assessed. Strong performance indicated that students were ready to work toward higher-order cognitive outcomes, such as applying or synthesizing concepts. After taking a readiness assurance assessment individually (iRA), students retook the same assessment with their team (tRA). Students did not know their iRA results when taking the tRA, which encouraged discussion within the team. Both the iRA and tRA contributed significantly to the course grade. Each RA consisted of approximately ten multiple-choice questions: eight at Bloom’s recall and understand levels and two at Bloom’s apply level (see Appendix 1).

Immediate feedback. Upon selecting a response to a question during the tRA, the team received immediate feedback on whether their selection was correct (Figure 1). If a team disagreed with an answer, it could submit an appeal that required references and an explanation of its argument.

Sequencing of solving problems in class. After the readiness assurance phase, students moved on to the team application activities. These activities assessed higher-order cognitive outcomes by requiring students to apply, evaluate, and synthesize concepts to solve problems. Students worked on problems within their teams. After every team had reached a decision, the instructor facilitated a discussion between teams. This inter-team conversation took advantage of the diverse perspectives of other teams. The solution to the problem was revealed during this class discussion.

4 S’s: same problem, significant problem, simultaneous reporting, specific choices. The team application activities were designed to require in-depth knowledge to solve a significant problem. All teams were given the same problem and the same specific choices from which to select, although an open-ended response option was sometimes offered. After a set time limit, all teams simultaneously reported their choices for every team to see.

Incentive structure. At each point of assessment (individual and team readiness assurances, team application activities, and summative assessments), students were incentivized to prepare and to contribute to team learning. Performing well on the iRA increased their chances of a better grade on the tRA, and doing well on both increased their chances of doing well on the team application activities. This structure of engagement and regular feedback also helped students prepare for summative assessments. Students were further incentivized because their peers’ evaluations of them factored into their course grades.

Peer review. Students evaluated each teammate on a number of measures related to preparation and collaboration. Table 2 shows examples of the evaluation statements (Koles Method) used across the classes in this study (Koles, 2010). Evaluations were submitted at the middle and at the end of the semester. Evaluations were anonymous, and students received their feedback shortly after submission, once the instructor had reviewed it for any concerning input. No such concerns were observed in the classes investigated for this study. In all of the TBL courses analyzed in this study, peer evaluations counted toward course grades (approximately 5%).

Table 2.

Peer evaluation questions used in the courses for Studies 1 and 2.

| Evaluation statement |
|---|
| This teammate arrives on time, mentally prepared to be an engaged team member. |
| This teammate demonstrates a good balance of active listening and making their own contribution. |
| This teammate asks probing questions that contribute to the learning environment. |
| This teammate shares information and their understanding of relevant course material. |
| This teammate is well prepared for team activities and discussions. |
| This teammate shows appropriate depth of knowledge. |
| This teammate identifies limits of personal knowledge. |
| This teammate is clear when explaining things to others. |
| This teammate gives useful feedback to others. |
| This teammate accepts useful feedback from others. |
| This teammate is able to listen and understand what others are saying. |
| This teammate shows respect for the opinions and feelings of others. |

Study 2 - Comparison of Student Course Evaluations across Semesters

In this study, we sought to determine whether course evaluation scores varied over time for an instructor using the same instructional method. The course analyzed was taught at a selective, private university in the southeastern United States. A single 300-level neuroscience seminar course (Neuroplasticity and Disease, NEUROSCI 353S), with an enrollment cap of 18 students, was chosen (Table 3). Students enrolled in this course were mostly undergraduate neuroscience majors in their senior year, and the class counted as an elective toward graduation. Three semesters of data were analyzed (n = 45 student responses total): spring 2017 (n = 14 student responses), spring 2018 (n = 14), and spring 2019 (n = 17). The same TBL method described in Study 1 was used. Students in this course were also required to write a mock grant following the National Institutes of Health (NIH) format and present it to the class at the end of the semester. A single instructor, who had six years of teaching experience before spring 2017, taught the course in all three semesters. Spring semester courses met twice per week for 75-minute class sessions over 14 weeks.

Table 3.

Description of TBL Semesters for Study 2. Sp = Spring; TBL = team-based learning course; Size = class size (number of students); meetings = number of class meetings per semester minus summative exam class periods and student presentations; lectures = number of class periods with a lecture; % student speaking = percent of class time in which students were speaking; #RA = number of readiness assurance formative quizzes; #APPs = number of in-class application activities; #Exams = number of summative exams; #Group = number of class periods in which students worked with teammates; GS = size of small learning groups; mini = 30–40-minute miniature lecture.

| Semester | Type | Size | Meetings (−Exams) | Lectures | % Student Speaking | #RA | #APPs | #Exams | #Group | GS |
|---|---|---|---|---|---|---|---|---|---|---|
| Sp 2017 | TBL | 16 | 21 | 5 (+5 mini) | 47 | 5 | 7 | 2 | 12 | 5–6 |
| Sp 2018 | TBL | 18 | 21 | 6 (+6 mini) | 47 | 6 | 8 | 2 | 14 | 6 |
| Sp 2019 | TBL | 18 | 21 | 6 (+6 mini) | 47 | 6 | 7 | 1 | 13 | 6 |

Data collection and analysis for Studies 1 and 2

This work was approved by the Duke University Institutional Review Board. Data from end-of-semester student course evaluations were collected and provided by the Office of Assessment of Duke University and made available to the instructors and researchers after grades were posted by the Registrar’s Office. Undergraduate students in the courses described above (Tables 1 and 3) submitted anonymous, optional course evaluations of their perceptions of a range of lower- to higher-order cognitive outcomes, course satisfaction, and classroom dynamics. Students were asked to rate their level of agreement with the following statements:

This course helped me gain factual knowledge.

This course helped me understand fundamental concepts and principles.

This course helped me learn to apply knowledge, concepts, principles, or theories to a specific situation or problem.

This course helped me learn to analyze ideas, arguments, and points of view.

This course helped me learn to synthesize and integrate knowledge.

This course helped me learn to conduct inquiry through methods of the field.

This course helped me learn to evaluate the merits of ideas and competing claims.

This course helped me to effectively communicate ideas orally.

This course helped me to effectively communicate ideas in writing.

Overall this course is (1–5):

Overall this instructor is (1–5):

Responses were recorded on a Likert scale: 5 = strongly agree, 4 = agree, 3 = neutral, 2 = disagree, and 1 = strongly disagree, with NA = not applicable as an additional option. Responses were compared using Mann-Whitney U tests (Study 1, two course formats) and Kruskal-Wallis H tests (Study 2, three semesters), performed in Microsoft Excel® and SPSS®.
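As a minimal sketch of how the per-item n, mean, and SD in the results tables can be derived from raw responses, the Python snippet below maps the Likert labels to the 1–5 codes above and summarizes one item. Two choices here are our assumptions rather than documented procedure: NA responses are excluded before summarizing (suggested by the varying n per item), and SD is the sample standard deviation (n − 1 denominator). The helper name `summarize_item` and the example responses are hypothetical; the responses were chosen so that the summary matches the active learning "Gain knowledge" row of Table 4.

```python
from statistics import mean, stdev

# Likert codes as described in the Methods; "NA" (not applicable) has no code.
LIKERT_CODES = {
    "strongly agree": 5,
    "agree": 4,
    "neutral": 3,
    "disagree": 2,
    "strongly disagree": 1,
}


def summarize_item(responses):
    """Return (n, mean, SD) for one evaluation item (hypothetical helper).

    Assumptions: NA responses are dropped before summarizing, and SD is the
    sample standard deviation (statistics.stdev uses an n - 1 denominator).
    """
    scores = [LIKERT_CODES[r] for r in responses if r != "NA"]
    return len(scores), round(mean(scores), 2), round(stdev(scores), 2)


# Hypothetical responses for a single item (not the study's raw data).
responses = ["strongly agree"] * 5 + ["agree"] * 5 + ["neutral"] + ["NA"]
print(summarize_item(responses))  # (11, 4.36, 0.67)
```

Using the population SD instead would give 0.64 for these responses, so the choice of denominator matters at these small sample sizes.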

RESULTS

Study 1 - TBL courses had significantly higher self-reported lower-order and higher-order cognitive outcomes than moderate-structure active learning courses

Student ratings for three of the perceived learning outcomes were significantly higher in the TBL classes than in the active learning semesters (Table 4): (1) gaining factual knowledge, (2) understanding concepts, and (3) learning to synthesize. The first two are lower-order cognitive outcomes, and the last is higher-order. None of the other measures differed significantly.

Table 4.

Descriptive Statistics for Study 1. n = total number of student responses for each question; SD = standard deviation. See the Methods section for a detailed description of the learning outcome questions used on the end-of-semester course evaluations. Mann-Whitney U tests were used to compare ratings between groups. U statistics, p values, and effect sizes (r = |Z|/√N, where N is the total number of responses to that question; 0.10 = small, 0.30 = moderate, and 0.50 = large) are shown for each category. Categories in bold differed significantly (p < 0.05) between the active learning and TBL classes.

| Outcome | AL n | AL mean | AL SD | TBL n | TBL mean | TBL SD | U | p | Effect size (r) |
|---|---|---|---|---|---|---|---|---|---|
| **Gain knowledge** | 11 | 4.36 | 0.67 | 22 | 4.95 | 0.21 | 60.00 | 0.001 | 0.57 |
| **Understand** | 11 | 4.18 | 0.60 | 22 | 4.91 | 0.29 | 43.00 | < 0.001 | 0.65 |
| Apply | 11 | 4.27 | 0.65 | 22 | 4.68 | 0.57 | 77.50 | 0.054 | 0.33 |
| Analyze | 11 | 3.82 | 0.87 | 20 | 4.00 | 0.84 | 97.50 | 0.579 | 0.10 |
| **Synthesize** | 11 | 4.00 | 0.89 | 22 | 4.64 | 0.49 | 73.50 | 0.042 | 0.35 |
| Inquire | 11 | 4.45 | 0.52 | 22 | 4.36 | 0.79 | 120.0 | 0.996 | 0.01 |
| Evaluate | 10 | 3.90 | 0.88 | 20 | 3.85 | 0.86 | 98.50 | 0.944 | 0.01 |
| Oral expression | 11 | 3.91 | 1.22 | 22 | 4.00 | 0.98 | 120.0 | 0.968 | 0.01 |
| Written expression | 11 | 3.55 | 0.69 | 20 | 3.30 | 1.03 | 88.00 | 0.324 | 0.18 |
| Overall Course | 12 | 4.75 | 0.62 | 22 | 4.82 | 0.50 | 127.5 | 0.792 | 0.04 |
| Overall Instructor | 12 | 4.75 | 0.62 | 22 | 4.86 | 0.35 | 126.5 | 0.747 | 0.06 |
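To make the effect size reported in Table 4 concrete, the stdlib-only Python sketch below computes U, a tie-corrected Z from the normal approximation, a two-sided p value, and r = |Z|/√N, with N the total number of responses to the item. It is an illustrative re-implementation under stated assumptions (average ranks for ties, no continuity correction, the smaller U reported), not the SPSS procedure used in the study; the function name `mann_whitney_effect_size` is hypothetical. The example ratings are also hypothetical, chosen so that their n, mean, and SD match the "Gain knowledge" row of Table 4.

```python
import math


def mann_whitney_effect_size(group_a, group_b):
    """Mann-Whitney U with a tie-corrected normal approximation.

    Returns (U, Z, two-sided p, r), where U is the smaller of the two U
    statistics and r = |Z| / sqrt(N) with N = len(group_a) + len(group_b).
    No continuity correction is applied (an assumption of this sketch).
    """
    n_a, n_b = len(group_a), len(group_b)
    n_total = n_a + n_b

    # Pooled 1-based ranks; tied values share the average of their ranks.
    positions = {}
    for rank, value in enumerate(sorted(list(group_a) + list(group_b)), start=1):
        positions.setdefault(value, []).append(rank)
    mean_rank = {v: sum(rs) / len(rs) for v, rs in positions.items()}

    # U for group A from its rank sum; report the smaller of U_A and U_B.
    u_a = sum(mean_rank[v] for v in group_a) - n_a * (n_a + 1) / 2
    u = min(u_a, n_a * n_b - u_a)

    # Variance of U under the null hypothesis, reduced for ties by sum(t^3 - t).
    tie_term = sum(len(rs) ** 3 - len(rs) for rs in positions.values())
    variance = (n_a * n_b / 12) * (
        (n_total + 1) - tie_term / (n_total * (n_total - 1))
    )

    z = (u - n_a * n_b / 2) / math.sqrt(variance)
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided p from the normal approximation
    r = abs(z) / math.sqrt(n_total)
    return u, z, p, r


# Hypothetical 1-5 ratings (not the study's raw responses), chosen so their
# n, mean, and SD match the "Gain knowledge" row of Table 4.
active = [5] * 5 + [4] * 5 + [3]
tbl = [5] * 21 + [4]
u, z, p, r = mann_whitney_effect_size(active, tbl)
print(f"U = {u:.2f}, Z = {z:.2f}, p = {p:.3f}, r = {r:.2f}")
# U = 60.00, Z = -3.28, p = 0.001, r = 0.57
```

With ratings this concentrated at the top of the scale, the tie correction matters: ignoring it would give |Z| ≈ 2.33 and r ≈ 0.41 for the same data.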

Study 2 - Student course evaluations are consistent between years for a single class taught by the same instructor

We did not detect a significant difference across the three semesters for any category except overall course satisfaction (Table 5). These results suggest that a given teaching approach used by the same instructor can yield reproducible course evaluation scores over a three-year period.

Table 5.

Descriptive Statistics for Study 2. n = total number of student responses for each question; SD = standard deviation. Kruskal-Wallis tests did not reveal a significant difference for any category except overall course satisfaction (H = 6.11, p = 0.047).

| Outcome | Sp 2017 n | Sp 2017 mean | Sp 2017 SD | Sp 2018 n | Sp 2018 mean | Sp 2018 SD | Sp 2019 n | Sp 2019 mean | Sp 2019 SD | H | p |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Gain knowledge | 14 | 4.71 | 0.47 | 14 | 4.71 | 0.83 | 17 | 4.94 | 0.24 | 2.81 | 0.246 |
| Understand | 14 | 4.57 | 0.51 | 14 | 4.57 | 0.85 | 17 | 4.82 | 0.39 | 2.21 | 0.331 |
| Apply | 13 | 4.54 | 0.88 | 14 | 4.64 | 0.63 | 17 | 4.69 | 0.48 | 0.23 | 0.989 |
| Analyze | 14 | 4.29 | 1.07 | 14 | 4.14 | 0.77 | 14 | 4.21 | 0.89 | 0.61 | 0.738 |
| Synthesize | 14 | 4.57 | 0.85 | 14 | 4.57 | 0.65 | 17 | 4.59 | 0.51 | 0.29 | 0.866 |
| Inquire | 14 | 4.64 | 0.50 | 14 | 4.71 | 0.49 | 15 | 4.67 | 0.62 | 0.21 | 0.902 |
| Evaluate | 14 | 4.36 | 1.00 | 14 | 4.29 | 0.91 | 16 | 4.38 | 0.72 | 0.24 | 0.889 |
| Oral expression | 14 | 4.64 | 0.50 | 14 | 4.50 | 0.85 | 17 | 4.65 | 0.49 | 0.03 | 0.984 |
| Written expression | 14 | 4.50 | 0.65 | 14 | 4.57 | 0.65 | 17 | 4.88 | 0.33 | 4.14 | 0.126 |
| Overall Course | 14 | 5.00 | 0.00 | 14 | 4.57 | 0.65 | 17 | 4.82 | 0.39 | 6.11 | 0.047 |
| Overall Instructor | 14 | 5.00 | 0.00 | 14 | 4.79 | 0.58 | 17 | 5.00 | 0.00 | 4.53 | 0.104 |
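The Kruskal-Wallis comparison behind Table 5 can be sketched in stdlib Python as follows. This is an illustrative re-implementation, not the SPSS procedure used in the study: the hypothetical function `kruskal_wallis_3groups` ranks the pooled ratings (ties get average ranks), computes H from each group's rank sum, divides by the usual tie-correction factor, and, because it is restricted to exactly three groups, obtains p from the chi-square distribution with 2 degrees of freedom, whose upper tail is exactly exp(−H/2). The example ratings are hypothetical, chosen so that their n, mean, and SD match the "Overall Course" row of Table 5.

```python
import math


def kruskal_wallis_3groups(g1, g2, g3):
    """Tie-corrected Kruskal-Wallis H test for exactly three groups.

    With three groups, H is compared with a chi-square distribution with
    2 degrees of freedom, whose upper-tail probability is exactly exp(-H / 2).
    Assumes at least two distinct values overall (otherwise the tie
    correction factor is zero).
    """
    groups = (g1, g2, g3)
    pooled = sorted(v for g in groups for v in g)
    n_total = len(pooled)

    # Collect the 1-based ranks each distinct value occupies in the pooled
    # data; tied values share the average of those ranks.
    positions = {}
    for rank, value in enumerate(pooled, start=1):
        positions.setdefault(value, []).append(rank)
    mean_rank = {v: sum(rs) / len(rs) for v, rs in positions.items()}

    # Uncorrected H from each group's rank sum.
    rank_term = sum(sum(mean_rank[v] for v in g) ** 2 / len(g) for g in groups)
    h = 12 / (n_total * (n_total + 1)) * rank_term - 3 * (n_total + 1)

    # Tie correction: divide by C = 1 - sum(t^3 - t) / (N^3 - N).
    tie_term = sum(len(rs) ** 3 - len(rs) for rs in positions.values())
    h /= 1 - tie_term / (n_total ** 3 - n_total)

    p = math.exp(-h / 2)  # chi-square survival function, df = 2
    return h, p


# Hypothetical "Overall Course" ratings (not the study's raw responses),
# chosen so their n, mean, and SD match that row of Table 5.
sp2017 = [5] * 14
sp2018 = [5] * 9 + [4] * 4 + [3]
sp2019 = [5] * 14 + [4] * 3
h, p = kruskal_wallis_3groups(sp2017, sp2018, sp2019)
print(f"H = {h:.2f}, p = {p:.3f}")  # H = 6.11, p = 0.047
```

Without the tie correction, the same ratings give H ≈ 2.69 (p ≈ 0.26), which illustrates how strongly ceiling effects in course evaluations can influence this test.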

DISCUSSION

Numerous studies have shown that TBL, a highly structured collaborative format, improves student learning compared with the low-structure lecture format (Nieder et al., 2005; Letassy et al., 2008; Chung et al., 2009; Conway et al., 2010; Koles et al., 2010; Tan et al., 2011; Anwar et al., 2012; Pollack et al., 2018; Swanson et al., 2019). Building on these findings, our analyses suggest that students perceive greater learning when taught using TBL than when taught using a moderate-structure active learning format. Students in our TBL classes perceived greater learning at multiple levels of Bloom’s taxonomy, specifically gaining knowledge, understanding concepts, and synthesizing knowledge (Table 4). Furthermore, this teaching approach can yield reproducible course evaluations when repeated over several years (Table 5). Interestingly, previous work has shown that high-structure active learning courses can decrease predicted failure rates compared with moderate-structure active learning courses (Freeman et al., 2011). Based on the proportion of class time in which students speak in our TBL courses (47%) and the number of weekly graded in-class assessments, we would characterize TBL as one form of high-structure active learning (Eddy & Hogan, 2014). The perceived learning gains in TBL may be attributed to several structural components, all of which hinge on team dynamics and motivation, as well as on the frequency of interaction and feedback. Below we discuss these structural components and their potential effects on learning in more detail.

The first structural component of TBL that would be predicted to increase student learning is practice. In TBL, students have numerous opportunities to practice, both individually and with teammates, within each module and throughout the semester. Importantly, retrieval practice and distributed practice are established approaches for improving student learning (Dunlosky et al., 2013). Moderate-structure active learning classrooms, including the active learning courses in this study, also use distributed practice through formative assessments (e.g., graded review assignments and/or preparatory work); however, the absence of pressure to perform well in front of a permanent set of teammates may reduce motivation. Notably, the use of permanent teams in TBL has been proposed to produce a “test motivation” effect, as students feel pressure to perform well in front of their peers (Swanson et al., 2019). Furthermore, peer evaluations throughout the semester would also help hold students accountable for their learning, attendance, and collaborative efforts. In these anonymous evaluations, students share perspectives on one another’s contributions to the learning environment. The evaluations hold students accountable for their cooperative learning skills, self-directed learning behaviors, and interpersonal skills, characteristics that affect team dynamics and success (Table 2) (Sibley & Ostafichuk, 2014). Students also evaluate themselves on the same metrics, which allows for self-reflection and comparison with others’ perceptions. In our active learning semesters, the absence of the readiness assurance process and peer evaluations would be predicted to decrease individual accountability and student motivation, effects that may reduce attendance, studying outside of class, and participation in class. Future work should measure these behaviors in TBL and active learning classrooms.

A second factor that may contribute to the self-reported learning gains with TBL is the consistent use of immediate feedback, which has been shown to have strong effects on student performance (Phelps, 2012). After each tRA, students receive immediate question-level feedback. Elaborative feedback is then provided during the mini-lecture and each application activity, and further feedback comes from intra-team discussions during in-class activities, helping students solidify their knowledge. In the moderate-structure active learning class in this study, feedback was delayed and less frequent: answers to application questions were typically posted after class and not discussed in class. Students may therefore have had fewer opportunities to clarify misunderstandings and immediately correct errors in their reasoning.

A third factor that may contribute to the student-perceived learning gains is a sense of community. In TBL, students work in permanent teams throughout the semester, and they engage heavily in inter-team discussions that allow them to interact with all members of the class. Building community in the classroom is important for creating a sense of belonging. Social isolation and lack of belonging are cited as major reasons that students leave STEM majors (Hewlett et al., 2008; Cheryan et al., 2009; Strayhorn, 2011). Furthermore, the social classroom is thought to promote positive emotions that increase learning (Cavanagh, 2016), and exposure to the diverse views of a heterogeneous team is also thought to contribute to TBL learning gains (Sibley & Ostafichuk, 2014). The active learning classes analyzed in this study had fewer students per group, and because the class met less frequently, students had fewer opportunities for discussion than in the TBL classes. This meant fewer peer interactions for exchanging diverse ideas, reinforcing one another’s motivation to learn, and experiencing the positive impacts of teamwork, all of which strengthen a sense of partnership and belonging.

Lastly, given that a large percentage of classroom time in TBL is dedicated to higher-order cognitive skills, it is not surprising that we detect high values of self-reported synthesis in TBL (Table 4). This finding is consistent with studies in which students reported that TBL increased their critical thinking skills (Rania, Rebora, & Migliorini, 2015). Although our active learning courses did use application activities during 33% of the semester, and multiple measures of higher-order cognitive outcomes did not differ significantly between formats (Table 4), the TBL classes had significantly higher self-reported “synthesis” than the active learning classes. In active learning, the absence of readiness assurance assessments may have had negative impacts on higher-order thinking, given the important role these assessments play in helping students first consolidate lower-order cognitive outcomes.

Limitations

Although we have discussed the advantages of group learning, there are a number of challenges associated with these collaborative approaches. Given a lifetime of exposure to lecture-based courses, some students find collaborative learning challenging (Hillyard et al., 2010). Many students report negative past experiences with group work, which could affect their buy-in and experience of TBL. Even among students experienced with TBL, those who suffer from bullying, social isolation, and interpersonal problems may find the team-based approach unappealing, which may affect their learning outcomes (Gillespie et al., 2006a; Gillespie et al., 2006b). Resentment toward disengaged teammates, distrust, and concerns over workload distribution can also affect perceptions (Liden, 1985; Lizzio & Wilson, 2005; Micari & Pazos, 2014; Robinson et al., 2015). While peer evaluations and individual accountability can help lessen these problems, facilitators should closely monitor team dynamics during classroom activities to help mitigate problematic aspects of small-group learning.

Another variable among team members is the knowledge they bring from previous coursework, which can affect their perception of learning in the current course. Students enrolled in our classes met the prerequisites, including a gateway introductory course. However, their level of mastery likely varies, which can influence their perception of learning and contribute to a positive or negative team experience.

Our measures of learning are based on self-reported, or perceived, learning and are not linked to class grades or performance on a summative exam. Interestingly, a recent study found that students in an active learning class learned more but perceived that they learned less (Deslauriers et al., 2019). In the same study, despite higher performance in the active learning class, students reported enjoying learning more with lecture (Deslauriers et al., 2019). We did not study the effects of teaching our course content with low-structure lecture. However, there were no significant differences in evaluations of overall course and instructor satisfaction between the moderate structure active learning classes and the high-structure TBL classes.

Future directions

There are a number of remaining questions regarding the use of TBL. For example, do learning gains appear for all types of courses (e.g., small seminars, large lectures, and laboratory courses) and universities (e.g., public, private, community college, and highly selective)? Which student populations are most positively affected? For active learning courses with moderate structure, first-generation, female, and underrepresented students in STEM show the largest learning gains (Preszler, 2009; Haak et al., 2011; Stephens et al., 2012; Eddy & Hogan, 2014). The increased learning gains for disadvantaged student populations are thought to stem from the increased motivation, self-efficacy, and sense of belonging in active learning classrooms (van Wyk, 2012; Eddy & Hogan, 2014). However, not all student populations have been shown to benefit from active learning (van Wyk, 2012; Eddy & Hogan, 2014). Furthermore, a recent study found that black students receive lower peer evaluation scores in TBL classrooms, even when there is no corresponding decrease in course grades relative to their peers (Macke et al., 2019). Therefore, it will be important to examine how individual experiences and team dynamics affect student learning, the sense of belonging, and overall course satisfaction.

Significance

In conclusion, our results suggest that converting an undergraduate neuroscience course from a moderate structure active learning class to a highly structured team-based learning class may increase self-reported learning of both lower- and higher-order cognitive outcomes. Taken together, these findings are consistent with past studies showing that team-based learning is an effective teaching method, and this study offers additional insight into its impact on learning outcomes.

Acknowledgements

We thank Shelley Newpher and Bridgette Martin Hard for helpful comments on this manuscript.

APPENDIX 1. EXAMPLE READINESS ASSURANCE QUESTIONS FROM TBL TAUGHT COURSES

Bloom’s level – Recall

1. Binding of Ca²⁺ to which protein is important for synaptic vesicle fusion?

Synaptotagmin

Synapsin

Clathrin

Synaptobrevin

Bloom’s level – Understand

2. Chemical synapses

work by allowing chemicals to flow passively through the gap junction pores from one neuron to another.

never cause voltage changes on their postsynaptic target cells.

require synaptic vesicles.

are much faster than electrical synapses.

are excitatory if they release positively charged ions into the synaptic cleft.

Bloom’s level – Apply

3. Organophosphate insecticides inhibit acetylcholinesterase activity at cholinergic synapses. Which drug below would be expected to have similar effects on acetylcholine levels at cholinergic synapses?

BoTX (cleaves SNARE proteins)

tetrodotoxin (a sodium channel blocker)

tetraethylammonium (a potassium channel blocker)

phenylalkylamine (a calcium channel blocker)

alpha-bungarotoxin (nAChR irreversible antagonist)

nicotine (nAChR agonist)

APPENDIX 2. EXAMPLE QUESTIONS FROM A TBL APPLICATION ACTIVITY

Bloom’s level – Evaluate

1. The authors of this paper mention that 2 of 11 green neurons were not connected to the nearest red-green neuron, based on paired recordings. From the options below, select all that could be true.

The green neuron was connected to a different red-green neuron, and that red-green neuron has now died.

Due to the transient nature of synapses, the synaptic connection originally made by the green neuron onto the red-green neuron may have been lost over time.

Autofluorescence from these neurons gives a green signal and appears similar to GFP expression, thus giving the appearance of an infected cell.

DsRed expression ends after a few days, making identification of postsynaptic neurons very difficult.

The green neuron is synaptically connected to a distant red-green neuron and not the neighboring red-green neuron in the field of view.

If you assume that the gene gun transfected neurons do not always maintain or express all 3 plasmids, it's possible that there are some TVA-glycoprotein expressing neurons that lack DsRed.

Bloom’s level – Synthesize

2a. With your teammates, go to the dry-erase board and draw an updated model for the organization of the olfactory system based on the findings from this paper. Include mitral cells, granule cells, and cortical neurons.

2b. Design an experiment using monosynaptic tracing to determine if mitral cells converge onto cortical neurons. Which cells will be transfected with which plasmids and which cells will be yellow or green?

Footnotes

Funding

This work was funded by Duke University and the Charles Lafitte Foundation Program in Psychological and Neuroscience Research at Duke University.

Conflict of Interest

The authors declare that there is no conflict of interest.

REFERENCES

- Anderson LW, Krathwohl DR. A taxonomy for learning, teaching, and assessment: A revision of Bloom’s taxonomy of educational objectives. White Plains, NY: Longman; 2000. [Google Scholar]

- Anwar K, Shaikh AA, Dash NR, Khurshid S. Comparing the efficacy of team based learning strategies in a problem based learning curriculum. APMIS. 2012;120(9):718–723. doi: 10.1111/j.1600-0463.2012.02897.x. [DOI] [PubMed] [Google Scholar]

- Bloom B. Taxonomy of educational objectives: The classification of educational goals. New York: Longmans Green; 1956. [Google Scholar]

- Bonwell C, Eison J. Active learning: Creating excitement in the classroom. ASHE-ERIC Higher Education Report No. 1. Washington, D.C.: The George Washington University School of Education and Human Development; 1991. [Google Scholar]

- Butler A, Marsh E, Slavinsky J, Baraniuk R. Integrating cognitive science and technology improves learning in a STEM classroom. Educational Psychology Review. 2014;26(2):331–340. [Google Scholar]

- Cavanagh R. The spark of learning: energizing the college classroom with the science of emotion. Morgantown, WV: West Virginia University Press; 2016. [Google Scholar]

- Cheryan S, Plaut VC, Davies PG, Steele CM. Ambient belonging: how stereotypical cues impact gender participation in computer science. J Pers Soc Psychol. 2009;97(6):1045–1060. doi: 10.1037/a0016239. [DOI] [PubMed] [Google Scholar]

- Chung EK, Rhee JA, Baik YH, A OS. The effect of team-based learning in medical ethics education. Med Teach. 2009;31(11):1013–1017. doi: 10.3109/01421590802590553. [DOI] [PubMed] [Google Scholar]

- Conway SE, Johnson JL, Ripley TL. Integration of team-based learning strategies into a cardiovascular module. Am J Pharm Educ. 2010;74(2):35. doi: 10.5688/aj740235. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Cordray D, Harris T, Klein S. A research synthesis of the effectiveness, replicability, and generality of the VaNTH challenge-based instructional modules in bioengineering. Journal of Engineering Education. 2009;98(4):335–348. [Google Scholar]

- Deslauriers L, McCarty LS, Miller K, Callaghan K, Kestin G. Measuring actual learning versus feeling of learning in response to being actively engaged in the classroom. Proc Natl Acad Sci U S A. 2019;116(39):19251–19257. doi: 10.1073/pnas.1821936116. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Dunlosky J, Rawson KA, Marsh EJ, Nathan MJ, Willingham DT. Improving Students’ Learning With Effective Learning Techniques: Promising Directions From Cognitive and Educational Psychology. Psychol Sci Public Interest. 2013;14(1):4–58. doi: 10.1177/1529100612453266. [DOI] [PubMed] [Google Scholar]

- Eddy SL, Hogan KA. Getting under the hood: how and for whom does increasing course structure work? CBE Life Sci Educ. 2014;13(3):453–468. doi: 10.1187/cbe.14-03-0050. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Freeman S, Eddy SL, McDonough M, Smith MK, Okoroafor N, Jordt H, Wenderoth MP. Active learning increases student performance in science, engineering, and mathematics. Proc Natl Acad Sci U S A. 2014;111(23):8410–8415. doi: 10.1073/pnas.1319030111. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Freeman S, Haak D, Wenderoth MP. Increased course structure improves performance in introductory biology. CBE Life Sci Educ. 2011;10(2):175–186. doi: 10.1187/cbe.10-08-0105. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Freeman S, O’Connor E, Parks JW, Cunningham M, Hurley D, Haak D, Dirks C, Wenderoth MP. Prescribed active learning increases performance in introductory biology. CBE Life Sci Educ. 2007;6(2):132–139. doi: 10.1187/cbe.06-09-0194. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Ghorbani N, Karbalay-Doust S, Noorafshan A. Is a Team-based Learning Approach to Anatomy Teaching Superior to Didactic Lecturing? Sultan Qaboos University Medical Journal. 2014;14(1):120–125. doi: 10.12816/0003345. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Gillespie D, Roos J, Slaughter C. Undergraduates’ ambivalence about leadership in small groups. Journal of Excellence in College Teaching. 2006a;17(3):33–49. [Google Scholar]

- Gillespie D, Rosamond S, Thomas E. Grouped out? Default strategies for participating in multiple small groups. Journal of General Education. 2006b;55(2):81–102. [Google Scholar]

- Haak DC, HilleRisLambers J, Pitre E, Freeman S. Increased structure and active learning reduce the achievement gap in introductory biology. Science. 2011;332(6034):1213–1216. doi: 10.1126/science.1204820. [DOI] [PubMed] [Google Scholar]

- Haidet P, Kubitz K, McCormack WT. Analysis of the Team-Based Learning Literature: TBL Comes of Age. J Excell Coll Teach. 2014;25(3–4):303–333. Available at https://www.ncbi.nlm.nih.gov/pubmed/26568668. [PMC free article] [PubMed] [Google Scholar]

- Haukoos G. The influence of classroom climate on science process and content achievement of community college students. Journal of Research in Science Teaching. 1983;20(7):629–637. [Google Scholar]

- Hewlett S, Luce C, Servon L, Sherbin L, Shiller P, Sosnovich E, Sumberg K. The Athena factor: reversing the brain drain in science, engineering, and technology. New York, NY: Center for Work-Life Policy; 2008. [Google Scholar]

- Hillyard C, Gillespie D, Littig P. University students’ attitudes about learning in small groups after frequent participation. Active Learning in Higher Education. 2010;11(1):9–20. [Google Scholar]

- Jensen J, Lawson A. Effects of collaborative group composition and inquiry instruction on reasoning gains and achievement in undergraduate biology. CBE Life Sci Educ. 2011;10(1):64–73. doi: 10.1187/cbe.10-07-0089. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Johnson D, Johnson F. Joining together. Upper Saddle River, NJ: Pearson; 2009. [Google Scholar]

- Johnson D, Johnson R. Cooperation and competition: theory and research. Edina, MN: Interaction Book Company; 1989. [Google Scholar]

- Johnson D, Johnson R. Learning together and alone: cooperative, competitive, and individualistic learning. 1st ed. Boston, MA: Allyn and Bacon; 1999. [Google Scholar]

- Johnson JF, Bell E, Bottenberg M, Eastman D, Grady S, Koenigsfeld C, Maki E, Meyer K, Phillips C, Schirmer L. A Multiyear Analysis of Team-Based Learning in a Pharmacotherapeutics Course. Am J Pharm Educ. 2014;78(7):1–9. doi: 10.5688/ajpe787142. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Koles PG. Team-Based Learning Peer Feedback. 2010. Available at https://cdn.ymaws.com/teambasedlearning.site-ym.com/resource/resmgr/Docs/TBLC_Peer_Feedback_form_Kole.pdf.

- Koles PG, Stolfi A, Borges NJ, Nelson S, Parmelee DX. The impact of team-based learning on medical students’ academic performance. Acad Med. 2010;85(11):1739–1745. doi: 10.1097/ACM.0b013e3181f52bed. [DOI] [PubMed] [Google Scholar]

- Kolluru S, Roesch D, de la Fuente A. A Multi-Instructor, Team-Based, Active-Learning Exercise to Integrate Basic and Clinical Sciences Content. Am J Pharm Educ. 2012;76(2):1–7. doi: 10.5688/ajpe76233. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kyndt E, Raes E, Lismont B, Timmers F, Cascallar E, Dochy F. A meta-analysis of the effects of face-to-face cooperative learning. Do recent studies falsify or verify earlier findings? Educational Research Review. 2013;10:133–149. [Google Scholar]

- Letassy NA, Fugate SE, Medina MS, Stroup JS, Britton ML. Using team-based learning in an endocrine module taught across two campuses. Am J Pharm Educ. 2008;72(5):103. doi: 10.5688/aj7205103. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Liden R, Nagao D, Parsons C. Student and faculty attitudes concerning the use of group projects. Organizational Behavior Teaching Review. 1985;10(4):32–38. [Google Scholar]

- Lizzio A, Wilson K. Self-managed learning groups in higher education: Students’ perceptions of process and outcomes. British Journal of Educational Psychology. 2005;75(3):373–390. doi: 10.1348/000709905X25355. [DOI] [PubMed] [Google Scholar]

- Macke C, Canfield J, Tapp K, Hunn V. Outcomes for black students in team-based learning courses. Journal of Black Studies. 2019;50(1):66–86. [Google Scholar]

- Martin T, Rivale S, Diller K. Comparison of student learning in challenge-based and traditional instruction in biomedical engineering. Ann Biomed Eng. 2007;35(8):1312–1323. doi: 10.1007/s10439-007-9297-7. [DOI] [PubMed] [Google Scholar]

- Micari M, Pazos P. Worrying about what others think: A social-comparison concern intervention in small learning groups. Active Learning in Higher Education. 2014;15(3):249–262. [Google Scholar]

- Michaelsen L, Knight A, Fink L. Team-based learning: A transformative use of small groups in college teaching. Sterling, VA: Stylus Publishing; 2004. [Google Scholar]

- Nieder GL, Parmelee DX, Stolfi A, Hudes PD. Team-based learning in a medical gross anatomy and embryology course. Clin Anat. 2005;18(1):56–63. doi: 10.1002/ca.20040. [DOI] [PubMed] [Google Scholar]

- Phelps R. The effect of testing on student achievement. International Journal of Testing. 2012;12(1):21–43. [Google Scholar]

- Pollack A. The neuroscience classroom remodeled with Team-Based Learning. J Undergrad Neurosci Educ. 2018;17(1):A34–A39. [PMC free article] [PubMed] [Google Scholar]

- Preszler RW. Replacing lecture with peer-led workshops improves student learning. CBE Life Sci Educ. 2009;8(3):182–192. doi: 10.1187/cbe.09-01-0002. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Rania N, Rebora S, Migliorini L. Team-based learning: enhancing academic performance of psychology students. Procedia - Social and Behavioral Sciences. 2015;174:946–951. [Google Scholar]

- Robinson L, Harris A, Burton R. Saving face: Managing rapport in a problem based learning group. Active Learning in Higher Education. 2015;16(1):11–24. [Google Scholar]

- Sibley J, Ostafichuk P. Getting started with team-based learning. Sterling, VA: Stylus Publishing; 2014. [Google Scholar]

- Springer L, Stanne M, Donovan S. Effects of small-group learning on undergraduates in science, mathematics, engineering, and technology: a meta-analysis. Review of Educational Research. 1999;69(1):21–51. [Google Scholar]

- Stephens NM, Fryberg SA, Markus HR, Johnson CS, Covarrubias R. Unseen disadvantage: how American universities’ focus on independence undermines the academic performance of first-generation college students. J Pers Soc Psychol. 2012;102(6):1178–1197. doi: 10.1037/a0027143. [DOI] [PubMed] [Google Scholar]

- Strayhorn T. Bridging the pipeline: Increasing underrepresented students’ preparation for college through a summer bridge program. American Behavioral Scientist. 2011;55(2):142–159. [Google Scholar]

- Swanson E, McCulley L, Osman D, Lewis N, Solis M. The effect of team-based learning on content knowledge: a meta-analysis. Active Learning in Higher Education. 2019;20(1):39–50. [Google Scholar]

- Tan NC, Kandiah N, Chan YH, Umapathi T, Lee SH, Tan K. A controlled study of team-based learning for undergraduate clinical neurology education. BMC Med Educ. 2011;11:91. doi: 10.1186/1472-6920-11-91. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Tanner KD. Structure matters: twenty-one teaching strategies to promote student engagement and cultivate classroom equity. CBE Life Sci Educ. 2013;12(3):322–331. doi: 10.1187/cbe.13-06-0115. [DOI] [PMC free article] [PubMed] [Google Scholar]

- van Wyk M. The effects of the STAD-cooperative learning method on student achievement, attitude and motivation in economics education. J Soc Sci. 2012;33(2):261–270. [Google Scholar]

- Vygotsky L. Mind in society: the development of higher psychological processes. Cambridge, MA: Harvard University Press; 1978. [Google Scholar]

- Zingone MM, Franks AS, Guirguis AB, George CM, Howard-Thompson A, Heidel RE. Comparing team-based and mixed active-learning methods in an ambulatory care elective course. Am J Pharm Educ. 2010;74(9):160. doi: 10.5688/aj7409160. [DOI] [PMC free article] [PubMed] [Google Scholar]