Abstract

In a regression model for treatment outcome in a randomized clinical trial, a treatment effect modifier is a covariate that has an interaction with the treatment variable, implying that treatment efficacy varies across values of that covariate. In this paper, we present a method for determining a composite variable from a set of baseline covariates that can have a nonlinear association with the treatment outcome and that acts as a composite treatment effect modifier. We introduce a parsimonious generalization of single-index models that targets the effect of the interaction between the treatment conditions and the vector of covariates on the outcome: a single-index model with multiple-links (SIMML) that estimates a single linear combination of the covariates (i.e., a single-index), with treatment-specific nonparametric link functions. The approach focuses on the treatment-by-covariates interaction effects on the treatment outcome that are relevant for making optimal treatment decisions. Asymptotic results for the estimator are obtained under possible model misspecification. A treatment decision rule based on the derived single-index is defined, and it is compared to other methods for estimating optimal treatment decision rules. An application to a clinical trial for the treatment of depression is presented.

Keywords: Single-index models, Treatment effect modifier, Biosignature

1. Introduction

In precision medicine, a critical concern is to identify baseline measures that have distinct relationships with the outcome from different treatments so that patient-specific treatment decisions can be made [1, 2]. Such variables are called treatment effect modifiers, and these can be useful in determining a treatment decision rule that will select a treatment for a patient based on observations made at baseline. There is a growing need to extract treatment effect modifiers from (usually noisy) baseline patient data that, more and more commonly, consist of a large number of clinical and biological characteristics.

Typically, treatment effect modifiers (or, “moderators”) are identified either one by one, using one model for each potential predictor, or from a large model which includes all potential predictors and their (two-way) interactions with treatment, and then testing for significance of the interaction terms, almost exclusively using linear models. In the linear model context, [3] proposed a model using a linear combination (i.e., an index) of patients’ characteristics, termed a generated effect modifier (GEM) constructed to optimize the interaction with a treatment indicator. Such a composite variable approach is especially appealing for complex diseases such as psychiatric diseases, in which each baseline characteristic may only have a small treatment modifying effect. In such settings, it is not common to find variables that are individually strong moderators of treatment effects.

Here we present novel flexible methods for determining composite variables that permit non-linear association with the outcome. In particular, the proposed methods allow the conditional expectation of the outcomes to have a flexible treatment-specific link function with an index. We define the index to be a one-dimensional linear combination of the covariates. This approach is related to single-index models [4, 5, 6, 7, 8, 9, 10], as well as to single-index model generalizations such as projection pursuit regression [11] and multiple-index models [12, 13]. We employ a single projection of the covariates (i.e., an index) to summarize the variability of the baseline covariates, and multiple link functions to connect the derived single-index to the treatment-specific mean responses; we call these single-index models with multiple-links (SIMML). The SIMML provides a parsimonious extension of the single-index model for modeling the effect of the interaction between a categorical treatment variable and a vector-valued covariate. The dependence of treatment-specific outcomes on a common single-index improves interpretability and helps in determining treatment decision rules. This approach generalizes the notion of a composite “treatment effect modifier” from the linear model setting to a nonparametric context, defining a nonparametric generated effect modifier.

2. A Single-index model with multiple-links (SIMML)

Let $X = (x_1, \dots, x_p)^\top \in \mathbb{R}^p$ denote the set of covariates. Let T denote the categorical (treatment assignment) variable of interest, taking values in {1, …, K} with nonzero probabilities (π1, …, πK) that sum to one. Let Y denote an outcome variable; without loss of generality, we assume that a higher value of Y is preferred. We focus on data arising from a randomized experiment; however, the method can be extended to observational studies.

A common approach to interrogate the effect of the interaction between X and the treatment indicator T on an outcome is to fit a regression model separately for each of the K treatment groups, as functions of X. For instance, a single-index model can be fitted separately for each treatment group t, resulting in K indices, $\beta_1^\top X, \dots, \beta_K^\top X$. We refer to this as a K-index model; it has the form

$$E[Y \mid T = t, X] = g_t(\beta_t^\top X), \quad t \in \{1, \dots, K\}, \tag{1}$$

where both the treatment-specific nonparametric link functions gt(·) and the treatment-specific index vectors $\beta_t \in \mathbb{R}^p$ need to be estimated for each group t. (The vectors βt need to satisfy an identifiability condition [14].) While this is a reasonable approach, the K indices of model (1) lack a useful interpretation as effect modifiers, and the model is often over-parametrized.

For parsimony and insight, the SIMML constrains the βt in (1) to be equal, and it requires separate nonparametrically defined curves for each treatment t as a function of a single index α⊤X common for all t:

$$E[Y \mid T = t, X] = g_t(\alpha^\top X), \quad t \in \{1, \dots, K\}, \tag{2}$$

where both the links gt and the vector α need to be estimated. The SIMML (2) provides a single parsimonious biosignature, α⊤X. Due to the nonparametric nature of gt, the scale of α is not identifiable in (2); to address this, we restrict α to be in $\Theta = \{\alpha = (\alpha_1, \dots, \alpha_p)^\top \in \mathbb{R}^p : \|\alpha\| = 1,\ \alpha_p > 0\}$, i.e., to be in the upper hemisphere of the unit sphere.
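The identifiability restriction can be illustrated with a small sketch that maps an arbitrary nonzero vector to its representative on the upper unit hemisphere. The function name `to_upper_hemisphere` is hypothetical, and the sign convention (last component positive) is an assumption taken from the requirement α0,p > 0 used in Section 4.

```python
import math

def to_upper_hemisphere(alpha):
    """Rescale alpha to unit L2 norm and flip its sign so the last entry is positive."""
    norm = math.sqrt(sum(a * a for a in alpha))
    if norm == 0.0:
        raise ValueError("alpha must be nonzero")
    unit = [a / norm for a in alpha]
    if unit[-1] == 0.0:
        # Assumed convention: a zero last component is excluded from the hemisphere.
        raise ValueError("last component must be nonzero")
    if unit[-1] < 0.0:
        unit = [-a for a in unit]
    return unit

# Illustrative input only; (3, -4) has norm 5 and a negative last component.
alpha = to_upper_hemisphere([3.0, -4.0])
print(alpha)
```

Flipping the sign of α only reflects each link, replacing gt(u) by gt(−u), so the fitted mean responses are unchanged; the constraint simply picks one representative.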

If the true model for the treatment-specific outcome Yt is not a SIMML, then the SIMML can be regarded as the L2 projection of the treatment-specific mean outcome on the single index u = α⊤X,

$$g_t(u) = E[Y_t \mid \alpha^\top X = u], \quad t \in \{1, \dots, K\}, \tag{3}$$

for each given α. Specifically, suppose the true treatment-specific model can be expressed as

$$Y_t = m_t(X) + \sigma_t(X)\,\epsilon, \quad t \in \{1, \dots, K\}, \tag{4}$$

in which . Let , where gt is defined in (3) and let

| (5) |

Then α0 can be shown to be the minimizer of the cross-entropy (e.g., [15]) between the SIMML (2) and the general model (4) under the Gaussian noise assumption. Here, the cross-entropy of an arbitrary distribution with probability density f, with respect to another reference distribution with density $f_0$, is defined as $-E_{f_0}[\log f]$, where the expectation is taken with respect to the distribution $f_0$. Model (3) evaluated at α0 can be viewed as the “projection” (in the sense of the closest point) of the true distribution (4) onto the space of SIMML distributions indexed by Θ, using the Kullback-Leibler divergence as a distance measure.
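To make the connection between the cross-entropy criterion and least squares explicit, the following sketch works through the Gaussian case. It assumes a common noise variance $\sigma^2$ in the SIMML working model, and it writes $f_0$ for the true density and $f_\alpha$ for the SIMML density; these symbols are introduced here for illustration only.

```latex
\begin{aligned}
-\mathbb{E}_{f_0}\!\left[\log f_\alpha(Y \mid X, T)\right]
 &= \frac{1}{2}\log(2\pi\sigma^2)
  + \frac{1}{2\sigma^2}\,\mathbb{E}_{f_0}\!\left[\{Y - g_T(\alpha^\top X)\}^2\right] \\
 &= \text{const}
  + \frac{1}{2\sigma^2}\sum_{t=1}^{K}\pi_t\,
    \mathbb{E}\!\left[\{Y - g_t(\alpha^\top X)\}^2 \,\middle|\, T = t\right].
\end{aligned}
```

The first term does not depend on α, so minimizing the cross-entropy over α ∈ Θ amounts to minimizing the π-weighted mean squared error, with each gt taken to be the conditional mean in (3) for the given α. Since the Kullback-Leibler divergence differs from the cross-entropy only by the entropy of $f_0$, which is free of α, the two criteria share the same minimizer.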

The SIMML (2) allows a visualization useful for characterizing differential treatment effects, varying with the single-index α⊤X. As X varies, the mean response of model (2) changes only in the specific direction α ∈ Θ, and the effect of varying X, described by the link functions gt, is different for each treatment condition t ∈ {1, …, K}. Therefore, the single-index can be viewed as a useful biosignature for describing differential treatment effects, provided gt ≠ gt′ for at least one pair t, t′ ∈ {1, …, K}.

3. Estimation

While any smoothing technique can be used to approximate the unspecified smooth links gt(·) in (2), in this paper we focus on cubic B-splines. Specifically, $g_t(u) \approx Z_t(u)^\top \eta_t$, for some coefficients $\eta_t \in \mathbb{R}^{d_t}$. Here, $Z_t(u) \in \mathbb{R}^{d_t}$ consists of a set of dt normalized cubic B-spline basis functions [16]. Let nt be the sample size for the tth treatment group and let $n = \sum_{t=1}^{K} n_t$ denote the total sample size. Note that dt depends on nt (see Assumption 5 and [17]). For a given α, let $Z_{t,\alpha}$ denote the B-spline evaluation matrix (nt × dt), whose ith row is Zt(α⊤Xti)⊤, the B-spline evaluation for the ith individual from the tth treatment group. The subscript α in the matrix highlights its dependence on α. Without loss of generality, we assume that the outcome and the covariates are all centered at zero for each treatment group t, so that the model does not involve any intercept terms.
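As an illustration of how a B-spline evaluation matrix can be assembled, the sketch below evaluates cubic B-spline basis functions with the Cox-de Boor recursion in plain Python. The function name `bspline_basis`, the clamped knot vector on [0, 1], and the example index values are assumptions made for this sketch; in practice the index α⊤X would first be rescaled to the knot range.

```python
def bspline_basis(u, knots, degree=3):
    """Evaluate all B-spline basis functions of the given degree at u (Cox-de Boor)."""
    m = len(knots) - 1
    # Degree-0 basis: indicator of the half-open knot span containing u.
    basis = [1.0 if knots[i] <= u < knots[i + 1] else 0.0 for i in range(m)]
    # Close the right end so that u == knots[-1] falls in the last nonempty span.
    if u == knots[-1]:
        last = max(i for i in range(m) if knots[i] < knots[i + 1])
        basis[last] = 1.0
    for k in range(1, degree + 1):
        new = []
        for i in range(m - k):
            left_den = knots[i + k] - knots[i]
            right_den = knots[i + k + 1] - knots[i + 1]
            # Terms with a zero denominator are defined to be zero.
            left = (u - knots[i]) / left_den * basis[i] if left_den > 0 else 0.0
            right = (knots[i + k + 1] - u) / right_den * basis[i + 1] if right_den > 0 else 0.0
            new.append(left + right)
        basis = new
    return basis

# One interior knot gives d = 1 + 4 = 5 cubic basis functions.
knots = [0.0] * 4 + [0.5] + [1.0] * 4
index_values = [0.0, 0.25, 0.5, 0.9, 1.0]   # hypothetical rescaled index values
design = [bspline_basis(u, knots) for u in index_values]   # one row per subject
for row in design:
    print([round(b, 4) for b in row])
```

Each row of `design` plays the role of one row of the evaluation matrix for a single treatment group. The normalized basis functions are nonnegative and sum to one at every point of the knot range.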

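For sample data, SIMML (2) can be represented by

$$Y = Z_\alpha \eta + \epsilon, \tag{6}$$

where $Y = (Y_1^\top, \dots, Y_K^\top)^\top$ is the observed response vector with $Y_t \in \mathbb{R}^{n_t}$, $Z_\alpha = \mathrm{diag}(Z_{1,\alpha}, \dots, Z_{K,\alpha})$ is the block-diagonal B-spline design matrix of the treatment-specific B-spline evaluation matrices $Z_{t,\alpha}$, $\eta = (\eta_1^\top, \dots, \eta_K^\top)^\top$ is the B-spline coefficient vector, and $\epsilon$ is a mean zero noise vector with covariance matrix $\sigma^2 I_n$.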

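For a given α, we define the n × n single-index projection matrix to be $S_\alpha = Z_\alpha (Z_\alpha^\top Z_\alpha)^{-1} Z_\alpha^\top$, where $Z_\alpha$ is the block-diagonal B-spline design matrix in (6). Assuming Gaussian noise and treating η as a nuisance parameter, the negative “profile” loglikelihood of α, up to a constant multiplier, is

$$Q(\alpha) = n^{-1} \, \| (I_n - S_\alpha) Y \|^2. \tag{7}$$

We define the profile likelihood estimator of the index parameter α as

$$\hat{\alpha} = \arg\min_{\alpha \in \Theta} Q(\alpha). \tag{8}$$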

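Each link function gt(·) in (2) can be estimated by

$$\hat{g}_t(u) = Z_t(u)^\top (Z_{t,\alpha}^\top Z_{t,\alpha})^{-1} Z_{t,\alpha}^\top Y_t, \quad t \in \{1, \dots, K\}, \tag{9}$$

where the B-spline evaluation matrix $Z_{t,\alpha}$ is evaluated at $\alpha = \hat{\alpha}$, and $Y_t$ is the response vector of the tth treatment group.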

To solve (8), we can perform a procedure that alternates between the following two steps: first, for a fixed α, estimate each link function gt(·) in (2) by (9), where the B-spline evaluation matrix is taken at α; second, for fixed estimated links $\hat{g}_t$, perform iteratively reweighted least squares (IRLS) to approximately solve (8) for α. These two steps are iterated until convergence.
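The profiling idea can be sketched in a few lines of plain Python. This is a simplified illustration and not the procedure described above: it replaces the cubic B-spline basis with a quadratic polynomial basis, replaces the IRLS update with a grid search over the angle of a two-dimensional α, and uses noise-free toy data. All function names and data-generating choices are hypothetical.

```python
import math
import random

def solve(A, b):
    """Solve the square system A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]
    for c in range(n):
        piv = max(range(c, n), key=lambda r: abs(M[r][c]))
        M[c], M[piv] = M[piv], M[c]
        for r in range(c + 1, n):
            f = M[r][c] / M[c][c]
            for j in range(c, n + 1):
                M[r][j] -= f * M[c][j]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][j] * x[j] for j in range(r + 1, n))) / M[r][r]
    return x

def basis(u):
    # Quadratic polynomial basis, a stand-in for the cubic B-spline basis.
    return [1.0, u, u * u]

def group_rss(alpha, X, y):
    """Least-squares fit of y on basis(alpha'x); returns the residual sum of squares."""
    Z = [basis(sum(a * x for a, x in zip(alpha, row))) for row in X]
    d = len(Z[0])
    ZtZ = [[sum(z[i] * z[j] for z in Z) for j in range(d)] for i in range(d)]
    Zty = [sum(z[i] * yi for z, yi in zip(Z, y)) for i in range(d)]
    eta = solve(ZtZ, Zty)   # link coefficients profiled out for this alpha
    return sum((yi - sum(e * zi for e, zi in zip(eta, z))) ** 2 for z, yi in zip(Z, y))

def profile_fit(groups, n_grid=180):
    """Grid search over alpha = (cos th, sin th), th in (0, pi), minimizing the total RSS."""
    best = None
    for k in range(1, n_grid):
        th = math.pi * k / n_grid
        alpha = (math.cos(th), math.sin(th))   # unit norm, last component positive
        q = sum(group_rss(alpha, X, y) for X, y in groups)
        if best is None or q < best[0]:
            best = (q, alpha)
    return best[1]

# Noise-free toy data: two treatment groups sharing the index direction alpha0.
rng = random.Random(0)
alpha0 = (math.cos(math.pi / 3), math.sin(math.pi / 3))
links = [lambda u: u * u, lambda u: -u]
groups = []
for g in links:
    X = [(rng.gauss(0, 1), rng.gauss(0, 1)) for _ in range(40)]
    y = [g(alpha0[0] * x1 + alpha0[1] * x2) for x1, x2 in X]
    groups.append((X, y))

alpha_hat = profile_fit(groups)
print(alpha_hat)
```

For every candidate α the link coefficients are obtained in closed form by least squares within each group, so the outer search is over α alone. A grid search is only practical for very small p; with more covariates a gradient-based or IRLS-type update of α would take its place.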

4. Asymptotic theory

In this section, we establish the asymptotic results of the profile estimator in (8) under possible misspecification, when the true model is assumed to be (4). Let us denote the pth component of the vector α0 in (5) by α0,p (> 0, since α0 ∈ Θ). By the completeness property of , we can always find some c > 0 such that α0,p ≥ c, and therefore, without loss of generality, we may assume that α0 is in a compact set $\Theta_c = \{\alpha \in \Theta : \alpha_p \geq c\}$, with an appropriate choice of small c > 0. Further, to avoid the complication from the restricted parameter space Θc, we can consider instead the “pth component removed” R(α) in (5), as follows:

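$$R(\alpha_{-p}) = R\big(\alpha_1, \dots, \alpha_{p-1}, \sqrt{1 - \|\alpha_{-p}\|^2}\,\big), \tag{10}$$

where a vector $\alpha_{-p} = (\alpha_1, \dots, \alpha_{p-1})^\top$ lives inside the unit ball. Let the “pth component removed” value of α0 in (5) be denoted by α0,−p.

Similarly, let the “pth component removed” value of the corresponding profile estimator in (8) be denoted by $\hat{\alpha}_{-p}$. The following conditions are assumed for the asymptotic results.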

Assumption 1. The objective function R(α−p) in (10) is locally convex at α0,−p, and its Hessian function, H(α−p) evaluated at α−p = α0,−p, is positive definite, with bounded eigenvalues.

Assumption 2. The underlying mean functions mt(X) in (4) are in , t ∈ {1, …, K} for some finite a > 0, where is the p-dimensional ball with center 0 and radius a and .

Assumption 3. The probability density function of X, , and there exist constants 0 < cf < Cf such that , if and fX(x) = 0, if .

Assumption 4. The underlying noise ϵ in (4) satisfies with , and there exists a constant Cϵ > 0, such that . For each group t ∈ {1, …, K}, the standard deviation function σt(x) is continuous in , with , for some constants .

Assumption 5. The number of interior knots, Nt(= dt − 4), in the cubic B-spline approximation of the link function gt(·) for the tth treatment group satisfies: , t ∈ {1, …, K}.

The first theorem establishes consistency of the estimator (8) and the second theorem establishes asymptotic normality of the estimator for α0,−p.

Theorem 1. (Consistency) Under Assumptions 1 to 5, $\hat{\alpha} \to \alpha_0$ almost surely, where α0 is defined in (5).

Theorem 2. (Asymptotic Normality) Under Assumption 1 to 5, in distribution, with asymptotic covariance matrix , where the matrix is the Hessian matrix evaluated at α−p = α0,−p and the matrix is defined in the Appendix.

The proofs of the theorems are given in the Appendix.

5. Simulation illustrations

5.1. Performance on estimating treatment decision rules

A treatment decision function, $\mathcal{D}(X): \mathbb{R}^p \to \{1, \dots, K\}$, mapping a subject’s baseline characteristics to one of K available treatments, defines a treatment decision rule for the single decision time point [1, 2, 18, 19, 20]. Given covariates X, a treatment decision rule based on SIMML is $\mathcal{D}(X) = \arg\max_{t \in \{1, \dots, K\}} g_t(\alpha^\top X)$. We investigate the performance of the estimated treatment decision rules of the form $\hat{\mathcal{D}}(X) = \arg\max_{t \in \{1, \dots, K\}} \hat{E}[Y \mid X, T = t]$, where the conditional expectation is obtained from various modeling procedures.
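A SIMML-based decision rule picks the treatment whose link function is largest at the subject’s index value, since higher outcomes are preferred. The sketch below illustrates this with two made-up linear links; the function name `decision_rule`, the links, and the index vector are hypothetical.

```python
def decision_rule(x, alpha, links):
    """Return the treatment label (1..K) whose link value at the index alpha'x is largest."""
    u = sum(a * xi for a, xi in zip(alpha, x))
    values = [g(u) for g in links]
    # Ties are broken in favor of the smaller treatment label.
    return 1 + max(range(len(links)), key=lambda t: values[t])

# Hypothetical fitted links g_1, g_2 that cross at u = 0.
links = [lambda u: 1.0 + 0.5 * u, lambda u: 1.0 - 0.5 * u]
alpha = [0.6, 0.8]

first = decision_rule([1.0, 1.0], alpha, links)     # index u = 1.4
second = decision_rule([-1.0, -1.0], alpha, links)  # index u = -1.4
print(first, second)
```

Because both links depend on the covariates only through the common index, the rule reduces to comparing curves on a one-dimensional axis, with the decision changing where the curves cross.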

In our simulation settings, the baseline covariate vector , with ΨX having 1′s on the diagonal and 0.1 everywhere else. We consider K = 2 with different noise levels for the two treatment groups: , The outcome data are generated under the following fairly broad model

| (11) |

As a function of the index μ⊤X, M is referred to as the “main effect” of X. As functions of the other index α⊤X, the Ct’s are referred to as the “contrast” functions that define the treatment-by-X interaction. Here, we will use the parameters ν and ω to control the degree of non-linearity of M and Ct’s, respectively.

An optimal treatment decision rule depends only on the Ct’s, not on M or the ϵt’s. The parameter δ in (11) controls the relative contribution of the “signal” component to the variance in the outcomes, and is calibrated to obtain a relative contribution of 0.35. The contrast functions Ct’s in (11) are set to

| (12) |

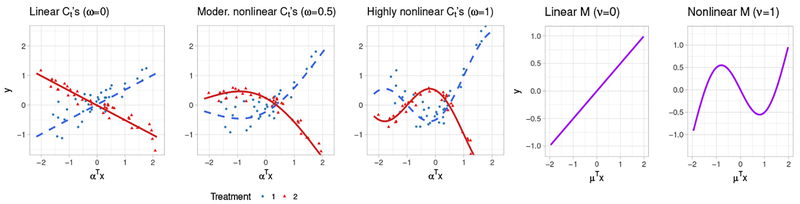

where, if ω = 0, then the Ct’s are linear functions; and they are more nonlinear for larger values of ω. We considered three cases, corresponding to linear (ω = 0), moderately nonlinear (ω = 0.5), and highly nonlinear (ω = 1) Ct’s, respectively, illustrated in the first three panels of Figure 1. We set the main effect function M in (11) to be

where, as ν increases, the degree of nonlinearity in the main effect function M increases. We considered two cases, ν = 0, corresponding to a linear M; and ν = 1, corresponding to a nonlinear M, illustrated in the fourth and the fifth panels of Figure 1. We set p = 5 and p = 10 with α = (1, …, 5)⊤ and α = (1, …, 10)⊤, respectively, each standardized to have norm one. We set μ to be proportional to a vector of 1’s, standardized to have norm one. Two treatment groups were considered, with equal sample sizes n1 = n2 = 40. We used d1 = d2 = 5 B-spline basis functions to approximate the link functions. The treatment decision rules were based on the following regression models: (i) SIMML (2) estimated from maximizing the profile likelihood; (ii) the K-Index model (1) fitted separately for each treatment group by the B-spline approach of [17], denoted as K-Index; (iii) the linear GEM model ([3]) estimated under the criterion of maximizing the difference in the treatment-specific slope, denoted as linGEM; and (iv) linear regression models fitted separately for each treatment group under the least squares criterion, denoted as K-LR. For each scenario, using the outcome Y from a simulated test set (of size $10^5$), we computed the proportion of correct decisions (PCD) of the treatment decision rules estimated from each method, and the methods were compared in terms of PCD using boxplots over 200 training datasets.
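The proportion of correct decisions can be computed as the share of test subjects for which an estimated rule agrees with a reference rule. The sketch below assumes that correctness is judged against the optimal rule implied by the true contrast functions; the two rules and the test covariates are made up for illustration.

```python
import random

def pcd(rule, optimal_rule, test_X):
    """Proportion of test points on which `rule` agrees with `optimal_rule`."""
    agree = sum(1 for x in test_X if rule(x) == optimal_rule(x))
    return agree / len(test_X)

rng = random.Random(1)
test_X = [(rng.gauss(0, 1), rng.gauss(0, 1)) for _ in range(10000)]

# Hypothetical rules: the optimal rule uses both covariates, the estimated rule only the first.
optimal = lambda x: 1 if x[0] + x[1] > 0 else 2
estimated = lambda x: 1 if x[0] > 0 else 2

pcd_hat = pcd(estimated, optimal, test_X)
print(pcd_hat)
```

With independent standard normal covariates, these two rules disagree on a wedge covering one quarter of the plane, so the PCD in this toy setting is about 0.75 in expectation.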

Figure 1:

The first panel shows the linear contrast Ct’s (ω = 0), the second panel the moderately nonlinear contrast Ct’s (ω = 0.5), and the third panel displays highly nonlinear contrast Ct’s (ω = 1). Data points are generated from model (11) with δ = 0 and p = 5. The fourth and the fifth panels show the linear (ν = 0) and the nonlinear main effect M (ν = 1), respectively.

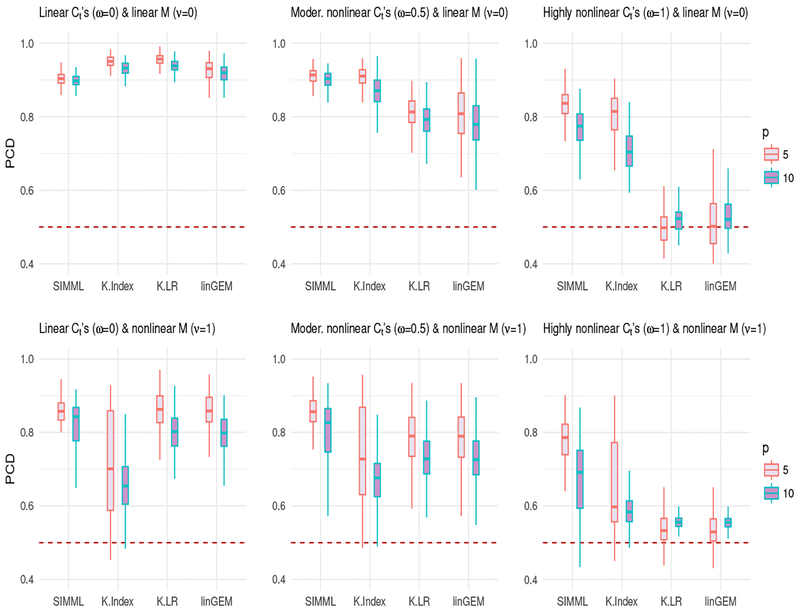

Figure 2 shows that SIMML outperforms all other methods, except for the case under the linear M and Ct’s in which all four approaches perform well. The K-Index model is clearly second best under the linear M (ν = 0) (the top panels) with the nonlinear Ct’s (ω = 0.5 and ω = 1). However, with a more complex M function (ν = 1) (the bottom panels), the performance of the K-Index approach is considerably worse than that of SIMML. Given a relatively small sample size and a complex main effect, the SIMML, which emphasizes the treatment contrasts through the common single-index, is more effective in estimating optimal treatment decisions than the K-Index model. As would be expected, additional complexity in the contrasts Ct’s (ω = 0.5 and ω = 1) has a greater effect on the performance of the more restrictive models (linGEM and K-LR) than it does on the flexible models (SIMML and K-Index). The number of covariates, p, also has a clear impact on the performance of all methods. As p changes from 5 (red) to 10 (blue), the deterioration in performance is more pronounced for the K-Index model, which requires separate fits for each treatment and thus involves estimation of more parameters (K(p − 1) + Kd), than for the more parsimonious SIMML, which has fewer parameters (p − 1 + Kd) to be estimated.
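The parameter counts quoted above can be tabulated for the simulation settings (K = 2 groups, d = 5 basis functions per link). The helper names below are hypothetical.

```python
def n_params_k_index(K, p, d):
    """K separate indices with p - 1 free components each, plus K links with d coefficients."""
    return K * (p - 1) + K * d

def n_params_simml(K, p, d):
    """One shared index with p - 1 free components, plus K links with d coefficients."""
    return (p - 1) + K * d

for p in (5, 10):
    print(p, n_params_k_index(2, p, 5), n_params_simml(2, p, 5))
```

Moving from p = 5 to p = 10 adds 10 parameters to the K-Index model (18 to 28) but only 5 to the SIMML (14 to 19), which matches the sharper deterioration of the K-Index model described above.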

Figure 2:

Boxplots of the proportion of correct decisions (PCD) of the treatment decision rules obtained from 200 training datasets for each of the four methods. Each panel corresponds to one of the six combinations of ω ∈ {0, 0.5, 1} and ν ∈ {0, 1}: the shape of the contrast functions Ct’s controlled by ω; the shape of the main effect function M controlled by ν; the number of predictors p ∈ {5, 10}. The sample sizes are n1 = n2 = 40.

5.2. Coverage probability of asymptotic 95% confidence intervals

The next simulation experiment assesses the coverage probability of the asymptotic confidence intervals derived from Theorem 2. The data were generated under model (11) with δ = 0 (i.e., no main effect M) with p = 5 covariates. We set the SIMML index vector α(= α0) to be stepwise increasing: (1, …, 5)⊤, normalized to have unit L2 norm. The associated contrast functions, Ct’s, are given by (12). As in Section 5.1, we consider three levels of the curvature of the contrasts, corresponding to linear (ω = 0), moderately nonlinear (ω = 0.5), and highly nonlinear (ω = 1) contrasts (see Figure 1). In (11), the standard deviations of the noise ϵt were set to 0.5. We set the sample size n = n1 + n2 with n1 = n2. With varying n ∈ {50, 100, 200, 400, 800, 1600, 3200}, the number of interior knots used in the B-spline approximation, Nt, was determined to be , as recommended by [17] ([v] denotes the integer part of v). Two hundred datasets were generated for all combinations of n and ω. For each (i.e., the jth) component αj of α, the proportion of times the 95% asymptotic confidence interval contains the true value of αj was recorded in the Table C.2 in the Appendix. Notice that the 5th (i.e., the pth) element is estimated to satisfy the constraint α ∈ Θ in Theorem 2. To obtain the confidence intervals for the 5th component, we applied Theorem 2 with the 4th component removed (without loss of generality), and obtained the confidence intervals for the 5th component.

We note that the choice of is an approximation to the Nt of Assumption 5 which requires , as such Nt can only be obtained for a very large nt. Nevertheless, in Table C.2 in the Appendix, as the sample size n(= n1+n2) increases, the “actual” coverage probability gets closer to the “nominal” coverage probability, with better coverage results for the linear and the moderately nonlinear contrasts (ω ∈ {0, 0.5}) compared to the highly nonlinear contrasts (ω = 1).

6. Application to data from a randomized clinical trial

Major depressive disorder afflicts millions and, according to the World Health Organization, it is the leading cause of disability worldwide. It is a highly heterogeneous disorder; however, no individual biological or clinical marker has demonstrated sufficient ability to match individuals to efficacious treatment. Here we illustrate the utility of the proposed SIMML method for estimating a composite biomarker and treatment decision rules, with an application to data from a randomized clinical trial comparing an antidepressant and placebo for treating depression.

Of the 166 subjects, 88 were randomized to placebo and 78 to the antidepressant. In addition to standard clinical assessments, patients underwent neuropsychiatric testing prior to treatments. Table 1 summarizes the information on p = 9 baseline patient characteristics, X = (x1, …, x9)⊤. These baseline covariates were considered as potential treatment effect modifiers, and standardized to have unit variance. The treatment outcome Y was the improvement in symptom severity from week 0 (baseline) to week 8 and thus larger values of the outcome were better.

Table 1: Depression randomized clinical trial:

Description of the p = 9 baseline covariates (means and SDs); the estimated values (“Indiv. Value”) of treatment decision rules from each individual covariate, using either the B-spline regression (“Nonpar.”, in the third column) or the linear regression (“Linear”, in the fourth column); the estimated single-index coefficients (in the last three columns), and the values of the associated treatment decision rules from the three methods (in the bottom row).

| Patient characteristics | Mean (SD) | Indiv. Value: Nonpar. | Indiv. Value: Linear | Coefficient αj: SIMML* | Coefficient αj: SIMML | Coefficient αj: linGEM |
|---|---|---|---|---|---|---|
| (x1) Age at evaluation | 38.00 (13.84) | 8.56 | 8.24 | −0.53 | −0.50 | −0.43 |
| (x2) Severity of depression | 18.80 (4.29) | 6.85 | 7.07 | −0.07 | −0.13 | −0.37 |
| (x3) Dur. MDD (month) | 38.19 (53.17) | 7.42 | 7.33 | 0.08 | −0.18 | 0.20 |
| (x4) Age at MDD | 16.46 (6.09) | 6.29 | 6.95 | 0.23 | 0.05 | 0.31 |
| (x5) Axis II | 3.92 (1.43) | 7.16 | 7.11 | 0.23 | 0.20 | 0.17 |
| (x6) Word Fluency | 37.42 (11.68) | 7.64 | 7.11 | 0.11 | 0.09 | 0.27 |
| (x7) Flanker RT | 59.51 (26.63) | 8.19 | 8.39 | 0.12 | 0.23 | −0.18 |
| (x8) Post-conflict adjus. | 0.07 (0.12) | 6.73 | 7.23 | −0.30 | −0.29 | −0.18 |
| (x9) Flanker Accuracy | 0.22 (0.15) | 7.89 | 8.37 | 0.70 | 0.70 | 0.59 |
| Value from single-index model | | | | 9.34 | 8.72 | 8.22 |

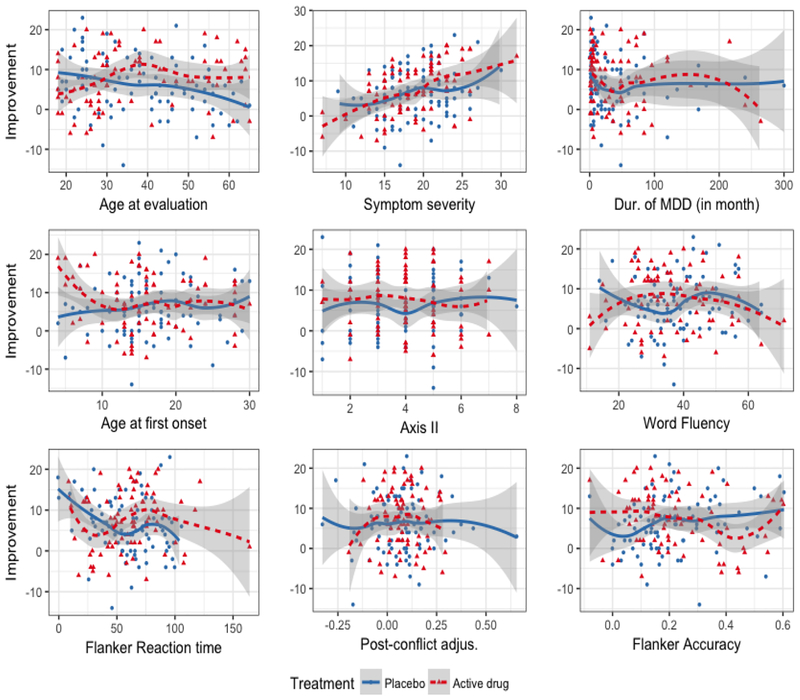

Figure 3 shows the treatment outcomes Y against each of the 9 baseline covariates, for the placebo group (blue) and the active drug group (red). The estimated B-spline approximated curves for each individual covariate are shown with the associated 95% confidence bands: the solid blue curves for the placebo group and the dotted red curves for the active drug group. In Figure 3, each individual covariate has at most a small treatment modifying effect, as its treatment-specific curves do not differ much.

Figure 3: Depression randomized clinical trial:

For each of the 9 baseline covariates individually, treatment-specific spline approximated regression curves with 5 basis functions are overlaid on to the data points; the placebo group is the blue solid curve and the active drug group is the red dotted curve. The associated 95% confidence bands of the regression curves were also plotted.

One natural measure for the effectiveness of a treatment decision rule $\mathcal{D}$ is called the “value” (V) of the rule [20], which is defined as the expected mean outcome if everyone in the population receives treatment according to that rule:

$$V(\mathcal{D}) = E\big[\,E[Y \mid X, T = \mathcal{D}(X)]\,\big]. \tag{13}$$

In the third and the fourth columns of Table 1, “Indiv. Value” refers to the estimated “value” of a decision rule estimated from each of the 9 individual covariates, using the following two approaches for estimating $\mathcal{D}$: the B-spline regressions of the treatment-specific outcome on each individual covariate (“Nonpar.” in the third column of Table 1) as suggested by the overlaid curves in Figure 3, and the linear regressions of the treatment-specific outcome on each individual covariate (“Linear” in the fourth column of Table 1). The value (13) of $\mathcal{D}$ can be estimated by the inverse probability weighted estimator [21]:

| (14) |

using a testing set, say, , where, if one uses only the jth covariate for estimating , then Xi = xij. The data were randomly split into a training set and a testing set with a ratio of 10 to 1. This splitting was performed 500 times, each time estimating on the training set and computing (14) from the testing set. Values (14) are averaged over the 500 splits.
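An inverse probability weighted value estimate on a testing set can be sketched as follows. The exact form of (14) is not reproduced here; the sketch assumes the normalized variant, in which subjects whose assigned treatment matches the rule’s recommendation are weighted by the inverse of their known randomization probability. The function name and the toy data are hypothetical.

```python
def ipw_value(y, treat, recommended, propensity):
    """Normalized inverse-probability-weighted estimate of the value of a decision rule."""
    num = 0.0
    den = 0.0
    for yi, ti, di in zip(y, treat, recommended):
        if ti == di:                      # only subjects treated as the rule recommends
            w = 1.0 / propensity[ti]      # known randomization probability of arm ti
            num += w * yi
            den += w
    if den == 0.0:
        raise ValueError("no test subject received the recommended treatment")
    return num / den

# Toy testing set with equal randomization to the two arms.
y = [10.0, 4.0, 6.0, 8.0]
treat = [1, 1, 2, 2]
recommended = [1, 2, 2, 1]
value_hat = ipw_value(y, treat, recommended, {1: 0.5, 2: 0.5})
print(value_hat)
```

In the toy data only the first and third subjects received the recommended treatment, so the estimate is the weighted mean of their outcomes. Repeating the calculation over random training and testing splits and averaging mirrors the splitting scheme described above.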

The SIMML can be made more efficient by incorporating a main effect component β⊤D(X) in the model, i.e., we can consider , for an appropriate vector-valued function D(X). If the n × q matrix denotes the evaluation of D(X) on the sample data, then for each α, the negative “profile” loglikelihood (7) under this extended model (with Gaussian outcome), up to constants, is , where . In this analysis, we took D(X) = X. We refer to this approach as “main effect adjusted” profile likelihood SIMML and denote it by SIMML*.

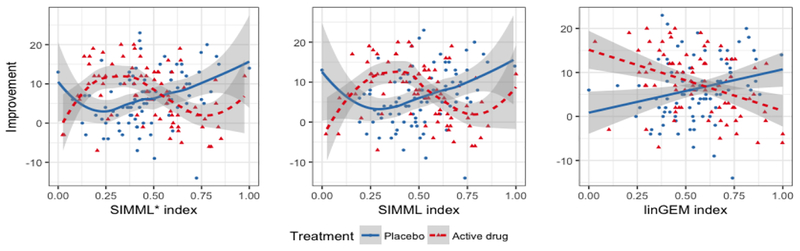

In Table 1, the last three columns show the estimated single-index coefficients α obtained by two different SIMMLs (SIMML* and SIMML) and the linear GEM (linGEM) which restricts the link gt(·) to be a linear function. In Figure 4, the estimated pairs of link functions are plotted against the approach-specific single-index α⊤X, obtained from applying the two SIMML approaches and the linear GEM approach. From Figures 3 and 4, it appears that the index α⊤X exhibits a stronger moderating effect of treatment than the individual covariates. Also, the shapes of the regression curves from the SIMML approaches appear to capture a nonlinear treatment-by-index interaction effect, especially due to some non-monotone relationship between the index and the outcome in the active drug group.

Figure 4: Depression randomized clinical trial:

Pair of estimated link functions (g1 and g2) obtained from SIMML with the “main effect adjusted” profile likelihood (first panel), SIMML with the (main effect un-adjusted) profile likelihood (second panel), and the linear GEM model estimated under the criterion maximizing the difference in the linear regression slopes (third panel), respectively, for the placebo group (blue solid curves) and the active drug group (red dotted curves). The 95% confidence bands were constructed conditioning on the single-index coefficient α. For each treatment group, the observed outcomes are plotted against the estimated single-index.

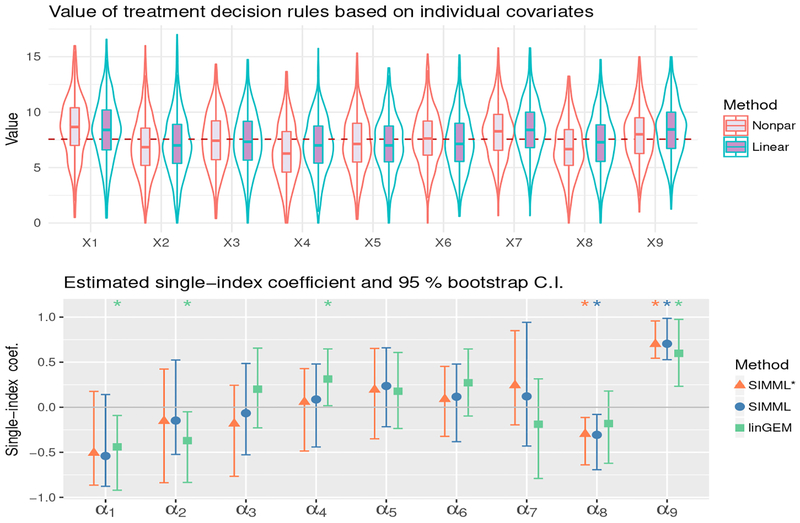

In Figure 5, we illustrate the single-index coefficient estimates from each of the methods, and the associated 95% confidence intervals obtained from a bias-corrected and accelerated (BCa, [22]) bootstrap with 500 replications. The coverage of the asymptotic-based confidence intervals for this sample size is not expected to be very good (based on the simulation results in Section 5.2) and thus instead we used bootstrap confidence intervals. The magnitude of the estimated coefficients α1, …, α9 reflects the relative importance of the covariates x1, …, x9 in determining a composite treatment effect modifier α⊤X.

Figure 5: Depression randomized clinical trial:

Top panel: Violin plots of the estimated values of treatment decision rules based on each of the individual covariates x1, …, x9, determined from univariate nonparametric and linear regressions, respectively, obtained from 500 randomly split testing sets (with higher values preferred). Bottom panel: The estimated index coefficients α1, …, α9, associated with the covariates x1, …, x9, and the 95% confidence intervals for each of the three methods, obtained from BCa bootstrap with 500 replications. An estimated significant coefficient is marked with * on top of each confidence interval.

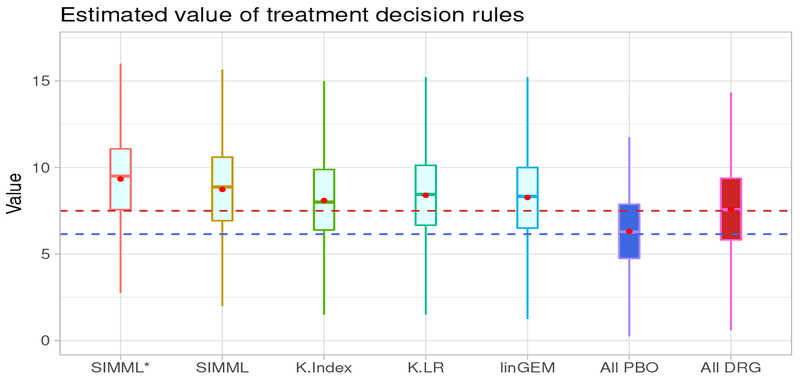

In this analysis, the incorporation of the “main effect” component improved the value of treatment decision rules determined from the proposed SIMML method, as illustrated in the boxplots in Figure 6. We compared the two SIMML approaches (SIMML* and SIMML), the linear GEM (linGEM), and the two approaches based on separate regression models for each treatment group (K-Index and K-LR), with respect to the estimated values (14) of the treatment decision rules. For comparison, we also included the decision to treat everyone with placebo (All PBO) and the decision to treat everyone with the active drug (All DRG).

Figure 6: Depression randomized clinical trial:

Boxplots of the estimated values of treatment decision rules, obtained from the 500 randomly split testing sets (higher values are preferred). The estimated values (and the standard deviations) are as follows. SIMML*: 9.34 (2.68); SIMML: 8.72 (2.68); K-Index: 8.04 (2.69); K-LR: 8.36 (2.69); linear GEM (linGEM): 8.22 (2.67); All placebo (PBO): 6.17 (2.63); All drug (DRG): 7.57 (2.67).

In Figure 6, in terms of the averaged estimated values (14) estimated from the aforementioned 500 randomly split testing sets, the proposed SIMML approaches outperform all other methods. The visualization (see Figure 4) indicates that the superiority of the active drug over placebo does not linearly decrease with the index, but rather, it appears to remain relatively constant to the left of the crossing point, exhibiting some nonlinear patterns. Finally, we note that the value of the treatment decision rule All PBO was lower than the value of the treatment decision rule All DRG, and that all treatment decision rules that took patient characteristics into account outperformed the decision of treating everyone with the drug (which is standard current clinical practice). In particular, the superiority of the treatment decision rule SIMML* over treating everyone with the drug, in terms of value, was of similar magnitude to the superiority of treating everyone with the drug over treating everyone with placebo. This is a clear indication that patient characteristics can help treatment decisions for patients with depression, and that the more flexible SIMML methods are well suited for developing treatment decision rules. In particular, the proposed methods show that combining patient characteristics that individually have little moderating effect can result in a strong treatment effect modifier, one that exhibits a nonlinear association with the outcome and can help with making treatment decisions.

7. Discussion

The SIMML model (6) can be extended in various ways, for example, by allowing treatment-specific noise variances . Under a Gaussian noise assumption, the B-spline approximated profile log likelihood of α, that profiles out the nuisance parameters and ηt, up to constants, is , in which . The corresponding profile estimator of α is . The estimation can be performed similarly as in the estimation of in (8), but the criterion function Q(α) will be replaced by .

The SIMML can also be extended to generalized linear models (GLM) in which the outcome variable is a member of the exponential family. The standard form of the density is fY(Y; θ, ϕ) = exp {(Y θ − b(θ))/a(ϕ) + c(Y, ϕ)}, with a canonical link function h(·). We can extend the SIMML approach to the GLM setting with treatment-specific natural parameters θt, t ∈ {1, …, K} by modeling the treatment-specific outcomes as a function of a single-index , t ∈ {1, …, K}; gt(·), hence , can be approximated, for example, by B-splines. The approximations can be denoted by for some . As in Section 3, the general strategy of nonlinear maximization of the “profile” likelihood over α ∈ Θ, where we profile out ηt for each value of α, can be employed. The dispersion parameter ϕ can also be profiled out. Other potential extensions involve incorporating variable selection in high-dimensional covariate settings using a regularization method and incorporating functional-valued data objects (such as images) as patient covariates.

An important extension to the SIMML model is to factor out baseline effects common to all treatment groups, by allowing an unspecified main-effect term μ(X) [e.g., 23] in the model. Generally, this can be handled by an “orthogonalization” approach, and the estimation can be performed under the framework of A-learning [1, 24, 25, 26, 27]. To elaborate, consider the following extension of model (2),

| (15) |

where we impose a structural constraint, , which is a sufficient condition for orthogonality between the SIMML, gT (α⊤X), and the unspecified main effect, μ(X) in (15), as in [27]. Optimization of model (15) can be achieved by constrained least squares under this orthogonality constraint and A-learning can be employed for estimating an optimal treatment decision rule, focusing on estimating the interactions in the presence of the unspecified main effect μ(X). The technicalities of this adjustment are treated in a separate work.

Acknowledgement

This work was supported by National Institutes of Health (NIH) grant 5 R01 MH099003.

Appendix A. The asymptotic covariance matrix in Theorem 2

Define , t ∈ {1, …, K}. In Theorem 2, the asymptotic covariance matrix is given as . Here, the Hessian matrix evaluated at α−p = α0,−p has its (j, q)th element given by

| (A.1) |

The matrix evaluated at α−p = α0,−p has its (j, q)th element given by

| (A.2) |

where uα = α⊤X.
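For readers who wish to turn estimates of the two matrices above into standard errors, the sketch below assumes that the asymptotic covariance has the usual sandwich form H⁻¹WH⁻¹, which is what the argument in Appendix B.2 suggests. The function names `sandwich_cov` and `wald_ci` and the numerical inputs are hypothetical, and the matrices would in practice be replaced by plug-in estimates.

```python
import numpy as np

def sandwich_cov(H, W, n):
    """Estimated covariance of the index estimator: H^{-1} W H^{-T} / n."""
    H_inv = np.linalg.inv(H)
    return H_inv @ W @ H_inv.T / n

def wald_ci(estimate, cov, z=1.959964):
    """Componentwise asymptotic 95% confidence intervals."""
    se = np.sqrt(np.diag(cov))
    return estimate - z * se, estimate + z * se

# Hypothetical plug-in values for a (p - 1) = 2 dimensional parameter.
H = np.array([[2.0, 0.5],
              [0.5, 1.0]])     # Hessian of the criterion
W = np.array([[1.0, 0.2],
              [0.2, 0.5]])     # covariance of the score contributions
n = 400
alpha_hat = np.array([0.3, -0.7])

cov = sandwich_cov(H, W, n)
lower, upper = wald_ci(alpha_hat, cov)
```

When W equals H, the sandwich collapses to H⁻¹/n, which is a convenient sanity check for an implementation.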

Appendix B. Proof

Appendix B.1. Proof of Theorem 1

Proof. Let us write and . Under Assumptions 2–4, by the results from A.14 of [28], we have

almost surely, where is the distance between knot points, and Nt (note, Nt = dt − 4) is the number of interior knots on [0, 1]. Since we choose Nt such that for all t ∈ {1, …, K}, under Assumption 5,

almost surely. By the continuous mapping theorem,

almost surely, therefore, we have

| (B.1) |

almost surely. Denote by (Ω, , ) the probability space on which all are defined. By (B.1), for any δ > 0, ω ∈ Ω, there is an integer n*(ω), such that Q(α0, ω) − R(α0) < δ/2, whenever n > n*(ω). Since is the minimizer of Q(α, ω), we have . Also, by (B.1), there exists an integer n**(ω), such that , whenever n > n**(ω). Therefore, whenever n > max(n*(ω), n**(ω)), we have . The strong consistency follows from the local convexity of Assumption 1. □

Appendix B.2. Proof of Theorem 2

Proof. We first derive the expression (A.1) from the Appendix for the Hessian matrix. We can write , where the “pth component removed” function corresponding to the tth treatment is . Applying the chain rule for taking the derivative of Rt(α−p) with respect to αj, we obtain

| (B.2) |

for each j ∈ {1, …, p – 1}. Taking another derivative of (B.2) with respect to αq, for each q ∈ {1, …, p – 1}, again by applications of the chain rule,

| (B.3) |

After summing (B.3) over the groups t ∈ {1, …, K}, weighted by the group probabilities π1, …, πK, evaluated at α = α0, we obtain (A.1).

Next, we examine the asymptotics of the profile estimator . From A.15 of [28] and under Assumptions 2–5, we have

| (B.4) |

almost surely, with , where uα,ti = α⊤Xti and furthermore

| (B.5) |

almost surely, for each group t ∈ {1, …, K}.

Now, we will prove that the estimated score of , where , evaluated at α−p = α0,−p, is represented up to o(n−1/2) almost surely, by a sum of mean-zero independent random variables, which we denote by , i ∈ {1, …, n} where . Let us denote the estimated score function by , where . We will show

| (B.6) |

almost surely, where is the jth component of the score function and is the jth component of the random variable ηi. In order to employ the result (B.4), we first consider the score function defined on the set Θc, i.e., the score function , instead of the “pth component removed” score function defined on , i.e., . We will show that, for some mean-zero independent random variables, which we denote by , i ∈ {1, …, n}, j ∈ {1, …, p},

| (B.7) |

is satisfied almost surely. Let us set the desired mean-zero independent random variable to be , where

which must satisfy the following:

| (B.8) |

We can write

where ξα,i,j,t is defined in (B.4). Therefore, applying the continuous mapping theorem and Slutsky’s theorem to (B.4) leads to the desired result (B.8).

Next, we will show (B.6), the result corresponding to the “pth component removed” estimated score function, on . Considering the linear operator , we note that by the chain rule,

for j ∈ {1, …, p−1}, where Ψj(α−p) denotes the jth component of the gradient of R(α−p). If we set the approximation variable ηi,j of (B.6) to be

| (B.9) |

then we can show

| (B.10) |

by the triangle inequality and the result of (B.7). Since Ψj(α−p) is evaluated at the minimum α0,−p, we have

| (B.11) |

by the local convexity under Assumption 1. Then we obtain the desired result of (B.6), by (B.10) and (B.11).

The uniform consistency of the observed Hessian, , to the population Hessian H(α−p) of (A.1) follows directly from the results of (B.5) under Assumptions 2–5, with applications of the continuous mapping theorem.

Finally, we prove the main result. Consider the random variable introduced in (B.6), and the following parametrization: for each component j ∈ {1, …, p – 1}

Taking the derivative with respect to t, we have by the chain rule

Since by the definition of , it follows that . Therefore, for any particular j = 1, …, p−1, there exists by the mean value theorem, such that

which is just

| (B.12) |

where is a p − 1 dimensional random vector. Writing (B.12) in matrix notation, we have

| (B.13) |

Then, by (B.13) one can write

| (B.14) |

Meanwhile, by (B.6), for each component j ∈ {1, …, p−1} of , we can write

| (B.15) |

almost surely with . The variance-covariance matrix of the random vector evaluated at α−p = α0,−p, where ηi,j are specified in (B.9), is given in (A.2), where it is denoted by Wα0,−p. From (B.15), the central limit theorem ensures that in distribution. Now, by the representation of (B.14) together with an application of Slutsky’s theorem on the observed Hessian, we obtain in distribution, where , which is the desired result of Theorem 2. □

Appendix C. Table for Section 5.2 Coverage probability of asymptotic 95% confidence intervals

Table C.2: The proportion of times (“Coverage”) that the asymptotic 95% confidence interval contains the true value of αj, j ∈ {1, …, 5}, for varying ω ∈ {0, 0.5, 1}, corresponding to linear, moderately nonlinear, and highly nonlinear contrasts, respectively, with varying n (= n1 + n2, where n1 = n2).

| n | ω = 0 (linear) | | | | | ω = 0.5 (moderately nonlinear) | | | | | ω = 1 (highly nonlinear) | | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | α1 | α2 | α3 | α4 | α5 | α1 | α2 | α3 | α4 | α5 | α1 | α2 | α3 | α4 | α5 |
| 50 | 0.36 | 0.45 | 0.43 | 0.44 | 0.42 | 0.49 | 0.46 | 0.45 | 0.46 | 0.40 | 0.59 | 0.58 | 0.57 | 0.55 | 0.52 |
| 100 | 0.64 | 0.67 | 0.72 | 0.68 | 0.64 | 0.76 | 0.75 | 0.80 | 0.72 | 0.76 | 0.89 | 0.82 | 0.84 | 0.75 | 0.73 |
| 200 | 0.77 | 0.77 | 0.79 | 0.78 | 0.73 | 0.88 | 0.83 | 0.82 | 0.85 | 0.79 | 0.92 | 0.88 | 0.84 | 0.78 | 0.81 |
| 400 | 0.85 | 0.90 | 0.87 | 0.87 | 0.85 | 0.88 | 0.88 | 0.88 | 0.82 | 0.85 | 0.95 | 0.88 | 0.84 | 0.79 | 0.78 |
| 800 | 0.95 | 0.92 | 0.91 | 0.89 | 0.88 | 0.92 | 0.89 | 0.92 | 0.89 | 0.87 | 0.92 | 0.91 | 0.83 | 0.78 | 0.81 |
| 1600 | 0.93 | 0.93 | 0.92 | 0.93 | 0.92 | 0.94 | 0.94 | 0.91 | 0.93 | 0.91 | 0.93 | 0.90 | 0.87 | 0.84 | 0.81 |
| 3200 | 0.94 | 0.95 | 0.94 | 0.94 | 0.94 | 0.96 | 0.94 | 0.90 | 0.92 | 0.90 | 0.93 | 0.92 | 0.87 | 0.90 | 0.85 |
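The coverage proportions reported above are of the following kind: over repeated simulated data sets, one records how often the interval estimate ± 1.96 × (standard error) contains the true parameter value. The sketch below shows this calculation for a deliberately simple estimator (a sample mean) rather than the SIMML index estimator; the function `coverage` and all simulation settings are hypothetical.

```python
import numpy as np

def coverage(true_value, estimates, std_errors, z=1.959964):
    """Fraction of replications whose Wald interval contains true_value."""
    lower = estimates - z * std_errors
    upper = estimates + z * std_errors
    return np.mean((lower <= true_value) & (true_value <= upper))

rng = np.random.default_rng(1)
true_mean, n, n_rep = 0.5, 200, 2000

# Each row is one simulated data set of size n.
samples = rng.normal(loc=true_mean, scale=1.0, size=(n_rep, n))
estimates = samples.mean(axis=1)
std_errors = samples.std(axis=1, ddof=1) / np.sqrt(n)

cov_rate = coverage(true_mean, estimates, std_errors)
```

For a well-behaved estimator the resulting proportion should be close to the nominal 0.95, and departures from it at small n indicate that the asymptotic approximation has not yet taken hold.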

References

- [1] Murphy SA, Optimal dynamic treatment regimes, Journal of the Royal Statistical Society: Series B (Statistical Methodology) 65 (2003) 331–355.

- [2] Robins J, Optimal Structural Nested Models for Optimal Sequential Decisions, Springer, New York, 2004.

- [3] Petkova E, Tarpey T, Su Z, Ogden RT, Generated effect modifiers in randomized clinical trials, Biostatistics 18 (2016) 105–118.

- [4] Brillinger DR, A generalized linear model with “Gaussian” regressor variables, in: Bickel PJ, Doksum KA, Hodges JL (Eds.), A Festschrift for Erich L. Lehmann, Wadsworth, New York, 1982.

- [5] Stoker TM, Consistent estimation of scaled coefficients, Econometrica 54 (1986) 1461–1481.

- [6] Powell J, Stock J, Stoker T, Semiparametric estimation of index coefficients, Econometrica 57 (1989) 1403–1430.

- [7] Härdle W, Hall P, Ichimura H, Optimal smoothing in single-index models, Annals of Statistics 21 (1993) 157–178.

- [8] Xia Y, Li W, On single index coefficient regression models, Journal of the American Statistical Association 94 (1999) 1275–1285.

- [9] Horowitz JL, Semiparametric and Nonparametric Methods in Econometrics, Springer, 2009.

- [10] Antoniadis A, Gregoire G, McKeague I, Bayesian estimation in single-index models, Statistica Sinica 14 (2004) 1147–1164.

- [11] Friedman JH, Stuetzle W, Projection pursuit regression, Journal of the American Statistical Association 76 (1981) 817–823.

- [12] Xia Y, A multiple-index model and dimension reduction, Journal of the American Statistical Association 103 (2008) 1631–1640.

- [13] Yuan M, On the identifiability of additive index models, Statistica Sinica 21 (2011) 1901–1911.

- [14] Lin W, Kulasekera KB, Uniqueness of a single index model, Biometrika 94 (2007) 496–501.

- [15] Mackay DJ, Information Theory, Inference, and Learning Algorithms, Cambridge University Press, 2003.

- [16] de Boor C, A Practical Guide to Splines, Springer-Verlag, New York, 2001.

- [17] Wang L, Yang L, Spline estimation of single-index models, Statistica Sinica 19 (2009) 765–783.

- [18] Zhang B, Tsiatis AA, Laber EB, Davidian M, A robust method for estimating optimal treatment regimes, Biometrics 68 (2012) 1010–1018.

- [19] Cai T, Tian L, Wong PH, Wei LJ, Analysis of randomized comparative clinical trial data for personalized treatment selections, Biostatistics 12 (2011) 270–282.

- [20] Qian M, Murphy SA, Performance guarantees for individualized treatment rules, The Annals of Statistics 39 (2011) 1180–1210.

- [21] Murphy SA, A generalization error for Q-learning, Journal of Machine Learning Research 6 (2005) 1073–1097.

- [22] DiCiccio TJ, Efron B, Bootstrap confidence intervals, Statistical Science 11 (1996) 189–228.

- [23] Tian L, Alizadeh A, Gentles A, Tibshirani R, A simple method for estimating interactions between a treatment and a large number of covariates, Journal of the American Statistical Association 109 (508) (2014) 1517–1532.

- [24] Lu W, Zhang H, Zeng D, Variable selection for optimal treatment decision, Statistical Methods in Medical Research 22 (2011) 493–504.

- [25] Shi C, Song R, Lu W, Robust learning for optimal treatment decision with np-dimensionality, Electronic Journal of Statistics 10 (2016) 2894–2921.

- [26] Shi C, Fan A, Song R, Lu W, High-dimensional A-learning for optimal dynamic treatment regimes, The Annals of Statistics 46 (3) (2018) 925–957.

- [27] Jeng X, Lu W, Peng H, High-dimensional inference for personalized treatment decision, Electronic Journal of Statistics 12 (2018) 2074–2089.

- [28] Wang L, Yang L, Spline single-index prediction model, Technical Report. https://arxiv.org/abs/0704.0302.