Summary

Introduction : There has been a rapid development of deep learning (DL) models for medical imaging. However, DL requires a large labeled dataset for training the models. Getting large-scale labeled data remains a challenge, and multi-center datasets suffer from heterogeneity due to patient diversity and varying imaging protocols. Domain adaptation (DA) has been developed to transfer the knowledge from a labeled data domain to a related but unlabeled domain in either image space or feature space. DA is a type of transfer learning (TL) that can improve the performance of models when applied to multiple different datasets.

Objective : In this survey, we review the state-of-the-art DL-based DA methods for medical imaging. We aim to summarize recent advances, highlighting the motivation, challenges, and opportunities, and to discuss promising directions for future work in DA for medical imaging.

Methods : We surveyed peer-reviewed publications from leading biomedical journals and conferences between 2017 and 2020 that reported the use of DA in medical imaging applications, grouping them by methodology, image modality, and learning scenario.

Results : We mainly focused on pathology and radiology as application areas. Among various DA approaches, we discussed domain transformation (DT) and latent feature-space transformation (LFST). We highlighted the role of unsupervised DA in image segmentation and described opportunities for future development.

Conclusion : DA has emerged as a promising solution to deal with the lack of annotated training data. Using adversarial techniques, unsupervised DA has achieved good performance, especially for segmentation tasks. Opportunities include domain transferability, multi-modal DA, and applications that benefit from synthetic data.

Keywords: Medical Imaging Informatics, deep learning, domain adaptation, domain transformation, latent feature space transformation, precision medicine

1 Introduction

Medical imaging informatics utilizes digital image processing and machine learning (ML) to improve the efficiency, accuracy, and reliability of imaging-based diagnosis 1 . During the past few years, medical imaging informatics has made remarkable progress due to the increasing availability of data and the rapid development of deep learning (DL) techniques 2 . However, fundamental challenges hinder the effective deployment of DL models in clinical settings. Annotated medical datasets are limited due to the tedious labeling process 2 and are not easily shared due to privacy concerns 3 4 . While multicenter datasets can increase the amount of annotated data, these datasets suffer from heterogeneity due to varying hospital procedures and diverse patient populations 5 6 . Because of a distribution shift (also known as domain shift) between the available training dataset and the data encountered in clinical practice, a model trained on one dataset may fail on another.

1.1 What is Transfer Learning and Domain Adaptation

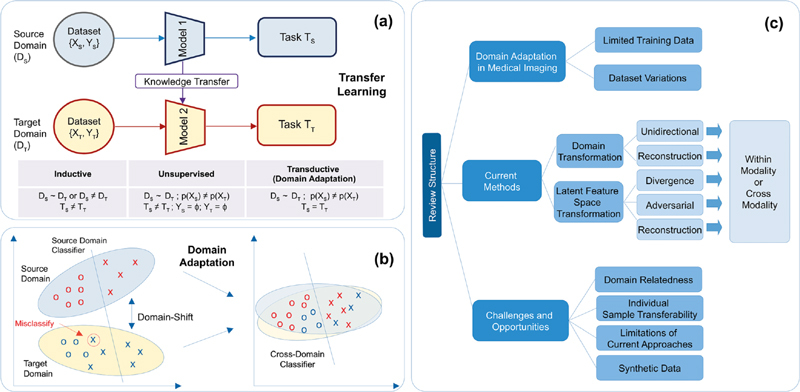

Transfer learning (TL) 7 is a technique that applies knowledge learned from one domain and one task to another related domain and/or another task when there is insufficient labeled data for traditional supervised learning. For medical imaging, a domain usually refers to images or features, while the task refers to segmentation, classification, etc. Mathematically, let $X$ and $Y$ be random variables, where $X$ takes values in a $d$-dimensional feature space with marginal probability distribution $p(X)$ and $Y$ is a label vector with conditional probability distribution $p(Y|X)$. We use $D = \{X, p(X)\}$ to represent a domain and $T = \{Y, p(Y|X)\}$ to represent a task, where $p(Y|X)$ is learned using a function (e.g., a neural network). If the source domain ($D_S$) and the target domain ($D_T$) are similar, i.e., $D_S \sim D_T$, then $D_S$ and $D_T$ can use the same ML model for similar tasks ($T_S \sim T_T$). However, if $D_S \neq D_T$ or $T_S \neq T_T$, the ML model trained on the source domain might have decreased performance on the target domain ($D_T$). TL can be categorized into three types based on the relationships between domains and/or tasks:

Inductive TL requires some labeled data. While the two domains may or may not differ ($D_S \sim D_T$ or $D_S \neq D_T$), the target and source tasks are different ($T_S \neq T_T$), e.g., 3D organ reconstruction across multiple anatomies;

Transductive TL requires labeled source data and unlabeled target data with related domains ($D_S \sim D_T$) and the same task ($T_S = T_T$), while the marginal probability distributions differ ($p(X_S) \neq p(X_T)$), e.g., lung tumor detection across X-ray and computed tomography images;

Unsupervised TL does not require labeled data in any domain and has different tasks ($T_S \neq T_T$), e.g., classifying cancer for different anatomies using unlabeled histology images.

Domain Adaptation (DA) is a transductive TL approach that aims to transfer knowledge across domains by learning domain-invariant transformations, which align the domain distributions (see Figure 1-b ). DA assumes that the source data is labeled, while the target domain can be (a) fully labeled data (i.e., supervised setting); (b) a small set of labeled data (i.e., semi-supervised setting); or (c) completely unlabeled data (i.e., unsupervised setting).

Fig. 1.

a) Transfer learning (TL) and its different types; b) Overview of domain adaptation; c) Organization of this survey paper.

1.2 Using Domain Adaptation to Improve Model Training in Medical Imaging

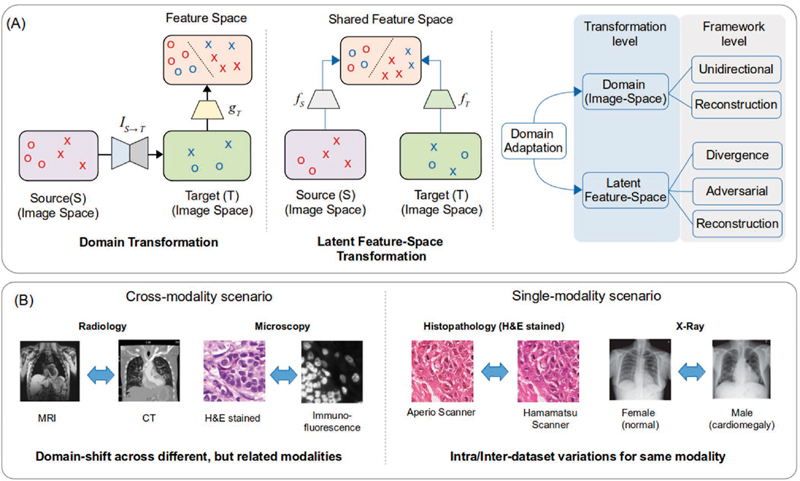

In biomedical imaging, due to the existence of multi-modality imaging (e.g., magnetic resonance imaging (MRI) and positron emission tomography (PET)), DA has advantages over conventional semi-supervised learning or unsupervised learning. Cross-modal DA transfers labels between distinct, but somewhat related, image modalities (e.g., MRI and computed tomography (CT)). Single-modality DA adapts different image distributions within the same modality 8 ( Figure 2 ).

Fig. 2.

a) Summary of domain adaptation methodologies employed in medical imaging; b) Different scenarios encountered in cross-modality 16 28 and single-modality 25 29 domain adaptation.

1.2.1 Challenge of Limited Training Data

Developing accurate DL models requires large-scale training data covering a wide range of input variations. However, in biomedical imaging, due to concerns over patient privacy 3 4 and the lack of manual annotation of images by clinical experts 9 , few well-labeled datasets are available for training. This situation is worse for rare diseases, where a low number of positive cases leads to significantly unbalanced datasets 10 .

DA can mitigate the lack of well-annotated data by augmenting target domain data, either by generating synthetic labeled images from source images or by aligning source and target image features and training a task network on them 11 12 112 . For example, MRI achieves higher resolution for soft tissue imaging compared to CT 13 . As such, MRI is preferred for neuroimaging, and brain MRI annotations are easily accessible. On the other hand, CT imaging is fast and less expensive and may be preferred in trauma situations 14 . Thus, through DA, annotated MRI scans from historical subjects can be combined with CT to reduce the number of image acquisitions needed. As another example, Hematoxylin and Eosin (H&E) stained images are widely available, while immunohistochemistry (IHC) images, which clearly highlight nuclei via specific biomarkers 15 , are not. DA methods can translate H&E-stained images to the IHC domain, making nuclei detection easier 16 .

1.2.2 Challenge of Dataset Variations

To train robust DL models, many studies rely on images aggregated from multiple institutes, such as NCI/NIH The Cancer Genome Atlas (TCGA) 17 and Stanford’s large chest radiograph dataset, CheXpert 18 . The data in these repositories are heterogeneous due to varying hospital processes (image acquisition platforms or data preparation protocols), different demographics of patient populations (ethnicity, gender, age), or different pathological conditions 5 . Specifically, pathology images have stain variations 19 , while MRIs are susceptible to varying magnetic fields and contrast agents 20 . Such intra- or inter-dataset variations cause the training and test datasets to have different distributions, resulting in a domain shift that impacts model generalization 21 22 . Diversifying the training data by creating larger datasets is a possible solution, but recent medical imaging studies 21 23 24 have shown that it does not guarantee improved generalization. DA methods try to minimize the dataset variation while retaining the aspects that are discriminative for the task classifier, and have been shown to generalize well in image segmentation tasks for multiple modalities 25 26 27 .

The organization of the survey paper is illustrated in Figure 1-c . Section 2 introduces our survey methodology in identifying and selecting relevant medical imaging studies. Section 3 presents various DL-based frameworks in the DA literature and the current best practices for medical imaging. Section 4 summarizes the current DA challenges and future opportunities.

2 Materials and Methods

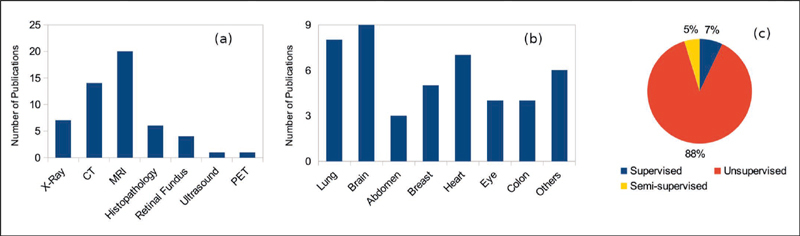

In this survey, we examined publications between 2017 and 2020. We considered leading peer-reviewed journals and conference proceedings, including IEEE Transactions on Medical Imaging, the International Conference on Medical Image Computing and Computer Assisted Intervention (MICCAI), Medical Image Analysis (Elsevier), IEEE International Symposium on Biomedical Imaging (ISBI), Conference on Computer Vision and Pattern Recognition (CVPR), Association for the Advancement of Artificial Intelligence (AAAI), and the International Conference on Medical Imaging with Deep Learning (MIDL). Additionally, we identified a few relevant works from arXiv and PubMed that were not found in the venues above. Our search keywords included ‘Domain Adaptation’, ‘Transfer Learning’, ‘Cross Modality’, ‘Multimodal’, ‘Medical Image Adaptation’, and ‘Medical Images’. We found that radiology and pathology were the most common application areas (characteristics of our results are illustrated in Figure 3-a and Figure 3-c ). Cross-modality segmentation is observed more extensively in radiology compared to other areas. MRI, CT, and PET maintain better relative morphological consistency of organs and provide complementary information for disease detection 30 . On the other hand, histopathology images, which contain smaller objects such as nuclei, are prone to artifacts during cross-modal translation 31 .

Fig. 3.

Categorization of medical imaging DA publications as per a) imaging modality; b) anatomy; c) learning scenarios

3 Deep Learning-based Domain Adaptation

DL-based DA is achieved using various representation learning strategies such as aligning the domain distributions, learning a mapping between domains, separating normalization statistics, and ensemble-based approaches 32 33 34 . As shown in Figure 2-a , there are two families of DA approaches for medical imaging: (a) Domain Transformation (DT-DA) translates images from one domain to the other domain, so that the obtained models can be directly applied to all images, and (b) Latent Feature Space Transformation (LFST-DA) aligns images from both domains in a common hidden feature space to train the task model on top of the hidden features. These two approaches can work together to improve adaptation performance by preserving finer semantic details 35 36 . We have summarized the application of these DA methods in medical imaging in Table 1 .

Table 1. Summary of DA studies in medical imaging categorized by DA methodology, task, modality, anatomy, and learning scenarios (S: Segmentation; C: Classification; 3DR: 3D Reconstruction).

3.1 Domain Transformation in Domain Adaptation

DT-DA translates images from one domain to another domain (i.e., image-to-image translation 37 ). Such translation is typically done using generative models (e.g., generative adversarial networks (GANs)) 38 , which achieve pixel-level mapping by learning the translation at a semantic level. The translation direction is usually decided by the relative ease of translation and modeling in a modality 39 . For example, Dou et al., 36 observed lower performance in adapting CT to MRI for cardiac images, since cardiac MRI is more challenging to segment. The task networks are trained either independently of or jointly with the image-translation network, using the labeled source images 35 . DT-DA performs alignment in the image space instead of the latent feature space, leading to better interpretability through visual inspection of synthesized images 40 , enforcing semantic consistency, and preserving low-level appearance aspects using shape-consistency 41 and structural-similarity constraints 42 .

3.1.1 Unidirectional Translation

Unidirectional translation maps images from the source domain to the target domain or vice versa using GANs ( e.g ., vanilla GAN and conditional GAN (cGAN)) 43 . Compared with vanilla GAN, cGAN conditions the training of the generator and discriminator on extra information such as the class label. Yoo et al ., 44 proposed pixel-level domain transfer using cGAN with a domain discriminator. Liu and Tuzel 45 utilized GANs coupled with shared weights to generate paired synthetic source and target images sharing high-level abstraction. Bousmalis et al ., 40 leveraged cGAN with the content-similarity loss to generate realistic target images and jointly trained the GAN discriminator with the task network.

Unidirectional translation has been applied to remove dataset variations. For example, Bentaieb et al., 29 designed a stain normalization approach, using a task conditional GAN to translate H&E images to a reference stain. Madani et al., 46 proposed a semi-supervised approach that uses a GAN discriminator for cardiac abnormality classification in minimally labeled X-ray images, and showed that the adversarial loss could reduce domain overfitting. Mahmood et al., 39 translated real endoscopy images to graphically rendered synthetic colon images with ground-truth annotations, for depth estimation during surgical navigation. Unidirectional translation has also been applied to cross-modality scenarios. For instance, Zhao et al., 47 proposed a modified U-Net to translate paired brain CT to MRI.

3.1.2 Bidirectional Translation

Bidirectional image translation (also known as reconstruction-based DT) leverages two GANs, constraining the mapping space by enforcing semantic consistency between the original and reconstructed images. CycleGAN, by Zhu et al. 48 , is one of the most popular architectures for bidirectional translation. CycleGAN utilizes cycle-consistency to constrain the translation mapping and improve the quality of generated images. CycleGAN has been expanded to handle larger domain shifts with semantic-consistency loss functions (CyCADA 35 ), multi-domain translation (StarGAN 49 ), and translation between two domains with multi-modal conditional distributions (MUNIT 50 ). In supervised learning, bidirectional translation expands the training data to make the segmentation task model robust. The translation and segmentation networks can be trained either independently (two stages) or jointly. Zhang et al., 51 presented a one-stage framework with an additional shape-consistency loss in CycleGAN to achieve better segmentation masks and fewer failures. Chartsias et al., 11 used a two-stage framework to segment MRI images using CT images. Cai et al., 52 combined segmentation loss on generated images as an additional shape constraint for 3D translation and leveraged MRI for pancreas segmentation in CT images. In the unsupervised setting, image translation is used to create labeled data for the target domain. Huo et al., 12 proposed a joint optimization approach for the synthesis and segmentation of CT images using labeled MRI. Their framework achieved performance comparable to the fully labeled case.
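The cycle-consistency constraint can be illustrated with a short sketch. The example below is a minimal illustration in plain Python and not the implementation of any work cited here: the toy generators `g_st`, `g_ts`, and `g_ts_biased`, the function names, and the use of flat pixel lists are all assumptions made for readability. It penalizes the mean absolute (L1) error after a round trip through both translation directions, which is the form of reconstruction penalty used by CycleGAN.

```python
def l1_distance(a, b):
    """Mean absolute difference between two equally sized flat images."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def cycle_consistency_loss(batch_s, batch_t, g_st, g_ts):
    """Average L1 error of the source->target->source and
    target->source->target round trips."""
    forward = sum(l1_distance(g_ts(g_st(s)), s) for s in batch_s) / len(batch_s)
    backward = sum(l1_distance(g_st(g_ts(t)), t) for t in batch_t) / len(batch_t)
    return forward + backward


# Toy "generators" (hypothetical): an intensity mapping and its exact inverse.
def g_st(img):
    return [2.0 * p + 1.0 for p in img]


def g_ts(img):
    return [(p - 1.0) / 2.0 for p in img]


# A deliberately imperfect reverse mapping, to show a non-zero penalty.
def g_ts_biased(img):
    return [(p - 1.0) / 2.0 + 0.1 for p in img]


source_batch = [[0.0, 0.5, 1.0], [0.25, 0.75, 0.5]]
target_batch = [[1.0, 2.0, 3.0], [1.5, 2.5, 2.0]]

loss_consistent = cycle_consistency_loss(source_batch, target_batch, g_st, g_ts)
loss_biased = cycle_consistency_loss(source_batch, target_batch, g_st, g_ts_biased)
```

When the two mappings invert each other, the penalty vanishes; the biased reverse mapping is penalized in both directions. In an actual framework this term would be added to the adversarial losses of the two GANs, with the generators implemented as neural networks.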

There are a few observations about GANs: (a) CycleGAN does not guarantee consistent translation of minor anatomical structures and boundaries 53 , and thus needs additional constraints such as gradient 53 and shape consistency 51 . For instance, Jiang et al., 54 incorporated tumor-shape and feature-based losses to preserve tumors while translating CT data to MRI data; (b) Attention networks can account for varying transferability of different image regions 55 . For instance, Liu et al., 56 proposed a novel attention-based U-Net 57 as a GAN generator to translate hard-to-generate textured regions from MRI to CT. Alternate scenarios such as 3D-2D translation, paired images, and semi-supervised DT-DA have also been explored. Zhang et al., 51 segmented X-ray images by using synthetic X-ray images created from accessible 3D CT annotations. Nguyen et al., 58 used semi-supervised DA with paired CT images to constrain CycleGAN to generate more realistic images. Pan et al., 30 leveraged MRI to generate missing PET images for patients for Alzheimer’s disease diagnosis. Chen et al., 59 proposed a state-of-the-art unsupervised segmentation method using bidirectional DT-DA between MRI and CT, combining CycleGAN with shared feature encoder layers between domains. Their method resembled CyCADA 41 and showed the efficacy of combining DT with feature-based alignment; (c) DT-DA can be used for single-modality medical imaging. Chen et al., 28 leveraged a CycleGAN with semantic-aware adversarial loss to perform lung segmentation across different chest X-ray datasets.

3.2 Latent Feature Space Transformation in Domain Adaptation

Unlike the image-to-image translation in DT-DA, LFST-DA transforms the source domain and target domain images to a shared latent feature space to learn a domain-invariant feature representation. The goal is to minimize domain-specific information while preserving the task-related information. LFST-DA can be trained in an unsupervised fashion to obtain a domain-invariant representation, or in a concurrent manner where the representation network and the task network (e.g., an image classification network) are trained simultaneously to improve the performance. LFST-DA is used in three basic implementations: divergence minimization 60 61 62 63 64 , adversarial training 65 66 67 68 , and cross-domain reconstruction 69 70 . Compared to DT-DA, LFST-DA is more computationally efficient because it focuses on translating relevant information only instead of the complete image 34 . Also, feature-based domain alignment can outperform DT-DA by preserving task-critical features 35 .

3.2.1 Divergence Minimization

A simple approach to learn domain-invariant features and remove distribution shift is to minimize some divergence criterion between source and target data distributions. Common choices include maximum mean discrepancy (MMD) 60 , correlation alignment (CORAL) 61 63 , contrastive domain discrepancy (CDD) 64 , and Wasserstein distance 62 . MMD, CORAL, and Wasserstein distance are class-agnostic divergence metrics and do not discriminate class labels when aligning samples. CDD-based DA aligns samples based on their labels, by minimizing the intra-class discrepancy and maximizing the inter-class discrepancy. MMD and CORAL are two of the most utilized divergence metrics that match the first-order moment (mean) and the second-order moment (covariance) of distributions. However, the represented hidden features can be complicated in the real world and may not be fully characterized by mean and covariance. Wasserstein distance aligns feature distributions between domains via optimal transport theory. Compared to the adversarial-based approaches, divergence-based DA has not been as widely explored in medical imaging. For cross-modality DA, Zhu et al., 71 utilized maximum mean discrepancy to map MR and PET images to a common space to mitigate missing data. Several works have used same-modality DA to mitigate dataset variations in X-ray 72 , retinal fundus 73 , and electron microscopy images 74 .
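To make two of these criteria concrete, the sketch below computes a squared MMD estimate and a CORAL-style covariance penalty between two small sets of feature vectors. It is a minimal illustration under stated assumptions rather than the formulation of any particular study: the function names, the Gaussian (RBF) kernel with bandwidth parameter `gamma`, the biased MMD estimator, and the toy feature sets are all choices made here for brevity.

```python
import math


def rbf_kernel(x, y, gamma=1.0):
    """Gaussian kernel between two feature vectors (gamma is an assumed bandwidth)."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-gamma * sq_dist)


def mmd2(source, target, gamma=1.0):
    """Biased estimate of the squared maximum mean discrepancy."""
    def mean_kernel(set_a, set_b):
        total = sum(rbf_kernel(a, b, gamma) for a in set_a for b in set_b)
        return total / (len(set_a) * len(set_b))

    return (mean_kernel(source, source)
            + mean_kernel(target, target)
            - 2.0 * mean_kernel(source, target))


def covariance(feats):
    """Unbiased sample covariance matrix; rows of `feats` are samples."""
    n, d = len(feats), len(feats[0])
    mean = [sum(f[j] for f in feats) / n for j in range(d)]
    return [[sum((f[i] - mean[i]) * (f[j] - mean[j]) for f in feats) / (n - 1)
             for j in range(d)] for i in range(d)]


def coral_loss(source, target):
    """Squared Frobenius distance between covariances, scaled by 1 / (4 d^2)."""
    d = len(source[0])
    cov_s, cov_t = covariance(source), covariance(target)
    frob2 = sum((cov_s[i][j] - cov_t[i][j]) ** 2
                for i in range(d) for j in range(d))
    return frob2 / (4.0 * d * d)


# Toy latent features: the same shape, once shifted and once rescaled.
source = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
shifted = [[x + 3.0, y + 3.0] for x, y in source]
scaled = [[2.0 * x, 2.0 * y] for x, y in source]

mmd_same = mmd2(source, source)
mmd_shifted = mmd2(source, shifted)
coral_shifted = coral_loss(source, shifted)
coral_scaled = coral_loss(source, scaled)
```

The toy sets show why the choice of criterion matters: a pure shift of the features changes the MMD estimate but leaves the covariance penalty at zero, whereas a rescaling changes the covariance and is penalized by the CORAL-style term.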

3.2.2 Adversarial Training

Instead of minimizing a divergence metric, adversarial methods train a discriminator, typically a separate network, in an adversarial fashion against the feature encoder network. The goal of the feature network is to learn a latent representation such that the discriminator is unable to identify the input sample domain from the representation. For medical imaging, feature-based adversarial domain adaptation has been widely utilized for various applications. For example, in cross-modality adaptation, Zhang et al., 75 applied a domain discriminator to adapt models trained for pathology images to microscopy images. LFST-DA is also used for single-modality adaptation to overcome dataset variations in pathology images, MR images, and ultrasound images. For example, Lafarge et al., 76 utilized a domain discriminator to mitigate the color variations of histopathology images for mitosis detection in breast cancer. Kamnitsas et al., 77 applied a domain discriminator to MR images from different scanners and imaging protocols to improve brain lesion segmentation performance.
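The opposing objectives of the discriminator and the feature encoder can be written down compactly. The sketch below is one common formulation, shown only as an illustration: the function names, the convention that source features are labeled 1 and target features 0, and the label-flipping encoder objective are assumptions made here, and individual studies may instead use gradient reversal or other variants. The inputs are the raw discriminator outputs (logits) for a batch of latent features.

```python
import math


def softplus(z):
    """Numerically stable log(1 + exp(z))."""
    return max(z, 0.0) + math.log1p(math.exp(-abs(z)))


def discriminator_loss(logits_source, logits_target):
    """Binary cross-entropy for a domain discriminator that should output
    a high logit for source features (label 1) and a low one for target
    features (label 0)."""
    loss_s = sum(softplus(-z) for z in logits_source) / len(logits_source)
    loss_t = sum(softplus(z) for z in logits_target) / len(logits_target)
    return loss_s + loss_t


def encoder_confusion_loss(logits_target):
    """Encoder objective with flipped labels: target features should be
    scored by the discriminator as if they came from the source domain."""
    return sum(softplus(-z) for z in logits_target) / len(logits_target)


# Case 1: the discriminator separates the domains almost perfectly.
disc_separated = discriminator_loss([5.0, 6.0], [-5.0, -6.0])
enc_separated = encoder_confusion_loss([-5.0, -6.0])

# Case 2: the discriminator cannot tell the domains apart (all logits zero).
disc_confused = discriminator_loss([0.0, 0.0], [0.0, 0.0])
enc_confused = encoder_confusion_loss([0.0, 0.0])
```

In the first case the discriminator loss is small and the encoder loss is large, so the encoder receives a strong signal to change its features. In the second case the discriminator is at chance level, which is the situation the encoder is driven toward during training.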

3.2.3 Reconstruction-based Adaptation

Reconstruction-based adaptation maximizes the inter-domain similarity by encoding images from each domain to reconstruct images in the other domain. The feature extractor (encoder) transforms the input image into a latent representation, while the reconstruction network (decoder) performs feature alignment by recreating the feature extractor’s input. Ghifary et al., 70 proposed DRCN for object detection, using only target domain data reconstruction, while Bousmalis et al., 69 proposed a domain separation network that extracts image representations in two subspaces: the private domain features and the shared-domain features, the latter being used to reconstruct the input image. For medical imaging, reconstruction-based methods are less developed and are usually combined with adversarial learning. For same-modality adaptation, Oliveira et al., 80 combined image-to-image translation with a feature-based discriminator to mitigate the variations in X-ray images and improve segmentation performance. For cross-modality adaptation, Ouyang et al., 81 combined a variational autoencoder (VAE) with adversarial training to adapt MR to CT scans.
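One way to encourage the private and shared subspaces of a domain separation network to capture different information is a soft orthogonality penalty between the two sets of features. The sketch below illustrates such a penalty together with a plain mean-squared reconstruction error. The function names and toy feature values are hypothetical, and the snippet is meant as an illustration of the idea rather than a reproduction of the cited architectures.

```python
def difference_loss(shared, private):
    """Squared Frobenius norm of shared^T @ private, where each row is the
    feature vector of one sample. It is zero when every shared feature
    dimension is orthogonal to every private one across the batch."""
    d_shared, d_private = len(shared[0]), len(private[0])
    total = 0.0
    for i in range(d_shared):
        for j in range(d_private):
            dot = sum(s[i] * p[j] for s, p in zip(shared, private))
            total += dot ** 2
    return total


def reconstruction_loss(images, reconstructions):
    """Mean squared error between input images and decoder outputs."""
    n_pixels = sum(len(img) for img in images)
    sq_err = sum((a - b) ** 2
                 for img, rec in zip(images, reconstructions)
                 for a, b in zip(img, rec))
    return sq_err / n_pixels


# Two samples with one shared and one private feature dimension each.
shared_feats = [[1.0], [0.0]]
private_orthogonal = [[0.0], [1.0]]   # encodes different samples: no overlap
private_redundant = [[1.0], [1.0]]    # overlaps with the shared features

loss_orthogonal = difference_loss(shared_feats, private_orthogonal)
loss_redundant = difference_loss(shared_feats, private_redundant)
loss_recon = reconstruction_loss([[0.0, 1.0]], [[0.5, 1.0]])
```

The penalty is zero when the two subspaces carry non-overlapping information and grows when the private features duplicate what the shared features already encode.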

4 Challenges and Opportunities

4.1 Domain Selection and Direction of Domain Adaptation

Selecting related domains for effective knowledge transfer is an open-research area in ML. In medical imaging, domains are often selected based on the type of imaging technique ( e.g ., radiology), anatomy, availability of labeled data, and whether the modalities are complementary for the underlying task 30 . Regarding whether DA could be performed symmetrically across domains, the potential information loss in a particular direction is critical for assessing task performance. For example, for unsupervised DA from CT to MRI, reverse DA may sometimes be needed to preserve tumors 54 . For supervised DA between multiple H&E stained images, Tellez et al ., 82 showed that mitosis-detection and cancer tissue classification in a particular color space leads to higher accuracy. Typically, to assess domain relationship and DA direction, it is necessary to use (a) large-scale empirical studies such as 6 58 exploring bi-directional DA across multiple datasets, (b) a representation-shift metric 24 to roughly quantify the risk of applying learned-representations from a particular domain to a new domain, or (c) multi-source DA 83 , which automatically explores latent source domains in multi-source datasets and quantifies the membership of each target sample. However, such experimentation requires extensive benchmarking studies that are lacking in medical imaging.

4.2 Transferability of Individual Samples

Most DA studies for medical imaging assume that all samples are equally transferable across two domains. Thus, they focus on globally aligning domain distributions. However, the ability to transfer (or align) varies across clinical samples because of: (a) intra-domain variations (e.g., in multi-modal DA between MRI and CT, each modality can have contrast variations) 75 ; (b) noisy annotations due to human subjectivity; (c) the target label space being a subset of the source label space 84 ; and (d) varying transferability among different image regions 55 (e.g., tumors are difficult to translate and could be missed during CT to MRI image translation 54 ). Some samples in the source domain may be less useful and can lead to negative transfer 84 , which adversely impacts DA. Selecting relevant samples or reducing the impact of outlier samples using transferability frameworks is a potential solution. Some strategies include weighting samples based on classifier discrepancy 85 , down-weighting outlier classes using the classification probability for target data 86 , leveraging open-set based optimization 87 , leveraging an attention mechanism 55 to focus on hard-to-transfer samples, and using a noise co-adaption layer 88 . Recent medical imaging studies have explored sample selection and transferability assessment using reverse classification accuracy 89 , attention-based U-Net 56 , and transferable semantic representations 84 .
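As an illustration of down-weighting outlier classes, the sketch below derives per-class weights from the classifier's predicted probabilities on unlabeled target data and applies them to the source classification loss. It is a simplified sketch under assumptions made here: the function names, the max-normalization of the weights, and the toy probabilities are hypothetical and are not taken from a specific cited method.

```python
def class_weights_from_target(target_probs):
    """Average the predicted class probabilities over target samples and
    normalize by the largest value, so classes that rarely appear in the
    target predictions receive weights close to zero."""
    n_classes = len(target_probs[0])
    mean = [sum(p[c] for p in target_probs) / len(target_probs)
            for c in range(n_classes)]
    top = max(mean)
    return [m / top for m in mean]


def weighted_source_loss(per_sample_losses, source_labels, weights):
    """Mean source loss, with each sample scaled by the weight of its class."""
    total = sum(weights[y] * loss
                for loss, y in zip(per_sample_losses, source_labels))
    return total / len(per_sample_losses)


# Toy softmax outputs on two target images for three source classes;
# class 2 never appears in the target predictions (an "outlier" class).
target_probs = [[0.9, 0.1, 0.0], [0.8, 0.2, 0.0]]
weights = class_weights_from_target(target_probs)

# Three source samples, one per class, each with the same raw loss.
weighted = weighted_source_loss([1.0, 1.0, 1.0], [0, 1, 2], weights)
```

With these toy values, the source sample from the class absent in the target domain no longer contributes to the loss, which limits its potential to cause negative transfer.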

4.3 Limitations of Domain Adaptation in Medical Imaging

For medical imaging, most DL-based DA uses adversarial methods, primarily GANs for unsupervised DA. Adversarial methods are prone to errors because the discriminator can be confused, and there is no guarantee that the domain distributions are sufficiently similar 90 . Moreover, the generator in a GAN is prone to “hallucinating” content to convince the discriminator that data belongs to the target distribution 91 . As such, CycleGAN could be trained to synthesize tumors in images of healthy patients. Beyond applying consistency constraints during image translation, it is also important to consider artifacts that are not directly visible in synthesized images. For example, CycleGANs incorporate high-frequency information in the intermediate representation used by the second generator to translate the image back to the source domain 92 . This high-frequency information can interfere with downstream tasks.

DT-DA approaches require translating the entire image, increasing the complexity of the models for large-sized medical images like whole slide images. A few studies 28 36 have compared adversarial DA methods for MRI-CT translation. However, a comprehensive comparison of various feature-based DA approaches is lacking. Future studies could explore combining DT-DA and LFST-DA approaches 59 . Moreover, current frameworks typically focus on a single source-target domain pair, while many tasks, such as stain normalization in histopathology images, can be multi-domain 93 .

4.4 Leveraging Synthetic Data

DA for medical imaging can be applied in relatively under-explored applications such as single-view 3D reconstruction 94 or temporal disease analysis 95 . This could benefit image-guided surgery, in which training data is very scarce and difficult to obtain 96 . One way is to leverage synthetic data with ground truth information, adapting it to the real data. This approach has been successfully applied in natural images 97 . Reverse domain adaptation ( i.e ., translating real data to synthetic data) is also a promising solution. Mahmood et al ., 39 generated synthetic endoscopy data with known depth information by using an anatomical colon model and a virtual endoscope. This simulated data was used for 3D reconstruction of real endoscopic images. Pan et al. , 30 translated MR data to generate synthetic PET images to infer missing patient scans for temporal analysis of Alzheimer’s disease 30 . Another area that could benefit from synthetic data is skin lesion detection 98 .

5 Conclusions and Future Directions

Deep learning has been widely applied to medical imaging data analysis. However, the lack of well-annotated images and the heterogeneity of multi-center medical imaging datasets are two key challenges for DL performance. DA has emerged as an effective approach for minimizing domain shift and leveraging labeled data from distinct but related domains. LFST-DA and DT-DA are two popular approaches to minimize the distribution divergence in multiple medical imaging studies exploring same-modality or cross-modality scenarios. They have been shown to achieve good performance, particularly in unsupervised DA settings and organ segmentation tasks. Current approaches are primarily adversarial, with domains being selected based on certain heuristics and underlying tasks. Extensive benchmarking studies are needed to quantify the domain relationship for different imaging modalities and to compare adversarial and non-adversarial approaches. Varying sample transferability and multi-modal domains for medical imaging are two other major issues. One strategy is to explore down-weighting or attention-based networks. Also, alternative multi-modal frameworks such as MUNIT 50 can be explored. Finally, for certain application areas in medical imaging such as 3D reconstruction and temporal disease analysis where DA is relatively unexplored, synthetic data can be used.

Acknowledgments

The work was supported in part by grants from the National Science Foundation EAGER Award NSF1651360, Children’s Healthcare of Atlanta and Georgia Tech Partnership Grant, Giglio Breast Cancer Research Fund, Petit Institute Faculty Fellow Award, and Carol Ann and David D. Flanagan Faculty Fellow Research Fund for Professor May D Wang. This work was also supported in part by the scholarship from China Scholarship Council (CSC) under the Grant CSC NO. 201406010343. The content of this article is solely the responsibility of the authors and does not necessarily represent the official views of the NIH.

Footnotes

Equal Contributing First Authors.

References

- 1. Mendelson D S, Rubin D L. Imaging informatics: essential tools for the delivery of imaging services. Acad Radiol. 2013;20(10):1195–212. doi: 10.1016/j.acra.2013.07.006.

- 2. Litjens G, Kooi T, Bejnordi B E, Setio A AA, Ciompi F, Ghafoorian M et al. A survey on deep learning in medical image analysis. Med Image Anal. 2017;42:60–88. doi: 10.1016/j.media.2017.07.005.

- 3. Adler-Milstein J, Jha A K. Sharing clinical data electronically: a critical challenge for fixing the health care system. JAMA. 2012;307(16):1695–6. doi: 10.1001/jama.2012.525.

- 4. Sharma P, Shamout F E, Clifton D A. Preserving Patient Privacy while Training a Predictive Model of In-hospital Mortality. arXiv preprint arXiv:1912.00354; 2019 Dec 1

- 5. Zhang Y, Wei Y, Zhao P, Niu S, Wu Q, Tan M, Huang J. Collaborative unsupervised domain adaptation for medical image diagnosis. arXiv preprint arXiv:1911.07293; 2019 Nov 17

- 6. Yao L, Prosky J, Covington B, Lyman K. A strong baseline for domain adaptation and generalization in medical imaging. arXiv preprint arXiv:1904.01638; 2019 Apr 2

- 7. Pan S J, Yang Q. A survey on transfer learning. IEEE Trans Knowl Data Eng. 2009;22(10):1345–59.

- 8. Tajbakhsh N, Jeyaseelan L, Li Q, Chiang J N, Wu Z, Ding X. Embracing imperfect datasets: A review of deep learning solutions for medical image segmentation. Med Image Anal. 2020:101693. doi: 10.1016/j.media.2020.101693.

- 9. Dai C, Mo Y, Angelini E, Guo Y, Bai W. Transfer Learning from Partial Annotations for Whole Brain Segmentation. In: Domain Adaptation and Representation Transfer and Medical Image Learning with Less Labels and Imperfect Data; 2019 Oct 13. p. 199-206

- 10. Razzak M I, Naz S, Zaib A. Deep learning for medical image processing: Overview, challenges and the future. In: Classification in BioApps; 2018. p. 323-50

- 11. Chartsias A, Joyce T, Dharmakumar R, Tsaftaris S A. Adversarial image synthesis for unpaired multi-modal cardiac data. In: International workshop on simulation and synthesis in medical imaging; 2017 Sep 10. p. 3-13

- 12. Huo Y, Xu Z, Bao S, Assad A, Abramson R G, Landman B A. Adversarial synthesis learning enables segmentation without target modality ground truth. In: IEEE 15th International Symposium on Biomedical Imaging, ISBI 2018; 2018. p. 1217-20

- 13. Chen C, Dou Q, Chen H, Qin J, Heng P A. Synergistic image and feature adaptation: Towards cross-modality domain adaptation for medical image segmentation. In: Proceedings of the AAAI Conference on Artificial Intelligence. 2019;33:865–72.

- 14. Lee B, Newberg A. Neuroimaging in Traumatic Brain Imaging. NeuroRx. 2005;2(02):372–83.

- 15. Gurcan M N, Boucheron L E, Can A, Madabhushi A, Rajpoot N M, Yener B. Histopathological image analysis: A review. IEEE Rev Biomed Eng. 2009;2:147–71.

- 16. Brieu N, Meier A, Kapil A, Schoenmeyer R, Gavriel C G, Caie P D et al. Domain Adaptation-based Augmentation for Weakly Supervised Nuclei Detection. arXiv preprint arXiv:1907.04681; 2019 Jul 10.

- 17. Tomczak K, Czerwińska P, Wiznerowicz M. The Cancer Genome Atlas (TCGA): an immeasurable source of knowledge. Contemp Oncol. 2015;19(1A):A68–77.

- 18. Irvin J, Rajpurkar P, Ko M, Yu Y, Ciurea-Ilcus S, Chute C et al. CheXpert: A Large Chest Radiograph Dataset with Uncertainty Labels and Expert Comparison. arXiv preprint arXiv:1901.07031; 2019 Jan 21.

- 19. Ciompi F, Geessink O, Bejnordi B E, De Souza G S, Baidoshvili A, Litjens G et al. The importance of stain normalization in colorectal tissue classification with convolutional networks. In: IEEE 14th International Symposium on Biomedical Imaging, ISBI 2017; 2017. p. 160-3.

- 20. Kushibar K, Valverde S, González-Villà S, Bernal J, Cabezas M, Oliver A et al. Supervised domain adaptation for automatic sub-cortical brain structure segmentation with minimal user interaction. Sci Rep. 2019;9(01):6742. doi: 10.1038/s41598-019-43299-z.

- 21. Pooch E HP, Ballester P L, Barros R C. Can we trust deep learning models diagnosis? The impact of domain shift in chest radiograph classification. arXiv preprint arXiv:1909.01940; 2019 Sep 3.

- 22. Lampert T, Merveille O, Schmitz J, Forestier G, Feuerhake F, Wemmert C. Strategies for training stain invariant CNNs. In: IEEE 16th International Symposium on Biomedical Imaging, ISBI 2019; p. 905-9.

- 23. AlBadawy E A, Saha A, Mazurowski M A. Deep learning for segmentation of brain tumors: Impact of cross-institutional training and testing. Med Phys. 2018;45(03):1150–8.

- 24. Stacke K, Eilertsen G, Unger J, Lundström C. A Closer Look at Domain Shift for Deep Learning in Histopathology. arXiv preprint arXiv:1909.11575; 2019 Sep 25.

- 25. Yang X, Dou H, Li R, Wang X, Bian C, Li S et al. Generalizing Deep Models for Ultrasound Image Segmentation. In: Medical Image Computing and Computer Assisted Intervention, MICCAI 2018; p. 497-505.

- 26. Degel M A, Navab N, Albarqouni S. Domain and Geometry Agnostic CNNs for Left Atrium Segmentation in 3D Ultrasound. arXiv preprint arXiv:1805.00357. 2018;11073:630–7.

- 27. Li H, Loehr T, Wiestler B, Zhang J, Menze B. e-UDA: Efficient Unsupervised Domain Adaptation for Cross-Site Medical Image Segmentation. arXiv preprint arXiv:2001.09313; 2020 Jan 25.

- 28. Chen C, Dou Q, Chen H, Heng P A. Semantic-aware generative adversarial nets for unsupervised domain adaptation in chest X-ray segmentation. In: International Workshop on Machine Learning in Medical Imaging 2018. p. 143-51.

- 29. Bentaieb A, Hamarneh G. Adversarial stain transfer for histopathology image analysis. IEEE Trans Med Imaging. 2018;37(03):792–802.

- 30. Pan Y, Liu M, Lian C, Zhou T, Xia Y, Shen D. Synthesizing missing PET from MRI with cycle-consistent generative adversarial networks for Alzheimer’s disease diagnosis. In: International Conference on Medical Image Computing and Computer-Assisted Intervention 2018. p. 455-63.

- 31. Ehteshami Bejnordi B, Litjens G, Timofeeva N, Otte-Holler I, Homeyer A, Karssemeijer N et al. Stain specific standardization of whole-slide histopathological images. IEEE Trans Med Imaging. 2016;35(02):404–15.

- 32. Csurka G. Domain adaptation for visual applications: A comprehensive survey. arXiv preprint arXiv:1702.05374; 2017 Feb 17.

- 33. Wilson G, Cook D J. A Survey of Unsupervised Deep Domain Adaptation. arXiv preprint arXiv:1812.02849; 2018 Dec 6.

- 34. Wang M, Deng W. Deep visual domain adaptation: A survey. Neurocomputing. 2018;312:135–53.

- 35. Hoffman J, Tzeng E, Park T, Zhu J Y, Isola P, Saenko K et al. CyCADA: Cycle-Consistent Adversarial Domain Adaptation. In: International Conference on Machine Learning 2018. p. 1989-98.

- 36. Dou Q, Ouyang C, Chen C, Chen H, Glocker B, Zhuang X et al. Pnp-adanet: Plug-and-play adversarial domain adaptation network with a benchmark at cross-modality cardiac segmentation. arXiv preprint arXiv:1812.07907; 2018 Dec 19.

- 37. Isola P, Zhu J Y, Zhou T, Efros A A. Image-to-image translation with conditional adversarial networks. In: Proceedings of the IEEE conference on computer vision and pattern recognition 2017. p. 1125-34.

- 38. Goodfellow I, Pouget-Abadie J, Mirza M, Xu B, Warde-Farley D, Ozair S, Courville A et al. Generative adversarial nets. In: Advances in neural information processing systems 2014. p. 2672-80.

- 39. Mahmood F, Chen R, Durr N J. Unsupervised reverse domain adaptation for synthetic medical images via adversarial training. IEEE Trans Med Imaging. 2018;37(12):2572–81.

- 40. Bousmalis K, Silberman N, Dohan D, Erhan D, Krishnan D. Unsupervised pixel-level domain adaptation with generative adversarial networks. In: Proceedings of the IEEE conference on computer vision and pattern recognition 2017. p. 3722-31.

- 41. Zhang Z, Yang L, Zheng Y. Translating and segmenting multimodal medical volumes with cycle-and shape-consistency generative adversarial network. In: Proceedings of the IEEE conference on computer vision and pattern recognition 2018. p. 9242-51.

- 42. de Bel T, Hermsen M, Kers J, Van Der Laak J, Litjens G. Stain-transforming cycle-consistent generative adversarial networks for improved segmentation of renal histopathology. In: Proceedings of the 2nd International Conference on Medical Imaging with Deep Learning; Proceedings of Machine Learning Research 2019 May 24. p. 151-63.

- 43. Mirza M, Osindero S. Conditional generative adversarial nets. arXiv preprint arXiv:1411.1784; 2014 Nov 6.

- 44. Yoo D, Kim N, Park S, Paek A S, Kweon I S. Pixel-level domain transfer. In: European Conference on Computer Vision 2016. p. 517-32.

- 45. Liu M Y, Tuzel O. Coupled generative adversarial networks. In: Advances in neural information processing systems 2016. p. 469-77.

- 46. Madani A, Moradi M, Karargyris A, Syeda-Mahmood T. Semi-supervised learning with generative adversarial networks for chest X-ray classification with ability of data domain adaptation. In: IEEE 15th International Symposium on Biomedical Imaging, ISBI 2018. p. 1038-42.

- 47. Zhao C, Carass A, Lee J, He Y, Prince J L. Whole brain segmentation and labeling from CT using synthetic MR images. In: International Workshop on Machine Learning in Medical Imaging 2017. p. 291-8.

- 48. Zhu J Y, Park T, Isola P, Efros A A. Unpaired image-to-image translation using cycle-consistent adversarial networks. In: Proceedings of the IEEE international conference on computer vision 2017. p. 2223-32.

- 49. Choi Y, Choi M, Kim M, Ha J W, Kim S, Choo J. StarGAN: Unified generative adversarial networks for multi-domain image-to-image translation. In: Proceedings of the IEEE conference on computer vision and pattern recognition 2018. p. 8789-97.

- 50. Huang X, Liu M Y, Belongie S, Kautz J. Multimodal unsupervised image-to-image translation. In: Proceedings of the European Conference on Computer Vision, ECCV 2018. p. 172-89.

- 51. Zhang Y, Miao S, Mansi T, Liao R. Task driven generative modeling for unsupervised domain adaptation: Application to X-ray image segmentation. In: International Conference on Medical Image Computing and Computer-Assisted Intervention 2018. p. 599-607.

- 52. Cai J, Zhang Z, Cui L, Zheng Y, Yang L. Towards cross-modal organ translation and segmentation: a cycle-and shape-consistent generative adversarial network. Med Image Anal. 2019;52:174–84. doi: 10.1016/j.media.2018.12.002.

- 53. Hiasa Y, Otake Y, Takao M, Matsuoka T, Takashima K, Carass A et al. Cross-modality image synthesis from unpaired data using CycleGAN. In: International workshop on simulation and synthesis in medical imaging 2018. p. 31-41.

- 54. Jiang J, Hu Y C, Tyagi N, Zhang P, Rimner A, Mageras G S et al. Tumor-aware, adversarial domain adaptation from ct to mri for lung cancer segmentation. In: International Conference on Medical Image Computing and Computer-Assisted Intervention 2018. p. 777-85.

- 55. Wang X, Li L, Ye W, Long M, Wang J. Transferable attention for domain adaptation. In: Proceedings of the AAAI Conference on Artificial Intelligence. 2019;33:5345–52.

- 56. Liu X, Wei X, Yu A, Pan Z. Unpaired Data based Cross-domain Synthesis and Segmentation Using Attention Neural Network. In: Asian Conference on Machine Learning 2019. p. 987-1000.

- 57. Oktay O, Schlemper J, Folgoc L L, Lee M, Heinrich M, Misawa K et al. Attention u-net: Learning where to look for the pancreas. arXiv preprint arXiv:1804.03999; 2018 Apr 11.

- 58. Nguyen H, Luo S, Ramos F. Semi-supervised Learning Approach to Generate Neuroimaging Modalities with Adversarial Training. In: Pacific-Asia Conference on Knowledge Discovery and Data Mining 2020. p. 409-21.

- 59. Chen C, Dou Q, Chen H, Qin J, Heng P A. Unsupervised bidirectional cross-modality adaptation via deeply synergistic image and feature alignment for medical image segmentation. IEEE Trans Med Imaging. 2020;39(07):2494–505. doi: 10.1109/TMI.2020.2972701.

- 60. Rozantsev A, Salzmann M, Fua P. Beyond sharing weights for deep domain adaptation. IEEE Trans Pattern Anal Mach Intell. 2019;41(04):801–14. doi: 10.1109/TPAMI.2018.2814042.

- 61. Sun B, Saenko K. Deep coral: Correlation alignment for deep domain adaptation. In: European conference on computer vision 2016. p. 443-50.

- 62. Bhushan Damodaran B, Kellenberger B, Flamary R, Tuia D, Courty N. Deepjdot: Deep joint distribution optimal transport for unsupervised domain adaptation. In: Proceedings of the European Conference on Computer Vision (ECCV) 2018. p. 447-63.

- 63. Sun B, Feng J, Saenko K. Return of frustratingly easy domain adaptation. In: Thirtieth AAAI Conference on Artificial Intelligence 2016 Mar 2.

- 64. Kang G, Jiang L, Yang Y, Hauptmann A G. Contrastive adaptation network for unsupervised domain adaptation. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 2019. p. 4893-902.

- 65. Tzeng E, Hoffman J, Saenko K, Darrell T. Adversarial discriminative domain adaptation. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 2017. p. 7167-76.

- 66. Ganin Y, Ustinova E, Ajakan H, Germain P, Larochelle H, Laviolette F et al. Domain-adversarial training of neural networks. The Journal of Machine Learning Research. 2016;17(01):2096–30.

- 67. Tzeng E, Hoffman J, Zhang N, Saenko K, Darrell T. Deep domain confusion: Maximizing for domain invariance. arXiv preprint arXiv:1412.3474; 2014 Dec 10.

- 68. Tsai Y H, Hung W C, Schulter S, Sohn K, Yang M H, Chandraker M. Learning to adapt structured output space for semantic segmentation. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 2018. p. 7472-81.

- 69. Bousmalis K, Trigeorgis G, Silberman N, Krishnan D, Erhan D. Domain separation networks. In: Advances in neural information processing systems 2016. p. 343-51.

- 70. Ghifary M, Kleijn W B, Zhang M, Balduzzi D, Li W. Deep reconstruction-classification networks for unsupervised domain adaptation. In: European Conference on Computer Vision 2016. p. 597-613.

- 71. Zhu X, Thung K H, Adeli E, Zhang Y, Shen D. Maximum mean discrepancy based multiple kernel learning for incomplete multimodality neuroimaging data. In: International Conference on Medical Image Computing and Computer-Assisted Intervention 2017. p. 72-80.

- 72. Venkataramani R, Ravishankar H, Anamandra S. Towards Continuous Domain Adaptation For Medical Imaging. In: IEEE 16th International Symposium on Biomedical Imaging, ISBI 2019. p. 443-6.

- 73. Zhuang J, Chen Z, Zhang J, Zhang D, Cai Z. Domain adaptation for retinal vessel segmentation using asymmetrical maximum classifier discrepancy. In: Proceedings of the ACM Turing Celebration Conference-China 2019. p. 1-6.

- 74. Bermúdez-Chacón R, Altingövde O, Becker C, Salzmann M, Fua P. Visual Correspondences for Unsupervised Domain Adaptation on Electron Microscopy Images. IEEE Trans Med Imaging. 2020;39(04):1256–67. doi: 10.1109/TMI.2019.2946462.

- 75. Zhang Y, Chen H, Wei Y, Zhao P, Cao J, Fan X et al. From whole slide imaging to microscopy: Deep microscopy adaptation network for histopathology cancer image classification. In: International Conference on Medical Image Computing and Computer-Assisted Intervention 2019. p. 360-8.

- 76. Lafarge M W, Pluim J P, Eppenhof K A, Moeskops P, Veta M. Domain-adversarial neural networks to address the appearance variability of histopathology images. In: Deep Learning in Medical Image Analysis and Multimodal Learning for Clinical Decision Support 2017. p. 83-91.

- 77. Kamnitsas K, Baumgartner C, Ledig C, Newcombe V, Simpson J, Kane A et al. Unsupervised domain adaptation in brain lesion segmentation with adversarial networks. In: International conference on information processing in medical imaging 2017. p. 597-609.

- 78. Orbes-Arteaga M, Varsavsky T, Sudre C H, Eaton-Rosen Z, Haddow L J, Sørensen L

- 79. Shanis Z, Gerber S, Gao M, Enquobahrie A. Intramodality Domain Adaptation Using Self Ensembling and Adversarial Training. In: Domain Adaptation and Representation Transfer and Medical Image Learning with Less Labels and Imperfect Data 2019. p. 28-36.

- 80. Oliveira H, Ferreira E, Dos Santos J A. Truly Generalizable Radiograph Segmentation with Conditional Domain Adaptation. IEEE Access. 2020 May 1.

- 81. Ouyang C, Kamnitsas K, Biffi C, Duan J, Rueckert D. Data efficient unsupervised domain adaptation for Cross-Modality image segmentation. In: International Conference on Medical Image Computing and Computer-Assisted Intervention 2019. p. 669-77.

- 82. Tellez D, Litjens G, Bándi P, Bulten W, Bokhorst J M, Ciompi F, van der Laak J. Quantifying the effects of data augmentation and stain color normalization in convolutional neural networks for computational pathology. Med Image Anal. 2019;58:101544.

- 83. Peng X, Bai Q, Xia X, Huang Z, Saenko K, Wang B. Moment matching for multi-source domain adaptation. In: Proceedings of the IEEE International Conference on Computer Vision 2019. p. 1406-15.

- 84. Dong J, Cong Y, Sun G, Zhong B, Xu X. What Can Be Transferred: Unsupervised Domain Adaptation for Endoscopic Lesions Segmentation. arXiv preprint arXiv:2004.11500; 2020 Apr 24.

- 85. Saito K, Watanabe K, Ushiku Y, Harada T. Maximum classifier discrepancy for unsupervised domain adaptation. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 2018. p. 3723-32.

- 86. Cao Z, Ma L, Long M, Wang J. Partial adversarial domain adaptation. In: Proceedings of the European Conference on Computer Vision, ECCV 2018. p. 135-50.

- 87. Panareda Busto P, Gall J. Open set domain adaptation. In: Proceedings of the IEEE International Conference on Computer Vision 2017. p. 754-63.

- 88. Goldberger J, Ben-Reuven E. Training deep neural-networks using a noise adaptation layer. In: International Conference on Learning Representations 2017.

- 89. Valindria V V, Lavdas I, Bai W, Kamnitsas K, Aboagye E O, Rockall A G

- 90. Arora S, Ge R, Liang Y, Ma T, Zhang Y. Generalization and equilibrium in generative adversarial nets (gans). In: Proceedings of the 34th International Conference on Machine Learning, Volume 70; 2017 Aug 6. p. 224-32.

- 91. Arjovsky M, Bottou L. Towards principled methods for training generative adversarial networks. In: NIPS 2016 Workshop on Adversarial Training.

- 92. Chu C, Zhmoginov A, Sandler M. Cyclegan, a master of steganography. arXiv preprint arXiv:1712.02950; 2017 Dec 8.

- 93. Shrivastava A, Adorno W, Ehsan L, Ali S A, Moore S R, Amadi B C et al. Self-Attentive Adversarial Stain Normalization. arXiv preprint arXiv:1909.01963; 2019 Sep 4.

- 94. Wang Y, Zhong Z, Hua J. DeepOrganNet: On-the-Fly Reconstruction and Visualization of 3D/4D Lung Models from Single-View Projections by Deep Deformation Network. IEEE Trans Vis Comput Graph. 2019;26(01):960–70. doi: 10.1109/TVCG.2019.2934369.

- 95. Jack C R Jr, Knopman D S, Jagust W J, Petersen R C, Weiner M W, Aisen P S et al. Update on hypothetical model of Alzheimer’s disease biomarkers. Lancet Neurology. 2013;12(02):207. doi: 10.1016/S1474-4422(12)70291-0.

- 96. Mahmood F, Durr N J. Deep learning and conditional random fields-based depth estimation and topographical reconstruction from conventional endoscopy. Med Image Anal. 2018;48:230–43.

- 97. Shrivastava A, Pfister T, Tuzel O, Susskind J, Wang W, Webb R. Learning from simulated and unsupervised images through adversarial training. In: Proceedings of the IEEE conference on computer vision and pattern recognition 2017. p. 2107-16.

- 98. Ghorbani A, Natarajan V, Coz D, Liu Y. DermGAN: Synthetic Generation of Clinical Skin Images with Pathology. arXiv preprint arXiv:1911.0871

- 99. Gholami A, Subramanian S, Shenoy V, Himthani N, Yue X, Zhao S et al. A novel domain adaptation framework for medical image segmentation. In: International MICCAI Brainlesion Workshop 2018. p. 289-98.

- 100. Tang Y, Tang Y, Sandfort V, Xiao J, Summers R M. TUNA-Net: Task-oriented UNsupervised Adversarial Network for Disease Recognition in Cross-Domain Chest X-rays. In: International Conference on Medical Image Computing and Computer-Assisted Intervention 2019. p. 431-40.

- 101. Kapil A, Wiestler T, Lanzmich S, Silva A, Steele K, Rebelatto M et al. DASGAN--Joint Domain Adaptation and Segmentation for the Analysis of Epithelial Regions in Histopathology PD-L1 Images. arXiv preprint arXiv:1906.11118; 2019 Jun 26.

- 102. Yang J, Dvornek N C, Zhang F, Chapiro J, Lin M, Duncan J S. Unsupervised domain adaptation via disentangled representations: Application to cross-modality liver segmentation. In: International Conference on Medical Image Computing and Computer-Assisted Intervention 2019. p. 255-63.

- 103. Dou Q, Ouyang C, Chen C, Chen H, Glocker B, Zhuang X et al. Pnp-adanet: Plug-and-play adversarial domain adaptation network with a benchmark at cross-modality cardiac segmentation. arXiv preprint arXiv:1812.07907; 2018 Dec 19.

- 104. Dong N, Kampffmeyer M, Liang X, Wang Z, Dai W, Xing E. Unsupervised domain adaptation for automatic estimation of cardiothoracic ratio. In: International Conference on Medical Image Computing and Computer-Assisted Intervention 2018. p. 544-52.

- 105. Javanmardi M, Tasdizen T. Domain adaptation for biomedical image segmentation using adversarial training. In: IEEE 15th International Symposium on Biomedical Imaging 2018. p. 554-8.

- 106. Novosad P, Fonov V, Collins D L. Unsupervised domain adaptation for the automated segmentation of neuroanatomy in MRI: a deep learning approach. bioRxiv preprint bioRxiv:845537; 2019 Jan 1.

- 107. Ren J, Hacihaliloglu I, Singer E A, Foran D J, Qi X. Adversarial domain adaptation for classification of prostate histopathology whole-slide images. In: International Conference on Medical Image Computing and Computer-Assisted Intervention 2018. p. 201-9.

- 108. Wang S, Yu L, Li K, Yang X, Fu C W, Heng P A. Boundary and Entropy-driven Adversarial Learning for Fundus Image Segmentation. In: International Conference on Medical Image Computing and Computer-Assisted Intervention 2019. p. 102-10.

- 109. Yan W, Wang Y, Xia M, Tao Q. Edge-Guided Output Adaptor: Highly Efficient Adaptation Module for Cross-Vendor Medical Image Segmentation. IEEE Signal Processing Letters. 2019;26(11):1593–7.

- 110. Hou X, Liu J, Xu B, Liu B, Chen X, Ilyas M et al. Dual Adaptive Pyramid Network for Cross-Stain Histopathology Image Segmentation. In: International Conference on Medical Image Computing and Computer-Assisted Intervention 2019. p. 101-9.

- 111. Zhao H, Li H, Maurer-Stroh S, Gui Y, Deng Q, Cheng L. Supervised segmentation of un-annotated retinal fundus images by synthesis. IEEE Trans Med Imaging. 2018;38(01):46–56. doi: 10.1109/TMI.2018.2854886.

- 112. Tong L, Wu H, Wang M D. CAESNet: Convolutional AutoEncoder based Semi-supervised Network for improving multiclass classification of endomicroscopic images. J Am Med Inform Assoc. 2019;26(11):1286–96.