Significance

We report an empirical study of gaze deflection—a common experience in which you turn to look in a different direction when someone “catches” you staring at them. We show that gaze cueing (the automatic orienting of attention to locations at which others are looking) is far weaker for such displays, even when the actual eye and head movements are identical to more typical intentional gazes. This demonstrates how gaze cueing is driven by the perception of minds, not eyes, and it serves as a case study of both how social dynamics can shape visual attention in a sophisticated manner and how vision science can contribute to our understanding of common social phenomena.

Keywords: attention, gaze cueing, intentionality, social perception, gaze deflection

Abstract

Suppose you are surreptitiously looking at someone, and then when they catch you staring at them, you immediately turn away. This is a social phenomenon that almost everyone experiences occasionally. In such experiences—which we will call gaze deflection—the “deflected” gaze is not directed at anything in particular but simply away from the other person. As such, this is a rare instance where we may turn to look in a direction without intending to look there specifically. Here we show that gaze cues are markedly less effective at orienting an observer’s attention when they are seen as deflected in this way—even controlling for low-level visual properties. We conclude that gaze cueing is a sophisticated mental phenomenon: It is not merely driven by perceived eye or head motions but is rather well tuned to extract the “mind” behind the eyes.

One of the most important events we perceive in our daily lives is when a nearby agent shifts their attention, e.g., turning suddenly to look in a different direction. Indeed, our visual system is especially sensitive to where others are looking, as demonstrated by many previous studies of gaze shifting (for a review see ref. 1), and these events are so salient that we have an automatic tendency to look in the direction that others are looking (2). This gives rise to the phenomenon of gaze cueing: In a display with two potential target locations flanking a face, for example, observers are faster and more accurate at identifying targets that appear where the face is looking (e.g., refs. 3 and 4; for a review, see ref. 5). This sort of gaze cueing is triggered not just when viewing eyes but also when viewing simple head turns (ref. 6; see also ref. 7).

Why are such gaze shifts so powerful? They might be driven simply by the salient motions of the eyes and heads themselves. But another possibility is that they are driven by the higher-level perception that an agent has shifted their attention or intentions. Exploring these possibilities requires a stimulus in which these factors diverge, which may seem unusual; after all, we usually look toward the objects that are the focus of our intentions (8). But there is one relatively common (though previously unstudied) social phenomenon in which a gaze shift may not actually signal an intention to look at the second location. This occurs in what we will call gaze deflection—when you are surreptitiously looking at someone but then suddenly look away (perhaps toward a second person) when the first person catches you staring at them. Here the intention is not to look at the second person, but only away from the first person.

Do such “deflected” gazes still drive gaze cueing? In five experiments (including direct replications), we showed each observer an animation with three actors* either exhibiting gaze deflection (deflection animations) or performing identical movements, except now temporally reordered, such that impressions of gaze deflection were eliminated and all gaze shifts were seen as intentionally directed at their new locations (control animations). In experiment 1a, each animation (depicted in Fig. 1 and in Movies S1 and S2 and also online at http://perception.yale.edu/gaze-deflection/) began with a central person (A) turning to look at the rightmost person (B; the “first” gaze, seen as directed). In deflection animations, B turned her head to face A, who then (exhibiting gaze deflection) immediately turned to look in the other direction, thus facing a third person (C; the “second” gaze, seen as deflected). In control animations, shortly after turning to look at B, A instead spontaneously (i.e., without B “catching” her staring) turned to look toward C (the second gaze, now seen as directed). Only then did B turn her head toward A. To measure how observers’ attention varied in response to the deflection vs. control animations, we presented a single target letter along the direction of A’s gaze during either the first gaze (early targets; depicted in Fig. 2A) or second gaze (late targets; depicted in Fig. 2B). This same design was then employed in experiment 1b (a direct replication of experiment 1a).

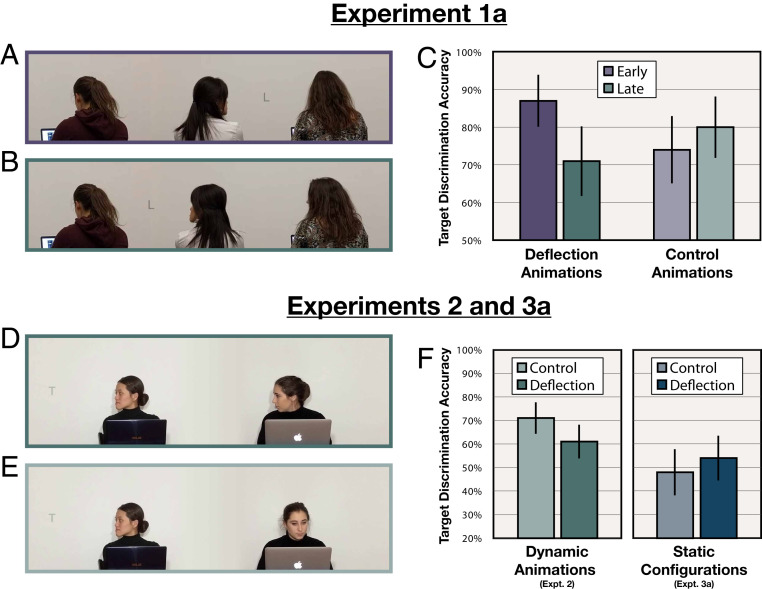

Fig. 1.

A schematic depiction of the animations observers viewed in experiments 1a and 1b.

Fig. 2.

(A and B) Examples of the stimuli used in the letter discrimination task from experiments 1a and 1b, including the early target (A) and late target (B). (C) Average accuracy in the letter discrimination task for early vs. late targets in experiment 1a. Accuracy was impaired for late targets (relative to early targets) in deflection animations but not in control animations (where both gazes were seen as directed since there was no gaze deflection). (D and E) Examples of the stimuli used in the letter identification task from experiment 2 in both the deflection animation (D) and the control animation (E). (F) Average accuracy in the letter identification task for deflection vs. control animations in experiment 2 and for deflection vs. control configurations in experiment 3a. Accuracy was lower in deflection animations than in control animations, but only when the stimuli were presented as dynamic animations (experiment 2) and not when they were presented as static frames (thus eliminating impressions of gaze deflection; experiment 3a). Error bars indicate 95% CIs.

Next, we ruled out two classes of potential confounding factors, pertaining to temporal differences (experiment 2) and spatial differences (experiments 3a and 3b) in the animations employed in the original experiments. In experiment 2, we explored the role of temporal factors: Whereas the deflection vs. control animations in experiments 1a and 1b featured different numbers of head turns (and differential delays) before the late target was presented, these temporal factors were now equated (as depicted in SI Appendix, Fig. S1 and Movies S3 and S4). This experiment also served as a conceptual replication of experiments 1a and 1b since it featured different videos, now of actors facing toward the camera so that their eyes were fully visible (as in Fig. 2 D and E).

Finally, we explored the role of spatial factors: In experiment 2, deflection animations ended with both actors looking toward the target location (as in Fig. 2D), while control animations ended with one of the actors looking forward (as in Fig. 2E). To ensure that these differing spatial configurations could not explain the observed differences between deflected and control animations, experiment 3a (and experiment 3b, its direct replication) retained these final tableaus from experiment 2 but eliminated the preceding motions which led to the perception of deflected vs. directed gazes in the first place (as depicted in SI Appendix, Fig. S2).

Results

The average discrimination accuracy for early and late targets in experiment 1a is depicted separately for deflection and control animations in Fig. 2C. Inspection of this figure suggests two clear patterns of results: 1) In the deflection animations, letter discrimination accuracy was higher for first gazes (seen as directed) compared to second gazes (seen as deflected), but 2) this bias was not present in the control animations (when both gazes were directed). These impressions were confirmed by the following analyses. The proportions of correct responses for early and late targets were compared using a two-proportion z test in deflection and control animations, respectively. There was a significant difference between first and second gazes in the deflection animations (87.0 vs. 71.0%; z = 2.78, P = 0.005, Cohen's h = 0.40) but not in control animations (74.0 vs. 80.0%; z = 1.01, P = 0.313, h = 0.14). And the difference between these differences (i.e., the interaction effect) was also highly reliable (z = 2.66, P = 0.008). Thus, the gaze cueing effect is greatly reduced when the gaze is deflected, even when the actual head motion is identical. These effects were directly replicated in experiment 1b: there was a significant difference between early and late targets in deflection animations (86.0 vs. 70.0%; z = 2.73, P = 0.006, h = 0.39) but not in control animations (74.0 vs. 76.0%; z = 0.33, P = 0.744, h = 0.05), and the difference between these differences was also significant (z = 2.12, P = 0.034). And in experiment 2, gaze cueing was once again greatly reduced when the gaze was deflected (61.0 vs. 71.0%; z = 2.11, P = 0.035, h = 0.21; see Fig. 2F)—despite the identical timing of head turns, even with fully visible eyes.
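The two-proportion z test and Cohen's h reported above can be recomputed from the percentages alone. The following is a minimal sketch, not the authors' analysis code. It assumes 100 observers per cell in experiments 1a and 1b (400 observers split across 2 target timings × 2 animation types) and 200 per cell in experiment 2, and it uses the standard pooled-variance z statistic. The function names are illustrative.

```python
import math

def two_proportion_z(correct_a, n_a, correct_b, n_b):
    """Pooled two-proportion z test; returns (z, two-sided p)."""
    p_a, p_b = correct_a / n_a, correct_b / n_b
    pooled = (correct_a + correct_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_a - p_b) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal tail area
    return z, p_value

def cohens_h(p_a, p_b):
    """Cohen's h: difference between arcsine-transformed proportions."""
    return 2 * math.asin(math.sqrt(p_a)) - 2 * math.asin(math.sqrt(p_b))

# Experiment 1a, deflection animations: early targets 87% vs. late targets 71%.
# The cell size of 100 is an assumption inferred from the design.
z, p = two_proportion_z(87, 100, 71, 100)
h = cohens_h(0.87, 0.71)
print(f"z = {z:.2f}, P = {p:.3f}, h = {h:.2f}")
```

Under these assumptions, the sketch yields values that round to the reported z = 2.78, P = 0.005, and h = 0.40 for the deflection animations in experiment 1a.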

Experiment 3a retained only the final tableaus from experiment 2. Now, with the elimination of the preceding head turns (that yielded impressions of gaze deflection), there was no difference between the deflection-frame and control-frame conditions. Indeed, if anything, there was a trend in the opposite direction: Accuracy was greater with final tableaus from deflection animations compared to those from control animations (54.0 vs. 48.0%; z = 1.20, P = 0.230, h = 0.12; see Fig. 2F). And crucially, there was a reliable interaction with target accuracy from experiment 2 (z = 2.32, P = 0.020), thus demonstrating that the final spatial configurations alone cannot be responsible for the gaze deflection effect. These effects were directly replicated in experiment 3b: accuracy was again trending in the opposite direction, with better performance for final tableaus from deflection compared to control animations (55.0 vs. 45.5%; z = 1.90, P = 0.057, h = 0.19), and the interaction again revealed that this was different from the gaze deflection effect observed in experiment 2 (z = 2.83, P = 0.005).
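One plausible way to test a "difference between differences" across independent groups is a Wald z test on a contrast of four proportions, using unpooled variances. The text does not state which procedure was used for the interaction tests, so this choice is an assumption, as is the cell size of 200 (400 observers split across two conditions per experiment). The function name is illustrative.

```python
import math

def interaction_z(p_a1, p_a2, p_b1, p_b2, n):
    """Wald z for (p_a1 - p_a2) - (p_b1 - p_b2), with four independent cells of size n."""
    contrast = (p_a1 - p_a2) - (p_b1 - p_b2)
    variance = sum(p * (1 - p) / n for p in (p_a1, p_a2, p_b1, p_b2))
    return contrast / math.sqrt(variance)

# Experiment 2 (dynamic animations): control 71% vs. deflection 61%.
# Experiment 3a (static frames):    control 48% vs. deflection 54%.
z = interaction_z(0.71, 0.61, 0.48, 0.54, n=200)
print(f"z = {z:.2f}")
```

This contrast gives a value close to, though not identical with, the reported interaction statistic (z = 2.32), which suggests that the published analysis used a somewhat different variance estimate.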

Discussion

The current study exploits the phenomenon that we have called gaze deflection. This is a familiar (perhaps all too familiar) social phenomenon from everyday life, whereas most studies of gaze cueing use either static images of isolated faces or short video clips in which eye movements are divorced from their context (such that the agents in most such experiments are not actually looking at anything; see ref. 9). The results were clear and powerful: even when tested in only a single trial per observer, eye and head movements were much less effective at cueing attention when they were seen as deflected and thus dissociated from the actual direction of intention.

These effects seemed to reflect the social significance of gaze deflection, rather than any lower-level properties. In particular, they did not reflect differences in the timing of head turns since these were equated in experiment 2. And they also cannot be explained by applying traditional gaze cueing mechanisms to the differing spatial configurations. When multiple people turn to look in the same direction (as in Fig. 2D), gaze cueing is typically amplified (e.g., ref. 10), and such “pooling” effects are particularly strong in the context of actual head turns as we used in the present studies (as opposed to mere eye movements; e.g., ref. 11). This sort of group-wide gaze cueing remains powerful even when people are looking directly to the right or left (12), and attention is not cued in such configurations to the space between multiple people who are gazing in the same direction (13). Accordingly, we also demonstrated directly (in experiments 3a and 3b) that such spatial configurations do not yield such differences in the absence of the head turns and eye movements that give rise to the perception of gaze deflection.

The phenomenon of gaze deflection, with its dissociation between the perceived direction of gaze and the perceived direction of intention, provides unique insights into recent debates on the relative contribution of visual cues and mental states to social attention (for a recent review, see ref. 14). It has long been assumed that gaze cueing is driven by the visual cue of eye gaze alone (4, 15). Building on other recent work uncovering humans’ remarkable ability to construct rich models of others’ attentional states (e.g., ref. 16), the current results use a familiar social phenomenon to directly demonstrate that cueing of attention is especially tuned to the perceived attentional states of others and less so to brute visual cues. Attention, in this sense, seems tuned not to follow the eyes, but rather to follow the mind behind the gaze.

Methods and Materials

Participants.

For each experiment, 400 observers were recruited through Amazon Mechanical Turk (MTurk; experiment 1a: 244 females, Mage [the participants' mean age] = 36.96; experiment 1b: 242 females, Mage = 35.77; experiment 2: 203 females, Mage = 34.40; experiment 3a: 187 females, Mage = 36.21; experiment 3b: 194 females, Mage = 34.67), and each completed a single trial in a 2- to 5-min session in exchange for monetary compensation. (For a discussion of this pool’s nature and reliability, see ref. 17. All observers were in the United States, had an MTurk task approval rate of at least 80%, and had previously completed at least 50 MTurk tasks.) This sample size was determined arbitrarily before data collection began and was fixed to be identical in each of the five experiments reported here. All experimental methods and procedures were approved by the Yale University Institutional Review Board, and all observers confirmed that they had read and understood a consent form outlining their risks, benefits, compensation, and confidentiality and that they agreed to participate in the experiment.

Apparatus.

After agreeing to participate, observers were redirected to a website where stimulus presentation and data collection were controlled via custom software written in HTML, CSS, JavaScript, and PHP. (Since the experiment was rendered on observers’ own web browsers, viewing distance, screen size, and display resolutions could vary dramatically, so we report stimulus dimensions below using pixel [px] values.)

Stimuli and Design.

Experiments 1a and 1b.

As depicted in the sample screenshots in Fig. 1, observers viewed an animation (1,202 × 297 px) centered in their browser window including a gray (hexadecimal color code #605D5D) 3 px frame on a dark gray (#404040) background. Three people were viewed from behind, on a background wall (approximately #CFCBC4). The three people were sitting in front of laptops and typing sounds played throughout the animation. The people initially looked straight ahead, with the timings of the movements described below reported with respect to the beginning of the animation.

In the deflection animations, the central person turned her head (at 3.5 s) toward the rightmost person (the first gaze, seen as directed) and then seemed to stare at her. At 7.2 s, the rightmost person turned her head to face the middle person, who then (exhibiting gaze deflection) immediately (at 7.5 s) turned to look in the other direction, thus facing the leftmost person (the second gaze, seen as deflected). (The leftmost person looked straight ahead throughout the animation.) The final tableau was then visible for an additional 1.1 s (i.e., until 8.6 s), at which point it disappeared. In the control animations, the central person again turned her head (at 3.5 s) toward the rightmost person (the first gaze, again seen as directed) and then seemed to stare at her. At 7.5 s (without having been caught), the central person then turned to look in the other direction, thus facing the leftmost person (the second gaze, also now seen as directed). Only after this (at 8.8 s) did the rightmost person turn her head toward the central person. The final tableau was then visible for an additional 1.5 s (i.e., until 10.3 s), at which point it disappeared. (Once again, the leftmost person looked straight ahead throughout the animation.)
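The two animation types differ only in when the rightmost person turns relative to the central person's second gaze. A minimal sketch of the event schedule for the first pair of animations, using the timings given above, makes this explicit. The dictionary layout and the key names are illustrative and are not taken from the authors' software.

```python
# Event onsets in seconds from the start of the animation (first pair of animations).
TIMELINES = {
    "deflection": {
        "center_turns_right": 3.5,  # first gaze (directed)
        "right_turns_to_center": 7.2,
        "center_turns_left": 7.5,   # second gaze (deflected)
        "end": 8.6,
    },
    "control": {
        "center_turns_right": 3.5,  # first gaze (directed)
        "center_turns_left": 7.5,   # second gaze (directed)
        "right_turns_to_center": 8.8,
        "end": 10.3,
    },
}

def caught_before_second_gaze(timeline):
    """True when the stared-at person turns before the central person looks away."""
    return timeline["right_turns_to_center"] < timeline["center_turns_left"]

for name, timeline in TIMELINES.items():
    print(name, caught_before_second_gaze(timeline))
```

The central person's own head turns start at the same times (3.5 s and 7.5 s) in both schedules, so only the order of the rightmost person's turn separates a deflected second gaze from a directed one.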

Each observer viewed a target letter presented for 0.13 s on the background between the people (roughly in line with their eyes) while the animation was playing. This target was a gray (#9C9892) “T” or “L” (presented in Helvetica, roughly 20 × 30 px). Targets presented during first gazes (early targets; depicted in Fig. 2A) were presented between the middle and rightmost people (centered at 788 px from the image’s left border) along the direction of gaze (centered at 143 px from the image’s top border) 0.1 s after the middle person finished turning her head toward the rightmost person (at 4.4 s). Targets presented during second gazes (late targets; depicted in Fig. 2B) were presented between the middle and leftmost people (centered at 408 px from the image’s left border) along the direction of gaze (centered at 143 px from the image’s top border) 0.1 s after the middle person finished turning her head toward the leftmost person (at 8.4 s).

In the actual animations that observers viewed, the identities of the leftmost and rightmost people were counterbalanced, using the identical stimuli. In fact, since the leftmost person never turned her head, only two initial movies were filmed, but the leftmost person in each movie was the first static frame of the rightmost person from the other movie. (Given the uniformly lit wall in the background, this frame was added into the animation without any obvious segmentation cue, such that it appeared to be an animation of three separate people, as depicted in Fig. 1.) The two resulting animations were qualitatively identical, but because they were constructed from two separately filmed movies, their timing was slightly different. In particular, compared to the timing of the first pair of animations (as described above), the second movie’s key events occurred at the following time stamps: 1) The middle person turned to the right at 3.7 s. 2) In the deflection animations, the rightmost person then turned to the left at 7.2 s. 3) In the deflection animations, the middle person turned to the left at 7.6 s. 4) In the control animations, the middle person turned to the left at 7.6 s. 5) In the control animations, the rightmost person looked to the left at 9.0 s. 6) Targets presented during first gazes appeared at 5.4 s. 7) Targets presented during second gazes appeared at 8.4 s.

The design described above resulted in a total of 16 animations: 2 target timings (early/late) × 2 target identities (L/T) × 2 orders of head movements (deflection/control) × 2 identities for the rightmost vs. leftmost people, and each was viewed by 25 unique observers.
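The fully crossed design can be enumerated directly to confirm the counts. This is a small sketch; the factor labels (in particular the `movie_1` and `movie_2` names for the two actor arrangements) are hypothetical.

```python
from itertools import product

factors = {
    "target_timing": ("early", "late"),
    "target_identity": ("L", "T"),
    "head_movement_order": ("deflection", "control"),
    "actor_arrangement": ("movie_1", "movie_2"),  # hypothetical labels
}

# One dictionary per animation, covering every combination of factor levels.
animations = [dict(zip(factors, combo)) for combo in product(*factors.values())]

observers_per_animation = 25
total_observers = len(animations) * observers_per_animation
print(len(animations), total_observers)
```

Collapsing across target identity and actor arrangement leaves four cells (2 target timings × 2 head movement orders), each with 4 animations × 25 = 100 observers.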

Experiment 2.

Observers viewed a silent animation (1,000 × 298 px) including a gray (#5F5D5B) 6 px frame and featuring two people viewed from the front on a background wall (approximately #DFDFD7).

In the deflection animation, the left person turned her head (at 2.0 s) toward the right person and then seemed to stare at her. At 4.0 s, the right (i.e., stared-at) person turned her head to face the left person, who then (exhibiting gaze deflection) immediately (at 4.8 s) turned to look in the other direction. The final tableau was then visible for an additional 0.7 s (i.e., until 6.0 s), at which point it disappeared. In the control animation, the right person was facing to her left in the beginning, and at 2.0 s she turned to face straight ahead. At 4.0 s, the left person turned her head toward the right person to stare at her and then immediately (at 4.8 s) turned to look in the other direction. (Since the right person was facing her laptop during these movements, this shift now appeared to be intentional rather than deflected.) The final tableau was then visible for an additional 0.7 s (i.e., until 6.0 s), at which point it disappeared.

A target letter was presented to the left of the left person (centered at 130 px from the image’s left border) 0.1 s after the left person finished turning her head toward her right (and the observer’s left) along the direction of gaze (centered at 283 px from the image’s top border). This target (a gray #B1B0A7 T, presented in Helvetica, roughly 38 × 46 px) gradually faded in over the course of 0.20 s, remained visible for 0.10 s, and gradually faded out for another 0.20 s. There were thus two animations corresponding to two orders of eye/head motions (deflection/control), and each was viewed by 200 unique observers.
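The target's time course in experiment 2 can be sketched as a piecewise opacity function. The durations come from the description above, but the linear shape of the ramps is an assumption (the text says only that the target faded gradually), and the function name is illustrative.

```python
def target_opacity(t, fade_in=0.20, hold=0.10, fade_out=0.20):
    """Opacity (0 to 1) at t seconds after target onset, assuming linear ramps."""
    if t < 0:
        return 0.0
    if t < fade_in:
        return t / fade_in          # fading in
    if t < fade_in + hold:
        return 1.0                  # fully visible
    end = fade_in + hold + fade_out
    if t < end:
        return (end - t) / fade_out  # fading out
    return 0.0

for t in (0.0, 0.1, 0.25, 0.4, 0.6):
    print(t, round(target_opacity(t), 2))
```

On this schedule the target is on screen for 0.5 s in total but at full contrast for only 0.1 s.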

Experiments 3a and 3b.

These experiments were identical to experiment 2, except as noted here. Observers viewed the final 0.7 s of the animations (which consisted of only static frames) from experiment 2 (cropped to hide the laptop logos, 1,000 × 268 px). In deflection frames, both people were thus facing to the left (as in Fig. 2D), and in control frames, the left person was facing to the left, while the right person was facing ahead (as in Fig. 2E). The target letter (#000000) was again presented to the left of the left person and began fading in as the animation began. The animation ended 0.2 s after the target faded out, at 0.7 s. There were thus two animations corresponding to two head directions (deflection/control frames), and for half of the observers (counterbalanced across conditions), the videos were horizontally flipped, for a total of four animations, each viewed by 100 unique observers.

Procedure.

Each observer was instructed to watch a single animation as closely as possible, as it would be displayed only once. Observers viewed the animation (which started playing automatically after 0.5 s in experiments 1a and 1b, upon a keypress in experiment 2, and upon a keypress and after a 1 s “Get Ready” message in experiments 3a and 3b). In experiments 1a and 1b, immediately after the animation ended (and disappeared), observers were asked three questions (only one of which was visible at a time): 1) whether they had seen a letter appear during the animation, 2) whether it was a “T” or an “L” (and to guess if they did not know), and 3) how confident they were in their response (on a scale of 1 to 7, with 1 labeled “Not at all” and 7 labeled “Entirely”). In experiment 2, observers were asked two questions (only one of which was visible at a time): 1) whether they had seen a letter appear during the animation and 2) which letter they saw (A to Z; and to guess if they did not know). In experiments 3a and 3b, observers were asked only one question: which letter they saw (A to Z; and to guess if they did not know). In all experiments, they then also answered questions that allowed us to exclude (with replacement) observers who guessed the purpose of the experiment (e.g., mentioning gaze following; n = 27, 10, 25, 15, and 7 in experiments 1a, 1b, 2, 3a, and 3b respectively), who interrupted the experiment (n = 16, 9, 21, 55, 54), who did not view the video “in full view” (n = 95, 49, 9, 11, 11), who reported past participation in a similar study (n = 4, 8, 24, 46, 45), who encountered any problems (n = 19, 7, 4, 3, 2), or who failed to answer our questions sensibly (n = 7, 2, 24, 49, 32; e.g., responding to our question about the experiment’s purpose by writing “i cant see”). In experiments 2, 3a, and 3b, we also removed observers who entered anything other than a single letter in response to the letter identification question (e.g., “jjhhgkjk”; n = 2, 17, 9). 
The resulting unique excluded observers (some of whom triggered multiple criteria; n = 142, 70, 79, 101, 96) were replaced without us ever analyzing their data. (The relatively high exclusion rate for observers who reported not watching the video in full view in experiments 1a and 1b may be due to observers misunderstanding our poorly worded question as involving whether the people in the videos—and not the videos themselves—were in full view. In fact, the video depicted only the upper bodies of the people, as in Fig. 1. When this question was replaced by directly measuring the size of observers’ browser windows in experiments 2, 3a, and 3b and comparing it to the size of the animation, only 9, 11, and 11 observers were excluded.)
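Because some observers triggered several criteria, the per-criterion counts sum to more than the number of unique excluded observers. The sketch below tabulates the counts reported above and computes that surplus for each experiment. The dictionary keys are illustrative labels, and the zeros for the single-letter criterion in experiments 1a and 1b indicate that the criterion was not applied there.

```python
# Per-criterion exclusion counts, ordered as experiments 1a, 1b, 2, 3a, 3b.
criterion_counts = {
    "guessed_purpose":      (27, 10, 25, 15, 7),
    "interrupted":          (16, 9, 21, 55, 54),
    "not_in_full_view":     (95, 49, 9, 11, 11),
    "past_participation":   (4, 8, 24, 46, 45),
    "encountered_problems": (19, 7, 4, 3, 2),
    "nonsensical_answers":  (7, 2, 24, 49, 32),
    "not_single_letter":    (0, 0, 2, 17, 9),  # applied only in experiments 2, 3a, 3b
}
unique_excluded = (142, 70, 79, 101, 96)

# Total criterion flags per experiment (an observer can be flagged more than once).
flags_per_experiment = [sum(column) for column in zip(*criterion_counts.values())]

# Flags beyond one per excluded observer, i.e., the amount of overlap among criteria.
extra_flags = [f - u for f, u in zip(flags_per_experiment, unique_excluded)]
print(flags_per_experiment, extra_flags)
```

The surplus is largest in experiments 3a and 3b, where many excluded observers were flagged by more than one criterion.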

Acknowledgments

For helpful conversations, we thank Andrew Chang, Felix Chang, Hauke Meyerhoff, Yaoda Xu, and members of the Harvard Vision Sciences Laboratory and the Yale Perception and Cognition Laboratory. This project was funded by Office of Naval Research Grant MURI N00014-16-1-2007 awarded to B.J.S.

Footnotes

The authors declare no competing interest.

This article is a PNAS Direct Submission. M.S.A.G. is a guest editor invited by the Editorial Board.

*In fact, the people in the videos were the paper’s authors — but for reasons of agreed-upon differences in photogenic fitness, one of the authors was included twice, and one was eliminated altogether.

This article contains supporting information online at https://www.pnas.org/lookup/suppl/doi:10.1073/pnas.2010841117/-/DCSupplemental.

Data Availability.

All raw data are available in SI Appendix. The preregistration for experiment 2 can be viewed at https://aspredicted.org/yw8az.pdf, and that for experiments 3a and 3b can be viewed at https://aspredicted.org/mm4pc.pdf.

References

- 1. Emery N. J., The eyes have it: The neuroethology, function and evolution of social gaze. Neurosci. Biobehav. Rev. 24, 581–604 (2000).

- 2. Milgram S., Bickman L., Berkowitz L., Note on the drawing power of crowds of different size. J. Pers. Soc. Psychol. 13, 79–82 (1969).

- 3. Driver J. et al., Gaze perception triggers reflexive visuospatial orienting. Vis. Cogn. 6, 509–540 (1999).

- 4. Friesen C. K., Kingstone A., The eyes have it! Reflexive orienting is triggered by nonpredictive gaze. Psychon. Bull. Rev. 5, 490–495 (1998).

- 5. Frischen A., Bayliss A. P., Tipper S. P., Gaze cueing of attention: Visual attention, social cognition, and individual differences. Psychol. Bull. 133, 694–724 (2007).

- 6. Langton S. R. H., Bruce V., Reflexive visual orienting in response to the social attention of others. Vis. Cogn. 6, 541–567 (1999).

- 7. Langton S. R. H., Watt R. J., Bruce V., Do the eyes have it? Cues to the direction of social attention. Trends Cogn. Sci. 4, 50–59 (2000).

- 8. Krajbich I., Armel C., Rangel A., Visual fixations and the computation and comparison of value in simple choice. Nat. Neurosci. 13, 1292–1298 (2010).

- 9. Langton S. R. H., Gaze perception: Is seeing influenced by believing? Curr. Biol. 19, R651–R653 (2009).

- 10. Gallup A. C. et al., Visual attention and the acquisition of information in human crowds. Proc. Natl. Acad. Sci. U.S.A. 109, 7245–7250 (2012).

- 11. Florey J., Clifford C. W. G., Dakin S., Mareschal I., Spatial limitations in averaging social cues. Sci. Rep. 6, 32210 (2016).

- 12. Sun Z., Yu W., Zhou J., Shen M., Perceiving crowd attention: Gaze following in human crowds with conflicting cues. Atten. Percept. Psychophys. 79, 1039–1049 (2017).

- 13. Vestner T., Tipper S. P., Hartley T., Over H., Rueschemeyer S.-A., Bound together: Social binding leads to faster processing, spatial distortion, and enhanced memory of interacting partners. J. Exp. Psychol. Gen. 148, 1251–1268 (2019).

- 14. Capozzi F., Ristic J., Attention AND mentalizing? Reframing a debate on social orienting of attention. Vis. Cogn. 28, 97–105 (2020).

- 15. Kingstone A., Kachkovski G., Vasilyev D., Kuk M., Welsh T. N., Mental attribution is not sufficient or necessary to trigger attentional orienting to gaze. Cognition 189, 35–40 (2019).

- 16. Guterstam A., Kean H. H., Webb T. W., Kean F. S., Graziano M. S. A., Implicit model of other people’s visual attention as an invisible, force-carrying beam projecting from the eyes. Proc. Natl. Acad. Sci. U.S.A. 116, 328–333 (2019).

- 17. Crump M. J. C., McDonnell J. V., Gureckis T. M., Evaluating Amazon’s Mechanical Turk as a tool for experimental behavioral research. PLoS One 8, e57410 (2013).
