Abstract

Observers can learn complex statistical properties of visual ensembles, such as their probability distributions. Even though ensemble encoding is considered critical for peripheral vision, whether observers learn such distributions in the periphery has not been studied. Here, we used a visual search task to investigate how the shape of distractor distributions influences search performance and ensemble encoding in peripheral and central vision. Observers looked for an oddly oriented bar among distractors taken from either uniform or Gaussian orientation distributions with the same mean and range. The search arrays were either presented in the foveal or peripheral visual fields. The repetition and role reversal effects on search times revealed observers’ internal model of distractor distributions. Our results showed that the shape of the distractor distribution influenced search times only in foveal, but not in peripheral search. However, role reversal effects revealed that the shape of the distractor distribution could be encoded peripherally depending on the interitem spacing in the search array. Our results suggest that, although peripheral vision might rely heavily on summary statistical representations of feature distributions, it can also encode information about the distributions themselves.

Keywords: visual search, ensemble perception, summary statistics, priming of pop-out, peripheral vision

Introduction

There are large differences in the processing of visual information between the central and peripheral visual fields. This reflects the fact that a much higher percentage of neural structures in both the retina and primary visual cortex are devoted to the foveal region (e.g., Curcio & Allen, 1990; Daniel & Whitteridge, 1961; Hubel & Wiesel, 1974; Drasdo, 1977; see Jóhannesson, Tagu & Kristjánsson, 2018 for review). Resolution, acuity, and contrast sensitivity are higher for foveal than peripheral vision (Anstis, 1974; Virsu & Rovamo, 1979). However, only a small central region of our visual field corresponds with the fovea, whereas the bulk of it is processed peripherally. This means that a significant amount of our daily visual perception depends on our peripheral vision. To have a complete picture of our visual capabilities, it is crucial to understand how processing differs between central and peripheral vision.

Apart from diminished resolution and acuity, one of the main reasons why visual performance is degraded in peripheral vision is crowding, which occurs when similar items (i.e., distractors, or “flankers”) are present near a target item (Stuart & Burian, 1962; Bouma, 1970; Levi, Klein & Aitsebaomo, 1985; Wilkinson, Wilson & Ellemberg, 1997). Recent work on crowding has suggested that peripheral visual information is encoded as visual ensembles, which are represented in terms of summary statistics (Levi, 2008; Pelli & Tillman, 2008; Balas, Nakano, & Rosenholtz, 2009; Rosenholtz, Huang, Raj, Balas & Ilie, 2012; Ehinger & Rosenholtz, 2016). This would enable peripheral vision to process a large area of the visual field very quickly to detect any potentially informative visual items or events. This would then guide subsequent eye movements that project informative parts of the visual scene onto the fovea for further processing. Therefore, examining how visual ensembles are encoded in peripheral vision could increase our understanding of how the visual scene is processed.

The visual system can represent a distribution of features (e.g., orientation, color, size, facial expression) across many objects as an ensemble, and can accurately and efficiently extract the summary statistics of such ensembles (for reviews, see Alvarez, 2011; Haberman & Whitney, 2011; Whitney & Leib, 2018). Foveal information is not needed for this (Wolfe, Kosovicheva, Leib, Wood & Whitney, 2015). Furthermore, it has even been argued that summarizing information in the peripheral visual field as ensembles is an automatic, compulsory process, which, in turn, explains why crowding becomes stronger with increasing eccentricity (Parkes, Lund, Angelucci, Solomon, & Morgan, 2001; Fischer & Whitney, 2011; but see also Livne & Sagi, 2007; Bulakowski, Post & Whitney, 2011). Although crowding can be seen as a limiting factor for object recognition in the periphery, this compulsory averaging might facilitate scene perception, because it has been shown that peripheral processing is more useful for recognizing the gist of a scene than foveal processing (Larson & Loschky, 2009; Ehinger & Rosenholtz, 2016).

Despite this strong link between peripheral processing and ensemble perception, the effects of eccentricity on the encoding of visual ensembles have not received the attention they deserve. In particular, little work has focused on how ensemble encoding differs between central and peripheral vision. Ji, Chen, and Fu (2014) presented participants with sets of faces where certain faces appeared in the foveal visual field and others in the periphery. Participants judged whether the overall emotion of the set was positive or negative. Ji et al. found that faces in the foveal region were given more weight than those in the periphery in participants’ judgments. In contrast, by using gaze-contingent displays, Wolfe et al. (2015) demonstrated that participants were equally accurate in reporting the mean emotion of a set of faces when there was a foveal occluder and when there was not.

Both of these studies required explicit judgments about the mean value of a set of features. These explicit judgments on statistical parameters of a feature distribution might have limited power in revealing how accurately feature distributions in an ensemble are encoded by the visual system. Recently, Chetverikov, Campana, and Kristjánsson (2016, 2017a, 2017b, 2017c, 2020) used a novel method to demonstrate that observers can encode the probability density function underlying the distractor distribution in an odd-one-out visual search task for orientation (2016, 2017a) and color (2017b). Instead of using explicit judgments of distribution statistics, they measured observers’ visual search times varying target similarity to previously learned distractors, which revealed observers’ expectations of distractor feature distributions. This was achieved by using role reversal effects between the target and distractors, which occur when feature values of target and distractors used on previous search trials are swapped on the next trial (Kristjánsson & Driver, 2008; Becker, 2010). This causes search times to increase owing to the similarity between the current target and the previous distractors, compared with a case where current target and previous distractor features are dissimilar. In this study, we use this implicit method and manipulate this similarity between the target and the previous distractors to assess whether observers encode orientation distributions differently with central and peripheral vision. In this way, we can examine observers’ internal representations of ensembles, rather than their explicit summary statistics judgments that may in fact rely on these ensemble representations.

Another goal of our study was to examine the effect of ensemble encoding on odd-one-out visual search performance. Ensemble encoding can strongly influence visual search for outliers (Cavanagh, 2001; Alvarez, 2011; Whitney, Haberman & Sweeny, 2014). Detecting outliers among a set of items by comparing each item to all others would require complex calculations. However, creating ensemble representations of the relevant feature distribution of the items would make outlier detection much simpler. Rosenholtz et al. (2012) have shown that a model that represents the visual search area by dividing it into patches and then analyzing these patches with respect to their statistical summaries successfully predicts performance on classical visual search tasks. When the difference between the statistical summary of the patch that includes both the target and distractors and of the patch that only includes distractors increases, the search becomes easier, even when the target–distractor confusability is the same at an individual item level (e.g., search for O among Qs vs. search for Q among Os). In this study, we examine whether this influence of ensemble encoding on visual search performance changes between the central and peripheral visual fields. More importantly, previous studies have typically focused on the effect of target–distractor discriminability (Duncan & Humphreys, 1989; Palmer, Verghese & Pavel, 2000), segmentability of distractor features (Utochkin & Yurevich, 2016; Cho & Chong, 2019), or of summary statistics (Rosenholtz et al., 2012) on visual search performance. In contrast, our methodology allows us to investigate the effect of the shape of the distractor distribution while keeping all those other factors as constant as possible.

In Experiment 1, we assessed observers’ encoding of the orientation distribution of the distractor set in an odd-one-out visual search task, as well as its effect on search performance. The search array was presented either in the central or the peripheral visual field. In Experiment 2, we increased the spacing of the oriented bars as well as their size in the peripheral condition, while decreasing their size in the central condition, to make the two conditions more comparable in terms of processing capacity and partly accounting for the cortical magnification factor.

To summarize, our aim was to study search for targets among distractors with different distributions to answer the question of performance differences between the periphery and the center, as well as to measure feature distribution learning as described above (and in Chetverikov et al., 2016, 2017a) as a function of retinal eccentricity.

Experiment 1

Participants

Fourteen participants, seven males, M age = 32.14, took part in the study. All had normal or corrected-to-normal visual acuity. All participants signed an informed consent form before participating. Four were paid for their participation, whereas the other 10, who were staff or students at the University of Iceland, participated voluntarily. The experiment was run in accordance with the Declaration of Helsinki and the requirements of the ethics committee of the University of Iceland.

Stimuli

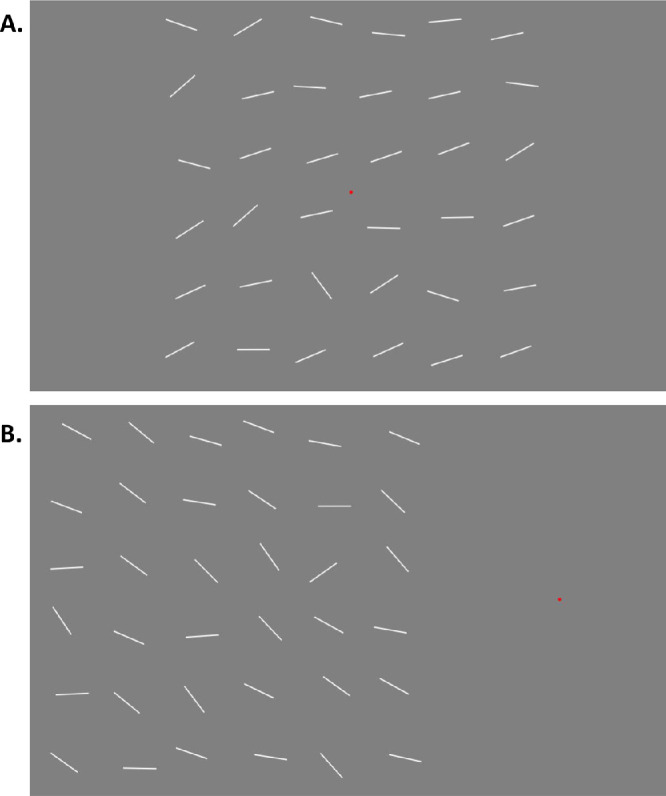

The display included a search array of 36 white lines arranged in a 6 × 6 invisible grid extending to 9° × 9° on a grey background (Figure 1). For the central condition, the search array was placed at the center of the screen, whereas for the peripheral condition it was centered 9° to the right or left of the center of the screen. The length of each line was 1°, and their position in the grid was jittered by randomly adding a value between ±0.25° to their horizontal and vertical coordinates. A red fixation dot with a radius of five pixels was placed at the center of the screen.

Figure 1.

(A) Example search array for the central condition in Experiment 1. (B) Example search array for the peripheral condition in Experiment 1. In one-half of the peripheral trials, the search array was placed 9° to the right of the center of the screen (red fixation dot), and in the other half it was placed 9° to the left.

Design

The details of the methodology we used in this study have been described in Chetverikov, Hansmann-Roth, Tanrikulu, and Kristjánsson (2019). Here, we followed the same design except for the addition of the peripheral condition and eye tracking to make sure that participants were fixating at the center of the screen.

The experiment included streaks of learning and test trials. Each learning streak included five or six search trials in which the orientations of the distractors were sampled either from a uniform, range = 60°, or a truncated Gaussian, SD = 15°, distribution. The Gaussian distribution was truncated at 2*SD away from the mean so that the range for the uniform and the Gaussian distribution was equal. This was additionally ensured by setting the orientation of two distractor lines to the minimal and maximal values of a given range and mean for both distributions. The mean orientation of the distractor distribution was determined randomly for each learning streak and kept fixed during that streak. The orientation of the target line was randomly determined with the restriction that the target to mean distractor distance in feature space was at least 60°.
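The sampling scheme described above can be illustrated with a minimal sketch. It is not the authors' MATLAB code: the function name `sample_distractors`, the use of rejection sampling for the truncation, the default of 35 distractors (36 lines minus one target), and the wrapping of orientations into a 180° space are all assumptions made for illustration.

```python
import random

def sample_distractors(mean, kind, n=35, rng=None):
    """Return n distractor orientations (deg, modulo 180) around `mean`.

    Hypothetical helper: n = 35 assumes 36 lines with exactly one target.
    """
    rng = rng or random.Random()
    half_range = 30.0                    # uniform range = 60 deg; Gaussian cut at 2 * SD = 30 deg
    offsets = [-half_range, half_range]  # two lines pinned to the minimum and maximum of the range
    while len(offsets) < n:
        if kind == "uniform":
            offsets.append(rng.uniform(-half_range, half_range))
        elif kind == "gaussian":
            draw = rng.gauss(0.0, 15.0)  # SD = 15 deg
            if abs(draw) <= half_range:  # rejection sampling implements the truncation
                offsets.append(draw)
        else:
            raise ValueError(f"unknown distribution: {kind}")
    return [(mean + o) % 180.0 for o in offsets]

orientations = sample_distractors(90.0, "gaussian", rng=random.Random(1))
print(len(orientations), min(orientations), max(orientations))
```

Pinning two offsets to the extremes guarantees that both distribution types span the same 60° range on every trial, which is the property the design relies on.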

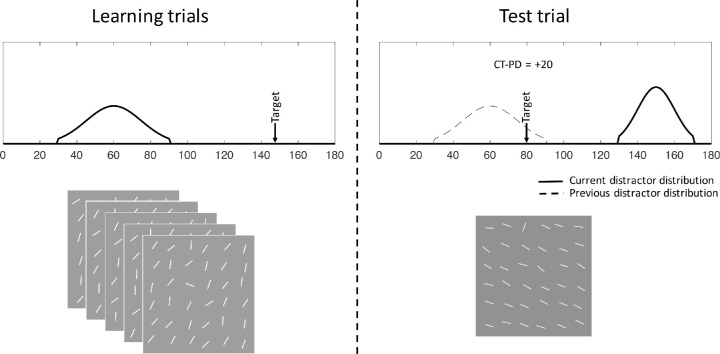

A single test trial followed the learning trials. The main variable that was manipulated in the test trial was the distance between the target orientation on the current test trial and the mean distractor orientation on the previous learning streak. This Current Target – Previous Distractor (CT–PD) distance determines the extent of the role reversal effects. To have a uniform distribution of CT–PD values, we divided the orientation space into 12 bins where each bin covered a range of 15°. Then for each test trial, a CT–PD value was randomly chosen from the bins ensuring that at the end of the experiment we had equal numbers of CT–PD values from each bin. Given the chosen CT–PD value for that test trial, the target orientation was determined to yield the chosen CT–PD distance (Figure 2). The orientations of the distractors in the test trial were sampled from a truncated Gaussian, SD = 10°, distribution. The mean orientation of the distractors was randomly determined with the restriction that the target to mean distractor distance in feature space was at least 60°.

Figure 2.

An example of learning streak trials and a test trial. On the left, the distractor distribution and the target orientation are shown for a learning streak. The distractor orientations are sampled from the same distribution throughout the learning trials; the target orientation, however, is randomly chosen with the restriction that it is at least 60° away from the distractor distribution mean. On the test trial, the target orientation is chosen to yield different CT–PD values. In the example shown here, the distance between the current target orientation (80°) and the previous distractor distribution mean (60°) yields a CT–PD value of +20°.
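The CT–PD computation can be sketched as follows. The helper names are hypothetical, and placing the 12 bins of 15° on the interval from −90° to +90° is an assumption; the text specifies only the number and width of the bins.

```python
def signed_orientation_diff(a, b):
    """Signed difference a - b in 180-deg orientation space, in [-90, 90)."""
    return (a - b + 90.0) % 180.0 - 90.0

def ctpd_bin(ctpd, width=15.0):
    """Index (0-11) of the 15-deg bin containing a signed CT-PD value.

    Assumption: bins tile [-90, 90) starting at -90 deg.
    """
    return int((ctpd + 90.0) // width)

def target_for_ctpd(prev_distractor_mean, ctpd):
    """Target orientation that yields the requested CT-PD distance."""
    return (prev_distractor_mean + ctpd) % 180.0

# Example from Figure 2: previous distractor mean 60 deg, current target 80 deg.
print(signed_orientation_diff(80.0, 60.0))   # CT-PD in degrees
print(target_for_ctpd(60.0, 20.0))           # target orientation in degrees
```

Because orientation is circular with a period of 180°, a target at 10° and a previous distractor mean of 170° are also 20° apart, which the modulo arithmetic handles.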

Each participant completed 1872 search trials, which corresponds with a total of 288 (learning + test) streaks, that is, 2 (search location: central or peripheral) × 2 (distractor distribution: Gaussian or uniform) × 12 (CT–PD bins) × 2 (learning streak length: 5 or 6) × 3 (repetition).
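As a check on these counts, the arithmetic can be written out as below, using only the factors stated above and counting one test trial per streak. The variable names are illustrative.

```python
locations = 2             # central or peripheral
distributions = 2         # Gaussian or uniform
ctpd_bins = 12
streak_lengths = (5, 6)   # learning trials per streak
repetitions = 3

# Streaks for each learning-streak length
streaks_per_length = locations * distributions * ctpd_bins * repetitions
total_streaks = streaks_per_length * len(streak_lengths)

# Each streak contributes its learning trials plus one test trial
total_trials = sum(streaks_per_length * (n + 1) for n in streak_lengths)

print(total_streaks, total_trials)
```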

Procedure and materials

Participants sat 100 cm away from a 24-inch LCD monitor (1920 × 1080) connected to a Windows 7 PC. An EyeLink 1000 Plus eyetracker was used to track participants’ fixations. The experiment was run using the Psychtoolbox (Brainard, 1997; Kleiner, Brainard & Pelli, 2007) and EyeLink (Cornelissen, Peters & Palmer, 2002) toolbox extensions in MATLAB (MathWorks, Natick, MA). We recorded eye movements from observers’ right eye, while their head was stabilized with a chin rest. The experiment included eight blocks of trials, and at the beginning of each block a five-point calibration was performed. The average calibration error for the participants was 0.37°.
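For readers who wish to relate the pixel and degree units used here, a rough conversion can be sketched from the stated viewing distance, screen diagonal, and resolution. The physical screen width is not reported; the sketch assumes square pixels (so that the physical aspect ratio equals 1920:1080) and a flat screen, and the function name is hypothetical.

```python
import math

def pixels_per_degree(diagonal_in=24.0, res_x=1920, res_y=1080, distance_cm=100.0):
    """Approximate pixels per degree of visual angle at the screen center."""
    diagonal_cm = diagonal_in * 2.54
    # Assumption: square pixels, so physical width : diagonal equals res_x : pixel diagonal.
    width_cm = diagonal_cm * res_x / math.hypot(res_x, res_y)
    # Physical extent of 1 deg centered on the line of sight
    cm_per_degree = 2 * distance_cm * math.tan(math.radians(0.5))
    return cm_per_degree * res_x / width_cm

ppd = pixels_per_degree()
print(round(ppd, 1))        # pixels per degree
print(round(5 / ppd, 3))    # radius of the 5-pixel fixation dot, in degrees
```

Under these assumptions, one degree corresponds to roughly 63 pixels, so the five-pixel fixation dot radius is a little under 0.1°.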

Participants were asked to find the oddly oriented line among the 36 oriented lines, and indicate whether the oddly oriented line was in the upper three rows or in the bottom three rows of the search array by pressing the up and down arrow keys accordingly. The target position in the search array was randomized. Participants were asked to respond as quickly and correctly as possible while keeping fixation on the red dot centered on the screen. The next search trial was shown immediately after the participants responded, except when an error was made. In that case, the word “Error” appeared in the middle of the screen in a red font for 1 s. To motivate subjects, a score was calculated for each trial based on the participant's accuracy and response time (for a correct response: score = 10 + (1 − RT) × 10; for errors: score = −|10 + (1 − RT) × 10| − 10; where RT is response time in seconds). The accumulated current score was shown to participants during the breaks.
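The scoring rule can be expressed compactly as follows. This is a direct transcription of the two formulas above; the function name `trial_score` is illustrative.

```python
def trial_score(rt_seconds, correct):
    """Per-trial score from response time (s) and accuracy."""
    base = 10 + (1 - rt_seconds) * 10
    if correct:
        return base
    # Errors are always penalized: the magnitude of the base score plus 10 is subtracted.
    return -abs(base) - 10

print(trial_score(0.5, True))    # fast correct response
print(trial_score(0.5, False))   # fast error
print(trial_score(2.0, True))    # slow correct response
```

Note that a correct response slower than 2 s yields a negative score, and that the absolute value ensures an error never yields a positive score, regardless of response time.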

The experiment was completed in eight blocks, split into two sessions. Each block consisted of 36 learning + test streaks. Participants took as much resting time as they needed between the blocks. At the beginning of each block, the participants were told in which location on the screen the search array would appear (left, right, or center) relative to the fixation point. The location of the search array was kept fixed throughout a block, but alternated across blocks. All conditions were counterbalanced, and trials were randomized for each subject separately. The order in which participants performed trials with different search array locations was also counterbalanced across subjects. For all 14 participants, the first session included 72 practice streaks (roughly 400 search trials, with one-half of them in the center, and the other half in the periphery), which was followed by three blocks of experimental trials. The second session included 24 practice streaks (i.e., 130 search trials) and five experimental blocks. The four participants who were inexperienced with the search task were given a full practice session (i.e., five blocks, which corresponds with 180 streaks) before they started the two experimental sessions. This was done to acquaint these participants with the search task, because their response times were exceptionally high (>3 s) when they were first introduced to the task. The other 10 participants were already experienced with similar visual search tasks, and because their overall search times were already lower than 1 s, they did not perform separate practice sessions (but they still completed the practice trials embedded in the experimental sessions). On average, each session took 50 minutes to complete.

Results and discussion

In the central condition, trials where participants made an eye movement outside of the search array grid were excluded. In the peripheral condition, trials where participants made an eye movement into the search area were excluded (overall 10% of all trials). If such an eye movement occurs during the learning streak, the learning process can potentially be interrupted. That is why we used longer learning streaks with five or six trials, rather than two trials, which has been found to be enough for observers to encode the distractor distribution with this method (Chetverikov et al., 2017a). Even if the learning streak is interrupted by such an eye movement, this would only shorten the effective learning streak, and there would still be enough learning trials on average to encode the distractor distribution. For this reason, the exclusion of test trials was independent of the exclusion of learning trials.

Trials where RTs were too high (>3 s) or too low (<150 ms) were also excluded (<0.1% of remaining trials). Trials where participants made an error were excluded from RT analyses (9% of the remaining trials). RTs were log transformed for all analyses. In the periphery condition, whether the search array was presented on the right or left side of the screen did not have an effect on observers’ performance. The data were therefore combined across the left and right hemifield conditions (for more details see Appendix A).

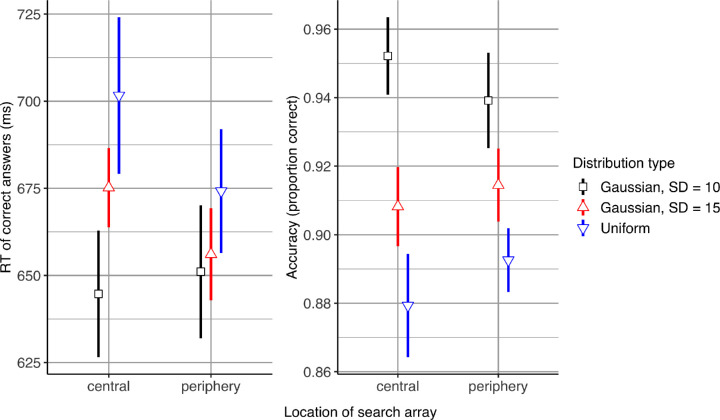

Visual search performance

Figure 3 shows visual search performance in the different conditions. Participants were fastest when the distractor orientations were sampled from the Gaussian with SD = 10° (M = 648 ms, SD = 81 ms), followed by the Gaussian with SD = 15° (M = 666 ms, SD = 98 ms), and they were slowest when the orientations were sampled from a uniform distribution (M = 688 ms, SD = 118 ms). A two-way repeated measures analysis of variance (ANOVA) yielded a significant effect of distribution type on RT, F(2,26) = 10.91, p < 0.001, as well as a significant interaction between distribution type and search location, F(2,26) = 3.87, p < 0.05. However, search location alone did not have a significant effect on RTs, F(1,13) = 0.81, p > 0.05. When the two search locations were analyzed separately, an effect of distribution type was only observed in the central condition, F(2,26) = 12.71, p < 0.05, whereas in the peripheral condition distribution type had little influence on RTs, F(2,26) = 3.35, p > 0.05.

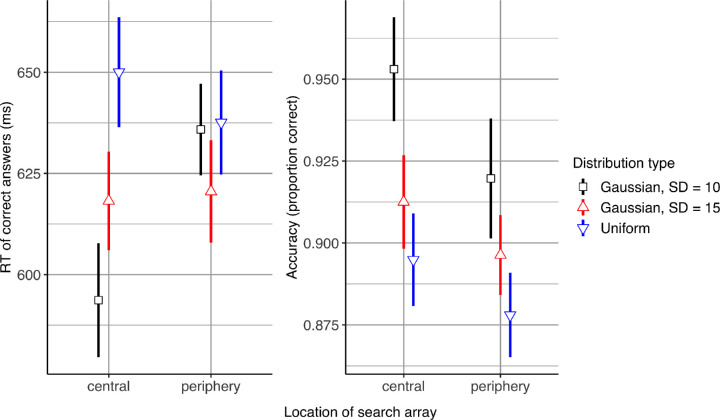

Figure 3.

Visual search performance in Experiment 1. Error bars represent 95% confidence intervals. Gaussian with SD = 10° (denoted by black) corresponds with the results obtained from the test trials, and the other two distractor distribution types (Gaussian with SD = 15° and uniform, which are denoted by red and blue respectively), correspond with the results obtained from learning trials.

Participants were most accurate when the distractor orientations were sampled from the Gaussian with SD = 10°, M = 0.95, SD = 0.02, followed by the Gaussian with SD = 15°, M = 0.91, SD = 0.03, and they were least accurate when the orientations were sampled from a uniform distribution, M = 0.89, SD = 0.04. A two-way repeated measures ANOVA revealed a significant effect of distribution type on accuracy, F(2,26) = 54.15, p < 0.001. There was no significant main effect of location, F(1,13) = 0.26, p > 0.05, and no interaction between the two factors, F(2,26) = 2.28, p > 0.05.

Overall, the search was faster and more accurate when the variance of the distractor distribution was lower. Although this finding is in line with previous studies, there was one interesting exception to this general trend. When the search array was presented in the periphery, the distractor distribution did not have a significant effect on search times, even though the accuracy was similar for the central and peripheral conditions.

Encoding of distractor distributions

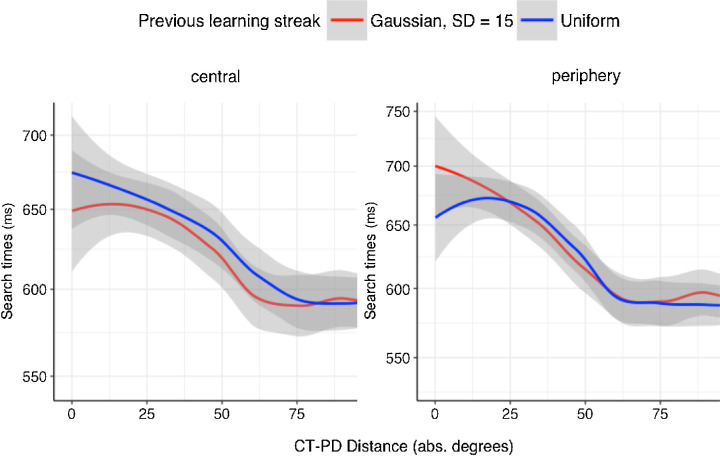

Figure 4 shows the CT–PD curves (response times on the test trials as a function of CT–PD distance), which reflect the effect of role reversals between target and distractor orientations. Since the distributions we used were symmetric, absolute values of CT–PD distances were used in the following plots and analyses. Test trials following an error were excluded from the analyses, because participants tend to slow down on a trial following an error, which overrides the role reversal effects.

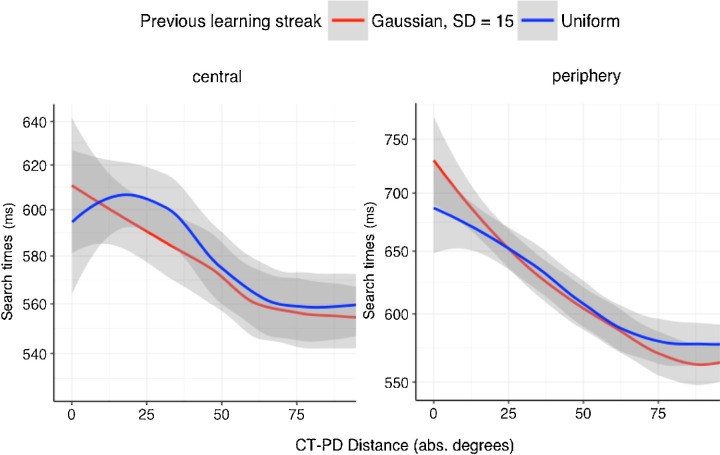

Figure 4.

Role reversal effects in Experiment 1. Search times on test trials are plotted as a function of the distance (in orientation space) between the current target orientation in the test trials and the mean distractor orientation of the preceding learning streak (CT–PD). Shaded area indicates 95% confidence intervals of the local (loess) regression fit.

To assess to what extent participants encoded the distractor distribution, we compared these CT–PD curves with the corresponding type of the distractor distribution used in the learning streak. Given that absolute values of CT–PD were used here, after a learning streak in which distractors were sampled from a Gaussian distribution, search times in test trials would be expected to decrease monotonically with increasing CT–PD values. In contrast, for a test trial after a learning streak with a uniform distribution of distractors with a range of 60°, search times would be expected to be roughly constant within the 30° range and then to decrease outside of that range.

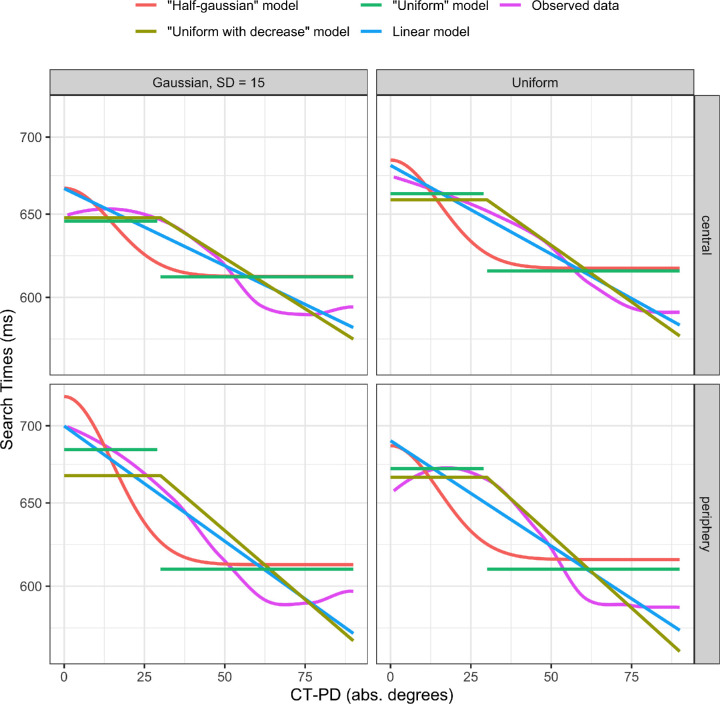

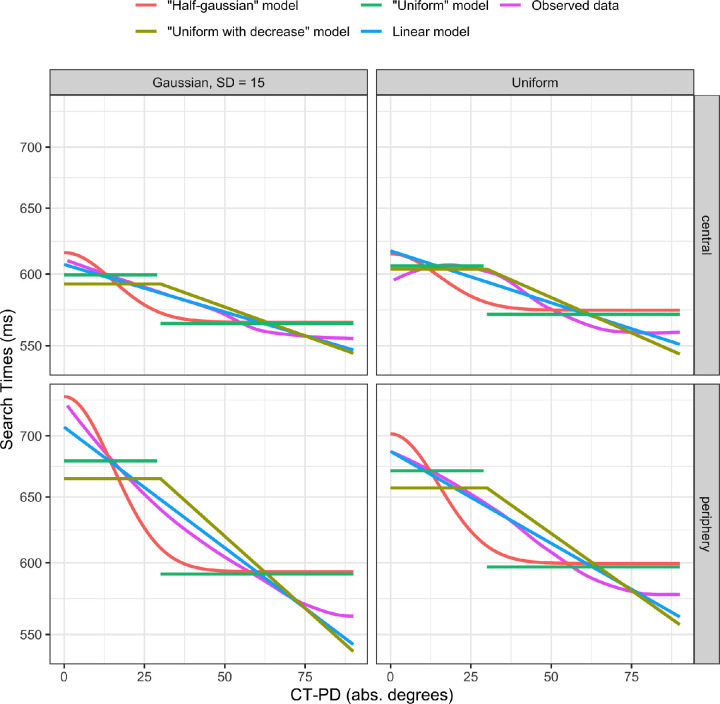

To test this hypothesis, we fit several possible distribution models to the CT–PD curves we obtained from the test trials (Figure 5). These models included a null model (i.e., constant RT over CT–PD values), a linear model (i.e., RT linearly depends on CT–PD), a half-Gaussian model (i.e., RT as a function of CT–PD described by a half-Gaussian with SD = 15°), a uniform model (i.e., constant RT but with different baselines within and outside of the 30° CT–PD range) and a uniform-with-decrease model (i.e., constant RT within the 30° CT–PD range, then linear decrease outside of that range). More formal descriptions of the models used in these analyses can be found in Chetverikov et al. (2017b). Table 1 shows the fit quality for the two best models among all models for each search array location, with accompanying Bayesian information criteria (BIC) of relevant model comparisons.
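The model comparison can be illustrated with a minimal sketch. It is not the authors' implementation (the formal model definitions are given in Chetverikov et al., 2017b): it assumes that each model is an ordinary least-squares regression of RT on a single transformed CT–PD predictor with Gaussian errors, and it uses synthetic data. All function and variable names are hypothetical.

```python
import math
import random

MODELS = ("null", "linear", "half_gaussian", "uniform", "uniform_with_decrease")

def predictor(model, x):
    """Map an absolute CT-PD distance x (deg) to the model's single predictor."""
    if model == "linear":
        return x
    if model == "half_gaussian":            # SD fixed at 15 deg
        return math.exp(-x ** 2 / (2 * 15.0 ** 2))
    if model == "uniform":                  # different baselines inside/outside 30 deg
        return 1.0 if x <= 30.0 else 0.0
    if model == "uniform_with_decrease":    # flat up to 30 deg, linear change beyond
        return max(0.0, x - 30.0)
    raise ValueError(model)

def bic(model, xs, ys):
    """BIC (up to a constant) of a least-squares fit; lower is better."""
    n = len(ys)
    mean_y = sum(ys) / n
    if model == "null":
        rss, k = sum((y - mean_y) ** 2 for y in ys), 1
    else:
        zs = [predictor(model, x) for x in xs]
        mean_z = sum(zs) / n
        szz = sum((z - mean_z) ** 2 for z in zs)
        szy = sum((z - mean_z) * (y - mean_y) for z, y in zip(zs, ys))
        slope = szy / szz
        intercept = mean_y - slope * mean_z
        rss, k = sum((y - intercept - slope * z) ** 2 for z, y in zip(zs, ys)), 2
    return n * math.log(max(rss, 1e-12) / n) + k * math.log(n)

# Synthetic data: RT decreases linearly with CT-PD, plus noise.
rng = random.Random(0)
xs = [0.5 * i for i in range(181)]                       # 0 to 90 deg
ys = [700.0 - 1.0 * x + rng.gauss(0.0, 5.0) for x in xs]

bics = {m: bic(m, xs, ys) for m in MODELS}
ranked = sorted(bics, key=bics.get)
print("best:", ranked[0], "second best:", ranked[1])
print("dBIC best vs. second best:", round(bics[ranked[1]] - bics[ranked[0]], 2))
print("dBIC best vs. null:", round(bics["null"] - bics[ranked[0]], 2))
```

Because every non-null model in this sketch has the same number of free parameters, the ΔBIC between two of them reduces to a difference in goodness of fit; the parameter penalty matters only for the comparison against the null model.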

Figure 5.

Fitted models and the CT–PD curves obtained from the test trial RTs in Experiment 1.

Table 1.

Model fits to the search times as a function of CT–PD distances in Experiment 1.

| Search array location | Center | Center | Periphery | Periphery |
|---|---|---|---|---|
| Distractor distribution | Gaussian | Uniform | Gaussian | Uniform |
| Best model | Uni. w/decrease | Linear | Linear | Uni. w/decrease |
| Second-best model | Linear | Uni. w/decrease | Uni. w/decrease | Linear |
| ΔBIC: best vs. null | 10.42 | 17.86 | 27.21 | 30.79 |
| ΔBIC: best vs. second best | 0.41 | 0.92 | 4.58 | 3.69 |

Notes: ∆BIC > 2 indicates “positive” evidence, ∆BIC > 6 indicates “strong” evidence, and ∆BIC > 10 corresponds to “very strong” evidence (Kass & Raftery, 1995).

When the search array was in the center, the best fits for both the Gaussian and the uniform distractor distributions were considerably better (∆BIC > 10) than the null model. However, the best fits for both conditions were not better than the second best fits (∆BIC < 1). This finding suggests that, when the search array was in the center, participants were able to encode certain summary statistics (e.g., mean orientation) of the distractor distributions, but notably, they were not able to encode the shape of that distribution. The two best models, uniform with decrease and linear, fit the CT–PD curves obtained from the Gaussian or uniform distractor distribution condition equally well.

When the search array was presented in the periphery, the two best fits were considerably better than the null model, ∆BIC > 25. Not only that, the best fit for the Gaussian condition (linear model) was better than the second best fit (uniform with decrease model), ∆BIC = 4.58. This indicates that when the distractor distribution was Gaussian, the search times linearly decreased as CT–PD value increased. When the distractor distribution was Uniform, the best fit (uniform with decrease model) was better than the second best fit (linear model), ∆BIC = 3.69, meaning that the RTs stayed roughly constant for a certain range but then decreased outside of that range.

To test whether the observed best fit models and their ∆BIC values are due to chance, we performed an additional bootstrap analysis. The data from the two distractor distribution conditions were combined and bootstrapped 10,000 times, where for each bootstrap sample the distractor distribution label (Gaussian and uniform) of each trial was randomly shuffled. We fit our main four models to each of these bootstrap samples and calculated the ∆BIC between their best and the second best fits. From this ∆BIC joint distribution, we calculated the probability of obtaining different best fits for the two distractor distribution conditions where the ∆BIC between the best and the second best fit was larger than the corresponding ∆BIC values observed in our results. This probability was 0.123 for the central condition and 0.003 for the peripheral condition. The probability of obtaining the observed results from the central condition was higher, reflecting the small ∆BIC values obtained in that condition (0.41 and 0.92; see left column in Table 1). The low probability we obtained for the peripheral condition indicates that the results from the model comparisons for the two distractor distribution conditions are unlikely to be due to chance and that participants were able to encode details about the distractor distribution (such as its shape) when the search array appeared in the periphery.
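The logic of this analysis can be sketched as a label-shuffling procedure. The sketch below is an interpretation rather than the authors' code: it only permutes the distribution labels (whether trials were additionally resampled with replacement is not stated here), it treats the criterion as "different best models in the two conditions and both ∆BIC values exceeding the observed ones", and `fit_best` is a hypothetical callable that returns the best model name and its ∆BIC over the second-best model.

```python
import random

def shuffled_label_probability(trials, fit_best, observed_dbic, n_iter=10000, seed=0):
    """Proportion of label shuffles that reproduce the observed pattern.

    trials        : list of (label, ctpd, rt) with label in {"gaussian", "uniform"}
    fit_best      : hypothetical callable; takes a list of (ctpd, rt) pairs and
                    returns (best_model_name, dbic_best_vs_second_best)
    observed_dbic : dict mapping each label to the observed delta-BIC
    """
    rng = random.Random(seed)
    labels = [label for label, _, _ in trials]
    hits = 0
    for _ in range(n_iter):
        shuffled = labels[:]
        rng.shuffle(shuffled)                      # break the label-data link
        groups = {"gaussian": [], "uniform": []}
        for label, (_, ctpd, rt) in zip(shuffled, trials):
            groups[label].append((ctpd, rt))
        best_g, dbic_g = fit_best(groups["gaussian"])
        best_u, dbic_u = fit_best(groups["uniform"])
        if (best_g != best_u
                and dbic_g > observed_dbic["gaussian"]
                and dbic_u > observed_dbic["uniform"]):
            hits += 1
    return hits / n_iter
```

A small returned value means that shuffled labels rarely produce distinct best-fitting models with ∆BIC values as large as those observed, which is the sense in which the peripheral result is unlikely to be due to chance.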

Whether the distractor distribution was encoded beyond its summary statistics was assessed by the demonstration of a statistically meaningful shape correspondence between the CT–PD curves and the physical distractor distribution. However, owing to noise in the visual system, a representation of a distribution would be expected to involve certain approximations. For example, a comparison of observers’ priors and natural statistics reveals important similarities, but not an exact match, between the two (Girshick, Landy & Simoncelli, 2011). This finding can explain why the best fit for the Gaussian distractor distribution was a linear model rather than a half-Gaussian model for the periphery condition. Both the linear and the half-Gaussian model involve a strict decrease in RT as CT–PD increases, which was the expected shape of a CT–PD curve obtained after a Gaussian distractor distribution. However, this strict decrease is not expected after a uniform distractor distribution. Accordingly, the best model for the uniform distractors in the periphery condition was the “uniform with decrease” model, which does not involve a strict decrease in RT as CT–PD increases.

Item heterogeneity (e.g., from variance) is known to influence judgments of visual ensembles (e.g., Marchant, Simons & de Fockert, 2013). In our experiment, there was a slight variance difference between the Gaussian and the uniform distribution as their ranges were equal. Notably, however, in a previous study using the same implicit methodology we used here, Chetverikov et al. (2016) showed that the shape correspondence between CT–PD curves and distractor distributions cannot be explained by this variance difference.
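The size of this variance difference can be estimated from the nominal learning-streak distributions (uniform with a 60° range; Gaussian with SD = 15° truncated at ±2 SD). The sketch below uses the standard closed-form expressions for the two theoretical distributions and ignores the two distractors pinned to the extremes of the range, so the values are approximations rather than figures reported by the authors.

```python
import math

def uniform_sd(value_range):
    """SD of a continuous uniform distribution with the given range."""
    return value_range / math.sqrt(12)

def truncated_gaussian_sd(sigma, cut_in_sd):
    """SD of a zero-mean Gaussian truncated symmetrically at +/- cut_in_sd * sigma."""
    a = cut_in_sd
    pdf_at_cut = math.exp(-a * a / 2) / math.sqrt(2 * math.pi)
    mass_inside = math.erf(a / math.sqrt(2))          # P(|Z| <= a)
    return sigma * math.sqrt(1 - 2 * a * pdf_at_cut / mass_inside)

print(round(uniform_sd(60.0), 2))                  # uniform, range = 60 deg
print(round(truncated_gaussian_sd(15.0, 2.0), 2))  # Gaussian, SD = 15 deg, cut at 2 SD
```

Under these assumptions, the uniform distribution has an SD of about 17.3°, whereas the truncated Gaussian has an SD of about 13.2°.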

Experiment 2

In Experiment 2, we changed the spacing between the oriented lines, as well as their lengths. For the peripheral condition, we increased both the spacing between the lines and their length. Because the effects of crowding increase with increasing eccentricity, these changes partly compensated for crowding in the periphery, as well as for the cortical magnification factor. In addition, we decreased both the spacing between the lines and their length in the central condition, which ensured that all the lines of the search array in the central condition fell within the foveal region. Together, these changes made the two search array location conditions more comparable in terms of general processing capacity.

Participants

Fourteen participants, seven males, M age = 29.93, took part in the study. Ten had previously participated in Experiment 1. All had normal or corrected-to-normal visual acuity. All participants signed an informed consent form before participating. Four were paid for their participation, whereas the other 10, who were staff or students at the University of Iceland, participated voluntarily. The experiment was run in accordance with the Declaration of Helsinki and the requirements of the ethics committee of the University of Iceland.

Stimuli and procedure

The search display was the same as in Experiment 1, except that the size of the search field and of the oriented lines were scaled differently for the peripheral and central conditions. In the central condition, the 36 oriented lines of the search array were arranged in a grid extending only to 4° × 4° and centered on the screen. The length of each line was 0.5°, and their position was jittered randomly by adding a value between ±0.1° to their horizontal and vertical coordinates. For the peripheral condition, the search array extended 13° × 13° and was placed 11° to the right or left of the fixation point. In this way, the distance between the fixation point and the oriented line that was closest to the fixation point (4.5°) was the same in the peripheral conditions of Experiments 1 and 2. The length of each line in the periphery condition was increased to 1.4°, and their position was jittered randomly between ±0.3°. A red dot with a radius of five pixels was again used as a fixation point.
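The geometry stated above can be checked with a short calculation. The nominal cell size assumes that the 6 × 6 grid tiles the full stated extent of the array, which is an assumption rather than a reported value; the function names are illustrative.

```python
def nearest_edge_eccentricity(center_deg, width_deg):
    """Distance from fixation to the inner edge of a peripheral array."""
    return center_deg - width_deg / 2

def nominal_cell_size(width_deg, cells_per_side=6):
    """Grid cell size, assuming the grid tiles the full array extent."""
    return width_deg / cells_per_side

# Peripheral arrays: Experiment 1 (9 deg wide, centered at 9 deg)
# and Experiment 2 (13 deg wide, centered at 11 deg)
print(nearest_edge_eccentricity(9.0, 9.0), nearest_edge_eccentricity(11.0, 13.0))

# Nominal spacing: Exp. 1 (both locations), Exp. 2 central, Exp. 2 peripheral
print(nominal_cell_size(9.0), round(nominal_cell_size(4.0), 2), round(nominal_cell_size(13.0), 2))
```

Both peripheral arrays begin 4.5° from fixation, while the nominal spacing shrinks in the central condition and grows in the peripheral condition relative to Experiment 1.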

The equipment, procedure, and design of the experiment were exactly the same as in Experiment 1. A five-point eye-tracking calibration was again performed before each experimental block. The average calibration error was 0.39°.

Results and discussion

In the central condition, trials in which participants made an eye movement outside of the search array grid were excluded, whereas in the peripheral condition, trials in which participants made an eye movement into the search array were excluded from the analysis (overall 10% of all trials). Trials with RTs longer than 3 s or shorter than 150 ms, and trials on which participants made an error, were also excluded from the RT analyses (11% of the remaining trials). RTs were log transformed for all analyses. Whether the search array was presented on the right or left side of the screen had no effect on observers’ performance in the peripheral condition, so the data from the left and right hemifield conditions were combined (for more details see Appendix B).
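The RT exclusion criteria and log transform can be sketched as follows. This is an illustration only: the trial format (a dictionary with `rt` in seconds and a `correct` flag) and the function name `clean_rts` are hypothetical, the natural logarithm is an assumption because the base is not stated, and the eye-movement exclusions are not modeled.

```python
import math

def clean_rts(trials, min_rt=0.150, max_rt=3.0):
    """Keep correct trials with min_rt <= RT <= max_rt (seconds); return log RTs.

    The natural log is an assumption; the text does not state the base.
    """
    kept = [t for t in trials if t["correct"] and min_rt <= t["rt"] <= max_rt]
    return [math.log(t["rt"]) for t in kept]

# Hypothetical trials for illustration.
trials = [
    {"rt": 0.620, "correct": True},   # kept
    {"rt": 0.120, "correct": True},   # excluded: faster than 150 ms
    {"rt": 3.400, "correct": True},   # excluded: slower than 3 s
    {"rt": 0.700, "correct": False},  # excluded: error trial
]
log_rts = clean_rts(trials)
```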

Visual search performance

Figure 6 shows the RT and search accuracy for each of the conditions. A two-way repeated measures ANOVA yielded a significant effect of distractor distribution type on RT, F(2, 26) = 10.5, p < 0.001. Similar to the results of Experiment 1, participants were fastest when the distractor distribution was Gaussian with SD = 10°, M = 613 ms, SD = 50 ms, followed by the Gaussian with SD = 15°, M = 619 ms, SD = 59 ms, and they were slowest when the distractor distribution was uniform, M = 644 ms, SD = 77 ms. Similar to what we observed in Experiment 1, there was no significant effect of search array location on RT, F(1, 13) = 3.23, p > 0.05; however, there was a significant interaction between search location and distribution type, F(2, 26) = 22.25, p < 0.001.

Figure 6.

Visual search performance in Experiment 2. Error bars represent 95% confidence intervals. Gaussian with SD = 10° (denoted by black) corresponds to the results obtained from the test trials, and the other two distractor distribution types (Gaussian with SD = 15° and uniform, which are denoted by red and blue respectively) correspond to the results obtained from learning trials.

A repeated measures ANOVA on accuracy yielded a significant effect of distribution type, F(2, 26) = 22.74, p < 0.001, as well as a significant effect of search location, F(1, 13) = 6.86, p < 0.05. Participants were most accurate when the distractor distribution was Gaussian with SD = 10°, M = 0.94, SD = 0.03, followed by Gaussian with SD = 15°, M = 0.90, SD = 0.03, and were least accurate with uniformly distributed distractors, M = 0.89, SD = 0.03. Overall, participants were more accurate in the central condition, M = 0.91, SD = 0.04, than in the peripheral condition, M = 0.89, SD = 0.04. There was no significant interaction between the two factors, F(2, 26) = 2.13, p > 0.05.

As in Experiment 1, participants in Experiment 2 were overall more accurate and faster when the distractor distribution had a lower variance. The effects of distribution type on search times were, however, not observed in the peripheral condition. The overall average accuracy was similar between the two experiments. The average RT in Experiment 2 was 43 ms lower than the one obtained in Experiment 1. This difference was likely due to those observers in Experiment 2 who previously participated in Experiment 1 responding slightly faster because they performed more sessions.

Encoding of distractor distributions

Figure 7 shows the CT–PD curves obtained from Experiment 2. The curves were calculated in the same way as in Experiment 1. In the central condition, when the distractor distribution was Gaussian, the search times decreased monotonically as CT–PD increased. However, when the distractor distribution was uniform, search times stayed roughly constant but then steadily decreased when the CT–PD exceeded the range of the uniform distribution. These observations were confirmed by the model comparisons done by fitting possible distribution models to RTs from the test trials (Table 2 and Figure 8). The best fit for the Gaussian distractor distribution was the linear model, whereas the best fit for the uniform distractor distribution was the uniform-with-decrease model. Both of these best fits were better than the second-best models, ∆BIC > 2, and better than the null model, ∆BIC > 8.
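To make the logic of a ∆BIC comparison concrete, the sketch below fits a null (intercept-only) model and a linear model to toy data by ordinary least squares and compares their BIC values. It is not the authors' fitting procedure: the least-squares form of BIC, n·ln(RSS/n) + k·ln(n), is an assumption, the data are invented, and all function names are hypothetical. The uniform-with-decrease model is omitted because its definition is not given in this section.

```python
import math

def bic_least_squares(y, y_hat, n_params):
    """BIC for a least-squares fit with Gaussian errors: n*ln(RSS/n) + k*ln(n)."""
    n = len(y)
    rss = sum((a - b) ** 2 for a, b in zip(y, y_hat))
    return n * math.log(rss / n) + n_params * math.log(n)

def fit_null(x, y):
    """Intercept-only model: predict the mean of y everywhere."""
    mean_y = sum(y) / len(y)
    return [mean_y] * len(y)

def fit_linear(x, y):
    """Ordinary least-squares line; returns fitted values."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = my - slope * mx
    return [intercept + slope * xi for xi in x]

# Invented toy data: CT-PD distance (deg) and log RT, decreasing with distance.
x = [0, 10, 20, 30, 40, 50, 60, 70, 80, 90]
y = [6.60, 6.57, 6.55, 6.50, 6.49, 6.45, 6.44, 6.40, 6.38, 6.35]

bic_null = bic_least_squares(y, fit_null(x, y), n_params=1)
bic_linear = bic_least_squares(y, fit_linear(x, y), n_params=2)

# Positive values favor the linear model over the null model.
delta_bic = bic_null - bic_linear
```

A lower BIC indicates a better trade-off between fit and complexity, so the difference is taken as the BIC of the worse model minus the BIC of the better one.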

Figure 7.

Role reversal effects in Experiment 2. Search times on test trials are plotted as a function of the distance (in orientation space) between the current target orientation in the test trials and the mean distractor orientation of the preceding learning streak (CT–PD), separately for each search array location. Shaded area indicates 95% confidence intervals of the local (loess) regression fit.
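Because orientation is circular with a period of 180°, the CT–PD distance has to be computed with wrap-around. The following sketch shows one way to do this; the function name `ct_pd` is hypothetical, and returning the unsigned distance is an assumption about how the distance is summarized.

```python
def ct_pd(target_deg, prev_distractor_mean_deg):
    """Unsigned distance in orientation space (period 180 deg), in [0, 90]."""
    # Wrap the raw difference into [-90, 90) before taking the absolute value.
    diff = (target_deg - prev_distractor_mean_deg + 90.0) % 180.0 - 90.0
    return abs(diff)

same = ct_pd(45.0, 45.0)      # target at the previous distractor mean
wrapped = ct_pd(10.0, 170.0)  # 10 deg and 170 deg are 20 deg apart in orientation space
far = ct_pd(0.0, 90.0)        # maximal possible distance
```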

Table 2.

Model fits to the search times as a function of CT–PD distances in Experiment 2.

| | Center | | Periphery | |
|---|---|---|---|---|
| Distractor distribution | Gaussian | Uniform | Gaussian | Uniform |
| Best model | Linear | Uni. w/decrease | Linear | Linear |
| Second-best model | Uni. w/decrease | Linear | Uni. w/decrease | Uni. w/decrease |
| ΔBIC: best vs. null | 8.92 | 11.41 | 65.00 | 34.11 |
| ΔBIC: best vs. second best | 2.11 | 2.01 | 11.04 | 4.30 |

Notes: ∆BIC > 2 indicates “positive” evidence, while ∆BIC > 6 indicates “strong” evidence, whereas ∆BIC > 10 corresponds with “very strong” evidence (Kass & Raftery, 1995).
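As a reading aid, the thresholds in the note can be applied to the ∆BIC values in Table 2 with a small helper. The function name `bic_evidence` and the fallback label for values of 2 or less are our own and are not taken from the source.

```python
def bic_evidence(delta_bic):
    """Map a delta-BIC value to the evidence labels given in the table note."""
    if delta_bic > 10:
        return "very strong"
    if delta_bic > 6:
        return "strong"
    if delta_bic > 2:
        return "positive"
    return "weak or none"  # fallback label; not defined in the note

# Delta-BIC values copied from Table 2 (best vs. second-best model).
best_vs_second = {
    ("center", "gaussian"): 2.11,
    ("center", "uniform"): 2.01,
    ("periphery", "gaussian"): 11.04,
    ("periphery", "uniform"): 4.30,
}
labels = {cond: bic_evidence(value) for cond, value in best_vs_second.items()}
```

With these thresholds, both central-condition comparisons and the peripheral uniform comparison count as "positive" evidence, and the peripheral Gaussian comparison counts as "very strong" evidence.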

Figure 8.

Fitted models and the CT–PD curves obtained from the test trial RTs in Experiment 2.

For the peripheral condition, the CT–PD curves did not change depending on the preceding distractor distribution type. As shown on the right side of Figure 7, search times in the periphery condition linearly decreased as CT–PD distance was increased, regardless of the preceding distractor distribution type. This finding was also confirmed by the model fit comparisons (Table 2). The best fit for both distractor distribution types was the linear model, which had a considerably better fit than the second best models, ∆BIC > 4, and better than the null model, ∆BIC > 34.

To ensure that the different results obtained from the model comparisons were not due to chance, we again performed a bootstrap analysis as in Experiment 1. The data from the two distractor distribution conditions were combined, separately for the central and peripheral conditions, and resampled 10,000 times, where the distractor distribution label for each trial was randomly shuffled. We applied the model comparison analysis to these bootstrap samples, and calculated the probability of obtaining different best-fit models for the Gaussian and uniform condition, where ∆BIC values between the best and the second-best fits were larger than the ones observed in Experiment 2. The probabilities we obtained were 0.008 and 0.013, for the central and the peripheral condition, respectively. This finding indicates that the model comparisons between the Gaussian and the uniform conditions were not due to chance.
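The label-shuffling logic of this analysis can be sketched in a few lines. The sketch is deliberately simplified: in place of the model-comparison statistic used in the study, it uses an absolute difference in mean log RT between the two label groups, and the data, function names, and number of resamples are hypothetical.

```python
import random

def shuffle_label_probability(values, labels, statistic, observed,
                              n_resamples=10_000, seed=0):
    """Proportion of label shuffles whose statistic is at least the observed one."""
    rng = random.Random(seed)
    shuffled = list(labels)
    count = 0
    for _ in range(n_resamples):
        rng.shuffle(shuffled)  # break the link between trials and condition labels
        if statistic(values, shuffled) >= observed:
            count += 1
    return count / n_resamples

def mean_difference(values, labels):
    """Stand-in statistic: absolute difference between group means."""
    g = [v for v, lab in zip(values, labels) if lab == "gaussian"]
    u = [v for v, lab in zip(values, labels) if lab == "uniform"]
    return abs(sum(g) / len(g) - sum(u) / len(u))

# Invented log RTs for illustration.
values = [6.40, 6.42, 6.41, 6.43, 6.55, 6.57, 6.56, 6.58]
labels = ["gaussian"] * 4 + ["uniform"] * 4

observed = mean_difference(values, labels)
p = shuffle_label_probability(values, labels, mean_difference, observed,
                              n_resamples=2000)
```

A small proportion indicates that a difference as large as the observed one rarely arises when the condition labels carry no information, which is the same reasoning applied to the model-comparison outcome in the text.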

Contrary to what was observed in Experiment 1, participants in Experiment 2 were better at encoding the shape of the distractor distribution when the search array was presented in the center than when it was presented in the periphery. However, role reversals with a CT–PD distance of around 0° had a greater influence on RTs in the periphery regardless of the preceding distractor distribution. Response times decreased sharply and monotonically as CT–PD increased, as seen from the steep negative slopes obtained for the CT–PD curves of the peripheral condition. For example, the estimated slope of the best-fit model for the Gaussian distractor distribution in the peripheral condition, B = –0.03, SE = 0.0003, z = –8.67, p < .001, was highly negative, whereas that level of steepness was not observed for the best-fit model for the Gaussian distractor distribution in the central condition, B = –0.01, SE = 0.0003, z = –3.97, p < .001 (see Footnote 3). This finding indicates that, even though participants did not encode the shape of the preceding distractor distribution in the peripheral condition, the mean of the distractor distribution was specifically encoded.

General discussion

In two experiments, we investigated similarities and differences in visual search performance and ensemble encoding of distractor sets between the central and peripheral visual fields. A crucial part of our experimental design involved investigating the effects of distractor distributions with the same mean and range, but with different shapes (Gaussian or uniform). First, in both experiments, we found that the shape of the distractor distribution strongly affected visual search times when the search was performed in the foveal region, but we did not observe its effect in the periphery. In the first experiment, more details of the shape of the distractor distribution were found to be encoded in the peripheral condition than the central condition. In the second experiment, we scaled down the search array for the central condition, but scaled it up for the peripheral condition. This time, encoding of the shape of the distractor distributions was found to be better in the central than the peripheral condition. Overall, we conclude that in addition to summary statistics, detailed information (e.g., shape) about distractor distributions can be encoded with peripheral vision, depending on the scale (or the interitem distance) of the search array.

In what follows, we speculate what these findings are likely to reflect.

Search times in central versus peripheral vision

The influence of distractor distribution type on search times in the central condition of our experiments is in line with previous studies. Distractor heterogeneity is known to have negative effects on search performance (Duncan & Humphreys, 1989; Rosenholtz, 2001). In the central condition, the lower the variance of the distractor distribution, the faster and more accurate participants were. Search times in the center differed, even though only the shape of the distractor distribution was changed while its summary statistics were kept similar. However, this finding was not observed when the search array was presented in the periphery. To our knowledge, this differential effect of distractor distribution on search times in the center and periphery has not been observed before in the literature.

Eccentricity effects on search performance have been well-established (Carrasco, Evert, Chang & Katz, 1995; Carrasco & Yeshurun, 1998). Even though crowding increases with increasing eccentricity, these eccentricity effects were found to be independent of crowding (Carrasco & Frieder, 1997; Madison, Lleras & Buetti, 2018). However, other researchers have also found effects of peripheral crowding on visual search performance (Vlaskamp, Over & Hooge, 2005). In our experiments, regardless of the influence of eccentricity and crowding, the overall search times in the central and peripheral conditions did not differ. This result was not surprising since the influences of eccentricity and crowding are not as strong in pop-out search as in other types of visual search tasks (Wertheim, Hooge, Krikke & Johnson, 2006; Gheri, Morgan & Solomon, 2007). In contrast with previous studies, however, we found that certain characteristics of the distractor distribution had no influence on the search times in the periphery, even though this influence was observed in foveal search. These characteristics include the variance (or range) and the shape of the distractor distribution. This result was observed in both experiments, in other words, even when the spacing between the search items, as well as their size, were manipulated to account for the cortical magnification factor. Moreover, this difference between central and peripheral regions was observed even though the overall search times and accuracy did not differ between the two regions.

We propose that this interaction between distractor distribution type and search array location is consistent with the summary statistical account of peripheral visual search (Rosenholtz et al., 2012). According to this view, what determines search times in the periphery is the difference in summary statistics (see Footnote 4) of a “feature pooling” region that contains the target feature and a region that does not (i.e., only distractor features). The size of these feature-pooling regions increases with eccentricity. This view can account for the search times observed in our experiments. The summary statistics of the different distractor distributions used in our study were very similar to each other. The mean of the distractor distribution was equally distant (in orientation space) from the target on all trials of the experiment. Gaussian SD = 15° and uniform distractor distributions had the same range, which made their variance very similar. The only slightly different distribution (with respect to its summary statistics) was the Gaussian SD = 10° with its lower variance. That peripheral search times with the Gaussian SD = 10° were not lower than in the other two distribution type conditions could be due to role reversal effects, because the Gaussian SD = 10° distribution was only used in the test trials. However, it should be noted that the search times observed with Gaussian SD = 10° in the central condition were lower than in the other two distribution conditions, even in Experiment 1, where the overall amount of slowing owing to role reversals was fairly similar in the center and periphery.

In contrast with the peripheral search, response times in the central condition differed for the different types of distractor distributions, even though the differences between their summary statistics were small. This contrast between foveal and peripheral search times provides evidence for the view that summary statistical representations in peripheral vision account for visual search times.

Alternatively, the lack of differences in RT in the periphery can be explained by higher internal noise. We have consistently found in previous studies that the variance of the internal representation of distractors (corresponding with the “width” of the CT–PD curve) is larger than the variance in the stimuli (e.g., Hansmann-Roth, Kristjánsson, Whitney & Chetverikov, 2020). This finding is to be expected because of the internal noise added during processing in the visual system. Given that the internal noise is likely to be higher in the periphery than in the center, it can mask the differences in the distribution shape (note, however, that this explanation does not agree with the role reversal results; see further discussion elsewhere in this article).

Ensemble coding with central versus peripheral vision

Notably, our results go beyond the summary statistical account of peripheral vision. We used the methods introduced by Chetverikov et al. (2016, 2017) to assess feature distribution learning in the periphery and in the center. Chetverikov et al. have shown that observers can extract more detailed information about feature distributions within visual ensembles than previous studies on ensemble perception have indicated. In Experiment 1, when the search array was presented in the periphery, search times on test trials with respect to CT–PD distance revealed that participants were able to encode more than just the summary statistics of the distractor distribution. The shape of the CT–PD curves observed on the test trials of Experiment 1 depended on the shape of the distractor distribution used in the preceding learning trials. However, the effect of the shape of the distractor distribution was not observed in the periphery when the spacing of the search items was increased in Experiment 2. This observation is in line with other studies demonstrating that crowding facilitates ensemble coding (Parkes et al., 2001; Fischer & Whitney, 2011). Because the peripheral search array was more crowded (low interitem spacing) in Experiment 1 than in Experiment 2, this increased crowding might have facilitated a more efficient process, perhaps akin to texture segmentation (Julesz, 1981), which, in turn, can explain why the shape of the distractor distribution was encoded in more detail for the peripheral condition in Experiment 1 but not in Experiment 2.

However, in Experiment 2, when the target orientation on test trials was set to the mean orientation of the distractor distribution on the preceding learning trials (i.e., CT–PD = 0), the effect of role reversal was stronger in the periphery than in the center (Figure 7). Not only that, RTs decreased linearly with a relatively high negative slope as soon as CT–PD increased. This suggests that the visual system relied heavily on the mean orientation of the distractor distribution for finding the target in the periphery condition of Experiment 2, rather than encoding other details of the distractor distribution.

What factors could have facilitated the encoding of information about the shape of the distribution as opposed to its summary statistics? Apart from the effect of crowding mentioned above, another reason could be the increase in interitem spacing in Experiment 2, which made a significant part of the search array appear at even higher eccentricity. This could have made the encoding of the distractor distributions more difficult. In fact, participants made more mistakes in the periphery condition than the central condition in Experiment 2. This could force peripheral vision to rely on the summary statistics of the distractor distribution, rather than encoding the distribution itself. However, further empirical evidence is needed to confirm such an explanation.

Overall, we suggest that the difference in results between the two experiments reflects performance differences of the ensemble-encoding mechanism in peripheral vision owing to the changes introduced to the visual stimuli, rather than two different encoding mechanisms (e.g., summary statistics vs. distribution). In other words, we argue that the peripheral visual system constructed a representation of the distractor distribution in both experiments; however, this representation was less precise in Experiment 2, where more weight was given to the mean of the distribution than to its other features in building the representation. Such an explanation is consistent with a distribution-based framework of visual attention (see Chetverikov et al. [2017c] for a review).

The display used in the central condition in Experiment 1 was very similar to the ones used in previous studies, which demonstrated that participants encode the shape of the distractor distribution (Chetverikov et al., 2016, 2017a). However, in this study we did not observe distribution shape learning in Experiment 1. The CT–PD curves obtained in this study were noisier than those obtained in previous studies. One of the main differences between our study and those previous ones was that we asked observers to maintain fixation while they performed the search, whereas in the previous studies participants were free to move their eyes. Even though the size of the search array in Experiment 1 was smaller than the ones used previously by Chetverikov et al. (2016, 2017a), when participants fixated at the center of the array, the lines at the outer part of the array still fell outside of the foveal region. Some participants in our study reported that, in the central condition when they saw a candidate line that they thought was the target, they sometimes struggled with inhibiting their eye movements toward that candidate line. It is possible that this inhibition of eye movements influenced the encoding of the shape of the distractor distribution. Our results from Experiment 2 support this interpretation because, when the search array was scaled down so that almost all the oriented lines fell within 2° around the fixation point, more details about the shape of the distractor distribution were encoded. However, further studies are needed to understand the influence of eye movements on the learning of distractor distribution shape.

The main difference between the two experiments was the scaling of the search array, and the difference in results might also be explained by the degree of mismatch between the size of the spatial filters in the periphery (or fovea) and the spatial resolution of the search array. Such a mismatch can influence performance in texture segmentation tasks. The task and the stimuli used in our study have parallels with texture segmentation tasks. However, the effect of spatial resolution on texture segmentation has been observed in explicit performance measures such as accuracy (Yeshurun & Carrasco, 1998). In our study, the pattern of results obtained for such performance measures did not essentially differ between the two experiments. Therefore, if our method had relied only on such explicit measures, it could not have revealed any differences in how scaling of the search array influences encoding of the distractor feature distributions. This highlights the need for implicit experimental methods similar to the one used in this study. For example, future studies can use our implicit method to gain novel insights into texture processing mechanisms while avoiding the limitations of explicit experimental measures.

Conclusions

Our results showed that the shape and variance of the distractor distribution in a pop-out search for an oddly oriented line did not influence search performance when the search was performed with peripheral vision, whereas they had a significant effect when the search was performed in the foveal region. This result is in line with the view that visual information in the periphery is represented in terms of the summary statistics of the scene. However, our results also showed that, beyond summary statistics, more details about the distractor distribution can be encoded with peripheral vision depending on the spacing between the search items (or the overall size of the search array). When the interitem spacing was increased (in Experiment 2), participants tended to encode the mean of the distractor distribution rather than details about its shape.

Our study also suggests that visual search studies of ensemble summary statistics should be interpreted with caution. The visual search times were not sensitive to the different distractor distributions when the summary statistics of those distributions were roughly the same. This finding could suggest that peripheral vision relies mostly on summary statistics. However, our implicit method revealed that, under certain conditions, participants’ peripheral vision encoded more detailed information about the distractor distribution than only the summary statistics. These results call for the increased use of implicit experimental methods for a deeper understanding of what information is encoded from visual ensembles.

Acknowledgments

The authors thank Sabrina Hansmann-Roth for valuable feedback on the data analysis and the interpretation of the data. ODT and AK were supported by grant IRF #173947-052 from the Icelandic Research Fund, and by a grant from the Research Fund of the University of Iceland. AC is supported by Radboud Excellence Fellowship.

Commercial relationships: none.

Corresponding author: Ömer Dağlar Tanrıkulu.

Email: daglar83@gmail.com.

Address: Faculty of Psychology, School of Health Sciences, University of Iceland, Reykjavik.

Appendix A

Search performance in Experiment 1 did not significantly differ between the left and the right hemifields, in terms of either RT, F(1, 13) = 2.66, p > .05, or accuracy, F(1, 13) = 0.28, p > .05. Also, there was no significant interaction between distribution type and hemifield for either RT or accuracy, F(2, 26) = 2.56, p > .05; F(2, 26) = 0.09, p > .05, respectively.

Regarding distribution learning, although there were slight differences between the hemifields, there were no consistent effects of the hemifield that would allow us to draw any conclusions without further experimentation. The model comparisons done on the CT–PD curves obtained from the right hemifield yielded similar results to the ones shown in the periphery column of Table 1. For Gaussian distractors, the best model was “linear,” and for uniform distractors the best model was “uniform-with-decrease”; in both cases, the best model was considerably better than the second-best model, ∆BIC ≥ 2. In the left hemifield, for Gaussian distractors the best and the second-best models were “uniform” and “half-Gaussian,” respectively, although with very similar BIC values, ∆BIC = 1.08. For uniform distractors, the best and the second-best models were the same as in the right hemifield (“uniform-with-decrease” and “linear,” respectively), although with very similar BIC values, ∆BIC = 0.42. In other words, the distribution learning was clearer in the right than in the left hemifield. However, these differences in distribution learning should be interpreted with great caution. The experiment was not designed to examine the differences between the two hemifields, and more data points from each hemifield are needed to draw firm conclusions.

Appendix B

In Experiment 2, search RTs again did not differ between the two hemifields, F(1, 13) = 0.15, p > .05; however, observers were overall slightly more accurate in the left, M = 0.92, SD = 0.04, than in the right hemifield, M = 0.88, SD = 0.04, F(1, 13) = 24.37, p < .05. There were no significant interactions between distribution type and hemifield for either RT, F(2, 26) = 2.56, p > .05, or accuracy, F(2, 26) = 0.76, p > .05.

In terms of distribution learning, the results in Experiment 2 were opposite to those of Experiment 1. In the right hemifield, there was no indication of distribution learning (as opposed to encoding only the mean of the distribution). For the Gaussian distractors, the best model was again “linear” and was considerably better than the second-best model, “uniform-with-decrease,” ∆BIC = 3.35. For the uniform distractors, the best and the second-best models were the “uniform-with-decrease” and “linear” models, respectively. However, the BICs of these two model fits were very similar, ∆BIC = 0.46. In the left hemifield, the best model for Gaussian distractors was “linear” and for uniform distractors it was “uniform.” For both distractor distributions, the best models were considerably better than the second-best models, ∆BIC > 4. We again note that the experiment was not designed to examine differences between the two hemifields, and more data points from each hemifield are needed to draw firm conclusions.

Footnotes

1. Throughout this article, the term “peripheral” visual field is used to refer to all the areas outside of the fovea, which includes parafoveal and peripheral regions (and might therefore also be referred to as extrafoveal).

2. We use “strictly decreasing” to refer to a function that is always decreasing and does not remain constant (i.e., cannot include a plateau). We use “monotonic decrease” to refer to a function that is always decreasing or remaining constant (i.e., can include a plateau).

3. Note that analyses were done on log-transformed reaction times.

4. Note that the “statistics” in the account of Rosenholtz et al. (2012) differ from typical “summary statistics” (e.g., mean and variance) in that they represent an output of a hierarchy of filters tuned to different luminance, orientation, and spatial frequency, and thus, in theory, should represent an orientation probability distribution as well (see also Rosenholtz, 2016). However, the precision of that representation is not clear.

References

- Alvarez G. A. (2011). Representing multiple objects as an ensemble enhances visual cognition. Trends in Cognitive Sciences, 15(3), 122–131, doi:10.1016/j.tics.2011.01.003.

- Anstis S. M. (1974). Letter: A chart demonstrating variations in acuity with retinal position. Vision Research, 14, 589–592.

- Balas B. J., Nakano L., & Rosenholtz R. (2009). A summary-statistic representation in peripheral vision explains visual crowding. Journal of Vision, 9(12):13, 1–18, doi:10.1167/9.12.13.

- Becker S. I. (2010). The role of target-distractor relationships in guiding attention and the eyes in visual search. Journal of Experimental Psychology: General, 139(2), 247–265, doi:10.1037/a0018808.

- Bouma H. (1970). Interaction effects in parafoveal letter recognition. Nature, 226, 177–178.

- Brainard D. (1997). The Psychophysics Toolbox. Spatial Vision, 10(4), 433–436.

- Bulakowski P. F., Post R. B., & Whitney D. (2011). Dissociating crowding from ensemble percepts. Attention, Perception, & Psychophysics, 73(4), 1003–1009.

- Carrasco M., Evert D. L., Chang I., & Katz S. M. (1995). The eccentricity effect: Target eccentricity affects performance on conjunction searches. Perception & Psychophysics, 57, 1241–1261.

- Carrasco M., & Frieder K. S. (1997). Cortical magnification neutralizes the eccentricity effect in visual search. Vision Research, 37(1), 63–82.

- Carrasco M., & Yeshurun Y. (1998). The contribution of covert attention to the set-size and eccentricity effects in visual search. Journal of Experimental Psychology: Human Perception and Performance, 24, 673–692.

- Cavanagh P. (2001). Seeing the forest but not the trees. Nature Neuroscience, 4, 673–674.

- Chetverikov A., Campana G., & Kristjánsson Á. (2016). Building ensemble representations: How the shape of preceding distractor distributions affects visual search. Cognition, 153, 196–210.

- Chetverikov A., Campana G., & Kristjánsson Á. (2017a). Rapid learning of visual ensembles. Journal of Vision, 17(2):21, 1–15, doi:10.1167/17.2.21.

- Chetverikov A., Campana G., & Kristjánsson Á. (2017b). Representing color ensembles. Psychological Science, 28(10), 1510–1517, doi:10.1177/0956797617713787.

- Chetverikov A., Campana G., & Kristjánsson Á. (2017c). Learning features in a complex and changing environment: A distribution-based framework for visual attention and vision in general. In: Progress in Brain Research (pp. 97–120). New York: Elsevier, doi:10.1016/bs.pbr.2017.07.001.

- Chetverikov A., Hansmann-Roth S., Tanrikulu O. D., & Kristjánsson Á. (2019). Feature Distribution Learning (FDL): A new method to study visual ensembles with priming of attention shifts. In: Neuromethods. New York: Springer.

- Chetverikov A., Campana G., & Kristjánsson Á. (2020). Probabilistic rejection templates in visual working memory. Cognition, 196, 104075.

- Cho J., & Chong S. C. (2019). Search termination when the target is absent: The prevalence of coarse processing and its intertrial influence. Journal of Experimental Psychology: Human Perception and Performance, 45(11), 1455–1469.

- Cornelissen F. W., Peters E., & Palmer J. (2002). The Eyelink Toolbox: Eye tracking with MATLAB and the Psychophysics Toolbox. Behavior Research Methods, Instruments & Computers, 34, 613–617.

- Curcio C. A., & Allen K. A. (1990). Topography of ganglion cells in human retina. Journal of Comparative Neurology, 300, 5–25, doi:10.1002/cne.903000103.

- Daniel P. M., & Whitteridge W. (1961). The representation of the visual field on the cerebral cortex in monkeys. Journal of Physiology, 159, 203–221.

- Drasdo N. (1977). The neural representation of visual space. Nature (London), 266, 554–556.

- Duncan J., & Humphreys G. W. (1989). Visual search and stimulus similarity. Psychological Review, 96, 433–458.

- Ehinger K. A., & Rosenholtz R. (2016). A general account of peripheral encoding also predicts scene perception performance. Journal of Vision, 16(2), 13, 1–19.

- Fischer J., & Whitney D. (2011). Object-level visual information gets through the bottleneck of crowding. Journal of Neurophysiology, 106(3), 1389–1398, doi: 10.1152/jn.00904.2010.

- Gheri C., Morgan M. J., & Solomon J. A. (2007). The relationship between search efficiency and crowding. Perception, 36, 1779–1787.

- Girshick A. R., Landy M. S., & Simoncelli E. P. (2011). Cardinal rules: Visual orientation perception reflects knowledge of environmental statistics. Nature Neuroscience, 14(7), 926–932, doi: 10.1038/nn.2831.

- Haberman J., & Whitney D. (2011). Ensemble perception: Summarizing the scene and broadening the limits of visual processing. In Wolfe J., & Robertson L., editors, From Perception to Consciousness: Searching with Anne Treisman. New York: Oxford University Press.

- Hansmann-Roth S., Kristjánsson Á., Whitney D., & Chetverikov A. (2020). Limits of perception and richness of behavior: Dissociating implicit and explicit ensemble representations. PsyArXiv, doi: 10.31234/osf.io/3y4pz.

- Hubel D. H., & Wiesel T. N. (1974). Uniformity of monkey striate cortex: A parallel relationship between field size, scatter, and magnification factor. Journal of Comparative Neurology, 158, 295–306.

- Ji L., Chen W., & Fu X. (2014). Different roles of foveal and extrafoveal vision in ensemble representation for facial expressions. Engineering Psychology and Cognitive Ergonomics Lecture Notes in Computer Science, 8532, 164–173.

- Jóhannesson Ó. I., Tagu J., & Kristjánsson Á. (2018). Asymmetries of the visual system and their influence on visual performance and oculomotor dynamics. European Journal of Neuroscience, 48, 3426–3445, doi: 10.1111/ejn.14225.

- Julesz B. (1981). Textons, the elements of texture perception, and their interactions. Nature, 290(12), 91–97.

- Kass R. E., & Raftery A. E. (1995). Bayes factors. Journal of the American Statistical Association, 90(430), 773–795, doi: 10.1080/01621459.1995.10476572.

- Kleiner M., Brainard D. H., & Pelli D. G. (2007). What's new in Psychtoolbox-3? Perception, 36 (ECVP Suppl.), 14.

- Kristjánsson Á., & Driver J. (2008). Priming in visual search: Separating the effects of target repetition, distractor repetition and role-reversal. Vision Research, 48(10), 1217–1232, doi: 10.1016/j.visres.2008.02.007.

- Larson A. M., & Loschky L. C. (2009). The contributions of central versus peripheral vision to scene gist recognition. Journal of Vision, 9(10), 6, 1–16.

- Levi D. M. (2008). Crowding: An essential bottleneck for object recognition: A mini-review. Vision Research, 48, 635–654.

- Levi D., Klein S., & Aitsabaomo A. P. (1985). Vernier acuity, crowding and cortical magnification factor. Vision Research, 25, 963–967.

- Livne T., & Sagi D. (2007). Configuration influence on crowding. Journal of Vision, 7(2), 4, 1–12.

- Madison A., Lleras A., & Buetti S. (2018). The role of crowding in parallel search: Peripheral pooling is not responsible for logarithmic efficiency in parallel search. Attention, Perception & Psychophysics, 80(2), 352–373.

- Marchant A. P., Simons D. J., & de Fockert J. W. (2013). Ensemble representations: Effects of set size and item heterogeneity on average size perception. Acta Psychologica, 142(2), 245–250, doi: 10.1016/j.actpsy.2012.11.002.

- Palmer J., Verghese P., & Pavel M. (2000). The psychophysics of visual search. Vision Research, 40, 1227–1268.

- Parkes L., Lund J., Angelucci A., Solomon J. A., & Morgan M. (2001). Compulsory averaging of crowded orientation signals in human vision. Nature Neuroscience, 4, 739–744.

- Pelli D. G., & Tillman K. A. (2008). The uncrowded window of object recognition. Nature Neuroscience, 11, 1129–1135.

- Rosenholtz R. (2001). Visual search for orientation among heterogeneous distractors: Experimental results and implications for signal-detection theory models of visual search. Journal of Experimental Psychology: Human Perception and Performance, 27, 985–999.

- Rosenholtz R. (2016). Capabilities and Limitations of Peripheral Vision. Annual Review of Vision Science, 2(1), 437–457.

- Rosenholtz R., Huang J., Raj A., Balas B. J., & Ilie L. (2012). A summary statistic representation in peripheral vision explains visual search. Journal of Vision, 12(4), 1–17.

- Stuart J., & Burian H. (1962). A study of separation difficulty: Its relationship to visual acuity in the normal amblyopic eye. American Journal of Ophthalmology, 53(471), 163–169.

- Utochkin I. S., & Yurevich M. A. (2016). Similarity and heterogeneity effects in visual search are mediated by “segmentability”. Journal of Experimental Psychology: Human Perception and Performance, 42(7), 995–1007.

- Virsu V., & Rovamo J. (1979). Visual resolution, contrast sensitivity, and the cortical magnification factor. Experimental Brain Research, 37, 475–494.

- Vlaskamp B. N. S., Over E. A. C., & Hooge I. T. C. (2005). Saccadic search performance: The effect of element spacing. Experimental Brain Research, 167, 246–259.

- Wertheim A. H., Hooge I. T. C., Krikke K., & Johnson A. (2006). How important is lateral masking in visual search. Experimental Brain Research, 170, 387–401.

- Whitney D., Haberman J., & Sweeny T. (2014). From textures to crowds: Multiple levels of summary statistical perception. In Werner J. S., & Chalupa L. M., editors, The New Visual Neuroscience (pp. 695–710). Cambridge, MA: MIT Press.

- Whitney D., & Leib A. Y. (2018). Ensemble perception. Annual Review of Psychology, 69, 12.1–12.25.

- Wilkinson F., Wilson H. R., & Ellemberg D. (1997). Lateral interactions in peripherally viewed texture arrays. Journal of the Optical Society of America. A, Optics, Image Science, and Vision, 14, 2057–2068.

- Wolfe B. A., Kosovicheva A. A., Leib A. Y., Wood K., & Whitney D. (2015). Foveal input is not required for perception of crowd facial expression. Journal of Vision, 15, 11.

- Yeshurun Y., & Carrasco M. (1998). Attention improves or impairs visual performance by enhancing spatial resolution. Nature, 396(6706), 72.