Abstract

Background

The Carrot Rewards app was developed as part of a public-private partnership to reward Canadians with loyalty points for downloading the app, referring friends, completing educational health quizzes, and engaging in health-related behaviors, with the long-term objectives of increasing health knowledge and encouraging healthy behaviors. During the first 3 months after program rollout in British Columbia, several program design elements were adjusted, creating differences between groups of users that could be used to examine how program features affect user engagement.

Objective

This study examines the impact of reducing reward size over time and explores the influence of other program features, such as quiz timing, health intervention content, and type of reward program, on user engagement with a mobile health (mHealth) app.

Methods

Participants in this longitudinal, nonexperimental observational study included British Columbia residents who downloaded the app between March and July 2016. Random-effects panel logistic regression was used to examine the impact of changes to several program design features on quiz offer acceptance and engagement with this mHealth app.

Results

Our results, based on the longitudinal app use of 54,817 users (mean age 35, SD 13.2 years; 65.03% [35,647/54,817] female), indicated that the key drivers of the likelihood of continued user engagement, in order of greatest to least impact, were (1) the type of rewards earned by users (eg, change in the odds of response for movies [+255%; P<.001], air travel [+110%; P<.001], and grocery [+40%; P<.001] relative to gas), (2) the time delay between early offers (−64%; P<.001), (3) the content of the health intervention (eg, healthy eating [−10%; P<.001] vs exercise [+21%; P<.001] relative to health risk assessments), and (4) changes in the number of points offered. Our results demonstrate that reducing the number of points associated with a particular quiz by 10% led to only a 1% decrease in the odds of offer response (P<.001) and that each of the other design features had a larger impact on participant retention than did changes in the number of points.

Conclusions

The results of this study demonstrate that this program, built around the principles of behavioral economics and awarding a small number of reward points immediately after the completion of each health intervention, was able to drive significantly higher engagement levels than those reported in previous literature on mHealth apps and financial incentives. Previous studies have demonstrated that the presence of an incentive matters for user engagement; however, our results indicate that, for these reward point–based health interventions, the number of points offered is less important in driving continued engagement than other program design features, such as the type of reward points offered, the timing of intervention and reward offers, and the content of the health interventions.

Keywords: mHealth, behavioral economics, public health, incentives, mobile apps, mobile phone

Introduction

If modifiable chronic disease risk factors (eg, physical inactivity, unhealthy eating) were eliminated, 80% of both ischemic heart disease and type 2 diabetes and 40% of cancers could be prevented [1]; consequently, modest population-level improvements can make a big difference. For instance, a 1% reduction in the proportion of Canadians accumulating less than 5000 daily steps would yield Can $2.1 billion (US $1.615 billion) per year in health care system savings [2]. Such health behaviors, though, are notoriously difficult to stimulate and sustain, with persistent global overweight and obesity rates providing cases in point [1]. The World Health Organization [3] and others [4] suggest that broader socioecological solutions with interventions delivered at multiple levels (eg, individual, community, societal) are needed to address this issue. At the individual level, smartphones have revolutionized health promotion [5]. Their pervasiveness (eg, 1 billion global smartphone subscriptions expected by 2022) [6] and rapidly evolving functionalities (eg, built-in accelerometers, geolocating capabilities, machine learning techniques) have made it easier to deliver more timely and personalized health interventions on a mass scale.

Mobile Health Apps

The smartphone-based mobile health (mHealth) app market has grown steadily in recent years. In 2017, for example, there were 325,000 mHealth apps available on all major app stores, up by 32% from the previous year [7]. The number of mHealth app downloads also increased by 16% from 2016 to 2017 (3.2 to 3.7 billion) [7]. Although both supply (apps published in stores) and demand (app downloads) are growing, low engagement (measured as repeated usage, consistent with behavioral science approaches [8,9]) continues to be a challenge for the industry, resulting in small effect sizes and presenting hurdles to financial sustainability [10-13]. For instance, 90% of all mHealth apps are uninstalled within 30 days, and 83% of mHealth app companies have fewer than 10,000 monthly active users, a standard industry engagement metric [14]. Systematic reviews of controlled studies on this topic suggest that tailoring content to individual characteristics, regularly updating apps, and incorporating a range of behavior change techniques may boost engagement [10-13]. To date, however, evaluations of only a few of the thousands of mHealth apps in the app stores have been published in peer-reviewed scientific journals [12]. More applied research is needed to better elucidate the conditions under which mHealth app interventions are likely to succeed in real-world settings [5]. Traditional randomized controlled trials can be difficult to conduct in a fast-paced digital health environment, but mHealth has benefited from innovative approaches that attempt to identify the causal mechanisms of intervention effectiveness through methods such as microrandomized trials, factorial designs, and quasiexperimental designs (eg, pre-post, inverse roll-out, and interrupted time series) [15].

Given the widespread proliferation of mHealth apps, evaluation methods that examine what does or does not work in the field, even when they cannot support causal interpretations, may still contribute to our understanding of the contextual (eg, population characteristics) and program (eg, intervention design) factors that influence engagement, which ultimately determines intervention effectiveness in terms of both financial cost and measurable health impact. This study, therefore, responds to a call in the literature to create a more comprehensive understanding of contextual and program factors that may affect engagement.

Study Context

The Carrot Rewards app (Carrot), created by a private company with support from the Public Health Agency of Canada [16], presents a unique research opportunity to explore the effects of some of these factors. Carrot was a Canadian app (with 1 million downloads and 500,000 monthly active users) grounded in behavioral economics, an offshoot of traditional economic theory complemented by insights from psychology [17]. Briefly, behavioral economics has demonstrated how small changes in the decision environment, particularly those that align with so-called economic rationality by cueing individuals’ financial goals, can have powerful effects on behavior change at both individual and societal levels [17]. In exchange for completing short educational quizzes on a range of public health topics, the app rewarded users with loyalty points from 4 major Canadian loyalty program providers, which could be redeemed for popular consumer products such as groceries, air travel, movies, or gas. Although monetary health incentives (eg, paying people to walk more) have shown promise, with evidence of short- and long-term effects [18], only a limited amount of research has examined alternative types of financial incentives [19]. Research in consumer psychology and decision making on individuals’ responsiveness to loyalty points in particular suggests that consumers generally overvalue them [20] and that the way individuals accumulate and spend points is nonlinear [21,22]. This behavior depends on factors such as the effort required to earn the reward [23] and the computational ease with which individuals can translate points into equivalent dollar values [24]. In public health campaigns, where large financial incentives are unlikely to be suitable or sustainable [25], more financially feasible types of incentives are worthy of further study.

In addition, a robust understanding of the likely effectiveness of such programs requires a more nuanced examination of which individuals are likely to respond to these types of interventions and which program design features (eg, size and timing of rewards) [26] help maintain engagement with the platform.

In the first few weeks after its launch in 2016, Carrot underwent several important program changes, creating the conditions for a nonexperimental observational study [27] that can shed light on factors influencing engagement. Our primary objective was to examine the impact of reducing reward size on engagement in order to test competing predictions: although previous research has suggested that the size of a financial incentive is important for sustained engagement and thus behavior change [28,29], which is consistent with principles of economic rationality, other research [30] and theory in consumer psychology suggest that responsiveness to an incentive may be relatively inelastic to its size [30-32]. The secondary objectives were to examine whether reward timing, type of reward, and health intervention content influence engagement.

Methods

Background

Carrot Insights Inc was a private company that developed the Carrot Rewards app in conjunction with a number of federal and provincial government partners (the federal-provincial funding arrangement is described elsewhere) [16,31]. British Columbia (BC) was the company’s founding provincial partner, and Carrot Insights Inc also partnered with 4 Canadian health charities (ie, Heart & Stroke Foundation of Canada, Diabetes Canada, YMCA Canada, BC Alliance for Healthy Living), primarily to review and approve the health content delivered by the app. The marketing assets of one charity and 4 loyalty partners were also leveraged in the initial weeks after the app launched in BC; for example, 1.64 million emails were sent to the members of 3 of these 5 partners [31].

App Registration

Carrot Rewards was made available on the Apple App Store and Google Play on March 3, 2016, in both English and French (Canada’s official languages). On downloading the app, users entered their age, gender, postal code, and the card number for 1 of 4 loyalty programs of their choice (ie, movie, gas, grocery, or airline). To successfully register, users had to enter a valid BC postal code and be aged 13 years or older (the age cutoff of the participating loyalty programs). British Columbians could download the app in 1 of 3 ways: organically (ie, finding it in the app store on their own), via an email invitation from a partner, or through the friend-referral promotional code mechanism [31].

Intervention Overview

Once the app was downloaded and registration was completed, users were offered 1 to 2 educational health quizzes per week over the first 5 weeks after registration. Each quiz contained 5 to 7 questions related to public health priorities identified by the BC Ministry of Health, namely healthy eating and physical activity/sedentary behavior (3 quizzes each). Users were also offered 2 separate health risk assessments that included items from national health surveys (regarding physical activity, eating and smoking habits, alcohol consumption, mental health, overall well-being, and the frequency of influenza immunization). The timing, content, and order of quizzes were the same for all individuals, other than the initial quiz timing, which is discussed in greater detail in the Study Design and Participants section. Quizzes were developed to inform users about, and familiarize them with, self-regulatory health skills [32] or stepping stone behaviors (ie, goal setting, tracking, action planning, and barrier identification), skills that have previously been shown to promote health behaviors [31]. After completing a health quiz or health risk assessment and immediately earning incentives (US $0.04 to US $1.48, depending on the length, timing, and date of completion of the quiz), users could view relevant health information on partner websites. Each health quiz or assessment was designed to take approximately 1 to 3 min to complete.

Study Design and Participants

During the roll-out of Carrot in BC, there were 3 notable changes in the program introduced by its administrators, which provided the basis for the program variance that we explored in this study. These changes were driven largely by economic necessity rather than by theory or hypothesis testing but also presented the opportunity for a longitudinal nonexperimental observational study [27].

The first 2 changes were related to the number of points that participants could earn for completing quizzes. Specifically, during the study period following the launch of the app, there were (1) differences in the number of points offered across quizzes to compensate for differing quiz duration and timing (as shown down each participant’s column in Table 1) and (2) reductions in the reward magnitude offered for the same quizzes over time (as shown across the rows for each quiz in Table 1). Owing to the unforeseen popularity of the platform and the need to manage costs within a finite budget financed by Carrot’s public sector partners, the number of reward points awarded for completing each quiz was reduced over time. This meant that early subscribers received more points for the initial quizzes than those who enrolled later in the study window we examined, as shown by comparing the point profiles of the sample participants in Table 1. Of note, the content of the quizzes remained invariant across time and, as mentioned previously, was informed by behavioral theories of self-regulation and habit formation [33].
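To make the size of these reductions concrete, the short calculation below compares, quiz by quiz, the points offered to the earliest and latest sample participants in Table 1 (Participant A and Participant E). It is an illustrative sketch only, not part of the study’s analysis; the function name is ours, and part of each gap reflects differences in offer timing (Participant E received quizzes 3 to 9 on later days), not only the registration date.

```python
# Points offered per quiz (Movie Reward Program), transcribed from Table 1.
# Index 0 corresponds to quiz 1 ("Welcome to Carrot"), index 8 to quiz 9.
POINTS_A = [100, 98, 165, 53, 23, 18, 17, 17, 14]  # Participant A, joined March 9
POINTS_E = [17, 17, 33, 16, 16, 16, 17, 15, 14]    # Participant E, joined April 6


def percent_reduction(early: list[int], late: list[int]) -> list[float]:
    """Per-quiz percentage by which `late` falls below `early`, rounded to 0.1."""
    return [round(100 * (e - l) / e, 1) for e, l in zip(early, late)]


if __name__ == "__main__":
    for quiz, pct in enumerate(percent_reduction(POINTS_A, POINTS_E), start=1):
        print(f"Quiz {quiz}: {pct}% fewer points for Participant E")
```

Run as a script, it shows reductions of roughly 80% for quizzes 1 to 3 that shrink to zero by quiz 9, where both participants were offered 14 points.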

Table 1.

Sample profiles of points awarded for quiz completion by initial registration date (Movie Reward Program).

| Quiz number and name | Measure | Participant A (joined March 9) | Participant B (joined March 16) | Participant C (joined March 23) | Participant D (joined March 30) | Participant E (joined April 6) |

| 1. Welcome to Carrot | Offer day | Day 1 | Day 1 | Day 1 | Day 1 | Day 1 |

| | Points | 100 | 38 | 25 | 25 | 17 |

| 2. What Does Eating a Rainbow Taste Like? | Offer day | Day 1 | Day 1 | Day 1 | Day 1 | Day 1 |

| | Points | 98 | 33 | 33 | 33 | 17 |

| 3. No Gym or Equipment Needed | Offer day | Day 1 | Day 1 | Day 5 | Day 5 | Day 5 |

| | Points | 165 | 101 | 58 | 58 | 33 |

| 4. Stand Up for Your Health | Offer day | Day 5 | Day 5 | Day 10 | Day 10 | Day 10 |

| | Points | 53 | 40 | 40 | 16 | 16 |

| 5. Carrot Health Survey 1 | Offer day | Day 10 | Day 10 | Day 15 | Day 15 | Day 15 |

| | Points | 23 | 23 | 16 | 16 | 16 |

| 6. Rethink Sugary Drinks | Offer day | Day 15 | Day 15 | Day 20 | Day 20 | Day 20 |

| | Points | 18 | 18 | 16 | 16 | 16 |

| 7. The 2 Colours You Shouldn’t Eat Without | Offer day | Day 20 | Day 20 | Day 25 | Day 25 | Day 25 |

| | Points | 17 | 17 | 17 | 17 | 17 |

| 8. Is Exercise Really Like Medicine? | Offer day | Day 25 | Day 25 | Day 30 | Day 30 | Day 30 |

| | Points | 17 | 15 | 15 | 15 | 15 |

| 9. Carrot Health Survey 2 | Offer day | Day 30 | Day 30 | Day 35 | Day 35 | Day 35 |

| | Points | 14 | 14 | 14 | 14 | 14 |

The third change introduced during the evaluation period was in the number of quizzes that a participant received on the day that they registered for the app: participants who self-registered between March 3 and March 17 were awarded points for registering and were immediately offered 2 quizzes (and thus opportunities to earn reward points) on the day of registration, whereas participants who registered after March 17 were also awarded points for registering but offered only one quiz on the day of registration (as demonstrated in Table 1 by comparing the offer day for participants A and B with those noted for participants C, D, and E). This created variance in terms of both (1) the number of quizzes offered at the time of enrollment and (2) the number of opportunities to earn reward points before participants faced their first 5-day waiting period between quizzes.
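The timing rule can be expressed as a small function. This is a minimal sketch that assumes every registrant followed the offer-day pattern shown for the sample participants in Table 1, with day 1 being the registration day and offer 1 being the registration (“Welcome to Carrot”) offer. The function name, and the treatment of March 17 itself as part of the early group, are our assumptions based on the wording above.

```python
from datetime import date

# Assumption: registrants up to and including March 17, 2016 belong to the
# early group that received quizzes 2 and 3 on the day of registration.
TWO_QUIZ_CUTOFF = date(2016, 3, 17)


def offer_days(registration: date) -> list[int]:
    """Offer day (day 1 = registration day) for offers 1-9, following Table 1."""
    if registration <= TWO_QUIZ_CUTOFF:
        # Offers 1-3 on day 1, then one offer every 5 days: days 5, 10, ..., 30.
        return [1, 1, 1] + [5 * k for k in range(1, 7)]
    # Offers 1-2 on day 1, then one offer every 5 days: days 5, 10, ..., 35.
    return [1, 1] + [5 * k for k in range(1, 8)]


if __name__ == "__main__":
    print(offer_days(date(2016, 3, 16)))  # Participant B: [1, 1, 1, 5, 10, 15, 20, 25, 30]
    print(offer_days(date(2016, 3, 23)))  # Participant C: [1, 1, 5, 10, 15, 20, 25, 30, 35]
```

Both outputs reproduce the offer-day rows of Table 1 for the corresponding sample participants.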

Taking advantage of these program-level changes to examine their impact on app engagement and attrition, we examined quiz acceptance rates over the first 5 weeks after registration for BC residents who received the first 9 offers (initial registration plus 8 quizzes) under the launch campaign and who registered for the Carrot app between the launch (March 3, 2016) and July 21, 2016 (n=54,817), with within-subjects repeated measures for each quiz, yielding 383,719 participant-level observations.

Outcome Measures

For the purpose of this research, we explored the extent to which the 2 sources of program-level variance influenced the likelihood that a participant chose to engage with a given quiz. Thus, our outcome measure was a binary measure of whether a participant chose to complete each of the 8 quizzes during the initial 5 weeks postregistration.

Data Analyses

Independent Variables: Natural Experiment Factors

Although we observed the number of points earned by those who completed each quiz offer, including the change in the level of reward points offered (see Reward Points Schedule (first table) in Multimedia Appendix 1 for averages across waves), one limitation of our data is that they do not contain the number of points offered to participants who chose not to complete a particular quiz offer. Because points awarded were based on the date of completion of a particular quiz, we observed how many points a participant earned only if they completed that quiz. Therefore, to explore the impact of these point changes on participants’ probability of quiz acceptance, it was necessary to impute the number of points that participants who did not complete a quiz would have been offered for completing it. Four pieces of observable information inform this imputation: (1) the app was designed such that, when it was opened, participants were shown the number of points they could earn by completing each quiz on a given date; (2) the date when a particular quiz was made available to a participant; (3) the schedule of how many points an individual could have earned for completing a quiz on a given date; and (4) the date of quiz completion for all participants who completed a particular quiz. On the basis of the assumption that participants were choosing either to complete or not to complete a particular quiz, and to ensure the robustness of our results, we imputed the missing reward point values for noncompleting participants in 2 ways, which are detailed in Multimedia Appendix 1. As the regression results for the 2 imputation approaches were consistent (see the third table in Multimedia Appendix 1, Regression Results by Imputation Methods), we report only the results for the average days to completion imputation here.
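The exact imputation rules are given in Multimedia Appendix 1 and are not reproduced here. As a rough illustration only, the sketch below shows one way an “average days to completion” imputation could work for a single quiz: completers are assigned the points scheduled on their actual completion date, and noncompleters are assigned the points scheduled on their availability date plus the mean completion delay among completers. The function `impute_offered_points`, the `points_on` schedule callback, and `example_schedule` are hypothetical and are not the authors’ code.

```python
from datetime import date, timedelta
from statistics import mean
from typing import Callable, Optional


def impute_offered_points(
    available: list[date],
    completed: list[Optional[date]],
    points_on: Callable[[date], int],
) -> list[int]:
    """Points offered to each participant for one quiz (hypothetical sketch).

    available[i]: date the quiz became available to participant i.
    completed[i]: completion date, or None if the quiz was never completed.
    points_on:    point schedule mapping a calendar date to points for this quiz.
    """
    # Mean delay (in days) between availability and completion among completers,
    # rounded to a whole number of days; zero if nobody completed the quiz.
    delays = [(c - a).days for a, c in zip(available, completed) if c is not None]
    avg_delay = timedelta(days=round(mean(delays))) if delays else timedelta(0)
    # Completers: observed completion date; noncompleters: availability + mean delay.
    return [
        points_on(c if c is not None else a + avg_delay)
        for a, c in zip(available, completed)
    ]


def example_schedule(d: date) -> int:
    """Hypothetical schedule: 33 points before March 23, 2016, 16 points afterwards."""
    return 33 if d < date(2016, 3, 23) else 16


if __name__ == "__main__":
    avail = [date(2016, 3, 20)] * 3
    done = [date(2016, 3, 20), date(2016, 3, 26), None]
    print(impute_offered_points(avail, done, example_schedule))  # [33, 16, 16]
```

In the example, the completers took 0 and 6 days, so the noncompleter is assigned the points scheduled 3 days after availability (March 23), which falls under the lower tier.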

Finally, as discussed previously, another important independent variable of interest arose from the differences in quiz timing, which, together with the reward point schedule, determined how many opportunities to earn points participants had before their first waiting period between quizzes. To examine the impact of this timing structure on participants’ probability of quiz acceptance, we created a dummy variable indicating whether a participant was facing their first delay between quizzes.
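One way to code this indicator, assuming the offer days in Table 1, is to flag the first offer that arrives on a later day than the offer before it. This is our reading of “facing their first delay”, not necessarily the authors’ exact coding, and the function name is hypothetical. If each participant contributes exactly one flagged observation out of the 7 quiz observations analyzed (383,719 observations / 54,817 participants = 7), the mean of the indicator would be 1/7 ≈ 0.143, which matches the value reported in Table 3.

```python
def first_delay_flags(offer_days: list[int]) -> list[int]:
    """Return 1 for the first offer made on a later day than the preceding offer, else 0."""
    flags = [0] * len(offer_days)
    for i in range(1, len(offer_days)):
        if offer_days[i] > offer_days[i - 1]:
            flags[i] = 1
            break  # only the first delay is flagged
    return flags


if __name__ == "__main__":
    # Offer days for Participants A and C, transcribed from Table 1.
    print(first_delay_flags([1, 1, 1, 5, 10, 15, 20, 25, 30]))  # flags offer 4
    print(first_delay_flags([1, 1, 5, 10, 15, 20, 25, 30, 35]))  # flags offer 3
```

For registrants up to March 17 the flag falls on quiz 4; for later registrants it falls on quiz 3.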

Observed Variables: Individual, Intervention, and Reward Program Factors

We also examined the influence of 4 observed variables that differed across participants or quizzes but were independent of the program-level changes described previously (the primary focus of this study). These were (1) age and (2) gender, both self-reported by participants at registration; (3) dummy variables indicating the content of each quiz (healthy eating, exercise/physical activity, or health risk assessment); and (4) a set of indicator variables for the reward program under which each participant earned points via the Carrot app. Additionally, we controlled for whether a participant completed the quiz preceding the focal quiz. This accounts for the nature of the quizzes and the likely path dependence present in intervention programs of this type (ie, the propensity to respond to a quiz is likely correlated with the decision to respond to the previous quiz).
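The path-dependence control is a within-participant lag of the outcome. A minimal sketch, assuming each participant’s outcomes are stored as an ordered list of 0/1 completion indicators keyed by a participant ID (a hypothetical data layout, not the study’s actual data structure):

```python
from typing import Optional


def previous_completion(panel: dict[str, list[int]]) -> dict[str, list[Optional[int]]]:
    """Lag each participant's ordered quiz outcomes (1 = completed) by one quiz.

    The first quiz in each list has no predecessor, so its lagged value is None.
    """
    return {
        pid: [None, *outcomes[:-1]] if outcomes else []
        for pid, outcomes in panel.items()
    }


if __name__ == "__main__":
    panel = {"p1": [1, 1, 0, 1], "p2": [1, 0, 0, 0]}  # hypothetical participants
    print(previous_completion(panel))
    # {'p1': [None, 1, 1, 0], 'p2': [None, 1, 0, 0]}
```

Lagging within each participant’s list, rather than across the pooled data, keeps one participant’s last outcome from leaking into the next participant’s first observation.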

The reward program indicator variables were potentially important observed variables for 4 reasons, all of which speak to our attempts to address alternative explanations for observed variations in quiz participation. First, each reward program had a different level of engagement with its members and engaged in different levels of marketing effort promoting its partnership with Carrot. As such, the inclusion of this variable may capture the potentially different levels of promotion of the Carrot app undertaken by each reward program. Second, the member base of each reward program has varying demographic characteristics that may not be captured by the self-reported age and gender variables described earlier. Table 2 summarizes the census-level demographics (by forward sorting area, ie, the first 3 characters of a Canadian postal code) for each program; although the member bases of the programs are generally similar, we observed some important differences between the gas program and the others in terms of socioeconomic status and lifestyle characteristics (eg, commute method, urban vs extra urban). As such, we believe that this observed variable may also capture unobserved demographic differences at the individual level. Third, the reward programs vary with respect to their earning and redemption mechanisms. One important difference is that although the actual cash value of the points awarded for completing each quiz was consistent across programs, the discrete number of points varied because the programs’ dollar-to-point conversion rates were not the same. For example, for the same quiz, and thus the same real monetary value, the airline and grocery programs might award 5 points, the movie rewards program 10 points, and the gas program 100 points. As such, this observed variable may capture a possible numeracy effect. Finally, some programs offer more utilitarian rewards on redemption (eg, gas, groceries), whereas others offer more experiential rewards (eg, movies, travel), a distinction that has been shown to affect individual responses to reward programs [23]. Theoretically, the inclusion of this observed variable should capture the variance associated with these psychological and behavioral factors. Descriptive statistics and a correlation matrix for all measures are summarized in Table 3.

Table 2.

Demographic characteristics of the rewards programs (census-level data at the forward sorting area level).

| Demographic variables | Movies | Airline | Grocery | Gas |

| Age (years), mean (SD) | 33 (0.06) | 40 (0.21) | 41 (0.16) | 42 (0.30) |

| Male, n (%) | 14503 (35.40) | 1797 (39.98) | 1742 (21.66) | 1130 (44.68) |

| Household incomea (US $), mean (SD) | 52,975 (76.36) | 53,600 (262.72) | 52,822 (161.36) | 50,616 (250.36) |

| Bachelor’s degree or higher, mean % (SD) | 21 (0.00) | 25 (0.00) | 18 (0.00) | 17 (0.00) |

| Active transport for commute, mean % (SD) | 9 (0.00) | 11 (0.00) | 8 (0.00) | 8 (0.00) |

| Public transit for commute, mean % (SD) | 13 (0.00) | 14 (0.00) | 9 (0.00) | 9 (0.00) |

| Motor vehicle for commute, mean % (SD) | 77 (0.00) | 73 (0.00) | 82 (0.00) | 82 (0.00) |

| Immigrant population, mean % (SD) | 33 (0.00) | 35 (0.00) | 26 (0.00) | 27 (0.00) |

| Aboriginal population, mean % (SD) | 3 (0.00) | 3 (0.00) | 5 (0.00) | 5 (0.00) |

aConverted from Can $ at a rate of Can $1.30 to US $1.00.

Table 3.

Descriptive statistics and correlation matrix.

| Variables | Mean (SD) | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 |

| 1. Point change since previous quiz | 0.003 (0.503) | 1 | N/Aa | N/A | N/A | N/A | N/A | N/A | N/A | N/A |

| 2. First delay in quizzes | 0.143 (0.350) | 0.27 | 1 | N/A | N/A | N/A | N/A | N/A | N/A | N/A |

| 3. Movie rewards program | 0.731 (0.443) | −0.02 | 0.00 | 1 | N/A | N/A | N/A | N/A | N/A | N/A |

| 4. Airline rewards program | 0.080 (0.271) | 0.04 | 0.00 | −0.49 | 1 | N/A | N/A | N/A | N/A | N/A |

| 5. Grocery rewards program | 0.143 (0.350) | −0.01 | 0.00 | −0.68 | −0.12 | 1 | N/A | N/A | N/A | N/A |

| 6. Eating knowledge quiz | 0.286 (0.452) | −0.23 | −0.26 | 0.00 | 0.00 | 0.00 | 1 | N/A | N/A | N/A |

| 7. Exercise knowledge quiz | 0.429 (0.495) | 0.41 | 0.47 | 0.00 | 0.00 | 0.00 | −0.55 | 1 | N/A | N/A |

| 8. Completed previous quiz | 0.806 (0.395) | 0.09 | 0.16 | 0.12 | −0.03 | −0.09 | −0.04 | 0.11 | 1 | N/A |

| 9. Gender (1=male) | 0.350 (0.477) | 0.00 | 0.00 | 0.04 | 0.04 | −0.11 | 0.00 | 0.00 | −0.02 | 1 |

| 10. Age | 35.116 (13.195) | 0.00 | 0.00 | −0.29 | 0.11 | 0.21 | 0.00 | 0.00 | −0.05 | −0.04 |

aN/A: not applicable.

Statistical Methods

Data manipulation was conducted using SPSS version 24 (IBM Corporation), and statistical analysis was performed using the xtlogit procedure in Stata version 12.1 (StataCorp). This random-effects panel logit regression was used to explore the impact of each of our program change variables, observed variables, and control variables on participants’ probability of quiz acceptance across the 8 quiz offers received in the 5 weeks postregistration (the outcome measure). We ran our model using the average days to quiz completion imputation procedure for the data missing for individuals who did not complete a given quiz, as described in Multimedia Appendix 1. The results of this analysis are presented in the following section.
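For reference, a random-effects panel logit of the kind fitted by xtlogit can be written as follows, where i indexes participants, t indexes quiz offers, y_it = 1 if participant i completed quiz t, and x_it collects the program change, observed, and control variables listed above. The notation is ours, not the authors’. Exponentiated coefficients are odds ratios; the Table 4 estimates (eg, 0.361 for the first delay, interpreted in the Results as a 64% decrease) appear to be reported on this odds ratio scale.

```latex
\Pr(y_{it} = 1 \mid \mathbf{x}_{it}, u_i)
  = \frac{\exp\left(\beta_0 + \mathbf{x}_{it}^{\top}\boldsymbol{\beta} + u_i\right)}
         {1 + \exp\left(\beta_0 + \mathbf{x}_{it}^{\top}\boldsymbol{\beta} + u_i\right)},
\qquad u_i \sim \mathcal{N}\left(0, \sigma_u^{2}\right),
\qquad \mathrm{OR}_k = e^{\beta_k}
```

The participant-level random intercept u_i absorbs stable differences in individuals’ general propensity to respond, so the coefficients describe within-model associations net of that heterogeneity.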

Results

Engagement

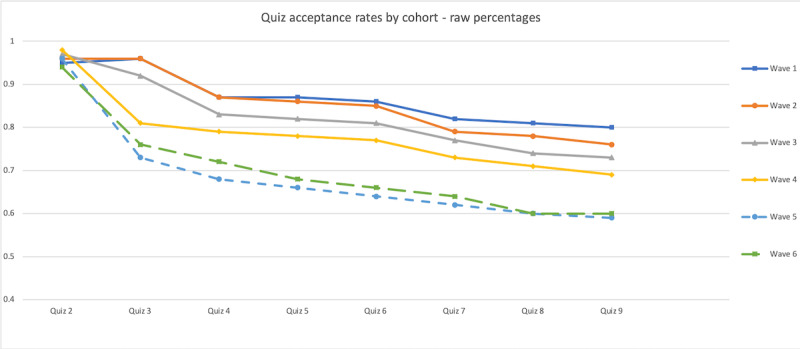

Although participants self-registered continuously throughout the study period, the date on which they registered placed them in different point schedules (see Table 1 or Reward Points Schedule (first table) in Multimedia Appendix 1 for more details). For the purpose of this study, we created analytical groups, which we refer to as waves, that represent the point schedule in place at the time of registration. These groupings allow us to explore the effects of exogenous point changes on participant program engagement. Figure 1 shows participant response rates for each of the first 8 quizzes across waves. Although this is not a conclusive analysis, we observed 4 key characteristics of these curves. First, response rates to quiz 2 were consistently high across all 6 waves, ranging from 95% to 97%. This suggests that despite the significant difference in reward points offered for completing this quiz across waves (as demonstrated in Table 1), participants in all waves were approximately equally likely to respond to it. Second, response rates to the final quiz examined (quiz 9) varied, ranging from 80.5% (19,525/24,249) for wave 1 registrants to 60% (2930/4893) for wave 6 registrants, which suggests the possibility that intervening factors influenced participant engagement between initial registration and quiz 9. Third, a statistical test of the equality of the slopes from quiz 4 onward indicates that response rates across waves did not differ significantly from one another after quiz 4. This suggests that the factors driving the differences in response rates are independent of any underlying differences in responsiveness associated with the time of registration. These results also support the view that participants were not fundamentally different from one another across the study period, and they indicate that it is unlikely that registrants differed substantially over the study period in their attitudes toward the underlying behavior of interest (ie, as in diffusion theories [34], in which innovators, early adopters, and so on emerge only over the extended life cycle of a product). Finally, we note that much of the decline in responsiveness across waves occurs around quizzes 3 and 4, which coincides with the timing delay noted previously and which we explored in greater detail in our regression analysis.
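The quiz 9 response rates quoted above follow directly from the reported counts. The short calculation below reproduces them; the counts are taken from the text, and the variable and function names are ours.

```python
# (responders, participants offered) for quiz 9, taken from the text above.
QUIZ9_COUNTS = {1: (19_525, 24_249), 6: (2_930, 4_893)}


def response_rate(responders: int, offered: int) -> float:
    """Response rate in percent, rounded to one decimal place."""
    return round(100 * responders / offered, 1)


if __name__ == "__main__":
    rates = {wave: response_rate(*counts) for wave, counts in QUIZ9_COUNTS.items()}
    print(rates)  # {1: 80.5, 6: 59.9}
    print(round(rates[1] - rates[6], 1), "percentage points")  # 20.6 percentage points
```

The wave 6 rate computes to 59.9%, which the text rounds to 60%, giving a gap of roughly 20 percentage points between the earliest and latest waves.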

Figure 1.

Quiz acceptance rates across quizzes by wave.

Regression Results

Overall, the regression results summarized in Table 4 suggest two main findings with respect to the features of this observational study. First, the impact of a change in points on the likelihood of responding to a quiz is positive and statistically significant (P<.001); however, the magnitude of the estimate suggests that the elasticity of this response is limited. Specifically, in our data, a 10% decrease in points offered relative to the previous quiz resulted in a 1% decrease in the odds of responding to a given quiz. Second, the impact of the first delay that participants faced was negative and statistically significant (P<.001): the longer delay between quizzes 2 and 3 faced by those who registered after March 17 decreased their odds of response by 64%.
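The “10% decrease, 1% decrease” reading can be reproduced from the Table 4 odds ratio under one assumption: that the point-change variable is measured as a proportional change, so a 10% decrease corresponds to a value of −0.10 (the variable’s definition is not stated explicitly here, so this is our assumption). Because the model is linear in log odds, a shift of Δ units multiplies the odds by OR^Δ. The same arithmetic converts the first-delay odds ratio into the 64% figure. Both results are changes in the odds of response, not in the probability.

```python
import math

OR_POINT_CHANGE = 1.100  # Table 4: "Point change since previous quiz"
OR_FIRST_DELAY = 0.361   # Table 4: "First delay in quizzes" (dummy variable)


def odds_multiplier(odds_ratio: float, delta: float) -> float:
    """Factor by which the odds change when a covariate moves by `delta` units."""
    return math.exp(delta * math.log(odds_ratio))


def pct_change(multiplier: float) -> float:
    """Express a multiplicative change in odds as a percentage change."""
    return 100 * (multiplier - 1)


if __name__ == "__main__":
    # Assumption: a 10% point decrease is coded as delta = -0.10.
    print(f"{pct_change(odds_multiplier(OR_POINT_CHANGE, -0.10)):.1f}%")  # -0.9%
    print(f"{pct_change(odds_multiplier(OR_FIRST_DELAY, 1.0)):.1f}%")     # -63.9%
```

Under this assumption, a 10% cut in points lowers the odds of response by just under 1%, whereas switching the first-delay indicator from 0 to 1 lowers them by about 64%.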

Table 4.

Regression results (N=383,719 observations of 54,817 individuals, with 95% CIs in parentheses).

| Dependent variable=probability of quiz acceptance | Odds ratio (95% CI) |

| Point change since previous quiz | 1.100 (1.065-1.137) |

| First delay in quizzes | 0.361 (0.345-0.378) |

| Gender (1=male) | 0.845 (0.804-0.887) |

| Age | 0.999 (0.998-1.001) |

| Eating knowledge quiza | 0.896 (0.861-0.932) |

| Exercise knowledge quiza | 1.206 (1.156-1.257) |

| Movie rewards programb | 3.552 (3.176-3.971) |

| Airline rewards programb | 2.096 (1.840-2.386) |

| Grocery rewards programb | 1.402 (1.247-1.575) |

| Completed previous quiz | 71.891 (67.261-76.839) |

| Model fit—McFadden pseudo-R2 | 0.172 |

aKnowledge quiz estimates relative to health risk assessments.

bRewards program estimates relative to the gasoline rewards program.

With respect to the observed variables, we first examined the impact of demographic characteristics on the likelihood of quiz acceptance. We observed no significant effect of age (P=.52) on the likelihood of response; however, gender did have a significant impact (P<.001), whereby male participants had 15% to 16% lower odds of responding to a given quiz than female participants. Next, we explored the impact of quiz content on response rates. Relative to the health risk assessments, the odds of responding were 21% higher for physical activity–related quiz offers (P<.001) and 10% lower for healthy eating–related quiz offers (P<.001). Finally, the results indicate that the reward program under which a participant registered had a significant impact on the likelihood of a quiz response. Specifically, relative to the gas rewards program, the odds of responding to a given quiz were 255% higher for movie rewards program participants (P<.001), 110% higher for airline rewards program participants (P<.001), and 40% higher for grocery rewards program participants (P<.001).
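Because Table 4 reports odds ratios, each percentage for these observed variables is a change in the odds of response, computed as (OR − 1) × 100. The sketch below applies that conversion to the Table 4 point estimates; the values are copied from the table, and the rounding to one decimal place and the names are ours.

```python
# Point estimates (odds ratios) copied from Table 4.
TABLE4_OR = {
    "Gender (1=male)": 0.845,
    "Eating knowledge quiz": 0.896,
    "Exercise knowledge quiz": 1.206,
    "Movie rewards program": 3.552,
    "Airline rewards program": 2.096,
    "Grocery rewards program": 1.402,
}


def pct_change_in_odds(odds_ratio: float) -> float:
    """Percentage change in the odds of quiz acceptance implied by an odds ratio."""
    return round(100 * (odds_ratio - 1), 1)


if __name__ == "__main__":
    for name, odds_ratio in TABLE4_OR.items():
        print(f"{name}: {pct_change_in_odds(odds_ratio):+.1f}%")
```

For example, the odds ratio of 3.552 for the movie rewards program corresponds to odds of response about 3.6 times, or roughly 255% higher than, those of the gas rewards program.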

Discussion

This study responds to a call in the literature to create a more comprehensive understanding of how contextual and program factors relate to program effectiveness [35] and, specifically, to explore how the type, amount, and timing of incentives affect program outcomes [36]. It does so by examining how the likelihood of quiz response is affected by the variation induced by changes to features of the Carrot Rewards health interventions during the program's BC rollout (eg, incentive size, variability, and quiz timing), as well as by observed differences in intervention content, reward program, and participant characteristics. It thus contributes to the broader literature on how financial incentives [35] (particularly loyalty points) [36] and/or mHealth apps [28,37,38] can be deployed to improve uptake of and engagement with health education–based interventions [37,39,40] and to encourage health-related behaviors such as physical activity [18,36], management of chronic health conditions [28,41,42], smoking cessation [43,44], weight loss [45], and adherence to medication and clinical treatment plans [40].

Effectiveness

Our study demonstrates that the ongoing provision of a stream of small incentives (as low as US $0.05 per offer) can largely sustain the initial high levels of responsiveness generated by these programs. Interestingly, however, our results also show that responsiveness to decreases in reward points is relatively inelastic compared with responsiveness to the other features of the interventions offered via the Carrot app. Specifically, our results indicate that reducing the number of points associated with a particular quiz by 10% leads to only a 1% (P<.001) decrease in the likelihood of offer response. This relative inelasticity is consistent with the findings of Carrera et al [46], who found that US $60 for 9 gym visits in 6 weeks was no more effective than US $30. However, detailed subgroup meta-analyses by Mitchell et al [26] suggest that larger incentives can, in some cases, produce larger effect sizes in a physical activity context. Because health-related interventions are often multifaceted and include incentives as just one of several components intended to produce positive health-related behavior change, other program features (eg, reward timing, salience of feedback, and goal-setting approach) are likely to play equally important roles. Thus, combined with previous findings that the presence of financial incentives matters for responsiveness [47], our results suggest that a relatively small number of points may be nearly as effective as a larger number of points. This may be particularly true when the incentives are delivered immediately on completion of the focal behavior: immediate delivery leverages the strong present bias documented in behavioral economics [48,49] and illustrates how technologies such as smartphones can harness that bias to increase behavioral compliance.

This nearly instantaneous link between behavior and reward may make it possible to reduce the size (and thus the cost) of the incentives needed to produce the requisite level of behavior change. Although some findings suggest that there is a minimum threshold for the size of daily incentives needed to maintain engagement and produce the desired behavior change [25,29], recent health incentive studies appear to be using much smaller incentives, in part because mHealth apps can deliver daily rewards, consistent with behavioral theories [26].
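The 1% figure can be reproduced from the table's point-change coefficient (odds ratio 1.100) under one assumption. The exact scaling of "point change since previous quiz" is not reported in this section, so we assume it is measured as a proportional change, meaning a 10% cut corresponds to a value of −0.10. In a log-linear odds model, a change of Δ units multiplies the odds by OR^Δ. Dividing the resulting percent change in response odds by the percent change in points gives an elasticity well below 1, that is, an inelastic response. A minimal Python sketch with hypothetical helper names:

```python
OR_POINT_CHANGE = 1.100  # odds ratio for "Point change since previous quiz" (table)

def odds_multiplier(odds_ratio, delta):
    """Odds multiplier for a change of `delta` units in a log-linear (logit) predictor."""
    return odds_ratio ** delta

# Assumption: the predictor is a proportional change, so a 10% cut is delta = -0.10.
delta = -0.10
pct_change_odds = (odds_multiplier(OR_POINT_CHANGE, delta) - 1.0) * 100.0

# Elasticity: percent change in response odds per percent change in points.
elasticity = pct_change_odds / (delta * 100.0)

print(f"10% fewer points -> {pct_change_odds:.2f}% change in odds of acceptance")
print(f"implied elasticity = {elasticity:.3f}")
```

This yields roughly a 0.95% drop in the odds. If the predictor were instead the log of the ratio of new to old points, Δ would be ln(0.9) ≈ −0.105 and the drop would be about 1.0%, so the "roughly 1%" reading does not hinge on this choice.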

Contribution to Theory

In the context of the consumer psychology literature on loyalty points discussed earlier, our results support prior work demonstrating that consumers do not behave rationally (in the behavioral economics sense of the term) in their response to the quizzes and to the size of the financial incentives offered. On the basis of previous research in mHealth and the findings presented here, quiz response, and thus app engagement, appears to depend more on the simple presence of points (as a form of financial incentive) than on their absolute quantity, as well as on the continued opportunity to earn more points without delay. There may be several reasons for this. First, loyalty points already require users to accumulate them over time, so there may be a built-in progress element and a recognition that the ultimate reward will come sometime in the future [22,23]. This may insulate programs such as the one discussed here from the negative effects seen in other studies in which incentives become smaller over time. In addition, point programs have been hypothesized to cue goals related to gamification, in which the acquisition of points itself, rather than the economic reward per se, generates affective value. Our results suggest that the continuous, timely provision of small numbers of reward points throughout the program improved retention of and engagement with the Carrot app relative to previous programs that used reward points only for recruitment purposes [37], and they support previous findings on the efficiency of small, variable rewards and a decreasing schedule in maintaining engagement with health-based interventions [39,50].

Our study also examines how individuals respond to loyalty points in a novel setting, a health context rather than a commercial one, pointing to the opportunity to use an alternative currency from which individuals already derive personal, affective value [33] as a financial incentive for health-related behaviors.

Program Design Considerations

Perhaps most importantly, our results suggest that other elements of the design of these interventions need to be considered when attempting to increase response rates. Existing research has demonstrated the critical importance of incorporating direct end-user feedback early in the design of such apps to ensure both short- and long-term engagement [8,40,51,52]. One important finding from this study is that, consistent with research on habit formation [30], greater attention is needed to retain individuals during the early engagement period. Indeed, this was the largest driver of participant attrition in our study: a differential delay between early offers was associated with a 64% decrease in the odds of response. This suggests that other interventions and communication initiatives should be considered during this waiting period to retain participants. The good news is that once this hurdle is surmounted (ie, once a participant re-engages after the first waiting period), retention rates between interventions are remarkably high and consistent across the registration period studied, particularly given the small magnitude of the incentives offered (Figure 1).
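Because the table reports exponentiated coefficients, which we read as odds ratios, the 64% figure describes a reduction in the odds of response. The corresponding drop in probability depends on the baseline acceptance rate, which is not reported in this section. The Python sketch below converts the first-delay odds ratio into predicted probabilities for several baseline acceptance rates. Those baselines are hypothetical values chosen for illustration, not estimates from the study.

```python
def apply_odds_ratio(p_baseline, odds_ratio):
    """Probability implied by multiplying the baseline odds by `odds_ratio`."""
    odds = p_baseline / (1.0 - p_baseline)
    new_odds = odds * odds_ratio
    return new_odds / (1.0 + new_odds)

OR_FIRST_DELAY = 0.361  # odds ratio for "First delay in quizzes" (table)

# Hypothetical baseline acceptance probabilities, for illustration only
for p0 in (0.2, 0.5, 0.8, 0.95):
    p1 = apply_odds_ratio(p0, OR_FIRST_DELAY)
    rel_drop = (p0 - p1) / p0 * 100.0
    print(f"baseline {p0:.2f} -> {p1:.3f} (relative drop in probability {rel_drop:.1f}%)")
```

At low baseline rates, the relative drop in probability approaches the 64% reduction in odds. At higher baselines it is smaller: about 47% at a baseline of 0.5 and about 26% at 0.8.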

Additional findings of our study support the importance of nonincentive elements of this program, although by and large these results are much stronger in suggesting directions for further study than in supporting concrete recommendations for program optimization. One important factor affecting participant response rates is the content of the quiz being offered. Our results suggest that when the content of the quiz is focused on healthy eating, individuals are significantly less likely to respond (10% decrease in the likelihood of acceptance relative to health risk assessments), and when the content of the quiz is focused on exercise or physical activity, individuals are more likely to respond (21% increase in the likelihood of acceptance relative to health risk assessments). These results suggest that more than the simple time cost of each intervention should be considered when designing incentive programs. Designers could also take into account individual or psychological factors that influence the attractiveness of a quiz, which may increase or decrease the perceived cost of accepting it and ultimately affect the propensity to take up an intervention.
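Both quiz-content estimates use health risk assessments as their reference category, so the table also implies a direct comparison between exercise and healthy eating quizzes. That comparison is the ratio of the two odds ratios, or equivalently the difference of the two coefficients on the log-odds scale. The Python sketch below computes the point estimate only. A confidence interval for this contrast would require the covariance between the two coefficients, which is not reported here, so none is computed.

```python
import math

OR_EATING = 0.896    # Eating knowledge quiz vs health risk assessments (table)
OR_EXERCISE = 1.206  # Exercise knowledge quiz vs health risk assessments (table)

# On the log-odds scale, the contrast is a difference of coefficients:
# beta_exercise - beta_eating = log(OR_EXERCISE) - log(OR_EATING).
log_contrast = math.log(OR_EXERCISE) - math.log(OR_EATING)
or_exercise_vs_eating = math.exp(log_contrast)  # equals OR_EXERCISE / OR_EATING

print(f"Exercise vs eating quiz: OR = {or_exercise_vs_eating:.3f}")
```

The point estimate is about 1.35, meaning roughly 35% higher odds of accepting an exercise quiz than a healthy eating quiz.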

Similarly, among the observed variables (age, gender, quiz content, participation in the previous quiz, and reward program), we find that, apart from participation in the previous quiz, which serves as a control for prior responsiveness, the strongest predictor of the likelihood of quiz acceptance is the point program under which a participant earns points for completing quiz offers via Carrot. Specifically, relative to individuals in the gasoline rewards program, those who choose to earn points in the movie (3.55 times), airline (2.10 times), and grocery (1.40 times) programs all have a greater likelihood of response. We previously acknowledged that these reward programs may differ along multiple dimensions: differential promotional efforts in support of Carrot; differences in the demographic composition of each program's participant base; differences in individual responsiveness due to a numeracy effect (ie, holding actual economic value constant, the quantity of points earned per activity varies across programs, and people respond more to higher numbers of points earned); and differences in the earning and redemption mechanisms of each point program. Each of these may influence the responsiveness of individuals. This facet of program design requires more exploration, but our results suggest that it is yet another factor to consider when designing optimal incentive-based intervention programs.

Limitations and Future Directions

One concern with the modeling approach used in this study is the possibility that the differences in responsiveness to quizzes observed between what we have categorized as waves are due to underlying or inherent differences (whether demographic or psychographic) between participants who registered earlier versus later in the observed window of this study, rather than to the previously described variations in the program. However, given (1) the very short time windows between changes in the point scheme (as demonstrated in the first table in Multimedia Appendix 1–Reward Points Schedule), (2) the consistent slopes of the engagement curves described in the previous discussion of Figure 1, (3) the clustering at the participant level used in estimating the random effects regression model, and (4) the inclusion of a control for response to prior quizzes (which is likely to be autoregressive), we can be reasonably confident that program features, rather than unobservable characteristics of the participants, drive the observed effects. Neither the observable data nor theory suggests that early registrants differ meaningfully from later registrants in terms of responsiveness; thus, the differences in ultimate outcomes are likely driven by features of the intervention rather than by sampling considerations. Recall that participants were neither recruited nor assigned to waves; rather, the waves were our analytical construction to allow us to explore the exogenous changes to the program structure. Consequently, we have no reason to believe that the presence of any particular individual in a given wave was anything other than random.

Ideally, we would be able to examine potential confounding factors more explicitly, such as individual-level psychological differences (eg, promotion vs prevention orientation); regrettably, that information was not collected by the app as part of the registration process and is therefore not available.

Another limitation of the statistical analysis is that we can be certain of the number of points a participant saw for a given quiz only if that participant completed the quiz. Although we have used conservative tests for imputation of the missing data (see Multimedia Appendix 1 for specific details), our conclusions about individuals' engagement are limited to those who remained engaged with the app and completed the quizzes and do not extend to individuals who skipped quizzes or ceased participating. Nor is it possible, with this study and these data, to explore the extent to which individuals were aware of or sensitive to the point changes that occurred over time (eg, for participant A in Table 1, a decrease from 165 points for quiz 3 to only 23 points for quiz 5). We do find that, among the individuals we were able to observe who continued to complete quizzes, the declining number of points, even if salient, did not appear to have a large effect on engagement with the app.

We proposed several theoretical mechanisms by which different reward programs may affect engagement with the app (eg, the hedonic vs utilitarian nature of the rewards and numeracy effects), but in this study, we do not have the data to empirically substantiate those hypotheses. In addition, we are unable to retroactively document the promotion or advertising of Carrot by each of the 4 participating loyalty programs or to identify underlying psychographic differences between enrollees in one program versus another. Including these factors in the model could allow us to further control for reward program–specific characteristics and to make more informed recommendations to practitioners, program administrators, and government stakeholders about the need for, and effectiveness of, promotional efforts to drive enrollment in and engagement with the app. More generally, the literature to date lacks data supporting the economic basis for the use of mHealth behavioral interventions [40,53]. Although this study does not directly tackle that issue, it does suggest an additional dimension of cost that may not be captured in existing frameworks such as the Consolidated Health Economic Evaluation Reporting Standards [53], and it speaks to the need to ground economic considerations in effectiveness assessments as well as in more traditional measurements such as reach. Finally, because the variation in points offered arose from the operational realities of a program such as this one, we are confined to a post hoc analysis of quizzes over a limited period. This constrains our ability to take repeated measures of a particular attitude or intention related to a specific context (eg, healthy eating or exercise) beyond the types of quizzes that happened to be administered within the period of observation.

Future work could also devise quasiexperimental studies to examine other contextual factors that may affect program engagement and effectiveness. These could include individual difference variables, such as the impact of socioeconomic or health status on both app engagement and the effectiveness of quizzes at driving attitudinal or behavioral change, or program-centered factors, such as the comparative effectiveness of individual versus team-based rewards, norm-based messaging, or differential offer timing. Future programming should use a modular approach in which content is confined to a specific topic of interest, so that engagement with the quizzes can be measured more systematically and the impacts of different program features can be tracked over time. Examining these modular programs using microrandomized controlled trial designs, similar to ongoing work by Kramer et al [54], would allow a more targeted evaluation of how variations in content and frequency lead to greater or more sustained engagement with the app.
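In a microrandomized trial, each participant is randomized repeatedly, at every decision point, to receive or not receive an intervention component. This repeated randomization is what allows within-person effects of content and timing to be estimated. The Python sketch below shows only that assignment step. All the specifics are hypothetical choices for illustration, not parameters from Kramer et al [54] or from the Carrot program: the number of participants and decision points, the 0.5 randomization probability, the `Assignment` record, and the component names.

```python
import random
from dataclasses import dataclass

@dataclass
class Assignment:
    participant_id: int
    decision_point: int
    component: str      # hypothetical content module, e.g. "exercise_quiz"
    treated: bool       # True if the prompt is delivered at this decision point
    probability: float  # randomization probability, stored for later analysis

def micro_randomize(n_participants, n_decision_points, components, p_treat=0.5, seed=0):
    """Independently randomize every participant at every decision point."""
    rng = random.Random(seed)  # seeded so the schedule is reproducible
    schedule = []
    for pid in range(n_participants):
        for t in range(n_decision_points):
            component = rng.choice(components)  # which content module is eligible
            treated = rng.random() < p_treat    # deliver it or not at this point
            schedule.append(Assignment(pid, t, component, treated, p_treat))
    return schedule

plan = micro_randomize(3, 4, ["exercise_quiz", "eating_quiz"], p_treat=0.5)
for a in plan[:4]:
    print(a)
```

Storing the randomization probability with each assignment is deliberate. Analyses of microrandomized trials typically weight or center treatment indicators by these known probabilities.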

Conclusions

The results of this study demonstrate that the Carrot program, built around an ongoing stream of a small number of reward points awarded instantly on completion of health interventions, was able to drive significantly higher engagement levels than demonstrated in previous literature exploring the intersection of mHealth apps and financial incentives. Furthermore, although previous studies have demonstrated that the presence of an incentive matters to user engagement, our study suggests that the number of points offered for these reward point–based health interventions is less important than other program design features such as the type of reward points being offered, the timing of intervention and reward offers, and the content of the health interventions for driving continued engagement by users.

Acknowledgments

The authors acknowledge the financial support of the Social Sciences and Humanities Research Council of Canada for this project.

Abbreviations

- BC: British Columbia

- mHealth: mobile health

Appendix

Point schedule changes across study window and missing data imputation for points offered.

Footnotes

Conflicts of Interest: MM received consulting fees from Carrot Insights Inc. from 2015 to 2018 as well as travel reimbursement in January and March 2019. MM had stock options in the company as well but these are now void since Carrot Insights Inc. went out of business in June 2019. LW was employed by Carrot Insights Inc. from March 2016 to June 2019 and also had stock options which are now void. The other authors report no conflicts of interest.

References

1. How Healthy Are Canadians? A Trend Analysis of the Health of Canadians From a Healthy Living and Chronic Disease Perspective. Government of Canada. 2016. [2019-04-16]. https://www.canada.ca/content/dam/phac-aspc/documents/services/publications/healthy-living/how-healthy-canadians/pub1-eng.pdf.

2. Krueger H, Turner D, Krueger J, Ready AE. The economic benefits of risk factor reduction in Canada: tobacco smoking, excess weight and physical inactivity. Can J Public Health. 2014 Mar 18;105(1):e69–78. doi: 10.17269/cjph.105.4084. http://europepmc.org/abstract/MED/24735700.

3. Global Action Plan on Physical Activity 2018–2030: More Active People for a Healthier World. World Health Organization. 2018. [2019-08-12]. https://www.who.int/ncds/prevention/physical-activity/global-action-plan-2018-2030/en/

4. Sallis JF, Bull F, Guthold R, Heath GW, Inoue S, Kelly P, Oyeyemi AL, Perez LG, Richards J, Hallal PC; Lancet Physical Activity Series 2 Executive Committee. Progress in physical activity over the Olympic quadrennium. Lancet. 2016 Sep 24;388(10051):1325–36. doi: 10.1016/S0140-6736(16)30581-5.

5. Arigo D, Jake-Schoffman DE, Wolin K, Beckjord E, Hekler EB, Pagoto SL. The history and future of digital health in the field of behavioral medicine. J Behav Med. 2019 Feb;42(1):67–83. doi: 10.1007/s10865-018-9966-z. http://europepmc.org/abstract/MED/30825090.

6. Heuveldop N. Ericsson Mobility Report. Ericsson. 2017 Jun. [2019-05-03]. http://www.ericsson.com/assets/local/mobility-report/documents/2017/ericsson-mobility-report-june-2017-raso.pdf.

7. mHealth Economics 2017 – Current Status and Future Trends in Mobile Health. Research2Guidance. 2017. [2019-05-03]. https://research2guidance.com/product/mhealth-economics-2017-current-status-and-future-trends-in-mobile-health/

8. Perski O, Blandford A, West R, Michie S. Conceptualising engagement with digital behaviour change interventions: a systematic review using principles from critical interpretive synthesis. Transl Behav Med. 2017 Jun;7(2):254–67. doi: 10.1007/s13142-016-0453-1. http://europepmc.org/abstract/MED/27966189.

9. McCallum C, Rooksby J, Gray CM. Evaluating the impact of physical activity apps and wearables: interdisciplinary review. JMIR Mhealth Uhealth. 2018 Mar 23;6(3):e58. doi: 10.2196/mhealth.9054. https://mhealth.jmir.org/2018/3/e58/

10. Schoeppe S, Alley S, van Lippevelde W, Bray NA, Williams SL, Duncan MJ, Vandelanotte C. Efficacy of interventions that use apps to improve diet, physical activity and sedentary behaviour: a systematic review. Int J Behav Nutr Phys Act. 2016 Dec 7;13(1):127. doi: 10.1186/s12966-016-0454-y. https://ijbnpa.biomedcentral.com/articles/10.1186/s12966-016-0454-y.

11. Vandelanotte C, Müller AM, Short CE, Hingle M, Nathan N, Williams SL, Lopez ML, Parekh S, Maher CA. Past, present, and future of eHealth and mHealth research to improve physical activity and dietary behaviors. J Nutr Educ Behav. 2016 Mar;48(3):219–28.e1. doi: 10.1016/j.jneb.2015.12.006.

12. Dounavi K, Tsoumani O. Mobile health applications in weight management: a systematic literature review. Am J Prev Med. 2019 Jun;56(6):894–903. doi: 10.1016/j.amepre.2018.12.005. https://linkinghub.elsevier.com/retrieve/pii/S0749-3797(19)30025-X.

13. Feter N, dos Santos TS, Caputo EL, da Silva MC. What is the role of smartphones on physical activity promotion? A systematic review and meta-analysis. Int J Public Health. 2019 Jun;64(5):679–90. doi: 10.1007/s00038-019-01210-7.

14. How Uninstall Tracking Helps Improve App Store Ranking. Singular. 2016. [2019-06-19]. https://www.singular.net/

15. Handley MA, Lyles CR, McCulloch C, Cattamanchi A. Selecting and improving quasi-experimental designs in effectiveness and implementation research. Annu Rev Public Health. 2018 Apr 1;39:5–25. doi: 10.1146/annurev-publhealth-040617-014128.

16. National Healthy Living Platform: 'Carrot Rewards' Targets Lifestyle Improvements. Government of Canada. [2019-05-30]. https://www.canada.ca/en/news/archive/2015/07/national-healthy-living-platform-carrot-rewards-targets-lifestyle-improvements.html.

17. Scott RH. Nudge: improving decisions about health, wealth, and happiness. Choice Rev Online. 2008 Oct 1;46(2):46-0977. doi: 10.5860/choice.46-0977.

18. Mitchell MS, Orstad SL, Biswas A, Oh PI, Jay M, Pakosh MT, Faulkner G. Financial incentives for physical activity in adults: systematic review and meta-analysis. Br J Sports Med. 2019 May 15. doi: 10.1136/bjsports-2019-100633. Epub ahead of print.

19. Mitchell MS, Orstad SL, Biswas A, Oh PI, Jay M, Pakosh MT, Faulkner G. Financial incentives for physical activity in adults: systematic review and meta-analysis. Br J Sports Med. 2019 May 15. doi: 10.1136/bjsports-2019-100633.

20. van Osselaer SM, Alba JW, Manchanda P. Irrelevant information and mediated intertemporal choice. J Consum Psychol. 2004 Jan;14(3):257–70. doi: 10.1207/s15327663jcp1403_7.

21. Kivetz R, Urminsky O, Zheng Y. The goal-gradient hypothesis resurrected: purchase acceleration, illusionary goal progress, and customer retention. J Mark Res. 2006;43(1):39–58. doi: 10.1509/jmkr.43.1.39.

22. Bagchi R, Li X. Illusionary progress in loyalty programs: magnitudes, reward distances, and step-size ambiguity. J Consum Res. 2011 Feb 1;37(5):888–901. doi: 10.1086/656392.

23. Kivetz R, Simonson I. Earning the right to indulge: effort as a determinant of customer preferences toward frequency program rewards. J Mark Res. 2002;39(2):155–70. doi: 10.1509/jmkr.39.2.155.19084.

24. Kwong JY, Soman D, Ho CK. The role of computational ease on the decision to spend loyalty program points. J Consum Psychol. 2011 Apr;21(2):146–56. doi: 10.1016/j.jcps.2010.08.005.

25. Kramer J, Tinschert P, Scholz U, Fleisch E, Kowatsch T. A cluster-randomized trial on small incentives to promote physical activity. Am J Prev Med. 2019 Feb;56(2):e45–54. doi: 10.1016/j.amepre.2018.09.018. https://linkinghub.elsevier.com/retrieve/pii/S0749-3797(18)32286-4.

26. Mitchell M, White L, Lau E, Leahey T, Adams MA, Faulkner G. Evaluating the Carrot Rewards app, a population-level incentive-based intervention promoting step counts across two Canadian provinces: quasi-experimental study. JMIR Mhealth Uhealth. 2018 Sep 20;6(9):e178. doi: 10.2196/mhealth.9912. https://mhealth.jmir.org/2018/9/e178/

27. Craig P, Katikireddi SV, Leyland A, Popham F. Natural experiments: an overview of methods, approaches, and contributions to public health intervention research. Annu Rev Public Health. 2017 Mar 20;38:39–56. doi: 10.1146/annurev-publhealth-031816-044327. http://europepmc.org/abstract/MED/28125392.

28. Badawy SM, Cronin RM, Hankins J, Crosby L, DeBaun M, Thompson AA, Shah N. Patient-centered eHealth interventions for children, adolescents, and adults with sickle cell disease: systematic review. J Med Internet Res. 2018 Jul 19;20(7):e10940. doi: 10.2196/10940. https://www.jmir.org/2018/7/e10940/

29. Strohacker K, Galarraga O, Williams DM. The impact of incentives on exercise behavior: a systematic review of randomized controlled trials. Ann Behav Med. 2014 Aug;48(1):92–9. doi: 10.1007/s12160-013-9577-4. http://europepmc.org/abstract/MED/24307474.

30. Carrera M, Royer H, Stehr M, Sydnor J. Can financial incentives help people trying to establish new habits? Experimental evidence with new gym members. J Health Econ. 2018 Mar;58:202–14. doi: 10.1016/j.jhealeco.2018.02.010. http://europepmc.org/abstract/MED/29550665.

31. Mitchell M, White L, Oh P, Alter D, Leahey T, Kwan M, Faulkner G. Uptake of an incentive-based mHealth app: process evaluation of the Carrot Rewards app. JMIR Mhealth Uhealth. 2017 May 30;5(5):e70. doi: 10.2196/mhealth.7323. https://mhealth.jmir.org/2017/5/e70/

32. Kowatsch T, Kramer J, Kehr F, Wahle F, Elser N, Fleisch E. Effects of charitable versus monetary incentives on the acceptance of and adherence to a pedometer-based health intervention: study protocol and baseline characteristics of a cluster-randomized controlled trial. JMIR Res Protoc. 2016 Sep 13;5(3):e181. doi: 10.2196/resprot.6089. https://www.researchprotocols.org/2016/3/e181/

33. Mitchell M, White L, Oh P, Alter D, Leahey T, Kwan M, Faulkner G. Uptake of an incentive-based mHealth app: process evaluation of the Carrot Rewards app. JMIR Mhealth Uhealth. 2017 May 30;5(5):e70. doi: 10.2196/mhealth.7323. https://mhealth.jmir.org/2017/5/e70/

34. Beal GM, Rogers EM, Bohlen JM. Validity of the concept of stages in the adoption process. Rural Sociol. 1957;22(2):166–8. https://scholar.google.com/scholar?cluster=8324822630205643742&hl=en&as_sdt=2005&sciodt=0,5.

35. Adams J, Giles EL, McColl E, Sniehotta FF. Carrots, sticks and health behaviours: a framework for documenting the complexity of financial incentive interventions to change health behaviours. Health Psychol Rev. 2014;8(3):286–95. doi: 10.1080/17437199.2013.848410.

36. Faulkner G, Dale LP, Lau E. Examining the use of loyalty point incentives to encourage health and fitness centre participation. Prev Med Rep. 2019 Jun;14:100831. doi: 10.1016/j.pmedr.2019.100831. https://linkinghub.elsevier.com/retrieve/pii/S2211-3355(19)30019-1.

37. Goyal S, Morita PP, Picton P, Seto E, Zbib A, Cafazzo JA. Uptake of a consumer-focused mHealth application for the assessment and prevention of heart disease: the <30 days study. JMIR Mhealth Uhealth. 2016 Mar 24;4(1):e32. doi: 10.2196/mhealth.4730. https://mhealth.jmir.org/2016/1/e32/

38. Payne HE, Lister C, West JH, Bernhardt JM. Behavioral functionality of mobile apps in health interventions: a systematic review of the literature. JMIR Mhealth Uhealth. 2015 Feb 26;3(1):e20. doi: 10.2196/mhealth.3335. https://mhealth.jmir.org/2015/1/e20/

39. Leahey TM, Subak LL, Fava J, Schembri M, Thomas G, Xu X, Krupel K, Kent K, Boguszewski K, Kumar R, Weinberg B, Wing R. Benefits of adding small financial incentives or optional group meetings to a web-based statewide obesity initiative. Obesity (Silver Spring). 2015 Jan;23(1):70–6. doi: 10.1002/oby.20937.

40. Badawy SM, Kuhns LM. Texting and mobile phone app interventions for improving adherence to preventive behavior in adolescents: a systematic review. JMIR Mhealth Uhealth. 2017 Apr 19;5(4):e50. doi: 10.2196/mhealth.6837. https://mhealth.jmir.org/2017/4/e50/

41. Badawy SM, Barrera L, Sinno MG, Kaviany S, O'Dwyer LC, Kuhns LM. Text messaging and mobile phone apps as interventions to improve adherence in adolescents with chronic health conditions: a systematic review. JMIR Mhealth Uhealth. 2017 May 15;5(5):e66. doi: 10.2196/mhealth.7798. https://mhealth.jmir.org/2017/5/e66/

42. Barte JC, Wendel-Vos GC. A systematic review of financial incentives for physical activity: the effects on physical activity and related outcomes. Behav Med. 2017;43(2):79–90. doi: 10.1080/08964289.2015.1074880.

43. Radley A, Ballard P, Eadie D, MacAskill S, Donnelly L, Tappin D. Give It Up For Baby: outcomes and factors influencing uptake of a pilot smoking cessation incentive scheme for pregnant women. BMC Public Health. 2013 Apr 15;13:343. doi: 10.1186/1471-2458-13-343. https://bmcpublichealth.biomedcentral.com/articles/10.1186/1471-2458-13-343.

44. Giles EL, Robalino S, McColl E, Sniehotta FF, Adams J. The effectiveness of financial incentives for health behaviour change: systematic review and meta-analysis. PLoS One. 2014;9(3):e90347. doi: 10.1371/journal.pone.0090347. http://dx.plos.org/10.1371/journal.pone.0090347.

45. Jeffery RW. Financial incentives and weight control. Prev Med. 2012 Nov;55 Suppl:S61–7. doi: 10.1016/j.ypmed.2011.12.024. http://europepmc.org/abstract/MED/22244800.

46. Carrera M, Royer H, Stehr M, Sydnor J. Can financial incentives help people trying to establish new habits? Experimental evidence with new gym members. J Health Econ. 2018 Mar;58:202–14. doi: 10.1016/j.jhealeco.2018.02.010. http://europepmc.org/abstract/MED/29550665.

47. Mitchell MS, Goodman JM, Alter DA, John LK, Oh PI, Pakosh MT, Faulkner GE. Financial incentives for exercise adherence in adults: systematic review and meta-analysis. Am J Prev Med. 2013 Nov;45(5):658–67. doi: 10.1016/j.amepre.2013.06.017.

48. Camerer CF, Loewenstein G. Advances in Behavioral Economics. Princeton, NJ: Princeton University Press; 2003. Behavioral economics: past, present, future.

49. Volpp KG, Asch DA, Galvin R, Loewenstein G. Redesigning employee health incentives--lessons from behavioral economics. N Engl J Med. 2011 Aug 4;365(5):388–90. doi: 10.1056/NEJMp1105966. http://europepmc.org/abstract/MED/21812669.

50. Andrade LF, Barry D, Litt MD, Petry NM. Maintaining high activity levels in sedentary adults with a reinforcement-thinning schedule. J Appl Behav Anal. 2014;47(3):523–36. doi: 10.1002/jaba.147. http://europepmc.org/abstract/MED/25041789.

51. Badawy SM, Thompson AA, Liem RI. Technology access and smartphone app preferences for medication adherence in adolescents and young adults with sickle cell disease. Pediatr Blood Cancer. 2016 May;63(5):848–52. doi: 10.1002/pbc.25905.

52. Perski O, Blandford A, Ubhi HK, West R, Michie S. Smokers' and drinkers' choice of smartphone applications and expectations of engagement: a think aloud and interview study. BMC Med Inform Decis Mak. 2017 Feb 28;17(1):25. doi: 10.1186/s12911-017-0422-8. https://bmcmedinformdecismak.biomedcentral.com/articles/10.1186/s12911-017-0422-8.

53. Iribarren SJ, Cato K, Falzon L, Stone PW. What is the economic evidence for mHealth? A systematic review of economic evaluations of mHealth solutions. PLoS One. 2017;12(2):e0170581. doi: 10.1371/journal.pone.0170581. http://dx.plos.org/10.1371/journal.pone.0170581.

54. Kramer J, Künzler F, Mishra V, Presset B, Kotz D, Smith S, Scholz U, Kowatsch T. Investigating intervention components and exploring states of receptivity for a smartphone app to promote physical activity: protocol of a microrandomized trial. JMIR Res Protoc. 2019 Jan 31;8(1):e11540. doi: 10.2196/11540. https://www.researchprotocols.org/2019/1/e11540/
