Abstract

Introduction

Web‐based cognitive tests have potential for standardized screening in neurodegenerative disorders. We examined the accuracy and consistency of cCOG, a computerized cognitive tool, in detecting mild cognitive impairment (MCI) and dementia.

Methods

Clinical data of 306 cognitively normal, 120 mild cognitive impairment (MCI), and 69 dementia subjects from three European cohorts were analyzed. A global cognitive score was defined from standard neuropsychological tests and compared to the corresponding estimated score from the cCOG tool, which contains seven subtasks. The consistency of cCOG was assessed by comparing measurements administered in clinical settings and in the home environment.

Results

cCOG produced accuracies (receiver operating characteristic‐area under the curve [ROC‐AUC]) between 0.71 and 0.84 in detecting MCI and 0.86 and 0.94 in detecting dementia when administered at the clinic and at home. The accuracy was comparable to the results of standard neuropsychological tests (AUC 0.69–0.77 MCI/0.91–0.92 dementia).

Discussion

cCOG provides a promising tool for detecting MCI and dementia with potential for a cost‐effective approach including home‐based cognitive assessments.

Keywords: Alzheimer's disease, clinical decision support, cognition, computerized cognitive test, dementia, memory, mild cognitive impairment, neuropsychology, web‐based cognitive test

1. BACKGROUND

Despite the great progress in diagnostic biomarkers for Alzheimer's disease (AD) and other types of dementia, only 20% to 50% of dementia cases are recognized and documented. 1 This indicates a need for simple and efficient tools as well as clinical procedures for timely detection of neurodegenerative disorders. Although no cure for major neurodegenerative disorders such as AD is available, early diagnosis, combined with adequate management, can affect cognition, delay institutionalization, and lead to socioeconomic benefits. 2 , 3 Early detection and treatment of patients with cognitive impairment is estimated to be cost effective even when taking the increased assessment costs into account. 4

In clinical practice, elderly persons with suspected cognitive impairment are typically first evaluated with simple cognitive tests, such as the Mini‐Mental State Examination (MMSE), 5 the Montreal Cognitive Assessment, 6 the Clock Drawing Test, 7 or the Consortium to Establish a Registry for Alzheimer's Disease (CERAD) battery. 8 Further examinations with comprehensive neuropsychological assessments are ordered according to the clinical symptoms and the cognitive screening results already obtained. Neuropsychological examinations are, however, time consuming and require a specialist psychologist. Web‐based tests may increase the availability of testing (in the clinic, and perhaps even at home) and help reduce costs at the same time. 9 , 10 In addition, their results can be integrated more easily into electronic patient data platforms. 11 Web‐based cognitive tests have shown promise in measuring cognition at the population level 12 and in detecting mild cognitive impairment (MCI) and dementia. 13 , 14 However, test performance can vary depending on the test device. 12 , 15 , 16 The test‐retest reliability of self‐administered cognitive tests and their correlation with traditional neuropsychological tests also vary. 12 , 14 , 17

Despite their benefits and potential, web‐based tests are clearly underused in clinical settings. In this study, we present cCOG, a web‐based, self‐administrable cognitive test tool designed for the early detection of neurodegenerative disorders. cCOG tasks were developed based on traditional cognitive tests to maintain internal validity and to help clinicians interpret the results. In the validation, we first studied the correlation with standard neuropsychological tests (representing the gold standard of cognition). We then evaluated the accuracy of the tool in detecting MCI and dementia and compared it with that of standard neuropsychological tests. Finally, we studied the internal consistency of cCOG, with a special interest in comparing measurements administered in clinical settings and in the home environment.

2. METHODS

2.1. Subjects

Three study cohorts including cCOG measurements were used:

1) The PredictND (Predict Neurodegenerative Disorders) cohort (PredictND) included patients with MMSE ≥ 25 and contained data from 323 subjects (cognitively normal, MCI, and dementia) from four European memory clinics. The data were acquired during 2015 to 2016. 18 , 19

2) The VPH‐DARE@IT (Virtual Physiological Human: Dementia Research Enabled by Information Technology) cohort (VPHDARE, www.vph-dare.eu) contained data from 80 subjects (cognitively normal, MCI, and dementia) from one memory clinic in Finland. The data were acquired during 2015 to 2016. 20

3) The Finnish Geriatric Intervention Study cohort (FINGER) 21 contained data from 92 subjects whose overall cognitive performance was at the mean level or slightly lower than expected for their age according to Finnish population norms, who had no diagnosis of MCI or dementia, but who were at higher risk of developing dementia. The data were acquired during 2013 to 2014.

All participants provided written informed consent for their clinical data to be used for research purposes. Demographic and clinical group characteristics of the cohorts are summarized in Table 1.

TABLE 1.

Characteristics of subjects (mean ± standard deviation) included in the three study cohorts PredictND, VPHDARE, and FINGER, and in the ADC reference cohort

| | PredictND CN | PredictND MCI | PredictND DEM | PredictND ‐ longitudinal CN | PredictND ‐ longitudinal MCI | PredictND ‐ longitudinal DEM |
|---|---|---|---|---|---|---|
| n | 195 | 83 | 45 | 94 | 31 | 9 |
| Female (%) | 66 | 37 | 47 | 66 | 42 | 33 |
| Age (years) | 64 ± 9 | 71 ± 7 | 71 ± 10 | 63 ± 8 | 70 ± 8 | 71 ± 12 |
| Education (years) | 14 ± 4 | 12 ± 4 | 13 ± 4 | 14 ± 3 | 13 ± 4 | 12 ± 5 |
| Neuropsychology | | | | | | |
| MMSE | 29.3 ± 1.0 | 27.9 ± 1.6 | 27.2 ± 1.9 | 29.4 ± 1.0 | 27.9 ± 1.6 | 27.6 ± 2.1 |
| Memory, learning ᵇ | 43 ± 10 | 37 ± 15 | 25 ± 16 | 43 ± 11 | 42 ± 18 | 26 ± 14 |
| Memory, recall ᵇ | 10 ± 3 | 6 ± 5 | 2 ± 4 | 9 ± 3 | 8 ± 5 | 3 ± 5 |
| TMT‐A (s) | 37 ± 16 | 47 ± 17 | 61 ± 49 | 34 ± 9 | 41 ± 12 | 71 ± 72 |
| TMT‐B (s) | 84 ± 46 | 131 ± 60 | 172 ± 82 | 73 ± 34 | 112 ± 55 | 158 ± 81 |
| Category fluency | 24 ± 7 | 20 ± 6 | 15 ± 5 | 25 ± 7 | 21 ± 6 | 15 ± 8 |

| | VPHDARE CN | VPHDARE MCI | VPHDARE DEM | FINGER CN | ADC CN | ADC DEM |
|---|---|---|---|---|---|---|
| n | 19 | 37 | 24 | 92 | 138 | 470 |
| Female (%) | 53 | 49 | 50 | 46 | 39 | 43 |
| Age (years) | 66 ± 6 | 70 ± 9 | 66 ± 8 | 70 ± 5 | 60 ± 8 | 66 ± 8 |
| Education (years) | 14 ± 4 | 11 ± 4 | 12 ± 4 | 10 ± 3 | 5 ± 1 ᵃ | 5 ± 1 ᵃ |
| Neuropsychology | | | | | | |
| MMSE | 28.8 ± 1.1 | 26.4 ± 2.4 | 23.9 ± 4.8 | 27.9 ± 1.9 | 28.4 ± 1.5 | 21.9 ± 4.8 |
| Memory, learning ᵇ | 42 ± 10 | 35 ± 13 | 24 ± 14 | 42 ± 12 | 44 ± 10 | 24 ± 10 |
| Memory, recall ᵇ | 9 ± 3 | 7 ± 4 | 4 ± 4 | 8 ± 4 | 10 ± 3 | 3 ± 3 |
| TMT‐A (s) | 37 ± 16 | 51 ± 26 | 68 ± 29 | 37 ± 16 | 37 ± 16 | 88 ± 64 |
| TMT‐B (s) | 84 ± 46 | 111 ± 53 | 144 ± 45 | 84 ± 46 | 84 ± 46 | 220 ± 112 |
| Category fluency | 23 ± 5 | 20 ± 6 | 15 ± 6 | 22 ± 6 | 23 ± 6 | 12 ± 6 |

“PredictND – longitudinal” is a subcohort for which cCOG was measured at all four time points.

Abbreviations: ADC, Amsterdam Dementia Cohort; cCOG, computerized cognitive test; CERAD, Consortium to Establish a Registry for Alzheimer's Disease; CN, cognitively normal; DEM, dementia; MCI, mild cognitive impairment; MMSE, Mini‐Mental State Examination; RAVLT, Rey Auditory Verbal Learning Test; TMT, Trail Making Test.

ᵃ Education level on the Verhage rating scale rather than in years.

ᵇ Converted from CERAD scores to RAVLT scores using z‐score comparison.

In addition, the Amsterdam Dementia Cohort, composed of data from memory clinic patients assessed between 2004 and 2014, 22 , 23 was used as a separate reference cohort for developing a composite cognitive score from standard neuropsychological tests (see below). Data from 138 cognitively normal individuals and 470 dementia patients were used.

2.2. Clinical assessment

The participants in all the cohorts received a clinical work‐up including medical history, physical assessment, traditional neuropsychological assessments, and laboratory tests. Subjects were diagnosed as cognitively normal when the cognitive complaints could not be confirmed by cognitive testing and criteria for MCI or dementia were not met. The Petersen criteria were used to define MCI. 24 , 25 Patients were diagnosed with dementia according to the criteria for the specific underlying neurodegenerative disorder. 26 , 27 , 28 , 29 , 30 , 31 , 32

2.3. Global cognitive score based on standard neuropsychological tests

A global cognitive score composed of several standard neuropsychological tests was developed to serve as a gold standard for the overall status of cognition. This score was developed to optimally separate cognitively normal cases from cases with cognitive impairment.

To construct the global cognitive score, we selected a subset of tests available in all the cohorts: MMSE 5 as a measure of global cognition; learning and delayed recall scores of the Rey Auditory Verbal Learning Test (RAVLT) 33 or the CERAD word list memory task 8 for episodic memory; Trail Making Test conditions A and B (TMT‐A, TMT‐B) for mental processing speed and executive function; 34 categorical (animals) verbal fluency for language and executive function; 35 and the digit span test (forward and backward) 36 for working memory, attention, and executive functioning. To bridge differences between cohorts, z‐scores were used for RAVLT and CERAD.
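As an illustration of how a z‐score bridge between the two word‐list tests could work, the sketch below maps a CERAD score onto the RAVLT scale by preserving its z‐score. It assumes a simple linear mapping through reference means and standard deviations; the `NormStats` values are hypothetical placeholders, not the reference statistics used in the study, which are not given here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NormStats:
    """Reference mean and standard deviation of one test score (hypothetical values)."""
    mean: float
    sd: float


def to_z(score: float, stats: NormStats) -> float:
    """Standardize a raw score against its reference distribution."""
    return (score - stats.mean) / stats.sd


def cerad_to_ravlt(cerad_score: float, cerad: NormStats, ravlt: NormStats) -> float:
    """Map a CERAD word-list score onto the RAVLT scale via equal z-scores.

    A subject z standard deviations from the CERAD reference mean receives the
    RAVLT score lying z standard deviations from the RAVLT reference mean.
    """
    return ravlt.mean + to_z(cerad_score, cerad) * ravlt.sd


# Hypothetical reference statistics, for illustration only.
CERAD_LEARNING = NormStats(mean=20.0, sd=4.0)
RAVLT_LEARNING = NormStats(mean=45.0, sd=10.0)

print(cerad_to_ravlt(24.0, CERAD_LEARNING, RAVLT_LEARNING))  # 55.0
```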

The independent Amsterdam Dementia Cohort was used to set the parameters of the global cognitive score, which was defined as the index obtained by feeding the measures above into the disease‐state index (DSI) classifier. 37 DSI is a supervised learning method that processes heterogeneous patient data to derive a numeric index value between zero and one denoting the disease status of a patient. In this study, a global cognitive score of zero means high similarity to subjects with dementia (worse cognitive performance), while a value of one means high similarity to cognitively normal subjects (better cognitive performance). Finally, the global cognitive score was computed for all subjects of the PredictND, VPHDARE, and FINGER cohorts. The supporting information appendix gives more details about the method.
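The DSI itself is described in reference 37 and the supporting information; the following is only a simplified, hypothetical stand‐in showing the general shape of such an index. Each feature receives a fitness value between 0 (dementia‐like) and 1 (normal‐like) computed from the two reference distributions, and the index is a relevance‐weighted mean of these values. The fitness formula, the use of the Youden index as relevance, and all function names are assumptions for illustration, not the published DSI.

```python
from typing import List, Sequence


def fitness(x: float, normal: Sequence[float], dementia: Sequence[float]) -> float:
    """Similarity of one feature value x to the cognitively normal group, in [0, 1].

    Assumes higher values mean better cognition (negate times such as TMT first).
    a: share of dementia reference values >= x (dementia cases looking at least as good)
    b: share of normal reference values <= x (normal cases looking at most as good)
    """
    a = sum(v >= x for v in dementia) / len(dementia)
    b = sum(v <= x for v in normal) / len(normal)
    if a + b == 0.0:  # x lies in a gap between the two reference groups
        return 0.5
    return b / (a + b)


def relevance(normal: Sequence[float], dementia: Sequence[float]) -> float:
    """Hypothetical feature weight: best (sensitivity + specificity - 1) over cut-offs."""
    best = 0.0
    for cut in sorted(set(normal) | set(dementia)):
        sens = sum(v < cut for v in dementia) / len(dementia)  # dementia below the cut
        spec = sum(v >= cut for v in normal) / len(normal)     # normal at or above it
        best = max(best, sens + spec - 1.0)
    return best


def index_value(subject: Sequence[float],
                normal_ref: List[Sequence[float]],
                dementia_ref: List[Sequence[float]]) -> float:
    """Relevance-weighted mean of fitness values (0 = dementia-like, 1 = normal-like)."""
    num = den = 0.0
    for x, nor, dem in zip(subject, normal_ref, dementia_ref):
        w = relevance(nor, dem)
        num += w * fitness(x, nor, dem)
        den += w
    return num / den if den > 0.0 else 0.5  # no informative feature: undecided
```

Weighting by relevance lets uninformative features (whose two reference distributions overlap completely) contribute almost nothing to the index.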

RESEARCH IN CONTEXT

Systematic review: We reviewed the scientific literature regarding the early detection of neurodegenerative disorders using computerized cognitive screening tests.

Interpretation: Results of this study indicate that our newly developed web‐based cognitive test tool, cCOG, is comparable to traditional paper‐and‐pencil neuropsychological tests in detecting mild cognitive impairment (MCI) and dementia disorders. The parameters of the new test tool correlated strongly with traditional neuropsychological tests. In addition, consistency between self‐administered home assessments and superintended assessments conducted in the clinic was high, especially for the total cCOG score.

Future directions: This article proposes that the new web‐based cognitive test tool is accurate in discriminating MCI and dementia from normal cognition in older adults. More research is needed to confirm its properties in detecting cognitive change over time. Future studies on the use of cCOG in a stepwise diagnostic approach would also be beneficial.

2.4. Computerized cognitive test tool (cCOG)

In PredictND and VPHDARE, participants completed the computerized test tool (cCOG) as part of these studies' aim of developing computer tools for dementia diagnostics. In FINGER, cCOG was administered as an exploratory measure after the completion of an interventional study assessing the efficacy of a 2‐year lifestyle intervention on cognition. In all three studies, participants performed cCOG superintended at baseline at the clinical sites. In PredictND, participants were asked to complete the test battery four times to evaluate performance in the clinic and at home: at baseline and at 12 months superintended at the memory clinics, and at 6 and 18 months independently at home (for which an online reminder including a direct link to cCOG was sent twice).

The computerized test battery is based on three classical cognitive tasks: a modified word list test, 8 , 33 a simple reaction task, 38 and the Trail Making Test. 34 It is divided into seven tasks and takes approximately 20 minutes to complete. Participants used either a keyboard and mouse or a touchscreen device. The test battery is currently available in five languages: English, Finnish, Danish, Dutch, and Italian.

Task 1 (Episodic memory test: Learning task) is a classical memory test in which the user is asked to remember 12 words shown one by one. Memory encoding is supported by a simultaneously presented image of the target word; for example, the word “CAR” is presented with a picture of a car. After the word/picture combinations have been presented, the user is asked to type as many words as she/he can remember. The same list is shown three times, each time followed by immediate recall, and the order of the words varies between rounds.

Tasks 2–3 (Reaction tests) measure attention and reaction speed. The stimuli are letters shown on the screen that indicate the direction (right or left) in which the user should respond by pressing the corresponding arrow button as quickly as possible. In Task 2, the user should press the right arrow button “→” whenever “R” is displayed. In Task 3, both “R” and “L” are displayed, and the user should press the right arrow “→” for “R” and the left arrow “←” for “L.”

Tasks 4–5 (modified Trail Making Tests) measure visuomotor speed, attention, and executive function. In Task 4, the numbers 1 to 24, each inside a square, are shown at random locations on the screen, and the user is asked to select them in ascending order as quickly as possible. In Task 5, the user must again click the numbers in ascending order; however, this time each number from 1 to 24 is presented both in a circle and in a square. Altogether 48 stimuli are shown on the screen, and the user must alternate between circles and squares while proceeding in ascending order (1 inside a circle, 2 inside a square, 3 inside a circle, etc.).
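The Task 5 rule can be made concrete with a small sketch that generates the expected click order and measures the completion time used as the task score. The click log format `(time, number, shape)` and the rule that wrong clicks simply do not advance the sequence are assumptions; the text does not describe how errors are handled.

```python
from typing import List, Optional, Tuple

Target = Tuple[int, str]          # (number, shape)
Click = Tuple[float, int, str]    # (seconds since task start, number, shape); hypothetical log format


def task5_sequence(n: int = 24) -> List[Target]:
    """Expected order: odd numbers inside circles, even numbers inside squares."""
    if n < 1:
        raise ValueError("n must be at least 1")
    return [(k, "circle" if k % 2 == 1 else "square") for k in range(1, n + 1)]


def completion_time(clicks: List[Click], n: int = 24) -> Optional[float]:
    """Time at which the last target is correctly selected, or None if unfinished.

    Assumption: a wrong click neither advances the sequence nor adds a penalty.
    """
    expected = task5_sequence(n)
    pos = 0
    for t, number, shape in clicks:
        if (number, shape) == expected[pos]:
            pos += 1
            if pos == len(expected):
                return t
    return None
```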

In Task 6 (Episodic memory test: Recall task), the user is asked to recall and type the words from Task 1.

In Task 7 (Episodic memory test: Recognition task), the user is asked to recognize the word/picture combinations shown previously in Task 1. In total, 24 word/picture images are shown, and for each the user indicates whether the word was shown in Task 1.

cCOG tasks were quantified as follows:

Task 1: the total number of correct words recalled in the immediate trials,

Task 2 & 3: the average reaction time calculated for correct clicks,

Task 4 & 5: the duration for selecting numbers in ascending order from 1 to 24,

Task 6: the number of correct words in delayed recall, and

Task 7: the duration of time from the beginning until the end of the recognition task.
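A minimal sketch of the feature definitions above, assuming hypothetical in‐memory task logs (typed word lists, `(reaction time, correct)` pairs, and start/end timestamps). Case‐insensitive matching and counting each target word at most once per trial are assumptions, not documented cCOG scoring rules.

```python
from statistics import mean
from typing import Iterable, List, Optional, Tuple


def count_correct_words(typed: Iterable[str], targets: Iterable[str]) -> int:
    """Tasks 1 and 6: distinct target words typed in one recall (case-insensitive)."""
    target_set = {w.strip().lower() for w in targets}
    return len({w.strip().lower() for w in typed} & target_set)


def task1_score(trials: List[List[str]], targets: List[str]) -> int:
    """Task 1: total correct words over the immediate-recall trials."""
    return sum(count_correct_words(trial, targets) for trial in trials)


def mean_correct_rt(responses: List[Tuple[float, bool]]) -> Optional[float]:
    """Tasks 2 and 3: mean reaction time (s) over correct responses only."""
    correct = [rt for rt, ok in responses if ok]
    return mean(correct) if correct else None


def duration(start_s: float, end_s: float) -> float:
    """Tasks 4, 5, and 7: elapsed time (s) from task start to task end."""
    return end_s - start_s
```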

Thereafter, a linear regression model for estimating the global cognitive score (dependent variable) from the seven features above was developed using the PredictND data. This estimated score, the cCOG score, was then computed for all subjects of the PredictND, VPHDARE, and FINGER cohorts. Finally, MMSE, global cognitive score, and cCOG scores were normalized for age, sex, and years of education.
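The regression step can be sketched with ordinary least squares in NumPy. The paper does not state how the age, sex, and education normalization was performed; `adjust_for_demographics` shows one common approach (removing linear covariate effects estimated in a reference group) and should be read as an assumption, not the study's method.

```python
import numpy as np


def fit_linear_model(X: np.ndarray, y: np.ndarray) -> np.ndarray:
    """Ordinary least squares with intercept; returns [b0, b1, ..., bk]."""
    design = np.column_stack([np.ones(len(X)), X])
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    return coef


def predict(coef: np.ndarray, X: np.ndarray) -> np.ndarray:
    """Estimated score, e.g. a cCOG-like score from the seven task features."""
    return coef[0] + X @ coef[1:]


def adjust_for_demographics(score: np.ndarray, covariates: np.ndarray,
                            ref_mask: np.ndarray) -> np.ndarray:
    """Remove linear covariate effects (e.g. age, sex, years of education).

    Assumption: effects are estimated in a reference group (ref_mask), and every
    score is shifted to that group's mean covariate values.
    """
    coef = fit_linear_model(covariates[ref_mask], score[ref_mask])
    centered = covariates - covariates[ref_mask].mean(axis=0)
    return score - centered @ coef[1:]
```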

2.5. Data analysis

The Spearman correlation coefficient was computed between the cognitive features of cCOG and the results of the clinical cognitive tests for the PredictND, VPHDARE, and FINGER data. Correlations were rated by absolute value as weak (0–0.39), moderate (0.40–0.59), or strong (0.60–1.0). 39
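For reference, Spearman's coefficient is the Pearson correlation of rank‐transformed data. The sketch below computes it with average ranks for ties and applies the rating bands above to the absolute value; treating values between 0.39 and 0.40 as weak is an assumption, since the bands are given to two decimals.

```python
from math import sqrt
from typing import List, Sequence


def average_ranks(values: Sequence[float]) -> List[float]:
    """1-based ranks; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with values[order[i]].
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        i = j + 1
    return ranks


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def spearman(x: Sequence[float], y: Sequence[float]) -> float:
    """Spearman's rho as the Pearson correlation of average ranks."""
    return pearson(average_ranks(x), average_ranks(y))


def rate(r: float) -> str:
    """Strength label from |r|: weak < 0.40 <= moderate < 0.60 <= strong."""
    a = abs(r)
    if a < 0.40:
        return "weak"
    if a < 0.60:
        return "moderate"
    return "strong"
```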

The accuracy of the global scores (MMSE, global cognitive score, and cCOG) and of the individual cCOG subtasks in classifying patients into diagnostic groups was studied. Two classifiers were developed: one for detecting MCI patients and one for detecting dementia patients. First, the PredictND data were divided randomly into a training set (75% of cases) and a test set (25% of cases). Then, the median value of the score (MMSE, global cognitive score, or cCOG) was computed for both diagnostic groups in the training set, and the cut‐off value was chosen as the midpoint between the two medians. Finally, the test set was classified using this cut‐off value, and a set of statistical performance measures was computed: area under the receiver operating characteristic curve (AUC), sensitivity, specificity, and balanced accuracy (BACC, defined as the average of sensitivity and specificity). The whole process was repeated 1000 times, and the performance measures were averaged. In addition to this cross‐validation within PredictND, classification performance was evaluated in the independent VPHDARE cohort. Because the FINGER cohort does not contain MCI or dementia patients, it was not used in this part of the study.
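The repeated hold‐out procedure can be sketched as follows. It assumes that lower scores indicate worse cognition (as for MMSE, the global cognitive score, and cCOG), that the 75/25 split is stratified by diagnostic group, and that AUC is computed from the continuous test‐set scores; these details and all function names are assumptions rather than the study's MATLAB code.

```python
import random
from statistics import median
from typing import Dict, List, Sequence, Tuple


def auc(normal: Sequence[float], impaired: Sequence[float]) -> float:
    """Mann-Whitney AUC: P(impaired score < normal score), ties counted as 0.5."""
    wins = 0.0
    for s_imp in impaired:
        for s_nor in normal:
            if s_imp < s_nor:
                wins += 1.0
            elif s_imp == s_nor:
                wins += 0.5
    return wins / (len(impaired) * len(normal))


def _split(values: Sequence[float], rng: random.Random,
           train_frac: float) -> Tuple[List[float], List[float]]:
    shuffled = list(values)
    rng.shuffle(shuffled)
    k = round(train_frac * len(shuffled))
    return shuffled[:k], shuffled[k:]


def evaluate_once(normal: Sequence[float], impaired: Sequence[float],
                  rng: random.Random, train_frac: float = 0.75) -> Dict[str, float]:
    # Assumption: the split is done separately within each group (stratified).
    nor_train, nor_test = _split(normal, rng, train_frac)
    imp_train, imp_test = _split(impaired, rng, train_frac)
    # Cut-off: midpoint between the training-set medians of the two groups.
    cut = (median(nor_train) + median(imp_train)) / 2
    # Scores below the cut-off are classified as impaired.
    sens = sum(s < cut for s in imp_test) / len(imp_test)
    spec = sum(s >= cut for s in nor_test) / len(nor_test)
    return {"AUC": auc(nor_test, imp_test), "sensitivity": sens,
            "specificity": spec, "BACC": (sens + spec) / 2}


def repeated_evaluation(normal: Sequence[float], impaired: Sequence[float],
                        repeats: int = 1000, seed: int = 0) -> Dict[str, float]:
    """Average each performance measure over `repeats` random splits."""
    rng = random.Random(seed)
    runs = [evaluate_once(normal, impaired, rng) for _ in range(repeats)]
    return {name: sum(run[name] for run in runs) / repeats for name in runs[0]}
```

The same cut‐off logic applies unchanged to an external cohort: the midpoint computed from one dataset is simply applied to the other dataset's scores.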

Finally, the consistency of cCOG measurements at the clinic and at home in PredictND was studied by calculating Pearson correlation coefficients between time points and by comparing classification performance across the four time points.
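Not every participant completed every visit, so a pairwise correlation between two time points can only use subjects measured at both, which would also explain why the sample sizes differ between rows of Table 4. A minimal sketch, with hypothetical visit labels and data layout:

```python
from math import sqrt
from typing import Dict, Sequence, Tuple


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def paired_correlation(scores: Dict[str, Dict[str, float]],
                       visit_a: str, visit_b: str) -> Tuple[float, int]:
    """Pearson r between two visits using only subjects measured at both.

    scores maps a subject id to {visit label: cCOG score}; labels such as
    "M0" or "M6" are hypothetical keys. Returns (r, number of subjects used).
    """
    pairs = [(v[visit_a], v[visit_b]) for v in scores.values()
             if visit_a in v and visit_b in v]
    if len(pairs) < 2:
        raise ValueError("need at least two subjects measured at both visits")
    xs = [a for a, _ in pairs]
    ys = [b for _, b in pairs]
    return pearson(xs, ys), len(pairs)
```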

MATLAB R2017a (The MathWorks Inc., Natick, Massachusetts, USA) was used for all data analyses.

3. RESULTS

3.1. Correlation with standard neuropsychological tests

Table 2 presents the correlation coefficients of MMSE, global cognitive score, cCOG, and the individual cCOG tasks, with results from standard neuropsychological tests. The correlation coefficients between global cognitive score and cCOG for PredictND, VPHDARE, and FINGER cohorts were 0.78, 0.81, and 0.63, respectively. When correlations between individual tasks and single cognitive tests were studied, the highest correlations were found between episodic memory learning (Task 1) and RAVLT/CERAD learning, r = 0.44–0.64, episodic memory recall (Task 6) and RAVLT/CERAD recall, r = 0.47–0.54, and modified trail making (B) (Task 5) and TMT‐B, r = 0.62–0.80.

TABLE 2.

Spearman correlations coefficients between cCOG tasks and standard neuropsychological tests

| Measure | Cohort | MMSE | Global cognitive score | RAVLT/CERAD learning | RAVLT/CERAD recall | Fluency (animals) | TMT‐A | TMT‐B | Digit span forward | Digit span backward |
|---|---|---|---|---|---|---|---|---|---|---|
| MMSE | PDN | – | 0.61 | 0.42 | 0.46 | 0.40 | −0.31 | −0.40 | 0.22 | 0.19 |
| | VPH | – | 0.82 | 0.67 | 0.58 | 0.61 | −0.49 | −0.64 | 0.41 | 0.55 |
| | FNG | – | 0.56 | 0.26 | 0.28 | 0.35 | −0.07 | −0.37 | 0.18 | 0.20 |
| Global cognitive score | PDN | 0.61 | – | 0.79 | 0.78 | 0.70 | −0.61 | −0.73 | 0.39 | 0.51 |
| | VPH | 0.82 | – | 0.86 | 0.77 | 0.85 | −0.74 | −0.85 | 0.47 | 0.67 |
| | FNG | 0.56 | – | 0.68 | 0.67 | 0.65 | −0.52 | −0.71 | 0.46 | 0.56 |
| cCOG | PDN | 0.54 | 0.78 | 0.51 | 0.50 | 0.59 | −0.61 | −0.71 | 0.27 | 0.36 |
| | VPH | 0.68 | 0.81 | 0.64 | 0.51 | 0.64 | −0.65 | −0.78 | 0.38 | 0.45 |
| | FNG | 0.17 | 0.63 | 0.39 | 0.46 | 0.50 | −0.37 | −0.53 | 0.38 | 0.32 |
| Task 1: Episodic memory learning | PDN | 0.52 | 0.71 | 0.52 | 0.50 | 0.55 | −0.47 | −0.58 | 0.21 | 0.30 |
| | VPH | 0.56 | 0.67 | 0.64 | 0.53 | 0.55 | −0.52 | −0.57 | 0.25 | 0.34 |
| | FNG | 0.12 | 0.54 | 0.44 | 0.49 | 0.39 | −0.20 | −0.40 | 0.46 | 0.31 |
| Task 2: Simple reaction | PDN | −0.34 | −0.36 | −0.23 | −0.22 | −0.40 | 0.30 | 0.33 | −0.05 | −0.08 |
| | VPH | −0.48 | −0.62 | −0.52 | −0.42 | −0.60 | 0.44 | 0.63 | −0.38 | −0.45 |
| | FNG | −0.01 | −0.42 | −0.24 | −0.37 | −0.26 | 0.25 | 0.30 | −0.19 | −0.16 |
| Task 3: Choice reaction | PDN | −0.29 | −0.42 | −0.33 | −0.29 | −0.37 | 0.33 | 0.36 | −0.12 | −0.20 |
| | VPH | −0.43 | −0.56 | −0.39 | −0.26 | −0.54 | 0.47 | 0.57 | −0.34 | −0.45 |
| | FNG | −0.11 | −0.11 | −0.01 | −0.13 | −0.11 | 0.14 | 0.16 | 0.13 | 0.13 |
| Task 4: Modified Trail Making (A) | PDN | −0.27 | −0.49 | −0.17 | −0.18 | −0.35 | 0.60 | 0.57 | −0.24 | −0.31 |
| | VPH | −0.44 | −0.58 | −0.38 | −0.30 | −0.46 | 0.55 | 0.66 | −0.44 | −0.49 |
| | FNG | −0.28 | −0.55 | −0.31 | −0.28 | −0.39 | 0.31 | 0.55 | −0.31 | −0.31 |
| Task 5: Modified Trail Making (B) | PDN | −0.32 | −0.60 | −0.30 | −0.29 | −0.42 | 0.65 | 0.70 | −0.28 | −0.37 |
| | VPH | −0.65 | −0.74 | −0.51 | −0.43 | −0.57 | 0.58 | 0.80 | −0.41 | −0.51 |
| | FNG | −0.20 | −0.58 | −0.25 | −0.28 | −0.46 | 0.44 | 0.62 | −0.21 | −0.28 |
| Task 6: Episodic memory recall | PDN | 0.48 | 0.66 | 0.49 | 0.54 | 0.49 | −0.42 | −0.50 | 0.17 | 0.26 |
| | VPH | 0.53 | 0.59 | 0.52 | 0.53 | 0.48 | −0.43 | −0.51 | 0.27 | 0.22 |
| | FNG | 0.08 | 0.47 | 0.38 | 0.47 | 0.35 | −0.21 | −0.31 | 0.29 | 0.23 |
| Task 7: Episodic memory recognition | PDN | −0.40 | −0.60 | −0.35 | −0.35 | −0.43 | 0.52 | 0.59 | −0.23 | −0.28 |
| | VPH | −0.67 | −0.75 | −0.55 | −0.45 | −0.60 | 0.63 | 0.75 | −0.38 | −0.49 |
| | FNG | −0.15 | −0.39 | −0.12 | −0.19 | −0.42 | 0.30 | 0.40 | −0.32 | −0.26 |

Cohorts: PDN, PredictND (N = 328); VPH, VPH‐DARE@IT (N = 80); FNG, FINGER (N = 92).

Abbreviations: cCOG, computerized cognitive test; CERAD, Consortium to Establish a Registry for Alzheimer's Disease; CN, cognitively normal; DEM, dementia; MCI, mild cognitive impairment; MMSE, Mini‐Mental State Examination; RAVLT, Rey Auditory Verbal Learning Test; TMT, Trail Making Test.

Notes: Color scaling dependent on absolute values of correlation: no color for very weak or weak correlation (0–0.39), light red for moderate correlation (0.40–0.59), red for strong or very strong correlation (0.60–1.00).

Correlation coefficients between the global scores and age were –0.15 for MMSE, –0.16 for global cognitive score, and –0.26 for cCOG, and between the composite scores and education years 0.09 for MMSE, 0.12 for global cognitive score, and 0.17 for cCOG.

3.2. Classification accuracy in diagnostics

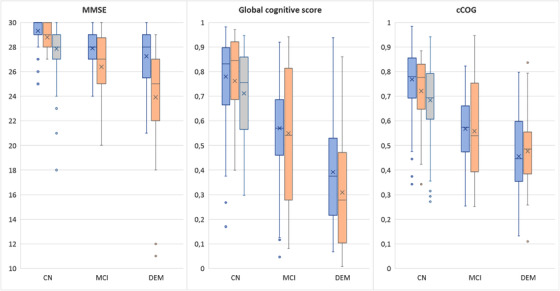

Figure 1 shows the distributions of MMSE, global cognitive score, and cCOG for the different diagnostic groups in all three study cohorts. Table 3 presents the classification performance in detecting MCI and dementia patients using MMSE, global cognitive score, and cCOG in PredictND. The results indicate that classification accuracy is comparable between the global cognitive score and cCOG. Table A.1 in the supporting information appendix also shows the classification performance of the individual cCOG tasks. The highest values are observed for the memory tasks (learning and recall) in detecting both MCI and dementia, whereas the reaction time tasks clearly have the lowest values.

FIGURE 1.

Distributions of Mini‐Mental State Examination, global cognitive score, and computerized cognitive tool (cCOG) global score shown for different diagnostic groups of the PredictND cohort (blue), VPHDARE cohort (orange), and FINGER cohort (gray) using boxplots. For each boxplot, the line and cross indicate the median and mean values, respectively, and the bottom and top edges of the box indicate the 25th and 75th percentiles, respectively. The whiskers extend to the most extreme data points not considered outliers, and the outliers are plotted individually.

TABLE 3.

Classification performance in the PredictND cohort (mean; 95% confidence interval)

| PredictND | MMSE (CN vs MCI) | Global cognitive score (CN vs MCI) | cCOG (CN vs MCI) | MMSE (CN vs DEM) | Global cognitive score (CN vs DEM) | cCOG (CN vs DEM) |
|---|---|---|---|---|---|---|
| AUC | 0.75 (0.63–0.86) | 0.77 (0.66–0.88) | 0.84 (0.75–0.92) | 0.84 (0.71–0.94) | 0.91 (0.77–0.99) | 0.92 (0.83–0.98) |
| BACC | 0.68 (0.58–0.78) | 0.71 (0.61–0.80) | 0.77 (0.67–0.86) | 0.78 (0.66–0.89) | 0.88 (0.76–0.96) | 0.83 (0.71–0.93) |
| Sensitivity | 0.66 (0.45–0.85) | 0.71 (0.50–0.90) | 0.77 (0.57–0.95) | 0.72 (0.45–0.92) | 0.86 (0.64–1.00) | 0.75 (0.50–1.00) |
| Specificity | 0.71 (0.57–0.84) | 0.71 (0.58–0.84) | 0.77 (0.67–0.88) | 0.83 (0.71–0.94) | 0.89 (0.81–0.96) | 0.91 (0.83–0.98) |

Group sizes: CN, N = 195; MCI, N = 83; DEM, N = 45.

Abbreviations: AUC, area under the curve; BACC, balanced accuracy; cCOG, computerized cognitive test; CN, cognitively normal; DEM, dementia; MCI, mild cognitive impairment; MMSE, Mini‐Mental State Examination.

The cut‐off values defined from the PredictND data were 28.3 (MMSE), 0.60 (global cognitive score), and 0.60 (cCOG) for detecting MCI patients, and 28.2 (MMSE), 0.49 (global cognitive score), and 0.52 (cCOG) for detecting dementia patients. When these cutoffs were applied to the VPHDARE data, the following balanced accuracies were obtained: 0.63 (MMSE), 0.71 (global cognitive score), and 0.67 (cCOG) in detecting MCI patients, and 0.71 (MMSE), 0.79 (global cognitive score), and 0.78 (cCOG) in detecting dementia patients.

3.3. Consistency of cCOG at clinic and at home

Consistency of the cCOG results between clinic and home‐based assessments was analyzed using the PredictND data. Of the 323 participants, 25 performed cCOG only at baseline, 94 performed it twice, and 69 performed it three times. Only 134 participants (94 cognitively normal, 31 MCI, and 9 dementia) performed cCOG at all four time points. Of these time points, the baseline and 12‐month visits were superintended at memory clinics, and the 6‐ and 18‐month assessments were performed at home on participants’ own computers. Assistance (mostly with typing) was reported in 21% of all testing sessions: in 31% of sessions at the first clinical visit and in 17% of sessions after the first visit (both at home and at the clinic). Furthermore, 79% of testing sessions in the clinic were done using a touchscreen, versus only 14% at home.

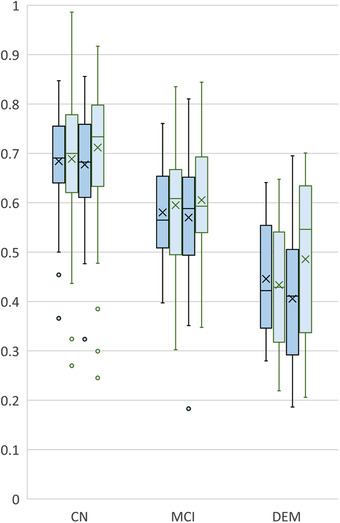

Figure 2 presents the distribution of cCOG results for the different diagnostic groups at each measurement point. AUC values were 0.79 (month 0), 0.72 (month 6), 0.76 (month 12), and 0.76 (month 18) in detecting MCI patients, and 0.94 (month 0), 0.93 (month 6), 0.91 (month 12), and 0.87 (month 18) in detecting dementia, indicating reasonably consistent performance between clinic and home measurements. By comparison, AUC was 0.69 in detecting MCI and 0.91 in detecting dementia when using the global score from standard neuropsychological tests at baseline.

FIGURE 2.

Computerized cognitive tool (cCOG) retest distributions in follow‐up setting. Distributions of cCOG for the cognitively normal (CN), mild cognitive impairment (MCI), and dementia (DEM) groups at baseline (first dark blue, clinic), month 6 (first light blue, home), month 12 (second dark blue, clinic), and month 18 (second light blue, home). The distributions have been computed only from the subjects having all four time points available (n = 136)

Table 4 presents the Pearson correlation coefficients for test‐retest consistency between pairs of cCOG measurements made at the clinic, at home, or one at the clinic and one at home.

TABLE 4.

Pearson correlation coefficients between different time points for the cCOG tasks and the global score at clinic, at home, and between clinic and home

| Time points | Task 1: Episodic memory learning | Task 2: Simple reaction | Task 3: Choice reaction | Task 4: Modified Trail Making (A) | Task 5: Modified Trail Making (B) | Task 6: Episodic memory recall | Task 7: Episodic memory recognition | cCOG |
|---|---|---|---|---|---|---|---|---|
| Clinic (N = 288) M0‐M12 | 0.75 | 0.48 | 0.42 | 0.69 | 0.69 | 0.72 | 0.64 | 0.82 |
| Home (N = 134) M6‐M18 | 0.69 | 0.48 | 0.54 | 0.63 | 0.44 | 0.59 | 0.78 | 0.77 |
| Clinic‐Home (N = 186) M0‐M6 | 0.54 | 0.42 | 0.24 | 0.63 | 0.63 | 0.57 | 0.59 | 0.67 |
| Clinic‐Home (N = 177) M6‐M12 | 0.64 | 0.34 | 0.35 | 0.62 | 0.62 | 0.67 | 0.55 | 0.72 |
| Clinic‐Home (N = 160) M12‐M18 | 0.69 | 0.34 | 0.48 | 0.64 | 0.61 | 0.65 | 0.66 | 0.74 |

Abbreviations: cCOG, computerized cognitive test; M0, baseline visit at memory clinic; M12, 12 months visit at memory clinic; M18, 18 months visit at home; M6, 6 months visit at home.

4. DISCUSSION

This study validated a self‐administrable web‐based cognitive test tool for the early detection of neurodegenerative disorders. The tasks were designed to resemble standard neuropsychological tests, which makes interpretation easier for clinicians. Classification accuracy was high in detecting both MCI and dementia, and comparable to that of the global cognitive score derived from standard neuropsychological tests. Furthermore, accuracy was relatively consistent over time and between testing at home and in the clinic.

A recent systematic review reported accuracies for 11 computerized tools in detecting either MCI or early dementia based on 14 studies. 40 The performance was reported for the overall output of the tool, for the subtasks of the tool, or both. The median AUC was 0.85 and the median balanced accuracy was 0.77 in detecting MCI. The corresponding values in detecting early dementia were 0.82 for AUC and 0.85 for balanced accuracy. These results are comparable to the performance reported in this work. The time needed for testing is also an important factor for feasibility. Testing times for those 11 tools varied between 10 and 45 minutes, which is comparable to the approximately 20 minutes needed for cCOG. Despite these findings, very few automated computerized tools are used in clinical practice to date. Our study adds to this work by developing a web‐based cognitive test tool whose tests are easy to interpret and use in clinical practice. Furthermore, the test showed consistent performance when used at home or in the clinic. In addition, computerized test batteries can be beneficial in emergency situations (such as the current COVID‐19 pandemic) in which remote assessments are needed.

Several factors can affect test accuracy. First, it is well known that diagnostic accuracy improves at later stages of neurodegenerative diseases. MMSE scores of 20 to 24 are considered to suggest mild dementia. In PredictND, the average MMSE score of dementia patients was 27.2, indicating that these patients received their diagnosis at a very early phase. In PredictND, cCOG was administered only to memory clinic patients with MMSE ≥ 25, which explains these near‐normal MMSE values. The accuracy obtained in this cohort is thus fairly high, given that all the dementia cases were very mild, which makes diagnosis more challenging. Interestingly, the cognitively normal at‐risk subjects in FINGER had the same average MMSE score (27.9) as the MCI patients in PredictND. This may reflect differences between clinical populations, but potentially also differences in how diagnostic criteria are applied. Second, the VPHDARE cohort was relatively small, with only 19 cognitively normal subjects. The impact of a single subject on accuracy is therefore considerable, and random effects may explain differences. For these reasons, the reported accuracy values should be considered only indicative.

cCOG had high correlations with the global cognitive score from standard neuropsychological tests in the three cohorts studied, r = 0.63–0.81. When correlations between individual cCOG tasks and single cognitive tests were studied, the highest correlations were found for the memory and executive functioning domains. For comparison, Mielke et al. 12 reported correlations between the CogState computerized tests and standard neuropsychological tests in a non‐demented elderly cohort including both cognitively normal and MCI subjects. The correlations were r = 0.13–0.34 for delayed recall and r = 0.24–0.47 for TMT‐B. In some studies, correlations between computerized tests and paper‐and‐pencil tests have been very weak (r = 0.09–0.26 for immediate recall, r = 0.09–0.23 for delayed recall, and r = 0.02–0.28 for TMT‐B), 41 whereas in other studies computerized cognitive batteries have yielded moderate to high correlations (r = 0.47–0.71) with traditional tests, also in the healthy population. 14 In general, correlations between cCOG and traditional neuropsychological tests were good compared with previously developed web‐based test batteries. 12 , 16 , 42 , 43 cCOG memory subscore correlations with traditional memory tests were also comparable to those reported recently among MCI and healthy elderly subjects. 17

Consistency over time was studied using data from four time points, two measured at the clinic and two at home. cCOG showed very strong correlations both in the clinic (r = 0.82) and at home (r = 0.77). For comparison, Hammers et al. 44 studied test‐retest reliability of the CogState test battery and reported correlations for the different tasks of r = 0.23–0.79 in healthy controls, 0.33–0.75 in MCI subjects, and 0.59–0.80 in AD. Cacciamani et al. 45 reported test‐retest reliability of the CANTAB (Cambridge Neuropsychological Test Automated Battery) in MCI subjects over three time points. The Paired Associates Learning test (total errors) provided the highest correlation over the three measurements, r = 0.74–0.85. In Goncalves et al., 46 the same test gave a correlation of r = 0.68 in a cohort of older people without neuropsychiatric diagnosis. They reported the highest test‐retest performance for a reaction time test (RTI five‐choice movement time), r = 0.86, whereas reaction time tests produced variable results (r = 0.03–0.82) in Cacciamani et al. 45 In cCOG, correlations for the reaction time tests were only r = 0.42–0.54. In Maljkovic et al., 47 CANTAB was administered at home, and the highest test‐retest reliability in a cohort of healthy controls, MCI, and dementia patients was obtained for memory tests (intraclass correlation > 0.71).
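The paragraph above mixes two reliability statistics: Pearson r, which the cCOG consistency results report, and the intraclass correlation reported by Maljkovic et al. The sketch below is illustrative and is not the authors' code. It implements both for two sessions. The ICC is the two‐way random‐effects, absolute‐agreement, single‐measure form (Shrout and Fleiss ICC(2,1)). The function names and scores are hypothetical. The example shows that a uniform shift between sessions, such as a practice effect, leaves Pearson r at 1 but lowers the ICC.

```python
import math


def pearson(x, y):
    """Pearson correlation between two equally long score lists."""
    n = len(x)
    mx, my = math.fsum(x) / n, math.fsum(y) / n
    sxy = math.fsum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = math.fsum((a - mx) ** 2 for a in x)
    syy = math.fsum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def icc_a1(x, y):
    """ICC(2,1): two-way random effects, absolute agreement, single measure."""
    data = list(zip(x, y))
    n, k = len(data), 2
    grand = math.fsum(list(x) + list(y)) / (n * k)
    row_means = [(a + b) / k for a, b in data]          # per-subject means
    col_means = [math.fsum(x) / n, math.fsum(y) / n]    # per-session means
    ss_rows = k * math.fsum((m - grand) ** 2 for m in row_means)
    ss_cols = n * math.fsum((m - grand) ** 2 for m in col_means)
    ss_total = math.fsum((v - grand) ** 2 for row in data for v in row)
    ss_err = ss_total - ss_rows - ss_cols
    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_err = ss_err / ((n - 1) * (k - 1))
    return (ms_rows - ms_err) / (ms_rows + (k - 1) * ms_err + k * (ms_cols - ms_err) / n)


if __name__ == "__main__":
    # Hypothetical scores: the retest is the test plus a constant gain of 3 points.
    test = [10.0, 12.0, 15.0, 9.0, 14.0]
    retest = [v + 3.0 for v in test]
    print(f"Pearson r = {pearson(test, retest):.3f}")
    print(f"ICC(2,1)  = {icc_a1(test, retest):.3f}")
```

Pearson r measures only whether subjects keep their rank order across sessions. The absolute‐agreement ICC also penalizes a systematic change in level, which matters when repeated home testing may introduce learning effects.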

In our study, a single composite score was developed both for the standard neuropsychological tests and for the cCOG test tool. Recent research suggests that composite scores of memory and global cognition can be more sensitive than single test scores in detecting cognitive impairment in prodromal AD, 48 , 49 supporting this approach. In addition, for screening purposes a single index score is easier to interpret than a battery of several separate scores. Finally, adjustment for demographic variables is straightforward to automate in computerized tests.
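This section does not specify how the composite scores were computed. The sketch below shows one common way to automate demographic adjustment and then combine tasks into a single index, and it should not be read as the cCOG method. Regression norms for each task are fitted on a cognitively normal reference group. Each subject's residual is converted to a z‐score, and the z‐scores are averaged. The function names, the choice of covariates (age and education), and the synthetic data are all assumptions.

```python
import numpy as np


def fit_norms(ref_scores, ref_demo):
    """Fit per-task regression norms on a cognitively normal reference group.

    ref_scores: (n_subjects, n_tasks) task scores, higher = better (assumed).
    ref_demo:   (n_subjects, n_covariates), e.g. age and years of education.
    Returns OLS coefficients (intercept first) and residual SD per task.
    """
    X = np.column_stack([np.ones(len(ref_demo)), ref_demo])
    coef, *_ = np.linalg.lstsq(X, ref_scores, rcond=None)
    resid = ref_scores - X @ coef
    sd = resid.std(axis=0, ddof=X.shape[1])
    return coef, sd


def composite_score(scores, demo, coef, sd):
    """Demographically adjusted z-scores averaged into one index per subject."""
    X = np.column_stack([np.ones(len(demo)), demo])
    z = (scores - X @ coef) / sd   # deviation from the expected score, in SD units
    return z.mean(axis=1)          # equal-weight composite across tasks


if __name__ == "__main__":
    rng = np.random.default_rng(0)  # synthetic data, NOT study data
    n = 200
    demo = np.column_stack([rng.uniform(60, 85, n), rng.uniform(8, 20, n)])
    scores = np.column_stack(
        [50 - 0.3 * demo[:, 0] + 0.8 * demo[:, 1] + rng.normal(0, 3, n) for _ in range(3)]
    )
    coef, sd = fit_norms(scores, demo)
    print(composite_score(scores[:3] - 5.0, demo[:3], coef, sd))  # below-norm subjects
```

Equal weighting of tasks is the simplest option. A composite could also weight tasks, for example by regressing the reference battery's global score on the task z‐scores.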

Regarding the feasibility of cCOG, assistance was requested in 21% of testing sessions, in most cases with typing. To address this and improve usability, we have updated cCOG so that the user needs to type only the first three letters of a word; if these are correct, the word is completed automatically. Voice recognition could also be an option for future versions.
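The typing aid described above can be sketched as prefix matching against the words the task expects. The sketch below is hypothetical and is not cCOG's implementation. The function name, the case‐insensitive matching, and the word list are assumptions. It completes a response only after at least three letters have been typed and only when exactly one expected word starts with them.

```python
def autocomplete(typed, word_list, min_prefix=3):
    """Return the completed word, or None if there is no unique match.

    Hypothetical sketch: completion requires at least `min_prefix` letters,
    and ambiguous prefixes are left for the user to finish typing.
    """
    prefix = typed.strip().lower()
    if len(prefix) < min_prefix:
        return None
    matches = [w for w in word_list if w.lower().startswith(prefix)]
    return matches[0] if len(matches) == 1 else None


if __name__ == "__main__":
    expected = ["apple", "river", "rivet"]  # hypothetical word list
    for typed in ("ap", "app", "riv", "river", "xyz"):
        print(typed, "->", autocomplete(typed, expected))
```

Returning None for ambiguous prefixes such as "riv" avoids guessing between two candidate words. The user simply keeps typing until the prefix becomes unique.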

The main strength of this study was the use of three different cohorts for validation and the comparison with standard neuropsychological tests. The main limitation, however, was the relatively small sample size. Fewer than half of the PredictND subjects completed cCOG at all four time points. Another limitation was that our study design was not optimal for determining test‐retest reliability: instead of two measurements made within a short period of time, the interval between measurements was 6 or even 12 months. Yet this can also be considered an advantage, as it reduces learning effects. In addition, testing at home was not controlled in any way, as patients used their own computers. Only 14% of testing sessions at home were done using a touchscreen, compared with 79% at the clinics. Although no major difference was observed between clinic and home measurements, standardizing the hardware could improve reproducibility. A systematic study of the impact of hardware should be performed in the future. Finally, our analysis of the role of individual tasks remained limited. Because the number of dementia cases was relatively small, we could not evaluate how tasks reflecting different cognitive domains perform in separating different dementia etiologies.

In conclusion, the web‐based cCOG test tool detected MCI and dementia with accuracy comparable to that of a composite score derived from standard neuropsychological tests. In addition, cCOG results showed high consistency when measurements were administered at home and in the clinic. These results support the view that cCOG could be a useful and cost‐efficient tool for the early assessment of neurodegenerative diseases. Because such tools can even be administered at home, they may pave the way for a stepwise diagnostic approach in dementia.

CONFLICTS OF INTEREST

Hanneke FM Rhodius‐Meester performs contract research for Combinostics; all funding is paid to her institution. Teemu Paajanen reports no disclosures. Juha Koikkalainen and Jyrki Lötjönen report that Combinostics owns the following IPR related to the article: 1. J. Koikkalainen and J. Lötjönen. A method for inferring the state of a system, US 7,840,510 B2. 2. J. Lötjönen, J. Koikkalainen, and J. Mattila. State Inference in a heterogeneous system, US 10,372,786 B2. Koikkalainen and Lötjönen are shareholders in Combinostics. Shadi Mahdiani reports no disclosures. Marie Bruun reports no disclosures. Marta Baroni reports no disclosures. Afina W. Lemstra reports no disclosures. Philip Scheltens has received consultancy/speaker fees (paid to the institution) from Biogen, Novartis Cardiology, Genentech, and AC Immune. He is PI of studies with Vivoryon, EIP Pharma, IONIS, CogRx, AC Immune, and FUJI‐film/Toyama. Sanna‐Kaisa Herukka reports no disclosures. Maria Pikkarainen reports no disclosures. Anette Hall reports no disclosures. Tuomo Hänninen reports no disclosures. Tiia Ngandu reports no disclosures. Miia Kivipelto has received research support from the Academy of Finland, Swedish Research Council, Joint Program of Neurodegenerative Disorders, Knut and Alice Wallenberg Foundation, Center for Innovative Medicine (CIMED), Stiftelsen Stockholms sjukhem, Konung Gustaf Vs och Drottning Victorias Frimurarstiftelse, Alzheimerfonden, Hjärnfonden, and Region Stockholm (ALF and NSV grants). She takes part in the WHO guidelines development group, is a governance committee member of the Global Council on Brain Health, and is on the advisory boards of Combinostics and Roche. Mark van Gils reports no disclosures. Steen Gregers Hasselbalch reports no disclosures. Patrizia Mecocci reports no disclosures. Anne Remes reports no disclosures. Hilkka Soininen has received fees as a member of the advisory boards of AC Immune, MERCK, and Novo Nordisk outside this work.
Wiesje M van der Flier performs contract research for Biogen. Research programs of Wiesje van der Flier have been funded by ZonMW, NWO, EU‐FP7, Alzheimer Nederland, CardioVascular Onderzoek Nederland, Gieskes‐Strijbis fonds, Pasman stichting, Boehringer Ingelheim, Piramal Neuroimaging, Combinostics, Roche BV, AVID. She has been an invited speaker at Boehringer Ingelheim and Biogen. All funding is paid to her institution.

Supporting information

Supplementary information

ACKNOWLEDGMENTS

Research of the Alzheimer Center Amsterdam is part of the neurodegeneration research program of Amsterdam Neuroscience. The Alzheimer Center Amsterdam is supported by Stichting Alzheimer Nederland and Stichting VUmc fonds. The clinical database structure was developed with funding from Stichting Dioraphte. For development of the PredictND tool, VTT Technical Research Centre of Finland received funding from the European Union's Seventh Framework Programme for research, technological development, and demonstration under grant agreements 601055 (VPH‐DARE@IT), 224328 (PredictAD), and 611005 (PredictND). The FINGER study was funded by the Academy of Finland, the Finnish Social Insurance Institution, the Alzheimer's Research and Prevention Foundation, and the Juho Vainio Foundation; the Swedish Research Council, Alzheimerfonden, Region Stockholm ALF and NSV, the Center for Innovative Medicine (CIMED) at Karolinska Institutet, the Knut and Alice Wallenberg Foundation, and Stiftelsen Stockholms sjukhem; and the Joint Program of Neurodegenerative Disorders. The collaboration project DAILY (project number LSHM19123‐HSGF) is co‐funded by the PPP Allowance made available by Health‐Holland, Top Sector Life Sciences & Health, to stimulate public‐private partnerships. Wiesje M van der Flier holds the Pasman chair.

Rhodius‐Meester HFM, Paajanen T, Koikkalainen J, et al. cCOG: A web‐based cognitive test tool for detecting neurodegenerative disorders. Alzheimer's Dement. 2020;12:e12083. doi:10.1002/dad2.12083

Hanneke F.M. Rhodius‐Meester and Teemu Paajanen contributed equally to this study and share first authorship

REFERENCES

- 1. Prince M, Bryce R, Ferri C. World Alzheimer Report 2011: The benefits of early diagnosis and intervention. Alzheimer's Disease International (ADI); September 2011.

- 2. Pouryamout L, Dams J, Wasem J, Dodel R, Neumann A. Economic evaluation of treatment options in patients with Alzheimer's disease: a systematic review of cost‐effectiveness analyses. Drugs. 2012;72:789‐802.

- 3. Ngandu T, Lehtisalo J, Solomon A, et al. A 2 year multidomain intervention of diet, exercise, cognitive training, and vascular risk monitoring versus control to prevent cognitive decline in at‐risk elderly people (FINGER): a randomised controlled trial. Lancet. 2015;385:2255‐2263.

- 4. Getsios D, Blume S, Ishak KJ, Maclaine G, Hernandez L. An economic evaluation of early assessment for Alzheimer's disease in the United Kingdom. Alzheimers Dement. 2012;8:22‐30.

- 5. Folstein MF, Folstein SE, McHugh PR. “Mini‐mental state”. A practical method for grading the cognitive state of patients for the clinician. J Psychiatr Res. 1975;12:189‐198.

- 6. Nasreddine ZS, Phillips NA, Bedirian V, et al. The Montreal Cognitive Assessment, MoCA: a brief screening tool for mild cognitive impairment. J Am Geriatr Soc. 2005;53:695‐699.

- 7. Shulman KI. Clock‐drawing: is it the ideal cognitive screening test? Int J Geriatr Psychiatry. 2000;15:548‐561.

- 8. Morris JC, Edland S, Clark C, et al. The consortium to establish a registry for Alzheimer's disease (CERAD). Part IV. Rates of cognitive change in the longitudinal assessment of probable Alzheimer's disease. Neurology. 1993;43:2457‐2465.

- 9. Wild K, Howieson D, Webbe F, Seelye A, Kaye J. Status of computerized cognitive testing in aging: a systematic review. Alzheimers Dement. 2008;4:428‐437.

- 10. Casaletto KB, Heaton RK. Neuropsychological assessment: past and future. J Int Neuropsychol Soc. 2017;23:778‐790.

- 11. Bilder RM, Reise SP. Neuropsychological tests of the future: how do we get there from here? Clin Neuropsychol. 2019;33:220‐245.

- 12. Mielke MM, Machulda MM, Hagen CE, et al. Performance of the CogState computerized battery in the Mayo Clinic study on aging. Alzheimers Dement. 2015;11:1367‐1376.

- 13. Mackin RS, Insel PS, Truran D, et al. Unsupervised online neuropsychological test performance for individuals with mild cognitive impairment and dementia: results from the Brain Health Registry. Alzheimers Dement (Amst). 2018;10:573‐582.

- 14. Morrison GE, Simone CM, Ng NF, Hardy JL. Reliability and validity of the NeuroCognitive Performance Test, a web‐based neuropsychological assessment. Front Psychol. 2015;6:1652.

- 15. Ruggeri K, Maguire A, Andrews JL, Martin E, Menon S. Are we there yet? Exploring the impact of translating cognitive tests for dementia using mobile technology in an aging population. Front Aging Neurosci. 2016;8:21.

- 16. Wallace SE, Donoso Brown EV, Fairman AD, et al. Validation of the standardized touchscreen assessment of cognition with neurotypical adults. NeuroRehabilitation. 2017;40:411‐420.

- 17. Morrison RL, Pei H, Novak G, et al. A computerized, self‐administered test of verbal episodic memory in elderly patients with mild cognitive impairment and healthy participants: a randomized, crossover, validation study. Alzheimers Dement (Amst). 2018;10:647‐656.

- 18. Bruun M, Frederiksen KS, Rhodius‐Meester HFM, et al. Impact of a clinical decision support tool on prediction of progression in early‐stage dementia: a prospective validation study. Alzheimers Res Ther. 2019;11:25.

- 19. Bruun M, Frederiksen KS, Rhodius‐Meester HFM, et al. Impact of a clinical decision support tool on dementia diagnostics in memory clinics: the PredictND validation study. Curr Alzheimer Res. 2019;16:91‐101.

- 20. Cajanus A, Solje E, Koikkalainen J, et al. The association between distinct frontal brain volumes and behavioral symptoms in mild cognitive impairment, Alzheimer's disease, and frontotemporal dementia. Front Neurol. 2019;10:1059.

- 21. Kivipelto M, Solomon A, Ahtiluoto S, et al. The Finnish geriatric intervention study to prevent cognitive impairment and disability (FINGER): study design and progress. Alzheimers Dement. 2013;9:657‐665.

- 22. van der Flier WM, Pijnenburg YA, Prins N, et al. Optimizing patient care and research: the Amsterdam dementia cohort. J Alzheimers Dis. 2014;41:313‐327.

- 23. van der Flier WM, Scheltens P. Amsterdam dementia cohort: performing research to optimize care. J Alzheimers Dis. 2018;62:1091‐1111.

- 24. Petersen RC, Lopez O, Armstrong MJ, et al. Practice guideline update summary: mild cognitive impairment. Neurology. 2018;90:126.

- 25. Petersen RC. Mild cognitive impairment as a diagnostic entity. J Intern Med. 2004;256:183‐194.

- 26. McKhann G, Drachman D, Folstein M, Katzman R, Price D, Stadlan EM. Clinical diagnosis of Alzheimer's disease: report of the NINCDS‐ADRDA work group under the auspices of department of health and human services task force on Alzheimer's disease. Neurology. 1984;34:939‐944.

- 27. McKhann GM, Knopman DS, Chertkow H, et al. The diagnosis of dementia due to Alzheimer's disease: recommendations from the National Institute on Aging‐Alzheimer's Association workgroups on diagnostic guidelines for Alzheimer's disease. Alzheimers Dement. 2011;7:263‐269.

- 28. Neary D, Snowden JS, Gustafson L, et al. Frontotemporal lobar degeneration: a consensus on clinical diagnostic criteria. Neurology. 1998;51:1546‐1554.

- 29. Rascovsky K, Hodges JR, Knopman D, et al. Sensitivity of revised diagnostic criteria for the behavioural variant of frontotemporal dementia. Brain. 2011;134:2456‐2477.

- 30. Roman GC, Tatemichi TK, Erkinjuntti T, et al. Vascular dementia: diagnostic criteria for research studies. Report of the NINDS‐AIREN International Workshop. Neurology. 1993;43:250‐260.

- 31. McKeith IG, Boeve BF, Dickson DW, et al. Diagnosis and management of dementia with Lewy bodies: fourth consensus report of the DLB Consortium. Neurology. 2017;89:88‐100.

- 32. McKeith IG, Dickson DW, Lowe J, et al. Diagnosis and management of dementia with Lewy bodies: third report of the DLB Consortium. Neurology. 2005;65:1863‐1872.

- 33. Schoenberg MR, Dawson KA, Duff K, Patton D, Scott JG, Adams RL. Test performance and classification statistics for the Rey Auditory Verbal Learning Test in selected clinical samples. Arch Clin Neuropsychol. 2006;21:693‐703.

- 34. Reitan R. Validity of the trail making test as an indicator of organic brain damage. Percept Mot Skills. 1958;8:271‐276.

- 35. Van der Elst W, Van Boxtel MP, Van Breukelen GJ, Jolles J. Normative data for the animal, profession and letter M naming verbal fluency tests for Dutch speaking participants and the effects of age, education, and sex. J Int Neuropsychol Soc. 2006;12:80‐89.

- 36. Lindeboom J, Matto D. [Digit series and Knox cubes as concentration tests for elderly subjects]. Tijdschr Gerontol Geriatr. 1994;25:63‐68.

- 37. Mattila J, Koikkalainen J, Virkki A, et al. A disease state fingerprint for evaluation of Alzheimer's disease. J Alzheimers Dis. 2011;27:163‐176.

- 38. Jahanshahi M, Brown RG, Marsden CD. Simple and choice reaction time and the use of advance information for motor preparation in Parkinson's disease. Brain. 1992;115(Pt 2):539‐564.

- 39. Evans JD. Straightforward Statistics for the Behavioral Sciences. Pacific Grove, CA: Brooks/Cole Publishing; 1996.

- 40. Aslam RW, Bates V, Dundar Y, et al. A systematic review of the diagnostic accuracy of automated tests for cognitive impairment. Int J Geriatr Psychiatry. 2018;33:561‐575.

- 41. Smith PJ, Need AC, Cirulli ET, Chiba‐Falek O, Attix DK. A comparison of the Cambridge Automated Neuropsychological Test Battery (CANTAB) with “traditional” neuropsychological testing instruments. J Clin Exp Neuropsychol. 2013;35:319‐328.

- 42. Feenstra HEM, Murre JMJ, Vermeulen IE, Kieffer JM, Schagen SB. Reliability and validity of a self‐administered tool for online neuropsychological testing: the Amsterdam cognition scan. J Clin Exp Neuropsychol. 2018;40:253‐273.

- 43. Hansen TI, Haferstrom EC, Brunner JF, Lehn H, Haberg AK. Initial validation of a web‐based self‐administered neuropsychological test battery for older adults and seniors. J Clin Exp Neuropsychol. 2015;37:581‐594.

- 44. Hammers D, Spurgeon E, Ryan K, et al. Reliability of repeated cognitive assessment of dementia using a brief computerized battery. Am J Alzheimers Dis Other Demen. 2011;26:326‐333.

- 45. Cacciamani F, Salvadori N, Eusebi P, et al. Evidence of practice effect in CANTAB spatial working memory test in a cohort of patients with mild cognitive impairment. Appl Neuropsychol Adult. 2018;25:237‐248.

- 46. Goncalves MM, Pinho MS, Simoes MR. Test‐retest reliability analysis of the Cambridge Neuropsychological Automated Tests for the assessment of dementia in older people living in retirement homes. Appl Neuropsychol Adult. 2016;23:251‐263.

- 47. Maljkovic V, Pugh M, Yaari R, Shen J, Juusola J. At home cognitive testing (CANTAB battery) in healthy controls and cognitively impaired patients: a feasibility study. Alzheimers Dement. 2019;15:P440.

- 48. Paajanen T, Hanninen T, Tunnard C, et al. CERAD neuropsychological compound scores are accurate in detecting prodromal Alzheimer's disease: a prospective AddNeuroMed study. J Alzheimers Dis. 2014;39:679‐690.

- 49. Crane PK, Carle A, Gibbons LE, et al. Development and assessment of a composite score for memory in the Alzheimer's Disease Neuroimaging Initiative (ADNI). Brain Imaging Behav. 2012;6:502‐516.
