Abstract

Objective

The Reading the Mind in the Eyes Test (RMET) is a common measure of the Theory of Mind. Previous studies found a correlation between RMET performance and neurocognition, especially reasoning by analogy; however, the nature of this relationship remains unclear. Additionally, neurocognition has been shown to play a significant role in facial emotion recognition. This study aimed to examine the nature of the relationship between neurocognition and RMET performance, as well as the mediating role of facial emotion recognition.

Methods

One hundred fifty non-clinical youths performed the RMET. Reasoning by analogy was tested by Raven’s Standard Progressive Matrices (SPM) and facial emotion recognition was assessed by the Korean Facial Expressions of Emotion (KOFEE) test. The percentile bootstrap method was used to calculate the parameters of the mediating effects of facial emotion recognition on the relationship between SPM and RMET scores.

Results

SPM scores and KOFEE scores were both statistically significant predictors of RMET scores. KOFEE scores were found to partially mediate the impact of SPM scores on RMET scores.

Conclusion

These findings suggest that facial emotion recognition partially mediates the relationship between reasoning by analogy and social cognition. This study highlights the need for further research involving individuals with serious mental illnesses.

Keywords: Social cognition, Reading the Mind in the Eyes, Facial emotion recognition, Theory of mind, Neurocognition

INTRODUCTION

Successful social interaction requires making inferences about others’ views and beliefs. This ability to infer someone’s mental states is called the Theory of Mind (ToM) [1]. Since ToM is paramount for social interaction, and social interactions are essential for daily living, any impairment in ToM abilities can significantly compromise an individual’s life and social functioning. Deficits in ToM have been observed in individuals with serious mental illnesses, such as schizophrenia and autism spectrum disorders [2,3]. Therefore, many researchers have tried to develop measurement tools to assess ToM ability. Such tools include tasks using false belief stories and pictures, Strange Stories, and emotion recognition [4-7].

The Reading the Mind in the Eyes Test (RMET) [4,8] is one of the most popular ToM measurement tools. In the RMET, participants are presented with pictures of the eye region of a face and required to select the word that best matches the model’s complex mental state, such as a desire or goal. Since the RMET provides a limited amount of information, its developers proposed that it would employ more automatic and implicit processes than other ToM tasks [4,8]. This was supported by some early findings that there was no correlation between RMET performance and neurocognitive function [4,8,9]. Contrary to these early suggestions and findings, recent meta-analyses [10,11] have revealed a significant association of RMET performance with neurocognition, especially intelligence or reasoning by analogy. However, despite this relatively clear correlation, the nature of the relationship between RMET performance and neurocognitive function remains unclear.

Many studies have also reported an association of neurocognitive function with facial emotion recognition [12-14]. Facial emotion recognition is the ability to identify another person’s emotion based on their facial expression. In typical facial emotion recognition tasks, subjects are provided with photographs of whole faces and asked to choose the basic emotion category that best fits each facial expression. This task is similar to the RMET in that both tasks involve identifying another person’s state of mind based on external information, but the relationship between the two tasks has rarely been studied. From a theoretical standpoint, facial emotion recognition tasks appear easier than the RMET because the stimuli (external information) that subjects must match with internal information stored in memory are whole faces rather than parts of faces. Therefore, it could be inferred that the RMET requires additional cognitive resources beyond those required for the facial emotion recognition task to attribute intentions or beliefs to the person in the photograph.

Based on these assumptions and on previous findings regarding neurocognitive function, facial emotion recognition, and the RMET, it was hypothesized that reasoning by analogy and facial emotion recognition would be independent predictors of RMET performance, and moreover, that facial emotion recognition would mediate the relationship between neurocognition, especially reasoning by analogy, and RMET performance.

METHODS

Subjects

A total of 150 healthy, non-clinical youths (74 men and 76 women) were enrolled via an Internet advertisement from May 2018 to October 2018. All participants satisfied the inclusion criterion of being 20 to 30 years of age. The Mini-International Neuropsychiatric Interview (MINI) was used to exclude individuals with past or current psychiatric or neurological illnesses. Written informed consent was obtained from all subjects after a full explanation of the study procedures. The study protocol was reviewed and approved by the Institutional Review Board of Severance Hospital (IRB No. 4-2014-0744). Subjects’ mean age and years of education were 23.1 (SD=2.51) and 14.4 (SD=1.40), respectively.

Neurocognitive function task

To quantify the ability of reasoning by analogy, the Standard Progressive Matrices (SPM) was used [15]. The SPM is composed of sixty non-colored diagrammatic puzzles, each with a missing part that subjects must select from among six options. This test has been reported to show high validity and reliability among diverse cultural groups [16]. In this study, SPM scores were calculated as the total number of correct answers.

Facial emotion recognition task

To assess facial emotion recognition, photographs from the Korean Facial Expressions of Emotion (KOFEE) database [17] were used. This task is widely used for research on emotion recognition in Korea [18,19]. The KOFEE consists of photographs of Korean models demonstrating neutral expressions and seven basic facial emotions (happiness, sadness, anger, disgust, surprise, fear, and contempt). All KOFEE facial expressions were coded using Ekman and Friesen’s (1978) Facial Action Coding System (FACS). Thus, each expression in the KOFEE corresponds directly to facial expressions that are encountered in real life.

A total of 64 photographs, eight each for the seven basic emotions and neutral faces, were selected for the task. The selected photographs showed good interrater agreement in a previous standardization study [17] (n=105, Korean college students). The pictures were presented to subjects on response sheets along with the emotional categories of “happiness,” “sadness,” “anger,” “disgust,” “surprise,” “fear,” “contempt,” and “neutral.” Subjects were required to select the emotional label that best described the emotion expressed by the model in each picture. In this study, KOFEE scores were calculated as the sum of correct responses across the 64 facial photographs.

The Reading the Mind in the Eyes Test

The RMET [4] is a widely used assessment tool for social cognitive function. It presents thirty-six photographs of the eye region of the face, each expressing a complex mental state of the individual pictured. Each photograph is accompanied by four descriptors placed around the picture. Subjects were requested to choose the one word that best described the complex mental state of the model in each photograph. One example set of four descriptors is “irritated,” “disappointed,” “depressed,” and “accusing.” In this study, RMET scores were calculated as the sum of correct answers.
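The sum-of-correct-answers scoring described for the tasks above can be sketched as follows. This is only an illustrative sketch: the function name `score_task` and the four-item answer key are hypothetical and do not reproduce the actual test items or keys.

```python
def score_task(responses, answer_key):
    """Count the responses that match the answer key, item by item."""
    if len(responses) != len(answer_key):
        raise ValueError("responses and answer_key must have the same length")
    return sum(r == k for r, k in zip(responses, answer_key))


# Hypothetical four-item excerpt; these labels are made up for illustration
# and are not the real scoring key of any test used in the study.
answer_key = ["accusing", "playful", "upset", "worried"]
responses = ["accusing", "playful", "irritated", "worried"]

print(score_task(responses, answer_key))
```

The same function would apply to any of the three tasks, since each score is a simple count of correct items.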

Data analysis

To test whether SPM scores and KOFEE scores predict RMET performance, multiple regression analysis was performed. Age and gender, which are expected to affect RMET performance, were also included as predictor variables in the multiple regression analysis.
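As an illustration of this regression step, the sketch below fits an ordinary least squares model and converts the coefficients to standardized β values. It is a minimal pure-Python sketch run on synthetic data, not the SPSS procedure used in the study; the function names (`solve`, `ols`, `standardized_betas`) and all simulated values are assumptions made for illustration.

```python
import random
import statistics


def solve(a, b):
    """Solve a @ x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [v - factor * p for v, p in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def ols(predictors, outcome):
    """Return [intercept, b1, ..., bk] from the normal equations."""
    rows = [[1.0] + list(p) for p in predictors]
    k = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * y for r, y in zip(rows, outcome)) for i in range(k)]
    return solve(xtx, xty)


def standardized_betas(predictors, outcome):
    """Rescale each unstandardized slope by sd(predictor) / sd(outcome)."""
    slopes = ols(predictors, outcome)[1:]
    sd_y = statistics.stdev(outcome)
    columns = list(zip(*predictors))
    return [b * statistics.stdev(c) / sd_y for b, c in zip(slopes, columns)]


# Synthetic data only: the generating coefficients below are arbitrary.
rng = random.Random(0)
predictors, rmet = [], []
for _ in range(150):
    gender = float(rng.randint(0, 1))
    age = float(rng.randint(20, 30))
    spm = rng.gauss(52.5, 5.2)
    kofee = rng.gauss(53.9, 4.5)
    predictors.append((gender, age, spm, kofee))
    rmet.append(5.0 + 0.17 * spm + 0.2 * kofee + rng.gauss(0, 3.2))

for name, beta in zip(["gender", "age", "SPM", "KOFEE"],
                      standardized_betas(predictors, rmet)):
    print(f"{name}: beta = {beta:.2f}")
```

Solving the normal equations directly keeps the sketch dependency-free; a statistics package would normally be preferred for real data because it also reports standard errors, t values, and p-values.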

To explore the relationship between SPM, KOFEE, and RMET performance, a bootstrapping mediation analysis was performed using Hayes’ PROCESS macro for SPSS [20]. Bootstrapping repeatedly resamples the data with replacement to yield confidence intervals for the indirect effect.

RESULTS

SPM, KOFEE, and RMET performance

Table 1 provides a summary of participants’ scores for the SPM, KOFEE, and RMET tasks.

Table 1.

Descriptive statistics for study variables (N=150)

| Variable | Mean | SD | Range |
|---|---|---|---|
| SPM score* | 52.54 | 5.16 | 33–60 |
| KOFEE score† | 53.87 | 4.48 | 42–63 |
| RMET score‡ | 26.31 | 3.49 | 15–33 |

*Number of correct answers on the Standard Progressive Matrices (SPM) tool,

†number of correct answers on the facial emotion recognition task of the Korean Facial Expressions of Emotion (KOFEE) test,

‡number of correct answers on the Reading the Mind in the Eyes Test (RMET)

Predictors of RMET scores

Multiple linear regression analysis was performed with gender, age, SPM score, and KOFEE score as predictor variables, and RMET score as the outcome variable. The overall model was statistically significant (R²=0.16, F=6.93, p<0.001). SPM scores (t=3.24, p=0.001, β=0.25) and KOFEE scores (t=3.15, p=0.002, β=0.25) were both statistically significant predictors of RMET scores. Table 2 shows a summary of these results.

Table 2.

Multiple regression analysis of domain variables and RMET performance

| Predictor | Outcome | β | t | p-value |
|---|---|---|---|---|
| Gender | RMET | -0.06 | -0.79 | 0.429 |
| Age | RMET | 0.15 | 1.92 | 0.057 |
| SPM | RMET | 0.25 | 3.24 | 0.001* |
| KOFEE | RMET | 0.25 | 3.15 | 0.002* |

*p<0.05.

RMET: Reading the Mind in the Eyes Test, SPM: Standard Progressive Matrices, KOFEE: Korean Facial Expressions of Emotion
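As a rough consistency check on the overall model, the F statistic can be recovered from the reported R² using the standard formula for a regression with k predictors and n cases. The worked values below assume k=4 and n=150, as described above; the small gap from the reported F=6.93 is what would be expected from rounding R² to two decimals.

```latex
F = \frac{R^{2}/k}{(1-R^{2})/(n-k-1)}
  = \frac{0.16/4}{0.84/145}
  \approx 6.90
```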

Mediation analysis

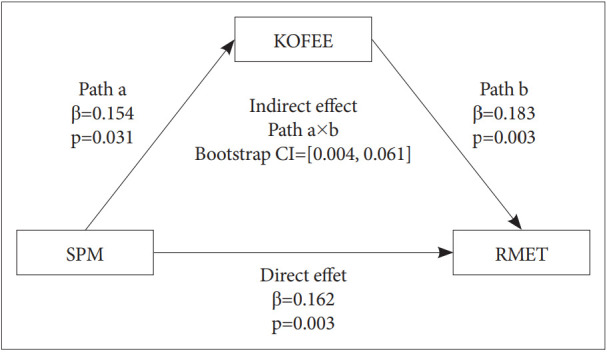

In the mediation analysis, KOFEE score was found to mediate the effect of SPM score on RMET score (total effect=0.190, t=3.549, p=0.001; direct effect=0.162, t=3.056, p=0.027; indirect effect=0.028, BootSE=0.014, 95% BootCI=0.004–0.061) (Figure 1). Given the cross-sectional nature of the data, directionality was verified by analyzing an alternative model with SPM score as a predictor of KOFEE score and RMET score as the mediator. The results of the alternative model revealed no significant direct association of SPM score with KOFEE score (p=0.23).

Figure 1.

Mediation model. KOFEE: Korean Facial Expressions of Emotion, SPM: Standard Progressive Matrices, RMET: Reading the Mind in the Eyes Test.
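The reported effects follow the usual decomposition for a simple mediation model, in which the total effect c equals the direct effect c′ plus the indirect effect ab. The proportion mediated shown below is not reported in the study; it is derived here from the reported coefficients only as an illustration and should be read with the width of the bootstrap interval in mind.

```latex
c = c' + ab \quad\Rightarrow\quad 0.190 = 0.162 + 0.028

\frac{ab}{c} = \frac{0.028}{0.190} \approx 0.15
```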

DISCUSSION

To the best of our knowledge, this is the first study to explore the mediating role of facial emotion recognition between reasoning by analogy and RMET performance in non-clinical subjects. Our main findings were that reasoning by analogy and facial emotion recognition independently predicted RMET performance. More importantly, facial emotion recognition partially mediated the relationship between reasoning by analogy and RMET performance.

Reasoning by analogy, as measured by the SPM, was independently associated with RMET performance. This result is inconsistent with the suggestion of the RMET developers, who claimed that the RMET and intelligence are not related in the non-clinical population [4], but is consistent with recent meta-analyses [10,11]. According to the meta-analysis by Baker et al. [10], which synthesized studies using various types of neurocognitive function tests, including the SPM used in our study, the RMET showed a significant correlation with neurocognitive function. This association was also reported in a more recent meta-analysis [11]. The correlation between neurocognitive function and RMET performance was also observed in recent studies involving various clinical groups, such as patients with autism spectrum disorder [11], attention deficit hyperactivity disorder [21], and dementia [22]. Reasoning by analogy is the ability to understand rules and build abstractions by integrating relationships based on non-social visual information. According to the developers of the RMET [4], when subjects match the descriptors of complex mental states to the corresponding part of the facial expression (i.e., the eye region of the face), they map the eyes in each photograph to examples of eye regions from their memory. Subjects then decode the semantic meaning (complex mental state) connected to those relatively specific eye regions. These close connections are established from past interpersonal experiences by neurocognitive functions, including reasoning by analogy. Another possible, but not mutually exclusive, explanation is that when subjects infer the model’s mental state based on a photograph of the eye region, reasoning by analogy may be needed to compare the photograph to internal images stored in the subjects’ memory.

Facial emotion recognition was also independently associated with RMET performance. This finding is consistent with previous studies of non-clinical populations [23,24], which showed a correlation between neurocognitive function and facial emotion recognition. The correlation between neurocognitive function and facial emotion recognition was also observed in previous studies of clinical groups, such as patients with schizophrenia spectrum disorders [12-14] and bipolar disorder [25]. From a theoretical standpoint, the RMET is similar to facial emotion recognition, as both tasks require subjects to identify another person’s mental state based on facial information. This assumption also seems reasonable from a neurophysiological standpoint, as demonstrated by a previous event-related potential (ERP) study showing that RMET scores were significantly associated with the early ERP (N170) amplitude for face valence discrimination (positive-negative) [26].

More importantly, this study’s mediation analysis revealed that facial emotion recognition partially mediated the relationship between reasoning by analogy and RMET performance. Facial emotion recognition, as measured by KOFEE scores, differs from the RMET task in that it presents photographs of whole faces as stimuli (as opposed to the eye region only, as in the RMET), and its items are basic emotions (as opposed to complex mental states, as in the RMET). The developers of the RMET suggested that items conveying complex mental states are more challenging than basic emotions, since identifying complex mental states involves attributing beliefs or intentions to the other person [4]. From this suggestion, it could be inferred that the RMET requires additional cognitive resources beyond those required for the facial emotion recognition task to attribute beliefs or intentions to the person in the photograph. Additionally, RMET subjects must infer mental states using a more limited amount of information (eye region vs. whole face) than subjects of the facial emotion recognition task. Thus, the RMET may require an additional pathway from reasoning by analogy. Given that there are similarities and differences between the RMET and the facial emotion recognition tasks, the relationship between reasoning by analogy and RMET performance may involve both a direct pathway and an indirect pathway through facial emotion recognition. In terms of clinical implications, remediation therapy to enhance reasoning by analogy and/or facial emotion recognition could promote social cognitive ability, especially the decoding of complex mental states from limited information, in individuals with serious mental illnesses such as schizophrenia. However, the mediating role of facial emotion recognition between reasoning by analogy and RMET performance should be explored in these populations in the near future.

Limitations

First, this study used cross-sectional data, limiting its ability to draw firm conclusions regarding the causal relationship between reasoning by analogy, facial emotion recognition, and RMET performance. However, directionality was confirmed by analyzing an alternative model treating the RMET as a mediator. Second, measurement of neurocognitive function was restricted to reasoning by analogy (SPM). A range of other assessment tools for neurocognitive function could offer a more comprehensive view of this study’s results.

Conclusion

This study found that reasoning by analogy and facial emotion recognition are independent predictors of RMET performance. Furthermore, facial emotion recognition showed a partial mediating effect on the relationship between reasoning by analogy and RMET performance. This study aimed to explore the underlying mechanism of RMET performance, and it highlights the need for further research focusing on serious mental illnesses such as schizophrenia and autism spectrum disorders, which detrimentally affect RMET performance and neurocognitive function.

Acknowledgments

This work was supported by the Basic Science Research Program through the National Research Foundation of Korea (NRF) funded by the Ministry of Science, ICT & Future Planning, Republic of Korea (grant number 2017R1A2B3008214).

Footnotes

The authors have no potential conflicts of interest to disclose.

Author Contributions

Conceptualization: Suk Kyoon An. Data curation: all authors. Formal analysis: Eunchong Seo, Se Jun Koo, Suk Kyoon An. Funding acquisition: Eun Lee, Suk Kyoon An. Investigation: all authors. Methodology: Eunchong Seo, Se Jun Koo. Project administration: Eun Lee, Suk Kyoon An. Resources: Eun Lee, Suk Kyoon An. Software: Eunchong Seo, Se Jun Koo. Supervision: Suk Kyoon An. Validation: Suk Kyoon An. Visualization: Eunchong Seo. Writing – original draft: Eunchong Seo. Writing – review & editing: all authors.

REFERENCES

- 1. Baron-Cohen S. Social and pragmatic deficits in autism: cognitive or affective? J Autism Dev Disord. 1988;18:379–402. doi: 10.1007/BF02212194.

- 2. Eack SM, Bahorik AL, McKnight SA, Hogarty SS, Greenwald DP, Newhill CE, et al. Commonalities in social and non-social cognitive impairments in adults with autism spectrum disorder and schizophrenia. Schizophr Res. 2013;148:24–28. doi: 10.1016/j.schres.2013.05.013.

- 3. Sasson NJ, Pinkham AE, Carpenter KL, Belger A. The benefit of directly comparing autism and schizophrenia for revealing mechanisms of social cognitive impairment. J Neurodev Disord. 2011;3:87–100. doi: 10.1007/s11689-010-9068-x.

- 4. Baron-Cohen S, Wheelwright S, Hill J, Raste Y, Plumb I. The “Reading the Mind in the Eyes” test revised version: a study with normal adults, and adults with Asperger syndrome or high-functioning autism. J Child Psychol Psychiatry. 2001;42:241–251.

- 5. Gregory C, Lough S, Stone V, Erzinclioglu S, Martin L, Baron-Cohen S, et al. Theory of mind in patients with frontal variant frontotemporal dementia and Alzheimer’s disease: theoretical and practical implications. Brain. 2002;125:752–764. doi: 10.1093/brain/awf079.

- 6. Happé FG. An advanced test of theory of mind: Understanding of story characters’ thoughts and feelings by able autistic, mentally handicapped, and normal children and adults. J Autism Dev Disord. 1994;24:129–154. doi: 10.1007/BF02172093.

- 7. Sarfati Y, Hardy-Baylé MC, Besche C, Widlöcher D. Attribution of intentions to others in people with schizophrenia: a non-verbal exploration with comic strips. Schizophr Res. 1997;25:199–209. doi: 10.1016/s0920-9964(97)00025-x.

- 8. Baron-Cohen S, Jolliffe T, Mortimore C, Robertson M. Another advanced test of theory of mind: evidence from very high functioning adults with autism or asperger syndrome. J Child Psychol Psychiatry. 1997;38:813–822. doi: 10.1111/j.1469-7610.1997.tb01599.x.

- 9. Ahmed FS, Stephen Miller L. Executive function mechanisms of theory of mind. J Autism Dev Disord. 2011;41:667–678. doi: 10.1007/s10803-010-1087-7.

- 10. Baker CA, Peterson E, Pulos S, Kirkland RA. Eyes and IQ: a meta-analysis of the relationship between intelligence and “Reading the Mind in the Eyes”. Intelligence. 2014;44:78–92.

- 11. Penuelas-Calvo I, Sareen A, Sevilla-Llewellyn-Jones J, Fernandez-Berrocal P. The “Reading the Mind in the Eyes” test in autism-spectrum disorders comparison with healthy controls: a systematic review and meta-analysis. J Autism Dev Disord. 2019;49:1048–1061. doi: 10.1007/s10803-018-3814-4.

- 12. Yang C, Zhang T, Li Z, Heeramun-Aubeeluck A, Liu N, Huang N, et al. The relationship between facial emotion recognition and executive functions in first-episode patients with schizophrenia and their siblings. BMC Psychiatry. 2015;15:241. doi: 10.1186/s12888-015-0618-3.

- 13. Lee SY, Bang M, Kim KR, Lee MK, Park JY, Song YY, et al. Impaired facial emotion recognition in individuals at ultra-high risk for psychosis and with first-episode schizophrenia, and their associations with neurocognitive deficits and self-reported schizotypy. Schizophr Res. 2015;165:60–65. doi: 10.1016/j.schres.2015.03.026.

- 14. Addington J, Saeedi H, Addington D. Facial affect recognition: a mediator between cognitive and social functioning in psychosis? Schizophr Res. 2006;85:142–150. doi: 10.1016/j.schres.2006.03.028.

- 15. Raven JC. Raven’s Progressive Matrices and Vocabulary Scales. Oxford, England: Oxford Psychologists Press; 1998.

- 16. Raven JC. The Raven’s progressive matrices: change and stability over culture and time. Cogn Psychol. 2000;41:1–48. doi: 10.1006/cogp.1999.0735.

- 17. Park JY, Oh JM, Kim SY, Lee M, Lee C, Kim BR, et al. Korean Facial Expressions of Emotion (KOFEE). Seoul, Korea: Section of Affect & Neuroscience, Institute of Behavioral Science in Medicine, Yonsei University College of Medicine; 2011.

- 18. Kim YR, Kim CH, Park JH, Pyo J, Treasure J. The impact of intranasal oxytocin on attention to social emotional stimuli in patients with anorexia nervosa: a double blind within-subject cross-over experiment. PLoS One. 2014;9:e90721. doi: 10.1371/journal.pone.0090721.

- 19. Lee SB, Koo SJ, Song YY, Lee MK, Jeong YJ, Kwon C, et al. Theory of mind as a mediator of reasoning and facial emotion recognition: findings from 200 healthy people. Psychiatry Investig. 2014;11:105–111. doi: 10.4306/pi.2014.11.2.105.

- 20. Hayes AF. Introduction to Mediation, Moderation, and Conditional Process Analysis: A Regression-Based Approach. New York: Guilford Publications; 2017.

- 21. Tatar ZB, Cansız A. Executive function deficits contribute to poor theory of mind abilities in adults with ADHD. Appl Neuropsychol Adult. 2020:1–8. doi: 10.1080/23279095.2020.1736074.

- 22. Yildirim E, Soncu Buyukiscan E, Demirtas-Tatlidede A, Bilgic B, Gurvit H. An investigation of affective theory of mind ability and its relation to neuropsychological functions in Alzheimer’s disease. J Neuropsychol. 2020. doi: 10.1111/jnp.12207. [Online Ahead of Print]

- 23. Phillips LH, Channon S, Tunstall M, Hedenstrom A, Lyons K. The role of working memory in decoding emotions. Emotion. 2008;8:184–191. doi: 10.1037/1528-3542.8.2.184.

- 24. Henry JD, Phillips LH, Beatty WW, McDonald S, Longley WA, Joscelyne A, et al. Evidence for deficits in facial affect recognition and theory of mind in multiple sclerosis. J Int Neuropsychol Soc. 2009;15:277–285. doi: 10.1017/S1355617709090195.

- 25. David DP, Soeiro-de-Souza MG, Moreno RA, Bio DS. Facial emotion recognition and its correlation with executive functions in bipolar I patients and healthy controls. J Affect Disord. 2014;152-154:288–294. doi: 10.1016/j.jad.2013.09.027.

- 26. Petroni A, Canales-Johnson A, Urquina H, Guex R, Hurtado E, Blenkmann A, et al. The cortical processing of facial emotional expression is associated with social cognition skills and executive functioning: a preliminary study. Neurosci Lett. 2011;505:41–46. doi: 10.1016/j.neulet.2011.09.062.