In the second week of March 2020, the World Health Organization (WHO) declared coronavirus disease 2019 (COVID-19) a pandemic. This occurred 10 weeks after China reported a cluster of pneumonia cases to the WHO. In the context of a pandemic, multiple pressures, such as fear of death and economic collapse, may align. This creates fertile ground for ingrained cognitive biases, which can disrupt the systematic approach on which science usually relies.

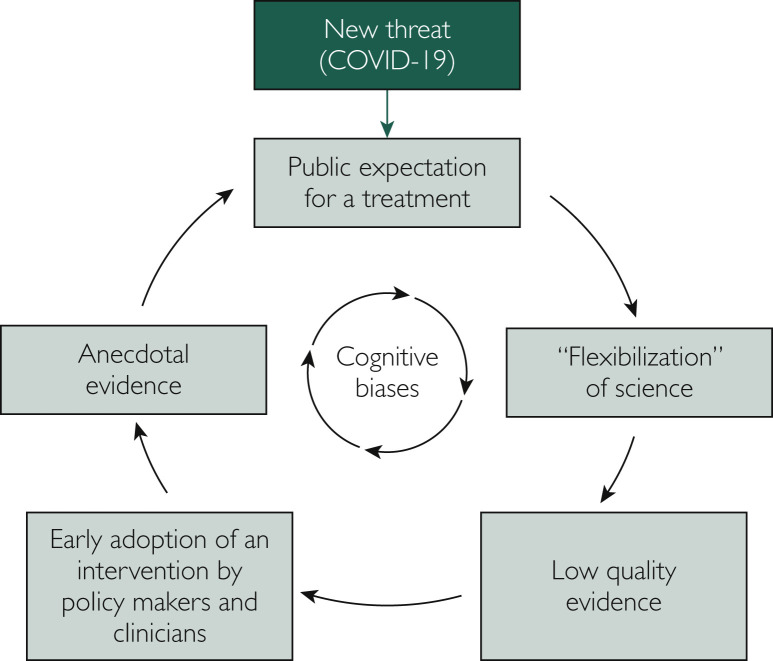

The senior author of the first published clinical study on the use of hydroxychloroquine for COVID-19 had stated in an interview with the French newspaper Le Monde that “doctors can and should think like doctors, not like methodologists.”1 After the release of its promising results, this study attracted wide attention and exerted considerable influence. Even though its methodological limitations were evident, this work generated several claims about the efficacy of hydroxychloroquine for patients with COVID-19. In ordinary times, the manuscript would have undergone an extensive peer-review process that could have raised substantive concerns. A subsequent high-profile paper associating the use of hydroxychloroquine with increased mortality in patients with COVID-19 had to be retracted2 after scientists pointed out problems such as mortality rates that did not match official Australian reports, the failure to release the dataset for independent analysis, and the lack of a thorough ethical review. These are examples of a phenomenon we call the “flexibilization” of science, part of a vicious cycle underpinned by cognitive biases and triggered by the COVID-19 pandemic (Figure).

Figure. Vicious cycle triggered by the COVID-19 pandemic.

The term “flexibilization” here refers to a loosening of methodological standards and the production of low-quality studies, leading to unreliable data and, later in the cycle, to anecdotal evidence. While low-quality evidence may generate new hypotheses that ultimately benefit patients, it can also have the opposite effect. Several historical examples show how careful we need to be before making decisions based on the available evidence.3,4 In fact, some “surprising” results in the published literature have taught us that therapeutic approaches initially found to be promising were instead harming patients. For this reason, science and clinical research have developed rigorous methodological standards to produce high-quality studies that give us greater confidence in the evidence while preventing unnecessary harm.

Contrary to what was once widely accepted as a normative model, human beings are usually not rational in their decision-making; instead, they often rely on heuristics that may have evolved to compensate for our limited computational capacity. Take, for example, our inclination to search for evidence that confirms our prior beliefs, a tendency known as confirmation bias. We are naturally prone to this sort of intellectual ambush, and there is evidence that scientists are not immune to these systematic errors.5 For some people, once a belief has been adopted, even strong evidence against it is not enough to prompt a reinterpretation, a mechanism known as belief perseverance. In extreme cases, exposing people to evidence that is inconsistent with their understanding may lead them to reject the opposing hypothesis even more strongly, a phenomenon called the backfire effect. For instance, among people highly concerned about vaccine side effects, receiving corrective information about the influenza vaccine from the Centers for Disease Control and Prevention reduced their intention to get vaccinated.6 At a time of pressing demand for cost-effective results, it is important to distinguish clearly between the scientific method and scientists. The former is an enterprise that aims to reduce systematic error; the latter are susceptible to all sorts of biases when they do not follow a systematic approach.

When low-quality studies are produced, the risk of misinterpretation increases. In a highly connected world with increasing public exposure, researchers may be tempted to report their findings inaccurately, either by overstating the benefits or by downplaying the harms of a specific treatment. This practice is known as “spin” in the reporting of clinical research.7 Because “spin” is highly prevalent in the medical literature,8 one might speculate about the motivations behind it. In the context of the COVID-19 pandemic, it seems to be driven mostly by an intrinsic desire to find an effective treatment for a disease that is having a major impact on societies across the world. Other motivations, however, include lack of knowledge about methodological standards, opportunistic publishing, and an intent to influence readers.9 Studies evaluating the impact of “spin” have shown that clinicians are more likely to perceive a treatment as beneficial when “spin” is present.10 The general public may be even more susceptible to “spin”, especially people who lack the expertise to avoid misinterpreting research data. This problem could be amplified by the Dunning-Kruger effect, a cognitive bias in which unskilled individuals tend to overestimate their ability at a given task.

During the COVID-19 pandemic, the early adoption of new interventions by clinicians and policy makers on the basis of promising but often low-quality data is creating a scenario from which anecdotal evidence may emerge. Several countries have endorsed the use of hydroxychloroquine for COVID-19 in clinical settings outside ongoing research protocols. For example, Brazil’s Ministry of Health has released a new treatment guideline for COVID-19 recommending either hydroxychloroquine or chloroquine for patients with mild symptoms, such as cough, fatigue, anosmia, or headache,11 ignoring the current best evidence. This off-label use allows claims of efficacy to be made on the basis of informal reports of patients who recovered after taking the medication, adding an important layer of confusion and misinterpretation. Anecdotal evidence is especially likely to arise in mild disease, in which an apparent drug effect is easily confounded with the natural course of an illness that would improve with supportive care alone.12 The belief that hydroxychloroquine might be an effective intervention for COVID-19 led to hoarding of the medication by the general public and health care workers around the world. This created uncertainty about the drug’s availability for patients who need it, especially those with rheumatologic diseases and those in low- and middle-income countries with high rates of malaria.

Although anecdotal evidence is arguably the lowest-quality form of scientific proof, there are several reasons to believe that it may be accorded more credence than it truly merits. First, anecdotal evidence has a narrative quality that resonates with intuitive patterns of learning. Second, this sort of information is usually shared by a known person, which may add an affective valence to the message. Finally, the availability heuristic leads people to misjudge the probability of an event based on how easily examples of it can be recalled.

We emphasize the importance of interrupting the “flexibilization” of science in order to break a vicious cycle that may do more harm than good. While clinical studies need to be conducted quickly during a global crisis, they must still follow methodological standards in order to produce reliable, high-quality evidence. The administrative procedures required to perform a well-designed randomized controlled trial, for example, can be accelerated, but the pandemic should not be an excuse to overlook essential methodological standards. In the era of COVID-19, we need to be even more vigilant about our own cognitive biases and limitations, and to avoid the “flexibilization” of science, which may cause significant harm to our society.

Footnotes

Potential Competing Interests: The authors report no competing interests.


References

- 1. Gautret P., Lagier J.-C., Parola P., Hoang V.T., Meddeb L., Mailhe M. Hydroxychloroquine and azithromycin as a treatment of COVID-19: results of an open-label non-randomized clinical trial. Int J Antimicrob Agents. 2020;56(1):105949. doi: 10.1016/j.ijantimicag.2020.105949. [Retracted]

- 2. Mehra M.R., Ruschitzka F., Patel A.N. Retraction—hydroxychloroquine or chloroquine with or without a macrolide for treatment of COVID-19: a multinational registry analysis. Lancet. 2020;395(10240):1820. doi: 10.1016/S0140-6736(20)31324-6.

- 3. Contopoulos-Ioannidis D.G., Ntzani E.E., Ioannidis J.P.A. Translation of highly promising basic science research into clinical applications. Am J Med. 2003;114(6):477–484. doi: 10.1016/s0002-9343(03)00013-5.

- 4. Ioannidis J.P.A. Why most published research findings are false. PLoS Med. 2005;2(8):e124. doi: 10.1371/journal.pmed.0020124.

- 5. Emerson G.B., Warme W.J., Wolf F.M., Heckman J.D., Brand R.A., Leopold S.S. Testing for the presence of positive-outcome bias in peer review: a randomized controlled trial. Arch Intern Med. 2010;170(21):1934–1939. doi: 10.1001/archinternmed.2010.406.

- 6. Nyhan B., Reifler J. Does correcting myths about the flu vaccine work? An experimental evaluation of the effects of corrective information. Vaccine. 2015;33(3):459–464. doi: 10.1016/j.vaccine.2014.11.017.

- 7. Mahtani K.R. “Spin” in reports of clinical research. Evid Based Med. 2016;21(6):201–202. doi: 10.1136/ebmed-2016-110570.

- 8. Boutron I., Dutton S., Ravaud P., Altman D.G. Reporting and interpretation of randomized controlled trials with statistically nonsignificant results for primary outcomes. JAMA. 2010;303(20):2058–2064. doi: 10.1001/jama.2010.651.

- 9. Yavchitz A., Ravaud P., Altman D.G., Moher D., Hrobjartsson A., Lasserson T. A new classification of spin in systematic reviews and meta-analyses was developed and ranked according to the severity. J Clin Epidemiol. 2016;75:56–65. doi: 10.1016/j.jclinepi.2016.01.020.

- 10. Boutron I., Altman D.G., Hopewell S., Vera-Badillo F., Tannock I., Ravaud P. Impact of spin in the abstracts of articles reporting results of randomized controlled trials in the field of cancer: the SPIIN randomized controlled trial. J Clin Oncol. 2014;32(36):4120–4126. doi: 10.1200/JCO.2014.56.7503.

- 11. Ministério da Saúde. Orientações do Ministério da Saúde para Manuseio Medicamentoso Precoce de Pacientes com diagnóstico da COVID-19 [Ministry of Health guidance on the early pharmacological management of patients diagnosed with COVID-19]. https://portalarquivos.saude.gov.br/images/pdf/2020/May/21/Nota-informativa---Orienta----es-para-manuseio-medicamentoso-precoce-de-pacientes-com-diagn--stico-da-COVID-19.pdf. Published May 20, 2020. Accessed June 9, 2020.

- 12. Wu Z., McGoogan J.M. Characteristics of and important lessons from the coronavirus disease 2019 (COVID-19) outbreak in China: summary of a report of 72314 cases from the Chinese Center for Disease Control and Prevention. JAMA. 2020;323(13):1239–1242. doi: 10.1001/jama.2020.2648.
