Abstract

By 2040, age-related macular degeneration (AMD) will affect ~288 million people worldwide. Identifying individuals at high risk of progression to late AMD, the sight-threatening stage, is critical for clinical actions, including medical interventions and timely monitoring. Although deep learning has shown promise in diagnosing/screening AMD using color fundus photographs, it remains difficult to predict individuals’ risks of late AMD accurately. For both tasks, these initial deep learning attempts have remained largely unvalidated in independent cohorts. Here, we demonstrate how deep learning and survival analysis can predict the probability of progression to late AMD using 3298 participants (over 80,000 images) from the Age-Related Eye Disease Studies AREDS and AREDS2, the largest longitudinal clinical trials in AMD. When validated against an independent test data set of 601 participants, our model achieved high prognostic accuracy (5-year C-statistic 86.4 (95% confidence interval 86.2–86.6)) that substantially exceeded that of retinal specialists using two existing clinical standards (81.3 (81.1–81.5) and 82.0 (81.8–82.3), respectively). Interestingly, our approach offers additional strengths over the existing clinical standards in AMD prognosis (e.g., risk ascertainment above 50%) and is likely to be highly generalizable, given the breadth of training data from 82 US retinal specialty clinics. Indeed, during external validation through training on AREDS and testing on AREDS2 as an independent cohort, our model retained substantially higher prognostic accuracy than existing clinical standards. These results highlight the potential of deep learning systems to enhance clinical decision-making in AMD patients.

Subject terms: Image processing, Machine learning, Prognostic markers, Eye manifestations

Introduction

Age-related macular degeneration (AMD) is the leading cause of legal blindness in developed countries1. Through global demographic changes, the number of people with AMD worldwide is projected to reach 288 million by 20402. The disease is classified into early, intermediate, and late stages3. Late AMD, the stage associated with severe visual loss, occurs in two forms, geographic atrophy (GA) and neovascular AMD (NV). Making accurate time-based predictions of progression to late AMD is clinically critical. This would enable improved decision-making regarding: (i) medical treatments, especially oral supplements known to decrease progression risk, (ii) lifestyle interventions, particularly smoking cessation and dietary changes, and (iii) intensity of patient monitoring, e.g., frequent reimaging in clinic and/or tailored home monitoring programs4–8. It would also aid the design of future clinical trials, which could be enriched for participants with a high risk of progression events9.

Color fundus photography (CFP) is the most widespread and accessible retinal imaging modality used worldwide; it is the most highly validated imaging modality for AMD classification and prediction of progression to late disease10,11. Currently, two existing standards are available clinically for using CFP to predict the risk of progression. However, both of these were developed using data from the AREDS only; now, an expanded data set with more progression events is available following the completion of the AREDS25. Of the two existing standards, the most commonly used is the five-step Simplified Severity Scale (SSS)10. This is a points-based system whereby an examining physician scores the presence of two AMD features (macular drusen and pigmentary abnormalities) in both eyes of an individual. From the total score of 0–4, a 5-year risk of late AMD is then estimated. The other standard is an online risk calculator12. Like the SSS, its inputs include the presence of macular drusen and pigmentary abnormalities; however, it can also receive the individual’s age, smoking status, and basic genotype information consisting of two SNPs (when available). Unlike the SSS system, the online risk calculator predicts the risk of progression to late AMD, GA, and NV at 1–10 years.
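As a rough illustration of how the points-based SSS works, the following sketch counts one point per risk feature per eye and looks up a 5-year risk. The function names are hypothetical, and the risk values are the approximate figures commonly quoted for the published scale rather than values stated in this article. The sketch also omits the scale's additional rules, such as the handling of intermediate drusen and of eyes that already have late AMD.

```python
# Approximate 5-year risks of late AMD per SSS score. These values are an
# assumption taken from the published scale, not from this article.
APPROX_5Y_RISK = {0: 0.005, 1: 0.03, 2: 0.12, 3: 0.25, 4: 0.50}


def sss_score(drusen_right, pigment_right, drusen_left, pigment_left):
    """One point per risk feature per eye, giving a total of 0-4."""
    features = (drusen_right, pigment_right, drusen_left, pigment_left)
    return sum(1 for present in features if present)


def sss_five_year_risk(score):
    """Look up the approximate 5-year risk for a given SSS score."""
    return APPROX_5Y_RISK[score]


# Example: drusen in both eyes, pigmentary abnormalities in the left eye only.
score = sss_score(True, False, True, True)
risk = sss_five_year_risk(score)
```

Because the lookup has only five entries, the estimated risk cannot exceed the value assigned to a score of 4.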

Both existing clinical standards face limitations. First, the ascertainment of the SSS features from CFP or clinical examination requires significant clinical expertise, typical in retinal specialists, but remains time-consuming and error-prone13, even when performed by expert graders in a reading center11. Second, the SSS relies on two hand-crafted features and cannot receive other potentially risk-determining features. Recent work applying deep learning (DL)14 has shown promise in the automated diagnosis and triage of conditions including cardiac, pediatric, dermatological, and retinal diseases13,15–26, but not in predicting the risk of AMD progression on a large scale or at the patient level27. Specifically, Burlina et al. reported on the use of DL for estimating the AREDS 9-step severity grades of individual eyes, based on CFP in the AREDS data set27–29. However, this approach relied on previously published 5-year risk estimates at the severity class level30, rather than using the ground truth of actual progression/non-progression at the level of individual eyes, or the timing and subtype of any progression events. In addition, no external validation using an independent data set was performed in that study. Babenko et al.28 proposed a model to predict risk of progression to neovascular AMD only (i.e., not late AMD or GA). Importantly, the model was designed to predict 1-year risks only, i.e., at one fixed and very short interval only. Schmidt-Erfurth et al.29 proposed to use OCT images to predict progression to late AMD. Specifically, they used a data set (495 eyes, containing only 159 eyes that progressed to late AMD) with follow-up of 2 years. Their data set was annotated by two retinal specialists (rather than unified grading by reading center experts using a published protocol).

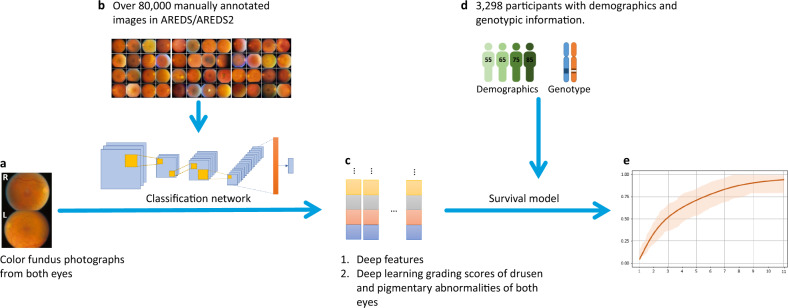

Here, we developed a DL architecture to predict progression with improved accuracy and transparency in two steps: image classification followed by survival analysis (Fig. 1). The model is developed and clinically validated on two data sets from the Age-Related Eye Disease Studies (AREDS31 and AREDS232), the largest longitudinal clinical trials in AMD (Fig. 2). The framework and data sets are described in detail in the “Methods” section.

Fig. 1. The two-step architecture of the framework.

a Raw color fundus photographs (CFP; field 2, i.e., 30° imaging field centered at the fovea). b Deep classification network, trained with CFP (all manually graded by reading center human experts). c Resulting deep features or deep learning grading. d Survival model, trained with imaging data, and participant demographic information, with/without genotype information: ARMS2 rs10490924, CFH rs1061170, and 52-SNP Genetic Risk Score. e Late age-related macular degeneration survival probability.

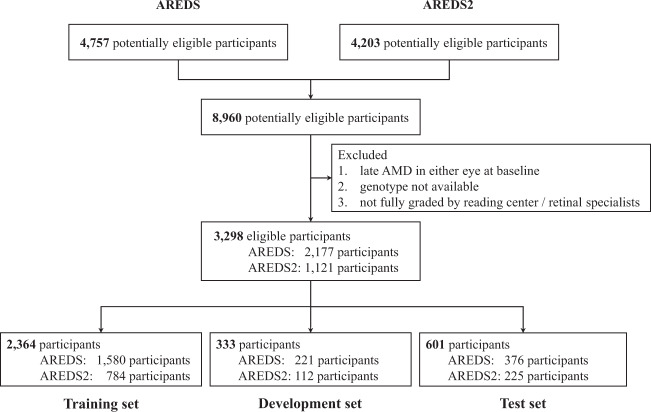

Fig. 2. Creation of the study data sets.

To avoid ‘cross-contamination’ between the training and test data sets, no participant was in more than one group.
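A participant-level split of this kind can be sketched as follows. This is a minimal illustration, not the authors' code: the function name, the `test_fraction` value, and the random seed are all hypothetical.

```python
import random


def split_by_participant(image_records, test_fraction=0.2, seed=0):
    """Split image records so that each participant lands in exactly one set.

    image_records: list of (participant_id, image_name) tuples.
    """
    # Shuffle participants, not images, so all images of one person stay together.
    participant_ids = sorted({pid for pid, _ in image_records})
    rng = random.Random(seed)
    rng.shuffle(participant_ids)
    n_test = max(1, round(len(participant_ids) * test_fraction))
    test_ids = set(participant_ids[:n_test])
    train = [rec for rec in image_records if rec[0] not in test_ids]
    test = [rec for rec in image_records if rec[0] in test_ids]
    return train, test


# Example: 10 participants with 3 images each.
records = [(pid, f"img_{pid}_{k}.png") for pid in range(10) for k in range(3)]
train_set, test_set = split_by_participant(records)
```

Splitting at the image level instead would allow images of the same person to appear in both sets, which tends to inflate test accuracy.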

Our framework has several important strengths. First, it performs progression predictions directly from CFP over a wide time interval (1–12 years). Second, training and testing were based on the ground truth of reading center-graded progression events at the level of individuals. Both training and testing benefitted from an expanded data set with many more progression events, achieved by using data from the AREDS2 alongside AREDS, for the first time in DL studies. Third, our framework can predict the risk not only of late AMD, but also of GA and NV separately. This is important since treatment approaches for the two subtypes of late AMD are very different: NV needs to be diagnosed extremely promptly, since delay in access to intravitreal anti-VEGF injections is usually associated with very poor visual outcomes33, while various therapeutic options to slow GA enlargement are under investigation34,35. Finally, the two-step approach has important advantages. By separating the DL extraction of retinal features from the survival analysis, the final predictions are more explainable and biologically plausible, and error analysis is possible. By contrast, end-to-end ‘black-box’ DL approaches are less transparent and may be more susceptible to failure36.

Results

Deep learning models trained on the combined AREDS/AREDS2 training sets and validated on the combined AREDS/AREDS2 test sets

The characteristics of the participants are shown in Table 1. The characteristics of the images are shown in Supplementary Table 1. The overall framework of our method is shown in Fig. 1 and described in detail in the “Methods” section. In short, first, a deep convolutional neural network (CNN) was adapted to (i) extract multiple highly discriminative deep features, or (ii) estimate grades for drusen and pigmentary abnormalities (Fig. 1b, c). Second, a Cox proportional hazards model was used to predict probability of progression to late AMD (and GA/NV, separately), based on the deep features (deep features/survival) or the DL grading (DL grading/survival) (Fig. 1d, e). In this step, additional participant information could be added, such as age, smoking status, and genetics. Separately, all of the baseline images in the test set were graded by 88 (AREDS) and 192 (AREDS2) retinal specialists. By using these grades as input to either the SSS or the online calculator, we computed the prediction results of the two existing standards: ‘retinal specialists/SSS’ and ‘retinal specialists/calculator’.
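The second step can be illustrated with the standard Cox proportional hazards relationship, in which the survival probability at a given horizon is the baseline survival raised to the power of the exponentiated linear predictor. The sketch below assumes the baseline survival and coefficients have already been estimated; the function name and the example numbers are hypothetical.

```python
import math


def progression_probability(baseline_survival, coefficients, features):
    """Probability of progression by a time horizon under a Cox model.

    baseline_survival: S0(t), the baseline survival probability at the horizon.
    coefficients, features: equal-length sequences (beta and x).
    """
    linear_predictor = sum(b * x for b, x in zip(coefficients, features))
    # Cox model: S(t | x) = S0(t) ** exp(beta . x)
    survival = baseline_survival ** math.exp(linear_predictor)
    return 1.0 - survival


# Example with made-up values: two image-derived features plus one covariate.
p = progression_probability(0.9, [0.8, 0.5, 0.03], [1.0, 0.0, 2.0])
```

In this formulation the inputs `features` could be either deep features or DL gradings, optionally extended with age, smoking status, or genotype.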

Table 1.

Characteristics of AREDS and AREDS2 participants.

| Characteristics | AREDS | AREDS2 |
|---|---|---|
| Participant characteristics | | |
| Number of participants | 2177 | 1121 |
| Age, mean (SD), y | 68.4 (4.8) | 70.9 (7.9) |
| Smoking history (never, former, current), % | 49.6/45.6/4.8 | 45.9/48.5/5.5 |
| CFH rs1061170 (TT/CT/CC), % | 33.3/46.4/20.3 | 17.8/41.7/40.5 |
| ARMS2 rs10490924 (GG/GT/TT), % | 55.2/36.5/8.3 | 40.5/42.8/16.7 |
| AMD Genetic Risk Score, mean (SD) | 14.2 (1.4) | 15.2 (1.3) |
| Follow-up, median (IQR), y | 10.0 (3.0) | 5.0 (2.0) |
| Progression to late AMD (classified by Reading Center) | | |
| Late AMD: % of participants at year 1/2/3/4/5/all years | 1.5/3.9/6.8/8.6/10.7/18.5 | 9.1/16.2/23.8/32.6/38.1/38.8 |
| GA: % of participants at year 1/2/3/4/5/all years | 0.6/1.5/2.8/4.0/5.1/10.1 | 4.8/9.0/13.0/17.8/20.8/21.0 |
| NV: % of participants at year 1/2/3/4/5/all years | 0.9/2.4/4.0/4.6/5.5/8.4 | 4.3/7.2/10.8/14.7/17.3/17.8 |

The AREDS contained a wide spectrum of baseline disease severity, from no age-related macular degeneration (AMD) to high-risk intermediate AMD. The AREDS2 contained a high level of baseline disease severity, i.e., a high proportion of eyes at high risk of progression to late AMD.

SD, standard deviation; y, year; IQR, interquartile range; AMD, age-related macular degeneration.

The prediction accuracy of the approaches was compared using the 5-year C-statistic as the primary outcome measure. The 5-year C-statistic of the two DL approaches met and substantially exceeded that of both existing standards (Table 2). For predictions of progression to late AMD, the 5-year C-statistic was 86.4 (95% confidence interval 86.2–86.6) for deep features/survival, 85.1 (85.0–85.3) for DL grading/survival, 82.0 (81.8–82.3) for retinal specialists/calculator, and 81.3 (81.1–81.5) for retinal specialists/SSS. For predictions of progression to GA, the equivalent results were 89.6 (89.4–89.8), 87.8 (87.6–88.0), and 82.6 (82.3–82.9), respectively; these are not available for retinal specialists/SSS, since the SSS does not make separate predictions for GA or NV. For predictions of progression to NV, the equivalent results were 81.1 (80.8–81.4), 80.2 (79.8–80.5), and 80.0 (79.7–80.4), respectively.
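For readers unfamiliar with the C-statistic, the sketch below implements one common definition (Harrell's concordance index), optionally truncated at a time horizon such as 5 years. This section does not state which estimator was used, so the code is an illustration under that assumption, and the function name and example data are hypothetical. It returns a value between 0 and 1, whereas the article reports values scaled to 0–100.

```python
def c_statistic(times, events, risks, horizon=None):
    """Harrell's concordance index, optionally truncated at a time horizon.

    times: follow-up time per participant.
    events: 1 if progression was observed at that time, 0 if censored.
    risks: predicted risk score (higher means earlier expected progression).
    """
    concordant = 0.0
    comparable = 0
    n = len(times)
    for i in range(n):
        # Participant i must have an observed event (within the horizon, if set).
        if not events[i] or (horizon is not None and times[i] > horizon):
            continue
        for j in range(n):
            # Participant j must still be event-free after i's event time.
            if times[j] <= times[i]:
                continue
            comparable += 1
            if risks[i] > risks[j]:
                concordant += 1.0
            elif risks[i] == risks[j]:
                concordant += 0.5  # tied predictions count as half
    return concordant / comparable if comparable else float("nan")


# Example: risks perfectly ordered with respect to progression times.
c = c_statistic([2, 5, 8, 10], [1, 1, 0, 0], [0.9, 0.6, 0.3, 0.1], horizon=5)
```

A value of 0.5 corresponds to random ordering and 1.0 to perfect ordering of participants by time to progression.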

Table 2.

The C-statistic (95% confidence interval) of the survival models in predicting risk of progression to late age-related macular degeneration on the combined AREDS/AREDS2 test sets (601 participants).

| Models | Year 1 | Year 2 | Year 3 | Year 4 | Year 5 | All years |
|---|---|---|---|---|---|---|
| Late AMD | | | | | | |
| Deep features/survival | 87.8 (87.5,88.1) | 85.8 (85.4,86.2) | 86.3 (86.1,86.6) | 86.7 (86.5,86.9) | 86.4 (86.2,86.6) | 86.7 (86.5,86.8) |
| DL grading/survival | 84.9 (84.6,85.3) | 84.1 (83.8,84.4) | 84.8 (84.5,85.0) | 84.8 (84.6,85.0) | 85.1 (85.0,85.3) | 84.9 (84.6,85.3) |
| Retinal specialists/calculator | – | 78.3 (77.9,78.8) | 81.8 (81.5,82.1) | 82.7 (82.4,82.9) | 82.0 (81.8,82.3) | – |
| Retinal specialists/SSS^a | – | – | – | – | 81.3 (81.1,81.5) | – |
| Geographic atrophy | | | | | | |
| Deep features/survival | 89.2 (88.9,89.6) | 91.0 (90.7,91.2) | 88.7 (88.4,88.9) | 89.1 (88.9,89.3) | 89.6 (89.4,89.8) | 89.2 (89.1,89.4) |
| DL grading/survival | 88.6 (88.3,88.9) | 86.6 (86.4,86.9) | 87.6 (87.4,87.9) | 88.1 (87.9,88.3) | 87.8 (87.6,88.0) | 88.6 (88.3,88.9) |
| Retinal specialists/calculator | – | 77.5 (76.9,78.0) | 81.2 (80.8,81.6) | 82.0 (81.6,82.3) | 82.6 (82.3,82.9) | – |
| Retinal specialists/SSS^a | – | – | – | – | – | – |
| Neovascular AMD | | | | | | |
| Deep features/survival | 85.4 (84.9,85.9) | 77.9 (77.3,78.5) | 81.7 (81.3,82.1) | 81.7 (81.4,82.1) | 81.1 (80.8,81.4) | 82.1 (81.8,82.4) |
| DL grading/survival | 78.0 (77.4,78.6) | 78.4 (77.9,78.9) | 79.2 (78.8,79.6) | 79.0 (78.7,79.4) | 80.2 (79.8,80.5) | 78.0 (77.4,78.6) |
| Retinal specialists/calculator | – | 75.5 (74.8,76.2) | 81.7 (81.2,82.1) | 81.4 (81.0,81.8) | 80.0 (79.7,80.4) | – |
| Retinal specialists/SSS^a | – | – | – | – | – | – |

AMD, age-related macular degeneration; DL, deep learning; SSS, Simplified Severity Scale.

^a Retinal specialists/SSS makes predictions at one fixed interval of 5 years and for late AMD only (i.e., not by disease subtype); unlike all other models, for SSS, late AMD is defined as NV or central GA (instead of NV or any GA). Please refer to Supplementary Table 2 for results using genotype information, and Supplementary Table 3 for multivariate analysis.

Similarly, for predictions at 1–4 years, the C-statistic was higher in all cases for the two DL approaches than the retinal specialists/calculator approach. Of the two DL approaches, the C-statistics of deep features/survival were higher in most cases than those of DL grading/survival. Predictions at these time intervals were not available for retinal specialists/SSS, since the SSS does not make predictions at any interval other than 5 years. Regarding the separate predictions of progression to GA and NV, deep features/survival also provided the most accurate predictions at most time intervals. Overall, DL-based image analysis provided more accurate predictions than those from retinal specialist grading using the two existing standards. For deep feature extraction, this may reflect the fact that DL is unconstrained by current medical knowledge and not limited to two hand-crafted features.

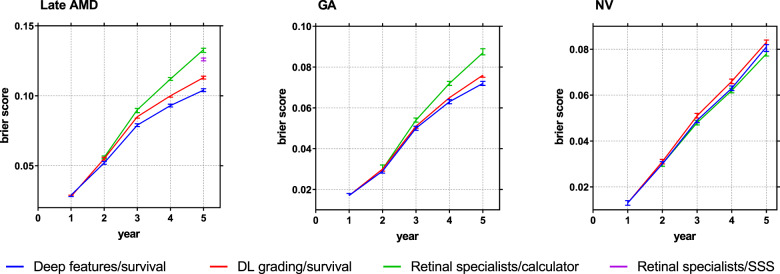

In addition, the calibration of the predictions was compared using the Brier score (Fig. 3). For 5-year predictions of late AMD, the Brier score was lowest (i.e., optimal) for deep features/survival. We also split the data into five groups based on the AREDS SSS at baseline. We compared calibration plots for deep features/survival, DL grading/survival, and retinal specialist/survival with the actual progression data for the five groups (Supplementary Fig. 1). The actual progression data for the five groups are shown as lines (Kaplan–Meier curves) and the predictions of our models are shown as lines with markers. The figure shows that the predictions of the deep features/survival model correspond better to the actual progression data than those of the other two models.

Fig. 3. Prediction error curves.

Prediction error curves of the survival models in predicting risk of progression to late age-related macular degeneration on the combined AREDS/AREDS2 test sets (601 participants), using the Brier score (95% confidence interval).
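The Brier score at a given time point is the mean squared difference between the predicted probability of progression and the observed outcome. The sketch below is a simplified version that skips participants censored before the horizon; published implementations usually reweight for censoring instead, and this section does not specify which variant was used. The function name and example values are hypothetical.

```python
def brier_score_at(horizon, times, events, predicted_probabilities):
    """Mean squared error between predicted and observed progression by `horizon`.

    Simplification: participants censored before the horizon are skipped
    rather than reweighted.
    """
    squared_errors = []
    for t, event, p in zip(times, events, predicted_probabilities):
        if t <= horizon and event:
            outcome = 1.0   # progressed by the horizon
        elif t > horizon:
            outcome = 0.0   # known to be progression-free at the horizon
        else:
            continue        # censored before the horizon: status unknown
        squared_errors.append((p - outcome) ** 2)
    if not squared_errors:
        return float("nan")
    return sum(squared_errors) / len(squared_errors)


# Example: the third participant is censored at year 3 and is skipped.
score_5y = brier_score_at(5, [2, 8, 3], [1, 0, 0], [0.8, 0.1, 0.5])
```

Lower values indicate better agreement between predicted and observed progression; evaluating the function over a range of horizons gives a prediction error curve.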

Deep learning models trained separately on individual cohorts (either AREDS or AREDS2) and validated on the combined AREDS/AREDS2 test sets

Models trained on the combined AREDS/AREDS2 cohort (Table 2) were generally more accurate than those trained on either individual cohort (Table 3), the exception being DL grading/survival for GA when trained on AREDS alone. The combined models have the additional advantage of improved generalizability. Indeed, one challenge of DL has been that generalizability to populations outside the training set can be variable. In this instance, the widely distributed sites and diverse clinical settings of AREDS/AREDS2 participants, together with the variety of CFP cameras used, help provide some assurance of broader generalizability.

Table 3.

The 5-year C-statistic (95% CI) results of models trained on only AREDS or only AREDS2, and validated on the combined AREDS/AREDS2 test sets (601 participants), without using genotype information.

| Models | Trained on AREDS | Trained on AREDS2 |
|---|---|---|
| Late AMD | | |
| Deep features/survival | 85.7 (85.5,85.9) | 83.9 (83.7,84.1) |
| DL grading/survival | 84.7 (84.5,84.9) | 82.1 (81.8,82.3) |
| Geographic atrophy | | |
| Deep features/survival | 89.3 (89.1,89.5) | 84.7 (84.4,85.0) |
| DL grading/survival | 90.2 (90.0,90.4) | 85.2 (84.9,85.5) |
| Neovascular AMD | | |
| Deep features/survival | 79.6 (79.3,80.0) | 74.0 (73.6,74.5) |
| DL grading/survival | 76.6 (76.2,76.9) | 75.5 (75.1,75.9) |

Deep learning models trained on AREDS and externally validated on AREDS2 as an independent cohort

In separate experiments, to externally validate the models on an independent data set, we trained the models on AREDS (2177 participants) and tested them on AREDS2 (1121 participants). Table 4 shows that deep features/survival demonstrated the highest accuracy of 5-year predictions in all scenarios; DL grading/survival also had higher accuracy than retinal specialists/calculator for late AMD and GA, and equal accuracy for NV.

Table 4.

The 5-year C-statistic (95% CI) results of models trained on the entire AREDS and tested on the entire AREDS2 (1121 participants), without using genotype information.

| Models | Tested on the entire AREDS2 |
|---|---|
| Late AMD | |
| Deep features/survival | 71.0 (70.2,71.7) |
| DL grading/survival | 69.7 (68.9,70.5) |
| Retinal specialists/calculator | 63.9 (63.2,64.6) |
| Retinal specialists/SSS^a | 62.5 (62.3,62.7) |
| Geographic atrophy | |
| Deep features/survival | 75.3 (74.5,76.0) |
| DL grading/survival | 75.0 (74.0,76.0) |
| Retinal specialists/calculator | 64.4 (63.6,65.2) |
| Retinal specialists/SSS^a | – |
| Neovascular AMD | |
| Deep features/survival | 62.8 (61.9,63.8) |
| DL grading/survival | 61.8 (61.0,62.7) |
| Retinal specialists/calculator | 61.8 (60.8,62.9) |
| Retinal specialists/SSS^a | – |

^a Retinal specialists/SSS makes predictions at one fixed interval of 5 years and for late AMD only (i.e., not by disease subtype); unlike all other models, for SSS, late AMD is defined as NV or central GA (instead of NV or any GA).

Survival models with additional input of genotype

For all approaches possible, the predictions were tested with the additional input of genotype (Table 5). Interestingly, adding the genotype data, even the 52 SNP-based Genetic Risk Score (GRS; see “Methods” section) available only in rare research contexts37, did not improve the accuracy for deep features/survival or DL grading/survival; by contrast, adding just two SNPs (the maximum handled by the calculator) did improve the accuracy modestly for the retinal specialists/calculator approach. Multivariate analysis (Supplementary Table 2) demonstrated that deep features/DL grading, age, and AMD GRS contributed significantly to the survival models. The non-reliance of the DL approaches on genotype information favors their accessibility, as genotype data are typically unavailable for patients currently seen in clinical practice. It suggests that adding genotype information may partially compensate for the inferior accuracy obtained from human gradings, but contributes little to the accuracy of DL approaches, particularly deep feature extraction.

Table 5.

The accuracy (C-statistic, 95% confidence interval) of the three different approaches in predicting risk of progression to late AMD on the combined AREDS/AREDS2 test sets (601 participants), with the inclusion of accompanying genotype information.

| (a) Models | Year 1 | Year 2 | Year 3 | Year 4 | Year 5 | All years |
|---|---|---|---|---|---|---|
| Late AMD | | | | | | |
| Deep features/survival | 88.8 (88.0,89.7) | 85.4 (84.4,86.4) | 86.1 (85.2,86.9) | 86.9 (86.1,87.6) | 86.6 (85.8,87.4) | 86.8 (86.1,87.6) |
| DL grading/survival | 89.4 (88.2,90.6) | 83.8 (82.4,85.1) | 84.0 (83.0,85.1) | 85.0 (84.2,85.8) | 84.9 (84.2,85.6) | 85.5 (84.9,86.2) |
| Retinal specialists/calculator | – | 78.7 (78.3,79.1) | 82.4 (82.1,82.7) | 83.6 (83.3,83.8) | 83.1 (82.8,83.3) | – |
| Geographic atrophy | | | | | | |
| Deep features/survival | 89.5 (88.4,90.5) | 90.7 (89.9,91.5) | 88.6 (87.3,89.8) | 89.0 (88.0,89.9) | 89.5 (88.7,90.3) | 89.4 (88.7,90.2) |
| DL grading/survival | 87.0 (86.0,88.0) | 86.5 (85.6,87.5) | 85.9 (85.0,86.8) | 87.0 (86.3,87.7) | 87.6 (87.0,88.2) | 87.5 (87.0,88.0) |
| Retinal specialists/calculator | – | 77.6 (77.1,78.1) | 81.8 (81.5,82.2) | 83.0 (82.7,83.3) | 83.2 (83.0,83.5) | – |
| Neovascular AMD | | | | | | |
| Deep features/survival | 85.8 (84.6,87.0) | 79.1 (77.0,81.2) | 81.9 (80.6,83.3) | 82.4 (81.2,83.6) | 81.7 (80.7,82.7) | 82.6 (81.7,83.5) |
| DL grading/survival | 87.3 (86.3,88.3) | 77.3 (75.1,79.5) | 77.3 (75.6,79.0) | 78.7 (77.1,80.2) | 78.8 (77.4,80.1) | 80.0 (78.8,81.3) |
| Retinal specialists/calculator | – | 77.3 (76.6,77.9) | 82.0 (81.5,82.4) | 82.4 (82.1,82.8) | 80.9 (80.6,81.3) | – |

| (b) Models | Year 1 | Year 2 | Year 3 | Year 4 | Year 5 | All years |
|---|---|---|---|---|---|---|
| Late AMD | | | | | | |
| Deep features/survival | 87.9 (87.6,88.2) | 84.8 (84.4,85.2) | 85.9 (85.6,86.2) | 86.2 (86.0,86.4) | 86.0 (85.8,86.2) | 86.4 (86.2,86.6) |
| DL grading/survival | 84.2 (83.8,84.6) | 84.3 (84.0,84.6) | 84.9 (84.7,85.2) | 84.9 (84.7,85.1) | 85.4 (85.2,85.6) | 84.2 (83.8,84.6) |
| Geographic atrophy | | | | | | |
| Deep features/survival | 89.9 (89.6,90.1) | 90.1 (89.8,90.4) | 88.5 (88.2,88.8) | 88.5 (88.2,88.7) | 89.0 (88.8,89.2) | 88.7 (88.5,88.9) |
| DL grading/survival | 87.0 (86.6,87.3) | 86.2 (85.9,86.5) | 86.7 (86.5,87.0) | 87.1 (86.9,87.3) | 87.0 (86.8,87.2) | 87.0 (86.6,87.3) |
| Neovascular AMD | | | | | | |
| Deep features/survival | 83.7 (83.2,84.2) | 77.6 (77.0,78.3) | 81.5 (81.0,81.9) | 81.9 (81.6,82.3) | 81.3 (80.9,81.6) | 82.3 (82.1,82.6) |
| DL grading/survival | 77.6 (77.0,78.3) | 78.7 (78.2,79.2) | 79.9 (79.5,80.3) | 79.9 (79.5,80.2) | 81.0 (80.7,81.4) | 77.6 (77.0,78.3) |

(a) Use of CFH rs1061170 and ARMS2 rs10490924 status only. (b) Use of CFH/ARMS2 and the 52 SNP-based AMD Genetic Risk Score. The AMD online calculator is able to receive only CFH rs1061170 and ARMS2 rs10490924 status, not the 52 SNP-based AMD Genetic Risk Score.

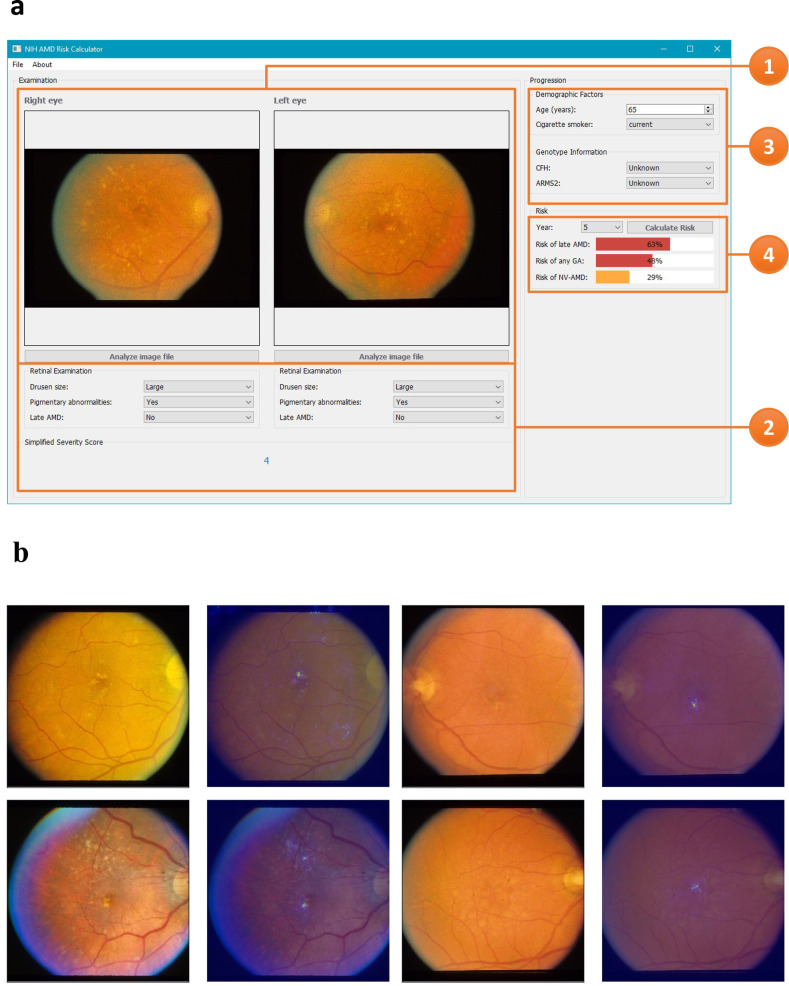

Research software prototype for AMD progression prediction

To demonstrate how these algorithms could be used in practice, we developed a software prototype that allows researchers to test our model with their own data. The application (shown in Fig. 4) receives bilateral CFP and performs autonomous AMD classification and risk prediction. For transparency, the researcher is given (i) grading of drusen and pigmentary abnormalities, (ii) predicted SSS, and (iii) estimated risks of late AMD, GA, and NV, over 1–12 years. This approach allows improved transparency and flexibility: users may inspect the automated gradings, manually adjust these if necessary, and recalculate the progression risks. Following further validation, this software tool may potentially augment human research and clinical practice.

Fig. 4. A screenshot of our research prototype system for AMD risk prediction.

a Screenshot of late AMD risk prediction. 1, Upload bilateral color fundus photographs. 2, Based on the uploaded images, the following information is automatically generated separately for each eye: drusen size status, pigmentary abnormality presence/absence, late AMD presence/absence, and the Simplified Severity Scale score. 3, Enter the demographic and (if available) genotype information, and the time point for prediction. 4, The probability of progression to late AMD (in either eye) is automatically calculated, along with separate probabilities of geographic atrophy and neovascular AMD. b Four selected color fundus photographs with highlighted areas used by the deep learning classification network (DeepSeeNet). Saliency maps were used to represent the visually dominant location (drusen or pigmentary changes) in the image by back-projecting the last layer of the neural network.

Discussion

We developed, trained, and validated a framework for predicting individual risk of late AMD by combining DL and survival analysis. This approach delivers autonomous predictions of a higher accuracy than those from retinal specialists using two existing clinical standards. Hence, the predictions are closer to the ground truth of actual time-based progression to late AMD than when retinal specialists are grading the same bilateral CFP and entering these grades into the SSS or the online calculator. In addition, deep feature extraction generally achieved slightly higher accuracy than DL grading of traditional hand-crafted features.

Table 4 shows that the C-statistic values were lower for AREDS2, as expected, since the majority of its participants were at higher risk of progression38; though more difficult, predicting progression for AREDS2 participants is more representative of a clinically meaningful task. Compared with the results in Table 2, the accuracy of our models decreased less than that of retinal specialists/calculator and retinal specialists/SSS. This demonstrates the relative generalizability of our models. Furthermore, this suggests the ability of deep features/survival to differentiate individuals with relatively similar SSS more accurately than existing clinical standards (since nearly all AREDS2 participants had SSS ≥ 2 at baseline). It is also worth noting that the performance of our models on the AREDS2 data set could probably have been improved further had the models been modified to accommodate the unique characteristics of the AREDS2. For example, since the AREDS is enriched for participants with milder baseline AMD severity, training the models on progressors only, or decreasing the prevalence of non-progressors, might improve performance on the AREDS2. However, for fair comparisons to other results in this study, we kept our models unchanged.
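The size of the decrease for each approach can be checked directly from the late AMD rows of Tables 2 and 4. The short sketch below simply subtracts the reported 5-year C-statistics; the variable names are illustrative.

```python
# 5-year C-statistics for late AMD, copied from Table 2 (combined test set)
# and Table 4 (trained on AREDS, tested on AREDS2).
table2 = {
    "Deep features/survival": 86.4,
    "DL grading/survival": 85.1,
    "Retinal specialists/calculator": 82.0,
    "Retinal specialists/SSS": 81.3,
}
table4 = {
    "Deep features/survival": 71.0,
    "DL grading/survival": 69.7,
    "Retinal specialists/calculator": 63.9,
    "Retinal specialists/SSS": 62.5,
}

# Difference in points between the two evaluation settings, per approach.
drops = {model: round(table2[model] - table4[model], 1) for model in table2}
for model, drop in drops.items():
    print(f"{model}: {drop} points lower on AREDS2")
```

Both DL approaches lose 15.4 points, compared with 18.1 for retinal specialists/calculator and 18.8 for retinal specialists/SSS.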

In addition, unlike the SSS, whose 5-year risk prediction becomes saturated at 50%10, the DL models enable ascertainment of risk above 50%. This may be helpful in justifying medical and lifestyle interventions4,5,8, vigilant home monitoring6,7, and frequent reimaging39, and in planning shorter but highly powered clinical trials9. For example, the AREDS-style oral supplements decrease the risk of developing late AMD by ~25%, but only in individuals at higher risk of disease progression4,5. Similarly, if subthreshold nanosecond laser treatment is approved to slow progression to late AMD8, accurate risk predictions may be very helpful for identifying eyes that may benefit most.

Considering that the DL grading model is better at detecting drusen and pigmentary changes, we also took the grades from the DL grading model and used these as input to the SSS and the online calculator, to generate predictions (Supplementary Table 3). We found that the accuracy of the predictions was higher when using the grades from the DL grading than from the retinal specialists. Hence, it is the combination of the DL grading and the survival approach that provides accurate and automated predictions.

In addition, we compared C-statistic results for three survival models: nnet-survival40, DeepSurv41, and CoxPH (Supplementary Table 4). CoxPH had the best performance using either the deep features or DL grading, though the differences were fairly small.

For all approaches, the accuracy was substantially higher for predictions of GA than NV. The DL-based approaches improved accuracy for both subtypes, but, for NV, insufficiently to reach that obtained for GA. Potential explanations include the partially stochastic nature of NV and/or higher suitability of predicting GA from en face imaging.

Error analysis (i.e., examining the reasons behind inaccurate predictions) was facilitated more easily by our two-step architecture, unlike in end-to-end, ‘black-box’ DL approaches. This revealed that the survival model accounted for most errors, since (i) classification network accuracy was relatively high (Supplementary Fig. 2), and (ii) when perfect classification results (i.e., taken from the ground truth) were used as input to the survival model, the 5-year C-statistic of DL grading/survival on late AMD improved only slightly, from 85.1 (85.0,85.3) to 85.8 (85.6–86.0). Supplementary Figure 3 demonstrates two example cases of progression. In the first case, both participants were the same age (73 years) and had the same smoking history (current), and AREDS SSS scores (4) at baseline. The retinal specialist graded the SSS correctly. However, participant 1 progressed to late AMD at year 2 (any GA at right eye), but participant 2 progressed to late AMD at year 5 (any GA at left eye). Hence, both the retinal specialist/calculator and retinal specialist/SSS approaches incorrectly assigned the same risk of progression to both participants (0.413). However, the deep feature/survival approach correctly assigned a higher risk of progression to participant 1 (0.751) and a lower risk to participant 2 (0.591). In the second case, both participants were the same age (73 years) and had the same smoking history (former). Their AREDS SSS scores at baseline were 3 and 4, respectively. The retinal specialist graded the SSS correctly. However, participant 3 progressed to late AMD at year 2 (NV at right eye), while participant 4 had still not progressed to late AMD by final follow-up at year 11. Hence, both the retinal specialist/calculator and retinal specialist/SSS approaches incorrectly assigned a lower risk of progression to participant 3 (0.259) and a higher risk to participant 4 (0.413). 
However, the deep feature/survival approach correctly assigned a higher risk of progression to participant 3 and a lower risk to participant 4 (0.569 vs 0.528).

The strengths of the study include the application of combining DL image analysis and deep feature extraction with survival analysis to retinal disease. Survival analysis has been used widely in AMD progression research4,38; in this study, it made best use of the data, specifically the timing and nature of any progression events at the individual level. Deep feature extraction has the advantages that the model is unconstrained by current medical knowledge and not limited to two features. It allows the model to learn de novo what features are most highly predictive of time-based progression events, and to develop multiple (e.g., 5, 10, or more) predictive features. Unlike shallow features that appear early in CNNs, deep features are potentially complex and high-order features, and might even be relatively invisible to the human eye. However, one limitation of deep feature extraction is that predictions based on these features may be less explainable and biologically plausible, and less amenable to error analysis, than those based on DL grading of traditional risk features36. Additional strengths include the use of two well-characterized cohorts, with detailed time-based knowledge of progression events at the reading center standard. By pooling AREDS and AREDS2 (which has not been used previously in DL studies), we were able to construct a cohort that had a wide spectrum of AMD severity but was enriched for cases of higher baseline severity. In addition, in other experiments, keeping the data sets separate enabled us to perform external validation using AREDS2 as an independent cohort.

In terms of limitations, as in the two existing clinical standards, AREDS/AREDS2 treatment assignment was not considered in this analysis10,12. Since most AREDS/AREDS2 participants were assigned to oral supplements that decreased risk of late AMD, the risk estimates obtained are closer to those for individuals receiving supplements. However, this seems appropriate, given that AREDS-style supplements are considered the standard of care for patients with intermediate AMD39. Another limitation is that this work relates to CFP only. DL approaches to optical coherence tomography (OCT) data sets hold promise for AMD diagnosis42,43, but no highly validated OCT-based tools exist for risk prediction. In the future, we plan to apply our framework to multi-modal imaging, including fundus autofluorescence and OCT data.

In conclusion, combining DL feature extraction of CFP with survival analysis achieved high prognostic accuracy in predictions of progression to late AMD, and its subtypes, over a wide time interval (1–12 years). Its accuracy surpassed that of existing clinical standards, and its additional strengths in clinical settings include risk ascertainment above 50% and the ability to make predictions without genotype data.

Methods

Data sets

For model development and clinical validation, two data sets were used: the AREDS31 and the AREDS232 (Fig. 2). The AREDS was a 12-year multi-center prospective cohort study of the clinical course, prognosis, and risk factors of AMD, as well as a phase III randomized clinical trial (RCT) to assess the effects of nutritional supplements on AMD progression31. In short, 4757 participants aged 55–80 years were recruited between 1992 and 1998 at 11 retinal specialty clinics in the United States. The inclusion criteria were wide, from no AMD in either eye to late AMD in one eye. The participants were randomly assigned to placebo, antioxidants, zinc, or the combination of antioxidants and zinc. The AREDS data set is publicly accessible to researchers by request at dbGAP (https://www.ncbi.nlm.nih.gov/projects/gap/cgi-bin/study.cgi?study_id=phs000001.v3.p1).

Similarly, the AREDS2 was a multi-center phase III RCT that analyzed the effects of different nutritional supplements on the course of AMD32. 4203 participants aged 50–85 years were recruited between 2006 and 2008 at 82 retinal specialty clinics in the United States. The inclusion criteria were the presence of either bilateral large drusen or late AMD in one eye and large drusen in the fellow eye. The participants were randomly assigned to placebo, lutein/zeaxanthin, docosahexaenoic acid (DHA) plus eicosapentaenoic acid (EPA), or the combination of lutein/zeaxanthin and DHA plus EPA. AREDS supplements were also administered to all AREDS2 participants, because they were by then considered the standard of care39. We will make the AREDS2 data set publicly accessible upon publication.

In both studies, the primary outcome measure was the development of late AMD, defined as neovascular AMD or central GA. Institutional review board approval was obtained at each clinical site and written informed consent for the research was obtained from all study participants. The research was conducted under the Declaration of Helsinki and, for the AREDS2, complied with the Health Insurance Portability and Accountability Act. For both studies, at baseline and annual study visits, comprehensive eye examinations were performed by certified study personnel using a standardized protocol, and CFP (field 2, i.e., 30° imaging field centered at the fovea) were captured by certified technicians using a standardized imaging protocol. Progression to late AMD was defined by the study protocol based on the grading of CFP31,32, as described below.

As part of the studies, 2889 (AREDS) and 1826 (AREDS2) participants consented to genotype analysis. SNPs were analyzed using a custom Illumina HumanCoreExome array37. For the current analysis, two SNPs (CFH rs1061170 and ARMS2 rs10490924, at the two loci with the highest attributable risk of late AMD) were selected, as these are the two SNPs available as input for the existing online calculator system. In addition, the AMD GRS was calculated for each participant according to methods described previously37. The GRS is a weighted risk score based on 52 independent variants at 34 loci identified in a large genome-wide association study37 as having significant associations with risk of late AMD. The online calculator cannot receive this detailed information.
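To illustrate how a weighted genetic risk score of this kind is formed, the following minimal sketch sums per-variant weights multiplied by risk-allele counts. The function name is hypothetical and the weights are made-up placeholders; the 52 published variants and their weights are given in ref. 37 and are not reproduced here.

```python
def genetic_risk_score(allele_counts, weights):
    """Weighted sum of risk-allele counts (0, 1, or 2 copies per variant)."""
    score = 0.0
    for variant, weight in weights.items():
        count = allele_counts[variant]
        if count not in (0, 1, 2):
            raise ValueError(f"invalid allele count for {variant}: {count}")
        score += weight * count
    return score


# Hypothetical weights for the two named SNPs (illustrative only,
# not the published effect sizes).
example_weights = {"rs1061170": 0.5, "rs10490924": 1.0}
example_counts = {"rs1061170": 2, "rs10490924": 1}
example_grs = genetic_risk_score(example_counts, example_weights)
```

In a full implementation the weights dictionary would hold one entry per variant in the score, and the resulting value would enter the survival model as a single covariate.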

The eligibility criteria for participant inclusion in the current analysis were: (i) absence of late AMD (defined as NV or any GA) at study baseline in either eye, since the predictions were made at the participant level, and (ii) presence of genetic information (in order to compare model performance with and without genetic information on exactly the same cohort of participants). Accordingly, the images used for the predictions were those from the study baselines only.

In the AREDS data set of CFPs, information on image laterality (i.e., left or right eye) and field status (field 1, 2, or 3) was available from the Reading Center. However, this information was not available in the AREDS2 data set of CFPs. We therefore trained two Inception-v3 models, one for classifying laterality and the other for identifying field 2 images. Both models were first trained on the gold standard images from the AREDS and fine-tuned on a newly created gold standard AREDS2 set manually graded by a retinal specialist (TK). The AREDS2 gold standard consisted of 40 participants with 5164 images (4097 for training and 1067 for validation). The models achieved 100% accuracy for laterality classification and 97.9% accuracy (F1-score 0.971, precision 0.968, recall 0.973) for field 2 classification.

Gold standard grading

The ground truth labels used for both training and testing were the grades previously assigned to each CFP by expert human graders at the University of Wisconsin Fundus Photograph Reading Center. The reading center workflow has been described previously30. In brief, a senior grader performed initial grading of each photograph for AMD severity using a 4-step scale and a junior grader performed detailed grading for multiple AMD-specific features. All photographs were graded independently and without access to the clinical information. A rigorous process of grading quality control was performed at the reading center, including assessment for inter-grader and intra-grader agreement30. The reading center grading features relevant to the current study, aside from late AMD, were: (i) macular drusen status (none/small, medium (diameter ≥ 63 µm and <125 µm), and large (≥125 µm)), and (ii) macular pigmentary abnormalities related to AMD (present or absent).

In addition to undergoing reading center grading, the images at the study baseline were also assessed (separately and independently) by 88 retinal specialists in AREDS and 196 retinal specialists in AREDS2. The responses of the retinal specialists were used not as the ground truth, but for comparisons between human grading as performed in routine clinical practice and DL-based grading. By applying these retinal specialist grades as input to the two existing clinical standards for predicting progression to late AMD, it was possible to compare the current clinical standard of human predictions with those predictions achievable by DL.

Development of the algorithm

The overall framework of our method is shown in Fig. 1. First, a CNN was adapted to (i) extract multiple highly discriminative deep features, or (ii) estimate grades for drusen and pigmentary abnormalities (Fig. 1a–c). Second, a Cox proportional hazards model was used to predict probability of progression to late AMD (and GA/NV, separately), based on the deep features (deep features/survival) or the DL grading (DL grading/survival) (Fig. 1d, e). In this step, additional participant information could be added, such as age, smoking status, and genetics.

As the first stage in the workflow, the DL-based image analysis was performed using two different adaptations of ‘DeepSeeNet’13. DeepSeeNet is a CNN framework that was created for AMD severity classification. It has achieved state-of-the-art performance for the automated diagnosis and classification of AMD severity from CFP; this includes the grading of macular drusen, pigmentary abnormalities, the SSS13, and the AREDS 9-step severity scale44. In particular, using reading center grades as the ground truth, we have recently demonstrated that DeepSeeNet performs grading with accuracy superior to that of human retinal specialists (Supplementary Fig. 1). The two different adaptations are described here:

Deep features

The first adaptation was named ‘deep features’. This approach involved using DL to derive and weight predictive image features, including high-dimensional ‘hidden’ features45. Deep features were extracted from the second to last fully-connected layer of DeepSeeNet (the highlighted part in the classification network in Fig. 1). In total, 512 deep features could be extracted for each participant in this way, comprising 128 deep features for each of the two models (drusen and pigmentary abnormalities) in each of the two images (left and right eyes). After feature extraction, all 512 deep features were normalized as standard-scores. Feature selection was required at this point, to avoid overfitting and to improve the generalizability, because of the multi-dimensional nature of the features. Hence, we performed feature selection to group correlated features and pick one feature for each group46. Features with non-zero coefficients were selected and applied as input to the survival models described below.
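The assembly and normalization steps described above can be sketched as follows. This is a minimal illustration, not the released implementation: the function names and the order in which the four 128-dimensional vectors are concatenated are assumptions, and the feature-selection step (performed with glmnet in the study) is not shown.

```python
from statistics import mean, pstdev

N_FEATURES_PER_MODEL_PER_EYE = 128  # stated in the text


def assemble_participant_features(drusen_left, drusen_right,
                                  pigment_left, pigment_right):
    """Concatenate four 128-dimensional vectors into one 512-dimensional vector.

    The concatenation order is an assumption made for this sketch.
    """
    parts = [drusen_left, drusen_right, pigment_left, pigment_right]
    for part in parts:
        if len(part) != N_FEATURES_PER_MODEL_PER_EYE:
            raise ValueError("each input must hold 128 features")
    return [value for part in parts for value in part]


def standardize_columns(rows):
    """Convert every feature column to standard scores (zero mean, unit SD).

    Columns with zero variance are mapped to 0.0 to avoid division by zero.
    """
    columns = list(zip(*rows))
    means = [mean(column) for column in columns]
    sds = [pstdev(column) for column in columns]
    return [
        [(value - m) / sd if sd > 0 else 0.0
         for value, m, sd in zip(row, means, sds)]
        for row in rows
    ]
```

In practice the column means and standard deviations would normally be estimated on the training set and reused unchanged for the development and test sets, so that no information leaks from held-out participants.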

Deep learning grading

The second adaptation of DeepSeeNet was named ‘DL grading’, i.e., referring to the grading of drusen and pigmentary abnormalities, the two macular features considered by humans most able to predict progression to late AMD. In this adaptation, the two predicted risk factors were used directly. In brief, one CNN was previously trained and validated to estimate drusen status in a single CFP, according to three levels (none/small, medium, or large), using reading center grades as the ground truth13. A second CNN was previously trained and validated to predict the presence or absence of pigmentary abnormalities in a single CFP.

Survival model

The second stage of our workflow comprised a Cox proportional hazards model47 to estimate time to late AMD (Fig. 1d, e). The Cox model is used to evaluate simultaneously the effect of several factors on the probability of the event, i.e., participant progression to late AMD in either eye. Separate Cox proportional hazards models were created to analyze time to late AMD and time to subtype of late AMD (i.e., GA and NV). In addition to the image-based information, the survival models could receive three additional inputs: (i) participant age; (ii) smoking status (current/former/never), and (iii) participant AMD genotype (CFH rs1061170, ARMS2 rs10490924, and the AMD GRS).
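To make explicit the link between a fitted Cox model and a predicted probability, the sketch below uses the standard Cox relationship S(t | x) = S0(t)^exp(β·x), so that the probability of progression by time t is 1 − S(t | x). The covariate names, coefficient values, and baseline survival value are hypothetical placeholders, not estimates from this study.

```python
import math


def linear_predictor(coefficients, covariates):
    """Return beta . x for coefficients and covariates keyed by name."""
    return sum(beta * covariates[name] for name, beta in coefficients.items())


def progression_probability(baseline_survival, coefficients, covariates):
    """P(progression by time t) = 1 - S0(t) ** exp(beta . x).

    baseline_survival is S0(t), the baseline survival at the time of interest.
    """
    if not 0.0 < baseline_survival <= 1.0:
        raise ValueError("baseline survival must lie in (0, 1]")
    hazard_ratio = math.exp(linear_predictor(coefficients, covariates))
    return 1.0 - baseline_survival ** hazard_ratio


# Hypothetical coefficients and inputs, for illustration only.
example_coefficients = {"age_decades": 0.3, "current_smoker": 0.5, "deep_feature_1": 0.8}
reference = {"age_decades": 0.0, "current_smoker": 0.0, "deep_feature_1": 0.0}
higher_risk = {"age_decades": 0.0, "current_smoker": 1.0, "deep_feature_1": 1.0}
risk_reference = progression_probability(0.8, example_coefficients, reference)
risk_higher = progression_probability(0.8, example_coefficients, higher_risk)
```

With all covariates at their reference values the hazard ratio is 1, so the predicted risk equals one minus the baseline survival; positive coefficients raise the risk above that level.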

Experimental design

In both of the DeepSeeNet adaptations described, the DL CNNs used Inception-v3 architecture48, which is a state-of-the-art CNN for image classification; it contains 317 layers, comprising a total of over 21 million weights that are subject to training. Training was performed using two commonly used libraries: Keras (https://keras.io) and TensorFlow49. All images were cropped to generate a square image field encompassing the macula and resized to 512 × 512 pixels. The hyperparameters were learning rate 0.0001 and batch size 32. The training was stopped after 5 epochs once the accuracy on the development set no longer increased. All experiments were conducted on a server with 32 Intel Xeon CPUs and 512 GB of RAM, using an NVIDIA GeForce GTX 1080 Ti 11 GB GPU for training and testing. We fitted the Cox proportional hazards model using the deep features as covariates. Specifically, we selected the 16 features with the highest weights (i.e., the features found to be most predictive of progression to late AMD, with their inclusion as covariates in the Cox model). We performed feature selection using the ‘glmnet’ package46 in R version 3.5.2 statistical software.
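The final selection step can be illustrated with a short sketch that ranks features by coefficient and keeps the top 16. It assumes that ‘highest weights’ refers to the largest absolute coefficients from the penalized fit, which the text does not state explicitly; the function name is hypothetical, and the penalized regression itself (glmnet in the study) is not reimplemented here.

```python
def top_k_features(coefficients, k=16):
    """Return the names of the k features with the largest absolute coefficients.

    Features whose coefficient is exactly zero are discarded first, mirroring
    the rule that only features with non-zero coefficients are retained.
    """
    nonzero = {name: weight for name, weight in coefficients.items() if weight != 0.0}
    ranked = sorted(nonzero, key=lambda name: abs(nonzero[name]), reverse=True)
    return ranked[:k]
```

For example, `top_k_features({"f0": 0.0, "f1": -0.9, "f2": 0.3, "f3": 0.5}, k=2)` keeps `f1` and `f3`.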

Training and testing

For training and testing our framework, we used both the AREDS and AREDS2 data sets. In the primary set of experiments, eligible participants from both studies were pooled to create one broad cohort of 3298 individuals that combined a wide spectrum of baseline disease severity with a high number of progression events. The combined data set was split at the participant level in the ratio 70%/10%/20% to create three sets: 2364 participants (training set), 333 participants (development set), and 601 participants (hold-out test set).
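A participant-level split of this kind can be sketched as below. The key property is that identifiers are deduplicated before splitting, so that all images from one participant fall into a single set. The function name, the use of a seeded shuffle, and the rounding rule are assumptions; this sketch yields an exact 70%/10%/20% division and therefore will not reproduce the reported set sizes, which suggests that the study's procedure differed in detail.

```python
import random


def split_participants(participant_ids, fractions=(0.7, 0.1, 0.2), seed=0):
    """Split unique participant IDs into disjoint train/development/test sets."""
    if abs(sum(fractions) - 1.0) > 1e-9:
        raise ValueError("fractions must sum to 1")
    unique_ids = sorted(set(participant_ids))  # one entry per participant
    rng = random.Random(seed)                  # seeded for reproducibility
    rng.shuffle(unique_ids)
    n_train = round(fractions[0] * len(unique_ids))
    n_dev = round(fractions[1] * len(unique_ids))
    train = set(unique_ids[:n_train])
    dev = set(unique_ids[n_train:n_train + n_dev])
    test = set(unique_ids[n_train + n_dev:])   # remainder goes to the test set
    return train, dev, test
```

Images are then assigned to a set by looking up the participant they belong to, never by splitting the image list directly.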

Separately, all of the baseline images in the test set were graded by 88 (AREDS) and 192 (AREDS2) retinal specialists. By using these grades as input to either the SSS or the online calculator, we computed the prediction results of the two existing standards: ‘retinal specialists/SSS’ and ‘retinal specialists/calculator’.

For three of the four approaches (deep features/survival, DL grading/survival, and retinal specialists/calculator), the input was bilateral CFP, participant age, and smoking status; separate experiments were conducted with and without the additional input of genotype data. For the other approach (retinal specialists/SSS), the input was bilateral CFP only.

In addition to the primary set of experiments where eligible participants from the AREDS and AREDS2 were combined to form one data set, separate experiments were conducted where the DL models were: (i) trained separately on the AREDS training set only, or the AREDS2 training set only, and tested on the combined AREDS/AREDS2 test set, and (ii) trained on the AREDS training set only and externally validated by testing on the AREDS2 test set only.

Statistical analysis

As the primary outcome measure, the performance of the risk prediction models was assessed by the C-statistic50 at 5 years from study baseline. Five years from study baseline was chosen as the interval for the primary outcome measure since this is the only interval where comparison can be made with the SSS, and the longest interval where predictions can be tested using the AREDS2 data.

For binary outcomes such as progression to late AMD, the C-statistic represents the area under the receiver operating characteristic curve (AUC). The C-statistic is computed as follows: all possible pairs of participants are considered where one participant progressed to late AMD and the other participant in the pair progressed later or not at all; out of all these pairs, the C-statistic represents the proportion of pairs where the participant who had been assigned the higher risk score was the one who did progress or progressed earlier. A C-statistic of 0.5 indicates random predictions, while 1.0 indicates perfectly accurate predictions. We used 200 bootstrap samples to obtain a distribution of the C-statistic and reported 95% confidence intervals. For each bootstrap iteration, we sampled n patients with replacement from the test set of n patients.
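The pairwise definition and the bootstrap procedure can be sketched as follows. This is a minimal illustration under stated assumptions: pairs are treated as comparable when the participant with the shorter time had an observed event, tied risk scores count as half-concordant, and the confidence interval is taken from the 2.5th and 97.5th percentiles of the bootstrap distribution. None of these details are specified in the text, and the estimator of ref. 50 may differ.

```python
import random


def c_statistic(times, events, risks):
    """Proportion of comparable pairs in which the earlier progressor
    was assigned the higher risk score."""
    concordant = 0.0
    comparable = 0
    n = len(times)
    for i in range(n):
        if not events[i]:
            continue  # participant i must have progressed
        for j in range(n):
            if times[j] > times[i]:  # j progressed later or not at all by then
                comparable += 1
                if risks[i] > risks[j]:
                    concordant += 1.0
                elif risks[i] == risks[j]:
                    concordant += 0.5
    if comparable == 0:
        raise ValueError("no comparable pairs")
    return concordant / comparable


def bootstrap_ci(times, events, risks, n_boot=200, seed=0):
    """Percentile 95% interval from n_boot resamples of n participants."""
    rng = random.Random(seed)
    n = len(times)
    stats = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]  # sample with replacement
        try:
            stats.append(c_statistic([times[k] for k in idx],
                                     [events[k] for k in idx],
                                     [risks[k] for k in idx]))
        except ValueError:
            continue  # resample contained no comparable pairs
    stats.sort()
    lower = stats[int(0.025 * len(stats))]
    upper = stats[min(len(stats) - 1, int(0.975 * len(stats)))]
    return lower, upper
```

For a fixed 5-year horizon, follow-up times would first be truncated at 5 years, with later events treated as not yet having occurred; that step is omitted here for brevity.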

As a secondary outcome measure of performance, we calculated the Brier score from prediction error curves, following the work of Klein et al.12. The Brier score is defined as the mean squared difference between the model’s predicted probability and the actual late AMD, GA, or NV status, where a score of 0.0 indicates a perfect match. The Wald test was used to assess the statistical significance of each factor in the survival models51. Its test statistic corresponds to the ratio of each regression coefficient to its standard error. The ‘survival’ package in R version 3.5.2 was used for Cox proportional hazards model evaluation. Finally, saliency maps were generated to represent the image locations that contributed most to decision-making by the DL models (for drusen or pigmentary abnormalities). This was done by back-projecting the last layer of the neural network. The Python package ‘keras-vis’ was used to generate the saliency map52.
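The two statistics described above can be written compactly. The sketch below is a simplification: the Brier score is computed at a single time point and ignores censoring, whereas prediction error curves account for it, and the Wald p-value assumes a two-sided test against a standard normal distribution. Function names are hypothetical.

```python
import math


def brier_score(predicted_probabilities, observed_status):
    """Mean squared difference between predicted probability and 0/1 status."""
    pairs = list(zip(predicted_probabilities, observed_status))
    if not pairs:
        raise ValueError("no observations")
    return sum((p - y) ** 2 for p, y in pairs) / len(pairs)


def wald_test(coefficient, standard_error):
    """Return the Wald z statistic and its two-sided normal p-value."""
    z = coefficient / standard_error
    p_value = math.erfc(abs(z) / math.sqrt(2.0))
    return z, p_value
```

A model that always predicts 0.5 scores 0.25 on any binary outcome, which gives a convenient reference point when reading Brier scores.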

Reporting summary

Further information on research design is available in the Nature Research Reporting Summary linked to this article.

Acknowledgements

The work was supported by the intramural program funds and contracts from the National Center for Biotechnology Information/National Library of Medicine/National Institutes of Health, the National Eye Institute/National Institutes of Health, Department of Health and Human Services, Bethesda Maryland (Contract HHS-N-260–2005–00007-C; ADB contract NO1-EY-5–0007; Grant No K99LM013001). Funds were generously contributed to these contracts by the following National Institutes of Health: Office of Dietary Supplements, National Center for Complementary and Alternative Medicine; National Institute on Aging; National Heart, Lung, and Blood Institute; and National Institute of Neurological Disorders and Stroke.

Author contributions

Conception and design: Y.P., T.D.K., E.Y.C., and Z.L. Analysis and interpretation: Y.P., T.D.K., Q.C., E.A., A.A., W.T.W., E.Y.C., and Z.L. Data collection: Y.P., T.D.K., Q.C., E.A., W.T.W., and E.Y.C. Drafting the work: Y.P., T.D.K., W.T.W., E.Y.C., and Z.L.

Data availability

The AREDS data set (NCT00000145) generated during and/or analyzed during the current study is available in the dbGAP repository, https://www.ncbi.nlm.nih.gov/projects/gap/cgi-bin/study.cgi?study_id=phs000001.v3.p1. The AREDS2 data set generated during and/or analyzed during the current study has been deposited in dbGAP, accession number phs002015.v1.p1. However, due to an ongoing IRB review of the AREDS2 data set, the data will not be available in dbGAP for ~3 months post-publication. While the AREDS2 data set is being reviewed by the IRB, it is available from the corresponding author on reasonable request.

Code availability

Codes are available at https://github.com/ncbi-nlp/DeepSeeNet.

Competing interests

The authors declare no competing interests.

Footnotes

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

These authors contributed equally: Yifan Peng, Tiarnan D. Keenan.

Contributor Information

Emily Y. Chew, Email: echew@nei.nih.gov

Zhiyong Lu, Email: zhiyong.lu@nih.gov

Supplementary information

Supplementary information is available for this paper at 10.1038/s41746-020-00317-z.

References

- 1. Quartilho A, et al. Leading causes of certifiable visual loss in England and Wales during the year ending 31 March 2013. Eye. 2016;30:602–607. doi: 10.1038/eye.2015.288.

- 2. Wong WL, et al. Global prevalence of age-related macular degeneration and disease burden projection for 2020 and 2040: a systematic review and meta-analysis. Lancet Glob. Health. 2014;2:e106–e116. doi: 10.1016/S2214-109X(13)70145-1.

- 3. Ferris FL, 3rd, et al. Clinical classification of age-related macular degeneration. Ophthalmology. 2013;120:844–851. doi: 10.1016/j.ophtha.2012.10.036.

- 4. Age-Related Eye Disease Study Research Group. A randomized, placebo-controlled, clinical trial of high-dose supplementation with vitamins C and E, beta carotene, and zinc for age-related macular degeneration and vision loss: AREDS report no. 8. Arch. Ophthalmol. 2001;119:1417–1436. doi: 10.1001/archopht.119.10.1417.

- 5. Age-Related Eye Disease Study 2 Research Group. Lutein + zeaxanthin and omega-3 fatty acids for age-related macular degeneration: the Age-Related Eye Disease Study 2 (AREDS2) randomized clinical trial. JAMA. 2013;309:2005–2015. doi: 10.1001/jama.2013.4997.

- 6. Domalpally A, et al. Imaging characteristics of choroidal neovascular lesions in the AREDS2-HOME Study: Report Number 4. Ophthalmol. Retin. 2019;3:326–335. doi: 10.1016/j.oret.2019.01.004.

- 7. AREDS Home Study Research Group, et al. Randomized trial of a home monitoring system for early detection of choroidal neovascularization home monitoring of the Eye (HOME) study. Ophthalmology. 2014;121:535–544. doi: 10.1016/j.ophtha.2013.10.027.

- 8. Guymer RH, et al. Subthreshold nanosecond laser intervention in age-related macular degeneration: The LEAD Randomized Controlled Clinical Trial. Ophthalmology. 2019;126:829–838. doi: 10.1016/j.ophtha.2018.09.015.

- 9. Calaprice-Whitty, D. et al. Improving clinical trial participant prescreening with artificial intelligence (AI): a comparison of the results of AI-assisted vs standard methods in 3 oncology trials. Ther. Innov. Regul. Sci. doi: 10.1177/2168479018815454 (2019).

- 10. Ferris FL, et al. A simplified severity scale for age-related macular degeneration: AREDS Report No. 18. Arch. Ophthalmol. 2005;123:1570–1574. doi: 10.1001/archopht.123.11.1570.

- 11. Age-Related Eye Disease Study Research Group. The Age-Related Eye Disease Study severity scale for age-related macular degeneration: AREDS Report No. 17. Arch. Ophthalmol. 2005;123:1484–1498. doi: 10.1001/archopht.123.11.1484.

- 12. Klein ML, et al. Risk assessment model for development of advanced age-related macular degeneration. Arch. Ophthalmol. 2011;129:1543–1550. doi: 10.1001/archophthalmol.2011.216.

- 13. Peng Y, et al. DeepSeeNet: a deep learning model for automated classification of patient-based age-related macular degeneration severity from color fundus photographs. Ophthalmology. 2019;126:565–575. doi: 10.1016/j.ophtha.2018.11.015.

- 14. Ching, T. et al. Opportunities and obstacles for deep learning in biology and medicine. J. R. Soc. Interface 15, doi: 10.1098/rsif.2017.0387 (2018).

- 15. Grassmann, F. et al. A deep learning algorithm for prediction of age-related eye disease study severity scale for age-related macular degeneration from color fundus photography. Ophthalmology doi: 10.1016/j.ophtha.2018.02.037 (2018).

- 16. Liang H, et al. Evaluation and accurate diagnoses of pediatric diseases using artificial intelligence. Nat. Med. 2019;25:433–438. doi: 10.1038/s41591-018-0335-9.

- 17. Hannun AY, et al. Cardiologist-level arrhythmia detection and classification in ambulatory electrocardiograms using a deep neural network. Nat. Med. 2019;25:65–69. doi: 10.1038/s41591-018-0268-3.

- 18. Gulshan V, et al. Development and validation of a deep learning algorithm for detection of diabetic retinopathy in retinal fundus photographs. JAMA. 2016;316:2402–2410. doi: 10.1001/jama.2016.17216.

- 19. Poplin R, et al. Prediction of cardiovascular risk factors from retinal fundus photographs via deep learning. Nat. Biomed. Eng. 2018;2:158–164. doi: 10.1038/s41551-018-0195-0.

- 20. Kermany DS, et al. Identifying medical diagnoses and treatable diseases by image-based deep learning. Cell. 2018;172:1122–1131.e1129. doi: 10.1016/j.cell.2018.02.010.

- 21. Esteva A, et al. Dermatologist-level classification of skin cancer with deep neural networks. Nature. 2017;542:115–118. doi: 10.1038/nature21056.

- 22. Ting DSW, et al. Development and validation of a deep learning system for diabetic retinopathy and related eye diseases using retinal images from multiethnic populations with diabetes. JAMA. 2017;318:2211–2223. doi: 10.1001/jama.2017.18152.

- 23. Ting DSW, et al. Deep learning in estimating prevalence and systemic risk factors for diabetic retinopathy: a multi-ethnic study. NPJ Digit. Med. 2019;2:24. doi: 10.1038/s41746-019-0097-x.

- 24. Raumviboonsuk P, et al. Deep learning versus human graders for classifying diabetic retinopathy severity in a nationwide screening program. NPJ Digit. Med. 2019;2:25. doi: 10.1038/s41746-019-0099-8.

- 25. Arcadu F, et al. Deep learning algorithm predicts diabetic retinopathy progression in individual patients. NPJ Digit. Med. 2019;2:92. doi: 10.1038/s41746-019-0172-3.

- 26. Abramoff MD, et al. Pivotal trial of an autonomous AI-based diagnostic system for detection of diabetic retinopathy in primary care offices. NPJ Digit. Med. 2018;1:39. doi: 10.1038/s41746-018-0040-6.

- 27. Burlina, P. M. et al. Use of deep learning for detailed severity characterization and estimation of 5-year risk among patients with age-related macular degeneration. JAMA Ophthalmol. doi: 10.1001/jamaophthalmol.2018.4118 (2018).

- 28. Babenko, A. & Lempitsky, V. Aggregating local deep features for image retrieval. In Proc. IEEE Intl. Conf. Comp. Vis. 1269–1277 (2015).

- 29. Schmidt-Erfurth U, et al. Artificial intelligence in retina. Prog. Retin Eye Res. 2018;67:1–29. doi: 10.1016/j.preteyeres.2018.07.004.

- 30. Age-Related Eye Disease Study Research Group. The Age-Related Eye Disease Study system for classifying age-related macular degeneration from stereoscopic color fundus photographs: the Age-Related Eye Disease Study Report Number 6. Am. J. Ophthalmol. 2001;132:668–681. doi: 10.1016/S0002-9394(01)01218-1.

- 31. Age-Related Eye Disease Study Research Group. The Age-Related Eye Disease Study (AREDS): design implications. AREDS report no. 1. Control Clin. Trials. 1999;20:573–600. doi: 10.1016/S0197-2456(99)00031-8.

- 32. Chew EY, et al. The Age-Related Eye Disease Study 2 (AREDS2): study design and baseline characteristics (AREDS2 report number 1). Ophthalmology. 2012;119:2282–2289. doi: 10.1016/j.ophtha.2012.05.027.

- 33. Flaxel, C. J. et al. Age-related macular degeneration preferred practice pattern(R). Ophthalmology doi: 10.1016/j.ophtha.2019.09.024 (2019).

- 34. Liao, D. S. et al. Complement C3 inhibitor pegcetacoplan for geographic atrophy secondary to age-related macular degeneration: a randomized phase 2 trial. Ophthalmology doi: 10.1016/j.ophtha.2019.07.011 (2019).

- 35. Fleckenstein M, et al. The progression of geographic atrophy secondary to age-related macular degeneration. Ophthalmology. 2018;125:369–390. doi: 10.1016/j.ophtha.2017.08.038.

- 36. Ting, D. S. W. et al. Deep learning in ophthalmology: the technical and clinical considerations. Prog. Retin. Eye Res. doi: 10.1016/j.preteyeres.2019.04.003 (2019).

- 37. Fritsche LG, et al. A large genome-wide association study of age-related macular degeneration highlights contributions of rare and common variants. Nat. Genet. 2016;48:134–143. doi: 10.1038/ng.3448.

- 38. Ding Y, et al. Bivariate analysis of age-related macular degeneration progression using genetic risk scores. Genetics. 2017;206:119–133. doi: 10.1534/genetics.116.196998.

- 39. American Academy of Ophthalmology Retina/Vitreous Panel. Preferred Practice Pattern® Guidelines. Age-Related Macular Degeneration (American Academy of Ophthalmology, 2015).

- 40. Gensheimer MF, Narasimhan B. A scalable discrete-time survival model for neural networks. PeerJ. 2019;7:e6257. doi: 10.7717/peerj.6257.

- 41. Katzman JL, et al. DeepSurv: personalized treatment recommender system using a Cox proportional hazards deep neural network. BMC Med. Res. Methodol. 2018;18:24. doi: 10.1186/s12874-018-0482-1.

- 42. De Fauw J, et al. Clinically applicable deep learning for diagnosis and referral in retinal disease. Nat. Med. 2018;24:1342–1350. doi: 10.1038/s41591-018-0107-6.

- 43. Lee CS, Baughman DM, Lee AY. Deep learning is effective for the classification of OCT images of normal versus age-related macular degeneration. Ophthalmol. Retin. 2017;1:322–327. doi: 10.1016/j.oret.2016.12.009.

- 44. Chen, Q. et al. A multi-task deep learning framework for the classification of Age-related macular degeneration. AMIA Jt. Summits Transl. Sci. Proc. 2019, 505–514 (2019).

- 45. Lao J, et al. A deep learning-based radiomics model for prediction of survival in glioblastoma multiforme. Sci. Rep. 2017;7:10353. doi: 10.1038/s41598-017-10649-8.

- 46. Simon, N., Friedman, J., Hastie, T. & Tibshirani, R. Regularization paths for Cox’s proportional hazards model via coordinate descent. J. Stat. Softw. 39, doi: 10.18637/jss.v039.i05 (2011).

- 47. Cox, D. R. Breakthroughs in Statistics Springer Series in Statistics Ch. 37, 527–541 (Springer, 1992).

- 48. Szegedy, C. et al. in Proc. IEEE Conference on Computer Vision and Pattern Recognition 2818–2826 (2016).

- 49. Abadi, M. et al. TensorFlow: large-scale machine learning on heterogeneous distributed systems. Preprint at https://arxiv.org/abs/1603.04467 (2016).

- 50. Pencina MJ, D’Agostino RB. Overall C as a measure of discrimination in survival analysis: model specific population value and confidence interval estimation. Stat. Med. 2004;23:2109–2123. doi: 10.1002/sim.1802.

- 51. Rosner, B. Fundamentals of Biostatistics (Cengage Learning, 2015).

- 52. Simonyan, K., Vedaldi, A. & Zisserman, A. Deep inside convolutional networks: Visualising image classification models and saliency maps. Preprint at https://arxiv.org/abs/1312.6034 (2013).
