This cohort study uses data from the Canary Prostate Active Surveillance Study and University of California, San Francisco to investigate whether monitoring frequency of men with diagnosed prostate cancer can be tailored based on risk stability outcomes.

Key Points

Question

Can a subset of men on active surveillance for prostate cancer be identified who have very low risks of intermediate-term reclassification to higher-risk disease?

Findings

In this multicenter cohort study including 850 men and an independent validation cohort of 533 men, 7 clinical parameters available for nearly all men on surveillance predicted nonreclassification at 4 years, with high negative predictive value.

Meaning

These findings suggest that active surveillance regimens can be tailored to individual risk, and many men can be followed up at longer intervals than those specified by most current protocols, thereby reducing anxiety, toxic effects, and cost.

Abstract

Importance

Active surveillance is increasingly recognized as the preferred standard of care for men with low-risk prostate cancer. However, active surveillance requires repeated assessments, including prostate-specific antigen tests and biopsies that may increase anxiety, risk of complications, and cost.

Objective

To identify and validate clinical parameters that can identify men who can safely defer follow-up prostate cancer assessments.

Design, Setting, and Participants

The Canary Prostate Active Surveillance Study (PASS) is a multicenter, prospective active surveillance cohort study initiated in July 2008, with ongoing accrual and a median follow-up period of 4.1 years.

Men with prostate cancer managed with active surveillance from 9 North American academic medical centers were enrolled. Blood tests and biopsies were conducted on a defined schedule for at least 5 years after enrollment. Model validation was performed among men at the University of California, San Francisco (UCSF) who did not enroll in PASS. Men with Gleason grade group 1 prostate cancer diagnosed since 2003 and enrolled in PASS before 2017 with at least 1 confirmatory biopsy after diagnosis were included. A total of 850 men met these criteria and had adequate follow-up. For the UCSF validation study, 533 active surveillance patients meeting the same criteria were identified. Exclusion criteria were treatment within 6 months of diagnosis, diagnosis before 2003, Gleason grade group of at least 2 at diagnosis or first surveillance biopsy, no surveillance biopsy, or missing data.

Exposures

Active surveillance for prostate cancer.

Main Outcomes and Measures

Time from confirmatory biopsy to reclassification, defined as Gleason grade group 2 or higher on subsequent biopsy.

Results

A total of 850 men (median [interquartile range] age, 64 [58-68] years; 774 [91%] White) were included in the PASS cohort. A total of 533 men (median [interquartile range] age, 61 [57-65] years; 422 [79%] White) were included in the UCSF cohort. Parameters predictive of reclassification on multivariable analysis included maximum percent positive cores (hazard ratio [HR], 1.30 [95% CI, 1.09-1.56]; P = .004), history of any negative biopsy after diagnosis (1 vs 0: HR, 0.52 [95% CI, 0.38-0.71]; P < .001 and ≥2 vs 0: HR, 0.18 [95% CI, 0.08-0.40]; P < .001), time since diagnosis (HR, 1.62 [95% CI, 1.28-2.05]; P < .001), body mass index (HR, 1.08 [95% CI, 1.05-1.12]; P < .001), prostate size (HR, 0.40 [95% CI, 0.25-0.62]; P < .001), prostate-specific antigen at diagnosis (HR, 1.51 [95% CI, 1.15-1.98]; P = .003), and prostate-specific antigen kinetics (HR, 1.46 [95% CI, 1.23-1.73]; P < .001). For prediction of nonreclassification at 4 years, the area under the receiver operating curve was 0.70 for the PASS cohort and 0.70 for the UCSF validation cohort. This model achieved a negative predictive value of 0.88 (95% CI, 0.83-0.94) for those in the bottom 25th percentile of risk and of 0.95 (95% CI, 0.89-1.00) for those in the bottom 10th percentile.

Conclusions and Relevance

In this study, among men with low-risk prostate cancer, heterogeneity prevailed in risk of subsequent disease reclassification. These findings suggest that active surveillance intensity can be modulated based on an individual’s risk parameters and that many men may be safely monitored with a substantially less intensive surveillance regimen.

Introduction

Although aggressive prostate cancer remains the second leading cause of cancer mortality among men in the United States,1 most prostate cancers at diagnosis have relatively low-risk characteristics, with minimal if any probability of distant progression and a natural history measurable often in decades.2 In recognition of this frequently indolent behavior, active surveillance—careful tumor monitoring using laboratory, imaging, and biopsy assessments—has been advanced as an alternative to immediate treatment with surgery or radiation and can delay or obviate the risks associated with such treatments.2

In the current decade, active surveillance has expanded from a primarily academic endeavor with limited uptake in community-based practice to an increasingly recognized standard of care alternative3,4 that is now endorsed by multiple guidelines as the preferred option for most low-risk tumors.5,6 However, surveillance does have its own limitations. Aside from known risks of undersampling higher-risk disease, all established surveillance protocols require repeated prostate-specific antigen (PSA) tests and follow-up biopsies to identify tumor grade or volume progression.5 These biopsies are uncomfortable, expensive, and associated with risks of significant bleeding and infection.7

No current consensus exists regarding the optimal frequency of surveillance assessments. In fact, given prostate cancer’s highly variable biology and natural history even within the low-risk group,8 a one-size-fits-all approach, managing all prostate cancers with the same schedule, makes little clinical or biological sense. Clinicians may therefore tailor surveillance intensity based on both tumor characteristics and patient factors, such as comorbidity and anxiety. However, no validated clinical tools currently exist to guide or standardize this strategy, and decisions are often made using heuristics on an ad hoc basis. We therefore aimed to analyze data from 2 large, prospective surveillance cohorts in order to develop and validate a clinical tool to identify those men among whom surveillance can be safely deintensified.

Methods

The model was developed using data from the Canary Prostate Active Surveillance Study (PASS). PASS is a multicenter, prospective cohort study actively enrolling men on active surveillance at 9 North American centers. Men eligible for surveillance per institutional criteria provide informed consent under local institutional review board supervision.9,10 In PASS, prescribed follow-up procedures include PSA every 3 months, clinic visits every 6 months, and ultrasound-guided biopsies at 6 to 12 months and 24 months after diagnosis, then every 2 years. Other tests, including magnetic resonance imaging (MRI) and biomarker assays, are performed at the clinicians’ discretion; because enrollment started in July 2008, most men did not undergo these tests. We included men diagnosed since 2003 and enrolled in PASS before 2017, with Gleason grade group (GG) 1 on diagnostic biopsy and GG1 or no tumor on confirmatory (first surveillance) biopsy. This study followed the Strengthening the Reporting of Observational Studies in Epidemiology (STROBE) reporting guideline.

For model validation, we included men undergoing surveillance at the University of California, San Francisco (UCSF) who were not participants in PASS.11 We again included men diagnosed since 2003 with GG1 tumors on diagnostic biopsy and GG1 or no tumor on confirmatory biopsy.

Statistical Analysis

We developed and validated a prediction model, designated the Canary model, using time-varying covariates based on the outcome of time to first reclassification during surveillance from a biopsy or PSA measurement time. For this study, reclassification was defined as any increase in GG to 2 or more. Men who were not reclassified were censored at the first date among treatment in the absence of reclassification, last date of contact, or 2 years after the last biopsy.
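The censoring rule described above can be sketched in a few lines. This is a minimal illustration rather than the study's analysis code (which was written in R): the function name and argument names are hypothetical, and "2 years" is approximated here as 730 days.

```python
from datetime import date, timedelta
from typing import Optional

# Assumption for this sketch: "2 years after the last biopsy" = 730 days.
TWO_YEARS = timedelta(days=730)


def censoring_date(last_contact: date,
                   last_biopsy: date,
                   treatment_date: Optional[date] = None) -> date:
    """Censoring date for a man who was never reclassified.

    Follow-up ends at the earliest of: treatment without reclassification
    (if any), last contact, or 2 years after the last biopsy.
    """
    candidates = [last_contact, last_biopsy + TWO_YEARS]
    if treatment_date is not None:
        candidates.append(treatment_date)
    return min(candidates)


# Hypothetical man: last biopsy in March 2012, still in contact in 2016,
# never treated. His follow-up is cut off 730 days after the last biopsy.
example = censoring_date(last_contact=date(2016, 6, 1),
                         last_biopsy=date(2012, 3, 1))
print(example)
```

Taking the minimum of the candidate dates means that a man who stops undergoing biopsy does not keep contributing event-free time simply because he remains in contact with the clinic.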

Predictors considered in model development included age at diagnosis (categorized by decade), body mass index (BMI), race/ethnicity (Black, White, or other), family history of prostate cancer, smoking status (current, former, or never), time since diagnosis, percent of biopsy cores involved with cancer, number of biopsies since diagnosis, number of negative biopsies since diagnosis, natural logarithm (ln) (prostate volume), ln (PSA at diagnosis), ln (last known PSA), clinical tumor (T) stage (T1 vs T2), 5α-reductase inhibitor (5ARI) use (current, former, or never), and a previously described PSA kinetic measure (PSAk).12 For men using 5ARI, an interaction term between time since diagnosis and 5ARI use was included in the PSAk calculation, and a sensitivity analysis was performed excluding these men.

Dynamic risk prediction based on partly conditional Cox proportional hazards regression for residual event time13,14 was used to construct a model predicting future risk of reclassification based on information available at each landmark time (eg, time of confirmatory biopsy). The model was built using forward selection based on at least 2 of the 3 following criteria: 2-tailed P value <.05, decrease in the Bayesian information criterion, or increase in area under the receiver operating curve (AUC). Models including all variables and employing least absolute shrinkage and selection operator were also considered.

The Canary model was built to be calculable at any given landmark time or event in the course of surveillance. As an illustrative and clinically relevant example, we determined the risk of reclassification over the next 4 years following confirmatory biopsy. For individuals from the UCSF cohort, absolute risk was calculated using relative risk estimates from the PASS model. Calibration of the model was assessed by comparing the observed reclassification risks with the model-predicted risks for groups, categorized by risk deciles. Although the UCSF cohort is known to be higher risk on average than PASS,15 the risk model was well calibrated in both cohorts. Kaplan-Meier curves for risk groups categorized by the lowest and highest 10th percentiles were presented to gauge the model’s utility for risk stratification.
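The calibration check described above, comparing model-predicted risk with observed risk within risk groups, can be sketched as follows. This is a simplified illustration under stated assumptions: the function names are hypothetical, the Kaplan-Meier estimator is a plain textbook version without confidence intervals, and the inputs are assumed to be per-man predicted 4-year risks, follow-up times in years, and event indicators.

```python
from typing import List, Sequence, Tuple


def km_event_free(times: Sequence[float], events: Sequence[int],
                  horizon: float) -> float:
    """Kaplan-Meier estimate of event-free probability at `horizon`.

    times: follow-up time per man (years).
    events: 1 = reclassified at that time, 0 = censored.
    """
    surv = 1.0
    at_risk = len(times)
    for t in sorted(set(times)):
        if t > horizon:
            break
        n_events = sum(1 for ti, ei in zip(times, events) if ti == t and ei == 1)
        n_leaving = sum(1 for ti in times if ti == t)
        if n_events and at_risk > 0:
            surv *= 1.0 - n_events / at_risk
        at_risk -= n_leaving
    return surv


def calibration_by_group(pred_risk: Sequence[float], times: Sequence[float],
                         events: Sequence[int], horizon: float = 4.0,
                         n_groups: int = 10) -> List[Tuple[float, float]]:
    """Return (mean predicted risk, observed risk) for each risk group.

    Men are sorted by predicted risk and split into `n_groups` groups of
    near-equal size; observed risk is 1 minus the Kaplan-Meier estimate.
    """
    order = sorted(range(len(pred_risk)), key=lambda i: pred_risk[i])
    rows = []
    for g in range(n_groups):
        idx = order[g * len(order) // n_groups:(g + 1) * len(order) // n_groups]
        if not idx:
            continue
        mean_pred = sum(pred_risk[i] for i in idx) / len(idx)
        observed = 1.0 - km_event_free([times[i] for i in idx],
                                       [events[i] for i in idx], horizon)
        rows.append((mean_pred, observed))
    return rows
```

A well-calibrated model yields pairs in which the mean predicted risk and the observed risk are close within every group, which is what the calibration panels of Figure 2 display graphically.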

The prognostic accuracy of the developed model was validated using time-dependent receiver operating characteristic curve analysis16 with AUC, as well as various accuracy summaries (true-positive fraction, false-positive fraction, positive predictive value, and negative predictive value) at selected cutoffs based on the percentiles of 4-year risk estimates at the time of confirmatory biopsy. PASS estimates were corrected for overfitting using 250 replicates of 2-fold cross-validation. Confidence intervals for these quantities were obtained via 500-fold bootstrap sampling for PASS. Decision curve analysis was performed to compare model-guided decision-making to a traditional “follow all” active surveillance strategy and to a watchful waiting “follow none” strategy.
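For readers unfamiliar with decision curve analysis, the quantity compared across strategies is the net benefit at a given risk threshold. The sketch below uses the standard net benefit definition for a binary outcome, true positives per man minus false positives per man weighted by the odds of the threshold. It is a simplification: it ignores censoring, the function names are hypothetical, and the toy data are invented for illustration only.

```python
from typing import Sequence


def net_benefit_model(pred_risk: Sequence[float], outcome: Sequence[int],
                      threshold: float) -> float:
    """Net benefit of following only men whose predicted risk >= threshold."""
    n = len(outcome)
    weight = threshold / (1.0 - threshold)  # odds of the risk threshold
    tp = sum(1 for r, y in zip(pred_risk, outcome) if r >= threshold and y == 1)
    fp = sum(1 for r, y in zip(pred_risk, outcome) if r >= threshold and y == 0)
    return tp / n - weight * fp / n


def net_benefit_follow_all(outcome: Sequence[int], threshold: float) -> float:
    """Net benefit of the uniform "follow all" strategy."""
    prevalence = sum(outcome) / len(outcome)
    return prevalence - (1.0 - prevalence) * threshold / (1.0 - threshold)


# The "follow none" strategy has a net benefit of 0 by definition.
NET_BENEFIT_FOLLOW_NONE = 0.0

# Invented toy data: predicted 4-year risks and observed reclassification.
risks = [0.05, 0.08, 0.12, 0.30, 0.40, 0.60]
reclassified = [0, 0, 0, 0, 1, 1]
nb_model = net_benefit_model(risks, reclassified, threshold=0.20)
nb_all = net_benefit_follow_all(reclassified, threshold=0.20)
print(nb_model, nb_all, NET_BENEFIT_FOLLOW_NONE)
```

A model-guided strategy is preferable at a given threshold when its net benefit exceeds that of both reference strategies; a decision curve plots these values over a range of thresholds.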

To account for a possible ascertainment bias in the fact that for-cause (nonstudy) biopsy frequency may be driven by PSA kinetics and other informative clinical factors, we defined each biopsy as per protocol (on time), early, or late.12 A sensitivity analysis was performed in participants with all biopsies performed on time. Inverse probability weights were applied to the sensitivity analysis in order to account for nonrandom distribution of participants with only on-time biopsies.

A 2-sided P < .05 was considered significant for all analyses, which were performed using R statistics, version 3.4.1 (R Foundation). An online risk calculator based on the final model was developed using R Shiny apps.17

Results

Among 1420 men in PASS, 7 were excluded for treatment within 6 months of diagnosis, 44 for diagnosis before 2003, 307 for GG greater than or equal to 2 at diagnosis or first surveillance biopsy, 105 for no surveillance biopsy, and 107 for missing data, leaving a total of 850 men in the final analysis with a median (interquartile range) age of 64 (58-68) years. Of these, 774 (91%) were White, 42 (5%) were Black, and 34 (4%) were categorized as other. Among 1569 men in the UCSF cohort, 1352 were not in PASS. Of these, 137 were diagnosed before 2003, 415 had GG greater than or equal to 2 at diagnosis or first surveillance biopsy, 188 had no surveillance biopsy, and 79 had missing data, leaving a total of 533 for validation analysis with a median (interquartile range) age of 61 (57-65) years. Of these, 422 (79%) were White, 12 (2%) were Black, and 99 (19%) were classified as other. Table 1 summarizes demographic characteristics and clinical parameters in both cohorts. In PASS, 29 men (3%) were treated without reclassification, 140 (16%) were reclassified within 4 years, 44 (5%) were censored for lack of a biopsy beyond 2 years, and 280 (33%) were still at risk at 4 years. In the UCSF cohort, the corresponding numbers were 56 (11%), 117 (22%), 18 (3%), and 148 (28%), respectively.
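The cohort derivation reported above can be verified by subtracting the exclusion counts from the starting totals. The short check below uses only the numbers stated in the text; the dictionary keys are labels chosen for this sketch.

```python
# Exclusion counts as reported in the Results text.
pass_exclusions = {
    "treated within 6 months of diagnosis": 7,
    "diagnosed before 2003": 44,
    "GG >= 2 at diagnosis or first surveillance biopsy": 307,
    "no surveillance biopsy": 105,
    "missing data": 107,
}
ucsf_exclusions = {
    "diagnosed before 2003": 137,
    "GG >= 2 at diagnosis or first surveillance biopsy": 415,
    "no surveillance biopsy": 188,
    "missing data": 79,
}


def remaining(start: int, exclusions: dict) -> int:
    """Number of men left after applying all exclusions."""
    return start - sum(exclusions.values())


pass_final = remaining(1420, pass_exclusions)   # all PASS participants
ucsf_final = remaining(1352, ucsf_exclusions)   # UCSF men not enrolled in PASS
print(pass_final, ucsf_final)
```

Both results agree with the analysis cohorts of 850 (PASS) and 533 (UCSF) men.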

Table 1. Participant Characteristics.

| Variable | PASS (n = 850) | UCSF (n = 533) |
|---|---|---|
| Age, No. (%), y | | |
| <60 | 342 (40) | 253 (47) |
| 60-70 | 427 (50) | 240 (45) |
| >70 | 81 (10) | 40 (8) |
| BMI, median (IQR) | 27 (25 to 30) | 27 (25 to 29) |
| Race/ethnicity, No. (%) | | |
| White | 774 (91) | 422 (79) |
| Black | 42 (5) | 12 (2) |
| Other<sup>a</sup> | 34 (4) | 99 (19) |
| Diagnostic percent positive cores, median (IQR), % | 8.3 (8.3 to 16.7) | 11 (7 to 19) |
| No. missing percent positive cores | 35 | 9 |
| Diagnostic PSA, median (IQR), ng/mL | 4.8 (3.6 to 6.4) | 5.4 (4.2 to 7.3) |
| No. PSA measurements, median (IQR) | 9 (5 to 15) | 10 (6 to 16) |
| PSAk at confirmatory bx, median (IQR), ng/mL/y | 0.03 (–0.01 to 0.05) | 0.04 (0.01 to 0.05) |
| Prostate size, median (IQR), mL | 42 (31 to 59) | 39 (30 to 54) |
| 5ARI medication use, No. (%) | 115 (14) | 11 (2) |
| Grade reclassification, No. (%) | 179 (21) | 154 (29) |
| Time between diagnostic and confirmatory biopsy, median (IQR) [range], y | 1.0 (0.6 to 1.2) [0.1 to 4.5] | 1.1 (0.8 to 1.3) [0.1 to 4.5] |
| Follow-up, median (IQR), y | | |
| From diagnostic bx, censored patients | 4.1 (2.6 to 5.7) | 3.5 (2.4 to 5.7) |
| From confirmatory bx, censored patients | 3.0 (1.6 to 4.6) | 2.1 (1.1 to 4.5) |

Abbreviations: 5ARI, 5α-reductase inhibitor; BMI, body mass index (calculated as weight in kilograms divided by height in meters squared); bx, biopsy; IQR, interquartile range; PASS, Canary Prostate Active Surveillance Study; PSA, prostate-specific antigen; PSAk, PSA kinetic measure; UCSF, University of California, San Francisco.

<sup>a</sup> For PASS, Other constitutes 20 Asian; 2 Native Hawaiian or other Pacific Islander; 6 mixed race; 3 other; and 3 unknown/refused. For UCSF, Other constitutes 17 Asian/Pacific Islander; 82 unknown.

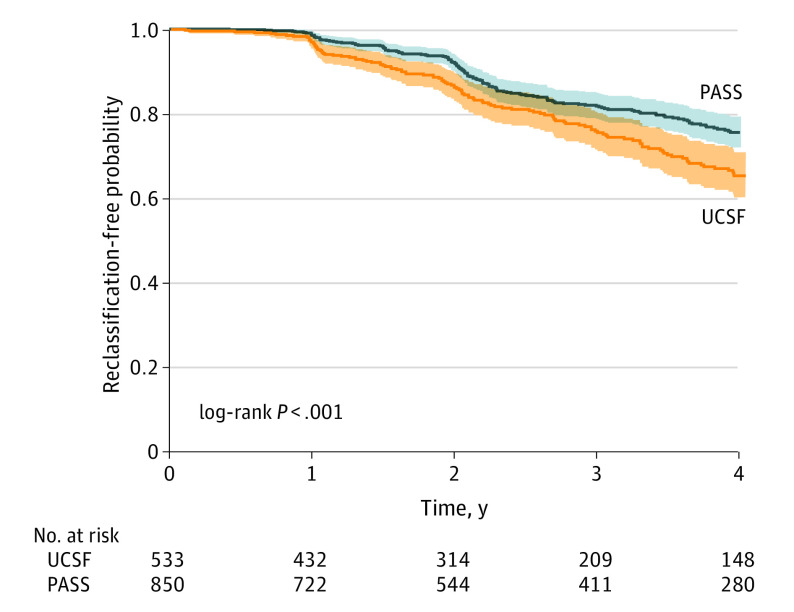

The results of the univariate survival analyses are illustrated by Figure 1. Despite comparable selection criteria, UCSF patients had higher-risk features and were more likely to experience reclassification by 3 and 4 years than PASS patients (4-year event-free probability, 0.65 [95% CI, 0.60-0.71] for UCSF and 0.76 [95% CI, 0.72-0.79] for PASS; P < .001). Table 2 summarizes the results of the Cox proportional hazards regression model. Final variables independently predictive of reclassification in the multivariable Canary model included maximum percent positive cores (hazard ratio [HR], 1.30 [95% CI, 1.09-1.56]; P = .004), history of any negative biopsies following diagnosis (1 vs 0: HR, 0.52 [95% CI, 0.38-0.71]; P < .001 and ≥2 vs 0: HR, 0.18 [95% CI, 0.08-0.40]; P < .001), time since diagnosis (HR, 1.62 [95% CI, 1.28-2.05]; P < .001), BMI (HR, 1.08 [95% CI, 1.05-1.12]; P < .001), prostate size (HR, 0.40 [95% CI, 0.25-0.62]; P < .001), PSA at diagnosis (HR, 1.51 [95% CI, 1.15-1.98]; P = .003), and PSAk (HR, 1.46 [95% CI, 1.23-1.73]; P < .001). Model fit and results were similar if we excluded men with a history of 5ARI exposure. The full and least absolute shrinkage and selection operator models, with substantially similar results, are presented in eTables 2 and 3 in the Supplement, respectively, and the AUC results for each model are listed in eTable 4 in the Supplement. The sensitivity analyses performed to account for potential ascertainment bias did not result in any meaningful differences in either parameter estimates or statistical significance of any model parameter (eTable 5 in the Supplement). The algorithm for calculating 4-year risk of reclassification is given in the eAppendix in the Supplement.

Figure 1. Reclassification-Free Probability Over Time.

Reclassification-free probability over time, beginning at the time of the confirmatory biopsy for PASS and UCSF. 95% CIs are indicated with dotted lines, and the risk table is included below the plots. PASS indicates Canary Prostate Active Surveillance Study; UCSF, University of California, San Francisco.

Table 2. Cox Proportional Hazards Regression Results.

| Variable | Univariable HR (95% CI)<sup>a</sup> | Univariable P value | Multivariable-adjusted HR (95% CI) | Multivariable-adjusted P value |
|---|---|---|---|---|
| Maximum percent positive cores (10% increase) | 1.75 (1.49-2.04) | <.001 | 1.30 (1.09-1.56) | .004 |
| Prior negative biopsy (since diagnosis) | | | | |
| 1 vs 0 | 0.47 (0.35-0.64) | <.001 | 0.52 (0.38-0.71) | <.001 |
| ≥2 vs 0 | 0.20 (0.09-0.43) | <.001 | 0.18 (0.08-0.40) | <.001 |
| ln time since diagnosis, y | 1.17 (0.96-1.43) | .13 | 1.62 (1.28-2.05) | <.001 |
| BMI | 1.06 (1.02-1.10) | .002 | 1.08 (1.05-1.12) | <.001 |
| ln (prostate size), mL | 0.50 (0.35-0.71) | <.001 | 0.40 (0.25-0.62) | <.001 |
| ln (diagnostic PSA) | 1.02 (0.82-1.28) | .83 | 1.51 (1.15-1.98) | .003 |
| PSAk (0.10 increase) | 1.56 (1.29-1.90) | <.001 | 1.46 (1.23-1.73) | <.001 |

Abbreviations: BMI, body mass index; HR, hazard ratio; ln, natural logarithm; PSA, prostate-specific antigen; PSAk, PSA kinetic measure.

<sup>a</sup> HRs and 95% CIs are calculated with robust variance estimates to account for correlations from multiple observations within the same individual.
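To illustrate how the multivariable hazard ratios in Table 2 combine, the following sketch computes the relative hazard of reclassification between two hypothetical men. It is illustrative only and is not the Canary risk calculator: it uses the rounded published HRs, assumes the covariate scalings shown in the table row labels, and cannot produce absolute risks because the baseline hazard and full algorithm are given only in the eAppendix. All function, key, and variable names are hypothetical; the reference man uses the PASS medians from Table 1.

```python
import math

# Multivariable-adjusted HRs from Table 2 (rounded as published).
# Key names are labels chosen for this sketch.
TABLE2_HR = {
    "max_pct_cores_per_10": 1.30,        # per 10% increase
    "one_negative_biopsy": 0.52,         # 1 vs 0 prior negative biopsies
    "two_plus_negative_biopsies": 0.18,  # >=2 vs 0
    "ln_years_since_dx": 1.62,           # per unit of ln(years since diagnosis)
    "bmi": 1.08,                         # per BMI unit
    "ln_prostate_ml": 0.40,              # per unit of ln(prostate size, mL)
    "ln_dx_psa": 1.51,                   # per unit of ln(diagnostic PSA)
    "psak_per_0_10": 1.46,               # per 0.10 increase in PSAk
}


def log_relative_hazard(max_pct_cores, negative_biopsies, years_since_dx,
                        bmi, prostate_ml, dx_psa, psak):
    """Linear predictor: sum of ln(HR) times each scaled covariate."""
    b = {name: math.log(hr) for name, hr in TABLE2_HR.items()}
    lp = b["max_pct_cores_per_10"] * (max_pct_cores / 10.0)
    if negative_biopsies == 1:
        lp += b["one_negative_biopsy"]
    elif negative_biopsies >= 2:
        lp += b["two_plus_negative_biopsies"]
    lp += b["ln_years_since_dx"] * math.log(years_since_dx)
    lp += b["bmi"] * bmi
    lp += b["ln_prostate_ml"] * math.log(prostate_ml)
    lp += b["ln_dx_psa"] * math.log(dx_psa)
    lp += b["psak_per_0_10"] * (psak / 0.10)
    return lp


def relative_hazard(man_a, man_b):
    """Hazard of man_a relative to man_b under the proportional hazards form."""
    return math.exp(log_relative_hazard(**man_a) - log_relative_hazard(**man_b))


# Hypothetical reference man built from the PASS medians in Table 1.
reference = dict(max_pct_cores=8.3, negative_biopsies=0, years_since_dx=1.0,
                 bmi=27, prostate_ml=42, dx_psa=4.8, psak=0.03)
with_negative_bx = dict(reference, negative_biopsies=1)
larger_prostate = dict(reference, prostate_ml=84)  # doubled prostate size

print(round(relative_hazard(with_negative_bx, reference), 2))
print(round(relative_hazard(larger_prostate, reference), 2))
```

Because prostate size enters on the natural logarithm scale, doubling the prostate volume multiplies the hazard by 0.40 raised to the power ln 2, not by 0.40 itself.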

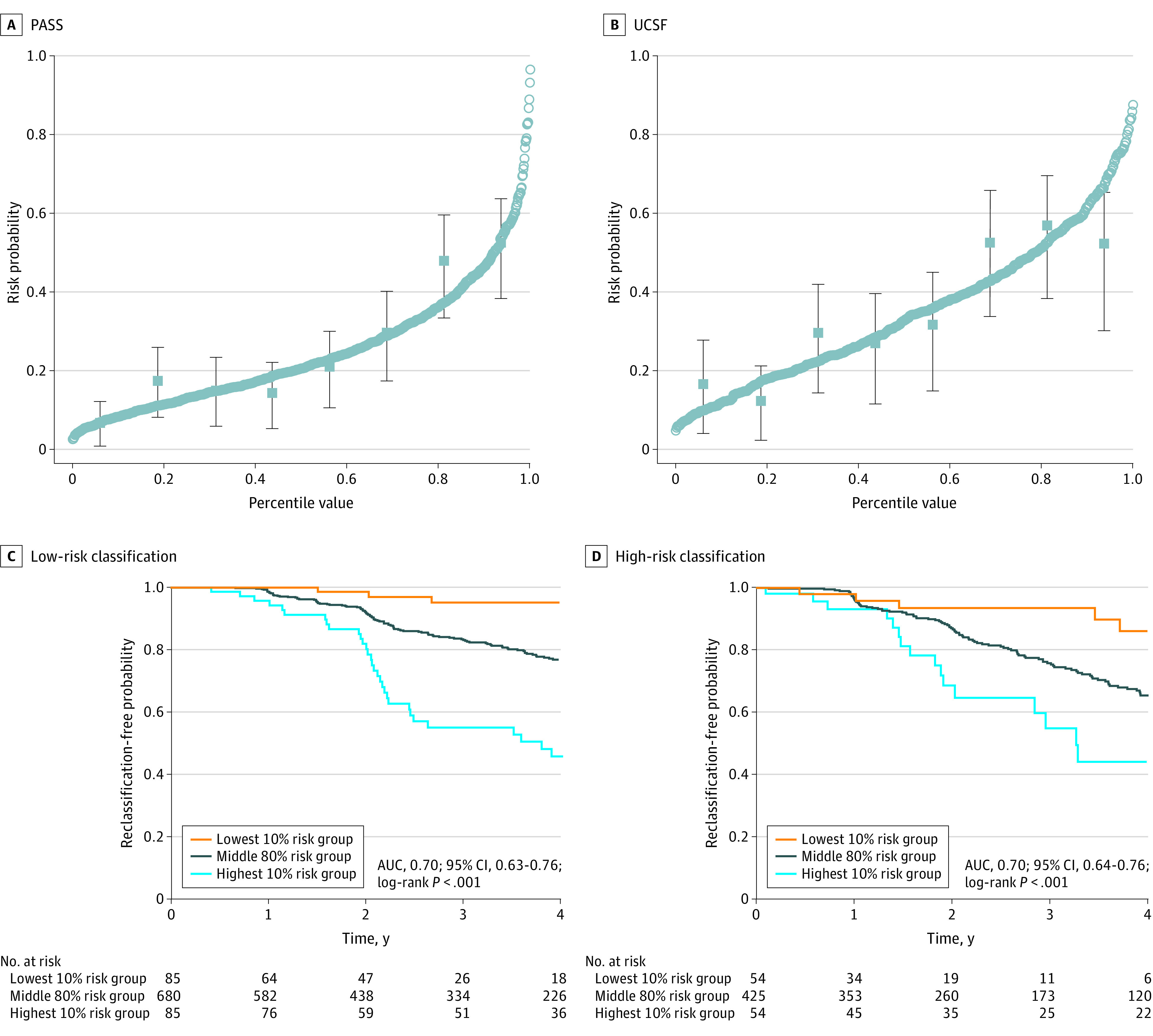

For prediction of nonreclassification at 4 years after confirmatory biopsy, the AUC was 0.70 (bootstrap-derived 95% CI, 0.63-0.76; P < .001) for the PASS cohort and 0.70 (95% CI, 0.64-0.76; P < .001) for the UCSF cohort. Figure 2A and 2B illustrate model calibration in the 2 cohorts. Calibration was good for both PASS and UCSF. Figure 2C and 2D demonstrate that the Canary model can be used to identify active surveillance patients at very low risk and high risk for reclassification at the time of confirmatory biopsy. Table 3 and eTable 1 in the Supplement also demonstrate model prediction at the extremes within these overall low-risk cohorts. These results indicate, for example, that men in the lowest decile of risk (risk threshold of 0.08 [10th percentile]) have a negative predictive value of 0.95 (95% CI, 0.89-1.00) for reclassification and that, among men in the lowest quartile of risk (risk threshold of 0.13 [25th percentile]), avoiding all surveillance for 4 years would miss only 29 (95% CI, 16-42) reclassification events per 1000 men. The results were substantially similar for the UCSF validation cohort. eFigure 1 in the Supplement presents results of the decision curve analysis, demonstrating in both cohorts substantially greater net benefit for surveillance guided by the Canary model across a wide range of risk thresholds compared with either the standard, uniform-intensity “follow all” surveillance or the “follow none” approach along the lines of watchful waiting. The 4-year horizon is admittedly arbitrary, and for a man continuing to undergo PSA or other testing, the model can be updated as often as needed; an illustrative example is provided in eFigure 3 in the Supplement.

Figure 2. Canary Model Calibration Results for PASS vs UCSF.

Plotted circles in PASS (A) and UCSF (B) correspond to 4-year risk predictions from the model fit. Plotted squares with lines correspond to observed reclassification-free probability with 95% CIs based on a Kaplan-Meier curve analysis that divided the data into smaller sections from the model-based risk prediction results. Reclassification-free probability for low-risk classification (C) and high-risk classification (D) is shown at 4 years following confirmatory biopsy for participants in the lowest 10%, middle 80%, and highest 10% risk groups. UCSF recalibration results are in blue. AUC indicates area under the receiver operating curve; PASS, Canary Prostate Active Surveillance Study; and UCSF, University of California, San Francisco.

Table 3. Low-Risk Threshold Summary.

| Risk threshold (percentile)<sup>a</sup> | TPR (95% CI<sup>b</sup>) | FPR (95% CI<sup>b</sup>) | NPV (95% CI<sup>b</sup>) | PPV (95% CI<sup>b</sup>) | Follow-ups avoided per 1000 men<sup>c,d</sup> | Reclass missed, No. (95% CI) per 1000 men<sup>e</sup> |
|---|---|---|---|---|---|---|
| PASS | | | | | | |
| 0.08 (10th) | 0.98 (0.93-1.00) | 0.87 (0.75-1.00) | 0.95 (0.89-1.00) | 0.26 (0.23-0.30) | 100 | 5 (0-11) |
| 0.10 (15th) | 0.93 (0.86-1.00) | 0.80 (0.65-0.95) | 0.90 (0.84-0.97) | 0.27 (0.23-0.31) | 150 | 14 (5-24) |
| 0.11 (20th) | 0.90 (0.81-0.98) | 0.73 (0.58-0.89) | 0.88 (0.82-0.94) | 0.28 (0.24-0.32) | 200 | 23 (12-35) |
| 0.13 (25th) | 0.87 (0.78-0.95) | 0.67 (0.51-0.83) | 0.88 (0.83-0.94) | 0.29 (0.24-0.34) | 250 | 29 (16-42) |
| UCSF | | | | | | |
| 0.08 (3rd) | 0.99 (0.96-1.00) | 0.96 (0.93-0.99) | 0.87 (0.54-1.00) | 0.35 (0.30-0.41) | 34 (21-49) | 4 (0-15) |
| 0.10 (7th) | 0.98 (0.95-1.00) | 0.89 (0.84-0.94) | 0.92 (0.77-1.00) | 0.37 (0.32-0.43) | 66 (47-85) | 5 (0-15) |
| 0.11 (9th) | 0.96 (0.93-0.99) | 0.86 (0.81-0.92) | 0.88 (0.71-0.98) | 0.37 (0.32-0.43) | 94 (71-116) | 12 (2-26) |
| 0.13 (13th) | 0.94 (0.89-0.98) | 0.82 (0.77-0.89) | 0.84 (0.70-0.94) | 0.38 (0.32-0.43) | 126 (99-152) | 20 (7-38) |

Abbreviations: FPR, false-positive rate; NPV, negative predictive value; PASS, Canary Prostate Active Surveillance Study; PPV, positive predictive value; PSA, prostate-specific antigen; reclass, reclassification; TPR, true-positive rate; UCSF, University of California, San Francisco.

<sup>a</sup> Risk thresholds calculated based on PASS model fit, predicting out 4 years from confirmatory biopsy. Risk threshold is set at PASS risk percentiles; corresponding UCSF percentiles are listed.

<sup>b</sup> 95% CI based on 500 bootstrap samples.

<sup>c</sup> Includes all surveillance biopsies and PSA follow-ups for 4 years.

<sup>d</sup> The PASS data are defined exactly by the row quantiles: By definition, a 10th-percentile threshold will avoid 10% of biopsies, a 15th-percentile threshold will avoid 15%, etc. The UCSF data are validating the Canary thresholds, with ranges in parentheses.

<sup>e</sup> In PASS, the estimated number of reclassifications at 4 years from confirmatory biopsy is 245 per 1000 men; in UCSF, the number is 346. Canary model predictions are based on 4-year predictions from confirmatory biopsy. PASS estimates have been corrected for overfitting using 250 repeats of 2-fold cross-validation.
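The columns of Table 3 are linked by simple arithmetic, which the following sketch makes explicit for the PASS rows. It assumes that missed reclassifications per 1000 men are approximately the follow-ups avoided multiplied by (1 − NPV), and that the TPR is approximately 1 minus the missed events divided by the 245 expected events per 1000 men. Because the published values are rounded and corrected for overfitting, agreement is only approximate; function names are hypothetical.

```python
# PASS rows of Table 3: (follow-ups avoided per 1000 men, NPV,
# published reclassifications missed per 1000 men, published TPR).
PASS_ROWS = [
    (100, 0.95, 5, 0.98),
    (150, 0.90, 14, 0.93),
    (200, 0.88, 23, 0.90),
    (250, 0.88, 29, 0.87),
]

# Estimated reclassifications at 4 years per 1000 men in PASS (table footnote).
PASS_EVENTS_PER_1000 = 245


def missed_per_1000(avoided_per_1000: float, npv: float) -> float:
    """Men below the threshold who are nonetheless reclassified."""
    return avoided_per_1000 * (1.0 - npv)


def tpr_from_missed(missed: float, events_per_1000: float) -> float:
    """Share of reclassification events that remain above the threshold."""
    return 1.0 - missed / events_per_1000


for avoided, npv, published_missed, published_tpr in PASS_ROWS:
    est_missed = missed_per_1000(avoided, npv)
    est_tpr = tpr_from_missed(published_missed, PASS_EVENTS_PER_1000)
    print(f"avoided={avoided}: missed ~{est_missed:.1f} "
          f"(published {published_missed}), TPR ~{est_tpr:.3f} "
          f"(published {published_tpr})")
```

The approximations track the published values to within about 1 event per 1000 men and 0.02 in TPR, which is consistent with rounding of the reported NPVs.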

A web tool to calculate Canary model results for use at the point of clinical care is available as the Calculator for Future Progression on Active Surveillance.18 See eFigure 2 in the Supplement for an illustrative example.

Discussion

Active surveillance is now endorsed by guidelines on both sides of the Atlantic as the preferred standard of care for most men with low-risk prostate cancer,5,6,19 and data from multicenter registries have confirmed rapid increases in its adoption in US community practice.3,4 However, even among the guidelines that now formally endorse active surveillance, only 1 focuses specifically on surveillance and addresses details regarding follow-up protocols. This guideline simply states that serial biopsies should be performed every 2 to 5 years or more often if clinically warranted—a statement based on review of existing protocols rather than on any specific evidence.5 To our knowledge, no strong evidence or validated clinical tools currently exist to help tailor active surveillance to an individual patient’s reclassification risk.2

This cohort study presents the development and successful validation of a model aimed at this goal. Although we present outcomes between confirmatory biopsy and the 4-year time point as a clinically relevant milestone, the model is intended to be calculable at any point in the surveillance trajectory as additional data accumulate. Our findings suggest that large subpopulations of men eligible for active surveillance could safely adopt a much less intensive regimen after confirmatory biopsy, deferring not only biopsy but also imaging studies and, at least in principle, many interval PSA tests. We stress, however, that the model is not intended to dictate specific care patterns; rather, it is meant to guide discussions between health care professionals and patients regarding how intensively a given tumor needs to be monitored.

The rates of missed reclassification for men in the lowest quartile of risk are quite low; assuming a biopsy was scheduled at 4 years, recognition of these events would be at worst delayed but not missed completely. In fact, only among men at the highest percentiles of risk does the probability of reclassification reach 50% (eTable 1 in the Supplement). The AUCs achieved by our model are good considering the generally narrow range of dynamic risk reflected in these cohorts, which include strictly low-risk disease. Models perform better when predictions can be made across a wider range of risk.12,20 Even in the unselected UCSF active surveillance cohort, which represents a relatively broad risk range, a prior analysis of nomograms intended to predict “indolent” disease found that their AUCs were typically only at or below 0.60.21 A particular strength of this analysis is the fact that the PASS protocol specifies regular biopsies every 12 to 24 months, and compliance has been quite high.

We do emphasize that, under current clinical paradigms, surveillance biopsies cannot be omitted altogether. Many men who are under surveillance in community practice often do not, in fact, undergo confirmatory biopsy or requisite follow-up biopsies, nor even MRI scans intended to serve as surrogates for biopsy (albeit with insufficient data to support such use).22 A randomized clinical trial of immediate treatment vs monitoring with PSAs alone showed significantly higher rates of disease progression in the monitoring arm.23 Both the PASS and UCSF protocols specify confirmatory biopsy within 1 year of diagnosis, and our model results may be less applicable for patients with a longer initial interval between biopsies. Hopefully, future subset analyses from this trial and longer follow-up in our cohorts and others will identify some men who need even less frequent or intense surveillance (including eventually monitoring some men with passive watchful waiting). Study results suggest that the Canary model represents a major step toward that goal, providing a tool for dynamic risk prediction and allowing for updating reclassification risks beyond the time of confirmatory biopsy.

Limitations

We did not include imaging or other biomarkers in our modeling efforts because, as discussed in the Methods section, most men with prolonged follow-up did not undergo these tests. Imaging tests—in particular multiparametric MRI—and genomic biomarkers have been proposed to help customize surveillance for individual patients. However, these tests are limited by interobserver variation24,25 and tumor heterogeneity,26 respectively. To our knowledge, neither has been formally evaluated in a prospective, active surveillance context; both are expensive, and neither is endorsed for routine use by the guidelines.5 Although both imaging and markers may play a greater role in the future, maximal information should first be extracted from data currently obtained as part of routine care, namely, the clinical parameters as reported in this study. Furthermore, the Canary model can serve as a clinical criterion standard model against which both imaging- and tissue-based biomarkers can be validated in the future.27

Individual reclassification risk may be affected by multiple unmeasured factors, including variations in ultrasound quality, biopsy technique, pathologic interpretation, etc. However, the good calibration between the PASS and UCSF cohorts is reassuring. The most important limitation of this analysis is the end point of reclassification. Prior studies have reported that GG2 tumors reflect a spectrum of risk and that quantifying the extent of Gleason pattern 4 disease yields improved predictions compared with dichotomizing GG1 vs GG2.28,29 Some GG2 tumors with minimal Gleason pattern 4 probably are biologically indistinguishable from GG1; in fact, they may frequently be overclassified even by experienced subspecialist pathologists.30

This phenomenon hampers efforts at model optimization, as it is naturally more difficult to predict an end point in a data set in which tumors meeting or not meeting the end point may actually be similar both biologically and clinically. Therefore, this end point is by definition conservative, and we emphasize that our focus is on negative predictive value. Reclassifying to GG2 on a surveillance biopsy may or may not indicate treatment (this clinical decision should consider all disease parameters in addition to grade31), but the large majority of those who maintain stability at GG1 are safe to continue on surveillance. Our goal explicitly is to select men who may not only continue to defer treatment but can further deintensify their surveillance regimen. Our goal is not to identify those patients who require immediate active treatment.

We do not propose that any discrete risk threshold from our model be applied in binary or strict fashion to clinical decision-making. Rather, the model should guide shared decision-making together with an individual man’s life expectancy, information preferences, and risk aversion. Presented with a low risk of reclassification over the next 4 years, a man may choose to defer surveillance interventions or not, reflecting on his past experiences with biopsy, other health concerns, etc. Furthermore, in considering this decision, for some men, an understanding of risk percentile (eg, that an individual’s risk is in the bottom 25% of his peers) may be as important as the absolute risk of reclassification. We acknowledge that this model is not straightforward to calculate at a glance, and we have made available a web-based calculator (eFigure 2 in the Supplement).

Conclusions

The 2018 US Preventive Services Task Force decision to upgrade prostate cancer screening to a recommendation for shared decision-making explicitly cited the increase in active surveillance rates as a key factor in its reconsideration but also referenced harms related to surveillance biopsies.32 Given that approximately half of all newly diagnosed prostate cancers are potentially appropriate for active surveillance, deintensifying surveillance for substantial numbers of patients would reduce risks of biopsy and further shift the benefit-harm ratio for early detection in favor of PSA-based testing. Biopsies are also the highest cost element of active surveillance,33 and reducing their frequency would be associated with substantial savings.

Prostate cancer reflects an extremely broad range of biologic and clinical risk, and the intensity of its management should be tailored as much as possible to an individual’s risk of disease progression. Even among those tumors categorized broadly as low risk, although some merit immediate treatment and many require canonical active surveillance, many others have negligible potential to progress, at least in the intermediate term, and can be followed much less intensively. We have developed and validated a multivariable model that can help identify those men who can safely deintensify their surveillance regimens. This model may serve as a clinical reference standard against which MRI and other imaging and biomarker tests may be assessed. With additional follow-up and other validation studies, we anticipate management can be still further personalized for men across the full range of prostate cancer risk.

eAppendix. Supplemental Methods

eFigure 1. Decision Curve Analysis

eFigure 2. Screenshot of Calculator for Future Progression on Active Surveillance

eFigure 3. An Illustration of Dynamic Risk Prediction

eTable 1. High-Risk Threshold Summary

eTable 2. Cox Proportional Hazards Regression Results of the Full Model

eTable 3. Cox Proportional Hazards Regression Results of the Alternative Model Selected by Least Absolute Shrinkage and Selection Operator

eTable 4. Comparison of Cross-Validated Area Under the Receiver Operator Curve (AUC) of the Three Different Models in PASS

eTable 5. Evaluation of Potential for Ascertainment Bias

eReferences

References

- 1. Siegel RL, Miller KD, Jemal A. Cancer statistics, 2020. CA Cancer J Clin. 2020;70(1):7-30. doi: 10.3322/caac.21590

- 2. Ganz PA, Barry JM, Burke W, et al. National Institutes of Health State-of-the-Science Conference: role of active surveillance in the management of men with localized prostate cancer. Ann Intern Med. 2012;156(8):591-595. doi: 10.7326/0003-4819-156-8-201204170-00401

- 3. Cooperberg MR, Carroll PR. Trends in management for patients with localized prostate cancer, 1990-2013. JAMA. 2015;314(1):80-82. doi: 10.1001/jama.2015.6036

- 4. Auffenberg GB, Lane BR, Linsell S, Cher ML, Miller DC. Practice- vs physician-level variation in use of active surveillance for men with low-risk prostate cancer: implications for collaborative quality improvement. JAMA Surg. 2017;152(10):978-980. doi: 10.1001/jamasurg.2017.1586

- 5. Chen RC, Rumble RB, Loblaw DA, et al. Active surveillance for the management of localized prostate cancer (Cancer Care Ontario Guideline): American Society of Clinical Oncology clinical practice guideline endorsement. J Clin Oncol. 2016;34(18):2182-2190. doi: 10.1200/JCO.2015.65.7759

- 6. Sanda MG, Cadeddu JA, Kirkby E, et al. Clinically localized prostate cancer: AUA/ASTRO/SUO guideline. Part I: risk stratification, shared decision making, and care options. J Urol. 2018;199(3):683-690. doi: 10.1016/j.juro.2017.11.095

- 7. Bjurlin MA, Wysock JS, Taneja SS. Optimization of prostate biopsy: review of technique and complications. Urol Clin North Am. 2014;41(2):299-313. doi: 10.1016/j.ucl.2014.01.011

- 8. Cooperberg MR, Erho N, Chan JM, et al. The diverse genomic landscape of clinically low-risk prostate cancer. Eur Urol. 2018;74(4):444-452. doi: 10.1016/j.eururo.2018.05.014

- 9. Newcomb LF, Thompson IM Jr, Boyer HD, et al.; Canary PASS Investigators. Outcomes of active surveillance for clinically localized prostate cancer in the prospective, multi-institutional Canary PASS cohort. J Urol. 2016;195(2):313-320. doi: 10.1016/j.juro.2015.08.087

- 10. Prostate Active Surveillance Study (PASS). ClinicalTrials.gov identifier: NCT00756665. Updated December 23, 2019. Accessed July 22, 2020. https://clinicaltrials.gov/ct2/show/NCT00756665?term=NCT00756665&draw=2&rank=1

- 11. Masic S, Cowan JE, Washington SL, et al. Effects of initial Gleason grade on outcomes during active surveillance for prostate cancer. Eur Urol Oncol. 2018;1(5):386-394. doi: 10.1016/j.euo.2018.04.018

- 12. Cooperberg MR, Brooks JD, Faino AV, et al. Refined analysis of prostate-specific antigen kinetics to predict prostate cancer active surveillance outcomes. Eur Urol. 2018;74(2):211-217. doi: 10.1016/j.eururo.2018.01.017

- 13. Zheng Y, Heagerty PJ. Partly conditional survival models for longitudinal data. Biometrics. 2005;61(2):379-391. doi: 10.1111/j.1541-0420.2005.00323.x

- 14. Maziarz M, Heagerty P, Cai T, Zheng Y. On longitudinal prediction with time-to-event outcome: comparison of modeling options. Biometrics. 2017;73(1):83-93. doi: 10.1111/biom.12562

- 15. Inoue LYT, Lin DW, Newcomb LF, et al. Comparative analysis of biopsy upgrading in four prostate cancer active surveillance cohorts. Ann Intern Med. 2018;168(1):1-9. doi: 10.7326/M17-0548

- 16. Heagerty PJ, Zheng Y. Survival model predictive accuracy and ROC curves. Biometrics. 2005;61(1):92-105. doi: 10.1111/j.0006-341X.2005.030814.x

- 17. RStudio. shinyapps.io. Accessed July 28, 2020. https://www.shinyapps.io/

- 18. Prostate Active Surveillance Study. Canary PASS active surveillance risk calculators. Accessed July 28, 2020. https://canarypass.org/calculators/

- 19. Mottet N, Bellmunt J, Bolla M, et al. EAU-ESTRO-SIOG guidelines on prostate cancer. Part 1: screening, diagnosis, and local treatment with curative intent. Eur Urol. 2017;71(4):618-629. doi: 10.1016/j.eururo.2016.08.003

- 20. Cooperberg MR, Broering JM, Carroll PR. Risk assessment for prostate cancer metastasis and mortality at the time of diagnosis. J Natl Cancer Inst. 2009;101(12):878-887. doi: 10.1093/jnci/djp122

- 21. Wang S-Y, Cowan JE, Cary KC, Chan JM, Carroll PR, Cooperberg MR. Limited ability of existing nomograms to predict outcomes in men undergoing active surveillance for prostate cancer. BJU Int. 2014;114(6b):E18-E24. doi: 10.1111/bju.12554

- 22. Luckenbaugh AN, Auffenberg GB, Hawken SR, et al.; Michigan Urological Surgery Improvement Collaborative. Variation in guideline concordant active surveillance followup in diverse urology practices. J Urol. 2017;197(3 pt 1):621-626. doi: 10.1016/j.juro.2016.09.071

- 23. Hamdy FC, Donovan JL, Lane JA, et al. 10-Year outcomes after monitoring, surgery, or radiotherapy for localized prostate cancer. N Engl J Med. 2016;375(15):1415-1424. doi: 10.1056/NEJMoa1606220

- 24. Sonn GA, Fan RE, Ghanouni P, et al. Prostate magnetic resonance imaging interpretation varies substantially across radiologists. Eur Urol Focus. 2019;5(4):592-599. doi: 10.1016/j.euf.2017.11.010

- 25. Greer MD, Brown AM, Shih JH, et al. Accuracy and agreement of PIRADSv2 for prostate cancer mpMRI: a multireader study. J Magn Reson Imaging. 2017;45(2):579-585. doi: 10.1002/jmri.25372

- 26. Salami SS, Hovelson DH, Kaplan JB, et al. Transcriptomic heterogeneity in multifocal prostate cancer. JCI Insight. 2018;3(21):3. doi: 10.1172/jci.insight.123468

- 27. McShane LM, Altman DG, Sauerbrei W, Taube SE, Gion M, Clark GM; Statistics Subcommittee of the NCI-EORTC Working Group on Cancer Diagnostics. Reporting recommendations for tumor marker prognostic studies. J Clin Oncol. 2005;23(36):9067-9072. doi: 10.1200/JCO.2004.01.0454

- 28. Reese AC, Cowan JE, Brajtbord JS, Harris CR, Carroll PR, Cooperberg MR. The quantitative Gleason score improves prostate cancer risk assessment. Cancer. 2012;118(24):6046-6054. doi: 10.1002/cncr.27670

- 29. Sauter G, Steurer S, Clauditz TS, et al. Clinical utility of quantitative Gleason grading in prostate biopsies and prostatectomy specimens. Eur Urol. 2016;69(4):592-598. doi: 10.1016/j.eururo.2015.10.029

- 30. McKenney JK, Simko J, Bonham M, et al.; Canary/Early Detection Research Network Prostate Active Surveillance Study Investigators. The potential impact of reproducibility of Gleason grading in men with early stage prostate cancer managed by active surveillance: a multi-institutional study. J Urol. 2011;186(2):465-469. doi: 10.1016/j.juro.2011.03.115

- 31. Leapman MS, Ameli N, Cooperberg MR, et al. Quantified clinical risk change as an end point during prostate cancer active surveillance. Eur Urol. 2017;72(3):329-332. doi: 10.1016/j.eururo.2016.04.021

- 32. Grossman DC, Curry SJ, Owens DK, et al.; US Preventive Services Task Force. Screening for prostate cancer: US Preventive Services Task Force recommendation statement. JAMA. 2018;319(18):1901-1913. doi: 10.1001/jama.2018.3710

- 33. Keegan KA, Dall'Era MA, Durbin-Johnson B, Evans CP. Active surveillance for prostate cancer compared with immediate treatment: an economic analysis. Cancer. 2012;118(14):3512-3518. doi: 10.1002/cncr.26688
