Abstract

Aim

This paper describes how we engaged with adolescents and health providers to integrate access to digital health interventions as part of a large-scale secondary school health and wellbeing survey in New Zealand.

Methods

We conducted nine participatory, iterative co-design sessions involving 29 adolescents, and two workshops with young people (n = 11), digital and health service providers (n = 11) and researchers (n = 9), to gain insights into end-user perspectives on the concept and on how best to integrate digital interventions into the survey.

Results

Students perceived integrating access to digital health interventions into a large-scale youth health survey as acceptable and highly beneficial. They did not want personalized/normative feedback, but thought that every student should be offered all the help options. Participants identified key principles: assurance of confidentiality, usability, participant choice and control, and appropriate language. They highlighted wording as important for ease and comfort, and emphasised the importance of user control. Participants expressed that it would be useful and acceptable for survey respondents to receive information about digital help options addressing a range of health and wellbeing topics.

Conclusion

The methodology of adolescent-practitioner-researcher collaboration and partnership was central to this research and provided useful insights for the development and delivery of adolescent health surveys integrated with digital help options. Results from the ongoing study will provide data on the impact of integrating digital health interventions into large-scale surveys, a novel methodology. Future research engaging with adolescents after interventions have been delivered will help to explore benefits over time.

Keywords: Adolescent, digital health interventions, delivery of health care, health promotion, primary prevention, co-design

Introduction

Many digital health interventions have been shown to be effective for improving health and wellbeing in adolescents1–8 and are of significant interest due to their potential for enormous scalability and cost-effectiveness. Digital health interventions and digital surveys often recruit from the same communities and share the goal of improving health outcomes. Access to digital interventions could be integrated into digital surveys, for example, so that survey respondents are automatically and confidentially offered opportunities to register for or receive health information or links to programs or apps. Such an approach could offer significant potential gains. First, digital technology can allow anonymized linking of survey and intervention uptake data, enabling nuanced analysis of intervention uptake. Second, as survey respondents will have just reflected on their health, they may be particularly receptive to direct and easy access to health information and interventions. Health survey respondents are typically not provided with support regarding health concerns following surveys; therefore, developing a simple and acceptable approach to do this could be an important ethical development.

We systematically reviewed the literature and found no large-scale population health surveys that incorporated access to digital interventions. Small-scale surveys and clinical screening tools that have provided access to tailored interventions or links to relevant web-based resources have had promising or positive results9–11 and have been seen as useful by school health nurses and youth.12 Available data on uptake and appreciation of survey-integrated interventions for any age group are limited but show potential to encourage participants to seek help and change behavior.10–14

Overview of the Youth2000 surveys

The Youth2000 surveys are a series of cross-sectional studies carried out in 2001, 2007 and 2012, focusing on indicators of health and wellbeing in New Zealand secondary school students. Each survey was substantial, involving approximately 100 schools and between 8,500 and 10,000 students.15 Nationally representative samples of secondary school students aged 12–18 years were recruited by randomly selecting schools within New Zealand and then randomly selecting students from the rolls of included schools. The surveys featured hundreds of questions on a wide range of health topics, including substance abuse, sexual health, physical activity, emotional health and social connectedness. Anonymised, self-reported responses were collected through multimedia computer-assisted self-interviews (M-CASI). These were administered on laptops for the 2001 survey and on tablets for the 2007 and 2012 surveys.15 Youth19 is the most recent survey of the Youth2000 series and is currently ongoing.

At a time when health surveys and brief health interventions each frequently use digital technology, often recruiting participants from the same population, we aimed to: (1) develop an adolescent health survey that included integrated ‘opt in’ digital interventions; (2) explore digital intervention uptake by, and impact on, subgroups of the surveyed population; and (3) improve the uptake of digital interventions among underserved groups.

In this study, we used participatory design principles to explore secondary school students’ perspectives on the concept of a health survey that includes integrated ‘opt in’ digital health interventions (the ‘Intervention-Integrated Survey’) and to ensure that they had a central role in its design and development. Participatory design involves end-users in decision making when designing new technologies and is deeply rooted in democratisation. Our research paradigm aligns with the contextmapping framework for participatory design methods proposed by Visser et al. (2005).16 We followed its research steps of preparation, sensitizing participants, group sessions, analysis and communication. The process also included discussion of users’ values, facilitating ongoing participation, codetermination and respect. Any conflict that emerges during the design process is viewed as a resource that provides opportunities to create innovative solutions.17,18

We used a co-design process that involved repeated evaluation of designs by users from the early stages of the study. This iterative qualitative research process identifies and incorporates the perspectives of the target users.19–21 As researchers, we played the role of ‘facilitators’, providing survey questions, design templates and ideas to the participants. The student participants were actively involved in the design process, serving as experts in their own experience. Students were therefore empowered to generate new ideas and concepts in accordance with their needs and in cooperation with the researchers.22 Co-design principles have been successfully used in working with young people to develop web-based support tools to promote youth mental health23 and sexual health.24,25

This paper describes the process by which we engaged with young people and health practitioners, including digital service providers, to develop an intervention-integrated survey for adolescents. We aimed to explore secondary school students’ perspectives on the overall concept of intervention-integrated surveys and the particular interventions to be integrated. We also sought to develop processes for linking the survey and the digital help options in a way that was secure and appealing to young people. The survey (the Youth19 Rangatahi Smart Survey, or Youth19), the most recent survey of the Youth2000 series, was administered to approximately 7,500 adolescents in the Northern region of New Zealand in the second half of 2019.

Methods

Participants

Co-design sessions

We chose secondary school students as our co-designers to gain insights into end-user perspectives. We purposefully selected and recruited three schools from ethnically diverse low-income areas and two schools from ethnically diverse high-income areas. The study was advertised in each school and students over the age of 16 years volunteered to participate. The University Ethics Committee regards young persons aged 16 or above as able to give consent for their own participation in research. In total, 29 students (18 female; 9 male; 1 transmale; 1 nonbinary: 6 Māori, 1 Pacific Islander, 7 Asian, 16 European) were recruited for nine co-design sessions, each involving between two and four student participants.

Workshops

We invited secondary school students, young post-secondary school students, digital health service providers, and school and community health practitioners to participate in workshops. We held two workshops that included both adolescents and adults. In total, there were eight adolescents, three young adults, five digital health care service providers, six school or community stakeholders and nine researchers.

Study design

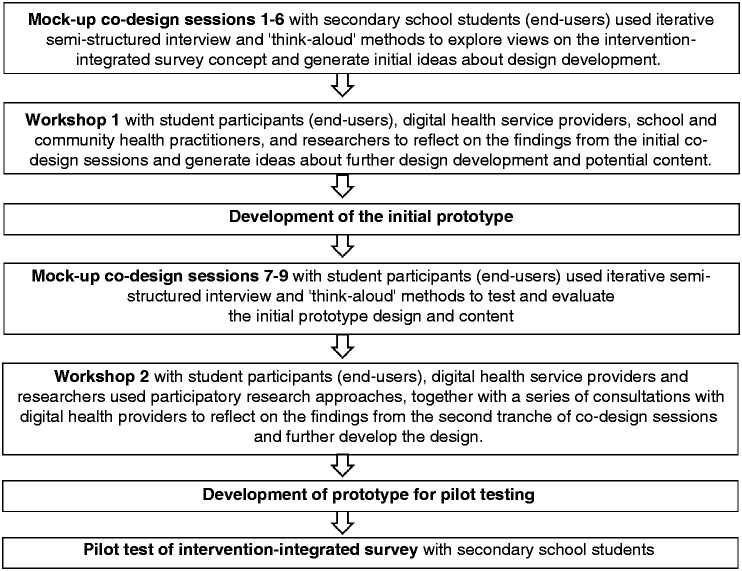

The study, conducted in 2018 and 2019, embodied adolescent-practitioner-researcher engagement through co-design sessions and workshops. The key steps of the study are summarised in Figure 1 and detailed below.

Figure 1.

Summary of study steps.

Co-design sessions

Each session lasted up to one-and-a-half hours and involved a new set of students. Interview prompts are provided in Table 1. First, participants worked through parts of the survey to orientate themselves to the research questions. They were then prompted to comment on (1) their overall thoughts about the survey and (2) their perspectives on the concept of offering survey respondents the opportunity to have interventions sent to their phone or email. Each group of students evaluated the results from the previous session and generated new ideas to develop and improve the design.

Table 1.

Participatory co-design questions.

| Co-design sessions 1–6 |
|---|
| Overall impression |
| (a) What are your overall impressions? (Prompts: What is something you liked or thought was OK? What did you like less/think was not good?) |
| (b) What did you think about the look and feel? (Prompts: What do you like/not like about the graphics and the layout of questions? Any suggestions or thoughts? Are the current graphics OK or do these need to change?) |
| Question items |
| (c) What are your thoughts about the questions you have just gone through? (Prompts: How could this be improved on? What do you like or not like about it?) |
| (d) What are your thoughts about the length of the survey? (Prompts: How could this be improved on? What do you like or not like about it?) |
| Offer of help |
| (e) What do you think about the idea? (Prompts: Do you like/not like this? In what way? What might this achieve or not achieve for young people? Would it be useful for all types of students? How might this be maximised?) |
| (f) Do you think this is a good way to present this information? (Prompts: How could this be improved on? What do you like or not like about it? Is it creepy/embarrassing/should it be more generic?) [This discussion was based on several screenshots that were developed and provided in an iterative manner.] |
| (g) At which point in the survey should this information be provided? (Prompts: Would it be best at the end of the survey or after each set of relevant questions? For example, should the option for digital interventions on smoking be provided at the end of the questions on smoking?) |
| Providing contact information |
| (h) Should the options be sent by text/email/other, or should all options be provided? (Prompts: Will students be comfortable providing email/phone contact details? Are there other/better options (besides text and email) that we could offer?) |
| (i) What are your views on having a follow-up/reminder email sent? (Prompts: Is this appropriate/helpful? How could we best do this?) Do you think this is a good way to present this? (Prompts: How could this be improved on? What do you like or not like about it?) [This discussion was based on several screenshots that were developed and provided in an iterative manner.] |
| (j) At which point in the survey would respondents like to enter their details? (Prompts: At the first acceptance of the help offer or at the end of the survey?) |
| Text or email messaging |
| (k) Do you think this is a good way to present this information as a text message or an email? (Prompts: How could this be improved on? What do you like or not like about it? Is it creepy/embarrassing/should it be more generic?) [This discussion was based on several screenshots that were developed and provided in an iterative manner.] |
| Co-design sessions 7–9 |
| Impressions of welcome section |
| (a) What are your overall impressions of the start section? (Prompts: How does it look visually? What do you think about the wording? Is there anything that could be improved?) |
| Question items |
| (b) What are your thoughts about the questions you have just gone through? (Prompts: What are your overall impressions? How could this be improved on? What do you like or not like about it?) |
| (c) What are your thoughts about the length of the survey? (Prompts: How could this be improved on? What do you like or not like about it?) |
| Offer of help |
| (d) What do you think about the idea? (Prompts: Do you like/not like this? In what way? What might this achieve or not achieve for young people? Would it be useful for all types of students? How might this be maximised?) |
| Providing contact information |
| (e) Should the options be sent by text/email/other, or should all options be provided? (Prompts: Will students be comfortable providing email/phone contact details? Are there other/better options (besides text and email) that we could offer?) |
| Impressions of outro section |
| (f) What are your overall impressions of the end section? (Prompts: How does it look visually? What do you think about the wording? Is there anything that could be improved?) |

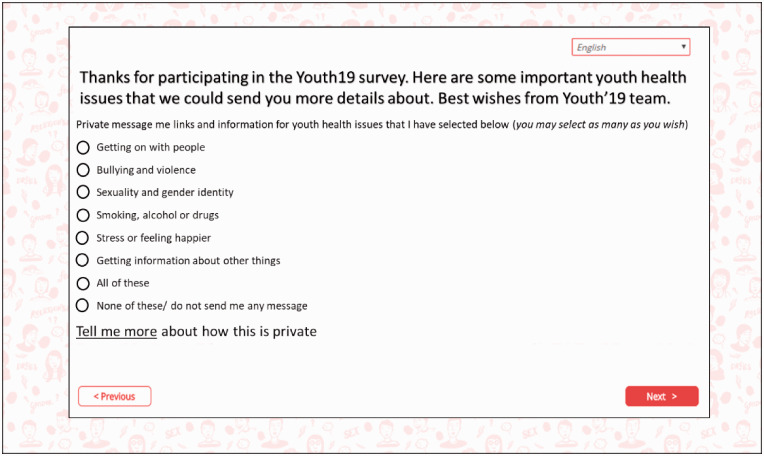

Participants in the initial co-design sessions (Sessions 1–6) were shown screenshots that demonstrated how the information on digital help options could be presented (Figure 2). Their perspectives on the following aspects of the overall concept were then explored: (1) where in the survey the information should be placed (e.g. at the end of the survey or after each set of relevant questions); (2) how the information should be sent to survey respondents; (3) comfort with providing email/phone contact details; (4) other potential contact methods; (5) how the process could be done effectively, including when in the survey respondents would feel most comfortable providing their contact details; and (6) the wording and formatting of messages to be sent to respondents’ phones (via text) or email addresses.

Figure 2.

Draft screenshot of information on health interventions.

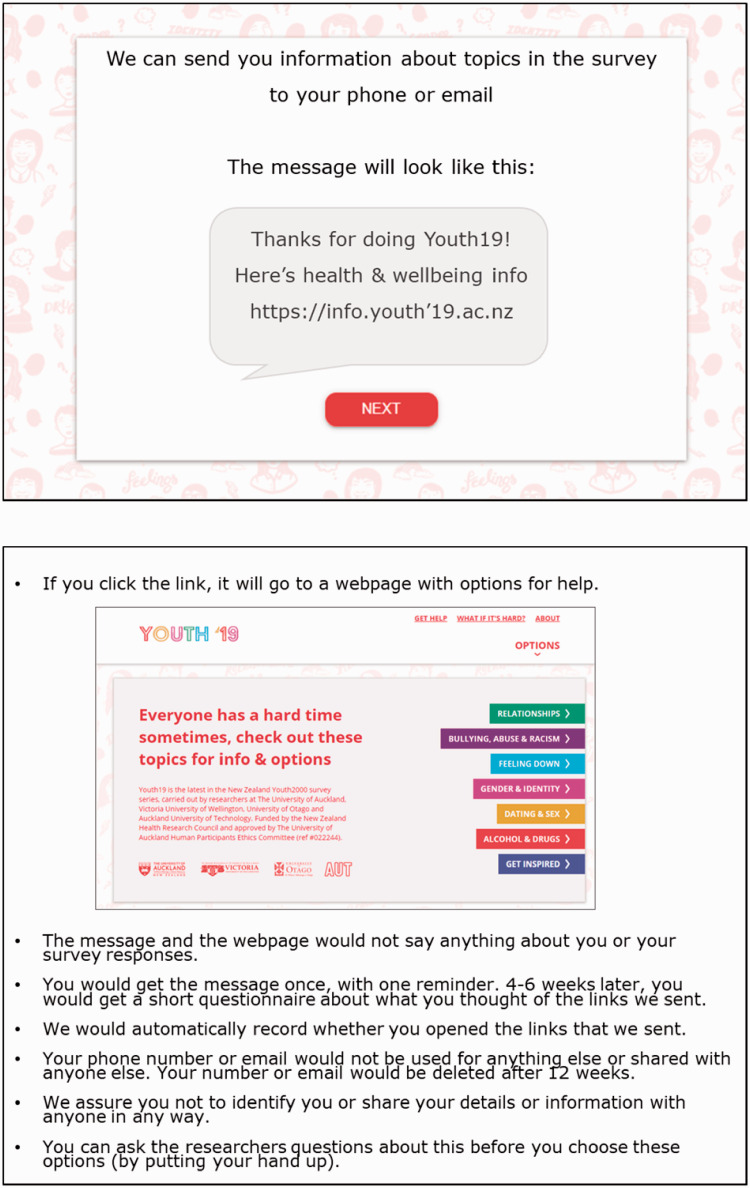

Participants in the second tranche of co-design sessions (Sessions 7–9) were shown screenshots of the proposed content at the end of the survey (Figure 3) and were asked for their perspectives on the overall concept, wording and method of presentation. The remainder of these sessions focused on how the information would be sent to survey respondents (e.g. wording and formatting of messages) and the different types of ‘help’ that could be provided (e.g. specific topics).

Figure 3.

Example screenshots of end of survey information.

Workshops

We used workshops to explore perspectives on the consolidated findings of each tranche of co-design sessions. Workshops focused on selecting help options to be linked to the survey and developing processes to link the survey to the digital interventions in a way that was secure and appealing to young people. The workshops were designed to strengthen collaborative partnerships based on mutual awareness of the challenges and aspirations of the communities of concern. A key objective of these workshops was to support facilitated dialogue between secondary school students, researchers and digital and school health service providers.

Each workshop was conducted over 1–2 hours at a location convenient to participants. We convened semi-structured conversations in small groups, so that young people, digital health service providers and researchers could talk together about their mutual challenges, any constraints, and why these might exist. We were especially mindful of perceived power differentials and facilitated a process that gave voice to secondary school students.

Prototype development

The material and feedback from the co-design sessions and workshops were reviewed to develop the wording and look of the website, identify help options for potential inclusion and draft key topic areas, including the types of information that should be available under each topic. Researchers then searched for sources relating to each topic, keeping in mind the ideas of the student participants and of the school and digital health service providers; these sources would be made accessible to students through a website. Clinical expertise and peer review from members of the research team and external community clinicians were also considered when selecting sources.

Data interpretation

The focus was on participatory analysis and interpretation of the data gathered and the use of these findings to inform the design and delivery of links to digital health information and interventions. In each participatory design session, we used the “think aloud” method to gather information. This method involves research participants speaking aloud while performing specific tasks and provides detailed information about users’ thought processes during task performance.26 The approach is particularly useful for the design of computer systems. The process gave participants opportunities to raise issues that might have been overlooked in a more researcher-directed data collection strategy.

We used the affinity diagramming technique to interpret data generated from the co-design sessions.27 The principles of this analytic technique have previously been adapted to suit prototype evaluations28 and interview data,29 making it well suited to this study. The co-design process involved repeated evaluation of designs. First, researchers read transcribed interviews and created notes based on these data. The notes were then reviewed, organised into themes, further reviewed, and presented at the next co-design session.

Pilot testing the survey with integrated digital interventions

Following the participatory design sessions, the intervention-integrated Youth19 survey was developed and piloted at two schools: one semi-private co-educational school and one boys-only state school. The two schools provided the opportunity to pilot test the survey among ethnically and socioeconomically diverse students similar both to those who participated in the co-design sessions and to the secondary school students likely to participate in the Youth19 survey.

Results

Intervention-integrated survey: Perceptions and process

There was a high level of consistency and enthusiasm for integrating access to help options in a large-scale youth health survey and this was universally viewed as highly beneficial and acceptable. As one student mentioned, “It is good to have an option of receiving help, and it is always good to have the choice of resources out there, as you never know. It’s half and half, people will either reach out to these or their family.” Another noted, “There are a lot of students that are going through a lot, so it’s nice to have these options out there.”

There was strong and consistent agreement for providing all survey respondents with links to all help options, rather than personalized links based on their survey responses. As noted by one student, “Everyone needs a stepping stone, providing a helpful platform that helps them will be useful… and will be beneficial for all age groups.” Students perceived that providing personalised links based on survey responses would not work as, “not a lot of people like being pushed to do something, especially if they have an issue. If someone has an issue and you say, we think you have a problem you should contact us or do this……they will be reluctant to reach out, to prove that they don’t have an issue.” Providing links to all help options was seen as a way to offer respondents the choice to engage with the material, without forcing it upon them. Students thought that allowing user autonomy in this way would be helpful, particularly with this demographic group. Another point of view was that, “if everybody is given it [health interventions], they won’t feel targeted, they are not the only people given it.” Students suggested that all help options should be listed when survey respondents are offered further information, as they may forget what was asked in the survey or believe they could only receive help regarding one matter.

Further, participants thought that it would be better to offer survey respondents the choice of interventions once at the end of the survey, regardless of whether the survey was fully completed, rather than at the end of specific sections. They thought that the latter approach could discourage survey responders from completing the survey or engaging with the information as it would become too repetitive. A few participants thought that it would be helpful to make the offer of further information available throughout the survey via a ‘help’ button in the corner, but the majority thought this was unnecessary as survey responders would most likely assume it was related to technical help for the survey and would not use it.

Providing cell phone numbers or email addresses to receive digital help options was considered acceptable if there was clear assurance that confidentiality and anonymity would be protected. Students highlighted that survey respondents would feel assured if, at the beginning of the survey, it was explained how their confidentiality would be ensured. However, they felt that a written statement would not be sufficient, as they believed the majority of respondents would not read it, and suggested that a verbal explanation would be more effective and would enhance usability. Further, students endorsed the idea of offering the choice of interventions via both text and email, as this would enhance usability for those who may have access to a smartphone or a computer but not both.

Participants preferred a simple format, with all the information sent in one email or text message. They also stated that information should be sent to survey respondents within a week of completing the survey and that one or two follow-up emails would be acceptable, as long as this contact was not too frequent (e.g. one after a fortnight and one after a month). They indicated that these emails should be generic to assure users that their activity was not being monitored. To enable user control, participants recommended a generic subject line such as ‘Youth19 Survey’, which would let survey respondents know the information had arrived and open it in their own time, while ensuring that others around them who happened to see the email would not be aware of its content.

Information on interventions to be integrated

Students and school and community health service providers indicated that offering access to digital health tools and information via a website would be a good way of integrating opt-in options in the digital health survey. Overall, participants found the types of information suggested acceptable and of potential benefit and identified key principles around wording, such as inclusivity, friendliness and neutral wording. Participants also identified key principles to be taken into account, namely: confidentiality, user choice and control, and usability. These key principles extended to the way in which information would be presented to survey responders. Students indicated that a short, sincere message from the Youth19 research team should be included in the email or text to remind survey responders that there are people who care about their health and wellbeing.

Participants, including digital health providers, indicated that the wording of any information presented to survey respondents should be carefully considered to ensure ease and comfort of uptake. Students preferred neutral language. For example, “would you like messages on the following issues?” was preferred over “here are some issues we think you might like more information about,” as the former indicated user choice and control over whether they received the information. Using neutral language was also perceived as important because it felt non-judgmental, which would allow users to receive help for themselves or others without feelings of shame for any behaviours. However, participants also suggested that messages should convey sincerity and friendliness, allowing users to feel that people genuinely cared about their health and wellbeing.

Participants gave feedback on the specific wording of key headings and the types of information that should be made available. For example, students preferred the heading ‘feeling down, stressed and worried’ over ‘feeling anxious or depressed.’ In the relationships section, participants suggested providing access to information on healthy relationships and consent. The heading ‘alcohol, cigarettes and other drugs’ was considered acceptable as it did not locate the problem within the respondent. This heading was preferred over ‘alcohol and other addictions’ due to the negative connotations of the word addictions, which could be seen as judgmental by users.

The health and wellbeing resources that were included in the intervention-integrated Youth19 survey are shown in Table 2.

Table 2.

Integrated information on health and wellbeing interventions.

| Source | Help options provided |
|---|---|
| Relationships | |
| The Lowdown | Website, phone line, text messaging, web chat |
| What’s Up | Website, phone line, web chat |
| Youthline | Website, phone line, text messaging, web chat |
| Harmonised | App |
| E Tū Whānau | Website |
| Bullying, abuse & racism | |
| What’s Up | Website, phone line, web chat |
| Icon | Website |
| Netsafe | Website, phone line |
| Are you okay? | Website, phone line |
| Youthline | Website |
| Human Rights Commission | Website |
| Youth Law | Website |
| Feeling down | |
| The Lowdown | Website, phone line, text messaging, web chat |
| Hikitia te Hā | Web video |
| SPARX | Computerised CBT program |
| Depression.org | Website |
| Aunty Dee | Interactive program |
| What’s Up | Website, phone line, web chat |
| Quest – Te Whitianga | App |
| Gender & identity | |
| Le Va | Website |
| The Lowdown | Website |
| Advice Hub – family planning | Website |
| Rainbow Youth | Website |
| Insideout | Website |
| Outline | Website, helpline |
| Dating & sex | |
| What’s Up | Website, phone line, web chat |
| Family Planning Hub | Website |
| Alcohol & drugs | |
| Quitline | Website, helpline, text messaging |
| New Zealand Drug Foundation | Website |
| Alcohol Drug Youth Helpline | Website, phone line, text messaging |
| Get inspired | |
| Inspiring Stories | Website |
| Good 2 Great | Website |
| Volunteering NZ | Website |
| Action Station | Website |

Discussion

In this innovative co-design process, we identified universal support from participating adolescents, digital health providers, and community stakeholders for integrating access to health interventions into a large-scale youth health survey. In fact, adolescents considered this helpful and were occasionally surprised or disappointed that this was not the norm. Participants provided clear and consistent feedback for guiding this process, including: all students should be offered all help options, rather than specific options based on personalized/normative feedback; assurance of confidentiality; usability; participant choice and control; and neutral, yet sincere, language. The tone of messaging, such as inclusivity, friendliness and being non-judgmental, was considered important to ensure ease and comfort of uptake. Identified interventions ranged from emotional health to internet safety, and the types of information available in the selected digital resources were viewed as acceptable and beneficial.

Service-user and service-provider collaboration and partnership was central to this research, through a consultative process embedded throughout the study. The participatory design methodology we used ensured that secondary school students, the end-users, were involved from the beginning of the study. The workshops provided further opportunities for the end-users to engage with and discuss ways to optimise the delivery of the intervention-integrated survey in ways that would work for them. Previous studies24,25,30,31 that engaged with young people in developing digital interventions have also found that co-design processes highlight the value of user-centred design, allowing meaningful engagement and a focus on user need. For example, Nakarada-Kordic et al. (2017)31 conducted co-design sessions to develop an online resource for young people experiencing psychosis and found that participants discussed matters that were not expected by clinicians. This information allowed for the development of a resource that was more effective for its users. The current research highlighted the importance of wording, which influences how easy and comfortable users feel about receiving information, and emphasised user control. A study conducted by Buus et al. (2019)32 used similar methods to gain user perspectives on developing an app for people in suicidal crisis and found that participants perceived clinical language to be unhelpful to users.

Our study provides useful insights for digital health service providers about important factors, such as assuring confidentiality and user choice, that must be considered when providing the option to seek help from a digital health survey. Confidentiality concerns are commonly raised by adolescents in relation to digital health interventions and need to be managed to facilitate participation.33–35 This study also emphasizes that researchers looking to integrate digital health interventions into surveys should meaningfully engage with service users in co-design and workshop settings to ensure that what is provided to them will be the most beneficial.

This paper has focused on the methods used to collaborate with young people and stakeholders about the acceptability of integrating access to help options into a large-scale adolescent health survey. The results of the actual uptake of the interventions and their ‘real life’ usage will provide greater clarity on whether adolescents will actually engage with these help options when given the opportunity. Future research could also consider how to engage with adolescents once the interventions have been delivered to ensure they continue to be acceptable and beneficial over time.

Limitations

First, because each group of students only attended one co-design session, it was time consuming to brief each new group on the objectives of the project. Second, because each group had differing views, the design choices of one group sometimes contrasted with those of other groups. However, we decided that this was the best approach, as it would allow us to build on the knowledge and experiences of a variety of students from diverse backgrounds. As mentioned previously, conflicts in opinion are regarded as resources in the co-design approach. Wadley et al. (2013)36 used a similar method when developing an online therapy for youth mental health, although they conducted separate co-design workshops for distinct user groups: patients and clinicians. We also acknowledge that the views of the limited number of adolescents involved in the study may not reflect those of all adolescents and that participants’ expressed preferences may change over time.

A further limitation is that we engaged with students who had not previously used the digital help options tested and who may not need to use them in future. Previous studies using similar methods have highlighted the need to obtain feedback from young people who have used the finalised intervention.19,37 Hetrick et al. (2018)37 used a similar method when developing an app to facilitate self-monitoring and management of mood symptoms, and argued that, because co-design is an iterative process, it would be beneficial to continue co-design sessions as the intervention developed. Given that young people are a heterogeneous group with varied needs and preferences, the adolescents involved in our co-design sessions may not be representative of those who need access to the health interventions. This limitation has been noted previously by others using a similar approach.19

Conclusion

In this innovative co-design study, adolescents and stakeholders were strongly supportive of the concept of integrating access to help options into a large-scale youth health survey and provided clear direction for its implementation.

Acknowledgements

None.

Contributorship

RPJ and TF researched literature, conceived the study and developed the protocol. RPJ was involved in gaining ethical approval. All authors were involved in participant recruitment, data collection and data analysis. RPJ and LD wrote the first draft of the manuscript. All authors reviewed and edited the manuscript and approved the final version of the manuscript.

Declaration of conflicting interests

The author(s) declared no potential conflicts of interest with respect to the research, authorship, and/or publication of this article.

Ethical approval

The study was approved by the University of Auckland Human Participant Ethics Committee (reference number 021823).

Funding

The author(s) disclosed receipt of the following financial support for the research, authorship, and/or publication of this article: This work was funded by the Health Research Council of New Zealand (grant number 18/473).

Guarantor

RPJ.

Peer review

This manuscript was reviewed by reviewers who have chosen to remain anonymous.

ORCID iD

Roshini Peiris-John https://orcid.org/0000-0001-7812-2268

References

1. Ezendam NPM, Brug J, Oenema A. Evaluation of the web-based computer-tailored FATaintPHAT intervention to promote energy balance among adolescents: results from a school cluster randomized trial. Arch Pediatr Adolesc Med 2012; 166: 248–255.

2. de Josselin de Jong S, Candel M, Segaar D, et al. Efficacy of a web-based computer-tailored smoking prevention intervention for Dutch adolescents: randomized controlled trial. J Med Internet Res 2014; 16: e82.

3. Civljak M, Stead LF, Hartmann-Boyce J, et al. Internet-based interventions for smoking cessation. Cochrane Database Syst Rev 2013; 10: CD007078.

4. Evers KE, Paiva AL, Johnson JL, et al. Results of a transtheoretical model-based alcohol, tobacco and other drug intervention in middle schools. Addict Behav 2012; 37: 1009–1018.

5. Merry SN, Stasiak K, Shepherd M, et al. The effectiveness of SPARX, a computerised self help intervention for adolescents seeking help for depression: randomised controlled non-inferiority trial. Brit Med J 2012; 344: e2598.

6. Fleming T, Dixon R, Frampton C, et al. A pragmatic randomized controlled trial of computerized CBT (SPARX) for symptoms of depression among adolescents excluded from mainstream education. Behav Cogn Psychother 2012; 40: 529–541.

7. Fleming T, Lucassen M, Stasiak K, et al. The impact and utility of computerised therapy for educationally alienated teenagers: the views of adolescents who participated in an alternative education-based trial. Clin Psychol 2016; 20: 94–102.

8. Perry Y, Werner-Seidler A, Calear A, et al. Preventing depression in final year secondary students: school-based randomized controlled trial. J Med Internet Res 2017; 19: e369.

9. Bannink R, Broeren S, Joosten-van Zwanenburg E, et al. Effectiveness of a web-based tailored intervention (E-health4Uth) and consultation to promote adolescents' health: randomized controlled trial. J Med Internet Res 2014; 16: e143.

10. De Bourdeaudhuij I, Maes L, De Henauw S, et al. HELENA Study Group. Evaluation of a computer-tailored physical activity intervention in adolescents in six European countries: the Activ-O-Meter in the HELENA intervention study. J Adolesc Health 2010; 46: 458–466.

11. Maes L, Cook TL, Ottovaere C, et al. Pilot evaluation of the HELENA (healthy lifestyle in Europe by nutrition in adolescence) Food-O-Meter, a computer-tailored nutrition advice for adolescents: a study in six European cities. Public Health Nutr 2011; 14: 1292–1302.

12. Bannink R, Broeren S, Joosten-van Zwanenburg E, et al. Use and appreciation of a web-based, tailored intervention (E-health4Uth) combined with counseling to promote adolescents' health in preventive youth health care: survey and log-file analysis. JMIR Res Protoc 2014; 3: e3.

13. Balter O, Fondell E, Balter K. Feedback in web-based questionnaires as incentive to increase compliance in studies on lifestyle factors. Public Health Nutr 2012; 15: 982–988.

14. Martin RJ. The feasibility of providing gambling-related self-help information to college students who screen for disordered gambling via an online health survey: an exploratory study. JGI 2013; 12: 1–8.

15. Clark T, Fleming T, Bullen P, et al. Health and well-being of secondary school students in New Zealand: trends between 2001, 2007 and 2012. J Paediatr Child Health 2013; 49: 925–934.

16. Visser FS, Stappers PJ, Van der Lugt R, et al. Contextmapping: experiences from practice. CoDesign 2005; 1: 119–149.

17. Gregory J. Scandinavian approaches to participatory design. Int J Eng Educ 2003; 19: 62–74.

18. Wakil N, Dalsgaard P. A Scandinavian approach to designing with children in a developing country – exploring the applicability of participatory methods. Lect Notes Comput Sci 2013; 8117: 754–761.

19. Yardley L, Williams S, Bradbury K, et al. Integrating user perspectives into the development of a web-based weight management intervention. Clin Obes 2012; 2: 132–141.

20. Anthierens S, Tonkin-Crine S, Douglas E, et al. GRACE INTRO study team. General practitioners' views on the acceptability and applicability of a web-based intervention to reduce antibiotic prescribing for acute cough in multiple European countries: a qualitative study prior to a randomised trial. BMC Fam Pract 2012; 13: 101.

21. Nyman SR, Yardley L. Usability and acceptability of a website that provides tailored advice on falls prevention activities for older people. Health Informatics J 2009; 15: 27–39.

22. Sanders EB, Stappers PJ. Co-creation and the new landscapes of design. Int J CoCreation Des Arts 2008; 4: 5–18.

23. Hagen P, Collin P, Metcalf A, et al. Participatory design of evidence-based online youth mental health promotion, intervention and treatment. Abbotsford, Victoria: Young and Well Cooperative Research Centre, 2012.

24. Halpern CT, Mitchell EM, Farhat T, et al. Effectiveness of web-based education on Kenyan and Brazilian adolescents’ knowledge about HIV/AIDS, abortion law, and emergency contraception: findings from TeenWeb. Soc Sci Med 2008; 67: 628–637.

25. Tortolero SR, Markham CM, Peskin MF, et al. It's your game: keep it real: delaying sexual behavior with an effective middle school program. J Adolesc Health 2010; 46: 169–179.

26. Jaspers MWM, Steen T, van den Bos C, et al. The think aloud method: a guide to user interface design. Int J Med Inform 2004; 73: 781–795.

27. Hartson R, Pyla PS. The UX book: process and guidelines for ensuring a quality user experience. Amsterdam: Morgan Kaufmann, 2012.

28. Lucero A. Using affinity diagrams to evaluate interactive prototypes. In: Abascal J, Barbosa S, Fetter M, et al. (eds) Human-computer interaction – INTERACT 2015. Lect Notes Comput Sci, vol. 9297. Cham: Springer, 2015, pp. 231–248.

29. Kelders SM, Bohlmeijer ET, Van Gemert-Pijnen J. Participants, usage, and use patterns of a web-based intervention for the prevention of depression within a randomized controlled trial. J Med Internet Res 2013; 15: e172.

30. Gonsalves PP, Hodgson ES, Kumar A, et al. Design and development of the “POD adventures” smartphone game: a blended problem-solving intervention for adolescent mental health in India. Front Public Health 2019; 7: 1–12.

31. Nakarada-Kordic I, Hayes N, Reay SD, et al. Co-designing for mental health: creative methods to engage young people experiencing psychosis. Design Health 2017; 1: 229–244.

32. Buus N, Juel A, Haskelberg H, et al. User involvement in developing the MYPLAN mobile phone safety plan app: case study. JMIR Ment Health 2019; 6: e11965.

33. Clark DB, Moss HB. Providing alcohol-related screening and brief interventions to adolescents through health care systems: obstacles and solutions. PLoS Med 2010; 7: e1000214.

34. Wu E, Torous J, Hardaway R, et al. Confidentiality and privacy for smartphone applications in child and adolescent psychiatry: unmet needs and practical solutions. Child Adolesc Psychiatr Clin N Am 2017; 26: 117–124.

35. Moreno MA, Ralston JD, Grossman DC. Adolescent access to online health services: perils and promise. J Adolesc Health 2009; 44: 244–251.

36. Smith SR, Paay J, Calder P, et al. (eds). Participatory design of an online therapy for youth mental health. 25th Australian computer-human interaction conference: augmentation, application, innovation, collaboration. New York, NY: Association for Computing Machinery, 2013.

37. Hetrick SE, Robinson J, Burge E, et al. Youth codesign of a mobile phone app to facilitate self-monitoring and management of mood symptoms in young people with major depression, suicidal ideation, and self-harm. JMIR Ment Health 2018; 5: e9.