Abstract

Background

This study assesses acceptability and usability of home-based self-testing for severe acute respiratory syndrome coronavirus 2 (SARS-CoV-2) antibodies using lateral flow immunoassays (LFIA).

Methods

We carried out public involvement and pilot testing in 315 volunteers to improve usability. Feedback was obtained through online discussions, questionnaires, observations, and interviews of people who tried the test at home. This informed the design of a nationally representative survey of adults in England using two LFIAs (LFIA1 and LFIA2) which were sent to 10 600 and 3800 participants, respectively, who provided further feedback.

Results

Public involvement and pilot testing showed high levels of acceptability but limitations in the usability of the kits. Most people reported completing the test; however, they identified difficulties with practical aspects of the kit, particularly the lancet and pipette, as well as a need for clearer instructions and more guidance on interpreting results. In the national study, 99.3% (8693/8754) of LFIA1 and 98.4% (2911/2957) of LFIA2 respondents attempted the test, and 97.5% and 97.8% of those who attempted it, respectively, completed it. Most found the instructions easy to understand, but some reported difficulties using the pipette (LFIA1: 17.7%) and applying the blood drop to the cassette (LFIA2: 31.3%). Most respondents obtained a valid result (LFIA1: 91.5%; LFIA2: 94.4%). Overall, concordance between participant- and clinician-interpreted results was substantial for LFIA1 and almost perfect for LFIA2 (kappa: LFIA1 0.72; LFIA2 0.89).

Conclusions

Impactful public involvement is feasible in a rapid response setting. Home self-testing with LFIAs can be used with a high degree of acceptability and usability by adults, making them a good option for use in seroprevalence surveys.

Keywords: SARS-CoV-2, COVID-19, lateral flow immunoassay, usability, home-testing

We found high levels of usability and acceptability of at-home self-testing with lateral flow immunoassays for severe acute respiratory syndrome coronavirus 2 antibodies among adults living in England, UK. The tests provide an attractive solution for conducting large seroprevalence surveys in the community.

Lateral flow immunoassays (LFIA) offer a rapid point-of-care (POC) approach to severe acute respiratory syndrome coronavirus 2 (SARS-CoV-2) antibody testing. While LFIAs may not currently be accurate enough for individual-level clinical decisions [1, 2], they are valuable as a public health tool. On a population level, by conducting seroprevalence surveys through widespread random sampling of the general public, and by adjusting for the sensitivity and specificity characteristics of the LFIA used, it is possible to estimate the levels of past infection with SARS-CoV-2 in the community [3].
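As a minimal sketch of this kind of adjustment, the Rogan-Gladen estimator corrects the observed proportion of positive tests for a test's sensitivity and specificity. It is one standard option, not necessarily the method used in [3], and the function name and input values below are hypothetical placeholders rather than study estimates.

```python
def rogan_gladen(apparent_prevalence: float, sensitivity: float, specificity: float) -> float:
    """Estimate true prevalence from the observed proportion of positive tests.

    Rogan-Gladen: (p_obs + Sp - 1) / (Se + Sp - 1), clipped to the range [0, 1].
    """
    denominator = sensitivity + specificity - 1.0
    if denominator <= 0:
        raise ValueError("sensitivity + specificity must be greater than 1")
    adjusted = (apparent_prevalence + specificity - 1.0) / denominator
    # Sampling error can push the raw estimate below 0 or above 1, so clip it.
    return min(max(adjusted, 0.0), 1.0)


# Hypothetical inputs: 6% test positive, sensitivity 85%, specificity 99%.
estimate = rogan_gladen(0.06, sensitivity=0.85, specificity=0.99)
print(f"{estimate:.4f}")  # 0.0595
```

Specificity matters most when true prevalence is low: with the same hypothetical inputs but a specificity of 0.97, 6% observed positivity would correspond to (0.06 + 0.97 - 1)/(0.85 + 0.97 - 1) = 0.03/0.82, or about 3.7%.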

However, testing hundreds of thousands of people would be impractical if it required a blood sample to be drawn, followed by processing in a laboratory. One solution is to use self-sampling and self-testing in the home with participants reporting results to the researchers. However, there is limited understanding of public acceptability and usability of these LFIAs in the home setting, as most are currently designed as POC tests performed by healthcare professionals.

Self-sampling and self-testing are widely used in healthcare for monitoring, for example, in diabetes management [4], and for diagnostics, for example, for HIV [5, 6]. There are many advantages in terms of uptake, cost, patient activation, and scale [4, 6], but also potential disadvantages in relation to validity, usability, and practicality, which should be explored [6, 7]. Usability research on HIV self-testing has generally found good acceptability, ease of use, and high validity in the interpretation of self-reported test results [7–9]. However, these HIV test kits were designed for self-sampling and self-testing and went through several iterations before their designs were appropriate for home use; the same levels of acceptability and usability therefore cannot be assumed for home-based self-testing for SARS-CoV-2 antibodies using LFIAs. As part of the REal-time Assessment of Community Transmission (REACT) programme [10], we evaluated the acceptability and usability of LFIAs for use in large seroprevalence surveys of SARS-CoV-2 antibody in the community.

METHODS

LFIAs Used

We evaluated 2 LFIAs with different usability characteristics, selected from 5 LFIAs being validated in parallel in our laboratory-based study [11]. Both LFIAs required a blood sample from a finger-prick and produced a self-read test result after 10 or 15 minutes.

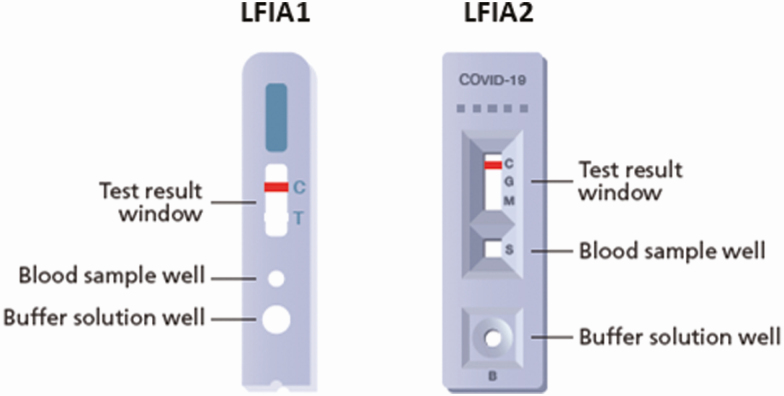

LFIA1 (Guangzhou Wondfo Biotech Co Ltd) was a cassette-based system containing a “control” indicator line and a “test” indicator line (for detection of combined IgM and IgG antibodies). LFIA2 (Fortress Orient Gene Biotech Co Ltd) was a cassette-based system containing a “control” indicator line and separate indicator lines for IgM and IgG (Figure 1).

Figure 1.

Design of point-of-care cassette-based LFIAs used in the study. Abbreviations: C, control; COVID-19, coronavirus disease 2019; G, IgG antibodies; LFIAs, lateral flow immunoassays; M, IgM antibodies; S, sample; T, test.

Study Design and Sampling

In early May 2020 we carried out rapid, iterative public involvement and a pilot usability study, comprising an online forum with 4 discussion groups (n = 37); a study of LFIA1 test use with volunteers (n = 44) and a broader public sample (n = 234); and a nested observation and interview study (n = 25). Further details on the methods, including how we recruited participants from our existing involvement networks, are available online (Supplementary Material S1).

The test kits dispatched in the pilot study included 1 test cassette, 1 button-activated 28G lancet, and a 2 mL plastic pipette, alongside an instruction booklet also containing a weblink to an instructional video. Based on findings from the pilot study, for the larger population-based usability study, the lancet and pipette were replaced with 2 pressure-activated 23G (larger) lancets and a smaller 1 mL plastic pipette, respectively. The design and language in the instructional booklet and video were changed, and an alcohol wipe was also included in the kit.

In late May 2020 we carried out a larger population-based usability study of a representative sample of the adult population (aged 18 years and over) in England. We used addresses from the Postal Address File to draw a random sample of 30 000 households in England to which study invitation letters were sent. We allowed up to 4 adults aged 18 and over in the household to register for the study. Self-testing LFIA kits were then posted to each registered individual. On completion of the test, participants recorded their interpretation of the result as part of an online survey, with the option of uploading a photograph of the test result. Reminder letters were sent to participants who had not completed the online survey or uploaded a photograph within 10 days of test kits being dispatched.

Study Outcomes

Metrics to evaluate usability and acceptability were based on the HIV self-testing literature [5, 6, 8] and were measured as the percentage of participants responding to specific closed questions in the online survey. The questionnaire used is available as an online supplement (Supplementary Material S2). The main outcome was usability of the LFIA kits. This was defined as a participant’s ability to complete the antibody test, and how easy or difficult it was to understand the instructions and complete each step in the process.

Acceptability was measured in terms of people consenting to and using the provided self-test, and the proportion who reported they would be willing to repeat a self-administered finger-prick antibody test in the future.

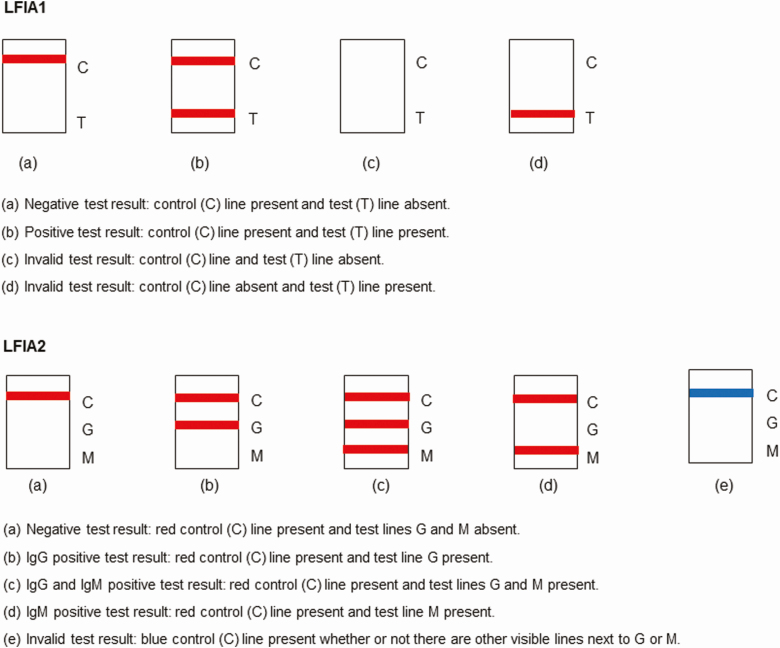

For LFIA1, there were 3 possible test outcomes (negative, positive, invalid), while LFIA2 had 5 possible test outcomes (negative, IgG positive, IgM positive, IgG and IgM positive, invalid) (Figure 2). To assess participants' ability to correctly interpret their test result, a clinician, blinded to the participant's interpretation, reviewed a sample of the uploaded test photographs: all those reported as positive or unable to read, and a random sample of 200 participant-reported negative or invalid tests. The number of negative tests selected for review was chosen based on what was feasible in the time available to the clinician.

Figure 2.

Possible antibody test outcomes (appearance on test result window). Abbreviation: LFIAs, lateral flow immunoassays.
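The photograph-selection rule described in the Study Outcomes paragraph above can be sketched as follows. The function name, the seed, and the pool sizes are hypothetical. The cap of 200 photographs in each of the negative and invalid categories is an assumption suggested by the LFIA1 counts in Table 5. The code only illustrates the idea of reviewing some categories in full, sampling others at random, and withholding participant results from the reader.

```python
import random
from collections import defaultdict

# Every photo in these participant-reported categories is reviewed.
REVIEW_ALL = {"positive", "unable to read"}
# These categories are randomly sampled (assumption: a cap of 200 per category).
SAMPLED = {"negative", "invalid"}


def select_for_review(photos, n_per_sampled_category=200, seed=2020):
    """Pick the photo IDs a blinded clinician would read.

    `photos` is a list of (photo_id, participant_result) tuples. Only IDs are
    returned, in shuffled order, so the reader cannot see the participant's result.
    """
    by_result = defaultdict(list)
    for photo_id, result in photos:
        by_result[result].append(photo_id)

    rng = random.Random(seed)  # fixed seed makes the draw reproducible
    selected = []
    for result, ids in by_result.items():
        if result in REVIEW_ALL:
            selected.extend(ids)
        elif result in SAMPLED:
            k = min(n_per_sampled_category, len(ids))
            selected.extend(rng.sample(ids, k))
    rng.shuffle(selected)  # hide category grouping from the reading order
    return selected


# Hypothetical pool sizes for the negative and invalid categories.
photos = (
    [(f"neg{i}", "negative") for i in range(500)]
    + [(f"inv{i}", "invalid") for i in range(300)]
    + [(f"pos{i}", "positive") for i in range(145)]
    + [(f"utr{i}", "unable to read") for i in range(66)]
)
print(len(select_for_review(photos)))  # 611
```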

Data Analysis

Analyses were conducted in Stata (version 15.0, StataCorp).

Data obtained from the questionnaires on acceptability and usability were summarized by counts and descriptive statistics, and comparisons were made between LFIA1 and LFIA2 using Pearson’s chi-squared test.
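To illustrate the between-kit comparisons, here is a minimal Pearson chi-squared test for a 2×2 table written with the Python standard library only. The function name is ours, the actual analyses were run in Stata, and the sketch omits any continuity correction. For 1 degree of freedom the upper-tail P value equals erfc(√(χ²/2)), so no statistics package is needed.

```python
import math


def pearson_chi2_2x2(table):
    """Pearson chi-squared test (no continuity correction) for a 2x2 table.

    `table` is [[a, b], [c, d]]: rows are survey answers, columns are LFIA1/LFIA2.
    Returns (statistic, p_value) using 1 degree of freedom.
    """
    if len(table) != 2 or any(len(row) != 2 for row in table):
        raise ValueError("expected a 2x2 table")
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    statistic = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            statistic += (observed - expected) ** 2 / expected
    # Chi-squared with 1 df is a squared standard normal: P(X > s) = erfc(sqrt(s / 2)).
    p_value = math.erfc(math.sqrt(statistic / 2))
    return statistic, p_value


# "Did you attempt the antibody test?" (Table 2): rows Yes/No, columns LFIA1/LFIA2.
attempted = [[8693, 2911], [61, 46]]
statistic, p_value = pearson_chi2_2x2(attempted)
print(f"chi-squared = {statistic:.1f}, P = {p_value:.1e}")
```

With these Table 2 counts the statistic is about 18 and P is well below .001, in line with the P < .001 reported there. Tables with more than 2 rows or columns need a general chi-squared distribution function, for example `scipy.stats.chi2_contingency`.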

Multivariable logistic regression was used to identify sociodemographic factors independently associated with obtaining a valid result among participants who conducted the test. Variables that appeared to be associated (P < .05) in the unadjusted analyses were considered in the adjusted analyses. Adjusted odds ratios (aOR) and 95% confidence intervals (CI) were estimated, and associations with P < .05 in the adjusted analyses were considered statistically significant.
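The adjusted odds ratios come from logistic regression in Stata. As a simpler, hedged illustration, the sketch below computes only a crude (unadjusted) odds ratio with a Woolf log-scale 95% CI; the function name is ours. Using the valid and invalid counts in Table 3, the crude odds ratio of an invalid result for LFIA2 versus LFIA1 is close to the adjusted estimate reported in the Results (aOR 0.64; 95% CI .53–.77), but it does not adjust for sociodemographic differences between the two groups.

```python
import math


def odds_ratio_woolf(exposed_cases, exposed_noncases, unexposed_cases, unexposed_noncases, z=1.959964):
    """Crude odds ratio with a Woolf (log-based) confidence interval (default 95%)."""
    cells = (exposed_cases, exposed_noncases, unexposed_cases, unexposed_noncases)
    if min(cells) <= 0:
        raise ValueError("the Woolf interval needs all four cells > 0")
    odds_ratio = (exposed_cases * unexposed_noncases) / (exposed_noncases * unexposed_cases)
    # Standard error of log(OR) is the square root of the summed reciprocal cell counts.
    se_log_or = math.sqrt(sum(1 / c for c in cells))
    lower = math.exp(math.log(odds_ratio) - z * se_log_or)
    upper = math.exp(math.log(odds_ratio) + z * se_log_or)
    return odds_ratio, lower, upper


# Table 3 counts: LFIA2 (137 invalid, 2688 valid) vs LFIA1 (626 invalid, 7757 valid).
odds_ratio, lower, upper = odds_ratio_woolf(137, 2688, 626, 7757)
print(f"crude OR {odds_ratio:.2f} (95% CI {lower:.2f}-{upper:.2f})")  # crude OR 0.63 (95% CI 0.52-0.76)
```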

Agreement between participant-interpreted and clinician-interpreted results for test outcomes (negative, IgG positive, invalid, unable to read) was assessed using the Fleiss kappa statistic. Kappa values were interpreted as follows: <0, poor agreement; 0.00–0.20, slight; 0.21–0.40, fair; 0.41–0.60, moderate; 0.61–0.80, substantial; and >0.80, almost perfect agreement [12].
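The agreement calculation can be sketched from a participant-by-clinician confusion matrix. The Methods name Fleiss' kappa; for two raters, this sketch swaps in the closely related Cohen's kappa, which is simpler to write down. Fleiss' version pools the two raters' marginal totals and can differ slightly in the second decimal. The function names are ours; the counts are those in Table 5, and the bands follow the interpretation scale above.

```python
def cohens_kappa(matrix):
    """Two-rater (Cohen's) kappa from a square confusion matrix.

    Rows = participant reading, columns = clinician reading, same category order.
    """
    k = len(matrix)
    n = sum(sum(row) for row in matrix)
    observed = sum(matrix[i][i] for i in range(k)) / n
    row_totals = [sum(row) for row in matrix]
    col_totals = [sum(matrix[i][j] for i in range(k)) for j in range(k)]
    # Chance agreement from the product of each rater's marginal proportions.
    expected = sum(r * c for r, c in zip(row_totals, col_totals)) / n**2
    return (observed - expected) / (1 - expected)


def landis_koch(kappa):
    """Label a kappa value using the interpretation bands listed in the Methods [12]."""
    if kappa < 0:
        return "poor"
    for upper, label in ((0.20, "slight"), (0.40, "fair"), (0.60, "moderate"), (0.80, "substantial")):
        if kappa <= upper:
            return label
    return "almost perfect"


# Table 5, category order: negative, positive, invalid, unable to read.
lfia1 = [[200, 0, 0, 0], [50, 91, 3, 1], [3, 0, 197, 0], [10, 0, 52, 4]]
lfia2 = [[228, 2, 0, 5], [3, 154, 2, 5], [2, 0, 120, 0], [15, 1, 3, 1]]
for name, matrix in (("LFIA1", lfia1), ("LFIA2", lfia2)):
    kappa = cohens_kappa(matrix)
    print(name, round(kappa, 2), landis_koch(kappa))
```

Applied to the Table 5 counts, this gives values that round to the reported 0.72 (substantial) and 0.89 (almost perfect).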

The REACT laboratory-based study determined the sensitivity and specificity of LFIAs for detecting IgG antibodies and therefore counted only IgG-positive results as positive (ie, "MG" or "G" but not "M") [11]. We therefore used the same operational definition of positivity for LFIA2, and the instructions told participants to treat IgM-only results as negative.
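The LFIA2 outcomes and the operational definition of positivity can be made explicit with a small mapping from visible lines to an outcome. This is a hypothetical sketch: the class and function names are ours, and it assumes the usual lateral flow convention that a missing control line makes the test invalid regardless of any test lines.

```python
from dataclasses import dataclass


@dataclass
class Lfia2Reading:
    """Lines visible in the LFIA2 result window (True = line present)."""
    control: bool
    igg: bool
    igm: bool


def lfia2_outcome(reading: Lfia2Reading) -> str:
    """Map a line pattern to one of the 5 LFIA2 outcomes in Figure 2."""
    if not reading.control:
        return "invalid"  # assumption: no control line means invalid
    if reading.igg and reading.igm:
        return "IgG and IgM positive"
    if reading.igg:
        return "IgG positive"
    if reading.igm:
        return "IgM positive"
    return "negative"


def study_positive(outcome: str) -> bool:
    """Operational definition: only IgG-positive results ("G" or "MG") count as positive."""
    return outcome in {"IgG positive", "IgG and IgM positive"}


reading = Lfia2Reading(control=True, igg=False, igm=True)
outcome = lfia2_outcome(reading)
print(outcome, study_positive(outcome))  # IgM positive False
```

Keeping the 5-way outcome separate from the binary study definition mirrors the approach described above: participants record what they see, and the IgG-only rule is applied afterwards.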

Ethics

The study obtained research ethics approval from the South Central-Berkshire B Research Ethics Committee (IRAS ID: 283787).

RESULTS

Overall, 315 members of the public contributed feedback during the involvement and pilot. This led to changes in the design and language used in the instructional video and booklet, the type and number of lancets, and the size of pipette in the LFIA kits (further details available online, Supplementary Material S1).

For the national study, 25 000 household invitation letters were sent, and 17 411 individuals from 8508 households registered for the study. Because the number of kits available was limited, 14 400 participants were selected at random from those who registered: 10 600 LFIA1 kits were distributed, with 8754 individual user surveys completed (82.6% response rate), and 3800 LFIA2 kits were distributed, with 2957 individual user surveys completed (77.8% response rate). Baseline characteristics of study participants are shown in Table 1; most commonly, 2 adults participated per household. The median age of participants was 51.0 years (range 18 to 95) for both LFIA1 and LFIA2. There were some differences between LFIA1 and LFIA2 participants by ethnicity, region, and number of participants per household.

Table 1.

Characteristics of 11 711 Study Participants

| Characteristic | LFIA1 N = 8754 n (%)a | LFIA2 N = 2957 n (%)a | P valueb |
|---|---|---|---|
| Age, y | | | |
| Median (range) | 51.0 (18–95) | 51.0 (18–95) | |
| 18–24 | 782 (9.1) | 255 (8.8) | |
| 25–34 | 1185 (13.7) | 363 (12.5) | |
| 35–44 | 1372 (15.9) | 473 (16.3) | |
| 45–54 | 1745 (20.2) | 626 (21.5) | |
| 55–64 | 1799 (20.8) | 579 (19.9) | |
| 65+ | 1752 (20.3) | 610 (21.0) | .29 |
| Gender | | | |
| Male | 4175 (47.7) | 1363 (46.1) | |
| Female | 4563 (52.1) | 1587 (53.7) | |
| Other | 6 (0.07) | 3 (0.10) | |
| Prefer not to say | 10 (0.11) | 3 (0.10) | |
| Do not know | 0 (0.0) | 1 (0.03) | .24 |
| Ethnicity | | | |
| White | 8330 (95.2) | 2764 (93.5) | |
| Asian / Asian British | 225 (2.6) | 104 (3.5) | |
| Black / African / Caribbean / Black British | 54 (0.62) | 32 (1.1) | |
| Mixed | 98 (1.1) | 38 (1.3) | |
| Other ethnic group | 39 (0.45) | 16 (0.54) | |
| Do not know | 8 (0.09) | 3 (0.10) | .01 |
| Education level | | | |
| Degree level or above | 3175 (36.4) | 1114 (37.8) | |
| Other Higher Education below degree level | 926 (10.6) | 274 (9.3) | |
| A levels, NVQ level 3 and equivalents | 1577 (18.1) | 546 (18.6) | |
| GCSE/O level grade A*-C or 4–9, NVQ level 2 and equivalents | 1399 (16.0) | 468 (15.9) | |
| GCSE or O level below grade C, NVQ level 1 and equivalents | 494 (5.7) | 158 (5.4) | |
| Another type of qualification | 439 (5.0) | 147 (5.0) | |
| No qualification | 712 (8.2) | 237 (8.1) | .47 |
| Region of residence | | | |
| East Midlands | 851 (9.7) | 273 (9.2) | |
| East of England | 1211 (13.8) | 358 (12.1) | |
| London | 805 (9.2) | 373 (12.6) | |
| North East | 301 (3.4) | 170 (5.8) | |
| North West | 1085 (12.4) | 365 (12.3) | |
| South East | 1521 (17.4) | 623 (21.1) | |
| South West | 1104 (12.6) | 326 (11.0) | |
| West Midlands | 988 (11.3) | 229 (7.7) | |
| Yorkshire & Humber | 888 (10.1) | 240 (8.1) | <.001 |
| Children living in household | | | |
| No | 5975 (68.3) | 2019 (68.3) | |
| One or more children 5–11 years old | 1398 (16.0) | 465 (15.7) | |
| One or more children 12–15 years old | 885 (10.1) | 301 (10.2) | |
| One or more children 16–17 years old | 557 (6.4) | 176 (6.0) | .80 |
| Number of participants from same household | | | |
| 1 | 1432 (16.4) | 568 (19.2) | |
| 2 | 5048 (57.7) | 1552 (52.5) | |
| 3 | 1458 (16.7) | 525 (17.8) | |
| 4 | 816 (9.3) | 312 (10.6) | <.001 |

Abbreviations: A*, GCSE or O-Level grade A star; C, GCSE or O-Level grade C; GCSE, General Certificate of Secondary Education; LFIA, lateral flow immunoassays; NVQ, National Vocational Qualification; O, Ordinary.

aPercentages are calculated from nonmissing values.

bP-value calculated using Pearson’s chi-squared test.

Acceptability

Acceptability of self-testing was high (Table 2). Almost all participants attempted the antibody test (LFIA1: 99.3%; LFIA2: 98.4%). Reasons for not attempting the test are shown in the table footnote. As in the pilot, most respondents were willing to perform another finger-prick antibody test in the future (LFIA1: 98.3%; LFIA2: 97.0%). Only a minority preferred to do the antibody test in a clinical or community setting rather than at home. As in the pilot study, respondents with children showed a high willingness to perform the antibody test on them, and this proportion increased with the age of the children (Table 2).

Table 2.

Usability and Acceptability for Home-based Antibody Self-testing

| Characteristic | LFIA1 N = 8754 n (%)a | LFIA2 N = 2957 n (%)a | P valueb |
|---|---|---|---|
| Did you attempt the antibody test? c | | | |
| Yes | 8693 (99.3) | 2911 (98.4) | |
| No | 61 (0.70) | 46 (1.6) | <.001 |
| Did you successfully manage to complete the antibody test? | | | |
| Yes | 8475 (97.5) | 2848 (97.8) | |
| No, I only partially completed it | 160 (1.8) | 37 (1.3) | |
| No, I did not complete any of it | 16 (0.18) | 9 (0.31) | |
| Do not know | 42 (0.48) | 17 (0.58) | .10 |
| Did you have anyone helping you to administer the test? | | | |
| Yes | 2270 (26.1) | 853 (29.3) | |
| No | 6423 (73.9) | 2058 (70.7) | .001 |
| Why did you not successfully complete the antibody test? | | | |
| I did not understand the instructions | 1 (0.57) | 1 (2.2) | |
| It took too long | 1 (0.57) | 0 (0.0) | |
| I did not manage to use the lancet | 11 (6.3) | 2 (4.4) | |
| I did not manage to get a blood spot / drop | 6 (3.4) | 8 (17.4) | |
| I did not manage to use the pipette | 6 (3.4) | N/A | |
| I did not manage to get enough blood on the test | 18 (10.2) | 14 (30.4) | |
| I did not manage to get the buffer on the test | 64 (36.4) | 1 (2.2) | |
| I damaged the test | 78 (44.3) | 7 (15.2) | |
| It was too fiddly for me to manage | 8 (4.6) | 5 (10.9) | |
| I did not have some of the equipment I needed | 2 (1.1) | 0 (0.0) | |
| I could not read the result | 8 (4.6) | 1 (2.2) | |
| Preference for where to perform the finger-prick antibody test (home or clinical setting) | | | |
| Home | 5960 (68.1) | | |
| Clinical setting | 168 (1.9) | | |
| Do not mind which one | 2596 (29.7) | | |
| Neither | 19 (0.22) | | |
| Do not know | 11 (0.13) | | |
| Preference for where to perform the finger-prick antibody test (home or community testing centre) | | | |
| Home | 6424 (73.4) | | |
| Community testing centre | 139 (1.6) | | |
| Do not mind which one | 2152 (24.6) | | |
| Neither | 19 (0.22) | | |
| Do not know | 20 (0.23) | | |
| Preference for where to perform the finger-prick antibody test (home, clinical setting, or community testing centre) | | | |
| Home | | 2146 (72.6) | |
| Clinical setting | | 64 (2.2) | |
| Community testing centre | | 712 (24.1) | |
| Do not mind which one | | 16 (0.54) | |
| None of these | | 3 (0.10) | |
| Do not know | | 16 (0.54) | |
| Willingness to try another finger-prick antibody test | | | |
| Yes | 8603 (98.3) | 2867 (97.0) | |
| No | 70 (0.80) | 60 (2.0) | |
| Do not know | 81 (0.93) | 30 (1.0) | <.001 |
| Willingness to perform finger-prick antibody test on a child (Yes) d | | | |
| Children 5–11 years old (n = 1398) / (n = 465) | 1187 (84.9) | 371 (79.8) | .009 |
| Children 12–15 years old (n = 885) / (n = 301) | 841 (95.0) | 279 (92.7) | .05 |
| Children 16–17 years old (n = 557) / (n = 176) | 541 (97.1) | 167 (94.9) | .11 |

Abbreviation: LFIA, lateral flow immunoassays.

aPercentages are calculated from nonmissing values.

bP-value calculated using Pearson’s chi-squared test.

cReasons for not attempting the test included not wanting to prick their finger with a lancet, not understanding the instructions, not wanting to see their own blood, damaging the test, not wanting to know the result, and not trusting the test.

dParticipants who had children aged 5–11, 12–15, and 16–17 years were asked whether they would carry out the test on children of that age living in their households. Denominator is the number of participants who reported having children of that age living in their household.

Usability

In the pilot study, most people (86.5%, 225/260) who attempted the test managed to complete it. However, important usability issues were identified, including difficulty using the lancet to obtain a blood drop and the pipette to transfer the blood to the sample well. Problems with the lancet led some participants to use alternative objects to draw blood, including pins and sewing needles, while others opened the lancet casing to access the blade. Some people reported minor problems putting buffer into the buffer well. These findings led to the inclusion of 2 lancets and changes to the instructions for the national study.

In the national study, almost all participants who attempted the antibody test reported completing it (97.5% for LFIA1 and 97.8% for LFIA2) (Table 2). Reasons for not completing the test are shown in Table 2. Of LFIA1 participants who reported damaging the test, the majority reported either accidentally removing the entire lid from the buffer bottle and spilling the solution over the test cassette, or putting the blood and buffer in the wrong well. For LFIA2, few participants damaged the test, and all of them reported putting the blood and buffer in the wrong well.

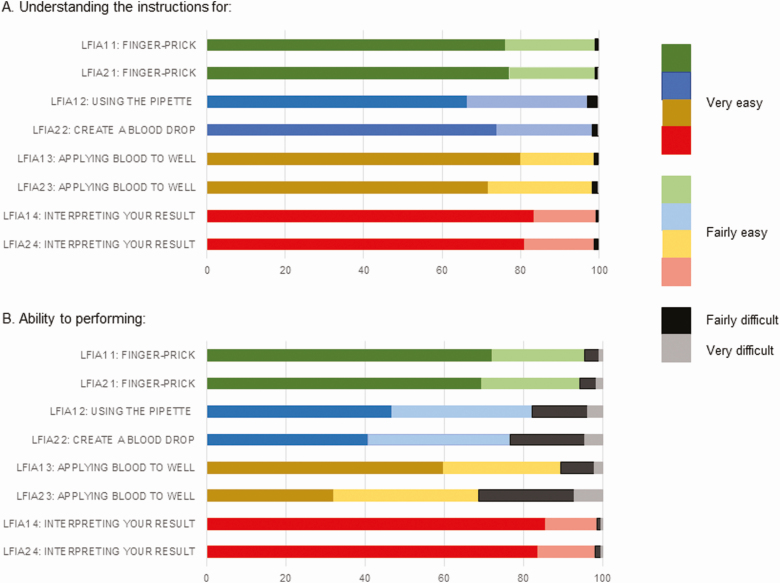

About 1 in 4 participants asked someone to help them to administer the test. Most found the instructions easy to understand (Figure 3), but as in the pilot, participants reported some difficulties in performing the test. For LFIA1, difficulties with using the pipette were reported by 17.7% (1512/8521) of participants. In addition, 10.6% (908/8556) had difficulties applying the blood to the sample well (Figure 3). Therefore, for LFIA2 the instructions were changed to omit the use of the pipette and instead directly transfer blood from the finger-prick site to the sample well. However, participants still found creating a blood drop from the finger-prick site (23.2%; 664/2862) and then applying the blood to the well (31.3%; 894/2858) difficult. LFIA2 was deployed after LFIA1 because there was a delay in arrival of LFIA2 from the supplier. This differential timing in dispatch of the kits had the unexpected benefit of allowing us to make iterative changes to the instructions.

Figure 3.

Usability of home-based self-test antibody kits. Abbreviation: LFIAs, lateral flow immunoassays.

Overall, 7.4% of LFIA1 and 4.8% of LFIA2 participants reported an invalid result (Table 3). There was some variation in the proportion of participant-reported invalid results using LFIA1 by age and gender. The higher the number of participants registered for the study in the same household, the lower the odds of the participant reporting an invalid result. No sociodemographic factors were associated with a participant-reported invalid result using LFIA2 (Table 4).

Table 3.

Reported Home-based Antibody Self-test Results

| Test result | LFIA1 N = 8475 n (%)a | LFIA2 N = 2848 n (%)a | P valueb |
|---|---|---|---|
| Valid | 7757 (91.5) | 2688 (94.4) | |
| Invalid | 626 (7.4) | 137 (4.8) | |
| Unable to read | 92 (1.1) | 23 (0.81) | <.001 |
| Confidence in having interpreted the result correctly | | | |
| Very confident | 7806 (93.1) | 2593 (91.8) | |
| Fairly confident | 522 (6.2) | 204 (7.2) | |
| Not very confident | 39 (0.47) | 22 (0.78) | |
| Not at all confident | 16 (0.19) | 6 (0.21) | .07 |
| Photo of result uploaded | | | |
| Yes | 7272 (85.8) | 2416 (84.8) | |
| No | 1203 (14.2) | 432 (15.2) | .20 |

Abbreviation: LFIA, lateral flow immunoassays.

aPercentages are calculated from nonmissing values.

bP-value calculated using Pearson’s chi-squared test.

Table 4.

Factors Associated with an Invalida Test Result Among Study Participants

| Characteristic | LFIA1 (N = 8383) No. invalid (%) | LFIA1 cOR (95% CI) | LFIA1 P valueb | LFIA1 aORc (95% CI) | LFIA1 P valued | LFIA2 (N = 2825) No. invalid (%) | LFIA2 cOR (95% CI) | LFIA2 P valueb |
|---|---|---|---|---|---|---|---|---|
| Age, y | | | | | | | | |
| 18–34 | 137 (7.2) | 1.3 (.94–1.7) | | 1.4 (1.0–1.8) | | 39 (6.5) | 2.1 (1.2–3.6) | |
| 35–44 | 78 (5.9) | Reference | | Reference | | 25 (5.5) | 1.7 (.93–3.2) | |
| 45–54 | 116 (6.9) | 1.2 (.88–1.6) | | 1.3 (.93–1.7) | | 22 (3.6) | 1.1 (.59–2.1) | |
| 55–64 | 143 (8.4) | 1.5 (1.1–2.0) | | 1.5 (1.2–2.1) | | 18 (3.3) | Reference | |
| 65+ | 141 (8.5) | 1.5 (1.1–2.0) | .03 | 1.5 (1.1–2.0) | .03 | 31 (5.5) | 1.7 (.94–3.1) | .06 |
| Gender | | | | | | | | |
| Male | 267 (6.7) | Reference | | Reference | | 57 (4.4) | Reference | |
| Female | 358 (8.2) | 1.3 (1.1–1.5) | .007 | 1.2 (1.0–1.5) | .02 | 79 (5.2) | 1.2 (.84–1.7) | .32 |
| Ethnicity | | | | | | | | |
| White | 600 (7.5) | Reference | | | | 133 (5.0) | Reference | |
| Black, Asian and minority ethnic | 25 (6.3) | 0.82 (.54–1.2) | .35 | | | 4 (2.2) | 0.42 (.15–1.2) | .09 |
| Education level | | | | | | | | |
| Degree level or above | 245 (8.1) | Reference | | | | 57 (5.3) | Reference | |
| A levels, other Higher Education below degree level and equivalents | 167 (7.0) | 0.85 (.69–1.0) | | | | 37 (4.7) | 0.88 (.58–1.4) | |
| GCSE/O level grade and equivalents | 124 (6.8) | 0.83 (.67–1.0) | | | | 27 (4.5) | 0.84 (.53–1.3) | |
| No qualification | 54 (7.9) | 0.98 (.72–1.3) | .27 | | | 11 (5.1) | 0.96 (.50–1.9) | .88 |
| Number of participants from same household | | | | | | | | |
| 1 | 122 (9.1) | Reference | | Reference | | 34 (6.4) | Reference | |
| 2 | 382 (7.9) | 0.85 (.69–1.1) | | 0.87 (.70–1.1) | | 64 (4.3) | 0.65 (.43–1.0) | |
| 3 | 77 (5.5) | 0.59 (.44–.79) | | 0.61 (.45–.82) | | 22 (4.3) | 0.66 (.38–1.1) | |
| 4 | 45 (5.6) | 0.60 (.42–.85) | <.001 | 0.64 (.45–.92) | .003 | 17 (5.6) | 0.86 (.47–1.6) | .22 |

Abbreviations: aOR, adjusted odds ratio; cOR, crude odds ratio; GCSE, General Certificate of Secondary Education; LFIA, lateral flow immunoassays ; O, Ordinary.

aAs reported by the participant.

bP-value calculated using Pearson’s chi-squared test.

cAdjusted for age, gender, and number of participants registered for the study in the same household.

dP-value calculated using logistic regression analysis.

After adjusting for sociodemographic differences between LFIA1 and LFIA2 participants, there was no difference between LFIA1 and LFIA2 in the proportion of results that participants were unable to read (1.1% vs 0.81%; aOR 0.76 (95% CI: .48–1.2); P = .24). However, LFIA2 participants reported a lower percentage of invalid test results (7.4% vs 4.8%; aOR 0.64 (95% CI: .53–.77); P < .001).

Agreement Between Participant-interpreted and Clinician-interpreted Result

Table 5 shows concordance between participant- and clinician-interpreted results in the national study. For LFIA1, there was substantial agreement overall (kappa 0.72 (95% CI: .71–.73); P < .001); however, there were important discrepancies. While there was 100.0% agreement for results reported as negative and 98.5% agreement for invalid results, a clinician confirmed only 62.8% of participant-reported positives. Visible reasons for these discrepancies (from the photographs) were insufficient blood volume to cover the bottom of the sample well, or insufficient movement of the blood and buffer solution across the result window. In addition, of the 66 photographs of results that participants reported as "unable to read," the clinician was able to interpret all but 4 (6.1%). These 4 results were unreadable because blood had leaked out of the sample well and obscured the result window. Of participant-reported unable to read results, 78.8% were clinician-interpreted as invalid (for all these tests, both the control and test lines were absent).

Table 5.

Agreement Between Participants and Clinician for the Interpretation of LFIA Test Results

| LFIA1 participant resulta | Clinician negative, n (%) | Clinician positive, n (%) | Clinician invalid, n (%) | Clinician unable to read, n (%) | Total, n (%) |
|---|---|---|---|---|---|
| Negative | 200 (100.0) | 0 (0.0) | 0 (0.0) | 0 (0.0) | 200 (32.7) |
| Positive | 50 (34.5) | 91 (62.8) | 3 (2.1) | 1 (0.69) | 145 (23.7) |
| Invalid | 3 (1.5) | 0 (0.0) | 197 (98.5) | 0 (0.0) | 200 (32.7) |
| Unable to read | 10 (15.2) | 0 (0.0) | 52 (78.8) | 4 (6.1) | 66 (10.8) |
| Total | 263 (100.0) | 91 (100.0) | 252 (100.0) | 5 (100.0) | 611 (100.0) |

| LFIA2 participant resultb | Clinician negative, n (%) | Clinician positive (IgG or IgM/IgG), n (%) | Clinician invalid, n (%) | Clinician unable to read, n (%) | Total, n (%) |
|---|---|---|---|---|---|
| Negative | 228 (97.0) | 2 (0.85) | 0 (0.0) | 5 (2.1) | 235 (43.4) |
| Positive: IgG or IgM/IgG | 3 (1.8) | 154 (93.9) | 2 (1.2) | 5 (3.1) | 164 (30.3) |
| Invalid | 2 (1.6) | 0 (0.0) | 120 (98.4) | 0 (0.0) | 122 (22.6) |
| Unable to read | 15 (75.0) | 1 (5.0) | 3 (15.0) | 1 (5.0) | 20 (3.7) |
| Total | 248 (100.0) | 157 (100.0) | 125 (100.0) | 11 (100.0) | 541 (100.0) |

Abbreviation: LFIA, lateral flow immunoassays.

aKappa statistic 0.72 (95% CI 0.71–0.73; P < 0.001).

bKappa statistic 0.89 (95% CI 0.88–0.92; P < 0.001).

For LFIA2, there was almost perfect agreement between participant- and clinician-interpreted results for IgG positivity, as per our operational definition (kappa 0.89 (95% CI: .88–.92); P < .001) (Table 5). There was 93.9% agreement for positive tests, 97.0% for negative, and 98.4% for invalid tests; 1.5% (3/200) of negative test photographs were too blurred for the clinician to read, and 1.6% (2/122) of invalid test photographs were clinician-interpreted as negative. Visible reasons for invalid test result readings were similar to those for LFIA1. Of participant-reported unable to read results, 75.0% were interpreted as negative by the clinician (Table 5). The clinician could not interpret 10 photographs that participants had reported as readable; reasons included blurred photographs and shadowing obscuring the indicator lines.

DISCUSSION

Overall, we found that self-testing with the two LFIA kit designs used in this study was highly acceptable among adults living in England. High acceptability of in-home self-testing is in keeping with self-sampling and self-testing studies in diabetes management [4], and HIV diagnostics [5, 6].

The majority of participants who attempted the test successfully completed it despite some continued difficulties with using the pipette (LFIA1), creating a drop of blood from the finger-prick site (LFIA2), and applying the blood to the sample well (LFIA1 and LFIA2). Based on these findings, we amended the instructions further to give users the choice of either using a pipette or directly transferring a drop of blood from the finger-prick site to the sample well, based on personal preference.

Participant-reported invalid test results were infrequent (LFIA1: 7.4%; LFIA2: 4.8%; P < .001). The lower proportion of invalid tests for LFIA2 could reflect the better-designed buffer bottle (the buffer bottle was a major problem for LFIA1), better performance characteristics of LFIA2 (eg, easier movement of the blood and buffer solution across the result window), or an improved ability to get sufficient blood into the sample well using direct blood transfer rather than a pipette. Participants' ability to obtain a valid test result using LFIA1 varied marginally by age and gender and increased with the number of participants registered for the study in the same household. The latter observation is not surprising, as watching another household member perform the test is likely to improve the performance of others in the same household. No sociodemographic differences in obtaining a valid result were found for LFIA2.

Of note, about 1 in 4 participants reported that they had help administering the test, irrespective of the LFIA used. This could put individuals living alone at a disadvantage in terms of usability. However, comparing those who had help with those who did not, we found no difference in the ability to complete the test (97.7% vs 98.2%; P = .08) or in reported invalid results (6.1% vs 7.0%; P = .06).

Overall, there was good agreement between self-reported results and those reported by a clinician. Therefore, our findings broadly support self-reporting of home-based test results using LFIAs. However, the public and individual health impact of misreading a negative test result as positive is a concern, as an individual could falsely conclude that they have antibodies to SARS-CoV-2 and may change their behavior as a result. To mitigate this, and given the scientific uncertainty over the clinical relevance of SARS-CoV-2 antibodies, we made it clear in the instructions that the LFIAs used were for research purposes only and not 100% accurate at the individual level, and that participants should therefore continue to follow current Government advice, irrespective of their test result.

To our knowledge, no previous study has examined the acceptability and usability of LFIAs for home-based self-testing for SARS-CoV-2 antibodies at such scale in the general population, and our findings suggest that this approach offers an attractive solution for conducting large seroprevalence surveys. The study has some limitations. Study participants may not be representative of the general adult population of England. However, we had data on the England population profile (2011 census data [13]), as well as the study registration profile and survey completion profile of the study participants, which gave us an indication of response bias. Our sample was broadly similar to the England population profile. In addition, the usability study was conducted in parallel with our laboratory-based study of performance characteristics of LFIAs. As such, we did not know at the time the accuracy of the LFIAs chosen for the usability study, or whether either would perform well enough in the laboratory to be considered for the large national seroprevalence study planned as part of the REACT program [10]. However, given that the majority of commercially available LFIAs have a cassette-based design and result readout similar to LFIA1 or LFIA2, we were confident that our results would be generalizable and applicable to whichever LFIA was finally selected. Findings from our laboratory-based study, including the performance characteristics of LFIA1 and LFIA2, are forthcoming [11].

CONCLUSIONS

Overall, our study has demonstrated that home-based self-testing with LFIAs is both acceptable and feasible for use in large community-based seroprevalence surveys of SARS-CoV-2 antibodies. Although this study identified a few usability issues, these have since been addressed. LFIA2 (Fortress Orient Gene) has been selected for a large national seroprevalence study as part of the REACT program. This decision was based on criteria including the usability and acceptability determined in this study, a relatively low proportion of invalid results, and high concordance with clinician-read results, together with test performance in our laboratory evaluation of clinical samples [11].
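One of the selection criteria above is the proportion of invalid results for each kit. A common way to attach uncertainty to such a proportion is the Wilson score interval; the sketch below assumes that method as an illustrative choice, not necessarily the one used in this study. The function names `wilson_interval` and `invalid_summary` are hypothetical, and the inputs are assumed to be the number of invalid results and the number of returned tests for a given LFIA.

```python
import math


def wilson_interval(count: int, n: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for the binomial proportion count / n.

    z = 1.96 gives an approximate 95% interval.
    """
    if n <= 0 or not 0 <= count <= n:
        raise ValueError("need n > 0 and 0 <= count <= n")
    p = count / n
    z2 = z * z
    denom = 1 + z2 / n
    # The centre is shifted towards 0.5 relative to the raw proportion p.
    centre = (p + z2 / (2 * n)) / denom
    half_width = (z / denom) * math.sqrt(p * (1 - p) / n + z2 / (4 * n * n))
    # The Wilson interval lies within [0, 1]; clamping only guards
    # against floating-point round-off at the boundaries.
    return max(0.0, centre - half_width), min(1.0, centre + half_width)


def invalid_summary(invalid: int, returned: int) -> str:
    """Format the share of invalid results with its Wilson 95% interval."""
    low, high = wilson_interval(invalid, returned)
    return (
        f"{100 * invalid / returned:.1f}% "
        f"(95% CI {100 * low:.1f}-{100 * high:.1f}%)"
    )


if __name__ == "__main__":
    # Hypothetical counts for one kit: 50 invalid out of 1000 returned tests.
    print(invalid_summary(50, 1000))
```

Unlike the simple normal-approximation (Wald) interval, the Wilson interval stays sensible when the proportion is close to 0, which is the usual situation for invalid-result rates.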

Supplementary Data

Supplementary materials are available at Clinical Infectious Diseases online. Consisting of data provided by the authors to benefit the reader, the posted materials are not copyedited and are the sole responsibility of the authors, so questions or comments should be addressed to the corresponding author.

Notes

Author contributions. CA and HW conceptualized and designed the study and drafted the manuscript. CA, HW, PP, EC, VP, RR and MP undertook data collection and data analysis. ALJ and LN designed and produced the instruction booklet and video. SR and DA provided statistical advice. GC, WB, PE and AD provided study oversight. AD and PE obtained funding. SR, JC, SG, GF, MP, SS, HA, BF, DA, GC, WB, AD and PE critically reviewed the manuscript. All authors read and approved the final version of the manuscript. HW is the guarantor for this paper. The corresponding author attests that all listed authors meet authorship criteria, that no others meeting the criteria have been omitted, and that all authors had full access to all the data in the study and had final responsibility for the decision to submit for publication.

Acknowledgments. The authors thank key collaborators on this work at Ipsos MORI (Stephen Finlay, John Kennedy, Duncan Peskett, Sam Clemens and Kelly Beaver) and the Office for Life Sciences and Department of Health and Social Care for logistic support. The authors also thank Jane Bruton and Kelly Gleason of the Patient Experience Research Centre, and the public contributors to this study: Joan Bedlington, Sandra Jayacodi, Amara Lalji, Maisie McKenzie, Pip Russell and Marney Williams. All data underlying the results are available as part of the article, and no additional source data are required. The instruction booklet and video instructions are pending copyright application by the designer and will be available via the REACT program website (https://www.imperial.ac.uk/medicine/research-and-impact/groups/react-study/) once this process is complete.

Financial support. The work was supported by the Department of Health and Social Care in England. GC is supported by a National Institute for Health Research (NIHR) Professorship. PE is Director of the MRC Centre for Environment and Health (MR/L01341X/1, MR/S019669/1). PE acknowledges support from the NIHR Imperial Biomedical Research Centre, the NIHR Health Protection Research Units in Environmental Exposures and Health and in Chemical and Radiation Threats and Hazards, and the British Heart Foundation Centre for Research Excellence at Imperial College London (RE/18/4/34215). HW is an NIHR Senior Investigator and receives funding from the Imperial NIHR Biomedical Research Centre, the NIHR Applied Research Collaboration North West London and the Wellcome Trust. AD is an NIHR Senior Investigator and receives funding support from the Imperial NIHR Biomedical Research Centre and the NIHR Imperial Patient Safety Translational Research Centre (PSTRC).

Potential conflicts of interest. All authors have submitted the ICMJE Form for Disclosure of Potential Conflicts of Interest. Conflicts that the editors consider relevant to the content of the manuscript have been disclosed.

References

- 1. Adams ER, Ainsworth M, Anand R, et al. Antibody testing for COVID-19: a report from the National COVID Scientific Advisory Panel. medRxiv 2020. doi: 10.1101/2020.04.15.20066407

- 2. Lassaunière R, Frische A, Harboe ZB, et al. Evaluation of nine commercial SARS-CoV-2 immunoassays. medRxiv 2020. doi: 10.1101/2020.04.09.20056325

- 3. Pollán M, Pérez-Gómez B, Pastor-Barriuso R, et al. Prevalence of SARS-CoV-2 in Spain (ENE-COVID): a nationwide, population-based seroepidemiological study. Lancet 2020. doi: 10.1016/S0140-6736(20)31483-5

- 4. Schnell O, Alawi H, Battelino T, et al. Self-monitoring of blood glucose in type 2 diabetes: recent studies. J Diabetes Sci Technol 2013; 7:478–88.

- 5. Tonen-Wolyec S, Batina-Agasa S, Muwonga J, Mboumba Bouassa RS, Kayembe Tshilumba C, Bélec L. Acceptability, feasibility, and individual preferences of blood-based HIV self-testing in a population-based sample of adolescents in Kisangani, Democratic Republic of the Congo. PLoS One 2019; 14:e0218795.

- 6. Figueroa C, Johnson C, Ford N, et al. Reliability of HIV rapid diagnostic tests for self-testing compared with testing by health-care workers: a systematic review and meta-analysis. Lancet HIV 2018; 5:e277–90.

- 7. Erbach M, Freckmann G, Hinzmann R, et al. Interferences and limitations in blood glucose self-testing: an overview of the current knowledge. J Diabetes Sci Technol 2016; 10:1161–8.

- 8. Majam M, Mazzola L, Rhagnath N, et al. Usability assessment of seven HIV self-test devices conducted with lay-users in Johannesburg, South Africa. PLoS One 2020; 15:e0227198.

- 9. Saunders J, Brima N, Orzol M, et al. Prospective observational study to evaluate the performance of the BioSure HIV Self-Test in the hands of lay users. Sex Transm Infect 2018; 94:169–73.

- 10. Riley S, Atchison C, Ashby D, et al. REal-time assessment of community transmission (REACT) of SARS-CoV-2 virus: study protocol. Wellcome Open Res 2020; 5:200. doi: 10.12688/wellcomeopenres.16228.1

- 11. Flower B, Brown J, Simmons B, et al. Clinical and laboratory evaluation of SARS-CoV-2 lateral flow assays for use in a national COVID-19 sero-prevalence survey. Thorax 2020 (forthcoming).

- 12. McHugh ML. Interrater reliability: the kappa statistic. Biochem Med (Zagreb) 2012; 22:276–82.

- 13. Office for National Statistics. UK 2011 census data. Available at: https://www.ons.gov.uk/census/2011census. Accessed 10 June 2020.
