Abstract

The ability to rapidly and accurately recognize complex objects is a crucial function of the human visual system. To recognize an object, we need to bind incoming visual features, such as color and form, together into cohesive neural representations and integrate these with our preexisting knowledge about the world. For some objects, typical color is a central feature for recognition; for example, a banana is typically yellow. Here, we applied multivariate pattern analysis to time-resolved neuroimaging (MEG) data to examine how object-color knowledge affects emerging object representations over time. Our results from 20 participants (11 female) show that the typicality of object-color combinations influences object representations, although not at the initial stages of object and color processing. We find evidence that color decoding peaks later for atypical object-color combinations compared with typical object-color combinations, illustrating the interplay between processing incoming object features and stored object knowledge. Together, these results provide new insights into the integration of incoming visual information with existing conceptual object knowledge.

SIGNIFICANCE STATEMENT To recognize objects, we have to be able to bind object features, such as color and shape, into one coherent representation and compare it with stored object knowledge. The MEG data presented here provide novel insights about the integration of incoming visual information with our knowledge about the world. Using color as a model to understand the interaction between seeing and knowing, we show that there is a unique pattern of brain activity for congruently colored objects (e.g., a yellow banana) relative to incongruently colored objects (e.g., a red banana). This effect of object-color knowledge only occurs after single object features are processed, demonstrating that conceptual knowledge is accessed relatively late in the visual processing hierarchy.

Keywords: color, decoding, MEG, MVPA, object-color knowledge

Introduction

Successful object recognition depends critically on comparing incoming perceptual information with existing internal representations (Albright, 2012; Clarke and Tyler, 2015). A central feature of many objects is color, which can be a highly informative cue in visual object processing (Rosenthal et al., 2018). Although we know a lot about color perception itself, comparatively less is known about how object-color knowledge interacts with color perception and object processing. Here, we measure brain activity with MEG and apply multivariate pattern analyses (MVPA) to test how stored object-color knowledge influences emerging object representations over time.

Color plays a critical role in visual processing by facilitating scene and object recognition (Gegenfurtner and Rieger, 2000; Tanaka et al., 2001), and by giving an indication of whether an object is relevant for behavior (Conway, 2018; Rosenthal et al., 2018). Objects that include color as a strong defining feature have been shown to activate representations of associated colors (Hansen et al., 2006; Olkkonen et al., 2008; Witzel et al., 2011; Bannert and Bartels, 2013; Vandenbroucke et al., 2016; Teichmann et al., 2019), leading to slower recognition when there is conflicting color information (e.g., a red banana) (Tanaka and Presnell, 1999; Nagai and Yokosawa, 2003; for a meta-analysis, see Bramão et al., 2010). Neuroimaging studies on humans and nonhuman primates have shown that there are several color-selective regions along the visual ventral pathway (Zeki and Marini, 1998; Seymour et al., 2010, 2016; Lafer-Sousa and Conway, 2013; Lafer-Sousa et al., 2016). While the more posterior color-selective regions do not show a shape bias, the anterior color-selective regions do (Lafer-Sousa et al., 2016), supporting suggestions that color knowledge is represented in regions associated with higher-level visual processing (Tanaka et al., 2001; Simmons et al., 2007). A candidate region for the integration of stored knowledge and incoming visual information is the anterior temporal lobe (ATL) (Patterson et al., 2007; Chiou et al., 2014; Papinutto et al., 2016). In one study (Coutanche and Thompson-Schill, 2015), for example, brain activation patterns evoked by recalling a known object's color and its shape could be distinguished in a subset of brain areas that have been associated with perceiving those features, namely, V4 and lateral occipital cortex, respectively. In contrast, recalling an object's particular conjunction of color and shape could only be distinguished in the ATL, suggesting that the ATL processes conceptual object representations.

Time-resolved data measured with EEG or MEG can help us understand how conceptual-level processing interacts dynamically with perception. Previous EEG studies have examined the temporal dynamics of object-color knowledge as an index of the integration of incoming visual information and prior knowledge (Proverbio et al., 2004; Lu et al., 2010; Lloyd-Jones et al., 2012). For example, Lloyd-Jones et al. (2012) showed that shape information modulated neural responses at ∼170 ms (component N1), that the combination of shape and color affected the signal at 225 ms (component P2), and that the typicality of object-color pairing modulated components at ∼225 and 350 ms after stimulus onset (P2 and P3). These findings suggest that the initial stages of object recognition may be driven by shape, with interactions with object-color knowledge coming into play at a much later stage, perhaps as late as during response selection.

Using multivariate methods for time-resolved neuroimaging data, we can move beyond averaged measures (i.e., components) to infer what type of information is contained in the neural signal on a trial-to-trial basis. In the present study, we used MVPA to determine the time point at which neural activity evoked by congruently (e.g., yellow banana) and incongruently (e.g., red banana) colored objects differs, which indicates when stored knowledge is integrated with incoming visual information. Furthermore, we examined whether existing knowledge about an object's color influences perceptual processing of surface color and object identity. Overall, using color as a model, our findings elucidate the time course of interactions between incoming visual information and prior knowledge in the brain.

Materials and Methods

Participants

Twenty healthy volunteers (11 female, mean age = 28.9 years, SD = 6.9 years, 1 left-handed) participated in the study. All participants reported accurate color vision and had normal or corrected-to-normal visual acuity. Participants gave informed consent before the experiment started and were financially compensated. The study was approved by the Macquarie University Human Research Ethics Committee.

Stimuli

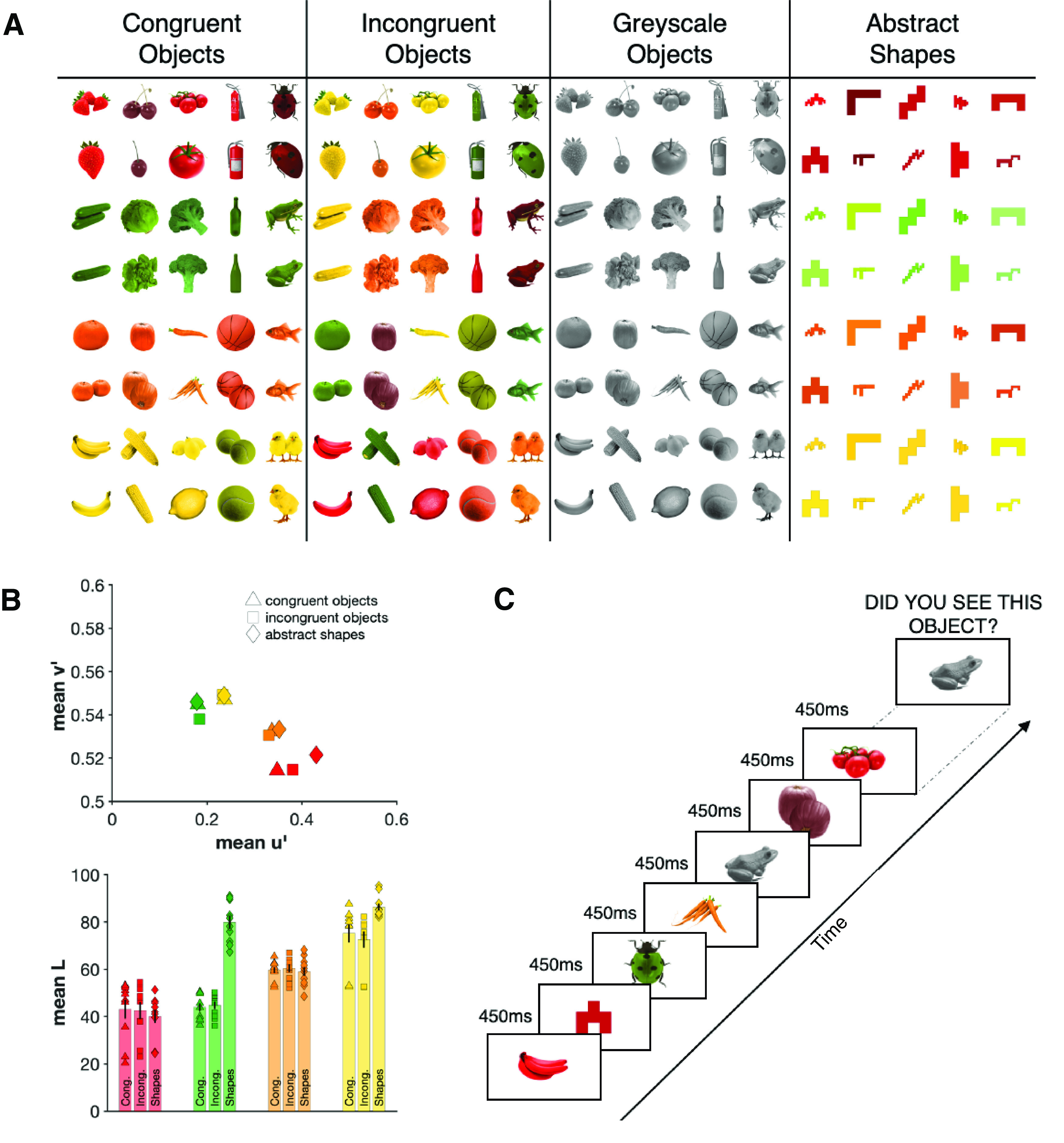

We identified five real-world objects that previous studies have shown to be strongly associated with each of four different colors (red, green, orange, and yellow; see Fig. 1A) (Joseph, 1997; Tanaka and Presnell, 1999; Naor-Raz et al., 2003; Therriault et al., 2009; Lloyd-Jones et al., 2012; Bannert and Bartels, 2013). Each color category had one manmade object (e.g., fire hydrant), one living object (e.g., ladybird), and three fruits or vegetables (e.g., strawberry, tomato, cherry). We sourced two exemplar images for each object class, resulting in 10 images for each color, 40 individual images in total. We then created incongruently colored objects by swapping the colors (e.g., yellow strawberry, red banana). For both congruent and incongruent stimuli, we did not use the native colors from the images themselves, but instead overlaid prespecified hues on desaturated (grayscale) images that were equated for luminance using the SHINE toolbox (Willenbockel et al., 2010). A grayscale image overlaid with its canonically associated color (e.g., yellow hue applied to grayscale banana) resulted in a congruent object; a grayscale image overlaid with a color different from its canonically associated color (e.g., red hue applied to grayscale banana) resulted in an incongruent object. Every congruent object exemplar had a single color-matched incongruent partner. For example, we used a specific shade of red and added it to the grayscale images of the strawberry to make the congruent strawberry and overlaid it onto the lemon to make the incongruent lemon. We then took a specific shade of yellow and overlaid it on the lemons to make the congruent lemon exemplar, and onto the strawberry to make the incongruent strawberry exemplar. Overall, this means that the congruent and incongruent conditions contain identical objects and colors, which is crucial to ensure that our results cannot be explained by features other than color congruency. The only difference between these key conditions is whether the color-object combination is typical (congruent) or atypical (incongruent).

Figure 1.

A, All stimuli used in this experiment. The same objects were used in the congruent, incongruent, and grayscale conditions. There were two exemplars of each object. Colors in the congruent and incongruent condition were matched. The abstract shapes were identical across color categories. B, The mean chromaticity coordinates for the 2° observer under D65 illumination for each color category (top) as well as the mean lightness of all colored stimuli used in this experiment (bottom). The colors were transformed into CIELUV space using the OptProp toolbox (Wagberg, 2020). C, An example sequence of the main task. Participants viewed each object for 450 ms. After each sequence, one object was displayed and participants had to indicate whether they had seen this object in the previous sequence or not.

This procedure resulted in 40 congruent objects (10 of each color) and 40 incongruent objects (10 of each color; Fig. 1A). We added two additional stimulus types to this set: the full set of 40 grayscale images, and a set of 10 different angular abstract shapes, colored in each of the four hues (Fig. 1A). As is clear in Figure 1A, the colors of the abstract shapes appear brighter than the colors of the objects; this is because the latter were made by overlaying hue on grayscale, whereas the shapes were simply colored. As our principal goal was to ensure that the congruent objects appeared to have their typical coloring, we did not match the overall luminance of the colored stimuli. For example, if we equated the red of a cherry with the yellow of a lemon, neither object would look typically colored. Thus, each specific color pair is not equated for luminance; however, we have the same colors across different conditions (Fig. 1B).

All stimuli were presented at a distance of 114 cm. To add visual variability, which reduces the low-level featural overlap between the images, we varied the image size from trial to trial by 2 degrees of visual angle. The range of visual angles was therefore between ∼4.3 and 6.3 degrees.
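The relation between physical image size and visual angle at the 114 cm viewing distance can be made concrete with a short sketch. It uses the standard formula size = 2 · d · tan(θ/2), which at 114 cm gives roughly 2 cm per degree. How sizes were drawn within the reported 4.3 to 6.3 degree range is not stated, so the uniform sampling in `random_trial_size_cm` is a hypothetical assumption for illustration only.

```python
import math
import random

VIEWING_DISTANCE_CM = 114.0  # viewing distance reported in the text


def size_for_angle(angle_deg, distance_cm=VIEWING_DISTANCE_CM):
    """Physical image size (cm) that subtends `angle_deg` at `distance_cm`."""
    return 2.0 * distance_cm * math.tan(math.radians(angle_deg) / 2.0)


def angle_for_size(size_cm, distance_cm=VIEWING_DISTANCE_CM):
    """Visual angle (degrees) subtended by an image of `size_cm`."""
    return math.degrees(2.0 * math.atan(size_cm / (2.0 * distance_cm)))


def random_trial_size_cm(rng, min_deg=4.3, max_deg=6.3):
    """HYPOTHETICAL: sizes drawn uniformly from the reported angular range."""
    return size_for_angle(rng.uniform(min_deg, max_deg))


rng = random.Random(0)
trial_sizes = [random_trial_size_cm(rng) for _ in range(5)]
print(f"1 degree at 114 cm = {size_for_angle(1.0):.2f} cm")
```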

Experimental design and statistical analysis

Experimental tasks

In the main task (Fig. 1C), participants completed eight blocks of 800 stimulus presentations each. Each individual stimulus appeared 40 times over the course of the experiment. Each stimulus was presented centrally for 450 ms with a black fixation dot on top of it. To keep participants attentive, after every 80 stimulus presentations, a target image was presented until a response was given indicating whether this stimulus had appeared in the last 80 stimulus presentations or not (target present on 50% of occasions). The different conditions (congruent, incongruent, grayscale, abstract shape) were randomly intermingled throughout each block, and the target was randomly selected each time. On average, participants responded to the targets with 90% accuracy (SD = 5.4%).

After completing the main blocks, we collected behavioral object-naming data to test for a behavioral congruency effect with our stimuli. On the screen, participants saw each of the objects again (congruent, incongruent, or grayscale) in a random order and were asked to name the objects as quickly as possible. As soon as voice onset was detected, the stimulus disappeared. We marked stimulus-presentation times with a photodiode and recorded voice onset with a microphone. Seventeen participants completed three blocks of this reaction time task and one participant completed two blocks; for 2 participants, we could not record any reaction times. Each block contained all congruent, incongruent, and grayscale objects presented once.

Naming reaction times were defined as the difference between stimulus onset and voice onset. Trials containing naming errors and microphone errors were not analyzed. We calculated the median naming time for each exemplar for each person and then averaged the naming times for each of the congruent, incongruent, and grayscale conditions.
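The two-stage aggregation described above (a median per exemplar, then a mean over exemplars per condition) can be sketched as follows. The trial-record keys (`participant`, `condition`, `exemplar`, `rt_ms`, `valid`) and the toy values are hypothetical; the sketch only illustrates why the median is taken first, so that a single slow trial does not dominate an exemplar's naming time. Exemplars without any valid trial simply never enter the average.

```python
from collections import defaultdict
from statistics import mean, median


def condition_naming_times(trials):
    """Per-participant mean (over exemplars) of per-exemplar median naming times.

    `trials` is a list of dicts with the hypothetical keys
    'participant', 'condition', 'exemplar', 'rt_ms' and 'valid'.
    """
    per_exemplar = defaultdict(list)
    for t in trials:
        if not t["valid"]:  # drop naming errors and microphone errors
            continue
        key = (t["participant"], t["condition"], t["exemplar"])
        per_exemplar[key].append(t["rt_ms"])

    per_condition = defaultdict(list)
    for (participant, condition, _exemplar), rts in per_exemplar.items():
        per_condition[(participant, condition)].append(median(rts))

    # exemplars with no valid trial never enter per_exemplar, so they are skipped
    return {key: mean(medians) for key, medians in per_condition.items()}


def trial(participant, condition, exemplar, rt_ms, valid=True):
    return dict(participant=participant, condition=condition,
                exemplar=exemplar, rt_ms=rt_ms, valid=valid)


trials = [
    trial(1, "congruent", "banana_1", 690),
    trial(1, "congruent", "banana_1", 700),
    trial(1, "congruent", "banana_1", 720),
    trial(1, "congruent", "lemon_1", 680),
    trial(1, "incongruent", "banana_1", 760),
    trial(1, "incongruent", "banana_1", 900, valid=False),
]
naming_times = condition_naming_times(trials)
```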

MEG data acquisition

While participants completed the main task of the experiment, neuromagnetic recordings were conducted with a whole-head MEG system (KIT) containing 160 axial gradiometers. We recorded the MEG signal at a sampling rate of 1000 Hz, with an online low-pass filter of 200 Hz and a high-pass filter of 0.03 Hz. All stimuli were projected on a translucent screen mounted on the ceiling of the magnetically shielded room. Stimuli were presented using MATLAB with Psychtoolbox extension (Brainard, 1997; Pelli, 1997; Kleiner et al., 2007). Parallel port triggers and the signal of a photodiode were used to mark the beginning and end of each trial. A Bimanual 4-Button Fiber Optic Response Pad (Current Designs) was used to record the responses.

Before entering the magnetically shielded room for MEG recordings, an elastic cap with five marker coils was placed on the participant's head. We recorded head shape with a Polhemus Fastrak digitiser pen and used the marker coils to measure the head position within the magnetically shielded room at the start of the experiment, halfway through and at the end.

MEG data analysis: preprocessing

FieldTrip (Oostenveld et al., 2011) was used to preprocess the MEG data. The data were downsampled to 200 Hz and then epoched from −100 to 500 ms relative to stimulus onset. We did not conduct any further preprocessing steps (filtering, channel selection, trial averaging, etc.) to keep the data in its rawest possible form.

MEG data analysis: decoding analyses

For all our decoding analyses, patterns of brain activity were extracted across all 160 MEG sensors at every time point, for each participant separately. We used a regularized linear discriminant analysis classifier, which was trained to distinguish the conditions of interest across the 160-dimensional space. We then used independent test data to assess whether the classifier could predict the condition above chance in the new data. We conducted training and testing at every time point and tested for significance using random effects Monte Carlo cluster statistics with Threshold Free Cluster Enhancement (TFCE, Smith and Nichols, 2009), corrected for multiple comparisons using the max statistic across time points (Maris and Oostenveld, 2007). Our aim was not to achieve the highest possible decoding accuracy, but rather to test whether the classifier could predict the conditions above chance at any of the time points (i.e., “classification for interpretation”) (Hebart and Baker, 2018). Therefore, we followed a minimal preprocessing pipeline and performed our analyses on a single-trial basis. Classification accuracy above chance indicates that the MEG data contain information that is different for the categories. We used the CoSMoMVPA toolbox (Oosterhof et al., 2016) to conduct all our analyses.
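To illustrate the core of time-resolved decoding, the sketch below fits a separate linear discriminant at every time point on sensor patterns and scores it on held-out trials. It is written in plain NumPy and is not CoSMoMVPA's implementation: the shrinkage towards a scaled identity matrix (`shrinkage=0.01`) is an assumed form of regularization, equal class priors are assumed, and the simulated data (a class difference on 5 of 20 sensors at samples 10 to 19) are purely illustrative.

```python
import numpy as np


def fit_lda(X, y, shrinkage=0.01):
    """Shrinkage-regularized LDA; X: (n_trials, n_features)."""
    classes = np.unique(y)
    means = np.stack([X[y == c].mean(axis=0) for c in classes])
    centered = X - means[np.searchsorted(classes, y)]
    cov = centered.T @ centered / (len(X) - len(classes))
    # shrink towards a scaled identity so the covariance stays invertible
    n_feat = cov.shape[0]
    cov = (1 - shrinkage) * cov + shrinkage * np.trace(cov) / n_feat * np.eye(n_feat)
    W = np.linalg.solve(cov, means.T)       # (n_features, n_classes)
    b = -0.5 * np.sum(means.T * W, axis=0)  # equal class priors assumed
    return classes, W, b


def predict_lda(model, X):
    classes, W, b = model
    return classes[np.argmax(X @ W + b, axis=1)]


def decode_over_time(X_train, y_train, X_test, y_test):
    """X_*: (n_trials, n_sensors, n_times); returns accuracy per time point."""
    acc = np.empty(X_train.shape[2])
    for t in range(X_train.shape[2]):
        model = fit_lda(X_train[:, :, t], y_train)
        acc[t] = np.mean(predict_lda(model, X_test[:, :, t]) == y_test)
    return acc


rng = np.random.default_rng(0)
n_trials, n_sensors, n_times = 200, 20, 30
y = np.repeat([0, 1], n_trials // 2)
X = rng.normal(size=(n_trials, n_sensors, n_times))
X[y == 1, :5, 10:20] += 1.5  # simulated class difference at samples 10-19 only
train, test = np.arange(0, n_trials, 2), np.arange(1, n_trials, 2)
accuracy = decode_over_time(X[train], y[train], X[test], y[test])
```

Training and testing at each time point independently is what turns a single classifier into a decoding time course; above-chance accuracy then marks when the pattern carries class information.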

We ran several decoding analyses, which can be divided into three broad themes. First, we tested when we can differentiate between trials where congruently and incongruently colored objects were presented. This gives us an indication of the time course of the integration of visual object representations and stored conceptual knowledge. Second, we examined single-feature processing focusing on color processing and how the typicality of object-color combinations influences color processing over time. Third, we looked at another single feature, shape, and tested whether object-color combinations influence shape processing over time.

For the congruency analysis (see Fig. 2A), we tested whether activation patterns evoked by congruently colored objects (e.g., red strawberry) differ from activation patterns evoked by incongruently colored objects (e.g., yellow strawberry). Any differential response that depends on whether a color is typical or atypical for an object (a congruency effect) requires the perceived shape and color to be bound and compared with a conceptual object representation activated from memory. We trained the classifier on all congruent and incongruent trials, except for trials corresponding to one pair of matched exemplars (e.g., all instances of congruent and incongruent strawberries and congruent and incongruent bananas). We then tested the classifier using only the left-out exemplar pairs. We repeated this process until each matched exemplar pair had been left out (i.e., used as test data) once. Leaving an exemplar pair out ensures that there are identical objects and colors for both classes (congruent and incongruent) in both the training and the testing set, and that the stimuli of the test set have different shape characteristics than any of the training objects. As such, the only distinguishing feature between the conditions is the conjunction of shape and color features, which defines congruency. This allows us to test directly whether (and at which time point) stored object representations interact with incoming object-color information.
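The leave-one-matched-exemplar-out scheme amounts to grouped cross-validation in which the group is a matched exemplar pair. The sketch below generates the folds from a per-trial group label. The pair names and the toy trial table are hypothetical; the checks confirm the two properties the text relies on: no test object appears in training, and both congruent and incongruent trials are present in every test fold.

```python
import numpy as np


def leave_one_pair_out(pair_ids):
    """Yield (train_idx, test_idx), holding out every trial of one matched exemplar pair."""
    pair_ids = np.asarray(pair_ids)
    for pair in np.unique(pair_ids):
        held_out = pair_ids == pair
        yield np.flatnonzero(~held_out), np.flatnonzero(held_out)


# Toy trial table (hypothetical labels): each matched pair contributes a congruent
# and an incongruent version of two objects that share the same two hues.
pair_ids = np.array(["strawberry1-lemon1"] * 4 + ["cherry1-banana1"] * 4)
objects = np.array(["strawberry1", "strawberry1", "lemon1", "lemon1",
                    "cherry1", "cherry1", "banana1", "banana1"])
congruency = np.array(["congruent", "incongruent"] * 4)
folds = list(leave_one_pair_out(pair_ids))
```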

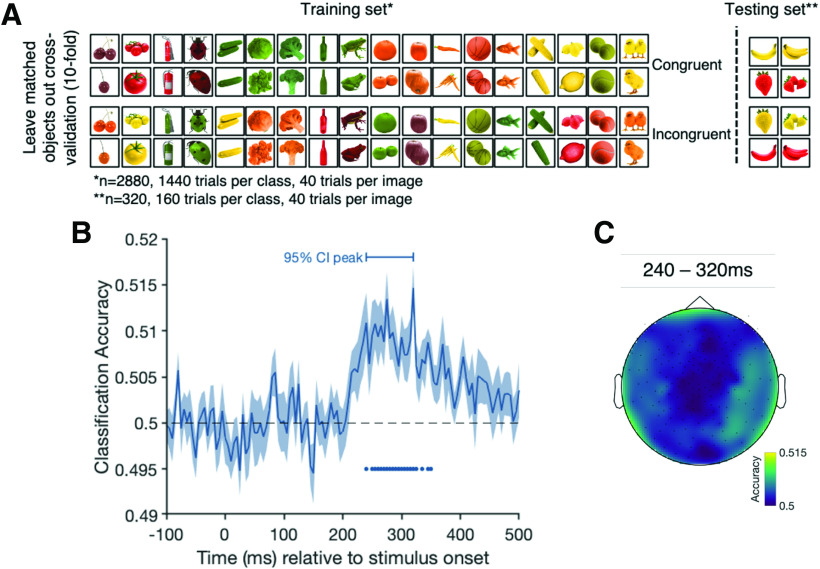

Figure 2.

Cross-validation and results of the congruency analysis contrasting trials from the congruent and incongruent conditions. A, The leave-one-matched-exemplar-out cross-validation approach for a single fold for the congruency decoding analysis. The classifier was trained on the trials shown on the left and tested on the trials on the right, ensuring that the classifier is not tested on the exemplars on which it trained. This limits the influence of features other than congruency on classifier performance. B, The classification accuracy over time. Shading represents the SE across participants. Black dashed line indicates chance level (50%, congruent vs incongruent). Filled dots indicate significant time points, corrected for multiple comparisons. Horizontal bar above the curve represents the 95% CI of the peak. C, An exploratory sensor searchlight analysis in which we ran the same analysis across small clusters of sensors. Colors represent the decoding accuracy for each sensor cluster averaged over the 95% CI of the peak time points.

Next, we focused on the time course of color processing. First, we examined the time course of color processing independent of congruency (see Fig. 3A). For this analysis, we trained the classifier on distinguishing between the four different color categories of the abstract shapes and tested its performance on an independent set of abstract shape trials. We always left one block out for the cross-validation (eightfold). The results of this analysis indicate when the representations of different surface colors begin to differ; however, because we did not equate the colors for luminance or for the hue difference between each pair, this is not a pure chromatic measure. We did not control luminance because we used these colors to create our colored objects, which needed to look as realistic as possible. Thus, the color decoding analysis includes large and small differences in hue and in luminance between the categories. To look at the differences between each color pair, we also present confusion matrices showing the frequencies of the predicted color categories at peak decoding.
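Two pieces of this analysis lend themselves to a short sketch: leave-one-block-out folds (eightfold, one block per fold) and a row-normalized confusion matrix, in which each row gives the frequencies with which the classifier predicted each color given the true color. The predictions below are made-up toy values, not data from this study; the block structure (8 blocks of 10 trials) is likewise only for illustration.

```python
import numpy as np


def leave_one_block_out(blocks):
    """Yield (train_idx, test_idx); each recording block is the test set once."""
    blocks = np.asarray(blocks)
    for b in np.unique(blocks):
        yield np.flatnonzero(blocks != b), np.flatnonzero(blocks == b)


def confusion_matrix(y_true, y_pred, labels):
    """Row-normalized confusion matrix: rows = true color, columns = predicted color."""
    index = {label: i for i, label in enumerate(labels)}
    counts = np.zeros((len(labels), len(labels)))
    for t, p in zip(y_true, y_pred):
        counts[index[t], index[p]] += 1
    return counts / counts.sum(axis=1, keepdims=True)


labels = ["red", "green", "orange", "yellow"]
y_true = ["red"] * 4 + ["green"] * 4 + ["orange"] * 2 + ["yellow"] * 2
y_pred = ["red", "red", "orange", "red",        # toy predictions only
          "green", "yellow", "green", "green",
          "orange", "red",
          "yellow", "yellow"]
cm = confusion_matrix(y_true, y_pred, labels)
folds = list(leave_one_block_out(np.repeat(np.arange(1, 9), 10)))  # 8 blocks x 10 trials
```

Normalizing each row makes the error pattern comparable across colors even if the number of test trials per color differs.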

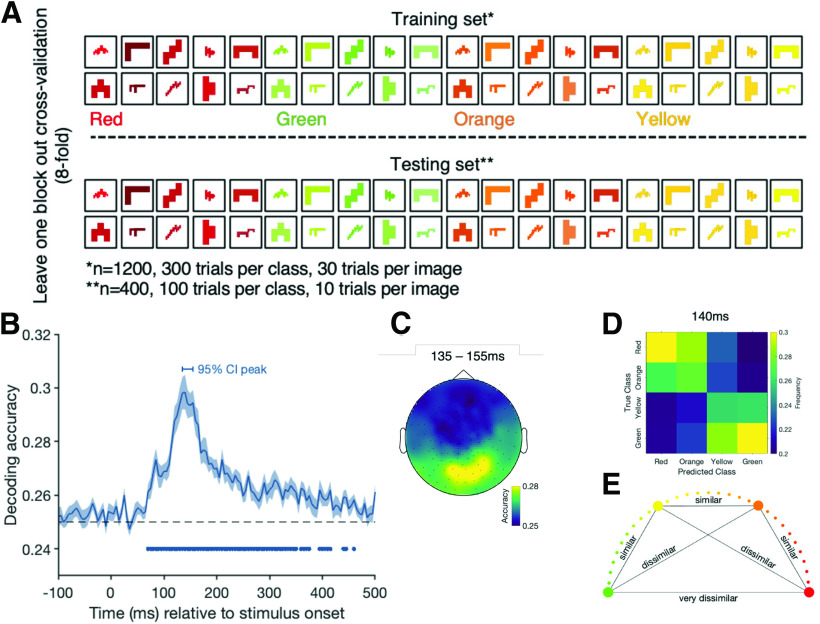

Figure 3.

A, The color decoding analysis when training the classifier to distinguish between the different color categories of the abstract shapes and testing on a block of independent abstract shape trials. B, The decoding accuracy for the color decoding analysis over time. Shading represents the SE across participants. Black dashed line indicates chance level (25%, red vs green vs orange vs yellow). Filled dots indicate significant time points, corrected for multiple comparisons. Horizontal bar above the curve represents the 95% CI of the peak. C, The results of an exploratory searchlight analysis over small sensor clusters averaged across the time points of the 95% CI for peak decoding. Colors represent the decoding accuracies at each sensor. D, A confusion matrix for peak decoding (140 ms) showing the frequencies at which color categories were predicted given the true class. E, The similarity of the color categories, which might underlie the results in D.

Our second color-processing analysis was to examine whether the conjunction of object and color influenced color processing (see Fig. 4A). Perceiving a strongly associated object in the context of viewing a certain color might lead to a more stable representation of that color in the MEG signal. For example, if we see a yellow banana, the banana shape may facilitate a representation of the color yellow earlier than if we see a yellow strawberry. To assess this possibility, we trained the classifier to distinguish between the surface colors of the abstract shapes (i.e., red, orange, yellow, green; chance: 25%). We then tested how well the classifier could predict the color of the congruent and incongruent objects. Because the classifier is trained on the same abstract shapes across all color categories, no particular shape-color combination can drive an effect: the only feature distinguishing the abstract-shape classes is color. This analysis allows us to test whether the typicality of color-form combinations has an effect on color processing.
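The cross-decoding logic (train on abstract-shape trials, then test separately on congruent and on incongruent object trials) is sketched below on simulated data. To keep the example short, a nearest-centroid classifier is used as a simple stand-in for the regularized LDA described earlier; the simulated color topographies (`patterns`) and the signal window (samples 5 to 14) are hypothetical.

```python
import numpy as np


def nearest_centroid_accuracy(X_train, y_train, X_test, y_test):
    """Per-time-point accuracy of a nearest-centroid classifier.

    X_*: (n_trials, n_sensors, n_times); a simple stand-in for regularized LDA.
    """
    classes = np.unique(y_train)
    centroids = np.stack([X_train[y_train == c].mean(axis=0) for c in classes])
    # squared Euclidean distance, shape (n_test_trials, n_classes, n_times)
    dist = ((X_test[:, None] - centroids[None]) ** 2).sum(axis=2)
    predicted = classes[np.argmin(dist, axis=1)]
    return (predicted == y_test[:, None]).mean(axis=0)


def cross_decode_color(shape_X, shape_colors, object_X, object_colors, congruency):
    """Train on abstract-shape trials; test congruent and incongruent objects separately."""
    return {
        condition: nearest_centroid_accuracy(
            shape_X, shape_colors,
            object_X[congruency == condition], object_colors[congruency == condition])
        for condition in ("congruent", "incongruent")
    }


rng = np.random.default_rng(1)
colors = np.array(["red", "green", "orange", "yellow"])
n_sensors, n_times = 20, 20
patterns = rng.normal(size=(len(colors), n_sensors))  # hypothetical color topographies


def simulate(n_trials):
    labels = colors[np.arange(n_trials) % len(colors)]
    X = rng.normal(size=(n_trials, n_sensors, n_times))
    for i, c in enumerate(colors):
        X[labels == c, :, 5:15] += patterns[i][:, None]  # color signal at samples 5-14
    return X, labels


shape_X, shape_colors = simulate(80)
object_X, object_colors = simulate(80)
congruency = np.array(["congruent", "incongruent"])[np.arange(80) // 40]
accuracy = cross_decode_color(shape_X, shape_colors, object_X, object_colors, congruency)
```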

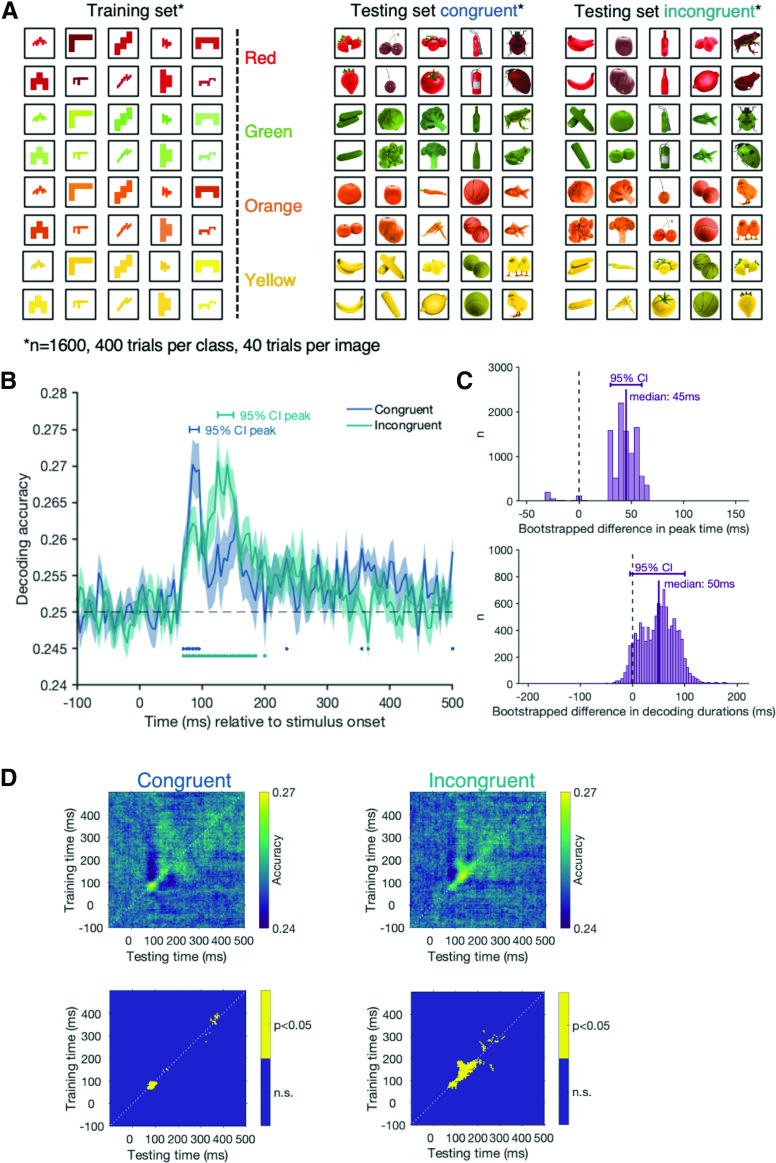

Figure 4.

Results of the color decoding analysis for the congruent and incongruent trials. Here, the classifier was trained to distinguish the color of all abstract shape trials and tested on the congruent and incongruent trials separately (A). B, The classification accuracy over time for the congruent (blue) and incongruent (green) trials. Shading represents the SE across participants. Black dashed line indicates chance level (25%, red vs green vs orange vs yellow). Blue (congruent) and green (incongruent) dots indicate time points at which we can decode the surface color significantly above chance, corrected for multiple comparisons. C, The bootstrapped differences in peak time (top) and the bootstrapped differences in decoding duration (bottom) for the congruent and the incongruent conditions. D, The results of the same analysis across all possible training and testing time point combinations. These time-time matrices allow us to examine how the signal for the congruent colors (left) and incongruent colors (right) evolves over time. Top row, The classification accuracy at every time point combination, with lighter pixels representing higher decoding accuracies. Bottom row, Clusters where decoding is significantly above chance (yellow), corrected for multiple comparisons.

In our final set of analyses, we examined the time course of shape processing. First, to assess the time course of shape processing independent of congruency, we trained a classifier to distinguish the five different abstract shapes in a pairwise fashion (see Fig. 5A). We always used one independent block of abstract shape trials to test the classifier performance (eightfold cross-validation). The results of this analysis indicate when information about different shapes is present in the neural signal, independent of other object features (e.g., color) or congruency. Second, we tested whether the conjunction of object and color has an effect on object decoding (see Fig. 6A). If object color influences early perceptual processes, we might see a facilitation for decoding objects when they are colored congruently or interference when the objects are colored incongruently. We used the grayscale object trials to train the classifier to distinguish between all of the objects. The stimulus set contained two exemplars of each item (e.g., strawberry 1 and strawberry 2). We used different exemplars for the training and testing sets to minimize the effects of low-level visual features; however, given that there are major differences in object shapes and edges, we can still expect to see strong differences between the objects. The classifier was trained on one exemplar of all of the grayscale trials. We then tested the classifier's performance on the congruent and incongruent object trials using the exemplars the classifier did not train on. We then swapped the exemplars used for the training and testing sets until every combination had been used in the testing set. Essentially, this classifier is trained to predict which object was presented to the participant (e.g., was it a strawberry or a frog?), and we are testing whether there is a difference depending on whether the object is congruently or incongruently colored.
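The exemplar-swapping scheme for object decoding can be sketched as a fold generator that pairs grayscale training trials of one exemplar with colored test trials of the other exemplar, split by congruency. The sketch assumes the simplest reading of the procedure (exemplar 1 of every object for training and exemplar 2 for testing, then the reverse); the text's "every combination" could also mean finer per-object mixing, which this sketch does not cover. Training indices refer to the grayscale trial array and test indices to the colored trial array; all arrays are toy data.

```python
import numpy as np


def exemplar_swap_folds(gray_exemplar, colored_exemplar, colored_congruency, exemplars=(1, 2)):
    """Yield (train_idx, {condition: test_idx}).

    ASSUMPTION: train on one exemplar of every grayscale object and test on
    the other exemplar of the congruent and incongruent objects.
    """
    for train_ex in exemplars:
        train_idx = np.flatnonzero(gray_exemplar == train_ex)
        held_out = colored_exemplar != train_ex  # only exemplars not used for training
        test_idx = {cond: np.flatnonzero(held_out & (colored_congruency == cond))
                    for cond in ("congruent", "incongruent")}
        yield train_idx, test_idx


gray_exemplar = np.array([1, 2, 1, 2])
colored_exemplar = np.array([1, 2, 1, 2])
colored_congruency = np.array(["congruent", "congruent", "incongruent", "incongruent"])
folds = list(exemplar_swap_folds(gray_exemplar, colored_exemplar, colored_congruency))
```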

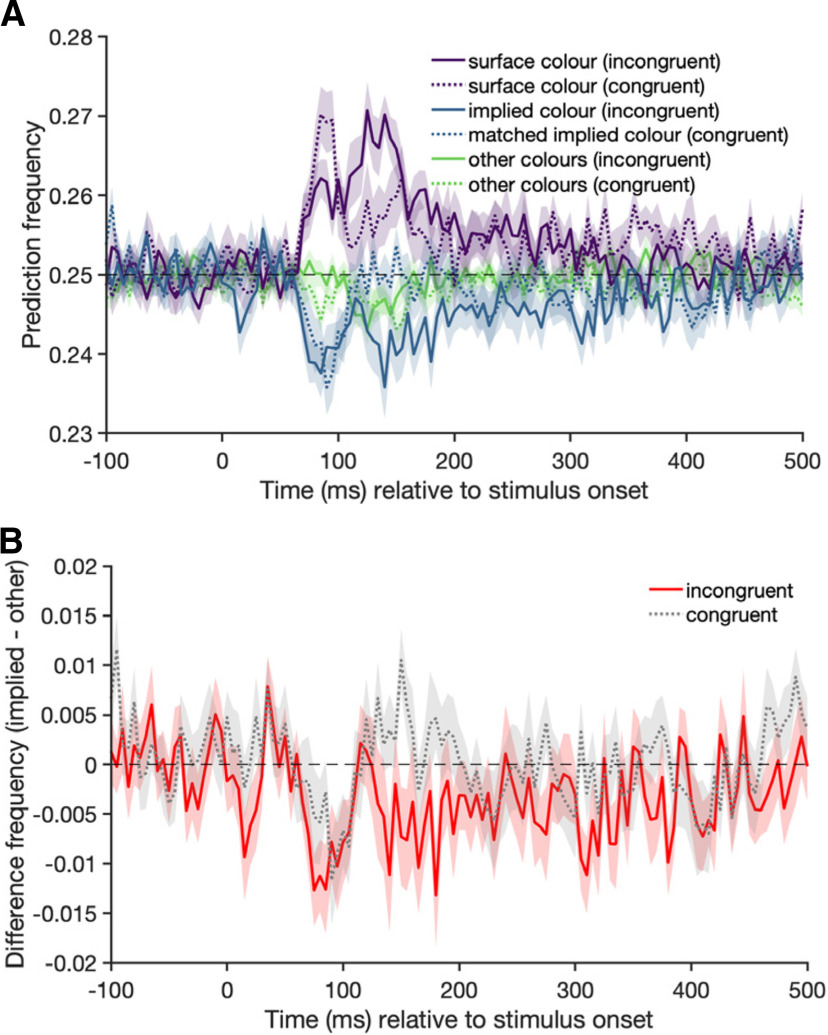

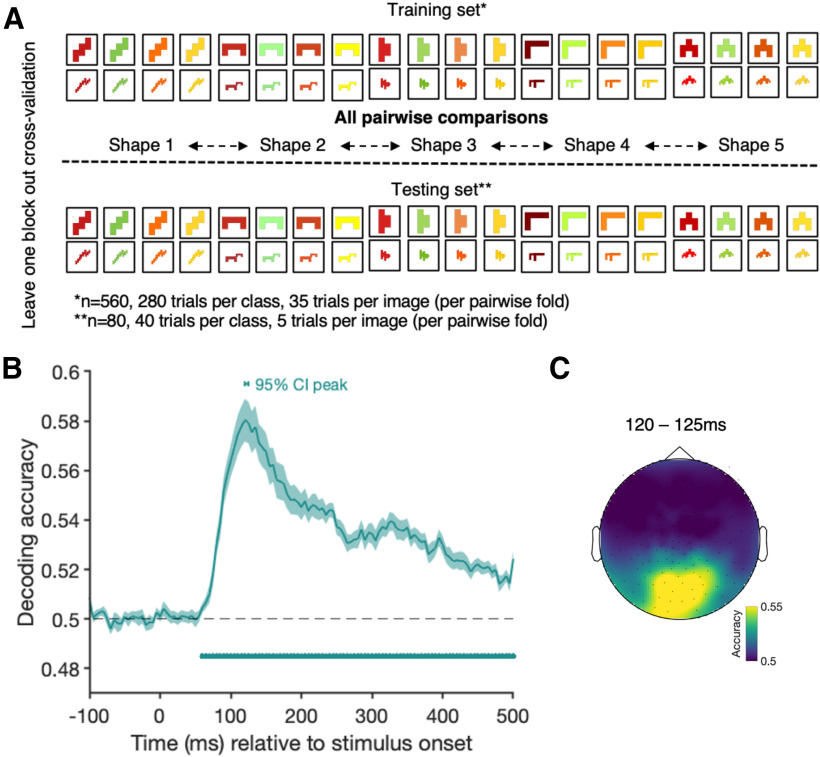

Figure 5.

A, The frequency of a predicted class when the classifier is trained on distinguishing colors in the abstract shape condition and tested on trials from the congruent (dotted lines) and incongruent (full lines) conditions. Shading represents the SE across participants. There are clear peaks for the correct prediction of the surface color between 100 and 150 ms (purple lines). In cases where the classifier makes an error, there is no evidence that the classifier picks the implied object color (blue lines) more frequently than the other incorrect colors (green lines). The classifier is trained on the abstract shape condition, which has an uneven color similarity; the errors in the incongruent condition therefore have to be interpreted in relation to how often the matched implied color in the congruent condition is predicted. B, The difference between how often the classifier predicted the implied color and how often it predicted the other incorrect colors, for the congruent (grey) and incongruent (red) conditions.

Figure 6.

A, The shape decoding analysis when training the classifier to distinguish between the different categories of the abstract shapes and testing on a block of independent abstract shape trials. B, The decoding accuracy for the shape decoding analysis over time. Shading represents the SE across participants. Black dashed line indicates chance level (50%, pairwise comparison of all shapes). Filled dots indicate significant time points, corrected for multiple comparisons. Horizontal bar above the curve represents the 95% CI of the peak. C, The results of an exploratory searchlight analysis over small sensor clusters averaged across the time points of the 95% CI for peak decoding. Colors represent the decoding accuracies at each sensor.

Statistical inferences

In all our analyses, we used random-effects Monte-Carlo cluster statistics with threshold-free cluster enhancement (TFCE, Smith and Nichols, 2009), as implemented in the CoSMoMVPA toolbox, to test whether the classifier could predict the condition of interest above chance. The TFCE statistic represents the support from neighboring time points, thus allowing for detection of sharp peaks and sustained small effects over time. We used a permutation test, swapping labels of complete trials, and reran the decoding analysis on the data with the shuffled labels 100 times per participant to create subject-level null distributions. We then used Monte-Carlo sampling to create a group-level null distribution consisting of 10,000 shuffled label permutations for the time-resolved decoding, and 1000 for the time-generalization analyses (to reduce computation time). The null distributions were then transformed into TFCE statistics. To correct for multiple comparisons, the maximum TFCE value across time in each null distribution was selected. We then transformed the true decoding values to TFCE statistics. To assess whether the true TFCE value at each time point is significantly above chance, we compared it with the 95th percentile of the corrected null distribution. Selecting the maximum TFCE value provides a conservative threshold for determining whether the observed decoding accuracy is above chance, corrected for multiple comparisons.
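The sketch below illustrates the logic of this procedure on simulated accuracies: a 1D TFCE (each time point accumulates extent^E · h^H · dh over all thresholds h at which it lies in a supra-threshold run), a Monte-Carlo group null built by drawing one permutation map per participant, and a max-over-time threshold at the 95th percentile. It is a simplified sketch, not CoSMoMVPA's code: TFCE is applied directly to group-mean accuracy minus chance, and the step size `dh`, the exponents (E = 0.5, H = 2, the commonly used defaults), and the simulated effect are assumptions.

```python
import numpy as np


def tfce_1d(stat, E=0.5, H=2.0, dh=0.005):
    """1D threshold-free cluster enhancement (positive tail only)."""
    stat = np.asarray(stat, dtype=float)
    out = np.zeros_like(stat)
    for h in np.arange(dh, stat.max() + dh, dh):
        above = np.concatenate(([0], (stat >= h).astype(int), [0]))
        edges = np.diff(above)
        starts, ends = np.flatnonzero(edges == 1), np.flatnonzero(edges == -1)
        for s, e in zip(starts, ends):  # each run of consecutive supra-threshold points
            out[s:e] += (e - s) ** E * h ** H * dh
    return out


def max_tfce_threshold(null_acc, chance, n_mc=1000, alpha=0.05, seed=None):
    """(1 - alpha) quantile of the max-over-time TFCE of Monte-Carlo group nulls.

    null_acc: (n_subjects, n_permutations, n_times) shuffled-label accuracies.
    """
    rng = np.random.default_rng(seed)
    n_sub, n_perm, _ = null_acc.shape
    maxima = np.empty(n_mc)
    for i in range(n_mc):
        pick = rng.integers(n_perm, size=n_sub)  # one null map per subject
        group_null = null_acc[np.arange(n_sub), pick].mean(axis=0)
        maxima[i] = tfce_1d(group_null - chance).max()
    return np.quantile(maxima, 1 - alpha)


rng = np.random.default_rng(2)
n_sub, n_perm, n_times, chance = 20, 100, 40, 0.5
null_acc = chance + rng.normal(0, 0.02, size=(n_sub, n_perm, n_times))
observed = np.full((n_sub, n_times), chance)
observed[:, 15:25] += 0.1 + rng.normal(0, 0.02, size=(n_sub, 10))  # simulated effect
threshold = max_tfce_threshold(null_acc, chance, n_mc=500, seed=3)
significant = tfce_1d(observed.mean(axis=0) - chance) > threshold
```

Taking the maximum over time in every null sample is what provides family-wise error control: an observed TFCE value must beat the largest value that chance produces anywhere in the time series.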

To assess at which time point the decoding accuracy peaks, we bootstrapped the participants' decoding accuracies for each analysis 10,000 times and generated 95% CIs for peak decoding. For the analyses in which we are comparing color and exemplar decoding for congruent and incongruent trials, we also compared the above-chance decoding durations. To test for the duration of above-chance decoding, we bootstrapped the data (10,000 times) and ran our statistics. At each iteration, we then looked for the longest period in which we have above-chance decoding in consecutive time points. We plotted the bootstrapped decoding durations and calculated medians to compare the distributions for the congruent and the incongruent condition.
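The two bootstrap summaries can be sketched as follows: resample participants with replacement, record the time of the group-mean peak on each iteration, and take the 2.5th and 97.5th percentiles; for duration, find the longest streak of consecutive above-chance time points in a boolean significance mask. The time grid (5 ms steps, consistent with 200 Hz sampling) and the simulated decoding curve are illustrative assumptions. In the full procedure, the significance mask would come from rerunning the statistics on each bootstrap sample; here `longest_run` is only shown on a hand-made mask.

```python
import numpy as np


def bootstrap_peak_ci(acc, times, n_boot=10_000, alpha=0.05, seed=None):
    """Bootstrap CI of the peak time; acc: (n_subjects, n_times)."""
    rng = np.random.default_rng(seed)
    n_sub = acc.shape[0]
    peaks = np.empty(n_boot)
    for i in range(n_boot):
        sample = acc[rng.integers(n_sub, size=n_sub)].mean(axis=0)  # resample participants
        peaks[i] = times[np.argmax(sample)]
    return np.quantile(peaks, [alpha / 2, 1 - alpha / 2]), peaks


def longest_run(significant):
    """Length of the longest streak of consecutive True values."""
    best = current = 0
    for is_sig in significant:
        current = current + 1 if is_sig else 0
        best = max(best, current)
    return best


times = np.arange(-100, 500, 5)  # ms; assumed 5-ms grid (200 Hz)
rng = np.random.default_rng(4)
curve = 0.25 + 0.1 * np.exp(-((times - 140) / 30.0) ** 2)  # simulated decoding curve
acc = curve + rng.normal(0, 0.002, size=(20, times.size))
(ci_low, ci_high), peaks = bootstrap_peak_ci(acc, times, n_boot=2000, seed=5)
```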

Results

Behavioral results

We first present the data from our behavioral object-naming task to confirm that our stimuli induce a congruency effect on object naming times. All incorrect responses and microphone errors were excluded from the analysis (on average across participants: 10.1%). We then calculated the median reaction time for naming each stimulus. If a participant named a specific stimulus incorrectly across trials (e.g., incongruently colored strawberry was always named incorrectly), we removed this stimulus completely to ensure that the reaction times in one condition were not skewed. We ran a repeated-measures ANOVA to compare the naming times for the different conditions in the behavioral object naming task using JASP (JASP Team, 2020). Naming times were significantly different between the conditions (F(2,34) = 12.8; p < 0.001). Bonferroni-corrected post hoc comparisons show that participants were faster to name the congruently colored (701 ms) than the incongruently colored (750 ms) objects (pbonf < 0.001; 95% CI for mean difference [23.8, 72.8]). It took participants on average 717 ms to name the grayscale objects, which was significantly faster than naming the incongruently colored objects (pbonf = 0.007; 95% CI for mean difference [7.8, 56.8]) but not significantly slower than naming the congruently colored objects (pbonf = 0.33; 95% CI for mean difference [−40.5, 8.5]). These results suggest that the objects we used here do indeed have associations with specific canonical colors, replicating previous findings that these objects are consistently associated with a particular color (Joseph, 1997; Tanaka and Presnell, 1999; Naor-Raz et al., 2003; Therriault et al., 2009; Lloyd-Jones et al., 2012; Bannert and Bartels, 2013).

In the main task, participants were asked to indicate every 80 trials whether they had seen a certain target object or not. The aim of this task was to keep participants motivated and attentive throughout the recording session. On average, participants reported whether the targets were present or absent with 90% accuracy (SD = 5%, range: 81.25%–100%).

MEG results

The aim of our decoding analyses was to examine the interaction between object-color knowledge and object representations. First, we tested for a difference in the brain activation pattern for congruently and incongruently colored objects. The results show distinct patterns of neural activity for congruent compared with incongruent objects in a cluster of consecutive time points stretching from 250 to 325 ms after stimulus onset, demonstrating that brain activity is modulated by color congruency in this time window (Fig. 2B). Thus, binding of color and form must have occurred by ∼250 ms, and by this time stored object-color knowledge is integrated with incoming information. An exploratory searchlight (Kaiser et al., 2016; Collins et al., 2018; Carlson et al., 2019) across small clusters (9 at a time) of MEG sensors suggests that this effect is driven by a range of frontal, temporal, and parietal sensor clusters (Fig. 2C).

To examine the time course of color processing separately from congruency, we decoded the surface colors of the abstract shapes (Fig. 3A). Consistent with earlier results (Teichmann et al., 2019), we found that color could be decoded above chance from the abstract shape trials in a cluster stretching from 70 to 350 ms (Fig. 3B). An exploratory sensor searchlight analysis across small clusters of sensors shows that, at peak decoding, color information is mainly carried by occipital and parietal sensors. To examine whether all colors could be dissociated equally well, we also inspected confusion matrices showing how frequently each color category was predicted for each true color (Fig. 3D). The results show that, at the decoding peak (140 ms), red and green are the most easily distinguishable and that prediction errors are not equally distributed: Red trials are more frequently misclassified as orange than as green or yellow, and green trials are more frequently misclassified as yellow than as orange or red. This suggests that more similar colors evoke more similar patterns of activation than dissimilar colors do (Fig. 3E).
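A confusion matrix of this kind can be computed by counting, for each true color, how often each color label was predicted, and then normalizing each row to proportions. The sketch below assumes integer-coded labels; the function name is hypothetical, and any mapping of codes to the colors named above is only illustrative.

```python
import numpy as np


def confusion_proportions(true_labels, predicted_labels, n_classes):
    """Row-normalized confusion matrix.

    Entry [i, j] is the proportion of trials of true class i that were
    predicted as class j, so each non-empty row sums to 1. Labels are
    integer codes 0..n_classes-1.
    """
    counts = np.zeros((n_classes, n_classes))
    for true, pred in zip(true_labels, predicted_labels):
        counts[true, pred] += 1
    row_totals = counts.sum(axis=1, keepdims=True)
    # Avoid dividing by zero for classes with no test trials
    return counts / np.where(row_totals == 0, 1, row_totals)
```

With an illustrative coding of 0 = red, 1 = orange, 2 = yellow, 3 = green, comparing `cm[0, 1]` with `cm[0, 2]` and `cm[0, 3]` asks whether red trials are mislabeled as orange more often than as yellow or green.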

To assess whether congruency influences color processing, we trained a classifier to distinguish between the colors in the abstract shape condition and then tested it on the congruent and incongruent trials separately (Fig. 4A). Color could be classified successfully in a cluster stretching from 75 to 125 ms for the congruent trials and in a cluster stretching from 75 to 185 ms for the incongruent trials (Fig. 4B). These results suggest that color information may be processed differently depending on the congruency of the image, particularly with respect to decoding peaks and durations. To test whether the decoding time courses truly differ, we bootstrapped the data and compared the time of peak decoding and the longest consecutive streak of above-chance decoding between conditions. The peak decoding times for the congruent and incongruent conditions differ from each other (Fig. 4C, top). However, the CIs of the decoding durations show no consistent difference between the congruent and incongruent conditions (Fig. 4C, bottom). This could be because onsets and offsets of above-chance decoding are affected by signal strength and thresholds (compare Grootswagers et al., 2017). Peak differences are a more robust measure and suggest that color decoding peaks later in the incongruent than in the congruent condition. To get a complete picture of how these signals evolve over time, we used time-generalization matrices (Fig. 4D), which we created by training the classifier on each time point of the training dataset and then testing it on all time points of the test set. The diagonal of these matrices corresponds to the standard time-resolved decoding results (e.g., training at 100 ms and testing at 100 ms).
A decodable off-diagonal effect reflects a temporal asynchrony in information processing between the training and testing sets (compare Carlson et al., 2011; King and Dehaene, 2014). Our data show that color category was decodable in both conditions early on (∼70 ms). In the incongruent condition, the activation associated with color seems to be sustained longer than in the congruent condition (Fig. 4D), but in both conditions above-chance decoding occurs mainly along the diagonal. This suggests that the initial pattern of activation for color signals occurs at the same time but that color-related signals are prolonged when object-color combinations are unusual relative to when they are typical.
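The time-generalization analysis described above can be sketched as a double loop: fit a classifier at each training time point and evaluate it at every testing time point. In this hypothetical sketch, the training set plays the role of the abstract-shape color trials and the test set the role of the congruent or incongruent object trials; the nearest-class-mean classifier and the function names are assumptions, not the study's implementation. Because training and test data come from different conditions, no cross-validation is needed here.

```python
import numpy as np


def fit_centroids(X, y):
    """Class-mean sensor pattern for each class. X: (n_trials, n_sensors)."""
    classes = np.unique(y)
    return classes, np.stack([X[y == c].mean(axis=0) for c in classes])


def predict_nearest(classes, centroids, X):
    """Assign each trial to the class with the nearest mean pattern."""
    dist = np.linalg.norm(X[:, None, :] - centroids[None, :, :], axis=2)
    return classes[np.argmin(dist, axis=1)]


def time_generalization(X_train, y_train, X_test, y_test):
    """Train at every training time point, test at every test time point.

    X_*: (n_trials, n_sensors, n_times). Returns acc[t_train, t_test].
    """
    X_train, X_test = np.asarray(X_train, float), np.asarray(X_test, float)
    y_train, y_test = np.asarray(y_train), np.asarray(y_test)
    acc = np.zeros((X_train.shape[2], X_test.shape[2]))
    for i in range(X_train.shape[2]):
        classes, centroids = fit_centroids(X_train[:, :, i], y_train)
        for j in range(X_test.shape[2]):
            pred = predict_nearest(classes, centroids, X_test[:, :, j])
            acc[i, j] = np.mean(pred == y_test)
    return acc
```

Row i, column j holds the accuracy of a classifier trained at time i and tested at time j. The diagonal reproduces ordinary time-resolved cross-decoding, and above-chance values away from the diagonal indicate that a pattern appears at different latencies in the two datasets.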

In an exploratory color analysis, we also examined which errors the classifier made when predicting the color of the incongruently colored objects, asking whether the implied object color was predicted more often than the surface color or the other colors. However, because errors were not equally distributed across the incorrect labels in the training (abstract shape) dataset, the misclassification results for the incongruent condition need to be compared with those for the congruent condition to take these differing base rates into account. For each object in the incongruent condition (e.g., yellow strawberry), we have a color-matched object in the congruent condition (e.g., yellow banana). We made use of these matched stimuli by examining misclassifications and checking how frequently the implied color of an incongruent object (e.g., red for a yellow strawberry) was predicted compared with the matched congruent object (e.g., red for a yellow banana). If the implied color of incongruently colored objects were activated along with the surface color, we should see a higher rate of implied-color predictions (e.g., red) for the incongruent object (e.g., yellow strawberry) than for the color-matched congruent object (e.g., yellow banana).
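The matched-pair logic can be written as a simple rate comparison. The sketch below assumes that predicted color labels are available per trial for each incongruent object and its color-matched congruent partner; the function names are hypothetical. The congruent partner supplies the baseline rate at which the classifier confuses that surface color with the implied color, so a positive difference would be consistent with co-activation of the implied color.

```python
import numpy as np


def implied_color_effect(pred_incongruent, pred_congruent, implied_color):
    """Rate of implied-color predictions for an incongruent item versus its
    color-matched congruent partner.

    pred_incongruent: predicted color labels on trials of, e.g., a yellow strawberry
    pred_congruent:   predicted color labels on trials of the matched yellow banana
    implied_color:    typical color of the incongruent object ("red" for strawberry)
    Returns (rate_incongruent, rate_congruent, difference).
    """
    rate_inc = float(np.mean(np.asarray(pred_incongruent) == implied_color))
    rate_con = float(np.mean(np.asarray(pred_congruent) == implied_color))
    return rate_inc, rate_con, rate_inc - rate_con


def mean_implied_color_effect(pairs):
    """Average difference over matched pairs, each given as
    (pred_incongruent, pred_congruent, implied_color)."""
    return float(np.mean([implied_color_effect(*pair)[2] for pair in pairs]))
```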

The results (Fig. 5) do not show this pattern: At the first peak (∼80–110 ms), the “other” colors are actually more likely to be chosen by the classifier than the implied color, for both the congruent and incongruent conditions. One possible explanation for the absence of an implied-color effect in this analysis is that the decoding model is based on the patterns evoked by actual surface color, whereas implied color activation may differ in timing and mechanism (Teichmann et al., 2019).

The goal of the third analysis was to examine whether shape representations are affected by color congruency. It could be, for example, that the representation of banana shapes compared with strawberry shapes is enhanced when their colors are correct. First, we tested when shape representations can be decoded independently of color congruency. We trained the classifier to distinguish between the five different abstract shapes in a pairwise fashion and then tested its performance on independent data (Fig. 6A). Shape information can be decoded in a cluster stretching from 60 to 500 ms (Fig. 6B). An exploratory searchlight analysis on small clusters of sensors (9 at a time) shows that shape information at peak decoding is mainly driven by occipital sensors (Fig. 6C).
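A sensor searchlight repeats the decoding analysis on small neighborhoods of sensors and assigns each neighborhood's accuracy to its center sensor. The NumPy sketch below builds 9-sensor neighborhoods from sensor positions by Euclidean distance and decodes at a single time point. How the study actually defined its neighborhoods is not stated in this section, so the distance rule, the nearest-class-mean classifier, and the function names are assumptions for illustration.

```python
import numpy as np


def sensor_neighborhoods(positions, size=9):
    """Indices of the `size` sensors nearest to each sensor (itself included),
    by Euclidean distance between sensor positions (n_sensors x 2 or 3)."""
    positions = np.asarray(positions, dtype=float)
    dist = np.linalg.norm(positions[:, None, :] - positions[None, :, :], axis=2)
    return np.argsort(dist, axis=1)[:, :size]


def cv_nearest_mean_accuracy(X, y, n_folds=5, seed=0):
    """Cross-validated accuracy of a nearest-class-mean classifier.
    X: (n_trials, n_features); y: class labels (any number of classes)."""
    X, y = np.asarray(X, dtype=float), np.asarray(y)
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(len(y)), n_folds)
    correct = 0
    for test_idx in folds:
        train_idx = np.setdiff1d(np.arange(len(y)), test_idx)
        classes = np.unique(y[train_idx])
        centroids = np.stack([X[train_idx][y[train_idx] == c].mean(axis=0)
                              for c in classes])
        dist = np.linalg.norm(X[test_idx][:, None, :] - centroids[None], axis=2)
        correct += np.sum(classes[np.argmin(dist, axis=1)] == y[test_idx])
    return correct / len(y)


def searchlight_at_time(X, y, positions, t, size=9):
    """Decoding accuracy of the neighborhood centered on each sensor at
    time index t. X: (n_trials, n_sensors, n_times)."""
    X = np.asarray(X, dtype=float)
    neighborhoods = sensor_neighborhoods(positions, size)
    return np.array([cv_nearest_mean_accuracy(X[:, idx, t], y)
                     for idx in neighborhoods])
```

Plotting the returned accuracies on the sensor layout gives a topography of where information is concentrated at the chosen time point, such as the decoding peak.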

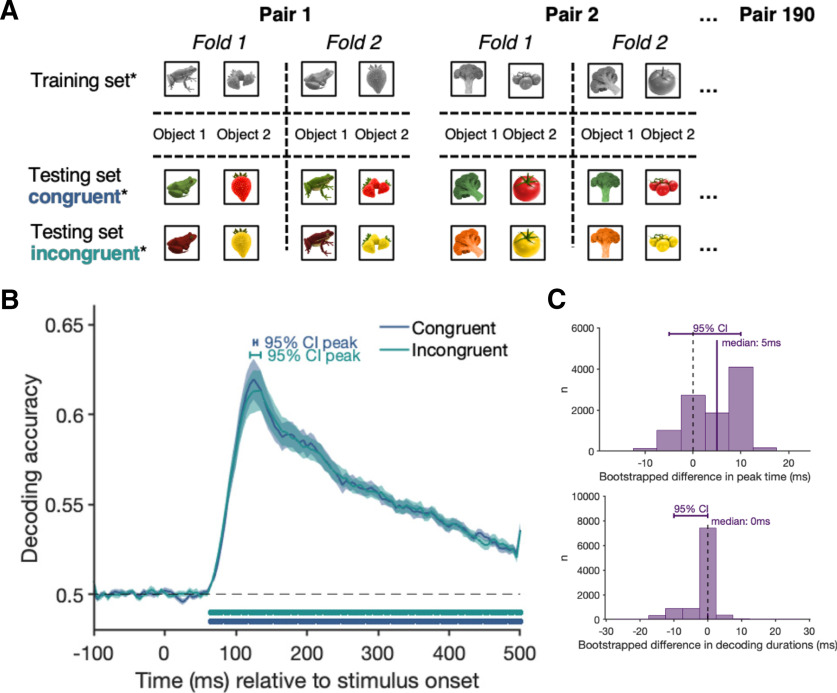

To examine whether color affects object processing, we trained a classifier to distinguish between the 20 objects using trials in which participants saw one exemplar of each object in grayscale (e.g., grayscale strawberry 1, grayscale cherry 1, etc.). We then tested at which time points the classifier could successfully cross-generalize to the other exemplar of each object in the congruent and incongruent conditions separately (Fig. 7A). If object representations (e.g., banana) are influenced by the typicality of their colors, then cross-generalization should differ between congruent and incongruent trials. Although the two exemplars are different images, they share shape characteristics (e.g., the two frog exemplars share some shape aspects despite their different postures), which can be expected to drive generalization. The neural data contain information distinguishing the objects in a cluster stretching from 65 to 500 ms for both the congruent and incongruent test sets (Fig. 7B). Thus, object class can be decoded early, at a similar time to the abstract shapes, suggesting that the classifier here is strongly driven by low-level features (e.g., shape) rather than influenced by color congruency. The time courses of congruent and incongruent object decoding are very similar in terms of peak decoding and decoding duration (Fig. 7C). Thus, our data provide no evidence for an effect of color congruency on object processing.
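The exemplar cross-generalization analysis can be sketched as pairwise decoding in which the classifier is fit on one stimulus set (grayscale exemplar 1) and evaluated on another (congruent or incongruent exemplar 2), with accuracy averaged over all object pairs so that chance is 50%. The nearest-class-mean classifier and the function name below are stand-ins, not the study's implementation; in practice the function would be applied at each time point and separately to the congruent and incongruent test sets.

```python
import itertools

import numpy as np


def pairwise_cross_decoding(X_train, y_train, X_test, y_test):
    """Average pairwise accuracy when training on one stimulus set and testing
    on another (e.g., grayscale exemplar 1 -> colored exemplar 2).

    X_*: (n_trials, n_sensors) at one time point; y_*: object labels.
    Classifier: nearest class mean, a stand-in. Chance = 0.5.
    """
    X_train, y_train = np.asarray(X_train, float), np.asarray(y_train)
    X_test, y_test = np.asarray(X_test, float), np.asarray(y_test)
    accuracies = []
    # One binary problem per object pair (190 pairs for 20 objects)
    for a, b in itertools.combinations(np.unique(y_train), 2):
        mean_a = X_train[y_train == a].mean(axis=0)
        mean_b = X_train[y_train == b].mean(axis=0)
        mask = (y_test == a) | (y_test == b)
        X_pair, y_pair = X_test[mask], y_test[mask]
        closer_to_a = (np.linalg.norm(X_pair - mean_a, axis=1)
                       < np.linalg.norm(X_pair - mean_b, axis=1))
        pred = np.where(closer_to_a, a, b)
        accuracies.append(np.mean(pred == y_pair))
    return float(np.mean(accuracies))
```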

Figure 7.

Results of the object exemplar decoding analysis. A, The classifier was trained to distinguish between all pairwise object categories in the grayscale object condition, using one exemplar of each class for training and the other exemplar for testing. Testing was done separately for the congruent and incongruent trials. B, Classification accuracy over time for the object decoding analysis when testing the classifier's performance on congruent (blue) and incongruent (green) trials. Shading represents the SE across participants. Black dashed line indicates chance level (50%, pairwise decoding for all 20 different object categories). Blue (congruent) and green (incongruent) dots indicate significant time points (p < 0.05), corrected for multiple comparisons. C, Bootstrapped differences in peak time (top) and in decoding duration (bottom) between the congruent and incongruent conditions.

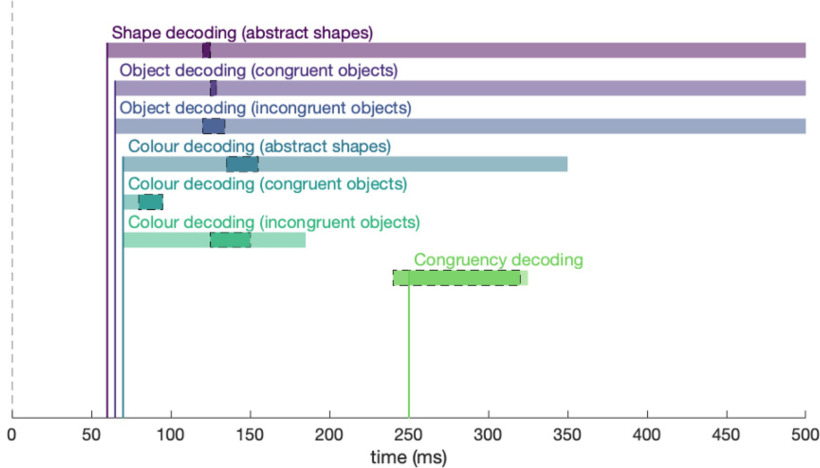

Overall, the results show that single features present within the incoming visual stimuli are decodable earlier than the congruency between them, which can be seen as an index of accessing stored conceptual knowledge (Fig. 8). When we compare color and shape decoding for abstract shapes and for congruently and incongruently colored objects, the decoding onsets are very similar, suggesting that the initial stages of single-feature processing are not influenced by congruency. However, peak color decoding occurs later for incongruently than for congruently colored objects, suggesting that color congruency influences color processing to some degree.
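The peak and duration comparisons (Figs. 4C, 7C, 8) rely on bootstrapping. A minimal sketch, assuming that participants are resampled with replacement and that the same resampled participants are used for both conditions: for each resample, take the latency of the group-mean peak and the longest run above a threshold, then inspect the distribution of differences. The fixed `threshold` is a simplification of whatever above-chance criterion the study used, and the function names are hypothetical.

```python
import numpy as np


def longest_streak(mask):
    """Length of the longest run of consecutive True values."""
    best = run = 0
    for above in mask:
        run = run + 1 if above else 0
        best = max(best, run)
    return best


def bootstrap_differences(acc_a, acc_b, times, threshold, n_boot=1000, seed=0):
    """Bootstrap the difference (b minus a) in peak time and in the longest
    above-threshold duration between two conditions.

    acc_a, acc_b: (n_participants, n_times) decoding accuracies with the same
    participants in the same row order; times: evenly spaced time points (ms).
    """
    acc_a, acc_b = np.asarray(acc_a, float), np.asarray(acc_b, float)
    times = np.asarray(times, float)
    step = times[1] - times[0]
    rng = np.random.default_rng(seed)
    n = acc_a.shape[0]
    peak_diff = np.empty(n_boot)
    dur_diff = np.empty(n_boot)
    for i in range(n_boot):
        idx = rng.integers(0, n, size=n)  # resample participants with replacement
        mean_a, mean_b = acc_a[idx].mean(axis=0), acc_b[idx].mean(axis=0)
        peak_diff[i] = times[np.argmax(mean_b)] - times[np.argmax(mean_a)]
        dur_diff[i] = step * (longest_streak(mean_b > threshold)
                              - longest_streak(mean_a > threshold))
    return peak_diff, dur_diff
```

A 95% CI can then be read off with `np.percentile(peak_diff, [2.5, 97.5])`; an interval that excludes zero would indicate a reliable difference in peak latency.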

Figure 8.

Overview of the findings. Each colored bar represents the onset (x axis) and duration (bar length) of feature and conjunction information in the neural signal. Darker shading outlined by dotted black lines represents the bootstrapped 95% CI for peak decoding. Dotted vertical line indicates stimulus onset.

Discussion

A crucial question in object recognition concerns how incoming visual information interacts with stored object concepts to create meaningful vision across varying situations. The aims of the current study were to examine the temporal dynamics of object-color knowledge and to test whether activating object-color knowledge influences the early stages of color and object processing. Our data provide three major insights: First, congruently and incongruently colored objects evoke different neural representations after ∼250 ms, suggesting that, by this time, visual object features are bound into a coherent representation and compared with stored object representations. Second, color can be decoded at a similar latency (∼70 ms) regardless of whether participants view colored abstract shapes or congruently and incongruently colored objects. However, peak decoding occurs later when viewing incongruently colored objects than when viewing congruent ones. Third, we do not find an influence of color congruency on object processing, which may suggest that behavioral congruency effects arise from conflict at a later processing stage.

Color congruency can act as an index to assess when prior knowledge is integrated with bound object features. When comparing brain activation patterns of the same objects presented in different colors, there was a decodable difference between congruent and incongruent conditions from ∼250 ms onward, suggesting that a stored object representation containing information about the typical color of an object must have been activated by this stage. Before this time, the signal is primarily driven by the processing of early perceptual features, such as color and shape, which were matched for the congruent and incongruent conditions (same objects, same colors; only the combination of color and shape differed). Although we cannot draw direct conclusions from our data about which brain areas are involved in integrating incoming visual information with stored object knowledge, our congruency analysis adds to the fMRI literature by showing the relative time course over which a meaningful object representation emerges. Activating object-color knowledge from memory has been shown to involve the ATL (e.g., Coutanche and Thompson-Schill, 2015), and there is evidence that object-color congruency coding occurs in perirhinal cortex (Price et al., 2017). Further support for the involvement of the ATL in the integration of incoming sensory information and stored representations comes from work on patients with semantic dementia (e.g., Bozeat et al., 2002) and from studies on healthy participants using TMS (e.g., Chiou et al., 2014). Higher-level brain areas in the temporal lobe have also been shown to be part of neuronal circuits involved in implicit imagery, supporting visual perception by augmenting incoming information with stored conceptual knowledge (e.g., Miyashita, 2004; Albright, 2012).
The latency of congruency decoding here may thus reflect the time it takes to compare visual object representations with conceptual templates in higher-level brain areas, such as the ATL, or the time it takes for feedback or error signals about color congruency to arrive back in early visual areas.

Our results also show that color congruency affects color processing. We found that color decoding onset occurred at a similar time (∼70 ms) for abstract shapes and for congruently and incongruently colored objects. This indicates that color signals are initially activated independently of object shape, consistent with previous work showing that single features are processed first and that the conjunction of color and shape occurs at a later stage (e.g., Seymour et al., 2016). However, we also found differences between color processing in the congruent and incongruent conditions: The color signal peaked later in the incongruent relative to the congruent condition, suggesting that congruency influences the time course of color processing to some degree. Our time-generalization analysis (Fig. 4D) supports this, showing different dynamics for congruent and incongruent trials. One possible explanation for this finding is that unusual feature pairings (e.g., shape and color or texture and color) might lead to local feedback signals that prolong color processing. Alternatively, consistent with the memory color literature (e.g., Hansen et al., 2006; Olkkonen et al., 2008; Witzel et al., 2011), typical colors may be coactivated along with other low-level features. For incongruent trials, this would mean that two candidate colors need to be distinguished, extending the processing timeframe and delaying peak activation for the surface color of the object.

The time course of exemplar decoding we present is consistent with previous studies on object recognition. Here, we found that exemplar identity could be decoded at ∼65 ms. Similar latencies have been found in other MEG/EEG decoding studies (Carlson et al., 2013; Cichy et al., 2014; Isik et al., 2014; Contini et al., 2017; Grootswagers et al., 2019) and in single-unit recordings (e.g., Hung et al., 2005). Behavioral data, including the reaction times collected from our participants, show that color influences object identification speed (e.g., Bramão et al., 2010). The neural data, however, did not show an effect of object color on the classifier's performance when distinguishing the neural activation patterns evoked by different object exemplars. For example, the brain activation pattern in response to a strawberry could be differentiated from the pattern evoked by a lemon, regardless of the congruency of their colors. This finding is consistent with previous results (Proverbio et al., 2004) but might seem puzzling because color congruency has been shown to have a strong effect on object naming (e.g., Tanaka and Presnell, 1999; Nagai and Yokosawa, 2003; Chiou et al., 2014). One possibility is that behavioral congruency effects arise at the stage of response selection, which would not show up in these early neural signals. Further work is needed to test this, but the current data provide no evidence that color congruency influences early stages of object processing.

Our data demonstrate that object representations are influenced by object-color knowledge, but not at the initial stages of visual processing. Consistent with a traditional hierarchical view, we show that visual object features are processed before the features are bound into a coherent object that can be compared with existing, conceptual object representations. However, our data also suggest that the temporal dynamics of color processing are influenced by the typicality of object-color pairings. Building on the extensive literature on visual perception, these results provide a time course for the integration of incoming visual information with stored knowledge, a process that is critical for interpreting the visual world around us.

Footnotes

The authors declare no competing financial interests.

This work was supported by the Australian Research Council (ARC) Center of Excellence in Cognition and Its Disorders, International Macquarie University Research Training Program Scholarships to L.T. and T.G., and ARC Future Fellowship FT120100816 and ARC Discovery Project DP160101300 to T.A.C. A.N.R. was supported by ARC DP12102835 and DP170101840. G.L.Q. was supported by a joint initiative between the University of Louvain and the Marie Curie Actions of the European Commission Grant F211800012, and European Union's Horizon 2020 research and innovation program under the Marie Sklodowska-Curie Grant Agreement 841909. We thank the University of Sydney HPC service for providing High Performance Computing resources.

References

- Albright TD (2012) On the perception of probable things: neural substrates of associative memory, imagery, and perception. Neuron 74:227–245. 10.1016/j.neuron.2012.04.001

- Bannert MM, Bartels A (2013) Decoding the yellow of a gray banana. Curr Biol 23:2268–2272. 10.1016/j.cub.2013.09.016

- Bozeat S, Ralph MA, Patterson K, Hodges JR (2002) When objects lose their meaning: what happens to their use? Cogn Affect Behav Neurosci 2:236–251. 10.3758/CABN.2.3.236

- Brainard DH (1997) The psychophysics toolbox. Spat Vis 10:433–436. 10.1163/156856897X00357

- Bramão I, Faísca L, Petersson KM, Reis A (2010) The influence of surface color information and color knowledge information in object recognition. Am J Psychol 123:437–446. 10.5406/amerjpsyc.123.4.0437

- Carlson TA, Hogendoorn H, Kanai R, Mesik J, Turret J (2011) High temporal resolution decoding of object position and category. J Vis 11:9. 10.1167/11.10.9

- Carlson TA, Tovar DA, Alink A, Kriegeskorte N (2013) Representational dynamics of object vision: the first 1000 ms. J Vis 13:1. 10.1167/13.10.1

- Carlson TA, Grootswagers T, Robinson AK (2019) An introduction to time-resolved decoding analysis for MEG/EEG. arXiv:1905.04820.

- Chiou R, Sowman PF, Etchell AC, Rich AN (2014) A conceptual lemon: theta burst stimulation to the left anterior temporal lobe untangles object representation and its canonical color. J Cogn Neurosci 26:1066–1074. 10.1162/jocn_a_00536

- Cichy RM, Pantazis D, Oliva A (2014) Resolving human object recognition in space and time. Nat Neurosci 17:455–462. 10.1038/nn.3635

- Clarke A, Tyler LK (2015) Understanding what we see: how we derive meaning from vision. Trends Cogn Sci 19:677–687. 10.1016/j.tics.2015.08.008

- Collins E, Robinson AK, Behrmann M (2018) Distinct neural processes for the perception of familiar versus unfamiliar faces along the visual hierarchy revealed by EEG. Neuroimage 181:120–131. 10.1016/j.neuroimage.2018.06.080

- Contini EW, Wardle SG, Carlson TA (2017) Decoding the time-course of object recognition in the human brain: from visual features to categorical decisions. Neuropsychologia 105:165–176. 10.1016/j.neuropsychologia.2017.02.013

- Conway BR (2018) The organization and operation of inferior temporal cortex. Annu Rev Vis Sci 4:381–402. 10.1146/annurev-vision-091517-034202

- Coutanche MN, Thompson-Schill SL (2015) Creating concepts from converging features in human cortex. Cereb Cortex 25:2584–2593. 10.1093/cercor/bhu057

- Gegenfurtner KR, Rieger J (2000) Sensory and cognitive contributions of color to the recognition of natural scenes. Curr Biol 10:805–808. 10.1016/S0960-9822(00)00563-7

- Grootswagers T, Wardle SG, Carlson TA (2017) Decoding dynamic brain patterns from evoked responses: a tutorial on multivariate pattern analysis applied to time series neuroimaging data. J Cogn Neurosci 29:677–697. 10.1162/jocn_a_01068

- Grootswagers T, Robinson AK, Carlson TA (2019) The representational dynamics of visual objects in rapid serial visual processing streams. Neuroimage 188:668–679. 10.1016/j.neuroimage.2018.12.046

- Hansen T, Olkkonen M, Walter S, Gegenfurtner KR (2006) Memory modulates color appearance. Nat Neurosci 9:1367–1368. 10.1038/nn1794

- Hebart MN, Baker CI (2018) Deconstructing multivariate decoding for the study of brain function. Neuroimage 180:4–18. 10.1016/j.neuroimage.2017.08.005

- Hung CP, Kreiman G, Poggio T, DiCarlo JJ (2005) Fast readout of object identity from macaque inferior temporal cortex. Science 310:863–866. 10.1126/science.1117593

- Isik L, Meyers EM, Leibo JZ, Poggio T (2014) The dynamics of invariant object recognition in the human visual system. J Neurophysiol 111:91–102. 10.1152/jn.00394.2013

- JASP Team (2020) JASP (Version 0.13.1) [Computer software]. Available at https://jasp-stats.org/.

- Joseph JE (1997) Color processing in object verification. Acta Psychol (Amst) 97:95–127. 10.1016/S0001-6918(97)00026-7

- Kaiser D, Oosterhof NN, Peelen MV (2016) The neural dynamics of attentional selection in natural scenes. J Neurosci 36:10522–10528. 10.1523/JNEUROSCI.1385-16.2016

- King JR, Dehaene S (2014) Characterizing the dynamics of mental representations: the temporal generalization method. Trends Cogn Sci 18:203–210. 10.1016/j.tics.2014.01.002

- Kleiner M, Brainard D, Pelli D (2007) What's new in Psychtoolbox-3? Perception 36 ECVP Abstract Supplement.

- Lafer-Sousa R, Conway BR (2013) Parallel, multi-stage processing of colors, faces and shapes in macaque inferior temporal cortex. Nat Neurosci 16:1870–1878. 10.1038/nn.3555

- Lafer-Sousa R, Conway BR, Kanwisher NG (2016) Color-biased regions of the ventral visual pathway lie between face- and place-selective regions in humans, as in macaques. J Neurosci 36:1682–1697. 10.1523/JNEUROSCI.3164-15.2016

- Lloyd-Jones TJ, Roberts MV, Leek EC, Fouquet NC, Truchanowicz EG (2012) The time course of activation of object shape and shape+colour representations during memory retrieval. PLoS One 7:e48550. 10.1371/journal.pone.0048550

- Lu A, Xu G, Jin H, Mo L, Zhang J, Zhang JX (2010) Electrophysiological evidence for effects of color knowledge in object recognition. Neurosci Lett 469:405–410. 10.1016/j.neulet.2009.12.039

- Maris E, Oostenveld R (2007) Nonparametric statistical testing of EEG- and MEG-data. J Neurosci Methods 164:177–190. 10.1016/j.jneumeth.2007.03.024

- Miyashita Y (2004) Cognitive memory: cellular and network machineries and their top-down control. Science 306:435–440. 10.1126/science.1101864

- Nagai J, Yokosawa K (2003) What regulates the surface color effect in object recognition: color diagnosticity or category. Tech Rep Attention Cogn 28:1–4.

- Naor-Raz G, Tarr MJ, Kersten D (2003) Is color an intrinsic property of object representation? Perception 32:667–680. 10.1068/p5050

- Olkkonen M, Hansen T, Gegenfurtner KR (2008) Color appearance of familiar objects: effects of object shape, texture, and illumination changes. J Vis 8:13. 10.1167/8.5.13

- Oostenveld R, Fries P, Maris E, Schoffelen JM (2011) FieldTrip: open source software for advanced analysis of MEG, EEG, and invasive electrophysiological data. Comput Intell Neurosci 2011:156869. 10.1155/2011/156869

- Oosterhof NN, Connolly AC, Haxby JV (2016) CoSMoMVPA: multi-modal multivariate pattern analysis of neuroimaging data in Matlab/GNU Octave. Front Neuroinform 10:27. 10.3389/fninf.2016.00027

- Papinutto N, Galantucci S, Mandelli ML, Gesierich B, Jovicich J, Caverzasi E, Henry RG, Seeley WW, Miller BL, Shapiro KA, Gorno-Tempini ML (2016) Structural connectivity of the human anterior temporal lobe: a diffusion magnetic resonance imaging study. Hum Brain Mapp 37:2210–2222. 10.1002/hbm.23167

- Patterson K, Nestor PJ, Rogers TT (2007) Where do you know what you know? The representation of semantic knowledge in the human brain. Nat Rev Neurosci 8:976–987. 10.1038/nrn2277

- Pelli DG (1997) The VideoToolbox software for visual psychophysics: transforming numbers into movies. Spat Vis 10:437–442.

- Price AR, Bonner MF, Peelle JE, Grossman M (2017) Neural coding of fine-grained object knowledge in perirhinal cortex. bioRxiv. 10.1101/194829

- Proverbio AM, Burco F, del Zotto M, Zani A (2004) Blue piglets? Electrophysiological evidence for the primacy of shape over color in object recognition. Brain Res Cogn Brain Res 18:288–300. 10.1016/j.cogbrainres.2003.10.020

- Rosenthal I, Ratnasingam S, Haile T, Eastman S, Fuller-Deets J, Conway BR (2018) Color statistics of objects, and color tuning of object cortex in macaque monkey. J Vis 18:1. 10.1167/18.11.1

- Seymour K, Clifford CW, Logothetis NK, Bartels A (2010) Coding and binding of color and form in visual cortex. Cereb Cortex 20:1946–1954. 10.1093/cercor/bhp265

- Seymour K, Williams MA, Rich AN (2016) The representation of color across the human visual cortex: distinguishing chromatic signals contributing to object form versus surface color. Cereb Cortex 26:1997–2005. 10.1093/cercor/bhv021

- Simmons WK, Ramjee V, Beauchamp MS, McRae K, Martin A, Barsalou LW (2007) A common neural substrate for perceiving and knowing about color. Neuropsychologia 45:2802–2810. 10.1016/j.neuropsychologia.2007.05.002

- Smith SM, Nichols TE (2009) Threshold-free cluster enhancement: addressing problems of smoothing, threshold dependence and localisation in cluster inference. Neuroimage 44:83–98. 10.1016/j.neuroimage.2008.03.061

- Tanaka JW, Presnell LM (1999) Color diagnosticity in object recognition. Percept Psychophys 61:1140–1153. 10.3758/bf03207619

- Tanaka JW, Weiskopf D, Williams P (2001) The role of color in high-level vision. Trends Cogn Sci 5:211–215. 10.1016/S1364-6613(00)01626-0

- Teichmann L, Grootswagers T, Carlson T, Rich AN (2019) Seeing versus knowing: the temporal dynamics of real and implied colour processing in the human brain. Neuroimage 200:373–381. 10.1016/j.neuroimage.2019.06.062

- Therriault DJ, Yaxley RH, Zwaan RA (2009) The role of color diagnosticity in object recognition and representation. Cogn Process 10:335–342. 10.1007/s10339-009-0260-4

- Vandenbroucke AR, Fahrenfort JJ, Meuwese JD, Scholte HS, Lamme VA (2016) Prior knowledge about objects determines neural color representation in human visual cortex. Cereb Cortex 26:1401–1408. 10.1093/cercor/bhu224

- Wagberg J (2020) optprop - a color properties toolbox. MATLAB Central File Exchange. Available at https://www.mathworks.com/matlabcentral/fileexchange/13788-optprop-a-color-properties-toolbox.

- Willenbockel V, Sadr J, Fiset D, Horne GO, Gosselin F, Tanaka JW (2010) Controlling low-level image properties: the SHINE toolbox. Behav Res Methods 42:671–684. 10.3758/BRM.42.3.671

- Witzel C, Valkova H, Hansen T, Gegenfurtner KR (2011) Object knowledge modulates colour appearance. Iperception 2:13–49. 10.1068/i0396

- Zeki S, Marini L (1998) Three cortical stages of colour processing in the human brain. Brain 121:1669–1685. 10.1093/brain/121.9.1669