Abstract

Objective

To describe the experience of implementing a deep learning-based computer-aided detection (CAD) system for the interpretation of chest X-ray radiographs (CXR) of suspected coronavirus disease (COVID-19) patients and investigate the diagnostic performance of CXR interpretation with CAD assistance.

Materials and Methods

In this single-center retrospective study, initial CXR of patients with suspected or confirmed COVID-19 were investigated. A commercialized deep learning-based CAD system that can identify various abnormalities on CXR was implemented for the interpretation of CXR in daily practice. The diagnostic performance of radiologists with CAD assistance was evaluated based on two different reference standards: 1) real-time reverse transcriptase-polymerase chain reaction (rRT-PCR) results for COVID-19 and 2) pulmonary abnormality suggesting pneumonia on chest CT. The turnaround times (TATs) of radiology reports for CXR and rRT-PCR results were also evaluated.

Results

Among 332 patients (male:female, 173:159; mean age, 57 years) with available rRT-PCR results, 16 patients (4.8%) were diagnosed with COVID-19. Using CXR, radiologists with CAD assistance identified rRT-PCR positive COVID-19 patients with sensitivity and specificity of 68.8% and 66.7%, respectively. Among 119 patients (male:female, 75:44; mean age, 69 years) with available chest CTs, radiologists assisted by CAD reported pneumonia on CXR with a sensitivity of 81.5% and a specificity of 72.3%. The TATs of CXR reports were significantly shorter than those of rRT-PCR results (median 51 vs. 507 minutes; p < 0.001).

Conclusion

Radiologists with CAD assistance could identify patients with rRT-PCR-positive COVID-19 or pneumonia on CXR with reasonably acceptable performance. In patients suspected of having COVID-19, CXR reports had much faster TATs than rRT-PCR results.

Keywords: Radiography, thoracic; COVID-19; COVID-19 diagnostic testing; Pneumonia; Deep learning

INTRODUCTION

The initial outbreak of coronavirus disease (COVID-19), caused by severe acute respiratory syndrome coronavirus 2 (SARS-CoV-2) infection (1), originated in Wuhan, China, in December 2019. The disease subsequently spread into a pandemic, with more than 5.4 million confirmed cases and more than 340000 deaths worldwide as of May 26, 2020 (2). Early identification of infected patients and adequate isolation to interrupt human-to-human transmission are of utmost importance in controlling the COVID-19 pandemic (2).

The primary diagnostic method for COVID-19 is detection of SARS-CoV-2 in respiratory specimens via real-time reverse transcriptase-polymerase chain reaction (rRT-PCR) (3). Several recent studies have shown that CT may exhibit findings of pneumonia in patients with initially negative rRT-PCR results (4,5,6) and can be considered a screening tool for COVID-19 in epidemic areas. However, screening or early diagnosis using chest CT in patients suspected of having COVID-19 may not be practical, owing to the risk of viral transmission during the examination and patient transportation and the difficulty of disinfecting the environment. For these reasons, chest CT is currently not recommended for screening or initial diagnosis of COVID-19 (7,8).

Chest X-ray radiograph (CXR) is the primary imaging technique for diagnosing pneumonia because of its easy accessibility, low cost, low radiation exposure, and reasonable diagnostic capability (9,10). Therefore, CXR using a portable radiography unit may be considered the primary radiologic examination for COVID-19, because patient transportation can be minimized and disinfection is relatively easy (7). However, because of the intrinsic limitation of a two-dimensional projection image, in which various anatomic and pathologic structures overlap, CXR has lower sensitivity than chest CT (11,12) and is prone to reading errors and inter- and intra-reader variability (13). Thus, a computer-aided detection (CAD) system that can accurately identify pulmonary opacities suggestive of pneumonia may help with the prompt and accurate diagnosis of pneumonia, such as that observed in COVID-19 patients (14). To this end, we implemented a deep learning-based CAD system for the interpretation of CXR of patients suspected of having COVID-19.

We aimed to describe our experience of implementing a deep learning-based CAD system for the interpretation of CXR of suspected COVID-19 patients, as well as to investigate the diagnostic performance of CXR for COVID-19 using the CAD system.

MATERIALS AND METHODS

This single-center, retrospective study was approved by the Institutional Review Board of Seoul National University Hospital, and the requirement for informed consent was waived.

Patients and CXR Acquisition

We retrospectively included consecutive patients who met the following criteria: 1) visited a tertiary academic institution for the diagnosis of suspected COVID-19 or management of confirmed COVID-19 between January 31, 2020 and March 10, 2020; and 2) underwent CXR with a dedicated protocol for suspected COVID-19, including CAD analysis. The initial CXR of each patient obtained after the visit was included in the present study. All CXR were obtained with a dedicated mobile X-ray system (DRX-revolution, Carestream Health). Erect posteroanterior or supine anteroposterior radiographs were obtained, depending on the patient's condition.

Implementation of the CAD System

A dedicated CXR examination protocol for patients suspected of having COVID-19 (“CXR AI CAD for COVID”), including CAD analysis, was established on January 28, 2020. All subsequent CXR of patients who were suspected of having or already diagnosed with COVID-19 were obtained using this protocol. The CAD system integrated into the protocol was a commercialized deep learning algorithm (Lunit INSIGHT CXR 2, Lunit Inc.) approved by the Ministry of Food and Drug Safety of Korea (15), which detects several thoracic abnormalities, including pulmonary nodules, consolidation, and pneumothorax. The CAD system was originally trained with 54221 normal CXR and 35613 abnormal CXR covering four major thoracic diseases: pulmonary malignancy, pneumonia, pulmonary tuberculosis, and pneumothorax (15). For each CXR, the CAD system provided a probability score between 0% and 100% for the presence of any of the target abnormalities and, when the score was 15% or greater, localized the abnormalities with contour lines overlaid on the CXR image (Figs. 1, 2, 3). The system did not indicate whether each detected abnormality was a nodule, mass, consolidation, or pneumothorax.

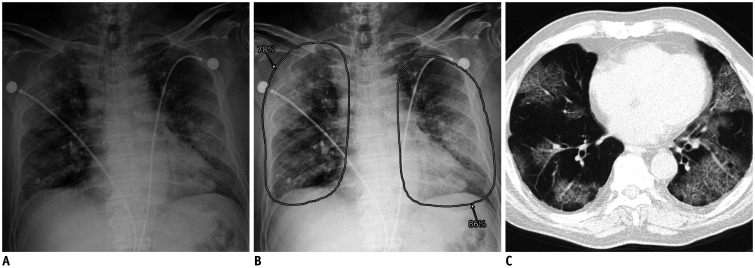

Fig. 1. Representative case of COVID-19 with true positive CXR.

A. CXR of patient with COVID-19 showing diffuse bilateral pulmonary opacities. B. Computer-aided detection system classified CXR as abnormal with probability score of 86%, with localization of increased opacities in both lungs. C. Formal radiology report suggested that opacities were likely indicative of pneumonia. Chest computed tomography image obtained on same day shows multifocal patchy ground-glass opacities in bilateral peripheral lungs. COVID-19 = coronavirus disease, CXR = chest X-ray radiograph

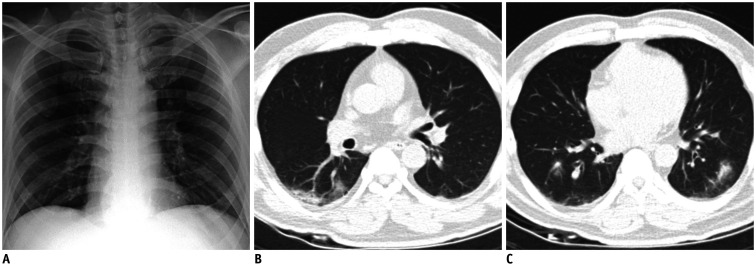

Fig. 2. Representative case of COVID-19 with false negative CXR.

A. CXR of patient with COVID-19 shows no definite pulmonary opacity. B, C. Computer-aided detection system classified CXR as normal, with probability score below 15% (threshold for visualization). Formal radiology report indicated no abnormal finding on CXR. Chest computed tomography images obtained on same day show multifocal patchy consolidations and ground-glass opacities in bilateral lungs.

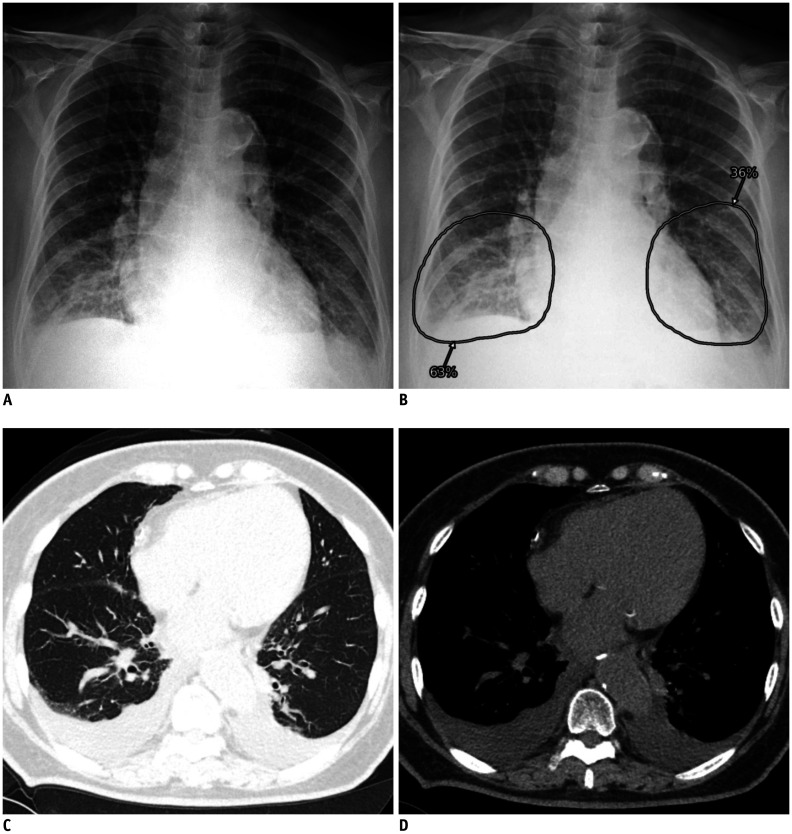

Fig. 3. Representative case of false positive identification on CXR.

A. CXR of patient with negative real-time reverse transcriptase-polymerase chain reaction result shows increased opacities in both lower lung fields. B. Computer-aided detection system classified CXR as abnormal with probability score of 63%, and localized opacities in both lower lung fields. C, D. Formal radiology report indicated parenchymal infiltration in right lower lung and possible pneumonia. Chest computed tomography images obtained on same day show bilateral pleural effusion without relevant parenchymal abnormality.

After the acquisition of the CXR, analysis by the CAD system was automatically processed, and both radiologists and referring physicians could view the CAD result side-by-side with the original CXR image on the institution's picture archiving and communication system (PACS; Infinitt Gx PACS, INFINITT Healthcare). After evaluation of both CXR images and CAD results, radiologists documented a formal report using the PACS (formal radiology report, hereinafter). All CXR were interpreted by attending radiologists or by radiology residents supervised by attending radiologists. A total of 14 attending radiologists and 12 residents (1–29 years of experience in CXR interpretation) participated in CXR interpretation.

Diagnostic Performance Evaluation

The formal radiology reports of all CXR were retrospectively reviewed by one thoracic radiologist (9 years of experience in CXR and chest CT interpretation) and classified as indicating either the presence or the absence of any abnormality suggesting pneumonia. For the CAD results, a probability score of 15% or greater (the threshold for visualization of localization information) was defined as a positive result, and a score below 15% as a negative result.
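The binarization rule above can be sketched in Python as follows. This is a minimal illustration, assuming the CAD output is available as a numeric percentage; `cad_positive` and `CAD_THRESHOLD` are hypothetical names and not part of the vendor's software.

```python
# Hypothetical names; the vendor software exposes its own output format.
CAD_THRESHOLD = 15.0  # percent; also the threshold for displaying localization contours


def cad_positive(probability_score: float, threshold: float = CAD_THRESHOLD) -> bool:
    """Classify a CAD probability score (0-100%) as positive or negative.

    Scores at or above the threshold count as positive, mirroring the rule
    that contour lines are shown when the score is 15% or greater.
    """
    if not 0.0 <= probability_score <= 100.0:
        raise ValueError("probability score must lie between 0 and 100")
    return probability_score >= threshold


# 86% and 63% are the scores reported in Figs. 1 and 3; 14.9% is an invented example.
print([cad_positive(s) for s in (86.0, 63.0, 14.9)])  # [True, True, False]
```

Using "at or above" rather than "strictly above" keeps the binary label consistent with what readers actually saw on PACS, since contours appear exactly when the score reaches 15%.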

The diagnostic performance of formal radiology reports and CAD results was evaluated using two different reference standards: 1) rRT-PCR results for SARS-CoV-2 infection, and 2) pulmonary abnormality suggesting pneumonia on chest CT. For evaluation based on rRT-PCR results, only patients with an rRT-PCR result available within 24 hours of the CXR were included. A positive rRT-PCR result from a nasopharyngeal or oropharyngeal swab was considered indicative of COVID-19.

For evaluation based on chest CT, only patients who underwent chest CT within 24 hours of the CXR were included. All chest CTs were obtained at the discretion of the referring physician, without predefined criteria for CT acquisition. Two thoracic radiologists (9 and 10 years of experience in CXR and chest CT interpretation) independently reviewed all chest CTs to determine the presence of abnormalities suggesting pneumonia, and discordant interpretations were resolved by the final decision of a senior thoracic radiologist (21 years of experience in CXR and chest CT interpretation). In patients with pulmonary abnormalities suggesting pneumonia on chest CT, two thoracic radiologists determined by consensus whether the abnormality was visible on CXR. The formal radiology reports and CAD results for CXR were then compared with the presence of abnormalities on chest CT. Positive radiology reports or CAD results with incorrect localization of the abnormality were regarded as false negatives.
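The scoring rule against the chest CT reference, including the treatment of positive readings with incorrect localization, can be written as a small decision function. This is a sketch that assumes a reviewer has recorded, for each CXR, whether the reading was positive and whether its localization matched the CT abnormality; `classify_against_ct` and `Outcome` are hypothetical names. The handling of positive readings in CT-negative patients (scored as false positives) is an assumption, since there is no CT abnormality to localize in that case.

```python
from enum import Enum


class Outcome(Enum):
    TP = "true positive"
    FP = "false positive"
    FN = "false negative"
    TN = "true negative"


def classify_against_ct(reading_positive: bool, ct_positive: bool,
                        localization_correct: bool = True) -> Outcome:
    """Score one CXR reading (formal report or CAD result) against chest CT.

    In CT-positive patients, a positive reading counts as a true positive only
    if its localization matches the CT abnormality; a mislocalized positive is
    scored as a false negative. In CT-negative patients there is nothing to
    localize, so any positive reading is treated as a false positive
    (an assumption for this sketch).
    """
    if ct_positive:
        if reading_positive and localization_correct:
            return Outcome.TP
        return Outcome.FN
    return Outcome.FP if reading_positive else Outcome.TN


print(classify_against_ct(True, True, localization_correct=False))  # Outcome.FN
```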

Among patients with chest CTs, in cases of false positive identification by formal radiology reports or CAD, a single thoracic radiologist reviewed CXR and chest CTs to determine the cause of the false positive detection.

Subgroup Evaluation of Diagnostic Performance

We compared the rRT-PCR- and chest CT-based diagnostic performance of formal radiology reports and CAD results between patients with and without symptoms suggesting acute respiratory disease, as well as between patients with a symptom duration of ≤ 3 days or > 3 days.

Turnaround Time Evaluation

The turnaround times (TATs) of radiologists' CXR reports and of rRT-PCR results, defined as the interval from CXR or specimen acquisition to formal reporting, were recorded.
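As an illustration of how TATs and their summary statistics might be derived from timestamps, the following sketch computes the interval in minutes and returns the median together with the IQR width, the form in which IQRs are reported in this article. The function names and sample values are hypothetical. Quartiles use Python's `inclusive` method, which corresponds to R's default quantile definition (type 7); whether the study used that definition is an assumption.

```python
from datetime import datetime
from statistics import median, quantiles
from typing import Sequence


def turnaround_minutes(acquired: datetime, reported: datetime) -> float:
    """Minutes from CXR (or specimen) acquisition to formal reporting."""
    seconds = (reported - acquired).total_seconds()
    if seconds < 0:
        raise ValueError("report time precedes acquisition time")
    return seconds / 60.0


def summarize_tats(tats: Sequence[float]) -> tuple[float, float]:
    """Return (median, IQR width); the article reports IQRs as a single width."""
    q1, _, q3 = quantiles(tats, n=4, method="inclusive")  # R type 7 quartiles
    return median(tats), q3 - q1


# Invented timestamps and TATs, for illustration only
print(turnaround_minutes(datetime(2020, 2, 1, 9, 0), datetime(2020, 2, 1, 9, 51)))  # 51.0
print(summarize_tats([30, 45, 51, 90, 200]))  # (51, 45.0)
```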

Statistical Analyses

The sensitivities, specificities, positive predictive values (PPVs), and negative predictive values (NPVs) of the formal radiology reports and the standalone CAD results were obtained. The sensitivities and specificities were compared using McNemar's test, while the PPVs and NPVs were compared using the method suggested by Leisenring et al. (16). Pearson's chi-square test or Fisher's exact test was used, as appropriate, to compare performance between subgroups. Median tests were used to compare TATs.
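McNemar's test uses only discordant pairs, that is, CXR on which the formal report and the CAD result disagree about correctness; for sensitivity the pairs come from reference-positive patients, and for specificity from reference-negative patients. A minimal sketch of the chi-square form with an optional continuity correction follows. The article does not state which variant was used, and the discordant counts are not reported, so the function name and inputs are illustrative assumptions. The Leisenring et al. method for predictive values is more involved and is not reproduced here.

```python
import math


def mcnemar_test(b: int, c: int, continuity: bool = True) -> tuple[float, float]:
    """McNemar's chi-square test for paired binary outcomes on the same CXR.

    b: cases the formal report classified correctly but CAD did not
    c: cases CAD classified correctly but the formal report did not
    Concordant pairs do not enter the statistic.
    Returns (chi-square statistic with 1 df, two-sided p value).
    """
    if b + c == 0:
        return 0.0, 1.0
    diff = abs(b - c) - (1 if continuity else 0)  # Edwards' continuity correction
    stat = max(diff, 0) ** 2 / (b + c)
    # Upper tail of chi-square with 1 df: P(X > x) = erfc(sqrt(x / 2))
    return stat, math.erfc(math.sqrt(stat / 2))


# Invented discordant counts; the article does not report them
print(mcnemar_test(10, 2))
```

When discordant counts are very small, an exact binomial version of McNemar's test is often preferred over the chi-square approximation.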

All statistical analyses were performed using R (version 3.5.5, R Project for Statistical Computing), and p values < 0.05 were considered statistically significant.

RESULTS

A total of 395 patients (205 male and 190 female; mean age ± standard deviation, 53 ± 24 years) were included in this study. Among them, 283 (71.6%) patients had symptoms suggesting acute respiratory disease, and the median time interval between the symptom onset and CXR was 1 day (interquartile range [IQR], 4 days) (Table 1).

Table 1. Demographic, Clinical, and Radiological Information of Patients.

| Variables | All Patients (n = 395) | Patients with Chest CT (n = 119) | Patients without Chest CT (n = 276) | P* |
|---|---|---|---|---|
| Proportion of male patients, %† | 51.9 (205/395) | 63.0 (75/119) | 47.1 (130/276) | 0.004 |
| Age, years‡ | 58 (39) | 69 (20) | 47 (38) | < 0.001 |
| Proportion of symptomatic patients, %† | 71.6 (283/395) | 83.2 (99/119) | 66.7 (184/276) | < 0.001 |
| Time interval between symptom onset and CXR, days‡§ | 1 (4) | 1 (4) | 1 (4) | 0.658 |
| Body temperature, ℃‡∥ | 37.4 (1.4) | 37.6 (1.8) | 37.3 (1.3) | 0.141 |
| Proportion of rRT-PCR-positive COVID-19 patients, %† | 4.1 (16/395) | 2.5 (3/119) | 4.7 (13/276) | 0.411 |
| Proportion of positive CAD results, %† | 36.7 (145/395) | 68.1 (81/119) | 23.2 (64/276) | < 0.001 |
| Proportion of positive formal radiology reports, %† | 31.9 (126/395) | 53.8 (64/119) | 22.5 (62/276) | < 0.001 |

*Comparison between patients with and without chest CT, †Numbers in parentheses indicate numerators/denominators, ‡Data indicate median (interquartile range), §112 patients (20 patients with chest CT and 92 patients without chest CT) without symptoms suggesting acute respiratory illness were excluded, ∥19 patients (0 patients with chest CT and 19 patients without chest CT) without documented body temperature were excluded. CAD = computer-aided detection, COVID-19 = coronavirus disease, CT = computed tomography, CXR = chest X-ray radiograph, rRT-PCR = real-time reverse transcriptase-polymerase chain reaction

In formal radiology reports, abnormalities suggesting pneumonia were reported in 31.9% (126/395) of CXR, while the CAD system identified abnormalities in 36.7% (145/395) of CXR. The CAD system identified the same abnormalities reported on the formal reports in 72.2% (91/126) of CXR with positive reports. Among the CXR in which the CAD system identified abnormalities, 33.1% (48/145) were discarded as false positives by the interpreting radiologists.

Diagnostic Performance for rRT-PCR Positive COVID-19

Among 332 patients (84.1%; 173 male and 159 female; mean age ± standard deviation, 57 ± 23 years) with rRT-PCR results available within 24 hours of CXR, SARS-CoV-2 infection was identified in 16 patients (4.8%; 9 male and 7 female; mean age ± standard deviation, 60 ± 20 years). Among them, 12 patients (75%) were symptomatic, and the median time interval between symptom onset and CXR was 5 days (IQR, 9 days).

The formal radiology reports exhibited sensitivity, specificity, PPV, and NPV of 68.8%, 66.7%, 9.5%, and 97.7%, respectively. The specificity (p = 0.005) and PPV (p = 0.033) of the formal radiology reports were significantly higher than those of the CAD output (sensitivity, 43.8%; specificity, 59.8%; PPV, 5.2%; NPV, 95.5%), but there was no significant difference in the sensitivity (p = 0.102) and NPV (p = 0.061) (Table 2).
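The four measures follow directly from the 2×2 counts in Table 2. The sketch below recomputes them for the formal radiology reports against rRT-PCR (11 true positives and 5 false negatives among 16 positives; 211 true negatives among 316 negatives, so 105 false positives). The Wald (normal-approximation) interval is an assumption on our part; it appears to reproduce the intervals listed in Table 2, whereas Tables 5 and 6 seem to use exact intervals. Names such as `Confusion` and `diagnostic_metrics` are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Confusion:
    tp: int
    fp: int
    fn: int
    tn: int


def proportion_with_wald_ci(k: int, n: int, z: float = 1.96) -> tuple[float, float, float]:
    """Proportion k/n with a normal-approximation (Wald) 95% CI clipped to [0, 1]."""
    p = k / n
    half_width = z * math.sqrt(p * (1 - p) / n)
    return p, max(0.0, p - half_width), min(1.0, p + half_width)


def diagnostic_metrics(c: Confusion) -> dict[str, tuple[float, float, float]]:
    """Sensitivity, specificity, PPV, and NPV as (estimate, lower, upper)."""
    return {
        "sensitivity": proportion_with_wald_ci(c.tp, c.tp + c.fn),
        "specificity": proportion_with_wald_ci(c.tn, c.tn + c.fp),
        "ppv": proportion_with_wald_ci(c.tp, c.tp + c.fp),
        "npv": proportion_with_wald_ci(c.tn, c.tn + c.fn),
    }


# Formal radiology reports vs. rRT-PCR, counts taken from Table 2
reports = Confusion(tp=11, fp=316 - 211, fn=16 - 11, tn=211)
metrics = diagnostic_metrics(reports)
sens, sens_lo, sens_hi = metrics["sensitivity"]
print(f"{100 * sens:.1f}% ({100 * sens_lo:.1f}-{100 * sens_hi:.1f})")  # 68.8% (46.0-91.5)
```

Because PPV and NPV depend on prevalence, the low PPV here (11/116) largely reflects the 4.8% positive rate rather than poor reading quality.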

Table 2. Diagnostic Performance of Formal Radiology Reports and CAD Results.

| Performance Measure | Formal Radiology Reports | CAD Results | P |
|---|---|---|---|
| SARS-CoV-2 infection on rRT-PCR (n = 332) (%) | | | |
| Sensitivity | 68.8 (11/16; 46.0–91.5) | 43.8 (7/16; 19.4–68.1) | 0.102 |
| Specificity | 66.7 (211/316; 61.6–72.0) | 59.8 (189/316; 54.4–65.2) | 0.005 |
| PPV | 9.5 (11/116; 4.2–14.8) | 5.2 (7/134; 1.5–8.9) | 0.033 |
| NPV | 97.7 (211/216; 95.7–99.7) | 95.5 (189/198; 92.5–98.4) | 0.061 |
| Pulmonary abnormality suggesting pneumonia on chest CT (n = 119) (%) | | | |
| Sensitivity | 81.5 (44/54; 71.1–91.8) | 81.5 (44/54; 71.1–91.8) | > 0.999 |
| Specificity | 72.3 (47/65; 61.4–83.2) | 52.3 (34/65; 40.2–64.5) | 0.002 |
| PPV | 71.0 (44/62; 59.7–82.3) | 58.7 (44/75; 47.5–69.8) | 0.002 |
| NPV | 82.5 (47/57; 72.6–92.3) | 77.3 (34/44; 64.9–89.7) | 0.242 |

Numbers in parentheses indicate numerators/denominators; 95% confidence intervals. NPV = negative predictive value, PPV = positive predictive value, SARS-CoV-2 = severe acute respiratory syndrome coronavirus 2

Diagnostic Performance of Pneumonia Detection on CXR Based on Chest CT Findings

Chest CTs obtained within 24 hours of CXR were available in 119 patients (30.1%; 75 male and 44 female; mean age ± standard deviation, 69 ± 14 years). Among them, 45.3% (54/119) exhibited pulmonary abnormalities suggesting pneumonia on CT (Table 3). Formal radiology reports exhibited sensitivity, specificity, PPV, and NPV of 81.5%, 72.3%, 71.0%, and 82.5%, respectively. The specificity (p = 0.002) and PPV (p = 0.002) of formal radiology reports were significantly higher than those of the CAD output (sensitivity, 81.5%; specificity, 52.3%; PPV, 58.7%; NPV, 77.3%) (Table 2).

Table 3. Chest CT Findings According to rRT-PCR Results.

| Findings | Positive rRT-PCR (n = 3) | Negative rRT-PCR (n = 114) |
|---|---|---|
| Any abnormality suggesting pneumonia (%) | 2 (66.7) | 51 (44.7) |
| Consolidation (%)* | 1 (50.0) | 31 (60.8) |
| Ground-glass opacity (%)* | 1 (50.0) | 43 (84.3) |
| Micronodule (%)* | 0 (0) | 20 (39.2) |
| Interstitial thickening (%)* | 0 (0) | 7 (13.7) |
| Pleural effusion (%)* | 0 (0) | 25 (49.0) |
| Bilateral involvement (%)* | 1 (50.0) | 33 (64.7) |
| Multilobar involvement (%)* | 1 (50.0) | 43 (84.3) |
| Visible abnormality on CXR (%)* | 2 (100) | 47 (92.2) |

Two patients with chest CTs but without rRT-PCR results were excluded. *Numbers in parentheses indicate proportion among patients with any abnormality suggesting pneumonia.

Among the 119 patients with available chest CTs, three (2.5%) were rRT-PCR-positive COVID-19 patients, two of whom exhibited pulmonary abnormalities on chest CT (sensitivity, 66.7%). Formal radiology reports for CXR identified abnormalities in those two patients (sensitivity, 66.7%), while the standalone CAD results identified abnormality in only one patient (sensitivity, 33.3%).

Among the 54 patients with pulmonary abnormalities suggesting pneumonia on CT, the abnormality was visible on CXR in 92.6% (50/54). Both the formal radiology reports and CAD results exhibited a sensitivity of 86.0% (43/50; 95% confidence interval, 73.3–94.2%) for CXR with visible abnormalities, which was significantly higher than that for CXR with invisible abnormalities (25.0% [1/4; 95% confidence interval, 0.6–80.6%]; p = 0.017).
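The interval for 1/4 above is an exact (Clopper-Pearson) binomial interval, the same type that gives 0.6–80.6% for 1/4 in Table 5. The following self-contained sketch computes it by bisection on the binomial cumulative distribution. It is an illustration rather than the software used in the study, and the function names are hypothetical.

```python
from math import comb


def binom_cdf(k: int, n: int, p: float) -> float:
    """P(X <= k) for X ~ Binomial(n, p); returns 0 for k < 0."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k + 1))


def _solve_increasing(g, target: float, iters: int = 60) -> float:
    """Bisection on [0, 1] for g(p) = target, where g is increasing in p."""
    lo, hi = 0.0, 1.0
    for _ in range(iters):
        mid = (lo + hi) / 2
        if g(mid) < target:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


def clopper_pearson(x: int, n: int, alpha: float = 0.05) -> tuple[float, float]:
    """Exact (Clopper-Pearson) confidence interval for x successes out of n."""
    if n <= 0 or not 0 <= x <= n:
        raise ValueError("need 0 <= x <= n and n > 0")
    # Lower bound: P(X >= x | p) = alpha / 2; this tail probability increases with p
    lower = 0.0 if x == 0 else _solve_increasing(lambda p: 1 - binom_cdf(x - 1, n, p), alpha / 2)
    # Upper bound: P(X <= x | p) = alpha / 2; negate the CDF so it increases with p
    upper = 1.0 if x == n else _solve_increasing(lambda p: -binom_cdf(x, n, p), -alpha / 2)
    return lower, upper


print(clopper_pearson(1, 4))  # approximately (0.0063, 0.806)
```

With only four patients in the invisible-abnormality group, the exact interval spans almost the whole range, which is why the point estimate of 25.0% should be read cautiously.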

False positives on CXR occurred in 18 formal radiology reports and 32 CAD results (Table 4), with pleural effusion as the most common cause in both. The second most common cause of false positives was interstitial lung diseases for the formal radiology reports and pulmonary nodules for the CAD results.

Table 4. Causes of False Positive Identification by Formal Radiology Reports and CAD.

| Cause of False Positive Identification | Formal Radiology Report | CAD Results |
|---|---|---|
| Focal pulmonary abnormality (%) | 3 (16.7) | 13 (40.6) |
| Nodule | 0 (0) | 7 (21.9) |
| Fibrotic scar | 2 (11.1) | 3 (9.4) |
| Bulla | 0 (0) | 2 (6.3) |
| Atelectasis | 1 (5.6) | 1 (3.1) |
| Diffuse pulmonary abnormality (%) | 5 (27.8) | 5 (15.6) |
| Interstitial lung disease | 3 (16.7) | 4 (12.5) |
| Other diffuse lung disease | 2 (11.1)* | 1 (3.1)† |
| Pleural abnormality (%) | 8 (44.4) | 13 (40.6) |
| Pleural effusion | 8 (44.4) | 12 (37.5) |
| Pneumothorax | 0 (0) | 1 (3.1) |
| Other causes (%) | 1 (5.6) | 3 (9.4) |
| Cardiac opacity | 0 (0) | 2 (6.3) |
| Osseous structures | 0 (0) | 1 (3.1) |
| Breast shadow | 1 (5.6) | 0 (0) |
| Unknown cause (%) | 1 (5.6) | 0 (0) |
| Total (%) | 18 (100) | 32 (100) |

*Pulmonary lymphangioleiomyomatosis (n = 1) and severe emphysema (n = 1), †Pulmonary lymphangioleiomyomatosis.

Variation in Performances with the Presence and Duration of Symptoms

For identification of rRT-PCR-positive COVID-19 patients, neither the formal radiology reports nor the CAD results showed a significant difference in performance between patients with and without symptoms suggesting acute respiratory disease (Table 5). Regarding symptom duration, the formal radiology reports exhibited higher specificity in patients with a symptom duration of ≤ 3 days (67.0% vs. 49.0%; p = 0.020). Both the formal radiology reports (3.3% vs. 19.4%; p = 0.016) and the CAD results (1.4% vs. 17.2%; p = 0.007) exhibited higher PPV in patients with a symptom duration of > 3 days.
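Subgroup comparisons such as the PPV difference above reduce to a 2×2 table of true versus false positives in each subgroup (for the formal reports, 2 true and 59 false positives with symptom duration ≤ 3 days versus 6 true and 25 false positives with duration > 3 days, from Table 5). A minimal two-sided Fisher's exact test is sketched below; it sums the hypergeometric probabilities of all tables no more likely than the observed one. The function name is hypothetical, and the article does not specify for each comparison whether Fisher's exact test or the chi-square test was applied.

```python
from math import comb


def fisher_exact_two_sided(a: int, b: int, c: int, d: int) -> float:
    """Two-sided Fisher's exact test for the 2x2 table [[a, b], [c, d]].

    With all margins fixed, the top-left cell follows a hypergeometric
    distribution; the p value sums the probabilities of every table that is
    no more likely than the observed one.
    """
    row1, col1, n = a + b, a + c, a + b + c + d
    total = comb(n, col1)

    def prob(x: int) -> float:
        return comb(row1, x) * comb(n - row1, col1 - x) / total

    p_obs = prob(a)
    support = range(max(0, col1 - (n - row1)), min(row1, col1) + 1)
    # Small relative tolerance so floating-point ties with p_obs are counted
    p_value = sum(p for p in map(prob, support) if p <= p_obs * (1 + 1e-7))
    return min(1.0, p_value)


# Formal reports, PPV by symptom duration (Table 5): rows = <= 3 days, > 3 days;
# columns = true positives, false positives
print(fisher_exact_two_sided(2, 59, 6, 25))
```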

Table 5. Diagnostic Performance Based on rRT-PCR Results According to Presence and Duration of Symptoms.

| Classifiers | Sensitivity | Specificity | PPV | NPV |
|---|---|---|---|---|
| Symptomatic patients (n = 247) | | | | |
| Formal radiology report (%) | 66.7 (8/12; 34.9–90.1) | 64.3 (151/235; 57.8–70.4) | 8.7 (8/92; 3.8–16.4) | 97.4 (151/155; 93.5–99.3) |
| CAD (%) | 50.0 (6/12; 21.1–78.9) | 57.9 (136/235; 51.3–64.3) | 5.7 (6/105; 2.1–12.0) | 95.8 (136/142; 91.0–98.4) |
| Asymptomatic patients (n = 85) | | | | |
| Formal radiology report (%) | 75.0 (3/4; 19.4–99.4) | 74.1 (60/81; 63.1–83.2) | 12.5 (3/24; 2.7–32.4) | 98.4 (60/61; 91.2–100) |
| p values* | > 0.999 | 0.106 | 0.695 | > 0.999 |
| CAD (%) | 25.0 (1/4; 0.6–80.6) | 64.2 (52/81; 52.8–74.6) | 3.3 (1/30; 0–17.2) | 94.5 (52/55; 84.9–98.9) |
| p values* | 0.585 | 0.317 | > 0.999 | 0.712 |
| Patients with symptom duration ≤ 3 days (n = 184) | | | | |
| Formal radiology report (%) | 50.0 (2/4; 6.7–93.2) | 67.0 (120/179; 59.6–73.9) | 3.3 (2/61; 0.4–11.3) | 98.4 (120/122; 94.2–99.8) |
| CAD (%) | 25.0 (1/4; 0.6–80.6) | 59.8 (107/179; 52.2–67.0) | 1.4 (1/73; 0–7.4) | 97.3 (107/110; 92.2–99.4) |
| Patients with symptom duration > 3 days (n = 57) | | | | |
| Formal radiology report (%) | 75.0 (6/8; 34.9–96.8) | 49.0 (24/49; 34.4–63.7) | 19.4 (6/31; 7.5–37.5) | 92.3 (24/26; 74.9–99.1) |
| p values† | 0.548 | 0.02 | 0.016 | 0.142 |
| CAD (%) | 62.5 (5/8; 24.5–91.5) | 51.0 (25/49; 36.3–65.6) | 17.2 (5/29; 5.8–35.8) | 89.3 (25/28; 71.8–97.7) |
| p values† | 0.546 | 0.271 | 0.007 | 0.098 |

Numbers in parentheses indicate numerators/denominators; 95% confidence intervals. *Comparison with performances in symptomatic patients, †Comparison with performances in patients with duration of symptom ≤ 3 days.

For identification of pneumonia demonstrated on chest CTs, neither the performance of formal radiology reports nor CAD results showed a significant difference between patients with and without symptoms suggesting acute respiratory disease, as well as between patients with symptom duration ≤ 3 days and > 3 days (Table 6).

Table 6. Diagnostic Performance Based on Chest CT Findings According to Presence and Duration of Symptoms.

| Classifiers | Sensitivity (%) | Specificity (%) | PPV (%) | NPV (%) |
|---|---|---|---|---|
| Symptomatic patients (n = 99) | | | | |
| Formal radiology report | 80.9 (38/47; 66.7–90.9) | 76.9 (40/52; 63.2–87.5) | 76.0 (38/50; 61.8–86.9) | 81.6 (40/49; 68.0–91.2) |
| CAD | 78.7 (37/47; 64.3–89.3) | 53.8 (28/52; 39.5–67.8) | 60.7 (37/61; 47.3–72.9) | 73.7 (28/38; 56.9–86.6) |
| Asymptomatic patients (n = 20) | | | | |
| Formal radiology report | 85.7 (6/7; 42.1–99.6) | 53.8 (7/13; 25.1–80.8) | 50.0 (6/12; 21.1–78.9) | 87.5 (7/8; 47.3–99.7) |
| p values* | 0.757 | 0.096 | 0.075 | > 0.999 |
| CAD | 100 (7/7; 59.0–100) | 46.2 (6/13; 19.2–74.9) | 50.0 (7/14; 23.0–77.0) | 100 (6/6; 54.1–100) |
| p values* | 0.326 | 0.619 | 0.465 | 0.31 |
| Patients with symptom duration ≤ 3 days (n = 72) | | | | |
| Formal radiology report | 75.8 (25/33; 57.7–88.9) | 76.9 (30/39; 60.7–88.9) | 73.5 (25/34; 55.6–87.1) | 78.9 (30/38; 62.7–90.4) |
| CAD | 78.8 (26/33; 61.1–91.0) | 56.4 (22/39; 39.6–72.2) | 60.5 (26/43; 44.4–75.0) | 75.9 (22/29; 56.5–89.7) |
| Patients with symptom duration > 3 days (n = 24) | | | | |
| Formal radiology report | 92.9 (13/14; 66.1–99.8) | 70.0 (7/10; 34.8–93.3) | 81.3 (13/16; 54.4–96.0) | 87.5 (7/8; 47.3–99.7) |
| p values† | 0.245 | 0.69 | 0.728 | > 0.999 |
| CAD | 78.6 (11/14; 49.2–95.3) | 50.0 (5/10; 18.7–81.3) | 68.8 (11/16; 41.3–89.0) | 62.5 (5/8; 24.5–91.5) |
| p values† | > 0.999 | 0.737 | 0.763 | 0.655 |

Numbers in parentheses indicate numerators/denominators; 95% confidence intervals. *Comparison with performances in symptomatic patients, †Comparison with performances in patients with duration of symptom ≤ 3 days.

Evaluation of Turnaround Times

The median TAT for CXR reports was significantly shorter than that for rRT-PCR results (51 [IQR, 138] vs. 507 [IQR, 475] minutes; p < 0.001).
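Mood's median test compares groups by counting how many observations in each fall above the pooled median. The sketch below is a minimal standard-library version, assuming per-patient TATs in minutes and a Pearson chi-square without continuity correction; SciPy's `scipy.stats.median_test` provides the same test and, for two samples, applies Yates' correction by default. The function name and example values are hypothetical, and the study's exact implementation is not stated.

```python
import math
from statistics import median
from typing import Sequence


def mood_median_test(x: Sequence[float], y: Sequence[float]) -> tuple[float, float]:
    """Mood's median test for two independent samples.

    Values above the pooled median are counted as "above", all others
    (including ties) as "below"; a Pearson chi-square test without continuity
    correction is then applied to the resulting 2x2 table (1 df).
    Returns (chi-square statistic, p value).
    """
    grand = median(list(x) + list(y))
    table = [[sum(v > grand for v in g), sum(v <= grand for v in g)] for g in (x, y)]
    n = len(x) + len(y)
    row_totals = [sum(row) for row in table]
    col_totals = [table[0][j] + table[1][j] for j in range(2)]
    stat = 0.0
    for i in range(2):
        for j in range(2):
            expected = row_totals[i] * col_totals[j] / n
            if expected > 0:
                stat += (table[i][j] - expected) ** 2 / expected
    return stat, math.erfc(math.sqrt(stat / 2))


# Invented TATs in minutes for two hypothetical groups
print(mood_median_test([40, 51, 55, 70, 120], [300, 480, 507, 620, 900]))
```

Because it only uses positions relative to the pooled median, the test is robust to the long right tails typical of TAT data, at the cost of some power.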

The TATs for CXR reports did not differ significantly between patients with positive versus those with negative rRT-PCR results (median TAT, 186 [IQR, 953] vs. 61 [IQR, 141] minutes; p = 0.200), or between patients with versus those without pulmonary abnormalities suggesting pneumonia on chest CTs (median TAT, 60 [IQR, 122] vs. 51 [IQR, 106] minutes; p = 0.920). Furthermore, the TATs for rRT-PCR results did not significantly differ between patients with positive versus those with negative rRT-PCR results (median TAT, 923 [IQR, 735] vs. 502 [IQR, 475] minutes; p = 0.141), or between patients with versus those without pulmonary abnormalities suggesting pneumonia on chest CTs (median TAT, 514 [IQR, 372] vs. 493 [IQR, 454] minutes; p = 0.786).

DISCUSSION

Herein, we have described our experience of implementing a deep learning-based CAD system for the interpretation of CXR of suspected COVID-19 patients. The formal radiology reports with CAD assistance exhibited a sensitivity of 68.8% and specificity of 66.7% for identifying rRT-PCR-positive COVID-19 patients, and a sensitivity of 81.5% and specificity of 72.3% for identifying pulmonary abnormalities suggesting pneumonia on chest CT.

The positive rate of rRT-PCR in our study (4.8%) was higher than the cumulative positive rate in Korea (2.3% as of April 4, 2020) (17), but similar to the data published in the earlier stage of the outbreak (4.5% as of March 2, 2020) (18).

Previous studies have reported that CXR of COVID-19 patients may appear normal (19,20,21). Furthermore, the reported imaging findings of COVID-19, bilateral ground-glass opacities with or without consolidation, are non-specific for diagnosing COVID-19 (19,20,21,22,23). Not surprisingly, both formal radiology reports (sensitivity, 68.8%; specificity, 66.7%) and CAD results (sensitivity, 43.8%; specificity, 59.8%) showed limited diagnostic performance for patients with SARS-CoV-2 infection in the present study. The sensitivity of formal radiology reports in our study was similar to that of baseline CXR reported in a previous study by Wong et al. (21) (69%). Although the difference was not statistically significant, the standalone CAD results exhibited a substantially lower sensitivity than the formal radiology reports (43.8% vs. 68.8%), suggesting that CAD alone has limited potential for diagnosing COVID-19 on CXR.

Despite this limited diagnostic performance, radiological evaluation of lung lesions in COVID-19 patients may still have clinical implications. Previous studies reported the value of chest CT-based diagnosis of COVID-19 in patients with initially false-negative rRT-PCR results (4,5,6). In addition, Zhao et al. (24) reported that the extent of abnormalities on chest CT was greater in severe disease. Furthermore, previous investigations of the severe acute respiratory syndrome outbreak between 2002 and 2004 reported that the extent of pulmonary opacity on CXR was a prognostic factor for adverse patient outcomes (25,26,27). However, obtaining chest CT for all patients suspected of having COVID-19 can be very challenging in practice because of the limited availability of CT scanners dedicated to COVID-19 patients and the risk of viral transmission. Therefore, CXR can serve as the main radiologic examination for patients suspected of having COVID-19 and may assist with patient management and prognosis prediction.

With regard to the identification of pulmonary abnormalities suggesting pneumonia based on chest CT, both the formal radiology reports and CAD results exhibited a sensitivity of 81.5%. According to a previous multicenter cohort study by Self et al. (12) of 3423 patients in the emergency department, the sensitivity of CXR for pulmonary opacity, with chest CT as the reference standard, was 43.5%. Hence, we considered the sensitivity of the CAD system acceptable for detecting pneumonia in clinical practice. Although we could not determine the baseline performance of radiologists without the CAD system, the CAD system may enhance their sensitivity for detecting pulmonary abnormalities. In a previous study using the same CAD system, the sensitivity of radiology residents for identifying abnormal CXR was significantly improved (65.6–73.4%) after review of the CAD results (28).

Although the CAD system used in our study was designed to detect only a limited number of abnormalities, including pulmonary nodules, masses, consolidations, and pneumothorax, it could also identify other types of lung parenchymal abnormalities, such as ground-glass opacities, the representative imaging finding of COVID-19 (Fig. 1), because it was initially trained to identify various thoracic diseases, including pneumonia (15). Because parenchymal abnormalities related to pneumonia, such as consolidation, ground-glass opacities, and reticular opacities, often look similar and are indistinguishable on CXR, the CAD system was trained to identify pneumonia-related abnormalities in general rather than consolidation alone. However, this non-specific identification of several abnormalities also produced false positives, which lowered the specificity of the CAD (59.8% for rRT-PCR-positive COVID-19; 52.3% for CT abnormalities suggesting pneumonia) (Table 2). These false positives were significantly reduced in the formal reports, because CAD served only as an assistive tool and radiologists could discard false-positive CAD findings during their interpretation of CXR.

In addition to morphologic types of abnormality on CXR, the visibility of abnormalities on CXR may be important for the detectability of the lesions. In our study, both the CAD system and formal radiology reports exhibited significantly higher sensitivities for visible abnormalities than invisible abnormalities. Although extensive ground-glass opacities that are clearly visible on CXR can be identified by the CAD (Fig. 1), subtle ground-glass opacities that are barely visible on CXR may be missed by the CAD (Fig. 2).

In our study, the formal radiology reports for CXR had satisfactory TATs (median, 51 minutes), which were significantly shorter than those of the rRT-PCR results (median, 507 minutes). Therefore, we believe that CXR may help in the screening and triage of patients with high suspicion of COVID-19 pneumonia, especially during an outbreak when resources for hospitalization and intensive care are limited (21,29). Because our study was performed in a tertiary referral hospital where radiologists were readily available to interpret CXR, report TATs may be much longer in primary care or community-level practice, where radiologists may not be immediately available. Although further studies are required, we believe the CAD system may assist with timely clinical decision making in such settings, where patients with positive radiographs could be placed under enhanced isolation to minimize COVID-19 transmission while awaiting rRT-PCR results.

In our practice, the TATs of CXR reports and rRT-PCR results did not differ significantly between patients with and without COVID-19, or between patients with and without pneumonia. Prioritization of patients with a higher suspicion of pneumonia might be facilitated if the CAD system were integrated with a notification system that alerts radiologists or physicians to abnormal CAD results immediately after CXR acquisition (30).
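One way such a notification system could work is a reading worklist ordered by CAD result, with an alert raised as soon as a flagged study arrives. The sketch below illustrates this with a priority queue. It is not a feature of the CAD product described here. The class names, the `threshold` cut-off, and the `notify` hook are all hypothetical.

```python
import heapq
from dataclasses import dataclass, field
from typing import Callable


@dataclass(order=True)
class WorklistItem:
    priority: int          # 0 = CAD-flagged, 1 = not flagged
    acquired_at: float     # acquisition time; earlier studies first within a tier
    study_id: str = field(compare=False)


class Worklist:
    """Hypothetical CAD-driven reading worklist with an alert hook."""

    def __init__(self, threshold: float, notify: Callable[[str], None]):
        self.threshold = threshold  # hypothetical CAD score cut-off
        self.notify = notify        # e.g., page a radiologist or clinician
        self._heap = []             # min-heap of WorklistItem

    def add(self, study_id: str, acquired_at: float, cad_score: float) -> None:
        flagged = cad_score >= self.threshold
        if flagged:
            self.notify(study_id)   # alert immediately after acquisition
        heapq.heappush(self._heap, WorklistItem(0 if flagged else 1, acquired_at, study_id))

    def next_study(self) -> str:
        return heapq.heappop(self._heap).study_id


alerts = []
wl = Worklist(threshold=0.5, notify=alerts.append)
wl.add("A", acquired_at=1.0, cad_score=0.10)
wl.add("B", acquired_at=2.0, cad_score=0.90)
wl.add("C", acquired_at=3.0, cad_score=0.70)
print(alerts)                               # ['B', 'C']
print([wl.next_study() for _ in range(3)])  # ['B', 'C', 'A']
```

Flagged studies are read first, and acquisition time breaks ties within each tier so that non-flagged studies are not starved indefinitely by order of arrival alone.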

Our study has several limitations. First, the formal radiology reports analyzed in the present study were produced with the assistance of the CAD system. Therefore, a direct comparison of performance between the radiologists and the CAD system was not possible, and we could not evaluate whether the CAD system improved the radiologists' performance. Further investigation comparing interpretations made before and after use of the CAD system is warranted to evaluate its effect on radiologists' performance in a population suspected of having COVID-19. Second, our study was performed in a single tertiary institution with a limited number of COVID-19 patients. Our results may therefore be difficult to generalize, because the circumstances of evaluating patients suspected of having COVID-19 may differ substantially across institutions and countries. Third, the CAD system used in our study was not trained for all radiographic abnormalities, nor was it trained specifically for COVID-19.
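A before-and-after reader study produces paired readings of the same cases. One common way to compare such paired binary outcomes is the exact McNemar test, which uses only the discordant pairs. The sketch below is our illustration of that standard technique, not a method stated by the authors, and the reading data are hypothetical. Applied to reference-positive cases, it compares sensitivity. Applied to reference-negative cases, it compares specificity.

```python
from math import comb


def discordant_counts(before, after):
    """Count discordant pairs for paired boolean detections on the same cases."""
    b = sum(1 for x, y in zip(before, after) if x and not y)  # detected only without CAD
    c = sum(1 for x, y in zip(before, after) if not x and y)  # detected only with CAD
    return b, c


def mcnemar_exact(b, c):
    """Two-sided exact McNemar p-value (binomial test on discordant pairs)."""
    n = b + c
    if n == 0:
        return 1.0
    tail = sum(comb(n, i) for i in range(min(b, c) + 1)) / 2 ** n
    return min(1.0, 2 * tail)


# Hypothetical reader-study data on reference-positive cases (NOT the study's data).
before = [True, False, False, False, False, False, False, True]
after = [True, True, True, True, True, True, True, True]
b, c = discordant_counts(before, after)
print(b, c, mcnemar_exact(b, c))  # 0 6 0.03125
```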

In summary, we implemented a deep learning-based CAD system for the interpretation of CXR of patients suspected of having COVID-19. Formal radiology reports made with CAD assistance showed reasonably acceptable performance for identifying rRT-PCR-positive COVID-19 patients (sensitivity, 68.8%) and CT abnormalities suggesting pneumonia (sensitivity, 81.5%). Moreover, CXR reports had much faster TATs than rRT-PCR results. In situations with limited medical resources, such as during an outbreak, CXR interpretation with CAD assistance may support clinical decision making and the management of patients suspected of having COVID-19.

Footnotes

The present study was supported by a grant (grant number: 03-2019-0190) from the Seoul National University Hospital research fund.

Conflicts of Interest: Eui Jin Hwang, Hyungjin Kim, and Chang Min Park report research grants from Lunit Inc., outside the present study. Jin Mo Goo reports a research grant from INFINITT Healthcare, outside the present study.

References

- 1. Zhu N, Zhang D, Wang W, Li X, Yang B, Song J, et al. A novel coronavirus from patients with pneumonia in China, 2019. N Engl J Med. 2020;382:727–733. doi: 10.1056/NEJMoa2001017.

- 2. National Authorities. Coronavirus disease (COVID-19). Situation report-127. World Health Organization; 2020. [Accessed May 26, 2020]. Available at: https://www.who.int/docs/default-source/coronaviruse/situation-reports/20200526-covid-19-sitrep-127.pdf?sfvrsn=7b6655ab_8.

- 3. Interim guidelines for collecting, handling, and testing clinical specimens from persons for coronavirus disease 2019 (COVID-19). Centers for Disease Control and Prevention Web site. Published May 22, 2020. [Accessed May 26, 2020]. Available at: https://www.cdc.gov/coronavirus/2019-nCoV/lab/guidelines-clinical-specimens.html.

- 4. Ai T, Yang Z, Hou H, Zhan C, Chen C, Lv W, et al. Correlation of chest CT and RT-PCR testing in coronavirus disease 2019 (COVID-19) in China: a report of 1014 cases. Radiology. 2020 Feb 26. doi: 10.1148/radiol.2020200642. [Epub ahead of print]

- 5. Fang Y, Zhang H, Xie J, Lin M, Ying L, Pang P, et al. Sensitivity of chest CT for COVID-19: comparison to RT-PCR. Radiology. 2020 Feb 19. doi: 10.1148/radiol.2020200432. [Epub ahead of print]

- 6. Xie X, Zhong Z, Zhao W, Zheng C, Wang F, Liu J. Chest CT for typical 2019-nCoV pneumonia: relationship to negative RT-PCR testing. Radiology. 2020 Feb 12. doi: 10.1148/radiol.2020200343. [Epub ahead of print]

- 7. ACR recommendations for the use of chest radiography and computed tomography (CT) for suspected COVID-19 infection. American College of Radiology Web site. Published March 11, 2020. [Accessed May 26, 2020]. Available at: https://www.acr.org/Advocacy-and-Economics/ACR-Position-Statements/Recommendations-for-Chest-Radiography-and-CT-for-Suspected-COVID19-Infection.

- 8. STR/ASER COVID-19 position statement. Society of Thoracic Radiology Web site. Published March 11, 2020. [Accessed May 26, 2020]. Available at: https://thoracicrad.org/?page_id=2879.

- 9. Expert Panel on Thoracic Imaging; Chung JH, Ackman JB, Carter B, Colletti PM, Crabtree TD, et al. ACR Appropriateness Criteria® acute respiratory illness in immunocompetent patients. J Am Coll Radiol. 2018;15:S240–S251. doi: 10.1016/j.jacr.2018.09.012.

- 10. Mandell LA, Wunderink RG, Anzueto A, Bartlett JG, Campbell GD, Dean NC, et al. Infectious Diseases Society of America/American Thoracic Society consensus guidelines on the management of community-acquired pneumonia in adults. Clin Infect Dis. 2007;44 Suppl 2:S27–S72. doi: 10.1086/511159.

- 11. Esayag Y, Nikitin I, Bar-Ziv J, Cytter R, Hadas-Halpern I, Zalut T, et al. Diagnostic value of chest radiographs in bedridden patients suspected of having pneumonia. Am J Med. 2010;123:88.e1–88.e5. doi: 10.1016/j.amjmed.2009.09.012.

- 12. Self WH, Courtney DM, McNaughton CD, Wunderink RG, Kline JA. High discordance of chest X-ray and computed tomography for detection of pulmonary opacities in ED patients: implications for diagnosing pneumonia. Am J Emerg Med. 2013;31:401–405. doi: 10.1016/j.ajem.2012.08.041.

- 13. Donald JJ, Barnard SA. Common patterns in 558 diagnostic radiology errors. J Med Imaging Radiat Oncol. 2012;56:173–178. doi: 10.1111/j.1754-9485.2012.02348.x.

- 14. Chumbita M, Cillóniz C, Puerta-Alcalde P, Moreno-García E, Sanjuan G, Garcia-Pouton N, et al. Can artificial intelligence improve the management of pneumonia. J Clin Med. 2020;9:248. doi: 10.3390/jcm9010248.

- 15. Hwang EJ, Park S, Jin KN, Kim JI, Choi SY, Lee JH, et al. Development and validation of a deep learning-based automated detection algorithm for major thoracic diseases on chest radiographs. JAMA Netw Open. 2019;2:e191095. doi: 10.1001/jamanetworkopen.2019.1095.

- 16. Leisenring W, Alonzo T, Pepe MS. Comparisons of predictive values of binary medical diagnostic tests for paired designs. Biometrics. 2000;56:345–351. doi: 10.1111/j.0006-341x.2000.00345.x.

- 17. Coronavirus disease-19, Republic of Korea. Latest updates, cases in Korea. Korean Ministry of Health and Welfare Web site. Published April 4, 2020. [Accessed April 4, 2020]. Available at: http://ncov.mohw.go.kr/bdBoardList_Real.do?brdId=1&brdGubun=11&ncvContSeq=&contSeq=&board_id=&gubun=.

- 18. Korean Society of Infectious Diseases; Korean Society of Pediatric Infectious Diseases; Korean Society of Epidemiology; Korean Society for Antimicrobial Therapy; Korean Society for Healthcare-associated Infection Control and Prevention; Korea Centers for Disease Control and Prevention, et al. Report on the epidemiological features of coronavirus disease 2019 (COVID-19) outbreak in the Republic of Korea from January 19 to March 2, 2020. J Korean Med Sci. 2020;35:e112. doi: 10.3346/jkms.2020.35.e112.

- 19. Yoon SH, Lee KH, Kim JY, Lee YK, Ko H, Kim KH, et al. Chest radiographic and CT findings of the 2019 novel coronavirus disease (COVID-19): analysis of nine patients treated in Korea. Korean J Radiol. 2020;21:494–500. doi: 10.3348/kjr.2020.0132.

- 20. Ng MY, Lee EY, Yang J, Yang F, Li X, Wang H, et al. Imaging profile of the COVID-19 infection: radiologic findings and literature review. Radiology: Cardiothoracic Imaging. 2020;2:e200034. doi: 10.1148/ryct.2020200034.

- 21. Wong HYF, Lam HYS, Fong AH, Leung ST, Chin TW, Lo CSY, et al. Frequency and distribution of chest radiographic findings in COVID-19 positive patients. Radiology. 2020 Mar 27. doi: 10.1148/radiol.2020201160. [Epub ahead of print]

- 22. Chung M, Bernheim A, Mei X, Zhang N, Huang M, Zeng X, et al. CT imaging features of 2019 novel coronavirus (2019-nCoV). Radiology. 2020;295:202–207. doi: 10.1148/radiol.2020200230.

- 23. Shi H, Han X, Jiang N, Cao Y, Alwalid O, Gu J, et al. Radiological findings from 81 patients with COVID-19 pneumonia in Wuhan, China: a descriptive study. Lancet Infect Dis. 2020;20:425–434. doi: 10.1016/S1473-3099(20)30086-4.

- 24. Zhao W, Zhong Z, Xie X, Yu Q, Liu J. Relation between chest CT findings and clinical conditions of coronavirus disease (COVID-19) pneumonia: a multicenter study. AJR Am J Roentgenol. 2020;214:1072–1077. doi: 10.2214/AJR.20.22976.

- 25. Antonio GE, Ooi CG, Wong KT, Tsui EL, Wong JS, Sy AN, et al. Radiographic-clinical correlation in severe acute respiratory syndrome: study of 1373 patients in Hong Kong. Radiology. 2005;237:1081–1090. doi: 10.1148/radiol.2373041919.

- 26. Hui DS, Wong KT, Antonio GE, Lee N, Wu A, Wong V, et al. Severe acute respiratory syndrome: correlation between clinical outcome and radiologic features. Radiology. 2004;233:579–585. doi: 10.1148/radiol.2332031649.

- 27. Ko SF, Lee TY, Huang CC, Cheng YF, Ng SH, Kuo YL, et al. Severe acute respiratory syndrome: prognostic implications of chest radiographic findings in 52 patients. Radiology. 2004;233:173–181. doi: 10.1148/radiol.2323031547.

- 28. Hwang EJ, Nam JG, Lim WH, Park SJ, Jeong YS, Kang JH, et al. Deep learning for chest radiograph diagnosis in the emergency department. Radiology. 2019;293:573–580. doi: 10.1148/radiol.2019191225.

- 29. Orsi MA, Oliva AG, Cellina M. Radiology department preparedness for COVID-19: facing an unexpected outbreak of the disease. Radiology. 2020;295:E8. doi: 10.1148/radiol.2020201214.

- 30. Hwang EJ, Park CM. Clinical implementation of deep learning in thoracic radiology: potential applications and challenges. Korean J Radiol. 2020;21:511–525. doi: 10.3348/kjr.2019.0821.