Abstract

Purpose

To assess accuracy and adherence of visual field (VF) home monitoring in a pilot sample of patients with glaucoma.

Design

Prospective longitudinal feasibility and reliability study.

Methods

Twenty adults (median 71 years) with an established diagnosis of glaucoma were issued a tablet perimeter (Eyecatcher) and were asked to perform 1 VF home assessment per eye, per month, for 6 months (12 tests total). Before and after home monitoring, 2 VF assessments were performed in clinic using standard automated perimetry (4 tests total, per eye).

Results

All 20 participants could perform monthly home monitoring, though 1 participant stopped after 4 months (adherence: 98% of tests). There was good concordance between VFs measured at home and in the clinic (r = 0.94, P < .001). In 21 of 236 tests (9%), mean deviation deviated by more than ±3 dB from the median. Many of these anomalous tests could be identified by applying machine learning techniques to recordings from the tablets' front-facing camera (area under the receiver operating characteristic curve = 0.78). Adding home-monitoring data to 2 standard automated perimetry tests made 6 months apart reduced measurement error (between-test measurement variability) in 97% of eyes, with mean absolute error more than halving in 90% of eyes. Median test duration was 4.5 minutes (quartiles: 3.9-5.2 minutes). Substantial variations in ambient illumination had no observable effect on VF measurements (r = 0.07, P = .320).

Conclusions

Home monitoring of VFs is viable for some patients and may provide clinically useful data.

People with glaucoma, or at risk of developing glaucoma, require lifelong monitoring, including periodic (eg, annual1) visual field (VF) examinations.2 The volume of outpatient appointments required (>1 million/year in the UK alone3) is placing glaucoma services under increasing strain, as evidenced by a growing appointment backlog4 and instances of avoidable sight loss due to treatment delays.5 , 6 Globally, the challenge of glaucoma treatment is only likely to intensify over the coming decades,7 with aging societies,8 , 9 and calls for increased monitoring1 and earlier detection.10 Furthermore, hospital assessments cannot be performed with the frequency required for best patient care. Many studies have shown that intensive VF monitoring could help to identify and prioritize individuals most at risk of debilitating sight loss11, 12, 13, 14, 15 (ie, younger patients with fast-progressing VF loss16). Frequent (eg, monthly) monitoring is likely to be of particular benefit for those patients for whom rapid progression is most likely (eg, optic disc hemorrhage17, 18, 19) or most costly (eg, monocular vision20).

In short, the status quo of hospital-only VF monitoring is costly and insufficient. The solution may lie in home monitoring.14 , 21 , 22 If additional VF data were collected between appointments, hospital visits could be shortened, and in low-risk patients, appointments could be reduced in frequency or conducted remotely, decreasing demand on outpatient clinics. Home monitoring would also allow more VF testing, and more frequent VF testing, both of which are important for rapid, robust clinical decision-making.12 , 23 For these reasons, interest in home monitoring is growing for glaucoma14 , 21 , 22, as well as for the treatment of other chronic ophthalmic conditions24, 25, 26, 27, and in health care generally.28 This interest is likely to intensify after COVID-19, as both hospitals and patients look to clear backlogs and minimize in-person appointments.29 , 30

Technological advances mean VF home monitoring is now a realistic proposition. Several portable perimeters have been developed that use ordinary tablet computers (eg, Melbourne Rapid Fields,31, 32, 33 Eyecatcher34) or head-mounted displays (eg, imo,35 , 36 Mobile Virtual Perimetry37). Such devices are small and inexpensive enough for patients to take home, and several appear capable of approximating conventional standard automated perimetry (SAP) when operated under supervision.32 , 38 , 39

What remains unclear is whether VF home monitoring works in practice. Are patients with glaucoma willing and able to comply with a home-testing regimen (adherence)? And do “personal perimeters” continue to produce high-quality VF data when operated at home and unsupervised (accuracy)?

To investigate these questions, 20 people with established glaucoma were given a tablet perimeter (Eyecatcher) to take home for 6 months. They were asked to perform 1 VF assessment a month in each eye. Accuracy was assessed by comparing measurements made at home with conventional SAP assessments made at the study's start and end. Adherence was quantified as the percentage of tests completed. Eyecatcher is not yet available for general use; however, the source code is freely available online, as detailed in the Methods section.

To reflect the likely clinical reality of home monitoring, we used only inexpensive and commonly available hardware (approximately $350 per person). Ten participants were given no practice with the test before taking it home. The other 10 performed the test once in each eye under supervision. During home testing, the tablet computer's forward-facing camera recorded the participant. This allowed us to confirm that the correct eye was tested, to record variations in ambient illumination, and to investigate whether “affective computing” techniques (eg, head-pose tracking and facial-expression analysis to recognize human emotions) could identify suspect tests.40

Methods

Participants

Participants were 20 adults (10 female) aged 62-78 years (median: 71), with established diagnoses of primary open-angle glaucoma (N = 18, including 6 normal tension), angle-closure glaucoma (N = 1), or secondary glaucoma (N = 1). Participants lived across southern England and Wales (see Supplemental Figure 1) and were under ongoing care from different consultant ophthalmologists. Participants were the first 20 respondents to an advertisement placed in the International Glaucoma Association newsletter (IGA News: https://glaucoma.uk) and were assessed by a glaucoma-accredited optometrist (P.C.) who recorded ocular and medical histories, logMAR (logarithm of the minimum angle of resolution) acuity, and SAP using a Humphrey Field Analyzer 3 (HFA; Carl Zeiss Meditec, Dublin, California, USA; Swedish Interactive Threshold Algorithm [SITA] Fast; 24-2 grid). All patients exhibited best-corrected logMAR acuity <0.5 in the better eye, and none had undergone ocular surgery or laser treatment within 6 months before participation. Severity of VF loss in the worse eye, as measured by HFA mean deviation (MD), varied from −2.5 dB (early loss41) to −29.9 dB (advanced loss), although the majority of eyes exhibited moderate loss (median: −8.9 dB). All HFA assessments (4 per eye) are shown in the Results section, and all exhibited a false-positive rate below 15% (median: 0%).

Written informed consent was obtained before testing. Participants were not paid but were offered travel expenses. The study was approved by the Ethics Committee for the School of Health Sciences, City, University of London (#ETH1819-0532), and carried out in accordance with the tenets of the Declaration of Helsinki.

Procedure

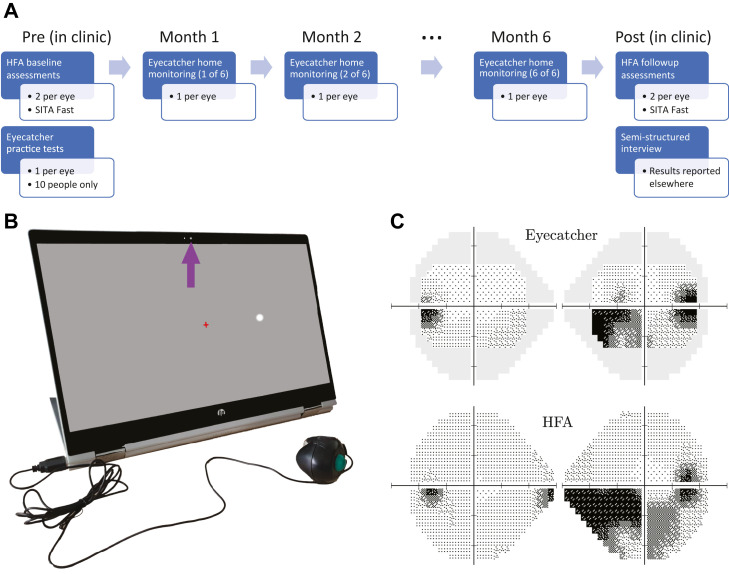

As shown in Figure 1, A, participants were asked to perform 1 VF home assessment per eye, per month, for 6 months (12 tests total per participant). Beforehand, participants attended City, University of London, where they were issued with the necessary equipment, including a tablet computer (Figure 1, B), an eye patch, screen wipes, and a set of written instructions. All participants performed 2 HFA assessments in each eye (24-2 SITA Fast). Ten participants (50%) were also randomly selected to practice the Eyecatcher test once in each eye under supervision.

Figure 1.

Methods. (A) Study timeline. (B) Hardware: home perimetry was performed using an inexpensive tablet perimeter (Eyecatcher). During each Eyecatcher assessment, live recordings of the participant were made via the screen's front-facing camera (purple arrow). Participants were asked to fixate the central red cross throughout and press the button when a white (Goldmann III) dot was seen. (C) Output: example measures of visual field loss from a single participant, with same-patient data from the Humphrey Field Analyzer (HFA) for comparison. Grayscales were generated using the Matlab code available at: https://github.com/petejonze/VfPlot. SITA = Swedish Interactive Threshold Algorithm.

During the 6-month home-testing period, participants had access to support via telephone and email, and received an email reminder once a month when the test was due. After the home-monitoring period was complete, participants returned to City, University of London, and again performed 2 HFA assessments in each eye. They also completed a semistructured interview, designed to assess the acceptability of home monitoring and to identify any potential barriers to use. A qualitative assessment of these interviews will be reported elsewhere. One participant (ID 16) was unable to return because of the COVID-19 quarantine. They instead returned their computer by mail and performed their exit interview via telephone. No follow-up HFA assessment could be performed with this individual, but given their ocular history, their VF was expected to have been stable.

The Eyecatcher VF Test

VFs were assessed using a custom screen perimeter (Figure 1, B), implemented on an inexpensive HP Pavilion x360 15.6″ tablet laptop (HP Inc, Palo Alto, California, USA). The test was a variant of the “Eyecatcher” VF test, described previously34 , 39 and freely available online at https://github.com/petejonze/Eyecatcher. It was modified in the present work to more closely mimic conventional static threshold perimetry, most notably by employing a ZEST thresholding algorithm,42 a central fixation cross, and a button press response. The software was implemented in Matlab using Psychtoolbox v343 and used bit stealing to ensure >10-bit luminance precision.44 The display measured 34.5 × 19.5 cm (34.8 × 20.1° visual angle, at the nominal viewing distance of 55 cm), and extensive photometric calibration was performed on each device to ensure luminance uniformity across the display (see Supplemental Figure 2 for technical details regarding screen calibration).
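As a quick check on the display geometry, the visual angle subtended by a flat extent of size s viewed at distance d is 2·atan(s / 2d). The Python sketch below is an illustration only (it is not taken from the Eyecatcher source); it assumes the eye is centered on the screen and ignores head movement. It reproduces the stated 34.8 × 20.1° at 55 cm and shows what the same screen would subtend at the conventional 33 cm.

```python
import math

def visual_angle_deg(size_cm: float, distance_cm: float) -> float:
    """Full visual angle (degrees) subtended by an extent centred on the line of sight."""
    return math.degrees(2 * math.atan(size_cm / (2 * distance_cm)))

WIDTH_CM, HEIGHT_CM = 34.5, 19.5   # display size reported in the text

for distance_cm in (55.0, 33.0):   # study distance vs conventional perimetric distance
    w = visual_angle_deg(WIDTH_CM, distance_cm)
    h = visual_angle_deg(HEIGHT_CM, distance_cm)
    print(f"{distance_cm:.0f} cm: {w:.1f} x {h:.1f} deg "
          f"(half-widths ±{w / 2:.1f} x ±{h / 2:.1f} deg)")
```

At 33 cm the horizontal half-width grows to roughly ±27.6°, which is why the shorter distance would widen the testable field, at the cost of greater sensitivity to head movements.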

During the test, participants were asked to fixate a central cross and press a button when they saw a flash of light (Goldmann III dots with Gaussian-ramped edges). As in conventional perimetry, targets were presented against a 10 cd/m2 white background. Unlike conventional perimetry, participants received visual feedback (a “popping” dot) at the true stimulus location after each button press. This feedback was intended to keep participants motivated and alert during testing and was generally well received by participants, though 4 reported being sometimes surprised when feedback appeared at an unexpected location.

Testing was performed monocularly (fellow eye patched). The right eye was always tested first, and participants could take breaks between tests. Participants were asked to position themselves 55 cm from the screen (a distance marked on the response-button cable) and to perform the test in a dark, quiet room. In practice, we had no control over fixation stability, viewing distance, or ambient lighting. Because these could be important confounding factors, participants were recorded during testing using the tablet's front-facing camera (see the Results section). Note that the 55 cm viewing distance is farther than the conventional perimetric distance of 33 cm. This was partly for consistency with previous versions of Eyecatcher34 , 39 (versions that incorporated near-infrared eye tracking, which required an approximately 55 cm viewing distance). It was also partly to reduce (by a factor of approximately 1.5) the extent to which any head movements affected retinal stimulus size/location (given the lack of a chin rest). Note, however, that 33 cm has been used successfully by other tablet perimeters32 and would have allowed the whole horizontal extent of the 24-2 grid to be tested.

As shown in Figure 1, C, the output of each Eyecatcher assessment was a 4 × 6 grid of differential light sensitivity (DLS) estimates, corresponding to the central 24 locations of a standard 24-2 perimetric grid (±15° horizontal; ±9° vertical). For analysis and reporting purposes, these values were transformed to be on the same decibel scale as the HFA. Because of the limited maximum-reliable luminance of the screen (175 cd/m2), the measurable range of values was 12.6 to 48 dB (HFA dB scale). Sensitivities below 12.6 dB could not be measured and were recorded as 12.6 dB. Note that it has been suggested that with conventional SAP, measurements below approximately 15 dB are unreliable and of limited utility.45, 46, 47
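The 12.6 dB floor can be reproduced from the 175 cd/m2 limit under two assumptions that the text does not spell out: the usual HFA convention that 0 dB corresponds to a 10,000 apostilb (asb) stimulus (with 1 cd/m2 = π asb), and that 175 cd/m2 is the largest stimulus increment the screen can render. The sketch below converts a luminance increment to HFA decibels and applies the floor described above; the function names are hypothetical.

```python
import math

HFA_MAX_ASB = 10_000.0   # assumed HFA convention: 0 dB = 10,000 asb stimulus

def hfa_db(increment_cdm2: float) -> float:
    """Stimulus attenuation on the HFA dB scale for a luminance increment in cd/m2."""
    increment_asb = increment_cdm2 * math.pi   # 1 cd/m2 = pi apostilbs
    return 10.0 * math.log10(HFA_MAX_ASB / increment_asb)

def apply_floor(sensitivities_db, floor_db):
    """Record any sensitivity below the measurable floor as the floor value."""
    return [max(s, floor_db) for s in sensitivities_db]

floor_db = hfa_db(175.0)   # maximum reliable screen luminance reported in the text
print(f"floor = {floor_db:.1f} dB")
print(apply_floor([30.2, 18.0, 4.5, 0.0], floor_db))
```

Because a higher dB value means a dimmer stimulus, the brightest stimulus the screen can show sets the lowest measurable sensitivity, which is why values below the floor are clamped rather than extrapolated.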

The Melbourne Rapid Fields (MRF) iPad app has shown promising results under laboratory settings32 and was considered for the present study. We chose to use our open-source Eyecatcher software primarily for practical reasons (ie, we were familiar with it and could modify it to allow camera recordings and individual screen calibrations).

Analysis

Where appropriate, and as indicated in the text, pointwise DLS values from the HFA were adjusted for parity with Eyecatcher by setting estimated sensitivities below 12.6 dB to equal 12.6 dB. MD values were then recomputed as the weighted-mean difference from age-corrected normative values,48 using only the central 22 locations tested by both devices (ignoring the 2 blind spots). See Supplemental Material for technical details regarding the computation of MD. Nonadjusted MD values, as reported by the HFA device itself, are also reported in the Results section.
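The exact MD computation is given in the Supplemental Material; the following is only a minimal sketch of the adjustment described above. It assumes, hypothetically, that each location is weighted by the inverse variance of its age-corrected normative value and that blind-spot locations have already been removed. The normative means and SDs in the usage lines are placeholders, not HFA normative data.

```python
from typing import Sequence

def adjusted_md(sens_db: Sequence[float], norm_mean_db: Sequence[float],
                norm_sd_db: Sequence[float], floor_db: float = 12.6) -> float:
    """Weighted-mean deviation from age-corrected normative values (illustrative sketch).

    Hypothetical assumptions: all three sequences list the same 22 locations in the
    same order (blind-spot locations already removed), and each location is weighted
    by the inverse variance of its normative value.
    """
    if not len(sens_db) == len(norm_mean_db) == len(norm_sd_db):
        raise ValueError("inputs must cover the same locations")
    num = den = 0.0
    for s, m, sd in zip(sens_db, norm_mean_db, norm_sd_db):
        s = max(s, floor_db)   # parity with Eyecatcher: values below the floor become the floor
        w = 1.0 / sd ** 2      # inverse-variance weight (assumed)
        num += w * (s - m)
        den += w
    return num / den

# Placeholder normative values (NOT real HFA normative data) for 22 locations.
norm_mean = [30.0] * 22
norm_sd = [2.0] * 22
field = [28.0] * 20 + [5.0, 0.0]   # two locations below the 12.6 dB floor
print(f"adjusted MD = {adjusted_md(field, norm_mean, norm_sd):.2f} dB")
```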

Results

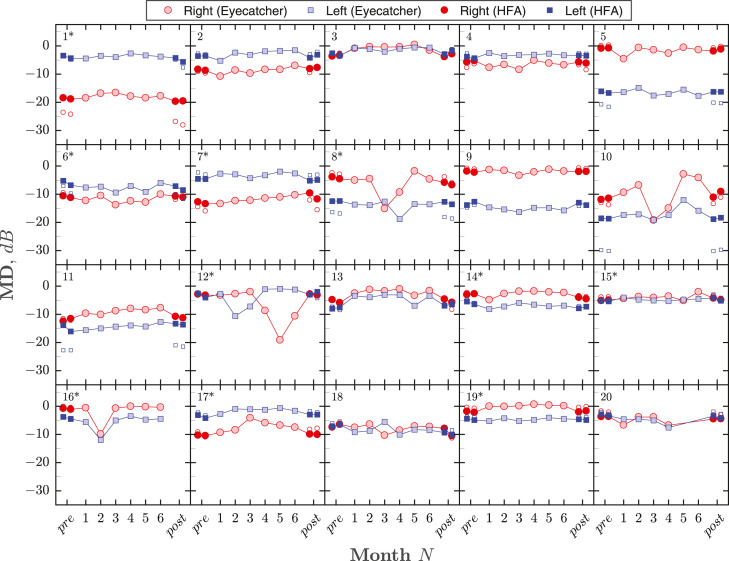

Figure 2 shows MDs for all eyes/tests. Adherence (percentage of tests completed) was 98.3% (236 of 240 tests). Nineteen of 20 individuals completed the full regimen of 6 home-monitoring sessions. Participant 20 discontinued home testing after 4 sessions (months), after consultation with the study investigators, because the test exacerbated chronic symptoms of vertigo (also experienced after SAP).

Figure 2.

Summary of visual field loss (mean deviation [MD]) for all eyes/tests. Each panel shows the complete data from a single participant. Numbers in the top-left of each panel give participant ID, with asterisks denoting the 10 individuals who received initial practice with Eyecatcher. The right eye (red circles) was always tested first, followed by the left eye (blue squares). Light-filled markers show the results for monthly Eyecatcher home-monitoring assessments. Dark-filled markers show the results of 2 Humphrey Field Analyzer (HFA) pretests and 2 HFA post-tests (all tests performed consecutively, same day). For parity, HFA values were computed using only the same 22 (paracentral) test locations as Eyecatcher, and any estimated sensitivities below 12.6 dB were set to 12.6 dB (to reflect the smaller dynamic range of the Eyecatcher test). Small unfilled markers show the unadjusted MD values as reported by the HFA (ie, using all 52 test points and the full dynamic range). These unfilled markers are visible (ie, deviate from the adjusted values) only where field loss was severe. Note that participant 20 chose not to complete the final 2 home-monitoring tests, and participant 16 was unable to perform the final HFA assessments because of COVID-19 (see main text for details).

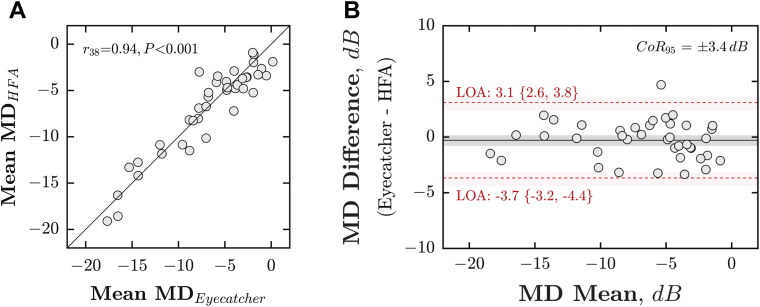

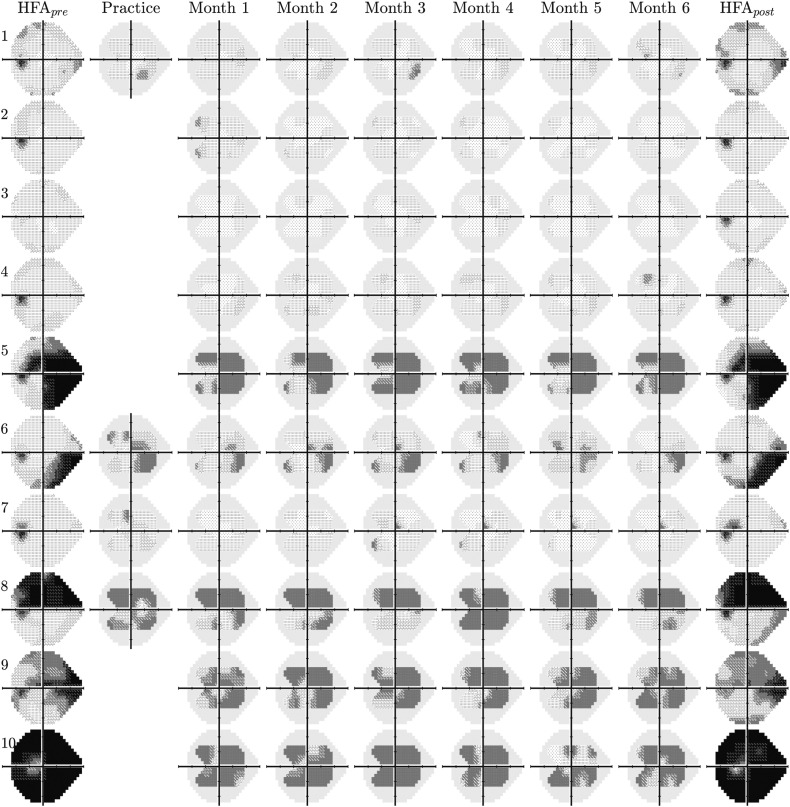

MD scores were strongly associated between VFs measured at home (mean of 6 Eyecatcher tests) and those measured in the lab (mean of 4 HFA tests), with a correlation of r(38) = 0.94 (Figure 3, A; Pearson correlation; P < .001) and a 95% coefficient of repeatability of ±3.4 dB (Figure 3, B). For reference, mean agreement between random pairs of HFA assessments was 2.2 dB (95% confidence interval [CI95]: 1.8-2.6 dB; 20,000 random samples). As shown in Figure 4, there was also good concordance between individual VF locations (Pearson correlation: r(878) = 0.86; P ≪ .001).
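The agreement statistics above can be sketched as follows. This assumes the common Bland-Altman convention in which the 95% limits of agreement are bias ± 1.96 × SD of the paired differences and the coefficient of repeatability is 1.96 × SD (definitions vary between papers); the MD values in the usage lines are invented for illustration. With 40 eyes, the Pearson correlation has n − 2 = 38 degrees of freedom, hence r(38).

```python
import math
from statistics import mean, stdev

def pearson_r(x, y):
    """Pearson product-moment correlation of two equal-length sequences."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def bland_altman(x, y):
    """Return (bias, lower LoA, upper LoA, CoR95) for paired measurements x and y.

    Assumes LoA = bias ± 1.96 * SD(differences) and CoR95 = 1.96 * SD(differences).
    """
    diffs = [a - b for a, b in zip(x, y)]
    bias = mean(diffs)
    cor95 = 1.96 * stdev(diffs)   # sample SD (n - 1 denominator)
    return bias, bias - cor95, bias + cor95, cor95

# Invented per-eye MDs (dB): mean of home tests vs mean of clinic tests.
home = [-2.1, -8.4, -15.9, -4.0, -24.8]
clinic = [-2.6, -9.1, -14.7, -3.5, -26.0]
print(f"r({len(home) - 2}) = {pearson_r(home, clinic):.2f}")
print("bias, LoA-, LoA+, CoR95:", [round(v, 2) for v in bland_altman(home, clinic)])
```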

Figure 3.

Accuracy (concordance with Humphrey Field Analyzer [HFA]). (A) Scatter plot, showing mean deviation (MD) from the HFA (averaged across all 4 tests), against MD from Eyecatcher (averaged across all 6 home tests). Each marker represents a single eye. The solid diagonal line indicates unity (perfect agreement). Statistics show the results of a Pearson correlation. Note that the HFA MD values shown here were adjusted for parity with Eyecatcher's measurable range/locations (see the Methods section). If the unadjusted raw MD values were used, the correlation was r(38) = 0.91, P < .001. (B) Bland-Altman agreement. Red horizontal dashed lines denote 95% limits of agreement, with 95% confidence intervals derived using bootstrapping (bias-corrected accelerated method, N = 20,000). The 95% coefficient of repeatability (CoR95) was ±3.4 dB.

Figure 4.

Raw visual field results for 10 randomly selected left eyes (see Supplemental Figures 3-5 for the other 30 eyes). The first and last columns show mean-averaged data from 2 “pre” and 2 “post” reference tests, performed in clinic using a Humphrey Field Analyzer (HFA) 3 (24-2, Swedish Interactive Threshold Algorithm Fast). The solid gray regions in the Eyecatcher plots denote those regions of the 24-2 grid not tested due to limited screen size. Only half of participants were randomly selected to complete a supervised practice test.

Some individual tests produced implausible data (eg, Figure 2: ID 8 test 3, ID 12 test 5). In total, there were 21 tests (9%) where MD deviated by more than ±3 dB from the average (median of all 6 tests). Of these, 13 (62%) occurred in the right eye (tested first), and 7 (33%) deviated by more than ±6 dB. As described in Supplemental Figure 6, these statistical outliers could be identified with reasonable sensitivity/specificity (area under the receiver operating characteristic curve: 0.78) by applying machine learning techniques to recordings from the tablets' front-facing camera.
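The outlier criterion above is simple to express in code. The sketch flags any test whose MD lies more than 3 dB from the median MD of that eye's home tests, and computes a rank-based (Mann-Whitney) area under the ROC curve for a set of classifier scores. The scores are hypothetical stand-ins: the camera-based features and machine-learning model described in Supplemental Figure 6 are not reproduced here.

```python
from statistics import median

def flag_anomalous(md_series, threshold_db=3.0):
    """True for each test whose MD lies more than threshold_db from the series median."""
    ref = median(md_series)
    return [abs(md - ref) > threshold_db for md in md_series]

def roc_auc(scores, labels):
    """Rank-based (Mann-Whitney) AUC: P(random positive outscores random negative); ties = 0.5."""
    pos = [s for s, is_pos in zip(scores, labels) if is_pos]
    neg = [s for s, is_pos in zip(scores, labels) if not is_pos]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative case")
    wins = 0.0
    for p in pos:
        for n in neg:
            wins += 1.0 if p > n else 0.5 if p == n else 0.0
    return wins / (len(pos) * len(neg))

# Hypothetical MDs (dB) for one eye's six monthly home tests.
eye_md = [-8.1, -7.9, -12.5, -8.4, -8.0, -7.6]
flags = flag_anomalous(eye_md)
# Hypothetical "suspect test" scores from some camera-based classifier (one per test).
scores = [0.10, 0.20, 0.85, 0.30, 0.15, 0.40]
print(flags, roc_auc(scores, flags))
```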

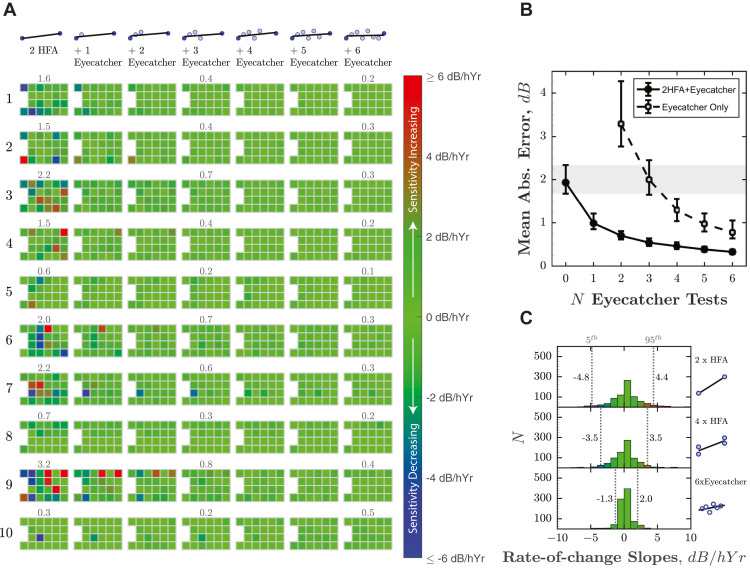

To quantify the extent to which regular home monitoring reduced VF measurement error (between-test variability), Figure 5 shows the estimated rate of change (least-squares slopes) at each VF location. We assume that for the 6-month study period the true change in sensitivity was approximately zero, and so any nonzero slope estimates represent random error. This assumption is reasonable given the relatively short time frame, the fact that all participants were believed to be perimetrically stable, and the fact that, when all 4 HFA tests were considered, almost as many points exhibited positive slopes (increasing sensitivity, Figure 5, A, red squares) as negative slopes (decreasing sensitivity, Figure 5, A, blue squares): ratio = 0.86 (CI95 = 0.74-1.01; see Figure 5, C, for distribution).
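The slope-based error measure can be sketched as follows: fit an ordinary least-squares slope to each location's sensitivities over time and, because the true change is assumed to be zero, treat the mean absolute slope as measurement error. Time is expressed in half-years so that slopes are in dB per half-year, as in Figure 5. The test times, sensitivities, and the 3-location "field" below are hypothetical.

```python
from statistics import mean

def ols_slope(t, y):
    """Ordinary least-squares slope of y regressed on t."""
    mt, my = mean(t), mean(y)
    sxx = sum((a - mt) ** 2 for a in t)
    return sum((a - mt) * (b - my) for a, b in zip(t, y)) / sxx

def pointwise_slopes(times, fields):
    """One slope per VF location; fields holds one list of sensitivities (dB) per test."""
    n_loc = len(fields[0])
    return [ols_slope(times, [f[i] for f in fields]) for i in range(n_loc)]

def mean_absolute_error(slopes):
    """MAE relative to an assumed true slope of 0 dB per half-year."""
    return mean(abs(s) for s in slopes)

def pos_neg_ratio(slopes):
    """Ratio of positive to negative slopes (zero slopes ignored)."""
    pos = sum(s > 0 for s in slopes)
    neg = sum(s < 0 for s in slopes)
    return pos / neg if neg else float("inf")

# Hypothetical HFA pre/post pair, six months apart (t = 0 and t = 1 half-year).
hfa_times = [0.0, 1.0]
hfa_fields = [[28.0, 25.0, 30.0], [30.0, 24.0, 29.0]]
# Hypothetical monthly home tests at months 1-6 (t = 1/6 ... 6/6 half-years).
home_times = [m / 6 for m in range(1, 7)]
home_fields = [[29.0, 25.0, 29.5] for _ in range(6)]

mae_hfa = mean_absolute_error(pointwise_slopes(hfa_times, hfa_fields))
mae_combined = mean_absolute_error(
    pointwise_slopes(hfa_times + home_times, hfa_fields + home_fields))
print(f"MAE, HFA pair only: {mae_hfa:.2f}; with home tests: {mae_combined:.2f} dB/half-year")
```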

Figure 5.

Reduction in measurement error (between-test measurement variability) following home monitoring. (A) Estimated rate of change (in dB per half-year), as increasing numbers of Eyecatcher tests are added to a single (randomly selected) pair of Humphrey Field Analyzer (HFA) pre-/post-test results, made 6 months apart. As described in the main text, the true change in sensitivity is assumed to be 0, so any nonzero values represent measurement error. Ten of 40 eyes are shown here (same eyes as Figure 4). Results from the remaining 30 eyes are given in Supplemental Figures 7-9. Numbers above tests show mean absolute error (MAE), which would ideally be zero. (B) Mean (±95% confidence interval [CI95]) MAE, averaged across all 40 eyes, as a function of N home-monitoring assessments (months). Filled circles correspond to the scenario in (A) and show how measurement error decreased as Eyecatcher data were added to a random pair of HFApre/HFApost assessments (ie, “ancillary home-monitoring scenario”). Unfilled markers show measurement error if Eyecatcher data were considered in isolation, without any HFA data (ie, “exclusive home-monitoring scenario”). Error bars denote 95% confidence intervals, derived using bootstrapping (bias-corrected accelerated method, N = 20,000). The shaded region highlights the CI95 (1.7-2.3 dB) given only a single random pair of HFA assessments (ie, the current clinical reality after 2 appointments). (C) Histograms showing the distributions of all 880 rate-of-change slopes (22 visual field locations × 2 eyes × 20 participants). Vertical dashed lines show the 5th and 95th percentiles.

When only a single (randomly selected) pair of HFA pre- and posttest results was considered (ie, the current clinical reality after 2 hospital appointments), mean absolute error (MAE) was 1.96 dB (CI95: 1.7-2.3; Figure 5, B, gray shaded region). As progressively more home-monitoring tests were also considered (Figure 5, B, filled circles), measurement error decreased to 0.35 dB (CI95: 0.3-0.4). In 37 of the 38 eyes (97%; HFApost data missing for participant 16), MAE was smaller when home-monitoring data were included, with MAE reducing by more than 50% in 90% of eyes (median reduction: 85%, CI95: 82%-87%). For reference, a reduction of 20% in variability is generally considered clinically meaningful and allows progression to be detected 1 visit earlier.49 If we consider the home-monitoring data alone (ie, without any HFA data included; Figure 5, B, unfilled squares), measurement error was still smaller after 6 home-monitoring tests (0.78 dB; CI95: 0.6-1.1) vs 2 HFA tests alone (1.96 dB), with a median reduction in MAE of 68% (CI95: 57%-76%).
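The confidence intervals in this section were derived with the bias-corrected accelerated (BCa) bootstrap. As a simpler illustration of the same resampling idea, the sketch below computes a percentile bootstrap CI for the median percentage reduction in MAE across eyes. This is a swapped-in method, not the BCa procedure used in the study, and the per-eye MAE values are hypothetical.

```python
import random
from statistics import median

def percent_reduction(before, after):
    """Percentage reduction from `before` to `after` (positive = improvement)."""
    return 100.0 * (before - after) / before

def percentile_bootstrap_ci(values, stat=median, n_boot=20_000, alpha=0.05, seed=0):
    """Percentile bootstrap CI for stat(values); a simpler stand-in for BCa."""
    rng = random.Random(seed)
    n = len(values)
    boots = sorted(stat([values[rng.randrange(n)] for _ in range(n)])
                   for _ in range(n_boot))
    lo = boots[round((alpha / 2) * (n_boot - 1))]
    hi = boots[round((1 - alpha / 2) * (n_boot - 1))]
    return lo, hi

# Hypothetical per-eye MAE (dB/half-year): HFA pair alone vs HFA pair + home tests.
mae_hfa_only = [2.1, 1.7, 2.6, 1.4, 2.0, 1.9, 2.4, 1.2]
mae_with_home = [0.3, 0.4, 0.5, 0.3, 0.2, 0.4, 0.6, 0.3]
reductions = [percent_reduction(b, a) for b, a in zip(mae_hfa_only, mae_with_home)]
lo, hi = percentile_bootstrap_ci(reductions, n_boot=5_000)
share_halved = sum(r > 50 for r in reductions) / len(reductions)
print(f"median reduction {median(reductions):.0f}% (CI95 {lo:.0f}-{hi:.0f}%)")
print(f"eyes with MAE more than halved: {share_halved:.0%}")
```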

Either with or without HFA data included, there was no significant difference in MAE between the eyes of participants who received initial practice with Eyecatcher and those who did not (independent samples t test: P = .864 with HFA data; P = .812 without).

In some individuals (eg, ID 3, ID 13), MDs measured at home were systematically higher, in both eyes, than those measured in clinic. This difference was not significant across the group as a whole (repeated measures t test of MD: t(39) = −1.08, P = .286), and such individual offsets may reflect differences in fixation stability or viewing distance. They are unlikely to be due to ambient illumination, which was highly variable (both within and between individuals) but had little apparent effect on the data (see Supplemental Figures 10 and 11).

The median test duration for Eyecatcher was 4.5 minutes (quartiles: 3.9-5.2 minutes) and did not vary systematically across the 6 sessions (F(5, 227) = 0.808, P = .547; see Supplemental Figure 12). For comparison, the median test duration for the HFA (SITA Fast) was 3.9 minutes (quartiles: 3.3-4.6 minutes), and the HFA was faster than Eyecatcher in 30 of 40 eyes (despite testing over twice as many VF locations).

Discussion

Home monitoring has the potential to deliver earlier and more reliable detection of disease progression, as well as service benefits via a reduction in in-person appointments. Here we demonstrate, in a preliminary sample of 20 volunteers, that patients with glaucoma are willing and able to comply with a monthly VF home-testing regimen, and that the VF data produced were of good quality.

A total of 98% of tests were completed successfully (adherence), and the data from 6 home-monitoring tests were in good agreement with 4 SAP tests conducted in clinic (accuracy). This is consistent with previous observations that experienced patients can perform VF testing with minimal oversight,50 as well as with recent findings from the Age-Related Eye Disease Study 2-HOME study group, showing that home monitoring of hyperacuity is able to improve the detection of neovascular age-related macular degeneration.51

The use of home-monitoring data was shown to reduce measurement error (between-test measurement variability). When home-monitoring data were added to 2 SAP assessments made 6 months apart (the current clinical reality), measurement error decreased by over 50% in 90% of eyes. Given that a 20% reduction in measurement variability is generally considered clinically meaningful (ie, allows progression to be detected 1 hospital visit earlier49), this suggests that, even with present technology, home monitoring could be beneficial for routine clinical practice (eg, by supporting more rapid interventions). Furthermore, although we assume that ancillary home monitoring, designed to supplement and augment existing SAP, would be the generally preferred model, it was encouraging that robust VF estimates were obtained even when home-monitoring data were considered in isolation. This suggests that home monitoring may be viable in situations where hospital assessments are impractical, such as in domiciliary care, or in the wake of pandemics such as COVID-19.52

Home monitoring could also assist with clinical trials. For example, the recent UKGTS trial53 required 516 individuals to attend 16 VF assessments over 24 months: a substantial undertaking, of the sort that can make new treatments prohibitively costly to assess.54 , 55 By allowing more frequent measurements of geographically diverse individuals, home monitoring could lead to cheaper, more representative trials and could potentially reduce trial durations (ie, evidence treatment effects sooner).

There were, however, individual instances where the home-monitoring test performed poorly. In 21 tests (9%), MD deviated by more than ±3 dB from the median (of which 7 deviated by more than ±6 dB). As has been shown elsewhere by simulation,14 the effects of these anomalous tests were largely compensated for by the increased volume of “good” data. However, poor-quality data should ideally be averted at source, and it was encouraging that many of these 21 anomalous tests could be identified by applying machine learning techniques to recordings of participants made using the tablets' front-facing camera (see Supplemental Figure 6). It is also notable that, when interviewed at the end of the study, some participants already suspected that certain tests were anomalous (eg, because of a long test duration, or a feeling that they had not performed well). Future work may therefore need to consider whether participants should be able to repeat tests or provide confidence ratings.

Regarding adherence, 1 participant (ID 20) was advised by the study team to discontinue home monitoring after 4 months, after reporting that the test was compounding chronic symptoms of dizziness (though interestingly their data appeared relatively accurate and consistent up to this point; see Figure 2). This adverse effect was not unique to Eyecatcher, and the participant reported having occasionally experienced similar reactions following conventional SAP. However, this highlights that it may be helpful to tailor the use and frequency of home monitoring to the needs and abilities of individual patients, in contrast to the current “one size fits all” approach to VF monitoring.12 , 56 , 57 A full qualitative analysis of participants' views on the benefits and challenges of home monitoring is in preparation and will be reported elsewhere.

Study Limitations and Future Work

The present study is only an initial feasibility assessment, examining a small number of self-selecting volunteers. It remains to be seen how well home monitoring scales up to routine clinical practice or clinical trials. It will be particularly important to establish that home monitoring is sustainable over longer periods and is capable of detecting rapid progression.11, 12, 13, 14, 15

Cost-effectiveness of glaucoma home monitoring has also yet to be demonstrated, and it would be helpful to perform an economic evaluation of utility, similar to that reported recently for age-related macular degeneration home monitoring.58 For this, it would be instructive to consider not just home monitoring of VFs alone, but also in conjunction with home tonometry, which also appears increasingly practicable.59 In the long term, there are even signs that optical coherence tomography60 and smartphone-based fundus imaging61, 62, 63 are becoming straightforward enough to be administered by lay persons, and these might also be explored in future home-monitoring trials.

It may be that targeted home monitoring, focused on high-risk/benefit patients with glaucoma, is cost-effective, even if the indiscriminate home monitoring of all patients is not.14 Thus, it may be best to concentrate home-monitoring resources on those patients whose age11 or condition17, 18, 19 makes them most likely to experience debilitating vision loss within their lifetime. It may also be worth considering the potential secondary benefits of home monitoring, such as improved patient satisfaction and retention,56 , 64 or better treatment adherence. For example, it is well established that many patients with glaucoma find hospital visits stressful and inconvenient,56 , 65 and home monitoring might be welcomed as a way of saving time, travel, and money. Treatment adherence is known to increase markedly before a hospital appointment66 (“white-coat adherence”), or when patients receive automated reminders,67 , 68 and it is conceivable that the anticipation of regular home monitoring could provide a similar impetus. After COVID-19, home monitoring of VFs may also be desirable from a public health perspective, as a way of reducing the time each patient spends in clinic, and as a way of reducing the risk (real or perceived) of infection from conventional SAP apparatus.

Test Limitations and Future Work

The test itself (Eyecatcher) was intended only as a proof of concept, and was crude in many respects. In fact, we consider it highly encouraging—and somewhat remarkable—that the results were as promising as they were, given the low level of technical sophistication. Alternative measures are being developed elsewhere31, 32, 33 , 35, 36, 37 (in particular, the MRF), and there are several ways in which the present test could also be improved in future.

The test algorithm (a rudimentary implementation of ZEST42) was relatively inefficient and could be made faster and more robust: most straightforwardly by using prior information from previous tests and by using a more efficient stimulus-selection rule.69 Increased efficiency might be necessary if, for example, attempting to test all 54 locations in a standard 24-2 grid. The source code for the present test is freely available online for anyone wishing to view or modify it. Interestingly though, while Anderson and associates14 anticipated that home tests would be brief, the relatively long durations in the present study (median: 4.5 minutes per eye) were not cited as a concern by participants (although 2 individuals observed that test durations were longer and more variable than conventional SAP). It may be that when it comes to home monitoring, less focus should be placed on test duration than in conventional perimetry (ie, given the time saved by not having to travel to and wait in clinic). Instead, focus should be directed more toward usability (eg, the ability to pause, resume, or restart tests).
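For readers unfamiliar with ZEST, the core loop is short: maintain a probability distribution over possible thresholds, present each stimulus at the distribution's mean, update the distribution with the likelihood of the observed response, and stop once the distribution is narrow enough. The sketch below is a generic illustration, not the Eyecatcher implementation; the threshold domain, psychometric-function spread, false-positive/negative rates, and stopping rule are all assumed values. The optional `prior` argument shows where information from a previous test could be supplied.

```python
import math
import random

# Assumed (hypothetical) parameters, not those used by Eyecatcher.
DOMAIN = [0.5 * i for i in range(81)]   # candidate thresholds: 0-40 dB in 0.5 dB steps
SPREAD_DB = 2.0                          # SD of the cumulative-Gaussian psychometric function
FP, FN = 0.03, 0.03                      # false-positive / false-negative (lapse) rates

def p_seen(stim_db, thresh_db):
    """P('seen') for a stimulus at stim_db given a threshold of thresh_db.

    Higher dB means a dimmer stimulus, so detection falls as stim_db exceeds threshold.
    """
    core = 0.5 * math.erfc((stim_db - thresh_db) / (SPREAD_DB * math.sqrt(2)))
    return FP + (1 - FP - FN) * core

def zest(respond, prior=None, sd_stop=1.5, max_trials=30, floor_db=12.6):
    """Run one ZEST staircase; return (threshold estimate in dB, trials used)."""
    pdf = list(prior) if prior is not None else [1.0 / len(DOMAIN)] * len(DOMAIN)
    mean_t = sum(p * t for p, t in zip(pdf, DOMAIN))
    for trial in range(1, max_trials + 1):
        stim = max(mean_t, floor_db)   # cannot present brighter than the display floor
        seen = respond(stim)
        # Bayesian update: multiply by the likelihood of the observed response.
        pdf = [p * (p_seen(stim, t) if seen else 1 - p_seen(stim, t))
               for p, t in zip(pdf, DOMAIN)]
        total = sum(pdf)
        pdf = [p / total for p in pdf]
        mean_t = sum(p * t for p, t in zip(pdf, DOMAIN))
        sd_t = math.sqrt(sum(p * (t - mean_t) ** 2 for p, t in zip(pdf, DOMAIN)))
        if sd_t < sd_stop:
            break
    return mean_t, trial

def simulated_observer(true_db, rng):
    """Hypothetical observer whose responses follow the same psychometric function."""
    return lambda stim_db: rng.random() < p_seen(stim_db, true_db)

rng = random.Random(42)
estimate, n_trials = zest(simulated_observer(26.0, rng))
print(f"estimate {estimate:.1f} dB after {n_trials} trials")
```

Seeding `prior` with a distribution centred on a previous result, rather than the flat default, is one way such a test could be shortened, as suggested above.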

A further key limitation of the present test is that only paracentral vision was assessed (±15° horizontal; ±9° vertical; the most central 24 points of the 24-2 grid). Although this seemed sufficient to assess the feasibility of home monitoring in principle, such a limited field of view would in practice hinder clinicians' ability to determine progression in the size or shape of field loss, and key areas of loss may be missed altogether (ie, most of the superior and inferior arcuate nerve fiber bundle areas were not tested). A wider field of view could be achieved by using a larger screen38 , 70 (at the cost of reduced portability), by requiring the user to fixate different areas of the screen throughout the course of the test31, 32, 33 , 39 (at the cost of increased complexity), or by reducing viewing distance31, 32, 33 (at the cost of greater measurement error due to head movements; see the Methods section). Alternatively, the future use of head-mounted displays (or “smart-glasses”) would allow for widefield testing, and would also obviate many practical concerns regarding uncontrolled viewing distance, improper patching, screen glare, or variations in ambient lighting. These potential confounds did not appear to be limiting factors in the present study, but could be problematic in less compliant individuals, or those disposed to cheat or malinger. Other ways in which the present hardware could be improved are by using eye tracking to monitor fixation; using near-infrared facial imaging systems, such as the iPad's TrueDepth camera, to track viewing distance with millimeter accuracy; and/or by integrating iris scanning to ensure that the correct eye/person is always tested. In the long term, test data will need to be integrated securely into medical records systems, and consideration given to how to maintain accurate screen calibrations over extended periods of use.70

CRediT authorship contribution statement

Pete R. Jones: Conceptualization, Data curation, Formal analysis, Funding acquisition, Methodology, Software, Visualization, Writing - original draft, Writing - review & editing. Peter Campbell: Conceptualization, Funding acquisition, Investigation, Methodology, Project administration, Writing - review & editing. Tamsin Callaghan: Conceptualization, Funding acquisition, Methodology, Project administration, Writing - review & editing. Lee Jones: Investigation. Daniel S. Asfaw: Software. David F. Edgar: Conceptualization, Funding acquisition, Methodology, Writing - review & editing. David P. Crabb: Conceptualization, Funding acquisition, Methodology, Resources, Writing - review & editing.

Acknowledgments

All authors have completed and submitted the ICMJE form for disclosure of potential conflicts of interest. Funding/Support: This study was funded by an International Glaucoma Association (now: “Glaucoma UK”) 2019 Award, and by a Fight for Sight (UK) project grant (#1854/1855). The author D.S.A. was supported by the European Union's Horizon 2020 research and innovation program under Marie Sklodowska-Curie grant agreement no. 675033. The funding organizations had no role in the design or conduct of this research. Financial Disclosures: No conflicting relationship exists for any author. D.P.C. reports unrestricted grants from Roche, Santen, and Allergan; speaker fees from THEA, Bayer, Santen, and Allergan; consultancy with CenterVue; all outside the present work. The other authors report no financial disclosures or support in kind. All authors attest that they meet the current ICMJE criteria for authorship.

Footnotes

Supplemental Material available at AJO.com.

Supplemental Data

Pete R. Jones, PhD, is a lecturer at City, University of London, and an honorary researcher at the UCL Child Vision Lab. His primary interest is the development of vision tests for hard-to-reach populations and for use outside of conventional clinical environments. For further information, see www.appliedpsychophysics.com.

References

1. Fung S.S.M., Lemer C., Russell R.A., Malik R., Crabb D.P. Are practical recommendations practiced? A national multi-centre cross-sectional study on frequency of visual field testing in glaucoma. Br J Ophthalmol. 2013;97:843–847. doi: 10.1136/bjophthalmol-2012-302903.

2. Quigley H.A. Glaucoma. Lancet. 2011;377:1367–1377. doi: 10.1016/S0140-6736(10)61423-7.

3. King A., Azuara-Blanco A., Tuulonen A. Glaucoma. BMJ. 2013;346:f3518. doi: 10.1136/bmj.f3518.

4. Broadway D.C., Tibbenham K. Tackling the NHS glaucoma clinic backlog issue. Eye (Lond). 2019;33:1715–1721. doi: 10.1038/s41433-019-0468-1.

5. Foot B., MacEwen C. Surveillance of sight loss due to delay in ophthalmic treatment or review: frequency, cause and outcome. Eye (Lond). 2017;31:771–775. doi: 10.1038/eye.2017.1.

6. Healthcare Safety Investigation Branch. Lack of timely monitoring of patients with glaucoma. Healthcare Safety Investigation I2019/001. 2020. https://www.hsib.org.uk/investigations-cases/lack-timely-monitoring-patients-glaucoma/

7. Butt N.H., Ayub M.H., Ali M.H. Challenges in the management of glaucoma in developing countries. Taiwan J Ophthalmol. 2016;6:119–122. doi: 10.1016/j.tjo.2016.01.004.

8. Tham Y.-C., Li X., Wong T.Y., Quigley H.A., Aung T., Cheng C.-Y. Global prevalence of glaucoma and projections of glaucoma burden through 2040: a systematic review and meta-analysis. Ophthalmology. 2014;121:2081–2090. doi: 10.1016/j.ophtha.2014.05.013.

9. Buchan J.C., Amoaku W., Barnes B., et al. How to defuse a demographic time bomb: the way forward? Eye (Lond). 2017;31:1519–1522. doi: 10.1038/eye.2017.114.

10. Founti P., Topouzis F., Holló G., et al. Prospective study of glaucoma referrals across Europe: Are we using resources wisely? Br J Ophthalmol. 2018;102:329–337. doi: 10.1136/bjophthalmol-2017-310249.

11. Saunders L.J., Russell R.A., Kirwan J.F., McNaught A.I., Crabb D.P. Examining visual field loss in patients in glaucoma clinics during their predicted remaining lifetime. Invest Ophthalmol Vis Sci. 2014;55:102–109. doi: 10.1167/iovs.13-13006.

12. Wu Z., Saunders L.J., Daga F.B., Diniz-Filho A., Medeiros F.A. Frequency of testing to detect visual field progression derived using a longitudinal cohort of glaucoma patients. Ophthalmology. 2017;124:786–792. doi: 10.1016/j.ophtha.2017.01.027.

13. Gardiner S.K., Crabb D.P. Frequency of testing for detecting visual field progression. Br J Ophthalmol. 2002;86:560–564. doi: 10.1136/bjo.86.5.560.

14. Anderson A.J., Bedggood P.A., George Kong Y.X., Martin K.R., Vingrys A.J. Can home monitoring allow earlier detection of rapid visual field progression in glaucoma? Ophthalmology. 2017;124:1735–1742. doi: 10.1016/j.ophtha.2017.06.028.

15. Boodhna T., Crabb D.P. More frequent, more costly? Health economic modelling aspects of monitoring glaucoma patients in England. BMC Health Serv Res. 2016;16:611. doi: 10.1186/s12913-016-1849-9.

16. Chauhan B.C., Malik R., Shuba L.M., Rafuse P.E., Nicolela M.T., Artes P.H. Rates of glaucomatous visual field change in a large clinical population. Invest Ophthalmol Vis Sci. 2014;55:4135–4143. doi: 10.1167/iovs.14-14643.

17. Chan T.C.W., Bala C., Siu A., Wan F., White A. Risk factors for rapid glaucoma disease progression. Am J Ophthalmol. 2017;180:151–157. doi: 10.1016/j.ajo.2017.06.003.

18. Prata T.S., De Moraes C.G.V., Teng C.C., Tello C., Ritch R., Liebmann J.M. Factors affecting rates of visual field progression in glaucoma patients with optic disc hemorrhage. Ophthalmology. 2010;117:24–29. doi: 10.1016/j.ophtha.2009.06.028.

19. Liu X., Kelly S.R., Montesano G., et al. Evaluating the impact of uveitis on visual field progression using large-scale real-world data. Am J Ophthalmol. 2019;207:144–150. doi: 10.1016/j.ajo.2019.06.004.

20. Jones L., Taylor D.J., Sii F., Masood I., Crabb D.P., Shah P. The Only Eye Study (OnES): a qualitative study of surgeon experiences of only eye surgery and recommendations for patient safety. BMJ Open. 2019;9:e030068. doi: 10.1136/bmjopen-2019-030068.

21. Faes L., Bachmann L.M., Sim D.A. Home monitoring as a useful extension of modern tele-ophthalmology. Eye (Lond). 2020;34:1950–1953. doi: 10.1038/s41433-020-0964-3.

22. Hamzah J.C., Daka Q., Azuara-Blanco A. Home monitoring for glaucoma. Eye (Lond). 2020;34:155–160. doi: 10.1038/s41433-019-0669-7.

23. Crabb D.P., Russell R.A., Malik R., et al. Frequency of visual field testing when monitoring patients newly diagnosed with glaucoma: mixed methods and modelling. Southampton, UK: NIHR Journals Library; 2014.

24. Chew E.Y., Clemons T.E., Bressler S.B., et al. Randomized trial of a home monitoring system for early detection of choroidal neovascularization: Home Monitoring of the Eye (HOME) study. Ophthalmology. 2014;121:535–544. doi: 10.1016/j.ophtha.2013.10.027.

25. Rathi S., Tsui E., Mehta N., Zahid S., Schuman J.S. The current state of teleophthalmology in the United States. Ophthalmology. 2017;124:1729–1734. doi: 10.1016/j.ophtha.2017.05.026.

26. Ward E., Wickens R.A., O’Connell A., et al. Monitoring for neovascular age-related macular degeneration (AMD) reactivation at home: the MONARCH study. Eye (Lond). 2020. doi: 10.1038/s41433-020-0910-4.

27. Adams M., Ho C.Y.D., Baglin E., et al. Home monitoring of retinal sensitivity on a tablet device in intermediate age-related macular degeneration. Transl Vis Sci Technol. 2018;7:32. doi: 10.1167/tvst.7.5.32.

28. Muse E.D., Barrett P.M., Steinhubl S.R., Topol E.J. Towards a smart medical home. Lancet. 2017;389:358. doi: 10.1016/S0140-6736(17)30154-X.

29. Wu X., Chen J., Yun D., et al. Effectiveness of an ophthalmic hospital-based virtual service during COVID-19 [online ahead of print]. Ophthalmology. 2020. doi: 10.1016/j.ophtha.2020.10.012. https://www.sciencedirect.com/science/article/pii/S0161642020310101

30. Deiner M., Damato B., Ou Y. Implementing and monitoring at-home VR oculo-kinetic perimetry during COVID-19. Ophthalmology. 2020;127:1258. doi: 10.1016/j.ophtha.2020.06.017.

31. Schulz A.M., Graham E.C., You Y., Klistorner A., Graham S.L. Performance of iPad-based threshold perimetry in glaucoma and controls. Clin Experiment Ophthalmol. 2018;46:346–355. doi: 10.1111/ceo.13082.

32. Prea S.M., Kong Y.X.G., Mehta A., et al. Six-month longitudinal comparison of a portable tablet perimeter with the Humphrey field analyzer. Am J Ophthalmol. 2018;190:9–16. doi: 10.1016/j.ajo.2018.03.009.

33. Kong Y.X.G., He M., Crowston J.G., Vingrys A.J. A comparison of perimetric results from a tablet perimeter and Humphrey field analyzer in glaucoma patients. Transl Vis Sci Technol. 2016;5:2. doi: 10.1167/tvst.5.6.2.

34. Jones P.R., Smith N.D., Bi W., Crabb D.P. Portable perimetry using eye-tracking on a tablet computer: a feasibility assessment. Transl Vis Sci Technol. 2019;8:17. doi: 10.1167/tvst.8.1.17.

35. Matsumoto C., Yamao S., Nomoto H., et al. Visual field testing with head-mounted perimeter ‘imo’. PLoS One. 2016;11:e0161974. doi: 10.1371/journal.pone.0161974.

36. Kimura T., Matsumoto C., Nomoto H. Comparison of head-mounted perimeter (imo®) and Humphrey field analyzer. Clin Ophthalmol. 2019;13:501. doi: 10.2147/OPTH.S190995.

37. Alawa K.A., Nolan R.P., Han E., et al. Low-cost, smartphone-based frequency doubling technology visual field testing using a head-mounted display. Br J Ophthalmol. 2019. doi: 10.1136/bjophthalmol-2019-314031.

38. Jones P.R. An open-source static threshold perimetry test using remote eye-tracking (Eyecatcher): description, validation, and normative data. Transl Vis Sci Technol. 2020;9:18. doi: 10.1167/tvst.9.8.18.

39. Jones P.R., Lindfield D., Crabb D.P. Using an open-source tablet perimeter (Eyecatcher) as a rapid triage measure in a glaucoma clinic waiting area [online ahead of print]. Br J Ophthalmol. 2020. doi: 10.1136/bjophthalmol-2020-316018.

40. Jones P.R., Demaria G., Tigchelaar I., et al. The human touch: using a webcam to autonomously monitor compliance during visual field assessments. Transl Vis Sci Technol. 2020;9:31. doi: 10.1167/tvst.9.8.31.

41. Mills R.P., Budenz D.L., Lee P.P., et al. Categorizing the stage of glaucoma from pre-diagnosis to end-stage disease. Am J Ophthalmol. 2006;141:24–30. doi: 10.1016/j.ajo.2005.07.044.

42. Turpin A., McKendrick A.M., Johnson C.A., Vingrys A.J. Properties of perimetric threshold estimates from full threshold, ZEST, and SITA-like strategies, as determined by computer simulation. Invest Ophthalmol Vis Sci. 2003;44:4787–4795. doi: 10.1167/iovs.03-0023.

43. Kleiner M., Brainard D., Pelli D., Ingling A., Murray R., Broussard C. What’s new in Psychtoolbox-3. Perception. 2007;36:1–16.

44. Tyler C.W. Colour bit-stealing to enhance the luminance resolution of digital displays on a single pixel basis. Spat Vis. 1997;10:369–377. doi: 10.1163/156856897x00294.

45. Gardiner S.K., Swanson W.H., Goren D., Mansberger S.L., Demirel S. Assessment of the reliability of standard automated perimetry in regions of glaucomatous damage. Ophthalmology. 2014;121:1359–1369. doi: 10.1016/j.ophtha.2014.01.020.

46. Gardiner S.K., Mansberger S.L. Effect of restricting perimetry testing algorithms to reliable sensitivities on test-retest variability. Invest Ophthalmol Vis Sci. 2016;57:5631–5636. doi: 10.1167/iovs.16-20053.

47. Pathak M., Demirel S., Gardiner S.K. Reducing variability of perimetric global indices from eyes with progressive glaucoma by censoring unreliable sensitivity data. Transl Vis Sci Technol. 2017;6:11. doi: 10.1167/tvst.6.4.11.

48. Heijl A., Lindgren G., Olsson J. Normal variability of static perimetric threshold values across the central visual field. Arch Ophthalmol. 1987;105:1544–1549. doi: 10.1001/archopht.1987.01060110090039.

49. Turpin A., McKendrick A.M. What reduction in standard automated perimetry variability would improve the detection of visual field progression? Invest Ophthalmol Vis Sci. 2011;52:3237–3245. doi: 10.1167/iovs.10-6255.

50. Van Coevorden R.E., Mills R.P., Chen Y.Y., Barnebey H.S. Continuous visual field test supervision may not always be necessary. Ophthalmology. 1999;106:178–181. doi: 10.1016/S0161-6420(99)90016-7.

51. Chew E.Y., Clemons T.E., Harrington M., et al. Effectiveness of different monitoring modalities in the detection of neovascular age-related macular degeneration: the HOME study, report number 3. Retina. 2016;36:1542–1547. doi: 10.1097/IAE.0000000000000940.

52. Jayaram H., Strouthidis N.G., Gazzard G. The COVID-19 pandemic will redefine the future delivery of glaucoma care. Eye (Lond). 2020;34:1205. doi: 10.1038/s41433-020-0958-1.

53. Garway-Heath D.F., Crabb D.P., Bunce C., et al. Latanoprost for open-angle glaucoma (UKGTS): a randomised, multicentre, placebo-controlled trial. Lancet. 2015;385:1295–1304. doi: 10.1016/S0140-6736(14)62111-5.

54. Heijl A. Glaucoma treatment: by the highest level of evidence. Lancet. 2015;385:1264–1266. doi: 10.1016/S0140-6736(14)62347-3.

55. Quigley H.A. Glaucoma neuroprotection trials are practical using visual field outcomes. Ophthalmol Glaucoma. 2019;2:69–71. doi: 10.1016/j.ogla.2019.01.009.

56. Quigley H.A. 21st century glaucoma care. Eye (Lond). 2019;33:254–260. doi: 10.1038/s41433-018-0227-8.

57. Crabb D.P. A view on glaucoma: are we seeing it clearly? Eye (Lond). 2016;30:304–313. doi: 10.1038/eye.2015.244.

58. Wittenborn J.S., Clemons T., Regillo C., Rayess N., Kruger D.L., Rein D. Economic evaluation of a home-based age-related macular degeneration monitoring system. JAMA Ophthalmol. 2017;135:452–459. doi: 10.1001/jamaophthalmol.2017.0255.

59. Mudie L.I., LaBarre S., Varadaraj V., et al. The Icare HOME (TA022) study: performance of an intraocular pressure measuring device for self-tonometry by glaucoma patients. Ophthalmology. 2016;123:1675–1684. doi: 10.1016/j.ophtha.2016.04.044.

60. Liu M.M., Cho C., Jefferys J.L., Quigley H.A., Scott A.W. Use of optical coherence tomography by non-expert personnel as a screening approach for glaucoma. J Glaucoma. 2018;27:64–70. doi: 10.1097/IJG.0000000000000822.

61. Myers J.S., Fudemberg S.J., Lee D. Evolution of optic nerve photography for glaucoma screening: a review. Clin Experiment Ophthalmol. 2018;46:169–176. doi: 10.1111/ceo.13138.

62. Russo A., Mapham W., Turano R., et al. Comparison of smartphone ophthalmoscopy with slit-lamp biomicroscopy for grading vertical cup-to-disc ratio. J Glaucoma. 2016;25:e777–e781. doi: 10.1097/IJG.0000000000000499.

63. Wintergerst M.W.M., Brinkmann C.K., Holz F.G., Finger R.P. Undilated versus dilated monoscopic smartphone-based fundus photography for optic nerve head evaluation. Sci Rep. 2018;8:1–7. doi: 10.1038/s41598-018-28585-6.

64. Gurwitz J.H., Glynn R.J., Monane M., et al. Treatment for glaucoma: adherence by the elderly. Am J Public Health. 1993;83:711–716. doi: 10.2105/ajph.83.5.711.

65. Glen F.C., Baker H., Crabb D.P. A qualitative investigation into patients’ views on visual field testing for glaucoma monitoring. BMJ Open. 2014;4:e003996. doi: 10.1136/bmjopen-2013-003996.

66. Schwartz G.F., Quigley H.A. Adherence and persistence with glaucoma therapy. Surv Ophthalmol. 2008;53:S57–S68. doi: 10.1016/j.survophthal.2008.08.002.

67. Boland M.V., Chang D.S., Frazier T., Plyler R., Jefferys J.L., Friedman D.S. Automated telecommunication-based reminders and adherence with once-daily glaucoma medication dosing: the automated dosing reminder study. JAMA Ophthalmol. 2014;132:845–850. doi: 10.1001/jamaophthalmol.2014.857.

68. Okeke C.O., Quigley H.A., Jampel H.D., et al. Interventions improve poor adherence with once daily glaucoma medications in electronically monitored patients. Ophthalmology. 2009;116:2286–2293. doi: 10.1016/j.ophtha.2009.05.026.

69. Watson A.B. QUEST+: a general multidimensional Bayesian adaptive psychometric method. J Vis. 2017;17:10. doi: 10.1167/17.3.10.

70. Kyu Han H., Jones P.R. Plug and play perimetry: evaluating the use of a self-calibrating digital display for screen-based threshold perimetry. Displays. 2019;60:30–38.
