Abstract

Executive dysfunction is a well-documented, yet nonspecific corollary of various neurological diseases and psychiatric disorders. Here, we applied computational modeling of latent cognition for executive control in amyotrophic lateral sclerosis (ALS) patients. We utilized a parallel reinforcement learning model of trial-by-trial Wisconsin Card Sorting Test (WCST) behavior. Eighteen ALS patients and 21 matched healthy control participants were assessed on a computerized variant of the WCST (cWCST). ALS patients showed latent cognitive symptoms, which can be characterized as bradyphrenia and haphazard responding. A comparison with results from a recent computational Parkinson’s disease (PD) study (Steinke et al., 2020, J Clin Med) suggests that bradyphrenia represents a disease-nonspecific latent cognitive symptom of ALS and PD patients alike. Haphazard responding seems to be a disease-specific latent cognitive symptom of ALS, whereas impaired stimulus-response learning seems to be a disease-specific latent cognitive symptom of PD. These data were obtained from the careful modeling of trial-by-trial behavior on the cWCST, and they suggest that computational cognitive neuropsychology provides nosologically specific indicators of latent facets of executive dysfunction in ALS (and PD) patients, which remain undiscoverable for traditional behavioral cognitive neuropsychology. We discuss implications for neuropsychological assessment, and we discuss opportunities for confirmatory computational brain imaging studies.

Keywords: executive dysfunction, computational modeling, reinforcement learning, amyotrophic lateral sclerosis, Parkinson’s disease, Wisconsin Card Sorting Test

1. Introduction

The ability to maintain goal-directed behavior effectively is an important prerequisite for successful daily life, in particular in the face of interfering information [1,2,3]. This overarching cognitive ability is often referred to as executive control [4,5]. The Wisconsin Card Sorting Test (WCST) [6,7,8] is considered a gold standard for the neuropsychological assessment of cognitive flexibility, which represents an important facet of the broader concept of executive control [4,9,10,11].

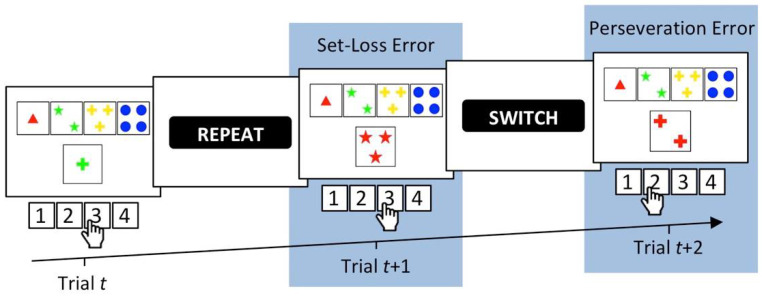

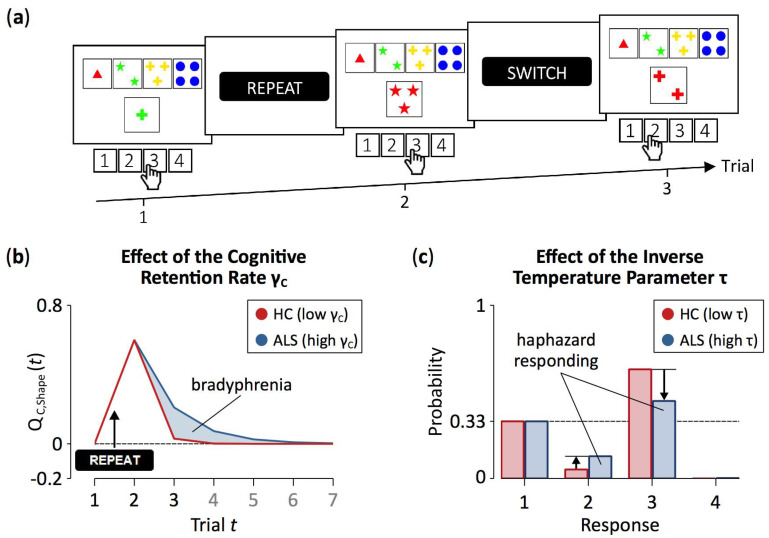

The WCST requires participants to sort stimulus cards to key cards by categories that change periodically, as detailed in Figure 1. In order to identify the currently prevailing category, participants have to rely on the examiner’s positive and negative feedback on each trial. Negative feedback on a WCST-trial requests switching the previously applied category, whereas positive feedback indicates that the previously applied category should be repeated. Of major interest for the present study are perseveration errors (PE), which refer to non-desirable category repetitions following negative feedback, and set-loss errors (SLE), which refer to unsolicited category switches following positive feedback [12].

Figure 1.

A showcase trial sequence on the computerized WCST [12,13,14], which was used in this study [15]. On Trial t, the stimulus card depicts one green cross. It could be sorted by the color category (inner left key card, response 2), the number category (far left key card, response 1), or the shape category (inner right key card, response 3). The observation of response 3 indicates that the shape category was applied. The subsequent positive feedback (i.e., “REPEAT”) indicates that this response was correct and that the shape category should be repeated on the upcoming trials. However, on Trial t + 1, response 3 was pressed, indicating that the number category was applied. Erroneous switches of the applied category following positive feedback are called set-loss errors. Next, a negative feedback (i.e., “SWITCH”) indicates that this response was incorrect, implying that a category switch is required. On Trial t + 2, the number category was erroneously repeated, as response 2 was pressed. Erroneous repetitions of categories after negative feedback are called perseveration errors.

Increased WCST error propensities (usually measured as enhanced PE and/or SLE rates; [16]) are well-documented neuropsychological corollaries of many neurodegenerative diseases [15,17,18,19,20]. Among these diseases, and of particular interest for the current study, is amyotrophic lateral sclerosis (ALS), which is characterized by a loss of upper and lower motor neurons in the brain and spinal cord [21]. Accumulating research suggests that ALS pathophysiology comprises non-motor, prefrontal cortical areas in a non-negligible subset of patients [22,23,24,25]. As such, about 15% of ALS patients are affected by the behavioral variant of frontotemporal dementia [26,27,28]. Of further interest for the present study is Parkinson’s disease (PD), which is primarily characterized by a loss of dopaminergic neurons in nigro-striatal pathways [29,30]. Increased WCST error propensities were also observed in many other neurological diseases [31,32,33] and psychiatric disorders [34,35,36,37,38]. Therefore, the named behavioral measures of increased WCST error propensities do not provide sufficient nosological specificity to serve as pathognomonic neuropsychological symptoms of particular neurological diseases or psychiatric disorders [12,39].

The insufficient nosological specificity of WCST error propensities may relate to the ‘impureness’ of behavioral WCST measures [11,12,40,41,42,43]. That is to say that behavioral WCST measures may originate from a mixture of multiple covert cognitive processes. The dysfunction of any single cognitive process, or of any combination of these processes, could manifest itself as increased WCST error propensities [12]. These considerations suggest that WCST measures may be severely limited in their diagnostic utility as a consequence of their impurity at the level of covert cognitive processes.

Yet, increased WCST error propensities may still arise from potentially dissociable covert cognitive impairments in diverse patient groups. Such circumscribed cognitive impairments may not be detectable by WCST error analyses because they may all be behaviorally expressed as enhanced PE and/or SLE rates, as discussed above. As one example, both ALS patients and PD patients show increased error propensities on the WCST (see above). Despite this commonality between the two groups of patients, the neurodegenerative alterations that occur in ALS patients could still affect a set of covert cognitive processes that remain spared in PD patients, who, in contrast, show alterations in a different set of covert cognitive processes [12]. Thus, dissociable covert cognitive impairments might be detected across these (and other) groups of patients with pathophysiological characteristics that are at least partially distinct. This possibility remains viable despite the fact that it has been clear for a long time that the nosological specificity of the commonly considered behavioral WCST measures remains unsatisfactory [31,44,45].

Computational cognitive neuropsychology offers an approach to decompose behavior that was observed on neuropsychological assessment instruments into covert cognitive processes [41,46]. Computational cognitive neuropsychology utilizes mathematical formalization of (1) the assumed covert cognitive processes, and (2) the way in which these processes interact [47,48,49,50,51,52,53,54,55,56,57]. Analyzing behavior on a neuropsychological assessment instrument, such as the WCST, via computational modeling allows estimating a set of latent variables, which reflect the efficacy of the assumed covert cognitive processes. Computational cognitive neuropsychology yields covert cognitive variables across diverse patient groups, which may be more specifically related to some of the pathophysiological characteristics of neurodegenerative diseases than behavioral measures, such as WCST error propensities. The present study exemplifies how computational cognitive neuropsychology may contribute to advancements in the field of executive control by (1) providing novel WCST-based latent variables reflecting different facets of cognitive flexibility, and (2) analyzing their nosological specificity in patients with ALS in comparison with patients with PD.

Several previously published studies conducted computational modeling of behavioral performance on the WCST [42,58,59,60,61,62,63,64,65,66,67,68,69,70], but surprisingly none of these earlier studies applied reinforcement learning (RL) models [71,72,73,74,75,76,77]. RL represents a suitable framework for modeling WCST behavior because of the potential reinforcement-quality of WCST feedback stimuli [40,78] that were illustrated in Figure 1. The present study relies on a particular modification of RL, which we referred to as parallel RL in two related previous studies from our group [33,34].

Our parallel RL model [33,34] conceptualizes WCST behavior as resulting from two parallel, yet independent RL processes [40,79]. Cognitive learning at a conceptually higher level is complemented by sensorimotor learning at a conceptually lower level ([40] provides a rationale for the introduction of the two parallel RL levels). With regard to the WCST, cognitive learning considers objects of thought (i.e., which category to apply; cf. Figure 1) that guide the selection of task-appropriate responses: The reception of positive feedback enhances activation values of the currently applied category, whereas the reception of negative feedback reduces activation values of the currently applied category. Simple sensorimotor learning bypasses these objects of thought as it is solely concerned with selecting responses, irrespective of the to-be-applied category: Responses that were followed by positive feedback tend to be repeated, whereas responses that were followed by negative feedback tend to be avoided on upcoming trials.

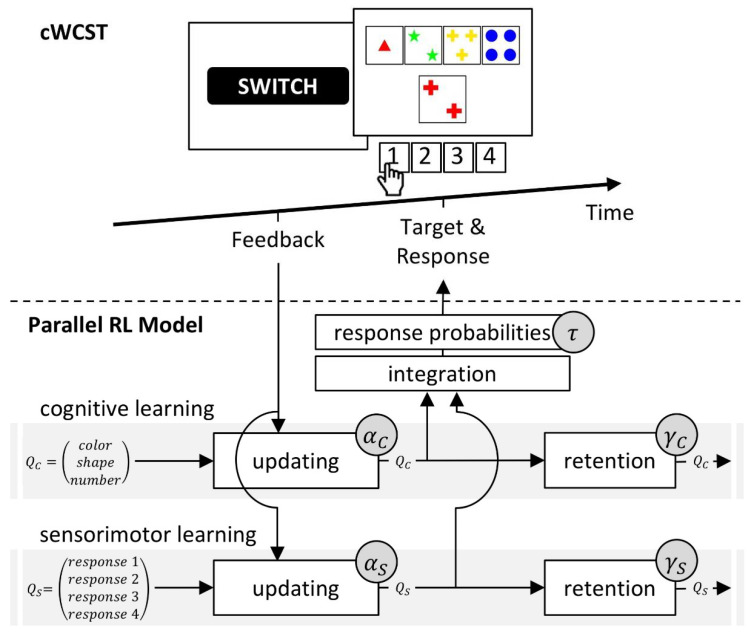

The parallel RL model comprises independently variable learning rates for positive and negative feedback because learning from positive and negative outcomes seems to be supported by distinct brain systems [80,81,82]. Our parallel RL model hence quantifies individual learning rates for positive and negative feedback for both cognitive and sensorimotor learning as latent variables. Our parallel RL model also comprises two additional latent variables that are not inherent parts of canonical RL models [71]. First, separate retention rates at cognitive and sensorimotor levels [83,84] quantify how well previously learned information remained available on current trials. Second, a ‘soft-max’ function [71,85] expresses how well finally executed responses accorded to the information that had been learned from feedback; we refer to this latent variable as the inverse temperature parameter (see also [86,87]). Figure 2 gives a schematic representation of the parallel RL model.

Figure 2.

A schematic representation of the cWCST and the parallel RL model. A negative feedback on the cWCST (top) indicates that the previously executed response (not depicted) was incorrect, implying that a category switch is required. The subsequent stimulus card is sorted by the color category, as indicated by observing response 1. The parallel RL model (bottom) assumes independent trial-by-trial cognitive and sensorimotor learning (upper and lower grey bar, respectively). Core to cognitive and sensorimotor learning are feedback predictions for the application of categories and the execution of responses, respectively. Feedback predictions are updated in response to received feedback. Individual cognitive and sensorimotor learning rates, which are further separated for positive and negative feedback, quantify the strengths of updating. For the subsequent target, cognitive and sensorimotor feedback predictions are integrated. Response probabilities are rendered from these integrated feedback predictions, with an individual inverse temperature parameter quantifying how well response probabilities accord to integrated feedback predictions. Retention mechanisms ensure that feedback predictions transfer to the next trial. Here, individual cognitive and sensorimotor retention rates quantify the strengths of retention of feedback predictions.

In a recently published study [41], we characterized PD patients’ alterations in latent variables of the parallel RL model by studying PD patients ‘on’ and ‘off’ their clinically prescribed dopamine (DA) medication and a matched healthy control (HC) group on a computerized WCST (cWCST) [88]. Application of the parallel RL model revealed that PD patients showed enhanced cognitive retention rates and reduced sensorimotor retention rates compared to HC participants. We concluded that (1) enhanced cognitive retention rates express bradyphrenia [89,90,91,92], and that (2) reduced sensorimotor retention rates express disturbed stimulus-response learning [93,94,95] at the level of latent cognitive symptoms in PD patients. Systemic DA replacement therapy did not remedy these two anomalies in PD patients but incurred non-desirable side effects such as decreasing cognitive learning rates in response to positive feedback. Iatrogenic side effects of DA replacement therapy in PD patients may be due to an overdosing of meso-limbic and/or cortical DA systems [96,97,98,99].

The present study examined the specificity of executive dysfunction in ALS through (1) a computational analysis of WCST behavior in patients with ALS and (2) a comparison between their latent cognitive profile and that of patients with PD from an earlier computational study [41]. In order to achieve these goals, we characterized ALS patients’ alterations in latent variables of the parallel RL model [33] by studying ALS patients and a matched HC group on a cWCST variant [15]. Executive dysfunctions are among the well-known neuropsychological sequelae of ALS pathophysiology [15,17,100], which comprises—at least in a non-negligible subset of ALS patients—prefrontal cortical areas [22,23,24,25]. This led us to predict that ALS patients may show evidence for alterations in latent variables of cognitive learning, which is putatively supported by prefrontal cortical areas of the brain [41,79,101]. Overall, the present computational cWCST study was designed to gain information about the nosological specificity of alterations in latent variables of the parallel RL model in ALS patients compared to PD patients.

2. Materials and Methods

2.1. Participants

Twenty-one ALS patients were recruited from the ALS/MND clinic at Hannover Medical School. All ALS patients fulfilled the revised El Escorial criteria for clinically probable or definite ALS [102]. ALS patients who had a history of any neurological disease other than ALS or any psychiatric disorder were not considered for participation in this study. Furthermore, ALS patients who had highly restricted pulmonary function or were not able to press a button on the response pad due to motor impairment were not considered for inclusion in this study. We excluded two ALS patients because of invalid cWCST behavior. cWCST behavior was considered invalid if participants committed odd errors (i.e., responses that match no viable sorting category) on more than 20% of all completed trials. Note that odd errors on more than 20% of all completed trials could indicate that card sorting behavior did not accord to instructed categories. We also excluded one ALS patient who showed clinical signs of frontotemporal dementia [103], resulting in a final sample of 18 ALS patients. Seventeen ALS patients had limb-onset disease and one patient had bulbar-onset disease. None of the patients had a percutaneous endoscopic gastrostomy.

The healthy control (HC) group comprised 21 participants without any diagnosed neurological or psychiatric disorder. HC participants were age-, gender- and education-matched to the initial sample of ALS patients [15]. Table A1 in Appendix A shows ALS patients’ and HC participants’ demographic, clinical, and neuropsychological characteristics. Participants were offered payment as compensation for participation (30 €). The study protocol was approved by the local ethics committee (vote number: 6269) and was in accordance with the Declaration of Helsinki. All participants gave written informed consent. Note that an initial analysis of these data was reported in [15].

2.2. Computerized Wisconsin Card Sorting Test

Participants completed a variant of the cWCST [15]. Participants were required to sort stimulus cards to key cards W = {one red triangle, two green stars, three yellow crosses, and four blue balls} by one of three sorting categories U = {color, shape, number}. Card sorts were indicated by button presses V = {response 1, response 2, response 3, response 4} on a standard computer keyboard. Buttons were spatially mapped to key cards. Stimulus cards varied on the three dimensions color, shape, and number and never shared more than one feature with any of the key cards, rendering the applied sorting category unambiguously identifiable [104]. The target display presented the four key cards, which appeared invariantly above the stimulus card.
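The unambiguous identification of the applied category can be illustrated with a minimal Python sketch. It assumes that the order of the key cards listed above corresponds to responses 1 to 4 from left to right; the names `KEY_CARDS` and `applied_category` are hypothetical and not part of the published analysis code.

```python
# Key cards in the order listed in the text; we assume this order maps onto
# responses 1-4 (buttons were spatially mapped to key cards).
KEY_CARDS = [
    {"number": 1, "color": "red", "shape": "triangle"},
    {"number": 2, "color": "green", "shape": "star"},
    {"number": 3, "color": "yellow", "shape": "cross"},
    {"number": 4, "color": "blue", "shape": "ball"},
]
CATEGORIES = ("color", "shape", "number")


def applied_category(stimulus, response):
    """Return the category implied by a response (1-4), or None for an odd error."""
    key_card = KEY_CARDS[response - 1]
    matches = [c for c in CATEGORIES if stimulus[c] == key_card[c]]
    if len(matches) > 1:
        # Valid cWCST stimulus cards never share more than one feature with a key card.
        raise ValueError("stimulus shares more than one feature with a key card")
    return matches[0] if matches else None


# Stimulus card from Trial t in Figure 1: one green cross.
stimulus = {"number": 1, "color": "green", "shape": "cross"}
for response in (1, 2, 3, 4):
    print(response, applied_category(stimulus, response))
```

For the stimulus card of Trial t in Figure 1, each response maps to at most one category, and a response that matches no category corresponds to an odd error.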

Card sorts were followed by positive and negative feedback cues that were the German words for repeat (i.e., ‘bleiben’) or shift (i.e., ‘wechseln’), respectively. A positive feedback cue indicated that the executed response was correct and that the applied sorting category should be repeated on the upcoming trial. A negative feedback cue indicated that the executed response was incorrect and that the applied sorting category should be shifted on the upcoming trial [105]. On any trial, the application of only one of the three sorting categories was correct.

The target display was presented until a button was pressed. Feedback cues remained on screen for 500 ms. The interval between a button press (i.e., target display offset) and feedback cue onset was 1500 ms, and the interval between feedback cue offset and target display onset was 1000 ms. Participants completed a practice session of 15 trials prior to the experimental session. The experimental session consisted of a pseudo-randomly generated sequence of 120 trials. The correct sorting category switched after a variable number of trials (mean number of trials until a switch of the correct category was 3.8). Participants were explicitly informed about the three viable sorting categories and about the fact that correct sorting categories would change periodically. The experiment was programmed using Presentation® (Neurobehavioral Systems, Berkeley, CA, USA).

2.3. Error Analysis

We analyzed PE (i.e., erroneous repetitions of the applied category after negative feedback) and SLE (i.e., erroneous switches of the applied category after positive feedback) [12,40,41]. Conditional error probabilities were computed as the ratio of the number of committed errors and the number of trials on which a respective error type was possible (i.e., all trials following negative or positive feedback for PE and SLE, respectively). We analyzed conditional error probabilities by means of a Bayesian repeated measures analysis of variance (ANOVA) using JASP version 0.11.1 (JASP Team, Amsterdam, The Netherlands) [106]. The Bayesian ANOVA included the within-subjects factor error type (PE vs. SLE) and the between subjects factor group (HC vs. ALS).
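The computation of conditional error probabilities can be sketched as follows. This is a minimal illustration and not the original analysis script; the trial representation (a list of category/feedback pairs) and the function name are assumptions, and odd errors (coded as `None`) are assumed to count as neither PE nor SLE.

```python
def conditional_error_probabilities(trials):
    """Compute conditional PE and SLE probabilities for one participant.

    trials: list of (applied_category, feedback) tuples in trial order,
    with feedback being "positive" or "negative".
    """
    pe = sle = after_negative = after_positive = 0
    for (prev_cat, prev_fb), (cat, _) in zip(trials, trials[1:]):
        if prev_fb == "negative":
            after_negative += 1                       # a PE was possible
            pe += cat is not None and cat == prev_cat
        else:
            after_positive += 1                       # an SLE was possible
            sle += cat is not None and cat != prev_cat
    p_pe = pe / after_negative if after_negative else float("nan")
    p_sle = sle / after_positive if after_positive else float("nan")
    return p_pe, p_sle


# Hypothetical five-trial sequence containing one SLE and one PE.
trials = [
    ("shape", "positive"),
    ("number", "negative"),   # switch after positive feedback: SLE
    ("number", "negative"),   # repetition after negative feedback: PE
    ("color", "positive"),
    ("color", "positive"),
]
print(conditional_error_probabilities(trials))
```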

We reported results of the Bayesian ANOVA as analysis of effects [107]. That is, evidence for an effect (i.e., a main effect of error type or group or the interaction of error type and group) in the data was quantified by inclusion Bayes factors (BFinclusion). Inclusion Bayes factors give the change from prior probability odds to posterior probability odds for the inclusion of an effect. Prior probabilities for the inclusion of an effect P(inclusion) were computed as the sum of all prior probabilities of ANOVA models that included the effect of interest. Posterior probabilities for the inclusion of an effect P(inclusion|data) were computed as the sum of all posterior probabilities of these ANOVA models. We used default settings of JASP for the Bayesian ANOVA as well as uniform prior probabilities for all ANOVA models. Descriptive statistics were reported as mean conditional error probabilities with 95% credibility intervals. We computed 95% credibility intervals as the interval of 1.96 standard errors of the mean around the mean.
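As a worked illustration of these definitions, the following Python sketch computes an inclusion Bayes factor as the ratio of posterior to prior inclusion odds, together with the interval of 1.96 standard errors around the mean. The function names are hypothetical; JASP performs these computations internally.

```python
def inclusion_bayes_factor(prior_inclusion, posterior_inclusion):
    """Change from prior inclusion odds to posterior inclusion odds."""
    prior_odds = prior_inclusion / (1 - prior_inclusion)
    posterior_odds = posterior_inclusion / (1 - posterior_inclusion)
    return posterior_odds / prior_odds


def interval_around_mean(mean, sem, z=1.96):
    """Interval of z standard errors of the mean around the mean."""
    return mean - z * sem, mean + z * sem


# Inclusion probabilities for the group effect and the interaction (Table 1).
print(round(inclusion_bayes_factor(0.600, 0.656), 2))
print(round(inclusion_bayes_factor(0.200, 0.385), 2))
```

Note that inclusion probabilities rounded to three decimals reproduce the reported Bayes factors only when posterior probabilities are not close to 1; for the effect of error type, the rounded value of 0.999 is too coarse to recover the reported Bayes factor.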

2.4. Computational Modeling

The parallel RL model [40,41] is based on a conceptualization of cWCST behavior as parallel cognitive and sensorimotor learning. Cognitive and sensorimotor learning are operationalized by Q-learning algorithms [71,108,109,110,111]. The implemented Q-learning algorithms operate on feedback prediction values, which quantify the strength of prediction of positive (feedback prediction values larger than 0) or negative feedback (feedback prediction values less than 0) following the application of a specific category (for cognitive learning) or the execution of a response (for sensorimotor learning). On any trial, feedback prediction values are updated in response to an observed feedback (Equations (A3) and (A7)). How strongly feedback prediction values are updated is modulated by prediction errors, which are the difference between the observed feedback and the current feedback prediction value (Equations (A2) and (A6)). Higher absolute prediction errors indicate stronger updating of feedback prediction values. Appendix B gives a mathematical description of the parallel RL model as well as details of parameter estimation.

The parallel RL model comprises cognitive and sensorimotor learning rate parameters. Learning rates quantify the extent to which prediction errors are integrated into feedback prediction values of the applied category (for cognitive learning; Equation (A3)) or the executed response (for sensorimotor learning; Equation (A7)). On a behavioral level, a high cognitive learning rate indicates that a participant strongly adapts category selection to received feedback (i.e., a participant tends strongly to repeat or switch an applied category following positive or negative feedback, respectively), whereas a low cognitive learning rate indicates a marginal adaptation of category selection to received feedback. Likewise, a high sensorimotor learning rate indicates that a participant strongly adapts the sensorimotor selection of responses to received feedback (i.e., a participant tends strongly to repeat or avoid the execution of a particular response following positive or negative feedback, respectively). In contrast, a low sensorimotor learning rate indicates that a participant barely adapts the sensorimotor selection of responses to received feedback. As we implemented separate cognitive and sensorimotor learning rate parameters for trials that follow positive and negative feedback, each participant is characterized by a set of four learning rate parameters, i.e., cognitive learning rates for positive and negative feedback as well as sensorimotor learning rates for positive and negative feedback.
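The role of prediction errors and feedback-specific learning rates can be illustrated with a minimal sketch of a single update step. This is not the model's implementation (see Appendix B and the published code for that); it assumes that positive and negative feedback are coded as +1 and -1 and that the update takes the standard form of adding the learning-rate-weighted prediction error to the current value. All names are hypothetical.

```python
def update_prediction(q, feedback, alpha_pos, alpha_neg):
    """One prediction-error update of a single feedback prediction value.

    q: current feedback prediction value of the applied category
       (cognitive level) or the executed response (sensorimotor level).
    feedback: +1 for positive, -1 for negative feedback (assumed coding).
    alpha_pos, alpha_neg: learning rates for positive and negative feedback.
    """
    delta = feedback - q                              # prediction error
    alpha = alpha_pos if feedback > 0 else alpha_neg  # feedback-specific learning rate
    return q + alpha * delta, delta


# Illustrative learning rates only (here: 0.48 after positive, 0.06 after negative feedback).
q, delta = update_prediction(0.0, +1, alpha_pos=0.48, alpha_neg=0.06)
print(q, delta)
q, delta = update_prediction(q, -1, alpha_pos=0.48, alpha_neg=0.06)
print(q, delta)
```

With a high learning rate after positive feedback and a low learning rate after negative feedback, a single negative feedback reduces the prediction value only slightly, which mirrors the verbal description of marginal adaptation under low learning rates.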

The parallel RL model also parameterizes retention rates [83,84] at cognitive and sensorimotor levels. Retention rates quantify the extent to which feedback prediction values from the previous trial will transfer to the current trial [40,41,83,84] (Equations (A1) and (A5)). Higher retention rates indicate more pronounced transfers to current trials. At the cognitive level, higher retention rates should support adequate WCST behavior in response to positive feedback (attenuating SLE), but higher retention rates should interfere with adequate WCST behavior in response to negative feedback (enhancing PE). At the sensorimotor level, higher retention rates should lead to repetitive patterns of responding following positive feedback, as the resulting positive feedback prediction values retain higher levels of activation. In contrast, higher retention rates should lead to non-repetitive responding following negative feedback, as the resulting negative feedback prediction values retain larger (negative) levels of activation. Overall, retention rates reflect participants’ flexibility vs. stability [112,113,114] at cognitive or sensorimotor levels. With regard to successful cWCST behavior, high degrees of flexibility at the cognitive level are advantageous in response to negative feedback, whereas high degrees of stability are advantageous in response to positive feedback.
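The effect of retention rates can be sketched under the assumption that retention acts as a multiplicative scaling of the previous trial's feedback prediction value; the exact form is given by Equations (A1) and (A5), which this sketch does not claim to reproduce. The function name is hypothetical.

```python
def retained_trace(q_start, retention_rate, n_trials):
    """Feedback prediction value of a category or response that is not
    updated again, carried over n_trials trials (assumed multiplicative retention)."""
    values = [q_start]
    for _ in range(n_trials):
        values.append(retention_rate * values[-1])
    return values


# Arbitrary low and high retention rates for illustration.
for rate in (0.05, 0.50):
    print(rate, retained_trace(1.0, rate, n_trials=3))
```

Under this assumption, a higher retention rate keeps a previously reinforced value at a higher level on upcoming trials (stability), whereas a lower retention rate lets it fade quickly (flexibility).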

Eventual card sorts on any trial arise from the integration of cognitive and sensorimotor feedback prediction values (Equation (A8)). The extent to which finally overt responses accord to these integrated feedback prediction values is quantified by an individual inverse temperature parameter [71,85] (Equation (A9)). A low inverse temperature parameter indicates that responses strictly accord to integrated cognitive and sensorimotor learning, whereas a high inverse temperature parameter indicates that card sorts are less dependent on integrated cognitive and sensorimotor learning. Thus, with a high inverse temperature parameter, responses appear to be more random.
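The mapping from integrated feedback prediction values to response probabilities can be sketched with a ‘soft-max’ function. In line with the description above (higher parameter values yield more random responding), the sketch assumes that the parameter enters as a divisor of the integrated values; this is an assumption about Equation (A9), and the input values are arbitrary.

```python
import math


def response_probabilities(integrated_values, tau):
    """Soft-max over integrated feedback prediction values of the four responses.

    tau: the parameter referred to in the text as inverse temperature; it is
    assumed here to divide the values, so that larger tau flattens the
    probabilities towards uniform.
    """
    if tau <= 0:
        raise ValueError("tau must be positive")
    scaled = [v / tau for v in integrated_values]
    peak = max(scaled)                                # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


values = [0.5, 0.1, -0.2, -1.0]   # arbitrary integrated values for responses 1-4
for tau in (0.15, 0.19):
    print(tau, [round(p, 3) for p in response_probabilities(values, tau)])
```

With the larger parameter value, differences between response probabilities are attenuated, which corresponds to more random responding.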

We used Bayesian tests for direction to quantify evidence for group-related shifts of model parameters [108,115]. Bayes factors larger than 1 indicate evidence for an increase of a model parameter in ALS patients when compared to HC participants. Bayes factors less than 1 indicate evidence for a decrease in a model parameter in ALS patients when compared to HC participants. We interpreted Bayes factors by classes of evidential strength following [116]; Bayes factors larger than 10 (or less than 1/10) were interpreted as strong evidence for the presence of an effect, and Bayes factors larger than 100 (or less than 1/100) were interpreted as extreme evidence for the presence of an effect. This classification of Bayes factors was also used for the interpretation of results of the behavioral analysis. The implemented code of computational modeling analysis can be downloaded from https://osf.io/46nj5/.
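The interpretation rule for directional Bayes factors can be written down as a small helper. The sketch covers only the two classes named above (strong and extreme); the label for weaker evidence is a placeholder, since the remaining classes of [116] are not enumerated here. The function name is hypothetical.

```python
def evidence_class(bayes_factor):
    """Classify a directional Bayes factor (ALS patients vs. HC participants).

    Returns (direction, strength), with direction referring to the model
    parameter in ALS patients relative to HC participants.
    """
    if bayes_factor <= 0:
        raise ValueError("Bayes factors must be positive")
    if bayes_factor > 1:
        direction = "increase"
    elif bayes_factor < 1:
        direction = "decrease"
    else:
        direction = "none"
    magnitude = max(bayes_factor, 1 / bayes_factor)
    if magnitude > 100:
        strength = "extreme"
    elif magnitude > 10:
        strength = "strong"
    else:
        strength = "less than strong"   # placeholder label, see lead-in
    return direction, strength


print(evidence_class(37.46))
print(evidence_class(0.34))
```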

3. Results

3.1. Error Analysis

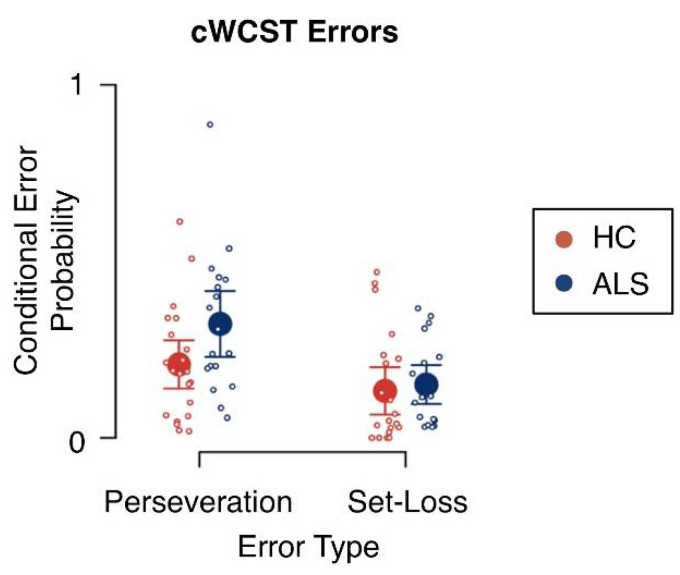

Mean conditional error probabilities are shown in Figure 3 and results of the Bayesian ANOVA are reported in Table 1. There was extreme evidence for an effect of error type on conditional error probabilities (BFinclusion = 836.42). Conditional PE probabilities (m = 0.26; SE = 0.03) were generally higher than conditional SLE probabilities (m = 0.14; SE = 0.02). There was no evidence for a main effect of group on conditional error probabilities (BFinclusion = 1.27) and there was no evidence for an interaction effect of error type and group on conditional error probabilities (BFinclusion = 2.50).

Figure 3.

Conditional error probabilities. Large circles indicate mean conditional error probabilities. Error bars indicate the 95% credibility interval. Small circles indicate individual conditional error probabilities. HC = healthy control participants; ALS = amyotrophic lateral sclerosis patients.

Table 1.

Analysis of effects of error type and group on conditional error probabilities.

| Effects | P(Inclusion) | P(Inclusion\|Data) | BFinclusion |
|---|---|---|---|
| Error Type | 0.600 | 0.999 | 836.42 ** |
| Group | 0.600 | 0.656 | 1.27 |
| Error Type x Group | 0.200 | 0.385 | 2.50 |

** extreme evidence.

3.2. Computational Modeling

Cognitive learning rates were overall higher after positive (median = 0.48, IQR = 0.11) than after negative feedback (median = 0.06, IQR = 0.04), indicating that participants showed stronger cognitive learning after positive than after negative feedback. In contrast, sensorimotor learning rates were overall higher after negative feedback (median = 0.05, IQR = 0.02) than after positive feedback (median < 0.01, IQR = 0.01). In fact, the sensorimotor learning rate after positive feedback was virtually zero, indicating that participants showed sensorimotor learning after negative feedback, whereas no sensorimotor learning happened after positive feedback. Taken together, cognitive learning rates were overall higher than sensorimotor learning rates, indicating a generally stronger contribution of cognitive learning to cWCST behavior when compared to sensorimotor learning. The sensorimotor retention rate (median = 0.21, IQR = 0.23) was overall higher than the cognitive retention rate (median = 0.11, IQR = 0.12). Thus, participants retained more sensorimotor information from previous trials than cognitive information.

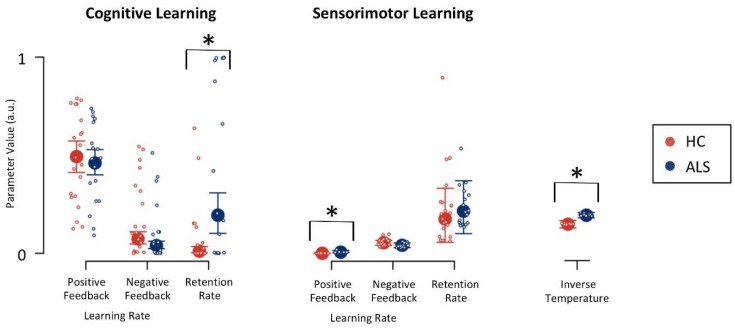

Figure 4 shows parameter estimates for ALS patients and healthy control participants. There was strong evidence (BF = 37.46; Table 2) for an increased cognitive retention rate in ALS patients (median = 0.19, IQR = 0.21) when compared to healthy control participants (median = 0.01, IQR = 0.03), indicating that ALS patients retained more cognitive-learning information from previous trials. There was strong evidence (BF = 17.07) for an increased inverse temperature parameter in ALS patients (median = 0.19, IQR = 0.03) when compared to healthy control participants (median = 0.15, IQR = 0.04), indicating an overall more random responding of ALS patients. Lastly, there was strong evidence (BF = 14.96) for an increased sensorimotor learning rate following positive feedback in ALS patients (median = 0.01, IQR = 0.01) when compared to healthy control participants (median < 0.01, IQR < 0.01).

Figure 4.

Model parameters for cognitive and sensorimotor learning. Large circles indicate medians of group-level posterior distributions. Error bars indicate lower and upper quartiles of group-level posterior distributions. Small circles indicate medians of individual-level posterior distributions; a.u. = arbitrary units; * strong evidence for the presence of a group difference; HC = healthy control participants; ALS = amyotrophic lateral sclerosis patients.

Table 2.

Bayes factors for effects of group on model parameters.

| Parameter | Bayes Factor |
|---|---|
| cognitive learning rate following positive feedback | 0.81 |
| cognitive learning rate following negative feedback | 0.34 |
| cognitive retention rate | 37.46 * |
| sensorimotor learning rate following positive feedback | 14.96 * |
| sensorimotor learning rate following negative feedback | 0.44 |
| sensorimotor retention rate | 1.49 |
| inverse temperature parameter | 17.07 * |

* strong evidence.

4. Discussion

The present data demonstrate how specific facets of executive dysfunction in ALS patients may be investigated through the application of computational techniques. Computational modeling of cWCST behavior by means of the parallel RL model [40] revealed that ALS patients show increased cognitive retention rates when compared to HC participants, indicating that ALS patients retained more cognitive information learned on previous trials. Furthermore, ALS patients showed increased inverse temperature parameters when compared to HC participants, indicating that ALS patients’ actual responding was more independent from learned information. In the following sections, we discuss some implications for neuropsychological sequelae of ALS. We also discuss the potential nosological specificity of computationally derived latent variables, and more generally some of the implications of the computational approach to neuropsychological assessment. Lastly, we outline study limitations and future research directions.

4.1. Implications for Neuropsychological Sequelae of ALS

Since ALS pathophysiology [15,17] comprises prefrontal cortical areas in a non-negligible subset of patients [22,23,24,25], we predicted that ALS patients may show evidence for alterations in latent variables of cognitive (i.e., putatively cortical) learning [41,79,101]. In line with this prediction, computational modeling of cWCST behavior revealed strong evidence for increased cognitive retention rates in ALS patients when compared to HC participants (see Figure 4 and Table 2). Please note that there was no evidence for any differences between ALS patients and HC participants with regard to neuropsychological characteristics as assessed by the Edinburgh Cognitive and Behavioural ALS Screen (ECAS) [117] (see Table A1).

ALS patients showed increased cognitive retention rates when compared to HC participants, indicating that ALS patients retained more learned cognitive information from previous trials (see Figure 4). With higher cognitive retention rates, objects of thought (i.e., categories on the cWCST) retain higher activation levels from trial to trial (for illustration, see Figure 5a,b). These higher retention rates support adequate cWCST behavior in response to positive feedback, since the to-be-repeated categories remain at high levels of activation on upcoming trials. In contrast, high retention rates interfere with shifting the applied categories in response to negative feedback, since outdated categories exert stronger proactive interference on upcoming trials. Thus, flexibility of cognitive learning is reduced in ALS patients compared to HC participants. HC participants’ configurations of the cognitive retention rate, on the other hand, might support adequate cWCST behavior in response to both positive and negative feedback. We conclude that ALS pathophysiology [21] is associated with a latent cognitive symptom which can probably be best referred to as bradyphrenia (i.e., ‘inflexibility of thought’) [41,89]. The evidence for bradyphrenia in ALS patients corroborates the assumption that ALS should not merely be considered as a motor neuron disease [22,25,28]. Instead, a number of cognitive dysfunctions appear as a corollary of ALS pathophysiology [118,119,120].
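The effect of the retention rate on proactive interference can be illustrated with a minimal Python sketch. It assumes that, in the absence of any feedback-driven update, an activation level is simply multiplied by the retention rate from one trial to the next (as described verbally in Appendix B). The function name, the starting value, and both retention rates are hypothetical and are not estimates from the present study.

```python
def decay_trace(initial_value, retention_rate, n_trials):
    """Return the activation level on each trial when the value is only
    multiplied by the retention rate (no feedback-driven update)."""
    values = [initial_value]
    for _ in range(n_trials - 1):
        values.append(values[-1] * retention_rate)
    return values


# Hypothetical retention rates, chosen for illustration only.
low_retention = decay_trace(1.0, 0.5, 5)
high_retention = decay_trace(1.0, 0.9, 5)

for trial, (low, high) in enumerate(zip(low_retention, high_retention), start=1):
    print(f"trial {trial}: low retention = {low:.3f}, high retention = {high:.3f}")
```

Under these assumed values, an outdated category still holds about two thirds of its initial activation after four further trials with the higher rate (0.9^4 ≈ 0.656), but only about 6% with the lower rate (0.5^4 = 0.0625). This is the sense in which a high retention rate helps after positive feedback and hinders shifting after negative feedback.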

Figure 5.

Exemplary effects of between-group variations of latent variables. Panel (a) shows the exemplary trial sequence on the cWCST as depicted in Figure 1. Panel (b) shows cognitive-learning feedback prediction values (referred to in the text as activation levels of objects of thought) for the application of the shape category across seven trials, the first three of which are shown in (a). The positive feedback received for the application of the shape category on Trial 1 causes an increase in feedback prediction values. With high configurations of the cognitive retention rate, such as seen in ALS patients, feedback prediction values retain higher levels of activation from trial to trial. Note that the shape category was not applied on Trials 4 to 7. Panel (c) shows response probabilities on Trial 3. The probability of executing response 3 is highest (i.e., application of the shape category), followed by the probability of executing response 1 (i.e., application of the color category) and the probability of executing response 2 (i.e., application of the number category). With high configurations of the inverse temperature parameter, such as seen in ALS patients, differences between response probabilities are attenuated, biasing response probabilities towards a uniform probability of 0.33. Note that the probability of executing an odd response (i.e., a response that matches no viable sorting category; e.g., response 4 on Trial 3) is close to zero on any trial. Because the response probabilities of odd responses are virtually zero, such probabilities remain mostly unaffected by the inverse temperature parameter. The presented effects of model parameters were computed by varying exclusively the parameter of interest at arbitrary values while holding all other model parameters constant.

Bradyphrenia is typically considered as a hallmark cognitive symptom of PD [89]. As such, our previous computational study [41] revealed evidence for bradyphrenia in PD patients, i.e., increased cognitive retention rates. However, results of the present study suggest that bradyphrenia is not specifically related to PD. In contrast to this common opinion, bradyphrenia should be conceived as a disease-nonspecific symptom, which occurs as a functional corollary of pathophysiological alterations in both PD and ALS patients. Computational cognitive neuropsychology provides an explicit definition of bradyphrenia at the level of covert cognitive processes that support cognitive flexibility (i.e., high retention of the activation of objects of thought) along with a novel computational indicator of bradyphrenia (i.e., increased cognitive retention rates). Thus, our computational modeling analysis revealed that both ALS and PD patients can be characterized at the level of covert cognitive processes by an increased retention of the activation of objects of thought (i.e., bradyphrenia) [41].

Computational modeling also revealed that ALS patients show increased inverse temperature parameters in comparison to HC participants. The inverse temperature parameter expresses how well finally executed responses corresponded to the information that has been learned from feedback which was received on previous trials (for illustration, see Figure 5a,c) [71,85,86,87]. With high values of the inverse temperature parameter, overt responses are more independent of learned information, rendering responding more haphazard. Thus, our results indicate that ALS pathophysiology [21] comprises another symptom, which might be best described as haphazard responding. Haphazard responding in ALS patients could also be related to subclinical manifestations of frontal disinhibition, which represents a major symptom of frontotemporal dementia [100,103]. Alternatively, it also remains possible that the increased inverse temperature parameter in ALS patients expresses ALS-related motor impairments, such as deficient fine motor skills that compromise responding as required for successful cWCST behavior [100].
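How this parameter renders responding more haphazard can be sketched in a few lines of Python. The sketch assumes that the parameter enters the softmax rule as a divisor of the learned values, which is the reading consistent with the statement that high values attenuate differences between response probabilities. The function name and all numerical values are hypothetical.

```python
import math


def response_probabilities(values, temperature):
    """Softmax over learned values divided by a temperature-like parameter
    (assumed form: larger parameter values flatten the distribution)."""
    scaled = [value / temperature for value in values]
    top = max(scaled)  # subtract the maximum for numerical stability
    weights = [math.exp(s - top) for s in scaled]
    total = sum(weights)
    return [w / total for w in weights]


# Hypothetical learned values for three viable responses.
learned_values = [0.2, -0.1, 0.6]

sharp = response_probabilities(learned_values, 0.1)  # low parameter value
flat = response_probabilities(learned_values, 5.0)   # high parameter value

print("low value: ", [round(p, 3) for p in sharp])
print("high value:", [round(p, 3) for p in flat])
```

With the low value, almost all probability mass falls on the response with the highest learned value. With the high value, the three probabilities move close to 1/3, so that the executed response depends only weakly on what has been learned.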

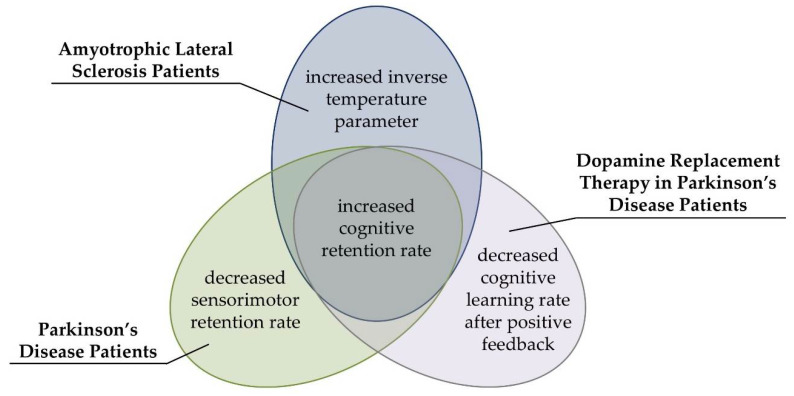

4.2. Computational Modeling Provides Nosologically Specific Indicators of Executive Dysfunctions

There was no evidence for alterations in conditional PE or SLE probabilities in ALS patients (see Table 1), and there was no evidence for such alterations in PD patients in our previous study [41]. Thus, ALS patients were phenomenologically indiscernible from PD patients with regard to analyses of behavioral cWCST error measures. Computational modeling [40] revealed that ALS and PD patients [41] likewise showed evidence for increased cognitive retention rates when compared with their respective HC participants. Thus, both ALS and PD pathophysiology seem to comprise a disease-nonspecific latent cognitive symptom, i.e., bradyphrenia (for illustration, see Figure 6) [41,89]. In addition to this common symptom, there were also differences between ALS and PD patients [41] in other latent variables of the parallel RL model [40]. ALS patients showed increased inverse temperature parameters when compared to HC participants (see Figure 4), whereas PD patients showed decreased sensorimotor retention rates when compared to HC participants in a related publication [41]. Furthermore, DA replacement therapy in PD patients decreased cognitive learning rates after positive feedback in that previous publication [41]. Thus, our data suggest that computational modeling by means of the parallel RL model [40] reveals both shared and nosologically specific alterations in latent variables in ALS and PD patients.

Figure 6.

Patterns of alterations in latent variables of the parallel RL model as revealed by the present study and our previous computational study in PD [41] (see the text for details).

Our results corroborate that behavioral WCST measures, such as PE and/or SLE rates, do not provide sufficient nosological specificity to serve as pathognomonic neuropsychological symptoms of particular neurological diseases or psychiatric disorders [12,39]. We proposed that the insufficient nosological specificity of these traditional WCST error measures may be related to their ‘impureness’ [11,12,40,41,42,43]. That is, behavioral WCST measures may originate from a mixture of multiple covert cognitive processes, and diverse patient groups might show differentiable impairments in covert cognitive processes. However, such cognitive impairments may not be detectable via WCST error measures because they may all be behaviorally expressed as enhanced PE and/or SLE rates. In line with this assumption, computational modeling of covert cognitive processes by means of the parallel RL model [40] revealed that ALS and PD patients show disease-nonspecific increased cognitive retention rates (indicating bradyphrenia), but also disease-specific alterations in other latent variables (see Figure 6). We conclude that ALS and PD patients can be characterized by a mixture of shared and specific impairments at the level of covert cognitive processes, and we suggest that computational modeling provides an appropriate technique to complement studies of disease-related cognitive impairments.

The question of how latent variables of the parallel RL model [40] could be specifically related to some of the pathophysiologic characteristics of ALS and PD deserves further inquiry. Cognitive learning, as implemented in the parallel RL model [40], is assumed to be supported by prefrontal cortical areas of the brain [41,79]. As such, dysfunctions in prefrontal cortical areas might manifest as altered latent variables of cognitive learning. We found here and in our previous publication [41] that cognitive retention rates were increased in ALS and PD patients (see Figure 6). Thus, bradyphrenia represents a disease-nonspecific latent symptom, which may be related to shared pathophysiological aspects of both diseases (ALS and PD) that remain to be delineated.

ALS pathophysiology comprises prefrontal cortical areas in a non-negligible subset of patients [22,23,24,25]. More precisely, there is increasing evidence that ALS pathophysiology expands to the premotor cortex (PMC; Brodmann areas 6 and 8) as well as to the dorsolateral prefrontal cortex (DLPFC; Brodmann areas 46 and 9) [22,25]. In PD patients, the loss of DA neurons is most severe in nigro-striatal pathways [29,30], but other DA systems, such as the meso-cortical system, appear to be affected as well [121]. Importantly, the meso-cortical system is assumed to be overstimulated by DA replacement therapy in at least some PD patients [96,97,98,99]. The meso-cortical DA system comprises, among other cortical areas, the PMC and the DLPFC [122], so that these two cortical areas seem to be affected by PD itself as well as by PD patients’ DA replacement therapy. Thus, we hypothesize a correlation between increased cognitive retention rates and potential dysfunctions in the PMC and/or the DLPFC in both diseases that we consider here. This hypothesized correlation also stresses the notion that cognitive learning, as implemented in the parallel RL model [40], may be supported by prefrontal cortical areas of the brain [41,79,101]. Moreover, our data suggest that bradyphrenia should not be considered in a disease-specific manner, but that bradyphrenia might provide a marker of prefrontal dysfunctions in general.

There were some striking divergences between alterations in latent variables in ALS and PD patients (see Figure 6). First, inverse temperature parameters were found to be increased in ALS patients, but they were not found to be altered in PD patients [41]. Thus, increased inverse temperature parameters could be related to dysfunctions in the motor cortex of ALS patients [21]. Second, PD patients showed decreased sensorimotor retention rates, which indicated impaired stimulus-response learning in PD patients [41]. The sensorimotor retention rate was not found to be altered in ALS patients. Sensorimotor learning is putatively supported by striatal areas [41,79], which are primarily affected in PD patients [29,30]. Hence, decreased sensorimotor retention rates could be specifically related to PD patients’ dysfunctions in striatal brain areas. Finally, DA replacement therapy in PD patients induced decreased cognitive learning rates following positive feedback [41]. DA replacement therapy is assumed to overstimulate the meso-limbic DA system in PD patients [96,97,98,99], which is associated with anticipation of reward or positive feedback [123,124]. Decreased cognitive learning rates following positive feedback might therefore be a computational corollary of potential overstimulation of the meso-limbic DA system in PD patients through clinically titrated DA replacement therapy [41].

As an interim conclusion, latent variables of the parallel RL model [40] could be specifically related to some of the pathophysiologic characteristics of ALS and PD. The comparative analysis of ALS and PD patients’ alterations in latent computational variables remains correlational and should be interpreted with caution. However, this comparative analysis led to testable neuroanatomical hypotheses. In particular, the proposed relationship between cognitive retention rates and the prefrontal cortical areas should be addressed by confirmatory brain imaging studies. These WCST-based imaging studies should combine computational modeling with brain imaging [108], such as diffusion-weighted magnetic resonance imaging for the detection of disease-related micro-structural changes in brain areas of interest [125], or lesion-(latent) symptom mapping [62]. Such brain imaging studies should also address potential relationships of latent variables and other brain areas, which appear to be affected in ALS patients. For example, several studies suggest that dysfunctions in frontotemporal brain areas [24,126,127] and the basal ganglia [128] are related to executive dysfunctions in ALS patients.

4.3. Implications for Neuropsychological Assessment

The neuropsychological assessment of cognitive dysfunctions is almost entirely based on behavioral observations [12], with counting the occurrence of particular behavioral events, such as the number of PE and/or SLE committed in the context of the WCST, as the current state-of-the-art approach to neuropsychological assessment. Such counts serve as indicators for the degree to which particular cognitive dysfunctions are present. For example, a typical inference from the presence of enhanced PE rates on the WCST would be that the assessed participant shows signs of cognitive inflexibility [4,9,10,11,40]. There are three shortcomings of this procedure [41]. First, neuropsychological assessment refers to cognitive assessment, yet the referenced cognitive processes remain unobservable. Thus, neuropsychological assessment involves inferences that go beyond behavioral observations. Second, cognitive constructs, which are referred to by neuropsychological assessment, are often vaguely defined. That is, cognitive constructs are typically verbal re-descriptions of the behavioral observations that were made (take PE as an indicator of cognitive inflexibility as an example). Moreover, the referenced behavioral observations may not originate from a single cognitive process but should better be conceived as resulting from a mixture of multiple covert cognitive processes [11,12,40,41,42,43]. Finally, observable behavioral measures on neuropsychological assessment instruments do not possess satisfactory nosological specificity for diverse patient groups, as detailed above [12,39].

Computational cognitive neuropsychology offers a route towards advanced neuropsychological assessment, which could remedy some of these shortcomings. Computational modeling offers a technique to decompose behavior on neuropsychological assessment instruments into assumed latent cognitive processes, allowing inferences closer to the level of covert cognitive processes [41,46]. Importantly, latent variables, which reflect the efficacy of covert cognitive processes, are unambiguously defined (see Appendix B) and, thereby, may replace the traditional verbal constructs of neuropsychological assessment [47,48,49,50,51,52,53,54,55,56,57]. The present study exemplifies that latent variables obtained from computational modeling may provide indicators of shared latent symptoms as well as nosologically specific differentiable facets of latent executive dysfunctions. Thus, computational cognitive neuropsychology offers the potential to improve inferential validity of neuropsychological assessment.

4.4. Study Limitations and Directions for Future Research

In line with previous studies [40,41], estimates of the sensorimotor learning rate after positive feedback were virtually zero (see Figure 4), indicating that sensorimotor learning did not happen following positive feedback (for a detailed discussion, see [40]). However, there was strong evidence for an effect of group membership on the sensorimotor learning rate after positive feedback (see Table 2). Inspection of Figure 4 revealed that this evidence for a group effect on the sensorimotor learning rate following positive feedback was most likely due to one single outlier in the ALS patients. Thus, we did not interpret the evidence for a group effect on this close-to-zero model parameter.

A recent meta-analytical review [15] reported evidence for enhanced PE rates in ALS patients. In the present study, mean conditional PE probabilities were increased in ALS patients when compared to HC participants (see Figure 3). However, this increase in conditional PE probabilities was not supported by any evidence from the Bayesian ANOVA (see Table 1). This missing evidence was most likely due to the small sample size typical for neuropsychological ALS studies [129], which constitutes a limitation of this study.

There are two differences between the cWCST variant that we utilized in the present study and the cWCST variant that was used to characterize PD patients’ alterations in latent variables of the parallel RL model [41]. First, in the present study, the cWCST was completed after a fixed number of 120 trials. In contrast, the cWCST variant that was utilized to assess PD patients ended after a fixed number of 40 completed categories irrespective of the number of completed trials (median = 212 trials, min = 170 trials, max = 281 trials). Second, in this study, the prevailing sorting category switched after a random number of trials, which was defined prior to the experiment. On the cWCST variant that was utilized to assess PD patients, participants had to complete a minimum of two correct card sorts to trigger a switch of the prevailing sorting category. However, estimates of the latent variables of interest (i.e., latent variables that were altered in patient groups relative to healthy controls) were comparable in size between the current study of ALS patients and the previous study of PD patients [41]. We therefore consider it unlikely that differences between the administered cWCST variants interfere with comparisons of alterations in latent variables of ALS and PD patients. However, future research should address how specific configurations of the cWCST could affect parameter estimates of the parallel RL model.

We assessed neuropsychological characteristics of ALS patients and HC participants by means of the ECAS [117]. The ECAS allows for the assessment of both ALS-specific and ALS-nonspecific neuropsychological characteristics with strong clinical validity [130]. However, we did not find evidence for any differences between ALS patients and HC participants with regard to the ECAS (see Table A1). The missing evidence was most likely due to the small sample size, which is typical for neuropsychological ALS studies [129]. Alternatively, a more extensive evaluation of the neuropsychological characteristics of ALS patients and HC participants may have been required.

In a recent study [40], we demonstrated that the parallel RL model provides a better conceptualization of behavioral cWCST data than competing computational models [42]. This conclusion was based on the analysis of a large sample of young volunteers (N = 375). It remains to be seen whether this finding transfers to other populations, such as ALS patients. As the sample size was relatively small in the present study, we did not consider any model comparisons. However, future studies should test which of all applicable computational models provides the best conceptualization of the to-be-studied behavioral data and base further analyses on the winning computational model [131].

5. Conclusions

Computational modeling of cWCST behavior revealed specific facets of executive dysfunction in ALS patients, which are difficult to study by traditional WCST error measures. Our data indicate ALS-related latent cognitive symptoms, which might be best referred to as bradyphrenia and as haphazard responding. Bradyphrenia seems to represent a disease-nonspecific latent cognitive symptom in ALS and PD patients, but disease-specific latent cognitive symptoms were also discernible. Thus, our results suggest that latent variables of the parallel RL model [40] provide nosologically specific indicators of latent facets of executive dysfunction in ALS and PD patients. The comparative analysis of ALS and PD patients’ alterations in latent dysexecutive symptoms resulted in novel hypotheses about which brain areas support these specific facets of executive dysfunction, paving the way for future confirmatory brain imaging studies.

Acknowledgments

Thanks are due to Maj-Britt Vogts and Stefanie Fürkötter for collecting the data.

Appendix A

Table A1.

Demographic, clinical, and psychological characteristics.

| Characteristic | Maximum | HC (N = 21): Mean | HC: SD | HC: n | ALS (N = 18): Mean | ALS: SD | ALS: n | Bayes Factor |
|---|---|---|---|---|---|---|---|---|
| Age (years) | | 57.67 | 9.16 | 21 | 58.11 | 10.05 | 18 | 0.32 |
| Female [%] | | 22% | | | 29% | | | 0.91 # |
| Education (years) | | 14.29 | 2.93 | 21 | 13.92 | 2.16 | 18 | 0.34 |
| Disease Duration (months) | | | | | 50.47 | 82.93 | 17 | |
| FVC | | | | | 85.75 | 12.84 | 16 | |
| ESS | 24 | | | | 6.12 | 3.06 | 17 | |
| ALSFRS-EX: total | 60 | | | | 47.41 | 6.99 | 17 | |
| Bulbar Subscore | 16 | | | | 13.94 | 1.89 | 17 | |
| Fine Motor Subscore | 16 | | | | 11.41 | 2.87 | 17 | |
| Gross Motor Subscore | 16 | | | | 11.12 | 5.02 | 17 | |
| Respiratory Subscore | 16 | | | | 10.94 | 1.71 | 17 | |
| Progression Rate | 16 | | | | 0.71 | 0.57 | 17 | |
| MoCA | 30 | 27.24 | 2.68 | 21 | 26.72 | 3.18 | 18 | 0.35 |
| FAB | 18 | 17.19 | 1.25 | 21 | 16.50 | 2.19 | 16 | 0.57 |
| ECAS: total | 136 | 104.38 | 11.58 | 21 | 103.89 | 12.19 | 18 | 0.32 |
| ECAS: ALS-Specific | 100 | 75.48 | 10.63 | 21 | 75.89 | 10.26 | 18 | 0.40 |
| Language | 28 | 26.62 | 1.94 | 21 | 25.94 | 2.13 | 18 | 0.48 |
| Fluency | 24 | 11.52 | 5.83 | 21 | 10.89 | 4.13 | 18 | 0.33 |
| Executive | 48 | 37.33 | 4.50 | 21 | 39.06 | 5.92 | 18 | 0.48 |
| ECAS: ALS-Nonspecific | 36 | 28.86 | 3.07 | 21 | 28.00 | 3.66 | 18 | 0.31 |
| Memory | 24 | 17.24 | 2.83 | 21 | 16.44 | 3.54 | 18 | 0.40 |
| Visuospatial | 12 | 11.62 | 0.74 | 21 | 11.56 | 0.62 | 18 | 0.32 |

Bayes factors were obtained from Bayesian independent samples t-tests. # Bayes factor was obtained from Bayesian contingency tables. All inferential statistics were carried out by means of JASP [106]. ALS = Amyotrophic lateral sclerosis patients; HC = healthy control participants; FVC = forced vital capacity; ESS = Epworth Sleepiness Scale [132]; ALSFRS-EX = Amyotrophic Lateral Sclerosis Functional Rating Scale—Self-Rating Scale [133]; MoCA = Montreal Cognitive Assessment [134]; FAB = Frontal Assessment Battery [135]; ECAS = Edinburgh Cognitive and Behavioural ALS Screen [117]; Please note that the present study utilized different WCST-based inclusion criteria for ALS patients and HC participants when compared to the initial publication of the data [15].

Appendix B

The cognitive Q-learning algorithm operates on feedback prediction values for the application of sorting categories. $Q_C(t)$ is a 3 (categories) × 1 vector that quantifies feedback prediction values for the application of sorting categories on trial t. The strength of transfer of feedback prediction values from trial to trial is modeled as:

$$Q_C(t+1) = \gamma_C \, Q_C(t) \tag{A1}$$

where $\gamma_C$ is the individual cognitive retention rate. Trial-wise cognitive prediction errors $\delta_C(t)$ are computed with regard to the applied category u ∈ U on trial t as:

$$\delta_C(t) = r(t) - Q_{C,u}(t) \tag{A2}$$

r(t) denotes the received feedback on trial t, which is 1 for positive feedback and −1 for negative feedback. Feedback prediction values for the application of sorting categories are updated as:

$$Q_C(t+1) \leftarrow Q_C(t+1) + Y_C(t) \, \alpha_C \, \delta_C(t) \tag{A3}$$

$Y_C(t)$ is a 3 (categories) × 1 dummy vector, which is 1 for the applied category u and 0 for all other categories on trial t. The dummy vector ensures that only the feedback prediction value for the applied category u on trial t is updated by the cognitive prediction error $\delta_C(t)$. $\alpha_C$ denotes the cognitive learning rate, which was further separated for trials following positive and negative feedback (i.e., $\alpha_C^{+}$ and $\alpha_C^{-}$, respectively).

Feedback prediction values for the application of categories are matched to responses, which is represented by a 4 (responses) × 1 vector with entries $Q_{C,v}(t+1)$. For response v ∈ V on trial t + 1, $Q_{C,v}(t+1)$ is computed as:

$$Q_{C,v}(t+1) = X_v^{\top}(t+1) \, Q_C(t+1) \tag{A4}$$

where $X_v(t+1)$ is a 3 (categories) × 1 vector that represents the match between a stimulus card and response v with regard to sorting categories. Let $X_{v,u}(t+1)$ be 1 if a stimulus card matches response v by category u and let $X_{v,u}(t+1)$ be 0 otherwise. $X_v^{\top}(t+1)$ is the transpose of $X_v(t+1)$. To account for responses that match no viable sorting category (i.e., responses that certainly yield a negative feedback with regard to cognitive learning), feedback prediction values of such responses are assigned a value of −1.

The sensorimotor Q-learning algorithm parallels the cognitive Q-learning algorithm. However, the sensorimotor Q-learning algorithm directly operates on feedback prediction values for the execution of responses. A 4 (responses) × 1 vector $Q_S(t)$ quantifies feedback prediction values for the execution of responses on trial t. The strength of the transfer of feedback prediction values from trial to trial is given by:

$$Q_S(t+1) = \gamma_S \, Q_S(t) \tag{A5}$$

$\gamma_S$ denotes the individual sensorimotor retention rate. The sensorimotor prediction error $\delta_S(t)$ on trial t is computed as:

$$\delta_S(t) = r(t) - Q_{S,v}(t) \tag{A6}$$

Here, $Q_{S,v}(t)$ is the feedback prediction value of executed response v on trial t. Feedback prediction values for the execution of responses are updated as:

$$Q_S(t+1) \leftarrow Q_S(t+1) + Y_S(t) \, \alpha_S \, \delta_S(t) \tag{A7}$$

Again, $Y_S(t)$ denotes a 4 (responses) × 1 dummy vector that is 1 for the executed response v and 0 for all other responses on trial t. $Y_S(t)$ ensures that only feedback prediction values of the executed response are updated by the sensorimotor prediction error $\delta_S(t)$. We assumed independent individual sensorimotor learning rate parameters for positive and negative feedback, $\alpha_S^{+}$ and $\alpha_S^{-}$, respectively.

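Feedback prediction values of cognitive and sensorimotor Q-learning algorithms are linearly integrated. More precisely, integrated feedback prediction values $Q_{\mathrm{sum},v}(t+1)$ for response v ∈ V on trial t + 1 are computed as:

$$Q_{\mathrm{sum},v}(t+1) = Q_{C,v}(t+1) + Q_{S,v}(t+1) \tag{A8}$$

The response probability $P_v(t+1)$ of response v on trial t + 1 is derived from integrated feedback prediction values using a “softmax” logistic function [71]:

$$P_v(t+1) = \frac{\exp\left(Q_{\mathrm{sum},v}(t+1)/\tau\right)}{\sum_{j \in V} \exp\left(Q_{\mathrm{sum},j}(t+1)/\tau\right)} \tag{A9}$$

where $\tau$ denotes the individual inverse temperature parameter. Note that $\tau$ enters as a divisor, so that higher values of $\tau$ attenuate differences between response probabilities (cf. Figure 5c).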
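The steps described in this appendix can be strung together for a single trial in a short Python sketch. It is one reading of the verbal description, not the authors' RStan implementation (which is available from the OSF link given at the end of this appendix). In particular, it assumes that the prediction error is computed against the pre-decay value, that responses matching no category receive a value of −1, and that the temperature-like parameter acts as a divisor. All function names, parameter values, and the card layout are hypothetical.

```python
import math


def decay_then_update(values, chosen, feedback, retention, rate_pos, rate_neg):
    """Decay all values by the retention rate, then update the chosen entry
    by its prediction error (taken relative to the pre-decay value)."""
    prediction_error = feedback - values[chosen]
    learning_rate = rate_pos if feedback > 0 else rate_neg
    updated = [retention * value for value in values]
    updated[chosen] += learning_rate * prediction_error
    return updated


def category_values_to_responses(category_values, match_rows):
    """match_rows[v][u] is 1 if response v matches the card on category u.
    Responses that match no category are assigned -1.0 (assumed value)."""
    mapped = []
    for row in match_rows:
        if sum(row) == 0:
            mapped.append(-1.0)
        else:
            mapped.append(sum(m * q for m, q in zip(row, category_values)))
    return mapped


def softmax(values, temperature):
    """Softmax with the temperature-like parameter as a divisor."""
    scaled = [value / temperature for value in values]
    top = max(scaled)
    weights = [math.exp(s - top) for s in scaled]
    total = sum(weights)
    return [w / total for w in weights]


# Feedback prediction values are initialized as 0.
cognitive = [0.0, 0.0, 0.0]          # categories: color, shape, number
sensorimotor = [0.0, 0.0, 0.0, 0.0]  # responses 1 to 4

# Trial t: the shape category (index 1) is applied by executing response 3
# (index 2), and positive feedback (+1) is received.
cognitive = decay_then_update(cognitive, 1, 1.0, 0.8, 0.5, 0.3)
sensorimotor = decay_then_update(sensorimotor, 2, 1.0, 0.6, 0.1, 0.1)

# Trial t + 1: hypothetical card on which response 1 matches color,
# response 2 matches number, response 3 matches shape, response 4 nothing.
match_rows = [[1, 0, 0], [0, 0, 1], [0, 1, 0], [0, 0, 0]]
cognitive_by_response = category_values_to_responses(cognitive, match_rows)
integrated = [c + s for c, s in zip(cognitive_by_response, sensorimotor)]
probabilities = softmax(integrated, 0.2)

print([round(p, 3) for p in probabilities])
```

In this example, the response that re-applies the rewarded shape category receives most of the probability mass, and the response that matches no category is almost never predicted.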

Parameters of the parallel RL model (i.e., $\alpha_C^{+}$, $\alpha_C^{-}$, $\gamma_C$, $\alpha_S^{+}$, $\alpha_S^{-}$, $\gamma_S$, and $\tau$) were estimated using hierarchical Bayesian analysis [111,136,137,138,139,140,141,142] by means of RStan [143]. Hierarchical Bayesian analysis allows for individual differences in model parameters while pooling information across all individuals by means of hyper- (i.e., group-level) parameters [84,136,138]. More precisely, any individual-level model parameter $z_i$ of participant i was drawn from a hyper-level normal distribution with mean $\mu_z$ and standard deviation $\sigma_z$. In order to facilitate hierarchical Bayesian analysis, we implemented a non-centered parameterization [111,144]. That is, individual-level model parameters were sampled from a standard normal distribution, which was multiplied by a hyper-level scale parameter $\sigma_z$ and shifted by a hyper-level location parameter $\mu_z$. The individual deviation of model parameter z of participant i from $\mu_z$ was modeled by an individual-level location parameter $z'_i$ [40,41]. Taken together, model parameter z of participant i was implemented as:

$$z_i = \mu_z + \sigma_z \, z'_i \tag{A10}$$

In order to account for effects of ALS pathology on model parameters, we introduced the between-subjects effect group [41,108,145]. That is, group-related shifts of model parameters were implemented by adding the hyper-level parameter $\mu_{z,\mathrm{group}}$ to the hyper-level location parameter $\mu_z$ for all HC participants. Please note that we implemented the hyper-level parameter $\mu_{z,\mathrm{group}}$ for all HC participants rather than for all ALS patients because the sample size was larger for HC participants, which allowed for estimation of $\mu_{z,\mathrm{group}}$ with higher statistical power.

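We further facilitated hierarchical Bayesian analysis by conducting parameter estimation in an unconstrained space [40,41,111,137,144]. That is, all location and scale parameters were free to vary without any constraints. The linear integration of location and scale parameters was then mapped to a constrained space (i.e., [0, 1]) using the cumulative distribution function of the standard normal distribution, $\Phi$ (i.e., the inverse of the Probit function). Taken together, individual-level model parameter $z_i$ for any ALS patient was modeled as:

$$z_i = \Phi\left(\mu_z + \sigma_z \, z'_i\right) \tag{A11}$$

and individual-level model parameter $z_i$ of any HC participant was modeled as:

$$z_i = \Phi\left(\mu_z + \mu_{z,\mathrm{group}} + \sigma_z \, z'_i\right) \tag{A12}$$

where $\mu_z$ and $\sigma_z$ denote the hyper-level location and scale parameters, $z'_i$ denotes the individual-level location parameter, and $\mu_{z,\mathrm{group}}$ denotes the group-related shift that was added for HC participants.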
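The non-centered parameterization and the mapping to the unit interval can be sketched in Python as follows. The sketch assumes that the mapping from the unconstrained space to [0, 1] is the standard normal cumulative distribution function; the function name, the hyper-level values, and the group shift are hypothetical and serve illustration only.

```python
import random
from statistics import NormalDist

standard_normal = NormalDist()  # mean 0, standard deviation 1


def individual_parameter(location, scale, raw_deviation, group_shift=0.0):
    """Combine hyper-level location and scale with an individual
    standard-normal deviation, add an optional group shift, and map the
    unconstrained sum to [0, 1] with the standard normal CDF."""
    unconstrained = location + group_shift + scale * raw_deviation
    return standard_normal.cdf(unconstrained)


rng = random.Random(1)
location, scale, group_shift = 0.3, 0.5, -0.2  # hypothetical values

# Individual deviations are drawn on the standard-normal scale.
raw_deviations = [rng.gauss(0.0, 1.0) for _ in range(5)]

patients = [individual_parameter(location, scale, raw) for raw in raw_deviations]
controls = [
    individual_parameter(location, scale, raw, group_shift)
    for raw in raw_deviations
]

print([round(p, 3) for p in patients])
print([round(c, 3) for c in controls])
```

Sampling the deviations on a standard-normal scale and rescaling them afterwards leaves the implied distribution of individual parameters unchanged, but it usually gives the sampler a better-conditioned geometry when individual-level data are sparse.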

In line with previous research, we assumed normal prior distributions (μ = 0, σ = 1) for location parameters and half-Cauchy prior distributions (μ = 0, σ = 5) for scale parameters [40,41,111]. Parameter estimation was done using three chains of 1000 iterations, including 500 warm-up iterations each. Parameter estimation was inspected quantitatively by the $\hat{R}$ statistic [146] and the effective sample size statistic as well as visually by trace plots. Feedback prediction values of the parallel RL model were initialized as 0.
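As an illustration of the quantitative convergence check, the following Python sketch computes a potential scale reduction factor in its classic, non-split form, on the assumption that this is the kind of convergence statistic referred to above. Stan reports a refined variant of this diagnostic, so the sketch conveys the idea rather than reproducing RStan's output. The simulated chains (three chains with 500 post-warm-up draws each) are hypothetical.

```python
import random
from statistics import mean, variance


def potential_scale_reduction(chains):
    """Classic (non-split) potential scale reduction factor for chains of
    equal length: compares pooled variance with within-chain variance."""
    n_draws = len(chains[0])
    within = mean([variance(chain) for chain in chains])
    between = n_draws * variance([mean(chain) for chain in chains])
    pooled = (n_draws - 1) / n_draws * within + between / n_draws
    return (pooled / within) ** 0.5


rng = random.Random(0)

# Three well-mixed chains that sample the same distribution.
mixed = [[rng.gauss(0.0, 1.0) for _ in range(500)] for _ in range(3)]

# Same chains, but the third one is stuck in a different region.
stuck = [mixed[0], mixed[1], [x + 3.0 for x in mixed[2]]]

rhat_mixed = potential_scale_reduction(mixed)
rhat_stuck = potential_scale_reduction(stuck)

print(f"well-mixed chains: {rhat_mixed:.3f}")
print(f"one stuck chain:   {rhat_stuck:.3f}")
```

Values close to 1 indicate that the chains agree with each other, whereas values clearly above 1 indicate that at least one chain explores a different region of the parameter space.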

We used Bayesian tests for direction to quantify evidence for group-related shifts of model parameters [108,115]. For any model parameter z, we computed a Bayes factor (BF) by dividing the posterior density of the group-related shift parameter $\mu_{z,\mathrm{group}}$ below zero by the posterior density above zero. Please note that Bayesian tests for direction were applicable, as prior distributions of $\mu_{z,\mathrm{group}}$ were symmetric around zero [147].
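With posterior samples at hand, such a test for direction reduces to a ratio of counts. The Python sketch below approximates the posterior mass below and above zero by the number of samples on either side. The function name and the simulated samples (1500 draws, matching three chains with 500 post-warm-up iterations each) are hypothetical stand-ins for actual sampler output.

```python
import random


def directional_bayes_factor(samples):
    """Ratio of posterior mass below zero to posterior mass above zero,
    approximated by counting posterior samples on each side."""
    below = sum(1 for sample in samples if sample < 0)
    above = sum(1 for sample in samples if sample > 0)
    if above == 0:
        return float("inf")  # all mass lies below zero
    return below / above


rng = random.Random(2)

# Hypothetical posterior samples of a group-related shift parameter.
shift_samples = [rng.gauss(-0.4, 0.3) for _ in range(1500)]

bf = directional_bayes_factor(shift_samples)
print(f"BF (below zero vs. above zero) = {bf:.2f}")
```

Because the prior is symmetric around zero, the prior odds of the two directions equal 1, so the ratio of posterior masses can be read directly as a Bayes factor.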

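Descriptive statistics of model parameters were obtained from posterior distributions of hyper-level location parameters. More precisely, the posterior distribution of model parameter z of ALS patients was given by:

$$\Phi\left(\mu_z\right) \tag{A13}$$

For HC participants, the posterior distribution of model parameter z was given by:

$$\Phi\left(\mu_z + \mu_{z,\mathrm{group}}\right) \tag{A14}$$

where $\Phi$ denotes the cumulative distribution function of the standard normal distribution, $\mu_z$ denotes the hyper-level location parameter, and $\mu_{z,\mathrm{group}}$ denotes the group-related shift for HC participants.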

Descriptive statistics of posterior distributions were reported as medians with lower and upper quartiles. We computed individual-level parameter estimates as medians of individual-level posterior distributions as derived by Equations (A11) and (A12). Correlational analysis was done using JASP version 0.11.1 [106]. The implemented code is available from https://osf.io/46nj5/.

Author Contributions

Conceptualization, A.S. and B.K.; Data curation, A.S., F.L. and C.S.; Formal analysis, A.S.; Funding acquisition, F.L. and B.K.; Investigation, A.S., F.L., C.S., S.P. and B.K.; Methodology, A.S. and B.K.; Project administration, F.L., C.S., S.P. and B.K.; Resources, S.P. and B.K.; Software, A.S.; Supervision, B.K.; Validation, A.S.; Visualization, A.S.; Writing—original draft, A.S. and B.K.; Writing—review and editing, A.S., F.L., C.S., S.P. and B.K. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Karlheinz-Hartmann Stiftung, Hannover, Germany. Florian Lange received funding from the German National Academic Foundation and the FWO and European Union’s Horizon 2020 research and innovation programme under the Marie Skłodowska-Curie grant agreement No 665501.

Conflicts of Interest

The authors declare no conflict of interest.

References

- 1. Duncan J., Emslie H., Williams P., Johnson R., Freer C. Intelligence and the frontal lobe: The organization of goal-directed behavior. Cogn. Psychol. 1996;30:257–303. doi: 10.1006/cogp.1996.0008.

- 2. Grafman J., Litvan I. Importance of deficits in executive functions. Lancet. 1999;354:1921–1923. doi: 10.1016/S0140-6736(99)90438-5.

- 3. MacPherson S.E., Gillebert C.R., Robinson G.A., Vallesi A. Editorial: Intra- and inter-individual variability of executive functions: Determinant and modulating factors in healthy and pathological conditions. Front. Psychol. 2019;10:432. doi: 10.3389/fpsyg.2019.00432.

- 4. Diamond A. Executive functions. Annu. Rev. Psychol. 2013;64:135–168. doi: 10.1146/annurev-psych-113011-143750.

- 5. Miller E.K., Cohen J.D. An integrative theory of prefrontal cortex function. Annu. Rev. Neurosci. 2001;24:167–202. doi: 10.1146/annurev.neuro.24.1.167.

- 6. Berg E.A. A simple objective technique for measuring flexibility in thinking. J. Gen. Psychol. 1948;39:15–22. doi: 10.1080/00221309.1948.9918159.

- 7. Grant D.A., Berg E.A. A behavioral analysis of degree of reinforcement and ease of shifting to new responses in a Weigl-type card-sorting problem. J. Exp. Psychol. 1948;38:404–411. doi: 10.1037/h0059831.

- 8. Heaton R.K., Chelune G.J., Talley J.L., Kay G.G., Curtiss G. Wisconsin Card Sorting Test Manual: Revised and Expanded. Psychological Assessment Resources Inc.; Odessa, FL, USA: 1993.

- 9. MacPherson S.E., Sala S.D., Cox S.R., Girardi A., Iveson M.H. Handbook of Frontal Lobe Assessment. Oxford University Press; New York, NY, USA: 2015.

- 10. Lezak M.D., Howieson D.B., Bigler E.D., Tranel D. Neuropsychological Assessment. 5th ed. Oxford University Press; New York, NY, USA: 2012.

- 11. Strauss E., Sherman E.M.S., Spreen O. A Compendium of Neuropsychological Tests: Administration, Norms, and Commentary. Oxford University Press; New York, NY, USA: 2006.

- 12. Lange F., Seer C., Kopp B. Cognitive flexibility in neurological disorders: Cognitive components and event-related potentials. Neurosci. Biobehav. Rev. 2017;83:496–507. doi: 10.1016/j.neubiorev.2017.09.011.

- 13. Barceló F. The Madrid card sorting test (MCST): A task switching paradigm to study executive attention with event-related potentials. Brain Res. Protoc. 2003;11:27–37. doi: 10.1016/S1385-299X(03)00013-8.

- 14. Lange F., Kröger B., Steinke A., Seer C., Dengler R., Kopp B. Decomposing card-sorting performance: Effects of working memory load and age-related changes. Neuropsychology. 2016;30:579–590. doi: 10.1037/neu0000271.

- 15. Lange F., Vogts M.-B., Seer C., Fürkötter S., Abdulla S., Dengler R., Kopp B., Petri S. Impaired set-shifting in amyotrophic lateral sclerosis: An event-related potential study of executive function. Neuropsychology. 2016;30:120–134. doi: 10.1037/neu0000218.

- 16. Kopp B., Maldonado N., Scheffels J.F., Hendel M., Lange F. A meta-analysis of relationships between measures of Wisconsin card sorting and intelligence. Brain Sci. 2019;9:349. doi: 10.3390/brainsci9120349.

- 17. Beeldman E., Raaphorst J., Twennaar M., de Visser M., Schmand B.A., de Haan R.J. The cognitive profile of ALS: A systematic review and meta-analysis update. J. Neurol. Neurosurg. Psychiatry. 2016;87:611–619. doi: 10.1136/jnnp-2015-310734.

- 18. Lange F., Brückner C., Knebel A., Seer C., Kopp B. Executive dysfunction in Parkinson’s disease: A meta-analysis on the Wisconsin Card Sorting Test literature. Neurosci. Biobehav. Rev. 2018;93:38–56. doi: 10.1016/j.neubiorev.2018.06.014.

- 19. Binetti G., Magni E., Padovani A., Cappa S.F., Bianchetti A., Trabucchi M. Executive dysfunction in early Alzheimer’s disease. J. Neurol. Neurosurg. Psychiatry. 1996;60:91–93. doi: 10.1136/jnnp.60.1.91.

- 20. Crawford J.R., Blackmore L.M., Lamb A.E., Simpson S.A. Is there a differential deficit in fronto-executive functioning in Huntington’s Disease? Clin. Neuropsychol. Assess. 2000;1:4–20.

- 21. Wijesekera L.C., Leigh P.N. Amyotrophic lateral sclerosis. Orphanet J. Rare Dis. 2009;4:3. doi: 10.1186/1750-1172-4-3.

- 22. Abrahams S., Goldstein L.H., Kew J.J.M., Brooks D.J., Lloyd C.M., Frith C.D., Leigh P.N. Frontal lobe dysfunction in amyotrophic lateral sclerosis. Brain. 1996;119:2105–2120. doi: 10.1093/brain/119.6.2105.

- 23. Kew J.J.M., Goldstein L.H., Leigh P.N., Abrahams S., Cosgrave N., Passingham R.E., Frackowiak R.S.J., Brooks D.J. The relationship between abnormalities of cognitive function and cerebral activation in amyotrophic lateral sclerosis: A neuropsychological and positron emission tomography study. Brain. 1993;116:1399–1423. doi: 10.1093/brain/116.6.1399.

- 24. Pettit L.D., Bastin M.E., Smith C., Bak T.H., Gillingwater T.H., Abrahams S. Executive deficits, not processing speed relates to abnormalities in distinct prefrontal tracts in amyotrophic lateral sclerosis. Brain. 2013;136:3290–3304. doi: 10.1093/brain/awt243.

- 25. Tsermentseli S., Leigh P.N., Goldstein L.H. The anatomy of cognitive impairment in amyotrophic lateral sclerosis: More than frontal lobe dysfunction. Cortex. 2012;48:166–182. doi: 10.1016/j.cortex.2011.02.004.

- 26. Goldstein L.H., Abrahams S. Changes in cognition and behaviour in amyotrophic lateral sclerosis: Nature of impairment and implications for assessment. Lancet Neurol. 2013;12:368–380. doi: 10.1016/S1474-4422(13)70026-7.

- 27. Ringholz G.M., Appel S.H., Bradshaw M., Cooke N.A., Mosnik D.M., Schulz P.E. Prevalence and patterns of cognitive impairment in sporadic ALS. Neurology. 2005;65:586–590. doi: 10.1212/01.wnl.0000172911.39167.b6.

- 28. Phukan J., Elamin M., Bede P., Jordan N., Gallagher L., Byrne S., Lynch C., Pender N., Hardiman O. The syndrome of cognitive impairment in amyotrophic lateral sclerosis: A population-based study. J. Neurol. Neurosurg. Psychiatry. 2012;83:102–108. doi: 10.1136/jnnp-2011-300188.

- 29. Hawkes C.H., Del Tredici K., Braak H. A timeline for Parkinson’s disease. Parkinsonism Relat. Disord. 2010;16:79–84. doi: 10.1016/j.parkreldis.2009.08.007.

- 30. Braak H., Del Tredici K. Nervous system pathology in sporadic Parkinson disease. Neurology. 2008;70:1916–1925. doi: 10.1212/01.wnl.0000312279.49272.9f.

- 31. Demakis G.J. A meta-analytic review of the sensitivity of the Wisconsin Card Sorting Test to frontal and lateralized frontal brain damage. Neuropsychology. 2003;17:255–264. doi: 10.1037/0894-4105.17.2.255.

- 32. Lange F., Seer C., Müller-Vahl K., Kopp B. Cognitive flexibility and its electrophysiological correlates in Gilles de la Tourette syndrome. Dev. Cogn. Neurosci. 2017;27:78–90. doi: 10.1016/j.dcn.2017.08.008.

- 33. Lange F., Seer C., Salchow C., Dengler R., Dressler D., Kopp B. Meta-analytical and electrophysiological evidence for executive dysfunction in primary dystonia. Cortex. 2016;82:133–146. doi: 10.1016/j.cortex.2016.05.018.

- 34. Roberts M.E., Tchanturia K., Stahl D., Southgate L., Treasure J. A systematic review and meta-analysis of set-shifting ability in eating disorders. Psychol. Med. 2007;37:1075–1084. doi: 10.1017/S0033291707009877.

- 35. Romine C. Wisconsin Card Sorting Test with children: A meta-analytic study of sensitivity and specificity. Arch. Clin. Neuropsychol. 2004;19:1027–1041. doi: 10.1016/j.acn.2003.12.009.

- 36. Shin N.Y., Lee T.Y., Kim E., Kwon J.S. Cognitive functioning in obsessive-compulsive disorder: A meta-analysis. Psychol. Med. 2014;44:1121–1130. doi: 10.1017/S0033291713001803.

- 37. Snyder H.R. Major depressive disorder is associated with broad impairments on neuropsychological measures of executive function: A meta-analysis and review. Psychol. Bull. 2013;139:81–132. doi: 10.1037/a0028727.

- 38. Bosia M., Buonocore M., Guglielmino C., Pirovano A., Lorenzi C., Marcone A., Bramanti P., Cappa S.F., Aguglia E., Smeraldi E., et al. Saitohin polymorphism and executive dysfunction in schizophrenia. Neurol. Sci. 2012;33:1051–1056. doi: 10.1007/s10072-011-0893-9.

- 39. Roca M., Parr A., Thompson R., Woolgar A., Torralva T., Antoun N., Manes F., Duncan J. Executive function and fluid intelligence after frontal lobe lesions. Brain. 2010;133:234–247. doi: 10.1093/brain/awp269.

- 40. Steinke A., Lange F., Kopp B. Parallel model-based and model-free reinforcement learning for card sorting performance. 2020. under review.

- 41. Steinke A., Lange F., Seer C., Hendel M.K., Kopp B. Computational modeling for neuropsychological assessment of bradyphrenia in Parkinson’s disease. J. Clin. Med. 2020;9:1158. doi: 10.3390/jcm9041158.

- 42. Bishara A.J., Kruschke J.K., Stout J.C., Bechara A., McCabe D.P., Busemeyer J.R. Sequential learning models for the Wisconsin card sort task: Assessing processes in substance dependent individuals. J. Math. Psychol. 2010;54:5–13. doi: 10.1016/j.jmp.2008.10.002.

- 43. Miyake A., Friedman N.P. The nature and organization of individual differences in executive functions. Curr. Dir. Psychol. Sci. 2012;21:8–14. doi: 10.1177/0963721411429458.

- 44. Alvarez J.A., Emory E. Executive function and the frontal lobes: A meta-analytic review. Neuropsychol. Rev. 2006;16:17–42. doi: 10.1007/s11065-006-9002-x.

- 45. Nyhus E., Barceló F. The Wisconsin Card Sorting Test and the cognitive assessment of prefrontal executive functions: A critical update. Brain Cogn. 2009;71:437–451. doi: 10.1016/j.bandc.2009.03.005.

- 46. Steinke A., Lange F., Seer C., Kopp B. Toward a computational cognitive neuropsychology of Wisconsin card sorts: A showcase study in Parkinson’s disease. Comput. Brain Behav. 2018;1:137–150. doi: 10.1007/s42113-018-0009-1.

- 47. Botvinick M.M., Plaut D.C. Doing without schema hierarchies: A recurrent connectionist approach to normal and impaired routine sequential action. Psychol. Rev. 2004;111:395–429. doi: 10.1037/0033-295X.111.2.395.

- 48. Cooper R.P., Shallice T. Hierarchical schemas and goals in the control of sequential behavior. Psychol. Rev. 2006;113:887–916. doi: 10.1037/0033-295X.113.4.887.

- 49. Beste C., Humphries M., Saft C. Striatal disorders dissociate mechanisms of enhanced and impaired response selection—Evidence from cognitive neurophysiology and computational modelling. NeuroImage Clin. 2014;4:623–634. doi: 10.1016/j.nicl.2014.04.003.

- 50. Forstmann B.U., Wagenmakers E.-J. An Introduction to Model-Based Cognitive Neuroscience. Springer; New York, NY, USA: 2015.

- 51. Palminteri S., Justo D., Jauffret C., Pavlicek B., Dauta A., Delmaire C., Czernecki V., Karachi C., Capelle L., Durr A., et al. Critical roles for anterior insula and dorsal striatum in punishment-based avoidance learning. Neuron. 2012;76:998–1009. doi: 10.1016/j.neuron.2012.10.017.

- 52. Palminteri S., Lebreton M., Worbe Y., Grabli D., Hartmann A., Pessiglione M. Pharmacological modulation of subliminal learning in Parkinson’s and Tourette’s syndromes. Proc. Natl. Acad. Sci. USA. 2009;106:19179–19184. doi: 10.1073/pnas.0904035106.

- 53. Ambrosini E., Arbula S., Rossato C., Pacella V., Vallesi A. Neuro-cognitive architecture of executive functions: A latent variable analysis. Cortex. 2019;119:441–456. doi: 10.1016/j.cortex.2019.07.013.

- 54. Giavazzi M., Daland R., Palminteri S., Peperkamp S., Brugières P., Jacquemot C., Schramm C., Cleret de Langavant L., Bachoud-Lévi A.-C. The role of the striatum in linguistic selection: Evidence from Huntington’s disease and computational modeling. Cortex. 2018;109:189–204. doi: 10.1016/j.cortex.2018.08.031.

- 55. Suzuki S., O’Doherty J.P. Breaking human social decision making into multiple components and then putting them together again. Cortex. 2020;127:221–230. doi: 10.1016/j.cortex.2020.02.014.

- 56. Cleeremans A., Dienes Z. Computational models of implicit learning. In: Sun R., editor. The Cambridge Handbook of Computational Psychology. Cambridge University Press; New York, NY, USA: 2001. pp. 396–421.

- 57. Beste C., Adelhöfer N., Gohil K., Passow S., Roessner V., Li S.-C. Dopamine modulates the efficiency of sensory evidence accumulation during perceptual decision making. Int. J. Neuropsychopharmacol. 2018;21:649–655. doi: 10.1093/ijnp/pyy019.

- 58. D’Alessandro M., Lombardi L. A dynamic framework for modelling set-shifting performances. Behav. Sci. 2019;9:79. doi: 10.3390/bs9070079.

- 59. Kimberg D.Y., Farah M.J. A unified account of cognitive impairments following frontal lobe damage: The role of working memory in complex, organized behavior. J. Exp. Psychol. Gen. 1993;122:411–428. doi: 10.1037/0096-3445.122.4.411.

- 60. Levine D.S., Prueitt P.S. Modeling some effects of frontal lobe damage—Novelty and perseveration. Neural Netw. 1989;2:103–116. doi: 10.1016/0893-6080(89)90027-0.

- 61. Granato G., Baldassarre G. Goal-directed top-down control of perceptual representations: A computational model of the Wisconsin Card Sorting Test; Proceedings of the 2019 Conference on Cognitive Computational Neuroscience; Berlin, Germany. 13–16 September 2019; Brentwood, TN, USA: Cognitive Computational Neuroscience; 2019.