Abstract

Background Hospital readmissions are a key quality metric, which has been tied to reimbursement. One strategy to reduce readmissions is to direct resources to patients at the highest risk of readmission. This strategy necessitates a robust predictive model coupled with effective, patient-centered interventions.

Objective The aim of this study was to reduce unplanned hospital readmissions through the use of artificial intelligence-based clinical decision support.

Methods A commercially vended artificial intelligence tool was implemented at a regional hospital in La Crosse, Wisconsin between November 2018 and April 2019. The tool assessed all patients admitted to general care units for risk of readmission and generated recommendations for interventions intended to decrease readmission risk. Similar hospitals were used as controls. Change in readmission rate was assessed by comparing the 6-month intervention period to the same months of the previous calendar year in exposure and control hospitals.

Results Among 2,460 hospitalizations assessed using the tool, 611 were designated by the tool as high risk. Sensitivity and specificity for risk assignment were 65% and 89%, respectively. Over 6 months following implementation, readmission rates decreased from 11.4% during the comparison period to 8.1% ( p < 0.001). After accounting for the 0.5% decrease in readmission rates (from 9.3 to 8.8%) at control hospitals, the relative reduction in readmission rate was 25% ( p < 0.001). Among patients designated as high risk, the number needed to treat to avoid one readmission was 11.

Conclusion We observed a decrease in hospital readmission after implementing artificial intelligence-based clinical decision support. Our experience suggests that use of artificial intelligence to identify patients at the highest risk for readmission can reduce quality gaps when coupled with patient-centered interventions.

Keywords: artificial intelligence; patient readmission; delivery of health care; quality of health care; technology assessment, biomedical; clinical decision-making; decision-making

Background and Significance

Artificial Intelligence in Health Care

Although artificial intelligence (AI) is widely utilized in many disciplines outside of medicine, its role in routine clinical practice remains limited. 1 Even where AI has been implemented in a clinical setting, published studies primarily focus on reporting of the algorithm's performance characteristics (e.g., area under the curve) rather than measuring the effect of AI implementation on measures of human health. In a sense, AI in health care is in its infancy. Several quality gaps have emerged as targets for AI-based predictive analytics. These include early recognition and management of sepsis and hospital readmission. 2 3 4

Hospital Readmission

Hospital readmission is often used as a surrogate outcome to assess the quality of initial hospitalization care and care transitions. The underlying rationale is that readmissions are often preventable because they may result as complications from the index hospitalization, foreseeable consequences of the initial illness, or failures in transitions of care. 5 6 Hospital readmission is also seen as a significant contributor to the cost of health care. The annual cost of unplanned hospital readmissions for Medicare alone has been estimated at $17.4 billion. 7 The validity of hospital readmission as a surrogate quality outcome has been criticized, and many consider this binary outcome to be an oversimplification that fails to account for the complexity of patient care and individual patient context. 5 6 Notwithstanding, hospitals are assigned financial penalties for excessive readmission rates, 8 and there is evidence that financial incentives are effective for reducing hospital readmission rates. 9

Facing this reality, many hospitals have developed interventions aimed at reducing preventable hospital readmissions. 8 One strategy is to identify patients who are at the highest risk for readmission and focus resources and attention on this high-risk group. This requires accurate identification of patients at high risk for readmission coupled with individualized interventions that are effective in this population.

Predictive Analytics for Hospital Readmission

Various models have been used to predict hospital readmission, including logistic regression, decision trees, and neural networks. 2 10 11 12 13 14 One widely used predictive model—the LACE index—takes into account length of stay, acuity, comorbidities, and emergency department utilization to predict 30-day hospital readmission. 15 However, the LACE index has been criticized because it may not apply to some populations, 16 and it may provide little value-add when compared with clinical judgment alone. 17

An abundance of studies has described the application of AI-based techniques to predict hospital readmission. 18 19 20 21 22 23 24 25 26 Although many studies have concluded that AI-based models are superior to traditional models for risk stratification, other studies have observed otherwise. 14 27 28 29 30 31 32 33 34 35 36 37 38 39

While clinical factors have primarily been used to predict readmission, there has also been interest in incorporating sociodemographic factors into models to more accurately account for patients' sociopersonal contexts, which are increasingly recognized to affect health-related outcomes. 10 11 12 13 40 This is critical because health behaviors, social factors, and economic factors are estimated to account for 70% of a person's health. 40

Despite great enthusiasm to use AI to reduce hospital readmissions, most studies appear to stop short of translating the predictive abilities gathered at the computing bench into action at the patient's bedside. This highlights the need to utilize in silico predictive modeling to improve patient-centered outcomes in vivo. Doing so requires coupling of risk stratification with evidence-based interventions which prevent readmissions among those at risk. Thus, even if AI-based methods prove effective at identifying patients who are at risk for readmission, the potential impact of these methods will be lost if they are not coupled with effective interventions. Although interventions such as self-care have been proposed, their impact on readmission rates remains unclear. 41

Objectives

We aimed to reduce readmission rates as part of larger efforts at our institution to achieve high-value care consistent with the triple aim. 42 43 We hypothesized that coupling AI-based predictive modeling with a clinical decision support (CDS) intervention designed to reduce the risk of readmission would reduce hospital readmission rates. Our clinical question in population, intervention, control, and outcomes (PICO) form was as follows: (P) Among patients hospitalized at a regional hospital, (I) does application of an AI-based CDS tool, (C) compared with standard care, (O) reduce the hospital readmission rate?

Methods

Human Subjects Protection

The data collected and work described herein were initially conducted as part of an ongoing quality improvement initiative. The data analysis plan was reviewed by the Mayo Clinic Institutional Review Board (application 19–007555) and deemed “exempt.”

Artificial Intelligence-Based Clinical Decision Support Tool

The AI tool used machine learning to combine clinical and nonclinical data and predict a patient's risk of hospital readmission within 30 days. The tool was developed by a third-party commercial developer (Jvion; Johns Creek, Georgia) and licensed for use by Mayo Clinic. The data used by the vendor and their decision tree-based modeling approach have been described in detail elsewhere. 44 Clinical data incorporated into risk prediction included diagnostic codes, vital signs, laboratory orders and results, medications, problem lists, and claims. Based on the vendor's description, nonclinical data included sociodemographic information available from public and private third-party databases. Data that were not available at the patient level were matched at the ZIP + 4 code level.

The tool extracted inputs from the electronic health record (EHR), combined them with inputs from the nonclinical sources, processed them, and generated a report for each high-risk patient daily in the early morning prior to the start of the clinical work day. Each patient's individual report identified a risk category, risk factors, and targeted recommendations intended to address the identified risk factors. The risk factors contributing to a patient's high risk were displayed to provide model interpretability to the frontline clinical staff. There were 26 possible recommendations that could be generated by the tool. Examples included recommendations to arrange specific postdischarge referrals (e.g., physical therapy, dietician, and cardiac rehabilitation), implementation of disease-specific medical management plans (e.g., weight monitoring and action plan, pain management plan), enhanced coordination with the patient's primary care provider, and enhanced education (e.g., predischarge education).

At the time of the implementation, the institution had limited extensible information technology resources because other sites within the institution were undergoing an EHR transition. For this reason, automatically generated reports were not integrated directly within the EHR but were instead made available to clinical staff via a standalone web-based tool. The machine learning model was trained by the vendor on data from other institutions that were previous clients. Per the vendor, that model was adjusted for our institution using 2 years of historical data.

Pilot Program and Setting

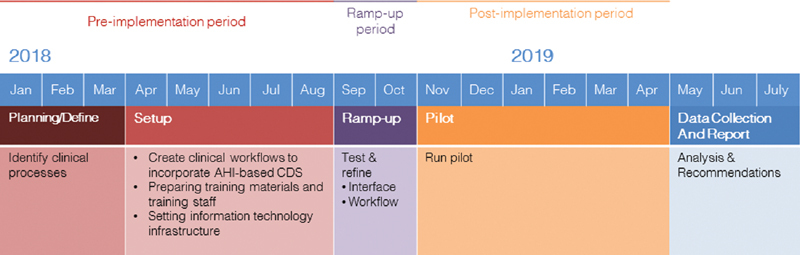

The pilot occurred at Mayo Clinic Health System, La Crosse Hospital in La Crosse, Wisconsin. The project was conducted over a 12-month period: the first 6 months were used to prepare for the pilot, with the AI tool integrated into clinical practice for the following 6 months ( Fig. 1 ).

Fig. 1. Project timeline.

Local Adaptation

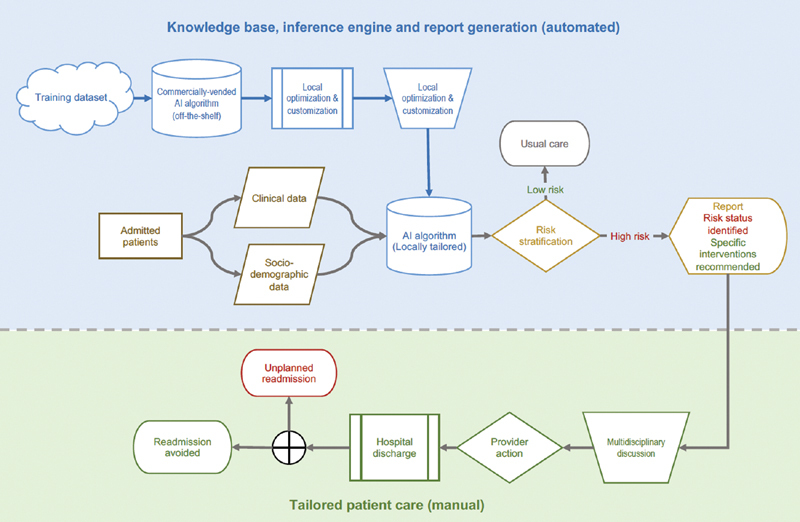

Prior to the pilot, the project team collaborated with local practice partners to map local workflows and identify how to best integrate the tool ( Fig. 2 ). Patients identified as high risk were flagged with a purple dot placed next to their name on the unit whiteboard. Recommendations generated by the tool were discussed at the daily huddle, and the care team determined how to best implement them. Additionally, discharge planners contacted physicians daily to review recommendations, and the tool was further used during a transition of care huddle in collaboration with outpatient care coordinators.

Fig. 2. Care process flow.

Implementation Timing

The 30-day all-cause readmission rates were extracted from administrative records. Admissions for the following reasons were excluded because they were considered planned readmissions: chemotherapy, radiation therapy, rehabilitation, dialysis, and labor and delivery. The first 2 months after the tool went live (September and October 2018) were considered the ramp-up period and were excluded from both the preimplementation and postimplementation periods ( Fig. 1 ). The 10 months prior to the ramp-up period (November 2017 through August 2018) were considered the preimplementation period. The 6 months of the pilot (November 2018 through April 2019) were included in the postimplementation period. The same 6 months of the prior year (November 2017 through April 2018) were considered the comparison period for the primary analysis ( Fig. 1 ).

Statistical Analysis

The primary outcome was the change in all-cause hospital readmission rate for the first 6 months of the implementation period compared with the same 6 months the year prior. We chose to measure all-cause readmission rates (rather than preventable readmission rates) because of ambiguity in which readmissions are considered preventable and our desire to calculate a conservative and unqualified estimate of the tool's impact. However, five types of readmissions were excluded because they were considered universally planned (see above). The timeframe for this analysis was chosen to reduce the effect of seasonal variation in hospital admissions on the results. As a sensitivity analysis, we also compared the postimplementation readmission rate with the rate over the preimplementation period, excluding the ramp-up period. We furthermore conducted a difference-in-differences analysis for the same time points, taking into account baseline changes in readmissions over the study period at three other regional hospitals within our health system ( Table 1 ), chosen for their similarity and proximity. All comparison hospitals were regional hospitals within the not-for-profit Mayo Clinic Health System, located in nonmetropolitan cities in Minnesota and Wisconsin in the United States of America. Readmission rates for the control hospitals were pooled using a weighted average based on number of admissions. To test the statistical significance of the difference in differences, we used a hypothetical scenario assuming a common effect equal to the pre–post readmission reduction in the control hospitals. Finally, a subset analysis was performed on only those patients classified by the tool as “high risk.” Predictive accuracy of the AI algorithm was assessed by measuring the readmission rate among patients identified as “high risk” and “low risk” prior to the tool's integration into clinical workflows (i.e., before interventions targeting high-risk patients were implemented).
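The admission-weighted pooling of control hospital rates described above can be illustrated with a minimal Python sketch. The per-hospital admission counts and rates below are hypothetical placeholders, since the per-hospital figures are not reported here, and the function name `pooled_rate` is an illustrative choice.

```python
def pooled_rate(hospitals):
    """Admission-weighted average readmission rate.

    `hospitals` is a list of (admissions, readmission_rate) pairs,
    with the rate expressed as a fraction (0.10 means 10%).
    """
    total_admissions = sum(admissions for admissions, _ in hospitals)
    # Each hospital contributes in proportion to its number of admissions.
    weighted_sum = sum(admissions * rate for admissions, rate in hospitals)
    return weighted_sum / total_admissions


# Hypothetical control hospitals (NOT the study data).
example_controls = [(3000, 0.10), (2000, 0.09), (1000, 0.07)]
example_pooled = pooled_rate(example_controls)
```

Weighting by admissions is equivalent to dividing total readmissions by total admissions across the control hospitals, so larger hospitals influence the pooled rate more than smaller ones.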

Table 1. Hospital characteristics.

| Hospital | City population (people) | City poverty rate | City median age (y) | Hospital beds | Hospital staffing level: physician | Hospital staffing level: nurse | Hospital staffing level: advanced practice provider | Distance from intervention hospital (miles) |
|---|---|---|---|---|---|---|---|---|
| La Crosse, WI | 51,886 | 23.4% | 28.8 | 142 | 189 | 563 | 74 | 0 |
| Eau Claire, WI | 60,086 | 17.2% | 31.3 | 192 | 234 | 793 | 98 | 88 |
| Mankato, MN | 41,701 | 24.7% | 25.9 | 167 | 139 | 561 | 78 | 155 |
| Red Wing, MN | 16,365 | 14.0% | 43.4 | 50 | 50 | 145 | 25 | 96 |

JMP version 14.1 (SAS Institute, Cary, North Carolina) was used for statistical analysis. A chi-square test was used to assess changes in readmission rates. All hypothesis tests were two sided.
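For readers who want to reproduce this kind of comparison outside JMP, the following is a minimal Python sketch of a chi-square test for two proportions. It assumes a Pearson chi-square without continuity correction, which may differ from the variant JMP reported. The readmission counts and the comparison-period denominator are hypothetical, chosen only to approximate the reported rates. Only the 2,460 postimplementation hospitalizations come from the text.

```python
import math


def chi_square_2x2(events_a, total_a, events_b, total_b):
    """Pearson chi-square test (1 degree of freedom) for two proportions.

    Returns (statistic, two-sided p-value). No continuity correction.
    """
    observed = [
        [events_a, total_a - events_a],
        [events_b, total_b - events_b],
    ]
    n = total_a + total_b
    row_totals = [total_a, total_b]
    col_totals = [events_a + events_b, n - events_a - events_b]

    statistic = 0.0
    for i in range(2):
        for j in range(2):
            expected = row_totals[i] * col_totals[j] / n
            statistic += (observed[i][j] - expected) ** 2 / expected

    # For 1 degree of freedom, P(chi2 > x) = erfc(sqrt(x / 2)).
    p_value = math.erfc(math.sqrt(statistic / 2))
    return statistic, p_value


# Hypothetical counts approximating 11.4% (comparison) and 8.1% (pilot).
stat, p = chi_square_2x2(285, 2500, 199, 2460)
```

The closed-form p-value relies on the fact that a chi-square variable with one degree of freedom is the square of a standard normal variable, which avoids any dependency beyond the standard library.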

Results

Risk Stratification and Recommendation Engine Performance

To achieve a balance between sensitivity and specificity and avoid overburdening staff, the algorithm was tailored to identify the top 20th percentile at risk for readmission using retrospective tuning data. Sensitivity and specificity were 65 and 89%, respectively. Negative and positive predictive values were 95 and 43%, respectively. The tool identified a total of 611 high-risk hospitalizations out of 2,460 total hospitalizations over the pilot period (25%).
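The relationship between these four performance measures can be checked with a short Python sketch that derives predictive values from sensitivity, specificity, and prevalence using Bayes' rule. The prevalence used here is an assumption: the 11.4% comparison-period readmission rate stands in for the readmission prevalence in the tuning data, which is not stated.

```python
def predictive_values(sensitivity, specificity, prevalence):
    """Return (PPV, NPV) for a binary classifier via Bayes' rule."""
    true_pos = sensitivity * prevalence
    false_pos = (1 - specificity) * (1 - prevalence)
    true_neg = specificity * (1 - prevalence)
    false_neg = (1 - sensitivity) * prevalence
    ppv = true_pos / (true_pos + false_pos)
    npv = true_neg / (true_neg + false_neg)
    return ppv, npv


# Reported sensitivity and specificity; prevalence is an assumed value.
ppv, npv = predictive_values(sensitivity=0.65, specificity=0.89, prevalence=0.114)
```

Under this assumed prevalence, the computed values round to the reported positive and negative predictive values of 43 and 95%, which suggests the reported figures are mutually consistent.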

Hospital Readmission Rate

During the 6-month postimplementation period (November 2018 through April 2019), the readmission rate was 8.1%, a statistically significant decrease compared with the same months the year prior (November 2017 through April 2018; 11.4%; p < 0.001), yielding a number needed to treat of 30 patients to prevent one readmission. The readmission rate was also lower than in the trailing 10 months preimplementation, after excluding the ramp-up period (i.e., November 2017 through August 2018; 8.1 vs. 10.8%, p < 0.001).

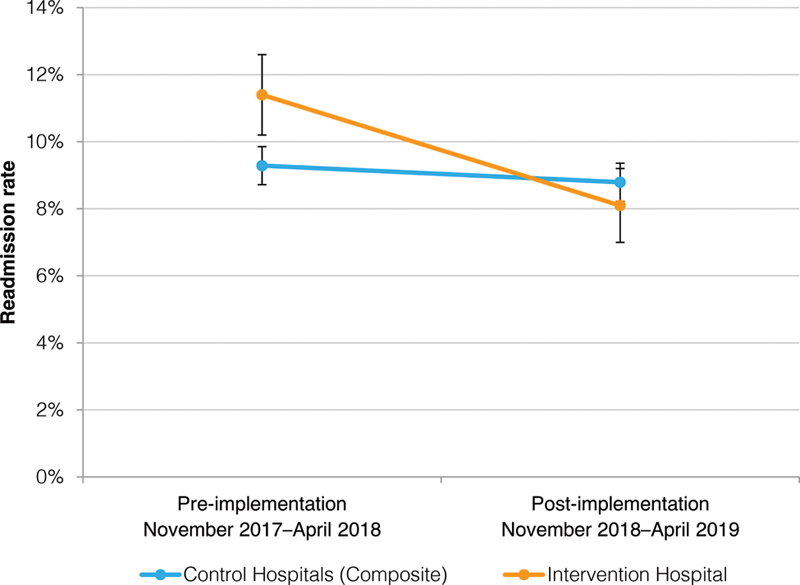

Difference in Differences

The intervention hospital experienced an absolute reduction in readmission rate of 3.3 percentage points, decreasing from 11.4% 1 year prior to the implementation period to 8.1% in the postimplementation period. In contrast, control hospitals experienced an absolute reduction of 0.5 percentage points, decreasing from 9.3 to 8.8%. Taking into account the reduction at control hospitals, the adjusted difference-in-differences reduction in the intervention hospital was 2.8 percentage points, corresponding to a 25% relative reduction from the baseline rate of 11.4% ( p < 0.001; Fig. 3 ).
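The arithmetic behind the difference-in-differences estimate can be reproduced with a minimal Python sketch using the rates reported above. The function name is an illustrative choice, and the sketch covers only the point estimate, not the significance test.

```python
def difference_in_differences(pre_treated, post_treated, pre_control, post_control):
    """Reduction at the intervention site minus reduction at control sites.

    All inputs and the result are in percentage points.
    """
    treated_reduction = pre_treated - post_treated
    control_reduction = pre_control - post_control
    return treated_reduction - control_reduction


# Rates reported in the text, in percent.
did = difference_in_differences(11.4, 8.1, 9.3, 8.8)
# Relative reduction against the intervention hospital's baseline rate.
relative_reduction = did / 11.4
```

Here `did` evaluates to approximately 2.8 percentage points, and `relative_reduction` to roughly 0.25, matching the reported 25% relative reduction.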

Fig. 3. Pre-post difference-in-difference analysis. Error bars represent 95% confidence intervals.

High-Risk Subgroup Analysis

Subgroup analysis was performed to consider the outcomes of patients who were identified by the AI-based CDS as being at high risk for hospital readmission. In this subgroup, the readmission rate decreased to 34% in the postimplementation period, compared with 43% during the same 6 months 1 year prior ( p = 0.001). This corresponded to a number needed to treat of 11 patients to avoid one readmission ( Table 2 ).

Table 2. Readmission rates by risk group.

| Risk group | Preintervention | Postintervention | NNT | p-Value |
|---|---|---|---|---|
| High-risk subgroup | 43% | 34% | 11 | 0.001 |
| Low-risk subgroup | 5.0% | 6.1% | | 0.08 |
| All patients | 11.4% | 8.1% | 30 | <0.001 |

In contrast, the readmission rate in patients identified by the AI-based CDS as being at low risk of hospital readmission did not significantly differ in the same two time periods, suggesting that the difference in overall readmissions was accounted for by changes in the high-risk subgroup ( Table 2 ).
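The numbers needed to treat in Table 2 follow from the reciprocal of the absolute risk reduction, as the Python sketch below illustrates. Rounding to the nearest integer is an assumption made to match the reported values. Some conventions round up instead, which would give slightly larger figures.

```python
def number_needed_to_treat(rate_before, rate_after):
    """NNT = 1 / absolute risk reduction, with rates given as fractions."""
    absolute_risk_reduction = rate_before - rate_after
    if absolute_risk_reduction <= 0:
        # No reduction means an NNT is undefined (as for the low-risk subgroup).
        raise ValueError("NNT is undefined without a reduction in the event rate")
    return 1 / absolute_risk_reduction


nnt_high_risk = round(number_needed_to_treat(0.43, 0.34))
nnt_all_patients = round(number_needed_to_treat(0.114, 0.081))
```

With the rates from Table 2, this yields 11 for the high-risk subgroup and 30 for all patients. The low-risk subgroup has no NNT because its readmission rate did not decrease.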

Discussion

Summary of Results

We observed a significant reduction in hospital readmission following implementation of AI-based CDS. This difference persisted when two different preimplementation time periods were considered and when a difference-in-differences analysis was performed to account for the reduction in hospital readmission observed at control hospitals. The overall reduction was due to the reduction in readmission rate among patients identified as “high-risk.” The overall number needed to treat was 30 admitted patients to prevent one readmission. Focusing only on patients who received an intervention (patients designated as “high-risk”) results in a number needed to treat of 11.

Qualitative Experience

Although this study was designed to be primarily quantitative, several qualitative insights emerged during planning and implementation with the partner site. Anecdotal experience and staff feedback indicated that staff felt the generated recommendations were not sufficiently tailored to individual patients, were inappropriate, or were not useful. In many cases, staff shared that the same recommendations were provided frequently for very dissimilar patients. It is impossible to separate the effect of the knowledge base and recommendation engine from the actual clinical implementation. However, our observations suggest that the most useful component of the AI tool was its ability to identify and prioritize patients who are at risk for hospital readmission. This, in turn, prompted multidisciplinary discussions and collaboration to reduce the risk of readmission. The management recommendation engine may have played less of a role in the outcomes observed, because the recommended interventions amounted to a standard checklist that was very similar for most patients. These observations are consistent with the widely accepted belief that AI performs well with tasks with a discrete and verifiable endpoint (e.g., predicting risk of hospital readmission) but is less adept at handling complex tasks without discrete endpoints or defined standards, especially when tasks involve incorporation of sociopersonal context (e.g., formulating a clinical management plan). 45

These complex and nuanced tasks can become even more challenging for AI when factors that need to be considered when formulating a plan are not available to the system in a structured format. 46 Even as these data elements become available as discrete elements in the EHR, AI-based algorithms may face challenges when handling and consideration of these factors requires a degree of finesse and interpersonal skills.

Artificial Intelligence for Hospital Readmission Reduction: Facilitators and Barriers

AI-based tools must be developed on the bedrock of high-performing predictive modeling capabilities, since tailored interventions can only be enacted once high-risk patients are identified. One key facilitator of our ability to reduce hospital readmissions was our ability to identify patients at highest risk for hospital readmission. The observation that the risk reduction was highest in the high-risk subgroup suggests that interventions targeted toward high-risk patients account for most of the change observed. While a sensitivity of 65% may seem low, safety-net decision support systems such as this one may benefit more from a high specificity that reduces the burden on staff. 47

Notwithstanding, there are many real and perceived barriers that may have limited our ability to use AI to improve patient care. Although we observed acceptable performance characteristics, analytical models may become unreliable if the model is not tailored to the local setting and population. 48 Additionally, as discussed earlier, key data that reliably predict the outcome of interest may not be readily available as structured, discrete data inputs from the EHR, 46 or the available data may be insufficient to reliably make a prediction even when available as discrete elements (i.e., “big data hubris”). 49 We hypothesize that these limitations hamper the ability of the tool to generate meaningful recommendations for care management.

Implementation may fail when a system is poorly integrated into clinical workflows or when recommended interventions fail to reduce readmission rates. It has widely been recognized that implementation of CDS systems—including those based on AI—requires great care to ensure that decision support is accepted and used by end users. 45 This process requires that CDS is integrated into existing workflows in a manner that is acceptable to care team members. 45

Strengths and Limitations

Strengths of this study include that we assessed the impact of AI-based CDS on an important clinical outcome—namely, hospital readmission—in comparison to a control group. We also performed a subgroup analysis to assess the groups within which the effect size was the largest. In addition to assessing the quantitative impact, we also discuss qualitative challenges that may constrain the CDS. Limitations include that the results may not generalize to other AI-based CDS systems or the same AI-based CDS system applied to different patient populations. We also cannot separate out the effect of the risk prediction engine from the recommendation engine, thereby limiting our ability to understand why the intervention was effective. Furthermore, we cannot rule out that other quality improvement initiatives may have impacted the observed improvements on hospital readmissions. Other methods, such as a cluster randomized controlled trial, may better control for confounding factors.

Conclusion

AI may be used to predict hospital readmission. Integration of predictive analytics, coupled with interventions and multidisciplinary team-based discussions aimed at high-risk patients, can be used to reduce hospital readmission rates. The entity to which a task is delegated (i.e., computer or human) must be carefully considered. Practical and technical barriers limit current adoption of AI in routine clinical practice.

Clinical Relevance Statement

In a nonrandomized and controlled study, hospital readmissions decreased following pilot implementation of AI-based CDS, to 8.1% during the 6-month pilot period, compared with 11.4% during the comparison period ( p < 0.001). This difference remained significant when adjusted for a decrease in readmission rates in control hospitals. AI-based CDS can be used to identify high-risk patients and prompt interventions to decrease readmission rates.

Multiple Choice Questions

- When implementing artificial intelligence-based CDS, which of the following represents the greatest challenge?

a. Risk prediction based on clinical factors.

b. Risk prediction based on sociopersonal factors.

c. Formulating clinical management plans based on sociopersonal factors.

d. Lack of available training data.

Correct Answer: The correct answer is option c. Formulating clinical management plans based on sociopersonal factors. In the present study, a risk prediction model based on third-party and local training data predicted risk of hospital readmission based on clinical and sociopersonal factors with a sensitivity and specificity of 65 and 89%. However, clinical recommendations were observed to be insufficiently tailored to individual patients and often were inappropriate for a given patient. While artificial intelligence performs well with prediction and grouping tasks—and training data pertaining to clinical and sociopersonal factors are available—it is less adept at sociopersonally nuanced clinical decision-making.

- Which of the following strategies is most likely to make artificial intelligence-based CDS more cost-effective by reducing the number needed to treat to prevent one hospital readmission?

a. Focusing clinical interventions on “high-risk” patients.

b. Focusing clinical interventions on both “high-risk” and “low-risk” patients.

c. Strictly following recommendations generated by the recommendation engine.

d. Expanding the list of possible recommendations generated by the recommendation engine.

Correct Answer: The correct answer is option a. Focusing clinical interventions on “high-risk” patients. In the present study, the overall number of patients needed to treat to avoid one hospital readmission was 30. There was no significant change in hospital readmission rate among the low-risk subgroup, despite the implementation of clinical interventions. On the other hand, there was a significant reduction in readmission rate among the high-risk subgroup, with a number needed to treat of 11 among this subgroup. This suggests that interventions expended in the low-risk subgroup did not have an impact on hospital readmission rate, but interventions in the high-risk subgroup did. Therefore, focusing clinical interventions on high-risk patients reduces the number needed to treat and improves the cost effectiveness of the CDS. Users of the tool described in the study noted the recommendation engine as a weakness due to similar and relatively generic clinical recommendations being generated for individual patients. There is no evidence that strictly following these recommendations or expanding the list of possible recommendations would improve cost effectiveness.

Funding Statement

Funding This study received its financial support from Mayo Clinic research funds.

Conflict of Interest Mayo Clinic and the third-party AI-based CDS software tool developer (Jvion; Johns Creek, Georgia) have existing licensing agreements for unrelated technology. Jvion was not involved in the production of this manuscript except to answer queries made during the peer review process regarding details of the software. Jvion otherwise played no role in the conception, drafting, or revision of the manuscript nor did they play a role in the decision to submit it for publication. The pilot project was funded with internal Mayo Clinic funds.

Protection of Human and Animal Subjects

This study was reviewed by the Mayo Clinic Institutional Review Board and deemed “exempt.”

References

- 1.Car J, Sheikh A, Wicks P, Williams M S. Beyond the hype of big data and artificial intelligence: building foundations for knowledge and wisdom. BMC Med. 2019;17(01):143. doi: 10.1186/s12916-019-1382-x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Jamei M, Nisnevich A, Wetchler E, Sudat S, Liu E. Predicting all-cause risk of 30-day hospital readmission using artificial neural networks. PLoS One. 2017;12(07):e0181173. doi: 10.1371/journal.pone.0181173. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Komorowski M, Celi L A, Badawi O, Gordon A C, Faisal A A. The Artificial Intelligence Clinician learns optimal treatment strategies for sepsis in intensive care. Nat Med. 2018;24(11):1716–1720. doi: 10.1038/s41591-018-0213-5. [DOI] [PubMed] [Google Scholar]

- 4.Seymour C W, Kennedy J N, Wang S. Derivation, validation, and potential treatment implications of novel clinical phenotypes for sepsis. JAMA. 2019;321(20):2003–2017. doi: 10.1001/jama.2019.5791. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Hasan M. Readmission of patients to hospital: still ill defined and poorly understood. Int J Qual Health Care. 2001;13(03):177–179. doi: 10.1093/intqhc/13.3.177. [DOI] [PubMed] [Google Scholar]

- 6.Clarke A. Readmission to hospital: a measure of quality or outcome? Qual Saf Health Care. 2004;13(01):10–11. doi: 10.1136/qshc.2003.008789. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Jencks S F, Williams M V, Coleman E A. Rehospitalizations among patients in the Medicare fee-for-service program. N Engl J Med. 2009;360(14):1418–1428. doi: 10.1056/NEJMsa0803563. [DOI] [PubMed] [Google Scholar]

- 8.Kripalani S, Theobald C N, Anctil B, Vasilevskis E E. Reducing hospital readmission rates: current strategies and future directions. Annu Rev Med. 2014;65:471–485. doi: 10.1146/annurev-med-022613-090415. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Desai N R, Ross J S, Kwon J Y. Association between hospital penalty status under the hospital readmission reduction program and readmission rates for target and nontarget conditions. JAMA. 2016;316(24):2647–2656. doi: 10.1001/jama.2016.18533. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.Lee E W. Selecting the best prediction model for readmission. J Prev Med Public Health. 2012;45(04):259–266. doi: 10.3961/jpmph.2012.45.4.259. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Cholleti S, Post A, Gao J. Leveraging derived data elements in data analytic models for understanding and predicting hospital readmissions. AMIA Annu Symp Proc. 2012;2012:103–111. [PMC free article] [PubMed] [Google Scholar]

- 12.Kulkarni P, Smith L D, Woeltje K F. Assessing risk of hospital readmissions for improving medical practice. Health Care Manage Sci. 2016;19(03):291–299. doi: 10.1007/s10729-015-9323-5. [DOI] [PubMed] [Google Scholar]

- 13.Swain M J, Kharrazi H. Feasibility of 30-day hospital readmission prediction modeling based on health information exchange data. Int J Med Inform. 2015;84(12):1048–1056. doi: 10.1016/j.ijmedinf.2015.09.003. [DOI] [PubMed] [Google Scholar]

- 14.Artetxe A, Beristain A, Graña M. Predictive models for hospital readmission risk: a systematic review of methods. Comput Methods Programs Biomed. 2018;164:49–64. doi: 10.1016/j.cmpb.2018.06.006. [DOI] [PubMed] [Google Scholar]

- 15.van Walraven C, Dhalla I A, Bell C. Derivation and validation of an index to predict early death or unplanned readmission after discharge from hospital to the community. CMAJ. 2010;182(06):551–557. doi: 10.1503/cmaj.091117. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.Cotter P E, Bhalla V K, Wallis S J, Biram R W. Predicting readmissions: poor performance of the LACE index in an older UK population. Age Ageing. 2012;41(06):784–789. doi: 10.1093/ageing/afs073. [DOI] [PubMed] [Google Scholar]

- 17.Damery S, Combes G. Evaluating the predictive strength of the LACE index in identifying patients at high risk of hospital readmission following an inpatient episode: a retrospective cohort study. BMJ Open. 2017;7(07):e016921. doi: 10.1136/bmjopen-2017-016921. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.Hopkins B S, Yamaguchi J T, Garcia R. Using machine learning to predict 30-day readmissions after posterior lumbar fusion: an NSQIP study involving 23,264 patients. J Neurosurg Spine. 2019:1–8. doi: 10.3171/2019.9.SPINE19860. [DOI] [PubMed] [Google Scholar]

- 19.Mahajan S M, Ghani R. Using ensemble machine learning methods for predicting risk of readmission for heart failure. Stud Health Technol Inform. 2019;264:243–247. doi: 10.3233/SHTI190220. [DOI] [PubMed] [Google Scholar]

- 20.Wolff P, Graña M, Ríos S A, Yarza M B. Machine learning readmission risk modeling: a pediatric case study. BioMed Res Int. 2019;2019:8532892. doi: 10.1155/2019/8532892.

- 21.Eckert C, Nieves-Robbins N, Spieker E. Development and prospective validation of a machine learning-based risk of readmission model in a large military hospital. Appl Clin Inform. 2019;10(02):316–325. doi: 10.1055/s-0039-1688553.

- 22.Awan S E, Bennamoun M, Sohel F, Sanfilippo F M, Dwivedi G. Machine learning-based prediction of heart failure readmission or death: implications of choosing the right model and the right metrics. ESC Heart Fail. 2019;6(02):428–435. doi: 10.1002/ehf2.12419.

- 23.Min X, Yu B, Wang F. Predictive modeling of the hospital readmission risk from patients' claims data using machine learning: a case study on COPD. Sci Rep. 2019;9(01):2362. doi: 10.1038/s41598-019-39071-y.

- 24.Kalagara S, Eltorai A EM, Durand W M, DePasse J M, Daniels A H. Machine learning modeling for predicting hospital readmission following lumbar laminectomy. J Neurosurg Spine. 2018;30(03):344–352. doi: 10.3171/2018.8.SPINE1869.

- 25.Kakarmath S, Golas S, Felsted J, Kvedar J, Jethwani K, Agboola S. Validating a machine learning algorithm to predict 30-day re-admissions in patients with heart failure: protocol for a prospective cohort study. JMIR Res Protoc. 2018;7(09):e176. doi: 10.2196/resprot.9466.

- 26.Mahajan S M, Mahajan A S, King R, Negahban S. Predicting risk of 30-day readmissions using two emerging machine learning methods. Stud Health Technol Inform. 2018;250:250–255.

- 27.Baig M M, Hua N, Zhang E. A machine learning model for predicting risk of hospital readmission within 30 days of discharge: validated with LACE index and patient at risk of hospital readmission (PARR) model. Med Biol Eng Comput. 2020;58(07):1459–1466. doi: 10.1007/s11517-020-02165-1.

- 28.Gupta S, Ko D T, Azizi P. Evaluation of machine learning algorithms for predicting readmission after acute myocardial infarction using routinely collected clinical data. Can J Cardiol. 2020;36(06):878–885. doi: 10.1016/j.cjca.2019.10.023.

- 29.Baig M M, Hua N, Zhang E. Machine learning-based risk of hospital readmissions: predicting acute readmissions within 30 days of discharge. Conf Proc IEEE Eng Med Biol Soc. 2019;2019:2178–2181. doi: 10.1109/EMBC.2019.8856646.

- 30.Goto T, Jo T, Matsui H, Fushimi K, Hayashi H, Yasunaga H. Machine learning-based prediction models for 30-day readmission after hospitalization for chronic obstructive pulmonary disease. COPD. 2019;16(5-6):338–343.

- 31.Awan S E, Bennamoun M, Sohel F, Sanfilippo F M, Chow B J, Dwivedi G. Feature selection and transformation by machine learning reduce variable numbers and improve prediction for heart failure readmission or death. PLoS One. 2019;14(06):e0218760. doi: 10.1371/journal.pone.0218760.

- 32.Morgan D J, Bame B, Zimand P. Assessment of machine learning vs standard prediction rules for predicting hospital readmissions. JAMA Netw Open. 2019;2(03):e190348. doi: 10.1001/jamanetworkopen.2019.0348.

- 33.Golas S B, Shibahara T, Agboola S. A machine learning model to predict the risk of 30-day readmissions in patients with heart failure: a retrospective analysis of electronic medical records data. BMC Med Inform Decis Mak. 2018;18(01):44. doi: 10.1186/s12911-018-0620-z.

- 34.Rojas J C, Carey K A, Edelson D P, Venable L R, Howell M D, Churpek M M. Predicting intensive care unit readmission with machine learning using electronic health record data. Ann Am Thorac Soc. 2018;15(07):846–853. doi: 10.1513/AnnalsATS.201710-787OC.

- 35.Desautels T, Das R, Calvert J. Prediction of early unplanned intensive care unit readmission in a UK tertiary care hospital: a cross-sectional machine learning approach. BMJ Open. 2017;7(09):e017199. doi: 10.1136/bmjopen-2017-017199.

- 36.Garcia-Arce A, Rico F, Zayas-Castro J L. Comparison of machine learning algorithms for the prediction of preventable hospital readmissions. J Healthc Qual. 2018;40(03):129–138. doi: 10.1097/JHQ.0000000000000080.

- 37.Mortazavi B J, Downing N S, Bucholz E M. Analysis of machine learning techniques for heart failure readmissions. Circ Cardiovasc Qual Outcomes. 2016;9(06):629–640. doi: 10.1161/CIRCOUTCOMES.116.003039.

- 38.Shameer K, Johnson K W, Yahi A. Predictive modeling of hospital readmission rates using electronic medical record-wide machine learning: a case-study using Mount Sinai Heart Failure Cohort. Pac Symp Biocomput. 2017;22:276–287. doi: 10.1142/9789813207813_0027.

- 39.Frizzell J D, Liang L, Schulte P J. Prediction of 30-day all-cause readmissions in patients hospitalized for heart failure: comparison of machine learning and other statistical approaches. JAMA Cardiol. 2017;2(02):204–209. doi: 10.1001/jamacardio.2016.3956.

- 40.Hood C M, Gennuso K P, Swain G R, Catlin B B. County health rankings: relationships between determinant factors and health outcomes. Am J Prev Med. 2016;50(02):129–135. doi: 10.1016/j.amepre.2015.08.024.

- 41.Negarandeh R, Zolfaghari M, Bashi N, Kiarsi M. Evaluating the effect of monitoring through telephone (tele-monitoring) on self-care behaviors and readmission of patients with heart failure after discharge. Appl Clin Inform. 2019;10(02):261–268. doi: 10.1055/s-0039-1685167.

- 42.Institute of Medicine. Evidence-Based Medicine and the Changing Nature of Healthcare: 2007 IOM Annual Meeting Summary. Washington (DC): National Academies Press; 2008.

- 43.Berwick D M, Nolan T W, Whittington J. The triple aim: care, health, and cost. Health Aff (Millwood). 2008;27(03):759–769. doi: 10.1377/hlthaff.27.3.759.

- 44.Chen S, Bergman D, Miller K, Kavanagh A, Frownfelter J, Showalter J. Using applied machine learning to predict healthcare utilization based on socioeconomic determinants of care. Am J Manag Care. 2020;26(01):26–31. doi: 10.37765/ajmc.2020.42142.

- 45.Shortliffe E H, Sepúlveda M J. Clinical decision support in the era of artificial intelligence. JAMA. 2018;320(21):2199–2200. doi: 10.1001/jama.2018.17163.

- 46.Navathe A S, Zhong F, Lei V J. Hospital readmission and social risk factors identified from physician notes. Health Serv Res. 2018;53(02):1110–1136. doi: 10.1111/1475-6773.12670.

- 47.Romero-Brufau S, Huddleston J M, Escobar G J, Liebow M. Why the C-statistic is not informative to evaluate early warning scores and what metrics to use. Crit Care. 2015;19:285. doi: 10.1186/s13054-015-0999-1.

- 48.Yu S, Farooq F, van Esbroeck A, Fung G, Anand V, Krishnapuram B. Predicting readmission risk with institution-specific prediction models. Artif Intell Med. 2015;65(02):89–96. doi: 10.1016/j.artmed.2015.08.005.

- 49.Lazer D, Kennedy R, King G, Vespignani A. Big data. The parable of Google Flu: traps in big data analysis. Science. 2014;343(6176):1203–1205.