Abstract

Rapid and accurate detection of the COVID-19 coronavirus is the need of the hour to prevent and control this pandemic through timely quarantine and medical treatment in the absence of any vaccine. The daily increase in COVID-19 cases worldwide and the limited number of available detection kits make it difficult to identify the presence of the disease. Therefore, it is necessary to look for alternatives. Among existing, widely available and low-cost resources, X-ray is a frequently used imaging modality, and deep learning techniques have achieved state-of-the-art performance in computer-aided medical diagnosis. Therefore, an alternative diagnostic tool that detects COVID-19 cases using available resources and advanced deep learning techniques is proposed in this work. The proposed method is implemented in four phases, viz., data augmentation, preprocessing, and stage-I and stage-II deep network model design. This study is performed with 1215 images from online resources, and the dataset is further enlarged to 1832 images using data augmentation techniques to improve the generalization of the model and to prevent overfitting. A deep network implemented in two stages is designed to differentiate COVID-19 induced pneumonia from healthy cases and from bacterial and other virus induced pneumonia on chest X-ray images. Comprehensive evaluations have been performed to demonstrate the effectiveness of the proposed method with both (i) training-validation-testing and (ii) 5-fold cross validation procedures. A classification accuracy of 97.77%, recall of 97.14% and precision of 97.14% in COVID-19 detection show the efficacy of the proposed method. Further, the deep network architecture achieves an averaged accuracy/sensitivity/specificity/precision/F1-score of 98.93/98.93/98.66/96.39/98.15 with 5-fold cross validation, which is a promising outcome for COVID-19 detection using X-ray images.

Keywords: Covid-19, Coronavirus detection, Deep learning, X-ray, Pneumonia, Computer-aided diagnosis

1. Introduction

Coronaviruses are a family of viruses that cause illness ranging from the common cold to severe diseases such as Severe Acute Respiratory Syndrome (SARS) and Middle East Respiratory Syndrome (MERS). COVID-19 is the disease caused by a novel coronavirus, SARS-CoV-2. A study by the World Health Organization (WHO) reports that the COVID-19 virus, like SARS, causes open holes in the lungs that give them a honeycomb-like appearance [1]. The first outbreak of COVID-19 was identified in Wuhan, Hubei, China, in December 2019 [2]. Within three months of the first outbreak, on March 11, 2020, the WHO declared COVID-19 a pandemic [3]. By 09 April 2020, the virus had affected more than 15.5 lakh (1.55 million) people, and more than 90 thousand people had lost their lives [4]. A report from Imperial College London suggests that the virus could have infected more than 90% of the world's population and killed 40.6 million people if no mitigation measures had been taken [5].

Most people suffering from COVID-19 have a moderate respiratory illness that can be cured without any special treatment. However, people with medical conditions such as diabetes, chronic respiratory diseases and cardiovascular diseases are more likely to suffer severely from this virus. According to WHO reports, the common symptoms of COVID-19 are the same as those of common flu and include fever, tiredness, dry cough, shortness of breath, aches, pains and sore throat [6]. These common symptoms make it difficult to detect the virus at an early stage. Because COVID-19 is caused by a virus, it cannot be treated with antibiotics, which act on bacterial infections.

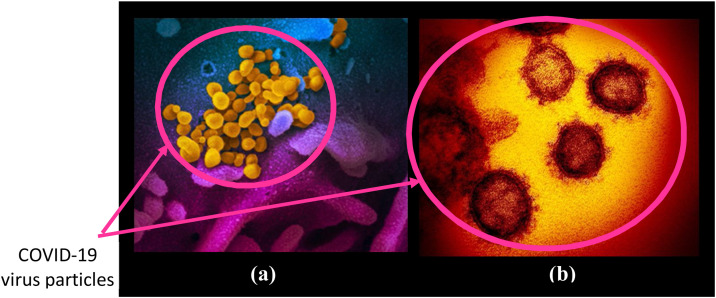

The National Institute of Allergy and Infectious Diseases (NIAID) and Rocky Mountain Laboratories (RML) have released images of the COVID-19 virus obtained using scanning and transmission electron microscopy [7,8]. Fig. 1 shows sample images of the COVID-19 virus captured by NIAID and RML using different microscopes. The image in Fig. 1(a) shows the COVID-19 virus from a US patient captured by a scanning electron microscope, where the virus particles, shown in yellow, emerge from cells shown in blue and pink. The image in Fig. 1(b) is captured by a transmission electron microscope. This figure illustrates that, from the outside, the COVID-19 virus looks similar to most coronaviruses, including SARS and MERS, sharing the bump-covered spherical surface.

Fig. 1.

COVID-19 virus image captured by: (a) scanning electron microscope in false color; (b) transmission electron microscope.

1.1. Challenges

Bacterial and viral pathogens are the two leading causes of pneumonia. It has been found in some patients that the COVID-19 virus, like other bacteria and viruses, causes pneumonia. However, the treatment is different in each of these cases. The initial screening/testing reveals whether an individual has pneumonia or not. Further diagnosis of the pneumonia, that is, whether the patient has COVID-19 virus induced pneumonia, bacterial pneumonia, or a viral pneumonia other than COVID-19, is crucial to prevent the spread of the virus. If an individual is found to be infected, appropriate measures can be taken according to the diagnosis. Bacterial pneumonia requires intensive antibiotic treatment, while viral pneumonia is treated with intensive care. Precautionary measures in the case of the COVID-19 virus also include keeping the patient in quarantine for some days to reduce the possibility of infecting others. It is also crucial to determine the spread of the COVID-19 virus in various parts of the world and to take appropriate measures to slow it down. Therefore, accurate and timely diagnosis of COVID-19 virus induced pneumonia poses the biggest challenge.

The WHO-approved method of testing for coronavirus is the reverse transcription polymerase chain reaction (RT-PCR) method, in which short sequences of DNA or RNA are analyzed and reproduced or amplified [9]. However, some people require more than one test to rule out the possibility of coronavirus infection. The WHO guidelines for laboratory testing state that a negative result does not rule out the possibility that the person carries the virus [10]. The limited availability of screening workstations and testing kits to detect COVID-19 places a tremendous burden on medical professionals and staff. In this scenario, rapid and accurate detection of suspected COVID-19 cases is a great challenge for medical experts. The exponential increase in cases also raises the need for multiple tests to understand the true situation and to make appropriate decisions accordingly.

Early detection of suspected COVID-19 cases is also a challenge for public health security and control of the pandemic. Any failure to detect COVID-19 virus induced disease results in an increase in the mortality rate. The incubation period, i.e., the time between catching the virus and beginning to have symptoms of the disease, is 1–14 days. This makes it much more difficult to detect COVID-19 at a very early stage based on the symptoms shown by the individual.

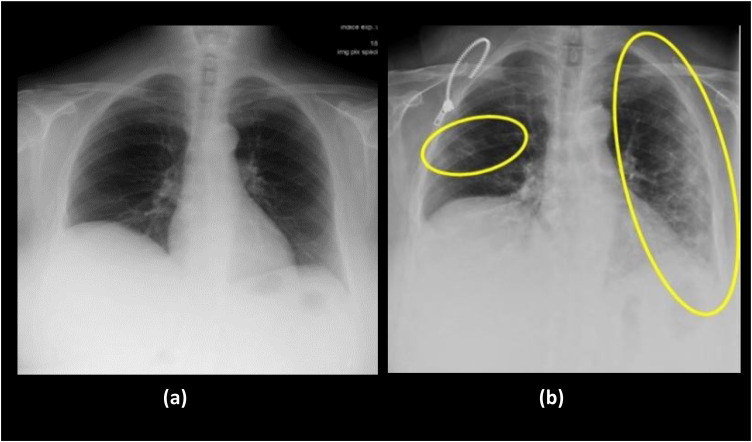

Among the many available imaging modalities, chest radiography is considered to have suboptimal sensitivity for important clinical findings [11,12]. Nevertheless, X-ray imaging is a modality frequently used by medical practitioners to diagnose pneumonia, since X-ray imaging systems are an essential part of medical care worldwide. The easy availability of X-ray machines makes them a timely choice for detecting COVID-19 cases in the absence of screening workbenches and kits. There may also be cases where patients are imaged for other reasons and their scans reveal findings potentially suggestive of COVID-19. The X-ray images shown in Fig. 2 are chest images of a patient taken one year apart, before and after COVID-19 infection [13]. The findings from X-ray images strongly suggest that even in the initial stages of COVID-19, the effect can be seen in the lungs, particularly in the lower lobes and posterior segments, with peripheral and subpleural distribution. The lesions become more diffuse as time progresses. However, the biggest challenge encountered here is that examining each X-ray image and extracting the important findings takes a lot of valuable time and requires the presence of medical experts in the domain. Therefore, computer assistance is needed to aid medical practitioners in detecting COVID-19 cases with X-ray images. In the current scenario, where lakhs of people need to be checked each day for the COVID-19 virus, an automatic, reliable and accurate computer-aided method is imperative to detect the presence of the disease. Deep learning techniques contribute significantly to the computer-aided analysis of medical images and show excellent performance. Therefore, the present work proposes a deep learning based two-stage method to detect and classify pneumonia cases using X-ray images.

Fig. 2.

X-ray images of lungs taken in year: (a) 2019; (b) 2020 from a 72-year-old woman suffering from COVID-19 who presented with cough and respiratory distress. The yellow circle and ovoid indicate the typical subpleural peripheral opacities [13].

2. State-of-the-Art methods

Methods that have been designed for the automated detection of COVID-19 cases are summarized in Table 1. These state-of-the-art methods use either chest X-ray images [[14], [15], [16], [17], [18], [19], [20]] or CT images [[21], [22], [23]] and are based on deep learning approaches. Conventional machine learning approaches, in comparison to deep learning approaches, depend heavily on expertise in the extraction and selection of relevant features and show limited performance. Therefore, in recent years, deep learning techniques have been preferred due to major advantages such as (i) maximum utilization of unstructured data, (ii) elimination of the need for feature engineering, (iii) ability to deliver high-quality results, (iv) elimination of unnecessary costs, and (v) elimination of the need for data labeling. Deep learning techniques are thus frequently used nowadays to automatically extract relevant features to classify the object of interest. Table 1 shows that researchers have trained deep learning networks such as MobileNet, residual networks and VGG, and it compares their performance in the detection of COVID-19 cases. Apostolopoulos and Bessiana achieved 97.8% accuracy in the classification of COVID-19 with the VGG19 architecture [14]. Ozturk et al. classified COVID-19, no-finding and pneumonia cases with 87% accuracy [15]. Sethy et al. [16] classified COVID-19+ and COVID-19- cases. However, the state-of-the-art studies listed so far are not designed to differentiate COVID-19 induced pneumonia cases from other viral induced pneumonia cases. Such identification is needed to avoid misdiagnosing COVID-19 infection as a common viral infection, because COVID-19 infection has a different line of treatment. In addition, Abhiyev et al. [24], Tariq et al. [25], Bharati et al. [26] and Apostolopoulos et al. [27] proposed pulmonary chest disease classification by applying deep learning approaches. Research is beginning to focus on distinguishing COVID-19 cases from a variety of other pulmonary diseases such as fibrosis, edema and effusion.

Table 1.

Brief detail of previous research works related to COVID-19 detection.

| Author (Year) [reference no.] | Method used | Data type | Training model | Image classes | Accuracy (%) |
|---|---|---|---|---|---|
| Apostolopoulos and Bessiana (2020) [14] | Deep Transfer Learning | Chest X-ray | VGG 19, Mobile Net | COVID-19, Pneumonia, Normal | 97.8 |
| Ozturk (2020) [15] | Deep Learning | Chest X-ray | DarkCovidNet | Covid+, Pneumonia, No-findings | 87.0 |
| Sethy (2020) [16] | Deep Learning | Chest X-ray | ResNet50 + SVM | COVID-19+ vs COVID-19- | 95.4 |
| Yoo (2020) [17] | Deep Learning | Chest X-ray | ResNet 18 | COVID-19 vs Tuberculosis | 95.0 |
| Panwar (2020) [18] | Deep Learning | Chest X-ray | nCOVnet using VGG16 | COVID-19 vs other | 88.1 |
| Albahli (2020) [19] | Deep Learning | Chest X-ray | ResNet152 | COVID-19+ vs Other chest diseases | 87.0 |
| Civit-Masot (2020) [20] | Deep Learning | Chest X-ray | VGG16 | COVID-19+ vs Other | 86.0 |
| Wang (2020) [21] | Deep Learning | Chest CT | DeCovNet | COVID-19+ vs COVID-19- | 90.1 |
| Singh (2020) [22] | Deep Learning | Chest CT | MODE-based CNN | COVID-19 vs COVID-19- | 93.3 |
| Ahuja (2020) [23] | Deep Learning | Chest CT | ResNet 18 | COVID-19 vs COVID-19- | 99.4 |

Therefore, the present work deals with the design of a novel deep network-based two-stage approach to detect COVID-19 induced pneumonia cases. At the first stage, clinical cases are classified into viral pneumonia, bacterial pneumonia and normal cases with the ResNet50 deep network architecture. Since COVID-19 induced pneumonia is caused by a virus, at the second stage all the identified viral pneumonia cases are differentiated into COVID-19 induced pneumonia and other viral pneumonia with the ResNet101 deep network architecture. This two-stage approach is designed to provide a fast, systematic and reliable computer-aided solution for the detection of COVID-19 cases in patients who visit hospitals and undergo initial screening with a chest X-ray scan. Comprehensive evaluations have been performed to demonstrate the effectiveness of the proposed method with both (i) training-validation-testing and (ii) 5-fold cross validation procedures. Further, various experiments have been performed to demonstrate the strength of the ResNet101 architecture in detecting COVID-19 cases in comparison to the other deep learning architectures used in state-of-the-art studies.

3. Materials

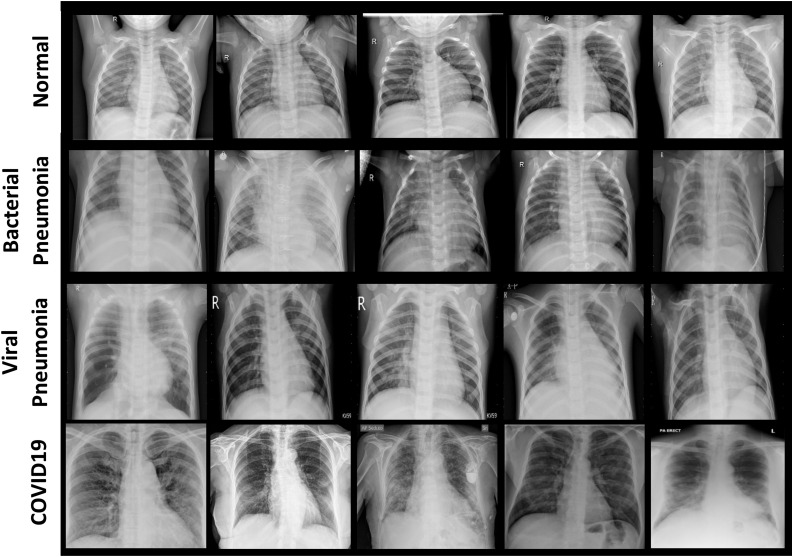

Two open-source image databases, Cohen [28] and Kaggle [29], are used to carry out this research work. Fig. 3 shows a few example chest X-ray images of the four cases considered in this research work, i.e., normal/healthy (first row), bacterial pneumonia (second row), viral pneumonia (third row) and COVID-19 (fourth row). Chest X-ray images of COVID-19 infected patients have been obtained from the GitHub repository shared by Dr. Joseph Cohen, which consists of annotated chest X-ray and CT scan images of COVID-19, acute respiratory distress syndrome (ARDS), severe acute respiratory syndrome (SARS) and Middle East respiratory syndrome (MERS). This repository contains 250 chest X-ray images of confirmed COVID-19 virus infection. The chest X-ray images of healthy persons and of patients suffering from bacterial and other viral pneumonia have been obtained from the Kaggle repository [29]. It contains 315 chest X-ray images of normal healthy persons, 300 images of patients suffering from bacterial pneumonia and 350 images of patients suffering from viral pneumonia.

Fig. 3.

Example chest X-ray images of four different cases: Normal / healthy person (first row), Patient suffering from bacterial pneumonia (second row), patient suffering from viral pneumonia (third row) and patient suffering from COVID-19 (fourth row).

4. Methodology

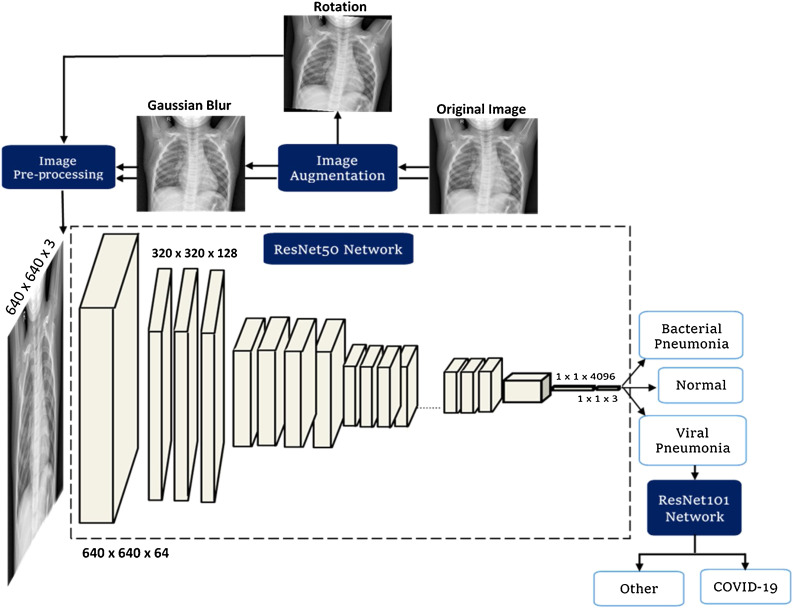

The proposed methodology, as shown in Fig. 4, consists of four phases, namely (i) image preprocessing, (ii) data augmentation, (iii) training of the deep learning ResNet50 network to differentiate viral induced pneumonia, bacterial induced pneumonia and normal cases, and (iv) training of the ResNet101 network to detect the presence of COVID-19 among positive viral induced pneumonia cases using X-ray images. The steps followed in these four phases are explained in this section.

Fig. 4.

Systematic block diagram of the proposed method to identify the presence of COVID-19 virus using X-ray images by differentiating pneumonia caused by COVID-19 virus from the pneumonia caused by bacteria and other viruses.

*Viral Pneumonia + indicates that the viral pneumonia X-ray images are combined with the COVID-19 X-ray images in the dataset of the stage-I network.

4.1. Image preprocessing

The image preprocessing is done in two steps:

Step 1: All the originally acquired images were first checked to find the minimum height and width present in the dataset. All the dataset images were then resized to this minimum dimension, which in our work is 640 × 640.

Step 2: Pre-processing of the resized images is done according to the ImageNet database. ImageNet is a publicly available computer vision dataset containing millions of images in more than a thousand image classes. The ImageNet Large Scale Visual Recognition Challenge (ILSVRC) uses subsets of images from the ImageNet database to foster the development of state-of-the-art algorithms [30,31]. A network already trained on the ImageNet database is used in this work as the starting point for training on our dataset. This method, in which a network pre-trained on ImageNet is further trained on a new dataset, is termed transfer learning. Therefore, the resized images obtained after Step 1 are preprocessed in the same way as the images in the ImageNet database: a pixel-wise division by 255, followed by subtraction of the ImageNet mean and division by the ImageNet standard deviation. Each pixel of the three channels in an image is individually normalized with the following ImageNet statistics:

Mean: 0.485 for channel1, 0.456 for channel2, and 0.406 for channel3.

Standard deviation: 0.229 for channel1, 0.224 for channel2, 0.225 for channel3.

This preprocessing step of image normalization is integrated in FastAi library before training the model.
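The two preprocessing steps can be illustrated with a minimal, library-free sketch. It is not the FastAi implementation; the helper names `minimum_dimensions` and `normalize_pixel` and the example image sizes are assumptions made for illustration, while the mean and standard deviation values are the ones quoted above.

```python
# ImageNet channel statistics quoted in the text (for inputs scaled to [0, 1]).
IMAGENET_MEAN = (0.485, 0.456, 0.406)
IMAGENET_STD = (0.229, 0.224, 0.225)


def minimum_dimensions(sizes):
    """Return the smallest height and the smallest width among (height, width) pairs."""
    min_height = min(h for h, _ in sizes)
    min_width = min(w for _, w in sizes)
    return min_height, min_width


def normalize_pixel(rgb):
    """Normalize one 8-bit RGB pixel: divide by 255, subtract mean, divide by std."""
    return tuple(
        (value / 255.0 - mean) / std
        for value, mean, std in zip(rgb, IMAGENET_MEAN, IMAGENET_STD)
    )


# Hypothetical image sizes, used only to illustrate Step 1.
example_sizes = [(1024, 768), (640, 900), (800, 640)]
target_size = minimum_dimensions(example_sizes)

# A mid-gray pixel, used only to illustrate Step 2.
normalized = normalize_pixel((128, 128, 128))
```

In practice the same arithmetic is applied to every pixel of every resized image, channel by channel.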

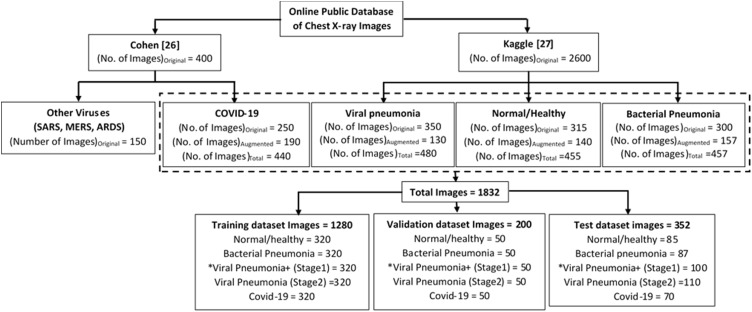

4.2. Data augmentation

Data augmentation is a strategy that makes it possible to increase the amount of data significantly. Fig. 5 shows the distribution of X-ray images obtained from both databases in each of the four classes, viz., normal/healthy and pneumonia caused by viral, bacterial and COVID-19 infection. It can be clearly observed from Fig. 5 that the number of originally acquired images differs between the image classes. This difference creates a class imbalance, which can cause problems such as overfitting, in which the model cannot generalize well to unseen data; in addition, accuracy is not a suitable performance metric in such a case. Overfitting causes the model to learn the details of the training dataset to the extent that it cannot generalize well. Therefore, to combat overfitting, a regularization technique, data augmentation, is employed in this work. Consequently, the number of images of COVID-19 and the other classes is increased using augmentation techniques to prevent the model from overfitting. The data augmentation techniques employed in this work are rotation and Gaussian blur.

(i) Rotation: Images were rotated by various angles in the range of -15° to 15° to generate more images, and these augmented images were included in the training dataset.

(ii) Gaussian blur: A Gaussian filter of kernel size 5 × 5 is employed to remove high-frequency components from the images and make them blurred or smoother. The images obtained after blurring by the Gaussian filter are also included in the training dataset.

Fig. 5.

Distribution of acquired database among various classes showing number of images.

However, while testing the networks, only non-augmented images were passed into the network to check the robustness of the model when predicting the class of an image. In this way, it is ensured that the model is not over-fitted to only the augmented images.
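The two augmentation operations described in this section, rotation within ±15° and a 5 × 5 Gaussian blur, can be sketched in plain Python as follows. This is a minimal illustration and not the code used in the study: the Gaussian `sigma`, the nearest-neighbor sampling, the zero fill for pixels rotated in from outside the image and the edge clamping in the blur are all assumptions, since the text specifies only the angle range and the kernel size.

```python
import math
import random


def gaussian_kernel(size=5, sigma=1.0):
    """Build a normalized size x size Gaussian kernel (sigma is an assumed value)."""
    half = size // 2
    kernel = [
        [math.exp(-(x * x + y * y) / (2.0 * sigma * sigma))
         for x in range(-half, half + 1)]
        for y in range(-half, half + 1)
    ]
    total = sum(sum(row) for row in kernel)
    return [[v / total for v in row] for row in kernel]


def blur(image, kernel):
    """Filter a 2-D grayscale image (list of rows), clamping coordinates at the edges."""
    height, width = len(image), len(image[0])
    half = len(kernel) // 2
    out = [[0.0] * width for _ in range(height)]
    for r in range(height):
        for c in range(width):
            acc = 0.0
            for kr, krow in enumerate(kernel):
                for kc, weight in enumerate(krow):
                    rr = min(max(r + kr - half, 0), height - 1)
                    cc = min(max(c + kc - half, 0), width - 1)
                    acc += weight * image[rr][cc]
            out[r][c] = acc
    return out


def rotate(image, angle_deg, fill=0.0):
    """Rotate a 2-D image about its center using nearest-neighbor sampling."""
    height, width = len(image), len(image[0])
    cy, cx = (height - 1) / 2.0, (width - 1) / 2.0
    a = math.radians(angle_deg)
    cos_a, sin_a = math.cos(a), math.sin(a)
    out = [[fill] * width for _ in range(height)]
    for r in range(height):
        for c in range(width):
            # Inverse mapping: locate the source pixel for each output pixel.
            dx, dy = c - cx, r - cy
            src_c = int(round(cx + dx * cos_a + dy * sin_a))
            src_r = int(round(cy - dx * sin_a + dy * cos_a))
            if 0 <= src_r < height and 0 <= src_c < width:
                out[r][c] = image[src_r][src_c]
    return out


def augment(image, rng):
    """Return a randomly rotated copy and a Gaussian-blurred copy of the image."""
    angle = rng.uniform(-15.0, 15.0)
    return [rotate(image, angle), blur(image, gaussian_kernel(5))]
```

Only the training images would be passed through `augment`; the test images are left untouched, as stated above.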

4.3. Transfer learning with convolutional neural network

The transfer learning method is employed to train the convolutional neural network (CNN) in the present work. In transfer learning, a CNN pre-trained on the ImageNet database is loaded with its saved weights and then trained on the dataset used in this work. The advantage of using transfer learning to train the CNN is that the initial layers of the network are already trained; these layers are otherwise very hard to train due to the vanishing gradient problem. The other benefit is that the network has already learned basic features such as shapes and edges in images. Thus, the pre-trained model benefits from the knowledge acquired from the existing database in the form of basic image features. This method of training reduces the computational time, as only the final layers of the network need to be trained.

Further, among the various CNN architectures, the residual network architectures (ResNet) have outperformed other network architectures such as VGG, Inception and dense networks in terms of computational time and accuracy in a variety of challenges [[32], [33], [34], [35], [36]]. Therefore, the proposed method to detect the presence of COVID-19 is implemented in two stages with a different residual network at each stage [36]. Both residual networks have been trained via transfer learning.

In the pre-trained model, the last layers are adapted according to the number of classes into which the dataset is to be differentiated. The last convolutional layer stores the extracted features, which pass through the rest of the model to be converted into predictions for each of the classes. The pre-trained weights of the initial convolutional layers, which serve as the backbone of the model, are frozen, and only the last convolutional layers are trained to convert the extracted features into predictions for the specified classes of the new dataset. At the last convolutional layer, the model is cut and the following new layers are added: (i) AdaptiveConcatPool2D layer, (ii) flatten layer, (iii) blocks of Rectified Linear Unit (ReLU), (iv) dropout layer, (v) linear layer and (vi) BatchNorm1D. The last linear block has a number of outputs equal to the number of classes into which the dataset is to be classified. The same methodology is followed at both stages of the proposed method.

The whole pre-processed dataset of X-ray images was split in an 80:20 ratio according to the Pareto principle. This means that 80% of the whole dataset is used for training (70%) and validation (10%), and the remaining 20% is used for testing the network. Further, as shown in Fig. 5, the same number of images in each image class, viz., bacterial pneumonia, viral pneumonia, normal/healthy and COVID-19, is taken to construct the training and validation datasets.
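The 70/10/20 split can be sketched as below. This is a minimal illustration under stated assumptions: the function name `split_dataset`, the fixed random seed and the file names are hypothetical, and the sketch shuffles the whole list rather than balancing the classes, which the study does separately for the training and validation sets.

```python
import random


def split_dataset(items, train=0.7, valid=0.1, seed=0):
    """Shuffle items and split them into train/validation/test subsets."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_train = int(round(train * len(shuffled)))
    n_valid = int(round(valid * len(shuffled)))
    return (
        shuffled[:n_train],
        shuffled[n_train:n_train + n_valid],
        shuffled[n_train + n_valid:],  # the remainder is the test set
    )


# Hypothetical file names standing in for the pre-processed X-ray images.
files = [f"image_{i:04d}.png" for i in range(100)]
train_set, valid_set, test_set = split_dataset(files)
```

A class-balanced variant would apply the same function to each class separately and concatenate the resulting subsets.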

Stage-I residual network

Residual network of the stage-I is trained to differentiate X-ray images of bacterial induced pneumonia, viral induced pneumonia and normal healthy people. Residual network architecture with 50 layers (ResNet50) is employed at this stage [35].

Learning Rate assessment

The learning rate is a very important hyper-parameter while training deep learning networks. After each epoch or iteration, the weights of the neurons are updated according to the loss between the predicted and ground-truth values. After each epoch, the weights ($w$) are updated by the formula shown in eq. (1).

| $w_{t+1} = w_t - \alpha \, \dfrac{\partial L}{\partial w_t}$ | (1) |

where $t$ is the number of epochs processed, $L$ is the loss function and $\partial L / \partial w_t$ is the gradient of the loss with respect to the weight $w_t$. Here $\alpha$ is the learning rate and $w_{t+1}$ is the updated new weight. The choice of the optimal learning rate can be very hard, as (i) a high learning rate can cause the weights to converge too quickly to sub-optimal values and can make the training process unstable, (ii) a small learning rate slows down the training of the network, and (iii) repeated trials over different learning rates to optimize the network model need manual intervention. Therefore, in the present work, the learning rate is chosen according to the study by Smith [37], in which the best rate is found by varying the learning rate cyclically between reasonable boundary limits. Training networks with cyclically varying learning rates instead of fixed values often achieves improved classification accuracy in fewer iterations and without the need for manual intervention. This method of finding the optimal learning rate is available in the FastAi library through the function lr_find, which launches a learning rate range test to provide a defined way to find the optimal learning rate.
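The weight update of eq. (1), a triangular cyclical schedule in the style described by Smith [37], and a learning rate range test can be sketched in plain Python. This is an illustration only and not the internals of FastAi's lr_find: the function names, the exponential sweep and the toy loss in the usage lines are assumptions.

```python
import math


def sgd_step(weight, gradient, learning_rate):
    """Apply one gradient-descent update: w_new = w - alpha * dL/dw."""
    return weight - learning_rate * gradient


def triangular_lr(iteration, base_lr, max_lr, step_size):
    """Learning rate that rises linearly to max_lr and falls back every 2 * step_size."""
    cycle = math.floor(1 + iteration / (2 * step_size))
    x = abs(iteration / step_size - 2 * cycle + 1)
    return base_lr + (max_lr - base_lr) * max(0.0, 1.0 - x)


def lr_range_test(loss, grad, w0, lr_min=1e-4, lr_max=10.0, steps=30):
    """Sweep the learning rate exponentially and record (lr, loss) after each step."""
    history = []
    w = w0
    for i in range(steps):
        lr = lr_min * (lr_max / lr_min) ** (i / (steps - 1))
        w = sgd_step(w, grad(w), lr)
        history.append((lr, loss(w)))
    return history


# Toy quadratic loss L(w) = w^2, assumed purely for illustration.
history = lr_range_test(lambda w: w * w, lambda w: 2.0 * w, w0=1.0)
```

Plotting the recorded loss against the learning rate gives a curve of the kind used to pick a rate from the region where the loss is still falling.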

Stage-II residual network

Residual network of stage-II is trained to detect the presence of COVID-19 induced pneumonia from other virus-induced pneumonia on X-ray images. Therefore, two sets of images are used to model stage-II network (i) chest X-ray images of patients suffering from COVID-19, and (ii) chest X-ray images of patients suffering from other viral induced pneumonia. Residual network architecture with 101 layers (ResNet101) is employed at this stage.

At the time of final implementation of the proposed method, the samples that are predicted positive for viral pneumonia at stage-I are passed into the stage-II model to detect the presence of the COVID-19 virus.
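The cascade of the two stages can be sketched as a small routing function. The label strings and the callables `stage1` and `stage2` are placeholders assumed for illustration; in the proposed method they correspond to the trained ResNet50 and ResNet101 classifiers.

```python
def two_stage_predict(image, stage1, stage2):
    """Run stage-I and forward only viral pneumonia predictions to stage-II."""
    first = stage1(image)
    if first != "viral pneumonia":
        # Bacterial pneumonia and normal cases are final after stage-I.
        return first
    # Stage-II separates COVID-19 from other viral pneumonia.
    return stage2(image)


# Stub classifiers standing in for the trained networks (hypothetical behavior).
def stub_stage1(image):
    return image["stage1_label"]


def stub_stage2(image):
    return "COVID-19" if image["covid"] else "other viral pneumonia"


example = {"stage1_label": "viral pneumonia", "covid": True}
prediction = two_stage_predict(example, stub_stage1, stub_stage2)
```

One consequence of this design is that a COVID-19 case misclassified as bacterial pneumonia or normal at stage-I never reaches stage-II, so the recall of stage-I for viral pneumonia bounds the recall of the whole system.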

4.4. Performance metrics

Performance of the proposed two-stage COVID-19 detection system is evaluated via clinically important statistical measures like accuracy, precision and recall. These measures are briefly described as:

a) Accuracy: It evaluates the capability of a method by measuring the ratio of correctly predicted cases to the total number of cases. Mathematically, it is expressed as:

| Accuracy = (TP + TN)/(TP + FP + FN + TN) | (2) |

where TP: number of correct predictions of positive cases by the method; TN: number of correct predictions of negative cases by the method; FP: number of incorrect predictions of positive cases by the method; FN: number of incorrect predictions of negative cases by the method. However, accuracy alone is not always adequate to evaluate the performance of a model, especially in the case of an asymmetrical dataset. Therefore, other performance metrics also need to be evaluated to test the model.

b) Precision: It is the ratio of correctly predicted positive cases to the total predicted positive cases. High precision corresponds to a low false positive rate. It is expressed as:

| Precision = TP/(TP + FP) | (3) |

c) Recall: It is the ratio of correctly predicted positive observations to all the observations in the actual positive class.

| Recall = TP/(TP + FN) | (4) |

d) Specificity: It is the ratio of correctly predicted negative observations to all the actual negative observations.

| Specificity = TN/(FP + TN) | (5) |

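e) F1-Score: The F1-score is used in the case of uneven class distribution, especially with a large number of true negative observations. It is the harmonic mean of precision and recall and therefore provides a balance between the two.

| F1-Score = 2 × (Precision × Recall)/(Precision + Recall) | (6) |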

f) Receiver operating characteristics (ROC) curve: It plots the true positive rate (sensitivity) against the false positive rate (1 - specificity) across a wide range of decision thresholds, and the area under the curve represents the predictive ability of a binary classifier. A high value of the area under the curve indicates that the model performs well on the unseen dataset.
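The metrics listed above can be computed from the four confusion counts as in the following sketch. The counts in the usage lines are illustrative values, not results from this study, and the function names are assumptions. The area under the ROC curve is computed here through its equivalent pairwise-ranking form: the fraction of positive/negative pairs in which the positive sample receives the higher score, with ties counted as one half.

```python
def binary_metrics(tp, fp, fn, tn):
    """Compute accuracy, precision, recall, specificity and F1 from confusion counts."""
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    specificity = tn / (fp + tn)
    f1 = 2 * precision * recall / (precision + recall)
    return {
        "accuracy": accuracy,
        "precision": precision,
        "recall": recall,
        "specificity": specificity,
        "f1": f1,
    }


def roc_auc(labels, scores):
    """Area under the ROC curve via pairwise comparison of positive and negative scores."""
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positives) * len(negatives))


# Illustrative counts and scores (hypothetical, not taken from the experiments).
metrics = binary_metrics(tp=8, fp=2, fn=1, tn=9)
auc = roc_auc([1, 1, 0, 0], [0.9, 0.4, 0.6, 0.1])
```

The sketch assumes that both classes are present and that at least one positive prediction is made; otherwise the divisions are undefined.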

5. Results and discussion

The proposed COVID-19 detection method is implemented in Python with the FastAi library. FastAi requires very little code for standard deep learning tasks and hence can provide state-of-the-art results quickly and easily. Additionally, this library takes care of variations in image sizes and normalization methods according to the model. FastAi facilitates faster training of deep learning networks compared to Keras, TensorFlow and PyTorch and achieves a higher accuracy. FastAi is built on top of PyTorch, which means the trained models are in PyTorch format. An Intel i9-9820X CPU with an NVIDIA GeForce RTX 2080 Ti GPU having 16 GB memory is used; the GPU provides separate memory to train the model, which decreases the overall computational time. Jupyter notebook is used to write the Python code.

5.1. Stage-I network model (ResNet50)

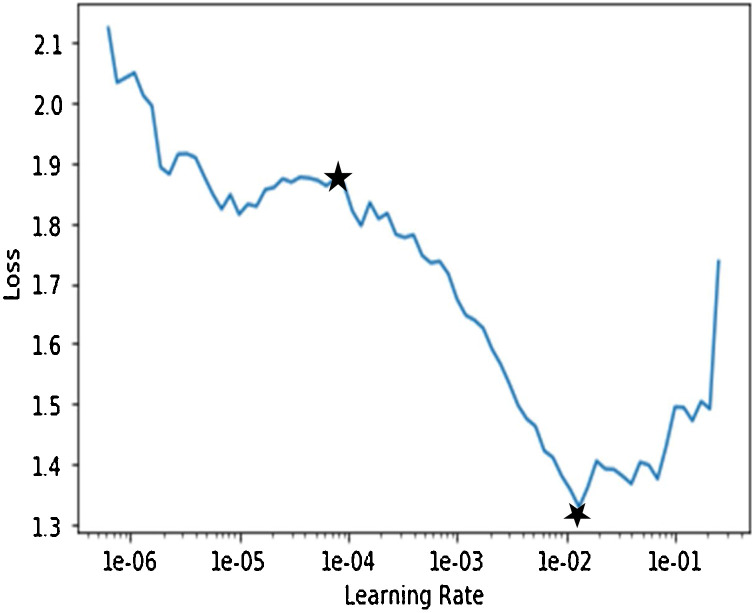

The deep network model of stage-I is trained to differentiate X-ray images of bacterial induced pneumonia, viral induced pneumonia and normal healthy cases. Deep network training involves the backpropagation of errors from the last layer to the first, and the weights change constantly in this process. Various model parameters were optimized during training. The parameter values used for training the stage-I network model are (i) number of layers: 50, (ii) batch size: 8, (iii) number of epochs: 11, (iv) optimizer: gradient descent, (v) loss function: categorical cross entropy and (vi) activation function of the last (classification) layer: softmax. The learning rate is one more important parameter. It is optimized in this work by analyzing the plot of loss vs learning rate during training of the stage-I network model, as shown in Fig. 6. Here, the loss reflects the deviation of the value predicted by the model from the ground-truth (labelled) value: the higher the loss, the higher the deviation. The learning range can be read from this plot to find an appropriate learning rate to train the model. Within the stars marked on the plot, the loss decreases continuously from approximately 1e-04 to approximately 1e-02. Therefore, to decrease the loss continuously during the training phase, any value from 1e-04 to 1e-02 can be chosen. Any value greater than this range results in increasing loss between the predicted and desired outputs. Starting with a low learning rate, the loss improves slowly at first and then training accelerates. Thus, the value chosen for training the model is 1e-04.

Fig. 6.

Plot of training loss with the learning rate for stage-1 ResNet50 network.
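The softmax activation of the classification layer and the categorical cross-entropy loss named among the training parameters can be sketched for a single sample as follows. The raw scores (`logits`) are hypothetical values chosen for illustration, and the class order is an assumption.

```python
import math


def softmax(logits):
    """Convert raw class scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(probs, true_index):
    """Categorical cross entropy for one sample with a one-hot ground truth."""
    return -math.log(probs[true_index])


CLASSES = ["bacterial pneumonia", "normal", "viral pneumonia"]

# Hypothetical raw outputs of the last linear layer for one X-ray image.
logits = [0.5, 0.1, 2.0]
probs = softmax(logits)
predicted = CLASSES[probs.index(max(probs))]
loss = cross_entropy(probs, CLASSES.index("viral pneumonia"))
```

The loss is small when the probability assigned to the ground-truth class is close to 1 and grows as that probability falls, which is the deviation that the loss vs learning rate plot tracks.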

The trained ResNet50 model has 95.3% training accuracy. The validation dataset consists of 150 X-ray images, of which 50 are of patients suffering from bacterial pneumonia, 50 are of patients suffering from viral pneumonia and the remaining 50 are of normal healthy people. Some X-ray images in the validation dataset of people suffering from bacterial and viral pneumonia were misclassified as normal due to the close resemblance between the images of these classes. In addition, it is also possible that a patient infected with viral or bacterial pneumonia is at such an initial stage that the lungs are not yet much affected by the disease.

Further, the images of the validation dataset are divided into the following three categories to illustrate the decision making of the stage-I network model: (i) random samples, (ii) most incorrect samples and (iii) most correct samples. This segregation of validation images is made on the basis of the loss between the predicted and ground-truth values and the probability score for the actual class. The activation maps of the X-ray images in these three categories depict the areas of the image from which the model has learned features to distinguish among the classes, and show the regions of the image that lead the model to predict a particular class.

While testing the model, the network predicts a probability score (or weightage) for each class. The probability score of the predicted class is based on the loss value between the actual ground truth and the value predicted by the model. The class with the highest probability score (minimum loss value) is assigned as the predicted class. The confusion matrix shown in Table 2 summarizes the results on the test dataset. It can be seen that the designed model at stage-I predicts (i) bacterial pneumonia with 91.95% (80/87) recall and 93.02% (80/86) precision, (ii) viral pneumonia with 92.00% (92/100) recall and precision, and (iii) normal cases with 95.29% (81/85) recall and 94.19% (81/86) precision. Out of 87 test images of bacterial pneumonia, 3 are misclassified as normal and 4 are misclassified as viral pneumonia. Out of 100 test images of viral pneumonia, 2 are misclassified as normal and 6 are misclassified as bacterial pneumonia. Out of 85 test images of healthy cases, 4 are misclassified as viral pneumonia. Thus, the designed model at stage-I shows a sufficiently high overall classification accuracy of 93.01% on the test dataset.

Table 2.

Confusion matrix on the test results at stage-I of deep network classifier.

| Predicted class | Ground truth: Bacterial Pneumonia | Ground truth: Normal | Ground truth: Viral Pneumonia | Overall classification | Precision |
|---|---|---|---|---|---|
| Bacterial Pneumonia | 80 | 0 | 6 | 86 | 93.02% |
| Normal | 3 | 81 | 2 | 86 | 94.19% |
| Viral Pneumonia | 4 | 4 | 92 | 100 | 92.00% |
| Overall ground truth | 87 | 85 | 100 | 272 | |
| Recall | 91.95% | 95.29% | 92.00% | | |
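The precision, recall and overall accuracy reported for stage-I follow directly from the counts in Table 2. The short sketch below recomputes them, assuming the table layout in which rows are predicted classes and columns are ground-truth classes; the variable and function names are illustrative only.

```python
# Rows are predicted classes, columns are ground-truth classes (Table 2 layout).
CLASSES = ["bacterial", "normal", "viral"]
CONFUSION = [
    [80, 0, 6],   # predicted bacterial pneumonia
    [3, 81, 2],   # predicted normal
    [4, 4, 92],   # predicted viral pneumonia
]


def per_class_metrics(matrix):
    """Return (precision list, recall list, overall accuracy) as fractions."""
    n = len(matrix)
    total = sum(sum(row) for row in matrix)
    correct = sum(matrix[i][i] for i in range(n))
    # Precision: correct predictions divided by the row total (all predictions of the class).
    precision = [matrix[i][i] / sum(matrix[i]) for i in range(n)]
    # Recall: correct predictions divided by the column total (all true members of the class).
    recall = [matrix[i][i] / sum(matrix[r][i] for r in range(n)) for i in range(n)]
    return precision, recall, correct / total


precision, recall, accuracy = per_class_metrics(CONFUSION)
for name, p, r in zip(CLASSES, precision, recall):
    print(f"{name}: precision={100 * p:.2f}% recall={100 * r:.2f}%")
print(f"overall accuracy={100 * accuracy:.2f}%")
```

The overall accuracy is the sum of the diagonal (253) divided by the number of test images (272).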

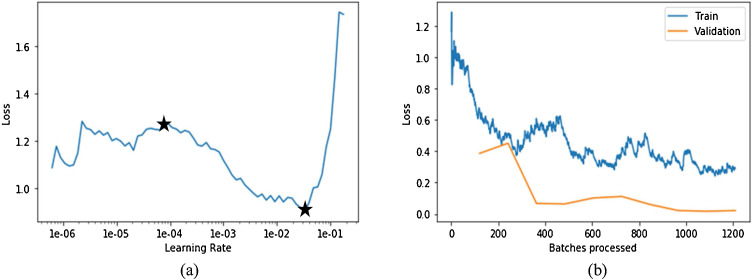

5.2. Stage-II network model (ResNet101)

The stage-II deep network model is trained separately to differentiate COVID-19 from other viral pneumonia in X-ray images. The optimized parameter values used to train the stage-II network model are (i) number of layers: 101, (ii) batch size: 32, (iii) number of epochs: 25, (iv) optimizer: gradient descent, (v) loss function: categorical cross entropy and (vi) activation function of the last (classification) layer: softmax. The learning rate is also optimized to reduce the computational time. The plot in Fig. 7(a) shows the variation of loss with learning rate during training of the stage-II network. The loss decreases steeply from 1e-4 to 5e-1. Therefore, the learning rate chosen here is 1e-4, as it causes the loss between the predicted and actual values to decrease at a fast rate. Further, the plot in Fig. 7(b) shows the variation of training and validation loss with the number of batches processed. The batch size, stated here as 16, is the number of samples processed before the model is updated. In one epoch, the entire dataset is passed forward and backward through the network exactly once, so the number of batches processed indicates the number of epochs completed. The plot shows that the training loss decreases continuously to a value of 0.08 after approximately 110 batches and remains constant for further iterations. This is therefore the point at which training should be stopped: the validation error is at its minimum and no longer decreases. If training were continued to minimize the training loss further, overfitting could occur. Overfitting means that the model has memorized the training data itself instead of learning a decision boundary that generalizes to unseen data.
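The learning-rate choice described above can be illustrated with a small sketch. It assumes a learning-rate sweep in the spirit of [37], in which the loss is recorded at increasing learning rates and the rate at the start of the steepest descent is selected. The sweep values below are hypothetical and are not read from Fig. 7(a).

```python
import math


def steepest_descent_lr(lrs, losses):
    """Return the learning rate that starts the interval with the most
    negative slope of loss against log10(learning rate)."""
    best_lr, best_slope = None, 0.0
    for i in range(len(lrs) - 1):
        step = math.log10(lrs[i + 1]) - math.log10(lrs[i])
        slope = (losses[i + 1] - losses[i]) / step
        if slope < best_slope:
            best_lr, best_slope = lrs[i], slope
    return best_lr


# Hypothetical sweep: the loss falls fastest just after 1e-4 and diverges near 1e-1.
sweep_lrs = [1e-6, 1e-5, 1e-4, 1e-3, 1e-2, 1e-1]
sweep_losses = [1.10, 1.08, 1.00, 0.60, 0.45, 0.90]

chosen_lr = steepest_descent_lr(sweep_lrs, sweep_losses)
print(chosen_lr)
```

With these illustrative values the function selects 1e-4, the rate at which the loss starts to drop most quickly.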

Fig. 7.

(a) Plot of training loss with the learning rate for the stage-II ResNet101 network; (b) plot of loss with each epoch for both training and validation datasets.

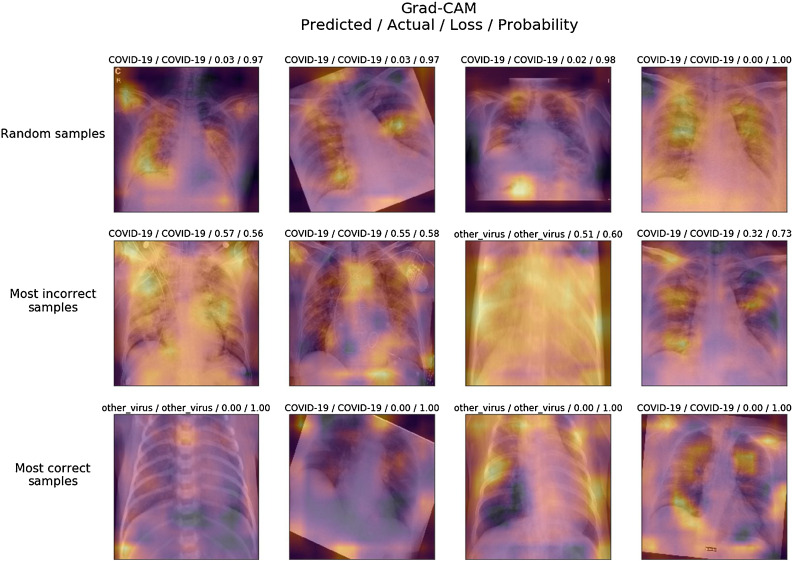
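The stopping criterion discussed for Fig. 7(b), namely halting once the validation loss no longer decreases, is commonly implemented as patience-based early stopping. The sketch below is one such rule under assumed settings; the `patience` value and the loss history are hypothetical and are not the values used in this study.

```python
def early_stopping_step(val_losses, patience=3, min_delta=0.0):
    """Return (best_step, best_loss) at which training would be stopped.

    Training halts after `patience` consecutive evaluations that fail to
    improve the best validation loss by more than `min_delta`.
    """
    best_loss = float("inf")
    best_step = -1
    waited = 0
    for step, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            best_loss, best_step, waited = loss, step, 0
        else:
            waited += 1
            if waited >= patience:
                break  # later evaluations are never run
    return best_step, best_loss


# Hypothetical validation-loss history that plateaus near 0.08.
history = [0.70, 0.42, 0.25, 0.12, 0.08, 0.08, 0.09, 0.10, 0.07]
best_step, best_loss = early_stopping_step(history, patience=3)
```

The weights saved at `best_step` would be the ones kept for testing, which guards against the overfitting that continued training may cause.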

The deep ResNet101 model reached 100% training accuracy. The validation dataset at stage-II consists of 100 X-ray images, of which 50 belong to patients with viral pneumonia and the remaining 50 to COVID-19 pneumonia cases. Fig. 8 shows the activation maps of validation samples in three categories: random, most incorrect and most correct samples. The discriminatory locations and features that indicate the presence of COVID-19 in X-ray images can be assessed by visualizing these activation maps. In most images of patients infected with viral pneumonia, the maps indicate a likely presence of disease in the air sacs of the lungs.

Fig. 8.

Activation maps of the predicted class by the stage-II network model. For example, if the model predicts the COVID-19 class for an image, the visualization shows the areas of the image that the model associates with the presence of COVID-19.
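The text does not state which visualization technique produces these maps. One common option for networks that end in global average pooling followed by a fully connected layer, as ResNet does, is the class activation map: a weighted sum of the last convolutional feature maps using the weights of the predicted class. The sketch below assumes that formulation and uses a toy 2 × 2 example with made-up values.

```python
def class_activation_map(feature_maps, class_weights):
    """Weighted sum of K feature maps (each H x W, nested lists) using the
    K classifier weights of one class, clipped at zero and scaled to [0, 1]."""
    height, width = len(feature_maps[0]), len(feature_maps[0][0])
    cam = [[0.0] * width for _ in range(height)]
    for fmap, weight in zip(feature_maps, class_weights):
        for y in range(height):
            for x in range(width):
                cam[y][x] += weight * fmap[y][x]
    # Keep only positive evidence for the class, then normalize by the peak.
    cam = [[max(value, 0.0) for value in row] for row in cam]
    peak = max(max(row) for row in cam)
    if peak > 0:
        cam = [[value / peak for value in row] for row in cam]
    return cam


# Toy example: two 2 x 2 feature maps and hypothetical class weights.
maps = [
    [[1.0, 0.0], [0.0, 2.0]],
    [[0.0, 1.0], [1.0, 0.0]],
]
weights = [0.5, -1.0]
heatmap = class_activation_map(maps, weights)
```

In practice the resulting low-resolution map (7 × 7 for the networks in Table 4) would be upsampled to the input size and overlaid on the X-ray image.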

The test dataset consists of 180 images, of which 70 chest X-ray images are from patients with COVID-19 and 110 are from patients with other viral pneumonia. No augmented image is included in the test dataset, so that the evaluation is not biased by a model over-fitted to the augmented class. The confusion matrix in Table 3 summarizes the results on the test dataset. The designed stage-II model predicts (i) COVID-19 pneumonia cases with 97.14% (68/70) recall and 97.14% (68/70) precision and (ii) other viral pneumonia cases with 98.18% (108/110) recall and 98.18% (108/110) precision. Out of 70 test images of COVID-19 pneumonia, 2 are misclassified as other viral pneumonia. Out of 110 test images of other viral pneumonia, 2 are misclassified as COVID-19 pneumonia. Thus, the designed stage-II model shows a sufficiently high overall classification accuracy of 97.78% on the test dataset.

Table 3.

Confusion matrix on the test results at stage-II of deep network classifier.

| Predicted class | Ground truth: COVID-19 | Ground truth: Other Viral Pneumonia | Overall classification | Precision |
|---|---|---|---|---|
| COVID-19 | 68 | 2 | 70 | 97.14% |
| Other Viral Pneumonia | 2 | 108 | 110 | 98.18% |
| Overall ground truth | 70 | 110 | 180 | |
| Recall | 97.14% | 98.18% | | |

5.3. Architecture of stage-I and stage-II network models

Table 4 illustrates the architectural details of the stage-I (ResNet50) and stage-II (ResNet101) residual networks. ResNet101 contains more layered blocks than ResNet50. Its greater depth therefore lets ResNet101 learn a larger number of parameters than ResNet50, making it easier to extract the latent-space features that differentiate COVID-19 from other types of virus-induced pneumonia. ResNet50 has over 23.2 million learnable parameters, and ResNet101 has correspondingly more; in terms of computation, ResNet101 requires about 7.6 billion floating-point operations (FLOPs) per forward pass [36]. The higher number of parameters allows the ResNet101 network to create a deep abstract representation of the input and obtain a good classification accuracy. At stage-II, the ResNet101 model has to separate COVID-19 cases from a single class that pools a large number of other virus-induced pneumonia cases, such as SARS, MERS and ARDS. Therefore, a deep 101-layer residual network is suitable to learn the large number of parameters required to generalize the classification of COVID-19 cases from other viral pneumonia cases. Even though the network is very deep, its complexity is still lower than that of the 16/19-layer VGG networks, which require 15.3/19.6 billion FLOPs [36], allowing the network to train faster with less computational cost.

Table 4.

Illustration of ResNet101 and ResNet50 network architectures.

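| Layer name | Output size | 101-layer | 50-layer |
|---|---|---|---|
| Conv1 | 112 × 112 | 7 × 7, 64, stride 2 | 7 × 7, 64, stride 2 |
| Conv2_x | 56 × 56 | 3 × 3 max pool, stride 2; residual block × 3 | 3 × 3 max pool, stride 2; residual block × 3 |
| Conv3_x | 28 × 28 | residual block × 4 | residual block × 4 |
| Conv4_x | 14 × 14 | residual block × 23 | residual block × 6 |
| Conv5_x | 7 × 7 | residual block × 3 | residual block × 3 |
| | 1 × 1 | Average pool, 3D Fully Connected Layer, Softmax | Average pool, 3D Fully Connected Layer, Softmax |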
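The nominal depths of the two networks can be checked from the number of residual blocks per stage. The sketch below assumes the standard bottleneck design of [36], in which every residual block holds three convolutions and the block counts for Conv2_x to Conv5_x are (3, 4, 6, 3) for ResNet50 and (3, 4, 23, 3) for ResNet101; the function name is illustrative.

```python
def resnet_depth(blocks_per_stage, convs_per_block=3):
    """Count weighted layers: the initial 7 x 7 convolution, the convolutions
    inside all residual blocks, and the final fully connected layer."""
    return 1 + convs_per_block * sum(blocks_per_stage) + 1


RESNET50_BLOCKS = (3, 4, 6, 3)     # Conv2_x .. Conv5_x, as specified in [36]
RESNET101_BLOCKS = (3, 4, 23, 3)

depth_50 = resnet_depth(RESNET50_BLOCKS)
depth_101 = resnet_depth(RESNET101_BLOCKS)
extra_layers = depth_101 - depth_50
```

All of the additional layers of ResNet101 sit in the Conv4_x stage: 17 more blocks of three convolutions each.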

5.4. Performance analysis of ResNet101 with other networks in detection of COVID-19 cases

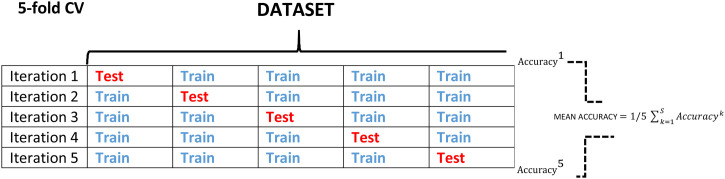

Table 5 compares the performance of the stage-II model, i.e., the ResNet101 network architecture, in the detection of COVID-19 cases with other CNN architectures, namely VGG16, DenseNet121 and ResNet18 [36,38,39]. A five-fold cross validation procedure is followed to evaluate the generalized performance of the model and to compare it fairly with the other network architectures. Fig. 9 depicts the five-fold cross validation procedure, in which one unique fold is chosen as the test set and the remaining folds form the training dataset. The model is fitted on the training dataset and evaluated on the test dataset, and the same procedure is repeated for each iteration. The performance parameters obtained for the five folds are averaged to give the resulting evaluation performance of the model. The five-fold cross validation is performed with a dataset of 750 images, divided into five folds of 150 images, each fold containing 75 images of COVID-19 and 75 images of other viral pneumonias. The weights of the initial layers, which are pre-trained on the ImageNet database, are kept frozen for all the architectures, and the last convolutional layers are the same as mentioned earlier. For a fair comparison, the training parameters for all the networks are: learning rate: 0.001, batch size: 32, number of epochs: 15 and weight decay: 0.3. Table 5 shows the results of 5-fold cross validation in terms of (i) the confusion matrix, expressed by True Positive (TP), True Negative (TN), False Positive (FP) and False Negative (FN), and (ii) performance measures, namely accuracy, recall, specificity, precision and F1-score. Comparing the results of the different models in Table 5 shows that the deeper networks achieve a higher performance than the shallower networks. The higher values of quantitative parameters such as accuracy, recall, specificity and F1-score demonstrate the superiority of the ResNet101 architecture over the other architectures. ResNet101 has the best performance, achieving an averaged accuracy/sensitivity/specificity/precision/F1-score of 98.93/98.93/98.66/96.39/98.15 over 5 folds on the same dataset.
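The fold construction described above, five folds of 150 images with 75 images per class, corresponds to a stratified split. The sketch below shows one way to build such folds from class labels alone; the label names and the random seed are assumptions for illustration, not details of the original experiment.

```python
import random


def stratified_folds(labels, n_folds=5, seed=0):
    """Return n_folds lists of sample indices with each class spread evenly."""
    rng = random.Random(seed)
    by_class = {}
    for index, label in enumerate(labels):
        by_class.setdefault(label, []).append(index)
    folds = [[] for _ in range(n_folds)]
    for indices in by_class.values():
        rng.shuffle(indices)
        # Deal the shuffled indices of this class round-robin over the folds.
        for position, index in enumerate(indices):
            folds[position % n_folds].append(index)
    return folds


# 750 images: 375 COVID-19 and 375 other viral pneumonia (hypothetical label names).
labels = ["covid"] * 375 + ["other_viral"] * 375
folds = stratified_folds(labels)

splits = []
for test_fold in range(len(folds)):
    test_idx = folds[test_fold]
    train_idx = [i for k, fold in enumerate(folds) if k != test_fold for i in fold]
    splits.append((train_idx, test_idx))
```

Each of the five splits trains on 600 images and tests on the remaining 150, and every image is used for testing exactly once.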

Table 5.

The table compares the 5-fold cross validation performances of various classifiers in the detection of Covid-19.

| Model | Fold | TP | TN | FP | FN | Accuracy (%) | Recall (%) | Specificity (%) | Precision (%) | F1-score (%) |
|---|---|---|---|---|---|---|---|---|---|---|
| ResNet18 | Fold-1 | 75 | 75 | 0 | 0 | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 |
| | Fold-2 | 75 | 73 | 2 | 0 | 98.67 | 100.00 | 97.30 | 97.40 | 100.00 |
| | Fold-3 | 75 | 68 | 7 | 0 | 95.33 | 100.00 | 90.60 | 91.46 | 95.54 |
| | Fold-4 | 73 | 73 | 2 | 2 | 97.33 | 97.33 | 97.30 | 97.33 | 97.33 |
| | Fold-5 | 71 | 72 | 3 | 4 | 95.33 | 94.67 | 96.00 | 95.95 | 95.30 |
| | Mean | | | | | 97.33 | 98.40 | 96.20 | 96.42 | 97.63 |
| VGG16 | Fold-1 | 72 | 74 | 1 | 3 | 97.33 | 96.00 | 98.60 | 98.63 | 97.30 |
| | Fold-2 | 72 | 74 | 1 | 3 | 97.33 | 96.00 | 98.60 | 98.63 | 97.30 |
| | Fold-3 | 74 | 72 | 3 | 1 | 97.33 | 98.67 | 96.00 | 96.10 | 97.37 |
| | Fold-4 | 74 | 73 | 2 | 1 | 98.00 | 98.67 | 97.30 | 97.37 | 98.01 |
| | Fold-5 | 65 | 75 | 0 | 10 | 93.33 | 86.67 | 100.00 | 100.00 | 92.86 |
| | Mean | | | | | 96.66 | 95.20 | 98.10 | 98.14 | 96.56 |
| DenseNet121 | Fold-1 | 75 | 70 | 5 | 0 | 96.67 | 100.00 | 93.33 | 93.75 | 96.77 |
| | Fold-2 | 75 | 73 | 2 | 0 | 98.67 | 100.00 | 97.33 | 97.40 | 98.68 |
| | Fold-3 | 75 | 72 | 3 | 0 | 98.00 | 100.00 | 96.00 | 96.15 | 98.04 |
| | Fold-4 | 73 | 73 | 2 | 2 | 97.33 | 97.33 | 97.30 | 97.33 | 97.33 |
| | Fold-5 | 73 | 73 | 2 | 2 | 97.33 | 97.33 | 97.30 | 97.33 | 97.33 |
| | Mean | | | | | 97.60 | 98.93 | 96.20 | 96.39 | 97.63 |
| ResNet101 | Fold-1 | 75 | 75 | 0 | 0 | 100.00 | 100.00 | 100.00 | 100.00 | 96.77 |
| | Fold-2 | 75 | 71 | 4 | 0 | 97.33 | 100.00 | 94.67 | 94.94 | 97.40 |
| | Fold-3 | 75 | 74 | 1 | 0 | 100.00 | 100.00 | 98.67 | 98.68 | 99.34 |
| | Fold-4 | 71 | 75 | 0 | 4 | 97.33 | 94.67 | 100.00 | 100.00 | 97.26 |
| | Fold-5 | 75 | 75 | 0 | 0 | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 |
| | Mean | | | | | 98.93 | 98.93 | 98.66 | 96.39 | 98.15 |
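Each performance measure in Table 5 is a function of the four confusion-matrix counts of a fold. The sketch below uses the standard definitions, which are assumed to be the ones applied in the table, and recomputes one row as an example; it assumes non-zero denominators, which holds for every fold listed.

```python
def binary_metrics(tp, tn, fp, fn):
    """Return accuracy, recall, specificity, precision and F1-score in percent."""
    values = {
        "accuracy": (tp + tn) / (tp + tn + fp + fn),
        "recall": tp / (tp + fn),          # sensitivity, true positive rate
        "specificity": tn / (tn + fp),     # true negative rate
        "precision": tp / (tp + fp),
        "f1": 2 * tp / (2 * tp + fp + fn), # harmonic mean of precision and recall
    }
    return {name: round(100 * value, 2) for name, value in values.items()}


# ResNet101, Fold-2 of Table 5: TP = 75, TN = 71, FP = 4, FN = 0.
fold2 = binary_metrics(tp=75, tn=71, fp=4, fn=0)
print(fold2)
```

Applying the same function to the counts of any other row gives a quick cross-check of the printed per-fold values, and averaging the five results per model gives the mean row.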

Fig. 9.

Depiction of 5-fold cross validation for performance analysis.

5.5. Covid-19 detection in the presence of other pulmonary diseases

This experiment aims to (i) detect COVID-19 cases in the presence of pulmonary diseases such as Atelectasis, Cardiomegaly, Consolidation, Edema and Effusion and (ii) identify a suitable model for this classification task. Therefore, different deep learning models are trained and their performances are compared in classifying Atelectasis, Cardiomegaly, Consolidation, Edema, Effusion and COVID-19 cases.

The open source database called CheXpert [40], which consists of 224,316 frontal view chest X-ray images of 14 disease classes acquired from 65,240 unique subjects, is used to perform this experiment. Five classes of pulmonary diseases, viz., Edema, Effusion, Atelectasis, Cardiomegaly, Consolidation have been chosen for this experiment with only frontal images. Original images obtained from this database of these five classes are resized to 320 × 320 for further preprocessing according to the ImageNet database. Preprocessing has been done as stated earlier in section 4.1.

Different models, namely ResNet18, ResNet101, DenseNet121 and VGG-16, are trained as binary classifiers to detect the presence of each disease, since a person may have more than one disease at a time. The network models and the learning rate assessment procedure are the same as in the previous experiments. Because of the huge class imbalance in the dataset, the area under the ROC curve (AUROC) becomes imperative in determining the performance of the models. Table 6 compares the AUROC of the ResNet18, ResNet101, DenseNet121 and VGG-16 models in each binary classification task, i.e., one disease versus the remaining diseases. The detection of COVID-19 among other pulmonary diseases on X-ray images is highly promising with all the deep network models. Moreover, the predictions of this experiment can be pooled together to identify the types of diseases present, allowing for more than one disease at a time. After this preliminary work, it can be said that computer-aided solutions based on deep network architectures may help in the detection of COVID-19 cases, and that they can be used for parallel testing of patients who visit a hospital or clinic with other pulmonary diseases, to avoid the spread of infection.

Table 6.

Performance comparison of different deep network models to detect a specific pulmonary disease in presence of rest others using area under the receiver operating characteristic curve as a performance measure.

| Network | Edema | Effusion | Atelectasis | Cardiomegaly | Consolidation | Covid-19 |
|---|---|---|---|---|---|---|
| VGG-16 | 0.809 | 0.883 | 0.809 | 0.766 | 0.892 | 0.998 |
| ResNet-18 | 0.868 | 0.899 | 0.816 | 0.797 | 0.905 | 0.999 |
| ResNet-101 | 0.889 | 0.907 | 0.813 | 0.810 | 0.905 | 0.982 |
| DenseNet-121 | 0.883 | 0.908 | 0.809 | 0.794 | 0.895 | 0.999 |
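AUROC is preferred here because it does not depend on a decision threshold or on the class ratio: it equals the probability that a randomly chosen positive image receives a higher score than a randomly chosen negative one. The sketch below computes it from that pairwise definition for one hypothetical one-versus-rest task; the labels and scores are made up for illustration.

```python
def auroc(labels, scores):
    """Fraction of (positive, negative) pairs ranked correctly; ties count half."""
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    if not positives or not negatives:
        raise ValueError("AUROC needs at least one positive and one negative sample")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positives) * len(negatives))


# Hypothetical imbalanced task: 2 positives (target disease) against 6 negatives (rest).
y_true = [1, 0, 0, 0, 1, 0, 0, 0]
y_score = [0.90, 0.20, 0.40, 0.10, 0.35, 0.30, 0.05, 0.60]

area = auroc(y_true, y_score)
always_negative_accuracy = y_true.count(0) / len(y_true)
```

In this toy case a classifier that always answers "negative" already reaches 75% accuracy, whereas the AUROC of about 0.83 reflects how well the scores actually separate the two groups.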

5.6. Limitations

The proposed work has certain limitations. First, the network design could be improved to increase the sensitivity, or true positive rate, of COVID-19 detection. For example, in the current workflow, if the stage-I model misclassifies a viral pneumonia image as healthy or as bacterial pneumonia, the subsequent detection of COVID-19 or other viruses fails completely. Therefore, in the current design the accurate detection of COVID-19 relies heavily on the stage-I model. Second, the limited number of COVID-19 images makes it difficult to train deep learning models from scratch, which is overcome in the current study by using deep transfer learning. The present work is carried out with images from two databases, i.e., Cohen [28] and Kaggle [29]. The work can be extended with a greater number of COVID-19 images from other databases. Last, the pipeline for COVID-19 detection could also be extended to detect other viruses such as MERS, SARS, AIDS and H1N1.
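The dependence on stage-I can be made concrete with a rough estimate. The sketch below assumes that COVID-19 images pass stage-I at the same rate as the viral pneumonia test images in Table 2, and that the two stages err independently. Both assumptions are illustrative and were not evaluated in this study.

```python
def cascade_recall(stage1_recall, stage2_recall):
    """Recall of a two-stage cascade when a case must pass both stages."""
    return stage1_recall * stage2_recall


stage1_viral_recall = 92 / 100   # viral pneumonia recall at stage-I (Table 2)
stage2_covid_recall = 68 / 70    # COVID-19 recall at stage-II (Table 3)

end_to_end = cascade_recall(stage1_viral_recall, stage2_covid_recall)
print(f"estimated end-to-end COVID-19 recall: {100 * end_to_end:.2f}%")
```

Under these assumptions the end-to-end sensitivity would be roughly 89%, lower than the 97.14% measured for stage-II alone, which is why improving stage-I directly benefits COVID-19 detection.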

6. Conclusion

In this study, a promising two-stage strategy is proposed to detect COVID-19 cases and differentiate them from bacterial pneumonia, viral pneumonia and healthy people on chest X-ray images using deep residual learning networks. The first-stage model performs well, with an accuracy of 93.01% in differentiating virus-induced pneumonia, bacteria-induced pneumonia and normal/healthy people. The virus-induced pneumonia X-ray images are then analyzed further to detect the presence of COVID-19. The second-stage model, which detects the presence of COVID-19, shows an exceptional performance with an accuracy of 97.78%. The model is reliable, accurate and fast, and has low computational requirements for detecting pneumonia caused by the COVID-19 virus among virus-induced pneumonia cases, so that appropriate treatment can be given. In the present scenario, parallel testing can be used to avoid the spread of infection to frontline workers and to generate a primary diagnosis of whether or not a patient is affected by COVID-19. Therefore, the proposed method, when it impacts patient management, can be used as an alternative diagnostic tool with potential candidature in the detection of COVID-19 cases. Finally, the present work suggests that it may be possible to detect COVID-19 using deep learning models, since all current studies show good results. The high accuracies obtained by several methods suggest that the deep learning models find some feature in the images that enables the networks to distinguish them correctly. Research into whether the results of deep learning methods constitute a reliable diagnosis is left for the future.

Conflict of interest

None declared

References

- 1. Zhang W. Imaging changes of severe COVID-19 pneumonia in advanced stage. Intensive Care Med. 2020;46(5):841–843. doi: 10.1007/s00134-020-05990-y.

- 2. Xu Y., Li X., Zhu B., Liang H., Fang C., Gong Y. Characteristics of pediatric SARS-CoV-2 infection and potential evidence for persistent fecal viral shedding. Nat Med. 2020;26(4):502–505. doi: 10.1038/s41591-020-0817-4.

- 3. World Health Organization. “WHO Director-General’s opening remarks at the media briefing on COVID-19-11 March 2020” https://www.who.int/dg/speeches/detail/who-director-general-s-opening-remarks-at-the-media-briefing-on-covid-19---11-march-2020. Accessed 11th March, 2020.

- 4. World Health Organization. “Coronavirus disease (COVID-2019) situation reports” https://www.who.int/emergencies/diseases/novel-coronavirus-2019/situation-reports/. Accessed 10th April, 2020.

- 5. Walker P.G.T. The global impact of Covid-19 and strategies for mitigation and suppression. Imperial College London; 2020. https://www.imperial.ac.uk/mrc-global-infectious-disease-analysis/covid-19/report-12-global-impact-covid-19/. Accessed 26th March, 2020.

- 6. World Health Organization. “Coronavirus overview, prevention and symptoms” https://www.who.int/health-topics/coronavirus#tab=tab_1. Accessed 12th April.

- 7. Thermo Fisher Scientific. “An introduction to electron microscopy” https://www.fei.com/introduction-to-electron-microscopy/sem/. Accessed 15th April, 2020.

- 8. Jacinta Bowler. “This is what the Covid-19 virus looks like under electron microscope” https://www.sciencealert.com/this-is-what-the-covid-19-virus-looks-like-under-electron-microscopes. Accessed 14th February, 2020.

- 9. Corman V.M., Landt O., Kaiser M., Molenkamp R., Meijer A., Chu D.K. Detection of 2019 novel coronavirus (2019-nCoV) by real-time RT-PCR. Euro Surveill. 2020;25(3):2000045. doi: 10.2807/1560-7917.

- 10. Tang A., Tong Z.D., Wang H.L., Dai Y.X., Li K.F., Liu J.N. Detection of novel coronavirus by RT-PCR in stool specimen from asymptomatic child, China. Emerging Infect Dis. 2020;26(6):1337–1339. doi: 10.3201/eid2606.200301.

- 11. Annarumma M., Withey S.J., Bakewell R.J., Pesce E., Goh V., Montana G. Automated triaging of adult chest radiographs with deep artificial neural networks. Radiology. 2019;291(1):196–202. doi: 10.1148/radiol.2018180921.

- 12. Mazurowski M.A., Buda M., Saha A., Bashir M.R. Deep learning in radiology: an overview of the concepts and a survey of the state of the art with focus on MRI. J Magn Reson Imaging. 2019;49(4):939–954. doi: 10.1002/jmri.26534.

- 13. Gracia M.M. “Imaging the coronavirus disease COVID-19” https://healthcare-in-europe.com/en/news/imaging-the-coronavirus-disease-covid-19.html. Accessed 13th April.

- 14. Apostolopoulos I.D., Bessiana T. Covid-19: automatic detection from X-ray images utilizing transfer learning with convolutional neural networks. Physical and Engineering Sciences in Medicine. 2020;43(635):40. doi: 10.1007/s13246-020-00865-4.

- 15. Ozturk T., Talo M., Yildirim E.A., Baloglu U.B., Yildirim O., Rajendra Acharya U. Automated detection of COVID-19 cases using deep neural networks with X-ray images. Comput Biol Med. 2020;121. doi: 10.1016/j.compbiomed.2020.103792.

- 16. Sethy P.K., Behera S.K., Ratha P.K., Biswas P. Detection of coronavirus disease (COVID-19) based on deep features and support vector machine. International Journal of Mathematical, Engineering and Management Sciences. 2020;5(4):643–651. doi: 10.33889/IJMEMS.2020.5.4.052.

- 17. Yoo S.H., Geng H., Chiu T.L., Yu S.K., Cho D.C., Heo J. Deep learning-based decision-tree classifier for COVID-19 diagnosis from chest X-ray imaging. Front Med (Lausanne). 2020;7(427):1–8. doi: 10.3389/fmed.2020.00427.

- 18. Panwar H., Gupta P.K., Siddiqui M.K., Morales-Menendez R., Singh V. Application of deep learning for fast detection of COVID-19 in X-Rays using nCOVnet. Chaos Solitons Fractals. 2020;138. doi: 10.1016/j.chaos.2020.109944.

- 19. Albahli S. A deep neural network to distinguish covid-19 from other chest diseases using X-ray images. Curr Med Imaging Rev. 2020;16:1–11. doi: 10.2174/1573405616666200604163954.

- 20. Civit-Masot J., Luna-Perejón F., Morales M.D., Civit A. Deep learning system for COVID-19 diagnosis aid using X-ray pulmonary images. Applied Science. 2020;10(13):4640. doi: 10.3390/app10134640.

- 21. Wang X., Deng X., Fu Q., Zhou Q., Feng J., Ma H. A weakly-supervised framework for COVID-19 classification and lesion localization from chest CT. IEEE Trans Med Imaging. 2020;39(8):2615–2625. doi: 10.1109/TMI.2020.2995965.

- 22. Singh D., Kumar V., Vaishali, Kaur M. Classification of COVID-19 patients from chest CT images using multi-objective differential evolution–based convolutional neural networks. Eur J Clin Microbiol Infect Dis. 2020;39:1379–1389. doi: 10.1007/s10096-020-03901-z.

- 23. Ahuja S., Panigrahi B.K., Dey N., Rajinikanth V., Gandhi T.K. Deep transfer learning-based automated detection of COVID-19 from lung CT scan slices. Appl Intell. 2020. doi: 10.1007/s10489-020-01826-w.

- 24. Abiyev R.H., Ma’aitah M.K.S. Deep convolutional neural networks for chest diseases detection. J Healthc Eng. 2018;2018. 11 pages. doi: 10.1155/2018/4168538.

- 25. Tariq Z., Shah S.K., Lee Y. Lung disease classification using deep convolutional neural network. In: 2019 IEEE international conference on bioinformatics and biomedicine (BIBM); 2019. pp. 732–735.

- 26. Bharati S., Podder P., Mondal M.R.H. Hybrid deep learning for detecting lung diseases from X-ray images. Inform Med Unlocked. 2020;20. doi: 10.1016/j.imu.2020.100391.

- 27. Apostolopoulos I.D., Aznaouridis S.I., Tzani M.A. Extracting possibly representative COVID-19 biomarkers from X-ray images with deep learning approach and image data related to pulmonary diseases. J Med Biol Eng. 2020;40:462–469. doi: 10.1007/s40846-020-00529-4.

- 28. Cohen J.P., Morrison P., Dao L. COVID-19 image data collection. 2020. https://github.com/ieee8023/covid-chestxray-dataset

- 29. Mooney P. Chest x-ray images (pneumonia). 2018. Online, https://www.kaggle.com/paultimothymooney/chest-xray-pneumonia

- 30. Deng J., Dong W., Socher R., Li L., Li Kai, Fei-Fei Li. ImageNet: a large-scale hierarchical image database. In: 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL; 2009. pp. 248–255.

- 31. Russakovsky O., Deng J., Su H., Krause J., Satheesh S., Ma S. Imagenet large scale visual recognition challenge. Int J Comput Vis. 2015;115(3):211–252.

- 32. Goodfellow I., Bengio Y., Courville A. Deep learning. MIT Press; 2016.

- 33. Zagoruyko S., Komodakis N. Wide residual networks. In: Wilson Richard C., Hancock Edwin R., Smith William A.P., editors. Proceedings of the British Machine Vision Conference (BMVC). BMVA Press; 2016. pp. 87.1–87.12.

- 34. Dhankhar P. ResNet-50 and VGG-16 for recognizing facial emotions. International Journal of Innovations in Engineering and Technology (IJIET). 2019;13(4):126–130.

- 35. Vatathanavaro S., Tungjitnob S., Pasupa K. White blood cell classification: a comparison between VGG-16 and ResNet-50 models. In: Proceedings of the 6th joint symposium on computational intelligence (JSCI6), 12 December 2018, Bangkok, Thailand; 2018. pp. 4–5.

- 36. He K., Zhang X., Ren S., Sun J. Deep residual learning for image recognition. In: Proceedings of the IEEE conference on computer vision and pattern recognition; 2016. pp. 770–778.

- 37. Smith L.N. Cyclical learning rates for training neural networks. In: 2017 IEEE Winter Conference on Applications of Computer Vision (WACV); 2017. pp. 464–472.

- 38. Simonyan K., Zisserman A. Very deep convolutional networks for large-scale image recognition. Computational and Biological Learning Society. 2015:1–14.

- 39. Huang G., Liu Z., Van Der Maaten L., Weinberger K.Q. Densely connected convolutional networks. In: Proceedings of the IEEE conference on computer vision and pattern recognition; 2017. pp. 2261–2269.

- 40. Irvin J., Rajpurkar P., Ko M., Yu Y., Ciurea-Ilcus S., Chute C. Chexpert: A large chest radiograph dataset with uncertainty labels and expert comparison. Proc Conf AAAI Artif Intell. 2019;33:590–597.