Abstract

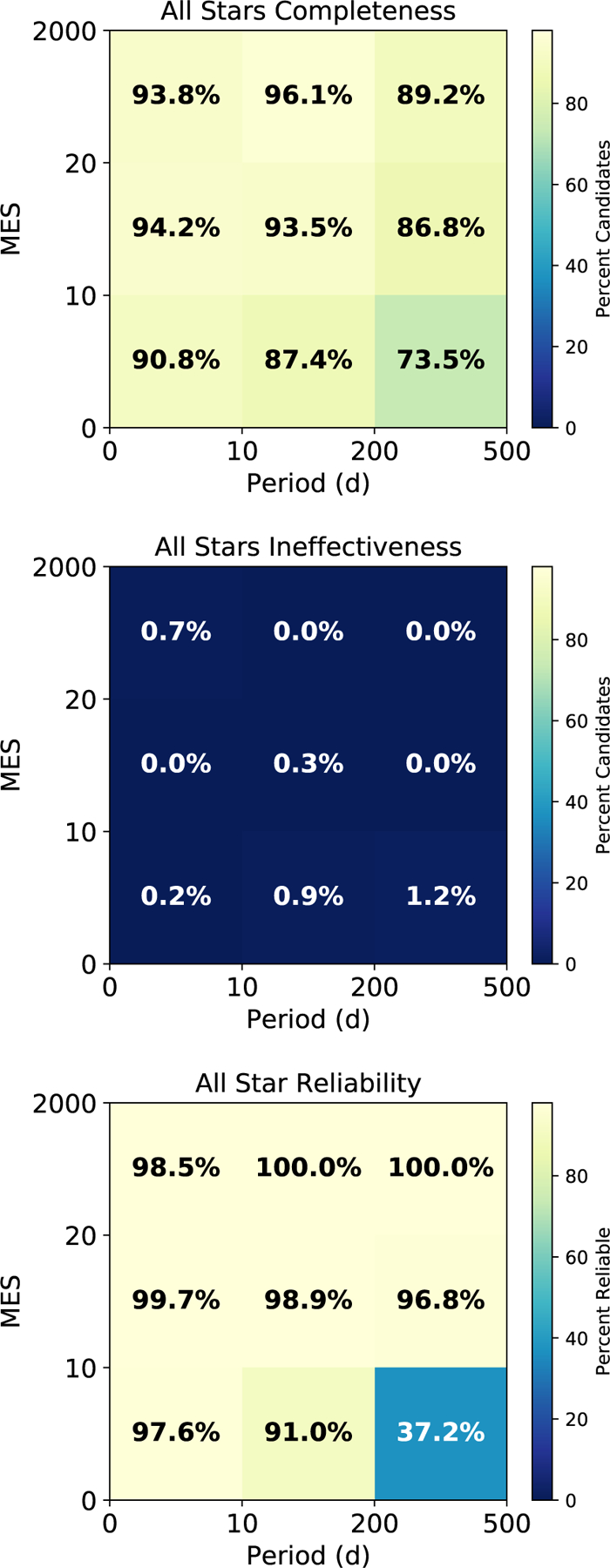

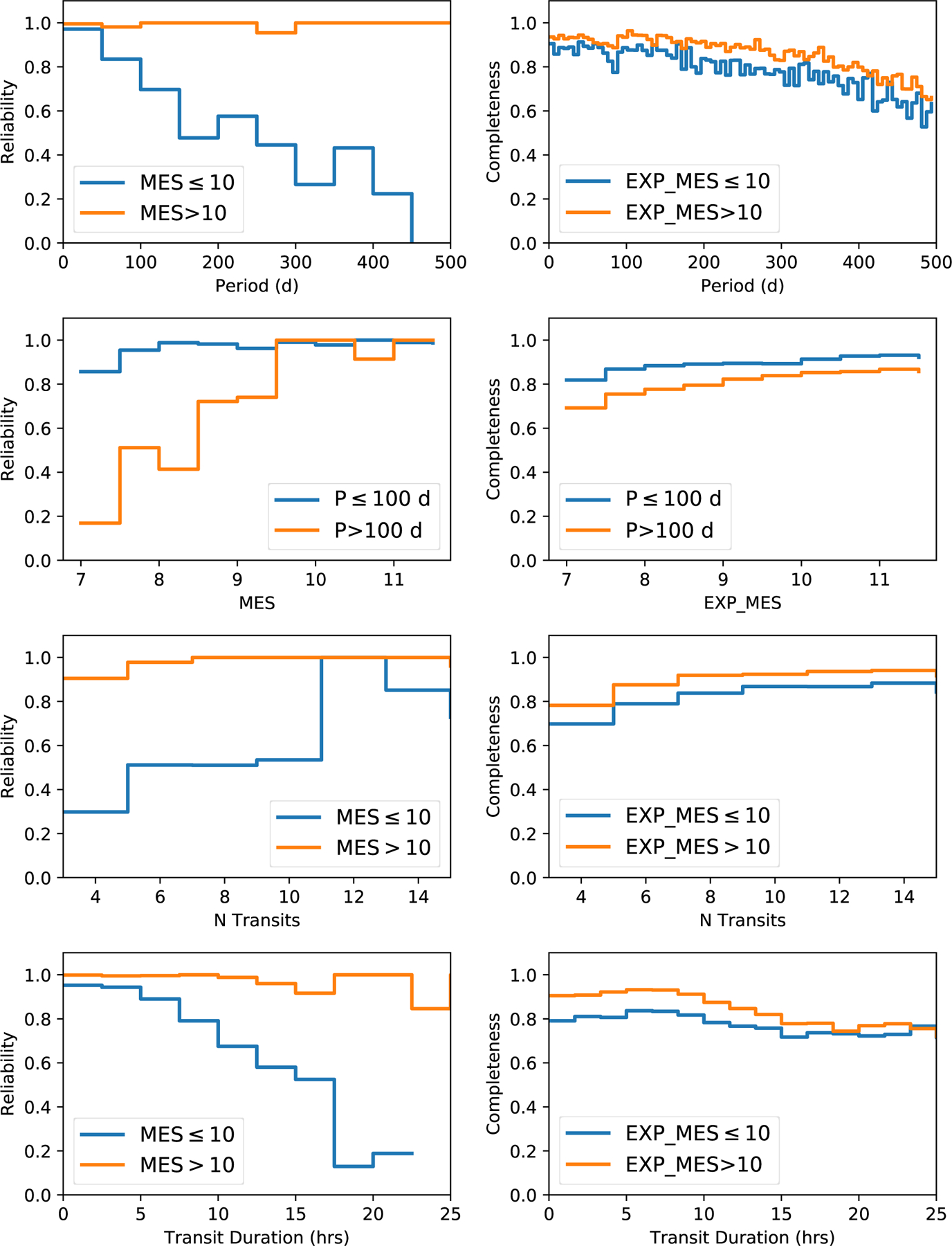

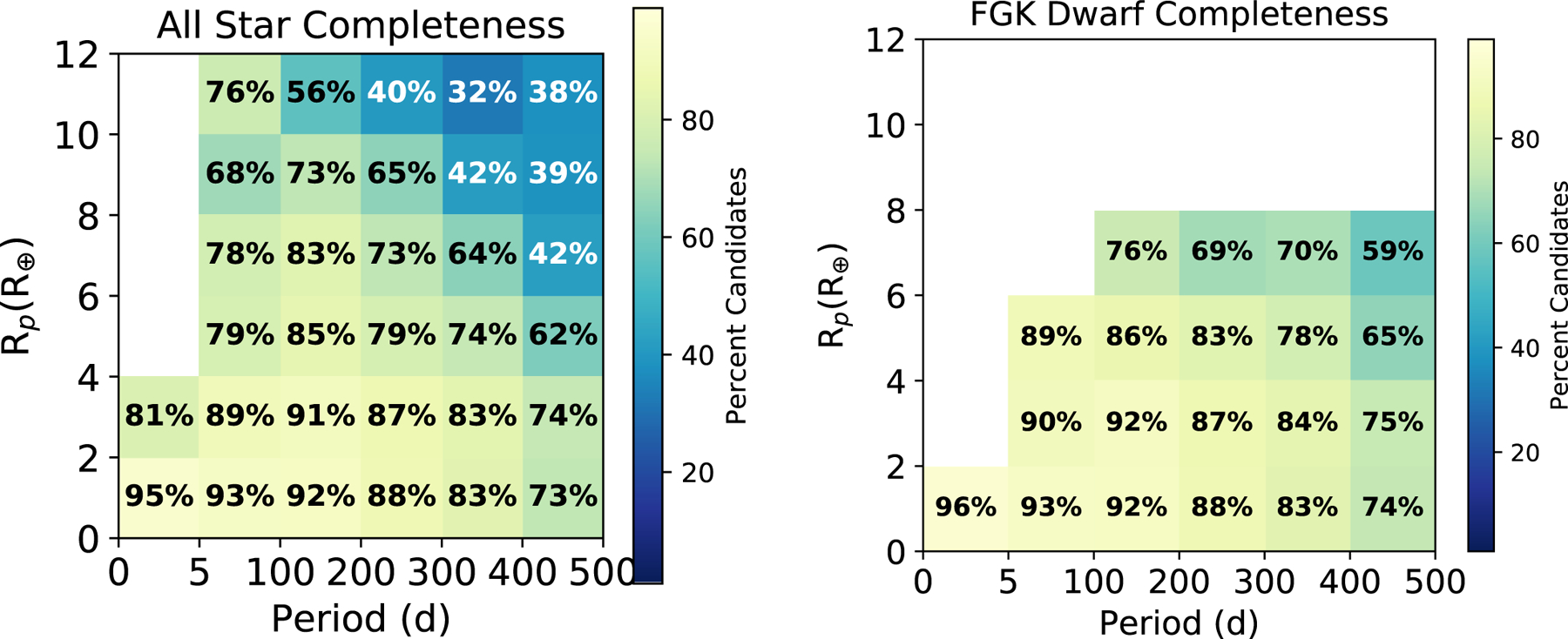

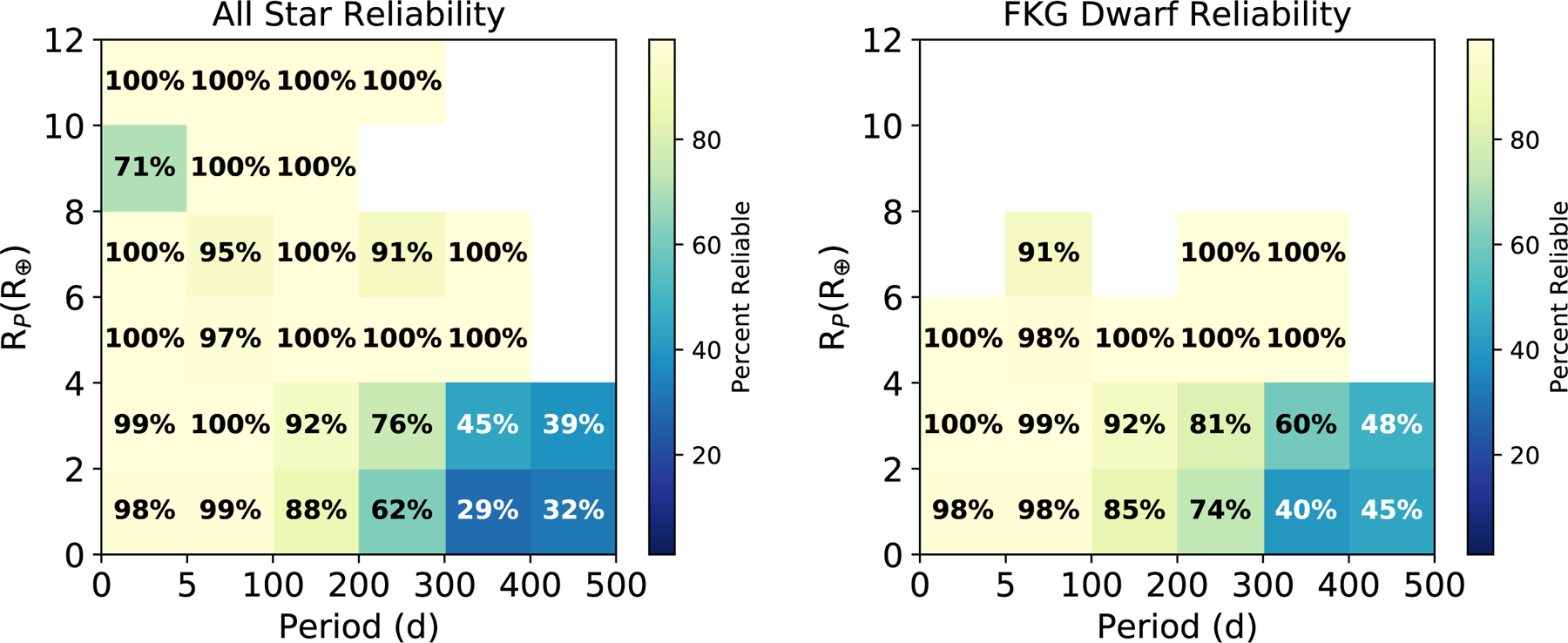

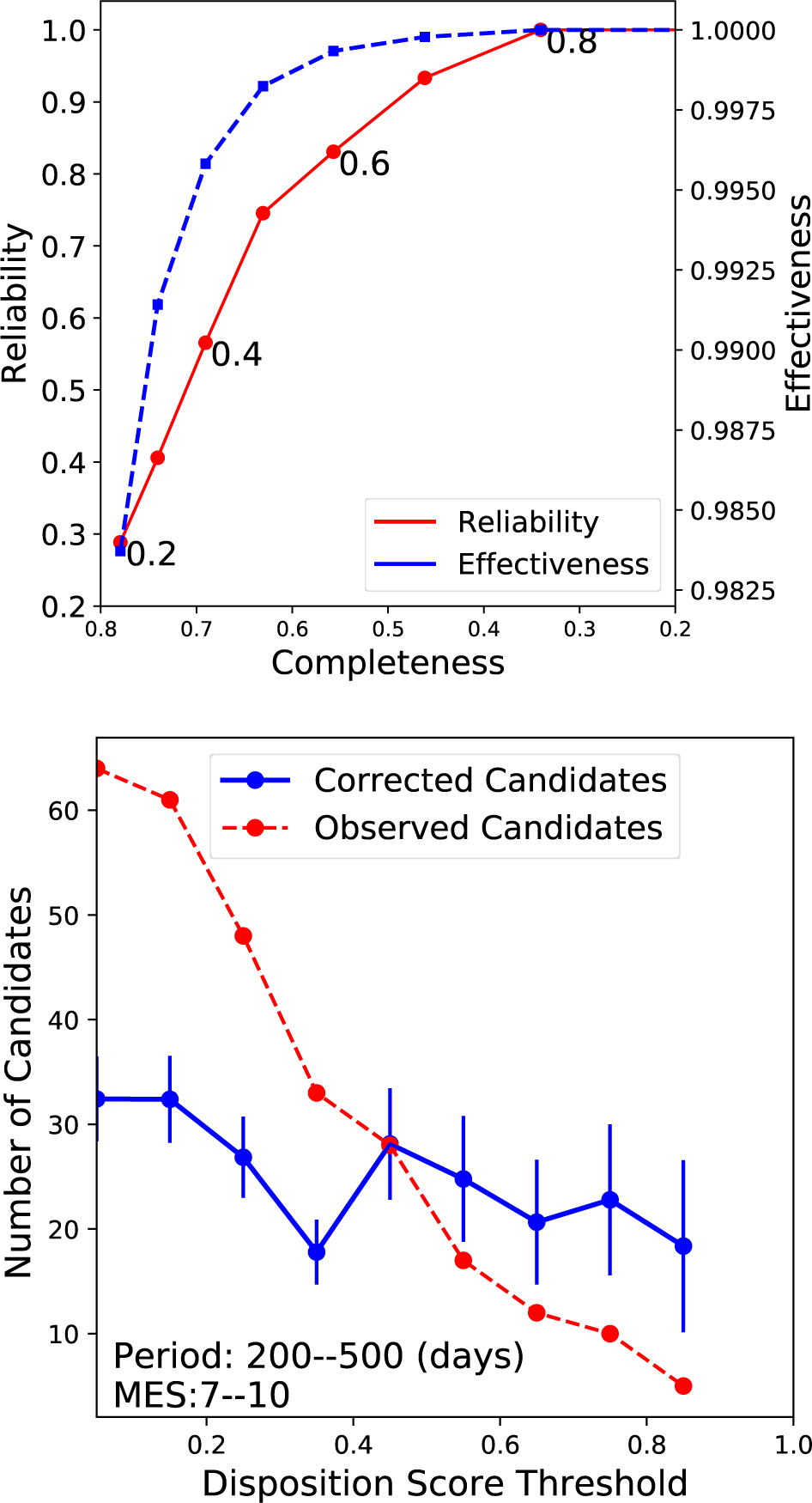

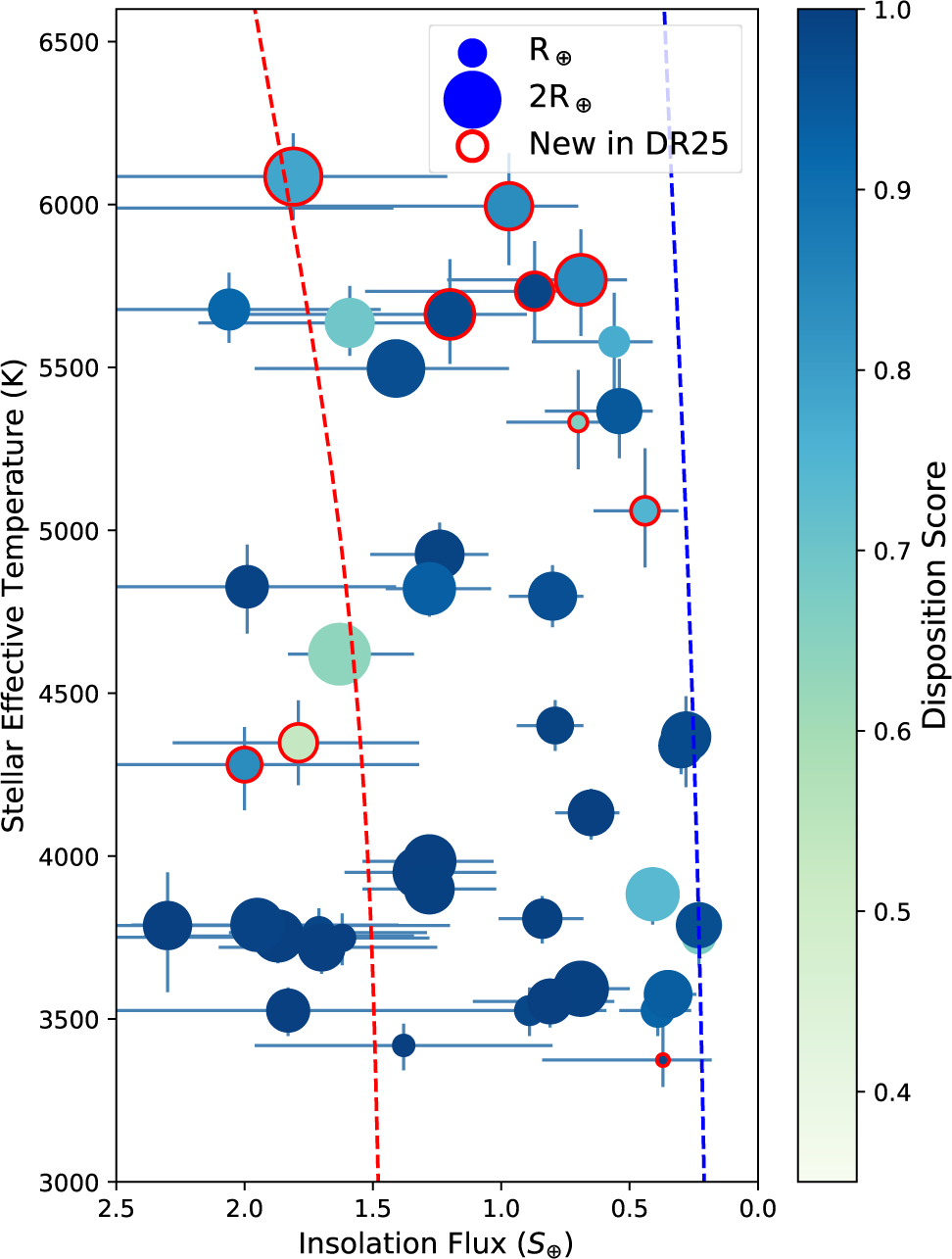

We present the Kepler Object of Interest (KOI) catalog of transiting exoplanets based on searching four years of Kepler time series photometry (Data Release 25, Q1–Q17). The catalog contains 8054 KOIs of which 4034 are planet candidates with periods between 0.25 and 632 days. Of these candidates, 219 are new in this catalog and include two new candidates in multi-planet systems (KOI-82.06 and KOI-2926.05), and ten new high-reliability, terrestrial-size, habitable zone candidates. This catalog was created using a tool called the Robovetter which automatically vets the DR25 Threshold Crossing Events (TCEs) found by the Kepler Pipeline (Twicken et al. 2016). Because of this automation, we were also able to vet simulated data sets and therefore measure how well the Robovetter separates those TCEs caused by noise from those caused by low signal-to-noise transits. Because of these measurements we fully expect that this catalog can be used to accurately calculate the frequency of planets out to Kepler’s detection limit, which includes temperate, super-Earth size planets around GK dwarf stars in our Galaxy. This paper discusses the Robovetter and the metrics it uses to decide which TCEs are called planet candidates in the DR25 KOI catalog. We also discuss the simulated transits, simulated systematic noise, and simulated astrophysical false positives created in order to characterize the properties of the final catalog. For orbital periods less than 100 d the Robovetter completeness (the fraction of simulated transits that are determined to be planet candidates) across all observed stars is greater than 85%. For the same period range, the catalog reliability (the fraction of candidates that are not due to instrumental or stellar noise) is greater than 98%. 
However, for low signal-to-noise candidates found between 200 and 500 days, our measurements indicate that the Robovetter is 73.5% complete and 37.2% reliable across all searched stars (or 76.7% complete and 50.5% reliable when considering just the FGK dwarf stars). We describe how the measured completeness and reliability vary with period, signal-to-noise, number of transits, and stellar type. Also, we discuss a value called the disposition score which provides an easy way to select a more reliable, albeit less complete, sample of candidates. The entire KOI catalog, the transit fits using Markov chain Monte Carlo methods, and all of the simulated data used to characterize this catalog are available at the NASA Exoplanet Archive.

Keywords: catalogs, planetary systems, planets and satellites: detection, stars: statistics, surveys, techniques: photometric

1. INTRODUCTION

Kepler’s mission to measure the frequency of Earth-size planets in the Galaxy is an important step towards understanding the Earth’s place in the Universe. Launched in 2009, the Kepler Mission (Koch et al. 2010; Borucki 2016) stared almost continuously at a single field for four years (or 17, ≈90 day quarters), recording the brightness of ≈200,000 stars (≈160,000 stars at a time) at a cadence of 29.4 minutes over the course of the mission. Kepler detected transiting planets by observing the periodic decrease in the observed brightness of a star when an orbiting planet crossed the line of sight from the telescope to the star. Kepler’s prime-mission observations concluded in 2013 when it lost a second of four reaction wheels, three of which were required to maintain the stable pointing. From the ashes of Kepler rose the K2 mission which continues to find exoplanets in addition to a whole host of astrophysics enabled by its observations of fields in the ecliptic (Howell et al. 2014; Van Cleve et al. 2016b). While not the first to obtain high-precision, long-baseline photometry to look for transiting exoplanets (see e.g., Barge et al. 2008; O’Donovan et al. 2006), Kepler and its plethora of planet candidates revolutionized exoplanet science. The large number of Kepler planet detections from the same telescope opened the door for occurrence rate studies and has enabled some of the first measurements of the frequency of planets similar to the Earth in our Galaxy. To further enable those types of studies, we present here the planet catalog that resulted from the final search of the Data Release 25 (DR25) Kepler mission data along with the tools provided to understand the biases inherent in the search and vetting done to create that catalog.

First, we put this work in context by reviewing some of the scientific achievements accomplished using Kepler data. Prior to Kepler, most exoplanets were discovered by radial velocity methods (e.g. Mayor & Queloz 1995), which largely resulted in the detection of Neptune- to Jupiter-mass planets in orbital periods of days to months. The high-precision photometry and the four-year baseline of the Kepler data extended the landscape of known exoplanets. To highlight a few examples, Barclay et al. (2013) found evidence for a moon-size terrestrial planet in a 13.3 day period orbit, Quintana et al. (2014) found evidence of an Earth-size exoplanet in the habitable zone of the M dwarf Kepler-186, and Jenkins et al. (2015) statistically validated a super-Earth in the habitable zone of a G-dwarf star. Additionally, for several massive planets Kepler data has enabled measurements of planetary mass and atmospheric properties by using the photometric variability along the entire orbit (Shporer et al. 2011; Mazeh et al. 2012; Shporer 2017). Kepler data has also revealed hundreds of compact, co-planar, multi-planet systems, e.g., the six planets around Kepler-11 (Lissauer et al. 2011a), which collectively have told us a great deal about the architecture of planetary systems (Lissauer et al. 2011b; Fabrycky et al. 2014). Exoplanets have even been found orbiting binary stars, e.g., Kepler-16 (AB) b (Doyle et al. 2011).

Other authors have taken advantage of the long time series, near-continuous data set of 206,150 stars to advance our understanding of stellar physics through the use of asteroseismology. Of particular interest to this catalog is the improvement in the determination of stellar radius (e.g., Huber et al. 2014; Mathur et al. 2017) which can be one of the most important sources of error when calculating planetary radii. Kepler data was also used to track the evolution of star-spots created from magnetic activity and thus enabled the measurement of stellar rotation rates (e.g. Aigrain et al. 2015; García et al. 2014; McQuillan et al. 2014; Zimmerman et al. 2017). Studying stars in clusters enabled Meibom et al. (2011) to map out the evolution of stellar rotation as stars age. Kepler also produced light curves of 2876 eclipsing binary stars (Prša et al. 2011; Kirk et al. 2016) including unusual binary systems, such as the eccentric, tidally-distorted, Heartbeat stars (Welsh et al. 2011; Thompson et al. 2012; Shporer et al. 2016) that have opened the doors to understanding the impact of tidal forces on stellar pulsations and evolution (e.g., Hambleton et al. 2017; Fuller et al. 2017).

The wealth of astrophysics, and the size of the Kepler community, is in part due to the rapid release of Kepler data to the NASA Archives: the Exoplanet Archive (Akeson et al. 2013) and the MAST (Mikulski Archives for Space Telescopes). The Kepler mission released data from every step of the processing (Thompson et al. 2016a; Stumpe et al. 2014; Bryson et al. 2010), including its planet searches. The results of both the original searches for periodic signals (known as the TCEs or Threshold Crossing Events) and the well-vetted KOIs (Kepler Objects of Interest) were made available for the community. The combined list of Kepler’s planet candidates found from all searches can be found in the cumulative KOI table. The KOI table we present here is from a single search of the DR25 light curves. While the search does not include new observations, it was performed using an improved version of the Kepler Pipeline (version 9.3, Jenkins 2017a). For a high-level summary of the changes to the Kepler Pipeline, see the DR25 data release notes (Thompson et al. 2016b; Van Cleve et al. 2016a). The Kepler Pipeline has undergone successive improvements since launch as the data characteristics have become better understood.

The photometric noise at time scales of the transit is what limits Kepler from finding small terrestrial-size planets. Investigations of the noise properties of Kepler exoplanet hosts by Howell et al. (2016) showed that exoplanets with radii ≤ 1.2 R⊕ are only found around the brightest, most photometrically quiet stars. As a result, the search for truly Earth-size planets is limited to a small subset of Kepler’s stellar sample. Analyses by Gilliland et al. (2011, 2015) show that the primary source of the observed noise was indeed inherent to the stars, with smaller contributions coming from imperfections in the instruments and software. Unfortunately, the typical noise level for 12th magnitude solar-type stars is closer to 30 ppm (Gilliland et al. 2015) than the 20 ppm expected prior to launch (Jenkins et al. 2002), causing Kepler to need a longer baseline to find a significant number of Earth-like planets around Sun-like stars. Ultimately, this higher noise level impacts Kepler’s planet yield. And, because different stars have different levels of noise, the transit depth to which the search is sensitive varies across the sample of stars. This bias must be accounted for when calculating occurrence rates, and is explored in-depth for this run of the Kepler Pipeline by the transit injection and recovery studies of Burke & Catanzarite (2017a,b) and Christiansen (2017).

To confirm the validity and further characterize identified planet candidates, the Kepler mission benefited from an active, funded, follow-up observing program. This program used ground-based radial velocity measurements to determine the mass of exoplanets (e.g., Marcy et al. 2014) when possible and also ruled out other astrophysical phenomena, like background eclipsing binaries, that can mimic a transit signal. The follow-up program obtained high-resolution imaging of ≈90% of known KOIs (e.g., Furlan et al. 2017) to identify close companions (bound or unbound) that would be included in Kepler’s rather large 3.98″ pixels. The extra light from these companions must be accounted for when determining the depth of the transit and the radius of the exoplanet. While the Kepler Pipeline accounts for the stray light from stars in the Kepler Input Catalog (Brown et al. 2011; and see flux fraction in §2.3.1.2 of the Kepler Archive Manual; Thompson et al. 2016a), the sources identified by these high-resolution imaging catalogs were not included. Based on the analysis by Ciardi et al. (2015), where they considered the effects of multiplicity, planet radii are underestimated by a factor averaging ≃1.5 for G dwarfs prior to vetting, or averaging ≃1.2 for KOIs that have been vetted with high-resolution imaging and Doppler spectroscopy. The effect of unrecognized dilution decreases for planets orbiting the K and M dwarfs, because they have a smaller range of possible stellar companions.
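The dilution effect discussed above follows from simple flux bookkeeping. As a minimal sketch (not the analysis of Ciardi et al. 2015, which also considers which star in the system hosts the planet), assume the planet transits the target star and an unresolved companion contributes a known fraction of extra light. The function name and its argument are hypothetical and chosen only for illustration.

```python
import math

def dilution_radius_factor(companion_flux_ratio: float) -> float:
    """Factor by which the true planet radius exceeds the radius inferred
    when an unresolved companion's light is ignored.

    companion_flux_ratio: F_companion / F_target in the observing bandpass
    (hypothetical input; assumes the planet transits the target star).
    """
    if companion_flux_ratio < 0:
        raise ValueError("flux ratio must be non-negative")
    # Observed depth = dF / (F_target + F_companion); true depth = dF / F_target.
    depth_factor = 1.0 + companion_flux_ratio
    # Transit depth scales as (Rp/Rs)^2, so the radius scales as sqrt(depth).
    return math.sqrt(depth_factor)

# An equal-brightness companion halves the observed depth,
# so the radius is underestimated by a factor of sqrt(2).
print(round(dilution_radius_factor(1.0), 3))
```

If the planet instead orbits the companion, the correction also depends on the ratio of the two stellar radii, which this sketch does not cover.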

Even with rigorous vetting and follow-up observations, most planet candidates in the KOI catalogs cannot be directly confirmed as planetary. The stars are too dim and the planets are too small to be able to measure a radial velocity signature for the planet. Statistical methods study the likelihood that the observed transit could be caused by other astrophysical scenarios and have succeeded in validating thousands of Kepler planets (e.g. Morton et al. 2016; Torres et al. 2015; Rowe et al. 2014; Lissauer et al. 2014).

The Q1–Q16 KOI catalog (Mullally et al. 2015) was the first with a long enough baseline to be significantly impacted by another source of false positives, the long-period false positives created by the instrument itself. In that catalog (and again in this one), the majority of long-period, low SNR TCEs are ascribed to instrumental effects incompletely removed from the data before the TCE search. Kepler has a variety of short timescale (on the order of a day or less), non-Gaussian noise sources including focus changes due to thermal variations, signals imprinted on the data by the detector electronics, noise caused by solar flares, and the pixel sensitivity changing after the impact of a high energy particle (known as a sudden pixel sensitivity drop-out, or SPSD). Because of the large number of TCEs associated with these types of errors, and because the catalog was generated to be intentionally inclusive (i.e. high completeness), many of the long-period candidates in the Q1–Q16 KOI catalog are expected to simply be noise. We were faced with a similar problem for the DR25 catalog and spent considerable effort writing software to identify these types of false positives, and for the first time we include an estimate for how often these signals contaminate the catalog.

The planet candidates found in Kepler data have been used extensively to understand the frequency of different types of planets in the Galaxy. Many studies have shown that small planets (< 4R⊕) in short period orbits are common, with occurrence rates steadily increasing with decreasing radii (Burke & Seader 2016; Howard et al. 2012; Petigura et al. 2013; Youdin 2011). Dressing & Charbonneau (2013, 2015), using their own search, confined their analysis to M dwarfs and orbital periods less than 50 d and determined that multi-planet systems are common around these low mass stars. Therefore planets are more common than stars in the Galaxy (due, in part, to the fact that low mass stars are the most common stellar type). Fulton et al. (2017), using improved measurements of the stellar properties (Petigura et al. 2017a), looked at small planets with periods of less than 100 d and showed that there is a valley in the occurrence of planets near 1.75R⊕. This result improved upon the results of Howard et al. (2012) and Lundkvist et al. (2016) and further verified the evaporation valley predicted by Owen & Wu (2013) and Lopez & Fortney (2013) for close-in planets.

Less is known about the occurrence of planets in longer period orbits. Using planet candidates discovered with Kepler, several papers have measured the frequency of small planets in the habitable zone of sun-like stars (see e.g. Burke et al. 2015; Foreman-Mackey et al. 2016; Petigura et al. 2013) using various methods. Burke et al. (2015) used the Q1–Q16 KOI catalog (Mullally et al. 2015) and looked at G and K stars and concluded that 10% (with an allowed range of 1–200%) of solar-type stars host planets with radii and orbital periods within 20% of that of the Earth. Burke et al. (2015) considered various systematic effects and showed that they dominate the uncertainties and concluded that improved measurements of the stellar properties, the detection efficiency of the search, and the reliability of the catalog will have the most impact in narrowing the uncertainties in such studies.

1.1. Design Philosophy of the DR25 catalog

The DR25 KOI catalog is designed to support rigorous occurrence rate studies. To do that well, it was critical that we not only identify the exoplanet transit signals in the data but also measure the catalog reliability (the fraction of transiting candidates that are not caused by noise), and the completeness of the catalog (the fraction of true transiting planets detected).

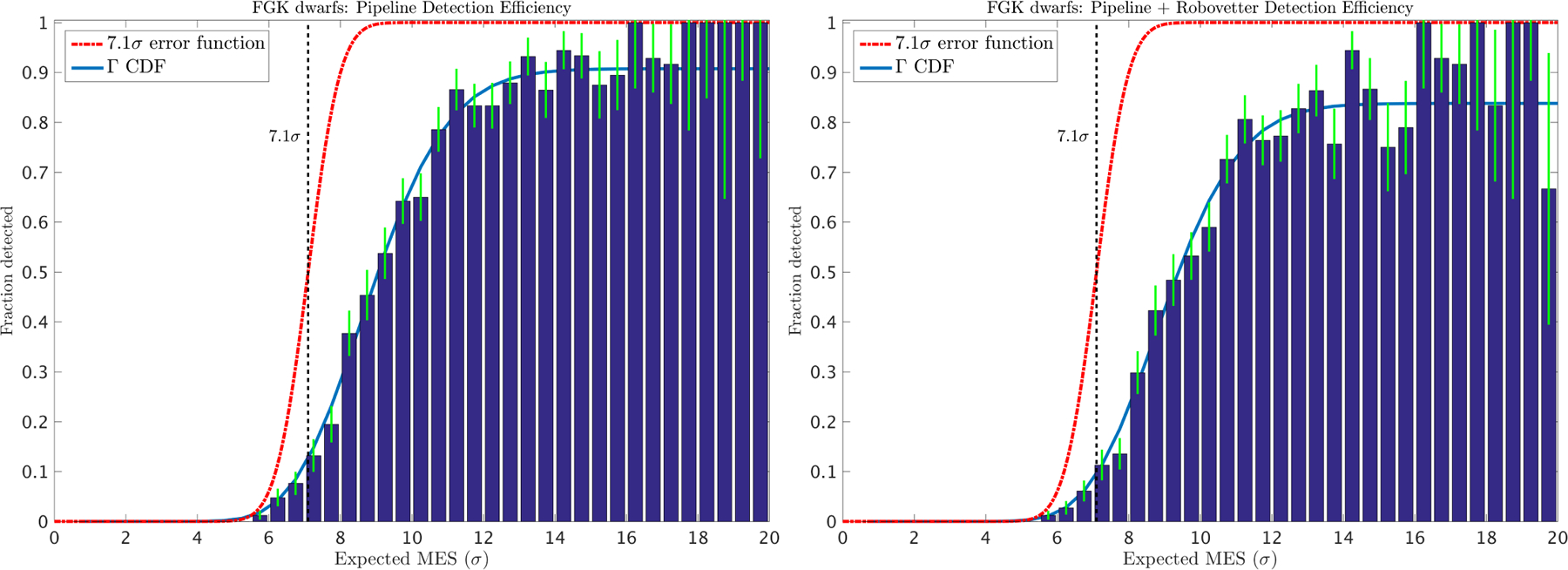

The measurement of the catalog completeness has been split into two parts: the completeness of the TCE list (the transit search performed by the Kepler Pipeline) and the completeness of the KOI catalog (the vetting of the TCEs). The completeness of the Kepler Pipeline and its search for transits has been studied by injecting transit signals into the pixels and examining what fraction are found by the Kepler Pipeline (Christiansen 2017; Christiansen et al. 2015, 2013a). Burke et al. (2015) applied the appropriate detection efficiency contours (Christiansen 2015) to the 50–300 d period planet candidates in the Q1–Q16 KOI catalog (Mullally et al. 2015) in order to measure the occurrence rates of small planets. However, that study was not able to account for those transit signals correctly identified by the Kepler Pipeline but thrown-out by the vetting process. Along with the DR25 KOI catalog, we provide a measure of the completeness of the DR25 vetting process.

Kepler light curves contain variability that is not due to planet transits or eclipsing binaries. While the reliability of Kepler catalogs against astrophysical false positives is mostly understood (see e.g. Morton et al. 2016), the reliability against false alarms (a term used in this paper to indicate TCEs caused by intrinsic stellar variability, over-contact binaries, or instrumental noise, i.e., anything that does not look transit-like) has not previously been measured. Instrumental noise, statistical fluctuations, poor detrending, and/or stellar variability can conspire to produce a signal that looks similar to a planet transit. When examining the smallest exoplanets in the longest orbital periods, Burke et al. (2015) demonstrated the importance of understanding the reliability of the catalog, showing that the occurrence of small, earth-like-period planets around G dwarf stars changed by a factor of ≈10 depending on the reliability of a few planet candidates. In this catalog we measure the reliability of the reported planet candidates against this instrumental and stellar noise.

The completeness of the vetting process is measured by vetting thousands of injected transits found by the Kepler Pipeline. Catalog reliability is measured by vetting signals found in scrambled and inverted Kepler light curves and counting the fraction of simulated false alarms that are dispositioned as planet candidates. This desire to vet both the real and simulated TCEs in a reproducible and consistent manner demands an entirely automated method for vetting the TCEs.
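The two measurements described above reduce to counting dispositions in each simulated set. The following is a minimal sketch with made-up dispositions, not the Robovetter itself; the function name and the toy lists are assumptions introduced for illustration.

```python
from typing import Sequence

def pc_fraction(dispositions: Sequence[str]) -> float:
    """Fraction of TCEs in a set that were dispositioned as planet candidates."""
    if not dispositions:
        raise ValueError("empty TCE set")
    return sum(d == "PC" for d in dispositions) / len(dispositions)

# Toy dispositions (invented for illustration only).
inj_tce = ["PC", "PC", "FP", "PC"]            # injected transits
sim_false_alarms = ["FP", "FP", "FP", "PC"]   # inverted + scrambled TCEs

# Vetting completeness: fraction of injected transits called PC.
vetting_completeness = pc_fraction(inj_tce)
# Fraction of simulated false alarms that slip through as PC.
false_alarm_pass_fraction = pc_fraction(sim_false_alarms)
print(vetting_completeness, false_alarm_pass_fraction)  # 0.75 0.25
```

Turning the false-alarm pass fraction into a catalog reliability also requires an estimate of how many false alarms are present among the observed TCEs, which this sketch does not attempt.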

Automated vetting was introduced in the Q1–Q16 KOI catalog (Mullally et al. 2015) with the Centroid Robovetter and was then extended to all aspects of the vetting process for the DR24 KOI catalog (Coughlin et al. 2016). Because of this automation, the DR24 catalog was the first with a measure of completeness that extended to all parts of the search, from pixels to planet candidates. Now, with the DR25 KOI catalog and simulated false alarms, we also provide a measure of how effective the vetting techniques are at identifying noise signals and translate that into a measure of the catalog reliability. As a result, the DR25 KOI catalog is the first to explicitly balance the gains in completeness against the loss of reliability, instead of always erring on the side of high completeness.

1.2. Terms and Acronyms

We try to avoid unnecessary acronyms and abbreviations, but a few are required to efficiently discuss this catalog. Here we itemize those terms and abbreviations that are specific to this paper and are used repeatedly. The list is short enough that we choose to group them by meaning instead of alphabetically.

TCE: Threshold Crossing Event. Periodic signals identified by the transiting planet search (TPS) module of the Kepler Pipeline (Jenkins 2017b).

obsTCE: Observed TCEs. TCEs found by searching the observed DR25 Kepler data and reported in Twicken et al. (2016).

injTCE: Injected TCEs. TCEs found that match a known, injected transit signal (Christiansen 2017).

invTCE: Inverted TCEs. TCEs found when searching the inverted data set in order to simulate instrumental false alarms (Coughlin 2017b).

scrTCE: Scrambled TCEs. TCEs found when searching the scrambled data set in order to simulate instrumental false alarms (Coughlin 2017b).

TPS: Transiting Planet Search module. This module of the Kepler Pipeline performs the search for planet candidates. Significant, periodic events are identified by TPS and turned into TCEs.

DV: Data Validation. Named after the module of the Kepler Pipeline (Jenkins 2017b) which characterizes the transits and outputs one of the detrended light curves used by the Robovetter metrics. DV also created two sets of transit fits: original and supplemental (§2.4).

ALT: Alternative. As an alternative to the DV detrending, the Kepler Pipeline implements a detrending method that uses the methods of Garcia (2010) and the out-of-transit points in the pre-search data conditioned (PDC) light curves to detrend the data. The Kepler Pipeline performs a trapezoidal fit to the folded transit on the ALT detrended light curves.

MES: Multiple Event Statistic. A statistic that measures the combined significance of all of the observed transits in the detrended, whitened light curve assuming a linear ephemeris (Jenkins 2002).

KOI: Kepler Object of Interest. Periodic, transit-like events that are significant enough to warrant further review. A KOI is identified with a KOI number and can be dispositioned as a planet candidate or a false positive. The DR25 KOIs are a subset of the DR25 obsTCEs.

PC: Planet Candidate. A TCE or KOI that passes all of the Robovetter false positive identification tests. Planet candidates should not be confused with confirmed planets where further analysis has shown that the transiting planet model is overwhelmingly the most likely astrophysical cause for the periodic dips in the Kepler light curve.

FP: False Positive. A TCE or KOI that fails one or more of the Robovetter tests. Notice that the term includes all types of signals found in the TCE lists that are not caused by a transiting exoplanet, including eclipsing binaries and false alarms.

MCMC: Markov chain Monte Carlo. This refers to transit fits which employ a MCMC algorithm in order to provide robust errors for fitted model parameters for all KOIs (Hoffman & Rowe 2017).

1.3. Summary and Outline of the Paper

The DR25 KOI catalog is a uniformly-vetted list of planet candidates and false positives found by searching the DR25 Kepler light curves and includes a measure of the catalog completeness and reliability. In the brief outline that follows we highlight how the catalog was assembled, how we measure the completeness and reliability, and discuss those aspects of the process that are different from the DR24 KOI catalog (Coughlin et al. 2016).

In §2.1 we describe the observed TCEs (obsTCEs) which are the periodic signals found in the actual Kepler light curves. For reference, we also compare them to the DR24 TCEs. To create the simulated data sets necessary to measure the vetting completeness and the catalog reliability, we ran the Kepler Pipeline on light curves that either contained injected transits, were inverted, or were scrambled. This creates injTCEs, invTCEs, and scrTCEs, respectively (see §2.3).

We then created and tuned a Robovetter to vet all the different sets of TCEs. §3 describes the metrics and the logic used to disposition TCEs into PCs and FPs. Because the DR25 obsTCE population was significantly different than the DR24 obsTCEs, we developed new metrics to separate the PCs from the FPs (see Appendix A for the details on how each metric operates). Several new metrics examine the individual transits for evidence of instrumental noise (see §A.3.7). As in the DR24 KOI catalog, we group FPs into four categories (§4) and provide minor false positive flags (Appendix B) to indicate why the Robovetter decided to pass or fail a TCE. New to this catalog is the addition of a disposition score (§3.2) that gives users a measure of the Robovetter’s confidence in each disposition.

Unlike previous catalogs, for the DR25 KOI catalog the choice of planet candidate versus false positive is no longer based on the philosophy of “innocent until proven guilty”. We accept certain amounts of collateral damage (i.e., exoplanets dispositioned as FP) in order to achieve a catalog that is uniformly vetted and has acceptable levels of both completeness and reliability, especially for the long period and low signal-to-noise PCs. In §5 we discuss how we tuned the Robovetter using the simulated TCEs as populations of true planet candidates and true false alarms. We provide the Robovetter source code and all the Robovetter metrics for all of the sets of TCEs (obsTCEs, injTCEs, invTCEs, and scrTCEs) to enable users to create a catalog tuned for other regions of parameter space if their scientific goals require it.

We assemble the catalog (§6) by federating to previously known KOIs before creating new KOIs. Then to provide planet parameters, each KOI is fit with a transit model which uses a Markov chain Monte Carlo (MCMC) algorithm to provide error estimates for each fitted parameter (§6.3). In §7 we summarize the catalog and discuss the performance of the vetting using the injTCE, invTCE, and scrTCE sets. We show that both completeness and reliability decrease significantly with decreasing number of transits and decreasing signal-to-noise. We then discuss how one may use the disposition scores to identify the highest quality candidates, especially at long periods (§7.3.4). We conclude that not all declared planet candidates in our catalog are actually astrophysical transits, but we can measure what fraction are caused by stellar and instrumental noise. Because of the interest in terrestrial, temperate planets, we examine the high quality, small candidates in the habitable zone in §7.5. Finally, in §8 we give an overview of what must be considered when using this catalog to measure accurate exoplanet occurrence rates, including what information is available in other Kepler products to do this work.

2. THE Q1–Q17 DR25 TCES

2.1. Observed TCEs

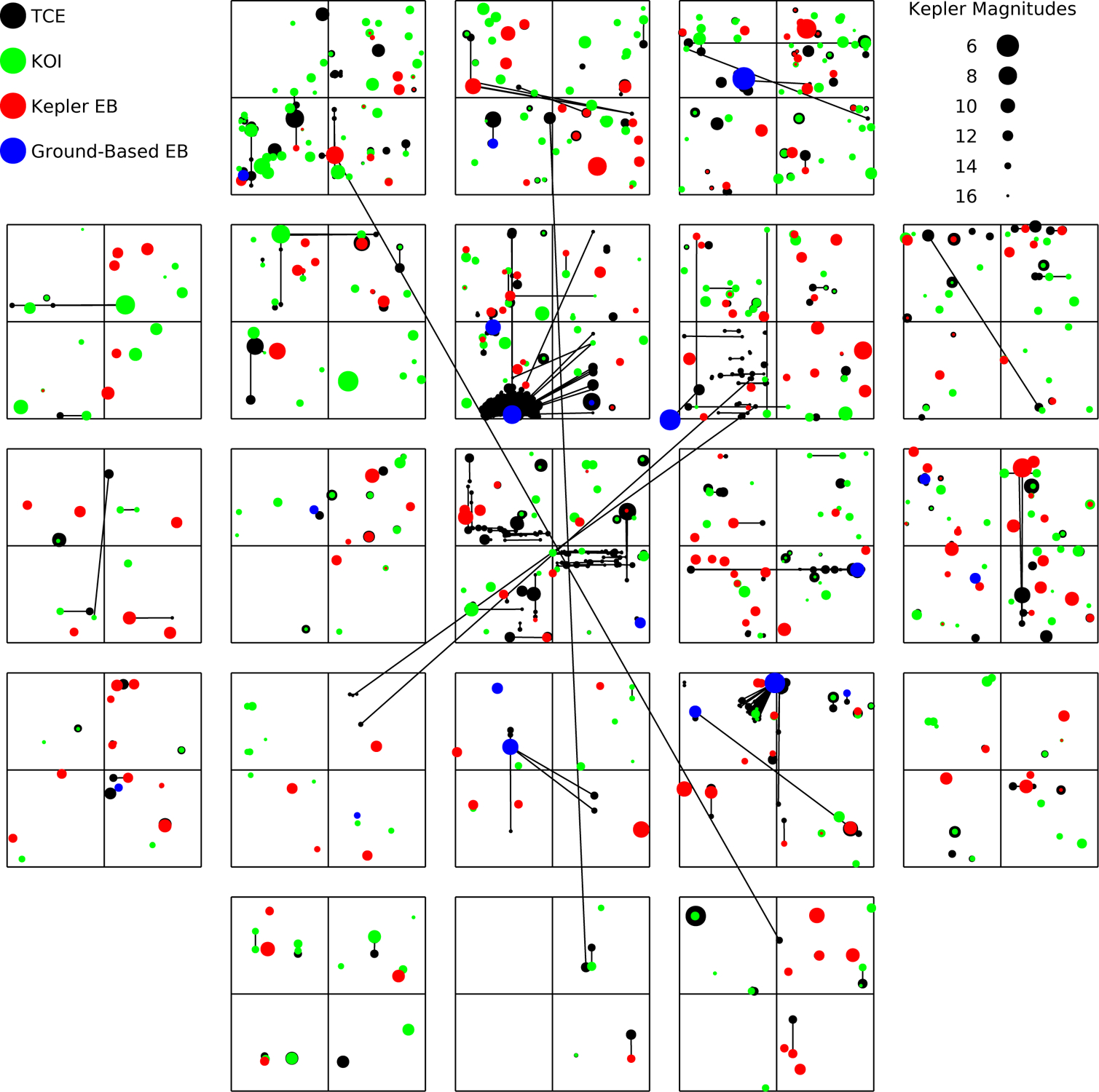

As with the previous three Kepler KOI catalogs (Coughlin et al. 2016; Mullally et al. 2015; Rowe et al. 2015a), the population of events that were used to create KOIs and planet candidates are known as obsTCEs. These are periodic reductions of flux in the light curve that were found by the TPS module and evaluated by the DV module of the Kepler Pipeline (Jenkins 2017b). The DR25 obsTCEs were created by running the SOC 9.3 version of the Kepler Pipeline on the DR25, Q1–Q17 Kepler time-series. For a thorough discussion of the DR25 TCEs and of the pipeline’s search, see Twicken et al. (2016).

The DR25 obsTCEs, their ephemerides, and the metrics calculated by the pipeline are available at the NASA Exoplanet Archive (Akeson et al. 2013). In this paper we endeavor to disposition these signals into planet candidates and false positives. Because the obsTCEs act as the input to our catalog, we first describe some of their properties as a whole and reflect on how they are different from the obsTCE populations found with previous searches.

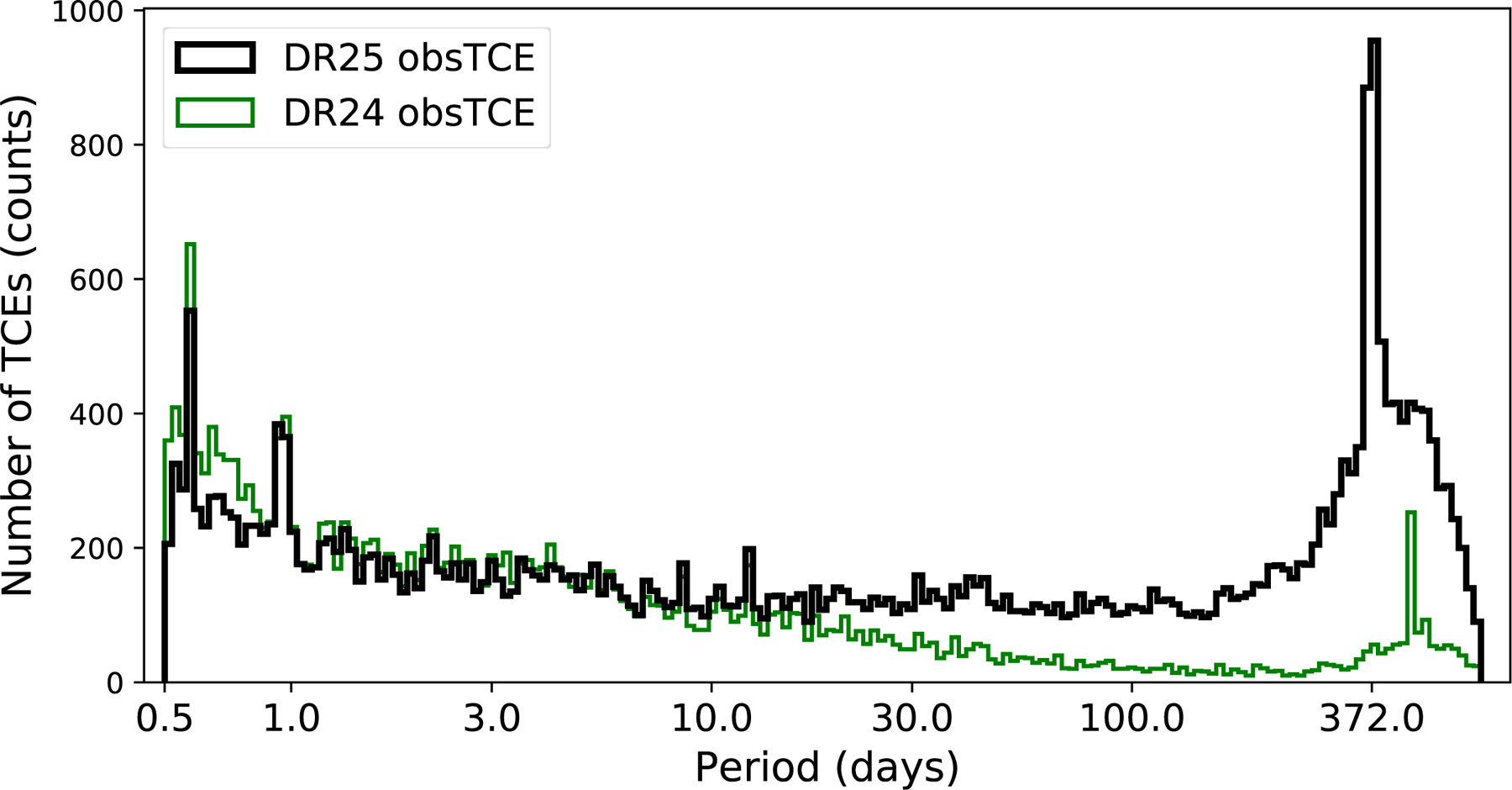

We have plotted the distribution of the 32,534 obsTCEs in terms of period in Figure 1. Notice that there is an excessive number of short and long period obsTCEs compared to the number of expected transiting planets. Not shown, but worth noting is that the number of obsTCEs increases with decreasing MES.

Figure 1.

Histogram of the period in days of the DR25 obsTCEs (black) using uniform bin space in the base ten logarithm of the period. The DR24 catalog obsTCEs (Seader et al. 2015) are shown in green for comparison. The number of long-period TCEs is much larger for DR25 and includes a large spike in the number of TCEs at the orbital period of the spacecraft (372 days). The long and short period spikes for both distributions are discussed in §2.1.

As with previous catalogs, the short period (< 10 d) excess is dominated by true variability of stars due to both intrinsic stellar variability (e.g., spots or pulsations) and contact/near-contact eclipsing binaries. The long period excess is dominated by instrumental noise. For example, a decrease in flux following a cosmic ray hit (known as an SPSD; Van Cleve et al. 2016a), can match up with other decrements in flux to produce a TCE. Also, image artifacts known as rolling-bands are very strong on some channels (see §6.7 of Van Cleve & Caldwell 2016) and since the spacecraft rolls approximately every 90 d, causing a star to move on/off a Kepler detector with significant rolling band noise, these variations can easily line up to produce TCEs at Kepler’s heliocentric orbital period (≈372 days, 2.57 in log-space). This is the reason for the largest spike in the obsTCE population seen in Figure 1. The narrow spike at 459 days (2.66 in log-space) in the DR24 obsTCE distribution is caused by edge-effects near three equally spaced data gaps in the DR24 data processing. The short period spikes in the distributions of both the DR25 and DR24 obsTCEs are caused by contamination by bright variable stars (see §A.6 and Coughlin et al. 2014).
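For readers reproducing a period histogram like Figure 1, the binning is uniform in the base-ten logarithm of the period. The sketch below is one simple way to do that with the standard library; the function name, bin count, and period limits are assumptions rather than the values used for the figure. It also checks the log-space locations quoted above for the 372 day and 459 day spikes.

```python
import math

def log_period_histogram(periods_days, n_bins, p_min, p_max):
    """Count periods in n_bins bins of equal width in log10(period)."""
    lo, hi = math.log10(p_min), math.log10(p_max)
    width = (hi - lo) / n_bins
    counts = [0] * n_bins
    for p in periods_days:
        x = math.log10(p)
        if lo <= x < hi:
            # Guard against floating-point rounding at the upper edge.
            idx = min(int((x - lo) / width), n_bins - 1)
            counts[idx] += 1
    return counts

# Log-space positions of the two spikes discussed in the text.
print(round(math.log10(372.0), 2), round(math.log10(459.0), 2))  # 2.57 2.66
```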

Generally, the excess of long period TCEs is significantly larger than it was in the DR24 TCE catalog (Seader et al. 2015), also seen in Figure 1. Most likely, this is because DR24 implemented an aggressive veto known as the bootstrap metric (Seader et al. 2015). For DR25 this metric was calculated, but was not used as a veto. Also, other vetoes were made less strict causing more TCEs across all periods to be created.

To summarize, for DR25 the number of false signals among the obsTCEs is dramatically larger than in any previous catalog. This was done on purpose in order to increase the Pipeline completeness by allowing more transiting exoplanets to be made into obsTCEs.

2.2. Rogue TCEs

The DR25 TCE table at the NASA Exoplanet Archive contains 32,534 obsTCEs and 1498 rogue TCEs for a total of 34,032. The rogue TCEs were created because of a bug in the Kepler pipeline which failed to veto certain TCEs with three transit events. This bug was not in place when characterizing the Pipeline using flux-level transit injection (see Burke & Catanzarite 2017a,b), and because the primary purpose of this catalog is to be able to accurately calculate occurrence rates, we do not use the rogue TCEs in the creation and analysis of the DR25 KOI catalog. Also note that all of the TCE populations (observed, injection, inversion, and scrambling, see the next section) had rogue TCEs that were removed prior to analysis. The creation and analysis of this KOI catalog only rely on the non-rogue TCEs. Although they are not analyzed in this study, we encourage the community to examine the designated rogue TCEs as the list does contain some of the longest period events detected by Kepler.

2.3. Simulated TCEs

In order to measure the performance of the Robovetter and the Kepler Pipeline, we created simulated transits, simulated false positives, and simulated false alarms. The simulated transits were created by injecting transit signals into the pixels of the original data. The simulated false positives were created by injecting eclipsing binary signals and positionally off-target transit signals into the pixels of the original data (see Coughlin 2017b and Christiansen 2017 for more information). The simulated false alarms were created in two separate ways: by inverting the light curves, and by scrambling the sequence of cadences in the time series. The TCEs that resulted from these simulated data are available at the Exoplanet Archive on the Kepler simulated data page.
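To make the two false-alarm simulations concrete, the toy sketch below inverts a relative-flux series and reorders it in contiguous blocks. It is only an illustration of the idea; the actual procedure is described in Coughlin (2017b), and the function names, the zero-centered flux convention, and the block length are assumptions made here.

```python
import random

def invert_light_curve(rel_flux):
    """Flip a zero-centered relative-flux series so dips become brightenings."""
    return [-f for f in rel_flux]

def scramble_light_curve(rel_flux, block_len, seed=0):
    """Shuffle the order of contiguous blocks of cadences.

    Each block stays intact, so short-timescale noise is preserved while
    any long-period periodicity is broken up.
    """
    blocks = [rel_flux[i:i + block_len]
              for i in range(0, len(rel_flux), block_len)]
    random.Random(seed).shuffle(blocks)
    return [f for block in blocks for f in block]

# Toy series with a single transit-like dip (values are invented).
flux = [0.0, 0.0, -1.0, -1.0, 0.0, 0.0, 0.0, 0.0]
print(invert_light_curve(flux))
print(scramble_light_curve(flux, block_len=2))
```

In both cases a genuine periodic transit signal no longer appears as a periodic dip, so any TCE the search still finds can be treated as a stand-in for a false alarm.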

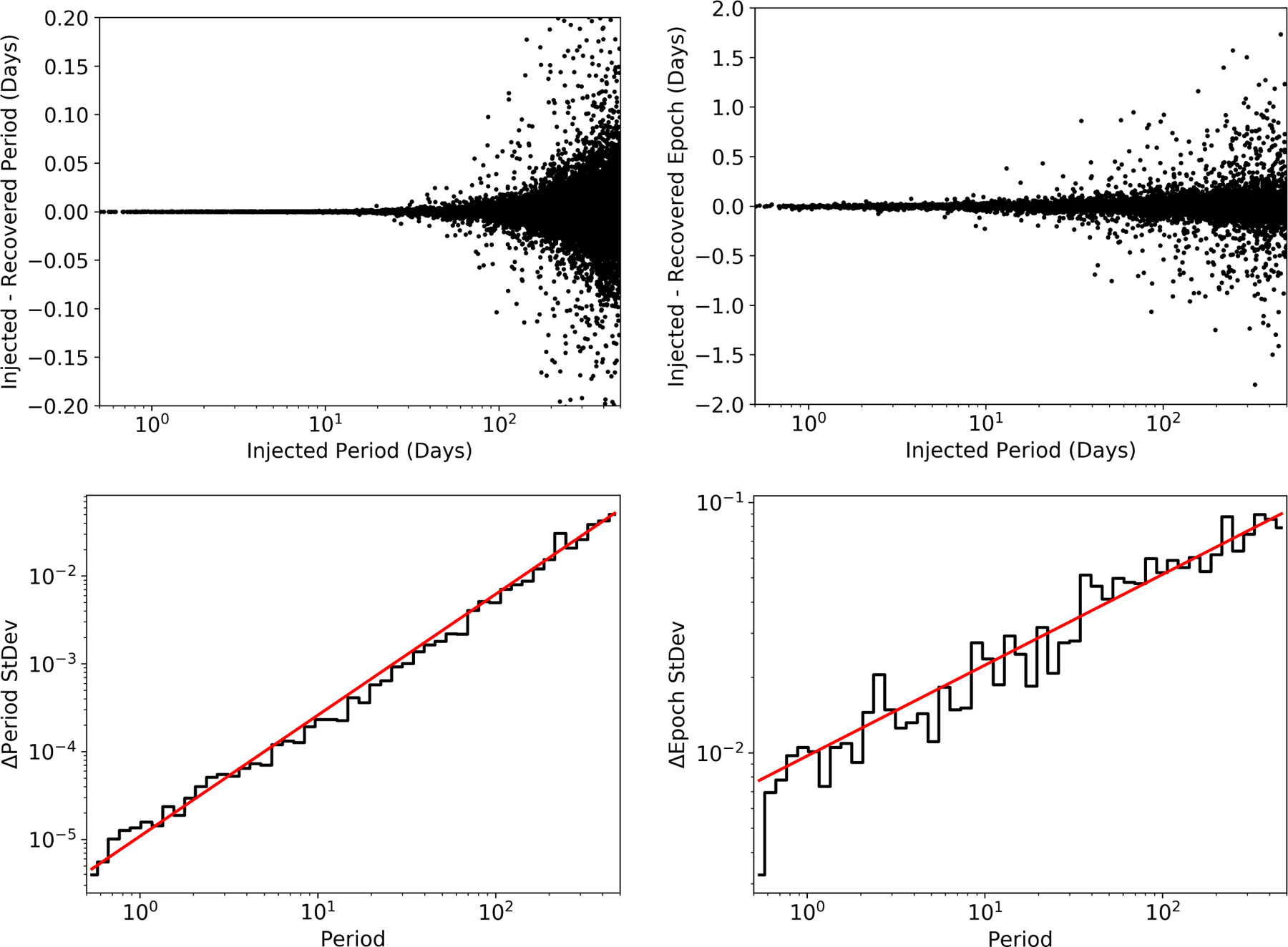

2.3.1. True Transits – Injection

We empirically measure the completeness of the Kepler Pipeline and the subsequent vetting by injecting a suite of simulated transiting planet signals into the calibrated pixel data and observing their recovery, as was done for previous versions of the Kepler Pipeline (Christiansen et al. 2013a; Christiansen 2015; Christiansen et al. 2016). The full analysis of the DR25 injections is described in detail in Christiansen (2017). In order to understand the completeness of the Robovetter, we use the on-target injections (Group 1 in Christiansen 2017); we briefly describe their properties here. For each of the 146,294 targets, we generate a model transit signal using the Mandel & Agol (2002) formulation, with parameters drawn from the following uniform distributions: orbital periods from 0.5–500 days (0.5–100 days for M dwarf targets), planet radii from 0.25–7 R⊕ (0.25–4 R⊕ for M dwarf targets), and impact parameters from 0–1. After some re-distribution in planet radius to ensure sufficient coverage where the Kepler Pipeline is fully incomplete (0% recovery) to fully complete (100% recovery), 50% of the injections have planet radii below 2R⊕ and 90% below 40R⊕. The signals are injected into the calibrated pixels, and then processed through the remaining components of the Kepler Pipeline in an identical fashion to the original data. Any detected signals are subjected to the same scrutiny by the Pipeline and the Robovetter as the original data. By measuring the fraction of injections that were successfully recovered by the Pipeline and called a PC by the Robovetter with any given set of parameters (e.g., orbital period and planet radius), we can then correct the number of candidates found with those parameters to the number that are truly present in the data.
While the observed population of true transiting planets is heavily concentrated towards short periods, we chose the 0.5–500 day uniform period distribution of injections because more long-period, low signal-to-noise transits are both not recovered and not vetted correctly — injecting more of these hard-to-find, long-period planets ensures that we can measure the Pipeline and Robovetter completeness. In this paper we use the set of on-target, injected planets that were recovered by the Kepler Pipeline (the injTCEs, whose period distribution is shown in Figure 2) to measure the performance of the Robovetter. Accurate measurement of the Robovetter performance is limited to those types of transits injected and recovered.
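As a minimal sketch of the bookkeeping described above, the following Python computes, in bins of period and radius, the fraction of injections that were both recovered by the Pipeline and called a PC, and then scales an observed candidate count by that fraction. The tuple layout, the bin edges, the toy injections, and the observed count are all hypothetical. The correction shown accounts only for detection and vetting losses, not for geometric transit probability.

```python
from bisect import bisect_right

def bin_index(value, edges):
    """Return the index of the bin containing value, or None if outside the edges."""
    if value < edges[0] or value >= edges[-1]:
        return None
    return bisect_right(edges, value) - 1

def binned_completeness(injections, period_edges, radius_edges):
    """Per-bin fraction of injections that were recovered and called a PC.

    injections: iterable of (period_days, radius_rearth, recovered, is_pc).
    Returns {(i_period, i_radius): fraction}; bins with no injections are omitted.
    """
    n_total, n_pc = {}, {}
    for period, radius, recovered, is_pc in injections:
        i = bin_index(period, period_edges)
        j = bin_index(radius, radius_edges)
        if i is None or j is None:
            continue
        n_total[(i, j)] = n_total.get((i, j), 0) + 1
        if recovered and is_pc:
            n_pc[(i, j)] = n_pc.get((i, j), 0) + 1
    return {key: n_pc.get(key, 0) / n_total[key] for key in n_total}

# Hypothetical toy injections: (period, radius, recovered by Pipeline, called PC)
toy_injections = [
    (10.0, 1.5, True, True),
    (12.0, 1.8, True, True),
    (15.0, 1.2, True, False),
    (40.0, 1.0, False, False),
    (300.0, 1.5, True, True),
    (350.0, 1.9, False, False),
]
fractions = binned_completeness(
    toy_injections, period_edges=[0.5, 100.0, 500.0], radius_edges=[0.25, 2.0, 7.0]
)

# Hypothetical count of observed PCs in the long-period, small-radius bin
n_observed_pc = 3
n_corrected = n_observed_pc / fractions[(1, 0)]
```

With real data the bins must be small enough that the recovery fraction is roughly constant inside each one, and bins with few injections carry large uncertainties.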

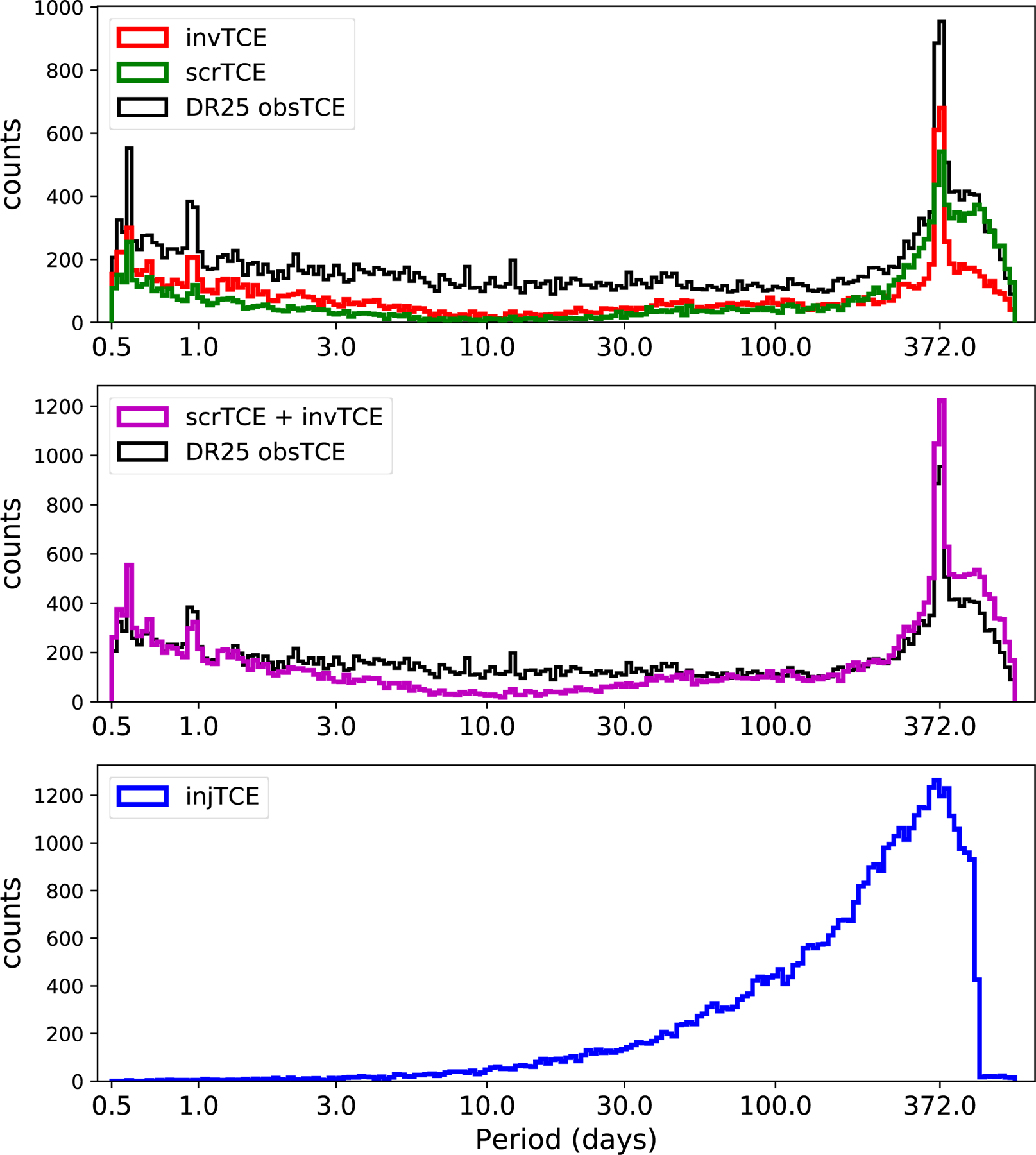

Figure 2.

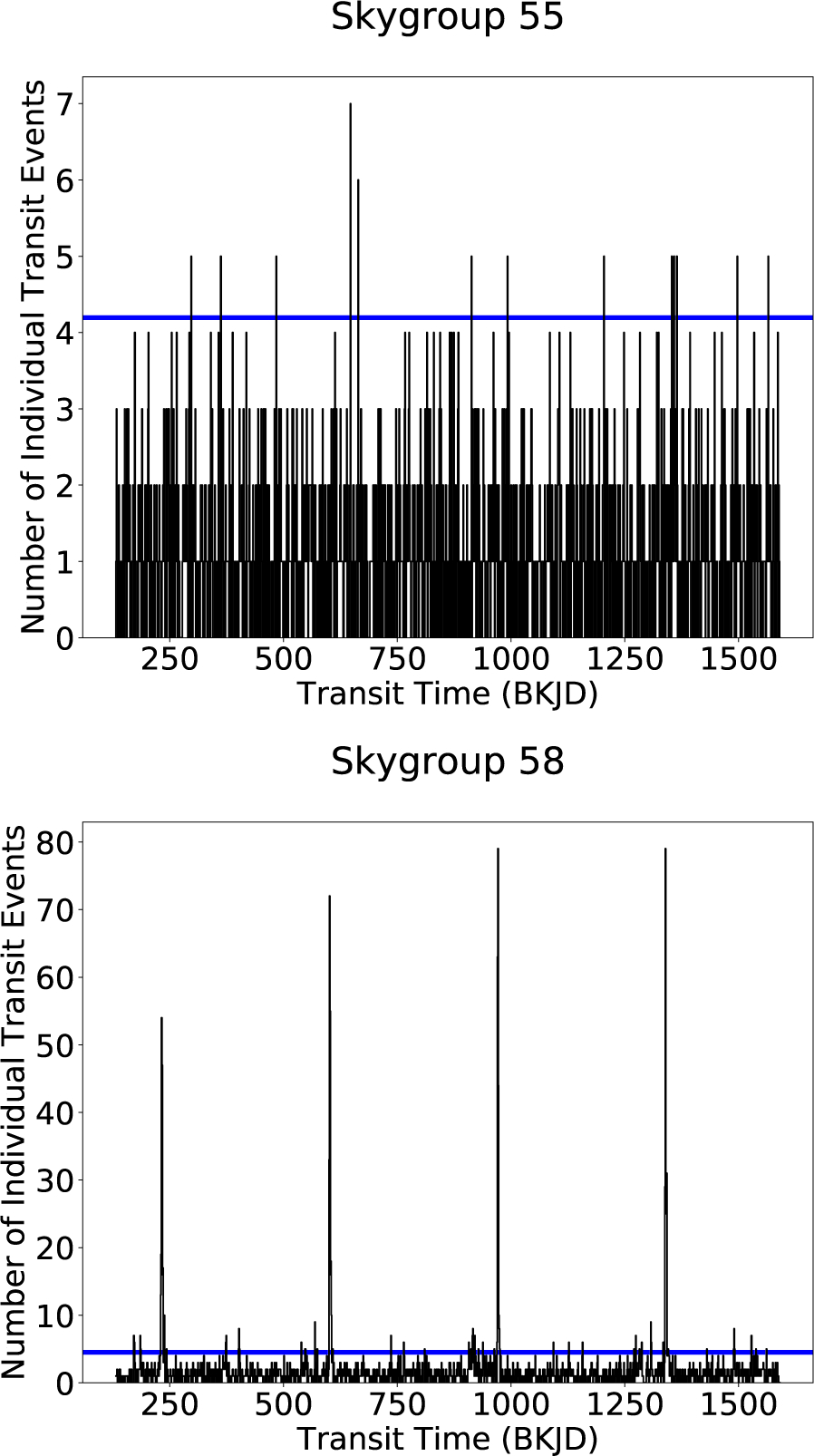

Histogram of the period in days of the cleaned invTCEs (top, red), the cleaned scrTCEs (top, green), and injTCEs (bottom, blue) in uniform, base-ten logarithmic spacing. The middle panel shows the union of the invTCEs and the scrTCEs in magenta. The DR25 obsTCEs are shown for comparison in the top two panels in black. At shorter periods (< 30 days) in the top panel, the difference between the simulated false alarm sets and the observed data represents the number of transit-like KOIs; at longer periods we primarily expect false alarms. Notice that the invTCEs do a better job of reproducing the one-year spike, but the scrTCEs better reproduce the long-period hump. Because the injTCEs are dominated by long-period events (significantly more long-period events were injected), we are better able to measure the Robovetter completeness for long-period planets than short-period planets.

It is worth noting that the injections do not completely emulate all astrophysical variations produced by a planet transiting a star. For instance, the injected model includes limb-darkening, but not the occultation of stellar pulsations or granulation, which has been shown to cause a small, but non-negligible, error source on measured transit depth (Chiavassa et al. 2017) for high signal-to-noise transits.

2.3.2. False Alarms – Inverted and Scrambled

To create realistic false alarms that have noise properties similar to our obsTCEs, we inverted the light curves (i.e., multiplied the normalized, zero-mean flux values by negative one) before searching for transit signals. Because the pipeline is only looking for transit-like (negative) dips in the light curve, the true exoplanet transits should no longer be found. However, quasi-sinusoidal signals due to instrumental noise, contact and near-contact binaries, and stellar variability can still create detections. In order for inversion to exactly reproduce the false alarm population, the false alarms would need to be perfectly symmetric (in shape and frequency) under flux inversion, which is not true. For example, stellar oscillations and star-spots are not sine waves and SPSDs will not appear the same under inversion. However, the rolling band noise that is significant on many of Kepler’s channels is mostly symmetric. The period distribution of these invTCEs is shown in Figure 2. The distribution qualitatively emulates that seen in the obsTCEs; however, there are only ~60% as many. This is because the population includes neither the exoplanets nor the eclipsing binaries, but it is also because many of the sources of false alarms are not symmetric under inversion. The one-year spike is clearly seen, but is not as large as we might expect, likely because the broad long-period hump present in the DR25 obsTCE distribution is mostly missing from the invTCE distribution. We explore the similarity of the invTCEs to obsTCEs in more detail in §4.2.
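The effect of inversion can be illustrated with a short Python sketch. It flips a toy box-shaped transit and a toy sinusoid about zero: the transit dip becomes a brightening that a dip search would no longer find, while the sinusoid keeps dips of the same depth. The light curves, amplitudes, and cadence counts below are hypothetical and are not Kepler data.

```python
import math

def invert_light_curve(flux):
    """Flip a normalized, zero-mean light curve about zero."""
    return [-value for value in flux]

n_cadences = 200
# Toy box-shaped transit: a 0.5% dip lasting ten cadences
transit = [-5e-3 if 90 <= k < 100 else 0.0 for k in range(n_cadences)]
# Toy quasi-sinusoidal signal, symmetric under inversion
sinusoid = [1e-3 * math.sin(2 * math.pi * k / 50) for k in range(n_cadences)]

inverted_transit = invert_light_curve(transit)
inverted_sinusoid = invert_light_curve(sinusoid)

# Deepest dip before and after inversion for each signal
print(min(transit), min(inverted_transit))
print(min(sinusoid), min(inverted_sinusoid))
```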

Another method to create false alarms is to scramble the order of the data. The requirement is to scramble the data enough to lose the coherency of the binary stars and exoplanet transits, but to keep the coherency of the instrumental and stellar noise that plagues the Kepler data set. Our approach was to scramble the data in coherent chunks of one year. The fourth year of data (Q13–Q16) was moved to the start of the light curve, followed by the third year (Q9–Q12), then the second (Q5–Q8), and finally the first (Q1–Q4). Q17 remained at the end. Within each year, the order of the data did not change. Notice that in this configuration each quarter remains in the correct Kepler season preserving the yearly artifacts produced by the spacecraft.
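The reordering described above can be sketched in Python as follows. The function names and the dictionary layout (quarter number mapped to a list of flux values) are assumptions made for illustration. The final check relies on Kepler seasons repeating every four quarters, so a quarter moved by a multiple of four slots stays in its season.

```python
def scrambled_quarter_order():
    """Quarter order from the text: year 4, year 3, year 2, year 1, then Q17."""
    order = []
    for first_quarter in (13, 9, 5, 1):
        order.extend(range(first_quarter, first_quarter + 4))
    order.append(17)
    return order

def scramble_light_curve(flux_by_quarter):
    """Concatenate per-quarter flux lists in the scrambled order.

    flux_by_quarter: dict mapping quarter number (1..17) to a list of flux values.
    The cadence order inside each quarter is left unchanged.
    """
    scrambled = []
    for quarter in scrambled_quarter_order():
        scrambled.extend(flux_by_quarter.get(quarter, []))
    return scrambled

order = scrambled_quarter_order()
# Each time slot receives a quarter offset by a multiple of four from the
# quarter originally observed in that slot, so the season is preserved.
same_season = all((quarter - slot) % 4 == 0 for slot, quarter in enumerate(order, start=1))

# Hypothetical two-cadence quarters, labeled by quarter number
toy_flux = {q: [float(q), q + 0.5] for q in range(1, 18)}
scrambled = scramble_light_curve(toy_flux)
```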

Two additional scrambling runs of the data, with different scrambling orders than described above, were performed and run through the Kepler pipeline and Robovetter, but are not discussed in this paper, as they were produced after the analysis for this paper was complete. These runs could be very useful in improving the reliability measurements of the DR25 catalog — see Coughlin 2017b for more information.

2.3.3. Cleaning Inversion and Scrambling

As will be described in §4.1, we want to use the invTCE and scrTCE sets to measure the reliability of the DR25 catalog against instrumental and stellar noise. In order to do that well, we need to remove signals found in these sets that are not typical of those in our obsTCE set. For inversion, there are astrophysical events that look similar to an inverted eclipse, for example the self-lensing binary star, KOI 3278.01 (Kruse & Agol 2014), and Heartbeat binaries (Thompson et al. 2012). With the assistance of published systems and early runs of the Robovetter, we identified any invTCE that could be one of these types of astrophysical events; 54 systems were identified in total. Also, the shoulders of inverted eclipsing binary stars and high signal-to-noise KOIs are found by the Pipeline, but are not the type of false alarm we were trying to reproduce, since they have no corresponding false alarm in the original, un-inverted light curves. We remove any invTCEs that were found on stars that had 1) one of the identified astrophysical events, 2) a detached eclipsing binary listed in Kirk et al. (2016) with morphology values larger than 0.6, or 3) a known KOI. After cleaning, we are left with 14953 invTCEs; their distribution is plotted in the top of Figure 2.

For the scrambled data, we do not have to worry about the astrophysical events that emulate inverted transits, but we do have to worry about triggering on true transits that have been rearranged to line up with noise. For this reason we remove from the scrTCE population all that were found on a star with a known eclipsing binary (Kirk et al. 2016), or on an identified KOI. The result is 13782 scrTCEs; their distribution is plotted in the middle panel of Figure 2. This will not remove all possible sources of astrophysical transits. Systems with only two transits (which would not be made into KOIs), or systems with single transits from several orbiting bodies would not be identified in this way. For example, KIC 3542116 was identified by Rappaport et al. (2017) as a star with possible exocomets, and it is a scrTCE dispositioned as an FP. We expect the effect of not removing these unusual events to be negligible on our reliability measurements relative to other systematic differences between the obsTCEs and the scrTCEs.
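The two cleaning rules amount to dropping every TCE whose host star appears on an exclusion list. A minimal Python sketch is given below. The function names, the input containers, and the KIC numbers in the toy example are hypothetical; the morphology cut of 0.6 is the value quoted above for the inverted set.

```python
def kic_of(tce_id):
    """Extract the integer KIC number from a 'KIC-PN' style TCE-ID."""
    return int(tce_id.replace("–", "-").split("-")[0])

def clean_inv_tces(tce_ids, astro_event_kics, eb_morphology, koi_kics, min_morphology=0.6):
    """Drop invTCEs on stars with a flagged astrophysical event, an eclipsing
    binary whose morphology exceeds min_morphology, or a known KOI."""
    flagged = set(astro_event_kics) | set(koi_kics)
    flagged |= {kic for kic, morph in eb_morphology.items() if morph > min_morphology}
    return [tce for tce in tce_ids if kic_of(tce) not in flagged]

def clean_scr_tces(tce_ids, eb_kics, koi_kics):
    """Drop scrTCEs on stars with a known eclipsing binary or an identified KOI."""
    flagged = set(eb_kics) | set(koi_kics)
    return [tce for tce in tce_ids if kic_of(tce) not in flagged]

# Hypothetical TCE-IDs and star lists, for illustration only
toy_tces = ["000000001-01", "000000001-02", "000000002-01", "000000003-01", "000000004-01"]
kept_inv = clean_inv_tces(
    toy_tces, astro_event_kics={1}, eb_morphology={2: 0.7, 3: 0.4}, koi_kics=set()
)
kept_scr = clean_scr_tces(toy_tces, eb_kics={2, 3}, koi_kics={4})
```

Removing whole stars rather than individual TCEs is conservative: it also discards any genuine false alarms on those stars, at the cost of a slightly smaller simulated sample.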

After cleaning the invTCEs and scrTCEs, the number of scrTCEs at periods longer than 200 d closely matches the size and shape of the obsTCE distribution, except for the one-year spike. The one-year spike is well represented by the invTCEs. The distribution of the combined invTCE and scrTCE data sets, as shown in the middle plot of Figure 2, qualitatively matches the relative frequency of false alarms present in the DR25 obsTCE population. Tables 1 and 2 list those invTCEs and scrTCEs that we used when calculating the false alarm effectiveness and false alarm reliability of the PCs.

Table 1.

invTCEs used in the analysis of catalog reliability

| TCE-ID (KIC-PN) | Period (days) | MES | Disposition (PC/FP) |
|---|---|---|---|
| 000892667–01 | 2.261809 | 7.911006 | FP |
| 000892667–02 | 155.733356 | 10.087069 | FP |
| 000892667–03 | 114.542735 | 9.612742 | FP |
| 000892667–04 | 144.397127 | 8.998353 | FP |
| 000892667–05 | 84.142047 | 7.590044 | FP |
| 000893209–01 | 424.745158 | 9.106225 | FP |
| 001026133–01 | 1.346275 | 10.224972 | FP |
| 001026294–01 | 0.779676 | 8.503883 | FP |
| 001160891–01 | 0.940485 | 12.176910 | FP |
| 001160891–02 | 0.940446 | 13.552523 | FP |
| 001162150–01 | 1.130533 | 11.090898 | FP |
| 001162150–02 | 0.833482 | 8.282225 | FP |
| 001162150–03 | 8.114960 | 11.956621 | FP |
| 001162150–04 | 7.074370 | 14.518677 | FP |
| 001162150–05 | 5.966962 | 16.252800 | FP |

Note—The first column is the TCE-ID and is formed using the KIC Identification number and the TCE planet number (PN). This table is published in its entirety in the machine-readable format. A portion is shown here for guidance regarding its form and content.

Table 2.

scrTCEs used in the analysis of catalog reliability

| TCE-ID (KIC-PN) | Period (days) | MES | Disposition (PC/FP) |
|---|---|---|---|
| 000757099–01 | 0.725365 | 8.832907 | FP |
| 000892376–01 | 317.579997 | 11.805184 | FP |
| 000892376–02 | 1.532301 | 11.532692 | FP |
| 000892376–03 | 193.684366 | 14.835271 | FP |
| 000892376–04 | 432.870540 | 11.373951 | FP |
| 000892376–05 | 267.093312 | 10.308785 | FP |
| 000892376–06 | 1.531632 | 10.454597 | FP |
| 000893004–01 | 399.722285 | 7.240176 | FP |
| 000893507–02 | 504.629640 | 15.434824 | FP |
| 000893507–03 | 308.546946 | 12.190248 | FP |
| 000893507–04 | 549.804329 | 12.712417 | FP |
| 000893507–05 | 207.349237 | 11.017911 | FP |
| 000893647–01 | 527.190559 | 13.424537 | FP |
| 000893647–02 | 558.164884 | 13.531707 | FP |
| 000893647–03 | 360.260977 | 9.600089 | FP |
| … | … | … | … |

Note—The first column is the TCE-ID and is formed using the KIC Identification number and the TCE planet number (PN). This table is published in its entirety in the machine-readable format. A portion is shown here for guidance regarding its form and content.

2.4. TCE Transit Fits

The creation of this KOI catalog depends on four different transit fits: 1) the original DV transit fits, 2) the trapezoidal fits performed on the ALT (Garcia 2010) detrended light curves, 3) the supplemental DV transit fits, and 4) the MCMC fits (see §6.3). The Kepler Pipeline fits each TCE with a Mandel & Agol (2002) transit model using Claret (2000) limb darkening parameters. After the transit searches were performed for the observed, injected, scrambled, and inverted TCEs, we discovered that the transit fit portion of DV was seeded with a high impact parameter model that caused the final fits to be biased towards large values, causing the planet radii to be systematically too large (for further information see Christiansen 2017 and Coughlin 2017b). Since a consistent set of reliable transit fits is required for all TCEs, we refit the transits. The same DV transit fitting code was corrected for the bug and seeded with the Kepler identification number, period, epoch, and MES of the original detection. These “supplemental” DV fits do not have the same impact parameter bias as the original. Sometimes the DV fitter fails to converge and in these cases we were not able to obtain a supplemental DV transit fit, causing us to fall back on the original DV fit. Also, at times the epoch wanders too far from the original detection; in these cases we do not consider it to be a successful fit and again fall back on the original fit.
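The fallback between the supplemental and original DV fits can be sketched as a small selection function. The dictionary keys and the `max_epoch_shift_days` tolerance are hypothetical; the text does not state how far the epoch may wander before a supplemental fit is rejected, so the tolerance is left as a required argument rather than given a default.

```python
def choose_dv_fit(original, supplemental, max_epoch_shift_days):
    """Return the supplemental DV fit when it is usable, else the original fit.

    original, supplemental: dicts with an 'epoch_days' entry; supplemental is
    None when the fitter failed to converge.
    max_epoch_shift_days: hypothetical tolerance on the epoch drift.
    """
    if supplemental is None:
        return original
    drift = abs(supplemental["epoch_days"] - original["epoch_days"])
    if drift > max_epoch_shift_days:
        return original
    return supplemental

# Hypothetical fits for one TCE
original_fit = {"source": "original", "epoch_days": 135.20}
good_refit = {"source": "supplemental", "epoch_days": 135.22}
drifted_refit = {"source": "supplemental", "epoch_days": 139.80}

chosen_a = choose_dv_fit(original_fit, good_refit, max_epoch_shift_days=0.5)
chosen_b = choose_dv_fit(original_fit, drifted_refit, max_epoch_shift_days=0.5)
chosen_c = choose_dv_fit(original_fit, None, max_epoch_shift_days=0.5)
```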

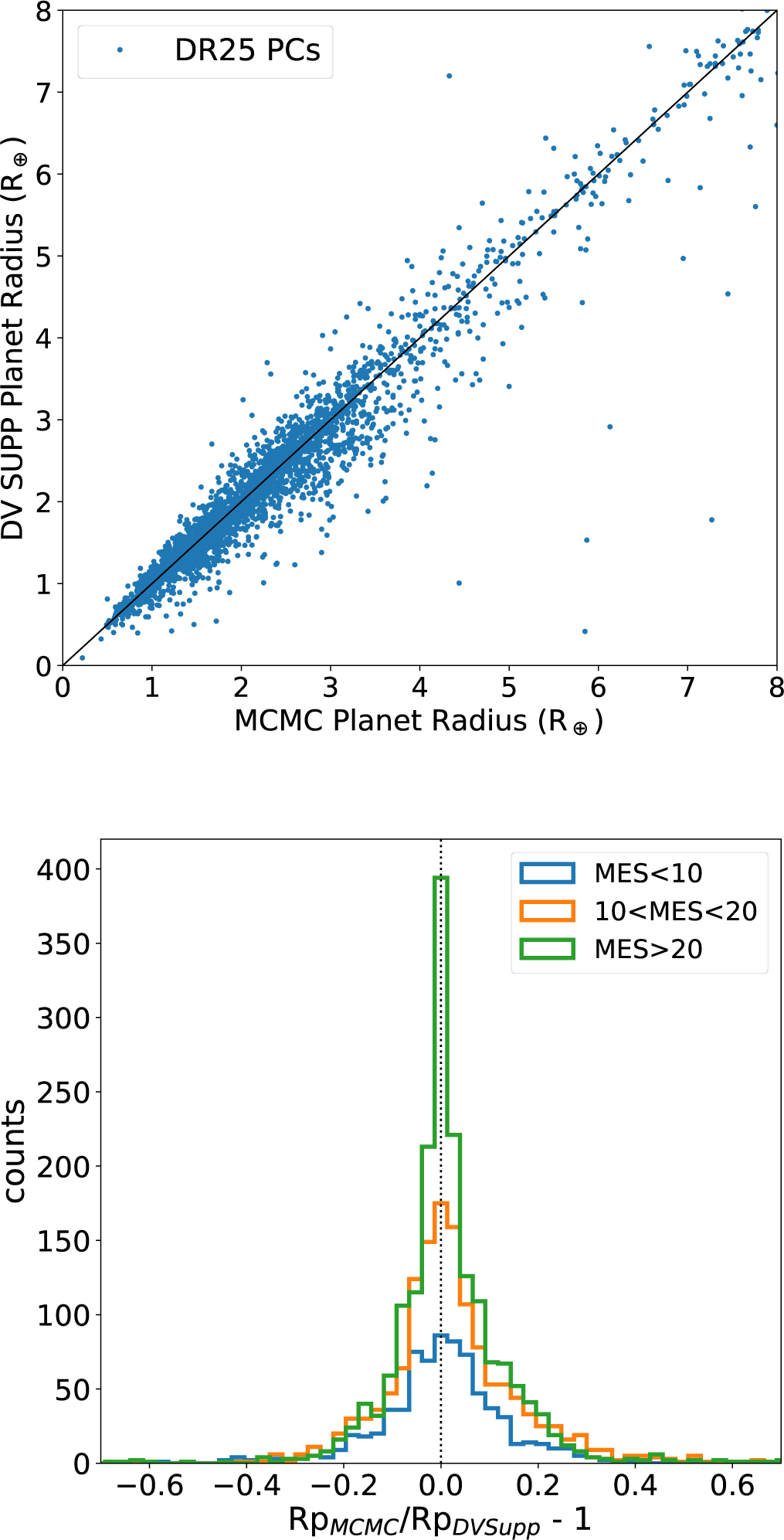

Because the bug in the transit fits was only discovered after all of the metrics for the Robovetter were run, the original DV and trapezoidal fits were used to disposition all of the sets of TCEs. These are the same fits that are available for the obsTCEs in the DR25 TCE table at the NASA Exoplanet Archive. Most Robovetter metrics are agnostic to the parameters of the fit, and so the supplemental DV fits would only change a few of the Robovetter decisions. While the Robovetter itself runs in a few minutes, several of the metrics used by the Robovetter (see Appendix A) require weeks to compute, so we chose not to update the metrics in order to achieve this minimal improvement. For all sets of TCEs, the original DV fits are listed in the Robovetter input files.8 The supplemental fits are used to understand the completeness and reliability of the catalog as a function of fitted parameters (such as planet radii or insolation flux). For all sets of TCEs, the supplemental DV fits are available as part of the Robovetter results tables linked from the TCE documentation page9 for the obsTCEs and from the simulated data page10 (see Christiansen 2017; Coughlin 2017b) for the injected, inverted, and scrambled TCEs. The MCMC fits are only provided for the KOI population and are available in the DR25 KOI table11 at the NASA Exoplanet Archive. The MCMC fits have no consistent offset from the supplemental DV fits. To show this, we plot the planet radii derived from the two types of fits for the planet candidates in DR25 and show the distribution of fractional change in planet radii; see Figure 3. The median value of the fractional change is 0.7% with a standard deviation of 18%. While individual systems disagree, there is no offset in planet radii between the two populations. 
The supplemental DV fitted radii and MCMC fitted radii agree within 1-sigma of the combined error bar (i.e., the square-root of the sum of the squared errors) for 78% of the KOIs and 93.4% of PCs (only 1.8% of PCs’ radii differ by more than 3-sigma). The differences are caused by discrepancies in the detrending and because the MCMC fits include a non-linear ephemeris in their model when appropriate (i.e., to account for transit-timing variations).
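The comparison statistic used here, the radius difference in units of the combined error bar, can be written in a few lines of Python. The function names and the toy radii are hypothetical, and the sign convention for the fractional change (MCMC minus DV, divided by DV) is an assumption, since the text does not state which fit is the reference.

```python
import math

def combined_sigma_difference(radius_a, err_a, radius_b, err_b):
    """Absolute radius difference divided by the quadrature sum of the errors."""
    combined_error = math.sqrt(err_a ** 2 + err_b ** 2)
    return abs(radius_a - radius_b) / combined_error

def fractional_change(radius_mcmc, radius_dv):
    """Assumed convention: (MCMC - DV) / DV."""
    return (radius_mcmc - radius_dv) / radius_dv

# Hypothetical radii (Earth radii) and 1-sigma errors for one candidate
n_sigma = combined_sigma_difference(2.5, 0.4, 2.0, 0.3)
change = fractional_change(2.5, 2.0)
agrees_within_1_sigma = n_sigma <= 1.0 + 1e-9  # small tolerance for rounding
```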

Figure 3.

Top: Comparison of the DR25 PCs fitted planet radii measured by the MCMC fits and the DV supplemental fits. The 1:1 line is drawn in black. Bottom: Histogram of the difference between the MCMC fits and the DV fits for the planet candidates in different MES bins. While individual objects have different fitted values, as a group the planet radii from the two fits agree.

2.5. Stellar Catalog

Some of the derived parameters from transit fits (e.g., planetary radius and insolation flux) of the TCEs and KOIs rely critically on the accuracy of the stellar properties (e.g., radii, mass, and temperature). For all transit fits used to create this catalog we use the DR25 Q1–Q17 stellar table provided by Mathur et al. (2017), which is based on conditioning published atmospheric parameters on a grid of Dartmouth isochrones (Dotter et al. 2008). The best-available observational data for each star is used to determine the stellar parameters; e.g., asteroseismic or high-resolution spectroscopic data, when available, is used instead of broad-band photometric measurements. Typical uncertainties in this stellar catalog are ≈27% in radius, ≈17% in mass, and ≈51% in density, which is somewhat smaller than previous catalogs.

After completion of the DR25 catalog an error was discovered: the metallicities of 780 KOIs were assigned a fixed erroneous value ([Fe/H] = 0.15 dex). These targets can be identified by selecting those that have the metallicity provenance column set to “SPE90”. Since radii are fairly insensitive to metallicity and the average metallicity of Kepler stars is close to solar, the impact of this error on stellar radii is typically less than 10% and does not significantly change the conclusions in this paper. Corrected stellar properties for these stars will be provided in an upcoming erratum to Mathur et al. (2017). The KOI catalog vetting and fits rely exclusively on the original DR25 stellar catalog information. Because the stellar parameters will continue to be updated (with data from missions such as Gaia, Gaia Collaboration et al. 2016b,a) we perform our vetting and analysis independent of stellar properties where possible and provide the fitted information relative to the stellar properties in the KOI table. A notable exception is the limb darkening values; precise transit models require limb darkening coefficients that depend on the stellar temperature and gravity. However, limb-darkening coefficients are fairly insensitive to the most uncertain stellar parameters in the stellar properties catalog (e.g., surface gravity; Claret 2000).

3. THE ROBOVETTER: VETTING METHODS AND METRICS

The dispositioning of the TCEs as PC and FP is entirely automated and is performed by the Robovetter12. This code uses a variety of metrics to evaluate and disposition the TCEs.

Because the TCE population changed significantly between DR24 and DR25 (see Figure 1), the Robovetter had to be improved in order to obtain acceptable performance. Also, because we now have simulated false alarms (invTCEs and scrTCEs) and true transits (injTCEs), the Robovetter could be tuned to keep the most injTCEs and remove the most invTCEs and scrTCEs. This is a significant change from previous KOI catalogs that prioritized completeness above all else. In order to sufficiently remove the long period excess of false alarms, this Robovetter introduces new metrics that evaluate individual transits (in addition to the phase-folded transits), expanding the work that the code Marshall (Mullally et al. 2016) performed for the DR24 KOI catalog.

Because most of the Robovetter tests and metrics changed between DR24 and DR25, we fully describe all of the metrics. In this section we summarize the important aspects of the Robovetter logic and only provide a list of each test’s purpose. The details of these metrics, and more details on the Robovetter logic, can be found in Appendix A. We close this section by explaining the creation of the “disposition score”, a number which conveys the confidence in the Robovetter’s disposition.

3.1. Summary of the Robovetter

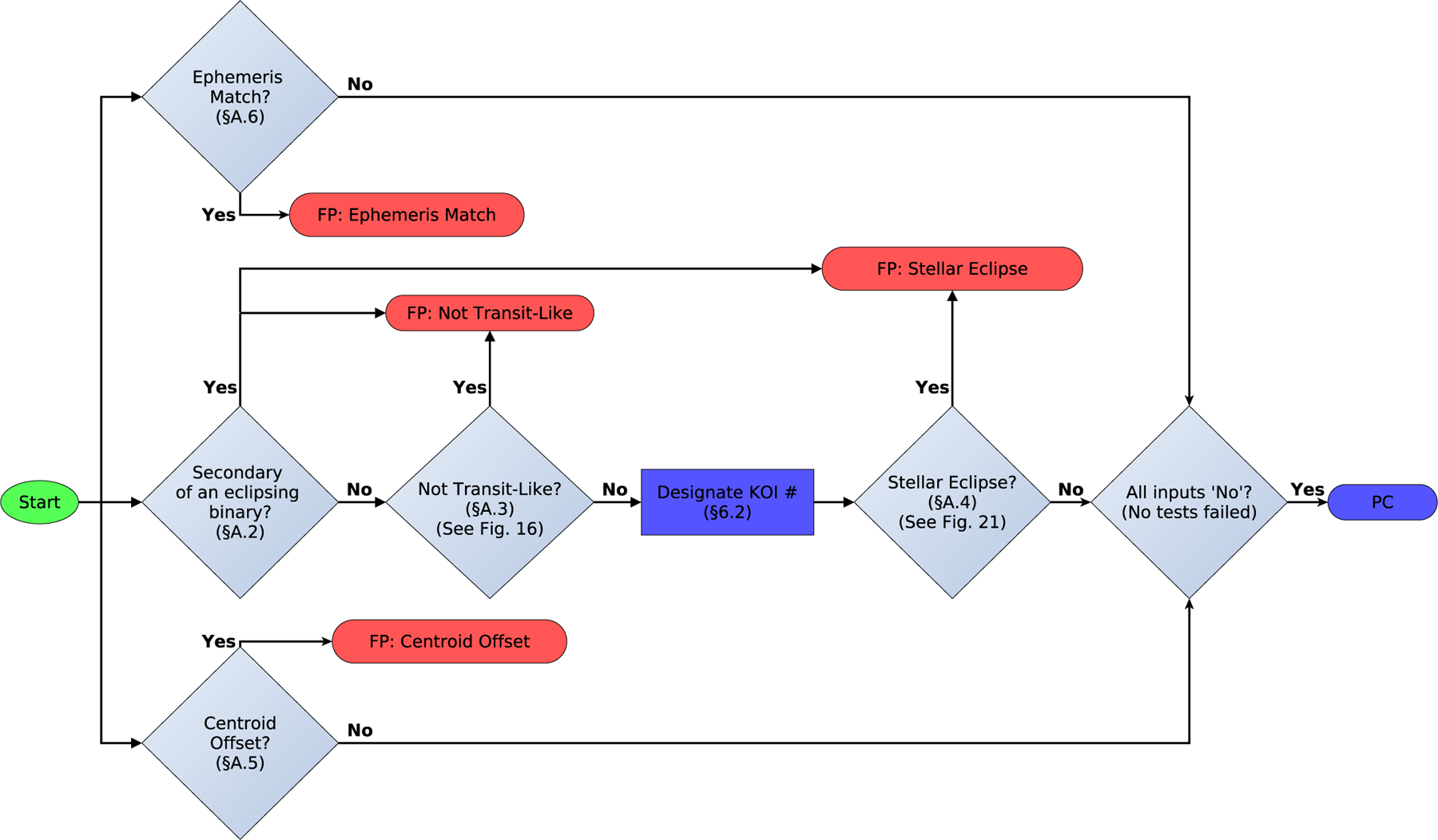

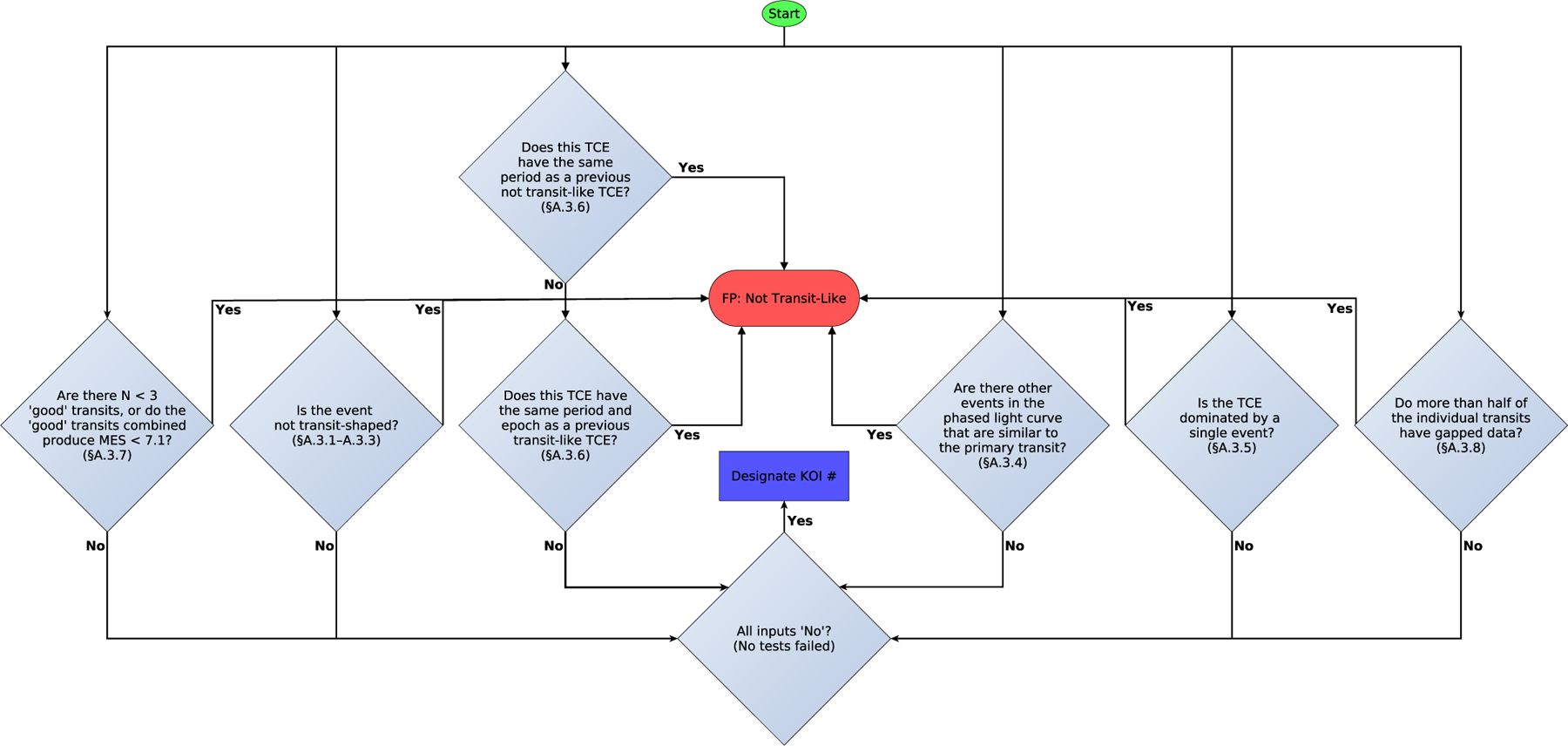

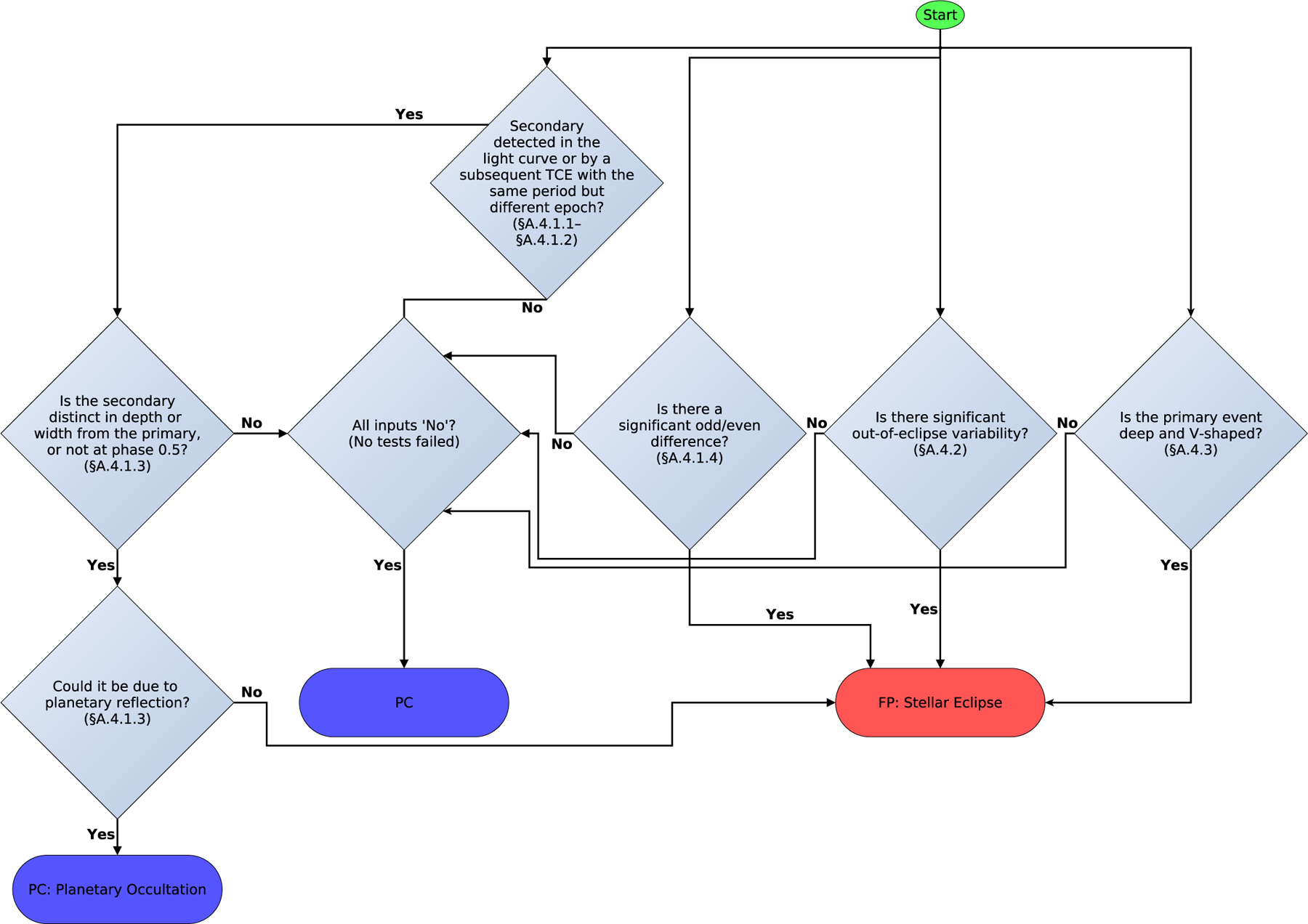

In Figure 4 we present a flowchart that outlines our robotic vetting procedure. Each TCE is subjected to a series of “yes” or “no” questions (represented by diamonds) that either disposition it into one or more of the four FP categories, or else disposition it as a PC. Behind each question is a series of more specific questions, each answered by quantitative tests.

Figure 4.

Overview flowchart of the Robovetter. Diamonds represent “yes” or “no” decisions that are made with quantitative metrics. A TCE is dispositioned as an FP if it fails any test (a “yes” decision) and is placed in one or more of the FP categories. (A TCE that is identified as being the secondary eclipse of a system is placed in both the Not Transit-Like and Stellar Eclipse categories.) If a TCE passes all tests (a “no” decision for all tests) it is dispositioned as a PC. The section numbers on each component correspond to the sections in this paper where these tests are discussed. More in-depth flowcharts are provided for the not transit-like and stellar eclipse modules in Figures 16 and 21.

First, if the TCE under investigation is not the first in the system, the Robovetter checks if the TCE corresponds to a secondary eclipse associated with an already examined TCE in that system. If not, the Robovetter then checks if the TCE is transit-like. If it is transit-like, the Robovetter then looks for the presence of a secondary eclipse. In parallel, the Robovetter looks for evidence of a centroid offset, as well as an ephemeris match to other TCEs and variable stars in the Kepler field.

Similar to previous KOI catalogs (Coughlin et al. 2016; Mullally et al. 2015; Rowe et al. 2015a), the Robovetter assigns FP TCEs to one or more of the following false positive categories:

Not Transit-Like (NT): a TCE whose light curve is not consistent with that of a transiting planet or eclipsing binary. These TCEs are usually caused by instrumental artifacts or non-eclipsing variable stars. If the Robovetter worked perfectly, all false alarms, as we have defined them in this paper, would be marked as FPs with only this Not Transit-Like flag set.

Stellar Eclipse (SS): a TCE that is observed to have a significant secondary event, v-shaped transit profile, or out-of-eclipse variability that indicates the transit-like event is very likely caused by an eclipsing binary. Self-luminous, hot Jupiters with a visible secondary eclipse are also in this category, but are still given a disposition of PC. In previous KOI catalogs this flag was known as Significant Secondary.

Centroid Offset (CO): a TCE whose signal is observed to originate from a source other than the target star, based on examination of the pixel-level data.

Ephemeris Match Indicates Contamination (EC): a TCE that has the same period and epoch as another object, and is not the true source of the signal given the relative magnitudes, locations, and signal amplitudes of the two objects. See Coughlin (2014).

The specific tests that caused the TCE to fail are specified by minor flags. These flags are described in Appendix B and are available for all FPs. Table 3 gives a summary of the specific tests run by the Robovetter when evaluating a TCE. The table lists the false positive category (NT, SS, CO or EC) of the test and also which minor flags are set by that test. Note that there are several informative minor flags, which are listed in Appendix B, but are not listed in Table 3 because they do not change the disposition of a TCE. Also, Appendix B tabulates how often each minor flag was set to help understand the frequency of each type of FP.

Table 3.

Summary of the DR25 Robovetter tests

| Test Name | Section | Major Flags | Minor Flags | Brief Description |

|---|---|---|---|---|

| Is Secondary | A.2 | NT SS | IS_SEC_TCE | The TCE is a secondary eclipse. |

| LPP Metric | A.3.1 | NT | LPP_DV LPP_ALT | The TCE is not transit-shaped. |

| SWEET NTL | A.3.2 | NT | SWEET_NTL | The TCE is sinusoidal. |

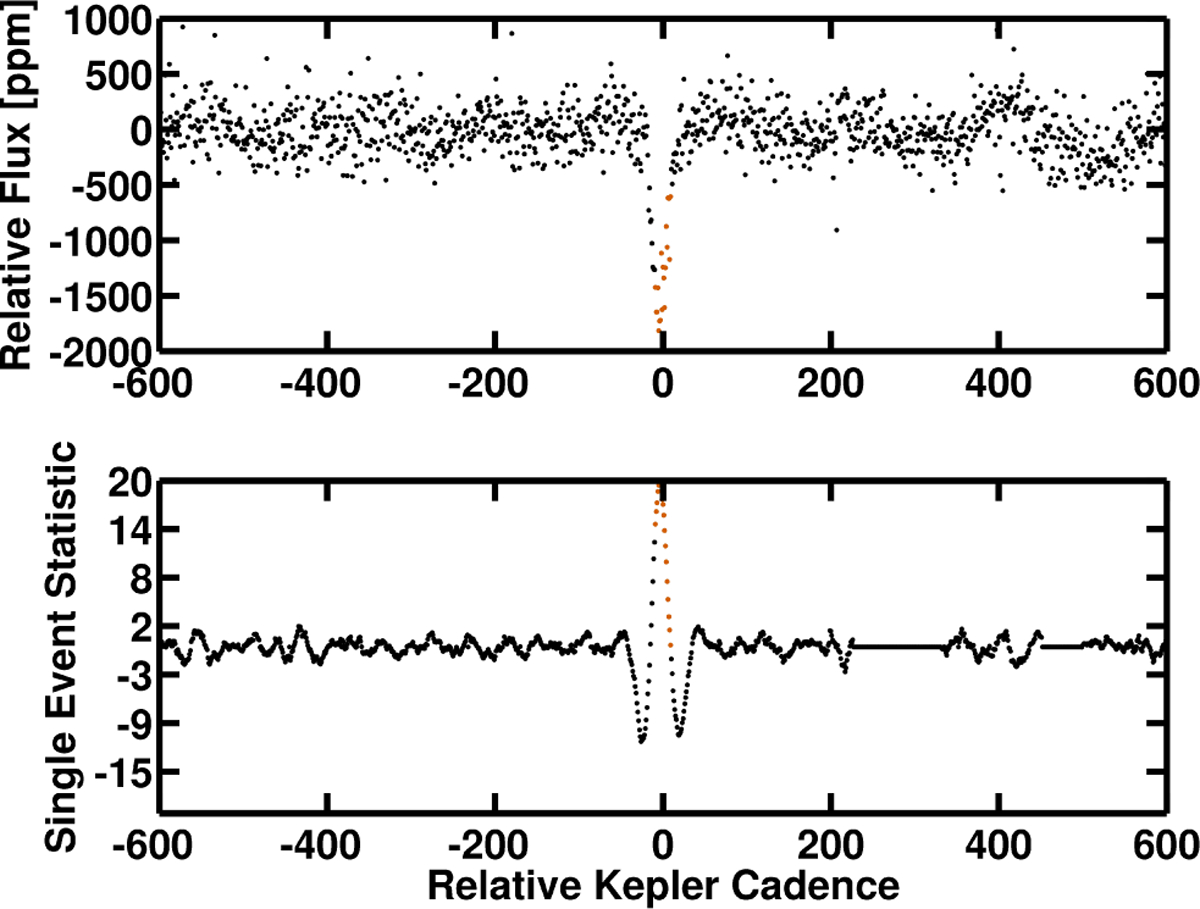

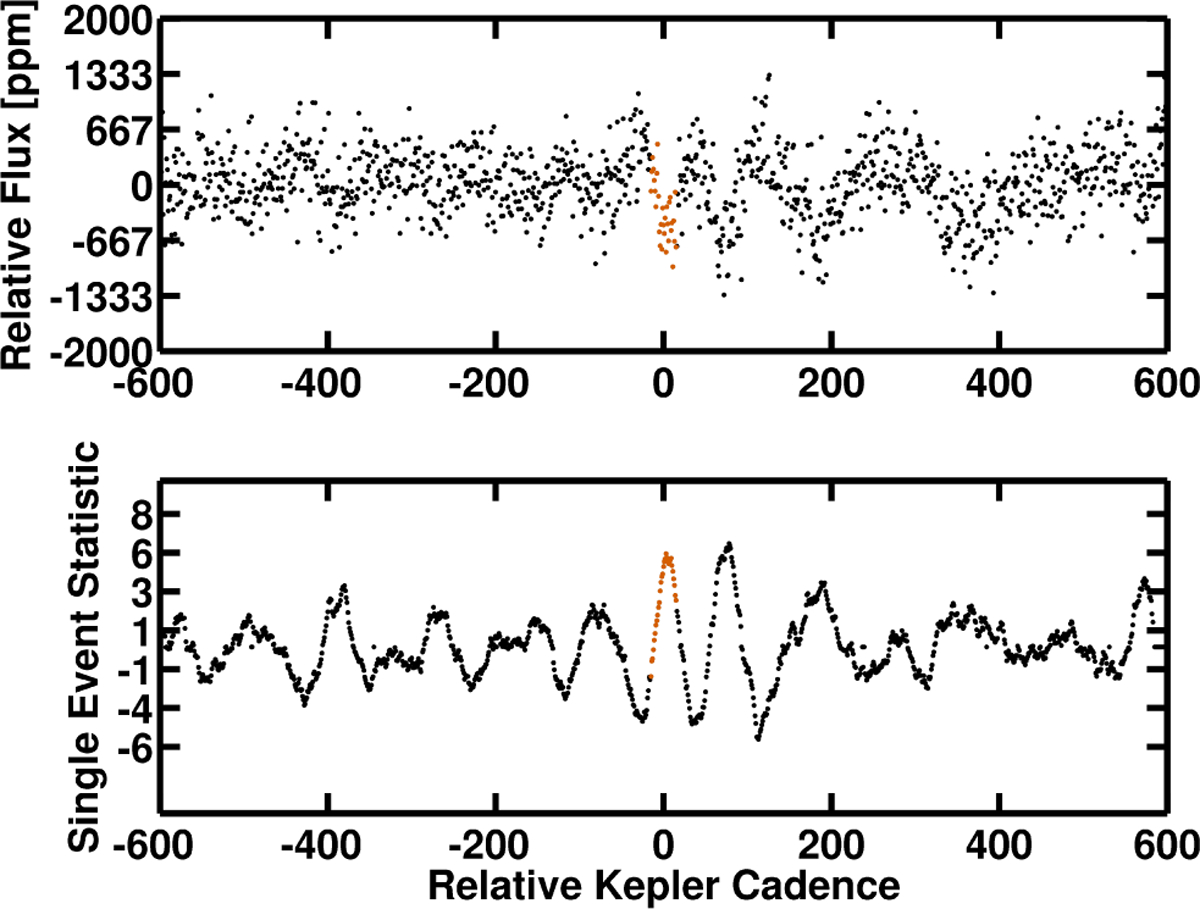

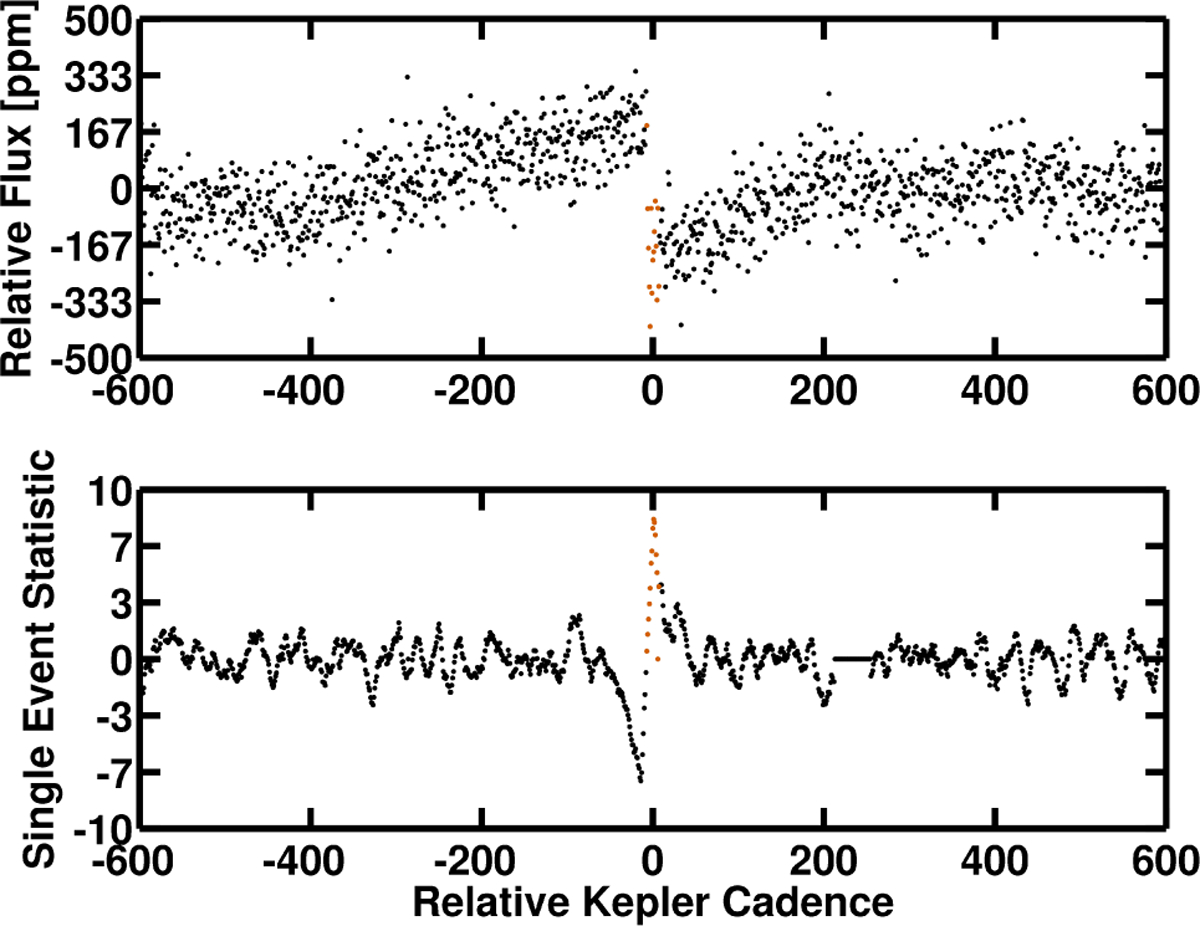

| TCE Chases | A.3.3 | NT | ALL_TRANS_CHASES | The individual TCE events are not uniquely significant. |

| MS1 | A.3.4 | NT | MOD_NONUNIQ_DV MOD_NONUNIQ_ALT |

The TCE is not significant compared to red noise. |

| MS2 | A.3.4 | NT | MOD_TER_DV MOD_TER_ALT |

Negative event in phased flux as significant as TCE. |

| MS3 | A.3.4 | NT | MOD_POS_DV MOD_POS_ALT |

Positive event in phased flux as significant as TCE. |

| Max SES to MES | A.3.5 | NT | INCONSISTENT_TRANS | The TCE is dominated by a single transit event. |

| Same Period | A.3.6 | NT | SAME_NTL_PERIOD | Has same period as a previous not transit-like TCE. |

| Tracker | A.3.7.6 | … | INDIV_TRANS_TRACKER | No match between fitted and discovery transit time. |

| Gapped Transits | A.3.8 | NT | TRANS_GAPPED | The fraction of transits identified as bad is large. |

| MS Secondary | A.4.1.2 | SS | MOD_SEC_DV MOD_SEC_ALT |

A significant secondary event is detected. |

| Secondary TCE | A.4.1.1 | SS | HAS_SEC_TCE | A subsequent TCE on this star is the secondary. |

| Odd Even | A.4.1.4 | SS | DEPTH_ODDEVEN_DV DEPTH_ODDEVEN_ALT MOD_ODDEVEN_DV MOD_ODDEVEN_ALT |

The depths of odd and even transits are different. |

| SWEET EB | A.4.2 | SS | SWEET_EB | Out-of-phase tidal deformation is detected. |

| V Shape Metric | A.4.3 | SS | DEEP_V_SHAPE | The transit is deep and v-shaped. |

| Planet OccultationPC | A.4.1.3 | SS | PLANET_OCCULT_DV PLANET_OCCULT_ALT |

Significant secondary could be planet occupation. |

| Planet Half PeriodPC | A.4.1.3 | … | PLANET_PERIOD_IS_HALF_DV PLANET_PERIOD_IS_HALF_ALT |

Planet scenario possible at half the DV period. |

| Resolved Offset | A.5.1 | CO | CENT_RESOLVED_OFFSET | The transit occurs on a spatially resolved target. |

| Unresolved Offset | A.5.1 | CO | CENT_UNRESOLVED_OFFSET | A shift in the centroid position occurs during transit. |

| Ghost Diagnostic | A.5.2 | CO | HALO_GHOST | The transit strength in the halo pixels is too large. |

| Ephemeris Match | A.6 | EC | EPHEM_MATCH | The ephemeris matches that of another source. |

Note—More details about all of these tests and how they are used by the Robovetter can be found in the sections listed in the second column.

Note (PC): Tests marked with PC (Planet Occultation and Planet Half Period) override previous tests and will cause the TCE to become a planet candidate.

New to this Robovetter are several tests that look at individual transits. The tests are named after the code that calculates the relevant metric and are called: Rubble, Marshall, Chases, Skye, Zuma, and Tracker. Each metric only identifies which transits can be considered “bad”, or not sufficiently transit-like. The Robovetter only fails the TCE if the number of remaining good transits is less than three, or if the recalculated MES, using only the good transits, drops below 7.1.
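The decision rule for the individual-transit tests can be sketched as follows. This is an illustration under stated assumptions, not the Robovetter code: it assumes a transit is "good" only if no metric flags it, and it takes the MES recalculation as a caller-supplied function because that computation is not specified in this section. The toy `toy_mes` function (equal-strength transits adding in quadrature) is a hypothetical stand-in.

```python
import math

MES_THRESHOLD = 7.1      # from the text
MIN_GOOD_TRANSITS = 3    # from the text

def good_transit_mask(bad_flags_by_metric):
    """A transit counts as good only if no metric flags it as bad (assumed rule).

    bad_flags_by_metric: dict mapping metric name to a list of booleans,
    one per transit, True meaning 'bad'.
    """
    per_transit = zip(*bad_flags_by_metric.values())
    return [not any(flags) for flags in per_transit]

def fails_individual_transit_check(bad_flags_by_metric, recompute_mes):
    """Fail when fewer than three good transits remain, or when the MES
    recomputed from the good transits drops below the threshold."""
    good = good_transit_mask(bad_flags_by_metric)
    if sum(good) < MIN_GOOD_TRANSITS:
        return True
    return recompute_mes(good) < MES_THRESHOLD

def toy_mes(good):
    """Hypothetical stand-in: each good transit contributes a strength of 4.0."""
    return 4.0 * math.sqrt(sum(good))

flags = {
    "Rubble": [False, False, True, False, False],
    "Skye": [False, True, False, False, False],
}
clean = {"Rubble": [False] * 5, "Skye": [False] * 5}

fails_flagged = fails_individual_transit_check(flags, toy_mes)
fails_clean = fails_individual_transit_check(clean, toy_mes)
```

In the flagged case three good transits remain, so the count test passes, but the toy MES of about 6.9 falls below 7.1 and the TCE fails.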

Another noteworthy update to the Robovetter in DR25 is the introduction of the v-shape metric, originally introduced in Batalha et al. (2013). The intent is to remove likely eclipsing binaries which do not show significant secondary eclipses by looking at the shape and depth of the transit (see §A.4.3).

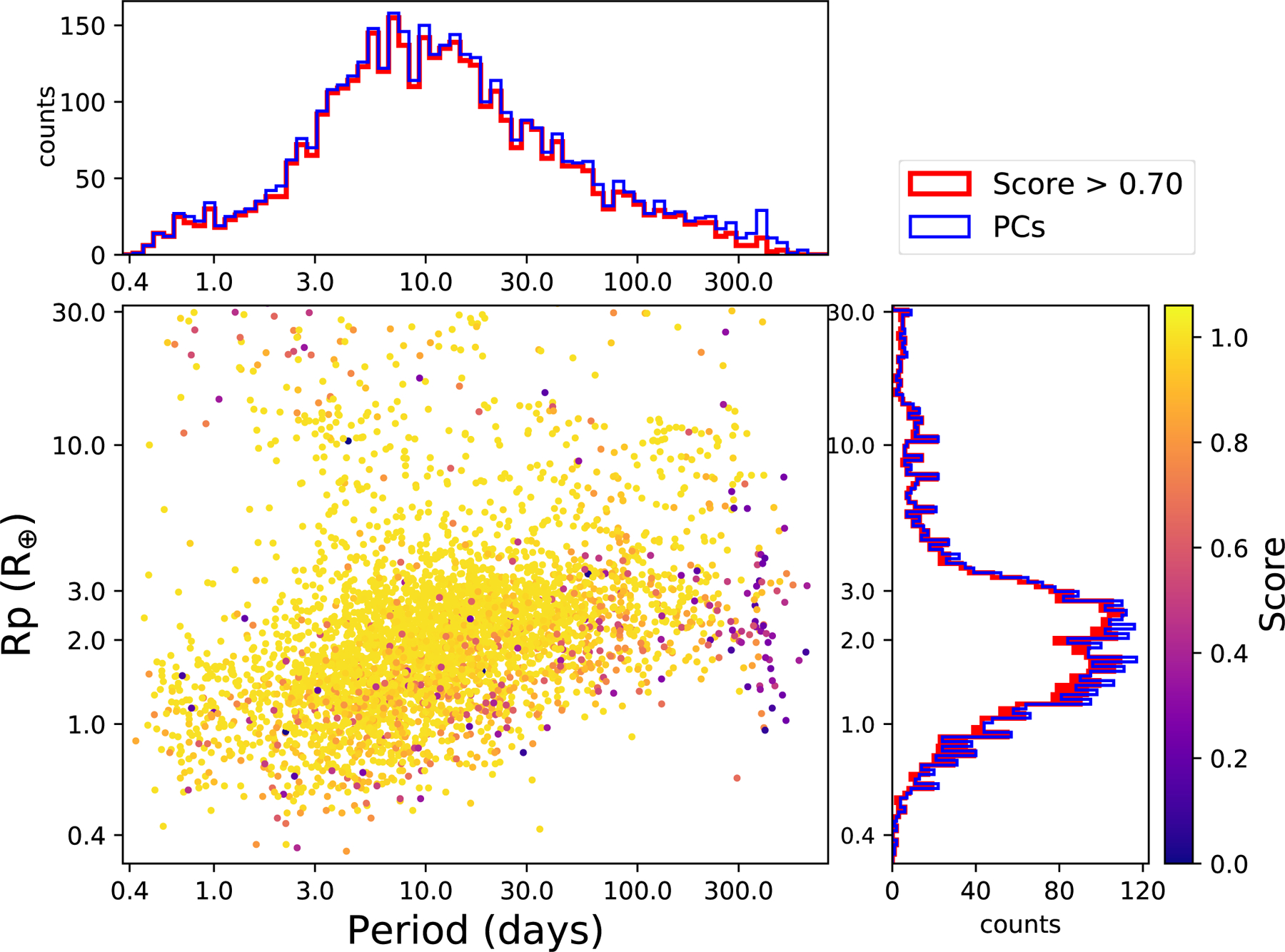

3.2. Disposition Scores

We introduce a new feature to this catalog called the Disposition Score. Essentially the disposition score is a value between 0 and 1 that indicates the confidence in a disposition provided by the Robovetter. A higher value indicates more confidence that a TCE is a PC, regardless of the disposition it was given. This feature allows one to select the highest quality PCs by ranking KOIs via the disposition score, both for selecting samples for occurrence rate calculations and for prioritizing individual objects for follow-up. We stress that the disposition score does not map directly to a probability that the signal is a planet. However, in §7.3.4 we discuss how the disposition score can be used to adjust the reliability of a sample.

The disposition score was calculated by wrapping the Robovetter in a Monte Carlo routine. In each Monte Carlo iteration, for each TCE, new values are chosen for most of the Robovetter input metrics by drawing from an asymmetric Gaussian distribution 13 centered on the nominal value. The Robovetter then dispositions each TCE given the new values for its metrics. The disposition score is simply the fraction of Monte Carlo iterations that result in a disposition of PC. (We used 10,000 iterations for the results in this catalog.) For example, if a TCE that is initially dispositioned as a PC has several metrics that are just barely on the passing side of their Robovetter thresholds, in many iterations at least one will be perturbed across the threshold. As a result, many of the iterations will produce a false positive and the TCE will be dispositioned as a PC with a low score. Similarly, if a TCE only fails due to a single metric that was barely on the failing side of a threshold, the score may be near 0.5, indicating that it was deemed a PC in half of the iterations. Since a TCE is deemed a FP even if only one metric fails, nearly all FPs have scores less than 0.5, with most very close to 0.0. PCs have a wider distribution of scores from 0.0 to 1.0 depending on how many of their metrics fall near to the various Robovetter thresholds.
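The Monte Carlo procedure can be sketched with a toy vetter. Everything below is a simplified illustration: the metric names, thresholds, and widths are hypothetical, the toy vetter passes a TCE only when every metric is at or below its threshold, and the asymmetric Gaussian is drawn by scaling a standard normal deviate with a different width on each side of the nominal value, which is one possible reading of the description above.

```python
import random

def draw_asymmetric_gaussian(rng, center, sigma_minus, sigma_plus):
    """Draw around center with different widths below and above it (assumed form)."""
    z = rng.gauss(0.0, 1.0)
    return center + z * (sigma_plus if z >= 0 else sigma_minus)

def toy_robovetter(metrics, thresholds):
    """Toy rule: PC only if every metric is at or below its threshold."""
    return all(metrics[name] <= thresholds[name] for name in thresholds)

def disposition_score(nominal, sigmas, thresholds, n_iter=10000, seed=0):
    """Fraction of Monte Carlo iterations in which the toy vetter returns PC."""
    rng = random.Random(seed)
    n_pc = 0
    for _ in range(n_iter):
        perturbed = {
            name: draw_asymmetric_gaussian(rng, nominal[name], *sigmas[name])
            for name in nominal
        }
        n_pc += toy_robovetter(perturbed, thresholds)
    return n_pc / n_iter

# Hypothetical metrics: (sigma_minus, sigma_plus) per metric
thresholds = {"metric_a": 1.0, "metric_b": 1.0}
sigmas = {"metric_a": (0.1, 0.1), "metric_b": (0.01, 0.01)}

secure_pc = disposition_score({"metric_a": 0.0, "metric_b": 0.0}, sigmas, thresholds)
barely_fp = disposition_score({"metric_a": 1.001, "metric_b": 0.0}, sigmas, thresholds)
clear_fp = disposition_score({"metric_a": 5.0, "metric_b": 0.0}, sigmas, thresholds)
```

The three toy cases reproduce the qualitative behavior described above: a secure PC scores 1, a TCE that barely fails a single metric scores near 0.5, and a clear FP scores 0.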

To compute the asymmetric Gaussian distribution for each metric, we examined the metric distributions for the injected on-target planet population on FGK dwarf targets. In a 20 by 20 grid in linear period space (ranging from 0.5 to 500 d) and logarithmic MES space (ranging from 7.1 to 100), we calculated the median absolute deviation (MAD) for those values greater than the median value and separately for those values less than the median value. We chose to use MAD because it is robust to outliers. MES and period were chosen as they are fundamental properties of a TCE that well characterize each metric’s variation. The MAD values were then multiplied by a conversion factor of 1.4826 to put the variability on the same scale as a Gaussian standard deviation (Hampel 1974; Ruppert 2010). A two-dimensional power-law was then fitted to the 20 by 20 grid of standard deviation values, separately for the greater-than-median and less-than-median directions. With this analytical approximation for a given metric, an asymmetric Gaussian distribution can be generated for each metric for any TCE given its MES and period.
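The one-sided MAD estimate for a single grid cell can be sketched in Python as below. It assumes the deviations on each side are measured from the overall median of the cell, which is one reading of the description; the power-law fit across the 20 by 20 grid is not shown, and the toy metric values are hypothetical.

```python
from statistics import median

MAD_TO_SIGMA = 1.4826  # conversion factor quoted in the text

def asymmetric_sigmas(values):
    """Return (sigma_minus, sigma_plus) from one-sided MADs about the median.

    Deviations are taken relative to the overall median (assumed convention).
    Requires at least one value on each side of the median.
    """
    center = median(values)
    below = [center - v for v in values if v < center]
    above = [v - center for v in values if v > center]
    return MAD_TO_SIGMA * median(below), MAD_TO_SIGMA * median(above)

# Hypothetical metric values in one period-MES cell, with a long upper tail
toy_values = [1, 2, 3, 4, 5, 7, 9, 13, 21]
sigma_minus, sigma_plus = asymmetric_sigmas(toy_values)
```

For a Gaussian, the median of the one-sided absolute deviations is about 0.6745 times the standard deviation, which is why the same 1.4826 factor applies on each side.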

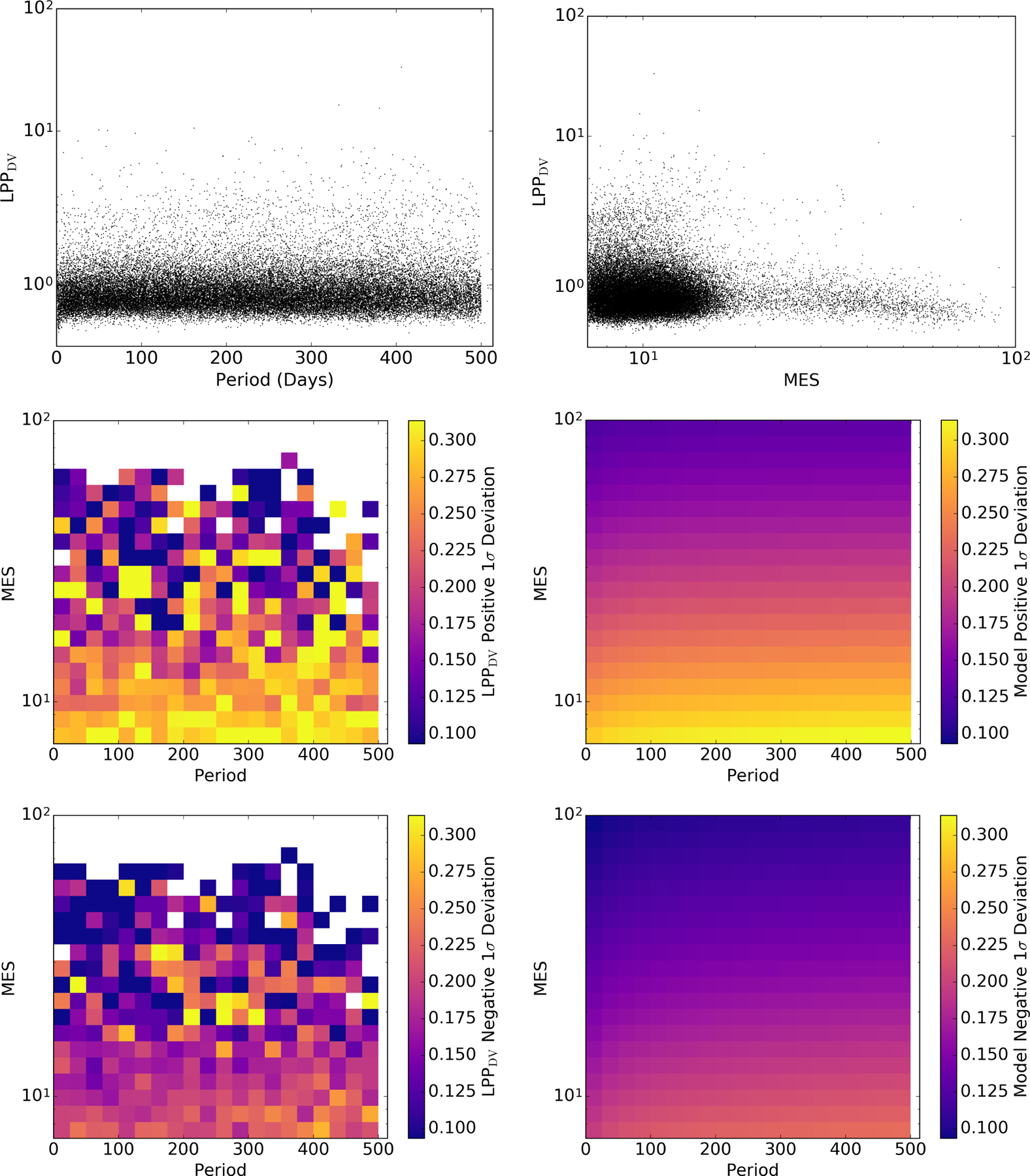

An example is shown in Figure 5 for the LPP metric (Locality Preserving Projections, see §A.3.1) using the DV detrending. The top-left plot shows the LPP values of all on-target injected planets on FGK dwarf targets as a function of period, and the top-right shows them as a function of MES. The middle-left plot shows the measured positive 1σ deviation (in the same units as the LPP metric) as a function of MES and period, and the middle-right plot shows the resulting best-fit model. The bottom plots show the same thing but for the negative 1σ deviation. As can be seen, the scatter in the LPP metric has a weak period dependence, but a strong MES dependence, due to the fact it is easier to measure the overall shape of the light curve (LPP’s goal) with higher MES (signal-to-noise).

Figure 5.

The top-left plot shows the $\mathrm{LPP_{DV}}$ values of all on-target injected planets on FGK dwarf targets as a function of period, and the top-right shows them as a function of MES. The middle-left plot shows the measured positive 1σ deviation (in the same units as $\mathrm{LPP_{DV}}$) as a function of MES and period, and the middle-right plot shows the resulting best-fit model. The bottom plots show the same thing, but for the negative 1σ deviation (again in the same units as $\mathrm{LPP_{DV}}$). These resulting model distributions are used when computing the Robovetter disposition score.

Most, but not all, of the Robovetter metrics were amenable to this approach. Specifically, the list of metrics that were perturbed in the manner above to generate the score values were: LPP (both DV and ALT), all the Model-shift metrics (MS1, MS2, MS3, and MS Secondary, both DV and ALT), TCE Chases, max-SES-to-MES, the two odd/even metrics (both DV and ALT), Ghost Diagnostic, and the recomputed MES using only good transits left after the individual transit metrics.

4. CALCULATING COMPLETENESS AND RELIABILITY

We use the injTCE, scrTCE, and invTCE data sets to determine the performance of the Robovetter and to measure the completeness and the reliability of the catalog. As a reminder, the reliability we are attempting to measure is only the reliability of the catalog against false alarms and does not address the astrophysical reliability (see §8). As discussed in §2.1, the long-period obsTCEs are dominated by false alarms and so this measurement is crucial to understand the reliability of some of the most interesting candidates in our catalog.

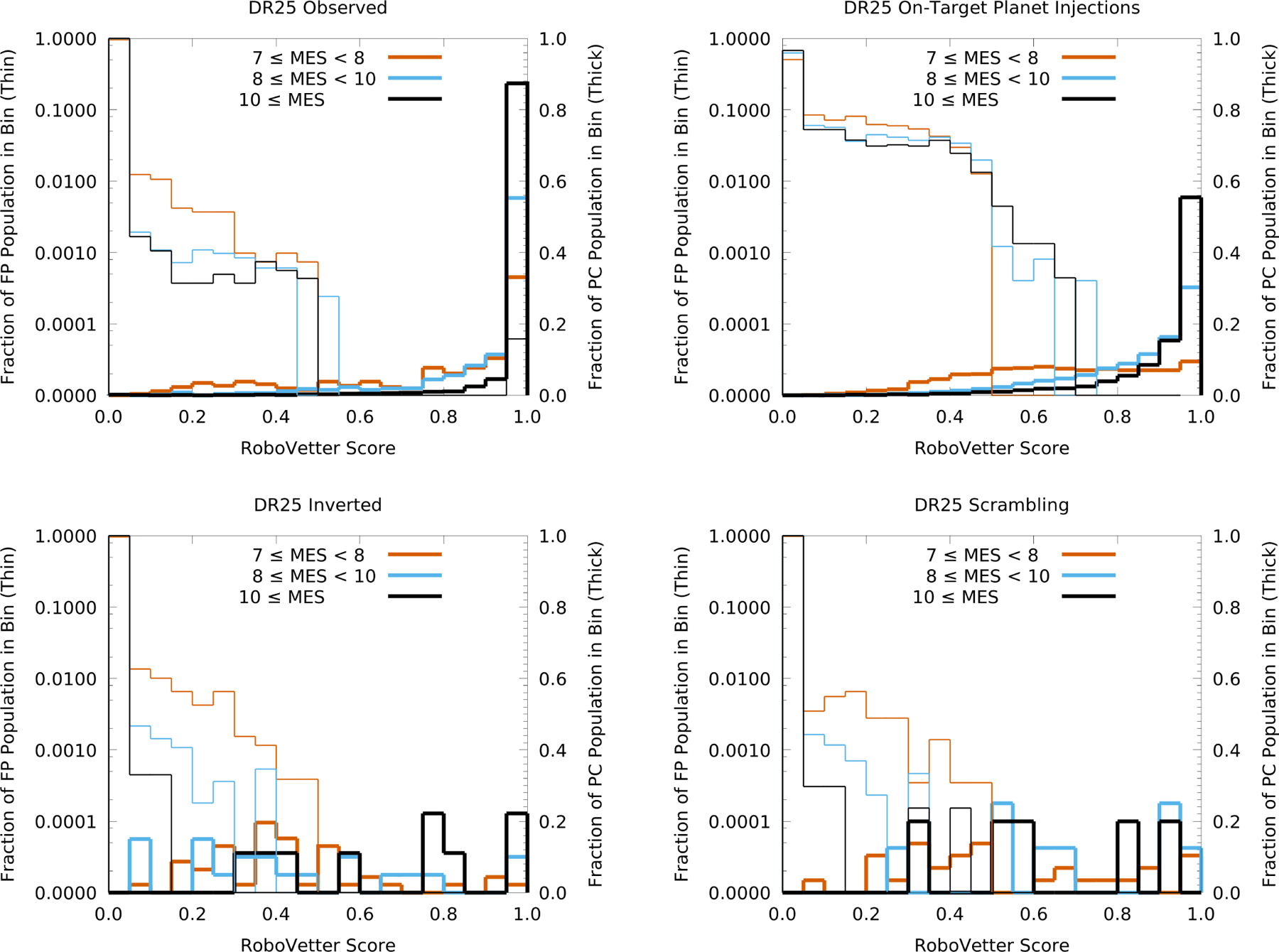

Robovetter completeness, C, is the fraction of injected transits detected by the Kepler Pipeline that are passed by the Robovetter as PCs. As long as the injTCEs are representative of the observed PCs, completeness tells us what fraction of the true planets are missing from the final catalog. Completeness is calculated by dividing the number of on-target injTCEs that are dispositioned as PCs ($N_{\mathrm{PC_{inj}}}$) by the total number of injTCEs ($N_{\mathrm{inj}}$).

$$C \approx \frac{N_{\mathrm{PC_{inj}}}}{N_{\mathrm{inj}}} \tag{1}$$

If the parameter space under consideration becomes too large and there are gradients in the actual completeness, differences between the injTCE and the obsTCE populations will prevent the completeness measured with Equation 1 from matching the actual Robovetter completeness. For example, because there are more long-period injTCEs than short-period ones, which is not representative of the observed PCs, the true fraction of candidates correctly dispositioned by the Robovetter is not accurately represented by binning over all periods. With this caveat in mind, we use C in this paper to indicate the value we can measure, as shown in Equation 1.
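A small numerical example makes the caveat concrete. The counts and fractions below are hypothetical: the injections are dominated by long periods, where the completeness is lower, while the observed PCs are assumed to be mostly short-period. Pooling all injections in Equation 1 then understates the completeness that applies to the observed population.

```python
def pooled_completeness(bins):
    """Equation 1 applied to all bins at once: PCs over injTCEs, summed."""
    n_pc = sum(b["n_inj_pc"] for b in bins)
    n_inj = sum(b["n_inj"] for b in bins)
    return n_pc / n_inj

def population_weighted_completeness(bins):
    """Per-bin completeness weighted by the assumed share of observed PCs."""
    return sum(b["obs_fraction"] * b["n_inj_pc"] / b["n_inj"] for b in bins)

# Hypothetical bins: injections are mostly long-period, observed PCs mostly short
toy_bins = [
    {"name": "short period", "n_inj": 100, "n_inj_pc": 90, "obs_fraction": 0.8},
    {"name": "long period", "n_inj": 900, "n_inj_pc": 540, "obs_fraction": 0.2},
]

c_pooled = pooled_completeness(toy_bins)
c_weighted = population_weighted_completeness(toy_bins)
```

Here the pooled value is 630/1000 = 0.63, while weighting the per-bin values of 0.9 and 0.6 by the assumed observed shares gives 0.84.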

The candidate catalog reliability, R, is defined as the ratio of the number of PCs which are truly exoplanets () to the total number of PCs () from the obsTCE data set.

$$R = \frac{T_{\mathrm{PC,obs}}}{N_{\mathrm{PC,obs}}} \tag{2}$$

Calculating the reliability for a portion of the candidate catalog is not straightforward because we do not know which PCs are the true transiting exoplanets and cannot directly determine $T_{\mathrm{PC,obs}}$. Instead, we use the simulated false alarm data sets to understand how often false alarms sneak past the Robovetter and contaminate our final catalog.

4.1. Reliability Derivation

To assess the catalog reliability against false alarms, we will assume that the scrTCEs and invTCEs are similar (in frequency and type) to the obsTCEs. One way to calculate the reliability of the catalog from our false alarm sets is to first calculate how often the Robovetter correctly identifies false alarms as FPs, a value we call effectiveness (E). Then, given the number of FPs we identify in the obsTCE set, we determine the reliability of the catalog against the type of false alarms present in the simulated sets (invTCEs and scrTCEs). This method assumes the relative frequency of the different types of false alarms is well emulated by the simulated data sets, but does not require the total number of false alarms to be well emulated.

Robovetter effectiveness (E) is defined as the fraction of FPs correctly identified as FPs in the obsTCE data set,

$$E \equiv \frac{N_{\mathrm{FP,obs}}}{T_{\mathrm{FP,obs}}} \tag{3}$$

where $N_{\mathrm{FP,obs}}$ is the number of obsTCEs measured (i.e., dispositioned) as FPs and $T_{\mathrm{FP,obs}}$ is the number of obsTCEs that are truly FPs. Notice we are using N to indicate the measured number, and T to indicate the “True” number.

If the simulated (sim) false alarm TCEs accurately reflect the obsTCE false alarms, E can be estimated as the number of simulated false alarm TCEs dispositioned as FPs divided by the number of simulated TCEs (Nsim),

$$E \approx \frac{N_{\mathrm{FP,sim}}}{N_{\mathrm{sim}}} \tag{4}$$

For our analysis of the DR25 catalog, we primarily use the union of the invTCEs and the scrTCEs as the population of simulated false alarms when calculating E, see §7.3.

Recall that the Robovetter makes a binary decision, and TCEs are either PCs or FPs. For this derivation we do not take into consideration the reason the Robovetter calls a TCE an FP (i.e., some false alarms fail because the Robovetter indicates there is a stellar eclipse or centroid offset). For most of parameter space, an overwhelming fraction of FPs are false alarms in the obsTCE data set. Future studies will look into separating out the effectiveness for different types of FPs using the set of injected astrophysical FPs (see §2.3).

At this point we drop the obs and sim designations in subsequent equations, as the simulated false alarm quantities are all used to calculate E. The N values shown below refer entirely to the number of PCs or FPs in the obsTCE set so that $N = N_{\mathrm{PC}} + N_{\mathrm{FP}} = T_{\mathrm{PC}} + T_{\mathrm{FP}}$. We rewrite the definition for reliability (Equation 2) in terms of the number of true false alarms in obsTCE, $T_{\mathrm{FP}}$,

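$$R = \frac{T_{\mathrm{PC}}}{N_{\mathrm{PC}}} = 1 + \frac{T_{\mathrm{PC}} - N_{\mathrm{PC}}}{N_{\mathrm{PC}}} = 1 + \frac{N - T_{\mathrm{FP}} - N_{\mathrm{PC}}}{N_{\mathrm{PC}}} \tag{5}$$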

When we substitute $N_{\mathrm{FP}} = N - N_{\mathrm{PC}}$ in Equation 5 we get another useful way to think about reliability, as one minus the number of unidentified FPs relative to the number of candidates,

$$R = 1 - \frac{T_{\mathrm{FP}} - N_{\mathrm{FP}}}{N_{\mathrm{PC}}} \tag{6}$$

However, the true number of false alarms in the obsTCE data set, $T_{\mathrm{FP}}$, is not known. Using the effectiveness value (Equation 4) and combining it with our definition for effectiveness (Equation 3) we get,

$$T_{\mathrm{FP}} - N_{\mathrm{FP}} = N_{\mathrm{FP}} \left( \frac{1 - E}{E} \right) \tag{7}$$

and substituting into Equation 6 we get,

$$R = 1 - \frac{N_{\mathrm{FP}}}{N_{\mathrm{PC}}} \left( \frac{1 - E}{E} \right) \tag{8}$$

which relies on the approximation of E from Equation 4 and is thus a measure of the catalog reliability using all measurable quantities.
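As a minimal sketch of this derivation, the Python functions below evaluate Equations 4 and 8 from counts. The function names and all of the counts are hypothetical and chosen only for illustration; they are not values measured for the catalog.

```python
def effectiveness(n_fp_sim, n_sim):
    """Equation 4: fraction of simulated false alarms dispositioned as FPs."""
    if n_sim <= 0:
        raise ValueError("n_sim must be positive")
    return n_fp_sim / n_sim

def reliability(n_fp_obs, n_pc_obs, e):
    """Equation 8: R = 1 - (N_FP / N_PC) * (1 - E) / E."""
    if n_pc_obs <= 0:
        raise ValueError("n_pc_obs must be positive")
    if not 0.0 < e <= 1.0:
        raise ValueError("effectiveness must lie in (0, 1]")
    return 1.0 - (n_fp_obs / n_pc_obs) * (1.0 - e) / e

# Hypothetical counts for one region of parameter space.
e = effectiveness(n_fp_sim=990, n_sim=1000)        # E = 0.99
r = reliability(n_fp_obs=2000, n_pc_obs=50, e=e)   # roughly 0.60

# A slightly lower effectiveness with the same observed counts drives
# the estimate negative, because N_FP is much larger than N_PC.
r_low = reliability(n_fp_obs=2000, n_pc_obs=50, e=0.97)
```

The example shows why regions where FPs greatly outnumber PCs are so sensitive to E: a change of two percentage points in effectiveness moves the estimated reliability from about 0.6 to below zero.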

This method to calculate reliability depends sensitively on the measured effectiveness, which relies on how well the set of known false alarms matches the false alarms in the obsTCE data set. For example, a negative reliability can result if the measured effectiveness is lower than the true value. In such cases, the calculation implies that there should be more PCs than exist, i.e., the inferred number of unidentified false alarms is larger than the number of PCs available to draw from.

4.2. The Similarity of the Simulated False Alarms

In order to use the scrTCE and invTCE sets to determine the reliability of our catalog we must assume that the properties of these simulated false alarms are similar to those of the false alarms in the obsTCE set. Specifically, these simulated data should mimic the observed not-transit-like FPs, e.g., instrumental noise and stellar spots. For instance, our assumptions break down if all of the simulated false alarms were long-duration rolling-band FPs, but only a small fraction of the observed FPs were caused by this mechanism. Stated another way, the method we use to measure reliability hinges on the assumption that, for a given region of parameter space, the fraction of TCEs caused by a particular type of FP is the same in the simulated and observed data sets. This is the reason we removed the TCEs caused by KOIs and eclipsing binaries in the simulated data sets (see §2.3.3). Inverted eclipsing binaries and transits are not the type of FP found in the obsTCE data set. Since the Robovetter is very good at eliminating inverted transits, if they were included, we would have an inflated value for the effectiveness, and thus incorrectly measure a higher reliability.

Figure 2 demonstrates that the number of TCEs from inversion and scrambling individually is smaller than the number of obsTCEs. At periods less than ≈100 days this difference is dominated by the lack of planets and eclipsing binaries in the simulated false alarm data sets. At longer periods, where the TCEs appear to be dominated by false alarms, this difference is dominated by the cleaning (§2.3.3). Effectively, we search a significantly smaller number of stars for instances of false alarms. The deficit is also caused by the fact that not all types of false alarms are accounted for in these simulations. For instance, the invTCE set will not reproduce false alarms caused by sudden dropouts in pixel sensitivity caused by cosmic rays (i.e., SPSDs). The scrTCE set will not reproduce the image artifacts from rolling band because the artifacts are not as likely to line up at exactly one Kepler-year. However, despite these complications, the period distribution of false alarms in these simulated data sets broadly resembles that of the obsTCE FP population once the two simulated data sets are combined. And since reliability is calculated using the fraction of false alarms that are identified (effectiveness), the overabundance that results from combining the sets is not a problem.

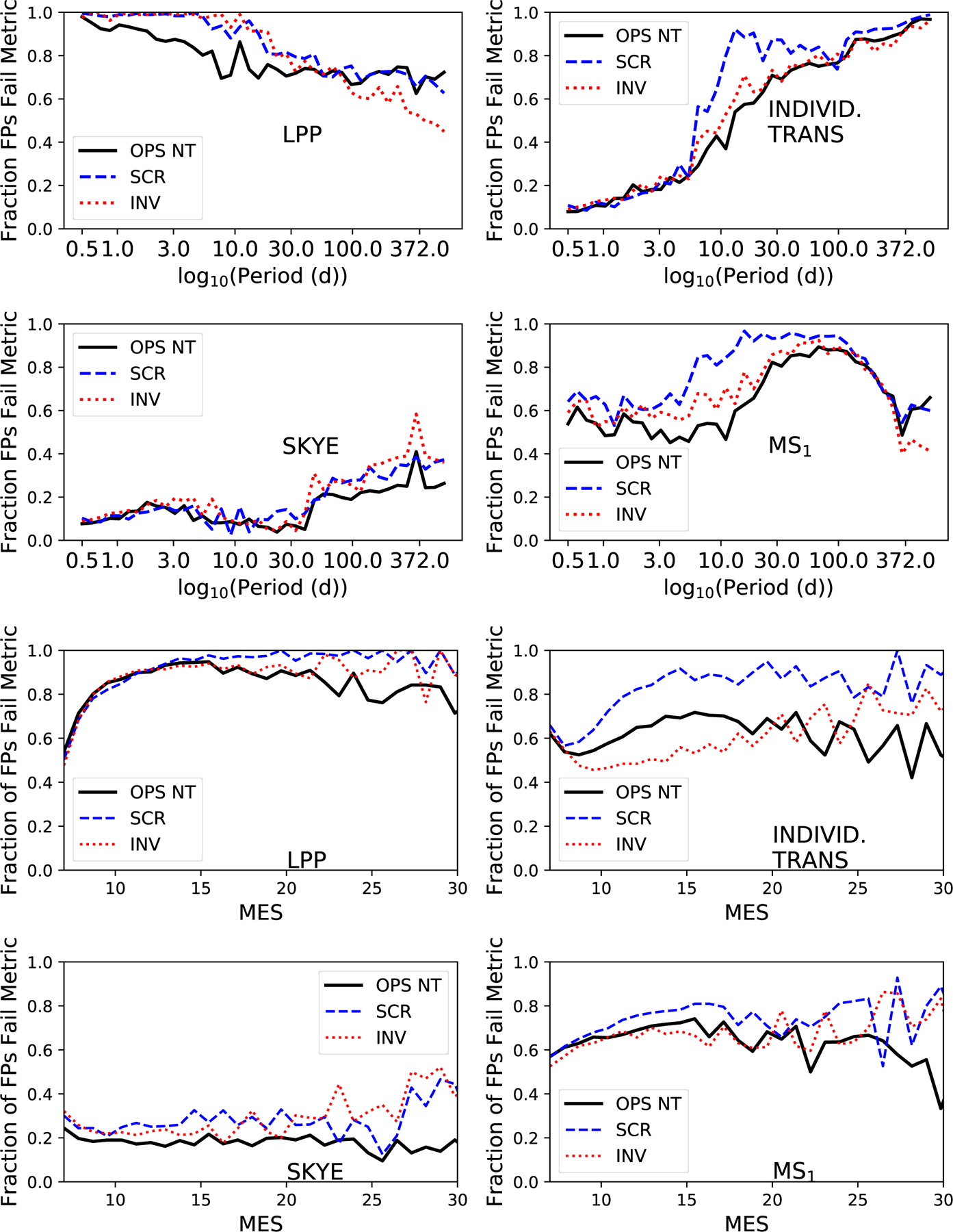

Another way to judge how well the simulated data sets match the type of FP in the obsTCEs is to look at some of the Robovetter metrics. Each metric measures some aspect of the TCEs. For example, the LPP Metric measures whether the folded and binned light curves are transit shaped, and Skye measures whether the individual transits are likely due to rolling band noise. If the simulated TCEs can be used to measure reliability in the way described above, then the fraction of false alarms in any period bin failed by any particular metric should match between the observed and simulated sets. In Figure 6 we show that this is broadly true for both invTCEs and scrTCEs, especially for periods longer than 100 days or MES less than 15. Keep in mind that more than one metric can fail any particular TCE, so the sum of the fractions across all metrics will be greater than one. The deviations between TCE sets are as large as 40% for certain period ranges, and such differences may cause systematic errors in our measurements of reliability. But, since the types of FPs overlap, it is not clear how to propagate this information into a formal systematic error bar on the reliability.
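The comparison described here amounts to computing, per period bin, the fraction of FPs that fail a given metric, and then differencing the observed and simulated fractions. The sketch below shows one way to do this in Python; the function name, the bin edges, and the toy TCE lists are hypothetical and are not drawn from the catalog.

```python
from bisect import bisect_right

def fail_fractions(periods, failed_metrics, metric, edges):
    """Per period bin: (# FPs failing `metric`) / (# FPs in the bin).

    Bins are half-open, [edge_i, edge_{i+1}); empty bins give None.
    """
    n_bins = len(edges) - 1
    total = [0] * n_bins
    failed = [0] * n_bins
    for period, fails in zip(periods, failed_metrics):
        i = bisect_right(edges, period) - 1
        if 0 <= i < n_bins:
            total[i] += 1
            if metric in fails:
                failed[i] += 1
    return [f / t if t else None for f, t in zip(failed, total)]

edges = [1.0, 100.0, 1000.0]  # period bins in days (hypothetical)

# Toy FP lists: each TCE carries the set of metrics it failed.
obs_periods = [10.0, 50.0, 200.0, 300.0]
obs_failed = [{"LPP"}, {"LPP", "MS1"}, {"Skye"}, {"LPP", "Skye"}]
sim_periods = [20.0, 150.0, 250.0, 400.0]
sim_failed = [{"LPP"}, {"LPP"}, {"Skye"}, {"LPP"}]

obs_lpp = fail_fractions(obs_periods, obs_failed, "LPP", edges)
sim_lpp = fail_fractions(sim_periods, sim_failed, "LPP", edges)
max_dev = max(abs(o - s) for o, s in zip(obs_lpp, sim_lpp)
              if o is not None and s is not None)
```

Because a TCE can fail several metrics at once, the fractions for different metrics are computed independently and need not sum to one.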

Figure 6.

The fraction of not-transit-like FPs failed by a particular Robovetter metric plotted against the logarithm of the period (top two rows) or linear MES (bottom two rows). The fraction is plotted for the obsTCE set in black, the scrTCE set in blue, and the invTCE set in red. The metric under consideration is listed on each plot. For each metric we include fails from either detrending (DV or ALT). Upper left: LPP metric failures. Upper right: TCEs that fail after removing a single transit due to any of the individual transit metrics. Lower left: TCEs that fail after removing a single transit due to the Skye metric. Lower right: Model Shift 1 metric failures. Notice that there is a basic similarity between the trends seen in the three data sets, especially at long periods and low MES.

For our discussion of the reliability estimate, we are cautiously satisfied with this basic agreement. Given that neither of the two sets performs better across all regions of parameter space, and since having more simulated false alarms improves the precision on effectiveness, we have calculated the catalog reliability using a union of the scrTCE and invTCE sets after they have been cleaned as described in §2.3.3.

5. TUNING THE ROBOVETTER FOR HIGH COMPLETENESS AND RELIABILITY

As described in the previous section, the Robovetter makes decisions regarding which TCEs are FPs and PCs based on a collection of metrics and thresholds. For each metric we apply a threshold, and if the TCE’s value for that metric lies above (or below, depending on the metric) the threshold then the TCE is called an FP. Ideally the Robovetter thresholds would be tuned so that no true PCs are lost and all of the known FPs are removed; however, this is not a realistic goal. Instead we sacrifice a few injTCEs in order to improve our measured reliability.

How to set these thresholds is not obvious and the best value can vary depending on which population of planets you are studying. We used automated methods to search for those thresholds that passed the most injTCEs and failed the most invTCEs and scrTCEs. However, we only used the thresholds found from this automated optimization to inform how to choose the final set of thresholds. This is because the simulated TCEs do not entirely emulate the observed data and many of the metrics have a period and MES dependence. For example, the injections were heavily weighted towards long periods and low MES so our automated method sacrificed many of the short period candidates in order to keep more of the long period injTCEs. Others may wish to explore similar methods to optimize the thresholds and so we explain our efforts to do this below.

5.1. Setting Metric Thresholds Through Optimization

For the first step in Robovetter tuning, we perform an optimization that finds the metric thresholds that maximize the fraction of TCEs from the injTCE set that are classified as PCs (i.e., completeness) and minimize the fraction of TCEs from the scrTCE and invTCE sets that are classified as PCs (i.e., ineffectiveness, or 1 − E). The optimization varies the thresholds of the subset of Robovetter metrics described below.

This optimization is performed jointly across a subset of the metrics described in §3. The set of metrics chosen for the joint optimization, called “optimized metrics” are: LPP (§A.3.1), the Model-shift uniqueness test metrics (MS1, MS2, and MS3; §A.3.4), Max SES to MES (§A.3.5), and TCE Chases (§A.3.3). Both the DV and ALT versions of these metrics, when applicable, were used in the optimization.

Metrics not used in the joint optimization are incorporated by classifying TCEs as PCs or FPs using fixed a priori thresholds prior to optimizing the other metrics. After optimization, a TCE is classified as a PC only if it passes both the non-optimized metrics and the optimized metrics. Prior to optimization the fixed thresholds for these non-optimized metrics pass about 80% of the injTCE set, so the final optimized set can have at most 80% completeness. Note, the non-optimized metric thresholds for the DR25 catalog changed after doing these optimizations. The overall effect was that the final measured completeness of the catalog increased (see §7), especially for the low MES TCEs. If the optimization were redone with these new thresholds, then it would find that the non-optimized metrics pass 90% of the injTCEs. We decided this change was not sufficient reason to rerun the optimization since it was only being used to inform and not set the final thresholds.

Optimization is performed by varying the selected thresholds, determining which TCEs are classified as PCs by both the optimized and non-optimized metrics using the new optimized thresholds, and computing C and 1 − E. Our optimization seeks thresholds that minimize the objective function $\sqrt{(1 - E)^2 + (C_0 - C)^2}$, where $C_0$ is the target completeness, so the optimization tries to get as close as possible to 1 − E = 0 and C = $C_0$. We varied $C_0$ in an effort to reduce the ineffectiveness. The thresholds are varied from random starting seed values, using the Nelder-Mead simplex algorithm via the MATLAB fminsearch function. This MATLAB function varies the thresholds until the objective function is minimized. There are many local minima, so the optimal thresholds depend sensitively on the random starting threshold values. The optimal thresholds we report are those that yield the smallest objective function value among 2000 runs with different random seed values.
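The procedure can be sketched in Python as follows. This is not the code used for the catalog: it substitutes SciPy's `minimize` with `method="Nelder-Mead"` for MATLAB's fminsearch, uses two synthetic Gaussian metrics in place of the real Robovetter metrics, takes the objective to be the Euclidean distance from the point (1 − E = 0, C = C0), which is an assumption of this sketch, and uses 20 random starts instead of 2000 to keep the example fast.

```python
import numpy as np
from scipy.optimize import minimize

rng = np.random.default_rng(0)

# Synthetic metric values (hypothetical): lower values look more planet-like.
inj = rng.normal(0.0, 1.0, size=(2000, 2))  # stand-in for injTCEs
fa = rng.normal(3.0, 1.0, size=(2000, 2))   # stand-in for invTCEs + scrTCEs

def pass_fraction(metrics, thresholds):
    """Fraction of TCEs whose metrics all fall below their thresholds (PCs)."""
    return float(np.mean(np.all(metrics < thresholds, axis=1)))

def objective(thresholds, c0=0.8):
    """Distance from the target point (1 - E = 0, C = c0)."""
    c = pass_fraction(inj, thresholds)       # completeness
    ineff = pass_fraction(fa, thresholds)    # ineffectiveness, 1 - E
    return float(np.hypot(ineff, c0 - c))

# Many random starts, because the objective has many local minima.
starts = [rng.uniform(-1.0, 4.0, size=2) for _ in range(20)]
best = None
for x0 in starts:
    res = minimize(objective, x0, method="Nelder-Mead")
    if best is None or res.fun < best.fun:
        best = res

c_best = pass_fraction(inj, best.x)
ineff_best = pass_fraction(fa, best.x)
```

Because the pass fractions are counts over a finite set of TCEs, the objective is piecewise constant in the thresholds, which is one reason a derivative-free simplex method with many restarts is a natural choice here.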

Our final optimal threshold used a target of C0 = 0.8, which resulted in thresholds that yielded 1 − E = 0.0044 and C = 0.799. We experimented with smaller values of C0, but these did not significantly lower ineffectiveness. We also performed an optimization that maximized reliability defined in §4.1 rather than minimizing ineffectiveness. This yielded similar results. We also explored the dependence of the optimal thresholds on the range of TCE MES and period. We found that the thresholds have a moderate dependence, while the ineffectiveness and completeness have significant dependence on MES and period range. Exploration of this dependence of Robovetter threshold on MES and period range is a topic for future study.

5.2. Picking the Final Robovetter Metric Thresholds