Abstract

The COVID-19 crisis has revealed structural failures in governance and coordination on a global scale. With related policy interventions dependent on verifiable evidence, pandemics require governments to not only consider the input of experts but also ensure that science is translated for public understanding. However, misinformation and fake news, including content shared through social media, compromise the efficacy of evidence-based policy interventions and undermine the credibility of scientific expertise with potentially longer-term consequences. We introduce a formal mathematical model to understand factors influencing the behavior of social media users when encountering fake news. The model illustrates that direct efforts by social media platforms and governments, along with informal pressure from social networks, can reduce the likelihood that users who encounter fake news embrace and further circulate it. This study has implications at a practical level for crisis response in politically fractious settings and at a theoretical level for research about post-truth and the construction of fact.

Keywords: Fake news, Policy sciences, Equilibrium model, COVID-19

Introduction

‘This is a free country. Land of the free. Go to China if you want communism’ yelled an American protester at a nurse counter-protesting the resumption of commercial activity 5 weeks into the country’s COVID-19 crisis (Armus and Hassan 2020). Like many policy challenges, the COVID-19 crisis is exposing deep-seated political and epistemological divisions, fueled in part by contestation over scientific evidence and ideological tribalism stoked in online communities. The proliferation of social media has democratized access to information with evident benefits, but also raises concerns about the difficulty users face in distinguishing between truth and falsehood. The perils of ‘fake news’ (false information masquerading as verifiable truth, often disseminated online) are acutely apparent during public health crises, with false equivalence drawn between scientific evidence and uninformed opinion.

In an illustrative episode from April 2020, the scientific community’s largely consensus views about the need for social distancing to limit the spread of COVID-19 were challenged by protesters in the American states of Minnesota, Michigan, and Texas, who demanded in rallies that governors immediately relax social distancing protocols and re-open shuttered businesses. Populist skepticism about COVID-19 response in the USA had arguably been growing since President Donald Trump’s early dismissals of the severity of the virus (US White House 2020) and his call for protestors to ‘liberate’ states undertaking containment measures (Shear and Mervosh 2020). These actions were seen by some as evidence of the presidential administration’s willingness to politicize virus response; indeed, critical language from some politicians and commentators cast experts and political opponents as unnecessarily panicky and politically motivated to overstate the need for lock-downs and business closures. Despite the salience of this recent phenomenon, anti-science populism has an arguably extended history, not only for issues related to public health (e.g., virus response and, prior to COVID-19, vaccinations) but also for climate change (Fischer 2020; Huber 2020; Lejano and Dodge 2017; Lewandowsky et al. 2015). Anti-science skepticism, often lacking a broad audience and attention from mainstream media, is left to peddle scientifically unsubstantiated claims in online communities, where such content remains widely accessible and largely unregulated (Edis 2020; Szabados 2019). As such, the issue of fake news deserves closer scrutiny with the world facing its greatest public health crisis in a century.

There is no consensus definition of fake news (Shu et al. 2017). Based on a survey of articles published between 2003 and 2017, Tandoc et al. (2018) propose a typology for how the concept can be operationalized: satire, parody, fabrication, manipulation, propaganda, and advertising. Waszak et al. (2018) propose a similar typology (with the overlapping categories of fabricated news, manipulated news, and advertising news) but add ‘irrelevant news’ to capture the cooptation of health terms and topics to support unrelated arguments. Shu et al. (2017) cite verifiable lack of authenticity and intent to deceive as general characteristics of fake news. Making a distinction between fake news and brazen falsehoods, which has implications for this study’s focus on the behavior of the individual information consumer, Tandoc et al. (2018) argue ‘while news is constructed by journalists, it seems that fake news is co-constructed by the audience, for its fakeness depends a lot on whether the audience perceives the fake as real. Without this complete process of deception, fake news remains a work of fiction’ (p. 148).

Amidst the COVID-19 crisis, during which trust in government is not merely an idle theoretical topic but has substantial implications for public health, deeper scholarly understandings about the power and allure of fake news are needed. According to Porumbescu (2018), ‘the evolution of online mass media is anything but irrelevant to citizens’ evaluations of government, with discussions of “fake news,” “alternative facts,” “the deep state,” and growing political polarization rampant’ (p. 234). With the increasing level of global digital integration comes the growing difficulty of controlling the dissemination of misinformation. Efforts by social media platforms (as the underlying organizational structures and operations; referred to hereafter as SMPs) and governments have targeted putative sources of misinformation, but engagement (i.e., sharing and promoting links) with fake news by individual users is an additional realm in which the problem of fake news can be addressed.

Examining the motivations driving an individual’s engagement with fake news, this study introduces a formal mathematical model that illustrates the cost to an individual of making low- or high-level efforts to resist fake news. The intent is to reveal mechanisms by which SMPs and governments can intervene at individual and broader scales to contain the spread of willful misinformation. This article continues with a literature review focusing on fake news in social media and policy efforts to address it. This is followed by the presentation of the model, with a subsequent section focusing on policy insights and recommendations that connect the findings of the model to practical implications. The conclusion reflects more broadly on the ‘post-truth’ phenomenon as it relates to policymaking and issues a call for continued research around epistemic contestation.

Literature review

A canvassing of literature about fake news could draw from an array of disciplines including communications, sociology, psychology, and economics. We focus on the treatment of fake news by the public policy literature—an angle that engages discussions about cross-cutting issues like misinformation, politicization of fact, and the use of knowledge in policymaking. The review is in two parts. The first focuses on the intersection of fake news, social media, and pandemics (in particular the COVID-19 crisis), and the second on policy efforts to address individual reactions to fake news.

Fake news, social media, and pandemics

Fake news and social media as topics of analysis are closely intertwined, as the latter is considered a principal conduit through which the former spreads; indeed, Shu et al. (2017) call social media ‘a powerful source for fake news dissemination’ (p. 23). The aftermath of the 2016 US presidential election sent scholars scrambling to the topic of fake news, misinformation, and populism; as such, information-filtering through political and cognitive bias is a topic now enjoying a spirited revival in the literature (Fang et al. 2019; Polletta and Callahan 2019; Cohen 2018; Allcott and Gentzkow 2017; DiFranzo and Gloria-Garcia 2017; Flaxman et al. 2016; Zuiderveen Borgesius et al. 2016). A popular heuristic for conceptualizing the phenomenon of social media-enabled fake news is the notion of the ‘echo chamber’ effect (Shu et al. 2017; Barberá et al. 2015; Agustín 2014; Jones et al. 2005), in which information consumers intentionally self-expose only to content and communities that confirm their beliefs and perceptions while avoiding those that challenge them. The effect leads to the development of ideologically homogeneous social networks whose members derive collective satisfaction from frequently repeated narratives (a process McPherson et al. (2001) label ‘homophily’). This phenomenon leads to ‘filter bubbles’ (Spohr 2017) in which ‘algorithmic curation and personalization systems […] decreases [users’] likelihood of encountering ideologically cross-cutting news content’ (p. 150). The filtering mechanism is both self-imposed and externally imposed based on the algorithmic efforts of SMPs to circulate content that maintains user interest (Tufekci 2015). For example, in a Singapore-based study of the motivations behind social media users’ efforts to publicly confront fake news, Tandoc et al. (2020) find that users are driven most by the relevance of the issue covered, their interpersonal relationships, and their ability to convincingly refute the misinformation; according to the authors, ‘participants were willing to correct when they felt that the fake news post touches on an issue that is important to them or has consequences to their loved ones and close friends’ (p. 393). As the issue of misinformation has gained further salience during the COVID-19 episode, this review continues by exploring scholarship about fake news in the context of pandemics.

Research has shown that fake news and misinformation can have detrimental effects on public health. In the context of pandemics, fake news operates by ‘masking healthy behaviors and promoting erroneous practices that increase the spread of the virus and ultimately result in poor physical and mental health outcomes’ (Tasnim et al. 2020; n.p.), by limiting the dissemination of ‘clear, accurate, and timely transmission of information from trusted sources’ (Wong et al. 2020; p. 1244), and by compromising short-term containment efforts and longer-term recovery efforts (Shaw et al. 2020). First used by World Health Organization Director-General Tedros Adhanom Ghebreyesus in February 2020 to describe the rapid global spread of misinformation about COVID-19 through social media (Zarocostas 2020), the term ‘infodemic’ has recently gained popularity in pandemic studies (Hu et al. 2020; Hua and Shaw 2020; Medford et al. 2020; Pulido et al. 2020). Similar terms are ‘pandemic populism’ (Boberg et al. 2020) and the punchy albeit casual ‘covidiocy’ (Hogan 2020). Predictably, misinformation has proliferated with the rising salience of COVID-19 (Cinelli et al. 2020; Frenkel et al. 2020; Hanafiah and Wan 2020; Pennycook et al. 2020; Rodríguez et al. 2020; Singh et al. 2020). In an April 2020 press conference, US President Donald Trump made ambiguous reference to the possible value of ingesting disinfectants to treat the virus (New York Times 2020), an utterance that elicited both concern and ridicule.

Scholarly efforts to understand misinformation in the COVID-19 pandemic contribute to an existing body of similar research in other contexts, including the spread of fake news during outbreaks of Zika (Sommariva et al. 2018), Ebola (Spinney 2019; Fung et al. 2016), and SARS (Taylor 2003). Research about misinformation and COVID-19 draws also on existing research about online information-sharing behaviors more generally. For example, in a study about the role of fake news in public health, Waszak et al. (2018) find that among the most shared links on common social media, 40 percent contained fallacious content (with vaccination having the highest incidence, at 90 percent). In taking a still broader view, understandings about the politicization of public health information draw from research about science denialism more generally, including the process by which climate denial narratives as ‘alternative facts’ are socio-culturally constructed to protect ideological imaginaries (see Fischer (2019) for a similar discussion related to climate change). On the other hand, there is also evidence that the use of social media as a conduit for information dissemination by public health authorities and governments has been useful, including communicating the need for social distancing, indicating support for healthcare workers, and providing emotional encouragement during lock-down (Thelwall and Thelwall 2020). As such, it is crucial to distinguish productive uses of social media from unproductive ones, with the operative characteristic being the effect on safety and wellbeing of information consumers and the broader public.

Policy efforts to address individual reactions to fake news

The second part of this review explores literature about policy efforts to address fake news, insofar as it is understood as a policy problem. The phenomenon of fake news can be considered an individual-level issue, and this is the perspective adopted by this review and study. Within a ‘marketplace’ of information exchange, consumers encounter information and must decide whether to engage with it or to discredit and dismiss it. As such, many policy interventions targeting fake news focus on verification by helping equip social media users with the tools to identify and confront fake news (Torres et al. 2018). Nevertheless, the efficacy of such policy tools depends on their calibration to individual cognitive and emotional characteristics. For example, Lazer et al. (2018) outline cognitive biases that determine the allure of fake news, including self-selection (limiting one’s consumption only to affirming content), confirmation (giving greater credibility to affirming content), and desirability (accepting only affirming content). To this list Rini (2017) adds ‘credibility excess’ as a way of ascribing ‘inappropriately high testimonial credibility [to a news item] on the basis of [the source’s] demography’ (p. E-53).

A well-developed literature also indicates that cognitive efforts and characteristics determine an individual’s willingness to engage with fake news. According to a study about individual behaviors in response to the COVID-19 crisis in the USA, Stanley et al. (2020) find that ‘individuals less willing to engage effortful, deliberative, and reflective cognitive processes were more likely to believe the pandemic was a hoax, and less likely to have recently engaged in social-distancing and hand-washing’ (n.p.). The individual cognitive perspective is utilized also by Castellacci and Tveito (2018) in a review of literature about the impact of internet use on individual wellbeing: ‘the effects of Internet on wellbeing are mediated by a set of personal characteristics that are specific to each individual: psychological functioning, capabilities, and framing conditions’ (p. 308). Ideological orientation has likewise been found to associate with perceptions about and reactions to fake news; for example, Guess et al. (2019) find in a study of Facebook activity during the 2016 US presidential election that self-identifying political conservatives (the ‘right’) were more likely than political liberals (the ‘left’) to share fake news and that the user group aged 65 and older (controlling for ideology) shared over six times more fake news articles than did the youngest user group. A similar age-related effect on psychological responses to social media rumors is observed by He et al. (2019) in a study of usage patterns for messaging application WeChat; older users who are new to the application struggle more to manage their own rumor-induced anxiety. Network type also plays a role in determining fake news engagement; circulation of fake news and misinformation was found to be higher among anonymous and informal (individual and group) social media accounts than among official and formal institutional accounts (Kouzy et al. 2020).

The analytical value of connecting individual behavior with public policy interventions has prompted studies about the conduits through which policies influence social media consumers. According to Rini (2017), the problem of fake news ‘will not be solved by focusing on individual epistemic virtue. Rather, we must treat fake news as a tragedy of the epistemic commons, and its solution as a coordination problem’ (p. E-44). This claim makes reference to a scale and topic – the actions of government – that are underexplored in studies about the failure to limit the spread of fake news. Venturing as well into the realm of interpersonal dynamics, Rini continues by arguing that the development of unambiguous norms can enhance individual accountability, particularly around the transmission of fake news through social media sharing as a ‘testimonial endorsement’ (p. E-55). Extending the conversation about external influences on individual behavior, Lazer et al. (2018) classify ‘political interventions’ into two categories: (1) empowerment of individuals to evaluate fake news (e.g., training, fact-checking websites, and verification mechanisms embedded within social media posts to evaluate information source authenticity) and (2) SMP-based controls on dissemination of fake news (e.g., identification of media-active ‘bots’ and ‘cyborgs’ through algorithms). The authors also advocate wider applicability of tort lawsuits related to the harm caused by individuals sharing fake news. From a cognitive perspective, Van Bavel et al. (2020) add ‘prebunking’ as a form of psychological inoculation that exposes users to a modest amount of fake news with the purpose of helping them develop an ability to recognize it; the authors cite support in similar studies by van der Linden et al. (2017) and McGuire (1964). 
These interventions, in addition to crowd-sourced verification mechanisms whereby users rate the perceived accuracy of social media posts by other users, have the goal of conditioning and nudging users to reflect more deeply on the accuracy of the news they encounter.

Given that the model introduced in the following section concerns the issue of fake news from the perspective of individual behavior and that topics addressed by fake news are often ideologically contentious, it is appropriate to acknowledge the literature related to cognitive bias, beliefs, and ideologies with reference to political behavior. In a study about narrative framing, Mullainathan and Shleifer (2005) explore how the media, whether professional or otherwise, seeks to satisfy the confirmation biases of targeted or segmented viewer groups; when extended to the current environment of online media, narrative targeting increases the likelihood that fake news will be shared due to its attractiveness to particular audiences. In turn, this narrative targeting perpetuates the process by which information consumers construct their own narratives about political issues in a way that comports with their ideologies (Kim and Fording 1998; Minar 1961) and personalities (Duckitt and Sibley 2016; Caprara and Vecchione 2013). This tendency is shown to be strongly influenced not only by (selectively observed) reality but also by individual perceptions that Kinder (1978; p. 867) labels ‘wishful thinking.’ Further, the cognitive tendency to classify reality through sweeping categorizations (e.g., ‘liberals’ vs. ‘conservatives,’ a common polarity in American politics) compels individuals to associate more strongly with one side and distance further from the other (Vegetti and Širinic 2019; Devine 2015) and thereby biases an individual’s cognitive processing of information (Van Bavel and Pereira 2018). This observation is arguably relevant to the current online discourses and content of fake news, which often reflect extreme party-political rivalries and left–right partisanship (Spohr 2017; Gaughan 2016). These issues are relevant as they bear strongly on the choices of individuals about effort levels related to their interactions with and subjective judgments of fake news.

Finally, few attempts have been made to apply formal mathematical modeling to understand the behavior of individuals with respect to the consumption of fake news on social media; notable examples are Shu et al. (2017), who model the ability of algorithms to detect fake news, and Tong et al. (2018), who model the ‘multi-cascade’ diffusion of fake news. Papanastasiou (2020) uses a formal mathematical model to illustrate the behavior of SMPs in response to the sharing of fake news by users. To this limited body of research we contribute a formal mathematical model addressing the motivations of users to engage with or dismiss fake news when encountered.

The model

The model presented in this section examines the behavior of a hypothetical digital citizen (DC) who encounters fake news while using social media. The vulnerability of the DC in this encounter depends on the DC’s level of effort in resisting fake news. To illustrate this dynamic, we adopt the modeling approach used by Lin et al. (2019) and Hartley et al. (2019) that considers the equilibrium choices of a rational decision-maker as determined by individual attributes and external factors. The advantage of this model is its incorporation of factors related to ethical standards in addition to cost–benefit considerations in decision-making. This type of model has been widely used in studies related to taxpayer behavior (Eisenhauer 2006, 2008; Yaniv 1994; Beck and Jung 1989; Srinivasan 1973), in which the tension between ethics and perceived benefits of acting unethically is comparable to that faced by a DC.

The model’s parameters are intended to aid thinking about issues within the ambit of public policy, making clearer the assumptions about individual behaviors and consequences of those behaviors as addressable by government interventions. While the model examines DC behavior, it is not intended to be meaningful for research about psychology and individual or group behavior more generally; mentions of behavior and its motivations and effects are made in service only to arguments about the policy implications of governing behavior in free societies. The remainder of this section specifies the model, which aims to formally and systematically observe dynamics among effort levels, standards, and utility for consuming and sharing fake news.

Choice of effort level

The model assumes that the DC is a rational decision-maker; that is, in reacting to fake news she maximizes her utility as determined by two factors: consumer utility (the benefits accruing to the DC by engaging in a particular way with fake news) and ethical standards. Regarding consumer utility, the DC makes an implicit cost–benefit analysis; for ethical standards, she behaves consistently with her personally held ethical norms. For simplicity, we assume that the DC’s overall utility function U takes the following form:

| 1 |

where

e is the DC’s effort level in reacting to fake news. For simplicity of exposition, the DC is assumed to choose from two levels of effort: low (e = eL) and high (e = eH). If the DC chooses low effort, she increases her consumption of fake news; with high effort, she reduces her consumption. In the equation q = eH/eL, we assume that q > 1 and that a higher q represents a higher cost to the DC because it reflects a higher level of effort.

α is the weight the DC gives to her consumer utility and (1 − α) is the weight she gives to her utility from ethical behavior. We assume that α is a function of effort level as follows: α(eH) = α, where 0 < α < 1, and α(eL) = 1. That is, by choosing the high-effort level, the DC derives her utility not only from consumption (α > 0) but also from ethical behavior (1 − α > 0). If choosing the low-effort level, the DC derives her utility only from consumption (α = 1).

W(e) is the consumer utility that the DC gains from engagement with social media, which is assumed to depend on her effort level as follows: W(eH) = W and W(eL) = W/ω, where ω > 1. That is, if the DC chooses the low-effort level, she derives less consumer utility due to the negative effects of engaging with fake news (as described in the literature review).

S represents the DC’s utility from observing her own standards (beyond cost–benefit considerations) for guiding online behavior. The assumption is that the DC gains utility by aligning her behavior choice (regarding whether to engage with fake news) with ethical norms and her own beliefs.

Given the utility function in Eq. (1), the DC exerts high effort in reacting to fake news if and only if:

| 3 |

| 4 |

| 5 |

| 6 |

| 7 |

S > φ·W    (8)

where

| 9 |

That is, the DC chooses high-level effort if S > φ·W and low-level effort if S < φ·W.
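The explicit expressions behind conditions (3) to (9) are not legible in this version of the text. As a hedged illustration only, the following sketch shows one functional form that is consistent with the properties stated in this section (φ is negative exactly when q < ωα, φ rises with q, and φ falls with ω). The effort-discounted utility, the normalization eL = 1 (so that eH = q), and the specification W(eH) = W, W(eL) = W/ω are assumptions made for this sketch and are not confirmed as the authors' specification.

```latex
% Assumed functional form (illustrative, not taken from the source):
%   U(e) = alpha(e) * W(e) / e + (1 - alpha(e)) * S
% with alpha(e_H) = alpha in (0,1), alpha(e_L) = 1,
%      W(e_H) = W, W(e_L) = W / omega, omega > 1,
%      e_L = 1 (normalization), e_H = q > 1.
\begin{align*}
U(e_H) > U(e_L)
  &\iff \alpha\,\frac{W}{q} + (1-\alpha)\,S > \frac{W}{\omega} \\
  &\iff (1-\alpha)\,S > W\left(\frac{1}{\omega} - \frac{\alpha}{q}\right) \\
  &\iff S > \varphi\,W,
  \qquad
  \varphi = \frac{q - \alpha\omega}{\omega\,q\,(1-\alpha)} .
\end{align*}
% Under this assumed form:
%   varphi < 0                      iff  q < alpha * omega,
%   d(varphi)/dq     =  alpha / ((1 - alpha) q^2)   > 0,
%   d(varphi)/domega = -1 / ((1 - alpha) omega^2)   < 0.
```

Under these assumptions the sign condition and both monotonicity properties match the verbal description in the text, which is the only sense in which the sketch should be read.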

A negative value for φ reflects a trivial scenario in which condition (8) is satisfied at any value of S because the right-hand side of the inequality is negative. This scenario occurs if and only if q < ωα. This condition reflects a situation in which the relative cost of making high effort (q) is low and the consumer utility loss of making low effort (ω) is high such that q < ωα for a given α; this implies that high effort is the DC’s utility-maximizing choice. This scenario suggests an environment in which social media is a mature platform with a well-established regulatory regime and rational and discerning users.

A positive value for φ reflects the inverse scenario. This scenario is the main focus of this modeling exercise because it better reflects the existing dynamics of the current social media landscape. This scenario occurs if and only if q > ωα, which reflects a situation in which the relative cost of making high effort (q) is high and the consumer utility loss of making low effort (ω) is low such that q > ωα for a given α; this implies that low effort is the DC’s utility-maximizing choice unless her utility from ethical behavior S is large enough to satisfy condition (8). This scenario suggests an environment in which social media is poorly regulated and characterized by irrational use.

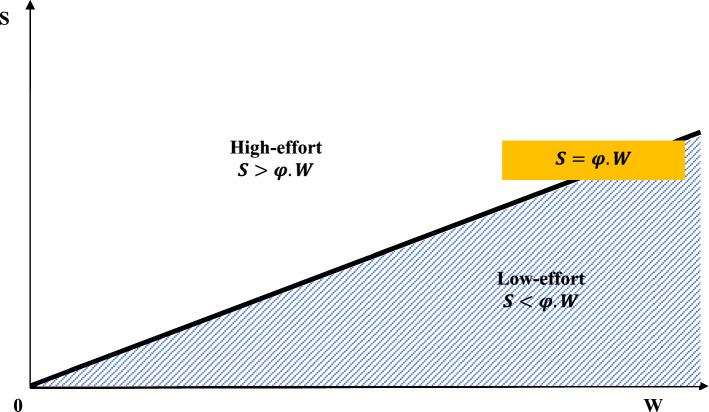

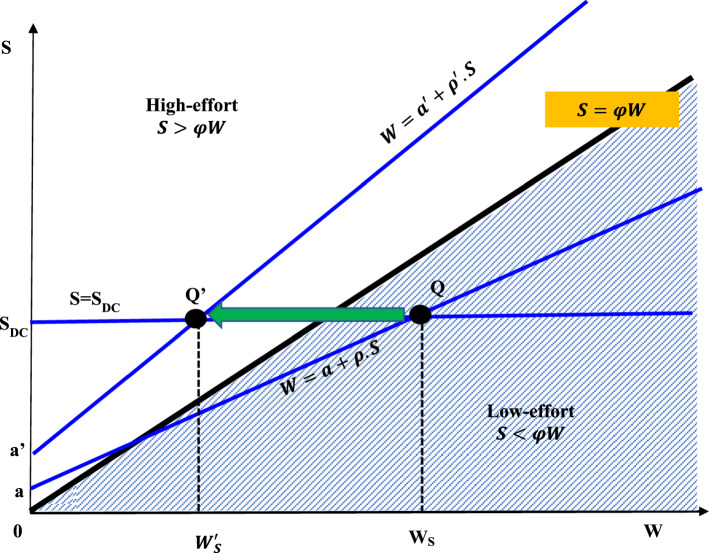

Figure 1 graphically illustrates this scenario; consumer utility W is represented on the horizontal axis and ethical behavior utility S on the vertical axis. The locus S = φ·W establishes a boundary line dividing the plane into two areas. In the area below the boundary line (the shaded area), the condition S < φ·W holds, depicting the DC’s choice of low effort; in the upper (non-shaded) area, the condition S > φ·W holds, depicting the DC’s choice of high effort.
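The partition described for Fig. 1 can be expressed as a small classification rule. The following is a minimal sketch that takes φ as a given number; the function name `effort_choice` and the sample values are hypothetical, and the handling of points lying exactly on the boundary is an assumption, since the text does not specify a tie-break.

```python
def effort_choice(W: float, S: float, phi: float) -> str:
    """Classify a point (W, S) relative to the boundary line S = phi * W.

    W   : consumer utility (horizontal axis in Fig. 1)
    S   : utility from ethical behavior (vertical axis in Fig. 1)
    phi : slope of the boundary line, taken as given
    """
    if S > phi * W:
        return "high"  # upper (non-shaded) area
    if S < phi * W:
        return "low"   # lower (shaded) area
    # Assumption: the text does not say what happens exactly on the line.
    return "indifferent"


# Hypothetical values for illustration only.
phi = 0.5
print(effort_choice(W=4.0, S=3.0, phi=phi))  # point above the line
print(effort_choice(W=4.0, S=1.0, phi=phi))  # point below the line
```

With a negative φ and non-negative W and S (the trivial scenario discussed earlier), every point with S > 0 falls in the high-effort area.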

Fig. 1.

Effort level by DC in reacting to fake news

Digital citizen’s behavior at equilibrium

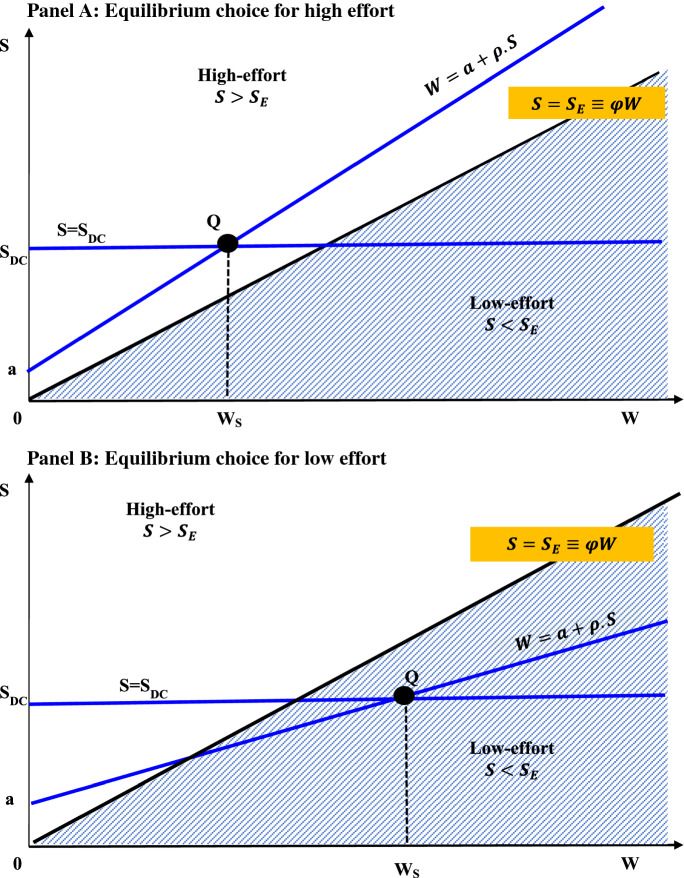

Given the latter situation presented in “Choice of effort level” section, the DC’s equilibrium decision depends on demand and supply dynamics governing her behavior. We assume that the DC’s demand curve is a horizontal line S = SDC (Fig. 2), which implies that at any given level of SDC (the utility she gains from ethical behavior), her demand for consumer utility ranges from 0 to +∞. Specifically, this assumes that the standards adopted by a DC are endogenous and shaped by the DC’s belief systems and other individual-specific factors; thus, these standards are fixed at a given level regardless of the value of W (the consumer utility the DC gains from engagement with social media). It is plausible that the DC’s standards may shift relative to the value of W, but there is no guiding heuristic about the direction of this relationship and for modeling simplicity the DC’s standards are assumed to be absolute instead of relative. Regarding the source of the supply of consumer utility, the model assumes that a DC’s personal social networks impact this variable, as represented by an upward linear curve and taking the following form:

W = a + ρ·S    (10)

where ρ > 0.

Fig. 2.

Digital citizen’s equilibrium choice for effort level

The upward slope of this supply curve implies that the DC derives a higher consumer utility W from interacting with her personal social networks as her S (the utility from ethical behavior) increases; that is, the DC is satisfied with online activity when not needing to compromise her ethical standards to access it. The coefficient ρ (ρ > 0) represents the value-set adopted by the DC’s personal social network. A greater ρ indicates that this network ‘publicly’ grants ethical behavior positive feedback, resulting in a larger consumer utility reward for the DC; this is manifest in expressions of validation such as number of ‘likes’ and positive comments. As shown in Fig. 2, the DC’s equilibrium decision exists at point Q, where the demand curve S = SDC crosses the supply curve W = a + ρ·S; the equation W = a + ρ·SDC thus applies. Point Q can exist in the upper (high-effort) area (Panel A) or lower (low-effort) area (Panel B). The model implies that the DC’s equilibrium effort level depends on multiple factors including her individual preferences and characteristics of the setting.
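The location of point Q can be computed directly from the two curves. The sketch below assumes the supply curve takes the linear form W = a + ρ·S stated later in the text, takes φ as a given number, and treats a point exactly on the boundary as low effort purely for simplicity; the names `Equilibrium` and `equilibrium` and all numeric values are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Equilibrium:
    W: float      # consumer utility at point Q
    S: float      # utility from ethical behavior at point Q (equals S_DC)
    effort: str   # "high" or "low"


def equilibrium(S_dc: float, a: float, rho: float, phi: float) -> Equilibrium:
    """Intersect the demand line S = S_dc with the supply curve W = a + rho * S.

    The effort area is then read off from the boundary line S = phi * W.
    """
    if rho <= 0:
        raise ValueError("the model assumes rho > 0")
    W = a + rho * S_dc
    # Assumption: a point exactly on the boundary is labeled "low".
    effort = "high" if S_dc > phi * W else "low"
    return Equilibrium(W=W, S=S_dc, effort=effort)


# Hypothetical parameter values for illustration only.
print(equilibrium(S_dc=2.0, a=1.0, rho=0.5, phi=0.5))
print(equilibrium(S_dc=1.0, a=1.0, rho=0.5, phi=2.0))
```

The two calls correspond loosely to Panel A and Panel B of Fig. 2: the same supply curve yields a different effort area depending on the DC's standards and the slope of the boundary line.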

Policy insights and recommendations

Policy insights

The model introduced in “The model” section shows that the DC’s choice of reacting productively to fake news falls into the high- or low-effort area and is an equilibrium decision. That is, the choice remains stable as long as the model’s key policy-related parameters,1 which include q, ω, and ρ, do not change significantly. This section discusses how a change in each of these policy parameters can potentially alter the DC’s equilibrium choice by shifting the boundary line S = φW between the high- and low-effort areas and by shifting the supply curve W = a + ρ·S.

Shifting the boundary line S = φW

Two policy parameters that have the ability to shift the boundary line S=φW are q and ω. Coefficient φ depends on q and ω in differing ways. Taking the derivative of φ with regard to q from Eq. (9) yields the following equation:

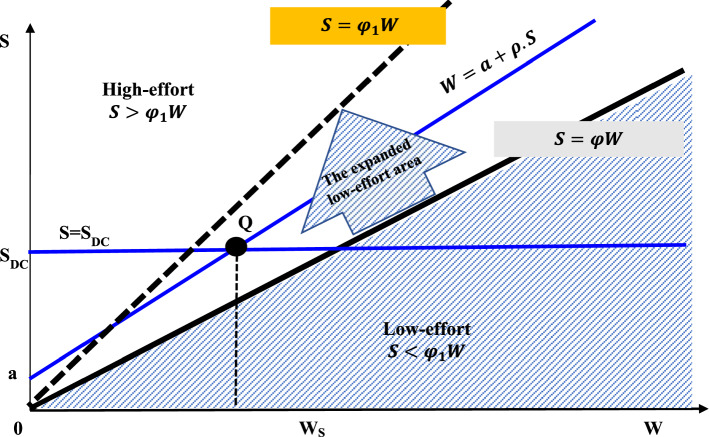

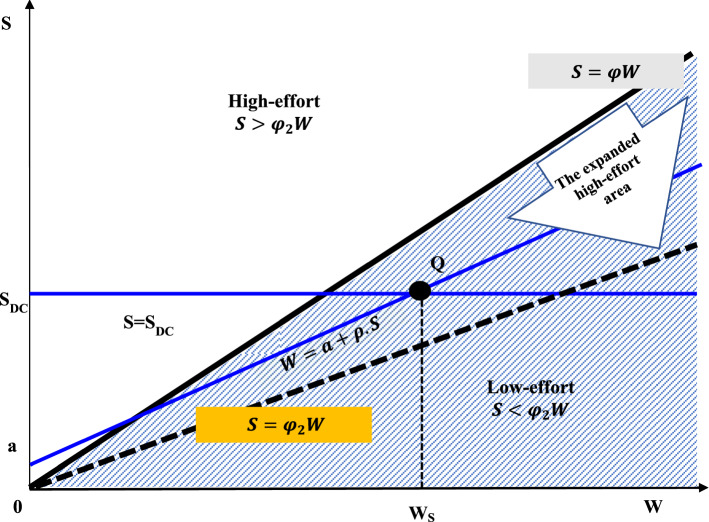

| 11 |

This equation indicates that φ is an increasing function of q. In other words, φ rises if q rises and φ falls if q falls. As such, an increase in q increases φ and thereby rotates the boundary line S = φW counter-clockwise. As shown in Fig. 3, this counter-clockwise rotation expands the lower (low-effort) area and shrinks the upper (high-effort) area. This implies that a rise in the relative cost of making high effort (compared to making low effort) can push the DC’s equilibrium choice from the high-effort into the low-effort area, illustrating the risk that the DC’s effort level falls and remains low when a setting changes in a way that does not encourage high effort. On the other hand, a decrease in q lowers φ and thereby rotates the boundary line S = φW clockwise. As shown in Fig. 4, this clockwise rotation expands the upper (high-effort) area and shrinks the lower (low-effort) area. This implies that a fall in the relative cost of making high effort (compared to making low effort) can push the DC’s equilibrium choice from the low-effort to the high-effort area.

Fig. 3.

Effect of increasing q or lowering ω on equilibrium choice of effort. DC’s equilibrium choice changes from the high-effort to low-effort area as φ rises to φ1 due to an increase in q or a reduction in ω

Fig. 4.

Effect of decreasing q or increasing ω on the DC’s equilibrium choice of effort. DC’s equilibrium choice moves from the low-effort to high-effort area as φ declines to φ2 due to a fall in q or an increase in ω

Similarly, taking the derivative of φ with regard to ω from Eq. (9) yields the following equation:

| 12 |

This equation indicates that φ is a decreasing function of ω. As such, a change in ω causes the boundary line S = φW to rotate. If ω falls, φ rises; hence, the boundary line rotates counter-clockwise, expanding the low-effort area and shrinking the high-effort area. This shift can push the DC’s equilibrium choice from the high-effort to low-effort area (Fig. 3), suggesting that weak regulation of fake news reduces the consumer utility loss caused by low effort and thereby increases the possibility of the DC falling from high- to low-effort equilibrium. On the other hand, if ω increases, φ decreases; hence, the boundary line rotates clockwise, enlarging the high-effort area and shrinking the low-effort area. This shift can push the DC’s equilibrium choice from the low-effort to high-effort area (Fig. 4). This suggests that increasing the consumer utility loss caused by low effort can induce the DC to change her equilibrium effort choice from low to high level.
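The direction of both rotations can be checked numerically. Because the explicit expression for φ in Eq. (9) is not legible in this version of the text, the sketch below uses an assumed form, φ = (q − αω) / (ωq(1 − α)), chosen only because it reproduces the stated properties (negative exactly when q < ωα, increasing in q, decreasing in ω). The function name `phi` and all parameter values are hypothetical.

```python
def phi(q: float, omega: float, alpha: float) -> float:
    """Slope of the boundary line S = phi * W under an ASSUMED functional form.

    q     : relative cost of high effort (e_H / e_L), q > 1
    omega : consumer utility loss factor from low effort, omega > 1
    alpha : weight on consumer utility under high effort, 0 < alpha < 1
    """
    if not (q > 1 and omega > 1 and 0 < alpha < 1):
        raise ValueError("expected q > 1, omega > 1, and 0 < alpha < 1")
    return (q - alpha * omega) / (omega * q * (1 - alpha))


# Hypothetical baseline values for illustration only.
base = phi(q=2.0, omega=1.5, alpha=0.5)
higher_q = phi(q=3.0, omega=1.5, alpha=0.5)      # costlier high effort
higher_omega = phi(q=2.0, omega=3.0, alpha=0.5)  # larger loss from low effort

print(f"baseline phi      = {base:.3f}")
print(f"phi after q rises = {higher_q:.3f}")      # steeper line, larger low-effort area
print(f"phi after w rises = {higher_omega:.3f}")  # flatter line, larger high-effort area
```

Under this assumed form, raising ω far enough that q < ωα makes φ negative, which corresponds to the trivial scenario in which high effort is chosen at any value of S.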

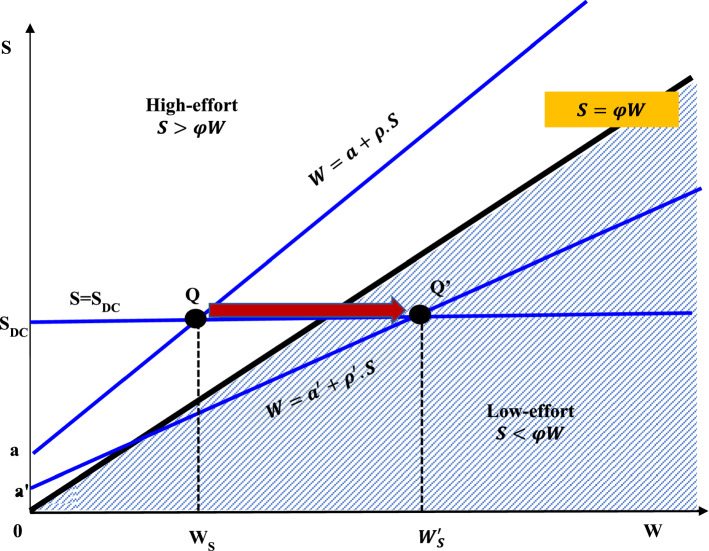

Shifting the supply curve

A shift of the supply curve can also change the DC’s equilibrium choice of effort level. As shown in Fig. 5, an increase in ρ and/or α can shift the supply curve upward, while the external setting represented by the boundary line remains unchanged. This shift can change the equilibrium point from Q, which is in the low-effort area, to Q’, which is in the high-effort area (Fig. 5). This suggests that an improvement in the ethical standards of the DC’s social media networks can induce her to change her equilibrium effort choice from low to high level. On the other hand, as shown in Fig. 6, a decrease in ρ and/or α can shift the supply curve downward, while the external setting represented by the boundary line remains unchanged. This shift can change the equilibrium point from Q, which is in the high-effort area, to Q’, which is in the low-effort area (Fig. 6). This suggests that a deterioration in the ethical standards of the DC’s personal social networks can induce her to change her equilibrium effort choice from high to low level.

Fig. 5.

Effect of increasing ρ to ρ′. An increase of ρ to ρ′ can push the DC’s equilibrium effort choice from low to high level

Fig. 6.

Effect of decreasing ρ to ρ′. A decrease in ρ to ρ′ can move the DC’s equilibrium effort choice from high to low level
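The supply-curve shift can be sketched in the same spirit. The example below is a simplified illustration under stated assumptions, not the article's derivation: the boundary slope φ is held fixed, the shift is modeled as a purely vertical move of the equilibrium point (W held constant), and all numbers are hypothetical. It shows how large an upward shift must be before the point crosses from the low-effort into the high-effort area, and how a downward shift can reverse the move.

```python
def effort_area(S: float, W: float, phi: float) -> str:
    """Points above the boundary line S = phi * W are in the high-effort area."""
    return "high" if S > phi * W else "low"


def crossing_threshold(S: float, W: float, phi: float) -> float:
    """Vertical distance from (W, S) to the boundary line S = phi * W.

    A positive value is the upward shift that must be exceeded for the
    point to enter the high-effort area; a negative value means the
    point is already above the line.
    """
    return phi * W - S


PHI = 1.5          # hypothetical fixed slope of the boundary line
W, S = 2.0, 2.0    # hypothetical equilibrium point Q in the low-effort area

print("Q:", effort_area(S, W, PHI), "| threshold:", crossing_threshold(S, W, PHI))

# Upward shifts (e.g., a rise in rho): only the larger one crosses the line.
for shift in (0.5, 1.5):
    print(f"upward shift {shift}: Q' is in the {effort_area(S + shift, W, PHI)}-effort area")

# Downward shift (e.g., a fall in rho) starting from a high-effort point.
S_high = 3.5
print("downward shift 1.0:", effort_area(S_high, W, PHI), "->", effort_area(S_high - 1.0, W, PHI))
```

The threshold makes explicit why the text says a shift "can" change the equilibrium area: a small shift leaves the point on the same side of the unchanged boundary line.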

Policy recommendations

While the model is based on the actions of individuals within a social media environment that implicitly incentivizes certain types of online behavior depending on individual values and preferences, the objective of the model is to derive insights for practice by demonstrating relationships among variables that fall within the ambit of external influence (policies of government or SMPs). In particular, the model is notable for its illustration of equilibrium, which has an important policy implication: behavioral change is achievable through an initial policy push that shifts the DC into a ‘high effort’ mindset, and once the altered conditions are stable and maintained, it can be postulated that DCs will maintain their new level of effort. This differs from policy intervention based on punitive measures, which often do little to change mindset and as such must be repeatedly reinforced and maintained at the cost of monitoring and enforcement.

This subsection outlines actions that can be taken by the DC, SMPs, and governments to facilitate the previously introduced model mechanisms. Table 1 summarizes the policy insights and recommendations inferred from the modeling exercises in the “Policy insights” section. Mechanism 1 addresses the need to expand the high-effort area such that the DC’s equilibrium choice of effort defaults to a high level. As shown by the model, there are two sub-mechanisms for achieving this objective: (1) decrease the cost to the DC of making high effort relative to low effort (q), and (2) increase the consumer utility loss of making low effort (ω).

Table 1.

Measures for moving DC equilibrium effort choice from low to high level

| Model mechanism | Action items: digital citizen | Action items: social media platforms | Action items: governments |
|---|---|---|---|
| Mechanism 1: decrease q or increase ω (expand the high-effort area; Fig. 4) | Education and training for individual assessment of fake news (media literacy) | Algorithms to detect fake news; crowdsourcing capabilities for detection; collaboration on research about impacts of fake news | Guidelines and protocols for social media platforms; regulatory standards and enforcement |
| Mechanism 2: increasing ρ to ρ′ (reduce the DC’s consumer utility of engaging with fake news; Fig. 5) | Identification with increased ethical standards of the DC’s personal social network | Cultivation of a shared online standard of conduct regarding the treatment of fake news | |

Regarding the first sub-mechanism, the DC’s critical evaluation of fake news should be made easier. For the DC, action items include acquiring the necessary training [e.g., ‘media literacy’ (Lee 2018) and critical thinking skills (Baron and Crootof 2017)] and analytical tools (e.g., personal technologies and applications) to evaluate the authenticity of websites and news shared on social media.2 Additional action items include the adoption by SMPs of increasingly sophisticated algorithms for detecting fake news (Shu et al. 2017; Kumar and Geethakumari 2014) and for verifying contributors (Brandtzaeg et al. 2016; Schifferes et al. 2014), and the development of crowdsourcing capabilities for the same purpose (Pennycook and Rand 2019; Kim et al. 2018). For government, action items include the dissemination of official guidelines and protocols for SMPs to address fake news as based on research and feedback from platform administrators and users, and a threshold level of regulatory tolerance calibrated to particular contexts based on social, economic, and political factors. Despite the potential efficacy of these actions, they may raise concerns about the protection of free speech, as the definition of fake news is contestable and as government intervention could be seen to privilege certain points of view (Baron and Crootof 2017).3

Regarding the second sub-mechanism, the negative effects of fake news (e.g., those affecting the sharer of content or the ‘third-person’; Jang and Kim 2018; Emanuelson 2017) should be studied and communicated meaningfully to the DC. This involves collaboration among SMPs, researchers, and governments to specify ways in which misinformation compromises individual or collective wellbeing (e.g., by advocating counterproductive and unhealthy practices during a pandemic like COVID-19; Cuan-Baltazar et al. 2020; Pennycook et al. 2020). It is assumed that the utility derived from ethical behavior would compel a rational or standardly ‘ethical’ DC, in the face of evidence about the harmful impacts of fake news, to adopt techniques related to the first mechanism (i.e., being more diligent about identifying and resisting fake news). Nevertheless, it is acknowledged that such interventions represent the outer limits of politically feasible policy reach in a ‘free society,’ and on matters of speech there is often little action government can take without establishing a direct link between speech and imminent material danger. The COVID-19 crisis is an opportunity to further analytically underscore the link among fake news, public perceptions and resulting behaviors, and negative public health outcomes; as the crisis continues to unfold in some countries while being relatively well mitigated in others, research will be able to empirically test these connections.

Mechanism 2 addresses the need to reduce the DC’s consumer utility of engaging with fake news. This is achieved by shifting the utility supply curve upwards. Remaining unchanged is the external setting, which determines the relative size of areas of high and low effort. For this model, the external setting is characterized by two factors: (1) the degree of maturity of the SMP with respect to organization-level regulatory mechanisms that address fake news and any willfully harmful exploitation of the platform, and (2) the self-regulating and discerning behaviors of users with respect to fake news. Within the second mechanism, the constancy of utility that an individual derives from ethical behavior, as represented between the default and alternative scenarios (Q and Q’; Fig. 5), eliminates the need to directly appeal on a policy level to ethical sensibilities as a motivation for high effort. With a DC defaulting to the high-effort zone, external circumstances reflect an ethical standard on the part of the DC’s social media community that may compel the DC to resist fake news (for a discussion about the relationship between group influence and individual-level media and technology use, see Kim et al. 2016, Contractor et al. 1996, and Fulk et al. 1990). The negative effects of engaging with fake news—as made clear to the DC through action items for the first mechanism—are regulated by a ‘social sanctioning’ mechanism (e.g., through public shunning or shaming the DC for sharing fake news) that can be motivated by what Batson and Powell (2003) describe as altruism, collectivism, and prosocial behavior. This community-based policing also reflects the kind of collective action observed in the absence of adequate regulatory mechanisms, as discussed by new institutionalism scholars including Ostrom (1990).

Finally, it is necessary to broaden this analytical perspective by acknowledging the influence of power and control over information. SMPs are owned and controlled by what have arguably become some of the twenty-first century’s most dominant and politically connected commercial actors. As in many industries, the SMP market structure is shaped by consolidation pressures, and these are evident in examples like Facebook’s ownership of picture sharing platform Instagram and mobile messaging application WhatsApp, and in Google’s ownership of video sharing platform YouTube and online advertising company DoubleClick. Growing political scrutiny has focused on the political relationships these and other major internet conglomerates have cultivated with American policymakers (Moore and Tambini 2018), on their monopolistic and accumulative behaviors (Ouellet 2019), on their influence on voter preference (Kreiss and McGregor 2019; Wilson 2019), and on their shaping of discourses about issues related to privacy and user identity (Hoffmann et al. 2018). Amidst growing concern about the collection and use of private information and the perceived preference given to content reflecting certain political ideologies, calls have been issued to strengthen regulations on large technology firms (Flew et al. 2019; Smyth 2019; Hemphill 2019). As society grows more technologically connected and the dissemination of information more democratized, the contribution of an informed public to the functionality of representative and democratic systems of government and policymaking grows increasingly dependent on the commercial choices of a relatively small number of powerful technology companies. It is thus appropriate to interpret the findings of this study within the larger context of an information and communications ecosystem whose rules appear to be defined as much by commercial interest as by public policy. In executing the policy recommendations outlined in this section, policymakers should remain aware of power dynamics in commercial spheres and maintain objectivity, fairness, and so-called arm’s-length distance from SMPs and related entities subject to regulation.

Conclusion

This article has examined the dynamics of how social media users react to fake news, with findings that support targeted public policies and SMP strategies and that underscore the role of informal influence and social sanctioning among members of personal online networks. The study’s formal mathematical model conceptualizes the effort level of a DC in resisting fake news when encountered, illustrating how a decrease in the cost of high effort (e.g., more convenient ability to filter content) and an increase in the cost of low effort (e.g., negative consequences of engaging with fake news) can nudge the DC toward a more diligent posture in the face of misinformation. These findings lend depth to existing studies about how social media users react to fake news, including through self-guided verification, correction, and responses to correction (Tandoc et al. 2018; Lewandowsky et al. 2017; Zubiaga and Ji 2014). They also confirm related findings by Pennycook and Rand (2019) that a DC’s susceptibility to fake news may be a function more of lax effort in analytical reasoning than of partisanship and its manifestation in ‘motivated reasoning.’ These findings provide support for formal interventions by governments (e.g., guidelines, protocols, and regulations on content) and by SMPs (e.g., algorithms and crowdsourcing for content verification).

It is appropriate to acknowledge the model’s limitations: as with any model based on human or collective social behavior, the certitude with which quantification and statistical inferences can be made is lower than for other disciplines, which potentially leads to the trap of false precision. As such, policymakers should be duly cautious about over-reliance on this model and indeed all models based on social science, as some assumptions about the use of rational choice in policy decisions have long been challenged (Hay 2004; Ostrom 1991; Kahneman 1994). As previously stated, the issue of cognitive bias is salient in any model that addresses the behavior of actors in interactive settings like social media and political arenas, where ideologies often influence cognitive processes. This model rests on assumptions about behavior that are based on existing theories and empirical observations, and the transparency with which this article has sought to introduce it is intended to help analysts fully understand, apply, and modify it. We contend that the model provides a useful approximation appropriate for the policy field’s state of knowledge about human behavior and preference, particularly amidst the contestation of truth and socialization of information sharing that define the modern era. At the same time, we see the aforementioned caveat as an opportunity for future research, including the refinement of the model with further understandings about how individuals interact with fake news and about the efficacy with which public policies and the actions of SMPs are capable of limiting the spread of fake news and mitigating its impact.

The challenge of fake news not only mandates consideration of individual behaviors and their aggregation across a community of hundreds of millions of social media users, but also invites abstract contemplation about the construction of truth in what has been labeled a ‘post-factual’ era (Perl et al. 2018; Berling and Bueger 2017). The democratization of fact-finding and dissemination, made possible by the proliferation of information and communications technology, provides an egalitarian platform for any user of any interest, including the politically opportunistic and malicious. The rostrum of narrative authority is no longer solely occupied by the ‘fourth estate’ (newspapers ‘of record’ and network or public broadcast media). With the growing sophistication and savviness of fake news operatives has come a torrent of misinformation; at the same time, many users are likewise savvy in their recognition and dismissal of fake news. In the context of COVID-19, the influence of fake news and misinformation on the behavior of even a modest share of the population could substantially compromise crisis mitigation and recovery efforts. Despite their access to high-level official information, even political leaders have shown vulnerability to the allure of fake news in the COVID-19 pandemic. An example is the promotion by US President Donald Trump of the anti-malarial drug hydroxychloroquine, a drug for which the scientific community lacked, at the time, full evidence of efficacy in treating COVID-19 (Owens 2020). The intuitive policy intervention would appear to be more robust means of fact-checking content on social media and efforts to disabuse the public of false notions concerning the science of the virus. Nevertheless, according to Van Bavel et al. (2020), ‘fact-checking may not keep up with the vast amount of false information produced in times of crisis like a pandemic’ (n.p.). Additionally, Fischer (2020) argues that facts are no tonic for ideological denialism on matters related to science. Such episodes illustrate the existential risks posed by political forces built on the redefinition or rejection of scientific fact.

In closing, it is appropriate to consider practical pathways for policymaking amidst the rise of fake news. Careful not to imply that postmodernism is responsible for fake news, Fischer (2020) draws from an earlier work about climate change policy (2009) in arguing that ‘the public and its politicians […] need to find ways to develop a common, socially meaningful dialogue that moves beyond acrimonious rhetoric to permit an authentic socio-cultural discussion’ (p. 149). The uncomfortable dilemma for research about the politics of contentious issues like climate change and pandemic response is how to reconcile the preeminence of scientific fact in official policy discourses with constructivist and deliberative perspectives that may exist within or outside such discourses. The precarity of the COVID-19 crisis, as reminiscent of longer-evolving crises such as climate change, suggests urgent questions for future research. Does the raucous debate about the severity of COVID-19, in a country like the United States, indicate democratic robustness and political process legitimacy? Are all discourses, even as ‘alternative truths,’ equally legitimate as policy inputs in deliberative settings? Is scientific evidence politically assailable as a product of elite agendas and framing efforts? Research is thus needed to map narratives in the virtual realm and ‘contact-trace’ their origins and later influence on discourses and policymaking. At a general level, this study provides a foundation for exploring how post-truth narratives are reproduced in the commons, not only in the COVID-19 crisis but also in unforeseen crises that will inevitably arise.

Footnotes

We do not consider consumer utility α to be a policy parameter because it is structurally or endogenously associated with the DC and thus not easily altered through public policy.

In the USA, public awareness about the issue of SMP-driven content verification grew after Twitter added a link into posts by President Donald Trump concerning accusations of voter fraud. According to a New York Times article (Conger and Alba 2020), ‘the links — which were in blue lettering at the bottom of the posts and punctuated by an exclamation mark — urged people to “get the facts” about voting by mail. Clicking on the links led to a CNN story that said Mr. Trump’s claims were unsubstantiated and to a list of bullet points that Twitter had compiled rebutting the inaccuracies.’

In reference to the previous example involving US President Donald Trump’s Twitter posts, attempts to regulate fake news, or to allow it to be published but with user warnings, engendered a politically charged backlash that claimed ideological suppression. This led to a rise in attention within some ideological communities to an alternative SMP (called ‘Parler’) that claims to be unbiased; a conservative commentator implied that no post on Parler would be fact-checked (Brewster 2020).

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- Agustín, F. (2014). Echo chamber. The European Parliament and citizen participation in the public policy process. In The changing role of law in the age of supra-and transnational governance (pp. 287–300). Nomos Verlagsgesellschaft mbH & Co. KG.

- Allcott H, Gentzkow M. Social media and fake news in the 2016 election. Journal of Economic Perspectives. 2017;31(2):211–236.

- Armus, T., & Hassan, J. (2020). ‘Go to China if you want communism’: Anti-quarantine protester clashes with people in scrubs. Washington Post, April 20. https://www.washingtonpost.com/nation/2020/04/20/go-china-if-you-want-communism-anti-quarantine-protester-clashes-with-people-scrubs/.

- Barberá P, Jost JT, Nagler J, Tucker JA, Bonneau R. Tweeting from left to right: Is online political communication more than an echo chamber? Psychological Science. 2015;26(10):1531–1542. doi: 10.1177/0956797615594620.

- Baron, S., & Crootof, R. (2017). Fighting fake news. The Information Society Project and The Floyd Abrams Institute for Freedom of Expression, workshop report. https://law.yale.edu/sites/default/files/area/center/isp/documents/fighting_fake_news_-_workshop_report.pdf.

- Batson CD, Powell AA. Altruism and prosocial behavior. Handbook of Psychology. 2003;5:463–484.

- Beck PJ, Jung WO. Taxpayer compliance under uncertainty. Journal of Accounting and Public Policy. 1989;8(1):1–27.

- Berling TV, Bueger C. Expertise in the age of post-factual politics: An outline of reflexive strategies. Geoforum. 2017;84:332–341.

- Boberg, S., Quandt, T., Schatto-Eckrodt, T., & Frischlich, L. (2020). Pandemic populism: Facebook pages of alternative news media and the corona crisis—A computational content analysis. arXiv preprint arXiv:2004.02566.

- Brandtzaeg PB, Lüders M, Spangenberg J, Rath-Wiggins L, Følstad A. Emerging journalistic verification practices concerning social media. Journalism Practice. 2016;10(3):323–342.

- Brewster, J. (2020). As Twitter labels Trump tweets, some Republicans flock to new social media site. Forbes, June 25. https://www.forbes.com/sites/jackbrewster/2020/06/25/as-twitter-labels-trump-tweets-some-republicans-flock-to-new-social-media-site/#f87653d78c8f.

- Caprara GV, Vecchione M. Personality approaches to political behavior. In: Huddy L, Sears DO, Levy JS, editors. The Oxford handbook of political psychology. Oxford: Oxford University Press; 2013. pp. 23–58.

- Castellacci F, Tveito V. Internet use and well-being: A survey and a theoretical framework. Research Policy. 2018;47(1):308–325.

- Cinelli, M., Quattrociocchi, W., Galeazzi, A., Valensise, C. M., Brugnoli, E., Schmidt, A. L., et al. (2020). The COVID-19 social media infodemic. arXiv preprint arXiv:2003.05004.

- Cohen JN. Exploring echo-systems: How algorithms shape immersive media environments. Journal of Media Literacy Education. 2018;10(2):139–151.

- Conger, K., & Alba, D. (2020). Twitter refutes inaccuracies in Trump’s tweets about mail-in voting. New York Times, 20 May. https://www.nytimes.com/2020/05/26/technology/twitter-trump-mail-in-ballots.html.

- Contractor NS, Seibold DR, Heller MA. Interactional influence in the structuring of media use in groups: Influence in members’ perceptions of group decision support system use. Human Communication Research. 1996;22(4):451–481.

- Cuan-Baltazar JY, Muñoz-Perez MJ, Robledo-Vega C, Pérez-Zepeda MF, Soto-Vega E. Misinformation of COVID-19 on the internet: Infodemiology study. JMIR Public Health and Surveillance. 2020;6(2):e18444. doi: 10.2196/18444.

- Devine CJ. Ideological social identity: Psychological attachment to ideological in-groups as a political phenomenon and a behavioral influence. Political Behavior. 2015;37(3):509–535.

- DiFranzo D, Gloria-Garcia K. Filter bubbles and fake news. XRDS: Crossroads, The ACM Magazine for Students. 2017;23(3):32–35.

- Duckitt J, Sibley CG. Personality, ideological attitudes, and group identity as predictors of political behavior in majority and minority ethnic groups. Political Psychology. 2016;37(1):109–124.

- Edis T. A revolt against expertise: Pseudoscience, right-wing populism, and post-truth politics. Disputatio. 2020;9(13):1–29.

- Eisenhauer JG. The shadow price of morality. Eastern Economic Journal. 2006;32(3):437–456.

- Eisenhauer JG. Ethical preferences, risk aversion, and taxpayer behavior. The Journal of Socio-Economics. 2008;37(1):45–63.

- Emanuelson E. Fake left, fake right: Promoting an informed public in the era of alternative facts. Administrative Law Review. 2017;70(1):209–232.

- Fang A, Habel P, Ounis I, MacDonald C. Votes on twitter: Assessing candidate preferences and topics of discussion during the 2016 US presidential election. SAGE Open. 2019;9(1):2158244018791653.

- Fischer F. Democracy and expertise: Reorienting policy inquiry. Oxford: Oxford University Press; 2009.

- Fischer F. Knowledge politics and post-truth in climate denial: On the social construction of alternative facts. Critical Policy Studies. 2019;13(2):133–152.

- Fischer F. Post-truth politics and climate denial: Further reflections. Critical Policy Studies. 2020;14:124–130.

- Flaxman S, Goel S, Rao JM. Filter bubbles, echo chambers, and online news consumption. Public Opinion Quarterly. 2016;80(S1):298–320.

- Flew T, Martin F, Suzor N. Internet regulation as media policy: Rethinking the question of digital communication platform governance. Journal of Digital Media & Policy. 2019;10(1):33–50.

- Frenkel, S., Alba, D., & Zhong, R. (2020). Surge of virus misinformation stumps Facebook and Twitter. The New York Times. March 8. https://www.nytimes.com/2020/03/08/technology/coronavirus-misinformation-social-media.html.

- Fulk J, Schmitz J, Steinfield CW. A social influence model of technology use. Organizations and Communication Technology. 1990;117:140.

- Fung ICH, Fu KW, Chan CH, Chan BSB, Cheung CN, Abraham T, Tse ZTH. Social media’s initial reaction to information and misinformation on Ebola, August 2014: Facts and rumors. Public Health Reports. 2016;131(3):461–473. doi: 10.1177/003335491613100312.

- Gaughan AJ. Illiberal democracy: The toxic mix of fake news, hyperpolarization, and partisan election administration. Duke Journal of Constitutional Law & Public Policy. 2016;12:57.

- Guess A, Nagler J, Tucker J. Less than you think: Prevalence and predictors of fake news dissemination on Facebook. Science Advances. 2019;5(1):eaau4586. doi: 10.1126/sciadv.aau4586.

- Hanafiah, K. M., & Wan, C. D. (2020). Public knowledge, perception and communication behavior surrounding COVID-19 in Malaysia. https://advance.sagepub.com/articles/Public_knowledge_perception_and_communication_behavior_surrounding_COVID-19_in_Malaysia/12102816.

- Hartley K, Tortajada C, Biswas AK. A formal model concerning policy strategies to build public acceptance of potable water reuse. Journal of Environmental Management. 2019;250:109505. doi: 10.1016/j.jenvman.2019.109505.

- Hay C. Theory, stylized heuristic or self-fulfilling prophecy? The status of rational choice theory in public administration. Public Administration. 2004;82(1):39–62.

- He L, Yang H, Xiong X, Lai K. Online rumor transmission among younger and older adults. SAGE Open. 2019;9(3):2158244019876273.

- Hemphill TA. ‘Techlash’, responsible innovation, and the self-regulatory organization. Journal of Responsible Innovation. 2019;6(2):240–247.

- Hoffmann AL, Proferes N, Zimmer M. “Making the world more open and connected”: Mark Zuckerberg and the discursive construction of Facebook and its users. New Media & Society. 2018;20(1):199–218.

- Hogan, M. (2020). The covidiocy chronicles: Who are this week’s biggest celebrity fools? The Telegraph (UK). 14 April. https://www.telegraph.co.uk/music/artists/celebrity-covididiots-stars-making-fools-thanks-coronavirus/.

- Hu, Z., Yang, Z., Li, Q., Zhang, A., & Huang, Y. (2020). Infodemiological study on COVID-19 epidemic and COVID-19 infodemic. Under review. https://www.researchgate.net/profile/Zhiwen_Hu/publication/339501808_Infodemiological_study_on_COVID-19_epidemic_and_COVID-19_infodemic/links/5e78a0834585157b9a547536/Infodemiological-study-on-COVID-19-epidemic-and-COVID-19-infodemic.pdf.

- Hua J, Shaw R. Corona virus (COVID-19) “Infodemic” and emerging issues through a data lens: The case of China. International Journal of Environmental Research and Public Health. 2020;17(7):2309. doi: 10.3390/ijerph17072309.

- Huber RA. The role of populist attitudes in explaining climate change skepticism and support for environmental protection. Environmental Politics. 2020;29(6):959–982.

- Jang SM, Kim JK. Third person effects of fake news: Fake news regulation and media literacy interventions. Computers in Human Behavior. 2018;80:295–302.

- Jones BD, Baumgartner FR, De La Mare E. The supply of information and the size of government in the United States. Seattle: Center for American Politics and Public Policy, University of Washington; 2005.

- Kahneman D. New challenges to the rationality assumption. Journal of Institutional and Theoretical Economics (JITE)/Zeitschrift für die gesamte Staatswissenschaft. 1994;150(1):18–36.

- Kim H, Fording RC. Voter ideology in Western democracies, 1946–1989. European Journal of Political Research. 1998;33(1):73–97.

- Kim, J., Tabibian, B., Oh, A., Schölkopf, B., & Gomez-Rodriguez, M. (2018). Leveraging the crowd to detect and reduce the spread of fake news and misinformation. In Proceedings of the eleventh ACM international conference on web search and data mining (pp. 324–332).

- Kim Y, Wang Y, Oh J. Digital media use and social engagement: How social media and smartphone use influence social activities of college students. Cyberpsychology, Behavior, and Social Networking. 2016;19(4):264–269. doi: 10.1089/cyber.2015.0408. [DOI] [PubMed] [Google Scholar]

- Kinder DR. Political person perception: The asymmetrical influence of sentiment and choice on perceptions of presidential candidates. Journal of Personality and Social Psychology. 1978;36(8):859. [Google Scholar]

- Kouzy R, Abi Jaoude J, Kraitem A, El Alam MB, Karam B, Adib E, et al. Coronavirus goes viral: Quantifying the COVID-19 misinformation epidemic on Twitter. Cureus. 2020;12(3):e7255. doi: 10.7759/cureus.7255. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kreiss D, McGregor SC. The “arbiters of what our voters see”: Facebook and Google’s struggle with policy, process, and enforcement around political advertising. Political Communication. 2019;36(4):499–522. [Google Scholar]

- Kumar KK, Geethakumari G. Detecting misinformation in online social networks using cognitive psychology. Human-centric Computing and Information Sciences. 2014;4(1):1–22. [Google Scholar]

- Lazer DM, Baum MA, Benkler Y, Berinsky AJ, Greenhill KM, Menczer F, Metzger MJ, Nyhan B, Pennycook G, Rothschild D, Schudson M, Sloman SA, Sunstein CR, Thorson EA, Watts DJ, Zittrain J. The science of fake news. Science. 2018;359(6380):1094–1096. doi: 10.1126/science.aao2998. [DOI] [PubMed] [Google Scholar]

- Lee NM. Fake news, phishing, and fraud: A call for research on digital media literacy education beyond the classroom. Communication Education. 2018;67(4):460–466. [Google Scholar]

- Lejano RP, Dodge J. The narrative properties of ideology: The adversarial turn and climate skepticism in the USA. Policy Sciences. 2017;50(2):195–215. [Google Scholar]

- Lewandowsky S, Ecker UK, Cook J. Beyond misinformation: Understanding and coping with the “post-truth” era. Journal of Applied Research in Memory and Cognition. 2017;6(4):353–369. [Google Scholar]

- Lewandowsky S, Oreskes N, Risbey JS, Newell BR, Smithson M. Seepage: Climate change denial and its effect on the scientific community. Global Environmental Change. 2015;33:1–13. [Google Scholar]

- Lin JY, Vu K, Hartley K. A modeling framework for enhancing aid effectiveness. Journal of Economic Policy Reform. 2019;23(2):138–160. [Google Scholar]

- McGuire WJ. Some contemporary approaches. In: Berkowitz L, editor. Advances in experimental social psychology. London: Academic Press; 1964. pp. 191–229. [Google Scholar]

- McPherson M, Smith-Lovin L, Cook JM. Birds of a feather: Homophily in social networks. Annual Review of Sociology. 2001;27(1):415–444. [Google Scholar]

- Medford, R. J., Saleh, S. N., Sumarsono, A., Perl, T. M., & Lehmann, C. U. (2020). An “Infodemic”: Leveraging high-volume Twitter data to understand public sentiment for the COVID-19 outbreak. medRxiv.

- Minar DM. Ideology and political behavior. Midwest Journal of Political Science. 1961;5(4):317–331.

- Moore M, Tambini D, editors. Digital dominance: The power of Google, Amazon, Facebook, and Apple. Oxford: Oxford University Press; 2018.

- Mullainathan S, Shleifer A. The market for news. American Economic Review. 2005;95(4):1031–1053.

- New York Times. (2020). Warnings of the dangers of ingesting disinfectants follow Trump’s remarks. April 24. https://www.nytimes.com/2020/04/24/us/coronavirus-us-usa-updates.html.

- Ostrom E. Governing the commons: The evolution of institutions for collective action. Cambridge: Cambridge University Press; 1990.

- Ostrom E. Rational choice theory and institutional analysis: Toward complementarity. The American Political Science Review. 1991;85(1):237–243.

- Ouellet, M. (2019). Capital as power: Facebook and the symbolic monopoly rent. In E. George (Ed.), Digitalization of society and socio-political issues 1: Digital, communication and culture (Chap. 8, pp. 81–94).

- Owens B. Excitement around hydroxychloroquine for treating COVID-19 causes challenges for rheumatology. The Lancet Rheumatology. 2020;2:e257. doi: 10.1016/S2665-9913(20)30089-8.

- Papanastasiou Y. Fake news propagation and detection: A sequential model. Management Science. 2020;66:1826–1846.

- Pennycook, G., McPhetres, J., Zhang, Y., & Rand, D. (2020). Fighting COVID-19 misinformation on social media: Experimental evidence for a scalable accuracy nudge intervention. Working paper.

- Pennycook G, Rand DG. Fighting misinformation on social media using crowdsourced judgments of news source quality. Proceedings of the National Academy of Sciences. 2019;116(7):2521–2526. doi: 10.1073/pnas.1806781116.

- Perl A, Howlett M, Ramesh M. Policy-making and truthiness: Can existing policy models cope with politicized evidence and willful ignorance in a “post-fact” world? Policy Sciences. 2018;51(4):581–600.

- Polletta F, Callahan J. Deep stories, nostalgia narratives, and fake news: Storytelling in the Trump era. In: Mast J, Alexander JC, editors. Politics of meaning/meaning of politics. Cham: Palgrave Macmillan; 2019. pp. 55–73.

- Porumbescu GA. Assessing the implications of online mass media for citizens’ evaluations of government. Policy Design and Practice. 2018;1(3):233–240.

- Pulido CM, Villarejo-Carballido B, Redondo-Sama G, Gómez A. COVID-19 infodemic: More retweets for science-based information on coronavirus than for false information. International Sociology. 2020;35(4):377–392.

- Rini R. Fake news and partisan epistemology. Kennedy Institute of Ethics Journal. 2017;27(2):E43–E64.

- Rodríguez CP, Carballido BV, Redondo-Sama G, Guo M, Ramis M, Flecha R. False news around COVID-19 circulated less on Sina Weibo than on Twitter. How to overcome false information? International and Multidisciplinary Journal of Social Sciences. 2020;9(2):107–128.

- Schifferes S, Newman N, Thurman N, Corney D, Göker A, Martin C. Identifying and verifying news through social media: Developing a user-centred tool for professional journalists. Digital Journalism. 2014;2(3):406–418.

- Shaw R, Kim YK, Hua J. Governance, technology and citizen behavior in pandemic: Lessons from COVID-19 in East Asia. Progress in Disaster Science. 2020;6:100090. doi: 10.1016/j.pdisas.2020.100090.

- Shear, M. D., & Mervosh, S. (2020). Trump encourages protest against governors who have imposed virus restrictions. New York Times, April 18. https://www.nytimes.com/2020/04/17/us/politics/trump-coronavirus-governors.html.

- Shu K, Sliva A, Wang S, Tang J, Liu H. Fake news detection on social media: A data mining perspective. ACM SIGKDD Explorations Newsletter. 2017;19(1):22–36.

- Singh, L., Bansal, S., Bode, L., Budak, C., Chi, G., Kawintiranon, K., et al. (2020). A first look at COVID-19 information and misinformation sharing on Twitter. arXiv preprint arXiv:2003.13907.

- Smyth SM. The Facebook Conundrum: Is it time to usher in a new era of regulation for big tech? International Journal of Cyber Criminology. 2019;13(2):578–595.

- Sommariva S, Vamos C, Mantzarlis A, Đào LUL, Martinez Tyson D. Spreading the (fake) news: Exploring health messages on social media and the implications for health professionals using a case study. American Journal of Health Education. 2018;49(4):246–255.

- Spinney L. In Congo, fighting a virus and a groundswell of fake news. Science. 2019;363(6424):213–214. doi: 10.1126/science.363.6424.213.

- Spohr D. Fake news and ideological polarization: Filter bubbles and selective exposure on social media. Business Information Review. 2017;34(3):150–160.

- Srinivasan TN. Tax evasion: A model. Journal of Public Economics. 1973;2:339–346.

- Stanley, M., Seli, P., Barr, N., & Peters, K. (2020). Analytic-thinking predicts hoax beliefs and helping behaviors in response to the COVID-19 pandemic. 10.31234/osf.io/7456n.

- Szabados K. Can we win the war on science? Understanding the link between political populism and anti-science politics. Populism. 2019;2(2):207–236.

- Tandoc EC, Jr, Lim D, Ling R. Diffusion of disinformation: How social media users respond to fake news and why. Journalism. 2020;21(3):381–398.

- Tandoc EC, Jr, Lim ZW, Ling R. Defining “fake news”: A typology of scholarly definitions. Digital Journalism. 2018;6(2):137–153.

- Tasnim, S., Hossain, M., & Mazumder, H. (2020). Impact of rumors or misinformation on coronavirus disease (COVID-19) in social media. 10.31235/osf.io/uf3zn.

- Taylor, N. (2003). Net-spread panic proves catchier than a killer virus. South China Morning Post, April 8. https://www.scmp.com/article/411771/net-spread-panic-proves-catchier-killer-virus.

- Thelwall, M., & Thelwall, S. (2020). Retweeting for COVID-19: Consensus building, information sharing, dissent, and lockdown life. arXiv preprint arXiv:2004.02793.

- Tong, A., Du, D. Z., & Wu, W. (2018). On misinformation containment in online social networks. In Advances in neural information processing systems (pp. 341–351).

- Torres, R., Gerhart, N., & Negahban, A. (2018). Combating fake news: An investigation of information verification behaviors on social networking sites. In Proceedings of the 51st Hawaii international conference on system sciences.

- Tufekci Z. Facebook said its algorithms do help form echo chambers, and the tech press missed it. New Perspectives Quarterly. 2015;32(3):9–12.

- U.S. White House. (2020). Remarks by President Trump, Vice President Pence, and members of the coronavirus task force in press conference. Press briefing, 27 February. https://www.whitehouse.gov/briefings-statements/remarks-president-trump-vice-president-pence-members-coronavirus-task-force-press-conference/.

- Van Bavel JJ, Baicker K, Boggio PS, Capraro V, Cichocka A, Cikara M, et al. Using social and behavioural science to support COVID-19 pandemic response. Nature Human Behaviour. 2020;4:460–471. doi: 10.1038/s41562-020-0884-z.

- Van Bavel JJ, Pereira A. The partisan brain: An identity-based model of political belief. Trends in Cognitive Sciences. 2018;22(3):213–224. doi: 10.1016/j.tics.2018.01.004.

- van der Linden S, Leiserowitz A, Rosenthal S, Maibach E. Inoculating the public against misinformation about climate change. Global Challenges. 2017;1(2):1600008. doi: 10.1002/gch2.201600008.

- Vegetti F, Širinić D. Left–right categorization and perceptions of party ideologies. Political Behavior. 2019;41(1):257–280.

- Waszak PM, Kasprzycka-Waszak W, Kubanek A. The spread of medical fake news in social media—The pilot quantitative study. Health Policy and Technology. 2018;7(2):115–118.

- Wilson, R. (2019). Cambridge Analytica, Facebook, and influence operations: A case study and anticipatory ethical analysis. In European conference on cyber warfare and security (pp. 587–595). London: Academic Conferences International Limited.

- Wong JE, Leo YS, Tan CC. COVID-19 in Singapore—Current experience: Critical global issues that require attention and action. JAMA, the Journal of the American Medical Association. 2020;323(13):1243–1244. doi: 10.1001/jama.2020.2467.

- Yaniv G. Tax evasion and the income tax rate: a theoretical reexamination. Public Finance = Finances publiques. 1994;49(1):107–112.

- Zarocostas J. How to fight an infodemic. The Lancet. 2020;395(10225):676. doi: 10.1016/S0140-6736(20)30461-X.

- Zubiaga A, Ji H. Tweet, but verify: Epistemic study of information verification on Twitter. Social Network Analysis and Mining. 2014;4(1):163.

- Zuiderveen Borgesius F, Trilling D, Möller J, Bodó B, De Vreese CH, Helberger N. Should we worry about filter bubbles? Internet Policy Review: Journal on Internet Regulation. 2016;5(1):1–6.