Abstract

This paper examines an under-explored unintended consequence of public reporting: the potential for demand rationing. Public reporting, although intended to increase consumer access to high-quality products, may have provided the perverse incentive for high-quality providers facing fixed capacity and administrative pricing to avoid less profitable types of residents. Using data from the nursing home industry before and after the implementation of the public reporting system in 2002, we find that high-quality nursing homes facing capacity constraints reduced admissions of less profitable Medicaid residents while increasing the more profitable Medicare and private-pay admissions, relative to low-quality nursing homes facing no capacity constraints. These effects, although small in magnitude, are consistent with provider rationing of demand on the basis of profitability and underscore the important role of institutional details in designing effective public reporting systems for regulated industries.

Keywords: demand rationing, public reporting, capacity constraints, administrative pricing, nursing home industry, Nursing Home Compare

1. INTRODUCTION

In markets with asymmetric information, quality disclosure could increase consumer access to high-quality products and provide incentives for quality improvement. Publicly reported quality information (hereafter ‘public reporting’) has become a prominent part of the quality improvement landscape over the last quarter century across the healthcare spectrum. The Affordable Care Act relies heavily on market-based reforms such as public reporting in an effort to maintain and encourage quality while holding down costs.

However, in regulated industries with capacity constraints and administrative pricing, public reporting may cause the unintended consequence of reducing access to high-quality products for at least some consumers. Specifically, if public reporting increases demand for high-quality products, the quantity demanded for those products may rise above the quantity supplied in the presence of capacity constraints. Coupled with largely fixed administrative pricing, this excess demand has to be rationed by some non-price mechanism, which is unlikely to be random. Indeed, it is possible that the consumers who are a primary target of public reporting in health care—those who generally are underserved and may be less able to identify high-quality providers on their own—are most likely to be crowded out of high-quality providers.

Using data from the nursing home industry, we examine this under-explored issue of demand rationing under public reporting. We classify nursing homes along the dimensions of quality and capacity constraints and then use a difference-in-differences approach on the basis of both this classification and the timing of the intervention to identify the effect of quality disclosure, comparing how the payer-specific admissions changed for high-quality, capacity-constrained nursing homes after reporting, relative to other types of nursing homes. We find that after reporting, high-quality, capacity-constrained nursing homes increased their admissions of the more lucrative Medicare and private-pay residents and decreased their admissions of the less lucrative Medicaid residents, relative to low-quality nursing homes facing no capacity constraints. Our findings are consistent with demand rationing in the presence of capacity constraints and substantial differences in profit margin across residents of different payers in the industry. Furthermore, our findings underscore the important role of institutional details in designing effective public reporting for regulated industries. The magnitude of the effect is modest but potentially meaningful to nursing homes.

In theory, these findings could also be consistent with a demand-side response in the form of heterogeneous consumer behavior by payer type. If the more profitable patient types, Medicare and private-pay patients, are more responsive to quality information than the less profitable type, Medicaid patients, the less responsive Medicaid patients could be crowded out of high-quality nursing homes even if the nursing homes themselves do not engage in patient selection on profitability. However, we find that the decrease in Medicaid admissions at high-quality, capacity-constrained homes is considerably larger in states with lower Medicaid rates. This result cannot be explained by heterogeneous consumer response by payer type because differences in consumer response to quality information by payer type should not be correlated with state Medicaid rates. Thus, although we cannot definitively rule out heterogeneous consumer response by payer type, we believe our results are driven mainly by provider demand rationing.

This study contributes to several strands of the literature. First, a large literature has examined the effects of public reporting on provider and consumer behavior in various sectors (see Dranove and Jin (2010) for a review).1 However, this literature has paid little attention to the role of capacity constraints that are common in healthcare sectors; thus, a primary contribution of our paper is the examination of the role of capacity constraints in provider response to public reporting, given administratively set prices. Second, our study relates indirectly to the literature on patient selection behavior (Dranove et al., 2003; Gowrisankaran and Town, 2003; Werner et al., 2005, 2011; Mukamel et al., 2009), particularly on the incentive to select on health risk under public reporting. Although little support has been found for selection on health risk in response to public reporting for nursing homes, it is arguably more plausible for nursing homes to base admissions decisions on profitability rather than on health risk, as attracting more profitable patients provides a more immediate and certain payoff than avoiding sicker patients.2 Public reporting changes nursing homes’ ability to attract more profitable patients, and this effect may outweigh any incentive to avoid sicker patients. Third, our study is related to the literature on Medicaid access challenges due to low reimbursement rates (Nyman, 1985, 1988; Grabowski, 2001) and related healthcare disparities issues (Casalino et al., 2007; Chien et al., 2007; Konetzka and Werner, 2009). These access and disparity issues may be exacerbated if disadvantaged residents are rationed out of high-quality providers.

2. BACKGROUND INFORMATION ON THE NURSING HOME INDUSTRY AND NHC

Broadly, nursing homes serve two markets, chronic care and post-acute care. Chronic-care residents have physical and/or cognitive impairments that necessitate assistance with activities of daily living. They are generally considered long-stay residents, with an average length of stay of about 2 years. Medicaid is the dominant payer, and the remaining residents are mainly self-pay.3 Most nursing homes also provide short-term post-acute rehabilitative care to individuals following a hospital stay, with an average length of stay of less than 30 days (Linehan, 2012). Medicare is the dominant payer for post-acute care. The general consensus is that private-pay and Medicare residents are associated with substantially higher profit margins than Medicaid residents (Troyer, 2002; Floyd, 2004; Feinstein and Fischbeck, 2005; Medicare Payment Advisory Commission, 2005). Thus, nursing homes seeking the most profitable residents will prefer Medicare and private-pay admissions first and Medicaid admissions last.

Capacity constraints have long been thought to play a role in nursing home quality. Excess demand is a direct consequence of Certificate of Need laws in many states, which have historically been used to limit capacity in order to control costs. Even though excess demand may no longer exist in many markets (Grabowski, 2001), a significant number of nursing homes still face capacity constraints in the form of high occupancy. In our national sample, about 25% of nursing homes have occupancy rates above 95%.

Nursing home quality has been under scrutiny for decades because of well-publicized, persistent quality problems. In 2002, the Centers for Medicare and Medicaid Services (CMS) launched a web-based public reporting system called Nursing Home Compare (NHC), which publishes quarterly ratings on chronic and post-acute care quality measures for all Medicare-certified or Medicaid-certified nursing homes (CMS, 2002). NHC was first launched as a pilot program in six states in April 2002 (Colorado, Florida, Maryland, Ohio, Rhode Island, and Washington) and then implemented nationally in November 2002. NHC initially rated nursing homes on ten quality measures, six for chronic care and four for post-acute care. CMS dropped one measure immediately; thus, the system effectively included nine measures, which were consistently reported throughout our study period. Table I lists the measures used in our analysis.4 NHC also reports other quality information such as staffing (licensed nurse and certified nurse aide hours per resident per day) and the total number of regulatory deficiency citations.5

Table I.

Quality measures included in initial launch of NHC (standardized so that higher numbers indicate higher quality, i.e., the scores reported in this table = 100% – the raw reported scores)

| Quality measure | Mean (standard deviation) |
|---|---|
| Chronic-care measures | |
| Percent of residents whose need for help with daily activities has increased | 84.2 (8.4) |
| Percent of residents with moderate to severe pain | 92.1 (6.8) |
| Percent of high-risk residents with pressure sores | 86.3 (7.4) |
| Percent of low-risk residents with pressure sores | 97.4 (2.9) |
| Percent of residents who were physically restrained | 90.9 (9.4) |
| Percent of residents with a urinary tract infection | 92.0 (5.1) |
| Post-acute measures | |
| Percent of residents with delirium | 96.4 (5.0) |
| Percent of residents with moderate to severe pain | 76.0 (14.4) |
| Percent of residents with improvement in walking | 94.4 (5.0) |

NHC, Nursing Home Compare.

The 2002 release of NHC was actively promoted through a multimedia campaign.6 Visits to the NHC website increased tenfold in the six pilot states; with the national launch of NHC, website visits quadrupled nationally, jumping from fewer than 100,000 visits per month to over 400,000 visits in November 2002 (Inspector General, 2004).

3. CONCEPTUAL FRAMEWORK

We characterize nursing home markets as subject to imperfect and asymmetric information, which prevents consumers from assessing clinical quality with certainty. In such a market, sellers face a demand curve that is relatively inelastic with respect to true quality and therefore choose a quality level lower than the socially optimal level under perfect information. Dranove and Satterthwaite (1992) posit that an improvement in quality information (such as public reporting) improves the precision with which consumers observe sellers’ quality, increasing the elasticity of demand with respect to quality. This implies that equilibrium quality increases with public reporting, improving welfare.7 This welfare improvement is the intended effect of most public reporting policies.

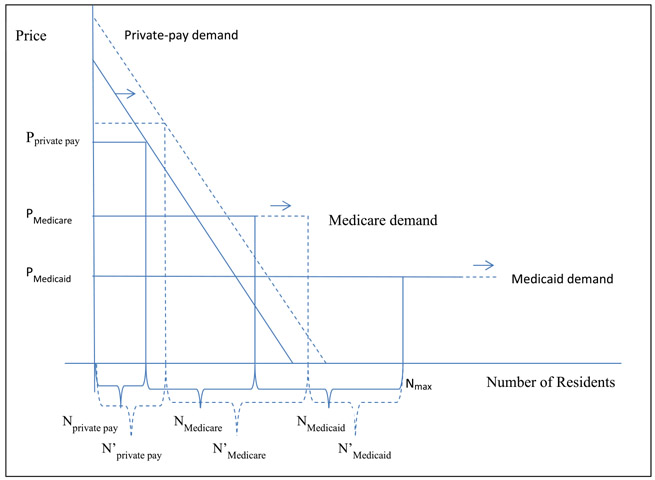

We base our analysis on both the Dranove and Satterthwaite model and a general model of excess demand with multiple payers used in prior nursing home research (Nyman, 1985). In Nyman’s model, nursing homes face a downward-sloping demand curve for private-pay residents and a horizontal demand curve for Medicaid residents at a constant price set by the government. We add Medicare and public reporting to this model, as depicted in Figure 1. Medicare prices, like Medicaid prices, are assumed to be constant and set by the government, reflected in a horizontal demand curve, but the Medicare price is assumed to be higher than the Medicaid price (costs are also higher, but not proportionately higher). Nursing homes choose the private-pay price that maximizes profits from private-pay residents and accept the corresponding number of private-pay admissions at a price that is higher than both the Medicare price and the Medicaid price. In this scenario, private-pay residents with high willingness to pay are admitted first ($N_{\text{private pay}}$); Medicare residents are admitted next ($N_{\text{Medicare}}$); and the remaining beds are filled with Medicaid residents ($N_{\text{Medicaid}}$), generally leaving excess demand by Medicaid residents because of fixed capacity ($N_{\max}$). Under public reporting, if consumers care about quality, disclosure of high quality could be expected to shift the private-pay demand curve outward, resulting in more private-pay residents. Demand by Medicare (and potentially Medicaid) residents will also increase, reflected in an extension of the horizontal demand curves. Therefore, for a high-quality, capacity-constrained nursing home facing the new demand curves under public reporting, private-pay admissions increase and Medicare admissions may also increase. Given fixed capacity, Medicaid admissions at high-quality, capacity-constrained homes will decrease, and the displaced Medicaid residents are more likely to land in low-quality homes, especially those without capacity constraints.
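The admission ordering in this framework can be illustrated with a small allocation sketch. It is a simplification rather than the model itself: the number of applicants from each payer is taken as given (in the model, the private-pay quantity results from the home's choice of price), beds are assumed to be filled strictly in the order private pay, Medicare, Medicaid, and all demand numbers are made up.

```python
# Hypothetical sketch: fill a fixed number of beds in payer-priority order.
PRIORITY = ("private_pay", "medicare", "medicaid")  # assumed profitability ranking


def admit_by_priority(capacity, demand):
    """Return admissions by payer when beds go to the most profitable payers first.

    capacity: total beds available (N_max in the text).
    demand: dict mapping payer -> number of residents seeking admission.
    """
    remaining = capacity
    admitted = {}
    for payer in PRIORITY:
        n = min(demand.get(payer, 0), remaining)
        admitted[payer] = n
        remaining -= n
    return admitted


# Illustrative (made-up) demand before and after high quality is disclosed.
before = admit_by_priority(100, {"private_pay": 30, "medicare": 20, "medicaid": 80})
after = admit_by_priority(100, {"private_pay": 40, "medicare": 25, "medicaid": 90})
print(before)  # {'private_pay': 30, 'medicare': 20, 'medicaid': 50}
print(after)   # {'private_pay': 40, 'medicare': 25, 'medicaid': 35}
```

Even though Medicaid demand rises in this example, Medicaid admissions fall from 50 to 35 because the higher-priority payers absorb the fixed beds, which is the rationing mechanism the framework describes.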

Figure 1.

Demand for nursing home care in a multi-payer, capacity-constrained nursing home when high quality is revealed

Note: This graph illustrates the admission strategy by a capacity-constrained nursing home that is disclosed to be high quality, both before and after the disclosure. Solid lines are for before the disclosure and dashed lines are for after the disclosure. See the text for details.

We therefore expect differential changes in payer-specific admissions and that these responses will vary by whether the nursing home is capacity constrained and reported to be of high quality. Specifically, we hypothesize that capacity-constrained homes that score well on NHC will increase private-pay and Medicare admissions but decrease Medicaid admissions, relative to non-capacity-constrained nursing homes that do not score well.

4. DATA AND EMPIRICAL APPROACH

To test our hypothesis, we classify nursing homes into four types according to whether they were revealed to be of high or low clinical quality, with or without capacity constraints. This classification allows us to examine the changes in payer-specific admissions for high-quality, capacity-constrained homes (those that potentially need to ration demand) relative to changes in payer-specific admissions for other types of nursing homes within the same market after public reporting.

To identify effects that can plausibly be attributed to public reporting, we adopt several strategies. First, we take advantage of the variation in timing of the implementation of NHC owing to the six-state pilot being launched 7 months earlier. This allows the use of a difference-in-differences estimator that captures the change in pilot states relative to all other states in April 2002 and the change in non-pilot states relative to pilot states in November 2002. Second, we include facility fixed effects and quarterly time dummies in all of our regressions to isolate changes over time in quality and outcomes, controlling for time effects and time-invariant omitted variables at the facility level. We also include state-specific year dummies to account for varying secular trends at the state level (e.g., state nursing home staffing regulation changes and Medicaid generosity changes over time). Third, using the algorithm published by the CMS, we are able to calculate quality measures at the facility level both before and after they were publicly reported; this allows us to control for any underlying relationship between quality and our outcomes prior to public reporting. Finally, we capitalize on the fact that quality measures were separately reported for the post-acute and chronic-care populations, which allows us to estimate changes in payer mix that are specific to the type of quality revealed for each population.

4.1. Data

We use two primary data sources. The first is the nursing home Minimum Data Set (MDS) for years 2000 to 2005. The MDS contains detailed clinical data collected at regular intervals for every resident in a Medicare-certified or Medicaid-certified nursing home. These data are used by the CMS to calculate the quality measures reported on NHC. We calculate from the MDS the quarterly facility-level publicly reported quality measures both before and after the implementation of NHC. The MDS also records the payer source for each resident upon admission, which we use to calculate payer-specific admissions.

We extract facility characteristics (bed size, ownership status, staffing, and deficiencies) from the Online Survey, Certification and Reporting (OSCAR) dataset. The OSCAR data are collected every 9 to 15 months from state inspections of all federally certified Medicare and Medicaid nursing homes in the USA.8 We obtain local market control variables, such as demographics and economic conditions, from the Census.

Our national dataset includes approximately 80,000 observations from approximately 16,000 skilled nursing facilities from 2000 to 2005. Table II provides summary statistics on this sample. To examine the demand rationing effect, we include in our analysis sample only those markets that have at least one high-quality and capacity-constrained nursing home and at least one nursing home of another type. Thus, the sample used in our main regression has approximately 64,000 observations on about 12,000 nursing homes.

Table II.

Summary statistics based on the 2000–2005 national sample

| Variables | Mean (standard deviation) |
|---|---|
| Facility-level variables (quarterly data) | |
| Total quarterly admissions | 48.4 (48.8) |
| Medicaid | 3.8 (5.5) |
| Medicare | 31.9 (34.7) |
| Private pay | 12.7 (18.9) |
| Medicaid county share of new admissions | 18.3 (28.1) |
| Medicare HRR share of new admissions | 2.1 (3.4) |
| Private-pay county share of new admissions | 19.0 (27.9) |
| Number of regulatory deficiencies | 6.2 (5.6) |
| Licensed nurses only | 1.5 (7.2) |
| CNAs only | 2.5 (6.5) |
| Government facility | 0.06 (0.24) |
| Not-for-profit facility | 0.28 (0.45) |
| For-profit facility | 0.66 (0.48) |
| Number of beds | 102.9 (66.8) |
| County-level control variables (annual data) | |
| County population in 1000s | 96.5 (304.2) |
| Proportion of county population, female | 50.5 (1.8) |
| Proportion of county population, male and age 65 years and older | 6.3 (1.8) |
| Proportion of county population, female and age 65 years and older | 8.6 (2.3) |
| Proportion of county population, non-Hispanic White | 82.0 (18.2) |
| Proportion of county population, non-Hispanic Black | 8.8 (14.4) |
| Proportion of county population, Hispanic of any race | 5.9 (11.3) |
| Proportion of county population in poverty | 13.2 (5.4) |
| Unemployment rate | 4.4 (1.6) |
| Median household income in $1000s | 36.4 (8.9) |
| # of hospital beds per 1000 county residents | 26.9 (30.4) |
| # of nurses per 1000 county residents | 29.2 (27.3) |

HRR, hospital referral region; CNAs, certified nursing aides.

4.1.1. Dependent variables.

We define payer-specific admissions as the number of residents of a specific payer type admitted to a given nursing home in a given quarter. We classify admissions as Medicare if they have a 5-day Medicare MDS assessment (required for Medicare payment), as Medicaid if they have any indication of Medicaid payment on their admission assessment and no Medicare 5-day assessment, and all others as private pay.9
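The payer rule above can be written as a short classification-and-count sketch. The record layout (facility, quarter, and two boolean flags) is hypothetical and does not reflect actual MDS field names; only the precedence of the rule comes from the text.

```python
from collections import Counter


def classify_payer(has_medicare_5day, medicaid_indicated):
    """Apply the payer rule described in the text to one admission."""
    if has_medicare_5day:  # a Medicare 5-day assessment takes precedence
        return "medicare"
    if medicaid_indicated:  # any Medicaid indication, no Medicare 5-day assessment
        return "medicaid"
    return "private_pay"  # all other admissions


def payer_admissions(admissions):
    """Count admissions per (facility, quarter, payer).

    admissions: iterable of (facility_id, quarter, has_medicare_5day,
    medicaid_indicated) tuples; this layout is hypothetical.
    """
    counts = Counter()
    for facility, quarter, medicare_5day, medicaid in admissions:
        counts[(facility, quarter, classify_payer(medicare_5day, medicaid))] += 1
    return counts


records = [
    ("A", "2002Q1", True, True),    # Medicare 5-day assessment -> Medicare
    ("A", "2002Q1", False, True),   # Medicaid indicated -> Medicaid
    ("A", "2002Q1", False, True),
    ("A", "2002Q1", False, False),  # neither -> private pay
]
counts = payer_admissions(records)
```

Note that a resident with both a Medicare 5-day assessment and a Medicaid indication is counted as Medicare, matching the ordering of the rule.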

4.1.2. Key independent variables.

The raw NHC quality measures are reported as percentages of residents who experienced a given adverse event. We convert each measure to 100 minus the raw percentage so that higher scores indicate higher quality (see Table I for the means of the converted quality measures). Given the high dimensionality of the individual quality measures, we calculate a z-score for each measure for a nursing home relative to all homes in the local market and then average the z-scores across all reported quality measures, obtaining an overall quality score for each nursing home. We also calculate overall quality scores separately for the chronic-care measures and for the post-acute care measures to test whether demand for the two types of care responds differently to the two types of quality information.
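A minimal sketch of the overall quality score for a single market follows. The use of the population standard deviation and the choice to give a measure with no variation in the market a z-score of zero are assumptions; the text does not specify either.

```python
from statistics import mean, pstdev


def overall_quality_scores(raw_pct_by_home):
    """Average within-market z-scores of converted NHC measures.

    raw_pct_by_home: dict mapping each home in ONE market to a list of raw
    percentages (share of residents with the adverse event), with measures
    in the same order for every home.
    """
    homes = list(raw_pct_by_home)
    # Convert so that higher = better: 100 minus the raw percentage.
    converted = {h: [100 - p for p in raw_pct_by_home[h]] for h in homes}
    n_measures = len(converted[homes[0]])
    z_scores = {h: [] for h in homes}
    for k in range(n_measures):
        values = [converted[h][k] for h in homes]
        mu, sd = mean(values), pstdev(values)  # population SD: an assumption
        for h in homes:
            # A measure with no variation in the market contributes a z-score of 0.
            z_scores[h].append(0.0 if sd == 0 else (converted[h][k] - mu) / sd)
    return {h: mean(z_scores[h]) for h in homes}


# Hypothetical market with three homes and two measures.
scores = overall_quality_scores({"A": [10, 20], "B": [20, 20], "C": [30, 20]})
```

Home A has the fewest adverse events on the first measure, so it receives the highest overall score; the second measure does not differentiate the homes and so does not move the ranking.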

For chronic-care residents (Medicaid and private pay), we define the market as the county.10 Post-acute markets are arguably more similar to hospital markets; thus, we define post-acute markets using two hospital market definitions from the Dartmouth Atlas: hospital referral regions (HRRs) as regional hospital markets and hospital service areas (HSAs) as local hospital markets.11

We use tertiles of our overall quality score based on the initial release of NHC. The highest tertile is classified as high quality and the lowest tertile as low quality; the middle tertile is dropped. Given that our overall quality score is based on within-market z-scores, the high-quality category broadly represents the nursing homes within a market that score well on average across the clinical quality measures compared with other homes in the market, and the low-quality category broadly represents the nursing homes within a market that score poorly. This approach is valid if consumers look across all or most measures in assessing the quality of a facility.12 In an alternative specification, we define a time-varying version of the quality classification on the basis of each quarter’s quality score for both the pre-NHC and post-NHC quarters.
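The tertile split can be sketched as follows. The text does not state whether tertiles are computed nationally or within each market, how the cut points are interpolated, or how ties at a cut point are handled; this sketch computes tertiles over whatever pool of homes is passed in and uses the standard library's "inclusive" quantile method, both of which are assumptions.

```python
from statistics import quantiles


def quality_tertile(scores):
    """Label each home 'high', 'middle', or 'low' by tertile of its overall score.

    scores: dict home -> overall quality score at the initial NHC release.
    Homes labeled 'middle' are dropped from the analysis.
    """
    # Two cut points split the scores into thirds ("inclusive" is an assumption).
    low_cut, high_cut = quantiles(list(scores.values()), n=3, method="inclusive")
    labels = {}
    for home, s in scores.items():
        if s < low_cut:
            labels[home] = "low"
        elif s > high_cut:
            labels[home] = "high"
        else:
            labels[home] = "middle"
    return labels


labels = quality_tertile({"A": 1.0, "B": 2.0, "C": 3.0, "D": 4.0, "E": 5.0, "F": 6.0})
```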

We classify all the nursing homes into capacity-constrained ones and non-capacity-constrained ones on the basis of whether the home’s occupancy rate was higher than 95% in the third quarter of 2002, the quarter prior to the initial launch of NHC, if the home is located in a non-pilot state; if the home is located in a pilot state, we use the occupancy rate in the first quarter of 2002 (the quarter before the launch of NHC in the pilot states). We then combine both the quality and capacity classifications to produce four types of nursing homes: high quality, capacity constrained; high quality, not capacity constrained; low quality, capacity constrained; and low quality, not capacity constrained.
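The capacity rule and the four-way classification can be sketched as below. The pilot states and reference quarters come from the text; representing states by postal codes, quarters as (year, quarter) tuples, and occupancy as a fraction are assumptions made for illustration.

```python
# Pilot states named in the text; all other states are treated as non-pilot.
PILOT_STATES = {"CO", "FL", "MD", "OH", "RI", "WA"}


def reference_quarter(state):
    """Quarter before NHC launched in the home's state, as (year, quarter)."""
    return (2002, 1) if state in PILOT_STATES else (2002, 3)


def facility_type(quality_label, state, occupancy_by_quarter, threshold=0.95):
    """Combine the quality tertile and capacity status into one of four types.

    occupancy_by_quarter: dict (year, quarter) -> occupancy rate as a fraction.
    Returns None for middle-tertile homes, which are dropped.
    """
    if quality_label not in ("high", "low"):
        return None
    constrained = occupancy_by_quarter[reference_quarter(state)] > threshold
    capacity = "capacity constrained" if constrained else "not capacity constrained"
    return f"{quality_label} quality, {capacity}"


# Hypothetical home that was full in 2002Q1 but not in 2002Q3.
occupancy = {(2002, 1): 0.97, (2002, 3): 0.90}
```

The same occupancy history yields a different classification depending on whether the home sits in a pilot state, because the reference quarter differs.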

We include the other quality domains reported by NHC as separate variables: licensed nurse hours per resident per day, certified nursing aide hours per resident per day, and the total number of health and fire safety deficiencies. Although not widely advertised, these domains were available online prior to 2002, so we focus on the release of the clinical quality measures as the primary intervention.

4.1.3. Other variables.

We control for various nursing home and market characteristics. Nursing home characteristics include ownership status (government or not-for-profit, with for-profit as the omitted category) and bed size from OSCAR. Market-level characteristics include two sets of variables. The first set includes payer-specific admissions aggregated to the market level; we control for these variables to account for time-varying market-level trends in payer-specific demand. The second set includes the following variables from the Census: total population, population age distribution (proportions of the male and female populations aged 65 years and above), racial composition (proportions of the total population who are non-Hispanic White, non-Hispanic Black, and Hispanic of any race, with other races as the omitted category), and economic conditions (unemployment rate, median household income, and proportion of the population in poverty).

4.2. Estimation

We estimate a main specification and a number of alternatives. Our main specification examines the effect of the initial public reports on payer-specific quarterly admissions. In this specification, we use the overall quality score based on the initial launch of NHC. We use facility fixed-effects linear models, regressing nursing homes’ Medicaid admissions, Medicare admissions, and private-pay admissions on the facility types (high or low quality, with and without capacity constraints) interacted with post-NHC indicators, controlling for time dummies and time-varying nursing home and local market characteristics, as follows:

$$
Y_{j,t} = \beta_0 + \beta_1\,\text{Type1}_j \times \text{Post}_{j,t} + \beta_2\,\text{Type2}_j \times \text{Post}_{j,t} + \beta_3\,\text{Type3}_j \times \text{Post}_{j,t} + \beta_4 X_{j,t} + \beta_5 X_{m,t} + \lambda_t + \eta_j + \varepsilon_{j,t}
$$

where $Y_{j,t}$ is the outcome (payer-specific quarterly admissions) in nursing home $j$ in quarter $t$; $\text{Type1}_j$, $\text{Type2}_j$, and $\text{Type3}_j$ indicate that the facility is high quality and capacity constrained, high quality but not capacity constrained, and low quality and capacity constrained, respectively (low quality and not capacity constrained is the omitted type); $\text{Post}_{j,t}$ indicates the period after the implementation of NHC (from quarter 2 of 2002 for nursing homes in pilot states and from quarter 4 of 2002 for nursing homes in non-pilot states); $X_{j,t}$ is a vector of time-varying facility-level control variables; $X_{m,t}$ is a vector of time-varying market-level control variables; $\lambda_t$ is the set of year-quarter dummies and state-year dummies; $\eta_j$ is the set of nursing home fixed effects; and $\varepsilon_{j,t}$ is the error term.
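The staggered post-NHC indicator and the three interaction regressors can be sketched as follows. The launch quarters come from the text; the integer codes 1 to 4 for facility types and the regressor names are hypothetical labels introduced for illustration.

```python
PILOT_STATES = {"CO", "FL", "MD", "OH", "RI", "WA"}  # pilot states named in the text


def post_nhc(state, year, quarter):
    """1 from 2002Q2 in pilot states and from 2002Q4 elsewhere, else 0."""
    start = (2002, 2) if state in PILOT_STATES else (2002, 4)
    return int((year, quarter) >= start)  # tuples compare year first, then quarter


def type_by_post_terms(facility_type, state, year, quarter):
    """Interaction regressors Type_k x Post for k = 1, 2, 3.

    facility_type (hypothetical coding): 1 = high quality, capacity constrained;
    2 = high quality, not capacity constrained; 3 = low quality, capacity
    constrained; 4 = low quality, not capacity constrained (the omitted type).
    """
    post = post_nhc(state, year, quarter)
    return {f"type{k}_x_post": int(facility_type == k) * post for k in (1, 2, 3)}
```

Because the Type main effects do not vary over time, they are absorbed by the facility fixed effects $\eta_j$, so only the interactions enter as separate regressors.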

Because the timing of the post-NHC variable varies for pilot and non-pilot states, the coefficients on the facility types interacted with the post-NHC variable reflect two effects: the changes after NHC relative to the omitted facility type and the extent to which these changes happened earlier for the pilot states relative to the non-pilot states (and conversely, the extent to which these changes happened later for the non-pilot states relative to the pilot states). Thus, the combined effect captures relatively short-term deviations from underlying trends in the outcome variables at the time of pilot implementation and at the time of national implementation. To the extent that nursing homes in pilot states had a gradual reaction to the policy that took more than several quarters, we may underestimate the effect by using them as a control group for the national launch. We cluster the robust standard errors at the facility level to account for potential autocorrelation of the errors.

4.2.1. Additional specifications.

We modify our main specification to address several potential concerns. We separately examine the following:

The potential for prior beliefs. Several prior studies of public reporting have addressed the issue of prior beliefs, hypothesizing that public reporting should have an effect only when it reveals information that differs from prior beliefs (Mukamel and Mushlin, 1998; Dafny and Dranove, 2008; Dranove and Sfekas, 2008). Although our main specification includes facility-level fixed effects, it is useful to examine a more flexible form that allows specifically for pre-trends in the correlation between revealed quality and payer-specific admissions prior to public reporting, because consumers may have had information about quality through other sources and may have incorporated this information gradually into their choice of nursing homes, even in the absence of public reporting. In a robustness check, we account for these prior beliefs by interacting quality scores with individual year-quarter dummy variables. This specification allows the relationship between quality scores and payer-specific admissions to change over time more flexibly than our simple ‘post’ variable but does not exploit the difference-in-differences setup of the pilot implementation.
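The quality-by-quarter interactions in this robustness check can be sketched as one regressor per year-quarter. Using 2000Q1 as the omitted base quarter and the regressor names are assumptions; the text does not say which quarter is omitted.

```python
def quality_by_quarter_terms(quality_score, year, quarter, quarters, base):
    """Quality score interacted with each year-quarter dummy except the base quarter."""
    return {
        f"quality_x_{y}Q{q}": quality_score * int((year, quarter) == (y, q))
        for (y, q) in quarters
        if (y, q) != base
    }


# Study period 2000Q1-2005Q4; omitting 2000Q1 as the base is an assumption.
QUARTERS = [(y, q) for y in range(2000, 2006) for q in range(1, 5)]
terms = quality_by_quarter_terms(0.5, 2001, 3, QUARTERS, base=(2000, 1))
```

For an observation in 2001Q3, only the 2001Q3 interaction carries the home's quality score; every other interaction is zero, so each coefficient traces the quality-admissions relationship in its own quarter.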

Alternative definition of the overall quality score. Because it is not clear a priori how consumers weigh different quality measures, we also define an alternative high-quality indicator variable to indicate whether a nursing home scored better than the local market median on the majority of the reported quality measures (more than four out of the nine quality measures).13
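The alternative indicator can be sketched as counting the measures on which a home beats its market median. Whether the home itself is included when computing the median, and whether ties count as "better," are not specified in the text; this sketch includes the home and treats ties as not better, both assumptions.

```python
from statistics import median


def majority_above_median(home, market_scores):
    """True if the home beats the market median on a majority of measures.

    market_scores: dict home -> list of converted scores (higher = better),
    same measure order for every home in the market. With nine measures,
    a majority means more than four.
    """
    own = market_scores[home]
    n_better = 0
    for k, value in enumerate(own):
        market_median = median([scores[k] for scores in market_scores.values()])
        if value > market_median:  # strictly better; ties do not count (assumption)
            n_better += 1
    return n_better > len(own) // 2


# Hypothetical market of three homes scored on nine measures.
market = {"A": [90] * 9, "B": [80] * 9, "C": [70] * 9}
```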

The potential for quality to change with public reporting.14 We test an alternative specification that includes time-varying quality indicators (using the same definition of high and low quality but calculating the overall quality score separately for each quarter in both the pre-periods and post-periods).

Alternative definitions of dependent variables. We also examine payer mix (payer-specific percent of residents in each nursing home) and payer-specific market shares (percent of all new admissions of each payer type in the local market who are admitted to a given nursing home).
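Both alternative dependent variables are simple ratios; a sketch follows. Keying the market assignment by (home, payer) is an assumption that lets the market definition differ by payer (e.g., county for Medicaid and private pay, HRR for Medicare); the example numbers are made up.

```python
from collections import defaultdict


def payer_mix(residents_by_payer):
    """Payer-specific percent of a home's residents."""
    total = sum(residents_by_payer.values())
    return {p: 100 * n / total for p, n in residents_by_payer.items()} if total else {}


def market_shares(admissions, market_of):
    """Percent of each payer's new admissions in a market going to each home.

    admissions: dict (home, payer) -> new admissions in the quarter, covering
    every home in the market.
    market_of: dict (home, payer) -> market id.
    """
    totals = defaultdict(int)
    for key, n in admissions.items():
        totals[(market_of[key], key[1])] += n
    shares = {}
    for key, n in admissions.items():
        total = totals[(market_of[key], key[1])]
        shares[key] = 100 * n / total if total else 0.0
    return shares


mix = payer_mix({"medicaid": 60, "medicare": 15, "private_pay": 25})
shares = market_shares(
    {("A", "medicaid"): 3, ("B", "medicaid"): 1},
    {("A", "medicaid"): "county1", ("B", "medicaid"): "county1"},
)
```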

Use of HSAs instead of HRRs to define post-acute markets.

The potential for different effects for post-acute and long-stay quality. Instead of a single overall score interacted with the post-indicator, we include two sets of interactions with the post-indicator within each facility type, one for the overall post-acute quality score and one for the overall chronic-care quality score.

Using count models rather than linear models. Because our dependent variables are admission counts, we also estimate a fixed-effects negative binomial version of our main specification to check robustness to functional form.

Using weighted regressions by bed size. In order to address possible heterogeneity of response by facility size, we also estimate weighted regressions with the weight being the number of licensed beds in the pre-NHC quarter.15

Stratifying the sample by state average Medicaid price. In order to test whether our results are driven mainly by provider response or consumer response, we split the sample by whether the state average Medicaid price is higher than the national average. If our results are mainly driven by provider response, we should obtain stronger results in states with lower Medicaid price because providers have a greater incentive to avoid Medicaid patients when Medicaid rates are low relative to Medicare rates (which, with some adjustments, are federally set).16
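The stratification step amounts to splitting states at a benchmark price. The text compares each state's average Medicaid price with the national average; when no benchmark is supplied, this sketch falls back to the unweighted mean across the states given, which is an assumption and may differ from the benchmark used in the paper. State names and prices are hypothetical.

```python
from statistics import mean


def split_by_medicaid_price(state_price, national_average=None):
    """Split states into above-average and at-or-below-average Medicaid price groups.

    state_price: dict state -> average Medicaid price in that state.
    national_average: benchmark price; if omitted, the unweighted mean across
    the states given is used (an assumption).
    """
    if national_average is None:
        national_average = mean(list(state_price.values()))
    high = {s for s, p in state_price.items() if p > national_average}
    low = set(state_price) - high
    return high, low


high, low = split_by_medicaid_price({"A": 100, "B": 150, "C": 200})
```

The main specification is then estimated separately on homes in the `high` and `low` groups; a larger Medicaid decline in the `low` group is the pattern the text associates with provider-driven rationing.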

5. RESULTS

5.1. Main results

Table III presents coefficient estimates from our main specification examining the effect of the initial quality disclosure on payer-specific admissions, based on separate regressions for each payer. The coefficients of interest are those on the facility types interacted with the post-NHC indicator, representing the effect of reported quality coupled with capacity constraints. As expected, we find statistically significant increases in private-pay admissions and decreases in Medicaid admissions for nursing homes reported to be of high quality that were also capacity constrained, relative to nursing homes of low quality that were not capacity constrained at baseline. The corresponding coefficient in the Medicare admission regression is also positive but not statistically significant. These results are consistent with high-quality, capacity-constrained nursing homes selectively admitting more profitable private-pay and Medicare residents and rationing out Medicaid residents after public reporting. The magnitudes of the coefficients are modest. For example, the high-quality, capacity-constrained nursing home on average loses 0.22 Medicaid admissions (or 5.8% of baseline Medicaid quarterly admissions), gains 0.58 private-pay admissions (or 4.5%), and gains 0.28 Medicare admissions (or less than 1%) in each quarter post-NHC, relative to the low-quality, non-capacity-constrained homes. Note that, as with all coefficients on facility type interacted with the post-indicator, the negative coefficient for low-quality, capacity-constrained facilities in the Medicaid regression implies a drop in Medicaid admissions relative to low-quality, non-capacity-constrained facilities, which need not be an absolute drop. This pattern is plausible because capacity-constrained nursing homes would not have room to take in the Medicaid residents crowded out of high-quality, capacity-constrained facilities.

Table III.

Effects of public reporting on payer-specific admissions (dependent variable = payer-specific admission count)

| Variable | (1) Medicaid | (2) Medicare | (3) Private pay |
|---|---|---|---|
| Key independent variables | | | |
| High quality, capacity constrained * post | −0.22*** (0.083) | 0.28 (0.350) | 0.58** (0.274) |
| High quality, not capacity constrained * post | 0.13* (0.067) | −0.28 (0.270) | 0.16 (0.201) |
| Low quality, capacity constrained * post | −0.22*** (0.084) | 0.34 (0.424) | −0.42 (0.330) |
| Control variables | | | |
| Nurse aide hours per resident day * post | −0.00 (0.024) | 0.09 (0.060) | 0.04 (0.050) |
| Licensed nurse hours per resident day * post | 0.01 (0.015) | −0.15** (0.067) | −0.06** (0.028) |
| Number of regulatory deficiencies * post | 0.01* (0.006) | 0.04** (0.021) | −0.04*** (0.015) |
| Nurse aide hours per resident day | −0.00 (0.006) | −0.05*** (0.018) | −0.01 (0.011) |
| Licensed nurse hours per resident day | 0.00 (0.006) | 0.01 (0.013) | 0.01 (0.008) |
| Number of regulatory deficiencies | −0.00 (0.004) | −0.01 (0.015) | 0.03** (0.011) |
| Total Medicare admissions in market | −0.00** (0.000) | 0.00*** (0.000) | 0.00 (0.000) |
| Total private-pay admissions in market | −0.00** (0.000) | 0.00** (0.001) | 0.01*** (0.001) |
| Total Medicaid admissions in market | 0.01*** (0.001) | 0.00 (0.002) | −0.00 (0.001) |
| Government facility | 0.07 (0.193) | −0.57 (0.779) | 1.27* (0.679) |
| Not-for-profit facility | −0.06 (0.111) | 0.13 (0.444) | 0.88** (0.353) |
| Number of licensed beds in facility | 0.01*** (0.002) | 0.08*** (0.013) | 0.03*** (0.007) |
| County population | 0.00*** (0.001) | 0.00 (0.004) | 0.01*** (0.003) |
| County proportion female | −0.06 (0.119) | 1.67*** (0.447) | 0.10 (0.304) |
| County proportion non-Hispanic White | −0.07 (0.090) | −1.49*** (0.439) | 0.58* (0.326) |
| County proportion non-Hispanic Black | −0.21* (0.116) | −0.37 (0.539) | 0.47 (0.385) |
| County proportion Hispanic (any race) | −0.18* (0.103) | −0.09 (0.486) | 0.27 (0.361) |
| County proportion of men 65 years and older | 0.37* (0.222) | 2.00** (0.949) | −0.94 (0.626) |
| County proportion of women 65 years and older | −0.40** (0.185) | −0.15 (0.752) | −0.07 (0.530) |
| County unemployment rate | −0.01 (0.017) | 0.08 (0.072) | 0.03 (0.048) |
| County proportion in poverty | −0.01 (0.017) | 0.20*** (0.072) | −0.00 (0.050) |
| County median household income ($1000s) | −0.04*** (0.015) | 0.04 (0.065) | 0.01 (0.047) |
| County number of hospital beds per 1000 | 0.00 (0.003) | −0.00 (0.014) | 0.00 (0.011) |
| County number of nurses per 1000 | −0.00 (0.002) | 0.02 (0.010) | −0.00 (0.008) |
| Constant | 13.31 (10.785) | 22.73 (48.852) | −56.54 (35.595) |
| Observations | 64,384 | 64,433 | 64,390 |
| Number of nursing homes | 12,125 | 12,109 | 12,111 |

Notes: Robust standard errors in parentheses, clustered at the facility level. Estimations include facility fixed effects, year-quarter dummies, and state-year dummies.

Significance levels: *** p < 0.01; ** p < 0.05; * p < 0.10.

Control variables included in the regression behave largely as expected, although some are noisy and difficult to interpret in the nursing home fixed-effects context because they vary little over time. Larger facilities have more admissions of all types; more affluent areas have fewer Medicaid admissions; and not-for-profit facilities (often seen as a marker for quality in nursing homes) have more private-pay admissions. Facilities with more regulatory deficiencies attract fewer private-pay residents, and this effect is amplified after NHC. Contrary to expectations, however, an increase in regulatory deficiencies after NHC is also associated with more Medicare admissions; likewise, the effect of licensed nurse staffing seems to move in the unexpected direction after NHC. Unlike the clinical quality measures, though, nurse staffing information was available online before 2002, so we cannot interpret these coefficients as a policy experiment in the same way. It is possible that the new information about clinical quality dominated the licensed staffing effect.

5.2. Robustness checks

Table IV reports coefficient estimates from a more flexible specification that interacts the high-quality, capacity-constrained facility type with individual quarterly time dummies. Although inherently noisy, the coefficient estimates in the Medicaid admission regression show a break in trend for high-quality, capacity-constrained facilities after public reporting: the coefficients are generally positive before NHC (more Medicaid admissions relative to the reference period, quarter 3 of 2002) and generally negative after it (fewer Medicaid admissions). Medicare and private-pay admissions also generally increase post-NHC relative to the pre-NHC period, although the pre-NHC trend is too noisy to interpret. These results suggest that the decline in Medicaid admissions and the increases in private-pay and Medicare admissions found in our primary specification are not driven by prior beliefs about quality.

Table IV.

Flexible specification accounting for prior beliefs

Coefficient on high quality, capacity constrained × quarter

| Year-quarter | Medicaid | Medicare | Private pay |
|---|---|---|---|
| 2000 Quarter 1 | 0.08 (0.186) | −0.70 (0.718) | −0.56 (0.561) |
| 2000 Quarter 2 | 0.20 (0.175) | 0.61 (0.689) | −0.32 (0.525) |
| 2000 Quarter 3 | 0.16 (0.169) | 0.51 (0.651) | −0.78 (0.507) |
| 2000 Quarter 4 | 0.39** (0.172) | 0.74 (0.627) | −0.25 (0.488) |
| 2001 Quarter 1 | 0.35** (0.177) | −0.54 (0.584) | −0.77* (0.458) |
| 2001 Quarter 2 | 0.41** (0.173) | −0.23 (0.553) | −0.84* (0.430) |
| 2001 Quarter 3 | 0.11 (0.156) | −0.89 (0.546) | −0.80* (0.420) |
| 2001 Quarter 4 | 0.13 (0.153) | −0.98* (0.502) | −0.47 (0.387) |
| 2002 Quarter 1 | 0.20 (0.142) | −1.58*** (0.463) | 0.08 (0.348) |
| 2002 Quarter 2 | 0.19 (0.134) | −0.15 (0.398) | 0.12 (0.280) |
| 2002 Quarter 3 (reference quarter) | 0 | 0 | 0 |
| 2002 Quarter 4 | −0.21 (0.145) | 0.25 (0.377) | −0.19 (0.277) |
| 2003 Quarter 1 | 0.02 (0.158) | 0.41 (0.483) | −0.00 (0.351) |
| 2003 Quarter 2 | 0.14 (0.166) | 0.11 (0.496) | −0.12 (0.389) |
| 2003 Quarter 3 | −0.09 (0.172) | 0.45 (0.529) | −0.10 (0.409) |
| 2003 Quarter 4 | −0.12 (0.171) | 0.32 (0.565) | 0.22 (0.430) |
| 2004 Quarter 1 | −0.01 (0.177) | −0.81 (0.605) | −0.70 (0.453) |
| 2004 Quarter 2 | −0.04 (0.176) | −0.46 (0.596) | 0.28 (0.449) |
| 2004 Quarter 3 | −0.11 (0.181) | −0.26 (0.609) | 0.22 (0.461) |
| 2004 Quarter 4 | 0.06 (0.185) | 0.28 (0.649) | −0.01 (0.476) |
| 2005 Quarter 1 | −0.04 (0.185) | 0.02 (0.710) | 0.24 (0.489) |
| 2005 Quarter 2 | −0.23 (0.188) | 0.64 (0.705) | 0.23 (0.496) |
| 2005 Quarter 3 | 0.05 (0.192) | 1.37* (0.698) | 0.54 (0.498) |
| 2005 Quarter 4 | 0.01 (0.188) | 1.31* (0.713) | 0.59 (0.517) |

Notes: Dependent variable = payer-specific admission count. This table presents the coefficient estimates of the year-quarter dummies interacted with the high-quality, capacity-constrained facility type in the flexible specification. The coefficients are relative to the omitted facility type of low-quality, non-capacity-constrained facilities. See the text for details. Robust standard errors in parentheses, clustered at the facility level. Estimations include facility fixed effects, year-quarter dummies, state-year-quarter dummies, and the full set of controls in Table III. Quarter 1 is 2000 Q1, quarter 2 is 2000 Q2, and so on until quarter 24, which is 2005 Q4; quarter 11 (2002 Q3) is the base quarter.

Significance levels: *** p < 0.01; ** p < 0.05; * p < 0.10.
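The Table IV notes index quarters from 1 (2000 Q1) to 24 (2005 Q4), with quarter 11 (2002 Q3) omitted as the reference. A minimal Python sketch of how the interaction regressors in this flexible specification could be built is shown below. The helper functions and column names are hypothetical, and the fixed effects, state-year-quarter dummies, and controls that enter the actual regression are not shown.

```python
from typing import Dict

BASE_YEAR = 2000      # the panel starts in 2000 Q1, which has index 1
REFERENCE_INDEX = 11  # 2002 Q3, the omitted reference quarter
N_QUARTERS = 24       # 2005 Q4 is the last quarter


def quarter_index(year: int, quarter: int) -> int:
    """Map a calendar year-quarter to the 1-based index in the Table IV notes."""
    if quarter not in (1, 2, 3, 4):
        raise ValueError("quarter must be 1, 2, 3, or 4")
    return 4 * (year - BASE_YEAR) + quarter


def event_dummies(is_hq_cc: bool, year: int, quarter: int) -> Dict[str, int]:
    """Interactions of the (hypothetical) high-quality, capacity-constrained
    indicator with every quarter dummy except the reference quarter."""
    t = quarter_index(year, quarter)
    return {
        f"hqcc_x_q{k}": int(is_hq_cc and k == t)
        for k in range(1, N_QUARTERS + 1)
        if k != REFERENCE_INDEX
    }


# A high-quality, capacity-constrained home observed in 2003 Q1 (index 13)
# switches on exactly one interaction; in the reference quarter, none.
row = event_dummies(True, 2003, 1)
print(row["hqcc_x_q13"], sum(row.values()))
```

Omitting the reference-quarter interaction is what makes each reported coefficient a difference relative to 2002 Q3 rather than an absolute level.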

In Table V, we present results from multiple robustness checks. When we allow the quality score to vary over time, the results are consistent with our main results and in fact slightly larger in magnitude (panel A). This implies that our results are robust to the possibility that quality changes over time and that consumers are aware of these changes. It also suggests that the changes in payer-specific admissions that we found upon the initial launch of public reporting cannot be explained simply by changes in quality in response to public reporting. When we replace the high-quality indicator based on the overall quality score with an indicator of whether a nursing home scored better than the local market median on the majority of the reported quality measures, we obtain stronger crowding-out effects: a larger decline in Medicaid admissions and a larger increase in Medicare admissions in the high-quality, capacity-constrained facilities (panel B). When we use payer mix as the dependent variable (the percentage of each facility's admissions accounted for by each payer, as opposed to raw admission counts), we still see a decline in Medicaid for the high-quality, capacity-constrained facilities, but the offsetting increase is in Medicare rather than in private pay (panel C). (This difference may be explained by growing aggregate Medicare demand versus stagnant private-pay demand.) When we define the dependent variable as market share, we see a similar although less statistically significant pattern (panel D). When we define post-acute markets using HSAs instead of HRRs, the results are again similar although slightly smaller in magnitude (panel E).¹⁷ When we use the county, instead of the HRR, as the local market for defining the high-quality and low-quality categories based on the overall quality score, the results are again qualitatively similar (panel F).
When we estimate a fixed-effect negative binomial count model, the marginal effects are also qualitatively similar to our main results (panel G).¹⁸ Finally, weighting by licensed bed size also produces coefficient estimates indicating fewer Medicaid admissions and more Medicare and private-pay admissions in high-quality, capacity-constrained homes (panel H).¹⁹
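Two of the alternative definitions used in Table V can be stated precisely. The Python sketch below computes a facility's payer mix (the panel C outcome) and the panel B indicator of scoring better than the local-market median on a majority of the reported measures. It assumes that every quality measure is oriented so that lower values are better and that only strictly beating the median counts as "better"; both are our assumptions, as are the function names and the toy numbers.

```python
from statistics import median
from typing import Dict, List


def payer_mix(admissions: Dict[str, int]) -> Dict[str, float]:
    """Share of a facility's admissions accounted for by each payer."""
    total = sum(admissions.values())
    if total == 0:
        return {payer: 0.0 for payer in admissions}
    return {payer: n / total for payer, n in admissions.items()}


def beats_median_on_majority(facility: List[float],
                             market: List[List[float]]) -> bool:
    """True if the facility is strictly better than the local-market median on
    more than half of the measures. `market` holds the measure vectors of all
    facilities in the local market. Assumes LOWER values are better."""
    n_measures = len(facility)
    medians = [median(f[m] for f in market) for m in range(n_measures)]
    wins = sum(facility[m] < medians[m] for m in range(n_measures))
    return wins > n_measures / 2


# Toy market of three facilities scored on three measures (made-up numbers).
market = [[10.0, 20.0, 30.0], [12.0, 18.0, 25.0], [8.0, 22.0, 35.0]]
print([beats_median_on_majority(f, market) for f in market])
print(payer_mix({"Medicaid": 2, "Medicare": 1, "Private pay": 1}))
```

Because the payer-mix shares sum to one within a facility, a Medicaid decline must be offset by some other payer, which is why panel C can shift the offsetting increase from private pay to Medicare.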

Table V.

Results from additional robustness checks and sub-analyses

| Variable | Medicaid | Medicare | Private pay |
|---|---|---|---|
| Panel A: Time-varying quality | | | |
| High quality, capacity constrained * post | −0.39*** (0.110) | −0.08 (0.451) | 0.72** (0.327) |
| High quality, not capacity constrained * post | −0.10 (0.104) | −1.37*** (0.376) | 0.63** (0.275) |
| Low quality, capacity constrained * post | −0.33*** (0.108) | 0.08 (0.518) | −0.69 (0.433) |
| Panel B: Using alternative definition of high quality (‘majority of quality measures better than the local market median’) | | | |
| High quality, capacity constrained * post | −0.45*** (0.11) | 2.94*** (0.54) | 0.45 (0.49) |
| High quality, not capacity constrained * post | −0.18* (0.10) | 1.00*** (0.38) | −0.20 (0.29) |
| Low quality, capacity constrained * post | −0.52*** (0.10) | 1.37*** (0.48) | −0.46 (0.37) |
| Panel C: Payer mix as dependent variable | | | |
| High quality, capacity constrained * post | −0.01** (0.004) | 0.01** (0.005) | −0.00 (0.005) |
| High quality, not capacity constrained * post | 0.00 (0.004) | −0.00 (0.004) | −0.00 (0.004) |
| Low quality, capacity constrained * post | −0.00 (0.003) | 0.01** (0.005) | −0.01 (0.005) |
| Panel D: Market share as dependent variable | | | |
| High quality, capacity constrained * post | −0.24 (0.23) | 0.07** (0.03) | 0.23 (0.18) |
| High quality, not capacity constrained * post | 0.11 (0.14) | 0.02 (0.03) | 0.13 (0.11) |
| Low quality, capacity constrained * post | −0.28 (0.20) | −0.09** (0.04) | 0.27 (0.19) |
| Panel E: HSAs as post-acute market | | | |
| High quality, capacity constrained * post | −0.23** (0.104) | 0.06 (0.472) | 0.57 (0.368) |
| High quality, not capacity constrained * post | 0.16 (0.110) | −1.07** (0.434) | 0.53 (0.322) |
| Low quality, capacity constrained * post | −0.13 (0.112) | −0.22 (0.561) | −0.66 (0.445) |
| Panel F: County as the market for overall quality measure | | | |
| High quality, capacity constrained * post | −0.29** (0.104) | 0.13 (0.451) | 0.66* (0.346) |
| High quality, not capacity constrained * post | 0.15 (0.108) | −0.89** (0.426) | 0.69** (0.323) |
| Low quality, capacity constrained * post | −0.18* (0.111) | −0.58 (0.561) | −0.72 (0.448) |
| Panel G: Estimating fixed-effect negative binomial models (marginal effects reported) | | | |
| High quality, capacity constrained * post | −0.21** (0.10) | 0.84*** (0.18) | 0.15** (0.07) |
| High quality, not capacity constrained * post | 0.13** (0.06) | 0.41*** (0.13) | −0.06 (0.06) |
| Low quality, capacity constrained * post | −0.35*** (0.12) | 0.05 (0.17) | −0.09 (0.08) |
| Panel H: Weighting regressions by bed size | | | |
| High quality, capacity constrained * post | −0.24** (0.11) | 0.84 (0.54) | 0.83 (0.56) |
| High quality, not capacity constrained * post | 0.16 (0.10) | −0.08 (0.38) | 0.10 (0.29) |
| Low quality, capacity constrained * post | −0.28** (0.12) | 0.88 (0.64) | −0.70 (0.50) |

Notes: Robust standard errors in parentheses, clustered at the facility level. Estimations include facility fixed effects, year-quarter dummies, state-year dummies, and the full set of controls in Table III, except in panel G, where the state-year dummies were dropped in order to achieve convergence (see footnote 18 for details).

HSA, hospital service area.

Significance levels: *** p < 0.01; ** p < 0.05; * p < 0.10.

In Table VI, we present results from regressions in which we model post-acute and chronic-care quality separately, allowing payer-specific admissions to respond differently to the two types of quality. High chronic-care quality in capacity-constrained facilities is associated with more private-pay admissions and fewer Medicaid admissions; the effect on Medicare admissions is positive but not statistically significant. In contrast, capacity-constrained homes that score well on post-acute care quality see substantial and significant increases in Medicare admissions, with smaller and statistically insignificant decreases in Medicaid admissions and increases in private-pay admissions. This is consistent with expectations: hospital discharge planners and potential Medicare residents should care most about post-acute quality, although they may also care about chronic-care quality because post-acute residents often become chronic-care residents after their Medicare stay; similarly, long-stay Medicaid and private-pay residents seem to care more about the chronic-care quality measures. Thus, demand rationing and the crowd-out of Medicaid residents may manifest themselves differently in different types of facilities owing to this sorting mechanism. Facilities that do well on post-acute care are able to attract more Medicare residents than before public reporting, and those that do well on chronic care are able to attract more private-pay residents.

Table VI.

Separating chronic-care and post-acute quality (dependent variable = payer-specific admission count)

| Variable | Medicaid | Medicare | Private pay |
|---|---|---|---|
| Chronic-care quality | | | |
| High quality, capacity constrained * post | −0.24* (0.146) | 0.32 (0.682) | 1.03* (0.576) |
| High quality, not capacity constrained * post | 0.23* (0.139) | 0.02 (0.585) | 0.98** (0.480) |
| Low quality, capacity constrained * post | −0.24 (0.146) | −0.08 (0.727) | −0.40 (0.583) |
| Post-acute quality | | | |
| High quality, capacity constrained * post | −0.21 (0.158) | 1.70** (0.729) | 0.14 (0.616) |
| High quality, not capacity constrained * post | −0.10 (0.131) | 0.65 (0.556) | −0.21 (0.440) |
| Low quality, capacity constrained * post | −0.06 (0.132) | 0.90 (0.702) | −0.67 (0.552) |

Notes: Robust standard errors in parentheses and clustered at the facility level. Estimations include facility fixed effects, year-quarter dummies, state-year dummies, and the full set of controls in Table III.

Significance levels: *** p < 0.01; ** p < 0.05; * p < 0.10.

5.3. Alternative explanation: consumer-driven versus provider-driven response?

Our results so far are consistent with provider demand rationing based on patient profitability. At least in theory, however, they may also be consistent with a heterogeneous consumer response. For example, because Medicaid patients may be less educated than Medicare and private-pay patients or less likely to access the quality information, they may pay less attention to reported quality measures and thus try less hard to get into a high-quality nursing home. In that case, even if nursing homes were indifferent to patient profitability by payer type, fewer Medicaid patients (and more Medicare and private-pay patients) might end up in high-quality nursing homes. To distinguish this potential consumer-driven response from the provider-driven response on which we focus, we test whether the responses are stronger in states with lower Medicaid rates than in states with higher Medicaid rates. The logic is that a consumer-driven response should not depend on how generously a state reimburses nursing homes, whereas provider-driven rationing should be strongest where Medicaid residents are least profitable. Specifically, we obtain the 2002 state average Medicaid per-diem rates from Grabowski et al. (2004) (exhibit 2, p. W4-367) and split our sample into two subsamples: nursing homes located in states where the average Medicaid rate was higher than the national average, and nursing homes in states where it was lower. We estimate our main specification on the two subsamples separately and report the main coefficient estimates in Table VII. The Medicaid crowding-out effect is considerably stronger in states with lower Medicaid rates (−0.38, significant at p < 0.01) than in states with higher Medicaid rates (−0.11, not statistically significant), suggesting that our Medicaid crowding-out results are driven mainly by provider response rather than by heterogeneous consumer response.
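The sample split can be sketched in a few lines of Python. The sketch assumes that the "national average" is the unweighted mean of the state average per-diem rates (the text does not say how states are weighted) and drops any state exactly at the average, mirroring the strict "higher than" and "lower than" wording. The rates, state codes, and facility identifiers below are made up for illustration.

```python
from statistics import mean
from typing import Dict, List, Tuple


def split_by_medicaid_rate(
    state_rates: Dict[str, float], facility_states: Dict[str, str]
) -> Tuple[List[str], List[str]]:
    """Partition facilities by whether their state's average Medicaid per-diem
    rate is above or below the (assumed unweighted) national average."""
    national_avg = mean(list(state_rates.values()))
    high = [fid for fid, st in facility_states.items()
            if state_rates[st] > national_avg]
    low = [fid for fid, st in facility_states.items()
           if state_rates[st] < national_avg]
    return high, low


# Hypothetical inputs: three states and three facilities.
rates = {"A": 100.0, "B": 120.0, "C": 140.0}
facilities = {"f1": "A", "f2": "C", "f3": "B"}
high, low = split_by_medicaid_rate(rates, facilities)
print(high, low)
```

The main specification would then be estimated separately on each subsample; a population- or bed-weighted national average is an equally plausible choice and could move states near the cutoff from one group to the other.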

Table VII.

Splitting the sample by whether the state average Medicaid per-diem rate is higher than the national average

| Variable | Medicaid | Medicare | Private pay |
|---|---|---|---|
| Panel A: Medicaid rate > national mean | | | |
| High quality, capacity constrained * post | −0.11 (0.10) | 0.49 (0.48) | 0.64* (0.38) |
| High quality, not capacity constrained * post | −0.01 (0.09) | −0.08 (0.43) | 0.13 (0.33) |
| Low quality, capacity constrained * post | −0.28*** (0.11) | −0.15 (0.58) | −0.28 (0.44) |
| Panel B: Medicaid rate < national mean | | | |
| High quality, capacity constrained * post | −0.38*** (0.15) | −0.02 (0.51) | 0.59 (0.38) |
| High quality, not capacity constrained * post | 0.24** (0.09) | −0.41 (0.34) | 0.13 (0.25) |
| Low quality, capacity constrained * post | −0.12 (0.14) | 1.32** (0.58) | −0.55 (0.47) |

Notes: Robust standard errors in parentheses and clustered at the facility level. Estimations include facility fixed effects, year-quarter dummies, state-year dummies, and the full set of controls in Table III.

Significance levels: *** p < 0.01; ** p < 0.05; * p < 0.10.

6. DISCUSSION AND CONCLUSION

We have two main findings in this study. First, high-quality, capacity-constrained nursing homes appear to gain private-pay and Medicare admissions in the presence of report cards. Second, Medicaid residents are increasingly crowded out of higher-quality facilities with high occupancy. The counterintuitive lack of a substantial overall market share effect found in other studies (e.g., Grabowski and Town, 2011; Werner et al., 2012) may be explained by this heterogeneity by payer type. Our results also point to the importance of attending to institutional details (in our case, capacity constraints coupled with administrative pricing) when policy makers design public reporting systems for regulated industries.

Although these conclusions are consistent with our hypotheses, the effects are modest in magnitude, representing, for example, a decrease of about 6% in Medicaid admissions from the average. The need for demand rationing depends on the magnitude of consumers’ overall response to public reporting, which can be small for several reasons. First, many consumers, especially those with chronic-care needs who generally have mental or physical impairments, may not be aware of NHC despite CMS’s efforts to advertise it. Second, the NHC quality information may be too noisy for consumers because of its high dimensionality. Third, hospital discharge planners may ignore reported quality if they have long-term business relationships with specific nursing homes. Finally, quality is only one consideration in choosing a nursing home. Being close to family and friends can be important for most chronic-care residents, and this preference may dampen the elasticity of demand with respect to quality.

Despite the modest magnitude of demand rationing in the context of NHC, the existence of any demand rationing suggests that public reporting may exacerbate, rather than ameliorate, disparities in access to high-quality healthcare providers. As the NHC system improves and consumers of all types increasingly turn to it to choose providers, the adverse consequences of demand rationing could be amplified. In particular, the current five-star system, launched in 2008, presents quality information in a much simpler format, and its effects may therefore differ. Studying the effect of the five-star system is an important area for future research.

Footnotes

For studies specific to the nursing home industry, see Castle and Lowe (2005), Mukamel et al. (2008), Werner et al. (2009), and Zinn et al. (2005) for improvement on reported measures after NHC; see Stevenson (2006) for effects on occupancy using data from an early period; and see Grabowski and Town (2011) for quality and market share. See Fung et al. (2008) for studies in various other healthcare sectors.

To our knowledge, Mukamel et al. (2004) is the only study that has considered this potential crowd-out effect. They find that, after public reporting on cardiac surgeons in New York, more affluent and more educated patients were more likely to be treated by high-quality surgeons, and less affluent and less educated patients were more likely to be treated by low-quality surgeons. Although they did not look directly at capacity, these results suggest that demand rationing may be at play, as one would not expect utility-maximizing individuals to choose lower-quality providers more often as a result of public reporting.

In this paper, we make no distinction between self-pay out of pocket and self-pay with private long-term care insurance because the vast majority of private long-term care insurance covers a fixed per-diem rate and the consumers have to pay out of pocket the difference between a self-pay price and the per-diem rate.

A few measures were dropped or added more than a year after the initial launch. Because we focus on the effect of the initial launch, we do not include those measures in our analysis.

Although some quality information was available prior to 2002 through a preliminary version of NHC, it was not widely known or used.

For example, full-page newspaper advertisements were run in 71 newspapers across all 50 states (Inspector General, 2004). Each advertisement displayed local examples of the quality information available on the NHC website under the banner ‘How do your local nursing homes compare?’ CMS also ran national television advertisements promoting the website and worked with state long-term care Ombudsmen to promote awareness of NHC.

Welfare could, however, decrease if quality exceeds the optimal level in a type of medical arms race.

Fewer than 5% of nursing homes are not certified for Medicare or Medicaid and would not appear in our sample, but these facilities are also not subject to NHC.

The private-pay category is thus heterogeneous, as it may include individuals with other types of government payment (e.g., VA/Champus) and individuals with long-term care insurance as well as those paying truly out of pocket. Residents paid by other types of government payments consist of a very small portion of the total residents and including them or not should have a negligible effect on our estimates. Nursing homes charge the same price to those with private long-term care insurance and to those paying fully out of pocket.

Although use of the county or other geopolitical boundaries as a market for healthcare services is subject to criticism (Kessler and McClellan, 2000; Zwanziger et al., 2002), recent work has found that counties serve as good approximations to exogenous predicted demand in the case of chronic-care in nursing homes (Mehta, 2007).

Because we use the HRR as the market for the post-acute care quality measures and the county as the market for the chronic-care quality measures, we must decide whether to use the HRR or the county as the relevant local market for the overall quality score, which spans both the post-acute care and chronic-care quality measures. We use the HRR as the market in the main analysis and check the robustness of the results to using the county instead. Results are qualitatively similar.

This approach may not be valid if consumers put very disproportionate weight on some of the clinical measures, especially those that are not well correlated with the others, but we have no evidence from the literature to inform any particular weighting scheme.

We thank an anonymous reviewer for this useful suggestion.

Indeed, the quality category varies substantially over time in our data. For example, the correlation between the quality categories for the same facility in consecutive quarters is between 0.5 and 0.6, and the correlation between the NHC national launch quarter (2003 Q3) and the last quarter of our sample (2005 Q4) is 0.24.

We thank an anonymous reviewer for this suggestion.

We thank an anonymous reviewer for this suggestion.

We do not, however, have a good explanation for the negative and significant coefficient estimate for high-quality facilities that are not capacity constrained in the Medicare admission regression.

Because we encountered a non-convergence issue when trying to estimate the fixed-effect negative binomial model with the full set of controls in Table III, we were forced to compromise on our specification for this robustness check. We found that if the state-year dummies were left out, the models were able to converge, but not otherwise. Thus, Table V panel G presents the marginal effects from estimating the fixed effect negative binomial models without the state-year dummies.

Our estimate of Medicare admissions in high-quality, non-capacity-constrained nursing homes is negative and statistically significant in panels A, E, and F of Table V. This is puzzling, and we acknowledge the sensitivity of this estimate to different specifications.

REFERENCES

- Casalino LP, Elster A, Eisenberg A, Lewis E, Montgomery J, Ramos D. 2007. Will pay-for-performance and quality reporting affect health care disparities? Health Affairs 26: w405–w414.

- Castle NG, Lowe TJ. 2005. Report cards and nursing homes. The Gerontologist 45: 48–67.

- Centers for Medicare and Medicaid Services. 2002. Nursing Home Quality Initiatives Overview. Centers for Medicare and Medicaid Services. Available from: http://www.cms.hhs.gov/NursingHomeQualityInits/downloads/NHQIOverview.pdf [accessed August 26, 2003].

- Chien AT, Chin MH, Davis AM, Casalino LP. 2007. Pay for performance, public reporting, and racial disparities in health care: how are programs being designed? Medical Care Research & Review 64: 283S–304S.

- Dafny L, Dranove D. 2008. Do report cards tell consumers anything they don’t know already? The case of Medicare HMOs. RAND Journal of Economics 39: 790–821.

- Dranove D, Jin GZ. 2010. Quality disclosure and certification: theory and practice. Journal of Economic Literature 48: 935–963.

- Dranove D, Satterthwaite MA. 1992. Monopolistic competition when price and quality are imperfectly observable. RAND Journal of Economics 23: 518–534.

- Dranove D, Sfekas A. 2008. Start spreading the news: a structural estimate of the effects of New York hospital report cards. Journal of Health Economics 27: 1201–1207.

- Dranove D, Kessler D, McClellan M, Satterthwaite M. 2003. Is more information better? The effects of “Report cards” on health care providers. Journal of Political Economy 111: 555–588.

- Feinstein AT, Fischbeck K. 2005. Lehman Brothers Health Care Facilities Accounting Review: Behind the Numbers V. Lehman Brothers: New York.

- Floyd WR, Chairman and Chief Executive Officer, Beverly Enterprises Inc. 2004. Investor Update. CIBC World Markets Healthcare Conference, New York, NY.

- Fung CH, Lim YW, Mattke S, Damberg C, Shekelle PG. 2008. Systematic review: the evidence that publishing patient care performance data improves quality of care. Annals of Internal Medicine 148: 111–123.

- Gowrisankaran G, Town RJ. 2003. Competition, payers, and hospital quality. Health Services Research 38: 1403–1421.

- Grabowski DC. 2001. Medicaid reimbursement and the quality of nursing home care. Journal of Health Economics 20: 549–569.

- Grabowski DC, Town RJ. 2011. Does information matter? Competition, quality, and the impact of nursing home report cards. Health Services Research 46: 1698–1719.

- Grabowski DC, Feng Z, Intrator O, Mor V. 2004. Recent trends in state nursing home payment policies. Health Affairs (Suppl Web Exclusives): W4-363–W4-373.

- Inspector General. 2004. Inspection Results on Nursing Home Compare: Completeness and Accuracy. Department of Health and Human Services Office of Inspector General: Washington, DC.

- Kessler DP, McClellan MB. 2000. Is hospital competition socially wasteful? Quarterly Journal of Economics 115: 577–615.

- Konetzka RT, Werner RM. 2009. Disparities in long-term care: building equity into market-based reforms. Medical Care Research & Review 66: 491–521.

- Linehan K. 2012. Medicare’s Post-Acute Care Payment: A Review of the Issues and Policy Proposals. National Health Policy Forum, Issue Brief No. 847.

- Medicare Payment Advisory Commission. 2005. Report to the Congress: Medicare Payment Policy. Medicare Payment Advisory Commission: Washington, DC.

- Mehta A. 2007. Spatial competition and market definition in the nursing home industry. Working Paper, Boston University.

- Mukamel DB, Mushlin AI. 1998. Quality of care information makes a difference: an analysis of market share and price changes after publication of the New York State Cardiac Surgery Mortality Reports. Medical Care 36: 945–954.

- Mukamel DB, Weimer DL, Zwanziger J, Gorthy SF, Mushlin AI. 2004. Quality report cards, selection of cardiac surgeons, and racial disparities: a study of the publication of the New York State Cardiac Surgery Reports. Inquiry 41: 435–446.

- Mukamel DB, Weimer DL, Spector WD, Ladd H, Zinn JS. 2008. Publication of quality report cards and trends in reported quality measures in nursing homes. Health Services Research 43: 1244–1262.

- Mukamel DB, Ladd H, Weimer DL, Spector WD, Zinn JS. 2009. Is there evidence of cream skimming among nursing homes following the publication of the Nursing Home Compare report card? The Gerontologist 49: 793–802.

- Nyman JA. 1985. Prospective and “cost-plus” Medicaid reimbursement, excess Medicaid demand, and the quality of nursing home care. Journal of Health Economics 4: 237–259.

- Nyman JA. 1988. Excess-demand, the percentage of Medicaid patients, and the quality of nursing-home care. Journal of Human Resources 23: 76–92.

- Stevenson DG. 2006. Is a public reporting approach appropriate for nursing home care? Journal of Health Politics, Policy and Law 31: 773–810.

- Troyer JL. 2002. Cross-subsidization in nursing homes: explaining rate differentials among payer types. Southern Economic Journal 68: 750–773.

- Werner RM, Asch DA, Polsky D. 2005. Racial profiling: the unintended consequences of coronary artery bypass graft report cards. Circulation 111: 1257–1263.

- Werner RM, Konetzka RT, Stuart EA, Norton EC, Polsky D, Park J. 2009. Impact of public reporting on quality of postacute care. Health Services Research 44: 1169–1187.

- Werner RM, Konetzka RT, Stuart EA, Polsky D. 2011. Changes in patient sorting to nursing homes under public reporting: improved patient matching or provider gaming? Health Services Research 46: 555–571.

- Werner RM, Norton EC, Konetzka RT, Polsky D. 2012. Do consumers respond to publicly reported quality information? Evidence from nursing homes. Journal of Health Economics 31: 50–61.

- Zinn J, Spector W, Hsieh L, Mukamel DB. 2005. Do trends in the reporting of quality measures on the nursing home compare web site differ by nursing home characteristics? The Gerontologist 45: 720–730.

- Zwanziger J, Mukamel DB, Indridason I. 2002. Use of resident-origin data to define nursing home market boundaries. Inquiry 39: 56–66.