Abstract

Objective

The study sought to evaluate a novel electronic health record (EHR) add-on application for chronic disease management that uses an integrated display to decrease user cognitive load, improve efficiency, and support clinical decision making.

Materials and Methods

We designed a chronic disease management application using the technology framework known as SMART on FHIR (Substitutable Medical Applications and Reusable Technologies on Fast Healthcare Interoperability Resources). We used mixed methods to obtain user feedback on a prototype to support ambulatory providers managing chronic obstructive pulmonary disease. Each participant managed 2 patient scenarios using the regular EHR with and without access to our prototype in block-randomized order. The primary outcome was the percentage of expert-recommended ideal care tasks completed. Timing, keyboard and mouse use, and participant surveys were also collected. User experiences were captured using a retrospective think-aloud interview analyzed by concept coding.

Results

With our prototype, the 13 participants completed more recommended care (81% vs 48%; P < .001) and recommended tasks per minute (0.8 vs 0.6; P = .03) over longer sessions (7.0 minutes vs 5.4 minutes; P = .006). Keystrokes per task were lower with the prototype (6 vs 18; P < .001). Qualitative themes elicited included the desire for reliable presentation of information which matches participants’ mental models of disease and for intuitive navigation in order to decrease cognitive load.

Discussion

Participants completed more recommended care by taking more time when using our prototype. Interviews identified a tension between using the inefficient but familiar EHR vs learning to use our novel prototype. Concept coding of user feedback generated actionable insights.

Conclusions

Mixed methods can support the design and evaluation of SMART on FHIR EHR add-on applications by enhancing understanding of the user experience.

Keywords: electronic health records, decision support systems, clinical, SMART on FHIR, human-computer interaction, cognitive load

INTRODUCTION

Background and Significance

In 1997, Dr Diana Forsythe and colleagues1 reported a preimplementation study outlining the steps needed to successfully integrate electronic health records (EHRs) into ambulatory care. These steps included designing EHRs to account for the varying workflows of ambulatory providers, staging implementation to enable smoother transitions, and providing educational support for providers as they learn to use this new tool. Their work emphasizes the need to not only collect quantitative measures regarding clinical and efficiency outcomes, but also utilize ethnographic data-gathering methods to truly understand the user experience.1,2

Unfortunately, this advice went largely unheeded in the era following the American Recovery and Reinvestment Act of 2009, in which EHRs were rapidly deployed in the United States,3 with insufficient consideration given to designing for human factors. Many EHR displays remain analogous to paper-based charts despite known usability concerns. Moreover, EHR-based clinical decision support has not reached its potential and has at times been associated with unintended consequences such as alert fatigue.3–6 EHR workflow changes such as increased documentation time have been linked to provider burnout,7,8 while providers report insufficient time available to deliver recommended care.9–12

Cognitive load theory

The usability issues of current EHRs can be understood within the framework of cognitive load theory. This theory states that individuals experience cognitive load when working memory is required to process information.13,14 In other words, as task complexity demands more attentional resources, cognitive load increases and performance can be hampered. One aspect of task complexity is the number of subtasks that must be held in working memory to complete the larger action. Such complexity is prevalent in current EHRs, which often require navigation of multiple screens to locate desired information and implement clinical decisions. EHR complexity has been associated with increased cognitive load and medical error.15

Current EHR displays

Work by Dr Forsythe’s team in the era of paper charts identified information management as a key aspect of clinical workflow requiring support from emerging EHR technologies.16 However, even today, information displays in current EHRs generally parallel paper medical records.4 Information is presented within categories related to where the information originated (eg, with laboratory results in a separate section from medications), known as source-oriented information display.17 Source-oriented display dissociates meaningful relationships among data and goes against natural provider workflow by separating information gathering and clinical decision making.18 Such dissociation requires more working memory and thus increases cognitive load.

Research has shown that providers naturally process patient information by clinical concepts such as disease state or physiologic system.17,19 Providers using source-based displays need to manually integrate patient data into these clinical concepts, which increases cognitive load. Particularly for ambulatory care, in which chronic conditions are managed via multiple brief encounters over time, integrated EHR displays that organize information by clinical concept may better support care.20

As an example, chronic obstructive pulmonary disease (COPD) is a condition in which significant care gaps exist.21–23 Among eligible patients with COPD, recommended preventive care such as lung cancer screening has a low uptake of 24%.23 COPD is often undertreated due to well-documented issues with provider understanding of guidelines and access to relevant clinical information.21,22 Providers often fail to integrate information regarding disease status (eg, recent history of COPD exacerbations) and current medications in order to choose the correct therapy for the patient, a task that imposes considerable cognitive load.21

Currently, an ambulatory provider managing COPD with a conventional source-oriented EHR display would likely navigate to separate sections of the chart for smoking history, medications, and disease state. The provider must then create a clinical plan and place relevant orders through what is likely a separate order entry module. Moreover, a separate workflow would likely be used for preventive tasks such as lung cancer screening or pneumonia vaccination. An EHR display that integrates these separate concepts and tasks, with the option to access information on clinical guidelines, would decrease cognitive load and potentially improve clinical care.20

Emerging EHR capabilities

EHR applications that provide concept-oriented information display and decision support may become more common due to evolving technology standards. The recently developed technology framework known as Substitutable Medical Applications and Reusable Technologies on Fast Healthcare Interoperability Resources (SMART on FHIR) (pronounced “smart on fire”) allows for add-on third-party applications to function within existing EHRs.24,25 This is similar to the way standardized technology protocols allow non-Apple developers to create applications that run seamlessly on an iPhone.

These new EHR standards are enabling third-party developers beyond EHR vendors to create applications to support user workflows such as management of specific diseases. For example, in 2016 the University of Utah launched its ReImagine EHR initiative to use SMART on FHIR to develop interoperable EHR add-on applications. The initiative generated a neonatal bilirubin management application, which has been widely and voluntarily adopted by providers and has demonstrated measurable savings in user time and effort as well as improved provision of quality care.26

Because conventional EHR vendors are responsible for providing functionality for an enormous number of clinical and administrative tasks across entire health systems, their attention to usability testing can be limited despite known best practices.3,27,28 With the advent of add-on applications using SMART on FHIR by smaller developers, new approaches to smaller-scale, rapid usability evaluation may be needed. This evaluation must incorporate both quantitative metrics such as clinical outcomes, often requested by stakeholders, and also the qualitative data on user experience championed by Dr Forsythe, which is key to acceptance into practice.2

OBJECTIVE

Our goal was to develop and evaluate a novel add-on EHR application that uses an integrated display to support ambulatory providers in the management of common chronic diseases, starting with COPD, hypertension, and diabetes. This integrated display would serve as a “one-stop shop” by displaying information relevant to each disease and providing clinical decision support in a single view in order to reduce cognitive load, increase provider efficiency, and support evidence-based care. We used a mixed-methods approach to evaluate and incorporate user feedback into the design of a prototype to support COPD care.

MATERIALS AND METHODS

This study describes an ongoing, iterative process to design, evaluate, and refine a SMART on FHIR chronic disease management application (“Disease Manager”) created by the University of Utah ReImagine EHR team. The evaluation specifically focuses on a prototype module for COPD care. This study was approved by the University of Utah Institutional Review Board.

Initial application development

The study was conducted at University of Utah Health (UUH), an academic healthcare system using the Epic EHR (Epic Systems, Verona, WI). UUH supports a ReImagine EHR team of clinical informaticists, sociotechnical experts, software developers, and clinical champions with the mission of developing user-friendly add-on applications to the existing EHR using standards-based interoperability frameworks such as SMART on FHIR.26 Disease Manager was developed under this mission with the goal of supporting chronic disease management in the ambulatory setting.

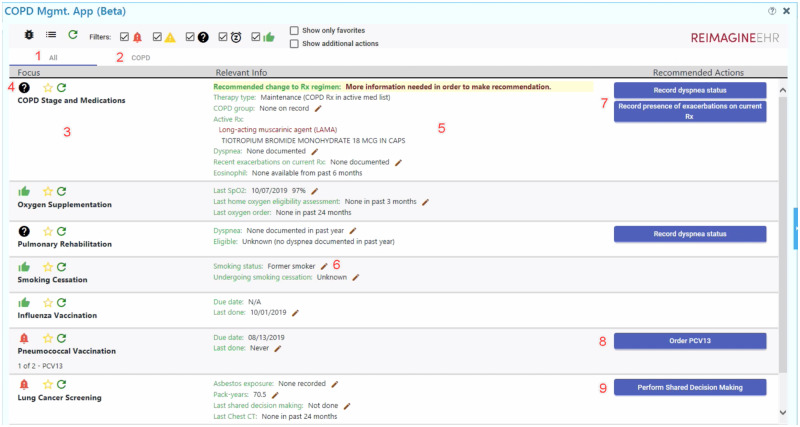

COPD was the first disease to be prototyped with Disease Manager. COPD was chosen based on an institutional and global need for management support.22 A team of clinical providers in pulmonology and primary care drafted an initial set of COPD care domains which would benefit from better EHR support. This requirements list was cross-referenced to the 2019 GOLD (Global Initiative for Chronic Obstructive Lung Disease) guidelines to produce specific decision-making algorithms.29 The targeted care domains were COPD staging, pharmacotherapy, oxygen supplementation, pulmonary rehabilitation, tobacco cessation, influenza and pneumonia vaccination, lung cancer screening, and obstructive sleep apnea screening. ReImagine EHR developers then used these domains and clinical algorithms as the basis for creating a dynamic integrative display to support user information needs and clinical decision making (Figure 1).

Figure 1.

Disease Manager prototype for user evaluation, with annotations. Screenshot of the initial Disease Manager view for a test patient. Numbers overlaid onto the screenshot are referenced in the main text to give detail regarding the application’s functionality. Permission to use this image was obtained from the University of Utah ReImagine EHR team. COPD: chronic obstructive pulmonary disease; EHR: electronic health record.

The design process was iterative, beginning with ReImagine EHR researchers reviewing initial versions and refining mockups through consensus in a group setting. This research group included human factors experts, clinicians, clinical informaticists, and software developers. Next, heuristic evaluations were independently completed by 3 experts using Nielsen’s heuristics to evaluate critical usability principles.30 These findings were used to further refine Disease Manager to produce a prototype suitable for user testing.

Participant recruitment

Recruitment emails were sent to all faculty providers in the UUH Division of Family Medicine as well as all primary care providers working in UUH Community Clinics, a group comprising over 100 providers across 12 clinic locations throughout the Salt Lake City area. The emails requested volunteers to participate in the evaluation of COPD care workflow using the current EHR and also a new tool developed by ReImagine EHR. A $100 gift card was offered for participation in a 25- to 45-minute feedback session. The first 15 providers to respond were scheduled for a session. Consent was verbally obtained from all participants after they received and reviewed an Institutional Review Board–approved informed consent cover letter.

Study overview

We used a mixed-methods approach to evaluate participants’ existing EHR workflow compared with workflow when Disease Manager was available as an add-on to the usual EHR. The primary experimental design was a 2 × 2 block-randomized within-subject factorial design.

Two similar case scenarios were developed which required medication management, vaccination, and risk factor screening (see Supplementary Appendix A for details). Participants completed each case scenario, once using the usual EHR and once with the usual EHR plus Disease Manager. Each participant served as their own control using both systems in random order.

Procedures

Participants completed case scenarios using a de-identified test EHR environment. Two patient records within the test environment were modified to reflect the case scenario parameters. Each participant provider’s personalized EHR settings (eg, note templates and favorite orders) were imported from the production EHR in order to replicate participants’ standard workflow as much as possible. At the start of each case, participants were given a card describing the patient case and scenario assumptions (Supplementary Appendix A). Participants were asked to deliver their best judgement of ideal clinical care for each case scenario by placing orders or verbally stating any shared decision making or counseling they felt were indicated. Participants were shown how to access Disease Manager from within the usual EHR but were given no further instructions. If participants had questions during testing, the interviewer replied using a set of predetermined, standardized responses as described in Supplementary Appendix A.

After each case but before a semi-structured interview, participants completed a user experience survey containing visual analog scales of the workload domains used in the NASA Task Load Index (NASA-TLX).31

Participants completed both the case scenarios and subsequent semi-structured interviews while using a laptop containing Tobii Pro software (Tobii Group, Stockholm, Sweden). Tobii Pro continuously captures eye movements, audio, screen display, and keyboard and mouse usage. After participants completed each case and survey, a semi-structured interview was conducted including a retrospective think-aloud format to understand participants’ mental models as they engaged in simulated COPD care.

The retrospective think-aloud functionality of Tobii Pro records participants’ commentary as the participants watch the prior recording of themselves working through the case scenario. In addition to providing screen capture and audio, Tobii Pro overlays participants’ eye movements during the scenario onto the screen capture. As participants viewed these recordings, they provided feedback as to what they were thinking during various tasks. As needed, participants were prompted with standardized questions such as “What are you doing right now? In order to do what?” These questions were based on a modified cognitive task analysis derived from action identification methods.32

Clinical quality and efficiency outcomes

Seven binary outcomes measured completion of recommended clinical tasks: prescription of rescue therapy, prescription of maintenance therapy, pulmonary rehabilitation, lung cancer screening, pneumonia vaccination, smoking cessation counseling, and sleep apnea screening (Supplementary Appendix A). If other tasks were completed (eg, ordering a spacer), they were tallied separately as additional care tasks. The total number of clinical tasks completed was calculated as the sum of the recommended and additional care tasks completed. The proportion of recommended COPD care tasks completed was calculated as the number of recommended care tasks completed divided by the number of recommended care tasks for the case scenario (6 or 7 depending on the scenario) (see Supplementary Appendix A).
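The scoring rule above can be sketched as follows. This is a minimal illustration, assuming each participant-scenario record lists the recommended tasks that applied to that scenario, the recommended tasks completed, and a count of additional tasks; the class and field names are hypothetical and are not taken from the study's analysis code.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class ScenarioResult:
    """One participant's performance on one case scenario (hypothetical schema)."""

    recommended: set[str]  # recommended tasks applicable to this scenario (6 or 7)
    completed: set[str]  # names of recommended tasks the participant completed
    additional: int = 0  # count of other tasks completed (eg, ordering a spacer)

    def recommended_completed(self) -> int:
        # Count only completions that belong to this scenario's recommended set
        return len(self.completed & self.recommended)

    def proportion_recommended(self) -> float:
        if not self.recommended:
            raise ValueError("scenario has no recommended tasks")
        return self.recommended_completed() / len(self.recommended)

    def total_tasks(self) -> int:
        # Total clinical tasks = recommended tasks completed + additional tasks
        return self.recommended_completed() + self.additional
```

For example, completing 3 of 7 recommended tasks plus 1 additional task gives a proportion of 3/7 (about 43%) and 4 total clinical tasks.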

Efficiency outcomes included recommended tasks completed per minute, time spent providing care, keystrokes, and mouse clicks. The number of recommended tasks completed per minute was calculated as the number of recommended tasks completed divided by the total time.
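A minimal sketch of how these efficiency measures could be derived from an interaction log. It assumes a hypothetical list of `(timestamp_seconds, event_kind)` tuples, which is not Tobii Pro's actual export format, and it assumes session time runs from the first to the last logged event; the study does not specify how its timing boundaries were set.

```python
from __future__ import annotations

from collections import Counter


def efficiency_metrics(events: list[tuple[float, str]], recommended_completed: int) -> dict[str, float]:
    """Summarize one session from a hypothetical event log of (seconds, kind) tuples."""
    if not events:
        raise ValueError("no events recorded")
    times = [t for t, _ in events]
    # Assumption: session time spans the first to the last logged event
    minutes = (max(times) - min(times)) / 60.0
    if minutes <= 0:
        raise ValueError("session has zero duration")
    counts = Counter(kind for _, kind in events)
    return {
        "minutes": minutes,
        "keystrokes": counts["keystroke"],
        "mouse_clicks": counts["click"],
        # Recommended tasks completed divided by total time, as defined above
        "recommended_per_minute": recommended_completed / minutes,
    }
```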

Participant surveys

Six perceived workload measures were derived from the NASA-TLX survey: mental demand, physical demand, temporal demand, performance, effort, and frustration. Perceived workload measures were calculated by converting visual analog scales to scores between 0 and 100.
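The conversion from a visual analog scale to a 0-100 score is a linear rescaling of the mark's position along the line. The sketch below assumes the mark position and line length are measured in the same units (eg, millimeters or pixels); the function names are hypothetical. Domain scores are left unweighted, since the study reports each workload domain separately rather than a weighted composite.

```python
from __future__ import annotations

TLX_DOMAINS = (
    "mental demand",
    "physical demand",
    "temporal demand",
    "performance",
    "effort",
    "frustration",
)


def vas_to_score(mark: float, line_length: float) -> float:
    """Linearly rescale a mark on a visual analog scale to 0-100."""
    if line_length <= 0:
        raise ValueError("line_length must be positive")
    if not 0 <= mark <= line_length:
        raise ValueError("mark lies outside the scale")
    return 100.0 * mark / line_length


def score_tlx(marks: dict[str, float], line_length: float) -> dict[str, float]:
    """Convert one participant's six marks to unweighted domain scores."""
    missing = set(TLX_DOMAINS) - set(marks)
    if missing:
        raise ValueError(f"missing domains: {sorted(missing)}")
    return {d: vas_to_score(marks[d], line_length) for d in TLX_DOMAINS}
```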

Qualitative data collection

Qualitative data consisted of the transcribed Tobii Pro audio recordings of both the scenario-based system interactions and subsequent interviews. Transcriptions were imported into NVivo v 12.6.0 software (QSR International, Doncaster, Australia) for concept coding.

Data analysis

Quantitative analysis

Univariate generalized linear models with generalized estimating equations were used for the evaluation of all measures to account for correlation within timings from the same participants. Logistic regression was used to estimate the odds and probability of participants completing a specific recommended task. Linear regression was used for all other outcomes.
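To make the modeling approach concrete, the sketch below fits a linear model with one binary predictor (0 = usual EHR, 1 = usual EHR + Disease Manager) using GEE with an identity link and an independence working correlation, and computes the cluster-robust (sandwich) standard error with participants as clusters. It is a pure-Python illustration, not the study's R code; the working correlation structure used in the study is not stated, and the logistic models would additionally need a logit link and iterative fitting.

```python
from __future__ import annotations

import math
from collections import defaultdict


def gee_linear_independence(y: list[float], x: list[float], cluster: list[str]) -> tuple[float, float, float]:
    """Fit y = b0 + b1*x by GEE (identity link, independence working correlation).

    Returns (b0, b1, robust_se_b1); the standard error uses the
    cluster-robust sandwich estimator with participants as clusters.
    """
    if not (len(y) == len(x) == len(cluster)) or not y:
        raise ValueError("y, x and cluster must be non-empty and equal length")
    n = len(y)
    sx, sxx = sum(x), sum(v * v for v in x)
    sy, sxy = sum(y), sum(a * b for a, b in zip(x, y))
    det = n * sxx - sx * sx
    if det == 0:
        raise ValueError("x has no variation")
    # "Bread": inverse of X'X for the design columns [1, x]
    bread = [[sxx / det, -sx / det], [-sx / det, n / det]]
    # Independence + identity link: the estimating equations reduce to OLS
    b0 = bread[0][0] * sy + bread[0][1] * sxy
    b1 = bread[1][0] * sy + bread[1][1] * sxy
    # Per-cluster score u_i = X_i' e_i, summed over each participant's rows
    scores: dict[str, list[float]] = defaultdict(lambda: [0.0, 0.0])
    for yi, xi, ci in zip(y, x, cluster):
        resid = yi - (b0 + b1 * xi)
        scores[ci][0] += resid
        scores[ci][1] += xi * resid
    # "Meat": sum over clusters of u_i u_i'
    meat = [[0.0, 0.0], [0.0, 0.0]]
    for u in scores.values():
        for r in range(2):
            for c in range(2):
                meat[r][c] += u[r] * u[c]
    # Sandwich covariance: bread @ meat @ bread
    tmp = [[sum(bread[r][k] * meat[k][c] for k in range(2)) for c in range(2)] for r in range(2)]
    cov = [[sum(tmp[r][k] * bread[k][c] for k in range(2)) for c in range(2)] for r in range(2)]
    return b0, b1, math.sqrt(cov[1][1])
```

With an independence working correlation and identity link, the GEE point estimate coincides with ordinary least squares; what GEE adds here is the sandwich variance, which sums residual scores within each participant so that the two observations from one provider are not treated as independent.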

In order to mitigate potential effects of order of presentation (eg, participants who first use Disease Manager behaving differently in the second scenario because of exposure to the decision support of Disease Manager), a block randomization scheme was used to randomize the order of case scenarios and experimental condition. Additionally, the results were analyzed for an interaction effect by fitting multivariate models which included EHR interface, order of presentation, and an interaction term.
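One way to implement the block randomization described above is sketched below. Because the block size is not reported, it assumes each block contains all four presentation sequences of the 2 × 2 design (which case comes first crossed with which condition comes first) exactly once; the case and condition labels are hypothetical.

```python
from __future__ import annotations

import itertools
import random

# Hypothetical labels for the two case scenarios and the two conditions
CASES = ("case_A", "case_B")
CONDITIONS = ("usual_EHR", "usual_EHR_plus_Disease_Manager")


def all_sequences() -> list[tuple]:
    """The 4 presentation sequences: case order crossed with condition order."""
    return [
        tuple(zip(case_order, cond_order))
        for case_order in itertools.permutations(CASES)
        for cond_order in itertools.permutations(CONDITIONS)
    ]


def block_randomize(n_participants: int, seed: int | None = None) -> list[tuple]:
    """Assign sequences in shuffled blocks so that each block of 4 is balanced."""
    rng = random.Random(seed)
    base = all_sequences()
    schedule: list[tuple] = []
    while len(schedule) < n_participants:
        block = list(base)  # each block contains every sequence exactly once
        rng.shuffle(block)
        schedule.extend(block)
    return schedule[:n_participants]
```

With 12 participants every sequence is used exactly 3 times; a 13th participant starts a new block, so exact balance holds only for completed blocks.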

All statistical analyses were performed using R version 3.6.1 (R Foundation for Statistical Computing, Vienna, Austria) and 2-tailed tests. P values <.05 were considered significant.

Qualitative analysis

Qualitative analysis used an inferential open-coding approach.33 Three investigators (C.R.W., T.T., R.L.C.) with doctoral training and expertise in cognitive psychology, human factors, biomedical informatics, and clinical practice individually reviewed the first transcript to create precodes, as suggested by Patton.34 The coding constructs were clarified through iterative discussion across multiple meetings to identify emergent constructs. The process was repeated iteratively with further transcripts until no new coding categories emerged. R.L.C. and T.T. continued to code in parallel with consensus discussion until their kappa coefficient for agreement reached 0.54, in keeping with an established definition of “moderate agreement” being a Cohen’s kappa >0.40.35 Remaining transcripts were coded by R.L.C., with consensus review of final codes. Group review of quotations across transcripts was conducted to identify emergent themes.
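For reference, Cohen's kappa compares the observed agreement p_o with the agreement p_e expected by chance from each coder's marginal code frequencies, kappa = (p_o - p_e) / (1 - p_e). The sketch below assumes each coded unit (eg, a transcript segment) receives exactly one code from each coder; the study does not state its unit of analysis, and qualitative software such as NVivo may compute agreement over different units.

```python
from __future__ import annotations

from collections import Counter


def cohens_kappa(coder1: list, coder2: list) -> float:
    """Cohen's kappa for two coders assigning one code per unit."""
    if len(coder1) != len(coder2) or not coder1:
        raise ValueError("need two equal-length, non-empty code lists")
    n = len(coder1)
    # Observed agreement: share of units given the same code
    p_observed = sum(a == b for a, b in zip(coder1, coder2)) / n
    # Chance agreement from each coder's marginal code frequencies
    c1, c2 = Counter(coder1), Counter(coder2)
    p_expected = sum((c1[k] / n) * (c2[k] / n) for k in set(c1) | set(c2))
    if p_expected == 1:
        raise ValueError("kappa is undefined when chance agreement is 1")
    return (p_observed - p_expected) / (1 - p_expected)
```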

Reliability and validity were established according to verification strategies recommended by Morse et al.36 Methodologic coherence was established through saturation during transcript review, with constructs replicated across transcripts. Investigator responsiveness was enhanced by the diversity of experience of the investigators and intensive discussion, which allowed for theory development. Although a general theoretical perspective was used in interview design, investigators did not attempt to validate those constructs during review, but rather used an open-coding approach to allow themes to emerge.

RESULTS

Prototype functionality

The resulting prototype Disease Manager COPD module is a dynamic integrated display which allows users to see relevant information, update clinical data, receive decision support, and place orders relevant to the patient’s current COPD clinical status (Figure 1). The application is optionally available from within the usual EHR.

Users can either view all Disease Manager recommendations at once (Figure 1, item 1) or from a disease-specific tab (Figure 1, item 2). For the test patient displayed in Figure 1, the patient only has COPD, so these views are identical. Other modules, such as for hypertension and diabetes, would automatically display and be incorporated into the “All” view when applicable.

Disease-oriented information is provided for each care domain, such as COPD staging and medications (Figure 1, item 3). Users can at a glance determine the overall status of each domain (Figure 1, item 4), represented pictorially as a thumbs-up when no action is needed, a question mark when further information is needed, a caution sign when the problem may require attention (eg, an intervention that is due soon), an alarm when the problem requires action, or a snooze icon when the user has chosen to postpone an item.
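The five status icons amount to a small state model. The sketch below shows one hypothetical way to derive a status for a preventive item (eg, a vaccination) from its due date; the 30-day "due soon" window, the precedence given to snoozing, and all names are assumptions for illustration, not Disease Manager's actual logic.

```python
from __future__ import annotations

from datetime import date, timedelta
from enum import Enum
from typing import Optional


class DomainStatus(Enum):
    OK = "thumbs-up"  # no action needed
    NEEDS_INFO = "question mark"  # further information needed
    CAUTION = "caution sign"  # may require attention, eg due soon
    ALARM = "alarm"  # requires action
    SNOOZED = "snooze"  # user chose to postpone


DUE_SOON = timedelta(days=30)  # hypothetical "due soon" window


def preventive_item_status(
    due: Optional[date], today: date, snoozed_until: Optional[date] = None
) -> DomainStatus:
    """Hypothetical status rule for a preventive item such as a vaccination."""
    if snoozed_until is not None and today < snoozed_until:
        return DomainStatus.SNOOZED  # an active snooze takes precedence
    if due is None:
        return DomainStatus.NEEDS_INFO  # cannot judge without a due date
    if due <= today:
        return DomainStatus.ALARM  # due now or overdue
    if due - today <= DUE_SOON:
        return DomainStatus.CAUTION
    return DomainStatus.OK
```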

A key function of Disease Manager is to integrate relevant information within the EHR obtained through FHIR queries into a single integrated display. As an example, within the COPD staging and medications domain, available information from within the EHR is provided on the patient’s symptoms, active medications organized by drug class, and lab results (Figure 1, item 5). Pencil icons denote areas where the user can click to open modules for editing EHR data (Figure 1, item 6).
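A minimal sketch of the two steps this paragraph describes: building FHIR RESTful search URLs for the data the display needs (eg, `MedicationRequest?patient=...&status=active` or `Observation?patient=...&category=laboratory`), and grouping returned MedicationRequest resources by drug class. The base URL, the tiny substring-based `DRUG_CLASS` lookup, and the reliance on `medicationCodeableConcept.text` are assumptions; a production application would use coded terminology and would also need to handle `medicationReference`. No network call is made; the function operates on an already-retrieved search Bundle.

```python
from __future__ import annotations

from collections import defaultdict
from urllib.parse import urlencode


def fhir_search_url(base: str, resource_type: str, **params: str) -> str:
    """Build a FHIR RESTful search URL: [base]/[type]?name=value&..."""
    return f"{base.rstrip('/')}/{resource_type}?{urlencode(params)}"


# Hypothetical, deliberately tiny substring lookup; not a real terminology service
DRUG_CLASS = {
    "albuterol": "SABA",
    "tiotropium": "LAMA",
    "salmeterol": "LABA",
    "fluticasone": "ICS",
}


def medications_by_class(bundle: dict) -> dict[str, list[str]]:
    """Group MedicationRequest resources in a search Bundle by drug class."""
    groups: dict[str, list[str]] = defaultdict(list)
    for entry in bundle.get("entry", []):
        resource = entry.get("resource", {})
        if resource.get("resourceType") != "MedicationRequest":
            continue  # skip other resource types in the Bundle
        # Only medicationCodeableConcept.text is read; medicationReference is ignored
        name = resource.get("medicationCodeableConcept", {}).get("text", "")
        drug_class = next(
            (cls for drug, cls in DRUG_CLASS.items() if drug in name.lower()), "Other"
        )
        groups[drug_class].append(name)
    return dict(groups)
```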

Finally, for each domain, recommendations are provided in the form of actionable one-click buttons (Figure 1, items 7-9). These buttons support actions such as inputting further clinical information (Figure 1, item 7), placing orders (Figure 1, item 8), or opening a separate SMART on FHIR application as a submodule (Figure 1, item 9). Importantly, the display is dynamic. Figure 1 shows the provider’s initial view; after the provider performs the recommended action of inputting the patient’s COPD symptoms, the display will refresh with appropriate orderable medications as determined by the patient’s symptoms and current medications.
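The dynamic behavior can be understood as recomputing recommendations from the current clinical state after every user input, rather than patching the display piecemeal. The rules below are hypothetical and deliberately simplified for illustration; they are not the GOLD algorithm as implemented in Disease Manager, and the class and function names are assumptions.

```python
from __future__ import annotations

from dataclasses import dataclass, replace
from typing import Optional


@dataclass(frozen=True)
class CopdState:
    dyspnea: Optional[bool]  # None until the user records symptoms
    active_classes: frozenset  # drug classes already prescribed, eg {"SABA"}


def copd_recommendations(state: CopdState) -> list[str]:
    """Hypothetical, simplified rules for illustration only."""
    if state.dyspnea is None:
        return ["Enter COPD symptoms"]  # ask for missing information first
    recs = []
    if "SABA" not in state.active_classes:
        recs.append("Order rescue inhaler")
    if state.dyspnea and not state.active_classes & {"LABA", "LAMA"}:
        recs.append("Order long-acting bronchodilator")
    return recs


def record_symptoms(state: CopdState, dyspnea: bool) -> CopdState:
    # Return a new state; the display then re-renders from copd_recommendations()
    return replace(state, dyspnea=dyspnea)
```

For a patient with only a rescue inhaler and no recorded symptoms, the function returns only the prompt to enter symptoms; after `record_symptoms(state, True)`, re-running it yields the long-acting bronchodilator suggestion. Keeping the rule function pure makes each refresh a deterministic function of the chart data and user inputs.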

Study participants

The 15 participants were primary care providers from 10 UUH clinics geographically spread throughout the Salt Lake City area. Owing to recording equipment failure, 2 were not analyzed. The remaining 13 study participants were diverse in their specialties and clinical experience (Table 1).

Table 1.

Demographic characteristics of participants completing the study

| Characteristic | n (%) |
|---|---|
| Gender | |
| Female | 8 (62) |
| Male | 5 (38) |
| Age group | |
| 30-39 y | 7 (54) |
| 40-54 y | 5 (38) |
| 55+ y | 1 (8) |
| Medical specialty | |
| Family medicine | 8 (62) |
| Internal medicine | 2 (15) |
| Internal medicine/pediatrics | 3 (23) |
| Profession | |
| Physician | 10 (77) |
| Nurse practitioner | 1 (8) |
| Physician assistant | 2 (15) |
| Time in medical practice | |
| <5 y | 4 (31) |
| 5-9 y | 2 (15) |
| 10+ y | 7 (54) |
| Time using Epic electronic health record | |
| <5 y | 3 (23) |
| 5-9 y | 6 (46) |
| 10+ y | 4 (31) |
| Current clinical FTE | |
| <0.3 FTE | 1 (8) |
| 0.3-0.49 FTE | 3 (23) |
| 0.5-0.79 FTE | 4 (31) |
| 0.8-1.0 FTE | 5 (38) |
| Frequency seeing COPD patients | |
| Monthly or less | 2 (15) |
| Several times a month | 6 (46) |
| At least weekly | 3 (23) |
| Several times a week | 2 (15) |

Values are n (%).

COPD: chronic obstructive pulmonary disease; FTE: full-time equivalent.

Clinical quality and efficiency outcomes

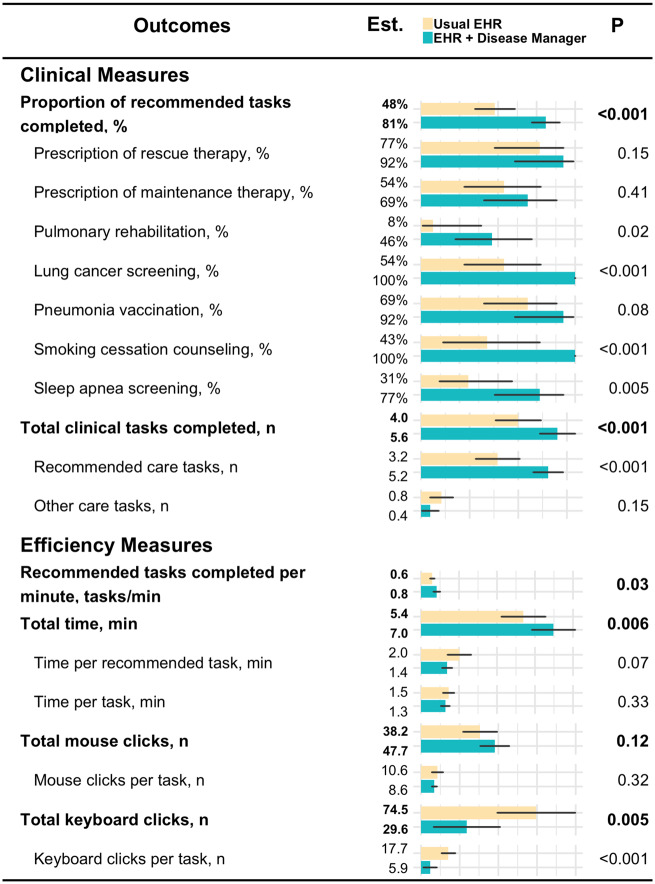

Overall, performance was significantly better when Disease Manager was used. Participants performed more overall recommended care tasks using Disease Manager vs the usual EHR (81% vs 48%; P < .001), as well as more recommended tasks per minute (0.8 vs 0.6; P = .03). The number of keystrokes per task was reduced when using Disease Manager (6 vs 18; P < .001) (Figure 2).

Figure 2.

Clinical and efficiency measures. Clinical and efficiency outcomes of participant tests using both the usual electronic health record (EHR) and the usual EHR + Disease Manager. Generalized linear models were used to estimate means and 95% confidence intervals. Generalized estimating equations were used to account for correlation within subjects.

Sessions were longer with Disease Manager than with the usual EHR (7.0 minutes vs 5.4 minutes; P = .006), consistent with the additional care tasks completed (Figure 2). Of the 7 recommended COPD care tasks measured, participants using Disease Manager were significantly more likely to provide lung cancer screening, sleep apnea screening, pulmonary rehabilitation referral, and smoking cessation counseling (Figure 2).

Participant-reported outcomes

Participant self-ratings of all NASA-TLX workload measures showed a trend favoring Disease Manager. However, only scores for frustration were statistically significant (27 vs 38; P = .04) (Figure 3).

Figure 3.

Participant-reported workload measures. Following each case scenario, participants rated each workload domain on a visual analog scale. This was converted to a score of 0-100 points. Estimated marginal means and P values are based on generalized linear models. Generalized estimating equations are used to account for correlation within subjects. EHR: electronic health record.

Effect of case scenario ordering

Multivariate modeling was conducted for all quantitative measures to evaluate potential interaction between the tool used (usual EHR or usual EHR + Disease Manager) and order of presentation. The interaction term was not significant in the models for any clinical outcome, including the primary outcome of percentage of ideal care tasks completed. A significant interaction term was noted for total keystrokes and total mouse clicks (Supplementary Figure C1).

For participant-reported workload measures, all domains except for performance had a significant interaction, with poorer scores for the usual EHR if participants first completed a case scenario with access to Disease Manager (Supplementary Figure C2).

Qualitative analysis

Participants’ narratives regarding usability were in 4 general thematic areas: (1) trust in the system is foundational to acceptance, (2) lack of action-feedback disrupts attention, (3) mental model matching is key to cognitive processing, and (4) navigational affordances (cues to action) decrease cognitive load. Together, these results provided actionable insights (Table 2). Supplementary Appendix B contains a full map of interview concepts and representative quotations.

Table 2.

User feedback and developer response. Example qualitative themes elicited during semi-structured interviews which generated actionable insights for improving Disease Manager.

| Construct | Representative quote(s) | Action taken by developers |
|---|---|---|
| Trust: Is the user confident in the validity of information and recommendations? | | |
| Trust: Is the user confident in the validity of information and recommendations? | | |
| Action/feedback: How do users identify the results of their actions taken within the interface? | | |
| Mental model mapping: How does the user’s conception of the information space match the display? | | |
| Affordance: How intuitive is the display in supporting the user’s information needs, navigation, and desired actions? | | |

COPD: chronic obstructive pulmonary disease; EHR: electronic health record; GOLD: Global Initiative for Chronic Obstructive Lung Disease.

Theme 1. Trust in the system is foundational to acceptance: Several participants expressed a general distrust of information within the usual EHR that carried over into their experience with Disease Manager. These users would leave the integrated display of Disease Manager in order to search for confirmatory information within the rest of the EHR. One participant explained his actions as:

“I was checking her age to make sure that it [Disease Manager’s vaccine recommendation] was the right one… right now our [usual EHR] pulls out the pneumonia vaccine for a lot of patients under the age of 65 that are incorrect.”

Participants emphasized the importance of addressing their validity concerns completely before launching Disease Manager in a clinical environment, with one participant saying:

“If I still have to click all over the chart to confirm that this is all accurate, then I’m eventually going to stop using it.”

Theme 2. Lack of action-feedback disrupts attention: Participants were highly sensitive to action feedback when interacting with the novel interface of Disease Manager. When the interface behaved unexpectedly in response to user actions, attention to the task at hand was disrupted. This was a particular issue when attempting to place orders within Disease Manager, as several participants were not confident that their actions within Disease Manager would carry over into the main EHR order input system. Participants made statements such as:

“I figured something was happening when I clicked these. It just wasn’t popping up the way I expected it to.”

Based on these observations, developers modified Disease Manager to provide improved action-feedback for the ordering system.

Theme 3. Mental model matching is key to cognitive processing: This theme emerged in the user experience narratives and also in the participants’ more concrete descriptions of their processes.

Participants reported a disrupted user experience due to a mental model mismatch regarding conceptualization of shortness of breath, also known as dyspnea. Both cases described a patient with some degree of shortness of breath (Supplementary Appendix A). In accordance with GOLD guidelines, Disease Manager recommends pharmacotherapy in response to this dyspnea. However, when Disease Manager asked participants to categorize the patient’s symptoms, they only selected “dyspnea present” roughly half the time. Participants reported being unsure how to reconcile the binary “dyspnea present/not present” model used by Disease Manager with their more nuanced conceptualization of the patient’s breathing status. Participants asked clarifying questions (which per protocol were not answered) such as:

“Dyspnea… currently or in the past? Or with exertion?”

“Whose definition of dyspnea are you using?”

Developers used this feedback to revise Disease Manager to better conform to users’ mental models of COPD symptoms (Table 2).
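One possible way to replace a binary “dyspnea present/not present” input with the graded scales used in GOLD symptom assessment is sketched below: the modified Medical Research Council (mMRC) dyspnea grade (0-4) or the COPD Assessment Test (CAT) score (0-40), with the GOLD thresholds of mMRC ≥ 2 or CAT ≥ 10 marking more symptomatic patients. This is an illustrative assumption, not necessarily the revision the developers made, and treating either threshold as sufficient is itself a design choice.

```python
from __future__ import annotations

from typing import Optional


def more_symptomatic(mmrc: Optional[int] = None, cat: Optional[int] = None) -> Optional[bool]:
    """Classify symptom burden from graded scales instead of a yes/no dyspnea flag."""
    if mmrc is not None and mmrc not in range(5):
        raise ValueError("mMRC grade must be 0-4")
    if cat is not None and not 0 <= cat <= 40:
        raise ValueError("CAT score must be 0-40")
    if mmrc is None and cat is None:
        return None  # symptoms not yet assessed: prompt the user
    # Either scale crossing its threshold counts as "more symptomatic"
    return (mmrc is not None and mmrc >= 2) or (cat is not None and cat >= 10)
```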

Regarding actual processes, participants’ mental models of their approach to patient care consistently mapped onto tasks related to either information gathering or medical reasoning. This mental model transcended the EHR itself and was present in every transcript regardless of whether the usual EHR or Disease Manager was in use (see Supplementary Appendix B). These categories reflect the SOAP (subjective, objective, assessment, plan) format of a traditional medical plan of care.37 Participants described subcategories of medical reasoning that included prescribing pharmacotherapy, providing preventive care, and assessing patient preferences for shared decision making. These subcategories were paralleled in the concept-oriented design of the Disease Manager display (Figure 1).

Theme 4. Navigational affordances (cues to action) decrease cognitive load: Participants reported desiring cues to support the processes of information gathering and medical reasoning. Participants made repeated comments that the usual EHR does not always support navigation between tasks with statements such as:

“It [the usual EHR] was pretty frustrating because I had to go to the web, I had to go to place orders, I had to go to an outside app… whereas on the other one [Disease Manager] it was all pretty much right there.”

The integrated display of Disease Manager supported the tightly linked processes of information gathering and clinical decision making, thereby requiring less use of working memory and decreasing cognitive load. As one participant put it:

“[In the usual EHR] the medications are in one place, whether or not they’ve had the screening done is in a different place… [Disease Manager] consolidates that and then gives you a reminder on the things we underdo.”

DISCUSSION

Disease Manager uses a single dynamic interface to address provider information needs, support clinical decision making, and streamline order entry (Figure 1), in keeping with existing research on integrated displays.4,17,19,20 Participants’ own observations show that Disease Manager supports the same process of information gathering and medical reasoning as the usual EHR. However, the integrated display reduced the number of items users were required to hold in memory during these processes, thus decreasing cognitive load.

Integration of qualitative and quantitative data

Qualitative results provided context for the quantitative results, which showed that Disease Manager increased quality with a mixed impact on efficiency. We found substantial congruence among data sources. Quantitative data identified user behaviors of interest, such as increased provision of preventive care with Disease Manager. Qualitative data then explained these findings through participants’ observations that Disease Manager helped them direct attention toward preventive tasks.

Care quality and efficiency

Participants using Disease Manager completed more recommended COPD care by spending additional time providing that care. These additional care tasks were largely preventive tasks such as lung cancer screening that were not a part of their workflow in the usual EHR. This reflects the known clinical reality that prevention is de-emphasized in ambulatory care.9–12,22,38,39 These findings suggest that our concept-based display, which groups together the acute and preventive aspects of disease management, can bring preventive tasks to the provider’s attention without the increased cognitive load demanded by a source-based display.
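The difference between a source-based and a concept-based display can be sketched as two groupings of the same records. In the hypothetical Python example below, the concept labels loosely follow the medical-reasoning subcategories participants described (pharmacotherapy, preventive care, shared decision making); the record contents, the tuple layout, and the function names are illustrative assumptions, not Disease Manager's data model.

```python
from collections import defaultdict

# Hypothetical source-oriented records: (EHR location, clinical concept, item).
# In a source-based display, each item is found under its EHR location.
RECORDS = [
    ("Medication list", "Pharmacotherapy", "Long-acting bronchodilator inhaler"),
    ("Health maintenance", "Preventive care", "Lung cancer screening due"),
    ("Immunizations", "Preventive care", "Pneumococcal vaccination"),
    ("Encounter notes", "Shared decision making", "Patient prefers once-daily dosing"),
]


def group_by(records, key_index):
    """Group each record's item text (position 2) under the field at key_index."""
    view = defaultdict(list)
    for record in records:
        view[record[key_index]].append(record[2])
    return dict(view)


def source_view(records):
    """Source-based display: one section per EHR location."""
    return group_by(records, 0)


def concept_view(records):
    """Concept-based display: one section per clinical concept."""
    return group_by(records, 1)


if __name__ == "__main__":
    for concept, items in concept_view(RECORDS).items():
        print(f"{concept}: {', '.join(items)}")
```

In the concept view, the two preventive items that live in separate EHR locations appear side by side, which is the kind of consolidation the participant quoted under Theme 4 described.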

The decrease in keystrokes per task (6 vs 18) among first-time users of Disease Manager suggests that substantial efficiency improvements over the present system are possible. As we continue to develop Disease Manager, our goal is to maintain the quality gains demonstrated in the present study while translating these early efficiency gains into actual time saved. Our team has previously brought to production a SMART on FHIR application that simultaneously saved time and improved quality of care.26
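As a worked check on the keystroke figures, the relative reduction and ratio with Disease Manager are:

```latex
\[
\text{relative reduction} = \frac{18 - 6}{18} = \frac{12}{18} \approx 0.67,
\qquad
\text{ratio} = \frac{18}{6} = 3
\]
```

That is, first-time users needed roughly two-thirds fewer keystrokes per task, a threefold difference. Because participants also spent more time overall with Disease Manager, this per-task reduction does not by itself imply time saved.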

Effect of case scenario ordering

We had been concerned that participants randomly assigned to use Disease Manager first might, prompted by that first scenario, provide different care with the usual EHR than they normally would. Such an interaction would artificially improve participant performance with the usual EHR.

However, our analysis did not show a significant interaction effect for any clinical or time-related outcome. There were significant interactions for mouse clicks, keystrokes, and participant-rated workload, all of which were higher among participants who used the usual EHR second (Supplementary Appendix C). We hypothesize that these results reflect participants’ largely unsuccessful attempts to replicate the Disease Manager workflow within their usual EHR, which is not designed to support concept-oriented workflows. This hypothesis is supported by participant comments such as “I found myself thinking about the previous trial where I got to use the tool and trying to recreate what I had used in the tool.”
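An order-by-condition interaction of the kind examined here can be summarized, for illustration, as a difference of differences: the Disease Manager minus usual-EHR difference among participants who used Disease Manager first, minus the same difference among those who used the usual EHR first. The Python sketch below computes this point estimate from hypothetical mouse-click counts. It is not the study's statistical model; formal inference would require an appropriate model (for example, a mixed-effects model with a participant-level random effect).

```python
from statistics import mean

# Hypothetical records: (order group, condition, outcome such as mouse clicks).
RECORDS = [
    ("DM first", "Disease Manager", 20), ("DM first", "Disease Manager", 22),
    ("DM first", "Usual EHR", 60), ("DM first", "Usual EHR", 64),
    ("EHR first", "Disease Manager", 21), ("EHR first", "Disease Manager", 23),
    ("EHR first", "Usual EHR", 40), ("EHR first", "Usual EHR", 44),
]


def cell_means(records):
    """Mean outcome for each (order group, condition) cell."""
    cells = {}
    for order, condition, value in records:
        cells.setdefault((order, condition), []).append(value)
    return {cell: mean(values) for cell, values in cells.items()}


def interaction_contrast(records):
    """Difference between the two order groups' condition effects.

    A value near zero suggests the order of exposure did not change the
    Disease Manager vs usual EHR difference; a value far from zero suggests it did.
    """
    m = cell_means(records)
    effect_dm_first = m[("DM first", "Disease Manager")] - m[("DM first", "Usual EHR")]
    effect_ehr_first = m[("EHR first", "Disease Manager")] - m[("EHR first", "Usual EHR")]
    return effect_dm_first - effect_ehr_first


if __name__ == "__main__":
    print(interaction_contrast(RECORDS))
```

In this made-up example the usual EHR requires more clicks when it is used second, so the contrast is negative, which mirrors the direction described above for mouse clicks; the numbers themselves are invented.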

Qualitative results

Nearly 30 years ago, Dr Forsythe wrote that mixed methods were critical to the evaluation of medical software, given that quantitative measures rarely provide information about user acceptance.2 The qualitative portion of the present study highlights both EHR users’ enthusiasm for our integrated display and their concerns about the time required to learn new workflows and follow additional clinical recommendations. These results are similar to those of a recent study of a concept-oriented EHR add-on in the intensive care unit setting, which found improved usability but an initial tension in introducing a new tool into a preexisting workflow.40 Users reported that reliable information, appropriate action feedback, shared mental models, and affordances to guide navigation would be key to their eventual adoption of Disease Manager. These themes generated practical suggestions regarding information presentation, interface design, and system features, which have been used to improve Disease Manager (Table 2 and Supplementary Appendix B).

Limitations and future research

This study had limitations. First, our analysis was conducted at a single institution using a single EHR. The standards-based SMART on FHIR approach should allow translation to other health systems and to other EHR vendors, although some adaptation to variations in institutional care practices and technological resources will likely be necessary.24,41
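Part of what makes a SMART on FHIR app portable is that it requests data through the standard FHIR REST search API rather than a vendor-specific interface, so the same query can, in principle, be issued against any conformant server by changing only the base URL. The Python sketch below builds such a Condition search. The base URLs are hypothetical, the SNOMED CT code 13645005 (chronic obstructive lung disease) is our assumed choice of concept code, and a real app would also need the SMART authorization step and would still face the institution-specific coding variation noted above.

```python
from urllib.parse import urlencode

SNOMED_CT = "http://snomed.info/sct"
COPD_CONCEPT = "13645005"  # assumed SNOMED CT concept: chronic obstructive lung disease


def copd_condition_search(fhir_base: str, patient_id: str) -> str:
    """Build a FHIR search URL of the form [base]/Condition?patient=...&code=system|code."""
    params = {
        "patient": patient_id,
        "code": f"{SNOMED_CT}|{COPD_CONCEPT}",  # FHIR token search: system|code
    }
    # urlencode percent-encodes ':', '/', and '|' in the token value.
    return f"{fhir_base.rstrip('/')}/Condition?{urlencode(params)}"


if __name__ == "__main__":
    # Hypothetical servers at two institutions: only the base URL differs.
    for base in ("https://ehr-a.example.org/fhir", "https://ehr-b.example.org/fhir/"):
        print(copd_condition_search(base, "example-patient"))
```

Keeping the query logic independent of the server address is what lets the clinical logic travel; the adaptation the paragraph anticipates lies mainly in local coding practices and data availability.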

The prototype testing used standardized COPD case scenarios and included assumptions such as disregarding insurance limitations or potential alternate diagnoses (Supplementary Appendix A). While such assumptions limit real-world generalizability, minimizing variability in early-stage testing allowed us to quickly identify the most common usability issues using fewer resources.

This study describes the use of Disease Manager to manage a single condition, COPD. Support is being actively developed for other chronic diseases such as hypertension and diabetes in order to improve usefulness of the application across diverse patient populations.

Finally, while the sample size was sufficient to reach saturation in the qualitative analyses, it was more limited for the quantitative analyses. Although several large differences were detected, more moderate effects may have gone undetected, especially interaction effects. We also did not adjust for multiple testing; however, owing to their magnitude, many of the effects would have remained significant even with such an adjustment. These limitations were acceptable in the context of formative prototype evaluation. A larger sample size will be warranted as we proceed to further testing in the clinical setting.
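For readers unfamiliar with multiple-testing adjustment, the Python sketch below implements the Holm step-down procedure, one common alternative to the simpler Bonferroni correction (which multiplies every P value by the number of tests). The P values in the example are hypothetical and are not the study's results; the sketch only shows how an adjustment can leave a large effect significant while moderate ones lose significance.

```python
def holm_adjust(p_values):
    """Holm step-down adjusted P values, returned in the original order.

    The k-th smallest of m P values is multiplied by (m - k + 1), capped at 1,
    and a running maximum keeps the adjusted values monotone.
    """
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_max = 0.0
    for rank, i in enumerate(order):  # rank is 0-based, so m - rank = m - k + 1
        candidate = min(1.0, (m - rank) * p_values[i])
        running_max = max(running_max, candidate)
        adjusted[i] = running_max
    return adjusted


if __name__ == "__main__":
    raw = [0.001, 0.02, 0.04, 0.30]  # hypothetical P values for four outcomes
    alpha = 0.05
    for p, p_adj in zip(raw, holm_adjust(raw)):
        print(f"raw {p:.3f} -> adjusted {p_adj:.3f} significant={p_adj < alpha}")
```

With four tests, the smallest P value must fall below .05/4 = .0125 to survive the first step, which is why only very strong effects tend to remain significant after adjustment in small samples.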

CONCLUSION

With the emergence of SMART on FHIR add-on EHR applications, more customized EHR tools can be expected to enter the EHR marketplace.24,41 With this in mind, Dr Forsythe’s emphasis on participant observation as an ethnographic data-gathering method for understanding user needs and experience is more relevant than ever.1,2 Our mixed-methods approach, which simultaneously collected clinical and efficiency data along with qualitative user feedback, functioned well as a user-centered approach to evaluation.

The promising results generated from this approach have enabled us to produce a SMART on FHIR application for chronic disease management suitable for beta user testing in the clinical setting. As of February 2020, COPD and hypertension modules have been deployed in the production environment to evaluate their impact on provider efficiency and adherence to clinical guidelines.

FUNDING

This work was funded primarily by the University of Utah. Support for qualitative data collection was provided by the Health Studies Fund, Department of Family and Preventive Medicine, University of Utah School of Medicine (PI: RLC). Additional funding was provided by the National Institutes of Health (R38 HL 143605, “Utah Stimulating Access to Research in Residency”, trainee: RLC) and the Agency for Healthcare Research and Quality (R18 HS26198, “Scalable decision support and shared decision making for lung cancer screening”, PI: KK).

AUTHOR CONTRIBUTIONS

RLC had full access to all of the data in the study and takes responsibility for the integrity of the data and the accuracy of the data analysis. All authors were involved in concept and design. RLC, PVK, TT, and CRW were involved in acquisition, analysis, or interpretation of data. RLC, PVK, TT, TJR, and CRW were involved in drafting of the manuscript. All authors were involved in critical revision of the article for important intellectual content. RLC, PVK, and CRW were involved in statistical analysis. RLC and KK obtained funding. JPB, MCF, DKM, CN, SR-L, PBW, and DES were involved in administrative, technical, or material support. KK and CRW were involved in study supervision.

SUPPLEMENTARY APPENDIX MATERIAL

Supplementary Appendix material is available at Journal of the American Medical Informatics Association online.

CONFLICT OF INTEREST STATEMENT

CN, DKM, KK, MCF, PBW, and SR-L are co-inventors of the application described in the manuscript. The University of Utah is considering commercializing this application. CN is an unpaid Clinical Information Model Initiative Working Group co-chair at the nonprofit Health Level Seven (HL7) standard development organization. PBW reports consulting outside the submitted work with Phast Services in the area of OpenCDS and healthcare information exchange and interoperability standards. MCF reports consulting outside the submitted work with Hitachi. KK reports honoraria, consulting, or sponsored research outside the submitted work with McKesson InterQual, Hitachi, Pfizer, Premier, Klesis Healthcare, RTI International, Vanderbilt University, the University of Washington, the University of California at San Francisco, and the U.S. Office of the National Coordinator for Health IT (via ESAC, JBS International, A+ Government Solutions, Hausam Consulting, and Security Risk Solutions) in the area of health information technology. KK is also an unpaid board member of the nonprofit HL7 International health information technology standard development organization.

REFERENCES

- 1. Aydin CE, Forsythe DE. Implementing computers in ambulatory care: implications of physician practice patterns for system design. Proc AMIA Annu Fall Symp 1997; 1997: 677–81.

- 2. Forsythe DE, Buchanan BG. Broadening our approach to evaluating medical information systems. Proc Annu Symp Comput Appl Med Care 1991; 1991: 8–12.

- 3. Sheikh A, Sood HS, Bates DW. Leveraging health information technology to achieve the “triple aim” of healthcare reform. J Am Med Inform Assoc 2015; 22 (4): 849–56.

- 4. Liebman DL, Chiang MF, Chodosh J. Realizing the promise of electronic health records: moving beyond “Paper on a Screen.” Ophthalmology 2019; 126 (3): 331–4.

- 5. Krousel-Wood M, McCoy AB, Ahia C, et al. Implementing electronic health records (EHRs): health care provider perceptions before and after transition from a local basic EHR to a commercial comprehensive EHR. J Am Med Inform Assoc 2018; 25 (6): 618–26.

- 6. Powers EM, Shiffman RN, Melnick ER, et al. Efficacy and unintended consequences of hard-stop alerts in electronic health record systems: a systematic review. J Am Med Inform Assoc 2018; 25 (11): 1556–66.

- 7. Adler-Milstein J, Zhao W, Willard-Grace R, Knox M, Grumbach K. Electronic health records and burnout: time spent on the electronic health record after hours and message volume associated with exhaustion but not with cynicism among primary care clinicians. J Am Med Inform Assoc 2020; 27 (4): 531–8.

- 8. Kroth PJ, Morioka-Douglas N, Veres S, et al. Association of electronic health record design and use factors with clinician stress and burnout. JAMA Netw Open 2019; 2 (8): e199609.

- 9. Chen LM, Farwell WR, Jha AK. Primary care visit duration and quality: does good care take longer? Arch Intern Med 2009; 169 (20): 1866–72.

- 10. Bucher S, Maury A, Rosso J, et al. Time and feasibility of prevention in primary care. Fam Pract 2017; 34 (1): 49–56.

- 11. Shires DA, Stange KC, Divine G, et al. Prioritization of evidence-based preventive health services during periodic health examinations. Am J Prev Med 2012; 42 (2): 164–73.

- 12. Yarnall KSH, Pollak KI, Østbye T, et al. Primary care: is there enough time for prevention? Am J Public Health 2003; 93 (4): 635–41.

- 13. Miller GA. The magical number seven plus or minus two: some limits on our capacity for processing information. Psychol Rev 1956; 63 (2): 81–97.

- 14. Wickens CD. Multiple resources and performance prediction. Theor Issues Ergon Sci 2002; 3 (2): 159–77.

- 15. Lyell D, Magrabi F, Coiera E. The effect of cognitive load and task complexity on automation bias in electronic prescribing. Hum Factors 2018; 60 (7): 1008–21.

- 16. Rosenal TW, et al. Support for information management in critical care: a new approach to identify needs. Proc Annu Symp Comput Appl Med Care 1995; 1995: 2–6.

- 17. Zeng Q, Cimino JJ, Zou KH. Providing concept-oriented views for clinical data using a knowledge-based system: an evaluation. J Am Med Inform Assoc 2002; 9 (3): 294–305.

- 18. Wickens CD, Andre AD. Proximity compatibility and information display: effects of color, space, and object display on information integration. Hum Factors 1990; 32 (1): 61–77.

- 19. Dore L, et al. An object oriented computer-based patient record reference model. Proc Annu Symp Comput Appl Med Care 1995; 1995: 377–81.

- 20. Clarke MA, Steege LM, Moore JL, et al. Determining primary care physician information needs to inform ambulatory visit note display. Appl Clin Inform 2014; 5 (1): 169–90.

- 21. Salinas GD, Williamson JC, Kalhan R, et al. Barriers to adherence to chronic obstructive pulmonary disease guidelines by primary care physicians. Int J Chron Obstruct Pulmon Dis 2011; 6: 171–9.

- 22. Overington JD, Huang YC, Abramson MJ, et al. Implementing clinical guidelines for chronic obstructive pulmonary disease: barriers and solutions. J Thorac Dis 2014; 6 (11): 1586–96.

- 23. Zahnd WE, Eberth JM. Lung cancer screening utilization: a behavioral risk factor surveillance system analysis. Am J Prev Med 2019; 57 (2): 250–5.

- 24. Mandel JC, Kreda DA, Mandl KD, et al. SMART on FHIR: a standards-based, interoperable apps platform for electronic health records. J Am Med Inform Assoc 2016; 23 (5): 899–908.

- 25. Mandl KD, Gottlieb D, Ellis A. Beyond one-off integrations: a commercial, substitutable, reusable, standards-based, electronic health record-connected app. J Med Internet Res 2019; 21 (2): e12902.

- 26. Kawamoto K, Kukhareva P, Shakib JH, et al. Association of an electronic health record add-on app for neonatal bilirubin management with physician efficiency and care quality. JAMA Netw Open 2019; 2 (11): e1915343.

- 27. Ratwani RM, Benda NC, Hettinger AZ, et al. Electronic health record vendor adherence to usability certification requirements and testing standards. JAMA 2015; 314 (10): 1070–1.

- 28. Mazur LM, Mosaly PR, Moore C, et al. Association of the usability of electronic health records with cognitive workload and performance levels among physicians. JAMA Netw Open 2019; 2 (4): e191709.

- 29. Singh D, Agusti A, Anzueto A, et al. Global strategy for the diagnosis, management, and prevention of chronic obstructive lung disease: the GOLD science committee report 2019. Eur Respir J 2019; 53 (5): 1900164.

- 30. Nielsen J. Finding usability problems through heuristic evaluation. In: Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (CHI ’92); Monterey, CA; 1992: 373–80.

- 31. Hart SG, Staveland LE. Development of NASA-TLX (Task Load Index): results of empirical and theoretical research. Adv Psychol 1988; 52: 139–83.

- 32. Vallacher RR, Wegner DM. What do people think they’re doing? Action identification and human behavior. Psychol Rev 1987; 94 (1): 3–15.

- 33. Gale NK, Heath G, Cameron E, et al. Using the framework method for the analysis of qualitative data in multi-disciplinary health research. BMC Med Res Methodol 2013; 13 (1): 117.

- 34. Patton M. Qualitative Research & Evaluation Methods. 4th ed. Thousand Oaks, CA: Sage; 2015.

- 35. Landis JR, Koch GG. The measurement of observer agreement for categorical data. Biometrics 1977; 33 (1): 159–74.

- 36. Morse JM, Barrett M, Mayan M, et al. Verification strategies for establishing reliability and validity in qualitative research. Int J Qual Methods 2002; 1 (2): 13–22.

- 37. Pearce PF, Ferguson LA, George GS, Langford CA. The essential SOAP note in an EHR age. Nurse Pract 2016; 41 (2): 29–36.

- 38. Sebo P, Maisonneuve H, Cerutti B, et al. Overview of preventive practices provided by primary care physicians: a cross-sectional study in Switzerland and France. PLoS One 2017; 12 (9): e0184032.

- 39. Garrison GM, Traverse CR, Fish RG. A case study of visit-driven preventive care screening using clinical decision support: the need to redesign preventive care screening. Health Serv Res Manag Epidemiol 2016; 3: 2333392816650344.

- 40. Dziadzko MA, Herasevich V, Sen A, et al. User perception and experience of the introduction of a novel critical care patient viewer in the ICU setting. Int J Med Inform 2016; 88: 86–91.

- 41. Bloomfield RA, Polo-Wood F, Mandel JC Jr, et al. Opening the Duke electronic health record to apps: implementing SMART on FHIR. Int J Med Inform 2017; 99: 1–10.
