Abstract

Objective

In 2009, a prominent national report stated that 9% of US hospitals had adopted a “basic” electronic health record (EHR) system. This statistic was widely cited and became a memetic anchor point for EHR adoption at the dawn of HITECH. However, its calculation relies on specific treatment of the data; alternative approaches may have led to a different sense of US hospitals’ EHR adoption and different subsequent public policy.

Materials and Methods

We reanalyzed the 2008 American Hospital Association Information Technology supplement and complementary sources to produce a range of estimates of EHR adoption. Estimates included the mean and median number of EHR functionalities adopted, figures derived from an item response theory-based approach, and alternative estimates from the published literature. We then plotted an alternative definition of national progress toward hospital EHR adoption from 2008 to 2018.

Results

By 2008, 73% of hospitals had begun the transition to an EHR, and the majority of hospitals had adopted at least 6 of the 10 functionalities of a basic system. In the aggregate, national progress toward basic EHR adoption was 58% complete, and, when accounting for measurement error, we estimate that 30% of hospitals may have adopted a basic EHR.

Discussion

The approach used to develop the 9% figure resulted in an estimate at the extreme lower bound of what could be derived from the available data and likely did not reflect hospitals’ overall progress in EHR adoption.

Conclusion

The memetic 9% figure shaped nationwide thinking and policy making about EHR adoption; alternative representations of the data may have led to different policy.

Keywords: electronic health records; American Recovery and Reinvestment Act; hospitals; adoption; validity and reliability

INTRODUCTION

In 2009, the Health Information Technology for Economic and Clinical Health (HITECH) Act was enacted to stimulate information technology (IT) adoption by hospitals and healthcare providers.1,2 At that time, the most prominently reported data on electronic health record (EHR) adoption in hospitals were derived from a New England Journal of Medicine (NEJM) paper by Jha et al reporting that 9% of hospitals had adopted what, by a very specific definition, constituted a “basic” EHR.3 Since 2009, the “9%” figure has been the primary baseline for reporting progress in hospital EHR adoption and the success of the HITECH Act.4 References to this figure have, in general, overlooked the specific definition of a “basic” EHR, and how a hospital’s achievement of this status was calculated. In the original study, achieving “basic” EHR status required that a hospital adopt all of a set of 10 specific components. Many of the components included in this definition were “basic” only in comparison to the definition of a “comprehensive” EHR offered in the same study. A press release by the Centers for Medicare & Medicaid Services highlighted the advanced nature of the “basic” EHR, describing it as including “advanced components that go even beyond the requirements of Meaningful Use (MU) Stage 1.”5 Moreover, the calculation gave zero credit to hospitals that had achieved 7, 8, or even 9 of the 10 criteria.

The “9%” statistic very quickly took on great significance and was widely cited in a variety of ways; however, context for its use was often absent from these citations. For example, a 2010 perspective paper by then-National Coordinator Dr. David Blumenthal (a coauthor of the original NEJM study) invoked the NEJM paper with the proposition that “the proportion of US health care professionals and hospitals that have begun the transition to electronic health information systems is remarkably small.”6 A 2013 White House press release announced that “In 2008, only 17 percent of physicians were using advanced electronic health records and just 9 percent of hospitals had adopted electronic health records.”7 Sources such as The Wall Street Journal and Kaiser Health News similarly omitted the “basic” modifier, while others, such as The New York Times, used the “basic” modifier without noting the components that are required to qualify as “basic.”8–10 These unqualified statements could readily be misinterpreted as meaning that only 9% of hospitals had adopted any form of EHR, and therefore that 91% of hospitals were exclusively using paper records.

The “9%” statistic also became an important metric for measuring HITECH’s success. Several papers published by subsequent national coordinators cited the NEJM paper as defining the starting point of EHR adoption.11–13 The Office of the National Coordinator’s data briefs and dashboards also commonly used the 9% figure as a baseline for hospital EHR adoption.14–16 These data were picked up by outlets including the Electronic Health Reporter, FierceHealthcare, and athenahealth’s athenaInsight, all of which invoked some form of the “9%” statistic as a baseline and generally did not include a clear definition of “basic.”17–19

Dependence on the “9%” statistic may have also led to the impression that hospitals were lagging behind office-based providers in adoption of EHRs by contrasting this result with a study by the same group reporting that 17% of office-based physicians had adopted a “basic” EHR, as in the White House press release above.7,20 This comparison was statistically invalid and misleading since office-based providers were required to have all of 8 components to achieve a basic EHR, whereas hospitals were required to have all of 10. It is possible that a perception of office-based EHR adoption exceeding that of hospitals in 2008 influenced the structure of incentives authorized by Congress. Physicians who participated in MU received on average $31 000 in incentive payments while hospitals that participated received, on average, $3.3 million.21 By 2019, a far larger proportion of hospitals than physicians participated in MU; over the life of the program, 98% of eligible hospitals participated and received $21.8 billion in federal MU incentives, while 60% of eligible office-based providers participated and received $16.2 billion.22,23

These factors raise the important question: What was the actual state of hospital EHR adoption in 2008 at the dawn of HITECH? We seek to answer this question through 2 approaches. First, using the same survey data source the authors of the NEJM paper used to arrive at the “9%” figure, in this article we offer several adoption metrics and compute from these metrics a broad range of adoption estimates with 9% as the lower bound. Second, we draw upon similar data sources and evidence from other published studies to offer an additional range of estimates. Based on the results drawn from these 2 approaches, we argue that a more valid portrayal of hospital adoption in 2008 would place this figure much higher than 9% and that measures of hospitals’ progress over time should use a different baseline.

We also offer in this article a case study illustrating that choices of metrics matter enormously for policy making at any scale because metrics can become memes and their meaning can get distorted through inaccurate or incomplete reporting. Moreover, choices of metrics reverberate into the future. In the case of EHR adoption, continued overreliance on this 9% figure, especially when coupled with misunderstanding of what is meant by a “basic” EHR, has the potential to influence future policy making as we move out of the HITECH era, for example, by leading to overestimates of the impact of an incentive program.

MATERIALS AND METHODS

Our primary analysis is based on data from the 2008 American Hospital Association (AHA) Information Technology Supplement, which was used to generate the initial rate of basic EHR adoption reported in 2009. We first present this data as a simple “heat map” with hospitals as rows and components as columns. Filled cells in the heat map indicate implemented components, so that the overall filled area depicts the extent of adoption of the 10 basic components in 2008. We then weighted responses to this survey using the variables used in Jha et al, and produced several alternative estimates based on different approaches to treating this data.3

To complement this reanalysis, we drew upon 3 alternative data sources to generate additional estimates of EHR adoption. We report the single item from the 2008 AHA Annual Survey of hospitals which asked whether the hospital had partially or fully implemented an EHR. We similarly pulled data from a contemporary Healthcare Information and Management Systems Society (HIMSS) presentation, which contained their estimate of EHR adoption in 2008.24 Finally, we performed a structured search of the contemporary literature to identify estimates of EHR adoption by other authors.

Estimates from the AHA IT supplement

Method 1: hospitals fulfilling all basic criteria

Achievement of this adoption criterion required a hospital to meet all of 10 specific criteria of a basic EHR. We use a replication of this result reported by Jha et al as a point of departure to consider other potential approaches. We weighted responses by estimating the probability of responding to the survey depending on region, hospital size, cardiac ICU presence, urban location, system membership, ownership status, and teaching status using a logistic regression. We then weighted estimates using the inverse of the probability of responding.
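The weighting step described above can be illustrated with a minimal sketch. It assumes the response probabilities have already been predicted by the logistic regression (the model fit itself is not shown), and the four hospitals, their probabilities, and the function names are hypothetical values chosen only for illustration.

```python
def inverse_probability_weights(response_probs):
    """Weight each responding hospital by 1 / P(responding)."""
    return [1.0 / p for p in response_probs]


def weighted_share(flags, weights):
    """Weighted fraction of hospitals for which the flag is True."""
    return sum(w for f, w in zip(flags, weights) if f) / sum(weights)


# Hypothetical predicted response probabilities for four responding hospitals.
response_probs = [0.8, 0.8, 0.4, 0.5]
# Hypothetical indicator: did the hospital report all 10 basic components?
has_basic_ehr = [True, False, False, False]

weights = inverse_probability_weights(response_probs)
unweighted = sum(has_basic_ehr) / len(has_basic_ehr)
weighted = weighted_share(has_basic_ehr, weights)

print(f"unweighted share: {unweighted:.3f}")
print(f"weighted share:   {weighted:.3f}")
```

In this toy case the one hospital with a basic EHR belongs to a group that was likely to respond, so its weight is small and the weighted share falls below the unweighted share.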

Methods 2a and 2b: median and mean number of basic EHR components implemented

Rather than report the percent of hospitals with all 10 components, we computed each hospital’s progress toward a “basic” EHR as the number of components it had implemented. We estimated the national level of adoption as the percentage of the 10 basic components implemented by the median hospital and mean percentage of implemented components.

Methods 3a and 3b: item response theory approaches

We used a 2-parameter logistic model based on item response theory (IRT) to estimate each hospital’s underlying EHR “ability.”25–27 To do so, we used hospitals’ patterns of responses to the 10 survey items that comprise a basic EHR and estimated a single latent trait representing their EHR “ability.” In estimating each hospital’s ability, this model accounts for the difficulty of implementing each functionality (ie, what proportion of respondents answered the question positively) and discrimination (ie, how predictive each positive answer was of a higher overall score) of each survey item. This approach yielded 2 distinct estimates:

Median Ability Estimate: After estimating the ability score of each hospital, we calculated the EHR ability of the median hospital. This approach takes the difficulty of implementing each EHR functionality into account, as indicated by the total number of hospitals that had implemented it. For example, implementation of computerized provider order entry (CPOE) can be seen as substantially more difficult than basic demographics, because many fewer hospitals had implemented CPOE.

Estimated Ability Confidence Interval Contains Basic EHR: The approach used by Jha et al does not account for measurement error inherent in every survey. A hospital could have been incorrectly scored as not having (or having) a “basic” EHR if it responded incorrectly to any of the 10 items on the survey. Incorrect responses might have occurred based on the knowledge limitations of respondents or simple error. The IRT approach to estimating hospitals’ EHR abilities produces a standard error of measurement for each point along the calculated ability measure, which is the inverse of the square root of the information generated from responses to all survey items at that point. From this measure, we calculated a 95% confidence interval. We estimated national basic EHR adoption as the fraction of all hospitals whose ability confidence interval overlaps the ability estimate of hospitals that had reported all 10 components required of a basic EHR.
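The standard error and confidence interval logic described above can be sketched as follows. This is an illustration under stated assumptions, not the authors' implementation: the item parameters, the ability values, and the function names are hypothetical, and the sketch uses the standard 2-parameter logistic forms (item response function and item information) rather than any fitted values from the study.

```python
import math


def p_endorse(theta, a, b):
    """2PL item response function: P(item reported | ability theta)."""
    return 1.0 / (1.0 + math.exp(-a * (theta - b)))


def total_information(theta, items):
    """Sum of 2PL item information, a^2 * P * (1 - P), over all items."""
    total = 0.0
    for a, b in items:
        p = p_endorse(theta, a, b)
        total += a * a * p * (1.0 - p)
    return total


def ability_interval(theta, items, z=1.96):
    """95% interval using SE = 1 / sqrt(information at theta)."""
    se = 1.0 / math.sqrt(total_information(theta, items))
    return theta - z * se, theta + z * se


def consistent_with_basic(theta, items, basic_theta):
    """True if the interval around theta contains the 'all 10 items' ability."""
    low, high = ability_interval(theta, items)
    return low <= basic_theta <= high


# Hypothetical (discrimination a, difficulty b) pairs for the 10 survey items.
items = [(1.2, -1.5), (1.0, -1.0), (1.1, -0.8), (0.9, -0.5), (1.3, -0.2),
         (1.0, 0.0), (1.4, 0.3), (1.1, 0.6), (1.5, 1.2), (1.6, 1.4)]
basic_theta = 2.0  # assumed ability estimate of hospitals reporting all 10 items

print(ability_interval(1.0, items))
print(consistent_with_basic(1.0, items, basic_theta))
```

Under this sketch, the national estimate for Method 3b would be the weighted share of hospitals for which `consistent_with_basic` is true.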

Estimates from other data sources

Method 4: HIMSS stage 3 achievement

In 2008, HIMSS Analytics used proprietary survey data to place hospitals into 1 of 8 “EMR [electronic medical record] Adoption Model” stages, ranging from 0 to 7.24 Accordingly, we estimated national progress toward EHR adoption as the fraction of hospitals that had reached each stage. We selected Stage 3 as the most relevant stage to reflect hospital progress toward EHRs, because it is the halfway point toward a complete EHR system. Unlike higher stages, Stage 3 contains no functionalities that exceed those included in the definition of a “basic” EHR.

Method 5: single item self-report

The 2008 AHA Annual Survey included a single question asking hospitals, “Does your hospital have an electronic health record?” Hospitals had 3 response options: “Yes, fully implemented,” “Yes, partially implemented,” or “No.” We report the percent of hospitals that indicated they had fully implemented or at least partially implemented an EHR.

Method 6: Literature review

We conducted a review of the literature to identify alternative contemporary estimates of the level of EHR adoption by hospitals. Using PubMed, we applied the search term “electronic health records” OR “electronic medical records” AND “adoption” OR “Use” AND “hospital*” OR “Inpatient” and filtered results to articles published between January 1, 2007 and December 31, 2010. This resulted in 407 publications. After review of titles, we excluded 362 studies because they did not focus on hospitals or were not empirical, or were not set in the United States. Upon review of the abstract and text, we excluded an additional 40 studies that did not contain at least 1 specific estimate of EHR adoption or explicitly based their estimate on the “9%” study, resulting in 5 total studies.

RESULTS

Unweighted survey responses of all nonfederal acute care hospitals are presented in the “heat map” comprising Figure 1. Rates of reported implementation for each component range from 84% for patient demographics to 27% for physician notes. These raw data form the basis of most of the estimates of EHR implementation that follow.

Figure 1.

Hospital adoption of “basic” EHR components in 2008.

Estimates from the AHA IT supplement

Method 1: hospitals fulfilling all basic criteria

Jha et al reported that 9% of hospitals reported implementation of all 10 components required for a basic EHR in at least 1 clinical unit. This estimate and alternatives are presented in Table 1.

Table 1.

Alternative measures of hospital EHR adoption in 2008

| Measure | Estimated EHR Adoption | Interpretation |
|---|---|---|
| Hospitals fulfilling all basic criteria | 9% | 9% of hospitals reported having implemented all 10 components required of a basic EHR using Jha et al’s definition. |
| Median number of basic components | 60% | The median hospital had adopted 60% of the 10 components required of a basic EHR in 2008. |
| Mean number of basic components | 58% | In aggregate, hospitals had adopted 58% of the components required of a basic EHR in 2008. |
| Median estimated ability using IRT | 57% | The median hospital had an estimated EHR ability equivalent to 57% of the ability of hospitals with a basic EHR. |
| Estimated ability with measurement error (IRT) | 30% | 30% of hospitals had EHR ability scores consistent with adopting a basic EHR after accounting for measurement error. |
| HIMSS stage 3 achievement | 42% | 42% of hospitals had achieved Stage 3 or higher on the HIMSS EMR Adoption Model. |
| Hospital self-report | 73%/17% | 17% of hospitals reported having fully implemented an EHR and 73% of hospitals reported at least partially implementing an EHR in 2008. |

Source: Authors’ analyses of 2008 American Hospital Association Information Technology Survey, 2008 American Hospital Association Annual Survey, and Healthcare Information and Management Systems Society Presentation.

Abbreviations: EHR, electronic health record; EMR, electronic medical record; HIMSS, Healthcare Information and Management Systems Society; IRT, item response theory.

Methods 2a and 2b: median and mean number of basic EHR components implemented

Hospitals ranged widely on the number of basic components implemented, as shown in Table 2. The modal number of components implemented was 8 of 10. The median hospital reported adopting 6 of 10 (60%) components required of a basic EHR. Of the 10 components required to have a basic EHR, the average number of components adopted by US hospitals was 5.8 (58%). This means that, in aggregate, 58% of basic components had been adopted.

Table 2.

Weighted percentage of hospitals that reported adopting basic components

| Number of Reported Components | Percent of Hospitals | Inverse Cumulative Percent |
|---|---|---|
| 0 | 8% | 100% |
| 1 | 5% | 92% |
| 2 | 4% | 87% |
| 3 | 7% | 83% |
| 4 | 9% | 76% |
| 5 | 8% | 67% |
| 6 | 9% | 59% |
| 7 | 13% | 50% |
| 8 | 16% | 37% |
| 9 | 12% | 21% |
| 10 | 9% | 9% |
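The mean, median, and modal figures reported for Methods 2a and 2b can be recomputed from the rounded percentages in Table 2. The sketch below is only a consistency check on the published table, not the authors' hospital-level calculation; because the percentages are rounded, the cumulative share reaches exactly 50% at 6 components, and taking the smallest count that reaches half of hospitals as the median is an assumption of this sketch.

```python
# Weighted percent of hospitals by number of reported basic components (Table 2).
percent_by_count = {0: 8, 1: 5, 2: 4, 3: 7, 4: 9, 5: 8,
                    6: 9, 7: 13, 8: 16, 9: 12, 10: 9}

total = sum(percent_by_count.values())

# Mean number of components, weighting each count by its share of hospitals.
mean_components = sum(k * p for k, p in percent_by_count.items()) / total


def median_components(dist):
    """Smallest count at which the cumulative share reaches half of hospitals."""
    half = sum(dist.values()) / 2
    running = 0
    for k in sorted(dist):
        running += dist[k]
        if running >= half:
            return k


median = median_components(percent_by_count)
modal = max(percent_by_count, key=percent_by_count.get)
at_least_six = sum(p for k, p in percent_by_count.items() if k >= 6)

print(f"mean:  {mean_components:.2f} of 10 components")
print(f"median: {median}, mode: {modal}")
print(f"share with 6 or more components: {at_least_six}%")
```

These values agree with the text: a mean of about 5.8 components (58%), a median of 6, a mode of 8, and 59% of hospitals reporting at least 6 components.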

Methods 3a and 3b: IRT approaches

Median ability estimate

The median ability score estimated using IRT was 5.7 out of 10, so that the median hospital’s EHR ability was 57% of the ability of a hospital that reported a “basic” EHR.

Estimated ability with measurement error

Based on the standard error of the estimate from the IRT approach, a score of 10 (the maximum) lies within the 95% confidence interval for 885 (30%) hospitals. That includes the 267 hospitals that scored a 10 out of 10 as well as hospitals that had 9 of 10 items and were missing only Medication CPOE (178 hospitals), diagnostic test results (38), physician notes (103), advanced directives (6), discharge summaries (5), or patient demographics (1) and hospitals that had 8 of 10 items and were missing only Medication CPOE and physician notes (259) or Medication CPOE and diagnostic test results (28).
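As a quick arithmetic check, the response patterns listed above can be tallied to confirm that they sum to the 885 hospitals reported. The dictionary keys below are descriptive labels introduced for this sketch; the counts are those given in the text.

```python
# Hospitals whose 95% interval contains the maximum score, by response pattern.
pattern_counts = {
    "all 10 components": 267,
    "9 of 10, missing medication CPOE": 178,
    "9 of 10, missing diagnostic test results": 38,
    "9 of 10, missing physician notes": 103,
    "9 of 10, missing advanced directives": 6,
    "9 of 10, missing discharge summaries": 5,
    "9 of 10, missing patient demographics": 1,
    "8 of 10, missing medication CPOE and physician notes": 259,
    "8 of 10, missing medication CPOE and diagnostic test results": 28,
}

total_hospitals = sum(pattern_counts.values())
nine_of_ten = sum(n for label, n in pattern_counts.items() if label.startswith("9 of 10"))
eight_of_ten = sum(n for label, n in pattern_counts.items() if label.startswith("8 of 10"))

print(total_hospitals, nine_of_ten, eight_of_ten)
```

Most of the hospitals added beyond the 267 with all 10 components were missing medication CPOE, physician notes, or both.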

Estimates from other data sources

Method 4: HIMSS stage 3 achievement

42% of hospitals had achieved Stage 3 or higher on the HIMSS EMR Adoption Model.

Method 5: Single item self-report

In 2008, 73% of hospitals reported having implemented any EHR on the AHA’s Annual Survey. 17% of hospitals reported having fully implemented an EHR while 55% reported partially implementing.

Method 6: literature review

As reported in Table 3, 5 studies containing 6 point estimates of adoption were identified in our literature review. Authors used data from 3 sources (HIMSS, the AHA Annual Survey, and the National Hospital Ambulatory Medical Care Survey) to define EHR adoption and used varied criteria. Across these studies, all of which focused on years prior to 2008, estimates of the level of adoption of EHRs varied from 11.6% in 2004 to 46% in 2005–2006. In addition to these estimates, other studies that reported national rates of EHR adoption focused on multiple functionalities without reporting a single point estimate.33–35

Table 3.

Contemporary alternative estimates of EHR adoption

| Study | EHR Adoption Estimate | Years of Estimate | Sample | Estimate Description |
|---|---|---|---|---|
| Jones et al (2010)28 | 24.5% | 2003–2006 | 2086 nonfederal general acute care hospitals located in the United States | Using HIMSS Analytics data, the authors defined a hospital as having a basic EHR if it had operational electronic patient record, clinical data repository, and clinical decision support systems. |
| Geisler et al (2010)29 | 46%; 17% | 2005–2006 | 694 emergency departments in acute care hospitals | Using the National Hospital Ambulatory Medical Care Survey, the authors identified the percent of EDs reporting the presence of an EHR and the percent that met their criteria for a basic EHR (demographic information, CPOE, lab and imaging results). |
| Kazley and Ozcan (2008)30 | 11.6% | 2004 | 2979 nonfederal acute care hospitals | Using HIMSS Analytics data, the authors reported the percent of hospitals that reported having a fully automated EHR. |
| Ford et al (2007)31 | 21.7% | 2007 | 1814 US hospitals that reported to HIMSS and AHA Surveys | Using AHA Annual Survey data, the authors reported the percent of hospitals that reported having a fully implemented EHR in 2007. |
| McCullough et al (2010)32 | 13% | 2007 | 3401 nonfederal, acute care US hospitals | Using AHA Annual Survey data, the authors reported the percent of hospitals that reported having a fully implemented EHR in 2007. |

Abbreviations: AHA, American Hospital Association; CPOE, computerized provider order entry; ED, emergency department; HIMSS, Healthcare Information and Management Systems Society.

DISCUSSION

Our reanalysis of the original hospital IT survey data and analyses of other sources led to several conclusions.

First, at the dawn of HITECH in 2008, US hospitals had made substantial investments in EHR technology, significantly greater than that implied by the “9%” figure even when that figure is accurately described as “9% of US hospitals had achieved all 10 components of a basic EHR.” Any of the new estimates of EHR adoption we report here, most of which were drawn from the same data used by the Jha et al paper, leads to that conclusion, as did evidence from contemporary studies. More simply, the results in Table 2, seen as a histogram in Figure 2, convey a very different meaning than “9% of US hospitals had a basic EHR” and a radically different one from the “9% of US hospitals have an/any EHR” that was reported as the “9%” statistic emerged as a meme. The result in Figure 2 could just as well have been reported as “over half of US hospitals have implemented 6 of the 10 components of a basic EHR.” This would have conveyed a dramatically different message.

Figure 2.

Weighted percentage of hospitals that reported adopting basic components.

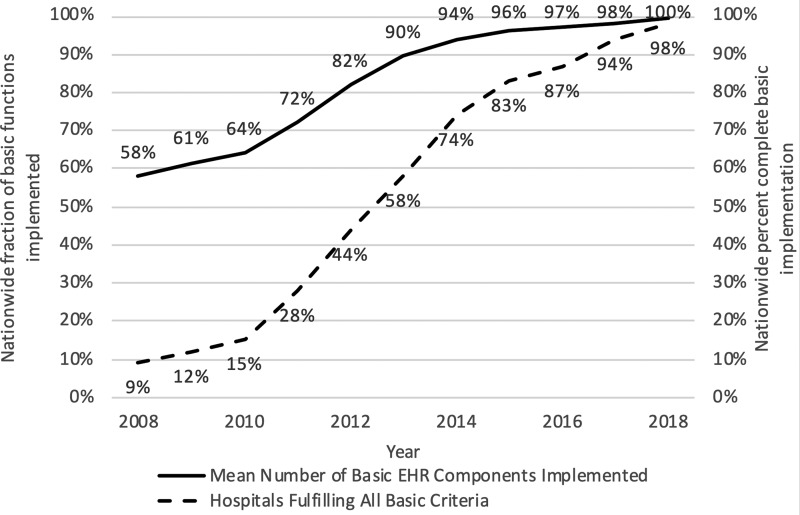

Figure 3.

Alternative presentation of hospital progress toward basic EHR.

Our review of the literature on EHR adoption published during the same time period indicates that the estimated level of EHR adoption depended enormously on the criteria researchers selected, resulting in a wide range of reported adoption rates. Though all 5 included studies reported on years prior to 2008, they also all reported adoption rates greater than 9%. On the whole, these reports from other sources therefore support a contention that the “9%” figure was an underestimate of the hospital EHR adoption rate in 2008. The varied estimates also highlight the extent to which the “9%” figure depended on the authors’ choices of criteria. One key reason why the “basic” cut point may have underrepresented adoption of EHRs was that 2 of the components of a “basic” EHR (medication CPOE and physician notes) were usually adopted substantially later in the EHR adoption process than the other 8 components.36 This made it difficult to reach the “all-or-nothing” threshold for a basic EHR even when otherwise advanced systems were in place.

Second, use of “9%” hospital EHR adoption as a baseline measure to chart hospital EHR adoption and gauge the success of the HITECH program likely led to invalid conclusions about the trajectory of EHR adoption. Use of an alternative baseline figure, such as the weighted average of hospitals’ progress toward a basic EHR, computed as the mean number of implemented basic components, would have created a very different impression of progress in EHR adoption as depicted in Figure 3. While this trend still points toward significant progress, it more accurately depicts a higher starting point in 2008 and flatter increase over time toward the goal of a basic system as originally defined by Jha et al. By focusing on how many of the measured components were adopted, this approach would have shifted the focus to considering the value of each component rather than only valuing the implementation of all functions and would have exhibited less downward bias had hospitals chosen not to adopt 1 of the 10 measured components.

The potential for misleading conclusions based on the 9% figure was heightened because reports on EHR adoption presented in more recent years have portrayed basic EHR adoption in terms of deployment of certified EHRs. This new metric could not be computed at baseline, leading inevitably to an “apples to oranges” comparison. For instance, a prominent 2019 report by Kaiser Health News presented a figure, without explanation, showing EHR adoption at 9% in 2008 and 72% in 2011: the 9% figure undoubtedly refers to the “basic” figure while the 72% number refers to adoption of a certified EHR (consistently applying the criteria of Jha et al, adoption of a basic EHR would have stood at only 28% in 2011).9,14 Our findings show that the “apples” used in the comparison should not be the extremely low figure reported by Jha et al, which gives minimal credit to the considerable adoption progress that had been made in US hospitals by 2008.

Third, this analysis offers an important methodological lesson about the power of thresholding decisions in analyzing data and reporting results. In all empirical research, investigators make subjective and often arbitrary decisions when converting objective data into information. In this case, the thresholding decision was aggressive and extreme in its consequences. Values on a binary achievement index composed of multiple indicators that requires all indicators to be achieved will be skewed and will conceal information contained in the indicators themselves. When extreme thresholding is used, the number of cases achieving the criterion will be more dependent on the correlation among the indicators than on their distributions of values. Consider a hypothetical extreme example where each of 10 indicators comprising an index is achieved in 90% of cases. If nonachievement of each indicator is randomly and independently distributed, the index will indicate composite achievement of 35%. However, if nonachievement never overlaps, such that every case fails exactly 1 of the 10 indicators, the index will indicate composite achievement of zero. If correlation is perfect in the complementary sense, where the same 10% of cases fail on all indicators, then composite achievement will reach an upper bound of 90%. In this case, we see where an extreme thresholding condition led to misleading conclusions masking the progress in EHR adoption the US as a nation had made prior to 2009.
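The hypothetical example above (10 indicators, each achieved in 90% of cases) can be worked through directly. The sketch below assumes 100 cases and constructs the two extreme failure patterns explicitly; the independent case uses the closed form 0.9 raised to the 10th power. The variable and function names are illustrative only.

```python
def composite_rate(fail_sets, n_cases):
    """Share of cases that fail none of the indicators (all-or-nothing index)."""
    failed_any = set().union(*fail_sets)
    return (n_cases - len(failed_any)) / n_cases


n_cases, n_indicators, n_fail = 100, 10, 10  # 10 failures per indicator = 90% achieved

# Scenario A: failures are independent across indicators (closed form).
independent = 0.9 ** n_indicators

# Scenario B: failures never overlap; indicator k is failed by cases 10k..10k+9,
# so every case fails exactly one indicator.
disjoint = [set(range(k * n_fail, (k + 1) * n_fail)) for k in range(n_indicators)]

# Scenario C: the same 10 cases fail every indicator.
overlapping = [set(range(n_fail)) for _ in range(n_indicators)]

print(round(independent, 3))                  # 0.349
print(composite_rate(disjoint, n_cases))      # 0.0
print(composite_rate(overlapping, n_cases))   # 0.9
```

Every indicator has the same 90% achievement rate in all three scenarios, yet the all-or-nothing index ranges from 0% to 90% depending only on how failures co-occur.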

Fourth, also methodological in character, the “9%” statistic was originally reported without reference to measurement error, and error in responses to the survey could have created uncertainty about the true level of adoption within responding hospitals. In this case, a likely reason for measurement error is that respondents were not knowledgeable about the state of EHR implementation at their hospital, and this may have been common because of varied sophistication of hospital information technology departments. The IRT analysis (Method 3b) demonstrated that, without changing the thresholding criterion, attention to measurement error inherent in the survey alone raises the estimate of basic EHR adoption to 30%. The only confidence interval reported in the study reflects uncertainty due to sampling error and does not address the potential for measurement error among surveyed hospitals.

Conclusions

There is clearly great value in setting a clearly and constantly defined goal, such as the attainment of a basic EHR. At the crux of the argument in this article is whether the primary reported metric that shapes public opinion and drives policy should be the extent of complete attainment of that goal or the extent of progress toward it.

We can only speculate on the policy implications of the choice to use a complete attainment metric on the drafting of the original HITECH legislation, which specified the structure of Meaningful Use incentive payments and the subsequent design of the HITECH program, which was delegated to the Department of Health and Human Services in the executive branch of the government. Greater attention to the data reported as a histogram in Figure 2 might have led to an incentive structure more generous to providers than hospitals because it better depicts the significant progress many hospitals had made toward the goal of a basic EHR. As implemented, the relative generosity of incentives to hospitals resulted in high levels of hospital participation and only 60% of physicians participating in the MU program, with physician participation peaking in 2013 before dropping as physicians left the incentive program.37

The presentation of this data based on a threshold may also have influenced the relatively blunt policy design pursued for hospitals. The all-or-nothing approach concealed meaningful variability in adoption by equating as “have nothings” all of the 91% of hospitals that did not achieve the threshold. This approach to the data was replicated in the MU program, which treated achievement of each stage as all-or-nothing. This approach modeled the use of MU achievement as a trigger for incentive payment while concealing the variation in EHR adoption patterns. Displaying this data as a heat map, as in Figure 1, might have drawn policymakers toward an approach designed to help hospitals “fill in” missing areas in the figure. Such a policy might have directed incentives toward hospitals that had made less progress in 2008, because they had access to fewer resources to do so, and directed fewer resources to the many hospitals that had nearly completed adoption of a basic EHR. Leveling of adoption should have been very important to policy makers because interoperability, which was a major goal of HITECH, requires all participants in health information exchange to have equivalent technical capabilities. The Regional Extension Centers program, created through HITECH, did give some priority to less well-resourced hospitals and physicians, but the $720 million allocated to the Extension Centers program pales in comparison with the $38 billion paid out as Meaningful Use incentives.38,39

Tailoring adoption incentives would therefore have had far greater consequences for promoting adoption and interoperability than prioritizing implementation support.

As we continue into the post-HITECH era, we believe it is essential that future policy design is informed by accurately understood dynamics of the programs of the past decade. In 2008, a more accurate statement would have been that 73% of hospitals had begun the transition to an EHR, that in aggregate national progress toward a basic EHR was 58% complete, and that only 9% of hospitals had implemented a complete basic EHR. The memetic “9%” statistic was but one way, and an extreme way, of reporting hospital EHR adoption in 2008. We seek here to correct the impression left by that report. History requires it, and to paraphrase an often-used aphorism, we will repeat the mistakes of history unless we learn from them.

FUNDING

Dr Everson was supported by the Agency for Healthcare Research and Quality K12HS026395.

AUTHOR CONTRIBUTIONS

CF devised the initial idea of the study with input from JE and JR. JE, JR, and CF jointly drafted and revised the manuscript. JE conducted data analysis in consultation with CF. JE and JR performed the literature review. JR conducted archival of relevant documents.

CONFLICT OF INTEREST STATEMENT

None declared.

REFERENCES

- 1. Berner ES, Detmer DE, Simborg D.. Will the wave finally break? A brief view of the adoption of electronic medical records in the United States. J Am Med Inform Assoc 2004; 12 (1): 3–7. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2. Blumenthal D, Tavenner M.. The “meaningful use” regulation for electronic health records. N Engl J Med 2010; 363 (6): 501–4. [DOI] [PubMed] [Google Scholar]

- 3.Jha AK, DesRoches CM, Campbell EG, et al Use of electronic health records in US hospitals. N Engl J Med 2009; 360 (16): 1628–38. [DOI] [PubMed] [Google Scholar]

- 4. Adler-Milstein J, DesRoches CM, Furukawa MF, et al. More than half of US hospitals have at least a basic EHR, but stage 2 criteria remain challenging for most. Health Aff 2014; 33 (9): 1664–71. [DOI] [PubMed] [Google Scholar]

- 5. Centers for Medicare & Medicaid Services. A record of progress on health information technology. 2013. https://www.healthit.gov/sites/default/files/2019-08/record_of_hit_progress_infographic_april_update.pdf Accessed May 14, 2020.

- 6. Blumenthal D. Launching HITECH. N Engl J Med 2010; 362 (5): 382–5.

- 7. Lambrew J. More than half of doctors now use electronic health records thanks to administration policies. Obama White House, 2013. https://obamawhitehouse.archives.gov/blog/2013/05/24/more-half-doctors-now-use-electronic-health-records-thanks-administration-policies Accessed May 14, 2020.

- 8. Evans M. Hospital beds get digital upgrade. The Wall Street Journal; 2018. https://www.wsj.com/articles/hospital-beds-get-digital-upgrade-1544371200?utm_source=newsletter&utm_medium=email&utm_campaign=newsletter_axiosvitals&stream=top Accessed September 12, 2018.

- 9. Schulte F, Fry E. Death by 1,000 clicks: where electronic health records went wrong; 2019. https://khn.org/news/death-by-a-thousand-clicks/ Accessed March 28, 2019.

- 10. Lohr S. The lessons thus far from the transition to digital patient records; 2014. https://bits.blogs.nytimes.com/2014/07/28/digital-patient-records-the-sober-lessons-so-far/?mtrref=undefined&gwh=2140B48DBF27D4455031ECEACD12A266&gwt=pay&assetType=REGIWALL Accessed July 28, 2014.

- 11. Blumenthal D. Wiring the health system—origins and provisions of a new federal program. N Engl J Med 2011; 365 (24): 2323–9.

- 12. DesRoches CM, Charles D, Furukawa MF, et al. Adoption of electronic health records grows rapidly, but fewer than half of US hospitals had at least a basic system in 2012. Health Aff 2013; 32 (8): 1478–85.

- 13. Furukawa MF, Patel V, Charles D, Swain M, Mostashari F. Hospital electronic health information exchange grew substantially in 2008–12. Health Aff 2013; 32 (8): 1346–54.

- 14. Charles D, Gabriel M, Searcy T. Adoption of Electronic Health Record Systems among the US Non-Federal Acute Care Hospitals: 2008–2014. Washington, DC: The Office of the National Coordinator for Health Information Technology; 2015.

- 15. Henry J, Pylypchuk Y, Searcy T, Patel V. Adoption of Electronic Health Record Systems among US Non-Federal Acute Care Hospitals: 2008–2015. Washington, DC: The Office of the National Coordinator for Health Information Technology; 2016. https://dashboard.healthit.gov/evaluations/data-briefs/non-federal-acute-care-hospital-ehr-adoption-2008-2015.php Accessed May 14, 2020.

- 16. The Office of the National Coordinator for Health Information Technology. Washington, DC. Adoption of Electronic Health Record Systems among U.S. Non-Federal Acute Care Hospitals: 2008–2015. https://dashboard.healthit.gov/evaluations/data-briefs/non-federal-acute-care-hospital-ehr-adoption-2008-2015.php Accessed May 14, 2020.

- 17. Rupp S. The largest hospital in each state; 2016. https://electronichealthreporter.com/largest-hospital-state/ Accessed October 28, 2016.

- 18. Hirsch MD. ONC: 96 percent of acute care hospitals have adopted certified EHRs; 2016. https://www.fiercehealthcare.com/ehr/onc-96-percent-acute-care-hospitals-have-adopted-certified-ehrs Accessed May 14, 2020.

- 19. Knowledge Hub. https://www.athenahealth.com/knowledge-hub Accessed May 14, 2020.

- 20. DesRoches CM, Campbell EG, Rao SR, et al. Electronic health records in ambulatory care—a national survey of physicians. N Engl J Med 2008; 359 (1): 50–60.

- 21. EHR Incentive Programs: Data and Program Reports. https://www.cms.gov/Regulations-and-Guidance/Legislation/EHRIncentivePrograms/DataAndReports Accessed May 14, 2020.

- 22. The Office of the National Coordinator for Health Information Technology. Percent of All Eligible and Critical Access Hospitals that have Demonstrated Meaningful Use of Certified Health IT. Washington, DC: The Office of the National Coordinator for Health Information Technology; 2016. https://dashboard.healthit.gov/quickstats/pages/FIG-Hospitals-EHR-Incentive-Programs.php Accessed May 14, 2020.

- 23. The Office of the National Coordinator for Health Information Technology. Percent of Physicians that have Demonstrated Meaningful Use of Certified Health IT. Washington, DC: The Office of the National Coordinator for Health Information Technology; 2016. https://dashboard.healthit.gov/apps/physicians-medicare-meaningful-use.php Accessed May 14, 2020.

- 24. HIMSS Analytics. EMRAM (EMR Adoption Model) & Patient Engagement: Trends and Value Proposition. In: Proceedings of the HIMSS ASIAPAC ROADSHOW; August 2016; Bangkok, Thailand.

- 25. Baker FB, Kim S-H. Item Response Theory: Parameter Estimation Techniques. 2nd ed. New York: Marcel Dekker; 2014.

- 26. Rizopoulos D. LTM: an R package for latent variable modeling and item response theory analyses. J Stat Soft 2006; 17 (5). doi:10.18637/jss.v017.i05.

- 27. Thissen D. Reliability and measurement precision. In: Wainer H, ed. Computerized adaptive testing: A primer. Mahwah, NJ: Lawrence Erlbaum Associates Publishers; 2000: 159–84.

- 28. Jones SS, Adams JL, Schneider EC, Ringel JS, McGlynn EA. Electronic health record adoption and quality improvement in US hospitals. Am J Manag Care 2010; 16(12 Suppl HIT): SP64–71.

- 29. Geisler BP, Schuur JD, Pallin DJ. Estimates of electronic medical records in US emergency departments. PLoS One 2010; 5 (2): e9274.

- 30. Kazley AS, Ozcan YA. Do hospitals with electronic medical records (EMRs) provide higher quality care? An examination of three clinical conditions. Med Care Res Rev 2008; 65 (4): 496–513.

- 31. Ford EW, Menachemi N, Huerta TR, Yu F. Hospital IT adoption strategies associated with implementation success: implications for achieving meaningful use. J Healthc Manag 2010; 55 (3): 175–88.

- 32. McCullough JS, Casey M, Moscovice I, Prasad S. The effect of health information technology on quality in US hospitals. Health Aff 2010; 29 (4): 647–54.

- 33. Li P, Bahensky JA, Jaana M, Ward MM. Role of multihospital system membership in electronic medical record adoption. Healthc Manag Rev 2008; 33 (2): 169–77.

- 34. Blavin FE, Buntin M, Friedman CP. Alternative measures of electronic health record adoption among hospitals. Am J Manag Care 2010; 16(Suppl 12): e293–301.

- 35. Menachemi N, Brooks RG, Schwalenstocker E, Simpson L. Use of health information technology by children’s hospitals in the United States. Pediatrics 2009; 123 (Supplement 2): S80–S84.

- 36. Adler-Milstein J, Everson J, Lee S-Y. Sequencing of EHR adoption among US hospitals and the impact of meaningful use. J Am Med Inform Assoc 2014; 21 (6): 984–91.

- 37. Everson J, Richards MR, Buntin MB. Horizontal and vertical integration's role in meaningful use attestation over time. Health Serv Res 2019; 54 (5): 1075–83.

- 38. American Institutes for Research. Evaluation of the Regional Extension Center Program. Washington DC: American Institutes for Research; 2016.

- 39. Lynch K, Kendall M, Shanks K, et al. The Health IT Regional Extension Center Program: evolution and lessons for health care transformation. Health Serv Res 2014; 49 (1pt2): 421–37.