Abstract

Self-regulation is studied across various disciplines, including personality, social, cognitive, health, developmental, and clinical psychology; psychiatry; neuroscience; medicine; pharmacology; and economics. Widespread interest in self-regulation has led to confusion regarding both the constructs within the nomological network of self-regulation and the measures used to assess these constructs. To facilitate the integration of cross-disciplinary measures of self-regulation, we estimated product-moment and distance correlations among 60 cross-disciplinary measures of self-regulation (23 self-report surveys, 37 cognitive tasks) and measures of health and substance use based on 522 participants. The correlations showed substantial variability, though the surveys demonstrated greater convergent validity than did the cognitive tasks. Variables derived from the surveys only weakly correlated with variables derived from the cognitive tasks (M = .049, range = .000 to .271 for the absolute value of the product-moment correlation; M = .085, range = .028 to .241 for the distance correlation), thus challenging the notion that these surveys and cognitive tasks measure the same construct. We conclude by outlining several potential uses for this publicly available database of correlations.

Self-regulation is important because of its potential role in the development and maintenance of many behaviors. As recently defined by the National Institutes of Health (NIH), “self-regulation refers to the process of managing emotional, motivational and cognitive resources to align mental states and behavior with our goals” (NIH, 2015). Constructs within the nomological network of self-regulation have been implicated in a variety of risky health behaviors and outcomes, including poor diet; physical inactivity; alcohol, tobacco, and other substance use problems; risky sexual behaviors; risky driving; and longevity (Bickel, Odum, & Madden, 1999; Bogg & Roberts, 2004; Kern & Friedman, 2008; Moffitt et al., 2011). Modifiable behaviors such as these substantially affect health and account for approximately 40% of the risk associated with preventable premature deaths in the United States (NIH, 2015). Thus, interest lies in the characteristics of people who can and cannot successfully initiate and sustain behavior change. As part of the NIH’s Science of Behavior Change initiative, our project focuses on better understanding and measuring self-regulation.

Accurately measuring self-regulation and identifying potentially modifiable facets of self-regulation are crucial to the development of more effective interventions. However, widespread interest in self-regulation has led to “conceptual confusion and measurement mayhem” (Morrison & Grammer, 2016, p. 327). As summarized by an NIH report, the nomological network of self-regulation “encompasses a wide range of behavioral and psychological constructs and processes, including, but not limited to: conscientiousness, self-control, response inhibition, impulsivity/impulse control, behavioral disinhibition, temporal discounting, emotion regulation, cognitive control (including goal selection, updating, representation and maintenance; response selection, inhibition or suppression; and performance or conflict monitoring), cognitive/emotional homeostasis, effort modulation, and flexible adaptation” (NIH, 2015; also see Nigg, 2017, Table 1). Constructs within the nomological network of self-regulation are studied across various disciplines, including personality, social, cognitive, health, developmental, and clinical psychology; psychiatry; neuroscience; medicine; pharmacology; and economics (Nigg, 2017). However, limited cross-talk among researchers from these disciplines has led to discipline-specific terms and measures for these constructs.

Table 1.

Self-Report Surveys Administered

| Survey |
| --- |
| Barratt Impulsivity Scale (BIS-11) |
| Behavioral Inhibition System/Behavioral Activation System (BIS/BAS) |
| Brief Self-Control Scale |
| Carstensen Future Time Perspective |
| Dickman’s Functional & Dysfunctional Impulsivity |
| Domain-Specific Risk-Taking Scale (DOSPERT), Expected Benefits |
| Domain-Specific Risk-Taking Scale (DOSPERT), Risk Perception |
| Domain-Specific Risk-Taking Scale (DOSPERT), Risk-Taking |
| Emotion Regulation Questionnaire |
| Eysenck I-7 Impulsiveness and Venturesomeness |
| Five-Facet Mindfulness Questionnaire |
| Short Grit Scale |
| Mindful Attention and Awareness Scale |
| Multidimensional Personality Questionnaire, Control |
| Selection Optimization Compensation Scale |
| Short Self-Regulation Questionnaire |
| Stanford Leisure Time Activities Survey |
| Ten-Item Personality Questionnaire |
| Theories of Willpower Scale |
| Three-Factor Eating Questionnaire |
| UPPS-P Impulsive Behavior Scale |
| Zimbardo Time Perspective Inventory |
| Zuckerman’s Sensation Seeking Scale |

Note. Key references for these surveys are available at https://scienceofbehaviorchange.org/measures/.

Given the “extraordinary diversity” (Duckworth & Kern, 2011, p. 259) in how self-regulation is operationalized and evaluated across disciplines, several calls for clarification and cross-disciplinary integration have been made (e.g., Duckworth & Kern, 2011; Zhou, Chen, & Main, 2012; Welsh & Peterson, 2014; Morrison & Grammer, 2016; Nigg, 2017; Eisenberg, 2017). These calls have applied to both the constructs within the nomological network of self-regulation and the measures used to assess these constructs. In this paper, we focus on the measures while remaining agnostic to competing theories within and across disciplines regarding how to operationalize self-regulation. Whereas others have provided conceptual clarification based on theory and expertise (e.g., Zhou et al., 2012; Diamond, 2013; Morrison & Grammer, 2016; Nigg, 2017), Duckworth and Kern (2011) conducted a meta-analysis examining the convergent validity of measures of self-regulation based on 282 independent samples. Specifically, they examined self-report surveys, informant-report surveys, cognitive tasks assessing executive function, and cognitive tasks assessing delay discounting. Duckworth and Kern (2011) concluded that the measures demonstrated moderate convergent validity overall, though the correlations among the measures showed substantial variability. Furthermore, they found much stronger evidence of convergent validity for the surveys than for the cognitive tasks.

To facilitate the integration of cross-disciplinary measures of self-regulation, we provide a database with the product-moment and distance correlations among 60 cross-disciplinary measures of self-regulation (23 self-report surveys, 37 cognitive tasks; see Eisenberg et al., 2018). As part of a larger project using these data, Eisenberg et al. (2019) investigated the dimensionality of these surveys and cognitive tasks via exploratory factor analysis, and Enkavi et al. (2019) evaluated the test-retest reliability of these surveys and cognitive tasks. Providing this database of product-moment and distance correlations is intended to complement previous work examining the convergent and divergent validity of these measures, including the meta-analysis conducted by Duckworth and Kern (2011). Whereas the correlations presented by Duckworth and Kern (2011) are based on summary statistics pooled across multiple publications and samples of participants (with each sample completing a subset of measures), the correlations presented in this paper are based on a single sample of participants who completed an extensive battery of surveys and cognitive tasks. We provide both product-moment correlations, which measure the strength and direction of the linear association between two variables, and distance correlations, which measure any dependence (i.e., linear and nonlinear associations) between two variables. These correlations can help assess convergent and divergent validity across the surveys and cognitive tasks. The database also includes health and substance use measures, which can help establish criterion validity. Other potential uses of the database include the specification of Bayesian prior distributions, meta-analysis, and integrative data analysis, which we describe later in the paper.

Methods

Participants and Procedures

Data were collected on Amazon’s Mechanical Turk (MTurk), an online marketplace where participants are paid to complete HITs (human intelligence tasks). In recent years, MTurk has gained popularity in psychological research for providing access to a large pool of participants who are often more diverse in geographic location, demographics, and abilities compared to participants recruited from universities and the surrounding communities. Crump, McDonnell, and Gureckis (2013) found that the quality of data collected on MTurk was comparable to that of data collected in laboratories. Hauser and Schwarz (2016) found that MTurk participants passed more online attention checks than did undergraduate students, though Barends and de Vries (2019) noted that some MTurk participants actively search for attention checks while otherwise providing careless responses.

Adults between 18 and 50 years old who were living in the United States were invited to participate, though four participants reported being between 50 and 60 years old. Participants were given one week to complete an approximately 10-hour battery of surveys and cognitive tasks, which were deployed in a random order through Experiment Factory (Sochat et al., 2016). Participants who completed the battery were paid $60 plus an average of $10 in bonuses based on performance (range = $65 to $75). Payments were prorated for those who did not complete the battery. The study protocol and data collection were preregistered on the Open Science Framework (https://osf.io/amxpv/).

Participants who did not complete the battery (102 of 662 participants who enrolled) or failed quality checks for the cognitive tasks (38 of 560 participants, described below) were excluded, resulting in a sample of 522 participants. Of the 522 participants, 50.2% were female and 78.8% were non-Latino White. Participants were fairly diverse in age (M = 33.63, SD = 7.88, range = 20 to 59 years old), education (0.6% less than high school, 14.8% high school diploma, 40.8% some college, 36.8% bachelor’s degree, 7.1% master’s degree or higher), and household income (M = $48,084, SD = $31,253, maximum = $240,000).

Measures

Based on an extensive review of the literature from various disciplines, we identified, administered, and scored 23 surveys and 37 cognitive tasks that all putatively measure constructs within the nomological network of self-regulation.

Surveys.

The 23 surveys administered are listed in Table 1 and can be viewed on Experiment Factory (https://expfactory.github.io/experiments/). A description of each survey is available at https://scienceofbehaviorchange.org/measures/.

Cognitive tasks.

The 37 cognitive tasks administered are listed in Table 2 and can be viewed on Experiment Factory (https://expfactory.github.io/experiments/). A description of each cognitive task is available at https://scienceofbehaviorchange.org/measures/ (also see Enkavi et al., 2019). Quality checks were applied to all cognitive tasks to ensure that (1) average response times were not unreasonably fast, (2) not too many responses were omitted, (3) accuracy was reasonably high, and (4) responses were sufficiently distributed (i.e., the participant did not press only a single key). The specific criteria differed across cognitive tasks, but in general we required that median response times were longer than 200 milliseconds, no more than 25% of responses were omitted, accuracy was higher than 60%, and no single response was given more than 95% of the time. Failing a quality check resulted in that participant’s data being removed for the failed cognitive task. Participants who failed quality checks for four or more cognitive tasks (38 of 560 participants) were excluded from the sample entirely. For the stop signal tasks, probabilistic selection task, and two-step decision task, performance criteria served as additional quality checks. Failing a quality check based on performance criteria led to removal of that participant’s data for the failed cognitive task.
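The general quality-check rules described above can be sketched in code. This is only an illustration of the stated thresholds, not the authors' implementation: the function names, the argument layout, and the choice to compute accuracy over all trials (counting omitted trials as incorrect) are assumptions, and the paper notes that the specific criteria differed across tasks.

```python
from collections import Counter
from statistics import median


def passes_quality_checks(rts_ms, responses, correct,
                          min_median_rt=200.0, max_omit_rate=0.25,
                          min_accuracy=0.60, max_single_response=0.95):
    """Apply the four general criteria to one participant's data on one task.

    rts_ms    -- response times (ms) for trials on which a response was given
    responses -- one entry per trial; None marks an omitted response
    correct   -- one bool per trial (assumption: omitted trials are False)
    """
    n_trials = len(responses)
    given = [r for r in responses if r is not None]
    if n_trials == 0 or not given or not rts_ms:
        return False
    omit_rate = 1 - len(given) / n_trials
    accuracy = sum(correct) / n_trials
    # Share of the single most frequent response among the responses given.
    top_share = Counter(given).most_common(1)[0][1] / len(given)
    return (median(rts_ms) > min_median_rt
            and omit_rate <= max_omit_rate
            and accuracy > min_accuracy
            and top_share <= max_single_response)


def exclude_participant(passed_by_task, min_failed_tasks=4):
    """Exclude a participant who failed checks on four or more tasks."""
    n_failed = sum(1 for passed in passed_by_task.values() if not passed)
    return n_failed >= min_failed_tasks
```

A participant who fails a single task would lose only that task's data under this sketch, whereas `exclude_participant` flags the whole record once the number of failed tasks reaches four.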

Table 2.

Cognitive Tasks Administered

| Cognitive task | Cognitive task (continued) |
| --- | --- |
| Adaptive N-Back | Local-Global Letter Task |
| Angling Risk Task | Motor Selective Stop Signal |
| Attention Network Task | Probabilistic Selection Task |
| Bickel Titrator | Psychological Refractory Period |
| Choice Reaction Time | Raven’s Progressive Matrices |
| Cognitive Reflection Task | Recent Probes |
| Columbia Card Task, Cold | Shape Matching Task |
| Columbia Card Task, Hot | Shift Task |
| Dietary Decision Task | Simon Task |
| Digit Span | Simple Reaction Time |
| Directed Forgetting | Spatial Span |
| Discount Titrator | Stimulus Selective Stop Signal |
| Dot Pattern Expectancy | Stop Signal |
| Go-No Go | Stroop |
| Hierarchical Learning Task | Three-By-Two |
| Holt & Laury | Tower of London |
| Information Sampling Task | Two-Step Decision |
| Keep Track Task | Writing Task |
| Kirby | |

Note. Key references for these cognitive tasks are available at https://scienceofbehaviorchange.org/measures/.

Demographics and health.

Participants reported their demographics, height and weight, substance use (alcohol, nicotine, marijuana, and other drugs), history of medical and neurological disorders, and mental health (Kessler Psychological Distress Scale, K6; Kessler et al., 2002). Eleven of these variables were selected for inclusion in the database: body mass index; six items from the K6 on feeling nervous, hopeless, restless or fidgety, depressed, everything was an effort, or worthless in the past 30 days; one item on tobacco use (“On average, how many cigarettes do you now smoke a day?”); and three items on alcohol use (“How many drinks containing alcohol do you have on a typical day when you are drinking?”, “How often do you have six or more drinks on one occasion?”, “How often during the last year have you found that you were not able to stop drinking once you had started?”). Because self-regulation has been implicated in a variety of risky health behaviors, these measures can be used to evaluate the criterion validity of the surveys and cognitive tasks (see Eisenberg et al., 2019).

Calculation of Product-Moment and Distance Correlations

Product-moment and distance correlations were computed among the 66 variables derived from the 23 surveys, 138 variables derived from the 37 cognitive tasks, and 11 variables pertaining to health and substance use (total = 215 variables). These 215 variables yielded (215 × 214)/2 = 23,005 variable pairs, and both a product-moment correlation and a distance correlation were computed for each pair using pairwise deletion. The product-moment correlations were estimated using the cor function in R, and the distance correlations were estimated using the dcor function available through the energy package in R (Rizzo & Székely, 2016). Whereas the product-moment correlation measures the strength and direction of the linear association between two variables X and Y, the distance correlation measures any dependence (i.e., linear and nonlinear associations) between X and Y (Székely, Rizzo, & Bakirov, 2007). The distance correlation ranges from 0 to 1 and equals 0 only if X and Y are independent. The appendix provides the formula for the distance correlation.
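The pair count and the idea of pairwise deletion can be illustrated with a short sketch. The analyses in the paper were run in R; the Python below is only an illustration using the standard library, and the helper names and the use of `None` to mark a missing score are assumptions made for this example.

```python
import math


def n_variable_pairs(n_variables):
    """Number of distinct unordered pairs among n_variables variables."""
    return math.comb(n_variables, 2)


def pearson_pairwise(x, y):
    """Product-moment correlation with pairwise deletion.

    Only cases with both scores observed are used for this pair;
    None marks a missing score (an assumption of this sketch).
    """
    pairs = [(a, b) for a, b in zip(x, y) if a is not None and b is not None]
    n = len(pairs)
    if n < 2:
        return float("nan")
    mean_x = sum(a for a, _ in pairs) / n
    mean_y = sum(b for _, b in pairs) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in pairs)
    sxx = sum((a - mean_x) ** 2 for a, _ in pairs)
    syy = sum((b - mean_y) ** 2 for _, b in pairs)
    if sxx == 0 or syy == 0:
        return float("nan")  # correlation is undefined for a constant variable
    return sxy / math.sqrt(sxx * syy)


print(n_variable_pairs(215))  # 66 + 138 + 11 = 215 variables
print(pearson_pairwise([1, 2, 3, None, 5], [2, 4, 6, 8, None]))
```

Because each pair keeps every case with both scores observed, different correlations in the database may be based on slightly different subsets of the 522 participants.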

Results

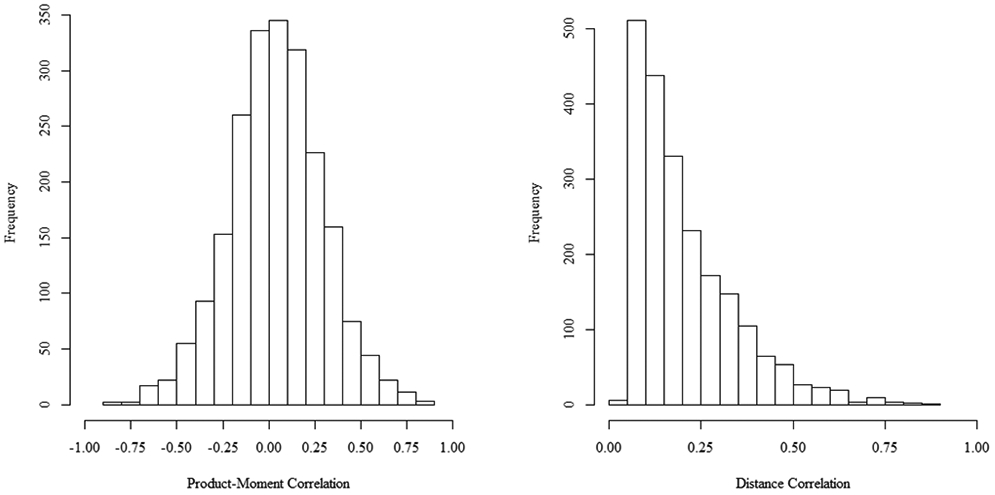

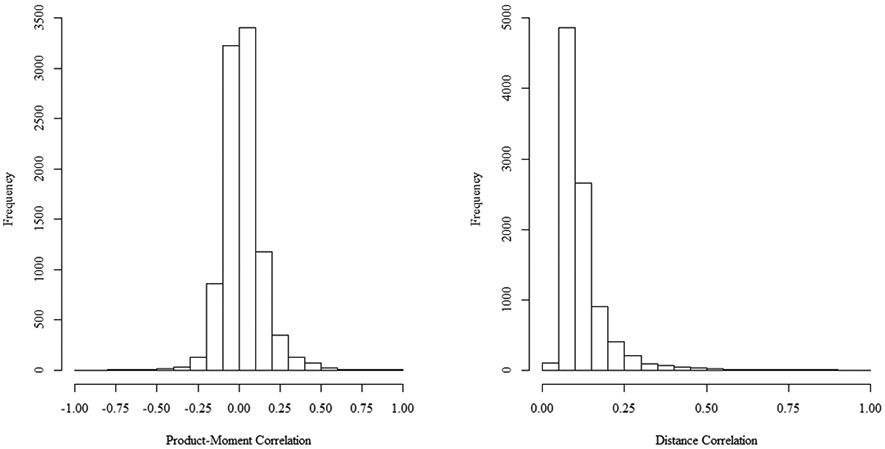

The correlation database is available as supplemental material, and the data used to generate these correlations are available at https://github.com/IanEisenberg/Self_Regulation_Ontology/tree/master/Data. Figure 1 presents the distribution of product-moment and distance correlations among the 66 variables derived from the 23 surveys, and Figure 2 presents the distribution of product-moment and distance correlations among the 138 variables derived from the 37 cognitive tasks. As shown in Figures 1 and 2, the correlations showed substantial variability. Among the 66 survey variables, the absolute value of the product-moment correlations¹ ranged from .000 to .879 (M = .202), and the distance correlations ranged from .045 to .865 (M = .207). Among the 138 cognitive task variables, the absolute value of the product-moment correlations ranged from .000 to .974 (M = .087), and the distance correlations ranged from .028 to .956 (M = .121). The surveys demonstrated greater convergent validity than did the cognitive tasks based on both the product-moment and distance correlations (hereafter denoted r and ℛ, respectively).

Figure 1.

Distribution of product-moment and distance correlations among the 66 variables derived from the surveys.

Figure 2.

Distribution of product-moment and distance correlations among the 138 variables derived from the cognitive tasks.

A few of the most highly correlated variable pairs were from the Eysenck I-7, UPPS-P Impulsive Behavior Scale, and Zuckerman’s Sensation Seeking Scale, likely because these surveys include identical or nearly identical items (e.g., “Would you enjoy water skiing?” on the Eysenck I-7; “I would enjoy water skiing” on the UPPS-P Impulsive Behavior Scale; and “I would like to take up the sport of water skiing, I would not like to take up water skiing” [forced choice] on Zuckerman’s Sensation Seeking Scale). For example, the Eysenck I-7 venturesomeness subscale correlated with the UPPS-P sensation seeking subscale at r = .879 (ℛ = .865) and Zuckerman’s thrill seeking subscale at r = .865 (ℛ = .840), and the UPPS-P sensation seeking subscale correlated with Zuckerman’s thrill seeking subscale at r = .831 (ℛ = .807). Other highly correlated variable pairs were derived from the same cognitive task and reflect different ways to score the same data from the cognitive task.

Overall, variables derived from the surveys were not highly correlated with variables derived from the cognitive tasks. For the 66 × 138 = 9,108 pairs involving one survey variable and one cognitive task variable, the absolute value of the product-moment correlations ranged from .000 to .271 (M = .049), and the distance correlations ranged from .028 to .241 (M = .085). The two strongest correlations between one survey variable and one cognitive task variable were as follows. Expected benefits from engaging in unethical behaviors (from the Domain-Specific Risk-Taking Scale [DOSPERT]; e.g., taking questionable deductions on your income tax return) and motivation on fixed win trials of the Information Sampling Task were correlated at r = .271 (ℛ = .241). The Behavioral Inhibition System/Behavioral Activation System (BIS/BAS) drive subscale and Dot Pattern Expectancy d’ were correlated at r = −.246 (ℛ = .241). Many of the remaining correlations between one survey variable and one cognitive task variable were much lower than expected. For example, the Eysenck I-7 impulsiveness scale (e.g., “Do you generally do and say things without stopping to think?”) only weakly correlated with the Tower of London planning time (r = −.023, ℛ = .070), average move time (r = −.016, ℛ = .065), number of extra moves (r = .063, ℛ = .082), and number of optimal solutions (r = −.064, ℛ = .092).

To illustrate the database’s utility for assessing criterion validity, we highlight variables correlated with body mass index and alcohol use. Body mass index most strongly correlated with the emotional eating (r = .377, ℛ = .371) and uncontrolled eating (r = .291, ℛ = .297) subscales of the Three-Factor Eating Questionnaire, followed by the Brief Self-Control Scale (r = −.266, ℛ = .281) and Stanford Leisure Time Activities Survey (one item on physical activity; r = −.209, ℛ = .241). However, body mass index only weakly correlated with health sensitivity (r = −.135, ℛ = .136) and taste sensitivity (r = −.042, ℛ = .074) from the Dietary Decision Task. All three alcohol use items most strongly correlated with the disinhibition subscale of Zuckerman’s Sensation Seeking Scale (r = .435, ℛ = .436 for number of drinks when drinking; r = .514, ℛ = .492 for having six or more drinks on one occasion; r = .310, ℛ = .295 for unable to stop drinking). This is likely due in part to forced choice items such as “Heavy drinking usually ruins a party because some people get loud and boisterous, Keeping the drinks full is the key to a good party” and “I feel best after taking a couple of drinks, Something is wrong with people who need liquor to feel good.”

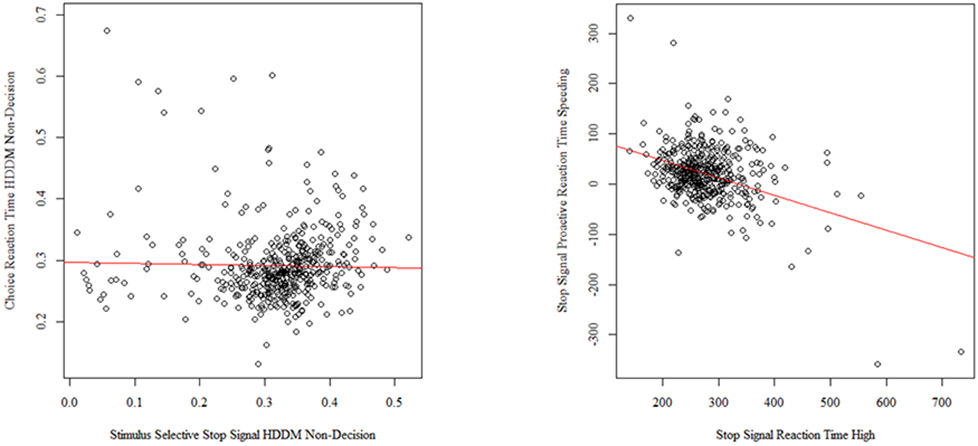

Overall, the distance correlations were consistent with the product-moment correlations. However, the product-moment and distance correlations differed in magnitude by .100 or more for 177 (0.8%) of the 23,005 variable pairs. Figure 3 provides scatterplots for two variable pairs: one where the distance correlation was .212 points higher in magnitude than the corresponding product-moment correlation and one where the distance correlation was .130 points lower in magnitude than the corresponding product-moment correlation. For the scatterplot on the left, Choice Reaction Time non-decision time showed greater variability at lower levels of Stimulus Selective Stop Signal non-decision time (both estimated from a drift diffusion model). This fanning out of scores may explain why the distance correlation (.235) was higher in magnitude than the corresponding product-moment correlation (−.022). For the scatterplot on the right, a few outliers appear to be driving up the product-moment correlation, such that the distance correlation (.257) was lower in magnitude than the corresponding product-moment correlation (−.388). When excluding the two cases with speeding scores less than −300, the product-moment and distance correlations were much less discrepant (r = −.243, ℛ = .217).

Figure 3.

Scatterplots of variable pairs with discrepant product-moment and distance correlations. The ordinary least squares regression line is provided for each scatterplot. For the variable pair on the left, the distance correlation (.235) was .212 points higher in magnitude than the corresponding product-moment correlation (−.022). For the variable pair on the right, the distance correlation (.257) was .130 points lower in magnitude than the corresponding product-moment correlation (−.388).

Discussion

Researchers across various disciplines have recognized the importance of self-regulation, but limited cross-talk among these researchers has stymied efforts to better understand and measure self-regulation. To facilitate the integration of cross-disciplinary measures of self-regulation, we provide a database with the product-moment and distance correlations among 60 cross-disciplinary measures of self-regulation (23 self-report surveys, 37 cognitive tasks) and measures of health and substance use based on 522 participants. The correlations showed substantial variability, though the surveys demonstrated greater convergent validity than did the cognitive tasks. Although we used overlapping (and not identical) sets of measures, our results coincide with those of Duckworth and Kern (2011). That is, Duckworth and Kern’s (2011) meta-analysis similarly demonstrated greater convergent validity among the surveys (average r = .50 and .54 for self-report and informant-report, respectively) than among the cognitive tasks (average r = .15 and .21 for executive function and delay discounting, respectively).

Given that we selected cognitive tasks that all putatively measure constructs within the nomological network of self-regulation, poor convergent validity among the cognitive tasks may be due to poor construct validity of the cognitive tasks. That is, some of the cognitive tasks may not measure what they purport to measure. Alternatively, poor convergent validity among the cognitive tasks may be due to greater measurement error or difficulties with the purity of cognitive tasks. Cognitive tasks make demands on several cognitive processes not intended to be measured, thus calling into question the ability of cognitive tasks to isolate and validly measure a single cognitive process (Rabbitt, 1997). As an exploratory analysis, we estimated partial correlations among the cognitive task variables while controlling for participants’ fluid intelligence as measured by Raven’s Progressive Matrices (Raven, 1998). Conceivably, fluid intelligence may affect participants’ performance on many of the cognitive tasks included in this study, though controlling for participants’ fluid intelligence did not meaningfully change the relations among the cognitive task variables (M = .073 for the absolute value of the partial correlations).
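The paper does not state how the partial correlations were computed. As a hedged illustration, the standard first-order partial correlation between two variables x and y, controlling for a single covariate z (here, fluid intelligence), can be obtained from the three zero-order correlations. The function name below is hypothetical.

```python
import math


def partial_correlation(r_xy, r_xz, r_yz):
    """First-order partial correlation of x and y controlling for z.

    r_xy, r_xz, r_yz are the zero-order product-moment correlations.
    """
    denom = math.sqrt((1 - r_xz ** 2) * (1 - r_yz ** 2))
    if denom == 0:
        raise ValueError("undefined when x or y is perfectly correlated with z")
    return (r_xy - r_xz * r_yz) / denom


# If z is unrelated to both variables, controlling for it changes nothing.
print(partial_correlation(0.50, 0.0, 0.0))
# If the x-y correlation is fully accounted for by z, the partial is zero.
print(partial_correlation(0.30, 0.5, 0.6))
```

Under this formula, a covariate that correlates only weakly with both variables leaves the partial correlation close to the zero-order correlation, which is one way to read the small change reported above.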

Variables derived from the cognitive tasks were also not highly correlated with variables derived from the surveys. Astonishingly, the maximum product-moment correlation between one survey variable and one cognitive task variable was .271 despite examining 9,108 variable pairs. Controlling for participants’ fluid intelligence did not meaningfully change the relations among the survey and cognitive task variables (M = .047 and maximum = .275 for the absolute value of the partial correlations). Similarly, in Duckworth and Kern’s (2011) meta-analysis, variables derived from the surveys only weakly correlated with variables derived from the cognitive tasks (average r = .10 and .15 between self-report surveys and cognitive tasks assessing executive function and delay discounting, respectively). These weak correlations challenge the notion that the surveys and cognitive tasks measure the same construct. One possibility is that the surveys measure trait self-regulation whereas the cognitive tasks do not. Relative to the cognitive tasks, the surveys can more easily assess trait self-regulation by asking participants to consider their usual behaviors or abilities over time (e.g., “I usually think before I act” from the Short Self-Regulation Questionnaire, “I am able to work effectively toward long-term goals” from the Brief Self-Control Scale). Consistent with this hypothesis, in this sample, Enkavi et al. (2019) found much higher test-retest reliability for the surveys than for the cognitive tasks when repeated an average of 111 days later (median intraclass correlation = .674 and .311, respectively). More broadly, our results coincide with previous research suggesting that surveys and cognitive tasks often provide unique information when assessing psychological constructs (e.g., Meyer et al., 2001). Reconciling information gleaned from these two methods remains a challenge.

Finally, the health and substance use measures included in the database correlated more strongly with variables derived from the surveys than with those derived from the cognitive tasks. Although this pattern of results provides stronger evidence for the criterion validity of the surveys, other criteria not included in the database may be more strongly predicted by performance on the cognitive tasks. Furthermore, the surveys and health and substance use measures were all self-reported, which may inflate their correlations.

The database has several potential uses beyond our present use of examining the convergent validity of a wide array of surveys and cognitive tasks. First, the database can help researchers select a small subset of surveys and/or cognitive tasks to optimally assess self-regulation. Duckworth and Kern (2011) recommended administering both surveys and cognitive tasks and aggregating across multiple measures to reduce measurement error. Similarly, other researchers found that latent factors or summary measures combining across multiple cognitive tasks showed substantially greater test-retest reliability than the individual cognitive tasks (e.g., Miyake et al., 2000; Eisenberg et al., 2019). Although two highly correlated measures do not necessarily assess the same construct, administering a second measure that is perfectly (or almost perfectly) correlated with the first provides little to no additional information. In our sample, variable pairs with very strong correlations (i.e., ∣r∣ ≥ .800) were mostly limited to those derived from the same cognitive task or from surveys with identical or nearly identical items, though the UPPS-P lack of perseverance subscale correlated at r = −.809 (ℛ = .772) with the Short Grit Scale and at r = −.802 (ℛ = .767) with the Short Self-Regulation Questionnaire. Given these strong correlations, researchers seeking to reduce battery length and response burden generally should not administer these surveys together. Furthermore, although surveys are easier and faster to administer than cognitive tasks, cognitive tasks provide added utility. For example, whereas surveys may suffer from response bias, performance on cognitive tasks is “difficult if not impossible to fake” (Duckworth & Kern, 2011, p. 266).
When defining latent factors or summary measures based on multiple cognitive tasks, we would caution researchers to carefully consider the theoretical basis for these latent factors or summary measures and to critically review the fit of their measurement models given the weak correlations observed in this sample. These same considerations would apply when attempting to define latent factors or summary measures based on a combination of surveys and cognitive tasks. As noted previously, the surveys and cognitive tasks may not measure the same construct, such that combining them to form a single latent factor or summary measure would be inappropriate.

Second, the correlations may be used to specify Bayesian prior distributions. Although a review of the Bayesian framework is beyond the scope of this paper, briefly, parameters are treated as random in the Bayesian framework, not fixed as in the frequentist framework. A posterior distribution is defined for the parameter of interest by combining a user-specified prior distribution for the parameter with a model for the observed data. The prior distribution quantifies the user’s prior beliefs about the parameter based on his or her expertise, results from a pilot investigation, other publications, and/or meta-analyses. A diffuse prior distribution assigns relatively little weight to the researcher’s prior beliefs about the parameter, though more informative prior distributions can be specified depending on the availability of relevant findings. For example, Muthén and Asparouhov (2012) recently discussed the utility of a Bayesian approach to factor analysis. When adopting a frequentist approach, we can perform either exploratory factor analysis, which freely estimates all possible loadings, or confirmatory factor analysis, which constrains all cross-loadings to zero based on a theorized factor structure for the data. A Bayesian approach to factor analysis provides much more flexibility than a frequentist approach because we can encode prior beliefs that are stronger than those from exploratory factor analysis but weaker than those from confirmatory factor analysis (Levy & Mislevy, 2016). Specifically, Muthén and Asparouhov (2012) proposed assigning highly informative prior distributions to cross-loadings such that their posterior distributions are pulled toward zero but are not constrained to zero. The database provided in this paper can help researchers specify informative prior distributions for cross-loadings and other parameters when investigating the factor structure of constructs within the nomological network of self-regulation.
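How an informative prior pulls an estimate toward zero without fixing it at zero can be seen in a deliberately simplified, one-parameter example. The sketch below uses the conjugate normal-normal update with a known standard error. It is not a Bayesian factor analysis and not the procedure of Muthén and Asparouhov (2012); the function name and all numeric values are hypothetical.

```python
def normal_posterior(prior_mean, prior_sd, estimate, se):
    """Posterior mean and SD for a normal prior and a normal likelihood.

    The posterior mean is a precision-weighted average of the prior mean
    and the data-based estimate.
    """
    prior_precision = 1.0 / prior_sd ** 2
    data_precision = 1.0 / se ** 2
    post_var = 1.0 / (prior_precision + data_precision)
    post_mean = post_var * (prior_precision * prior_mean
                            + data_precision * estimate)
    return post_mean, post_var ** 0.5


# Hypothetical cross-loading estimate of .30 with a standard error of .10.
informative = normal_posterior(0.0, 0.1, 0.30, 0.10)  # tight prior around zero
diffuse = normal_posterior(0.0, 10.0, 0.30, 0.10)     # nearly flat prior
print(informative)
print(diffuse)
```

With the tight prior, the posterior mean sits between zero and the estimate; with the diffuse prior, it stays very close to the estimate. Correlations from the database could inform where such priors are centered and how tight they are, though that choice remains a modeling judgment.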

Finally, the database can be used when conducting a meta-analysis (either in the frequentist or Bayesian framework) or integrative data analysis. Integrative data analysis refers to pooling raw data across multiple samples of participants and fitting models to the pooled data (Curran & Hussong, 2009). Synthesizing different measures administered to different samples (referred to as measure harmonization) has been recognized as an important challenge and possible threat to the internal validity of integrative data analysis (Brincks et al., 2018). Because the database includes 60 cross-disciplinary surveys and cognitive tasks measuring self-regulation, it can help link samples that each complete a subset of these surveys or cognitive tasks.

By examining the relations among 60 cross-disciplinary measures of self-regulation, we aimed to provide a resource for addressing widespread confusion regarding the constructs within the nomological network of self-regulation and the measures used to assess these constructs. Given the importance of self-regulation, further efforts are needed to promote cross-disciplinary integration and cross-talk among researchers interested in self-regulation.

Supplementary Material

Acknowledgments

This work was supported by the National Institutes of Health Science of Behavior Change Common Fund under Grant UH2DA041713 administered by the National Institute on Drug Abuse.

Appendix

Distance Correlation Formula

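The formulas and notation used throughout this appendix are based on Székely, Rizzo, and Bakirov (2007). The distance correlation of X and Y is defined as

$$\mathcal{R}_n(X, Y) = \sqrt{\frac{\mathcal{V}_n^2(X, Y)}{\sqrt{\mathcal{V}_n^2(X)\,\mathcal{V}_n^2(Y)}}} \tag{A1}$$

where $\mathcal{V}_n^2(X, Y)$ is the squared distance covariance of X and Y, $\mathcal{V}_n^2(X)$ is the distance variance of X, $\mathcal{V}_n^2(Y)$ is the distance variance of Y, and $\mathcal{V}_n^2(X)\,\mathcal{V}_n^2(Y) > 0$. The distance covariance of X and Y is defined as

$$\mathcal{V}_n^2(X, Y) = \frac{1}{n^2} \sum_{k,l=1}^{n} A_{kl} B_{kl} \tag{A2}$$

where A and B are n × n matrices and n denotes the number of participants. Each element in A equals $A_{kl} = a_{kl} - \bar{a}_{k\cdot} - \bar{a}_{\cdot l} + \bar{a}_{\cdot\cdot}$, where $a_{kl} = |X_k - X_l|_p$, $\bar{a}_{k\cdot}$ and $\bar{a}_{\cdot l}$ are marginal means, $\bar{a}_{\cdot\cdot}$ is the grand mean, and k, l = 1, 2, … , n. Similarly, each element in B equals $B_{kl} = b_{kl} - \bar{b}_{k\cdot} - \bar{b}_{\cdot l} + \bar{b}_{\cdot\cdot}$, where $b_{kl} = |Y_k - Y_l|_q$, $\bar{b}_{k\cdot}$ and $\bar{b}_{\cdot l}$ are marginal means, $\bar{b}_{\cdot\cdot}$ is the grand mean, and k, l = 1, 2, … , n. The distance variance of X is defined as

$$\mathcal{V}_n^2(X) = \frac{1}{n^2} \sum_{k,l=1}^{n} A_{kl}^2 \tag{A3}$$

and the distance variance of Y is defined as

$$\mathcal{V}_n^2(Y) = \frac{1}{n^2} \sum_{k,l=1}^{n} B_{kl}^2 \tag{A4}$$

where A and B have the same definitions as above.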
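The quantities labeled (A1) through (A4) can be sketched in a few lines of NumPy, following the sample definitions in Székely, Rizzo, and Bakirov (2007). This is an illustrative implementation for univariate X and Y only, not the code used for the analyses reported in this paper; the function names and the example data are assumptions.

```python
import numpy as np


def _centered_distances(values):
    """Double-centered matrix of pairwise absolute differences."""
    v = np.asarray(values, dtype=float)
    d = np.abs(v[:, None] - v[None, :])          # a_kl = |X_k - X_l|
    row_means = d.mean(axis=1, keepdims=True)    # marginal means over l
    col_means = d.mean(axis=0, keepdims=True)    # marginal means over k
    return d - row_means - col_means + d.mean()  # subtract margins, add grand mean


def distance_correlation(x, y):
    """Sample distance correlation of two univariate variables."""
    A = _centered_distances(x)
    B = _centered_distances(y)
    dcov2_xy = (A * B).mean()   # (A2): (1 / n^2) * sum of A_kl * B_kl
    dvar2_x = (A * A).mean()    # (A3)
    dvar2_y = (B * B).mean()    # (A4)
    denom = np.sqrt(dvar2_x * dvar2_y)
    if denom <= 0:
        return 0.0              # defined as 0 when either distance variance is 0
    # Clamp at 0 to guard against tiny negative values from rounding error.
    return float(np.sqrt(max(dcov2_xy, 0.0) / denom))   # (A1)


# Assumed example: a purely quadratic relation on a symmetric grid.
x = np.linspace(-1.0, 1.0, 201)
y_quadratic = x ** 2
print("product-moment r:", np.corrcoef(x, y_quadratic)[0, 1])
print("distance correlation:", distance_correlation(x, y_quadratic))
```

In this example the product-moment correlation is essentially zero while the distance correlation is clearly positive, which shows why the distance correlation is useful for detecting nonlinear dependence.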

Footnotes

The absolute value was used to report the range and mean of the product-moment correlations because not all of the survey and cognitive task variables were scored in the same direction. That is, higher scores could represent greater self-regulation for some survey and cognitive task variables but lower self-regulation for others.

References

- Barends AJ, & de Vries RE (2019). Noncompliant responding: Comparing exclusion criteria in MTurk personality research to improve data quality. Personality and Individual Differences, 143, 84–89. 10.1016/j.paid.2019.02.015

- Bickel WK, Odum AL, & Madden GJ (1999). Impulsivity and cigarette smoking: Delay discounting in current, never, and ex-smokers. Psychopharmacology, 146(4), 447–454. 10.1007/PL00005490

- Bogg T, & Roberts BW (2004). Conscientiousness and health-related behaviors: A meta-analysis of the leading behavioral contributors to mortality. Psychological Bulletin, 130(6), 887–919. 10.1037/0033-2909.130.6.887

- Brincks A, Montag S, Howe GW, Huang S, Siddique J, Ahn S, Sandler IN, Pantin H, & Brown CH (2018). Addressing methodologic challenges and minimizing threats to validity in synthesizing findings from individual-level data across longitudinal randomized trials. Prevention Science, 19(Suppl 1), 60–73. 10.1007/s11121-017-0769-1

- Crump MJC, McDonnell JV, & Gureckis TM (2013). Evaluating Amazon’s Mechanical Turk as a tool for experimental behavioral research. PLOS ONE, 8(3), 1–18. 10.1371/journal.pone.0057410

- Curran PJ, & Hussong AM (2009). Integrative data analysis: The simultaneous analysis of multiple data sets. Psychological Methods, 14(2), 81–100. 10.1037/a0015914

- Diamond A (2013). Executive functions. Annual Review of Psychology, 64, 135–168. 10.1146/annurev-psych-113011-143750

- Duckworth AL, & Kern ML (2011). A meta-analysis of the convergent validity of self-control measures. Journal of Research in Personality, 45(3), 259–268. 10.1016/j.jrp.2011.02.004

- Eisenberg IW, Bissett PG, Canning JR, Dallery J, Enkavi AZ, Gabrieli SW, Gonzalez O, Green AI, Greene MA, Kiernan M, Kim SJ, Li J, Lowe M, Mazza GL, Metcalf SA, Onken L, Parikh SS, Peters E, Prochaska JJ, Scherer EA, Stoeckel LE, Valente MJ, Wu J, Xie H, MacKinnon DP, Marsch LA, & Poldrack RA (2018). Applying novel technologies and methods to inform the ontology of self-regulation. Behaviour Research and Therapy, 101, 46–57. 10.1016/j.brat.2017.09.014

- Eisenberg IW, Bissett PG, Enkavi AZ, Li J, MacKinnon DP, Marsch LA, & Poldrack RA (2019). Uncovering mental structure through data-driven ontology discovery. Nature Communications, 10, 1–13. 10.1038/s41467-019-10301-1

- Eisenberg N (2017). Commentary: What’s in a word (or words)—On the relations among self-regulation, self-control, executive functioning, effortful control, cognitive control, impulsivity, risk-taking, and inhibition for developmental psychopathology—Reflections on Nigg (2017). Journal of Child Psychology and Psychiatry, 58(4), 384–386. 10.1111/jcpp.12707

- Enkavi AZ, Bissett PG, Mazza GL, MacKinnon DP, Marsch LA, & Poldrack RA (2019). A large-scale analysis of test-retest reliabilities of self-regulation measures. Proceedings of the National Academy of Sciences of the United States of America, 116(12), 5472–5477. 10.1073/pnas.1818430116

- Hauser DJ, & Schwarz N (2016). Attentive Turkers: MTurk participants perform better on online attention checks than do subject pool participants. Behavior Research Methods, 48(1), 400–407. 10.3758/s13428-015-0578-z

- Kern ML, & Friedman HS (2008). Do conscientious individuals live longer? A quantitative review. Health Psychology, 27(5), 505–512. 10.1037/0278-6133.27.5.505

- Kessler RC, Andrews G, Colpe LJ, Hiripi E, Mroczek DK, Normand S-LT, Walters EE, & Zaslavsky AM (2002). Short screening scales to monitor population prevalences and trends in non-specific psychological distress. Psychological Medicine, 32(6), 959–976. 10.1017/S0033291702006074

- Levy R, & Mislevy RJ (2016). Bayesian psychometric modeling. Boca Raton, Florida: Chapman & Hall/CRC.

- Meyer GJ, Finn SE, Eyde LD, Kay GG, Moreland KL, Dies RR, Eisman EJ, Kubiszyn TW, & Reed GM (2001). Psychological testing and psychological assessment: A review of evidence and issues. American Psychologist, 56(2), 128–165. 10.1037/0003-066X.56.2.128

- Miyake A, Friedman NP, Emerson MJ, Witzki AH, & Howerter A (2000). The unity and diversity of executive functions and their contributions to complex “frontal lobe” tasks: A latent variable analysis. Cognitive Psychology, 41(1), 49–100. 10.1006/cogp.1999.0734

- Moffitt TE, Arseneault L, Belsky D, Dickson N, Hancox RJ, Harrington H, Houts R, Poulton R, Roberts BW, Ross S, Sears MR, Thomson WM, & Caspi A (2011). A gradient of childhood self-control predicts health, wealth, and public safety. Proceedings of the National Academy of Sciences of the United States of America, 108(7), 2693–2698. 10.1073/pnas.1010076108

- Morrison FJ, & Grammer JK (2016). Conceptual clutter and measurement mayhem: Proposals for cross-disciplinary integration in conceptualizing and measuring executive function. In Griffin JA, McCardle P, & Freund L (Eds.), Executive function in preschool-age children: Integrating measurement, neurodevelopment, and translational research (pp. 327–348). Washington, DC: American Psychological Association. 10.1037/14797-015

- Muthén B, & Asparouhov T (2012). Bayesian structural equation modeling: A more flexible representation of substantive theory. Psychological Methods, 17(3), 313–335. 10.1037/a0026802

- National Institutes of Health (2015, January 8). Assay development and validation for self-regulation targets. Retrieved from https://grants.nih.gov/grants/guide/rfa-files/RFA-RM-14-020.html

- Nigg JT (2017). Annual Research Review: On the relations among self-regulation, self-control, executive functioning, effortful control, cognitive control, impulsivity, risk-taking, and inhibition for developmental psychopathology. Journal of Child Psychology and Psychiatry, 58(4), 361–383. 10.1111/jcpp.12675

- Rabbitt P (1997). Introduction: Methodologies and models in the study of executive function. In Rabbitt P (Ed.), Methodology of frontal and executive function (pp. 1–37). East Sussex: Psychology Press.

- Raven JC (1998). Raven’s progressive matrices and vocabulary scales. Oxford: Oxford Psychologists Press.

- Rizzo ML, & Székely GJ (2016). E-statistics: Multivariate inference via the energy of data. R package version 1.7-0.

- Sochat VV, Eisenberg IW, Enkavi AZ, Li J, Bissett PG, & Poldrack RA (2016). The Experiment Factory: Standardizing behavioral experiments. Frontiers in Psychology, 7. 10.3389/fpsyg.2016.00610

- Székely GJ, Rizzo ML, & Bakirov NK (2007). Measuring and testing dependence by correlation of distances. The Annals of Statistics, 35(6), 2769–2794. 10.1214/009053607000000505

- Welsh M, & Peterson E (2014). Issues in the conceptualization and assessment of hot executive functions in childhood. Journal of the International Neuropsychological Society, 20(2), 152–156. 10.1017/S1355617713001379

- Zhou Q, Chen SH, & Main A (2012). Commonalities and differences in the research on children’s effortful control and executive function: A call for an integrated model of self-regulation. Child Development Perspectives, 6(2), 112–121. 10.1111/j.1750-8606.2011.00176.x
