Abstract

De novo assembly of a human genome using nanopore long-read sequences has been reported, but it used more than 150,000 CPU hours and weeks of wall-clock time. To enable rapid human genome assembly, we present Shasta, a de novo long-read assembler, and polishing algorithms named MarginPolish and HELEN. Using a single PromethION nanopore sequencer and our toolkit, we assembled 11 highly contiguous human genomes de novo in 9 d. We achieved roughly 63× coverage, 42-kb read N50 values and 6.5× coverage in reads >100 kb using three flow cells per sample. Shasta produced a complete haploid human genome assembly in under 6 h on a single commercial compute node. MarginPolish and HELEN polished haploid assemblies to more than 99.9% identity (Phred quality score QV = 30) with nanopore reads alone. Addition of proximity-ligation sequencing enabled near chromosome-level scaffolds for all 11 genomes. We compare our assembly performance to existing methods for diploid, haploid and trio-binned human samples and report superior accuracy and speed.

Subject terms: Genome assembly algorithms

High contiguity human genomes can be assembled de novo in 6 h using nanopore long-read sequences and the Shasta toolkit.

Main

Reference-based methods such as GATK1 can infer human variations from short-read sequences, but the results only cover ~90% of the reference human genome assembly2,3. These methods are accurate with respect to single-nucleotide variants and short insertions and deletions (indels) in this mappable portion of the reference genome4. However, it is difficult to use short reads for de novo genome assembly5, to discover structural variations (SVs)6,7 (including large indels and base-level resolved copy number variations), or to resolve phasing relationships without exploiting transmission information or haplotype panels8.

Third generation sequencing technologies, including linked-reads9–11 and long-read technologies12,13, overcome the fundamental limitations of short-read sequencing for genome inference. In addition to increasingly being used in reference guided methods2,14–16, long-read sequences can generate highly contiguous de novo genome assemblies17.

Nanopore sequencing, as commercialized by Oxford Nanopore Technologies (ONT), is particularly useful for de novo genome assembly because it can produce high yields of very long 100+ kilobase (kb) reads18. Very long reads hold the promise of facilitating contiguous, unbroken assembly of the most challenging regions of the human genome, including centromeric satellites, acrocentric short arms, ribosomal DNA arrays and recent segmental duplications19–21. The de novo assembly of a nanopore sequencing based human genome has been reported18. This earlier effort needed 53 ONT MinION flow cells and the assembly required more than 150,000 CPU hours and weeks of wall-clock time, quantities that are unfeasible for high throughput human genome sequencing efforts.

To enable easy, cheap and fast de novo assembly of human genomes we developed a toolkit for nanopore data assembly and polishing that is orders of magnitude faster than state-of-the-art methods. We use a combination of nanopore and proximity-ligation (HiC) sequencing9 and our toolkit, and we report improvements in human genome sequencing coupled with reduced time, labor and cost.

Results

Eleven human genomes sequenced in 9 d

We selected 11 low-passage (six passages) human cell lines for sequencing, each derived from the offspring of a parent-child trio from the 1,000 Genomes Project22 and genome-in-a-bottle (GIAB)23 sample collections. Samples were selected to maximize captured allelic diversity (see Methods).

We carried out PromethION nanopore sequencing and HiC Illumina sequencing for the 11 genomes. We used three flow cells per genome, with each flow cell receiving a nuclease flush every 20–24 h. This flush removed long DNA fragments that could cause the pores to become blocked over time. Each flow cell received a fresh library of the same sample after the nuclease flush. A total of two nuclease flushes were performed per flow cell, and each flow cell received a total of three sequencing libraries. We used Guppy v.2.3.5 with the high accuracy flipflop model for basecalling (see Methods).

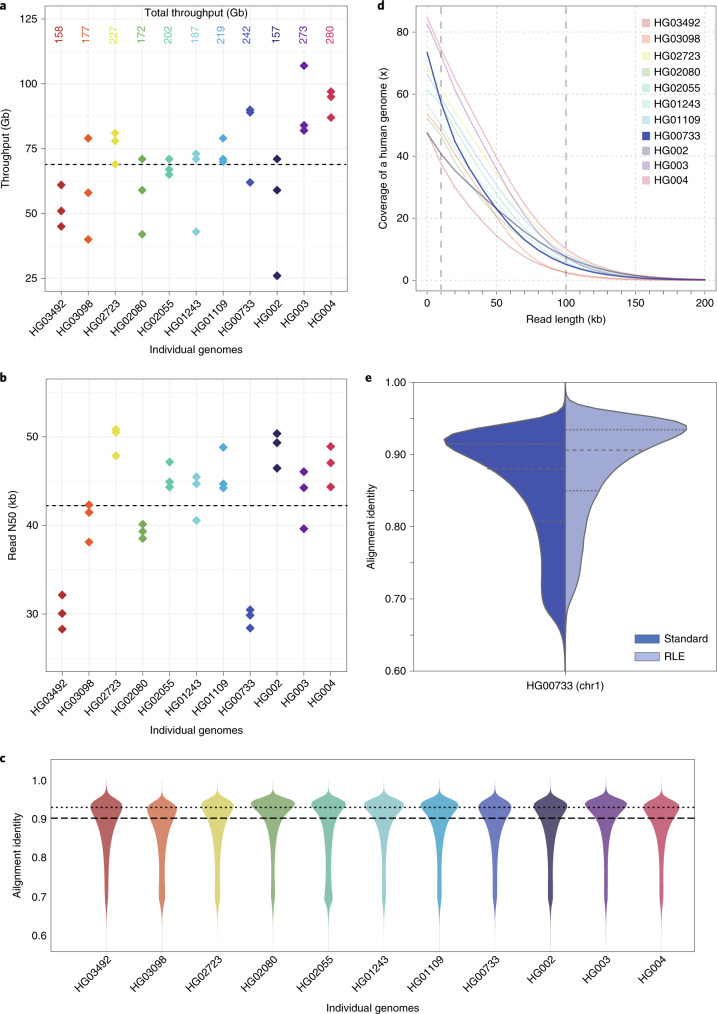

Nanopore sequencing of all 11 genomes took 9 d and produced 2.3 terabases (Tb) of sequence. We ran up to 15 flow cells in parallel during these sequencing runs. Results are shown in Fig. 1 and Supplementary Tables 1–3. Nanopore sequencing yielded an average of 69 gigabases (Gb) per flow cell, with the total throughput per individual genome ranging between 48× (158 Gb) and 85× (280 Gb) coverage (Fig. 1a). The read N50 values for the sequencing runs ranged between 28 and 51 kb (Fig. 1b). (An N50 value is a weighted median; it is the length of the sequence in a set for which all sequences of that length or greater sum to 50% of the set’s total size.) We aligned nanopore reads to the human reference genome (GRCh38) and calculated their alignment identity to assess sequence quality (see Methods). We observed that the median and modal alignment identities were 90% and 93%, respectively (Fig. 1c). The sequencing data per individual genome included an average of 55× coverage arising from >10-kb reads and 6.5× coverage from >100-kb reads (Fig. 1d). This was in large part due to size selection that yielded an enrichment of reads longer than 10 kb. To test the generality of our sequencing methodology for other samples, we sequenced high-molecular weight DNA isolated from a human saliva sample using identical sample preparation. The library was run on a MinION (roughly one-sixth the throughput of a PromethION flow cell) and yielded 11 Gb of data at a read N50 of 28 kb (Supplementary Table 4); after extrapolation, both values are within the lower range achieved with cell-line derived DNA.
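The N50 definition given above can be made concrete with a short calculation. The following Python sketch is illustrative only and is not taken from the toolkit; the function name `n50` and the example lengths are assumptions.

```python
def n50(lengths):
    """Return the N50 of a collection of sequence lengths.

    The N50 is the length L such that sequences of length >= L
    together account for at least 50% of the total length.
    """
    if not lengths:
        raise ValueError("lengths must not be empty")
    total = sum(lengths)
    running = 0
    # Walk from the longest sequence downwards, accumulating length.
    for length in sorted(lengths, reverse=True):
        running += length
        if 2 * running >= total:
            return length


# Hypothetical read lengths (in kb), for illustration only.
example_reads = [2, 3, 4, 5, 6]
print(n50(example_reads))
```

Because the statistic weights each sequence by its length, a small number of very long reads can raise the N50 well above the ordinary median read length.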

Fig. 1. Nanopore sequencing data.

a, Throughput in gigabases from each of three flow cells for 11 samples, with total throughput at top. Each point is a flow cell. b, Read N50 values for each flow cell. Each point is a flow cell. c, Alignment identities against GRCh38. Medians in a–c shown by dashed lines, dotted line in c is the mode. Each line is a single sample comprising three flow cells. d, Genome coverage as a function of read length. Dashed lines indicate coverage at 10 and 100 kb. HG00733 is accentuated in dark blue as an example. Each line is a single sample comprising three flow cells. e, Alignment identity for standard and RLE reads. Data for HG00733 chromosome 1 flow cell 1 are shown (4.6 Gb raw sequence). Dashed lines denote quartiles.

Shasta assembler for long sequence reads

Shasta was designed to be orders of magnitude faster and cheaper at assembling a human-scale genome from nanopore reads than the Canu assembler used in our earlier work18. During most Shasta assembly phases, reads are stored in a homopolymer-compressed form using run-length encoding (RLE)24–26. In this form, identical consecutive bases are collapsed, and the base and repeat count are stored. For example, GATTTACCA would be represented as (GATACA, 113121). This representation is insensitive to errors in the length of homopolymer runs, thereby addressing the dominant error mode for Oxford Nanopore reads12. As a result, assembly noise due to read errors is decreased, and notably higher identity alignments are facilitated (Fig. 1e). A marker representation of reads is also used, in which each read is represented as the sequence of occurrences of a predetermined, fixed subset of short k-mers (markers) in its run-length representation. A modified MinHash27,28 scheme is used to find candidate pairs of overlapping reads, using as MinHash features consecutive occurrences of m markers (default m = 4). Optimal alignments in marker representation are computed for all candidate pairs. The computation of alignments in marker representation is very efficient, particularly as various banded heuristics are used. A marker graph is created in which each vertex represents a marker found to be aligned in a set of several reads. The marker graph is used to assemble sequence after undergoing a series of simplification steps. The assembler runs on a single machine with a large amount of memory (typically 1–2 TB for a human assembly). All data structures are kept in memory, and no disk I/O takes place except for initial loading of the reads and final output of assembly results.
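The run-length and marker representations described above can be illustrated with a minimal Python sketch. It is not Shasta's implementation; the function names (`rle_encode`, `rle_decode`, `marker_occurrences`) and the tiny marker set are assumptions chosen for illustration.

```python
from itertools import groupby


def rle_encode(seq):
    """Collapse homopolymer runs into (bases, run_lengths)."""
    bases, counts = [], []
    for base, run in groupby(seq):
        bases.append(base)
        counts.append(sum(1 for _ in run))
    return "".join(bases), counts


def rle_decode(bases, counts):
    """Expand (bases, run_lengths) back into the raw sequence."""
    return "".join(base * n for base, n in zip(bases, counts))


def marker_occurrences(rle_bases, markers, k):
    """List (position, k-mer) for every k-mer of the run-length
    sequence that belongs to the fixed marker subset."""
    return [
        (i, rle_bases[i:i + k])
        for i in range(len(rle_bases) - k + 1)
        if rle_bases[i:i + k] in markers
    ]


bases, counts = rle_encode("GATTTACCA")
print(bases, counts)                    # GATACA [1, 1, 3, 1, 2, 1]

# A read with a homopolymer length error (TT instead of TTT)
# has the same base sequence after run-length encoding.
print(rle_encode("GATTACCA")[0])        # GATACA

# Hypothetical marker set of 2-mers, for illustration only.
print(marker_occurrences(bases, {"AT", "AC"}, 2))
```

The second call shows why this representation is insensitive to homopolymer length errors: only the run-length counts differ between the two reads, not the collapsed base sequence from which markers are drawn.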

Benchmarking Shasta

We compared Shasta to three contemporary assemblers: Wtdbg2 (ref. 29), Flye30 and Canu31. We ran all four assemblers on available read data from two diploid human samples, HG00733 and HG002, and one haploid human sample, CHM13. HG00733 and HG002 were part of our collection of 11 samples, and data for CHM13 came from the T2T consortium32.

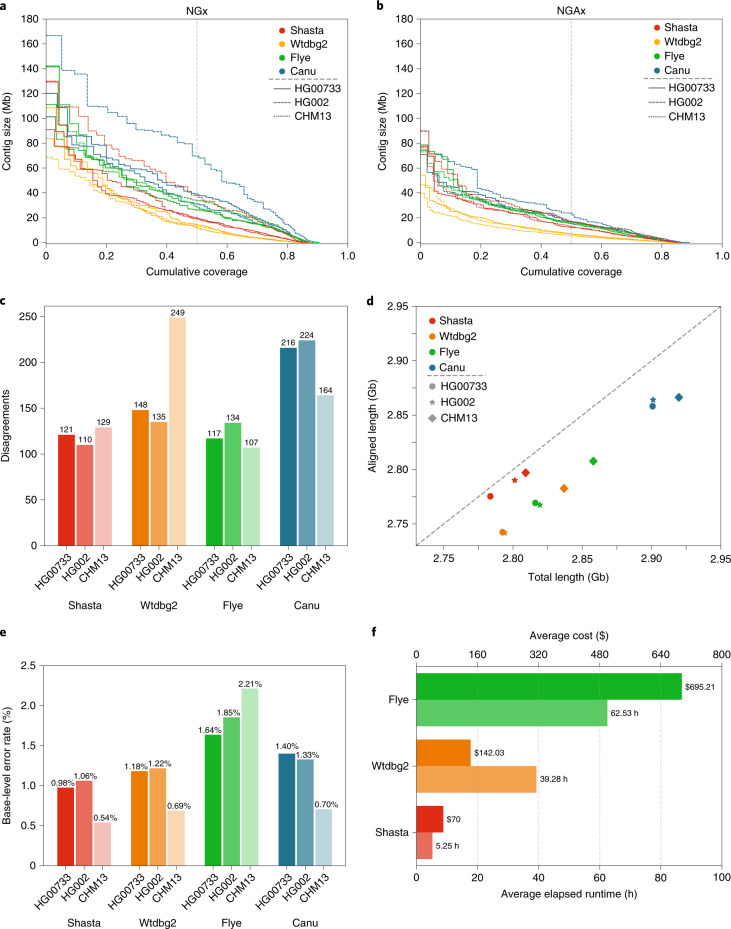

Canu consistently produced the most contiguous assemblies, with contig NG50 values of 40.6, 32.3 and 79.5 Mb, for samples HG00733, HG002 and CHM13, respectively (Fig. 2a). (NG50 is similar to N50, but for 50% of the estimated genome size.) Flye was the second most contiguous, with contig NG50 values of 25.2, 25.9 and 35.3 Mb, for the same samples. Shasta was next with contig NG50 values of 21.1, 20.2 and 41.1 Mb. Wtdbg2 produced the least contiguous assemblies, with contig NG50 values of 15.3, 13.7 and 14.0 Mb.

Fig. 2. Assembly metrics for Shasta, Wtdbg2, Flye and Canu before polishing.

a, NGx plot showing contig length distribution. The intersection of each line with the dashed line is the NG50 for that assembly. b, NGAx plot showing the distribution of aligned contig lengths. Each horizontal line represents an aligned segment of the assembly unbroken by a disagreement or unmappable sequence with respect to GRCh38. The intersection of each line with the dashed line is the aligned NGA50 for that assembly. c, Assembly disagreement counts for regions outside centromeres, segmental duplications and, for HG002, known SVs. d, Total generated sequence length versus total aligned sequence length (against GRCh38). e, Balanced base-level error rates for assembled sequences. f, Average runtime and cost for assemblers (Canu not shown).

In contrast, when we aligned the assemblies to GRCh38 and evaluated them with QUAST33, Shasta had between 4.2× and 6.5× fewer disagreements (locations where the assembly contains a breakpoint with respect to the reference assembly) per assembly than the other assemblers (Supplementary Table 5). Breaking the assemblies at these disagreements and unaligned regions with respect to GRCh38, we observe much smaller absolute variation in contiguity (Fig. 2b and Supplementary Table 5). However, a substantial fraction of the identified disagreements likely reflect true SVs with respect to GRCh38. To address this, we discounted disagreements within chromosome Y, centromeres, acrocentric chromosome arms, QH-regions and known recent segmental duplications (all of which are enriched in SVs34,35); in the case of HG002, we further excluded a set of known SVs36. We still observe between 1.2× and 2× fewer disagreements in Shasta relative to Canu and Wtdbg2, and comparable results against Flye (Fig. 2c and Supplementary Table 6). To account for differences in the fraction of the genomes assembled, we analyzed disagreements contained within the intersection of all the assemblies (that is, in regions where all assemblers produced a unique assembled sequence). This produced results highly consistent with the previous analysis and suggests Shasta and Flye have the lowest and comparable rates of misassembly (see Methods and Supplementary Table 7). Finally, we used QUAST to calculate disagreements between the T2T Consortium’s chromosome X assembly, a highly curated, validated assembly32, and the subset of each CHM13 assembly mapping to it; Shasta has 2 to 17 times fewer disagreements than the other assemblers while assembling almost the same fraction of the assembly (Supplementary Table 8).

Canu consistently assembled the largest genomes (average 2.91 Gb), followed by Flye (average 2.83 Gb), Wtdbg2 (average 2.81 Gb) and Shasta (average 2.80 Gb). Because human genomes are highly similar to one another, we would expect most of these assembled sequences to map to another human genome. Discounting unmapped sequence, the differences are smaller: Canu produced an average of 2.86 Gb of mapped sequence per assembly, followed by Shasta (average 2.79 Gb), Flye (average 2.78 Gb) and Wtdbg2 (average 2.76 Gb) (Fig. 2d, see Methods). This analysis supports the notion that Shasta is currently relatively conservative versus its peers, producing the highest ratio of directly mapped assembly per sample.

For HG00733 and CHM13 we examined a library of bacterial artificial chromosome (BAC) assemblies (Methods). The BACs were largely targeted at known segmental duplications (473 of 520 BACs lie within 10 kb of a known duplication). Examining the subset of BACs for CHM13 and HG00733 that map to unique regions of GRCh38 (see Methods), we find Shasta contiguously assembles all 47 BACs, with Flye performing similarly (Supplementary Table 9). In the full set, we observe that Canu (411) and Flye (282) contiguously assemble a larger subset of the BACs than Shasta (132) and Wtdbg2 (108), confirming the notion that Shasta is relatively conservative in these duplicated regions (Supplementary Table 10). The fraction of BACs represented in each assembly that are contiguously assembled measures an aspect of assembly correctness. In this regard Shasta (97%) produces a much higher percentage of correct BACs in duplicated regions versus its peers (Canu 92%, Flye 87%, Wtdbg2 88%). In the intersected set of BACs attempted by all assemblers (Supplementary Table 11), the assemblers produce comparable results (Shasta, 100%; Flye, 100%; Canu, 98.50%; Wtdbg2, 90.80%).

Shasta produced the most base-level accurate assemblies (average balanced error rate 0.98% on diploid and 0.54% on haploid), followed by Wtdbg2 (1.18% on diploid and 0.69% on haploid), Canu (1.40% on diploid and 0.71% on haploid) and Flye (1.64% on diploid and 2.21% on haploid) (Fig. 2e, see Methods and Supplementary Table 12). We also calculated the base-level accuracy in regions covered by all the assemblies and observe results consistent with the whole-genome assessment (Supplementary Table 13).

Shasta, Wtdbg2 and Flye were run on a commercial cloud, allowing us to reasonably compare their cost and runtime (Fig. 2f, see Methods). Shasta took an average of 5.25 h to complete each assembly at an average cost of US$70 per sample. In contrast, Wtdbg2 took 7.5× longer and cost 3.7× as much, and Flye took 11.9× longer and cost 9.9× as much. Due to the anticipated cost and complexity of porting it to Amazon Web Services (AWS), the Canu assemblies were run on a large, institutional compute cluster, consuming up to an estimated US$19,000 of compute and taking around 4–5 d per assembly (see Methods and Supplementary Tables 14 and 15).

To assess the utility of Shasta for SV characterization we created a workflow to extract putative heterozygous SVs from Shasta assembly graphs (Methods). Extracting SVs from an assembly graph for HG002, the length distribution of indels shows the characteristic spikes for known retrotransposon lengths (Supplementary Fig. 1). Comparing these SVs to the high-confidence GIAB SV set we find good concordance, with a combined F1 score of 0.68 (Supplementary Table 16).

Contiguously assembling major histocompatibility complex (MHC) haplotypes

The MHC region is difficult to resolve using short reads due to its repetitive and highly polymorphic nature37, and recent efforts to apply long-read sequencing to this problem have shown promise18,38. We analyzed the assemblies of CHM13 and HG00733 to see if they spanned the MHC region. For the haploid assemblies of CHM13 we find MHC is entirely spanned by a single contig in all four assemblers’ output, and most closely resembles the GL000251.2 haplogroup among those provided in GRCh38 (Fig. 3a, Supplementary Fig. 2 and Supplementary Table 17). In the diploid assembly of HG00733 two contigs span most of the MHC for Shasta and Flye, while Canu and Wtdbg2 span the region with one contig (Fig. 3b and Supplementary Fig. 3). However, we note that all these chimeric diploid assemblies lead to sequences that do not closely resemble any haplogroup (Methods).

Fig. 3. Shasta MHC assemblies compared with the reference human genome.

Unpolished Shasta assembly for CHM13 and HG00733, including HG00733 trio-binned maternal and paternal assemblies. Shaded gray areas are regions in which coverage (as aligned to GRCh38) drops below 20. Horizontal black lines indicate contig breaks. Blue and green describe unique alignments (aligning forward and reverse, respectively) and orange describes multiple alignments.

To attempt to resolve haplotypes of HG00733 we used trio-binning39 to partition the reads for HG00733 into two sets based on likely maternal or paternal lineage and assembled the haplotypes (Methods). For all assemblers and each haplotype assembly, the global contiguity worsened substantially (as the available read data coverage was approximately halved and, further, not all reads could be partitioned), but the resulting disagreement count decreased (Supplementary Table 18). When using haploid trio-binned assemblies, the MHC was spanned by a single contig for the maternal haplotype (Fig. 3c, Supplementary Fig. 4 and Supplementary Table 19), with high identity to GRCh38 and having the greatest contiguity and identity with the GL000255.1 haplotype. For the paternal haplotype, low coverage led to discontinuities (Fig. 3d) breaking the region into three contigs.

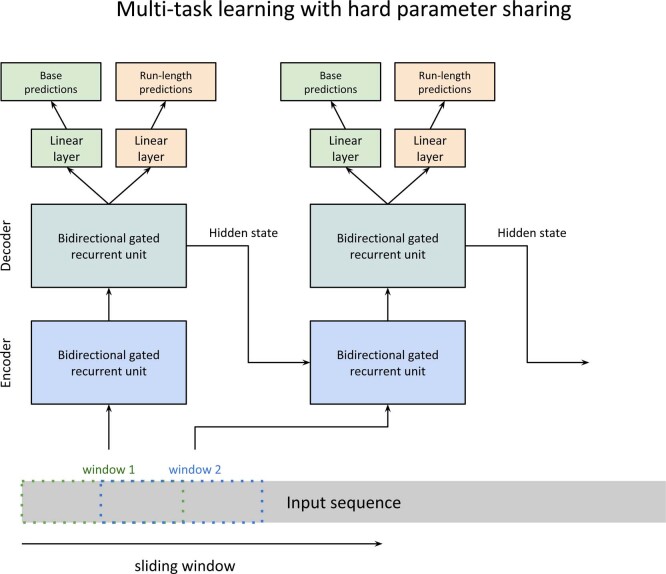

Deep neural network-based polishing for long-read assemblies

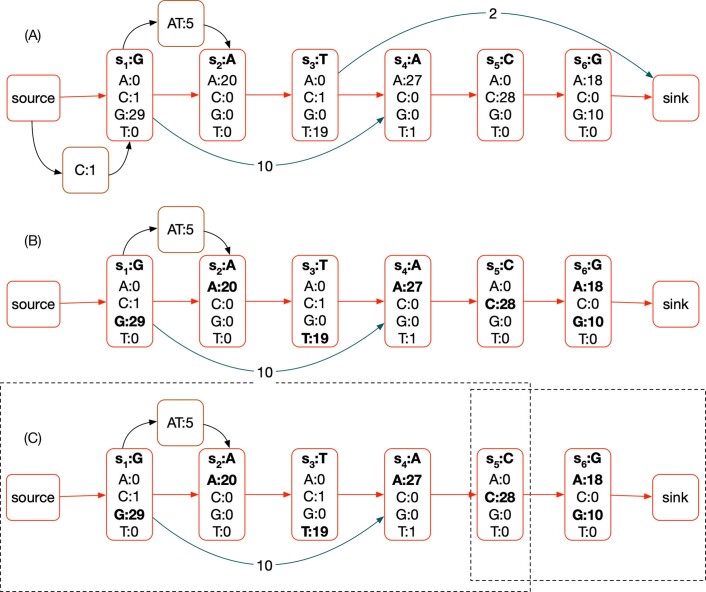

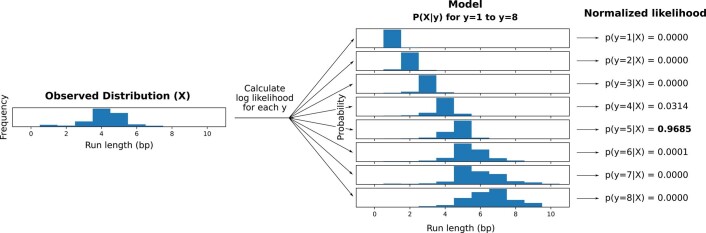

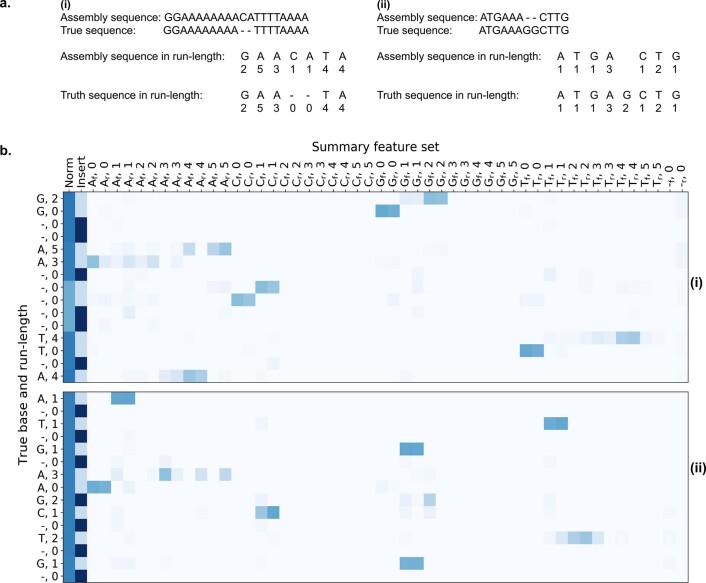

We developed a deep neural network-based consensus sequence polishing pipeline designed to improve the base-level quality of the initial assembly. The pipeline consists of two modules: MarginPolish and the homopolymer encoded long-read error-corrector for Nanopore (HELEN). MarginPolish uses a banded form of the forward–backward algorithm on a pairwise hidden Markov model (pair-HMM) to generate pairwise alignment statistics from the RLE alignment of each read to the assembly. From these statistics, MarginPolish generates a weighted RLE partial order alignment (POA)40 graph that represents potential alternative local assemblies. MarginPolish iteratively refines the assembly using this RLE POA, and then outputs the final summary graph for consumption by HELEN. HELEN uses a multi-task recurrent neural network (RNN)41 that takes the weights of the MarginPolish RLE POA graph to predict a nucleotide base and run length for each genomic position. The RNN takes advantage of contextual genomic features and associative coupling of the POA weights to the correct base and run length to produce a consensus sequence with higher accuracy.

To demonstrate the effectiveness of MarginPolish and HELEN, we compared them with the state-of-the-art nanopore assembly polishing workflow: four iterations of Racon polishing42 followed by Medaka. MarginPolish is analogous in function to Racon, both using pair-HMM-based methods for alignment and POA graphs for initial refinement. Similarly, HELEN is analogous to Medaka, in that both use a deep neural network and both work from summary statistics of reads aligned to the assembly.

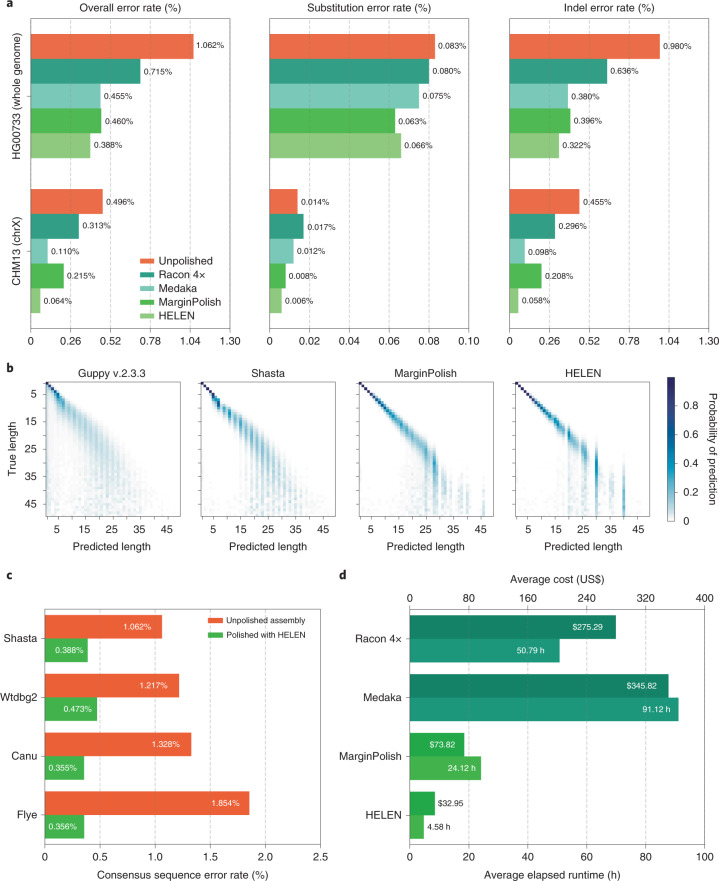

Figure 4a and Supplementary Tables 20–22 detail error rates for the four methods performed on the HG00733 and CHM13 Shasta assemblies (see Methods) using Pomoxis. For the diploid HG00733 sample MarginPolish and HELEN achieve a balanced error rate of 0.388% (Phred quality score QV = 24.12), compared to 0.455% (QV = 23.42) by Racon and Medaka. For both polishing pipelines, a notable fraction of these errors are likely due to true heterozygous variations. For the haploid CHM13 we restrict comparison to the highly curated X chromosome sequence provided by the T2T consortium32. We achieve a balanced error rate of 0.064% (QV = 31.92), compared to Racon and Medaka’s 0.110% (QV = 29.59).
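The relationship between the balanced error rates and the Phred quality scores quoted above follows the standard definition QV = −10 log10(error rate). The short Python sketch below illustrates the conversion; the function names are assumptions and are not part of Pomoxis or the polishing tools.

```python
import math


def error_rate_to_qv(error_rate):
    """Convert a per-base error rate (a fraction, not a percent) to a Phred QV."""
    if not 0 < error_rate <= 1:
        raise ValueError("error_rate must be in (0, 1]")
    return -10 * math.log10(error_rate)


def qv_to_error_rate(qv):
    """Convert a Phred QV back to a per-base error rate."""
    return 10 ** (-qv / 10)


# Error rates quoted in the text, expressed as fractions.
for label, rate in [
    ("HG00733 MarginPolish+HELEN", 0.00388),
    ("HG00733 Racon+Medaka", 0.00455),
    ("CHM13 chrX MarginPolish+HELEN", 0.00064),
    ("CHM13 chrX Racon+Medaka", 0.00110),
]:
    print(f"{label}: QV = {error_rate_to_qv(rate):.2f}")
```

Values computed from the rounded percentages can differ from the reported QVs in the second decimal place, presumably because the reported scores were derived from unrounded error rates. On this scale, 99.9% identity corresponds to QV = 30.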

Fig. 4. Polishing assembled genomes.

a, Balanced error rates for the four methods on HG00733 and CHM13. b, Row-normalized heatmaps describing the predicted run lengths (x axis) given true run lengths (y axis) for four steps of the pipeline on HG00733. Guppy v.2.3.3 was generated from 3.7 Gb of RLE sequence. Shasta, MarginPolish and HELEN were generated from whole assemblies aligned to their respective truth sequences. c, Error rates for MarginPolish and HELEN on four assemblies. d, Average runtime and cost.

For all assemblies, errors were dominated by indel errors; for example, substitution errors are 3.16 and 2.9 times fewer than indels in the polished HG00733 and CHM13 assemblies, respectively. Many of these errors relate to homopolymer length confusion; Fig. 4b analyzes the homopolymer error rates for various steps of the polishing workflow for HG00733. Each panel shows a heatmap with the true length of the homopolymer run on the y axis and the predicted run length on the x axis, with the color describing the likelihood of predicting each run length given the true length. Note that the dispersion of the diagonal steadily decreases. The vertical streaks at high run lengths in the MarginPolish and HELEN confusion matrix are the result of infrequent numerical and encoding artifacts (see Methods and Supplementary Fig. 5).

Figure 4c and Supplementary Table 23 show the overall error rate after running MarginPolish and HELEN on HG00733 assemblies generated by different assembly tools, demonstrating that they can be usefully employed to polish assemblies generated by other tools.

To investigate the benefit of using short reads for further polishing, we polished chromosome X of the CHM13 Shasta assembly after MarginPolish and HELEN using 10X Chromium reads with the Pilon polisher43. This led to a roughly twofold reduction in base errors, increasing the QV from roughly 32 (after polishing with MarginPolish and HELEN) to around 36 (Supplementary Table 24). Notably, attempting to use Pilon polishing on the raw Shasta assembly resulted in much poorer results (QV = 24).

Figure 4d and Supplementary Table 25 describe average runtimes and costs for the methods (see Methods). MarginPolish and HELEN cost a combined US$107 and took 29 h of wall-clock time on average, per sample. In comparison Racon and Medaka cost US$621 and took 142 wall-clock hours on average, per sample. To assess single-region performance we additionally ran the two polishing workflows on a single contig (roughly 1% of the assembly size); MarginPolish/HELEN was three times faster than Racon (1×)/Medaka (Supplementary Table 26).

Long-read assemblies contain nearly all human coding genes

To evaluate the accuracy and completeness of an assembled transcriptome we ran the Comparative Annotation Toolkit44, which can annotate a genome assembly using the human GENCODE45 reference human gene set (Table 1, Methods and Supplementary Tables 27–30).

Table 1. CAT transcriptome analysis of human protein coding genes for HG00733 and CHM13

| Sample | Assembler | Polisher | Genes found (%) | Missing genes | Complete genes (%) |
|---|---|---|---|---|---|
| HG00733 | Canu | HELEN | 99.741 | 51 | 67.038 |
| HG00733 | Flye | HELEN | 99.405 | 117 | 71.768 |
| HG00733 | Wtdbg2 | HELEN | 97.429 | 506 | 66.143 |
| HG00733 | Shasta | HELEN | 99.228 | 152 | 68.069 |
| HG00733 | Shasta | Medaka | 99.141 | 169 | 66.27 |
| CHM13 | Shasta | HELEN | 99.111 | 175 | 74.202 |
| CHM13 | Shasta | Medaka | 99.035 | 190 | 73.836 |

For the HG00733 and CHM13 samples we found that Shasta assemblies polished with MarginPolish and HELEN contained nearly all human protein coding genes, having, respectively, an identified ortholog for 99.23% (152 missing) and 99.11% (175 missing) of these genes. Using the restrictive definition that a coding gene is complete in the assembly only if it is assembled across its full length, contains no frameshifts and retains the original intron–exon structure, we found that 68.07% and 74.20% of genes, respectively, were complete in the HG00733 and CHM13 assemblies. Polishing the Shasta assemblies alternatively with the Racon–Medaka pipeline achieved similar but uniformly less complete results.

Comparing the MarginPolish and HELEN polished assemblies for HG00733 generated with Flye, Canu and Wtdbg2 to the similarly polished Shasta assembly we found that Canu had the fewest missing genes (just 51), but that Flye, followed by Shasta, had the most complete genes. Wtdbg2 was clearly an outlier, with notably larger numbers of missing genes (506). For comparison we additionally ran BUSCO46 using the eukaryote set of orthologs on each assembly, a smaller set of 303 expected single-copy genes (Supplementary Tables 31 and 32). We find comparable performance between the assemblies, with small differences largely recapitulating the pattern observed by the larger CAT analysis.

Comparison of Shasta and PacBio HiFi assemblies

We compared the CHM13 Shasta assembly polished using MarginPolish and HELEN with the recently released Canu assembly of CHM13 using PacBio HiFi reads47. HiFi reads are based on circular consensus sequencing technology that delivers substantially lower error rates. The HiFi assembly has a lower NG50 (29.0 versus 41.0 megabase (Mb)) than the Shasta assembly (Supplementary Fig. 6). Consistent with our other comparisons to Canu, the Shasta assembly also contains a much lower disagreement count relative to GRCh38 (1,073) than the Canu-based HiFi assembly (8,469), a difference that remains after looking only at disagreements within the intersection of the assemblies (380 versus 594). The assemblies have an almost equal NGAx (~20.0 Mb), but the Shasta assembly covers a smaller fraction of GRCh38 (95.28 versus 97.03%) (Supplementary Fig. 7 and Supplementary Table 33). Predictably, the HiFi assembly has a higher QV than the polished Shasta assembly (QV = 41 versus QV = 32).

Scaffolding to near chromosome scale

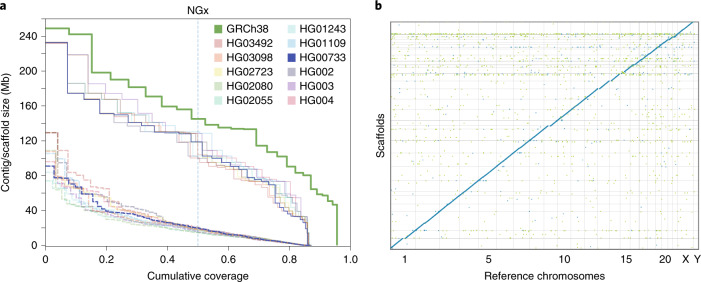

To achieve chromosome length sequences, we scaffolded all of the polished Shasta assemblies with HiC proximity-ligation data using HiRise48 (see Methods and Fig. 5a). On average, 891 joins were made per assembly. This increased the scaffold NG50 values to near chromosome scale, with a median of 129.96 Mb, as shown in Fig. 5a, with additional assembly metrics in Supplementary Table 36. Proximity-ligation data can also be used to detect misjoins in assemblies. In all 11 Shasta assemblies, no breaks to existing contigs were made while running HiRise to detect potential misjoins. Aligning HG00733 to GRCh38, we find no notable rearrangements and all chromosomes are spanned by one or a few contigs (Fig. 5b), with the exception of chrY, which is absent because HG00733 is female. Similar results were observed for HG002 (Supplementary Fig. 8).

Fig. 5. HiRise scaffolding for 11 genomes.

a, NGx plots for each of the 11 genomes, before (dashed) and after (solid) scaffolding with HiC sequencing reads. GRCh38 minus alternate sequences is shown for comparison. b, Dot plot showing alignments between the scaffolded HG00733 Shasta assembly and GRCh38 chromosome scaffolds. Blue indicates forward aligning segments, green indicates reverse, with both indicating unique alignments.

Discussion

With sequencing efficiency for long reads improving, computational considerations are paramount in determining overall time, cost and quality. Simply put, large genome de novo assembly will not become ubiquitous if the requirements are weeks of assembly time on large computational clusters. We present three new methods that provide a pipeline for the rapid assembly of long nanopore reads. Shasta can produce a draft human assembly in around 6 h and US$70 using widely available commercial cloud nodes. This cost and turnaround time is much more amenable to rapid prototyping and parameter exploration than even the fastest competing method (Wtdbg2), which was on average 7.5 times slower and 3.7 times more expensive.

The combination of the Shasta assembler and nanopore long-read sequences produced using the PromethION sequencer realizes substantial improvements in throughput; we completed all 2.3 Tb of nanopore data collection in 9 d, running up to 15 flow cells simultaneously.

In terms of assembly, we obtained an average NG50 of 18.5 Mb for the 11 genomes, roughly three times higher than for the first nanopore-sequenced human genome, and comparable with the best achieved by alternative technologies13,49. We found the addition of HiC sequencing for scaffolding necessary to achieve chromosome scale assemblies. However, our results are consistent with previous modeling based on the size and distribution of large repeats in the human genome, which predicts that an assembly based on 30 times coverage of such reads of >100 kb would approach the continuity of complete human chromosomes18,32.

Relative to alternate long-read and linked-read sequencing, the read identity of nanopore reads is lower but is improving over time12,18. We observe modal read identity of 92.5%, resulting in better than QV = 30 base quality for haploid polished assembly from nanopore reads alone. The accurate resolution of highly repetitive and recently duplicated sequence will depend on long-read polishing, because short reads are generally not uniquely mappable. Further polishing using complementary data types, including PacBio HiFi reads49 and 10X Chromium50, will likely prove useful in achieving QV 40+ assemblies.

Shasta produces a notably more conservative assembly than competing tools, trading some contiguity and total produced sequence for greater correctness. For example, the ratio of total length to aligned length is relatively constant for all other assemblers, where approximately 1.6% of sequence produced does not align across the three evaluated samples. In contrast, on average just 0.38% of Shasta’s sequence does not align to GRCh38, representing a more than fourfold reduction in unaligned sequence. Additionally, we note substantially lower disagreement counts, resulting in much smaller differences between the raw NGx and corrected NGAx values. Shasta also produces substantially more base-level accurate assemblies than the other competing tools. MarginPolish and HELEN provide a consistent improvement of base quality over all tested assemblers, with more accurate results than the current state-of-the-art long-read polishing workflow.

We assembled and compared haploid, trio-binned and diploid samples. Trio-binned samples show great promise for haplotype assembly, for example contiguously assembling an MHC haplogroup, but the halving of effective coverage resulted in ultimately less contiguous human assemblies with higher base-error rates than the related, chimeric diploid assembly. This can potentially be rectified by merging the haplotype assemblies to produce a pseudo-haplotype or increasing sequencing coverage. Indeed, the improvements in contiguity and base accuracy in CHM13 over the diploid samples illustrate what can be achieved with higher coverage of a haploid sample. We believe that one of the most promising directions for the assembly of diploid samples is the integration of phasing into the assembly algorithm itself, as pioneered by others17,51,52. We anticipate that the new tools we have described here are suited for this next step: the Shasta framework is well placed for producing phased assemblies over structural variants, MarginPolish is built off of infrastructure designed to phase long reads2 and the HELEN model could be improved to include haplotagged features for the identification of heterozygous sites.

Connected together, the tools we present enabled a polished assembly to be produced in around 24 h and for roughly US$180, against the fastest comparable combination of Wtdbg2, Racon and Medaka that costs 5.3 times more and is 4.3 times slower while producing measurably worse results in terms of disagreements, contiguity and base-level accuracy. Substantial further parallelism of polishing, the main time drain in our current pipeline, is easily possible.

We are working toward the goal of having a half-day turnaround of our complete computational pipeline. With real-time basecalling, a DNA-to-de novo assembly could conceivably be achieved in less than 96 h. Such speed would enable screening of human genomes for abnormalities in difficult-to-sequence regions.

Methods

Sample selection

The goal of sample selection was to select a set of individuals that collectively captured the maximum amount of weighted allelic diversity53. To do this, we created a list of all low-passage lymphoblastoid cell lines that are part of a trio available from the 1,000 Genomes Project collection54 (we selected trios to allow future addition of pedigree information, and low-passage lines to minimize acquired variation). In some cases, we considered the union of parental alleles in the trios due to not having genotypes for the offspring. Let a weighted allele be a variant allele and its frequency in the 1,000 Genomes Project Phase 3 variant call set (VCF). We selected the first sample from our list that contained the largest sum of frequencies of weighted alleles, reasoning that this sample should have the largest expected fraction of variant alleles in common with any other randomly chosen sample. We then removed the variant alleles of this first sample from the set of variant alleles in consideration and repeated the process to pick the second sample, continuing in this way until we had selected seven samples. This procedure greedily and heuristically maximizes the sum of weighted allele frequencies in our chosen sample subset. We also added the three Ashkenazim Trio samples and the Puerto Rican individual (HG00733). These four samples were added for the purposes of comparison with other studies that are using them23.
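The greedy procedure described above can be illustrated with a minimal sketch. The function name, the input layout (a mapping from sample to its set of variant alleles, and a mapping from allele to frequency) and the tie-breaking rule are assumptions made for illustration; this is not the code used for the study.

```python
def greedy_select(sample_alleles, allele_freq, n_select):
    """Greedily pick samples by summed frequency of alleles not yet covered.

    sample_alleles: dict mapping sample name -> set of variant alleles (hypothetical layout)
    allele_freq:    dict mapping allele -> population frequency
    n_select:       number of samples to choose
    """
    remaining = dict(allele_freq)      # alleles still under consideration
    chosen = []
    for _ in range(n_select):
        best, best_score = None, -1.0
        for sample, alleles in sample_alleles.items():
            if sample in chosen:
                continue
            score = sum(remaining.get(a, 0.0) for a in alleles)
            if score > best_score:     # strict comparison: first sample in the list wins ties
                best, best_score = sample, score
        if best is None:               # fewer samples than requested
            break
        chosen.append(best)
        for a in sample_alleles[best]:
            remaining.pop(a, None)     # remove covered alleles before the next round
    return chosen


samples = {"S1": {"a", "b"}, "S2": {"b", "c"}, "S3": {"c", "d"}}
freqs = {"a": 0.5, "b": 0.4, "c": 0.3, "d": 0.2}
print(greedy_select(samples, freqs, 2))
```

In this toy input, S2 scores higher than S3 in the first round, but once the alleles of S1 are removed S3 contributes more new weighted alleles, which is the behavior the greedy heuristic is meant to capture.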

Cell culture

Lymphoblastoid cultures for each individual were obtained from the Coriell Institute Cell Repository (coriell.org) and were cultured in RPMI 1640 supplemented with 15% fetal bovine serum (Life Technologies). The cells underwent a total of six passages (p3 + 3). After expansion, cells were collected by pelleting at 300g for 5 min. Cells were resuspended in 10 ml of PBS and a cell count was taken using a Bio-Rad TC20 cell counter. Cells were aliquoted into 50-ml conical tubes containing 50 million cells each, pelleted as above and washed with 10 ml of PBS before a final pelleting, after which the PBS was removed and the samples were flash frozen on dry ice and stored at −80 °C until ready for further processing.

DNA extraction and size selection

We extracted high-molecular weight DNA using the Qiagen Puregene kit. We followed the standard protocol with some modifications. Briefly, we lysed the cells by adding 3 ml of Cell Lysis Solution per 10 million cells, followed by incubation at 37 °C for up to 1 h. We performed mild shaking intermittently by hand and avoided vortexing. Once clear, we split the lysate into 3-ml aliquots and added 1 ml of protein precipitation solution to each of the tubes. This was followed by pulse vortexing three times for 5 s each time. We next spun this at 2,000g for 10 min. We added the supernatant from each tube to a new tube containing 3 ml of isopropanol, followed by 50 inversions. The high-molecular weight DNA precipitated and formed a dense thread-like jelly. We used a disposable inoculation loop to extract the DNA precipitate. We then dipped the DNA precipitate, while it was on the loop, into ice-cold 70% ethanol. After this, the DNA precipitate was added to a new tube containing 50–250 µl 1× TE buffer. The tubes were heated at 50 °C for 2 h and then left at room temperature overnight to allow resuspension of the DNA. The DNA was then quantified using Qubit and NanoDrop.

We used the Circulomics Short-Read Eliminator kit to deplete short fragments from the DNA preparation. We size selected 10 µg of DNA using the Circulomics recommended protocol for each round of size selection.

Nanopore sequencing

We used the SQK-LSK109 kit and its recommended protocol for making sequencing libraries. We used 1 µg of input DNA per library. We prepared libraries at a 3× scale since we performed a nuclease flush on every flow cell, followed by the addition of a fresh library.

We used the standard PromethION scripts for sequencing. At around 24 h, we performed a nuclease flush using the ONT recommended protocol. We then reprimed the flow cell, and added a fresh library corresponding to the same sample. After the first nuclease flush, we restarted the run setting the voltage to −190 mV. We repeated the nuclease flush after another around 24 h (that is, around 48 h into sequencing), reprimed the flow cell, added a fresh library and restarted the run setting the run voltage to −200 mV.

We performed basecalling using Guppy v.2.3.5 on the PromethION tower using the graphics processing units (GPUs). We used the MinION DNA flipflop model (dna_r9.4.1_450bps_flipflop.cfg), as recommended by ONT.

Chromatin crosslinking and extraction from human cell lines

We thawed the frozen cell pellets and washed them twice with cold PBS before resuspension in the same buffer. We transferred aliquots containing five million cells by volume from these suspensions to separate microcentrifuge tubes before chromatin crosslinking by addition of paraformaldehyde (EMS catalog no. 15714) to a final concentration of 1%. We briefly vortexed the samples and allowed them to incubate at room temperature for 15 min. We pelleted the crosslinked cells and washed them twice with cold PBS before thoroughly resuspending in lysis buffer (50 mM Tris-HCl, 50 mM NaCl, 1 mM EDTA, 1% SDS) to extract crosslinked chromatin.

The HiC method

We bound the crosslinked chromatin samples to SPRI beads, washed three times with SPRI wash buffer (10 mM Tris-HCl, 50 mM NaCl, 0.05% Tween-20) and digested by DpnII (20 U, NEB catalog no. R0543S) for 1 h at 37 °C in an agitating thermal mixer. We washed the bead-bound samples again before incorporation of Biotin-11-dCTP (ChemCyte catalog no. CC-6002–1) by DNA Polymerase I, Klenow Fragment (10 U, NEB catalog no. M0210L) for 30 min at 25 °C with shaking. Following another wash, we carried out blunt-end ligation by T4 DNA Ligase (4,000 U, NEB Catalog No. M0202T) with shaking overnight at 16 °C. We reversed the chromatin crosslinks, digested the proteins and eluted the samples by incubation in crosslink reversal buffer (5 mM CaCl2, 50 mM Tris-HCl, 8% SDS) with Proteinase K (30 µg, Qiagen catalog no. 19133) for 15 min at 55 °C followed by 45 min at 68 °C.

Sonication and Illumina library generation with biotin enrichment

After SPRI bead purification of the crosslink-reversed samples, we transferred DNA from each to Covaris microTUBE AFA Fiber Snap-Cap tubes (Covaris catalog no. 520045) and sonicated to an average length of 400 ± 85 base pairs using a Covaris ME220 Focused-Ultrasonicator. Temperature was held stably at 6 °C and treatment lasted 65 s per sample with a peak power of 50 W, 10% duty factor and 200 cycles per burst. The average fragment length and distribution of sheared DNA was determined by capillary electrophoresis using an Agilent FragmentAnalyzer 5200 and HS NGS Fragment Kit (Agilent catalog no. DNF-474–0500). We ran sheared DNA samples twice through the NEBNext Ultra II DNA Library Prep Kit for Illumina (catalog no. E7645S) End Preparation and Adapter Ligation steps with custom Y adapters to produce library preparation replicates. We purified ligation products via SPRI beads before Biotin enrichment using Dynabeads MyOne Streptavidin C1 beads (ThermoFisher catalog no. 65002).

We performed indexing PCR on streptavidin beads using KAPA HiFi HotStart ReadyMix (catalog no. KK2602) and PCR products were isolated by SPRI bead purification. We quantified the libraries by Qubit 4 fluorometer and FragmentAnalyzer 5200 HS NGS Fragment Kit (Agilent catalog no. DNF-474-0500) before pooling for sequencing on an Illumina HiSeq X at Fulgent Genetics.

Analysis methods

Read alignment identities

To generate the identity violin plots (Fig. 1c,e) we aligned all the reads for each sample and flow cell to GRCh38 using minimap2 (ref. 24) with the map-ont preset. Using a custom script get_summary_stats.py in the repository https://github.com/rlorigro/nanopore_assembly_and_polishing_assessment, we parsed the alignment for each read and enumerated the number of matched (N=), mismatched (NX), inserted (NI) and deleted (ND) bases. From this, we calculated alignment identity as N=/(N= + NX + NI + ND). These identities were aggregated over samples and plotted using the seaborn library with the script plot_summary_stats.py in the same repository. For Fig. 1e, we selected reads from HG00733 flowcell1 aligned to GRCh38 chr1. The ‘Standard’ identities are taken from the original reads/alignments. To generate identity data for the ‘RLE’ portion, we extracted the reads above, run-length encoded the reads and the chr1 reference, and followed the alignment and identity calculation process described before. Sequences were run-length encoded using a simple script (https://github.com/rlorigro/runlength_analysis/blob/master/runlength_encode_fasta.py) and aligned with minimap2 using the map-ont preset and -k 19.
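As an illustration of the identity formula, the following minimal sketch computes N=/(N= + NX + NI + ND) from a single CIGAR string. It is not the get_summary_stats.py script; the function name is hypothetical, and it assumes the alignment was written with extended CIGAR operators (= and X), as minimap2 does with --eqx.

```python
import re

# One CIGAR element: a length followed by a SAM operator
CIGAR_OP = re.compile(r"(\d+)([MIDNSHP=X])")


def alignment_identity(cigar):
    """Return N= / (N= + NX + NI + ND) for one alignment."""
    counts = {"=": 0, "X": 0, "I": 0, "D": 0}
    for length, op in CIGAR_OP.findall(cigar):
        if op == "M":
            # M does not distinguish matches from mismatches
            raise ValueError("CIGAR must use =/X operators")
        if op in counts:               # clipping (S, H) and N, P are ignored
            counts[op] += int(length)
    total = sum(counts.values())
    return counts["="] / total if total else 0.0


# 90 matches, 5 mismatches, 3 inserted and 2 deleted bases; soft clip ignored
print(alignment_identity("5S90=5X3I2D"))
```

Clipped bases are excluded from the denominator here, which is an assumption of this sketch; the source formula only names the four counted categories.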

Base-level error-rate analysis with Pomoxis

We analyzed the base-level error rates of the assemblies using the assess_assembly tool of the Pomoxis toolkit (https://github.com/nanoporetech/pomoxis). The assess_assembly tool is tailored to compute the error rates in a given assembly compared to a truth assembly. It reports an identity error rate, insertion error rate, deletion error rate and an overall error rate. The identity error rate indicates the number of erroneous substitutions, the insertion error rate is the number of incorrect insertions and the deletion error rate is the number of deleted bases averaged over the total aligned length of the assembly to the truth. The overall error rate is the sum of the identity, insertion and deletion error rates. For the purpose of simplification, we used the indel error rate, which is the sum of insertion and deletion error rates.

The assess_assembly script takes an input assembly and a reference assembly to compare against. The assessment tool chunks the reference assembly to 1-kb regions and aligns it back to the input assembly to get a trimmed reference. Next, the input is aligned to the trimmed reference sequence with the same alignment parameters to get an input assembly to the reference assembly alignment. The total aligned length is the sum of the lengths of the trimmed reference segments where the input assembly has an alignment. The total aligned length is used as the denominator while averaging each of the error categories to limit the assessment in only correctly assembled regions. Then the tool uses stats_from_bam, which counts the number of mismatch bases, insert bases and delete bases at each of the aligned segments, and reports the error rate by averaging them over the total aligned length.
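The averaging described above can be sketched as follows. This is not Pomoxis code: the function names are hypothetical, and the conversion to a Phred-scaled quality value uses the standard definition QV = −10 log10(error rate), which is how an error rate of 0.1% corresponds to QV 30.

```python
import math


def error_rates(mismatches, insertions, deletions, aligned_length):
    """Per-base error rates averaged over the total aligned length."""
    identity = mismatches / aligned_length   # substitution errors
    insertion = insertions / aligned_length
    deletion = deletions / aligned_length
    return {
        "identity": identity,
        "insertion": insertion,
        "deletion": deletion,
        "indel": insertion + deletion,
        "overall": identity + insertion + deletion,
    }


def phred_qv(error_rate):
    """Phred-scaled quality value for a per-base error rate."""
    return -10.0 * math.log10(error_rate)


rates = error_rates(mismatches=20, insertions=30, deletions=50,
                    aligned_length=100_000)
print(rates["indel"], rates["overall"], phred_qv(rates["overall"]))
```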

Truth assemblies for base-level error-rate analysis

We used HG002, HG00733 and CHM13 for base-level error-rate assessment of the assembler and the polisher. These three samples have high-quality assemblies publicly available, which are used as the ground truth for comparison. Two of the samples, HG002 and HG00733, are diploid; hence, we picked one of the two possible haplotypes as the truth. The reported error rates of HG002 and HG00733 include some errors arising due to the zygosity of the samples. The complete hydatidiform mole sample CHM13 is a haploid human genome that is used to assess the applicability of the tools on haploid samples. We have gathered and uploaded all the files we used for assessment in one place at https://console.cloud.google.com/storage/browser/kishwar-helen/truth_assemblies/.

To generate the HG002 truth assembly, we gathered the publicly available GIAB high-confidence variant set (VCF) against the GRCh38 reference sequence. Then we used bedtools to create an assembly (FASTA) file from the GRCh38 reference and the high-confidence variant set. This process produced one file for each of the two haplotypes, and we picked one randomly as the truth. All diploid HG002 assemblies are compared against this one chosen assembly. GIAB also provides a bed file annotating high-confidence regions where the called variants are highly precise and sensitive. We used this bed file with assess_assembly to ensure that we compare the assemblies only in the high-confidence regions.

The HG00733 truth is from the publicly available phased PacBio high-quality assembly of this sample55. We picked phase0 as the truth assembly and acquired it from the National Center for Biotechnology Information under accession GCA_003634895.1. We note that the assembly is phased but not haplotyped, such that portions of phase0 will include sequences from both parental haplotypes, and it is therefore not suitable for trio-binned analyses. Furthermore, not all regions were fully phased; regions with variants that are represented as some combination of both haplotypes will result in lower QV and a less accurate truth.

For CHM13, we used the v.0.6 release of CHM13 assembly by the T2T consortium32. The reported quality of this truth assembly in QV value is 39. One of the attributes of this assembly is chromosome X. As reported by the T2T assembly authors, chromosome X of CHM13 is the most complete (end-to-end) and high-quality assembly of any human chromosome. We obtained the chromosome X assembly, which is the highest-quality truth assembly (QV ≥ 40) we have.

QUAST/BUSCO

To quantify contiguity, we primarily depended on the tool QUAST33. QUAST identifies misassemblies as main rearrangement events in the assembly relative to the reference. We use the phrase ‘disagreement’ in our analysis, as we find ‘misassembly’ inappropriate considering potentially true structural variation. For our assemblies, we quantified all contiguity stats against GRCh38, using autosomes plus chromosomes X and Y only. We report the total disagreements given that their relevant ‘size’ descriptor was greater than 1 kb, as is the default behavior in QUAST. QUAST provides other contiguity statistics in addition to disagreement count, notably total length and total aligned length as reported in Fig. 2d. To determine total aligned length (and unaligned length), QUAST performs collinear chaining on each assembled contig to find the best set of nonoverlapping alignments spanning the contig. This process contributes to QUAST’s disagreement determination. We consider an unaligned sequence to be the portions of the assembled contigs that are not part of this best set of nonoverlapping alignments. All statistics are recorded in Supplementary Table 5. For all QUAST analyses, we used the flags min-identity 80 and fragmented.

QUAST also produces an NGAx plot (similar to an NGx plot) that shows the aligned segment size distribution of the assembly after accounting for disagreements and unalignable regions. The intermediate segment lengths that would allow NGAx plots to be reproduced across multiple samples on the same axis (as is shown in Fig. 2b) are not stored, so we created a GitHub fork of QUAST to store this data during execution at https://github.com/rlorigro/quast. Finally, the assemblies and the output of QUAST were parsed to generate figures with an NGx visualization script, ngx_plot.py, found at http://github.com/rlorigro/nanopore_assembly_and_polishing_assessment/.

For NGx and NGAx plots, a total genome size of 3.23 Gb was used to calculate cumulative coverages.
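For reference, a minimal sketch of the NGx statistic under its usual definition: the length L such that segments of length at least L together cover x% of the assumed genome size. The function name is hypothetical and this is not the ngx_plot.py script. NGAx follows the same calculation with aligned segment lengths in place of contig lengths.

```python
GENOME_SIZE = 3_230_000_000  # 3.23 Gb, the genome size stated in the text


def ngx(lengths, x, genome_size=GENOME_SIZE):
    """Return NGx for a list of contig (or aligned segment) lengths.

    Returns 0 if the sequences do not reach x% of genome_size.
    """
    target = genome_size * x / 100.0
    total = 0
    for length in sorted(lengths, reverse=True):
        total += length
        if total >= target:
            return length
    return 0


# Toy example with a 200-bp "genome"
contigs = [50, 40, 30, 20, 10]
print(ngx(contigs, 50, genome_size=200))
```

Evaluating the function for x from 1 to 100 gives the points of an NGx curve.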

BUSCO46 is a tool that quantifies the number of benchmarking universal single-copy orthologs present in an assembly. We ran BUSCO via the option within QUAST, comparing against the eukaryota set of orthologs from OrthoDB v.9.

Disagreement assessments

To analyze the QUAST-reported disagreements for different regions of the genome, we gathered the known segmental duplication regions8, centromeric regions for GRCh38 and known regions in GRCh38 with structural variation for HG002 from GIAB36. We used a Python script quast_sv_extractor.py that compares each reported disagreement from QUAST to the segmental duplication, SV and centromeric regions and discounts any disagreement that overlaps with these regions. The quast_sv_extractor.py script can be found at https://github.com/kishwarshafin/helen/tree/master/helen/modules/python/helper.
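The discounting step amounts to an interval-overlap filter. The sketch below is not quast_sv_extractor.py; the function names and the data layout (half-open, BED-style coordinates grouped by chromosome) are assumptions for illustration.

```python
def overlaps(a_start, a_end, b_start, b_end):
    """True if half-open intervals [a_start, a_end) and [b_start, b_end) intersect."""
    return a_start < b_end and b_start < a_end


def filter_disagreements(disagreements, masked):
    """Keep disagreements that do not overlap any masked region.

    disagreements: list of (chrom, start, end)
    masked:        dict mapping chrom -> list of (start, end), e.g. merged
                   segmental duplication, SV and centromeric regions
    """
    kept = []
    for chrom, start, end in disagreements:
        regions = masked.get(chrom, [])
        if not any(overlaps(start, end, s, e) for s, e in regions):
            kept.append((chrom, start, end))
    return kept


masked = {"chr1": [(1_000, 2_000), (5_000, 6_000)]}
calls = [("chr1", 1_500, 1_800), ("chr1", 3_000, 3_500), ("chr2", 1_500, 1_800)]
print(filter_disagreements(calls, masked))
```

A linear scan per disagreement is adequate for a sketch; for genome-scale region sets an interval tree or sorted sweep would be the usual choice.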

The segmental duplication regions of GRCh38 defined in the ucsc.collapsed.sorted.segdups file can be downloaded from https://github.com/mvollger/segDupPlots/.

The defined centromeric regions of GRCh38 for all chromosomes are used from the available summary at https://www.ncbi.nlm.nih.gov/grc/human.

For GIAB HG002, known SVs for GRCh38 are available in NIST_SVs_Integration_v0.6/ under ftp://ftp-trace.ncbi.nlm.nih.gov/giab/ftp/data/AshkenazimTrio/analysis/. We used the Tier1+2 bed file available at the GIAB ftp site.

We further exclude SV-enriched regions such as centromeres, secondary constriction regions, acrocentric arms, large tandem repeat arrays, segmental duplications and the Y chromosome, plus 10 kb on either side of them. The file is available at https://github.com/kishwarshafin/helen/blob/master/masked_regions/GRCh38_masked_regions.bed.

To analyze disagreements within the intersection of the assembled sequences we performed the following analysis. For each assembly we used minimap2 and samtools to create regions of unique alignment to GRCh38. For minimap2 we used the options --secondary=no -a --eqx -Y -x asm20 -m 10000 -z 10000,50 -r 50000 --end-bonus=100 -O 5,56 -E 4,1 -B 5. We fed these alignments into samtools view with options -F 260 -u- and then samtools sort with option -m. We then scanned 100 basepair windows of GRCh38 to find windows where all assemblies for the given sample were aligned with a one-to-one mapping to GRCh38. We then report the sum of disagreements across these windows. The script for this analysis can be found at https://github.com/mvollger/consensus_regions.

Trio-binning

We performed trio-binning on two samples, HG002 and HG00733 (ref. 39). For HG00733, we obtained the parental read sample accessions (HG00731, HG00732) from the 1,000 Genome Database. Then we counted k-mers with meryl to create maternal and paternal k-mer sets. Based on manual examination of the k-mer count histograms to determine an appropriate threshold, we excluded k-mers occurring fewer than six times for the maternal set and five times for the paternal set. We subtracted the paternal set from the maternal set to get k-mers unique to the maternal sample and similarly derived the unique paternal k-mer set. Then for each read, we counted the number of occurrences of unique maternal and paternal k-mers and classified the read based on the highest occurrence count. During classification, we avoided normalization by k-mer set size. This resulted in 35.2× maternal, 37.3× paternal and 5.6× unclassified for HG00733. For HG002, we used the Illumina data for the parental samples (HG003, HG004) from the GIAB project23. We counted k-mers using meryl and derived maternal and paternal sets using the same protocol. We filtered k-mers that occur fewer than 25 times in both maternal and paternal sets. The classification resulted in 24× maternal, 23× paternal and 3.5× unknown. The commands and data source are detailed in the Supplementary Information.
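The classification logic can be sketched in a few lines. This is a toy illustration, not the meryl-based pipeline: the function names are hypothetical, k-mers are not canonicalized, and treating ties (including reads with no parent-specific k-mers) as unclassified is an assumption of the sketch.

```python
def kmers(seq, k):
    """Yield all k-mers of a sequence."""
    return (seq[i:i + k] for i in range(len(seq) - k + 1))


def filtered_set(counts, min_count):
    """Drop k-mers seen fewer than min_count times (likely sequencing errors)."""
    return {kmer for kmer, count in counts.items() if count >= min_count}


def classify_read(read, maternal_only, paternal_only, k):
    """Assign a read to the parent with more parent-specific k-mer hits.

    Raw hit counts are compared without normalizing by k-mer set size.
    """
    maternal_hits = sum(1 for km in kmers(read, k) if km in maternal_only)
    paternal_hits = sum(1 for km in kmers(read, k) if km in paternal_only)
    if maternal_hits > paternal_hits:
        return "maternal"
    if paternal_hits > maternal_hits:
        return "paternal"
    return "unclassified"


# Toy k-mer counts with k = 3 and the thresholds quoted for HG00733 (6 and 5)
maternal = filtered_set({"AAA": 10, "CCC": 7, "GGG": 2}, min_count=6)
paternal = filtered_set({"CCC": 9, "TTT": 6}, min_count=5)
maternal_only = maternal - paternal
paternal_only = paternal - maternal
print(classify_read("AAAAC", maternal_only, paternal_only, k=3))
```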

Transcript analysis with comparative annotation toolkit (CAT)

We ran the CAT44 to annotate the polished assemblies to analyze how well Shasta assembles transcripts and genes. Each assembly was individually aligned to the GRCh38 reference assembly using Cactus56 to create the input alignment to CAT. The GENCODE45 V30 annotation was used as the input gene set. CAT was run in the transMap mode only, without Augustus refinement, since the goal was only to evaluate the quality of the projected transcripts. All transcripts on chromosome Y were excluded from the analysis since some samples lacked a Y chromosome.

Run-length confusion matrix

To generate run-length confusion matrices from reads and assemblies, we run-length encoded the assembly/read sequences and reference sequences using a purpose-built Python script, measure_runlength_distribution_from_fasta.py. The script requires a reference and sequence file and can be found in the GitHub repository https://github.com/rlorigro/runlength_analysis/. The run-length encoded nucleotides were aligned to the run-length encoded reference nucleotides with minimap2. As run-length encoded sequences cannot have identical adjacent nucleotides, the number of unique k-mers is diminished with respect to standard sequences. As minimap2 uses empirically determined sizes for seed k-mers, we used a k-mer size of 19 to approximately match the frequency of the default size (15) used by the presets for standard sequences. For alignment of reads and assemblies we used the map-ont and asm20 presets, respectively.

By iterating through the alignments, each match position in the cigar string (mismatched nucleotides are discarded) was used to find a pair of lengths (x, y) such that x is a predicted length and y is the true (reference) length. For each pair, we updated a matrix that contains the frequency of every possible pairing of prediction versus truth, from length 1 to 50 bp. Finally, this matrix is normalized by dividing each element by the sum of the observations for its true run length and plotted as a heatmap. Each value represents the probability of predicting a length for a given true length.
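A minimal sketch of the counting and normalization step, assuming the (predicted, true) length pairs have already been extracted from the alignments. The function name is hypothetical and this is not the measure_runlength_distribution_from_fasta.py script.

```python
def runlength_confusion(pairs, max_length=50):
    """Build a row-normalized run-length confusion matrix.

    pairs: iterable of (predicted_length, true_length)
    Returns probs where probs[true][predicted] is the estimated probability
    of predicting a run length given the true run length.
    Index 0 is unused so that indices equal run lengths.
    """
    size = max_length + 1
    counts = [[0] * size for _ in range(size)]
    for predicted, true in pairs:
        if 1 <= predicted <= max_length and 1 <= true <= max_length:
            counts[true][predicted] += 1
    probs = []
    for row in counts:
        total = sum(row)               # all observations for this true length
        probs.append([c / total if total else 0.0 for c in row])
    return probs


observed = [(3, 3), (3, 3), (2, 3), (4, 3), (1, 1)]
matrix = runlength_confusion(observed)
print(matrix[3][2], matrix[3][3], matrix[3][4])
```

Each row for an observed true length sums to 1, so the heatmap can be read row by row as a conditional distribution.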

Runtime and cost analysis

Our runtime analysis was generated with multiple methods detailing the amount of time the processes took to complete. These methods include the Unix command time and a home-grown resource tracking script, which can be found in the https://github.com/rlorigro/TaskManager repository. We note that the assembly and polishing methods have different resource requirements, and do not all fully use available CPUs, GPUs and memory over the program’s execution. As such, we report runtimes using wall-clock time and the number of CPUs the application was configured to use, but do not convert to CPU hours. Costs reported in the figures are the product of the runtime and AWS instance price. Because portions of some applications do not fully use CPUs, cost could potentially be reduced by running on a smaller instance that would be fully used, and runtime could be reduced by running on a larger instance that can be fully used for some portion of execution. We particularly note the long runtime of Medaka and found that for most of the total runtime, only a single CPU was used. Last, we note that data transfer times are not reported in runtimes. Some of the data required or generated exceeds hundreds of gigabytes, which could be notable in relation to the runtime of the process. Notably, the images generated by MarginPolish and consumed by HELEN were often greater than 500 GB in total.

All recorded runtimes are reported in the supplement. For Shasta, times were recorded to the tenth of the hour. All other runtimes were recorded to the minute. All runtimes reported in figures were run on the AWS cloud platform.

Shasta runtime reported in Fig. 2f was determined by averaging across all 12 samples. Wtdbg2 runtime was determined by summing runtimes for wtdbg2 and wtpoa-cns and averaging across the HG00733, HG002 and CHM13 runs. Flye runtime was determined by averaging across the HG00733, HG002 and CHM13 runs, which were performed on multiple instance types (x1.16xlarge and x1.32xlarge). We calculated the total cost and runtime for each run and averaged these amounts; no attempt to convert these to a single instance type was performed. Precise Canu runtimes are not reported, as they were run on the National Institutes of Health (NIH) Biowulf cluster. Each run was restricted to nodes with 28 cores (56 hyperthreads) (2×2680v4 or 2×2695v3 Intel CPUs) and 248 GB of RAM or 16 cores (32 hyperthreads) (2×2650v2 Intel CPUs) and 121 GB of RAM. Full details of the cluster are available at https://hpc.nih.gov. The runs took between 219,000 and 223,000 CPU hours (4–5 wall-clock days). No single job used more than 80 GB of RAM/12 CPUs. We find the r5.4xlarge (US$1.008 per hour) to be the cheapest AWS instance type possible considering this resource usage, which puts estimated cost between US$18,000 and US$19,000 per genome.
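The text does not spell out the arithmetic behind the Canu cost estimate. The sketch below shows one reading that reproduces the quoted range, under the assumption that each job of at most 12 CPUs occupies a whole r5.4xlarge instance for its duration, so that instance hours equal CPU hours divided by 12. The function name is hypothetical.

```python
def estimated_cost(cpu_hours, cpus_per_job, price_per_hour):
    """Estimate cloud cost when each job occupies one whole instance.

    Assumption: instance hours = CPU hours / CPUs used per job.
    """
    instance_hours = cpu_hours / cpus_per_job
    return instance_hours * price_per_hour


R5_4XLARGE_PRICE = 1.008  # US$ per hour, as quoted in the text

for cpu_hours in (219_000, 223_000):
    cost = estimated_cost(cpu_hours, cpus_per_job=12,
                          price_per_hour=R5_4XLARGE_PRICE)
    print(f"{cpu_hours} CPU hours -> US${cost:,.0f}")
```

For the other tools, the reported cost is simply wall-clock runtime multiplied by the hourly instance price, which corresponds to calling the same function with cpus_per_job=1 and wall-clock hours in place of CPU hours.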

For MarginPolish, we recorded all runtimes, but used various thread counts that did not always fully use the instance’s CPUs. The runtime reported in the figure was generated by averaging across eight of the 12 samples, selecting runs that used 70 CPUs (of the 72 available on the instance). The samples this was true for were GM24385, HG03492, HG01109, HG02055, HG02080, HG01243, HG03098 and CHM13. Runtimes for read alignments used by MarginPolish were not recorded. Because MarginPolish requires an aligned BAM, we found it unfair to not report this time in the figure as it is a required step in the workflows for MarginPolish, Racon and Medaka. As a proxy for the unrecorded read alignment time used to generate BAMs for MarginPolish, we added the average alignment time recorded while aligning reads in preparation for Medaka runs. We note that the alignment for MarginPolish was done by piping output from minimap2 directly into samtools sort, and piping this into samtools view to filter for primary and supplementary reads. Alignment for Medaka was done using mini_align, which is a wrapper for minimap2 bundled in Medaka that simultaneously sorts output.

Reported HELEN runs were performed on GCP except for HG03098, but on instances that match the AWS instance type p2.8xlarge in both CPU count and GPU (NVIDIA Tesla P100). As such, the differences in runtime between the platforms should be negligible, and we have calculated cost based on the AWS instance price for consistency. The reported runtime is the sum of time taken by call_consensus.py and stitch.py. Unannotated runs were performed on UCSC hardware. Racon runtimes reflect the sum of four series of read alignment and polishing. The time reported in the figure is the average of the runtime of this process run on the Shasta assembly for HG00733, HG002 and CHM13.

Medaka runtime was determined by averaging across the HG00733, HG002 and CHM13 runs after running Racon four times on the Shasta assembly. We again note that this application in particular did not fully use the CPUs for most of the execution, and in the case of HG00733 appeared to hang and was restarted. The plot includes the average runtime from read alignment using mini_align; this is separated in the tables in the Supplementary Information. We ran Medaka on an x1.16xlarge instance, which had more memory than was necessary. When determining cost, we chose to price the run based on the cheapest AWS instance type that we could have used accounting for configured CPU count and peak memory usage (c5n.18xlarge). This instance could have supported eight more concurrent threads, but as the application did not fully use the CPUs, we find this to be a fair representation.

Assembly of MHC

Each of the eight GRCh38 MHC haplotypes was aligned using minimap2 (with preset asm20) to whole-genome assemblies to identify spanning contigs. These contigs were then extracted from the genomic assembly and used for alignment visualization. For dot plots, Nucmer 4.057 was used to align each assembler’s spanning contigs to the standard chr6:28000000-34000000 MHC region, which includes 500-kb flanks. Output from this alignment was parsed with Dot58, which has a web-based graphical user interface for visualization. All defaults were used in both generating the input files and drawing the figures. Coverage plots were generated from reads aligned to chr6, using a script, find_coverage.py, located at http://github.com/rlorigro/nanopore_assembly_and_polishing_assessment/.

The best matching alt haplotype (to Shasta, Canu and Flye) was chosen as a reference haplotype for quantitative analysis. Haplotypes with the fewest supplementary alignments across assemblers were top candidates for QUAST analysis. Candidates with comparable alignments were differentiated by identity. The highest contiguity/identity MHC haplotype was then analyzed with QUAST using --min-identity 80. For all MHC analyses regarding Flye, the unpolished output was used.

BAC analysis

At a high level, the BAC analysis was performed by aligning BACs to each assembly, quantifying their resolution and calculating identity statistics on those that were fully resolved.

We obtained 341 BACs for CHM13 (refs. 59,60) and 179 for HG00733 (ref. 8) (complete BAC clones of VMRC62), which had been selected primarily by targeting complex or highly duplicated regions. We performed the following analysis on the full set of BACs (for CHM13 and HG00733), and a subset selected to fall within unique regions of the genome. To determine this subset, we selected all BACs that are greater than 10 kb away from any segmental duplication, resulting in 16 of HG00733 and 31 of CHM13. This subset represents simple regions of the genome that we would expect all assemblers to resolve.

For the analysis, BACs were aligned to each assembly with the command minimap2 --secondary=no -t 16 -ax asm20 assembly.fasta bac.fasta > assembly.sam and converted to a PAF-like format that describes aligned regions of the BACs and assemblies. Using this, we calculated two metrics describing how resolved each BAC was: closed is defined as having 99.5% of the BAC aligned to a single locus in the assembly; attempted is defined as having a set of alignments covering ≥95% of the BAC to a single assembly contig where all alignments are at least 1 kb away from the contig end. If such a set exists, it counts as attempted. We further calculated median and mean identities (using the alignment identity metric described above) of the closed BACs. These definitions were created such that a BAC that is counted as attempted but not closed likely reflects a disagreement. The code for this can be found at https://github.com/skoren/bacValidation.

Short-read polishing

Chromosome X of the CHM13 assembly (assembled first with Shasta, then polished with MarginPolish and HELEN) was obtained by aligning the assembly to GRCh38 (using minimap2 with the -x asm20 flag). The 10X Chromium reads were downloaded from the Nanopore Whole Genome Sequencing Consortium (https://github.com/nanopore-wgs-consortium/CHM13/).

The 10X reads were from a NovaSeq instrument at a coverage of approximately 50×. The reads corresponding to chromosome X were extracted by aligning the entire read set to the whole CHM13 assembly using the 10X Genomics Long Ranger Align pipeline (v.2.2), then extracting those aligned to the chromosome X contigs with samtools. Pilon43 was run iteratively for a total of three rounds, in each round aligning the reads to the current assembly with Long Ranger and then running Pilon with default parameters.

Structural variant assessment

To create an assembly graph in GFA format, Shasta v.0.1.0 was run using the HG002 sequence data with --MarkerGraph.simplifyMaxLength 10 to reduce bubble removal and --MarkerGraph.highCoverageThreshold 10 to reduce the removal of edges normally removed by the transitive reduction step.

To detect structural variation inside the assembly graphs produced by Shasta, we extracted unitigs from the graph and aligned them back to the linear reference. Unitigs are walks through the assembly graph that do not traverse any node end that includes a bifurcation. We first processed the Shasta assembly graphs with gimbricate (https://github.com/ekg/gimbricate). We used gimbricate to recompute overlaps in nonRLE space and to remove nodes in the graph only supported by a single sequencing read.

To remove overlaps from the graph edges, we then ‘bluntified’ the resulting GFAs with vg find -F (https://github.com/vgteam/vg). We then applied odgi unitig (https://github.com/vgteam/odgi) to extract unitigs from the graph, with the condition that the starting node in the unitig generation must be at least 100 bp long. To ensure that the unitigs could be mapped back to the linear reference, we appended a random walk of 25 kb after the natural end of each unitig, with the expectation that even short unitigs would yield around 50 kb of mappable sequence. Finally, we mapped the unitigs to GRCh38 with minimap2 with a bandwidth of 25 kb (-r25000) and called variants in the alignments using paftools.js from the minimap2 distribution. We implemented the process in a single script that produces variant calls from the unitig set of a given graph: https://github.com/ekg/shastaGFA/blob/master/shastaGFAtoVCF_unitig_paftools.sh.

The extracted variants were compared to the structural variants from the GIAB benchmark in HG002 (v.0.6, ref. 36). Precision, recall and F1 scores were computed on variants not overlapping simple repeats and within the benchmark’s high-confidence regions. Deletions in the assembly and the GIAB benchmark were matched if they had at least 50% reciprocal overlap. Insertions were matched if they were located less than 100 bp from each other and were similar in size (50% reciprocal similarity).
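
The matching criteria can be written compactly. The following Python sketch is illustrative only: the function names, the half-open coordinate convention and the interpretation of "50% reciprocal similarity" as a min/max size ratio of at least 0.5 are assumptions, not the code used in the evaluation.

```python
def reciprocal_overlap(start_a, end_a, start_b, end_b):
    """Smallest fraction of either interval covered by their intersection.

    Intervals are half-open [start, end) in reference coordinates (assumed).
    """
    intersection = max(0, min(end_a, end_b) - max(start_a, start_b))
    return min(intersection / (end_a - start_a), intersection / (end_b - start_b))


def deletions_match(del_a, del_b, min_overlap=0.5):
    """Deletions match if they have at least 50% reciprocal overlap."""
    return reciprocal_overlap(*del_a, *del_b) >= min_overlap


def insertions_match(pos_a, len_a, pos_b, len_b,
                     max_distance=100, min_similarity=0.5):
    """Insertions match if they are closer than 100 bp and similar in size."""
    close_enough = abs(pos_a - pos_b) < max_distance
    # Assumed definition of size similarity: shorter length / longer length.
    size_similarity = min(len_a, len_b) / max(len_a, len_b)
    return close_enough and size_similarity >= min_similarity
```

For example, deletions spanning (100, 200) and (150, 250) share 50 bp, which is exactly half of each, so they are matched under this sketch.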

Shasta

The following describes Shasta v.0.1.0 (https://github.com/chanzuckerberg/shasta/releases/tag/0.1.0), which was used throughout our analysis. All runs were done on an AWS x1.32xlarge instance (1,952 GB memory, 128 virtual processors). The runs used the Shasta recommended options for best performance (--memoryMode filesystem --memoryBacking 2M). Rather than using the distributed version of the release, the source code was rebuilt locally for best performance, as recommended by the Shasta documentation.

RLE of input reads

Shasta represents input reads using RLE. The sequence of each input read is represented as a sequence of bases, each with a repeat count that says how many times each of the bases is repeated. Such a representation has previously been used in biological sequence analysis24–26.

For example, the read

CGATTTAAGTTA

is represented as follows using RLE:

CGATAGTA

11132121

Using RLE makes the assembly process less sensitive to errors in the length of homopolymer runs, which are the most common type of errors in Oxford Nanopore reads. For example, consider these two reads:

CGATTTAAGTTA

CGATTAAGGGTTA

Using their raw representation, these reads can be aligned like this:

CGATTTAAG––TTA

CGATT–AAGGGTTA

Aligning the second read to the first required a deletion and two insertions. But in RLE, the two reads become:

CGATAGTA

11132121

CGATAGTA

11122321

The sequence portions are now identical and can be aligned trivially and exactly, without any insertions or deletions:

CGATAGTA

CGATAGTA

The differences between the two reads only appear in the repeat counts:

11132121

11122321

The Shasta assembler uses 1 byte to represent repeat counts, and as a result it only represents repeat counts between 1 and 255. If a read contains a run of more than 255 consecutive identical bases, it is discarded on input. In the data we have analyzed so far, such reads are extremely rare.
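
The encoding described in this section can be sketched in a few lines of Python. This is a minimal illustration, not Shasta's implementation (which is written in C++); the function name and the choice to return None for a discarded read are assumptions made for the example.

```python
def run_length_encode(read, max_count=255):
    """Return (bases, counts) for a read, or None if any run exceeds max_count."""
    bases = []
    counts = []
    for base in read:
        if bases and bases[-1] == base:
            # Same base as the previous one: extend the current run.
            counts[-1] += 1
            if counts[-1] > max_count:
                return None  # run too long to store in one byte; read is discarded
        else:
            # New base: start a new run.
            bases.append(base)
            counts.append(1)
    return "".join(bases), counts


for read in ("CGATTTAAGTTA", "CGATTAAGGGTTA"):
    bases, counts = run_length_encode(read)
    print(bases, "".join(map(str, counts)))
```

Applied to the two example reads, the sketch yields the same base sequence, CGATAGTA, with repeat counts 11132121 and 11122321, respectively.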

Some properties of base sequences in RLE

In the sequence portion of the RLE, consecutive bases are always distinct. If they were not, the second one would be removed from the RLE sequence, while increasing the repeat count for the first one.

With ordinary base sequences, the number of distinct k-mers of length k is $4^k$. But with run-length base sequences, the number of distinct k-mers of length k is $4 \times 3^{k-1}$. This is a consequence of the previous property.

The run-length sequence is generally shorter than the raw sequence and cannot be longer. For a long random sequence, the number of bases in the run-length representation is three-quarters of the number of bases in the raw representation.
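
The k-mer count can be checked by brute force for small k: the first base can be any of four, and each later base can be any of the three that differ from its predecessor. The three-quarters figure follows from the same observation, because in a uniformly random sequence each base differs from the previous one with probability 3/4 and therefore starts a new run. The Python sketch below is only a sanity check; the function name is hypothetical.

```python
from itertools import product


def count_rle_kmers(k, alphabet="ACGT"):
    """Count k-mers in which no two consecutive bases are equal."""
    return sum(
        all(a != b for a, b in zip(kmer, kmer[1:]))
        for kmer in product(alphabet, repeat=k)
    )


# Compare the brute-force count with the closed form 4 * 3**(k - 1).
for k in range(1, 7):
    assert count_rle_kmers(k) == 4 * 3 ** (k - 1)
```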

Markers

Even with RLE, errors in input reads are still frequent. To further reduce sensitivity to errors, and also to speed up some of the computational steps in the assembly process, the Shasta assembler also uses a read representation based on markers. Markers are occurrences in reads of a predetermined subset of short k-mers. By default, Shasta uses for this purpose k-mers with k = 10 in RLE, corresponding to approximately 13 bases on average in the raw read representation.

Just for the purposes of illustration, consider a description using markers of length 3 in RLE. There is a total of $4 \times 3^2 = 36$ distinct such markers. We arbitrarily choose the following fixed subset of the 36, and we assign an identity to each of the k-mers in the subset as follows:

TGC 0

GCA 1

GAC 2

CGC 3

Consider now the following portion of a read in run-length representation (here, the repeat counts are irrelevant and so they are omitted):

CGACACGTATGCGCACGCTGCGCTCTGCAGC

GAC TGC CGC TGC

CGC TGC GCA

GCA CGC

Occurrences of the k-mers defined in the example above are shown and define the markers in this read. Note that markers can overlap. Using the marker identities defined in the list, we can summarize the sequence of this read portion as follows:

2 0 3 1 3 0 3 0 1

This is the marker representation of this read portion. It just includes the sequence of markers occurring in the read, not their positions. Note that the marker representation loses information, as it is not possible to reconstruct the complete initial sequence from the marker representation. This also means that the marker representation is insensitive to errors in the sequence portions that do not belong to any markers.
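
The worked example can be reproduced with a short Python sketch. It scans the run-length sequence for occurrences of the four example k-mers, prints each occurrence under the read at its position, and collects the marker identities in read order. The function and variable names are hypothetical and the code is only an illustration of the idea.

```python
MARKER_IDS = {"TGC": 0, "GCA": 1, "GAC": 2, "CGC": 3}


def find_markers(rle_sequence, marker_ids, k=3):
    """Return (position, marker_id) for every marker k-mer occurrence, in read order."""
    return [
        (pos, marker_ids[rle_sequence[pos:pos + k]])
        for pos in range(len(rle_sequence) - k + 1)
        if rle_sequence[pos:pos + k] in marker_ids
    ]


read = "CGACACGTATGCGCACGCTGCGCTCTGCAGC"
occurrences = find_markers(read, MARKER_IDS)

# Show each marker under the read, indented to its position (markers may overlap).
print(read)
for pos, marker_id in occurrences:
    print(" " * pos + read[pos:pos + 3], marker_id)

marker_representation = [marker_id for _, marker_id in occurrences]
print(marker_representation)
```

The final list is 2 0 3 1 3 0 3 0 1, matching the marker representation given above.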

The Shasta assembler uses a random choice of the k-mers to be used as markers. The length of the markers k is controlled by assembly parameter Kmers.k with a default value of ten. Each k-mer is randomly chosen to be used as a marker with probability determined by assembly parameter --Kmers.probability with a default value of 0.1. With these default values, the total number of distinct markers is approximately $0.1 \times 4 \times 3^9 \approx 7{,}900$.

The only constraint used in selecting k-mers to be used as markers is that if a k-mer is a marker, its reverse complement should also be a marker. This makes it easy to construct the marker representation of the reverse complement of a read from the marker representation of the original read. It also ensures strand symmetry in some of the computational steps.
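
One way to satisfy the reverse-complement constraint is to make a single random decision per reverse-complement pair and then add both members. The Python sketch below does this; it is an assumption about how such a selection could be done, not a description of Shasta's actual procedure, and all names are hypothetical.

```python
import random

COMPLEMENT = str.maketrans("ACGT", "TGCA")


def reverse_complement(kmer):
    return kmer.translate(COMPLEMENT)[::-1]


def rle_kmers(k, prefix=""):
    """Generate all k-mers in which consecutive bases are distinct."""
    if len(prefix) == k:
        yield prefix
        return
    for base in "ACGT":
        if not prefix or prefix[-1] != base:
            yield from rle_kmers(k, prefix + base)


def choose_markers(k, probability, seed=0):
    """Randomly select marker k-mers so that the set is closed under reverse complement."""
    rng = random.Random(seed)
    markers = set()
    for kmer in rle_kmers(k):
        rc = reverse_complement(kmer)
        # Decide once per pair, using the lexicographically smaller k-mer.
        if kmer <= rc and rng.random() < probability:
            markers.add(kmer)
            markers.add(rc)
    return markers


markers = choose_markers(k=10, probability=0.1)
print(len(markers), "markers selected out of", 4 * 3 ** 9)
```

Because each k-mer enters the set exactly when its pair is selected, every k-mer is still a marker with the requested probability, so the expected number of markers is unchanged.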

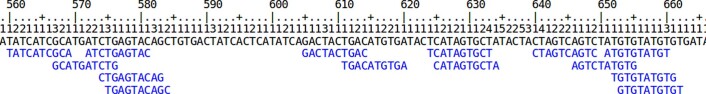

It is possible that the random selection of markers is not optimal, and that it may be best to select the markers based on their frequency in the input reads or other criteria. These possibilities have not yet been investigated. Extended Data Fig. 1 shows the run-length representation of a portion of a read and its markers, as displayed by the Shasta http server.

Extended Data Fig. 1. Read Markers.

Markers aligned to a run length encoded read.

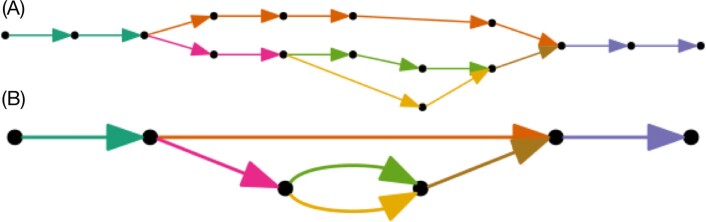

Marker alignments

The marker representation of a read is a sequence in an alphabet consisting of the marker identities. This sequence is much shorter than the original sequence of the read but uses a much larger alphabet. For example, with default Shasta assembly parameters, the marker representation is ten times shorter than the run-length encoded read sequence, or about 13 times shorter than the raw read sequence. Its alphabet has around 8,000 symbols, many more than the four symbols that the original read sequence uses.

Because the marker representation of a read is a sequence, we can compute an alignment of two reads directly in marker representation. Computing an alignment in this way has two important advantages:

The shorter sequences and larger alphabet make the alignment much faster to compute.

The alignment is insensitive to read errors in the portions that are not covered by any marker.

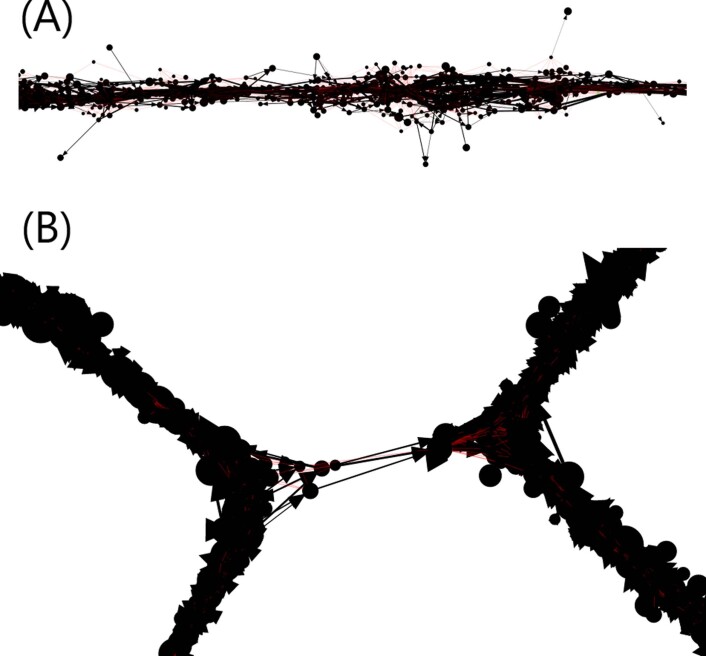

For these reasons, the marker representation is orders of magnitude more efficient than the raw base representation when computing read alignments. Extended Data Fig. 2 shows an example alignment matrix.

Extended Data Fig. 2. Marker Alignment.

A marker alignment represented as a dot-plot. Elements that are identical between the two sequences are displayed in green or red; those in green are part of the optimal alignment computed by the Shasta assembler. Because of the much larger alphabet, matrix elements that are identical between the sequences but are not part of the optimal alignment are infrequent. Each alignment matrix element here corresponds on average to a 13 × 13 block in the alignment matrix in raw base sequence.

Computing optimal alignments in marker representation

To compute the (likely) optimal alignment (example highlighted in green in Extended Data Fig. 2), the Shasta assembler uses a simple alignment algorithm on the marker representations of the two reads to be aligned. It effectively constructs an optimal path in the alignment matrix, but using some ‘banding’ heuristics to speed up the computation:

The number of markers that an alignment can skip on either read is limited to a maximum, under control of assembly parameter Align.maxSkip (default value 30 markers, corresponding to around 400 bases when all other Shasta parameters are at their default). This reflects the fact that Oxford Nanopore reads can often have long stretches in error. In the alignment matrix shown in Extended Data Fig. 2, there is a skip of about 20 markers (two light-gray squares) following the first ten aligned markers (green dots) on the top left.

The number of markers that an alignment can skip at the beginning or end of a read is limited to a maximum, under control of assembly parameter Align.maxTrim (default value 30 markers, corresponding to around 400 bases when all other Shasta parameters are at their default). This reflects the fact that Oxford Nanopore reads often have an initial or final portion that is not usable. These first two heuristics are equivalent to computing a reduced band of the alignment matrix.

To avoid alignment artifacts, marker k-mers that are too frequent in either of the two reads being aligned are not used in the alignment computation. For this purpose, the Shasta assembler uses a criterion based on absolute number of occurrences of marker k-mers in the two reads, although a relative criterion (occurrences per kilobase) may be more appropriate. The current absolute frequency threshold is under control of assembly parameter Align.maxMarkerFrequency (default ten occurrences).
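
To make the heuristics concrete, the following Python sketch aligns two marker sequences by chaining matching markers, with a bounded skip and a frequency filter. It is a simplified quadratic-time illustration under stated assumptions, not Shasta's algorithm: the trimming limit (Align.maxTrim) is omitted, a skip is counted as the number of markers strictly between two consecutive aligned markers, and a marker is treated as too frequent when it occurs more than the threshold in either read. The function name is hypothetical.

```python
from collections import Counter


def align_markers(x, y, max_skip=30, max_marker_frequency=10):
    """Return the longest chain of (i, j) pairs with x[i] == y[j] and bounded skips."""
    count_x, count_y = Counter(x), Counter(y)

    def usable(marker):
        # Ignore markers that are too frequent in either read.
        return (count_x[marker] <= max_marker_frequency
                and count_y[marker] <= max_marker_frequency)

    # Candidate matrix elements, sorted by i and then j by construction.
    matches = [(i, j)
               for i, a in enumerate(x) if usable(a)
               for j, b in enumerate(y) if a == b]
    if not matches:
        return []

    best_length = [1] * len(matches)
    previous = [-1] * len(matches)
    for n, (i, j) in enumerate(matches):
        for m in range(n):
            pi, pj = matches[m]
            within_band = (pi < i and pj < j
                           and i - pi - 1 <= max_skip
                           and j - pj - 1 <= max_skip)
            if within_band and best_length[m] + 1 > best_length[n]:
                best_length[n] = best_length[m] + 1
                previous[n] = m

    # Trace back from the end of the longest chain.
    n = max(range(len(matches)), key=best_length.__getitem__)
    chain = []
    while n != -1:
        chain.append(matches[n])
        n = previous[n]
    return chain[::-1]


alignment = align_markers([2, 0, 3, 1, 3, 0, 3, 0, 1], [2, 0, 3, 7, 3, 0, 1])
print(alignment)
```

Lowering max_skip restricts the chain to a narrower band around a diagonal of the alignment matrix, which is the effect the skip limit is meant to have.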

Using these techniques and with the default assembly parameters, the time to compute an optimal alignment is $10^{-3}$–$10^{-2}$ s in the Shasta implementation as of release v.0.1.0 (April 2019). A typical human assembly needs to compute $10^8$ read alignments, which results in a total compute time of ~$10^5$–$10^6$ s, or ~$10^3$–$10^4$ s of elapsed time (1–3 h) on a machine with 128 virtual processors. This is one of the most computationally expensive portions of a Shasta assembly. Some additional optimizations are possible in the code that implements this computation and may be implemented in future releases.

Finding overlapping reads

Even though computing read alignments in marker representation is fast, it still is not feasible to compute alignments among all possible pairs of reads. For a human-size genome with ∼$10^6$–$10^7$ reads, the number of pairs to consider would be ∼$10^{12}$–$10^{14}$, and even at $10^{-3}$ s per alignment the compute time would be ∼$10^9$–$10^{11}$ s, or ∼$10^7$–$10^9$ s of elapsed time (∼$10^2$–$10^4$ d) when using 128 virtual processors.
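
The order-of-magnitude estimate can be reproduced with a few lines of Python. The sketch follows the text in counting pairs as the square of the number of reads (counting each unordered pair once would halve the result) and assumes perfect scaling across processors; the function name is hypothetical.

```python
def all_pairs_elapsed_days(num_reads, seconds_per_alignment=1e-3, processors=128):
    """Rough elapsed time, in days, to align every pair of reads."""
    pairs = num_reads ** 2                      # order-of-magnitude pair count
    cpu_seconds = pairs * seconds_per_alignment
    elapsed_seconds = cpu_seconds / processors  # assumes perfect parallel scaling
    return elapsed_seconds / 86_400             # seconds per day


for num_reads in (10**6, 10**7):
    days = all_pairs_elapsed_days(num_reads)
    print(f"{num_reads:.0e} reads: about {days:,.0f} days")
```

With these assumptions the estimate is roughly 90 days for a million reads and roughly 9,000 days for ten million reads, which is why the candidate pairs must be narrowed down before alignment.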

Therefore, some means of narrowing down substantially the number of pairs to be considered is essential. The Shasta assembler uses for this purpose a slightly modified MinHash27,28 scheme based on the marker representation of reads.

In overview, the MinHash algorithm takes as input a set of items each characterized by a set of features. Its goal is to find pairs of the input items that have a high Jaccard similarity index: that is, pairs of items that have many features in common. The algorithm proceeds by iterations. At each iteration, a new hash table is created and a hash function that operates on the feature set is selected. For each item, the hash function of each of its features is evaluated, and the minimum hash function value found is used to select the hash table bucket that each item is stored in. It can be proved that the probability of two items ending up in the same bucket equals the Jaccard similarity index of the two items: that is, items in the same bucket are more likely to be highly similar than items in different buckets61. The algorithm then adds to the pairs of potentially similar items all pairs of items that are in the same bucket.

When all iterations are complete, the probability that a pair of items was found at least once is an increasing function of the Jaccard similarity of the two items. In other words, the pairs found are enriched for pairs that have high similarity. One can now consider all the pairs found (hopefully a much smaller set than all possible pairs) and compute the Jaccard similarity index for each, then keep only the pairs for which the index is sufficiently high. The algorithm does not guarantee that all pairs with high similarity will be found, only that the probability of finding a given pair is an increasing function of its similarity.
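
The generic scheme just described can be sketched as follows. This is a plain MinHash illustration in Python, not the modified scheme used by Shasta; the use of a salted BLAKE2b digest as the per-iteration hash function, the function names and the default thresholds are all assumptions made for the example.

```python
import hashlib
from collections import defaultdict
from itertools import combinations


def feature_hash(feature, iteration):
    """A different 64-bit hash function for each iteration, via a salt."""
    salt = iteration.to_bytes(8, "little")
    digest = hashlib.blake2b(repr(feature).encode(), digest_size=8, salt=salt).digest()
    return int.from_bytes(digest, "little")


def jaccard(x, y):
    """Jaccard similarity index of two non-empty sets."""
    return len(x & y) / len(x | y)


def minhash_candidate_pairs(items, iterations=20):
    """items maps an item name to its set of features."""
    candidates = set()
    for iteration in range(iterations):
        buckets = defaultdict(list)
        for name, features in items.items():
            if features:
                # The minimum hash value over the features selects the bucket.
                bucket = min(feature_hash(f, iteration) for f in features)
                buckets[bucket].append(name)
        for names in buckets.values():
            candidates.update(combinations(sorted(names), 2))
    return candidates


def similar_pairs(items, min_jaccard=0.5, iterations=20):
    """Keep only candidate pairs whose Jaccard index is high enough."""
    return {
        (a, b)
        for a, b in minhash_candidate_pairs(items, iterations)
        if jaccard(items[a], items[b]) >= min_jaccard
    }
```

Only the candidate pairs are scored exactly, so the cost of the final filtering step depends on the number of bucket collisions rather than on the number of all possible pairs.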

The algorithm is used by Shasta with items being oriented reads (a read in either original or reverse complemented orientation) and features being consecutive occurrences of m markers in the marker representation of the oriented read. For example, consider an oriented read with the following marker representation: 18,45,71,3,15,6,21

If m is selected as equal to four (the Shasta default, controlled by assembly parameter MinHash.m), the oriented read is assigned the following features:

(18,45,71,3)

(45,71,3,15)

(71,3,15,6)

(3,15,6,21)
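
Extracting these features amounts to sliding a window of m markers along the marker representation. A minimal Python sketch, with a hypothetical function name:

```python
def marker_features(marker_ids, m=4):
    """Return every run of m consecutive markers as a tuple, in read order."""
    return [tuple(marker_ids[i:i + m]) for i in range(len(marker_ids) - m + 1)]


features = marker_features([18, 45, 71, 3, 15, 6, 21])
for feature in features:
    print(feature)
```

An oriented read with fewer than m markers yields no features in this sketch.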

From the alignment matrix in marker representation shown in Extended Data Fig. 2, we see that streaks of four or more common consecutive markers are relatively common. We have to keep in mind that, with Shasta default parameters, four consecutive markers span an average of 40 bases in RLE or about 52 bases in the original raw base representation. At a typical error rate of around 10%, such a portion of a read would contain on average five errors. Yet, the marker representation in run-length space is sufficiently robust that these shared ‘features’ remain relatively common despite the high error rate. This indicates that we can expect the MinHash algorithm to be effective in finding pairs of overlapping reads.

However, the MinHash algorithm has a feature that is undesirable for our purposes: namely, that the algorithm is good at finding read pairs with high Jaccard similarity index. For two sets X and Y, the Jaccard similarity index is defined as the ratio