Abstract

Supervised training of deep learning models requires large labeled datasets. There is a growing interest in obtaining such datasets for medical image analysis applications. However, the impact of label noise has not received sufficient attention. Recent studies have shown that label noise can significantly impact the performance of deep learning models in many machine learning and computer vision applications. This is especially concerning for medical applications, where datasets are typically small, labeling requires domain expertise and suffers from high inter- and intra-observer variability, and erroneous predictions may influence decisions that directly impact human health. In this paper, we first review the state-of-the-art in handling label noise in deep learning. Then, we review studies that have dealt with label noise in deep learning for medical image analysis. Our review shows that recent progress on handling label noise in deep learning has gone largely unnoticed by the medical image analysis community. To help achieve a better understanding of the extent of the problem and its potential remedies, we conducted experiments with three medical imaging datasets with different types of label noise, where we investigated several existing strategies and developed new methods to combat the negative effect of label noise. Based on the results of these experiments and our review of the literature, we have made recommendations on methods that can be used to alleviate the effects of different types of label noise on deep models trained for medical image analysis. We hope that this article helps the medical image analysis researchers and developers in choosing and devising new techniques that effectively handle label noise in deep learning.

Keywords: label noise, deep learning, machine learning, big data, medical image annotation

Graphical Abstract

1. Introduction

1.1. Background

Deep learning has already made an impact on many branches of medicine, in particular medical imaging, and its impact is only expected to grow (Ching et al., 2018; Topol, 2019b). Even though it was first greeted with much skepticism (Wang et al., 2017a), in a few short years it proved itself to be a worthy player in solving many problems in medicine, including problems in disease and patient classification, patient treatment recommendation, outcome prediction, and more (Ching et al., 2018). Many experts believe that deep learning will play an important role in the future of medicine and will be an enabling tool in medical research and practice (Topol, 2019a; Prevedello et al., 2019). With regard to medical image analysis, methods that use deep learning have already achieved impressive, and often unprecedented, performance in many tasks ranging from low-level image processing tasks such as denoising, enhancement, and reconstruction (Wang et al., 2018b), to more high-level image analysis tasks such as segmentation, detection, classification, and registration (Ronneberger et al., 2015; Haskins et al., 2019), and even more challenging tasks such as discovering links between the content of medical images and patient’s health and survival (Xu et al., 2019; Mobadersany et al., 2018).

The recent success of deep learning has been attributed to three main factors (LeCun et al., 2015; Sun et al., 2017). First, technical advancements in network architecture design, network parameter initialization, and training methods. Second, increasing availability of more powerful computational hardware, in particular graphical processing units and parallel processing, that allow training of very large models on massive datasets. Last, but not least, increasing availability of very large and growing datasets. However, even though in some applications it has become possible to curate large datasets with reliable labels, in most applications it is very difficult to collect and accurately label datasets large enough to effortlessly train deep learning models. A solution that is becoming more popular is to employ non-expert humans or automated systems with little or no human supervision to label massive datasets (Guo et al., 2016; Deng et al., 2009; Ipeirotis et al., 2010). However, datasets collected using such methods typically suffer from very high label noise (Wang et al., 2018a; Kuznetsova et al., 2018), thus they have limited applicability in medical imaging.

The challenge of obtaining large datasets with accurate labels is particularly significant in medical imaging. The available data is typically small to begin with, and data access is hampered by such factors as patient privacy and institutional policies. Furthermore, labeling of medical images is very resource-intensive because it depends on domain experts. In some applications, there is also significant inter-observer variability among experts, which will necessitate obtaining consensus labels or labels from multiple experts and proper methods of aggregating those labels (Bridge et al., 2016; Nir et al., 2018). Some studies have been able to employ a large number of experts to annotate large medical image datasets (Gulshan et al., 2016; Esteva et al., 2017). However, such efforts depend on massive financial and logistical resources that are not easy to obtain in many domains. Alternatively, a few studies have successfully used automated mining of medical image databases such as hospital picture archiving and communication systems (PACS) to build large training datasets (Yan et al., 2018; Irvin et al., 2019). However, this method is not always applicable as historical data may not include all the desired labels or images. Moreover, label noise in such datasets is expected to be higher than in expert-labeled datasets. There have also been studies that have used crowd-sourcing methods to obtain labels from non-experts (Gurari et al., 2015; Albarqouni et al., 2016). Even though this method may have potential for some applications, it has a limited scope because in most medical applications non-experts are unable to provide useful labels. Even for relatively simple segmentation tasks, computerized systems have been shown to generate significantly less accurate labels compared with human experts and crowdsourced non-experts (Gurari et al., 2015). In general, lack of large datasets with trustworthy labels is considered to be one of the biggest challenges facing a wider adoption and successful deployment of deep learning methods in medical applications (Langlotz et al., 2019; Ching et al., 2018; Ravì et al., 2016).

1.2. Aims and scope of this paper

Given the outline presented above, it is clear that relatively small datasets with noisy labels are, and will continue to be, a common scenario in training deep learning models in medical image analysis applications. Hence, algorithmic approaches that can effectively handle the label noise are highly desired. In this manuscript, we first review and explain the recent advancements in training deep learning models in the presence of label noise. We review the methods proposed in the general machine learning literature, most of which have not yet been widely employed in medical imaging applications. Then, we review studies that have addressed label noise in deep learning with medical imaging data. Finally, we present the results of our experiments on three medical image datasets with noisy labels, where we investigate the performance of several strategies to deal with label noise, including a number of new methods that we have developed for each application. Based on our results, we make general recommendations to improve deep learning with noisy training labels in medical imaging data.

In the field of medical image analysis, in particular, the notion of label noise is elusive and not easy to define. The term has been used in the literature to refer to different forms of label imperfections or corruptions. Especially in the era of big data, label noise may manifest itself in various forms. Therefore, at the outset we need to clarify the intended meaning of label noise in this paper and demarcate the scope of this study to the extent possible.

To begin with, it should be clear that we are only interested in label noise, and not data/measurement noise. Specifically, consider a set {xi, yi} of medical images, xi, and their corresponding labels, yi. Although xi may include measurement noise, that is not the focus of this review. We are only interested in the noise in the label, yi. Typically, the label y is a discrete variable and can be either an image-wise label, such as in classification problems, or a pixel/voxel-wise label, such as in dense segmentation. Moreover, in this paper we are only concerned with labeled data. Semi-supervised methods are methods that use both labeled and unlabeled training data. Many semi-supervised methods synthesize (noisy) labels for unlabeled data, which are then used for training. Such studies fall within the scope of this study if they use novel or sophisticated methods to handle noisy synthesized labels. Another form of label imperfection that is becoming more common in medical image datasets is when there is only image-level label, and no pixel-level annotations are available (Wang et al., 2017b; Irvin et al., 2019). This type of label is referred to as weak label and is used by methods that are termed weakly supervised learning or multiple-instance learning methods. This type of label imperfection is also beyond the scope of this study. Luckily, there are recent review articles that cover these types of label imperfections. Semi-supervised learning, multiple-instance learning, and transfer learning in medical image analysis have been reviewed in (Cheplygina et al., 2019). Focusing only on medical image segmentation, another recent paper reviewed methods for dealing with scarce and imperfect annotations in general, including weak and sparse annotations (Tajbakhsh et al., 2019).

The organization of this article is as follows. In Section 2 we briefly describe methods for handling label noise in classical (i.e., pre-deep learning) machine learning. In Section 3 we review studies that have dealt with label noise in deep learning. Then, in Section 4 we take a closer look into studies that have trained deep learning models on medical image datasets with noisy labels. Section 5 contains our experimental results with three medical image datasets, where we investigate the impact of label noise and the potential of techniques and remedies for dealing with noisy labels in deep learning. Conclusions are presented in Section 6.

2. Label noise in classical machine learning

Learning from noisy labels has been a long-standing challenge in machine learning (Frénay and Verleysen, 2013; García et al., 2015). Studies have shown that the negative impact of label noise on the performance of machine learning methods can be more significant than that of measurement/feature noise (Zhu and Wu, 2004; Quinlan, 1986). The complexity of label noise distribution varies greatly depending on the application. In general, label noise can be of three different types: class-independent (the simplest case), class-dependent, and class and feature-dependent (potentially much more complicated). Most of the methods that have been proposed to handle noisy labels in classical machine learning fall into one of the following three categories (Frénay and Verleysen, 2013):

Methods that focus on model selection or design. Fundamentally, these methods aim at selecting or devising models that are more robust to label noise. This may include selecting the model, the loss function, and the training procedures. It has been known that the impact of label noise depends on the type and design of the classifier model. For example, naive Bayes and random forests are more robust than other common classifiers such as decision trees and support vector machines (Nettleton et al., 2010; Folleco et al., 2008), and that boosting can exacerbate the impact of label noise (Abellán and Masegosa, 2010; McDonald et al., 2003; Long and Servedio, 2010), whereas bagging is a better way of building classifier ensembles in the presence of significant label noise (Dietterich, 2000). Studies have also shown that 0–1 label loss is more robust than smooth alternatives (e.g., exponential loss, log-loss, squared loss, and hinge-loss) (Manwani and Sastry, 2013; Patrini et al., 2016). Other studies have modified standard loss functions to improve their robustness to label noise, for example by making the hinge loss negatively unbounded as proposed in (Van Rooyen et al., 2015). Furthermore, it has been shown that proper reweighting of training samples can improve the robustness of many loss functions to label noise (Liu and Tao, 2015; Natarajan et al., 2013).

Methods that aim at reducing the label noise in the training data. A popular approach is to train a classifier using the available training data with noisy labels or a small dataset with clean labels and identify mislabeled data samples based on the predictions of this classifier (Segata et al., 2009). Voting among an ensemble of classifiers has been shown to be an effective method for this purpose (Brodley et al., 1996; Sluban et al., 2010). K-nearest neighbors (KNN)-based analysis of the training data has also been used to remove mislabeled instances (Wilson and Martinez, 1997, 2000). More computationally intensive approaches include those that identify mislabeled instances via their impact on the training process. For example, (Zhang et al., 2009; Malossini et al., 2006) propose to detect mislabeled instances based on their impact on the classification of other instances in a leave-one-out framework. Some methods are similar to outlier-detection techniques. They define some criterion to reflect the classification uncertainty or complexity of a data point and prune those training instances that exceed a certain threshold on that criterion (Gamberger et al., 2000; Sun et al., 2007).

Methods that perform classifier training and label noise modeling in a unified framework. Methods in this class can overlap with those of the two aformentioned classes. For instance, some methods learn to denoise labels or to identify and down-weight samples that are more likely to have incorrect labels in parallel with classifier training. Some methods in this category improve standard classifiers such as support vector machines, decision trees, and neural networks by proposing novel training procedures that are more robust to label noise (Khardon and Wachman, 2007; Lin et al., 2004). Alternatively, different forms of probabilistic models have been used to model the label noise and thereby improve various classifiers (Kaster et al., 2010; Kim and Ghahramani, 2006).

3. Deep learning with noisy labels

Deep learning models typically require much more training data than the more traditional machine learning models do. In many applications the training data are labeled by non-experts or even by automated systems. Therefore, the label noise level is usually higher in these datasets compared with the smaller and more carefully prepared datasets used in classical machine learning.

Many recent studies have demonstrated the negative impact of label noise on the performance of deep learning models and have investigated the nature of this impact. It has been shown that, even with regularization, current convolutional neural network (CNN) architectures used for image classification and trained with standard stochastic gradient descent (SGD) algorithms can fit very large training datasets with completely random labels (Zhang et al., 2016). Obviously, the test performance of such a model would be similar to random assignment because the model has only memorized the training data. Given such an enormous representation capacity, it may seem surprising that large deep learning models have achieved record-breaking performance in many real-world applications. The answer to this apparent contradiction, as suggested by (Arpit et al., 2017), is that when deep learning models are trained on typical datasets with mostly correct labels, they do not memorize the data. Instead, at least in the beginning of training, they learn the dominant patterns shared among the data samples. It has been conjectured that this behavior is due to the distributed and hierarchical representation inherent in the design of the state of the art deep learning models and the explicit regularization techniques that are commonly used when training them (Arpit et al., 2017). One study empirically confirmed these ideas by showing that deep CNNs are robust to strong label noise (Rolnick et al., 2017). For example, in hand-written digit classification on the MNIST dataset, if the label accuracy was only 1% higher than random labels, a classification accuracy of 90% was achieved at test time. A similar behavior was observed on more challenging datasets such as CIFAR100 and ImageNet, albeit at much lower label noise levels. This suggests strong learning (as opposed to memorization) tendency of large CNNs. However, somewhat contradictory results have been reported by other studies. For face recognition, for example, it has been found that label noise can have a significant impact on the accuracy of a CNN and that training on a smaller dataset with clean labels is better than training on a much larger dataset with significant label noise (Wang et al., 2018a). The theoretical reasoning and experiments in (Chen et al., 2019b) suggested a quadratic relation between the label noise ratio in the training data and test error.

Although the details of the interplay between memorization and learning mentioned above is not fully understood, experiments in (Arpit et al., 2017) suggest that this trade-off depends on the nature and richness of the data, amount of label noise, model architecture, as well as training procedures including regularization. Ma et al. (2018) show that the local intrinsic dimensionality of the features learned by a deep learning model depends on the label noise. Formal definition of local intrinsic dimensionality is given by Houle (2017). It quantifies the dimensionality of the underlying data manifold. More specifically, given a data point xi, local intrinsic dimensionality of the data manifold is a measure of the rate of encounter of other data points as the radius of a ball centered at xi grows. Ma et al. (2018) showed that when training on data with noisy labels, the local dimensionality of the features initially decreases as the model learns the dominant patterns in the data. As the training proceeds, the model begins to overfit to the data samples with incorrect labels and the dimensionality starts to increase. Drory et al. (2018) establish an analogy between the performance of deep learning models and KNN under label noise. Using this analogy, they empirically show that deep learning models are highly sensitive to label noise that is concentrated, but that they are less sensitive when the label noise is spread across the training data.

The theoretical work on understanding the impact of label noise on the training and generalization of deep neural networks is still ongoing (Martin and Mahoney, 2017). On the practical side, many studies have shown the negative impact of noisy labels on the performance of these models in real-world applications (Yu et al., 2017; Moosavi-Dezfooli et al., 2017; Speth and Hand, 2019). Not surprisingly, therefore, this topic has been the subject of much research in recent years. We review some of these studies below, organizing them under six categories. As this categorization is arbitrary, there is much overlap among the categories and some studies may be argued to belong to more than one category.

Table 1 shows a summary of the methods we have reviewed. For each category of methods, we have shown a set of representative studies along with the applications addressed in the experimental results of the original paper. For each category of methods, we have also suggested some applications in medical image analysis that can benefit from the methods developed in those papers.

Table 1.

Summary of the main categories of methods for learning with noisy labels, representative studies, and potential applications in medical image analysis. The left column indicates the six categories under which we classify the studies reviewed in Sections 2 and 3. The middle column lists several representative studies from the fields of machine learning and computer vision and the applications considered in those studies. The right column suggests potential applications for the methods in each category in medical image analysis. In this column, where applicable, we have cited relevant published studies from the field of medical image analysis and experiments reported in Section 5 of this paper as examples of the application of methods adapted or developed in each category.

| Methods category | Representative studies from machine learning and computer vision literature | Potential applications in medical image analysis |

|---|---|---|

| Label cleaning and pre-processing |

Ostyakov et al. (2018) - image classification Lee et al. (2018) - image classification Northcutt et al. (2017) - image classification Veit et al. (2017) - image classification Gao et al. (2017) - regression, classification, semantic segmentation |

most applications, including disease and pathology classification (Pham et al. (2019); experiments in Section 5.2) and lesion detection and segmentation (experiments in Section 5.1) |

| Network architecture |

Sukhbaatar and Fergus (2014) - image classification Vahdat (2017) - image classification Yao et al. (2018) - image classification |

lesion detection (Dgani et al. (2018)), pathology classification (experiments in Section 5.2) |

| Loss functions |

Ghosh et al. (2017) - image and text classification Zhang and Sabuncu (2018) - image classification Wang et al. (2019b) - image classification, object detection Rusiecki (2019) - image classification Boughorbel et al. (2018) - electronic health records Hendrycks et al. (2018) - image and text classification |

lesion detection (experiments in Section 5.1), pathology classification (experiments in Section 5.2), segmentation (Matuszewski and Sintorn (2018); experiments in Section 5.3) |

| Data re-weighting |

Ren et al. (2018) - image classification Shu et al. (2019) - image classification Khetan et al. (2017) - image classification Tanno et al. (2019) - image classification Shen and Sanghavi (2019) - image classification |

lesion detection (Le et al. (2019)) and segmentation (experiments in Section 5.1), lesion classification (Xue et al. (2019); experiments in Section 5.2), segmentation (Zhu et al. (2019); Mirikharaji et al. (2019)) |

| Data and label consistency |

Lee et al. (2019) - image classification Zhang et al. (2019) - image classification Speth and Hand (2019) - facial attribute recognition Azadi et al. (2015) - image classification Wang et al. (2018c)- image classification Reed et al. (2014) - image classification, emotion recognition, object detection |

lesion detection and classification, segmentation (Yu et al. (2019a)) |

| Training procedures |

Zhong et al. (2019) - face recognition Jiang et al. (2017) - image classification Sukhbaatar and Fergus (2014) - image classification Han et al. (2018b) - image classification (Zhang et al., 2017) - image classification Acuna et al. (2019) - boundary segmentation Yu et al. (2018) - boundary segmentation |

most applications, including segmentation (experiments in Section 5.3; Min et al. (2018); Nie et al. (2018); Zhang et al. (2018)), lesion detection (experiments in Section 5.1), and classification (Fries et al. (2019)) |

3.1. Label cleaning and pre-processing

The methods in this category aim at identifying and either fixing or discarding training data samples that are likely to have incorrect labels. This can be done either prior to training or iteratively in parallel with the training of the main model. Vo et al. (2015) proposed supervised and unsupervised image ranking methods for identifying correctly-labeled images in a large corpus of images with noisy labels. The proposed methods were based on matching each image with a noisy label to a set of representative images with clean labels. This method improved the classification accuracy by 4–6% over the baseline CNN models on three datasets. Veit et al. (2017) trained two CNNs in parallel using a small dataset with correct labels and a large dataset with noisy labels. The two CNNs shared the feature extraction layers. One CNN used the clean dataset to learn to clean the noisy dataset, which was used by the other CNN to learn the main classification task. Experiments showed that this training method was more effective than training on the large noisy dataset followed by fine-tuning on the clean dataset. Ostyakov et al. (2018) trained an ensemble of classifiers on data with noisy labels using cross-validation and used the predictions of the ensemble as soft labels for training the final classifier.

CleanNet, proposed by Lee et al. (2018), extracts a feature vector from a query image with a noisy label and compares it with a feature vector that is representative of its class. The representative feature vector for each class is computed from a small clean dataset. The similarity between these feature vectors is used to decide whether the label is correct. Alternatively, this similarity can be used to assign weights to the training samples, which is the method proposed for image classification by Lee et al. (2018). Han et al. (2019) improved upon CleanNet in several ways. Most importantly, they removed the need for a clean dataset by estimating the correct labels in an iterative framework. Moreover, they allowed for multiple prototypes (as opposed to only one in CleanNet) to represent each class. Both of these studies reported improvements in image classification accuracy of 1–5% depending on the dataset and noise level.

A number of proposed methods for label denoising are based on classification confidence. Rank Pruning, proposed by Northcutt et al. (2017), identifies data points with confident labels and updates the classifier using only those data points. This method is based on the assumption that data samples for which the predicted probability is close to one are more likely to have correct labels. However, this is not necessarily true. In fact, there is extensive recent work showing that standard deep learning models are not “well calibrated” (Guo et al., 2017; Lakshminarayanan et al., 2017). A classifier is said to have a calibrated prediction confidence if its predicted class probability indicates its likelihood of being correct. For a perfectly-calibrated classifier, . It has been shown that deep learning models produce highly over-confident predictions. Many studies in recent years have aimed at improving the calibration of deep learning models (Gal and Ghahramani, 2015; Kendall and Gal, 2017; Pawlowski et al., 2017). In order to reduce the reliance on classifier calibration, the Rank Pruning algorithm, as its name suggests, ranks the data samples based on their predicted probability and removes the data samples that are least confident. In other words, Rank Pruning assumes that the predicted probabilities are accurate in the relative sense needed for ranking. In light of what is known about poor calibration of deep learning models, this might still be a strong assumption. Nonetheless, Rank Pruning was shown empirically to lead to substantial improvements in image classification tasks in the presence of strong label noise. Identification of incorrect labels based on prediction confidence was also shown to be highly effective in extensive experiments on image classification by Ding et al. (2018), improving the classification accuracy on CIFAR-10 by up to 20% in the presence of very strong label nosie. Köhler et al. (2019) proposed an iterative label noise filtering approach based on similar concepts as Rank Pruning. This method estimates prediction uncertainty (using such methods as Deep Ensembles (Lakshminarayanan et al., 2017) or Monte-Carlo dropout (Kendall and Gal, 2017)) during training and relabels data samples that are likely to have incorrect labels.

A different approach, is proposed by Gao et al. (2017). In this approach, termed deep label distribution learning (DLDL), the initial noisy labels are smoothed to obtain a “label distribution”, which is a discrete distribution for classification problems. The authors propose methods for obtaining this label distribution from one-hot labels for several applications including multi-class classification and semantic segmentation. For semantic segmentation, for example, a simple kernel smoothing of the segmentation mask is suggested to account for unreliable boundaries. Once this smooth label is obtained, the deep learning model is trained by minimizing the Kullback-Leibler (KL) divergence between the model output and the smooth noisy label. Label smoothing is a well-know trick for improving the test performance of deep learning models (Szegedy et al., 2016; Müller et al., 2019). The DLDL approach was improved by Yi and Wu (2019), where the authors introduced a cross-entropy-based loss term to encourage closeness of estimated labels and the initial noisy labels and proposed a back-propagation method to iteratively update the initial label distributions as well.

Ratner et al. (2016) used a generative model to model labeling of large datasets used in deep learning and proposed a label denoising method under this scenario. Zhou et al. (2017) proposed a GAN for removing label noise from synthetic data generated to train a CNN. This method was shown to be highly effective in removing label noise and improving the model performance. GANs were used to generate a training dataset with clean labels from an initial dataset with noisy labels by Chiaroni et al. (2019).

3.2. Network architecture

Several studies have proposed adding a “noise layer” to the end of deep learning models. The noise layer proposed by Sukhbaatar et al. (2014) is equivalent to multiplication with the transition matrix between noisy and true labels. The authors developed methods for learning this matrix in parallel with the network weights using error back-propagation. A similar noise layer was proposed by Thekumparampil et al. (2018) for training a generative adversarial network (GAN) under label noise. Sukhbaatar and Fergus (2014) proposed methods for estimating the transition matrix from either a clean or a noisy dataset. Reductions of up to 3.5% in classification error were reported on different datasets. A similar noise layer was proposed by Goldberger and Ben-Reuven (2016), where the authors proposed an EM-type method for optimizing the parameters of the noise layer. Importantly, the authors extended their model to the more general case where the label noise also depends on image features. This more complex case, however, could not be optimized with EM and a back-propagation method was exploited instead. Bekker and Goldberger (2016) used a combination of EM and error back-propagation for end-to-end training with a noise layer. Jindal et al. (2016) suggested that aggressive dropout regularization (with a rate of 90%) can improve the effectiveness of such noise layers.

Focusing on noisy labels obtained from multiple annotators, Tanno et al. (2019) proposed a simple and effective method for estimating the correct labels and annotator confusion matrices in parallel with CNN training. The key observation was that, in order to avoid the ambiguity in simultaneous estimation of true labels and annotator confusion matrices, the traces of the confusion matrices had to be penalized. The entire model including the CNN weights and confusion matrices were learned via SGD. The method was shown to be highly effective in estimating annotator confusion matrices for various annotator types including inaccurate and adversarial ones. Improvements of 8–11% in image classification accuracy were reported compared to the best competing methods.

A number of studies have integrated different forms of probabilistic graphical models into deep neural networks to handle label noise. Xiao et al. (2015) proposed a graphical model with two discrete latent variables y and z, where y was the true label and z was a one-hot vector of size 3 that denoted whether the label noise was zero, class-independent, or class-conditional. Two separate CNNs estimated y and z, and the entire model was optimized in an EM framework. The method required a small dataset with clean labels. The authors showed significant gains compared with baseline CNNs in image classification from large datasets with noisy labels. Vahdat (2017) employed an undirected graphical model to learn the relationship between correct and noisy labels. The model allowed incorporation of domain-specific sources of information in the form of joint probability distribution of labels and hidden variables. Their method improved the classification accuracy of baseline CNNs by up to 3% on three different datasets. For image classification, Misra et al. (2016) proposed to jointly train two CNNs to disentangle the object presence and relevance in a framework similar to the graphical model-based methods described above. Model parameters and true labels were estimated using SGD. A more elaborate model was proposed by Yao et al. (2018), where an additional latent variable was introduced to model the trustworthiness of the noisy labels.

3.3. Loss functions

A large number of studies keep the model architecture, training data, and training procedures largely intact and only change the loss function (Izadinia et al., 2015). Ghosh et al. (2017) studied the conditions for robustness of a loss function to label noise for training deep learning models. They showed that mean absolute value of error, MAE, (defined as the ℓ1 norm of the difference between the true and predicted class probability vectors) is tolerant to label noise. This means that, in theory, the optimal classifier can be learned by training with basic error back-propagation. They showed that cross-entropy and mean square error did not possess this property. For a multi-class classification problem, denoting the vector of true and predicted probabilities with p(y = j|x) and , respectively, the cross-entropy loss function is defined as . The MAE loss is defined as . As opposed to cross-entropy that puts more emphasis on hard examples (desirable for training with clean labels), MAE tends to treat all data points more equally. However, a more recent study argued that because of the stochastic nature of the optimization algorithms used to train deep learning models, training with MAE down-weights difficult samples with correct labels, leading to significantly longer training times and reduced test accuracy (Zhang and Sabuncu, 2018). The authors proposed their own loss functions based on Box-Cox transformation to combine the advantages of MAE and cross-entropy. Similarly, Wang et al. (2019b) analyzed the gradients of cross-entropy and MAE loss functions to show their weaknesses and advantages. They proposed an improved MAE loss function (iMAE) that overcame MAE’s poor sample weighting strategy. Specifically, they showed that the ℓ1 norm of the gradient of LMAE with respect to the logit vector was equal to 4p(y|x)(1 − p(y|x)), leading to down-weighting of difficult but informative data samples. To fix this shortcoming, they suggested to transform the MAE weights nonlinearly with a new weighting defined as exp(T p(y|x))(1 − p(y|x)), where the hyperparameter T was set equal to 8 for training data with noisy labels. In image classification experiments on the CIFAR-10 dataset, compared with cross-entropy and MAE losses, their proposed iMAE loss improved the classification by approximately 1–5% when label noise was low and up to 25% when label noise was very high. In another experiment on person reidentification in video, iMAE improved the mean average precision by 13% compared with cross-entropy.

Thulasidasan et al. (2019) proposed modifying the cross-entropy loss function to enable abstention. Their proposed modification allowed the model to abstain from making a prediction on some data points at the cost of incurring an abstention penalty. They showed that this policy could improve the classification performance on both random label noise as well as systematic datadependent label noise. Rusiecki (2019) proposed a trimmed cross-entropy loss based on trimmed absolute value criterion. Their central assumption is that, with a well-trained model, data samples with wrong labels result in high loss values. Hence, their proposed loss function simply ignores the training samples with the largest loss values. Note that the central idea in (Rusiecki, 2019) (of down-weighting hard data samples) seems to run against many prevalent techniques in machine learning such as boosting (Freund et al., 1999), hard example mining (Shrivastava et al., 2016), and loss functions such as focal loss (Lin et al., 2017), that steer the training process to focus on hard examples. This is because when the training labels are correct, data points with high loss values constitute the hard examples that the model has not learned yet. Hence, focusing on those examples generally helps improve the model performance. On the other hand, when there is significant label noise, assuming that the model has attained a decent level of accuracy, data points with unusually high loss values are likely to have wrong labels. This idea is not restricted to (Rusiecki, 2019) and it is an idea that is shared by many methods reviewed in this article. This paradigm shift is a good example of the dramatic effect of label noise on the machine learning methodology.

Patrini et al. (2017) proposed two simple ways of improving the robustness of a loss function to label noise for training deep learning models. The proposed correction methods are based on the error confusion matrix T, defined as , where and y are the noisy and true labels, respectively. Assuming T is non-singular, one of the proposed correction strategies is . This correction is a linear weighting of the loss values for each possible label, where the weights, given by T, are the probability of the true label given the observed label. The authors name this correction method “backward correction” because it is intuitively equivalent to going one step back in the noise process described by the Markov chain represented by T. The alternative approach, named forward correction, is based on correcting the model predictions and only applies to composite proper loss functions (Reid and Williamson (2010)), which include cross-entropy. The corrected loss is defined as lcorr(h(x)) = l(TTψ−1((h(x))), where h is the vector of logits, and ψ−1 is the inverse of the link function for the loss function in consideration, which is the standard softmax for cross-entropy loss. The authors show that both these corrections lead to unbiased loss functions, in the sense that . They also propose a method for estimating T from noisy data and show that their methods lead to performance improvements on a range of computer vision problems and deep learning models. Similar methods have been proposed by Hendrycks et al. (2018), and Boughorbel et al. (2018), where it is suggested to use a small dataset with clean labels to estimate T. Boughorbel et al. (2018) alternate between training on a clean dataset with a standard loss function and training on a larger noisy dataset with the corrected loss function. Mnih and Hinton (2012) proposed a similar loss function based on penalizing the disagreement between the predicted label and the posterior of the true label.

3.4. Data re-weighting

Broadly speaking, these methods aim at down-weighting those training samples that are more likely to have incorrect labels. Ren et al. (2018) proposed to weight the training data using a meta-learning approach. That method required a separate dataset with clean labels, which was used to determine the weights assigned to the training data with noisy labels. Simply put, it optimized the weights on the training samples by minimizing the loss on the clean validation data. The authors showed that this weighting scheme was equivalent to assigning larger weights to training data samples that were similar to the clean validation data in terms of both the learned features and optimization gradient directions. Experiments showed that this method improved upon baseline methods by 0.5% and 3% on CIFAR-10 and CIFAR-100 with only 1000 images with clean labels. More recently, Wang et al. (2019a) proposed to re-weight samples by optimization gradient re-scaling. The underlying idea, again, is to give larger weights to samples that are easier to learn, hence more likely to have correct labels. Pumpout, proposed by Han et al. (2018a), is also based on gradient scaling. The authors propose two methods for identifying data samples that are likely to have incorrect lables. One of their methods is based on the assumption that data samples with incorrect labels are likely to display unusually high loss values. Their second method is based on the value of the backward-corrected loss (Patrini et al., 2017); they suggest that the condition indicates data samples with incorrect labels. For training data samples that are suspected of having incorrect labels, the gradients are scaled by −γ, where 0 < γ < 1. In other words, they perform a scaled gradient ascent on the samples with incorrect labels. In several experiments, including image classification with MNIST and CIFAR-10 datasets, they show that their method avoids fitting to incorrect labels and reduces the classification error by up to 40%.

Shen and Sanghavi (2019) proposed a training strategy that can be interpreted as a form of data re-weighting. In each training epoch, they remove a fraction of the data for which the loss is the largest, and update the model parameters to minimize the loss function on the remaining training data. This method assumes that the model gradually converges towards a good classifier such that the mis-labeled training samples exhibit unusually high loss values as training progresses. The authors proved that this simple approach learns the optimal model in the case of generalized linear models. For deep CNNs that are highly nonlinear, they empirically showed the effectiveness of their method on several image classification tasks. As in the case of this method, there is often a close connection between some of the data re-weighting methods and methods based on robust loss functions. Shu et al. (2019) built upon this connection and developed it further by proposing to learn a data re-weighting scheme from data. Instead of assuming a pre-defined weighting scheme, they used a multi-layer perceptron (MLP) model with a single hidden layer to learn a suitable weighting strategy for the task and the dataset at hand. The MLP in this method is trained on a small dataset with clean labels. Experiments on datasets with unbalanced and noisy labels showed that the learned weighting scheme conformed with those proposed in other studies. Specifically, for data with noisy labels the model learned to down-weight samples with large loss functions, the opposite of the form learned for datasets with unbalanced classes. One can argue that this observation empirically justifies the general trend towards down-weighting training samples with large loss values when training with noisy labels.

A common scenario involves labels obtained from multiple sources or annotators with potentially different levels of accuracy. This is a heavily-researched topic in machine learning. A simple approach to tackling this scenario is to use expectation-maximization (EM)-based methods such as (Warfield et al., 2004; Raykar et al., 2010) to estimate the true labels and then proceed to train the deep learning model using the estimated labels. Khetan et al. (2017) proposed an iterative method, whereby model predictions were used to estimate annotator accuracy and then these accuracies were used to train the model with a loss function that properly weighted the label from each annotator. The model was updated via gradient descent, whereas annotator confusion matrices were optimized with an EM method. By contrast, Tanno et al. (2019) estimated the network weights as well as annotator confusion matrices via gradient descent.

3.5. Data and label consistency

It is usually the case that the majority of the training data samples have correct labels. Moreover, there is considerable correlation among data points that belong to the same class (or the features computed from them). These correlations can be exploited to reduce the impact of incorrect labels. A typical example is the work of Lee et al. (2019), where the authors consider the correlation of the features learned by a deep learning model. They suggest that the features learned by various layers of a deep learning model on data samples of the same class should be highly correlated (i.e., clustered). Therefore, they propose training an ensemble of generative models (in the form of linear discriminant classifiers) on the features of the penultimate layer and possibly also other layers of a trained deep learning model. They show significant improvements in classification accuracy on several network architectures, noise levels, and datasets. On CIFAR-10 dataset, they report classification accuracy improvements of 3–20%, with larger improvements for higher label noise levels, compared with a baseline CNN. On more difficult datasets such as CIFAR-100 and SVHN, smaller but still significant improvements of approximately 3–10% are reported. Another example is the work of Zhang et al. (2019), where the authors proposed a method to leverage the multiplicity of data samples with the same (noisy) label in each training batch. All samples with the same label were fed into a light-weight neural network model that assigned a confidence weight to each sample based on the probability of it having the correct label. These weights were used to compute a representative feature vector for that class, which was then used to train the main classification model. Compared with other competing methods, 1–4% higher classification accuracies were reprorted on several datasets. For face identification, Speth and Hand (2019) proposed feature embedding to detect data samples with incorrect labels. Their proposed verification framework used a multi-label Siamese CNN to embed a data point in a lower-dimensional space. The distance of the point to a set of representative points in this lower-dimensional space was used to determine whether the label was incorrect.

Azadi et al. (2015) propose a method that they name “auxiliary image regularization”. Their method requires a small set of auxiliary images with clean labels in addition to the main training dataset with noisy labels. The core idea of auxiliary image regularization is to encourage representation consistency between training images (with noisy labels) and auxiliary images (with known correct labels). For this purpose, their proposed loss function includes a term based on group sparsity that encourages the features of a training image to be close to those of a small number of auxiliary images. Clearly, the auxiliary images should include good representatives of all expected classes. This method improved the classification accuracy by up to 8% on ImageNet dataset. Chen et al. (2019a) proposed a manifold regularization technique that penalized the KL divergence between the class probability predictions of similar data samples. Because searching for similar samples in high-dimensional data spaces was challenging, they suggested using data augmentation to synthesize similar inputs. They reported 1–3% higher classification accuracy compared with several alternative methods on CIFAR-10 and CIFAR-100. Li et al. (2017a) proposed BundleNet, where multiple images with the same (noisy) labels were stacked together and fed as a single input to the network. Even though the authors do not provide a clear justification of their method and its difference with standard mini-batch training, they show empirically that their method improves the accuracy on image classification with noisy labels. Wang et al. (2018c) used the similarity between images in terms of their deep features in an iterative framework to identify and down-weight training samples that were likely to have incorrect labels. Consistency between predicted labels and data (e.g., images or features) was exploited by Reed et al. (2014). The authors considered the true label as a hidden variable and proposed a model that simultaneously learned the relation between true and noisy labels (i.e., label noise distribution) and an auto-encoder model to reconstruct the data from the hidden variables. They showed improved performance in detection and classification tasks.

3.6. Training procedures

The methods in this category are very diverse. Some of them are based on well-known machine learning methods such as curriculum learning and knowledge distillation, while others focus on modifying the settings of the training pipeline such as learning rate and regularization.

Several methods based on curriculum learning have been proposed to combat label noise. Curriculum learning, first proposed by Bengio et al. (2009), is based on training a model with examples of increasing complexity or difficulty. In the method proposed by Jiang et al. (2017), an LSTM network called Mentor-Net provides a curriculum, in the form of weights on the training samples, to a second network called Student-Net. On CIFAR-100 and ImageNet with various label noise levels, their method improved the classification accuracy by up to 20% and 2%, respectively. Guo et al. (2018) proposed another method based on curriculum learning, named CurriculumNet, for training a model from massive datasets with noisy labels. This method first clusters the training data in some feature space and identifies samples that are more likely to have incorrect labels as those that fall in low-density clusters. The data are then sequentially presented to the main CNN model to be trained. This technique achieved good results on several datasets including ImageNet. The Self-Error-Correcting CNN proposed by Liu et al. (2017) is based on similar ideas; the training begins with noisy labels but as the training proceeds the network is allowed to change a sample’s label based on a confidence policy that gives more weight to the network predictions with more training.

Li et al. (2017b) adopted a knowledge distillation approach (Hinton et al., 2015) to train an auxiliary model on a small dataset with clean labels to guide the training of the main model on a large dataset with noisy labels. In brief, their approach amounts to using a pseudo-label, which is a convex combination of the noisy label and the label predicted by the auxiliary model. To reduce the risk of overfitting the auxiliary model on the small clean dataset, the authors introduced a knowledge graph based on the label transition matrix. Reed et al. (2014) also proposed using a convex combination of the noisy labels and labels predicted by the model at its current training stage. They suggested that as the training proceeds, the model becomes more accurate and its predictions can be weighted more strongly, thereby gradually forgetting the original incorrect labels. Zhong et al. (2019) used a similar approach for face identification. They first trained their model on a small dataset with less label noise and then fine-tuned it on data with stronger label noise using an iterative label update strategy similar to that explained above. Their method led to improvements of up to 2% in face recognition accuracy. Following a similar training strategy, Köhler et al. (2019) suggested that there is a point (e.g., a training epoch) when the model learns the true data features and is about to fit to the noisy labels. They proposed two methods, one based on the predictions on a clean dataset and another based on prediction uncertainty measures, to identify that stage in training. The output of the model at that stage can be used to fix the incorrect labels.

A number of studies have proposed methods involving joint training of more than one model. For example, one work suggested simultaneously training two separate but identical networks with random initialization, and only updating the network parameters when the predictions of the two networks differed Malach and Shalev-Shwartz (2017). The idea is that when training with noisy labels, the model starts by learning the patterns in data samples with correct labels. Later in training, the model will struggle to overfit to samples with incorrect labels. The proposed method hopes to reduce the impact of label noise because the decision as to whether or not to update the model is made based on the predictions of the two models and independent of the noisy label. In other words, on data with incorrect labels both models are likely to produce the same prediction, i.e., they will predict the correct label. On easy examples with correct labels, too, both models will make the same (correct) prediction. On hard examples with correct labels, on the other hand, the two models are more likely to disagree. Hence, with the proposed training strategy, the data samples that will be used in later stages of training will shrink to the hard data samples with correct labels. This strategy also improves the computational efficiency since it performs many updates at the start of training but avoids unnecessary updates on easy data samples once the models have sufficiently converged to predict the correct label on those samples. This idea was developed into co-teaching Han et al. (2018b), whereby the two networks identified label-noise-free samples in their mini-batches and shared the update information with the other network. The authors compare their method with several state of the art techniques including Mentor-Net (Jiang et al. (2017). Their method outperformed competing methods in most experiments, while narrowly underperforming in some experiments. Co-teaching was further improved in Yu et al. (2019b), where the authors suggested to focus the training on data samples with lower loss values in order to reduce the risk of training on data with incorrect labels. Along the same lines, Li et al. (2019) proposed a meta-learning objective that encouraged consistent predictions between a student model trained on noisy labels and a teacher model trained on clean labels. The goal was to train the student model to be tolerant to label noise. Towards this goal, artificial label noise was added on data with correct labels to train the student model. The student model was encouraged to be consistent with the teacher model using a meta-objective in the form of the KL divergence between prediction probabilities. Their method outperformed several competing methods by 1–2% on CIFAR-10 and Clothing1M datasets.

Experiments in Chen et al. (2019b) showed that co-teaching was less effective as the label noise increased. Instead, the authors showed that selecting the data samples with correct labels using cross-validation was more effective. In their proposed approach, the training data was divided into two folds. The model was iteratively trained on one fold and tested on the other. Data samples for which the predicted and noisy labels agreed were assumed to have the correct label and were used in the next training epoch. One study proposed to learn the network parameters by optimizing the joint likelihood of the network parameters and true labels Tanaka et al. (2018). Compared with standard training with cross-entropy loss, this method improved the classification accuracy on CIFAR-10 by 2% with low label noise rate to 17% when label noise rate was very high.

Some studies have suggested modifying the learning rate, batch size, or other settings in the training methodology. For example, for applications where multiple datasets with varying levels of label noise are available, Song et al. (2015) have proposed training strategies in terms of the order of using different datasets during training and proper learning rate adjustments based on the level of label noise in each dataset. Assuming that separate clean and noisy datasets are available, the same study has shown that using different learning rates for training with noisy and clean samples can improve the performance. It has also shown that the optimal ordering of using the two datasets (i.e., whether to train on the noisy dataset or the clean dataset first) depends on the choice of the learning rate. It has also been suggested that when label noise is strong, the effective batch size decreases, and that batch size should be increased with a proper scaling of the learning rate (Rolnick et al., 2017). Sukhbaatar and Fergus (2014) proposed to include samples from a noisy dataset and a clean dataset in each training mini-batch, giving higher weights to the samples with clean labels.

Mixup is a less intuitive but simple and effective method (Zhang et al., 2017). It synthesizes new training data points and labels via a convex combination of pairs of training data points and their labels. More specifically, given two randomly selected training data and label pairs (xi, yi) and (xj, yj), a new training data point and label are synthesized as and , where λ ∈ [0, 1] is sampled from a beta distribution. Although mixup is known primarily as a data augmentation and regularization strategy, it has been shown to be remarkably effective for combatting label noise. Compared with basic emprirical risk minimization on CIFAR-10 dataset with different levels of label noise, mixup reduced the classification error by 6.5–12.5%. The authors argue that the reason for this behavior is because interpolation between datapoints makes memorization on noisy labels, as observed in (Zhang et al., 2016), more difficult. In other words, it is easier for the network to learn the linear iterpolation between datapoints with correct labels than to memorize the interploation when labels are incorrect. The same idea was successfully used in video classification by Ostyakov et al. (2018).

For object boundary segmentation, two studies proposed to improve noisy labels in parallel with model training (Yu et al., 2018; Acuna et al., 2019). This is a task for which large datasets are known to suffer from significant label noise and model performance to be very sensitive to label noise. Both methods consider the true boundary as a latent variable that is estimated in an alternating optimization framework in parallel with model training. One major assumption in Acuna et al. (2019) is the preservation of the length of the boundary during optimization, resulting in a bipartite graph assignment problem. In Yu et al. (2018), a level-set formulation was introduced instead, providing much higher flexibility in terms of the shape and length of the boundary while preserving its topology. Both studies compared their methods with baseline CNNs in terms of F-measure for object edge detection and report impressive improvements. In particular, Acuna et al. (2019) improved upon their baseline CNN by 2–5% on segmentation of different objects. Similarly, Yu et al. (2018) reported improvements of 1–17% compared to a baseline CNN.

4. Deep learning with noisy labels in medical image analysis

In this section, we review studies that have addressed label noise in training deep learning models for medical image analysis. We use the same categorization as in the previous section.

4.1. Label cleaning and pre-processing

For classification of thoracic diseases from chest x-ray scans, Pham et al. (2019) used label smoothing to handle noisy labels. They compared their label smoothing method with simple methods such as ignoring data samples with noisy labels. They found that label smoothing can lead to improvements of up to 0.08 in the area under the receiver operating characteristic curve (AUC).

4.2. Network architectures

The noise layer proposed by Bekker and Goldberger (2016), reviewed above, was used for breast lesion detection in mammograms by Dgani et al. (2018) and slightly improved the detection accuracy.

4.3. Loss functions

To train a network to segment virus particles in transmission electron microscopy images using original annotations that consisted of only the approximate center of each virus, Matuszewski and Sintorn (2018) dilated the annotations with a small and a large structuring element to generate noisy masks for foreground and background, respectively. Consequently, parts of the image in the shape of the union of rings were marked as uncertain regions that were ignored during training. The Dice similarity and intersection-over-union loss functions were modified to ignore those regions. Promising results were reported for both loss functions. Rister et al. (2018) showed that for segmentation of abdominal organs in CT images from noisy training annotations, the intersection-over-union (IOU) loss consistently outperformed the cross-entropy loss. The mean DSC achieved with the IOU loss was 1–13% higher than the DSC achieved with the cross-entropy loss.

4.4. Data re-weighting

Le et al. (2019) used a data re-weighting method similar to that proposed by Ren et al. (2018) to deal with noisy annotations in pancreatic cancer detection from whole-slide digital pathology images. They trained their model on a large corpus of patches with noisy labels using weights computed from a small set of patches with clean labels. This strategy improved the classification accuracy by 10% compared with training on all patches with clean and noisy labels without re-weighting. For skin lesion classification in dermoscopy images with noisy labels, Xue et al. (2019) used a data re-weighting method that amounted to removing data samples with high loss values in each training batch. This method, which is similar to some of the methods reviewed above such as the method of Shen and Sanghavi (2019), increased the classification accuracy by 2 – 10%, depending on the label noise level.

For segmentation of heart, clavicles, and lung in chest radiographs, Zhu et al. (2019) trained a deep learning model to detect incorrect labels. This model assigned a weight to each sample in a training batch, aiming to down-weight samples with incorrect labels. The main segmentation model was trained in parallel using a loss function that made use of these weights. A pixel-wise weighting was proposed by Mirikharaji et al. (2019) for skin lesion segmentation from highly inaccurate annotations. The method needed a small dataset with correct segmentations alongside the main, larger, dataset with noisy segmentations. For each training image with noisy segmentation, a weight map of the same size was considered to indicate the pixel-wise confidence in the accuracy of the noisy label. These maps were updated in parallel with network parameters with alternating optimization. The authors proposed to optimize the weights on the images in the noisy dataset by reducing the loss on the clean dataset. In essence, the weight on a pixel is increased if that leads to a reduction in the loss on the clean dataset. If increasing the weight on a pixel increases the loss on the clean dataset, that weight is set to zero because the label for that pixel is probably incorrect.

4.5. Data and label consistency

For segmentation of the left atrium in MRI from labeled and unlabeled data, Yu et al. (2019a) proposed training two separate models: a teacher model that produced noisy labels and label uncertainty maps on unlabeled images, and a student model that was trained using the generated noisy labels while taking into account the label uncertainty. The student model was trained to make correct predictions on the clean dataset and to be consistent with the teacher model on noisy labels with uncertainty below a threshold. The teacher model was updated in a moving average scheme involving the weights of the student model.

4.6. Training procedures

For bladder, prostate, and rectum segmentation in MRI, Nie et al. (2018) trained a model on a dataset with clean labels and used it to predict segmentation masks for a separate unlabeled dataset. In parallel, a second model was trained to estimate a confidence map to indicate the regions where the predicted labels were more likely to be correct. The confidence maps were used to sample the unlabeled dataset for additional training data for the main model. Improvements of approximately 3% in Dice similarity coefficient (DSC) were reported.

Min et al. (2018) employed the ideas proposed by Malach and Shalev-Shwartz (2017) to develop label-noise-robust methods for medical image segmentation. As we reviewed above, the main idea in the method of Malach and Shalev-Shwartz (2017) was to jointly train two separate models and update the models only on the data samples on which the predictions of the two models differed. Instead of considering only the final layer predictions, Min et al. (2018) introduced attention modules at various depths in the networks to use the gradient information at different feature maps to identify and down-weight samples with incorrect labels. They reported promising results for cardiac and glioma segmentation in MRI.

For cystic lesion segmentation in lung CT, Zhang et al. (2018) generated initial noisy segmentations using unsupervised K-means clustering. These segmentations were used to train a CNN. Assuming that the CNN was more accurate than K-means, CNN predictions were used as the training labels for the next epoch. This process was repeated, generating new labels at the end of each training epoch. Experiments showed that the final trained CNN achieved significantly higher segmentation accuracy compared with the K-means method used to generate the initial segmentations. A rather similar method was used for classification of aortic valve malfunctions in MRI by Fries et al. (2019). Using a small dataset of expert-annotated images, simple classifiers based on intensity and shape features were developed. Subsequently, a factor graph-based model was trained to estimate the classification accuracies of these classifiers and to generate pseudo-ground-truth labels on a massive unlabeled dataset. This dataset was then used to train a deep learning classifier. This model significantly outperformed models trained on a small set of expert-labeled images.

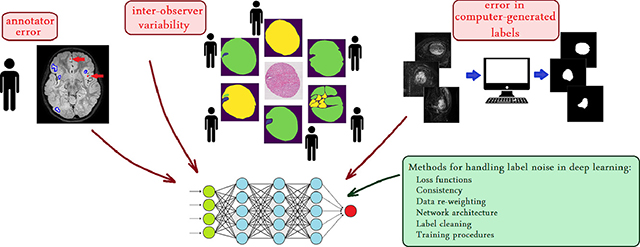

5. Experiments

In this section, we present our experiments on three medical image datasets with noisy labels, in which we explored several methods that we implemented, adapted, or developed to analyze and reduce the effect of label noise. Our experiments represent three different machine learning problems, namely, detection, classification, and segmentation. The three datasets associated with these experiments represent three different noise types, namely, label noise due to systematic error by a human annotator, label noise due to inter-observer variability, and error/noise in labels generated by an algorithm (Figure 1). In developing and comparing techniques, our goal was not to achieve the best, state-of-the-art results in each experiment, as that would have required careful design of network architectures, data pre-processing, and training procedures for each problem. Instead, our goal was to show the effects of label noise and the relative effectiveness, merits, and shortcomings of potential methods on common label noise types in medical image datasets.

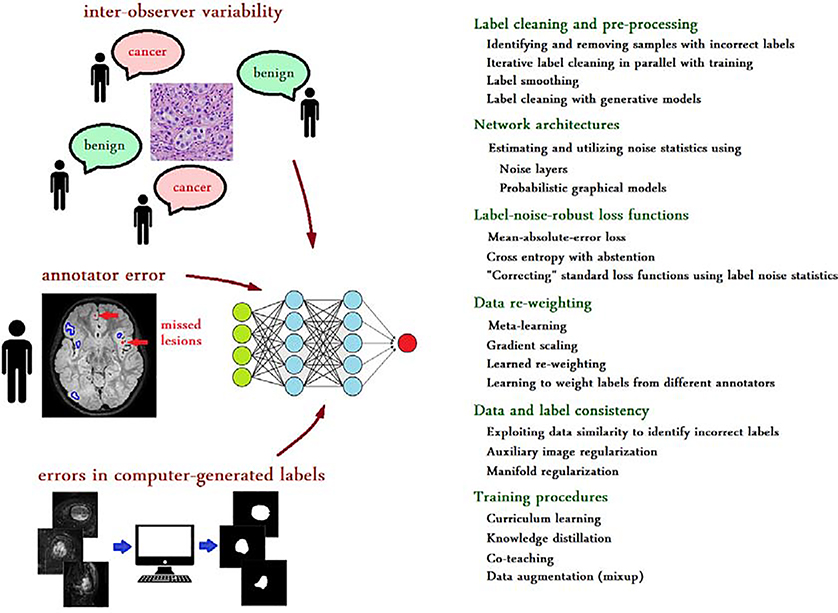

Fig. 1.

Label noise is a common feature of medical image datasets. Left: The major sources of label noise include inter-observer variability, human annotator’s error, and errors in computer-generated labels. The significance of label noise in such datasets is likely to increase as larger datasets are prepared for deep learning. Right: A quick overview of possible strategies to deal with, or to account for label noise in deep learning.

5.1. Brain lesion detection and segmentation

5.1.1. Data and labels

We used 165 MRI scans from 88 tuberous sclerosis complex (TSC) subjects. Each scan included T1, T2, and FLAIR images. An experienced annotator segmented the lesions in these scans. We then randomly selected 12 scans for accurate annotation and assessment of label noise. Two annotators jointly reviewed these scans in four separate sessions to find and fix missing or inaccurate annotations. The last reading did not find any missing lesions in any of the 12 scans. Example scans and their annotations are shown in Figure 2. We used these 12 scans and their annotations for evaluation only. We refer to these scans as “the clean dataset”. We used the remaining 153 scans and their imperfect annotations for training. These are referred to as “the noisy dataset”.

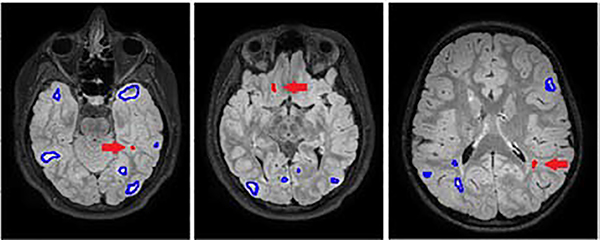

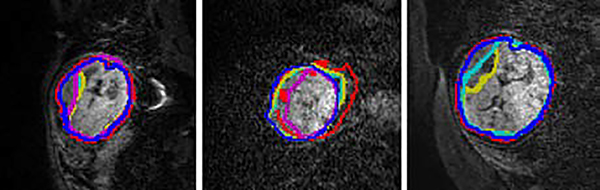

Fig. 2.

The FLAIR images from three TSC subjects and the lesions that were detected (in blue) and missed (in red) by an experienced annotator in the first reading.

In the 12 scans in the clean dataset, 306 lesions were detected in the first reading and 68 lesions in the followup readings, suggesting that approximately 18% of the lesions were missed in the first reading. Annotation error can be modeled as a random variable, in the sense that if the same annotators annotate the same scan a second time (with some time interval) they may not make the same errors. Nonetheless, our analysis shows that smaller or fainter lesions were more likely to be missed. Specifically, Welchs t-tests showed that the lesions that had been missed in the first reading were less dark on the T1 image (p<0.001), smaller in size (p<0.001), and farther away from the closest lesion (p=0.004), compared with lesions that were detected in the first reading. Therefore, in this application the intrinsic limitation of human annotator attention results in systematic errors (noise) in labels.

5.1.2. Methods

For the joint detection and segmentation of lesions in this application, we used a baseline CNN similar to the 3D U-Net (Çiçek et al., 2016). This CNN included four convolutional blocks in each of the contracting and expanding parts. The first convolutional block included 14 feature maps, which increased by a factor of 2 in subsequent convolutional blocks, resulting in the coarsest convolutional block with 112 feature maps. Each convolutional block processed its feature maps with additional convolutional layers with residual connections. All convolutional operations were followed by ReLU activation. The CNN worked on blocks of size 643 voxels and it was applied in a sliding-window fashion to process an image. In addition, since this application can be regarded as a detection task, we also used a method based on Faster-RCNN (Ren et al., 2015), where we used a 3D U-Net architecture for the backbone of this method. To train Faster-RCNN, we followed the training methodology of Ren et al. (2015), but made changes to adapt it to 3D images. Based on the distribution of lesion size in our data, we used five different anchor sizes and three different aspect ratios, for a total of 15 anchors in Faster-RCNN. The smallest and largest anchors were 3 × 3 × 7 mm3 and 45 × 45 × 61 mm3, respectively. Our evaluation was based on two-fold subject-wise cross-validation, each time training the model on data from approximately half of the subjects and testing on the remaining subjects. Following the latest recommendations in the literature on lesion detection applications (Carass et al., 2017; Commowick et al., 2018; Hashemi et al., 2019), our main evaluation criterion was lesion-count F1 score; but since this is considered a joint segmentation and detection task, we also computed DSC when applicable (i.e., for the 3D U-Net). It is noteworthy that due to the criteria that are used in diagnosis/prognosis and disease modifying treatments, lesion-count measures such as lesion-count F1-score have been considered more appropriate performance measures for lesion detection and segmentation algorithms compared to DSC (Commowick et al., 2018; Hashemi et al., 2019).

The methods developed, implemented, and compared in this task include:

Faster-RCNN trained on noisy labels.

Faster-RCNN trained on clean data. Same as the above, but evaluated using two-fold cross-validation on the clean data.

Faster-RCNN trained with MAE loss (Ghosh et al., 2017).

3D U-Net CNN trained on noisy labels with DSC loss.

3D U-Net CNN trained on clean data. Same as the above, but evaluated using two-fold cross-validation on the clean data.

3D U-Net CNN trained with MAE loss (Ghosh et al., 2017).

3D U-Net CNN trained with iMAE loss (Wang et al., 2019b).

3D U-Net CNN with data re-weighting. In this method, we ignored data samples with very high loss values. We kept the mean and standard deviation of the losses of the 100 most recent training samples. If the loss for a training sample was higher than 1.5 standard deviations of the mean, the network weights were not updated on that sample. To the best of our knowledge, such a method has not been proposed for brain lesion detection/segmentation prior to this work.

Iterative label cleaning. This is a novel technique that we have developed for this application. We first trained a random forest classifier to distinguish the true lesions missed by the annotator from the false positive lesions in CNN predictions. This classification was based on six lesion features: mean image intensity in T1, T2, and FLAIR, lesion size, distance to the closest lesion, and mean prediction uncertainty, where uncertainty was computed using the methods of Kendall and Gal (2017). Then, during training of the CNN on the noisy dataset, after each training epoch the random forest classifier was applied on the CNN-detected lesions that were not present in the original noisy labels. Lesions that were classified as true lesions were added to the noisy labels. Hence, this method iteratively improved the noisy labels in parallel with CNN training.

5.1.3. Results

As shown in Table 2, 3D U-Net achieved higher detection accuracy than Faster-RCNN. Since our focus is on label noise, we discuss the results of experiments with each of these two networks independently. For 3D U-Net, both MAE and iMAE loss functions resulted in lower lesion-count F1 score and DSC, compared with the baseline CNN trained with a DSC loss. However, both MAE and iMAE have been proposed as improvements to the cross-entropy. With a cross-entropy loss, our CNN achieved performance similar to iMAE. Interestingly, for Faster-RCNN, compared with the baseline that was trained with the cross-entropy loss, using the MAE loss did improve the lesion-count F1 score by 0.041. This indicates that such loss functions, initially proposed for classification and detection tasks, may be more useful for lesion detection than for lesion segmentation applications. The data re-weighting method resulted in lesion-count F1 score and DSC that were substantially higher than the baseline CNN. Moreover, iterative label cleaning achieved much higher lesion-count F1 score and DSC than the baseline and outperformed the data re-weighting method too. The increase in the lesion-count F1 score shows that iterative label cleaning improves detection of small lesions. The increase in DSC is also interesting and less expected since small lesions account for a small fraction of the entire lesion volume, which greatly affects the DSC. We attribute the increase in DSC to a better training of the CNN with improved labels. In other words, improving the labels by detecting and adding small lesions helped learning a better CNN that performed better on segmenting larger lesions as well. Comparing the first and the second rows of Table 2 shows that training on the clean dataset achieved results similar to training on the noisy dataset that included an order of magnitude larger number of scans. A similar observation was made for Faster-RCNN, where the lesion-count F1 score increased by 0.012 when trained on the clean dataset. This shows that in this application a small dataset with clean labels can be as good as a large dataset with noisy labels. In creating our clean dataset, we had to limit ourselves to a small number (12) of scans due to limited annotator time. It is likely that the results could further improve with a larger clean dataset.

Table 2.

Performance metrics (DSC and lesion-count F1 score) obtained in the experiment on TSC brain lesion detection using different techniques listed in Section 5.1.2 compared with the baseline models trained with noisy labels (i.e., Faster-RCNN trained on noisy labels, and 3D U-Net CNN) and baseline models trained on clean data (i.e., Faster-RCNN trained on clean data, and 3D U-Net trained on clean data). The best performance metric value (in each column) has been highlighted in bold. The results show that in this application methods based on data re-weighting and iterative label cleaning substantially improved the performance of the CNNs trained with noisy labels. The best results in terms of both the DSC and the lesion-count F1 score were obtained from our 3D U-Net with iterative label cleaning.

| Method | DSC | lesion-count F1 score |

|---|---|---|

| Faster-RCNN trained on noisy labels | - | 0.541 |

| Faster-RCNN trained on clean data | - | 0.553 |

| Faster-RCNN trained with MAE loss (Ghosh et al., 2017) | - | 0.582 |

| 3D U-Net CNN | 0.584 | 0.747 |

| 3D U-Net CNN trained on clean data | 0.578 | 0.743 |

| 3D U-Net CNN trained with MAE loss | 0.541 | 0.695 |

| 3D U-Net CNN trained with iMAE loss | 0.485 | 0.657 |

| 3D U-Net CNN with data re-weighting | 0.600 | 0.802 |

| 3D U-Net with Iterative label cleaning | 0.605 | 0.819 |

5.2. Prostate cancer digital pathology classification

5.2.1. Data and labels

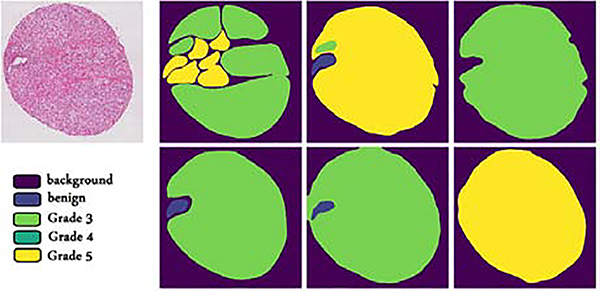

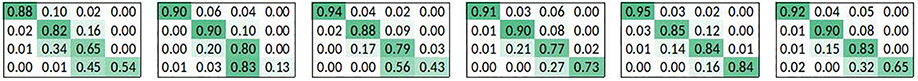

We use the data from Gleason2019 challenge. The goal of the challenge is to classify prostate tissue micro-array (TMA) cores as one of the four classes: benign and cancerous with Gleason grades 3, 4, and 5. Data collection and labeling have been described by Nir et al. (2018). In summary, TMA cores have been classified in detail (i.e., pixel-wise) by six pathologists independently. The Cohen’s kappa coefficient for the general pathologists on this task is approximately between 0.40 and 0.60 (Allsbrook Jr et al., 2001; Nir et al., 2018), where a value of 0.0 indicates chance agreement and 1.0 indicates perfect agreement. The inter-observer variability also depends on experience (Allsbrook Jr et al., 2001); pathologists who labeled this dataset had different experience levels, ranging from 1 to 27 years. Hence, this is a classification problem and label noise is caused by inter-observer variability due to the subjective nature of grading. An example TMA core and pathologists’ annotations are shown in Figure 3.

Fig. 3.