
Keywords: Electronic health record, Immunization, Quality, Bayes, Attribution, Propensity score

Highlights

- Reusable method for estimating the impact of CEHRT on health care quality.
- Method applies Bayesian analysis to estimate the impact of CEHRT on immunization scores.
- No statistically significant difference in the odds of meeting the immunization score.

Abstract

Objective

To provide a methodology for estimating the effect of U.S.-based Certified Electronic Health Records Technology (CEHRT) implemented by primary care physicians (PCPs) on a Healthcare Effectiveness Data and Information Set (HEDIS) measure for childhood immunization delivery.

Materials and methods

This study integrates multiple health care administrative data sources from 2010 through 2014, analyzed using an interrupted time series design and a hierarchical Bayesian model. We compared managed care physicians using CEHRT with propensity-score matched network physicians who did not adopt CEHRT. Inclusion criteria for physicians using CEHRT were attesting to the Childhood Immunization Status clinical quality measure and meeting “Meaningful Use” (MU) during calendar year 2013. We used a first-presence patient attribution approach to develop provider-specific immunization scores.

Results

We evaluated 147 providers using CEHRT, with 147 propensity-score matched providers selected from a pool of 1253 PCPs practicing in Maryland. The estimate for change in odds of increasing immunization rates due to CEHRT was 1.2 (95% credible set, 0.88–1.73).

Discussion

We created a method for estimating immunization quality scores using Bayesian modeling. Our approach required linking separate administrative data sets, constructing a propensity-score matched cohort, and using first-presence, claims-based childhood visit information for patient attribution. In the absence of integrated data sets and precise and accurate patient attribution, this is a reusable method for researchers and health system administrators to estimate the impact of health information technology on individual, provider-level, process-based, though outcomes-focused, quality measures.

Conclusion

This research has provided evidence for using Bayesian analysis of propensity-score matched provider populations to estimate the impact of CEHRT on outcomes-based quality measures such as childhood immunization delivery.

1. Introduction

In 2009, the U.S. began incentivizing providers to adopt government-certified electronic health record technologies (CEHRTs), aiming to reduce costs and inefficiencies in the health care system while improving quality [1] (abbreviations are listed in Supplementary Materials, Table 1). Electronic clinical quality measures (eCQMs) were introduced along with CEHRTs as a way to quantify improvement in clinical performance and outcomes. One of the eCQMs is childhood immunization status (NQF#0038), which requires that a series of vaccinations be administered by the child’s second birthday.

Despite the importance of immunization for reducing morbidity and mortality [2], [3], [4] and the potential for CEHRT to improve immunization rates, the U.S. immunization rate remains below national targets [5], [6]. In 2013, 70.4 percent of children aged 19–35 months received the full Centers for Disease Control and Prevention (CDC) recommended vaccine series [7]. For Medicaid-enrolled children through 2 years of age in 2014, the national mean immunization rate was 62.1 percent [8].

Within Medicaid agencies, Healthcare Effectiveness Data and Information Set (HEDIS) measures are the primary method of tracking quality [9]. HEDIS generally uses administrative data to calculate process-based quality measures, occasionally supplemented with medical record data through hybrid methodologies. Hybrid methods are applied when administrative data are lacking [10]. HEDIS quality measures for managed care are calculated at the health plan level, not at the individual provider level [11].

The literature assessing the relationship between quality measures and CEHRT use suggests that quality improvement is more likely when the CEHRT functionality tracks closely with the quality metric and when providers use that functionality effectively. Best-practice alerts, order sets, and panel-level reporting have led providers to achieve statistically significantly higher “meaningful use” quality scores on the measures related to those EHR functionalities [12]. These best-practice alerts, a form of clinical decision support (CDS), addressed the preventive services of tobacco cessation, breast cancer screening, colorectal cancer screening, pneumonia vaccination, and body mass index screening [12], [13].

CEHRT-based CDS prompts providers to take some action for particular patients, usually during or immediately around the patient encounter. CDS may be an effective childhood-vaccine intervention because it takes advantage of the child’s presence during well- and sick-child visits to reduce missed opportunities to administer or catch up on vaccines. How CDS is implemented within the clinical workflow can change the effect of CEHRT on vaccination rates: some studies show improvements in vaccination rates [14], [15], while others do not show the expected improvements [16]. With standardized Electronic Health Record (EHR) systems, Medicaid agencies will soon be able to collect patient-level data by provider and compare like providers with like providers longitudinally, enabling pay-for-outcomes models. However, until certified EHR adoption is widespread, health care data sources are easier to integrate, patient attribution is precise and accurate, and electronic clinical quality measures are commonplace, any longitudinal analysis of quality must rely on HEDIS-like quality methodologies. Maryland Medicaid uses the HEDIS Childhood Immunization Status score, Combination 7 (hereafter, the HEDIS immunization measure), as its standard measure of immunization rates for its child population.

2. Objective

The primary objective of this study is to develop a methodology for estimating the effect of CEHRT use by Maryland Medicaid managed care network providers on a HEDIS immunization measure to provide a working model for measuring provider-specific outcomes. The approach used by the research team may be used for other health technology adoptions or other outcome measures where a single health practitioner can be reasonably assumed to be responsible for care leading to the outcome.

3. Materials and methods

3.1. Study design

This research used a retrospective, interrupted time series design to assess the association between CEHRT use and a provider-specific HEDIS immunization measure (Fig. 1) [17]. The first observation period begins in 2010, a full year before Maryland began the Medicaid EHR Incentive Program. Providers selected for the intervention group adopted their EHR in 2011 or 2012 and achieved MU in 2013 (Fig. 1).

Fig. 1.

Research design for studying the effect of CEHRT on HEDIS immunization measure.* *Solid, dark grey boxes represent measurement periods for the HEDIS immunization measure for the intervention group. Solid, white boxes represent HEDIS immunization measurement periods for the comparison group. The box with a hatched pattern represents the contamination buffer. Abbreviations: CY: Calendar Year; MU: Meaningful Use.

Because the Medicaid EHR Incentive Program’s participation rules allowed a provider to participate in the program only once per year, this study applied a one-year measurement pause, or “contamination buffer” (Fig. 1). The buffer is necessary because providers who achieved MU in 2013 likely acquired their EHR in 2012, but a provider who acquired and implemented an EHR in 2012 could have done so at any point during that year. The exact implementation dates were unknown to the research team.
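The period layout above can be expressed as a small lookup. This is a minimal sketch, assuming the year assignments implied by Fig. 1 and Table 1 (2010 and 2011 pooled as pre-intervention, 2012 as the excluded buffer, 2013 and 2014 as post-intervention); the function names and record layout are hypothetical.

```python
from typing import Dict, Iterable, List, Optional, Tuple

# Hypothetical period sets, following the study design described above.
PRE_YEARS = {2010, 2011}      # pooled pre-intervention years
BUFFER_YEARS = {2012}         # contamination buffer, excluded from analysis
POST_YEARS = {2013, 2014}     # post-intervention measurement years


def assign_period(calendar_year: int) -> Optional[str]:
    """Return 'pre' or 'post', or None for the buffer year and out-of-range years."""
    if calendar_year in BUFFER_YEARS:
        return None
    if calendar_year in PRE_YEARS:
        return "pre"
    if calendar_year in POST_YEARS:
        return "post"
    return None  # any year outside 2010-2014 is also dropped


def split_by_period(
    records: Iterable[Tuple[str, int, float]]
) -> Dict[str, List[Tuple[str, int, float]]]:
    """Bucket (provider_id, year, score) records into pre/post periods."""
    buckets: Dict[str, List[Tuple[str, int, float]]] = {"pre": [], "post": []}
    for provider_id, year, score in records:
        period = assign_period(year)
        if period is not None:
            buckets[period].append((provider_id, year, score))
    return buckets
```

Dropping the buffer year entirely, rather than assigning it to either period, keeps an EHR installed at an unknown point in 2012 from contaminating both sides of the comparison.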

3.2. Study sample

The study population included any Maryland Medicaid Managed Care Organization (MCO) primary care network physician who was continuously enrolled in an MCO over the four-year study period and provided immunizations. From this population, the intervention group contained those providers who adopted an EHR and achieved MU in 2013 while reporting eCQM NQF#0038 (Childhood Immunization Status) (n = 147). In 2013, providers participating in the EHR Incentive Program were not required to submit the Childhood Immunization Status eCQM. Because reporting this measure was optional, we assumed that providers who submitted it likely intended to use their CEHRT at least to track vaccines administered to their patients. At no point in this study did we use the NQF#0038 rates self-reported by EHR-adopting providers.

The providers who met MU in 2013 and attested to meeting NQF#0038 (the “intervention group”) were compared to PCPs who gave immunizations but had not adopted CEHRT (the “comparison group”). We compared the intervention group’s HEDIS immunization measure result to the HEDIS immunization measure results of the comparison group. The authors created a propensity score matching (PSM) comparison group using R’s MatchIt package and the nearest neighbor approach, 1:1 match ratio, without replacement (n = 147) [18], [19].

We operationalized PSM by scoring each provider’s likelihood of adopting CEHRT, matching on data from each calendar year for multiple provider characteristics: primary group affiliation; specialization; Early and Periodic Screening, Diagnostic, and Treatment (EPSDT) certification status; participation in the Vaccines for Children Program (VFC); and Medicaid claims and encounter volume [20]. The number, size, and distribution of individuals within groups are available in Supplementary Materials, Table 2.
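The 1:1 nearest-neighbor matching without replacement described above was done with R’s MatchIt. As an illustration only, the Python sketch below shows the same idea as a greedy algorithm on precomputed propensity scores. It is not MatchIt’s implementation: the function name is hypothetical, and processing treated units in descending score order (with ties going to the first control found) is an assumption that may differ from MatchIt’s ordering and tie-breaking.

```python
from typing import Dict, List, Tuple


def greedy_nearest_neighbor_match(
    treated: Dict[str, float], controls: Dict[str, float]
) -> List[Tuple[str, str]]:
    """1:1 greedy nearest-neighbor matching on propensity score, without replacement.

    treated / controls map a provider ID to its estimated propensity score.
    Returns (treated_id, control_id) pairs.
    """
    if len(controls) < len(treated):
        raise ValueError("need at least as many controls as treated units")
    available = dict(controls)  # controls not yet used (no replacement)
    pairs: List[Tuple[str, str]] = []
    # Match highest-score treated units first; they tend to be hardest to match.
    for t_id, t_score in sorted(treated.items(), key=lambda kv: kv[1], reverse=True):
        c_id = min(available, key=lambda c: abs(available[c] - t_score))
        pairs.append((t_id, c_id))
        del available[c_id]  # each control provider is used at most once
    return pairs
```

Because matching is greedy, an early treated unit can take a control that would have been a closer match for a later one; optimal matching avoids this at higher computational cost.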

3.3. Outcome measure

The outcome measure, the HEDIS immunization measure, requires that the following vaccines be administered before age 2: four diphtheria, tetanus, and acellular pertussis (DTaP); three inactivated poliovirus (IPV); one measles, mumps, and rubella (MMR); two Haemophilus influenzae type b (HiB); two hepatitis B (HepB); one varicella-zoster virus (“chicken pox”) (VZV); four pneumococcal conjugate (PCV); two hepatitis A (HepA); and two or three rotavirus (RV). See Supplementary Materials, Tables 3 and 4, for additional details about the outcome measure. We calculated the HEDIS immunization measure for each outcome measurement period in this study.
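The dose requirements listed above reduce to a per-child check. This is a minimal sketch, assuming dose counts have already been restricted to the valid time windows (Supplementary Table 3); the dose counts are copied from the list above and should be confirmed against the HEDIS specification year in use. The function name and the `rv_series_length` parameter (2 or 3, depending on the rotavirus product) are hypothetical.

```python
from typing import Mapping

# Minimum dose counts as listed in the text above; rotavirus is handled separately.
REQUIRED_DOSES = {
    "DTaP": 4,
    "IPV": 3,
    "MMR": 1,
    "HiB": 2,
    "HepB": 2,
    "VZV": 1,
    "PCV": 4,
    "HepA": 2,
}


def meets_combo7(dose_counts: Mapping[str, int], rv_series_length: int) -> bool:
    """True if a child has every required dose counted before age 2.

    dose_counts: doses per antigen, already limited to valid time windows
    (timing rules are assumed to be applied upstream).
    rv_series_length: 2 or 3, depending on the rotavirus product's series.
    """
    if rv_series_length not in (2, 3):
        raise ValueError("rotavirus series length must be 2 or 3")
    for antigen, required in REQUIRED_DOSES.items():
        if dose_counts.get(antigen, 0) < required:
            return False
    return dose_counts.get("RV", 0) >= rv_series_length
```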

3.4. Patient attribution

To calculate a HEDIS immunization measure at the provider level, the authors used a passive patient-to-provider attribution algorithm based on the first presence of Evaluation and Management preventive medicine Current Procedural Terminology (CPT) codes signaling the child’s first outpatient visit with a PCP, an approach similar to one used by Medicare for quality reporting programs [21], [22]. Because Medicaid does not necessarily confirm whether a child’s visit is initial or periodic (e.g., CPT code 99381 versus 99391), we also included codes 99391 and 99392. However, CPT codes 99391 and 99392 were used to bind a recipient to a PCP only if the encounter history did not reveal an earlier 99381 or 99382 code. Additionally, we restricted the search to encounters for Medicaid beneficiaries younger than 2 years.
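A first-presence attribution rule of this kind can be sketched as follows. This is one reading of the rule, not the authors’ code: the earliest qualifying preventive visit before age 2 determines the PCP, and on a same-day tie an initial-visit code (99381/99382) takes precedence over a periodic code (99391/99392). The tuple layout and function name are hypothetical.

```python
from datetime import date
from typing import Iterable, Optional, Tuple

INITIAL_CODES = {"99381", "99382"}   # initial preventive visit codes
PERIODIC_CODES = {"99391", "99392"}  # periodic codes, used only without an earlier initial code


def attribute_child(
    encounters: Iterable[Tuple[date, str, str, float]]
) -> Optional[str]:
    """Return the PCP ID a child is attributed to, or None.

    Each encounter is (service_date, cpt_code, pcp_id, age_in_years).
    Only preventive visits before age 2 qualify.
    """
    qualifying = [
        e for e in encounters
        if e[3] < 2 and (e[1] in INITIAL_CODES or e[1] in PERIODIC_CODES)
    ]
    if not qualifying:
        return None
    # Earliest visit first; on the same date an initial-visit code wins.
    first = min(qualifying, key=lambda e: (e[0], 0 if e[1] in INITIAL_CODES else 1))
    return first[2]
```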

3.5. Propensity score

Because researchers and health system administrators will likely use this method while the health care delivery system is actively providing care and the health information technology has already been implemented, most, if not all, of the research designs will be observational, using quasi-experimental methods. Thus, a clear comparison group will be lacking. One method for establishing a comparison group in quasi-experimental designs is PSM (Fig. 2). A propensity score is the conditional probability of assignment to the treatment group given a set of observed pre-intervention covariates [23], [24]. In observational studies, which, unlike randomized controlled trials, lack a clear comparison group, PSM can help match the observed pre-intervention assignment variables of individuals in the intervention group to those of a theoretical comparison group [25]. By matching on observed pre-intervention variables, researchers and health care administrators may improve the likelihood that differences in outcome between the intervention and comparison groups are due to the intervention rather than to differences in those observed covariates; PSM cannot, however, balance unmeasured covariates. In other words, the more similar the intervention and comparison groups are before the intervention, the more confident the researcher or administrator can be in drawing causal inferences between intervention and outcomes [25].

Fig. 2.

Propensity score distribution.

Koepke et al. used surveys to identify provider and patient characteristics that correlated with childhood immunization rates [20]. Following Koepke et al.’s work, the authors analyzed the relationship of provider characteristics, such as practice size, provider specialty, and participation in the VFC program, to childhood vaccination rates, and found the strongest correlation with provider type [20]. For this research, PSM is operationalized by comparing the intervention group with the potential comparison group, scoring the likelihood of adopting CEHRT, and matching on data for each calendar year on: provider primary group affiliation (calendar years 2010–2012); EPSDT certification status (calendar years 2010–2012); provider specialization; participation in the VFC program; total Medicaid claims and encounter volume (calendar years 2010–2012); percent of Medicaid claims and encounter volume for children under 2 years of age (calendar years 2010–2012); and percent of Medicaid claims and encounter volume for patients between the ages of 3 and 18 (calendar years 2010–2012).

Because we are interested in provider-level outcome measures, we conducted PSM at the individual provider level (controlling for group membership) instead of creating propensity scores at the provider-cross-group level.
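The propensity scores themselves were estimated in R with MatchIt, whose default distance measure is a logistic-regression propensity score. As an illustration only, the pure-Python sketch below fits an unpenalized logistic regression of CEHRT adoption on provider covariates by batch gradient ascent. The function name, learning rate, and iteration count are hypothetical choices; a real analysis would use an established GLM routine.

```python
import math
from typing import List, Sequence


def fit_propensity_scores(
    X: Sequence[Sequence[float]],
    treated: Sequence[int],
    lr: float = 0.1,
    n_iter: int = 5000,
) -> List[float]:
    """Estimate P(adopted CEHRT | covariates) with a plain logistic regression.

    X: one row of numeric covariates per provider (e.g., claim volume,
    VFC participation coded 0/1); treated: 1 for intervention, 0 otherwise.
    Fitted by batch gradient ascent on the mean log-likelihood.
    """
    n, k = len(X), len(X[0])
    w = [0.0] * (k + 1)  # w[0] is the intercept

    def linear(row: Sequence[float]) -> float:
        return w[0] + sum(wi * xi for wi, xi in zip(w[1:], row))

    for _ in range(n_iter):
        grad = [0.0] * (k + 1)
        for row, y in zip(X, treated):
            p = 1.0 / (1.0 + math.exp(-linear(row)))
            grad[0] += y - p  # gradient of the log-likelihood w.r.t. intercept
            for j, xj in enumerate(row):
                grad[j + 1] += (y - p) * xj
        w = [wi + lr * g / n for wi, g in zip(w, grad)]
    return [1.0 / (1.0 + math.exp(-linear(row))) for row in X]
```

With perfectly separable data the unpenalized estimates diverge, which is one reason established implementations monitor convergence.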

Fig. 2 depicts the propensity score’s distribution, pre- and post-matching. Each open circle is a unique provider. The closer the “Matched Intervention Units” scatter plot resembles the “Matched Comparison Units,” the better the match. As Fig. 2 shows, visually, the matched intervention and comparison groups seem well matched.

A full-variable balance plot (“love plot”) provides a better visualization technique to assess the impact of PSM on creating a suitable comparison group for analysis. Fig. 3 shows the balance plot of our propensity score matching, comparing each variable pre- and post-matching.

Fig. 3.

Propensity score matching balance plot. Distance: a construct of the love plot that measures the difference in propensity scores; EPSDT: Early and Periodic Screening, Diagnostic, and Treatment; PV: patient volume for the individual provider for the given year; Provider Group: a unique ID coded for the group across years, with no practical meaning for PSM; and VFC: Vaccines for Children.

Fig. 3 ranks the study variables by their improvement after matching. The dotted line marks the 0.1 mean difference threshold; the smaller a variable’s post-matching difference relative to this threshold, the better the match (Fig. 3). Supplementary Materials, Table 5, provides the exact values for each variable in the intervention and comparison groups and the results of a statistical test of differences.
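Balance checks like the one in Fig. 3 are usually based on a standardized mean difference (SMD). This is a minimal sketch, assuming the pooled (average) variance of both groups in the denominator; MatchIt’s summary may standardize differently (for example, by the treated group’s standard deviation when targeting the effect on the treated), so its values can differ. The function names are hypothetical.

```python
import math
from statistics import mean, variance
from typing import Sequence


def standardized_mean_difference(
    treated: Sequence[float], comparison: Sequence[float]
) -> float:
    """Absolute SMD using the average of the two groups' sample variances."""
    pooled_sd = math.sqrt((variance(treated) + variance(comparison)) / 2)
    if pooled_sd == 0:
        return 0.0 if mean(treated) == mean(comparison) else math.inf
    return abs(mean(treated) - mean(comparison)) / pooled_sd


def is_balanced(
    treated: Sequence[float], comparison: Sequence[float], threshold: float = 0.1
) -> bool:
    """Apply the conventional 0.1 threshold shown as the dotted line in Fig. 3."""
    return standardized_mean_difference(treated, comparison) < threshold
```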

3.6. Data sources

This study used six data sets provided by three data stewards: the Maryland Department of Health (MDH), the State of Maryland Board of Physicians (MD BoP), and the Maryland Health Care Commission (MHCC). Nearly all the data sources are administrative and should be available to researchers and health system administrators in other states. For this study, we controlled for programmatic confounders that may have affected CEHRT acquisition or vaccine administration, such as Maryland’s state payor incentive program, which provided financial incentives to certain providers who adopted an EHR, and Maryland’s VFC and EPSDT (also known as Healthy Kids) programs.

The study data sets included: (1) the primary administrative data set for Maryland Medicaid, Maryland’s Medicaid Management Information System (MMIS), which contains claims and encounter data and is used to calculate HEDIS measurements for the statewide Value-Based Purchasing program and for this study (Fig. 4, Ⓐ); (2) the administrative data set on use of the health technology, in this study Maryland’s electronic Medicaid Incentive Payment Program (eMIPP), which contains data related to the EHR Incentive Program, including participation, EHR type, and MU measures (Fig. 4, Ⓑ); (3) MD BoP, which contains licensure and survey data on all physicians licensed in Maryland and is used to obtain demographic information on providers who self-identify as possessing an EHR (Fig. 4, Ⓒ); (4) Maryland’s VFC, which contains data on provider immunization administration and participation in the VFC (Fig. 4, Ⓓ); (5) Maryland’s state payor incentive program, a state-specific program that offers incentives to primary care practices for adopting and using certified EHR technology, and whose database includes data on provider participation and year of participation (Fig. 4, Ⓔ); and (6) Maryland’s EPSDT Program (also known as Healthy Kids), which certifies Maryland Medicaid pediatricians as meeting national EPSDT standards (Fig. 4, Ⓕ). Summary statistics for all variables extracted from these data sources are available in Supplementary Materials, Table 6.

Fig. 4.

Data Aggregation Map.

3.7. Data linking

As shown in Fig. 4, the National Provider Identifier (NPI), a unique, provider-specific identifier self-attested to by every health care provider via the National Plan and Provider Enumeration System (NPPES), is the primary key used to combine all data sets. Every data set except Maryland’s VFC program uses the NPI to track records. To ensure that only individual physicians with NPIs known to NPPES were included in the analysis, we used the NPPES Application Programming Interface to validate provider type, status (active or inactive), and NPI type (Type 1, individual providers only). Additionally, where necessary, we compared provider type and specialty codes across MCOs and with NPPES to confirm a single provider type and specialty for each provider.

Maryland’s VFC program uses an Organization Identifier, which tracks the practice location to which vaccines are supplied and where they are stored. To incorporate provider VFC participation data into the master data set used for this analysis, we de-duplicated VFC participation by primary provider contact for each Organization Identifier. Then, using first- and last-name matches within Microsoft Access, we pulled NPIs from all other data sets. If we could not find an NPI in the existing data sets, we searched the NPPES registry using first name, last name, and Maryland as the practice state. For each calendar year, we assigned a binary variable to designate participation in the VFC program.
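The authors performed this linkage in Microsoft Access. The Python sketch below only illustrates the two steps described above: collapsing VFC rows to one primary contact per Organization Identifier, then looking up an NPI by normalized first and last name. The function names, row layout, and name-keyed directory are hypothetical. Exact name matching can be ambiguous for common names, and a dictionary keyed by name silently keeps only one NPI per name, so unmatched or duplicated names still need manual review (for example, an NPPES registry search).

```python
from typing import Dict, Iterable, Optional, Tuple


def normalize_name(first: str, last: str) -> Tuple[str, str]:
    """Case- and surrounding-whitespace-insensitive name key."""
    return (first.strip().lower(), last.strip().lower())


def link_vfc_to_npi(
    vfc_rows: Iterable[Tuple[str, str, str]],
    npi_directory: Dict[Tuple[str, str], str],
) -> Dict[str, Optional[str]]:
    """Map each VFC Organization Identifier to an NPI via its primary contact.

    vfc_rows: (org_id, contact_first, contact_last); duplicates are collapsed
    so each org_id keeps its first listed primary contact.
    npi_directory: normalized (first, last) -> NPI, built from the other data sets.
    Unmatched organizations map to None.
    """
    primary_contact: Dict[str, Tuple[str, str]] = {}
    for org_id, first, last in vfc_rows:
        primary_contact.setdefault(org_id, normalize_name(first, last))
    return {org: npi_directory.get(name) for org, name in primary_contact.items()}
```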

To calculate the outcome measure, the HEDIS immunization quality measure, we obtained the encounter and claims history for all recipients linked to PCPs using Evaluation and Management codes. All recipients were tracked within MMIS using unique system keys. For Maryland, the unique key was the Medical Assistance Number (MA Number for providers or Recipient ID for Medicaid beneficiaries). Medicaid recipients may have more than one MA Number; however, all MA Numbers are linked to the first MA Number a Maryland Medicaid recipient received: the Legacy MA Number. Each time a recipient received a particular vaccine within the designated time interval, the PCP associated with that recipient was credited with administering the vaccination. By vaccine and by calendar year, we summed the number of recipients assigned to the PCP who received the vaccine, as well as the total number of recipients assigned to the PCP. This process yielded vaccination rates by provider and by vaccine. To obtain vaccination combination scores, we summed the appropriate vaccine counts and calculated the ratio of vaccinations to total affiliated recipients for each PCP.

Eq. (1) details the vaccination combination score calculation. nPatient(PCP_i) is the number of patients (j = 1, ..., n) associated with a particular PCP_i. nVaccines is the number of vaccines administered to each patient pt_j within the correct time interval, k. See Supplementary Table 3 for the appropriate time interval for each vaccination.

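$$\mathrm{Score}(PCP_i)=\frac{1}{nPatient(PCP_i)}\sum_{j=1}^{nPatient(PCP_i)} I\big(pt_j \text{ received all required } nVaccines \text{ within interval } k\big) \tag{1}$$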

Note: I(p) = 1 if p is true and 0 if p is false.
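Eq. (1) amounts to a per-PCP proportion of attributed children for whom the indicator is 1. This is a minimal sketch for one calendar year, assuming each child has already been attributed to a PCP and has a precomputed boolean for the indicator I(·); the function name and input layout are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def provider_combo_scores(children: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Combination score per PCP: share of attributed children fully vaccinated.

    children: (pcp_id, met_all) per attributed child for one calendar year,
    where met_all plays the role of the indicator I(.) in Eq. (1).
    """
    numer: Dict[str, int] = defaultdict(int)  # children meeting all requirements
    denom: Dict[str, int] = defaultdict(int)  # nPatient(PCP_i)
    for pcp_id, met_all in children:
        denom[pcp_id] += 1
        numer[pcp_id] += int(met_all)
    return {pcp: numer[pcp] / denom[pcp] for pcp in denom}
```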

All intervention and potential comparison group providers participate in multiple groups whose membership varies over time. As a result, group membership may confound the estimated effect of EHRs on quality to varying degrees, so the analytic model must account for group-based confounding. A “group” is defined as all providers who practice under, and are affiliated with, a Type 2 “Organization” NPI in Maryland Medicaid. To account for location-based confounding, we used the MA Number in place of the NPI to establish group affiliation (data obtained as shown in Fig. 4).

We linked all the data sources using Microsoft Access (Fig. 4, Ⓖ). After combining these data, we identified 78,922 children affiliated with a unique MCO network physician, 15,060 of whom were affiliated with one of the 147 intervention providers and 63,866 were affiliated with the cohort of 1253 potential comparison group providers (Fig. 4, Ⓗ).

Because providers practice with many groups, and because this analysis recognizes that the intervention group comprises providers who use an EHR at a physical practice location, we restricted each provider in each calendar year to a single primary group. For the intervention group in calendar year 2013, the primary group is the pay-to MA Number to which the provider released their Medicaid EHR Incentive payment. For all other periods, and for all comparison group periods, we used an iterative approach based on data in the Fee-for-Service (FFS) MMIS file to identify primary group affiliation.

To identify all primary group affiliations other than those for the intervention group in calendar year 2013, we queried the FFS MMIS file for all group affiliations. Next, for each calendar year, we counted the number of days a provider was affiliated with each group, from the begin date of the group affiliation to the earlier of the affiliation’s end date or the end of the calendar year. For each calendar year, the group with the greatest number of affiliated days was marked as the provider’s primary group. If we could not identify a primary group in this way, we used the affiliation with the earliest begin date.
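The primary-group rule above can be sketched as follows. This is an illustration under stated assumptions, not the authors’ query: each affiliation interval is clipped to the calendar year on both ends, an open-ended affiliation (end date None) runs through year end, ties go to the affiliation with the earlier begin date, and if no affiliation overlaps the year the earliest begin date is used. The function name and tuple layout are hypothetical.

```python
from datetime import date
from typing import Iterable, Optional, Tuple


def primary_group(
    affiliations: Iterable[Tuple[str, date, Optional[date]]], year: int
) -> Optional[str]:
    """Pick a provider's primary group for one calendar year.

    affiliations: (group_id, begin_date, end_date or None if open-ended).
    """
    affiliations = list(affiliations)
    if not affiliations:
        return None
    year_start, year_end = date(year, 1, 1), date(year, 12, 31)
    best, best_days = None, 0
    # Visit affiliations by begin date so strict '>' keeps the earlier one on ties.
    for group_id, begin, end in sorted(affiliations, key=lambda a: a[1]):
        start = max(begin, year_start)
        stop = min(end or year_end, year_end)
        days = (stop - start).days + 1 if stop >= start else 0  # inclusive count
        if days > best_days:
            best, best_days = group_id, days
    if best is None:
        # No affiliation overlaps the year: fall back to the earliest begin date.
        best = min(affiliations, key=lambda a: a[1])[0]
    return best
```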

3.8. Model specification and analysis

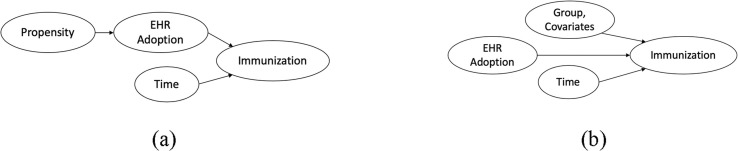

The essential causal model is shown in Fig. 5 . In the absence of “back doors” or “colliders,” the desired relationship (EHR Adoption → Immunization) is identifiable [26].

Fig. 5.

Presumed causal models: (a) With propensity score (b) Without propensity score.* *This model is the basis of the Bayesian statistical model developed in this study.

The data used for this analysis have a complex, hierarchical structure (Fig. 4) with three nested levels: groups, providers, and time. Providers administer vaccinations in each study year, and providers are nested within groups, which may themselves adopt CEHRT. In practice, these relationships may be even more complex: over time, providers may move between primary groups and thus have different group members. Furthermore, each level of the data carries a different degree of uncertainty, which allows background information at one level to inform the likelihood of an immunization status at another. For example, provider-level immunization rates are likely to differ from group-level immunization rates.

To address the relationships between and within the levels of this hierarchy directly, we used a Bayesian modeling method. This approach helped address (1) the complexity of the relationships within the data; (2) the resulting uncertainty at each level of the data; (3) the relatively small sample size; and (4) the need for greater generalizability of the posterior distribution. Bayesian statistics offer methodological benefits that address all four concerns [27].

We specified hierarchical models, expressed as Bayesian inference Using Gibbs Sampling (BUGS) models, with non-informative conjugate priors [28]. A non-informative conjugate prior is a higher-level prior distribution that belongs to the same family as the underlying distribution it feeds within the model, but whose parameters are specified to be vague [29]. Using BUGS, the first level of the model described provider vaccination rates as a function of base vaccination rates, the behavior of the rest of the provider’s group, and other relevant covariates. The second level described the difference in vaccination rates across years, which in turn is a function of CEHRT status.

The likelihood was derived by Eq. (2), which states that the likelihood of θ (the parameter of interest) given y (the observed data) is the product of the probabilities of each observation, y_1 through y_n, given θ, where each probability has a known form (e.g., a normal distribution). The product form assumes that the observations are conditionally independent given θ:

$$L(\theta \mid y) = \prod_{i=1}^{n} p(y_i \mid \theta) \tag{2}$$

The posterior distribution of θ is derived from Bayes’ theorem (Bayes’ rule): the posterior distribution of θ given y is proportional to (∝) the prior distribution of θ times the likelihood of θ given y, where p is the probability function and L is the likelihood [20] (Eq. (3)):

$$p(\theta \mid y) \propto p(\theta)\, L(\theta \mid y) \tag{3}$$
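Eqs. (2) and (3) can be checked numerically on a toy problem. The sketch below is illustrative only and is not the study’s model: it treats each child’s outcome as an independent Bernoulli draw with success probability θ, places a uniform prior on θ, multiplies prior and likelihood on a grid (working on the log scale to avoid underflow), and normalizes. The function name and grid size are hypothetical.

```python
import math
from typing import List, Sequence, Tuple


def grid_posterior(y: Sequence[int], n_grid: int = 1001) -> Tuple[List[float], List[float]]:
    """Posterior of a success probability theta on a grid (Eqs. 2 and 3).

    y: independent 0/1 outcomes (e.g., whether a child met the measure).
    Prior: uniform on (0, 1). Returns (grid points, normalized posterior weights).
    """
    thetas = [(i + 0.5) / n_grid for i in range(n_grid)]  # midpoints avoid 0 and 1
    log_post = []
    for t in thetas:
        # Eq. (2) on the log scale: sum of log Bernoulli probabilities.
        log_lik = sum(math.log(t) if yi else math.log(1 - t) for yi in y)
        log_post.append(0.0 + log_lik)  # Eq. (3): log prior (constant) + log likelihood
    m = max(log_post)
    weights = [math.exp(lp - m) for lp in log_post]
    total = sum(weights)
    return thetas, [w / total for w in weights]
```

With 7 successes in 10 trials and a uniform prior, the exact posterior is Beta(8, 4), so the grid posterior mean should be close to 8/12 ≈ 0.667. Models like the one in this study have no such closed form, which is why MCMC sampling is used instead of a grid.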

We used the R programming language to calculate the posterior distribution [18]. The rjags package runs BUGS-language models in JAGS using Markov Chain Monte Carlo (MCMC) simulation [30], [31], [32]. We used the Convergence Diagnosis and Output Analysis (CODA) package to calculate outputs and diagnostics on the MCMC samples [33]. We ran the Bayesian models with 1000 adaptation iterations, 1000 burn-in iterations, and 20,000 iterations in each of 4 chains. These settings are standard for a model of this size. The final posterior distributions were evaluated on 20,000 samples in each of the 4 chains; running multiple chains helps detect chains that become stuck in one region of the posterior. Adaptation and burn-in were completed before the monitored iterations began.

We specified multiple models in an iterative fashion, visually inspecting the sampling distributions for key nodes of the model to determine whether the samples are converging around a mean using trace and kernel density plots [34]. We also monitored the quality of chain mixing via the Gelman and Rubin diagnostic and the presence of autocorrelation through the Raftery and Lewis diagnostic and autocorrelation diagrams [35]. We then used the Deviance Information Criterion (DIC) and a modified χ2-like goodness-of-fit test as the measures of merit [36], [37].
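The Gelman and Rubin diagnostic compares between-chain and within-chain variance for each monitored parameter. The sketch below is the basic potential scale reduction factor (R-hat) for one parameter, shown for illustration; coda’s `gelman.diag` applies additional corrections, so its values can differ slightly. The function name is hypothetical, and the 1.1 cutoff mentioned in the docstring is a common rule of thumb rather than a value taken from this study.

```python
import math
from statistics import mean, variance
from typing import Sequence


def gelman_rubin(chains: Sequence[Sequence[float]]) -> float:
    """Basic potential scale reduction factor (R-hat) for one parameter.

    chains: m >= 2 post-burn-in chains of equal length n >= 2.
    Values near 1 suggest the chains have mixed; values above about 1.1
    are commonly treated as a sign of non-convergence.
    """
    m, n = len(chains), len(chains[0])
    if m < 2 or n < 2 or any(len(c) != n for c in chains):
        raise ValueError("need >= 2 chains of equal length >= 2")
    b = n * variance([mean(c) for c in chains])  # between-chain variance
    w = mean([variance(c) for c in chains])      # mean within-chain variance
    if w == 0:
        raise ValueError("within-chain variance is zero")
    var_hat = (n - 1) / n * w + b / n            # pooled estimate of posterior variance
    return math.sqrt(var_hat / w)
```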

A traditional χ2 statistic sums the squared standardized residuals of the observed values around their expected values under a particular model. A weakness of Gelman’s χ2-like statistic is that it does not follow a χ2 distribution [37]; having a χ2 distribution facilitates model comparison through p-values. One method of creating a χ2-like goodness-of-fit test compares the proportion of posterior means exceeding the 95th quantile of a distribution based on equally probable quantiles of a Poisson distribution derived at the base model’s mean [37]. Supplementary Materials, Table 7, shows the model fit comparison using the DIC and the χ2-like goodness-of-fit test.
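For reference, the traditional statistic described above, applied to Poisson counts, is the Pearson sum of squared standardized residuals. This sketch shows only that baseline statistic; it is not the modified χ2-like test the authors used. The function name is hypothetical.

```python
from typing import Sequence


def pearson_chi_square(observed: Sequence[float], expected: Sequence[float]) -> float:
    """Sum of squared standardized residuals, sum((o - e)^2 / e).

    For Poisson counts the variance equals the mean, so each residual
    (o - e) is standardized by sqrt(e).
    """
    if len(observed) != len(expected):
        raise ValueError("observed and expected must have the same length")
    if any(e <= 0 for e in expected):
        raise ValueError("expected counts must be positive")
    return sum((o - e) ** 2 / e for o, e in zip(observed, expected))
```

For example, observed counts of 10 and 5 against expected counts of 8 and 5 give (10 − 8)²/8 + 0 = 0.5.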

Fig. 6 visualizes the Bayesian model as a directed acyclic graph (DAG): a hierarchical logit model (comparing the HEDIS immunization measure of CEHRT users with that of non-users) with a Poisson distribution at the provider (j, k) level. The key parameter is delta.delta.mean.EHR, the change in the change in immunization status score due to CEHRT use.

Fig. 6.

Directed acyclic graph for a hierarchical Poisson distribution for the HEDIS immunization measure, without propensity score covariate adjustment.* *Single ovals: stochastic nodes (random variables with probability distributions); double ovals: deterministic nodes (variables functionally dependent on parents); and rectangles: deterministic nodes from the data. See Table 1 for explanations of the variable names.

The model incorporates fixed covariates for demographics, random effects at the provider (j) level, and data-derived group means. All prior distributions used throughout the model specification process are non-informative conjugate priors, as defined above [20]. The details for each node are described in Table 1.

Table 1.

List and description of nodes.

| Node | Description | Notes |
|---|---|---|
| Data-Derived Deterministic Nodes | | |
| p.preexp[j] | The probability of successfully meeting Immunization Status Score Combo 7 pre-intervention (pooled years 2010 and 2011) | |
| covariates[j] | State Regulated Payor EHR Incentive Program participation in each of 2011–2013 and 2014, and licensure survey data on prior EHR use in 2009 and 2010* | |
| 2013GrpProp[k] | The Immunization Status Score Combo 7 rate for group k in 2013 | |
| 2014GrpProp[k] | The Immunization Status Score Combo 7 rate for group k in 2014 | |
| n.provider[j] | The number of providers in the analysis (294) | |
| indicator.EHR[j] | A binary variable indicating whether provider j is in the intervention group | |
| PSM[j] | Provider-specific propensity score | |
| Stochastic Nodes | | |
| delta.noEHR[j] | The change in the probability of successfully meeting Immunization Status Score for non-EHR users | |
| delta.mean.noEHR | Population mean change in Immunization Status Score | Non-informative normal conjugate prior, mean 0, precision <0.001 |
| delta.noEHR.prec | Precision (inverse variance) of population mean change in Immunization Status Score | Non-informative gamma conjugate prior, mean 0.5, precision 0.5 |
| delta.delta.EHR[j] | The change in the probability of successfully meeting Immunization Status Score, comparing EHR users to non-EHR users | |
| delta.delta.mean.EHR | Population mean change in Immunization Status Score for EHR users | Non-informative normal conjugate prior, mean 0, precision <0.001 |
| delta.EHR.prec | Precision (inverse variance) of population mean change in Immunization Status Score for EHR users | Non-informative gamma conjugate prior, mean 0.5, precision 0.5 |
| r.observed[j] | The observed number of successes (Immunization Status Score numerators) | Inferred by p.provider, but fed directly from r.expected[j] as a Poisson distribution |
| Error[j] | Provider-level random effects | |
| mean.error | Population-level random effects | Non-informative normal conjugate prior, normal distribution with mean 0 and precision defined using tau.error |
| tau.error | Population-level random effects precision (inverse variance) | Non-informative gamma conjugate prior, gamma distribution with mean and precision 0.001 |
| Deterministic Nodes | | |
| Delta1[j]§ | The primary node estimating the change in the probability of successfully meeting Immunization Status Score | Delta1[j] = delta.delta.EHR[j]*indicator.EHR[j] + delta.noEHR[j] + PSM[j] |
| p.provider[j,k] | The success rate for provider j in group k | |
| r.expected[j] | The expected number of successes for provider j | |

The authors removed calendar year 2012 from the analysis to account for the unknown and varying times at which EHR Incentive Program participants may have installed their EHRs.

§ Delta1[j] = delta.noEHR[j] + PSM[j] for non-EHR users (indicator.EHR[j] = 0); Delta1[j] = delta.delta.EHR[j] + delta.noEHR[j] + PSM[j] for EHR users (indicator.EHR[j] = 1).
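The deterministic node Delta1[j] combines three provider-level terms, and the EHR-specific term switches on only for intervention providers. The following Python sketch mirrors that formula so the two footnoted cases can be checked directly. It is not the authors' BUGS/JAGS model code: the dataclass and function names are hypothetical, the node values are invented for illustration, and the link from Delta1[j] to p.provider[j,k] and r.expected[j] is omitted.

```python
from dataclasses import dataclass


@dataclass
class ProviderNodes:
    """Hypothetical container for provider j's node values (names follow Table 1)."""
    delta_noEHR: float      # delta.noEHR[j]
    delta_delta_EHR: float  # delta.delta.EHR[j]
    PSM: float              # PSM[j], the provider-specific propensity score
    indicator_EHR: int      # indicator.EHR[j]: 1 = intervention, 0 = comparison


def delta1(node: ProviderNodes) -> float:
    """Compute Delta1[j] = delta.delta.EHR[j]*indicator.EHR[j] + delta.noEHR[j] + PSM[j]."""
    if node.indicator_EHR not in (0, 1):
        raise ValueError("indicator.EHR[j] must be 0 or 1")
    return (node.delta_delta_EHR * node.indicator_EHR  # zero for non-EHR users
            + node.delta_noEHR
            + node.PSM)


# Invented node values for illustration only (not study estimates).
comparison = ProviderNodes(delta_noEHR=0.10, delta_delta_EHR=0.25, PSM=0.40, indicator_EHR=0)
intervention = ProviderNodes(delta_noEHR=0.10, delta_delta_EHR=0.25, PSM=0.40, indicator_EHR=1)
print(delta1(comparison))    # delta.noEHR + PSM
print(delta1(intervention))  # delta.delta.EHR + delta.noEHR + PSM
```

Writing the indicator as a multiplier, as the table does, keeps a single formula for both groups; the footnote's two cases fall out of setting indicator.EHR[j] to 0 or 1.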

4. Results

To estimate the effect of CEHRT on the HEDIS immunization measure, we monitored the posterior mean change in the log-odds of meeting the HEDIS immunization measure due to CEHRT use. The posterior mean of this log-odds change was 0.20; see Supplementary Materials, Fig. 1 for the trace and kernel density plots. As shown in Table 2, comparing the intervention group with its comparison group showed an estimated 21% improvement in the odds of meeting the HEDIS immunization measure (Combination 7) due to EHR use. However, because the credible set (the Bayesian analogue of the confidence interval in frequentist statistics) contains 1, this result indicates no statistically significant difference between the intervention and comparison groups.
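The paragraph above moves between a posterior mean on the log-odds scale and an odds-ratio credible set that is checked against 1. A minimal Python sketch of that summary step follows, assuming the MCMC draws of the log-odds change are available as a plain list (for example, after export from rjags/coda). The function name is hypothetical, and the simulated draws are invented; they are not the study's posterior samples.

```python
import math
import random
import statistics


def summarize_log_odds(draws: list[float], level: float = 0.95) -> dict:
    """Summarize MCMC draws of a log-odds change on the odds-ratio scale.

    Each draw is exponentiated; the posterior mean odds ratio and an
    equal-tailed credible set are then read off the transformed draws.
    """
    odds = sorted(math.exp(d) for d in draws)
    n = len(odds)
    tail = (1.0 - level) / 2.0
    # Simple empirical quantiles by sorted index (no interpolation).
    lo = odds[math.floor(tail * (n - 1))]
    hi = odds[math.ceil((1.0 - tail) * (n - 1))]
    return {
        "mean_log_odds": statistics.fmean(draws),
        "mean_odds_ratio": statistics.fmean(odds),
        "credible_set": (lo, hi),
        # If the set contains 1, "no change in odds" remains plausible.
        "contains_1": lo <= 1.0 <= hi,
    }


# Invented draws for illustration only (NOT the study's posterior).
rng = random.Random(2013)
draws = [rng.gauss(0.20, 0.18) for _ in range(10_000)]
summary = summarize_log_odds(draws)
print(summary)
```

Because exp is monotone, an equal-tailed credible set can be computed on either scale and transformed; the posterior mean odds ratio, however, generally differs from exp of the posterior mean log-odds, so the scale on which a mean is reported matters.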

Table 2.

Mean change in the change in odds of increasing the HEDIS immunization measure due to CEHRT adoption (intervention vs. comparison group).

| Variable | Mean | SD | 95% Credible Set |
|---|---|---|---|
| delta.delta.mean.EHR | 1.21 | 4.50 | (0.88–1.73) |

Because EHR software may offer different ways of helping providers improve their immunization status scores (e.g., a better user interface or more helpful clinical decision support [CDS]), we created a binary variable for each EHR developer with ten or more users and re-ran our model stratified by the underlying EHR. Table 3 lists these developers and the absolute difference in the group mean change in the HEDIS immunization measure relative to all EHR users. Estimates with credible sets not containing 1 are denoted with an asterisk. Only Epic produced a credible set that did not contain 1 when its group mean change in immunization score was compared with the overall intervention group mean.
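The per-developer binary variables described above can be built from a provider-to-developer mapping with a minimum-user threshold. The Python sketch below assumes such a mapping exists; the function name, provider IDs, and assignments are hypothetical (the counts for two developers are borrowed from Table 3 only to make the example realistic), and the stratified model re-fit itself is not shown.

```python
from collections import Counter


def vendor_indicators(provider_vendor: dict[str, str],
                      min_users: int = 10) -> dict[str, dict[str, int]]:
    """Create one 0/1 indicator per EHR developer with >= min_users providers.

    provider_vendor maps a provider ID to that provider's EHR developer.
    Providers whose developer falls below the threshold get all-zero
    indicators, i.e., they are not assigned to any developer stratum.
    """
    counts = Counter(provider_vendor.values())
    kept = sorted(v for v, c in counts.items() if c >= min_users)
    return {
        pid: {v: int(vendor == v) for v in kept}
        for pid, vendor in provider_vendor.items()
    }


# Hypothetical assignments; provider IDs are invented placeholders.
assignments = {}
for vendor, n in [("Allscripts", 13), ("Sage", 10), ("Practice Fusion", 3)]:
    for i in range(n):
        assignments[f"{vendor[:3]}-{i}"] = vendor

indicators = vendor_indicators(assignments)
print(sorted(indicators["All-0"]))
```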

Table 3.

Effect of EHR developer on HEDIS immunization measure.

| EHR vendor | Number of users | Absolute difference in odds of meeting the HEDIS immunization measure, comparing EHR developer to all EHR users |
|---|---|---|
| Allscripts | 13 | −0.34 |
| Aprima Medical Software, Inc. | 10 | 0.02 |
| eClinicalWorks, LLC | 38 | 0.87 |
| Epic Systems Corporation | 16 | −0.88* |
| GE Healthcare | 17 | 0.59 |
| Sage | 10 | 0.43 |

Note 1: Welch two-sample t-test comparing the change in group mean odds for each EHR developer's users with the overall change in mean odds for all EHR users (1.21).

Note 2: EHR vendors with fewer than 10 users were excluded: Acrendo Software, Inc.; Amazing Charts; athenahealth, Inc.; Bizmatics, Inc.; Connexin Software, Inc.; drchrono, Inc.; Enable healthcare, Inc.; Glenwood Systems, LLC; Greenway Health, LLC; MedPlus; Practice Fusion; and Viteria Healthcare Solutions, LLC.

*Credible set does not contain 1.
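Note 1 names a Welch two-sample t-test. The dependency-free Python sketch below computes the Welch statistic and the Welch–Satterthwaite degrees of freedom; SciPy users can instead call scipy.stats.ttest_ind(a, b, equal_var=False), which also returns a p-value. Exactly which samples entered the study's comparison (posterior draws or provider-level changes) is not stated here, so the inputs below are hypothetical.

```python
import math
import statistics


def welch_t_test(a: list[float], b: list[float]) -> tuple[float, float]:
    """Welch's unequal-variance two-sample t statistic and degrees of freedom.

    Returns (t, df), where df uses the Welch-Satterthwaite approximation.
    Raises ZeroDivisionError if both samples have zero variance. A p-value
    would additionally need the Student t CDF (e.g., from SciPy).
    """
    if len(a) < 2 or len(b) < 2:
        raise ValueError("each sample needs at least two observations")
    va = statistics.variance(a) / len(a)  # squared standard error of mean(a)
    vb = statistics.variance(b) / len(b)  # squared standard error of mean(b)
    t = (statistics.fmean(a) - statistics.fmean(b)) / math.sqrt(va + vb)
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    return t, df


# Hypothetical samples for illustration only.
vendor_group = [0.9, 1.4, 0.7, 1.1, 0.8]
all_ehr_users = [1.3, 1.2, 1.5, 1.0, 1.1, 1.4, 1.2]
t, df = welch_t_test(vendor_group, all_ehr_users)
print(f"t = {t:.3f}, df = {df:.1f}")
```

With equal sample sizes and equal variances, the Welch degrees of freedom reduce to 2(n − 1), the pooled-variance value.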

In sum, comparing EHR users meeting Meaningful Use in calendar year 2013 (the “intervention” group) with a propensity-score matched population of providers who did not adopt an EHR (the “comparison” group), over the two years before and the two years after implementation, revealed no statistically significant difference in the odds of meeting the HEDIS immunization measure.

5. Discussion

As CEHRT becomes more commonplace within the health care system, EHRs will continue to evolve and mature [38]. As they mature, their value in helping providers meet value-based care outcome requirements and future quality measure reporting needs should be assessed continuously. However, an established methodology for measuring the impact of CEHRT adoption, including new features that may be added to improve CEHRT functionality, is lacking. And, as concerns over the usability of CEHRT continue to grow, providers and their technology vendors should aggressively assess and adapt their technology to meet user needs.

This research highlights that, although providers may choose to be measured on a particular quality metric because of their specialization and patient population (e.g., PCPs administering vaccinations to children), adopting an EHR does not by itself mean that the EHR will improve their quality score, or that the EHR is designed to improve that specific measure’s score. Thus, flexibility in measure selection across quality and value-based programs may matter less for driving quality improvement than EHR functional flexibility. Unfortunately, just as CEHRT has made it easier for providers to select technology with certain baseline functionality, it has also made it difficult for providers to obtain EHRs tailored to their needs, which is where EHR use will actually be more meaningful [39], [40].

As Medicaid agencies continue to leverage federal funding to modernize their information systems, they will soon integrate clinical data with administrative data to measure quality. Linking these data may eliminate the need for the resource-intensive medical record review that hybrid HEDIS measures require. In the near future, Medicaid agencies will likely use quality scores as calls to action, revealing trends in patient health status to providers and MCOs so that interventions can be targeted to improve quality for the individual before health declines, rather than using quality scores as a retrospective, population-based summary statistic.

Our research shows that it is possible to leverage available administrative data, coupled with a Bayesian analytic framework and a patient-attribution methodology, to estimate provider-specific immunization rates for CEHRT users. In this study, we used several methodologic approaches that may be of use to researchers and administrators, including: the linking of multiple administrative datasets using National Provider Identifiers; the use of nearest-neighbor (1:1) propensity score matching to compare primary care physicians who attested to the Childhood Immunization Status measure and used CEHRT with matched comparisons from network physicians who provided immunizations but did not adopt an EHR; the attribution of patients to primary care physicians using first-presence, claims-based childhood visit information; and the use of a Bayesian hierarchical model to measure the change in immunization status score due to EHR use. Moreover, this study showed that, in our Medicaid target population, acquiring and using CEHRT for immunization status reporting was not sufficient to create a differential impact on immunization rates.
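Two of the steps listed above, first-presence patient attribution and 1:1 nearest-neighbor propensity score matching, can be sketched in a few lines. The Python below is a simplified stand-in under stated assumptions: claims are assumed to arrive as (patient, provider NPI, service date) tuples, propensity scores are assumed to be precomputed, and matching is greedy without replacement. The study cites the R package MatchIt; this is not MatchIt's implementation, and all identifiers are hypothetical placeholders, not real NPIs.

```python
from datetime import date


def first_presence_attribution(claims: list[tuple[str, str, date]]) -> dict[str, str]:
    """Attribute each patient to the provider on their earliest childhood-visit claim.

    Ties on the earliest date go to the claim encountered first.
    """
    first: dict[str, tuple[date, str]] = {}
    for patient, npi, when in claims:
        if patient not in first or when < first[patient][0]:
            first[patient] = (when, npi)
    return {patient: npi for patient, (_, npi) in first.items()}


def greedy_nn_match(treated: dict[str, float], controls: dict[str, float]) -> dict[str, str]:
    """Greedy 1:1 nearest-neighbor propensity score matching without replacement.

    treated and controls map a provider ID to its propensity score. Treated
    providers are matched in descending score order (one common convention).
    """
    available = dict(controls)
    pairs = {}
    for npi in sorted(treated, key=treated.get, reverse=True):
        if not available:
            break  # more treated than control providers
        best = min(available, key=lambda c: abs(available[c] - treated[npi]))
        pairs[npi] = best
        del available[best]  # without replacement
    return pairs


# Placeholder identifiers and dates for illustration only.
claims = [
    ("child-1", "NPI-A", date(2013, 3, 1)),
    ("child-1", "NPI-B", date(2013, 1, 15)),
    ("child-2", "NPI-A", date(2013, 2, 2)),
]
attribution = first_presence_attribution(claims)

treated = {"T1": 0.62, "T2": 0.35}               # CEHRT users: ID -> propensity score
controls = {"C1": 0.60, "C2": 0.30, "C3": 0.90}  # non-adopters: ID -> propensity score
pairs = greedy_nn_match(treated, controls)
print(attribution, pairs)
```

Greedy matching is order-dependent; optimal matching or a caliper would change which pairs form, so the ordering rule is a design choice worth reporting.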

Our methodology can be used to evaluate the impact of CEHRT’s new and maturing functionalities on achieving value-based quality measures and improving population health outcomes [41], [42], [43]. New CEHRT functionalities and data types captured in EHRs by PCPs are increasingly used to risk-stratify and manage patient populations at the health system or community level [44], [45], [46], [47]. However, the value of such CEHRT functionalities and data types (e.g., social determinants of health) [48], [49], [50], and the potential data challenges in improving population health quality measures, require additional research [51], [52]. The methodology used in this study can help such studies effectively control for various population-level moderators and mediators while measuring the net effect of EHR features on population-level quality measures [53].

Finally, our methodology offers a reusable approach for researchers and health system administrators to estimate the impact of health information technology on individual, provider-level, process-based, though outcomes-focused, quality measures. This method is an alternative to the current approach of assessing the value of CEHRT features through questionnaires and item response theory [54]. CEHRT functionality may be particularly important during public health crises [55], [56], [57], such as the current coronavirus disease 2019 (COVID-19) pandemic, when health care systems focused their attention on emergency care and individuals may have been under shelter-in-place requirements. Indeed, the CDC has reported declines in the ordering and administration of routine non-influenza pediatric vaccine doses around the peak of the COVID-19 outbreak [58]. Our approach can be used to estimate the impact of effective CEHRT functionalities in limiting the pandemic-related disruptions that have reduced preventive and non-emergent care.

5.1. Limitations

This study has some limitations. (1) As with all studies that use PSM to develop comparison groups, this study assumes ignorability (selection on observables) when defining covariates for propensity score matching. The authors attempted to mitigate this weakness by using covariates supported by immunization research [20]. The finding of an odds ratio close to 1 for the change in the immunization measure could have been due to unwarranted adjustment for confounding variables; however, our causal analysis obviates that concern (Fig. 5). (2) This study compares data from the 2 years before and the 2 years after implementation of the Medicaid EHR Incentive Program and expects to see a noticeable change in quality within 1 year of EHR implementation. Although it is reasonable to assume that a change in the HEDIS immunization measure may occur within a year, it may not be accurate to assume that every provider who self-selected as implementing an EHR in 2013 did so at the same time and to the same extent. EHR implementation may take different providers different amounts of time, and once the system is implemented, some providers may take longer than others to fully integrate it into their practice workflow. However, we examined 2 years of immunizations after the index year. (3) This study also assumes that a provider’s primary group is the group from which most of their claims and encounters derive. This assumption is plausible, as the claim volume attributed to, and the time spent with, a group likely serve as a proxy for the amount of physical time spent delivering care at that practice. (4) This research did not control for particular group and provider characteristics, such as how a group implemented its EHR, how an individual provider used the EHR, and whether providers are aware of the immunization schedule and administer vaccines according to it. (5) Finally, most likely because of the small sample size (16 Epic users), the absolute mean difference in the change in immunization status score for Epic CEHRT may be unreliable.
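Assumption (3) assigns each provider a primary group by claim volume. A minimal Python sketch of that rule follows, assuming each provider's claims carry a practice-group identifier; the function name and group IDs are hypothetical, and the study's own tie-breaking rule is not stated, so the first-seen tie rule here is only one option.

```python
from collections import Counter


def primary_group(claim_groups: list[str]) -> str:
    """Return the practice group accounting for most of a provider's claims.

    Counter.most_common orders equal counts by first occurrence, so ties
    go to the group that appears first in claim_groups.
    """
    if not claim_groups:
        raise ValueError("provider has no claims")
    return Counter(claim_groups).most_common(1)[0][0]


# Hypothetical practice-group IDs attached to each provider's claims.
provider_claims = {
    "NPI-A": ["G1", "G2", "G1", "G3", "G1"],
    "NPI-B": ["G2", "G3", "G3", "G2"],  # tie: G2 appears first
}
primary = {npi: primary_group(groups) for npi, groups in provider_claims.items()}
print(primary)
```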

6. Conclusion

This research has provided evidence for using Bayesian analysis of propensity-score matched provider populations to estimate the impact of CEHRT on outcomes-based quality measures. This method can be re-used to assess the impact of provider-level CEHRT on various quality measure outcomes.

Credit authorship contribution statement

Paul J. Messino: Conceptualization, Methodology, Software, Formal analysis, Investigation, Data curation, Writing - original draft, Visualization. Hadi Kharrazi: Writing - review & editing. Julia M. Kim: Writing - review & editing. Harold Lehmann: Supervision, Validation, Methodology, Writing - review & editing.

Declaration of Competing Interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Acknowledgments


We thank the Maryland Department of Health, the Maryland Health Care Commission, and the Maryland Board of Physicians for supplying the data used in this study.

Funding

No funding supported this research.

Footnotes

Supplementary data to this article can be found online at https://doi.org/10.1016/j.jbi.2020.103567.

Appendix A. Supplementary material


References

- 1. Congressional Budget Office. Evidence on the costs and benefits of health information technology. Evid. Rep. Technol. Assess. 2018;132:1–71. doi: 10.23970/ahrqepcerta132.

- 2. Centers for Disease Control and Prevention. Ten great public health achievements – United States, 2001–2010. MMWR. Morb. Mort. Wkly. Rep. 2014;60(19):619–623.

- 3. Committee on the Assessment of Studies of Health Outcomes Related to the Recommended Childhood Immunization Schedule; Board on Population Health and Public Health Practice; Institute of Medicine. The Childhood Immunization Schedule and Safety, National Academies Press (US), Washington (DC), 2013.

- 4. Maciosek M.V., Edwards N.M., Coffield A.B. Priorities among effective clinical preventive services. Am. J. Prev. Med. 2006;31(1):90–96. doi: 10.1016/j.amepre.2006.03.011.

- 5. Luman E.T., Barker L.E., Shaw K.M. Timeliness of childhood vaccinations in the United States: days undervaccinated and number of vaccines delayed. JAMA. 2005;293(10):1204–1211. doi: 10.1001/jama.293.10.1204.

- 6. Luman E.T., McCauley M.M., Stokley S., Chu S.Y., Pickering L.K. Timeliness of childhood immunizations. Pediatrics. 2002;110(5):935–939. doi: 10.1542/peds.110.5.935.

- 7. Elam-Evans L.D., Yankey D., Singleton J.A. National, state, and selected local area vaccination coverage among children aged 19–35 months – United States. MMWR. Morb. Mort. Wkly. Rep. 2013;63(34):741–748.

- 8. S.M. Burwell, Annual Report on the Quality of Care for Children in Medicaid and CHIP, Health and Human Services Secretary, 2015. Retrieved from https://www.medicaid.gov/medicaid/quality-of-care/downloads/2015-child-sec-rept.pdf on August 1, 2018.

- 9. National Committee for Quality Assurance. States Using NCQA Programs, June 2020. Available at https://www.ncqa.org/public-policy/work-with-states-map/ (Accessed June 17, 2020).

- 10. Centers for Medicare & Medicaid Services, Using the Hybrid Method to Calculate Measures for the Child and Adult Core Sets. Technical Assistance Brief, October 2014. Available at: https://www.medicaid.gov/medicaid/quality-of-care/downloads/hybrid-brief.pdf (Accessed on June 17, 2020).

- 11. Ohldin A., Mims A. The search for value in health care: a review of the national committee for quality assurance efforts. J. Natl. Med. Assoc. 2002;94(5):344–350.

- 12. Ancker J.S., Kern L.M., Edwards A., Nosal S., Stein D.M., Hauser D., Kaushal R. Associations between healthcare quality and use of electronic health record functions in ambulatory care. J. Am. Med. Inform. Assoc. 2015;22(4):864–871. doi: 10.1093/jamia/ocv030.

- 13. Bae J., Ford E.W., Kharrazi H.H.K., Huerta T.R. Electronic medical record reminders and smoking cessation activities in primary care. Addict. Behav. 2018;77:203–209. doi: 10.1016/j.addbeh.2017.10.009.

- 14. Stockwell M.S., Fiks A.G. Utilizing health information technology to improve vaccine communication and coverage. Hum. Vacc. Immunotherapeut. 2013;9(8):1802–1811. doi: 10.4161/hv.25031.

- 15. Fiks A.G., Grundmeier R.W., Biggs L.M., Localio A.R., Alessandrini E.A. Impact of clinical alerts within an electronic health record on routine childhood immunization in an urban pediatric population. Pediatrics. 2007;120(4):707–714. doi: 10.1542/peds.2007-0257.

- 16. Szilagyi P.G., Serwint J.R., Humiston S.G., Rand C.M., Schaffer S., Vincelli P., Curtis C.R. Effect of provider prompts on adolescent immunization rates: A randomized trial. Acad. Pediatr. 2015;15(2):149–157. doi: 10.1016/j.acap.2014.10.006.

- 17. Penfold R.B., Zhang F. Use of interrupted time series analysis in evaluating health care quality improvements. Acad. Pediatr. 2013;(6 Suppl.):S38–S44. doi: 10.1016/j.acap.2013.08.002.

- 18. R Core Team, R: A Language and Environment for Statistical Computing, R Foundation for Statistical Computing, Vienna, Austria, 2017.

- 19. MatchIt [Computer software]. Retrieved from http://gking.harvard.edu/matchit.

- 20. Koepke C.P., Vogel C.A., Kohrt A.E. Provider characteristics and behaviors as predictors of immunization coverage. Am. J. Prev. Med. 2001;4:250–255. doi: 10.1016/s0749-3797(01)00373-7.

- 21. Dowd B., Li C., Swenson T. Medicare’s Physician Quality Reporting System (PQRS): quality measurement and beneficiary attribution. Medicare Medicaid Res. Rev. 2014;4(2):mmrr2014.004.02.a04. doi: 10.5600/mmrr.004.02.a04.

- 22. Pham H.H., O’Malley A.S., Bach P.B. Primary care physicians’ links to other physicians through Medicare patients: the scope of care coordination. Ann. Intern. Med. 2009;150(4):236–242. doi: 10.7326/0003-4819-150-4-200902170-00004.

- 23. Brookhart M.A., Schneeweiss S., Rothman K.J., Glynn R.J., Avorn J., Stürmer T. Variable selection for propensity score models. Am. J. Epidemiol. 2006;163(12):1149–1156. doi: 10.1093/aje/kwj149.

- 24. Rosenbaum P.R., Rubin D.B. The central role of the propensity score in observational studies for causal effects. Biometrika. 1983;70(1):41–55.

- 25. Kaplan D., Chen J. A two-step Bayesian approach for propensity score analysis: simulations and case study. Psychometrika. 2012;77(3):581–609. doi: 10.1007/s11336-012-9262-8.

- 26. J. Pearl, M. Glymour, N.P. Jewell, Causal Inference in Statistics: A Primer, Chichester, UK, 2016.

- 27. van de Schoot R., Depaoli S. Bayesian analyses: Where to start and what to report. Eur. Health Psychol. 2014;16(2):75–84.

- 28. WinBUGS [computer software], Retrieved from https://www.mrc-bsu.cam.ac.uk/software/bugs/the-bugs-project-winbugs/.

- 29. Glickman M.E., van Dyk D.A. Basic Bayesian Methods. In: Ambrosius W., editor. Topics in Biostatistics (Methods in Molecular Biology). Totowa, NJ; 2007.

- 30. rjags [Computer software], Retrieved from http://mcmc-jags.sourceforge.net.

- 31. M. Plummer, Bayesian Graphical Models using MCMC, Retrieved from https://cran.r-project.org/web/packages/rjags/rjags.pdf on August 13, 2018.

- 32. Lunn D., Spiegelhalter D., Thomas A., Best N. The BUGS project: Evolution, critique and future directions. Stat. Med. 2009;28(25):3049–3067. doi: 10.1002/sim.3680.

- 33. Plummer M., Best N., Cowles K., Vines K. CODA: Convergence diagnosis and output analysis for MCMC. R News. 2006;6:7–11.

- 34. Ho D.E., Imai K., King G. Matching as nonparametric preprocessing for reducing model dependence in parametric causal inference. Polit. Anal. 2011;15(3):199–236.

- 35. Martyn A., Best N., Cowles K. Package R “coda” correlation. R News. 2016;6(1):7–11.

- 36. A. Gelman, J.B. Carlin, H.S. Stern, et al., Bayesian Data Analysis, second ed., London, England, 2004, pp. 182–184.

- 37. Johnson V.E. A Bayesian χ2 test for goodness-of-fit. Ann. Stat. 2004;32(6):2361–2384.

- 38. Kharrazi H., Gonzalez C.P., Lowe K.B., Huerta T.R., Ford E.W. Forecasting the maturation of electronic health record functions among US hospitals: Retrospective analysis and predictive model. J. Med. Internet Res. 2018;20(8):e10458. doi: 10.2196/10458.

- 39. Payne T.H., Corley S., Cullen T.A., Gandhi T.K. Report of the AMIA EHR-2020 task force on the status and future direction of EHRs. J. Am. Med. Inform. Assoc. 2015;22(5):1102–1110. doi: 10.1093/jamia/ocv066.

- 40. Chan K.S., Kharrazi H., Parikh M.A. Assessing electronic health record implementation challenges using item response theory. Am. J. Manag. Care. 2016;22(12):e409–e415.

- 41. Hatef E., Lasser E.C., Kharrazi H.H.K., Perman C. A population health measurement framework: Evidence-based metrics for assessing community-level population health in the global budget context. Popul. Health Manag. 2018;21(4):261–270. doi: 10.1089/pop.2017.0112.

- 42. Kharrazi H., Lasser E.C., Yasnoff W.A. A proposed national research and development agenda for population health informatics: Summary recommendations from a national expert workshop. J. Am. Med. Inform. Assoc. 2017;24(1). doi: 10.1093/jamia/ocv210.

- 43. Kharrazi H., Weiner J.P. IT-enabled community health interventions: Challenges, opportunities, and future directions. EGEMS. 2014;2(3). doi: 10.13063/2327-9214.1117.

- 44. Kharrazi H., Chi W., Chang H.Y. Comparing population-based risk-stratification model performance using demographic, diagnosis and medication data extracted from outpatient electronic health records versus administrative claims. Med. Care. 2017;55(8):789–796. doi: 10.1097/MLR.0000000000000754.

- 45. Chang H.Y., Richards T.M., Shermock K.M. Evaluating the impact of prescription fill rates on risk stratification model performance. Med. Care. 2017;55(12):1052–1060. doi: 10.1097/MLR.0000000000000825.

- 46. Lemke K.W., Gudzune K.A., Kharrazi H., Weiner J.P. Assessing markers from ambulatory laboratory tests for predicting high-risk patients. Am. J. Manag. Care. 2018;24(6):e190–e195.

- 47. Kharrazi H., Chang H.Y., Heins S.E., Weiner J.P., Gudzune K.A. Assessing the impact of body mass index information on the performance of risk adjustment models in predicting health care costs and utilization. Med. Care. 2018;56(12):1042–1050. doi: 10.1097/MLR.0000000000001001.

- 48. Hatef E., Searle K.M., Predmore Z. The impact of social determinants of health on hospitalization in the veterans health administration. Am. J. Prev. Med. 2019;56(6):811–818. doi: 10.1016/j.amepre.2018.12.012.

- 49. Hatef E., Kharrazi H., Nelson K. The association between neighborhood socioeconomic and housing characteristics with hospitalization: Results of a national study of veterans. J. Am. Board Fam. Med. 2019;32(6):890–903. doi: 10.3122/jabfm.2019.06.190138.

- 50. Hatef E., Weiner J.P., Kharrazi H. A public health perspective on using electronic health records to address social determinants of health: The potential for a national system of local community health records in the United States. Int. J. Med. Inform. 2019;124:86–89. doi: 10.1016/j.ijmedinf.2019.01.012.

- 51. Kharrazi H., Wang C., Scharfstein D. Prospective EHR-based clinical trials: the challenge of missing data. J. Gen. Intern. Med. 2014;29(7):976–978. doi: 10.1007/s11606-014-2883-0.

- 52. Shabestari O., Roudsari A. Challenges in data quality assurance for electronic health records. Stud. Health Technol. Inform. 2013;183:37–41.

- 53. Hatef E., Kharrazi H., VanBaak E. A state-wide health IT infrastructure for population health: Building a community-wide electronic platform for Maryland's all-payer global budget. Online J. Public Health Inform. 2017;9(3):e195. doi: 10.5210/ojphi.v9i3.8129.

- 54. Chan K.S., Kharrazi H., Parikh M.A., Ford E.W. Assessing electronic health record implementation challenges using item response theory. Am. J. Manag. Care. 2016;22(12):e409–e415.

- 55. Dixon B.E., Kharrazi H., Lehmann H.P. Public health and epidemiology informatics: Recent research and trends in the United States. Yearb. Med. Inform. 2015;10(1):199–206. doi: 10.15265/IY-2015-012.

- 56. Dixon B.E., Pina J., Kharrazi H., Gharghabi F., Richards J. What's past is prologue: A scoping review of recent public health and global health informatics literature. Online J. Public Health Inform. 2015;7(2):e216. doi: 10.5210/ojphi.v7i2.5931.

- 57. Gamache R., Kharrazi H., Weiner J.P. Public and population health informatics: The bridging of big data to benefit communities. Yearb. Med. Inform. 2018;27(1):199–206. doi: 10.1055/s-0038-1667081.

- 58. Santoli J.M., Lindley M.C., DeSilva M.B. Effects of the COVID-19 pandemic on routine pediatric vaccine ordering and administration – United States, 2020. MMWR. Morb. Mort. Wkly. Rep. 2020;69. doi: 10.15585/mmwr.mm6919e2. Available at https://stacks.cdc.gov/view/cdc/87780 (Accessed on June 18, 2020).
