Abstract

We address the problem of generating novel molecules with desired interaction properties as a multi-objective optimization problem. Interaction binding models are learned from binding data using graph convolution networks (GCNs). Since the experimentally obtained property scores are recognised as having potentially gross errors, we adopted a robust loss for the model. Combinations of these terms, including drug likeness and synthetic accessibility, are then optimized using reinforcement learning based on a graph convolution policy approach. Some of the molecules generated, while legitimate chemically, can have excellent drug-likeness scores but appear unusual. We provide an example based on the binding potency of small molecules to dopamine transporters. We extend our method successfully to use a multi-objective reward function, in this case for generating novel molecules that bind with dopamine transporters but not with those for norepinephrine. Our method should be generally applicable to the generation in silico of molecules with desirable properties.

Keywords: Cheminformatics, Deep learning, Generative methods, QSAR, Reinforcement learning

Introduction

The in silico (and experimental) generation of molecules or materials with desirable properties is an area of immense current interest (e.g. [1–28]). However, difficulties in producing novel molecules by current generative methods arise because of the discrete nature of chemical space, as well as the large number of molecules [29]. For example, the number of drug-like molecules has been estimated to be between 10²³ and 10⁶⁰ [30–34]. Moreover, a slight change in molecular structure can lead to a drastic change in a molecular property such as binding potency (so-called activity cliffs [35–37]).

Earlier approaches to understanding the relationship between molecular structure and properties used methods such as random forests [38, 39], shallow neural networks [40, 41], Support Vector Machines [42], and Genetic Programming [43]. However, with the recent developments in Deep Learning [44, 45], deep neural networks have come to the fore for property prediction tasks [3, 46–48]. Notably, Coley et al. [49] used Graph convolutional networks effectively as a feature encoder for input to the neural network.

In the past few years, there have been many approaches to applying Deep Learning to molecule generation. Most papers use Simplified Molecular-Input Line-Entry System (SMILES) strings as inputs [50], and many use a Variational AutoEncoder architecture (e.g. [3, 17, 51]), with Bayesian Optimization in the latent space to generate novel molecules. However, sequence-based representations have a specific difficulty: any method using them has to learn the inherent rules of the representation, in this case the grammar of SMILES strings. More recent approaches, such as Grammar Variational AutoEncoders [52, 53], have been developed in attempts to overcome this problem, but the molecules generated are still not always valid. Some other approaches use Reinforcement Learning to generate optimized molecules [54]. However, they too make use of SMILES strings, which, as noted, pose a significant problem. In particular, the SMILES grammar is entirely context-sensitive: the addition of an extra atom or bracket can change the structure of the encoded molecule dramatically, and not just ‘locally’ [55].

Earlier approaches have tended to choose a specific encoding for the molecules to be used as an input to the model, such as one-hot encoding [56, 57] or Extended Connectivity Fingerprints [58, 59], while Generative Examination Networks [60] use SMILES strings directly. We note that these encodings do not necessarily capture the features needed to predict a specific property (and different encodings extract quite different and orthogonal features [61]).

In contrast, the most recent state-of-the-art methods, including hypergraph grammars [62], Junction Tree Variational Auto Encoders [63] and Graph Convolutional Policy Networks [34], use a graphical representation of molecules rather than SMILES strings and have achieved 100% validity in molecular generation. Graph-based methods have considerable utility (e.g. [64–70]) and can be seen as a more natural representation of molecules, since substructures map directly to subgraphs, whereas subsequences of a SMILES string are usually meaningless. However, these methods have only been used to compare models on deterministic properties, such as the Quantitative Estimate of Drug-likeness (QED) [71] and logP, that can be calculated directly from molecular structures (e.g. using RDKit, http://www.rdkit.org/). For many other applications, molecules having a higher score for a specific measured property are more useful. We here try to tackle this problem (Fig. 1).

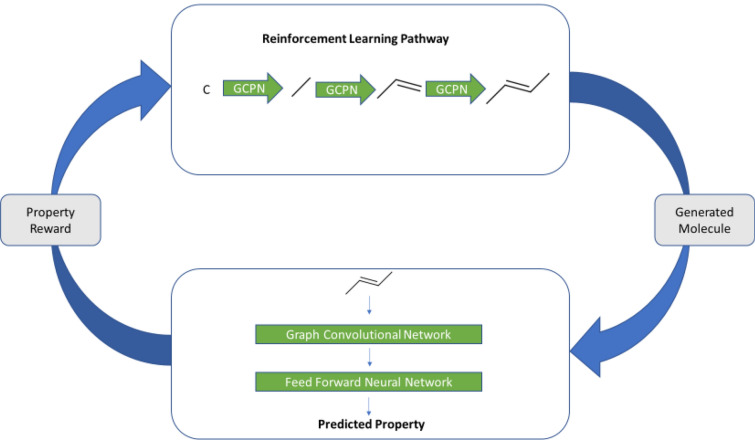

Fig. 1.

Block diagram of our basic system. A molecule is generated by the Reinforcement Learning (RL) pathway using a Graph Convolutional Policy Network. This molecule is then used as the input to the property prediction module, which outputs the predicted property score. This score is then used as the reward feedback for the RL pathway, and the cycle restarts.

Methods

Our system (Fig. 1) consists of two parts: Property Prediction and Molecular Generation. For both parts, we represent the molecules as graphs [72], since they are a more natural representation than SMILES strings and substructures are simply subgraphs. We train a model to predict the property scores of the molecules, specifically the binding constants of various molecules at the dopamine and norepinephrine transporters (using a dataset from BindingDB). The property prediction part consists of a Graph Convolutional Network, used as a feature encoder, together with a Feed Forward Network. We also use an Adaptive Robust Loss Function (as suggested by [73]) since the experimental data are bound to be error prone. For the Molecular Generation task, we use the method proposed by You and colleagues [34]. In particular, we (and they) use Reinforcement Learning for this task since it allows us to incorporate both the molecular constraints and the desired properties using reward functions. This part uses graph convolution policy networks (GCPNs), a model consisting of a GCN that predicts the next action (policy) given the molecule state. It is further guided by expert pretraining and an adversarial loss for generating valid molecules. Our code (https://github.com/dbkgroup/prop_gen) is essentially an integration of the property prediction code of Yang and colleagues [74, 75] (https://github.com/swansonk14/chemprop) and the reinforcement learning code provided by You and colleagues [34].

Molecular property prediction

As noted, the supervised property prediction model consists of a graph-convolution network for feature extraction followed by a fully interconnected feedforward network for property prediction.

Feature extraction

We represent the molecules as directed graphs, with each atom $v$ having a feature vector $x_v$ and each bond (between atoms $v$ and $w$) having a feature vector $e_{vw}$. For each incoming bond, a feature vector is obtained by concatenating the feature vector of the atom to which the bond is incoming and the feature vector of the bond. Thus the input tensor has size $N_{bonds} \times (d_{atom} + d_{bond})$, where $N_{bonds}$ is the number of directed bonds and $d_{atom} = 133$ and $d_{bond} = 14$ are the lengths of the atom and bond feature vectors listed in Table 1. The Graph Convolution approach allows the message (feature vector) for a bond to be passed around the entire graph using the approach described below.

The initial atom-bond feature vector that we use encodes important molecular information that the GCN encoder can then exploit in later layers. The initial representations for the atom and bond features are taken from https://github.com/swansonk14/chemprop and summarized in Table 1, below. Each descriptor is a one-hot vector covering the index range assigned to it (except the Atomic Mass). For Atomic Number, Degree, Formal Charge, Chiral Tag, Number of Hydrogens and Hybridization, the feature vector contains one additional dimension for uncommon values (values not in the specified range).

Table 1.

Atom and bond features used in the present work

| Indices | Atom description |
|---|---|
| 0–100 | Atomic number (1 to 100) |
| 101–107 | Degree (0 to 5) |
| 108–113 | Formal charge (−2 to +2) |
| 114–118 | Chiral tag (0 to 3) |
| 119–124 | Number of hydrogens (0 to 4) |
| 125–130 | Hybridization (SP, SP2, SP3, SP3D, SP3D2) |
| 131 | Aromatic atom |
| 132 | Atomic mass × 0.01 |

| Indices | Bond description |
|---|---|
| 133 | Bond present |
| 134–136 | Bond type (single, double, triple) |
| 137 | Aromatic bond |
| 138 | Conjugated bond |
| 139 | Bond present in ring |
| 140–146 | Bond stereo code (RDKit) |
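The index ranges in Table 1 can be made concrete with a short sketch. It assumes that each categorical descriptor is one-hot encoded over a fixed list of choices plus one trailing "unknown" slot, as described above. The helper names and choice lists are hypothetical and were chosen only so that the slot counts match the index ranges of Table 1; in practice the raw values would be read from RDKit atom objects, which are not used here.

```python
# Hypothetical helper: one-hot encoding with an extra "unknown" slot at the end.
def one_hot_with_unknown(value, choices):
    encoding = [0] * (len(choices) + 1)
    index = choices.index(value) if value in choices else len(choices)
    encoding[index] = 1
    return encoding

# Choice lists sized to match the index ranges of Table 1 (an assumption).
ATOMIC_NUMBERS = list(range(1, 101))                    # indices 0-100
DEGREES = list(range(0, 6))                             # indices 101-107
FORMAL_CHARGES = [-2, -1, 0, 1, 2]                      # indices 108-113
CHIRAL_TAGS = [0, 1, 2, 3]                              # indices 114-118
NUM_HYDROGENS = [0, 1, 2, 3, 4]                         # indices 119-124
HYBRIDIZATIONS = ["SP", "SP2", "SP3", "SP3D", "SP3D2"]  # indices 125-130

def atom_features(atomic_num, degree, formal_charge, chiral_tag,
                  num_hs, hybridization, is_aromatic, mass):
    """Build the 133-dimensional atom part of the feature vector in Table 1."""
    return (one_hot_with_unknown(atomic_num, ATOMIC_NUMBERS)
            + one_hot_with_unknown(degree, DEGREES)
            + one_hot_with_unknown(formal_charge, FORMAL_CHARGES)
            + one_hot_with_unknown(chiral_tag, CHIRAL_TAGS)
            + one_hot_with_unknown(num_hs, NUM_HYDROGENS)
            + one_hot_with_unknown(hybridization, HYBRIDIZATIONS)
            + [1 if is_aromatic else 0]                 # index 131
            + [mass * 0.01])                            # index 132, scaled atomic mass

# Illustrative values for an sp3 carbon with one heavy neighbour and three hydrogens.
methyl = atom_features(6, 1, 0, 0, 3, "SP3", False, 12.011)
print(len(methyl))
```

The trailing slot means that rare values (for example an unusual degree) still produce a valid vector of the same length rather than an error.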

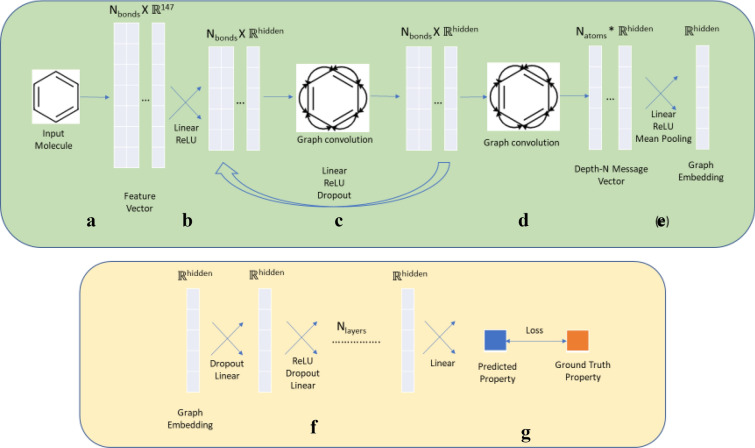

The initial atom-bond feature vector is then passed through a linear layer followed by ReLU activation [76, 77] to get the Depth-0 message vector for each bond. For each bond, the message vectors of the neighbouring bonds are summed (the convolution step) and passed through a linear layer followed by ReLU and a Dropout layer to get the Depth-1 message vectors. This process is repeated until the Depth-(N−1) message vectors are obtained. To get the Depth-N message vectors, the Depth-(N−1) vectors of all the incoming bonds of an atom are summed and then passed through a dense layer followed by ReLU and Dropout. The final graph embedding for the molecule is obtained by averaging the Depth-N message vectors over all the atoms. The exact hyperparameters for this model are given in Sect. “Hyperparameter optimization” (Fig. 2).
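The message-passing steps above can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not the chemprop implementation: weights are random, Dropout is omitted (as at inference time), the Depth-0 input concatenates the bond features with those of the atom the bond points into (following the description above), and "neighbouring bonds" of a bond v→w are taken to be all bonds into v except the reverse bond w→v.

```python
import numpy as np

def relu(x):
    return np.maximum(x, 0.0)

def graph_embedding(atom_feats, bond_feats, bonds, W_in, W_msg, W_out, depth):
    """Bond-centred message passing; `bonds` lists every directed bond (v, w) and its reverse."""
    n_atoms = atom_feats.shape[0]
    src = np.array([v for v, _ in bonds])
    dst = np.array([w for _, w in bonds])
    index = {bond: i for i, bond in enumerate(bonds)}
    rev = np.array([index[(w, v)] for v, w in bonds])   # position of each reverse bond

    # Depth-0: linear layer + ReLU on [atom features || bond features]
    h = relu(np.concatenate([atom_feats[dst], bond_feats], axis=1) @ W_in)

    # Depth-1 ... Depth-(N-1): sum neighbouring bond messages, then linear layer + ReLU
    for _ in range(depth - 1):
        into_atom = np.zeros((n_atoms, h.shape[1]))
        np.add.at(into_atom, dst, h)                    # messages arriving at each atom
        m = into_atom[src] - h[rev]                     # bonds into v, except w -> v
        h = relu(m @ W_msg)                             # dropout omitted here

    # Depth-N: sum incoming bond messages per atom, dense layer + ReLU, then mean over atoms
    atom_msg = np.zeros((n_atoms, h.shape[1]))
    np.add.at(atom_msg, dst, h)
    return relu(atom_msg @ W_out).mean(axis=0)

# Toy three-atom chain 0-1-2 with random features and weights (illustrative only).
rng = np.random.default_rng(0)
bonds = [(0, 1), (1, 0), (1, 2), (2, 1)]
atom_feats = rng.random((3, 133))
bond_feats = rng.random((len(bonds), 14))
hidden = 8
W_in = rng.normal(size=(133 + 14, hidden))
W_msg = rng.normal(size=(hidden, hidden))
W_out = rng.normal(size=(hidden, hidden))
emb = graph_embedding(atom_feats, bond_feats, bonds, W_in, W_msg, W_out, depth=3)
print(emb.shape)
```

Excluding the reverse bond prevents a message from being sent straight back along the edge it arrived on; averaging over atoms makes the final embedding independent of molecule size.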

Fig. 2.

The property prediction pipeline for our method. The steps in green represent the feature extraction using Graph Convolution and the steps in orange represent regression of property scores. a The molecule is represented as a feature vector with features as described in Sect. “Molecular property prediction”. b The feature vector is passed through a linear layer to get the Depth-0 message. c Through repeated graph convolution (message passing) followed by a Linear Layer, we get the Depth-(N−1) message. d Each atom’s final message is calculated by summing the messages (also Graph Convolution) of the neighbouring atoms. e The resultant message is passed through a Linear Layer and the mean over all the atoms is taken to get the final embedding. f The property score is regressed from the graph embedding by a Feed Forward Neural Network. g The loss between the predicted property and the ground-truth property is then backpropagated to update the weights

Regression

To perform property prediction, the embedding extracted by the GCN is fed into a fully connected network. Each intermediate layer consists of a Linear Layer followed by ReLU activation and Dropout, mapping the hidden vector to another vector of the same size. Finally, the output of the penultimate layer is passed through a Linear Layer to give the predicted property score. The Ki values in the dataset were obtained experimentally and so may contain experimental errors. If we were to train our model with a simple loss function such as the root mean square (RMS) error, it would not generalize well because of the outliers in the training set. Overcoming this problem requires training the model with a robust loss function that limits the influence of the outliers in the training data. There are several types of robust loss function, such as the Pseudo-Huber loss [78] and the Cauchy loss, but each of them has an additional hyperparameter (for example δ in the Huber loss) that is treated as a constant during training. This means that the hyperparameter has to be tuned manually, retraining each time to find its optimum value, which may result in extensive training time. To overcome this problem, as proposed by [73], we have used a general robust loss function whose hyperparameters are a shape parameter (α), which controls the robustness of the loss, and a scale parameter (c), which controls the size of the loss’s quadratic bowl near x = 0. This loss is dubbed “general” since it takes the form of other loss functions for particular values of α (e.g. L2 loss for α = 2, Charbonnier loss for α = 1, Cauchy loss for α = 0). The authors also propose that “by viewing the loss function as the negative log likelihood of a probability distribution, and by treating robustness of the distribution as a latent variable” we can use gradient-based methods to maximize the likelihood without manual parameter tuning.
In other words, we can now learn α and c during training rather than tuning them manually, which overcomes the earlier problem. The loss function and the corresponding probability distribution are given in Eqs. 1 and 2, respectively.

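$$\rho(x, \alpha, c) = \frac{|\alpha - 2|}{\alpha}\left[\left(\frac{(x/c)^2}{|\alpha - 2|} + 1\right)^{\alpha/2} - 1\right] \tag{1}$$

$$p(x \mid \mu, \alpha, c) = \frac{1}{c\,Z(\alpha)}\exp\left(-\rho(x - \mu, \alpha, c)\right), \qquad Z(\alpha) = \int_{-\infty}^{\infty}\exp\left(-\rho(x, \alpha, 1)\right)dx \tag{2}$$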
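A minimal NumPy sketch of the general loss ρ(x, α, c) of Eq. 1, evaluated on residuals x for fixed α and c, may help show how α trades robustness against sensitivity. The values α = 2 and α = 0 make Eq. 1 a 0/0 form, so the sketch uses their limits (L2 and Cauchy). Learning α and c adaptively, as described above, additionally requires the partition function Z(α) of Eq. 2, which is not computed here; the function name is hypothetical.

```python
import numpy as np

def general_robust_loss(x, alpha, c):
    """Eq. 1 evaluated elementwise on residuals x, using the alpha = 0 and alpha = 2 limits."""
    z = (np.asarray(x, dtype=float) / c) ** 2
    if alpha == 2.0:
        return 0.5 * z                      # L2 loss (limit of Eq. 1)
    if alpha == 0.0:
        return np.log1p(0.5 * z)            # Cauchy loss (limit of Eq. 1)
    b = abs(alpha - 2.0)
    return (b / alpha) * ((z / b + 1.0) ** (alpha / 2.0) - 1.0)

residuals = np.array([0.1, 0.5, 5.0])       # the last residual mimics a gross labelling error
for alpha in (2.0, 1.0, 0.0):
    print(alpha, general_robust_loss(residuals, alpha, c=1.0).round(3))
```

For small residuals the three settings behave almost identically, whereas the contribution of the outlying residual shrinks as α decreases, which is the behaviour wanted for error-prone Ki labels.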

Reinforcement learning for molecular generation

We follow the method described by the GCPN paper [34] for the molecular generation task, with the difference being that the final property reward is the value calculated by the previously trained model for the newly generated molecules. GCPN is a state-of-the-art molecule generator that utilizes Proximal Policy Optimization (PPO) as a Reinforcement Learning paradigm for generating molecules. A comparison of GCPN with other generative approaches can be found in Tables 2 and 3 which compare the ability of generators to produce molecules having higher property scores and targeted property scores, respectively. Note that even though we have chosen GCPN for the molecule generation pipeline, our strategy can be implemented using any graph-based Reinforcement Learning generator since we just need to use the predicted property score as the reward function.

Table 2.

Comparison of the top 3 property scores of generated molecules found by each model

| Method | Penalized logP: 1st | Penalized logP: 2nd | Penalized logP: 3rd | Penalized logP: Validity | QED: 1st | QED: 2nd | QED: 3rd | QED: Validity |
|---|---|---|---|---|---|---|---|---|
| ZINC | 4.52 | 4.30 | 4.23 | 100% | 0.948 | 0.948 | 0.948 | 100% |
| ORGAN | 3.63 | 3.49 | 3.44 | 0.4% | 0.896 | 0.824 | 0.820 | 2.2% |
| JT-VAE | 5.30 | 4.93 | 4.49 | 100% | 0.925 | 0.911 | 0.910 | 100% |
| GCPN | 7.98 | 7.85 | 7.80 | 100% | 0.948 | 0.947 | 0.946 | 100% |

Table 3.

Comparison of the effectiveness of property targeting task

| Method | −2.5 ≤ logP ≤ −2: Success | −2.5 ≤ logP ≤ −2: Diversity | 5 ≤ logP ≤ 5.5: Success | 5 ≤ logP ≤ 5.5: Diversity | 150 ≤ MW ≤ 200: Success | 150 ≤ MW ≤ 200: Diversity | 500 ≤ MW ≤ 550: Success | 500 ≤ MW ≤ 550: Diversity |
|---|---|---|---|---|---|---|---|---|
| ZINC | 0.3% | 0.919 | 1.3% | 0.909 | 1.7% | 0.938 | 0 | – |
| ORGAN | 0 | – | 0.2% | 0.909 | 15.1% | 0.759 | 0.1% | 0.907 |
| JT-VAE | 11.3% | 0.846 | 7.6% | 0.907 | 0.7% | 0.824 | 16.0% | 0.898 |
| GCPN | 85.5% | 0.392 | 54.7% | 0.855 | 76.1% | 0.921 | 74.1% | 0.920 |

MW here stands for the Molecular Weight. Success is defined as the percentage of generated molecules in the target range and Diversity is defined as the average pairwise Tanimoto distance between the Morgan fingerprints of the molecules. Citations to ORGAN and JT-VAE are given in the legend to Table 2

Italics values refer to the best results among the methods compared
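The Success and Diversity metrics defined in the notes to Table 3 can be sketched in plain Python. The sketch assumes fingerprints are already available as sets of "on" bit indices (in practice they would be Morgan fingerprints computed with RDKit), and it treats the similarity of two empty fingerprints as 0, an arbitrary convention; all function names are hypothetical.

```python
from itertools import combinations

def tanimoto(fp_a, fp_b):
    """Tanimoto similarity of two fingerprints given as sets of 'on' bit indices."""
    union = len(fp_a | fp_b)
    return len(fp_a & fp_b) / union if union else 0.0

def success_rate(values, low, high):
    """Percentage of generated molecules whose property lies in [low, high]."""
    return 100.0 * sum(low <= v <= high for v in values) / len(values)

def diversity(fingerprints):
    """Average pairwise Tanimoto distance (1 - similarity) over all pairs of molecules."""
    pairs = list(combinations(fingerprints, 2))
    return sum(1.0 - tanimoto(a, b) for a, b in pairs) / len(pairs)

logp_values = [-2.2, -1.0, -2.5, 3.0]   # toy predicted logP values
fps = [{1, 4, 9}, {1, 4, 9}, {2, 7}]     # toy fingerprints: two identical, one disjoint
print(success_rate(logp_values, -2.5, -2.0), diversity(fps))
```

Reporting both numbers together matters because a generator can reach a high success rate by repeatedly emitting near-identical molecules, which the diversity term exposes.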

Molecular representation

As in the previous part, we represent the molecules as graphs, more specifically as $G = (A, E, F)$, where $A \in \{0,1\}^{n \times n}$ is the adjacency matrix, $F \in \mathbb{R}^{n \times d}$ is the node (atom) feature matrix and $E \in \{0,1\}^{3 \times n \times n}$ is the edge-conditioned adjacency tensor (since the number of bond types is 3, namely single, double and triple bonds), with $n$ being the number of atoms and $d$ being the length of the feature vector for each atom. More specifically, $E_{i,j,k} = 1$ if there exists a bond of type $i$ between atoms $j$ and $k$, and $A_{j,k} = 1$ if there exists any bond between atoms $j$ and $k$.
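The graph representation above can be illustrated by building the edge-conditioned adjacency tensor (here `E`, with shape 3 × n × n) and the adjacency matrix (`A`) from a list of bonds. This is a small sketch with hypothetical names; bonds are assumed to be undirected, so each slice of `E` is symmetric.

```python
import numpy as np

BOND_TYPES = {"single": 0, "double": 1, "triple": 2}

def adjacency_tensors(n_atoms, bonds):
    """Return (E, A) for a list of (atom_j, atom_k, bond_type) triples."""
    E = np.zeros((len(BOND_TYPES), n_atoms, n_atoms), dtype=np.int8)
    for j, k, bond_type in bonds:
        i = BOND_TYPES[bond_type]
        E[i, j, k] = E[i, k, j] = 1                     # undirected bond: symmetric entry
    A = (E.sum(axis=0) > 0).astype(np.int8)            # any bond type between j and k
    return E, A

# Heavy atoms of acetaldehyde: C0-C1 single bond, C1=O2 double bond.
E, A = adjacency_tensors(3, [(0, 1, "single"), (1, 2, "double")])
print(A)
```

Keeping one slice per bond type lets the policy network learn separate weights for single, double and triple bonds, while `A` is simply the union of the slices.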

Reinforcement learning setup

Our model environment builds a molecule step by step, adding a new bond in each step. We treat graph generation as a Markov Decision Process, such that the next action is predicted based only on the current state of the molecule, not on the path that the generative process has taken. This reduces the need for sequential models such as RNNs and avoids the vanishing gradients associated with them, as well as reducing the memory load on the model. More specifically, the decision process satisfies $p(S_{t+1} \mid S_t, \ldots, S_0) = p(S_{t+1} \mid S_t)$, where $p$ is the probability of the next state ($S_{t+1}$) given the previous state ($S_t$).

We can initialize the generative process with either a single C atom (as in Experiments 1 and 2) or with another molecule (as in Experiments 3, 4 and 5). At any point in the generation process, the state of the environment is the graph of the molecule that has been built up so far. The action space is a vector of length 4 containing the following information: First Atom, Second Atom, Bond type and Stop. The stop signal is either 0 or 1, indicating whether the generation is complete, based on valence rules. If the action violates the rules of chemistry in the resultant molecule, the action is not taken and the state remains as it is.
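The environment step described above can be sketched as follows. This is a simplified, hypothetical stand-in for the GCPN environment, not its code: the valence table covers only C, N and O, hydrogens are implicit, and a "second atom" index at or beyond the current atom count means "add a new atom of candidate type `second - n`". Invalid actions (self-loops, duplicate bonds or valence violations) leave the state unchanged.

```python
# Hypothetical, simplified valence table and bond orders.
MAX_VALENCE = {"C": 4, "N": 3, "O": 2}
BOND_ORDER = {0: 1, 1: 2, 2: 3}          # single, double, triple
ATOM_TYPES = ("C", "N", "O")             # candidate atoms that can be added

def step(atoms, bonds, action):
    """Apply (first, second, bond_type, stop); return (atoms, bonds, done)."""
    first, second, bond_type, stop = action
    if stop == 1:
        return atoms, bonds, True
    n = len(atoms)
    new_atoms = list(atoms)
    if second >= n:                      # index beyond the molecule: add a new atom
        new_atoms.append(ATOM_TYPES[second - n])
        second = n
    pair = {first, second}
    if first >= n or first == second or any(pair == {a, b} for a, b, _ in bonds):
        return atoms, bonds, False       # invalid action: state unchanged
    new_bonds = bonds + [(first, second, bond_type)]
    used = [0] * len(new_atoms)
    for a, b, t in new_bonds:
        used[a] += BOND_ORDER[t]
        used[b] += BOND_ORDER[t]
    if any(used[i] > MAX_VALENCE[sym] for i, sym in enumerate(new_atoms)):
        return atoms, bonds, False       # valence violated: state unchanged
    return new_atoms, new_bonds, False

# Start from a single carbon and add a new O (candidate index 2) with a double bond.
atoms, bonds, done = step(["C"], [], (0, 3, 1, 0))
print(atoms, bonds)                      # ['C', 'O'] [(0, 1, 1)]
```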

We make use of both intermediate and final rewards to guide the decision-making process. The intermediate rewards include stepwise validity checks, such that a small constant value is added to the reward if the molecule passes the valency checks. The final reward includes the pKi value of the final molecule as predicted by the trained model and the validity rewards (+1 for not having any steric strain and +1 for the absence of functional groups that violate the ZINC functional group filters). We also use two further metrics: the quantitative estimate of drug-likeness (QED) [71] and the synthetic accessibility (SA) [80] score. Since our final goal is to generate drug-like molecules that can be synthesized, we also add the QED and 2 × SA score of the final molecule to the reward.
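How the reward terms listed above combine can be written out as a short sketch. It assumes the SA score has already been rescaled to [0, 1] with higher values meaning easier synthesis (consistent with the QED and SA values above 0.8 reported later), and that the chemistry checks have been run elsewhere and are passed in as booleans. The step bonus is left as a parameter because its value is not stated here; the adversarial term described next would be added separately. All names are hypothetical.

```python
def final_reward(pred_pki, no_steric_strain, passes_zinc_filters, qed, sa_norm):
    """Final-step reward: predicted pKi + validity bonuses + QED + 2 * (normalised) SA."""
    validity = float(no_steric_strain) + float(passes_zinc_filters)   # +1 for each check
    return pred_pki + validity + qed + 2.0 * sa_norm

def intermediate_reward(passes_valency_check, step_bonus):
    """Stepwise reward: a small constant (value not specified) if the valency check passes."""
    return step_bonus if passes_valency_check else 0.0

print(final_reward(7.5, True, True, qed=0.85, sa_norm=0.9))
```

Because the predicted pKi typically spans several units while QED and normalised SA lie in [0, 1], the factor of 2 on SA is one way of keeping synthesizability from being swamped by the affinity term.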

Apart from this, we also use adversarial rewards so that the generated molecules resemble those in a given set of (real) molecules. We define the adversarial rewards via Eq. 3.

$$\min_{\theta}\max_{\phi} V(\pi_\theta, D_\phi) = \mathbb{E}_{x \sim p_{data}}\left[\log D_\phi(x)\right] + \mathbb{E}_{x \sim \pi_\theta}\left[\log\left(1 - D_\phi(x)\right)\right] \tag{3}$$

where $\pi_\theta$ is the policy network, $D_\phi$ is the discriminator network, $x$ represents the input graph and $p_{data}$ is the underlying data distribution, which is defined either over final graphs (for final rewards) or over intermediate graphs (for intermediate rewards), just as proposed by You and colleagues [34]. Alternate training of the generator (policy network) and the discriminator by gradient descent will not work in our case, since $x$ is a non-differentiable graph object. Therefore we add $-V(\pi_\theta, D_\phi)$ to our rewards and use policy gradient methods [81] to optimize the total reward. The discriminator network comprises a Graph Convolutional Network for generating the node embedding and a Feed Forward Network to output whether the molecule is real or fake. The GCN mechanism is the same as that of the policy network, which is described in the next section.

Graph convolutional policy network

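We use Graph Convolutional Networks (GCNs) as the policy function for the bond prediction task. This variant of graph convolution performs message passing over each edge type for a fixed depth $L$. The node embedding for the next depth ($H^{(l+1)}$) is calculated as described in Eq. 4:

$$H^{(l+1)} = \mathrm{AGG}\left(\mathrm{ReLU}\left(\left\{\tilde{D}_i^{-\frac{1}{2}}\tilde{E}_i\tilde{D}_i^{-\frac{1}{2}}H^{(l)}W_i^{(l)}\right\},\ \forall i \in \{1, 2, 3\}\right)\right) \tag{4}$$

where $E_i$ is the $i$-th slice of the edge-conditioned adjacency tensor $E$, $\tilde{E}_i = E_i + I$, $\tilde{D}_i$ is the diagonal degree matrix of $\tilde{E}_i$ (with entries $\sum_{m}\tilde{E}_{i,jm}$), $W_i^{(l)}$ is a trainable weight matrix for the $i$-th edge type, and $H^{(l)}$ is the node embedding learned in the $l$-th layer, with one row for each of the $n + c$ nodes [34]. Here $n$ is the number of atoms in the current molecule and $c$ is the number of possible atom types (C, N, O etc.) that can be added to the molecule (one atom is added in each step), with $k$ representing the dimension of the embedding. We use the mean over edge types as the aggregation (AGG) function to obtain the node embedding for a layer. This process is repeated $L$ times until we get the final node embedding $X = H^{(L)}$.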
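A NumPy sketch of one layer of the edge-conditioned propagation rule in Eq. 4 is given below. It assumes that the edge tensor `E` has shape (3, N, N) with N = n + c nodes (the c candidate atom types appear as isolated nodes) and that AGG is the mean over the three edge types, as stated above; weights are random and the function name is hypothetical.

```python
import numpy as np

def gcpn_layer(E, H, W):
    """One layer of Eq. 4. E: (b, N, N) edge tensor, H: (N, k_in), W: (b, k_in, k_out)."""
    N = E.shape[1]
    per_edge_type = []
    for i in range(E.shape[0]):
        E_tilde = E[i] + np.eye(N)                        # add self-loops
        d_inv_sqrt = 1.0 / np.sqrt(E_tilde.sum(axis=1))   # degrees are >= 1 after self-loops
        norm_adj = d_inv_sqrt[:, None] * E_tilde * d_inv_sqrt[None, :]   # D^-1/2 E D^-1/2
        per_edge_type.append(np.maximum(norm_adj @ H @ W[i], 0.0))       # ReLU
    return np.mean(per_edge_type, axis=0)                 # AGG = mean over edge types

rng = np.random.default_rng(0)
N, k = 6, 8                                  # e.g. 3 atoms in the molecule + 3 candidate atom types
E = np.zeros((3, N, N))
E[0, 0, 1] = E[0, 1, 0] = 1                  # single bond 0-1
E[1, 1, 2] = E[1, 2, 1] = 1                  # double bond 1-2
H = rng.random((N, 4))                       # initial node features
weights = [rng.normal(size=(3, 4, k))] + [rng.normal(size=(3, k, k)) for _ in range(2)]
for W in weights:                            # L = 3 layers, matching the depth used later
    H = gcpn_layer(E, H, W)
X = H                                        # final node embedding, shape (N, k)
```

The symmetric normalisation keeps the scale of the embeddings comparable between highly and sparsely connected atoms, and the self-loops let each node retain its own features.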

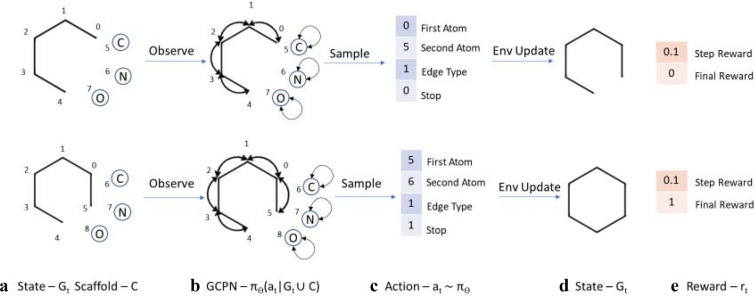

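This node embedding $X$ is then used as the input to four Multilayer Perceptrons (MLPs, denoted by $m$) that map a matrix $Z \in \mathbb{R}^{p \times k}$ (one $k$-dimensional embedding per candidate) to a vector in $\mathbb{R}^{p}$ representing the probability of selecting each of the $p$ candidate entities. The specific entity is then sampled from the probability distribution thus obtained. Since the action space is a vector of length 4, we use four MLPs, one to sample each component of the vector. The first atom has to be from the current molecule state, while the second atom can be either from the current molecule (forming a cycle) or a new atom outside the molecule (adding a new atom). With $n$ atoms in the current molecule and $c$ candidate atom types, for selecting the first atom the original embedding is passed to the MLP $m_f$, which outputs a vector of length $n$. For selecting the second atom, the embedding of the first atom is concatenated to the original embedding and passed to the MLP $m_s$, giving a vector of length $n + c$. For selecting the edge type, the concatenated embeddings of the first ($a_{first}$) and second ($a_{second}$) atoms are used as the input to the MLP $m_e$, which outputs a vector of length 3 (the number of bond types). Finally, the mean embedding of the atoms is passed to the MLP $m_t$ to output a vector of length 2 indicating whether to stop the generation. This process is described in Eqs. 5, 6, 7, 8, 9 (Fig. 3).

$$a_t = \mathrm{CONCAT}(a_{first}, a_{second}, a_{edge}, a_{stop}) \tag{5}$$

$$p(a_{first}) = \mathrm{softmax}\left(m_f(X)\right), \quad a_{first} \sim p(a_{first}) \in \{0,1\}^{n} \tag{6}$$

$$p(a_{second}) = \mathrm{softmax}\left(m_s(X_{a_{first}}, X)\right), \quad a_{second} \sim p(a_{second}) \in \{0,1\}^{n+c} \tag{7}$$

$$p(a_{edge}) = \mathrm{softmax}\left(m_e(X_{a_{first}}, X_{a_{second}})\right), \quad a_{edge} \sim p(a_{edge}) \in \{0,1\}^{3} \tag{8}$$

$$p(a_{stop}) = \mathrm{softmax}\left(m_t(\mathrm{AGG}(X))\right), \quad a_{stop} \sim p(a_{stop}) \in \{0,1\} \tag{9}$$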
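The four-headed action sampling described above can be sketched in NumPy. Single random linear layers stand in for the MLPs (an assumption made to keep the example short), `X` holds the n molecule atoms first and the c candidate atom types after them, and no masking of invalid choices is applied; the environment is left to reject, for example, a bond from an atom to itself.

```python
import numpy as np

def softmax(z):
    z = z - z.max()                       # shift for numerical stability
    e = np.exp(z)
    return e / e.sum()

def sample_action(X, n, heads, rng):
    """Sample (first, second, edge, stop); X has n molecule rows then c candidate-atom rows."""
    m_f, m_s, m_e, m_t = heads            # linear layers standing in for the four MLPs
    first = rng.choice(n, p=softmax(X[:n] @ m_f))                    # n options
    pair = np.hstack([np.tile(X[first], (len(X), 1)), X])            # [X_first || X_j]
    second = rng.choice(len(X), p=softmax(pair @ m_s))               # n + c options
    edge_logits = np.concatenate([X[first], X[second]]) @ m_e
    edge = rng.choice(3, p=softmax(edge_logits))                     # 3 bond types
    stop = rng.choice(2, p=softmax(X[:n].mean(axis=0) @ m_t))        # mean over atoms
    return int(first), int(second), int(edge), int(stop)

rng = np.random.default_rng(1)
n, c, k = 3, 3, 8
X = rng.normal(size=(n + c, k))
heads = (rng.normal(size=k), rng.normal(size=2 * k),
         rng.normal(size=(2 * k, 3)), rng.normal(size=(k, 2)))
action = sample_action(X, n, heads, rng)
print(action)
```

Sampling the components in sequence lets the second-atom and bond-type choices condition on the first atom already chosen.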

Fig. 3.

The reinforcement learning pathway for the systematic generation of molecules (redrawn from You et al. [34]). a The state is defined as the current graph $G_t$ and the set of possible atom types $C$. b The GCPN conducts message passing to encode the state as node embeddings and estimates the policy function. c The action to be performed ($a_t$) is sampled from the policy function. The environment performs a chemical valency check on the intermediate state and returns (d) the next state and (e) the associated reward ($R_t$)

Policy gradient training

For our experiments, we use Proximal Policy Optimization (PPO) [81], the state-of-the-art policy gradient method, for optimizing the total reward. The objective function for PPO is described in Eq 10.

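$$L^{CLIP}(\theta) = \mathbb{E}_t\left[\min\left(\frac{\pi_\theta(a_t \mid S_t)}{\pi_{\theta_{old}}(a_t \mid S_t)}\hat{A}_t,\ \mathrm{clip}\left(\frac{\pi_\theta(a_t \mid S_t)}{\pi_{\theta_{old}}(a_t \mid S_t)}, 1 - \epsilon, 1 + \epsilon\right)\hat{A}_t\right)\right], \quad \hat{A}_t = \sum_{l=0}^{\infty}(\gamma\lambda)^l\delta_{t+l}, \quad \delta_t = R_t + \gamma V(S_{t+1}) - V(S_t) \tag{10}$$

Here, $S_t$, $a_t$ and $R_t$ are the state, action and reward respectively at timestep $t$, $V(S_t)$ is the value associated with state $S_t$, $\pi_\theta$ is the policy function (with $\pi_{\theta_{old}}$ the policy before the update), $\epsilon$ is the clipping parameter and $\gamma$ is the discount factor. Also note that $\hat{A}_t$, an estimator of the advantage function at timestep $t$, is obtained using Generalized Advantage Estimation [82] with the GAE parameter $\lambda$, since this reduces the variance of the estimate.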
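A minimal NumPy sketch of the two ingredients of the PPO objective is shown below: Generalized Advantage Estimation for a single finite episode (the infinite sum truncated at the end of the episode) and the clipped surrogate, which is maximised. It assumes the value estimates V(S_0), ..., V(S_T) are supplied, with 0 for a terminal state, and ε = 0.2 is an assumed default rather than a value taken from this work.

```python
import numpy as np

def gae(rewards, values, gamma, lam):
    """Generalized Advantage Estimation for one finite episode.

    `values` holds V(S_0), ..., V(S_T): one more entry than `rewards`.
    """
    advantages = np.zeros(len(rewards))
    running = 0.0
    for t in reversed(range(len(rewards))):
        delta = rewards[t] + gamma * values[t + 1] - values[t]   # TD residual delta_t
        running = delta + gamma * lam * running                   # sum of (gamma*lam)^l delta_{t+l}
        advantages[t] = running
    return advantages

def ppo_clip_objective(logp_new, logp_old, advantages, eps=0.2):
    """Clipped surrogate objective (to be maximised), averaged over timesteps."""
    ratio = np.exp(logp_new - logp_old)          # pi_theta / pi_theta_old
    clipped = np.clip(ratio, 1.0 - eps, 1.0 + eps)
    return float(np.mean(np.minimum(ratio * advantages, clipped * advantages)))

adv = gae(rewards=[1.0, 1.0], values=[0.0, 0.0, 0.0], gamma=0.5, lam=1.0)
print(adv, ppo_clip_objective(np.zeros(2), np.zeros(2), adv))
```

With λ = 1 and zero value estimates the advantages reduce to discounted returns (1 + 0.5 × 1 for the first step); clipping the probability ratio stops a single update from moving the policy too far from the one that collected the data.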

For estimating the value function V we use an MLP with the embedding X as the input. Apart from this, we also use expert pretraining [83], which has been shown to stabilise the training process. For our experiment, any ground truth molecule can be used as an expert for imitation. We randomly select a subgraph of the ground truth molecule as the state $S_E$. The action $a_E$ is also chosen randomly such that it adds an atom or bond to the graph $S_E$. This pair $(S_E, a_E)$ is used for calculating the expert loss in Eq. 11.

$$L_{expert}(\theta) = -\log \pi_\theta(a_E \mid S_E) \tag{11}$$

Note that we use the same dataset of ground truth molecules for calculating the expert loss and the adversarial rewards. For the rest of the paper, we will call this dataset the “expert dataset” and the random molecule selected from the dataset the “expert molecule”.

System evaluation

In this section we evaluate the system described above on the task of generating small molecules that interact with the dopamine transporter but not (so far as possible) with the norepinephrine transporter.

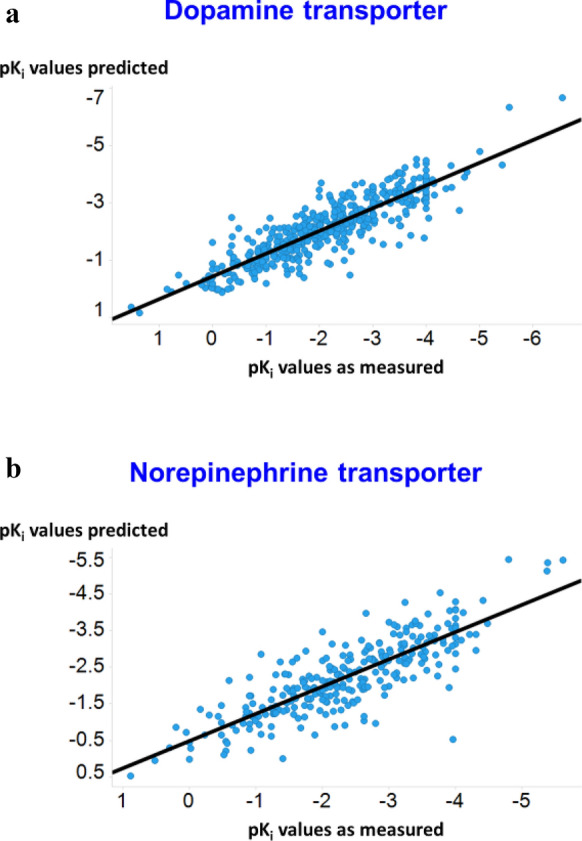

Property prediction

In this section we evaluate the performance of the supervised property prediction component. Dopamine Transporter binding data was obtained from www.bindingdb.org (https://bit.ly/2YACT5u). The training data consist of some molecules which are labelled with their Ki values and some which are labelled with IC50 values. For this paper, we have used IC50 values and Ki values interchangeably in order to increase the size of the training dataset. Molecules having large Ki values in the dataset were not labelled accurately (with labels such as ~ 1000) but the use of a robust loss function allowed us to incorporate these values directly. As stated above we use log transformed values (pKi). (We also attempted to learn the Ki values of the molecules, but the distribution was found to be heteroscedastic; hence we focus on predicting the pKi values.) Data are shown in Fig. 4a for the dopamine transporter and 4b for the norepinephrine transporter pKi values.
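The log transformation used for the labels can be sketched as below, assuming affinities are given in nM (as in BindingDB downloads), so that pKi = −log10(Ki / M) = 9 − log10(Ki in nM). Stripping qualifiers such as "~" or ">" and keeping the number is one hypothetical way of ingesting imprecise labels like "~ 1000"; it relies on the robust loss to absorb the resulting label error.

```python
import math

def parse_affinity_nm(label):
    """Strip qualifiers such as '>', '<' or '~' from an affinity label (hypothetical handling)."""
    return float(label.strip().lstrip("<>~= ").strip())

def pki_from_nm(ki_nm):
    """pKi = -log10(Ki / M); for Ki in nM this is 9 - log10(Ki)."""
    return 9.0 - math.log10(ki_nm)

for label in ["12.5", "~ 1000", ">10000"]:
    print(label, round(pki_from_nm(parse_affinity_nm(label)), 2))
```

On the log scale a 1000 nM compound maps to pKi = 6, so an error of a factor of two in Ki shifts the label by only about 0.3 units, which is part of why the log-transformed targets are better behaved than raw Ki values.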

Fig. 4.

Predicted and experimental values for the test sets of the dopamine (a) and norepinephrine (b) transporters. Lines are lines of best fit: (a) y = 0.44 + 0.79x, r² = 0.79; (b) y = 0.49 + 0.74x, r² = 0.68

Hyperparameter optimization

As the property prediction model is a general algorithm with a large number of hyperparameters, we attempted to improve generalisation on the transporter problem using Bayesian optimization of the root mean square error (RMSE) between the predicted and actual pKi values of the validation set. For this task we consider the hyperparameters to be the depth of the GCN encoder, the dimension of the message vectors, the number of layers in the Feed Forward Network, and the Dropout rate. We use tenfold cross validation on the combined training and validation data, with the test set held out. The model score is defined as the mean RMSE over the ten folds, and we use Bayesian optimization to minimize this score.
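The model score described above, the mean RMSE over ten folds with the test set held out beforehand, can be sketched generically. The `fit`/`predict` callables are hypothetical placeholders for training and applying the GCN model with one hyperparameter setting; here a baseline that predicts the training-set mean pKi is used. A Bayesian optimizer would call such a function once per candidate setting and minimize the returned value.

```python
import numpy as np

def kfold_rmse(X, y, fit, predict, k=10, seed=0):
    """Mean RMSE over k folds of the training + validation data."""
    folds = np.array_split(np.random.default_rng(seed).permutation(len(y)), k)
    scores = []
    for i, val_idx in enumerate(folds):
        train_idx = np.concatenate([f for j, f in enumerate(folds) if j != i])
        model = fit(X[train_idx], y[train_idx])
        residuals = predict(model, X[val_idx]) - y[val_idx]
        scores.append(np.sqrt(np.mean(residuals ** 2)))
    return float(np.mean(scores))

def fit_mean(X, y):
    """Baseline 'model': the training-set mean pKi (stand-in for the GCN)."""
    return y.mean()

def predict_mean(model, X):
    return np.full(len(X), model)

rng = np.random.default_rng(0)
X = rng.random((200, 5))                 # toy features
y = rng.normal(7.0, 1.0, size=200)       # toy pKi values
print(kfold_rmse(X, y, fit_mean, predict_mean))
```

Averaging over ten folds gives a less noisy objective than a single validation split, which matters because the optimizer compares settings whose scores may differ by only a few hundredths of a pKi unit.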

For the dopamine transporter, the optimum hyperparameters obtained were 3 (depth of GCN), 1300 (dimension of message vector), 2 (FFN layers) and 0.1 (Dropout). The RMS error on the test dataset for the dopamine transporter after hyperparameter optimization was 0.57, compared with 0.65 without it. We attribute this quite significant remaining error to the errors present in the dataset. Similarly, for the norepinephrine transporter, the test RMS error was 0.66 after hyperparameter optimization, and the optimum hyperparameters obtained were 5 (depth of GCN), 900 (dimension of message vector), 3 (FFN layers) and 0.15 (Dropout).

Implementation details

For the prediction of the pKi values for both the dopamine and norepinephrine transporters, we randomly split the overall dataset into training (80%), validation (10%) and test (10%) sets. Training uses a batch size of 50 molecules for 100 epochs. All the network weights were initialized using Xavier initialization [84]. The first two epochs are warmup epochs [85], in which the learning rate increases linearly from 1e−4 to 1e−3; after that it decreases exponentially to 1e−4 by the last epoch. The model is saved after an epoch if its RMS error on the validation set is lower than the previous best, and the test error is calculated using the saved model with the lowest validation error. The code was written using the PyTorch library, and training was done using an NVIDIA RTX 2080Ti GPU on a Windows 10 system with 256 GB RAM and an Intel 18-Core Xeon W-2195 processor.
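The learning-rate schedule described above can be written as a function of a (possibly fractional) epoch. This is a per-epoch simplification under stated assumptions; the actual implementation may update the rate per batch, and the function name is hypothetical.

```python
def learning_rate(epoch, warmup_epochs=2, total_epochs=100,
                  init_lr=1e-4, max_lr=1e-3, final_lr=1e-4):
    """Linear warmup from init_lr to max_lr, then exponential decay to final_lr."""
    if epoch <= warmup_epochs:
        return init_lr + (max_lr - init_lr) * epoch / warmup_epochs
    progress = (epoch - warmup_epochs) / (total_epochs - warmup_epochs)
    return max_lr * (final_lr / max_lr) ** progress

schedule = [learning_rate(e) for e in range(0, 101)]
print(schedule[0], schedule[2], schedule[100])
```

The short warmup avoids large, poorly directed updates while the Xavier-initialized weights are still far from a good solution; the exponential decay then allows progressively finer adjustments late in training.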

Single-objective molecular generation

To begin the RL evaluation we consider molecular generation with a single objective (dopamine transporter interaction). For all the experiments we use the following implementation details. The learning rate for training all the networks is 1e−3, decreasing linearly to 0 over 3e7 timesteps. The depth of the GCN for both the GCPN and the Discriminator network is 3, and the node embedding size is 128. The code was written using the TensorFlow library, and training was done on the NVIDIA RTX 2080Ti GPU described in the previous section.

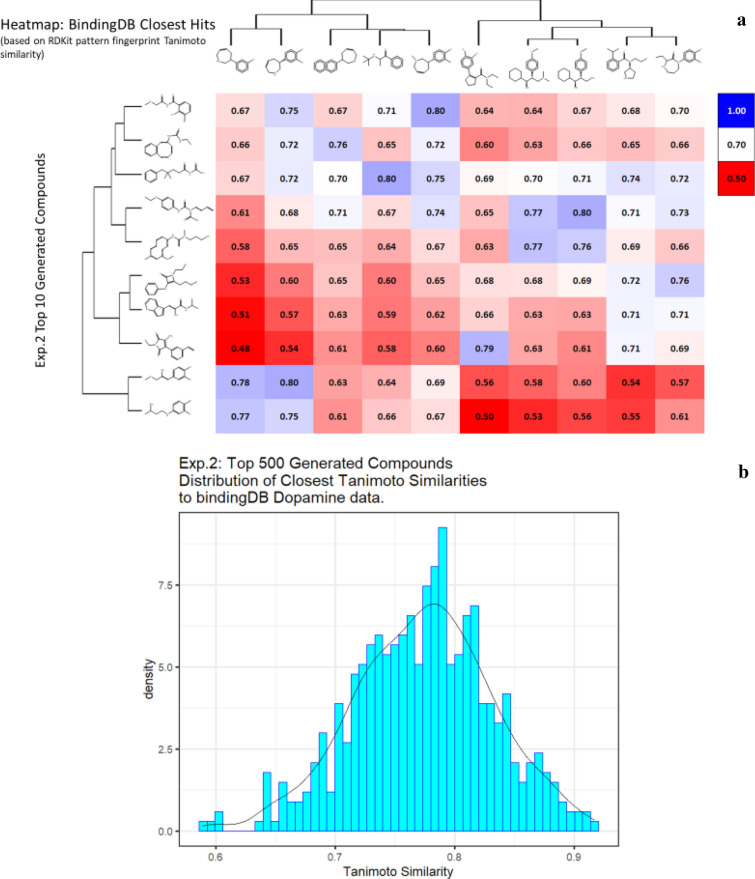

To analyse the results we show the ‘top 10’ molecules generated, as in Fig. 5. However, in the following experiments we aim to generate molecules that are in some sense similar to the original training dataset by systematically modifying the RL pathway. For each experiment, we find the closest molecule in the BindingDB dataset to each of the top 10 generated molecules. Closeness is measured as the Tanimoto similarity (TS) between RDKit fingerprints, and we visualize the distribution of the TS values.
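The nearest-neighbour comparison described above can be sketched in plain Python. Fingerprints are assumed to be sets of "on" bit indices; with RDKit one would typically compute them with `Chem.RDKFingerprint` and compare them using `DataStructs.BulkTanimotoSimilarity`, but the sketch avoids that dependency. The helper names and toy data are hypothetical.

```python
def tanimoto(fp_a, fp_b):
    """Tanimoto similarity of two fingerprints given as sets of 'on' bit indices."""
    union = len(fp_a | fp_b)
    return len(fp_a & fp_b) / union if union else 0.0

def closest_reference(query_fp, reference_fps):
    """Return (index, similarity) of the most similar reference fingerprint."""
    sims = [tanimoto(query_fp, fp) for fp in reference_fps]
    best = max(range(len(sims)), key=sims.__getitem__)
    return best, sims[best]

reference = [{1, 2, 5}, {1, 2, 3, 8}, {7, 9}]   # toy fingerprints for a reference dataset
generated = [{1, 2, 3}, {7, 9}, {4}]            # toy fingerprints for generated molecules
nearest_ts = [closest_reference(fp, reference)[1] for fp in generated]
print(nearest_ts)
```

A histogram of `nearest_ts` over the top-ranked molecules gives a distribution plot of the kind shown in panel b of the following figures; a value of 1 means the generator has recapitulated a known molecule (at the resolution of the fingerprint).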

Fig. 5.

In silico generation by DeepGraphMolGen of novel molecules with predicted binding capacity to the dopamine transporter. Molecules were generated as described in the text. a Top 10 molecules as predicted by DeepGraphMolGen versus the closest molecule in the BindingDB dataset and the Tanimoto similarity thereto (encoded using the RDKit patterned fingerprint). b Distribution of Tanimoto similarities of the top 500 molecules to the closest molecule in the BindingDB dataset

First, we initialize the molecule with a single Carbon atom in the beginning of the generative process. The expert dataset in this case is chosen to be the ZINC dataset [86], which is a free dataset containing (at that time) some 230 M commercially available compounds. However, for our experiments, we use 250 K randomly selected molecules from ZINC as our expert dataset to make the experiments computationally tractable. The top generated molecules and their predicted properties are given in Additional file 1: Table S1 (including data on QED and SA) with a subset of the data illustrated in Fig. 5. Note that in all cases the values of QED and SA both exceeded 0.8.

Although the above experiment was able to generate optimized molecules, there is no certainty that the predictions are correct, owing to errors in the model as well as errors propagated from the experimental errors in the data. We thus attempt to generate molecules that are similar to the more potent molecules. In the next experiment, we choose the expert dataset to be the original dataset on which we trained the property prediction model (we will call this the Dopamine Dataset), while omitting molecules having Ki greater than 1000. We again choose the initial molecule to be a single carbon atom. The equivalent data are given in Additional file 2: Table S2, with plots similar to those of Fig. 5 given in Fig. 6.

Fig. 6.

In silico generation by DeepGraphMolGen of novel molecules with predicted binding capacity to the dopamine transporter. Molecules were generated as described in the text. a Top 10 molecules as predicted by DeepGraphMolGen versus the closest molecule in the BindingDB dataset and the Tanimoto similarity thereto (encoded using the RDKit patterned fingerprint). b Distribution of Tanimoto similarities of the top 500 molecules to the closest molecule in the BindingDB dataset

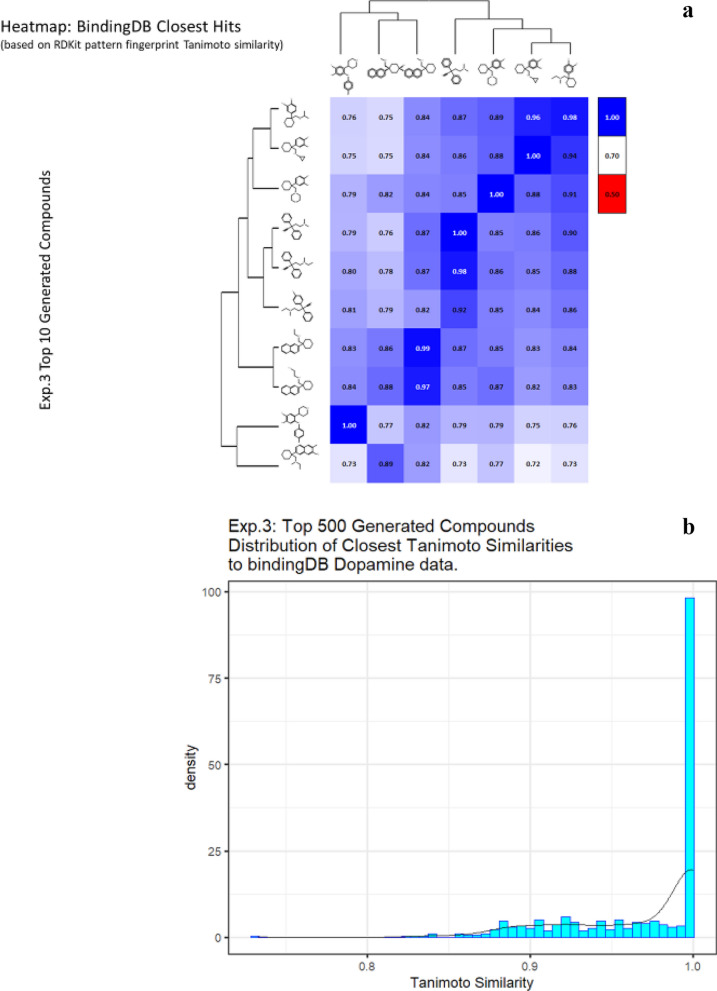

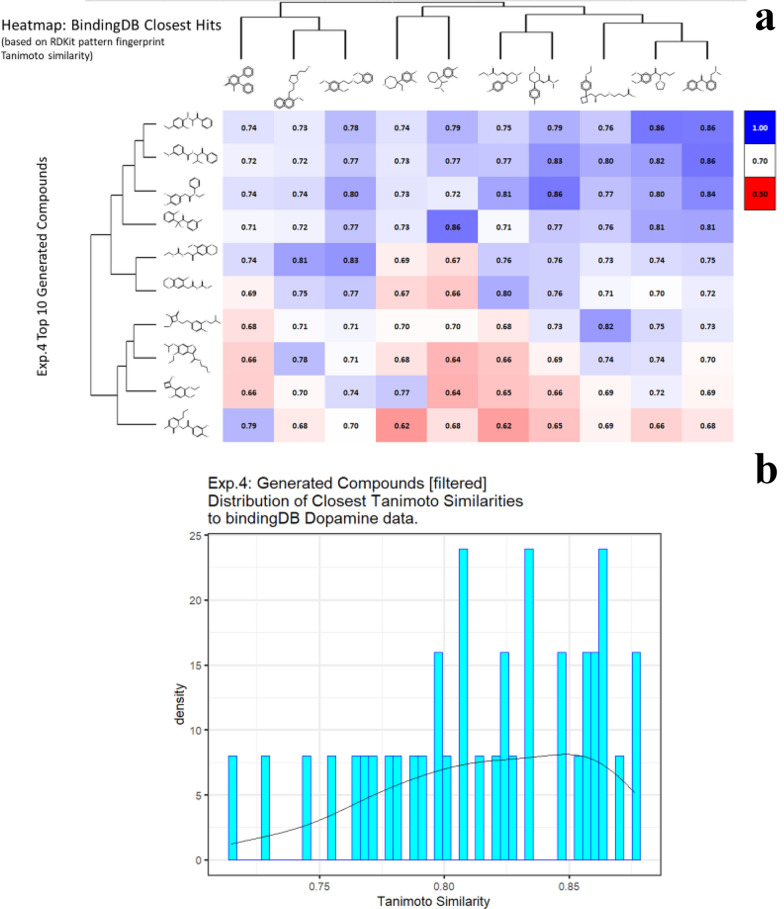

Another way to ensure that the generated molecules will have a high affinity towards the dopamine transporter is to explicitly ensure that the molecules have a higher TS with already known molecules that have high pKi values. We attempt to achieve this by initializing the generative process with a random molecule from the Dopamine Dataset having Ki < 1000. We conduct two experiments using this process, one where we restrict the number of atoms (other than hydrogen) to be lower than 25 (Additional file 3: Table S3 and Fig. 7), and another (Additional file 4: Table S4 and Fig. 8) where we restrict the number of atoms to be lower than 15. For both these experiments, we use the ZINC dataset as the expert dataset. The results are summarized in Additional files 3 and 4. Note that in some cases we obtain a TS of 1; this is encouraging, as in these cases the algorithm found no need to add anything to the original molecule and could recapitulate it.

Fig. 7.

In silico generation by DeepGraphMolGen of novel molecules with predicted binding capacity to the dopamine transporter using a generative method in which the number of heavy atoms is constrained to be lower than 25. Molecules were generated as described in the text. a Top 10 molecules as predicted by DeepGraphMolGen versus the closest molecule in the BindingDB dataset and the TS thereto (encoded using the RDKit patterned fingerprint). b Distribution of Tanimoto similarities (RDKit patterned encoding) of the top 500 molecules to the closest molecule in the BindingDB dataset

Fig. 8.

In silico generation by DeepGraphMolGen of novel molecules with predicted binding capacity to the dopamine transporter using a generative method in which the number of heavy atoms is constrained to be lower than 15. Molecules were generated as described in the text. a Top 10 molecules as predicted by DeepGraphMolGen versus the closest molecule in the BindingDB dataset and the TS thereto (encoded using the RDKit patterned fingerprint). b Distribution of Tanimoto similarities (RDKit patterned encoding) of the top 500 molecules to the closest molecule in the BindingDB dataset

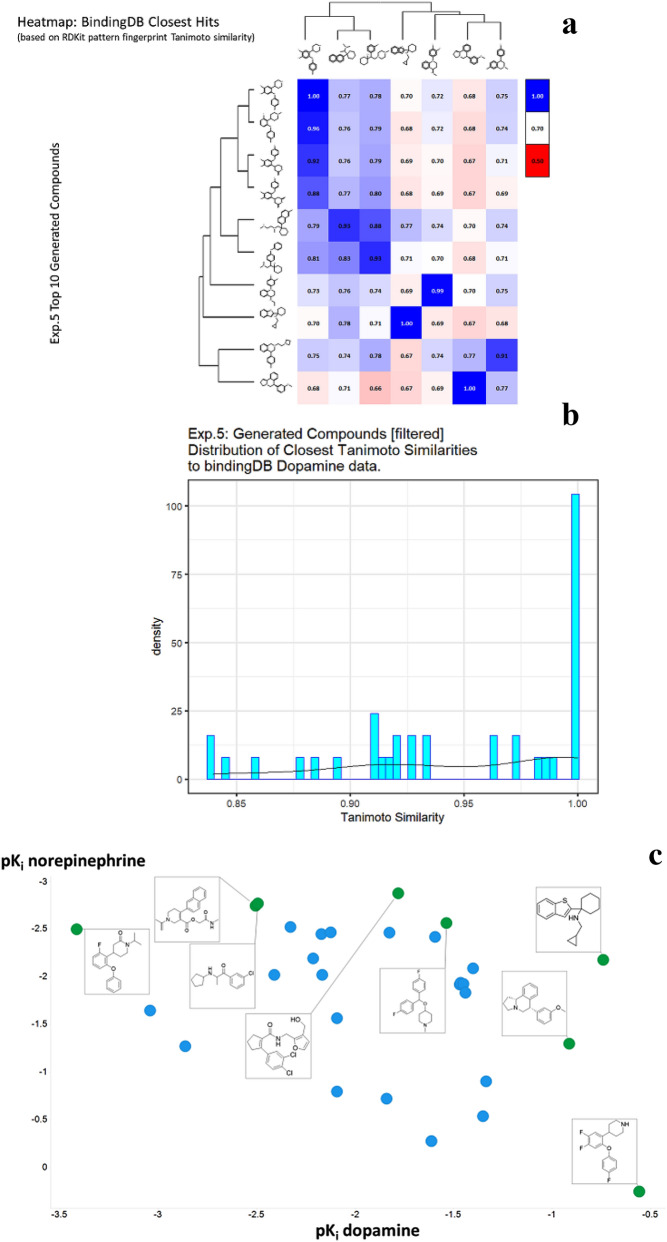
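Panel b of Figs. 7–9 summarises, for the top 500 generated molecules, the TS to the closest BindingDB molecule. A minimal Python sketch of that summary step follows. It assumes the nearest-neighbour TS has already been computed for each molecule, that "top" means highest predicted score, and that ten equal-width bins are used; the function names `top_n_similarities` and `similarity_histogram` and the example numbers are hypothetical.

```python
from typing import List, Sequence, Tuple


def top_n_similarities(results: Sequence[Tuple[float, float]], n: int = 500) -> List[float]:
    """Keep the nearest-neighbour TS of the n best molecules.

    Each result is (predicted_score, ts_to_closest_reference); "best" is taken
    here to mean highest predicted score (an assumption for this sketch).
    """
    ranked = sorted(results, key=lambda r: r[0], reverse=True)
    return [ts for _, ts in ranked[:n]]


def similarity_histogram(similarities: Sequence[float], n_bins: int = 10) -> List[int]:
    """Count similarities in [0, 1] into n_bins equal-width bins.

    A TS of exactly 1.0 goes into the last bin instead of an extra one.
    """
    counts = [0] * n_bins
    for s in similarities:
        if not 0.0 <= s <= 1.0:
            raise ValueError(f"Tanimoto similarity out of range: {s}")
        counts[min(int(s * n_bins), n_bins - 1)] += 1
    return counts


# Invented example: (predicted score, TS to closest reference molecule).
results = [(8.1, 0.95), (7.9, 0.42), (7.5, 1.0), (6.0, 0.10)]
top = top_n_similarities(results, n=3)
print(top)                        # [0.95, 0.42, 1.0]
print(similarity_histogram(top))  # [0, 0, 0, 0, 1, 0, 0, 0, 0, 2]
```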

Multi-objective molecular generation

Although generating molecules with a higher affinity for a particular target is in itself much sought after, in many cases we may wish to find molecules that bind to one receptor but explicitly do not bind to another (kinase inhibitors might be one such example). We attempt to achieve this here with our reinforcement learning pipeline by making the reward function a weighted combination of the pKi values for the two targets. Specifically, we attempt to generate molecules that have a high binding affinity for the dopamine transporter but a much lower binding affinity for the norepinephrine transporter. We therefore modify the reward function used in the previous experiments by adding twice the predicted pKi for the dopamine transporter and subtracting the predicted pKi for the norepinephrine transporter (weights of 2 and −1, respectively). The dopamine component is given the higher weight because we wish the generated molecules actually to bind to it. Other weightings could of course be used; those chosen are simply illustrative. For this experiment we initialize the process with a random molecule from the Dopamine dataset having fewer than 25 heavy atoms, and choose ZINC as the expert dataset. The results of this experiment are summarized in Additional file 5: Table S5 and Fig. 9. As above, some molecules have a TS of 1 to examples in the dataset, for the same reason.
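The reward modification described above amounts to a small weighted sum. The Python sketch below assumes that the two new terms are simply added to whatever reward the earlier experiments used, represented by a hypothetical `base_reward` argument (the drug-likeness and synthetic accessibility terms are not reproduced here). The function name and the predicted pKi values in the example are invented for illustration.

```python
def multi_objective_reward(pki_dat: float, pki_net: float,
                           base_reward: float = 0.0,
                           w_dat: float = 2.0, w_net: float = 1.0) -> float:
    """Reward = base_reward + w_dat * pKi(DAT) - w_net * pKi(NET).

    `base_reward` is a placeholder for the terms the earlier reward already
    contained; their exact form is an assumption left outside this sketch.
    """
    return base_reward + w_dat * pki_dat - w_net * pki_net


# Hypothetical predicted pKi values (DAT, NET) for three candidate molecules.
candidates = {
    "selective": (8.0, 5.0),
    "weaker_but_selective": (7.0, 4.0),
    "non_selective": (8.0, 8.0),
}
for name, (dat, net) in candidates.items():
    print(name, multi_objective_reward(dat, net))
# selective 11.0
# weaker_but_selective 10.0
# non_selective 8.0
```

With equal weights (`w_dat=1.0`) the first two candidates would tie at 3.0, because only the pKi difference would count; the 2:1 weighting breaks the tie in favour of the more potent dopamine-transporter binder, in line with the stated aim that the molecules should actually bind to it.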

Fig. 9.

In silico generation by DeepGraphMolGen of novel molecules with predicted binding capacity to the dopamine transporter, using a generative method in which the number of heavy atoms is constrained to be fewer than 25. Molecules were generated as described in the text. a Top 10 molecules as predicted by DeepGraphMolGen versus the closest molecule in the BindingDB dataset, with the TS thereto (encoded using the RDKit patterned fingerprint). b Distribution of Tanimoto similarities (RDKit patterned encoding) of the top 500 molecules to the closest molecule in the BindingDB dataset. c Plot of the molecules with differential affinities for the dopamine and norepinephrine transporters

Multi-objective (here, two-objective) optimisation problems only rarely have a unique solution that is optimal for all objectives at once [87]; instead there is a trade-off, and where to sit on it is left to the choice of the experimenter. Thus, Fig. 9c also illustrates the molecules on the Pareto front for the two objectives, showing how quite small changes in structure can move a molecule swiftly along the Pareto front. Consequently, our method also provides a convenient means of attacking multi-objective molecular optimisation problems.
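To make the Pareto-front idea concrete for the two objectives used here (high predicted pKi at the dopamine transporter, low predicted pKi at the norepinephrine transporter), the sketch below filters a set of candidates down to the non-dominated ones. It is a generic brute-force non-dominance filter, not necessarily how Fig. 9c was produced; the function names, candidate names and pKi values are hypothetical.

```python
from typing import List, Tuple

# (molecule id, predicted pKi at DAT, predicted pKi at NET)
Candidate = Tuple[str, float, float]


def dominates(a: Candidate, b: Candidate) -> bool:
    """True if `a` is at least as good as `b` on both objectives and strictly
    better on one. Objectives: maximise pKi(DAT), minimise pKi(NET)."""
    no_worse = a[1] >= b[1] and a[2] <= b[2]
    strictly_better = a[1] > b[1] or a[2] < b[2]
    return no_worse and strictly_better


def pareto_front(candidates: List[Candidate]) -> List[Candidate]:
    """Return the non-dominated candidates, in input order.

    Brute-force O(n^2) comparison, adequate for a few hundred molecules.
    A candidate never dominates itself, so no self-exclusion is needed.
    """
    return [c for c in candidates if not any(dominates(o, c) for o in candidates)]


mols = [("m1", 8.0, 5.0), ("m2", 7.0, 4.0), ("m3", 7.5, 6.0), ("m4", 6.0, 6.5)]
print([m[0] for m in pareto_front(mols)])  # ['m1', 'm2']
```

Here m3 and m4 drop out because m1 is predicted to bind the dopamine transporter more strongly and the norepinephrine transporter more weakly than either; m1 and m2 both remain, since moving from one to the other trades dopamine-transporter potency against weaker norepinephrine-transporter binding.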

Conclusions

Overall, the present molecular graph-based generative method has a number of advantages over grammar-based encodings, in particular that it necessarily creates valid molecules. As stressed by Coley and colleagues [49], such methods retain the inherent limitations of 2D methods, since a priori they do not encode 3D information. That said, there is evidence that 3D structures add little benefit when building QSAR models [88–92], so we do not consider this a major limitation for now. Some of the molecules generated might be seen by some (however subjectively) as ‘unusual’, even though they scored well on both drug-likeness and synthetic accessibility metrics. This probably says much about the size of plausible drug space relative to the fraction that has actually been explored [93–95], and implies that generative methods can have an important role to play in medicinal chemistry. Equally, generating the desired molecules requires QSAR models that are accurate and robust enough to evaluate the properties of the generated molecules reliably. Recent work such as [96] incorporates uncertainty estimates into property prediction, and benchmarks for generative models are also available [97]. In conclusion, we here add to the list of useful generative molecular methods for virtual screening by combining molecular graph encoding, reinforcement learning and multi-objective optimisation within a single strategy.

Supplementary information

Additional file 1. Molecules generated by Experiment 1 having QED > 0.8 and SA score > 0.8.

Additional file 2. Molecules generated by Experiment 2 having QED > 0.8 and SA score > 0.8.

Additional file 3. Molecules generated by Experiment 3 having QED > 0.8 and SA score > 0.8.

Additional file 4. Molecules generated by Experiment 4 having QED > 0.8 and SA score > 0.8.

Additional file 5. Molecules generated by Experiment 5 having QED > 0.8 and SA score > 0.8.

Acknowledgements

The work of SS and DBK is supported as part of EPSRC grant EP/S004963/1 (SuSCoRD).

Authors’ contributions

YK wrote most of the software, while SOH contributed some of the cheminformatics. SS, NS, TJR, DB & DBK contributed ideas and supervision. All authors contributed to and approved the final manuscript.

Competing interests

The authors have no conflicts of interest to report.

Footnotes

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Supplementary information accompanies this paper at 10.1186/s13321-020-00454-3.

References

- 1. Yang X, Zhang J, Yoshizoe K, Terayama K, Tsuda K. ChemTS: an efficient python library for de novo molecular generation. Sci Technol Adv Mater. 2017;18(1):972–976. doi: 10.1080/14686996.2017.1401424.

- 2. Gómez-Bombarelli R, Aguilera-Iparraguirre J, Hirzel TD, Duvenaud D, Maclaurin D, Blood-Forsythe MA, Chae HS, Einzinger M, Ha DG, Wu T, et al. Design of efficient molecular organic light-emitting diodes by a high-throughput virtual screening and experimental approach. Nat Mater. 2016;15(10):1120. doi: 10.1038/nmat4717.

- 3. Gómez-Bombarelli R, Wei JN, Duvenaud D, Hernández-Lobato JM, Sánchez-Lengeling B, Sheberla D, Aguilera-Iparraguirre J, Hirzel TD, Adams RP, Aspuru-Guzik A. Automatic chemical design using a data-driven continuous representation of molecules. ACS Cent Sci. 2018;4(2):268–276. doi: 10.1021/acscentsci.7b00572.

- 4. Sanchez-Lengeling B, Aspuru-Guzik A. Inverse molecular design using machine learning: generative models for matter engineering. Science. 2018;361(6400):360–365. doi: 10.1126/science.aat2663.

- 5. Kadurin A, Nikolenko S, Khrabrov K, Aliper A, Zhavoronkov A. druGAN: an advanced generative adversarial autoencoder model for de novo generation of new molecules with desired molecular properties in silico. Mol Pharm. 2017;14(9):3098–3104. doi: 10.1021/acs.molpharmaceut.7b00346.

- 6. Olier I, Sadawi N, Bickerton GR, Vanschoren J, Grosan C, Soldatova L, King RD. Meta-QSAR: a large-scale application of meta-learning to drug design and discovery. Mach Learn. 2018;107(1):285–311. doi: 10.1007/s10994-017-5685-x.

- 7. Popova M, Isayev O, Tropsha A. Deep reinforcement learning for de novo drug design. Sci Adv. 2018;4(7):eaap7885. doi: 10.1126/sciadv.aap7885.

- 8. Tabor DP, Roch LM, Saikin SK, Kreisbeck C, Sheberla D, Montoya JH, Dwaraknath S, Aykol M, Ortiz C, Tribukait H, et al. Accelerating the discovery of materials for clean energy in the era of smart automation. Nat Rev Mater. 2018;3:5–20.

- 9. Colby SM, Nuñez JR, Hodas NO, Corley CD, Renslow RR. Deep learning to generate in silico chemical property libraries and candidate molecules for small molecule identification in complex samples. Anal Chem. 2020;92(2):1720–1729. doi: 10.1021/acs.analchem.9b02348.

- 10. Baskin II. The power of deep learning to ligand-based novel drug discovery. Expert Opin Drug Discov. 2020. doi: 10.1080/17460441.2020.1745183.

- 11. Hong SH, Ryu S, Lim J, Kim WY. Molecular generative model based on an adversarially regularized autoencoder. J Chem Inf Model. 2020;60(1):29–36. doi: 10.1021/acs.jcim.9b00694.

- 12. Lim J, Hwang SY, Moon S, Kim S, Kim WY. Scaffold-based molecular design with a graph generative model. Chem Sci. 2020;11(4):1153–1164. doi: 10.1039/c9sc04503a.

- 13. Rifaioglu AS, Nalbat E, Atalay V, Martin MJ, Cetin-Atalay R, Doğan T. DEEPScreen: high performance drug-target interaction prediction with convolutional neural networks using 2-D structural compound representations. Chem Sci. 2020;11(9):2531–2557. doi: 10.1039/c9sc03414e.

- 14. Yasonik J. Multiobjective de novo drug design with recurrent neural networks and nondominated sorting. J Cheminform. 2020;12(1):14. doi: 10.1186/s13321-020-00419-6.

- 15. Yoshimori A, Kawasaki E, Kanai C, Tasaka T. Strategies for design of molecular structures with a desired pharmacophore using deep reinforcement learning. Chem Pharm Bull (Tokyo). 2020;68(3):227–233. doi: 10.1248/cpb.c19-00625.

- 16. Walters WP, Murcko M. Assessing the impact of generative AI on medicinal chemistry. Nat Biotechnol. 2020;38(2):143–145. doi: 10.1038/s41587-020-0418-2.

- 17. Griffiths RR, Hernández-Lobato JM. Constrained Bayesian optimization for automatic chemical design using variational autoencoders. Chem Sci. 2020;11(2):577–586. doi: 10.1039/c9sc04026a.

- 18. Cova TFGG, Pais AACC. Deep learning for deep chemistry: optimizing the prediction of chemical patterns. Front Chem. 2019;7:809. doi: 10.3389/fchem.2019.00809.

- 19. Noh J, Kim J, Stein HS, Sanchez-Lengeling B, Gregoire JM, Aspuru-Guzik A, Jung Y. Inverse design of solid-state materials via a continuous representation. Matter. 2019;1(5):1370–1384.

- 20. Grisoni F, Schneider G. De novo molecular design with generative long short-term memory. Chimia. 2019;73(12):1006–1011. doi: 10.2533/chimia.2019.1006.

- 21. Grisoni F, Merk D, Friedrich L, Schneider G. Design of natural-product-inspired multitarget ligands by machine learning. ChemMedChem. 2019;14(12):1129–1134. doi: 10.1002/cmdc.201900097.

- 22. Gupta A, Müller AT, Huisman BJH, Fuchs JA, Schneider P, Schneider G. Generative Recurrent Networks for de novo drug design. Mol Inform. 2018;37(1–2):1700111. doi: 10.1002/minf.201700111.

- 23. Merk D, Friedrich L, Grisoni F, Schneider G. De novo design of bioactive small molecules by artificial intelligence. Mol Inform. 2018;37(1–2):1700153. doi: 10.1002/minf.201700153.

- 24. Schneider G. Generative models for artificially-intelligent molecular design. Mol Inform. 2018;37(1–2):1880131. doi: 10.1002/minf.201880131.

- 25. Schneider P, Walters WP, Plowright AT, Sieroka N, Listgarten J, Goodnow RA, Fisher J, Jansen JM, Duca JS, Rush TS, et al. Rethinking drug design in the artificial intelligence era. Nat Rev Drug Discov. 2020;19:353–364. doi: 10.1038/s41573-019-0050-3.

- 26. Button A, Merk D, Hiss JA, Schneider G. Automated de novo molecular design by hybrid machine intelligence and rule-driven chemical synthesis. Nat Mach Intell. 2019;1(7):307–315.

- 27. Moret M, Friedrich L, Grisoni F, Merk D, Schneider G. Generative molecular design in low data regimes. Nat Mach Intell. 2020;2:171–180.

- 28. Ståhl N, Falkman G, Karlsson A, Mathiason G, Boström J. Deep reinforcement learning for multiparameter optimization in de novo drug design. J Chem Inf Model. 2019;59(7):3166–3176. doi: 10.1021/acs.jcim.9b00325.

- 29. Arús-Pous J, Blaschke T, Ulander S, Reymond JL, Chen H, Engkvist O. Exploring the GDB-13 chemical space using deep generative models. J Cheminform. 2019;11(1):20. doi: 10.1186/s13321-019-0341-z.

- 30. Reymond JL. The Chemical Space Project. Acc Chem Res. 2015;48(3):722–730. doi: 10.1021/ar500432k.

- 31. Bohacek RS, McMartin C, Guida WC. The art and practice of structure-based drug design: a molecular modeling perspective. Med Res Rev. 1996;16(1):3–50. doi: 10.1002/(SICI)1098-1128(199601)16:1<3::AID-MED1>3.0.CO;2-6.

- 32. Ertl P. Cheminformatics analysis of organic substituents: identification of the most common substituents, calculation of substituent properties, and automatic identification of drug-like bioisosteric groups. J Chem Inf Comput Sci. 2003;43(2):374–380. doi: 10.1021/ci0255782.

- 33. O’Hagan S, Kell DB. Analysing and navigating natural products space for generating small, diverse, but representative chemical libraries. Biotechnol J. 2018;13(1):1700503. doi: 10.1002/biot.201700503.

- 34. You J, Liu B, Ying R, Pande V, Leskovec J (2018) Graph Convolutional Policy Network for Goal-Directed Molecular Graph Generation. arXiv. 1806.02473

- 35. Dimova D, Stumpfe D, Bajorath J. Method for the evaluation of structure-activity relationship information associated with coordinated activity cliffs. J Med Chem. 2014;57:6553–6563. doi: 10.1021/jm500577n.

- 36. Stumpfe D, Hu Y, Dimova D, Bajorath J. Recent progress in understanding activity cliffs and their utility in medicinal chemistry. J Med Chem. 2014;57(1):18–28. doi: 10.1021/jm401120g.

- 37. Stumpfe D, Dimova D, Bajorath J. Composition and topology of activity cliff clusters formed by bioactive compounds. J Chem Inf Model. 2014;54(2):451–461. doi: 10.1021/ci400728r.

- 38. Teixeira AL, Leal JP, Falcao AO. Random forests for feature selection in QSPR models—an application for predicting standard enthalpy of formation of hydrocarbons. J Cheminform. 2013;5(1):9. doi: 10.1186/1758-2946-5-9.

- 39. Ambure P, Halder AK, Gonzalez Diaz H, Cordeiro M. QSAR-co: an open source software for developing robust multitasking or multitarget classification-based QSAR models. J Chem Inf Model. 2019;59(6):2538–2544. doi: 10.1021/acs.jcim.9b00295.

- 40. Zupan J, Gasteiger J. Neural networks for chemists. Weinheim: Verlag Chemie; 1993.

- 41. Livingstone D. Data analysis for chemists. Oxford: Oxford University Press; 1995.

- 42. Mahé P, Vert JP. Virtual screening with support vector machines and structure kernels. Comb Chem High Throughput Screen. 2009;12(4):409–423. doi: 10.2174/138620709788167926.

- 43. O’Hagan S, Kell DB. The KNIME workflow environment and its applications in Genetic Programming and machine learning. Genetic Progr Evol Mach. 2015;16:387–391.

- 44. LeCun Y, Bengio Y, Hinton G. Deep learning. Nature. 2015;521(7553):436–444. doi: 10.1038/nature14539.

- 45. Schmidhuber J. Deep learning in neural networks: an overview. Neural Netw. 2015;61:85–117. doi: 10.1016/j.neunet.2014.09.003.

- 46. Gawehn E, Hiss JA, Schneider G. Deep learning in drug discovery. Mol Inform. 2016;35(1):3–14. doi: 10.1002/minf.201501008.

- 47. Ching T, Himmelstein DS, Beaulieu-Jones BK, Kalinin AA, Do BT, Way GP, Ferrero E, Agapow PM, Zietz M, Hoffman MM, et al. Opportunities and obstacles for deep learning in biology and medicine. J R Soc Interface. 2018;15(141):20170387. doi: 10.1098/rsif.2017.0387.

- 48. Mater AC, Coote ML. Deep Learning in Chemistry. J Chem Inf Model. 2019;59(6):2545–2559. doi: 10.1021/acs.jcim.9b00266.

- 49. Coley CW, Barzilay R, Green WH, Jaakkola TS, Jensen KF. Convolutional embedding of attributed molecular graphs for physical property prediction. J Chem Inf Model. 2017;57(8):1757–1772. doi: 10.1021/acs.jcim.6b00601.

- 50. Weininger D. SMILES, a chemical language and information system. 1. Introduction to methodology and encoding rules. J Chem Inf Comput Sci. 1988;28(1):31–36.

- 51. Dai H, Tian Y, Dai B, Skiena S, Song L (2018) Syntax-directed variational autoencoder for structured data. arXiv. 1802.08786

- 52. Kusner MJ, Paige B, Hernández-Lobato JM (2017) Grammar Variational Autoencoder. arXiv. 1703.01925

- 53. Blaschke T, Olivecrona M, Engkvist O, Bajorath J, Chen HM. Application of generative autoencoder in de novo molecular design. Mol Inform. 2018;37(1–2):1700123. doi: 10.1002/minf.201700123.

- 54. Xu Y, Lin K, Wang S, Wang L, Cai C, Song C, Lai L, Pei J. Deep learning for molecular generation. Future Med Chem. 2019;11(6):567–597. doi: 10.4155/fmc-2018-0358.

- 55. O’Boyle N, Dalke A (2018) DeepSMILES: an adaptation of SMILES for use in machine-learning of chemical structures. ChemRxiv. 7097960

- 56. Goodfellow I, Bengio Y, Courville A. Deep learning. Cambridge, MA: MIT Press; 2016.

- 57. Stokes JM, Yang K, Swanson K, Jin W, Cubillos-Ruiz A, Donghia NM, MacNair CR, French S, Carfrae LA, Bloom-Ackerman Z, et al. A deep learning approach to antibiotic discovery. Cell. 2020;180(4):688–702. doi: 10.1016/j.cell.2020.01.021.

- 58. Zahoránszky-Kőhalmi G, Bologa CG, Oprea TI. Impact of similarity threshold on the topology of molecular similarity networks and clustering outcomes. J Cheminform. 2016;8:16. doi: 10.1186/s13321-016-0127-5.

- 59. Segler MHS, Kogej T, Tyrchan C, Waller MP (2017) Generating focussed molecule libraries for drug discovery with recurrent neural networks. arXiv. 1701.01329

- 60. van Deursen R, Ertl P, Tetko IV, Godin G. GEN: highly efficient SMILES explorer using autodidactic generative examination networks. J Cheminform. 2020;12(1):22. doi: 10.1186/s13321-020-00425-8.

- 61. O’Hagan S, Kell DB. Consensus rank orderings of molecular fingerprints illustrate the ‘most genuine’ similarities between marketed drugs and small endogenous human metabolites, but highlight exogenous natural products as the most important ‘natural’ drug transporter substrates. ADMET DMPK. 2017;5(2):85–125.

- 62. Kajino H (2018) Molecular hypergraph grammar with its application to molecular optimization. arXiv. 1809.02745

- 63. Jin W, Barzilay R, Jaakkola T (2018) Junction tree variational autoencoder for molecular graph generation. arXiv. 1802.04364

- 64. Zang C, Wang F (2020) MoFlow: an invertible flow model for generating molecular graphs. arXiv. 2006.10137

- 65. Tavakoli M, Baldi P (2020) Continuous representation of molecules using graph variational autoencoder. arXiv. 2004.08152

- 66. Samanta B, De A, Ganguly N, Gomez-Rodriguez M (2018) Designing random graph models using variational autoencoders with applications to chemical design. arXiv. 1802.05283

- 67. Flam-Shepherd D, Wu T, Aspuru-Guzik A (2020) Graph deconvolutional generation. arXiv. 2002.07087

- 68. Kearnes S, McCloskey K, Berndl M, Pande V, Riley P. Molecular graph convolutions: moving beyond fingerprints. J Comput Aided Mol Des. 2016;30(8):595–608. doi: 10.1007/s10822-016-9938-8.

- 69. Bresson X, Laurent T (2019) A two-step graph convolutional decoder for molecule generation. arXiv. 1906.03412

- 70. Kearnes S, Li L, Riley P (2019) Decoding molecular graph embeddings with reinforcement learning. arXiv. 1904.08915

- 71. Bickerton GR, Paolini GV, Besnard J, Muresan S, Hopkins AL. Quantifying the chemical beauty of drugs. Nat Chem. 2012;4(2):90–98. doi: 10.1038/nchem.1243.

- 72. Zhang Z, Cui P, Zhu W (2018) Deep learning on graphs: a survey. arXiv. 1812.04202

- 73. Barron JT (2017) A general and adaptive robust loss function. arXiv. 1701.03077

- 74. Yang K, Swanson K, Jin W, Coley C, Eiden P, Gao H, Guzman-Perez A, Hopper T, Kelley B, Mathea M, et al (2019) Analyzing learned molecular representations for property prediction. arXiv. 1904.01561

- 75. Yang K, Swanson K, Jin W, Coley C, Eiden P, Gao H, Guzman-Perez A, Hopper T, Kelley B, Mathea M, et al. Analyzing learned molecular representations for property prediction. J Chem Inf Model. 2019;59(8):3370–3388. doi: 10.1021/acs.jcim.9b00237.

- 76. Goodacre R, Trew S, Wrigley-Jones C, Saunders G, Neal MJ, Porter N, Kell DB. Rapid and quantitative analysis of metabolites in fermentor broths using pyrolysis mass spectrometry with supervised learning: application to the screening of Penicillium chryosgenum fermentations for the overproduction of penicillins. Anal Chim Acta. 1995;313:25–43.

- 77. Jarrett K, Kavukcuoglu K, Ranzato M, LeCun Y (2009) What is the best multi-stage architecture for object recognition? IEEE I Conf Comp Vis; pp. 2146–2153

- 78. Ashkezari-Toussi S, Sadoghi-Yazdi H. Robust diffusion LMS over adaptive networks. Signal Process. 2019;158:201–209.

- 79. Guimaraes GL, Sanchez-Lengeling B, Outeiral C, Farias PLC, Aspuru-Guzik A (2017) Objective-Reinforced Generative Adversarial Networks (ORGAN) for sequence generation models. arXiv. 1705.10843

- 80. Ertl P, Schuffenhauer A. Estimation of synthetic accessibility score of drug-like molecules based on molecular complexity and fragment contributions. J Cheminform. 2009;1(1):8. doi: 10.1186/1758-2946-1-8.

- 81. Schulman J, Wolski F, Dhariwal P, Radford A, Klimov O (2017) Proximal policy optimization algorithms. arXiv. 1707.06347

- 82. Schulman J, Moritz P, Levine S, Jordan M, Abbeel P (2015) High-dimensional continuous control using generalized advantage estimation. arXiv. 1506.02438

- 83. Levine S, Koltun V. Guided policy search. Proc ICML. 2013;28:1–9.

- 84. Glorot X, Bengio Y. Understanding the difficulty of training deep feedforward neural networks. Proc AISTATs. 2010;9:249–256.

- 85. Li Y, Wei C, Ma T (2019) Towards explaining the regularization effect of initial large learning rate in training neural networks. arXiv. 1907.04595

- 86. Sterling T, Irwin JJ. ZINC 15—ligand discovery for everyone. J Chem Inf Model. 2015;55:2324–2337. doi: 10.1021/acs.jcim.5b00559.

- 87. Besnard J, Ruda GF, Setola V, Abecassis K, Rodriguiz RM, Huang XP, Norval S, Sassano MF, Shin AI, Webster LA, et al. Automated design of ligands to polypharmacological profiles. Nature. 2012;492(7428):215–220. doi: 10.1038/nature11691.

- 88. Nettles JH, Jenkins JL, Bender A, Deng Z, Davies JW, Glick M. Bridging chemical and biological space: “target fishing” using 2D and 3D molecular descriptors. J Med Chem. 2006;49(23):6802–6810. doi: 10.1021/jm060902w.

- 89. Hu G, Kuang G, Xiao W, Li W, Liu G, Tang Y. Performance evaluation of 2D fingerprint and 3D shape similarity methods in virtual screening. J Chem Inf Model. 2012;52(5):1103–1113. doi: 10.1021/ci300030u.

- 90. Oprea TI. On the information content of 2D and 3D descriptors for QSAR. J Brazil Chem Soc. 2002;13(6):811–815.

- 91. Brown RD, Martin YC. The information content of 2D and 3D structural descriptors relevant to ligand-receptor binding. J Chem Inf Comp Sci. 1997;37(1):1–9.

- 92. Hong HX, Xie Q, Ge WG, Qian F, Fang H, Shi LM, Su ZQ, Perkins R, Tong WD. Mold2, molecular descriptors from 2D structures for chemoinformatics and toxicoinformatics. J Chem Inf Model. 2008;48(7):1337–1344. doi: 10.1021/ci800038f.

- 93. Hann MM, Keserü GM. Finding the sweet spot: the role of nature and nurture in medicinal chemistry. Nat Rev Drug Discov. 2012;11(5):355–365. doi: 10.1038/nrd3701.

- 94. Pitt WR, Parry DM, Perry BG, Groom CR. Heteroaromatic rings of the future. J Med Chem. 2009;52:2952–2963. doi: 10.1021/jm801513z.

- 95. Roughley SD, Jordan AM. The medicinal chemist’s toolbox: an analysis of reactions used in the pursuit of drug candidates. J Med Chem. 2011;54(10):3451–3479. doi: 10.1021/jm200187y.

- 96. Scalia G, Grambow CA, Pernici B, Li Y-P, Green WH (2019) Evaluating scalable uncertainty estimation methods for DNN-based molecular property prediction. arXiv. 1910.03127

- 97. Brown N, Fiscato M, Segler MHS, Vaucher AC. GuacaMol: benchmarking models for de novo molecular design. J Chem Inf Model. 2019;59(3):1096–1108. doi: 10.1021/acs.jcim.8b00839.
