Abstract

Artificial intelligence (AI) has great potential to accelerate scientific discovery in medicine and to transform healthcare. In radiology, AI is about to enter clinical practice and has a wide range of applications covering the whole diagnostic workflow. However, AI applications are not all smooth sailing. It is crucial to understand the potential risks and hazards that come with this new technology. We have to implement AI in the best possible way to reflect the time-honored ethical and legal standards while ensuring the adequate protection of patient interests. These issues are discussed in the light of core biomedical ethics principles and principles for AI-specific ethical challenges, together with an overview of the statements that have been proposed for the ethics of AI applications in radiology.

With the advent of artificial intelligence (AI), we are facing a paradigm shift in our conceptualization of medical practice (1). Artificial intelligence is defined as the capability of a machine to imitate human intelligence (2). Machine learning (ML) is a subset of AI that comprises all approaches allowing computers to learn from data. A subfield of ML, in turn, is deep learning (DL), which, inspired by neural structures, identifies distinctions from data automatically and in the process acquires the ability to draw relationships (2).

We are increasingly interacting with AI at many levels of our lives. Whenever we use the smart assistants built into our smartphones, recommendation tools on our streaming services, or real-time navigation applications, we are, in fact, using AI. In the field of medicine, algorithms that can detect atrial fibrillation and diagnose diabetic retinopathy have been approved by the US Food and Drug Administration (FDA) and have already entered the real-world setting (3). In radiology, AI algorithms that can diagnose intracranial hemorrhage on computed tomography (CT) and analyze breast density on mammography have already received FDA approval and made their way into radiology practice (3). Current AI research and development focus on scheduling assistance, image and acquisition quality optimization, workflow prioritization, lesion detection and characterization, segmentation, and prognosis prediction, and we will soon witness more AI utilization in radiology clinics (4). Nevertheless, AI applications are not all smooth sailing. It is crucial to understand the potential risks and hazards that come with this new technology.

Potential risks of AI

AI algorithms can perpetuate human biases, and due to their “black-box” nature, there is often a lack of transparency in decision-making. When the algorithm is trained on data that inherit biases or do not include under-represented population characteristics, existing disparities can be reinforced. This problem has already been encountered in fields outside medicine where AI has been used.

When researchers at ProPublica, an American nonprofit organization performing investigative journalism in the interest of the public, evaluated an AI-assisted risk assessment system to predict a defendant’s future recidivism, implicit bias came to light (5). Upon analyzing 7000 algorithm-generated risk scores for the likelihood of committing future crimes and their corresponding judicial outcomes, they found out that the algorithm had a tendency to assign a higher risk score for African Americans regardless of the gravity of the committed crime and the criminal history of the defendant. The algorithm could assign an African American with minor offenses and no repeat crimes a higher risk score than it did for a white American who is a seasoned criminal (5).

Because of the multiplicity of data fed into AI algorithms, users cannot fully comprehend all the hidden pathways behind algorithm-made decisions. When an AI system makes an error, who is then responsible for the unwanted outcome: the user, the auditor, or the producer of the algorithm? The discussion heated up when the first fatal autonomous car accident occurred in 2018: Was the operator, the manufacturer, or the software vendor of the car at fault? (6). Determining who is liable will not be easy in every scenario, and regulations need to be developed alongside the new technology to make AI a reality (6). Physicians who follow the standard of care in medical practice will not be held liable for an unwanted outcome (7). Under current laws, the same holds when physicians use AI to make medical decisions (7). However, laws could change, a new AI-aided standard of care could be defined, and the liability discussions could become challenging in healthcare (7, 8).

Initiatives to govern AI from an ethical standpoint

Guiding principles set forth for AI applications in radiology need to favor the advancement of public good and social value, the promotion of safety and good governance, the demonstration of transparency and accountability, and the engagement of all affected communities and stakeholders. Therefore, patients, radiologists, researchers, other stakeholders, and governments must work together to enact an ethical framework for AI that at the same time does not thwart new developments.

Conventional methods for regulating a product, research, or development might not be suitable for AI technologies. Since different phases of implementation require different actions, the recommended management consists of two steps: before implementation, i.e., the ex ante phase, in which risks need to be foreseen and guarded against, and after implementation, i.e., the ex post phase, in which harm that has occurred has to be retrospectively evaluated and a solution for it found (9). The difficulties in realizing those regulations also vary according to the phase. The ex ante regulations might be constrained by AI systems that could be “discreet” (which require little to no physical infrastructure), “discrete” (which might be designed without deliberate coordination), “diffuse” (which could be conducted by multi-institutional and multinational collaboration), and “opaque” (whose potential perils would be challenging to detect by outside observers) (9). Moreover, the ex post regulation could be inefficient owing to issues of foreseeability and control, due to “the autonomous nature of AI” (9).

Many organizations have already published statements to guide the AI applications—such as the European Commission-drafted Ethics Guidelines for Trustworthy AI (10), the Asilomar AI Principles (11), and the Montreal Declaration for Responsible AI (12). In a recent review that mapped the existing ethics guidelines for AI across many fields including medicine, researchers uncovered 11 clusters of ethical principles that emerged from the analysis of 84 different guidelines: transparency, justice and fairness, non-maleficence, responsibility, privacy, beneficence, freedom and autonomy, trust, dignity, sustainability, and solidarity (13). The most prominent principle was transparency, whereas sustainability, dignity, and solidarity were under-represented compared to others (13). This could serve as a warning for us in the field of radiology to not overlook any major ethical principles.

The FDA released a discussion paper, entitled Regulatory Framework for Modifications to Artificial Intelligence/Machine Learning-Based Software as a Medical Device, to support the development of safe and effective medical devices that use AI algorithms (14). As its title implies, the paper proposes a regulatory framework for the evolving technologies of AI. So far, the FDA-cleared algorithms are mainly the so-called “locked” algorithms, which do not continually learn every time the algorithm is used. The manufacturer can intermittently modify these “locked” algorithms by training them with new data. On the other hand, there is massive room for improvement regarding the use of the so-called “adaptive” or “continuously learning” algorithms, which can learn by themselves through real-world usage without manual modification. To regulate such algorithms, the new FDA framework adopts a total product lifecycle approach, which enables the evaluation and monitoring of pre- and post-market modifications. This audit will require a premarket review that includes the algorithm’s performance, anticipated modifications, and the manufacturer’s ability to control the risks of modifications. These pre-specified reports are intended to maintain the safety and effectiveness of “software as a medical device”, which can learn and adapt itself via real-world usage (15).

Current radiology landscape

Recently, a statement entitled Ethics of AI in Radiology was published as a collaborative work of the American College of Radiology, the European Society of Radiology, the Radiological Society of North America, the Society for Imaging Informatics in Medicine, the European Society of Medical Imaging Informatics, the Canadian Association of Radiologists, and the American Association of Physicists in Medicine (16). This statement approaches the topics in three layers: the ethics of data, the ethics of algorithms, and the ethics of practice (Table 1). The Royal Australian and New Zealand College of Radiologists (RANZCR) has also published guidelines entitled Ethical Principles for AI in Medicine (17). They aim to develop standards for the use of AI tools in research or clinical practice, while training radiologists and radiation oncologists in ML and AI (Table 2).

Table 1.

Multisociety ethics statement for AI applications in radiology

| Title of the document | Ethics of AI in Radiology |
|---|---|
| Issuer | American College of Radiology, European Society of Radiology, Radiological Society of North America, Society for Imaging Informatics in Medicine, European Society of Medical Imaging Informatics, Canadian Association of Radiologists, American Association of Physicists in Medicine |
| Summary of principles | |
| Aims | To foster trust among all parties that radiology AI will do the right thing for patients and the community, and to see to it that these ethical aspirations are applied to all aspects of AI in radiology |
| Target audience | Radiologists and all others who build and use radiology AI products |
| General statement | “Everyone involved with radiology AI has a duty to understand it deeply, to appreciate when and how hazards may manifest, to be transparent about them, and to do all they can to mitigate any harm they might cause.” |
| Important dates | Call for comments: 26 February–15 April 2019; publication of final version: 1 October 2019 |

AI, artificial intelligence.

Table 2.

The Royal Australian and New Zealand College of Radiologists’ ethics statement for AI applications in radiology

| Title of the document | Ethical Principles for AI in Medicine |
|---|---|
| Issuer | The Royal Australian and New Zealand College of Radiologists |
| Summary of principles | |
| Aims | To complement existing medical ethical frameworks, to develop standards of practice for research in AI tools, to regulate market access for ML and AI, to develop standards of practice for deployment of AI tools in medicine, to upskill medical practitioners in ML and AI, to use ML and AI in medicine in an ethical manner |
| Target audience | Stakeholders, developers, health service executives and clinicians |
| General statement | “These tools should at all times reflect the needs of patients, their care and their safety, and they should respect the clinical teams that care for them.” |
| Important dates | Call for comments: 21 February–26 April 2019; publication of final version: 30 August 2019 |

AI, artificial intelligence; ML, machine learning.

Both of these papers emphasize the value of patient privacy and support every effort to protect and preserve the security of the patient’s data (16, 17). Moreover, both papers discuss the avoidance of bias and prejudice by using varied data acquired from the general population as well as from minority groups. Both similarly define the role of AI in the decision-making process. The RANZCR paper restricts AI to a consultative role and leaves the final decision to mutual communication between patient and doctor (17). Likewise, when AI is part of the process, the multisociety paper recommends that the decision be made after transparent communication between healthcare institutions, practitioners, and patients (16). There are minor differences between the papers in the explanation of who is liable for AI-aided decisions. The RANZCR paper proposes “shared liability” among the manufacturer, the practitioner, and the management that allowed the usage of AI (17). While the multisociety paper supports the “shared liability” concept, it first calls for a precise definition of the “AI-aided standard of care,” since in routine practice liability depends on whether the person delivered the standard of care (7, 8, 16). Finally, both agree that radiologists should be involved in the development of a code of ethics for AI applications in radiology (16, 17).

AI ethics statements should be open to new comments, amendments, and continuous updating. It is imperative to keep communication between all involved parties transparent and to protect patients’ privacy and data.

Core biomedical and AI-specific ethics principles

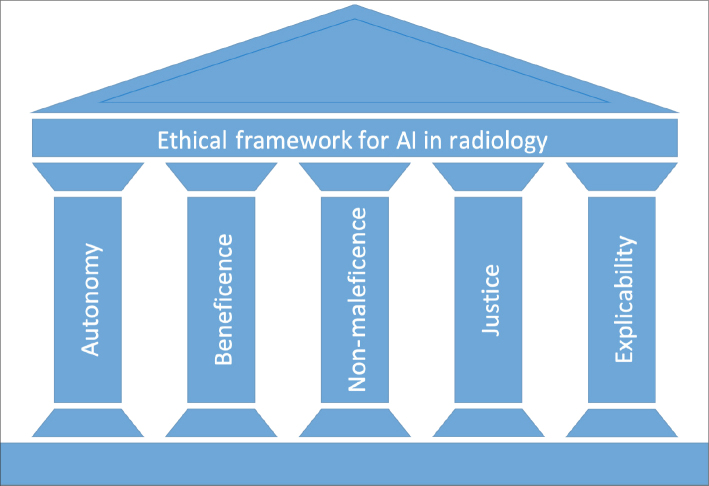

The ethical framework for AI applications in radiology should always reflect the long-established core principles of biomedical ethics: autonomy, beneficence, non-maleficence, and justice (18). This ethical framework should also cover the specific principles that AI applications are prone to violate, such as transparency and accountability (19, 20). Here, the issue is discussed in the light of core biomedical ethics principles and principles for AI-specific ethical challenges (Fig.). The key points are summarized in Table 3.

Figure.

Ethical framework for artificial intelligence applications in radiology.

Table 3.

Summary of core ethical principles, potential threats and possible solutions

| Core ethical principles | Explanation | Potential threat | Possible solution |
|---|---|---|---|
| Autonomy | The right of the patients to make their own choices | | |
| Beneficence and non-maleficence | Being impartial toward and avoiding harm to the patients | | |
| Justice | Fair distribution of medical goods and services | | |
| Explicability | Transparency and accountability of the decision-making process | | |

GDPR, general data protection regulation (of the European Union).

Autonomy

The principle of autonomy means that patients have the right to make their own choices. If the patient is not competent (e.g., a child or an adult lacking mental capacity), their legal guardian would be responsible for their rights. In healthcare, informed consent is utilized to ensure the principle of autonomy (18).

Medical images contain not just pixel data, but also protected health information (PHI) such as patient demographics, technical image parameters, and institutional information. While access to PHI is granted only on a need-to-know basis, data collection should be audited by institutional review boards to protect data usage and to ensure compliance with the patient’s consent (21). When developing and implementing AI in radiology, medical images may be repeatedly used for training and validation of algorithms; therefore, informed consent needs to be adapted in a way that also accounts for continuous usage. This might be achieved by ongoing communication between researchers and research subjects (22).

To ensure patient privacy and confidentiality during the entire process of data collection, handling, storage and evaluation, regulations such as the General Data Protection Regulation (GDPR), which was recently enacted in Europe, should be followed. Because AI algorithms can trace data back to individuals, data pseudonymization, along with anonymization, is included in the GDPR to enhance data protection (23). Full anonymization, however, may be impossible depending on the body region scanned, as body contours can be rendered, and even facial recognition could be possible from medical images (24–26). Although automatic image deidentification tools are available, their accuracy is still not established (27). An ID badge, name tag or name-shaped jewelry on images can give away a patient’s identity; therefore, a robust image deidentification process still requires a human review of each image (27, 28). To ensure reliable anonymization, this tedious image-by-image check was performed by researchers at the US National Institutes of Health (NIH) Clinical Center before the release of the Chest X-ray dataset, which consists of 100 000 images from 30 000 patients (29), and the DeepLesion dataset, comprising 32 000 CT images from 4400 unique patients (30).
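The idea of pseudonymizing image metadata can be illustrated with a short sketch. The Python example below is only an illustration under stated assumptions and is not taken from the GDPR or from the cited datasets: the field names, the `pseudonymize` helper and the secret key are hypothetical. It replaces the patient identifier with a keyed hash, so that records from the same patient remain linkable, and drops other direct identifiers. It does nothing about identifying information contained in the pixel data itself, which is why a human review of the images remains necessary.

```python
import hashlib
import hmac

# Hypothetical set of metadata fields treated as direct identifiers.
DIRECT_IDENTIFIERS = {"patient_name", "birth_date", "institution"}


def pseudonymize(record: dict, secret_key: bytes) -> dict:
    """Return a copy of `record` with a keyed pseudonym instead of the patient ID."""
    # A keyed hash (HMAC) cannot be recomputed from the ID without the key.
    pseudonym = hmac.new(
        secret_key, record["patient_id"].encode("utf-8"), hashlib.sha256
    ).hexdigest()[:16]
    # Drop the original ID and every field listed as a direct identifier.
    cleaned = {
        key: value
        for key, value in record.items()
        if key not in DIRECT_IDENTIFIERS and key != "patient_id"
    }
    cleaned["pseudonym"] = pseudonym
    return cleaned


# Hypothetical metadata record for a single image.
example = {
    "patient_id": "12345",
    "patient_name": "Jane Doe",
    "birth_date": "1970-01-01",
    "institution": "Example Hospital",
    "modality": "CT",
}
result = pseudonymize(example, secret_key=b"keep-this-key-separate")
```

Whoever holds the key can recompute the mapping and re-link the records, which is what distinguishes pseudonymized from anonymized data; in such a scheme the key would have to be stored separately from the images.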

Data collection and usage in AI also raise concerns about data breaches, which makes it necessary to protect patient data from cyber-attacks (25, 31, 32). Insights into data privacy and protection could be borrowed from long-established initiatives that collect patient data, such as the UK Biobank (https://www.ukbiobank.ac.uk). The UK Biobank stores all patient data securely, tests its IT systems regularly, and also commissions external experts to check the security of its systems. The system not only removes all personal identifiers but also prohibits, under a legal agreement, the researchers from trying to identify a patient. Access to patients’ personal identifiers is restricted to a limited number of individuals within the UK Biobank.

Currently, initiatives for electronic patient records such as healthbank.coop or patientendossier.ch tackle data ownership issues by leaving the decision to the individual patient. Patients can access their health data and define the terms under which their data are accessible to third parties, which could also enable them to receive individual monetary compensation. Giving patients this kind of control over their data might be a solution for data ownership issues and could be adopted on a country scale.

Beneficence and non-maleficence

The beneficence (“do good”) and non-maleficence (“do no harm”) principles are closely related to each other. They call for impartiality and for avoiding harm to the patient or anything that could be against the patient’s well-being (18). Artificial intelligence applications in radiology should be designed in a way that reflects both principles and promotes individual and collective well-being (12). Potential applications of AI in radiology include optimization of lesion detection, segmentation, characterization, image quality control, ground truth generation, and organization of the clinical workflow. On the one hand, AI-aided patient stratification would allow faster and more accurate decision-making and improve the well-being of individuals. On the other hand, such stratification could be used for commercial, non-beneficial purposes (32, 33). For instance, insurance companies could stratify patients in terms of their specific predicted risks of disease occurrence or projected treatment costs and raise the premiums accordingly (32).

Additional risks arise from training AI algorithms on biased data or implementing analysis strategies that maximize the profits of algorithm designers (32, 33). Algorithms can be designed for deception as in the case of Volkswagen, whereby the German automaker created one that was programmed to fake low nitrogen oxide emission levels to meet American standards during regulatory testing, while the actual, real-world emission was 40 times higher (32).

Another challenge could arise in using continuous self-learning algorithms in medical applications. To overcome this challenge and to intervene promptly when an algorithm veers off target, self-learning algorithms should be subject to feedback loops and constant monitoring for potential biases. While the identification and minimization of bias are consistently promoted, novel findings also need to be incorporated to allow continuous optimization towards higher quality standards (32, 33). By factoring in the differences between “locked” and “adaptive” algorithms and requiring “product lifecycle reports” for “adaptive” algorithms, the new FDA framework can help stave off these challenges. Strategies also need to be implemented for how new true-positive findings in a retrospective analysis or new false-positive findings in a prospective setting should be treated. In this context, guidelines similar to those in use for reporting adverse drug reactions could be adapted, following careful scrutiny of end-user feedback loops.
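A feedback loop with constant monitoring can be sketched in a few lines. The Python example below is a minimal illustration, not a method described in the FDA framework or in the cited literature: the `PerformanceMonitor` class, the window size and the accuracy threshold are all hypothetical. It keeps a rolling window of agreement between the algorithm's output and the label later confirmed by a radiologist, and signals that a review is needed once agreement in a full window drops below the chosen threshold.

```python
from collections import deque


class PerformanceMonitor:
    """Rolling check of agreement between algorithm output and confirmed labels."""

    def __init__(self, window_size: int = 100, min_accuracy: float = 0.90):
        # Only the most recent `window_size` feedback items are kept.
        self.results = deque(maxlen=window_size)
        self.min_accuracy = min_accuracy

    def record(self, prediction: bool, confirmed_label: bool) -> None:
        """Store whether the algorithm agreed with the confirmed label."""
        self.results.append(prediction == confirmed_label)

    def accuracy(self) -> float:
        """Fraction of agreeing cases in the current window."""
        if not self.results:
            raise ValueError("no feedback recorded yet")
        return sum(self.results) / len(self.results)

    def needs_review(self) -> bool:
        """True once a full window falls below the accuracy threshold."""
        window_full = len(self.results) == self.results.maxlen
        return window_full and self.accuracy() < self.min_accuracy


# Hypothetical feedback: (algorithm output, label confirmed by a radiologist).
monitor = PerformanceMonitor(window_size=5, min_accuracy=0.8)
feedback = [(True, True), (True, False), (False, False), (True, False), (True, True)]
for prediction, label in feedback:
    monitor.record(prediction, label)
```

In this toy run the algorithm agrees with the confirmed label in three of five cases, so `monitor.needs_review()` returns `True`. A single overall accuracy figure is used here only for brevity; monitoring for bias would additionally require tracking performance separately for relevant patient subgroups.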

Justice

The justice principle requires a fair distribution of medical goods and services (18). The development of AI should promote justice while eliminating unfair discriminations, ensuring shareable benefits, and preventing the infliction of new harm that can arise from implicit bias (20, 32).

Fairness, equity, and solidarity lie at the very foundation of justice (19). Personal or professional views about justice should be imposed neither on others nor on AI algorithms. The competing moral concerns should always be recognized and acknowledged. For instance, conflict can arise in the allocation of resources: Every physician might give priority to their own patients rather than to the patient who needs the resource most. This could occur due either to unawareness of other patients’ information or to cognitive biases. Whatever the reason, the effects of such moral conflicts could become part of an AI algorithm, which in turn perpetuates the same biased allocation. This could be overcome by allowing the patients to select among available resources and respecting their autonomy (18, 19).

In a recent study, researchers showed that a healthcare allocation algorithm widely used in US hospitals systematically discriminated against African American patients (34). The researchers found that African Americans, who were actually sicker than white Americans, were assigned a lower risk score and, therefore, did not get the additional help that they needed (34). We should be aware of similar problems that could arise in radiology departments when we use AI-aided work list prioritization algorithms, which could favor one group of patients because of implicit biases rather than prioritize a real emergency.
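One simple way to look for this kind of disparity is to compare, between patient groups, how often truly urgent cases are actually prioritized. The Python sketch below is only an illustration and does not reproduce the analysis of the cited study: the function name, the group labels and the audit records are hypothetical, and in practice the "truly urgent" label would have to come from an independent reference standard.

```python
from collections import defaultdict


def flag_rate_among_urgent(records):
    """Per group: fraction of truly urgent cases that the algorithm prioritized."""
    urgent = defaultdict(int)
    flagged = defaultdict(int)
    for group, truly_urgent, prioritized in records:
        if truly_urgent:
            urgent[group] += 1
            flagged[group] += int(prioritized)
    # Groups without any urgent case are left out of the result.
    return {group: flagged[group] / urgent[group] for group in urgent}


# Hypothetical audit records: (group, truly_urgent, prioritized_by_algorithm).
records = [
    ("group_1", True, True), ("group_1", True, True),
    ("group_1", True, False), ("group_1", False, False),
    ("group_2", True, True), ("group_2", True, False),
    ("group_2", True, False), ("group_2", False, True),
]
rates = flag_rate_among_urgent(records)
```

In this made-up data, two of three urgent cases are prioritized in the first group but only one of three in the second. A gap of this kind between groups would be a reason to examine the training data and the algorithm more closely; it does not by itself identify the cause.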

Last but not least, interests might differ between the AI developer and the AI user, and some parties may only partly follow the ethical code. Therefore, ethical AI design needs also to factor in unethical human interventions (35).

Explicability (transparency and accountability)

Transparency and accountability principles can be brought under the explicability principle (19, 20). Artificial intelligence systems should be auditable, comprehensible and intelligible by “natural” intelligence at every level of expertise, and the intention of developers and implementers of AI systems should be explicitly shared (10).

Transparency

If an AI system fails or causes harm, we should be able to determine the underlying reasons, and if the system is involved in decision-making, there should be satisfactory explanations for the whole decision-making process. This process should be auditable by the healthcare providers or authorities, thus enabling legal liability to be assigned to an accountable body (19, 21). Artificial intelligence algorithms may be susceptible to differences in imaging protocols and variations in patient characteristics. Therefore, transparent communication about patient selection criteria, sharing of the code used to develop the algorithm, and validation in external datasets are required to ensure the generalizability of algorithms across different centers or settings (2, 3).

Accountability

Autonomous diagnosis- or treatment-related decisions made by AI raise the question of who is accountable for these decisions and open a debate over who will be responsible if an autonomous system makes a mistake: its developer or its user (31). Before broad adoption, AI applications in radiology should be held to the same degree of accountability as new medications or medical devices that are at our disposal in radiology (2).

Research ethics for AI

In computer science, and particularly in AI research, a growing number of articles are published in repositories such as arxiv.org. Medical imaging journals that focus on AI applications nowadays accept submissions that were previously published on preprint servers. Other medical journals should also embrace repository publishing and accept articles that are already published as preprints (36). Although preprints are essential for the improvement of algorithms and the reproducibility of research, these methods should not directly translate into clinical usage. Before the implementation of any AI method, we need rigorously validated and methodologically transparent research, in which data and code are also explicitly shared (3). As with all applications developed for diagnostic purposes, AI algorithms that were developed for radiology practice also need to follow the Standards for Reporting of Diagnostic Accuracy Studies (STARD) statement (37).

Biomedical image challenges/competitions have the potential to foster AI research. The nature of competitions drives research groups to work and collaborate on solving specific problems independently and concurrently, which is notably different from the way traditional hypothesis-driven research is conducted (28). In traditional research, a single investigator or group follows a serial path of hypothesis formulation, institutional review board approval, data acquisition, knowledge discovery, and manuscript submission. In competitions, different research groups work in parallel: multiple participants from varying disciplines seek solutions to the same problem, discoveries are shared openly with the community, and continuous feedback is gathered for knowledge discovery and dissemination (28). Although competitions may be an excellent way of accelerating AI research in medical imaging, they are not ready to replace or complement the traditional hypothesis-driven research. A recent review of 150 biomedical image analysis competitions revealed several limitations, including poor and incomplete challenge reporting that does not allow interpretability and reproducibility of the results, lack of transparency and representativeness of the reference (i.e., gold-standard) data, lack of missing data handling, heterogeneity of ranking methods, and lack of standardization and heterogeneity in challenge design (38). The researchers showed that challenge rankings, and thus the winning algorithms, could have been different with a different choice of metric and aggregation scheme (38). These problems emphasize the need for AI competitions to follow standards that ensure fairness and transparency, along with interpretability and reproducibility of results, if they are to really contribute to scientific discovery (28, 38).
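How the aggregation scheme alone can change the winner of a challenge can be shown with a toy example. The Python sketch below uses made-up per-case scores for three hypothetical algorithms and is not taken from the cited review. It compares two common schemes: averaging the metric over all cases and then ranking ("aggregate then rank"), and ranking the algorithms on each case and then averaging the ranks ("rank then aggregate"). Ties are not handled, for brevity.

```python
from statistics import mean

# Hypothetical per-case scores (higher is better) for three algorithms.
scores = {
    "A": [0.90, 0.90, 0.90, 0.10],  # best on most cases, fails badly on one
    "B": [0.80, 0.80, 0.80, 0.80],  # consistently good
    "C": [0.60, 0.60, 0.60, 0.60],
}


def winner_aggregate_then_rank(scores):
    """Average the metric over all cases, then pick the highest mean."""
    return max(scores, key=lambda name: mean(scores[name]))


def winner_rank_then_aggregate(scores):
    """Rank the algorithms on every case, then pick the lowest mean rank."""
    names = list(scores)
    n_cases = len(next(iter(scores.values())))
    rank_lists = {name: [] for name in names}
    for i in range(n_cases):
        # Rank 1 goes to the best score on this case.
        ordered = sorted(names, key=lambda name: scores[name][i], reverse=True)
        for rank, name in enumerate(ordered, start=1):
            rank_lists[name].append(rank)
    return min(names, key=lambda name: mean(rank_lists[name]))


winner_by_mean_score = winner_aggregate_then_rank(scores)
winner_by_mean_rank = winner_rank_then_aggregate(scores)
```

With these numbers, algorithm B has the highest mean score (0.80 versus 0.70 for A), whereas algorithm A has the best mean rank (1.5 versus 1.75 for B). The same results therefore produce two different winners, which is why a challenge needs to fix and report its ranking scheme in advance.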

Conclusion

Artificial intelligence has great potential to accelerate scientific discovery in medicine and to transform healthcare. First, we have to recognize that “there is nothing artificial about AI,” as Fei-Fei Li, the co-director of the Stanford Human-Centered AI Institute, puts it (39). “It’s inspired by people, it’s created by people, and—most importantly—it impacts people”. “Natural” intelligence will and should always be responsible for decision making (31). Current healthcare systems rely on the opinion and recommendation of board-certified medical professionals; the use of AI will not change their responsibility and obligations of providing optimal medical care (7, 8).

Every new technology is subject to scrutiny and concerns before full acceptance. In the literature, early discussions about PACS implementation (40) featured questions such as: If images were accessible by other clinicians, wouldn’t this carry the risk of diminishing the need for a radiologist? Similar debates came to the fore once again when computer-aided detection (CAD) systems entered clinics: Would the new technology replace the radiologist? In hindsight, such concerns proved to be groundless: Medical images always required the interpretation of a trained eye despite the availability and usage of CAD systems, which led to fatigue due to their high false-positive rates (41). As autonomous AI algorithms surpassed human performance in diagnosing a single disease or a small set of diseases, the same concerns were echoed across the scientific community (41). Throughout the history of radiology, radiologists have consistently dealt with new technologies first-hand and have proved to be very good at adopting evolving technologies. AI technologies will augment and complement the skills of radiologists rather than replace them (41).

We have to implement AI in the best possible way to reflect the time-honored ethical and legal standards while ensuring the adequate protection of patient interests. Education will undoubtedly play a key role in facilitating the ethical interaction of “natural” and artificial intelligence. We should start informing the public as well as training the radiology professionals in order to increase awareness of the issue and its inherent complexity. It is crucial for the entire community of radiologists to actively engage in and contribute to the formulation of ethical standards that will govern the use of—and research on—AI in medical imaging (2).

Finally, yet importantly, we should adopt Bayesian reasoning at every level of interaction with AI: We ought to revise our general view as well as ethics statements as we gain more information about AI applications (42). Right now, all AI-related ethics statements deal only with the foreseeable future concerns; to prepare for the more distant future, regular updating of these statements should be mandatory.

Main points.

It is crucial to understand the potential risks of AI and to implement it in radiology clinics in the best possible way to reflect the time-honored ethical and legal standards.

All radiology communities should actively engage in the formulation of ethical standards that will govern the use of—and research on—AI in medical imaging.

Current AI-related ethics statements only address the foreseeable future concerns; in order to prepare for the more distant future, regular updating of these statements should be mandatory.

Acknowledgments

The author would like to thank Dr. Thomas J. Weikert, Dr. Christian Vogler, Dr. Gregor Sommer, Dr. Bram Stieltjes, Dr. Alexander W. Sauter, Prof. Dr. Jens Bremerich, and Joe Cocker for providing helpful suggestions.

Footnotes

Conflict of interest disclosure

The author declared no conflicts of interest.

References

- 1. Pesapane F, Codari M, Sardanelli F. Artificial intelligence in medical imaging: threat or opportunity? Radiologists again at the forefront of innovation in medicine. Eur Radiol Exp. 2018;2:35. doi: 10.1186/s41747-018-0061-6.

- 2. Tang A, Tam R, Cadrin-Chênevert A, et al. Canadian Association of Radiologists White Paper on Artificial Intelligence in Radiology. Can Assoc Radiol J. 2018;69:1–16. doi: 10.1016/j.carj.2018.02.002.

- 3. Topol EJ. High-performance medicine: the convergence of human and artificial intelligence. Nat Med. 2019;25:44–56. doi: 10.1038/s41591-018-0300-7.

- 4. Hosny A, Parmar C, Quackenbush J, Schwartz LH, Aerts HJWL. Artificial intelligence in radiology. Nat Rev Cancer. 2018;18:500–510. doi: 10.1038/s41568-018-0016-5.

- 5. Angwin J, Larson J, Mattu S, Kirchner L. Machine Bias. ProPublica. 2016. [Accessed: 28 October 2019]. Available at: https://www.propublica.org/article/machine-bias-risk-assessments-in-criminal-sentencing.

- 6. Schmelzer R. What Happens When Self-Driving Cars Kill People? Forbes. 2019. [Accessed: 28 October 2019]. Available at: https://www.forbes.com/sites/cognitiveworld/2019/09/26/what-happens-with-self-driving-cars-kill-people/#2989c715405c.

- 7. Sullivan HR, Schweikart SJ. Are current tort liability doctrines adequate for addressing injury caused by AI? AMA J Ethics. 2019;21:160–166. doi: 10.1001/amajethics.2019.160.

- 8. Price WN, Gerke S, Cohen IG. Potential liability for physicians using artificial intelligence. JAMA. 2019;321:2281–2282. doi: 10.1001/jama.2019.15064.

- 9. Scherer MU. Regulating artificial intelligence systems: risks, challenges, competencies, and strategies. Harv J Law Technol. 2016;29:354–400. doi: 10.2139/ssrn.2609777.

- 10. High-Level Expert Group on Artificial Intelligence. Ethics Guidelines for Trustworthy AI. 2019. [Accessed: 28 October 2019]. Available at: https://ec.europa.eu/futurium/en/ai-alliance-consultation/guidelines.

- 11. Asilomar Conference. Asilomar AI Principles; Principles developed in conjunction with the 2017 Asilomar conference [Benevolent AI 2017]. 2017. [Accessed: 28 October 2019]. Available at: https://futureoflife.org/ai-principles/

- 12. de Montréal U. Montreal Declaration for a Responsible Development of Artificial Intelligence. 2018. [Accessed: 28 October 2019]. pp. 1–21. Available at: http://nouvelles.umontreal.ca/en/article/2017/11/03/montreal-declaration-for-a-responsible-development-of-artificial-intelligence/

- 13. Jobin A, Ienca M, Vayena E. The global landscape of AI ethics guidelines. Nat Mach Intell. 2019;1:389–399. doi: 10.1038/s42256-019-0088-2.

- 14. FDA. Proposed Regulatory Framework for Modifications to Artificial Intelligence / Machine Learning (AI/ML)-Based Software as a Medical Device (SaMD). 2019. [Accessed: 28 October 2019]. Available at: https://www.fda.gov/downloads/MedicalDevices/DigitalHealth/SoftwareasaMedicalDevice/UCM635052.pdf.

- 15. Wiens J, Saria S, Sendak M, et al. Do no harm: a roadmap for responsible machine learning for health care. Nat Med. 2019;25:1337–1340. doi: 10.1038/s41591-019-0609-x.

- 16. Geis JR, Brady A, Wu CC, et al. Ethics of artificial intelligence in radiology: summary of the joint European and North American multisociety statement. Insights Imaging. 2019;10:101. doi: 10.1186/s13244-019-0785-8.

- 17. The Royal Australian and New Zealand College of Radiologists. Ethical Principles for Artificial Intelligence in Medicine. 2019. [Accessed: 28 October 2019]. Available at: https://www.ranzcr.com/whats-on/news-media/340-media-release-ethical-principles-for-ai-in-medicine.

- 18. Beauchamp T, Childress JF. Principles of Biomedical Ethics. Oxford University Press; 2013.

- 19. Floridi L, Cowls J, Beltrametti M, et al. AI4People – An ethical framework for a good AI society: opportunities, risks, principles, and recommendations. Minds Mach. 2018;32:1–24. doi: 10.1007/s11023-018-9482-5.

- 20. Kohli M, Geis R. Ethics, artificial intelligence, and radiology. J Am Coll Radiol. 2018;15:1317–1319. doi: 10.1016/j.jacr.2018.05.0.

- 21. Liew C. The future of radiology augmented with Artificial Intelligence: A strategy for success. Eur J Radiol. 2018;102:152–156. doi: 10.1016/j.ejrad.2018.03.019.

- 22. Finlay T, Collett C, Kaye J, et al. Dynamic Consent: a potential solution to some of the challenges of modern biomedical research. BMC Med Ethics. 2017;18:1–10. doi: 10.1186/s12910-016-0162-9.

- 23. The European Commission. Directive (EU) 2016/1148 of the European Parliament and of the Council of 6 July 2016 concerning measures for a high common level of security of network and information systems across the Union. Off J Eur Union. 2016 6:30. [Accessed: 28 October 2019]. Available at: https://eur-lex.europa.eu/legal-content/EN/TXT/PDF/?uri=CELEX:32016L1148&from=EN.

- 24. Prior FW, Brunsden B, Hildebolt C, et al. Facial recognition from volume-rendered magnetic resonance imaging data. IEEE Trans Inf Technol Biomed. 2009;13:5–9. doi: 10.1109/TITB.2008.2003335.

- 25. Jaremko JL, Azar M, Bromwich R, et al. Canadian Association of Radiologists White Paper on Ethical and Legal Issues Related to Artificial Intelligence in Radiology. Can Assoc Radiol J. 2019;70:107–118. doi: 10.1016/j.carj.2019.03.001.

- 26. Schwarz CG, Kremers WK, Therneau TM, et al. Identification of anonymous MRI research participants with face-recognition software. N Engl J Med. 2019;381:1684–1686. doi: 10.1056/NEJMc1908881.

- 27. Langlotz CP, Allen B, Erickson BJ, et al. A roadmap for foundational research on artificial intelligence in medical imaging: from the 2018 NIH/RSNA/ACR/the academy workshop. Radiology. 2019;291:781–791. doi: 10.1148/radiol.2019190613.

- 28. Prevedello LM, Halabi SS, Shih G, et al. Challenges related to artificial intelligence research in medical imaging and the importance of image analysis competitions. Radiol Artif Intell. 2019;1:e180031. doi: 10.1148/ryai.2019180031.

- 29. Wang X, Peng Y, Lu L, Lu Z, Bagheri M, Summers RM. ChestX-ray8: Hospital-scale chest X-ray database and benchmarks on weakly-supervised classification and localization of common thorax diseases. Proc - 30th IEEE Conf Comput Vis Pattern Recognition, CVPR 2017; 2017; pp. 3462–3471.

- 30. Yan K, Wang X, Lu L, Summers RM. DeepLesion: automated mining of large-scale lesion annotations and universal lesion detection with deep learning. J Med Imaging. 2018;5:036501. doi: 10.1117/1.JMI.5.3.036501.

- 31. Pesapane F, Volonté C, Codari M, Sardanelli F. Artificial intelligence as a medical device in radiology: ethical and regulatory issues in Europe and the United States. Insights Imaging. 2018;9:745–753. doi: 10.1007/s13244-018-0645-y.

- 32. Char DS, Shah NH, Magnus D. Implementing machine learning in health care — addressing ethical challenges. N Engl J Med. 2018;378:981–983. doi: 10.1056/NEJMp1714229.

- 33. Vayena E, Blasimme A, Cohen IG. Machine learning in medicine: addressing ethical challenges. PLoS Med. 2018;15:e1002689. doi: 10.1371/journal.pmed.1002689.

- 34. Obermeyer Z, Powers B, Vogeli C, Mullainathan S. Dissecting racial bias in an algorithm used to manage the health of populations. Science. 2019;366:447–453. doi: 10.1126/science.aax2342.

- 35. Shaw J. Artificial Intelligence & Ethics. Harvard Magazine [Internet] 2019. Jan-Feb. [Accessed: 28 October 2019]. pp. 44–49. Available at: https://harvardmagazine.com/2019/01/artificial-intelligence-limitations.

- 36. Oakden-Rayner L, Beam AL, Palmer LJ. Medical journals should embrace preprints to address the reproducibility crisis. Int J Epidemiol. 2018;47:1363–1365. doi: 10.1093/ije/dyy105.

- 37. Bossuyt PM, Reitsma JB, Bruns DE, et al. STARD 2015: An updated list of essential items for reporting diagnostic accuracy studies. Radiology. 2015;277:826–832. doi: 10.1148/radiol.2015151516.

- 38. Maier-Hein L, Eisenmann M, Reinke A, et al. Why rankings of biomedical image analysis competitions should be interpreted with care. Nat Commun. 2018;9:1–13. doi: 10.1038/s41467-018-07619-7.

- 39. Hempel J. Fei-Fei Li’s quest to make AI humanity. Wired [Internet] 12:1–34. [Accessed: 28 October 2019]. Available at: https://www.wired.com/story/fei-fei-li-artificial-intelligence-humanity/

- 40. Hall FM. Perils of PACS. Radiology. 1998;213:307–308. doi: 10.1148/radiology.213.1.r99au32307.

- 41. Langlotz CP. Will artificial intelligence replace radiologists? Radiol Artif Intell. 2019;1:e190058. doi: 10.1148/ryai.2019190058.

- 42. Hornberger J. Introduction to Bayesian reasoning. Int J Technol Assess Health Care. 2001;17:9–16. doi: 10.1017/S0266462301104022.